Abstract

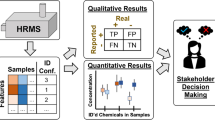

Mass spectrometry (MS) has emerged as a tool that can analyze nearly all classes of molecules, with its scope rapidly expanding in the areas of post-translational modifications, MS instrumentation, and many others. Yet integration of novel analyte preparatory and purification methods with existing or novel mass spectrometers can introduce new challenges for MS sensitivity. The mechanisms that govern detection by MS are particularly complex and interdependent, including ionization efficiency, ion suppression, and transmission. Performance of both off-line and MS methods can be optimized separately or, when appropriate, simultaneously through statistical designs, broadly referred to as “design of experiments” (DOE). The following review provides a tutorial-like guide into the selection of DOE for MS experiments, the practices for modeling and optimization of response variables, and the available software tools that support DOE implementation in any laboratory. This review comes 3 years after the latest DOE review (Hibbert DB, 2012), which provided a comprehensive overview on the types of designs available and their statistical construction. Since that time, new classes of DOE, such as the definitive screening design, have emerged and new calls have been made for mass spectrometrists to adopt the practice. Rather than exhaustively cover all possible designs, we have highlighted the three most practical DOE classes available to mass spectrometrists. This review further differentiates itself by providing expert recommendations for experimental setup and defining DOE entirely in the context of three case-studies that highlight the utility of different designs to achieve different goals. A step-by-step tutorial is also provided.



Introduction

The modern role of an analytical chemist in the field of mass spectrometry (MS) broadly falls across three categories: (1) understanding fundamental mechanisms, (2) innovating new preparatory, ionization, and analyzer technologies, and (3) validating emerging technologies. Precise and accurate measurements, defined as those made with minimal error and no biases, are paramount to achieving these goals. Thus, mass spectrometry research naturally lends itself to statistical influences during the experimental setup and data analysis stages, and peer-reviewed journals are increasingly demanding rigorous statistics [1].

A natural component of MS technology development and validation is optimization. Design of experiments (DOE), the focus of this tutorial review, broadly encompasses the use of statistics to select the levels and combinations of experimental parameters on which response variables may be modeled and subsequently mathematically optimized. The adoption of DOE practices represents an emerging trend in mass spectrometry. This review frames its considerations related to design selection and construction for a researcher with an understanding of statistical principles and a rudimentary understanding of modeling. For convenience, a glossary is provided in the supplemental material (ESM 1) that defines statistics terms, which are indicated by italics the first time they are introduced. The intent of this review is to enable a post-doctorate mass spectrometrist to utilize DOE to design and execute an optimization study independently and without a statistician consultant and to introduce the concepts of DOE to graduate level students who, with assistance, can incorporate these principles into thesis and publication level work. These concepts are emphasized in a decision tree (Figure 1) and detailed in a step-by-step procedure (ESM 2) using common GUI software.

Historical Framework and Statistical Principles of DOE

DOE originated to solve rudimentary experimental problems, but its principles are directly transferable to advanced technologies such as mass spectrometry. Developed in 1926 by Ronald A. Fisher, DOE was first used to arrange agriculture field experiments in a geometry such that the results would be independent of environmental biases [2]. The principles used in this first design construction have emerged as pillars of statistics. The importance of replication in understanding variation was defined by many of his predecessors, including William “Student” Gosset. Other concepts were seeded by Fisher and expanded upon by his contemporaries, including Stuart Chapin (1950, randomization) [3], and Wald and Tukey (1943 and 1947, respectively, blocking) [4, 5].

In complex experimental design involving greater than one factor, the order in which factor settings are evaluated is chosen to adhere to the following three principles, which are defined in greater detail in the context of a previous mass spectrometry review [6].

1. Blocking is introduced to account for known experimental biases. In mass spectrometry, drift can result on a day-to-day basis, and thus may serve as a natural demarcation for blocks.

2. Randomization is performed within blocks and protects against unknown or uncontrollable sources of error. The common sources of error in mass spectrometry experiments are outlined in Table 1.

3. Replication can be used to calculate the pure error derived from measurements. If the measurement error is the primary source of variability as in optimization studies, duplicating individual data points, rather than whole studies, is sufficient.

Table 1 Origins of variation and error in mass spectrometry experiments. DOE may be used to optimize conditions and control for known sources of error
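The blocking and randomization principles above can be sketched in a few lines of Python. This is only a minimal illustration: the run labels, the three-day block structure, and the helper name `blocked_run_order` are assumptions, and dedicated DOE software typically assigns runs to blocks so that block effects stay orthogonal to the factors rather than assigning them at random as done here.

```python
import random

def blocked_run_order(runs, n_blocks, seed=0):
    """Split runs into blocks (e.g., days), then randomize the order within each block."""
    rng = random.Random(seed)          # fixed seed so the schedule is reproducible
    shuffled = list(runs)
    rng.shuffle(shuffled)              # random assignment of runs to blocks (simplification)
    blocks = [shuffled[i::n_blocks] for i in range(n_blocks)]
    for block in blocks:
        rng.shuffle(block)             # randomized run order inside each block
    return blocks

# Hypothetical labels for 12 experimental runs spread over 3 days.
runs = [f"run{i:02d}" for i in range(1, 13)]
schedule = blocked_run_order(runs, n_blocks=3)
for day, block in enumerate(schedule, start=1):
    print(f"day {day}: {block}")
```

Replicates would be added to `runs` before scheduling, so that repeated measurements of the same settings are also spread across the randomized order.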

In traditional optimization experiments, factors are sequentially tested one-factor-at-a-time (OFAT). Conclusions are drawn against the null hypothesis that there is no difference between two parameter settings, yet this type of testing remains susceptible to type I and type II errors. Even with careful attention to adequate sample size, the OFAT approach ignores potential synergies between factors and risks selecting parameter settings that fall on local maxima rather than identifying the true optimum. The alternative to OFAT is DOE, which is based on a full factorial design strategy. In this strategy, all factors are combinatorially tested at specific levels simultaneously. More specifically, for each of the k factors, a testing range (bounds) is selected based on experience, and X levels (two or more) within that range are tested; the total number of experiments equals \( X^k \). These data points can be modeled by standard linear regression techniques, and when the true optimum lies between the bounds, it will be identified.
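As a rough illustration of the full factorial strategy, the sketch below enumerates every combination of levels with Python's standard library. The factor names and level values are hypothetical placeholders, not settings taken from any study cited here.

```python
from itertools import product

def full_factorial(levels_by_factor):
    """Return every combination of factor levels as a list of run dictionaries."""
    names = list(levels_by_factor)
    return [dict(zip(names, combo)) for combo in product(*levels_by_factor.values())]

# Hypothetical factors, each tested at X = 2 levels (k = 3 factors).
factors = {
    "spray_voltage_kV": [3.0, 4.0],
    "capillary_temp_C": [250, 300],
    "sheath_gas_au": [10, 20],
}
design = full_factorial(factors)
print(len(design))   # X**k = 2**3 runs
print(design[0])     # first run: every factor at its first listed level
```

The run count grows exponentially with the number of factors, which is the motivation for the fractional and screening designs discussed in the case studies.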

The power of DOE is its ability to choose a subset of the full factorial data points, produce models with similar statistical power, and more efficiently locate the true optimum. It can accomplish this by making assumptions about the experimental error based on replication of a subset of points versus the entire design and using randomization and blocking to reduce biases. Table 2 collates all applications of DOE in MS published from 2005 to 2015. The case studies presented below highlight designs that are most applicable to mass spectrometry optimization and provide insight into the design selection, explanations into the statistical framework of the design, and discussions regarding the type of information that may be obtained.

MS Case Studies to Define DOE Tools

The focus of this manuscript is experimental design with the goal of optimizing a response that may be observed or measured. Depending on the level of familiarity with the system, the significant factors may be known but not optimized, or may need to be discovered. Consequently, the starting number of factors may be roughly broken into three classes: large (>14), mid-size (5–14), or small (2–4). Using this starting point, and by making certain assumptions about the level of detail needed to analyze the response model, the appropriate model may be selected (Figure 1). The case studies below highlight key differences between each design, provide insights into utilizing DOE, and explore their use for mass spectrometrists.

Case Study #1 (Zheng et al. 2013) [212]: Screening of a Large Number of Factors

Screening of Factors to Determine the Most Influential Variables

Design Utility:

Often a new or unfamiliar system needs to be optimized and it is not clear which of a large number of factors (>14) are relevant. Mass spectrometer control software (e.g., XCalibur) has up to 40 adjustable parameters relevant to ionization, detection, and accurate mass determination, and analysis software (e.g., Proteome Discoverer) is equally complicated, with over 50 adjustable settings. Screening designs are well suited for selecting a subset of factors for subsequent response surface analysis. Options for these DOEs include Plackett-Burman designs [363], described in the following case study, Taguchi arrays, and resolution III fractional factorial designs. As a rule of thumb, optimization cannot be implemented directly from these designs, but would be performed in a subsequent study.

Study Summary:

Mass spectrometry analysis software is as important to ensuring accurate and robust results as the experimental preparation or ionization parameters. Though certain cut-offs, such as a 1% false discovery rate in proteomics, are accepted, many software choices are left to the discretion of analysts. Thus, optimization of search parameters represents an under-appreciated area in mass spectrometry. Zheng and coworkers tackled the problem in metabolomics of minimizing the number of “unreliable” peaks due to low signal-to-noise and maximizing the number of reliable peaks identified in the widely used software XCMS [212], in two studies conducted on metabolite standards and human plasma.

Factor and Bound Selection:

Screening of 17 factors, of which six were qualitative variables, was desired. It is up to the experimenter to select bounds or limits that are wide enough that they do not bias selection of or exclude the optimum but are narrow enough that they are experimentally feasible and do not expand the design space unnecessarily. In this study, two parallel DOEs (Design I and Design II), which shared a lower/upper bound limit, were executed to expand the range of the bounds that could be tested in the context of a two-level screening design study. This reflected an understanding on the part of the researchers that they did not have enough experience with the system to narrow the bounds sufficiently. An alternative approach would be to conduct a design with wide bounds, followed by a second screen centered on the preferred levels. However, even with the additional design approach, the 17 factors were screened in a total of 39 runs. This was a 99.97% reduction in the number of experiments compared with the two-level full factorial design of \( 2^{17} \) = 131,072 runs.
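The quoted reduction follows directly from the run counts stated above, taking the two-level full factorial for 17 factors as the baseline:

\[
1 - \frac{39}{2^{17}} = 1 - \frac{39}{131{,}072} \approx 1 - 0.0003 = 0.9997 \quad (99.97\%)
\]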

When experience and literature review are not sufficient to determine even a wide range of bounds, preliminary testing should be conducted to ensure that the factor limits produce real and accurate data. Because the nature of mass spectrometry is that the lack of detection of analytes indicates low abundance rather than absence, the inclusion of this type of “zero” data due to incompatible settings would improperly bias the response models, and thus this data must be treated as “missing” or poorly substituted with the limit of detection. The removal of these data points is particularly detrimental for DOE experiments and subsequent modeling because the number of experiments has already been statistically minimized. When these situations arise, such as in the case of incompatible MALDI matrix compositions in Brandt et al.’s optimization study [338], alternative DOEs that do not sample those points must be used or the bounds must be changed.

DOE Construction:

Plackett-Burman designs allow the main effects of up to (n – 1) factors to be estimated, where n is the number of runs. Screening designs generally test factors at two levels and cannot be used to estimate any interactions. A description of the mathematics to construct a screening design array [364] is beyond the scope of this review, but they are readily generated by all statistical software.
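For readers curious about what such software produces, the sketch below builds the standard 12-run Plackett-Burman array by cyclically shifting its published generator row and appending a row of low levels. It is an illustration only, not the 17-factor design used by Zheng et al.; the helper names and the simulated response are assumptions.

```python
import numpy as np

# Standard generator row for the 12-run Plackett-Burman design (+1 = high, -1 = low).
GENERATOR_12 = np.array([1, 1, -1, 1, 1, 1, -1, -1, -1, 1, -1])

def plackett_burman_12():
    """Return the 12-run x 11-factor Plackett-Burman design matrix."""
    rows = [np.roll(GENERATOR_12, shift) for shift in range(11)]  # 11 cyclic shifts
    rows.append(-np.ones(11, dtype=int))                          # final run: all factors low
    return np.array(rows)

def main_effects(design, y):
    """Mean response at the high level minus mean response at the low level, per factor."""
    return design.T @ y / (len(design) / 2)

pb = plackett_burman_12()
print(pb.shape)                                                   # (12, 11): n runs, n - 1 factors
print(np.array_equal(pb.T @ pb, 12 * np.eye(11, dtype=int)))      # columns are mutually orthogonal

# Hypothetical noise-free response in which only the first factor matters.
y = 10 + 3 * pb[:, 0]
print(main_effects(pb, y))
```

Because every pair of columns is orthogonal, each main effect is estimated independently of the others, which is what allows n – 1 factors to be screened in n runs.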

Results:

In this XCMS analysis optimization study, significant changes to the mean response value were caused by 10 of 17 factors. Six of these factors had a negative correlation with the number of reliable peaks, and thus were set to their lowest level, which corresponded to the default value. The remaining four factors were followed up with a central composite design (CCD) study, which produces detailed response surface models and is described in depth in case study #3. The combination of these two designs resulted in a 10% increase in reliable peaks in the standard solution, and approximately a 30% increase in reliable peaks in plasma, thus demonstrating the power of this approach for complex samples and a large number of variables.

Case Study #2 (Zhang et al. 2014) [194]: Approaches for a Mid-Range Number of Factors

Higher Level Fractional Designs to Estimate Main and Interaction Effects

Design Utility:

DOE for a mid-size study (5–14 factors) provides enough data points (degrees of freedom) to model parameters with a quadratic equation that includes two-way interactions or synergies. However, it excludes three-way or higher interactions between factors. Optimization may be executed directly when it is appropriate to make a statistical assumption known as the “sparsity of effects principle” or the “heredity principle.” These principles were first discussed by Wu and Hamada (1992), who detailed that the mathematical probability of an interaction being both real and statistically significant was much lower as the model increased in size [365]. Resolution IV fractional factorial designs [366] and definitive screening designs [367, 368] (ESM 2) directly facilitate optimization of a mid-range number of factors and may be constructed using free or proprietary software packages described in a recent review [7].

Study Summary:

The goal of the study performed by Zhang et al. was to optimize spectrum-to-spectrum reproducibility by adjusting the input parameters that are used in the matrix assisted laser desorption ionization (MALDI) control software for automated data acquisition (Bruker FlexControl software, v3.0, Bruker Daltonics) [194]. The parameters in the design were selected according to a resolution IV fractional factorial design. In this design, one sacrifices the modeling of three-way or higher interactions, which would produce a full response surface model. The justification was made based on the authors’ understanding of the sparsity principle, and their desire to minimize the number of experiments: 19 for resolution IV fractional factorial, 48 for full response surface, and 32 for 2-level full factorial design. They detected metabolites from a Pseudomonas aeruginosa cell culture suspension mixed with sinapinic acid matrix and then calculated the Pearson product–moment correlation coefficient between spectra as the mathematical definition of reproducibility.

Response Variable Selection:

Ideally, continuous response variables, such as a correlation coefficient, should be chosen unless the researcher is familiar with generalized linear modeling techniques. For example, the number of peaks observed in a mass spectrum is a Poisson count because the value must be an integer, and a better alternative would be to model the abundances of selected analytes.

DOE Construction: Aliasing:

The use of any fractional factorial design [366], regardless of its resolution, requires an understanding of how the design matrix affects the number of estimable interactions. As summarized in this case-study, for a resolution IV design, all two-way interactions/synergies may be modeled. However, the model estimates for the interactions have more error, compared with the main effects, because they are confounded or aliased with each other. Design software will automatically choose the combinations of parameter levels in the design matrix to minimize errors across the entire model. If preexisting insights into the response surface are known, the researcher may choose to override aspects of this construction. This is usually done if an interaction is already known and very accurate model estimates are desired for that term. Thus, aliasing is a concept that should be well grasped by any experimenter employing DOE.

Mathematically, when models are built on response variables, the factor effects are estimated as the average change between the bounds normalized across all other levels. For example, in a full factorial design with five factors named A, B, C, D, and E (Yates notation), the effect of A (Equation 1) is the average of all responses obtained at the high (+) level of A minus the average of all responses obtained at the low (−) level of A [364]. These estimates may also be obtained through standard least squares analysis (Equation 2) as regression coefficients. Though the values between the two methods will differ, their relative magnitude and direction will stay approximately the same. As these effects represent the sum of total effects of A, they are named contrast values; however, due to confusion, this effect versus contrast nomenclature has become interchangeable in the literature.

Equation 1. Method to calculate the effect of A directly as an average estimate. The y-responses obtained at runs at the high level minus the low level are averaged. Run parameters are indicated by a lower case letter representing the high level or the absence of a letter representing a low level setting. In a five-factor full factorial design, there are \( 2^5 = 32 \) total runs, with 50% of the runs \( \left(\frac{1}{2}\times {2}^5\right) \) taken at each of the high and low levels of A.

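\[ \mathrm{Effect}_A = \frac{1}{\frac{1}{2}\times 2^5}\left[\left(a + ab + ac + \cdots + abcde\right) - \left((1) + b + c + \cdots + bcde\right)\right] \]

where lower case letters indicate the testing of the (+) level of a factor, and (1) denotes the run in which every factor is at its (−) level.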

Equation 2. Method of least squares regression to obtain regression coefficients.

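\[ y = \beta_0 + \beta_a x_a + \cdots + \beta_e x_e + \beta_{ab} x_a x_b + \cdots + \beta_{de} x_d x_e + \cdots + \varepsilon \]

where y is the response variable, \( x_a \ldots x_e \) are the parameter settings for factors a–e, \( \beta_0 \) is the intercept, \( \beta_a \ldots \beta_e \) are the main effect regression coefficients, \( \beta_{ab} \ldots \beta_{de} \) are the secondary effect regression coefficients, and so forth, and \( \varepsilon \) is the residual error.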
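The relationship between the two estimation methods can be checked numerically. The sketch below assumes a five-factor, two-level full factorial in coded units (−1 and +1) and a made-up, noise-free response; the coefficients 4.0, −2.5, and 1.5 are illustrative only.

```python
import numpy as np
from itertools import product

# 2^5 full factorial in coded units; columns correspond to factors A..E.
X = np.array(list(product([-1, 1], repeat=5)), dtype=float)
A, B = X[:, 0], X[:, 1]

# Hypothetical noise-free response, used only to illustrate the arithmetic.
y = 50 + 4.0 * A - 2.5 * B + 1.5 * A * B

# Equation 1 approach: mean response at high A minus mean response at low A.
effect_A = y[A == 1].mean() - y[A == -1].mean()

# Equation 2 approach: least squares on intercept, five main effects, and the AB interaction.
M = np.column_stack([np.ones(len(y)), X, A * B])
beta, *_ = np.linalg.lstsq(M, y, rcond=None)

print(len(X))        # 32 runs
print(effect_A)      # effect of A, approximately 8
print(beta[1])       # regression coefficient for A, approximately 4
```

With this ±1 coding in a balanced two-level design, the effect is twice the regression coefficient, so the two methods give different values but the same sign and the same ranking of factors.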

Fractional factorial designs are a subset of factorial designs and result in \( X^{k-p} \) runs, where p is defined by the resolution. A consequence of producing experimental designs with a reduced number of runs is the loss of information because effects become confounded, or aliased, with each other. Mathematically, this means that the linear combination of factor levels, as noted in Equation 1, is identical for two or more effects. Practically, this means that depending on the fractional factorial design resolution or DOE chosen, certain effects may have higher error or may not be able to be estimated.
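Aliasing can be seen directly in the design matrix. The sketch below constructs a two-level half fraction for five factors, \( 2^{5-1} = 16 \) runs, using the generator E = ABC. That generator is an assumption chosen for illustration (it yields a resolution IV design) and is not necessarily the one used in the case study.

```python
import numpy as np
from itertools import product

# Base 2^4 full factorial in A, B, C, D (coded -1/+1): 16 runs.
base = np.array(list(product([-1, 1], repeat=4)))
A, B, C, D = base.T

# Assumed generator: E = ABC, which gives the defining relation I = ABCE.
E = A * B * C

print(len(base))                         # 16 runs instead of 32
print(np.array_equal(A * B, C * E))      # True: AB and CE share one column, so they are aliased
print(np.array_equal(A, B * C * E))      # True: main effect A is aliased with the BCE interaction
print(np.array_equal(A * D, B * C))      # False: AD is not aliased with BC
```

When two effects share an identical column, the data cannot distinguish them; the estimate obtained is the sum of both, which is why the choice of generator matters when an interaction is already suspected.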

Results:

In the two-level, five-factor, resolution IV design employed in this study, 16 runs, or ½ of the full factorial design runs, were statistically selected such that all main effects may be estimated with excellent certainty. All two-way interactions may be estimated, but they are partially confounded with each other, and higher order effects may not be estimated because they are completely aliased. The design was further augmented with a center point, or “0” level, to allow for quadratic terms to fit a curved surface. The application of nonlinear functions is well established in mass spectrometry. For example, the effect of fluence on ion signal in MALDI is logarithmic [369] and the effect of hydrophobicity on ionization efficiency is approximately quadratic [370]. Using this modeling approach, three of the main effects, two interactions, and a quadratic term were determined to be significant.

The DOE models were mathematically optimized to achieve real, statistically significant gains in reproducibility, up to 98%, demonstrating the efficiency and power of DOE. A second advantage of constructing mathematical models is that they can reveal empirical relationships between the response and each factor that would not otherwise be apparent in OFAT studies. In this study, the negative correlation between reproducibility and base peak resolution was surprising and informative, as the two were formerly assumed to be positively correlated. Such findings can spur hypothesis formation and future research that would otherwise not receive attention.

Case Study #3 (Switzar et al. 2011) [8]: Response Surface Studies

Full Models May Be Generated to Characterize the Response Surface and Most Accurately Find the Optimum

Design Utility:

The most precise optimization studies are best performed on experiments with a minimal number (2–4) of factors because the full response surface may be characterized in a reasonable number of runs (case study #3, Figure 2). These designs test the greatest number of levels per factor, generate models with high resolution, and require a good understanding of the system to constrain bounds to a narrow to moderate range.

Figure 2 Construction of linear, interaction, quadratic, and response surface models (Y) based on sampling points for three continuous variables (ABC). As the model grows in complexity, the predicted optimum better approximates the true optimum, shown as a star. The data on which models were fit was obtained from Gao et al. (2014) [372]. Models were fit in R using the “rsm” package [373] and its associated dependencies.

Study Summary:

Switzar et al. sought to optimize protein digestion conditions for small molecule (drug)–protein complexes. Optimization of digestions has been performed previously to maximize protein coverage [9], but protein complexes pose unique challenges and potentially called for establishment of new parameters. As the factors affecting tryptic enzymatic activity had been established (pH, time, temperature), this offered an opportunity to perform a focused response surface study to determine optimal settings. The best options for this type of DOE include a central composite design, detailed below, a Box-Behnken design, or a fractional factorial design of resolution V.

DOE Construction: Orthogonality and Rotatability:

A central composite design (CCD) is effectively an augmented fractional design. Extra design points, called axial points, are added to the design matrix such that five levels of each factor are tested. Experiments may be designed to be orthogonal and/or rotatable [371]. Rotatability ensures that the variance associated with the predictive response is uniform over the entire design space. Mathematically, orthogonality helps ensure that the parameters in the model are estimated independently, despite the addition of blocking and replicates in the design. Practically, this results in model estimates with greater precision. The level at which the axial points are selected is based on a distance (α) from the center-point, and the value of α directly affects the orthogonality and rotatability of a design.
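A minimal sketch of a CCD in coded units is given below. It assumes a full two-level factorial core and uses the common rotatable choice of α, the fourth root of the number of factorial points; the function name and the choice of six center points are assumptions for illustration, and other values of α would be used if orthogonality were the priority.

```python
import numpy as np
from itertools import product

def central_composite(k, n_center=1):
    """Return (design, alpha) for a rotatable CCD with a full 2^k factorial core."""
    factorial = np.array(list(product([-1.0, 1.0], repeat=k)))   # corner points
    alpha = len(factorial) ** 0.25                               # rotatable axial distance
    axial = np.vstack([alpha * np.eye(k), -alpha * np.eye(k)])   # 2k axial points
    center = np.zeros((n_center, k))                             # replicated center points
    return np.vstack([factorial, axial, center]), alpha

design, alpha = central_composite(k=3, n_center=6)
print(design.shape)                      # 8 factorial + 6 axial + 6 center runs, 3 factors
print(round(alpha, 3))                   # axial distance for three factors
print(np.unique(design[:, 0]))           # the five levels tested for the first factor
```

For three factors this gives levels at −α, −1, 0, +1, and +α for every factor, so the bounds chosen by the experimenter must remain feasible at the axial points, not only at the ±1 corners.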

Results:

In this study, global protein digestion coverage and production of the specific peptide complexed to the drug were equally valued responses. Computational software can iteratively vary parameters, determine the response range, and select levels that are on average the best across multiple models. This is important for any study looking simultaneously at production of multiple peptides whose abundances are equally important. Optimization accomplished through this method resulted in over 90% tryptic coverage and good (\( 7.7 \times 10^5 \)) peak area of the drug-complexed peptide. Importantly, the authors validated their gains in tryptic and thermolysin digestion by translating them from a model drug-HSA (human serum albumin) system to a “clinically relevant HSA adduct.” Significant gains in protein coverage were observed with thermolysin only, yet significant increases in the abundance of the targeted drug–peptide complex were observed in both protocols (up to 7-fold) over established literature parameters.
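One common way to balance equally valued responses is a desirability-style score, sketched below. This is a simplified stand-in and not necessarily the algorithm used by the authors' software: the two quadratic models, their coefficients, and the two-factor grid are all hypothetical.

```python
import numpy as np

# Hypothetical fitted quadratic models in coded units (-1..+1) for two responses.
def coverage(ph, temp):
    return 80 - 6 * (ph - 0.3) ** 2 - 4 * (temp + 0.2) ** 2

def peptide_area(ph, temp):
    return 60 - 5 * (ph + 0.4) ** 2 - 7 * (temp - 0.5) ** 2

def desirability(values):
    """Scale a response linearly to [0, 1] over the range seen on the grid."""
    return (values - values.min()) / (values.max() - values.min())

# Evaluate both models on a grid and combine them with equal weight (geometric mean).
grid = np.linspace(-1, 1, 41)
ph, temp = np.meshgrid(grid, grid, indexing="ij")
overall = np.sqrt(desirability(coverage(ph, temp)) * desirability(peptide_area(ph, temp)))

i, j = np.unravel_index(np.argmax(overall), overall.shape)
best_ph, best_temp = grid[i], grid[j]
print(best_ph, best_temp)   # compromise settings between the two single-response optima
```

The selected settings fall between the optima of the individual models, which reflects the trade-off inherent in optimizing several responses at once.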

Additional Considerations in Constructing Designs

The starting point in DOE is to consider what type of information is needed and therefore what model or design is desired (Figure 1). A good rule of thumb for larger studies is to spend about a quarter of the laboratory group’s time and financial resources on a preliminary screening DOE, followed by an in-depth response surface study (or studies) of the statistically important variables [374].

As detailed in each case study, multiple designs are available for each class of study. Software accessibility may be a driving factor in choosing the design, since computer-aided design programs vary somewhat in the preformulated DOEs available. The development of these GUI software packages over the last decade is primarily responsible for enabling scientists to responsibly design and execute DOEs without requiring great statistical expertise [7]. In the supplement (ESM 2), we provide a step-by-step example, complete with software screenshots, demonstrating how to use one such package to construct a designed experiment.

Choosing the complexity of a response surface design involves deciding the trade-off between how precisely the optimal point is estimated and the complexity of the design (ESM 2, Figure S1). As shown in Figure 2, increasing the number or types of regression coefficients allows the optimum to be more precisely located, especially in the case of quadratic functions. However, the cost to efficiency in terms of the number of additional experimental runs required for a more robust DOE that adds three-way or higher interactions may not necessarily be worth a small gain, for example 5%, in response. In the three-factor example below, the linear, interaction, quadratic, and response surface models require 4, 8, 11, and 20 runs to estimate 3, 6, 6, and 9 regression coefficients, respectively. Visually, the implications of using lower resolution models can be seen by looking at the value of the predicted response, shown as a heat map on the y-axis, at the optimized factor settings. Only in the quadratic or response surface models below is the response maximized at the optimal conditions.

Power calculations should be used to minimize type II (false negative) errors and to determine the sample size needed to compare parameter settings. Since the random variation in an optimization study is small relative to the random variation in biological studies, the sample sizes required for sufficient power in optimization studies are generally much lower than those required for biological studies. DOE software packages allow one to evaluate the power of various designs.

Conclusions

The upfront planning to design an experiment using well-defined statistical tools ultimately saves time and resources. Post-hoc modeling yields concrete results, produces optimized conditions over multiple responses, and elucidates interactions that may be governed by novel mechanisms. This type of analysis is well suited to the needs of the modern mass spectrometrist and may be executed by any well-equipped laboratory with access to statistical software.

References

Bramwell, D.: An introduction to statistical process control in research proteomics. J. Proteom. 95, 3–21 (2013)

Fisher, R.A.: The arrangement of field experiments. J. Minist. Agric. G. B. 33, 503–513 (1926)

Chapin, S.F.: Research note on randomization in a social experiment. Science 112, 760–761 (1950)

Wald, A.: An extension of Wilks' method for setting tolerance limits. Ann. Math. Stat. 14, 45–55 (1943)

Tukey, J.W.: Non-parametric estimation II. Statistically equivalent blocks and tolerance regions—the continuous case. Ann. Math. Stat. 18, 529–539 (1947)

Oberg, A.L., Vitek, O.: Statistical design of quantitative mass spectrometry-based proteomic experiments. J. Proteome Res. 8, 2144–2156 (2009)

Hibbert, D.B.: Experimental design in chromatography: a tutorial review. J. Chromatogr. B 910, 2–13 (2012)

Switzar, L., Giera, M., Lingeman, H., Irth, H., Niessen, W.M.A.: Protein digestion optimization for characterization of drug-protein adducts using response surface modeling. J. Chromatogr. A 1218, 1715–1723 (2011)

Loziuk, P.L., Wang, J., Li, Q.Z., Sederoff, R.R., Chiang, V.L., Muddiman, D.C.: Understanding the role of proteolytic digestion on discovery and targeted proteomic measurements using liquid chromatography tandem mass spectrometry and design of experiments. J. Proteome Res. 12, 5820–5829 (2013)

Shuford, C.M., Li, Q., Sun, Y.-H., Chen, H.-C., Wang, J., Shi, R., Sederoff, R.R., Chiang, V.L., Muddiman, D.C.: Comprehensive quantification of monolignol-pathway enzymes in populus trichocarpa by protein cleavage isotope dilution mass spectrometry. J. Proteome Res. 11, 3390–3404 (2012)

Christin, C., Smilde, A.K., Hoefsloot, H.C.J., Suits, F., Bischoff, R., Horvatovich, P.L.: Optimized time alignment algorithm for LC−MS data: correlation optimized warping using component detection algorithm-selected mass chromatograms. Anal. Chem. 80, 7012–7021 (2008)

Randall, S.M., Cardasis, H.L., Muddiman, D.C.: Factorial experimental designs elucidate significant variables affecting data acquisition on a quadrupole orbitrap mass spectrometer. J. Am. Soc. Mass Spectrom. 24, 1501–1512 (2013)

Andrews, G., Dean, R., Hawkridge, A., Muddiman, D.: Improving proteome coverage on a LTQ-orbitrap using design of experiments. J. Am. Soc. Mass Spectrom. 22, 773–783 (2011)

Raji, M.A., Schug, K.A.: Chemometric study of the influence of instrumental parameters on ESI-MS analyte response using full factorial design. Int. J. Mass Spectrom. 279, 100–106 (2009)

Szalowska, E., Van Hijum, S.A.F.T., Roelofsen, H., Hoek, A., Vonk, R.J., te Meerman, G.J.: Fractional factorial design for optimization of the seldi protocol for human adipose tissue culture media. Biotechnol. Progr. 23, 217–224 (2007)

Robichaud, G., Dixon, R.B., Potturi, A.S., Cassidy, D., Edwards, J.R., Sohn, A., Dow, T.A., Muddiman, D.C.: Design, modeling, fabrication, and evaluation of the air amplifier for improved detection of biomolecules by electrospray ionization mass spectrometry. Int. J. Mass Spectrom. 300, 99–107 (2011)

He, J.N., Yan, H.T., Fan, C.L.: Optimization of ultrasound-assisted extraction of protein from egg white using response surface methodology (RSM) and its proteomic study by MALDI-TOF-MS. RSC Adv. 4, 42608–42616 (2014)

Valente, K.N., Schaefer, A.K., Kempton, H.R., Lenhoff, A.M., Lee, K.H.: Recovery of Chinese hamster ovary host cell proteins for proteomic analysis. Biotechnol. J. 9, 87–99 (2014)

Houbart, V., Cobraiville, G., Lecomte, F., Debrus, B., Hubert, P., Fillet, M.: Development of a nano-liquid chromatography on chip tandem mass spectrometry method for high-sensitivity hepcidin quantitation. J. Chromatogr. A 1218, 9046–9054 (2011)

Barry, J.A., Muddiman, D.C.: Global optimization of the infrared matrix-assisted laser desorption electrospray ionization (IR MALDESI) source for mass spectrometry using statistical design of experiments. Rapid Commun. Mass Spectrom. 25, 3527–3536 (2011)

Ramezani, H., Hosseini, H., Kamankesh, M., Ghasemzadeh-Mohammadi, V., Mohammadi, A.: Rapid determination of nitrosamines in sausage and salami using microwave-assisted extraction and dispersive liquid–liquid microextraction followed by gas chromatography–mass spectrometry. Eur. Food Res. Technol. 240, 441–450 (2015)

Chienthavorn, O., Subprasert, P., Insuan, W.: Nitrosamines extraction from frankfurter sausages by using superheated water. Sep. Sci. Technol. 49, 838–846 (2014)

Bajer, T., Bajerova, P., Kremr, D., Eisner, A., Ventura, K.: Central composite design of pressurised hot water extraction process for extracting capsaicinoids from Chili Peppers. J. Food Compos. Anal. 40, 32–38 (2015)

Kemmerich, M., Rizzetti, T.M., Martins, M.L., Prestes, O.D., Adaime, M.B., Zanella, R.: Optimization by central composite design of a modified quechers method for extraction of pesticide multiresidue in sweet pepper and analysis by ultra-high-performance liquid chromatography-tandem mass spectrometry. Food Anal. Method 8, 728–739 (2015)

Fauvelle, V., Mazzella, N., Morin, S., Moreira, S., Delest, B., Budzinski, H.: Hydrophilic interaction liquid chromatography coupled with tandem mass spectrometry for acidic herbicides and metabolites analysis in fresh water. Environ. Sci. Pollut. Res. 22, 3988–3996 (2015)

Hoff, R.B., Pizzolato, T.M., Peralba, M.D.R., Diaz-Cruz, M.S., Barcelo, D.: Determination of sulfonamide antibiotics and metabolites in liver, muscle and kidney samples by pressurized liquid extraction or ultrasound-assisted extraction followed by liquid chromatography-quadrupole linear ion trap-tandem mass spectrometry (HPLC-QqLIT-MS/MS). Talanta 134, 768–778 (2015)

Li, S.S., Liu, X.G., Zhu, Y.L., Dong, F.S., Xu, J., Li, M.M., Zheng, Y.Q.: A statistical approach to determine fluxapyroxad and its three metabolites in soils, sediment and sludge based on a combination of chemometric tools and a modified quick, easy, cheap, effective, rugged, and safe method. J. Chromatogr. A 1358, 46–51 (2014)

Chen, H.C., Kuo, H.W., Ding, W.H.: Determination of carbon-based engineered nanoparticles in marketed fish by microwave-assisted extraction and liquid chromatography-atmospheric pressure photoionization-tandem mass spectrometry. J. Chin. Chem. Soc. 61, 350–356 (2014)

Arjomandi-Behzad, L., Yamini, Y., Rezazadeh, M.: Extraction of pyridine derivatives from human urine using electromembrane extraction coupled to dispersive liquid–liquid microextraction followed by gas chromatography determination. Talanta 126, 73–81 (2014)

Abdulra'uf, L.B., Tan, G.H.: Chemometric study and optimization of headspace solid-phase microextraction parameters for the determination of multiclass pesticide residues in processed cocoa from Nigeria using gas chromatography/mass spectrometry. J. AOAC Int. 97, 1007–1011 (2014)

Prieto, A., Zuloaga, O., Usobiaga, A., Etxebarria, N., Fernández, L.A.: Use of experimental design in the optimization of stir bar sorptive extraction followed by thermal desorption for the determination of brominated flame retardants in water samples. Anal. Bioanal. Chem. 390, 739–748 (2008)

Lagunas-Allué, L., Sanz-Asensio, J., Martínez-Soria, M.T.: Response surface optimization for determination of pesticide residues in grapes using MSPD and GC-MS: assessment of global uncertainty. Anal. Bioanal. Chem. 398, 1509–1523 (2010)

Kamankesh, M., Mohammadi, A., Hosseini, H., Tehrani, Z.M.: Rapid determination of polycyclic aromatic hydrocarbons in grilled meat using microwave-assisted extraction and dispersive liquid–liquid microextraction coupled to gas chromatography–mass spectrometry. Meat Sci. 103, 61–67 (2015)

Chen, D.W., Miao, H., Zou, J.H., Cao, P., Ma, N., Zhao, Y.F., Wu, Y.N.: Novel dispersive micro-solid-phase extraction combined with ultrahigh-performance liquid chromatography high-resolution mass spectrometry to determine morpholine residues in citrus and apples. J. Agric. Food Chem. 63, 485–492 (2015)

Chen, D.W., Zhang, S., Miao, H., Zhao, Y.F., Wu, Y.N.: Simple and rapid analysis of muscarine in human urine using dispersive micro-solid phase extraction and ultra-high performance liquid chromatography-high resolution mass spectrometry. Anal. Methods 7, 3720–3727 (2015)

Van Poucke, C., Dumoulin, F., Van Peteghem, C.: Detection of banned antibacterial growth promoters in animal feed by liquid chromatography-tandem mass spectrometry: optimization of the extraction solvent by experimental design. Anal. Chim. Acta 529, 211–220 (2005)

Gómez-Ariza, J.L., García-Barrera, T., Lorenzo, F., González, A.G.: Optimization of a pressurised liquid extraction method for haloanisoles in cork stoppers. Anal. Chim. Acta 540, 17–24 (2005)

Araujo, P., Frøyland, L.: Optimization of an extraction method for the determination of prostaglandin E2 in plasma using experimental design and liquid chromatography tandem mass spectrometry. J. Chromatogr. B 830, 212–217 (2006)

Gonçalves, C., Carvalho, J.J., Azenha, M.A., Alpendurada, M.F.: Optimization of supercritical fluid extraction of pesticide residues in soil by means of central composite design and analysis by gas chromatography-tandem mass spectrometry. J. Chromatogr. A 1110, 6–14 (2006)

Lucchesi, M.E., Smadja, J., Bradshaw, S., Louw, W., Chemat, F.: Solvent free microwave extraction of Elletaria cardamomum L.: a multivariate study of a new technique for the extraction of essential oil. J. Food Eng. 79, 1079–1086 (2007)

Guerrero, E.D., Castro Mejías, R., Marín, R.N., Barroso, C.G.: Optimization of stir bar sorptive extraction applied to the determination of pesticides in vinegars. J. Chromatogr. A 1165, 144–150 (2007)

Portet-Koltalo, F., Oukebdane, K., Dionnet, F., Desbène, P.L.: Optimization of the extraction of polycyclic aromatic hydrocarbons and their nitrated derivatives from diesel particulate matter using microwave-assisted extraction. Anal. Bioanal. Chem. 390, 389–398 (2008)

Bernal, J.L., Nozal, M.J., Toribio, L., Diego, C., Mayo, R., Maestre, R.: Use of supercritical fluid extraction and gas chromatography–mass spectrometry to obtain amino acid profiles from several genetically modified varieties of maize and soybean. J. Chromatogr. A 1192, 266–272 (2008)

Callejón, R.M., González, A.G., Troncoso, A.M., Morales, M.L.: Optimization and validation of headspace sorptive extraction for the analysis of volatile compounds in wine vinegars. J. Chromatogr. A 1204, 93–103 (2008)

Mylonaki, S., Kiassos, E., Makris, D., Kefalas, P.: Optimization of the extraction of olive (Olea europaea) leaf phenolics using water/ethanol-based solvent systems and response surface methodology. Anal. Bioanal. Chem. 392, 977–985 (2008)

Kiassos, E., Mylonaki, S., Makris, D.P., Kefalas, P.: Implementation of response surface methodology to optimise extraction of onion (Allium cepa) solid waste phenolics. Innov. Food Sci. Emerg. Technol. 10, 246–252 (2009)

Cortada, C., Vidal, L., Tejada, S., Romo, A., Canals, A.: Determination of organochlorine pesticides in complex matrices by single-drop microextraction coupled to gas chromatography–mass spectrometry. Anal. Chim. Acta 638, 29–35 (2009)

Jalali-Heravi, M., Parastar, H., Ebrahimi-Najafabadi, H.: Characterization of volatile components of Iranian saffron using factorial-based response surface modeling of ultrasonic extraction combined with gas chromatography–mass spectrometry analysis. J. Chromatogr. A 1216, 6088–6097 (2009)

Navarro, P., Etxebarria, N., Arana, G.: Development of a focused ultrasonic-assisted extraction of polycyclic aromatic hydrocarbons in marine sediment and mussel samples. Anal. Chim. Acta 648, 178–182 (2009)

Coscollà, C., Yusà, V., Beser, M.I., Pastor, A.: Multi-residue analysis of 30 currently used pesticides in fine airborne particulate matter (PM 2.5) by microwave-assisted extraction and liquid chromatography-tandem mass spectrometry. J. Chromatogr. A 1216, 8817–8827 (2009)

Du, G., Zhao, H.Y., Zhang, Q.W., Li, G.H., Yang, F.Q., Wang, Y., Li, Y.C., Wang, Y.T.: A rapid method for simultaneous determination of 14 phenolic compounds in Radix Puerariae using microwave-assisted extraction and ultra high performance liquid chromatography coupled with diode array detection and time-of-flight mass spectrometry. J. Chromatogr. A 1217, 705–714 (2010)

Pizarro, C., Sáenz-González, C., Pérez-del-Notario, N., González-Sáiz, J.M.: Development of a dispersive liquid–liquid microextraction method for the simultaneous determination of the main compounds causing cork taint and brett character in wines using gas chromatography-tandem mass spectrometry. J. Chromatogr. A 1218, 1576–1584 (2011)

Manso, J., García-Barrera, T., Gómez-Ariza, J.L.: New home-made assembly for hollow-fibre membrane extraction of persistent organic pollutants from real world samples. J. Chromatogr. A 1218, 7923–7935 (2011)

Marican, A., Ahumada, I., Richter, P.: Multivariate optimization of pressurized solvent extraction of alkylphenols and alkylphenol ethoxylates from biosolids. J. Braz. Chem. Soc. 23, 267–272 (2012)

Schulze, T., Magerl, R., Streck, G., Brack, W.: Use of factorial design for the multivariate optimization of polypropylene membranes for the cleanup of environmental samples using the accelerated membrane-assisted cleanup approach. J. Chromatogr. A 1225, 26–36 (2012)

Tümay Özer, E., Güçer, Ş.: Determination of Di(2-ethylhexyl) phthalate migration from toys into artificial sweat by gas chromatography mass spectrometry after activated carbon enrichment. Polym. Test. 31, 474–480 (2012)

Martínez-Moral, M., Tena, M.: Focused ultrasound solid–liquid extraction and gas chromatography tandem mass spectrometry determination of brominated flame retardants in indoor dust. Anal. Bioanal. Chem. 404, 289–295 (2012)

Pérez-Palacios, D., Fernández-Recio, M., Moreta, C., Tena, M.T.: Determination of bisphenol-type endocrine disrupting compounds in food-contact recycled-paper materials by focused ultrasonic solid–liquid extraction and ultra performance liquid chromatography-high resolution mass spectrometry. Talanta 99, 167–174 (2012)

Fryš, O., Česla, P., Bajerová, P., Adam, M., Ventura, K.: Optimization of focused ultrasonic extraction of propellant components determined by gas chromatography/mass spectrometry. Talanta 99, 316–322 (2012)

Xu, H.-J., Shi, X.-W., Ji, X., Du, Y.-F., Zhu, H., Zhang, L.-T.: A rapid method for simultaneous determination of triterpenoid saponins in Pulsatilla turczaninovii using microwave-assisted extraction and high performance liquid chromatography–tandem mass spectrometry. Food Chem. 135, 251–258 (2012)

Reddy Mudiam, M.K., Ch, R., Chauhan, A., Manickam, N., Jain, R., Murthy, R.C.: Optimization of UA-DLLME by experimental design methodologies for the simultaneous determination of endosulfan and its metabolites in soil and urine samples by GC-MS. Anal. Methods 4, 3855–3863 (2012)

Lana, N.B., Berton, P., Covaci, A., Atencio, A.G., Ciocco, N.F., Altamirano, J.C.: Ultrasound leaching-dispersive liquid–liquid microextraction based on solidification of floating organic droplet for determination of polybrominated diphenyl ethers in sediment samples by gas chromatography-tandem mass spectrometry. J. Chromatogr. A 1285, 15–21 (2013)

Chaichi, M., Mohammadi, A., Hashemi, M.: Optimization and application of headspace liquid-phase microextraction coupled with gas chromatography–mass spectrometry for determination of furanic compounds in coffee using response surface methodology. Microchem. J. 108, 46–52 (2013)

Martínez-Moral, M.P., Tena, M.T.: Focused ultrasound solid–liquid extraction of perfluorinated compounds from sewage sludge. Talanta 109, 197–202 (2013)

Osman, B., Özer, E.T., Beşirli, N., Güçer, Ş.: Development and application of a solid phase extraction method for the determination of phthalates in artificial saliva using new synthesized microspheres. Polym. Test. 32, 810–818 (2013)

Habibi, H., Mohammadi, A., Hoseini, H., Mohammadi, M., Azadniya, E.: Headspace liquid-phase microextraction followed by gas chromatography–mass spectrometry for determination of furanic compounds in baby foods and method optimization using response surface methodology. Food Anal. Methods 6, 1056–1064 (2013)

Martins, M., Donato, F., Prestes, O., Adaime, M., Zanella, R.: Determination of pesticide residues and related compounds in water and industrial effluent by solid-phase extraction and gas chromatography coupled to triple quadrupole mass spectrometry. Anal. Bioanal. Chem. 405, 7697–7709 (2013)

Tena, M.T., Martínez-Moral, M.P., Cardozo, P.W.: Determination of caffeoylquinic acids in feed and related products by focused ultrasound solid–liquid extraction and ultra-high performance liquid chromatography-mass spectrometry. J. Chromatogr. A 1400, 1–9 (2015)

Wang, Y.L., Liu, Z.M., Ren, J., Guo, B.H.: Development of a method for the analysis of multiclass antibiotic residues in milk using QuEChERS and liquid chromatography-tandem mass spectrometry. Foodborne Pathog. Dis. 12, 693–703 (2015)

Sarkhosh, M., Niazi, A.: Development of flotation-assisted homogeneous liquid–liquid microextraction to determine organochlorine pesticides in soil by GC-MS. Chem. Lett. 44, 1254–1256 (2015)

Ahmadvand, M., Sereshti, H., Parastar, H.: Chemometric-based determination of polycyclic aromatic hydrocarbons in aqueous samples using ultrasound-assisted emulsification microextraction combined to gas chromatography–mass spectrometry. J. Chromatogr. A 1413, 117–126 (2015)

Sereshti, H., Heidari, R., Samadi, S.: Determination of volatile components of saffron by optimised ultrasound-assisted extraction in tandem with dispersive liquid-liquid microextraction followed by gas chromatography–mass spectrometry. Food Chem. 143, 499–505 (2014)

Enteshari, M., Mohammadi, A., Nayebzadeh, K., Azadniya, E.: Optimization of headspace single-drop microextraction coupled with gas chromatography–mass spectrometry for determining volatile oxidation compounds in mayonnaise by response surface methodology. Food Anal. Methods 7, 438–448 (2014)

Sereshti, H., Samadi, S., Jalali-Heravi, M.: Determination of volatile components of green, black, oolong and white tea by optimized ultrasound-assisted extraction-dispersive liquid–liquid microextraction coupled with gas chromatography. J. Chromatogr. A 1280, 1–8 (2013)

Moreira, N., Meireles, S., Brandao, T., de Pinho, P.G.: Optimization of the HS-SPME-GC-IT/MS method using a central composite design for volatile carbonyl compounds determination in beers. Talanta 117, 523–531 (2013)

Rocha, D.G., Santos, F.A., da Silva, J.C.C., Augusti, R., Faria, A.F.: Multiresidue determination of fluoroquinolones in poultry muscle and kidney according to the regulation 2002/657/EC. A systematic comparison of two different approaches: liquid chromatography coupled to high-resolution mass spectrometry or tandem mass spectrometry. J. Chromatogr. A 1379, 83–91 (2015)

Low, K.H., Zain, S.M., Abas, M.R.: Evaluation of microwave-assisted digestion condition for the determination of metals in fish samples by inductively coupled plasma mass spectrometry using experimental designs. Int. J. Environ. Anal. Chem. 92, 1161–1175 (2011)

Reboredo-Rodríguez, P., González-Barreiro, C., Cancho-Grande, B., Simal-Gándara, J.: Dynamic headspace/GC–MS to control the aroma fingerprint of extra-virgin olive oil from the same and different olive varieties. Food Control 25, 684–695 (2012)

Zaghdoudi, K., Pontvianne, S., Framboisier, X., Achard, M., Kudaibergenova, R., Ayadi-Trabelsi, M., Kalthoum-Cherif, J., Vanderesse, R., Frochot, C., Guiavarc'h, Y.: Accelerated solvent extraction of carotenoids from: Tunisian Kaki (Diospyros kaki L.), Peach (Prunus persica L.) and Apricot (Prunus armeniaca L.). Food Chem. 184, 131–139 (2015)

Pinho, G.P., Silverio, F.O., Evangelista, G.F., Mesquita, L.V., Barbosa, E.S.: Determination of chlorobenzenes in sewage sludge by solid–liquid extraction with purification at low temperature and gas chromatography mass spectrometry. J. Braz. Chem. Soc. 25, 1292–1301 (2014)

El Atrache, L.L., Ben Sghaier, R., Kefi, B.B., Haldys, V., Dachraoui, M., Tortajada, J.: Factorial design optimization of experimental variables in preconcentration of carbamates pesticides in water samples using solid phase extraction and liquid chromatography-electrospray-mass spectrometry determination. Talanta 117, 392–398 (2013)

Manzini, S., Durante, C., Baschieri, C., Cocchi, M., Sighinolfi, S., Totaro, S., Marchetti, A.: Optimization of a dynamic headspace – thermal desorption – gas chromatography/mass spectrometry procedure for the determination of furfurals in vinegars. Talanta 85, 863–869 (2011)

Vincelet, C., Roussel, J., Benanou, D.: Experimental designs dedicated to the evaluation of a membrane extraction method: membrane-assisted solvent extraction for compounds having different polarities by means of gas chromatography-mass detection. Anal. Bioanal. Chem. 396, 2285–2292 (2010)

Li, Y., Zhang, C.: Optimization of dispersive liquid–liquid microextraction based on solidification of floating organic drop of endocrine disrupting compounds in liquid food samples using response surface plot method. Asian J. Chem. 26, 4849–4854 (2014)

Dawes, M.L., Bergum, J.S., Schuster, A.E., Aubry, A.-F.: Application of a design of experiment approach in the development of a sensitive bioanalytical assay in human plasma. J. Pharm. Biomed. Anal. 70, 401–407 (2012)

Meier, S., Mjøs, S.A., Joensen, H., Grahl-Nielsen, O.: Validation of a one-step extraction/methylation method for determination of fatty acids and cholesterol in marine tissues. J. Chromatogr. A 1104, 291–298 (2006)

Barro, R., Garcia-Jares, C., Llompart, M., Herminia Bollain, M., Cela, R.: Rapid and sensitive determination of pyrethroids indoors using active sampling followed by ultrasound-assisted solvent extraction and gas chromatography. J. Chromatogr. A 1111, 1–10 (2006)

Yusà, V., Pastor, A., de la Guardia, M.: Microwave-assisted extraction of polybrominated diphenyl ethers and polychlorinated naphthalenes concentrated on semipermeable membrane devices. Anal. Chim. Acta 565, 103–111 (2006)

Vieira, H.P., Neves, A.A., de Queiroz, M.: Optimization and validation of liquid-liquid extraction with the low temperature partition technique (LLE-LTP) for pyrethroids in water and GC analysis. Quim. Nova 30, 535–540 (2007)

Verma, A., Hartonen, K., Riekkola, M.-L.: Optimization of supercritical fluid extraction of indole alkaloids from Catharanthus roseus using experimental design methodology—comparison with other extraction techniques. Phytochem. Anal. 19, 52–63 (2008)

Sereshti, H., Karimi, M., Samadi, S.: Application of response surface method for optimization of dispersive liquid–liquid microextraction of water-soluble components of Rosa damascena Mill. essential oil. J. Chromatogr. A 1216, 198–204 (2009)

Calderón-Preciado, D., Jiménez-Cartagena, C., Peñuela, G., Bayona, J.: Development of an analytical procedure for the determination of emerging and priority organic pollutants in leafy vegetables by pressurized solvent extraction followed by GC–MS determination. Anal. Bioanal. Chem. 394, 1319–1327 (2009)

Karvela, E., Makris, D., Kalogeropoulos, N., Karathanos, V., Kefalas, P.: Factorial design optimization of grape (Vitis vinifera) seed polyphenol extraction. Eur. Food Res. Technol. 229, 731–742 (2009)

Ozcan, S., Tor, A., Aydin, M.E.: Determination of polycyclic aromatic hydrocarbons in soil by miniaturized ultrasonic extraction and gas chromatography-mass selective detection. Clean Soil, Air, Water 37, 811–817 (2009)

Ozcan, S.: Viable and rapid determination of organochlorine pesticides in water. Clean Soil, Air, Water 38, 457–465 (2010)

García-Rodríguez, D., Carro, A.M., Cela, R., Lorenzo, R.A.: Microwave-assisted extraction and large-volume injection gas chromatography tandem mass spectrometry determination of multiresidue pesticides in edible seaweed. Anal. Bioanal. Chem. 398, 1005–1016 (2010)

Garbi, A., Sakkas, V., Fiamegos, Y.C., Stalikas, C.D., Albanis, T.: Sensitive determination of pesticides residues in wine samples with the aid of single-drop microextraction and response surface methodology. Talanta 82, 1286–1291 (2010)

Peña-Alvarez, A., Alvarado, L.A., Vera-Avila, L.E.: Analysis of capsaicin and dihydrocapsaicin in hot peppers by ultrasound assisted extraction followed by gas chromatography–mass spectrometry. Instrum. Sci. Technol. 40, 429–440 (2012)

Guzmán-Guillén, R., Prieto Ortega, A.I., Moreno, I., González, G., Eugenia Soria-Díaz, M., Vasconcelos, V., Cameán, A.M.: Development and optimization of a method for the determination of cylindrospermopsin from strains of Aphanizomenon cultures: intra-laboratory assessment of its accuracy by using validation standards. Talanta 100, 356–363 (2012)

Vega-Morales, T., Sosa-Ferrera, Z., Santana-Rodríguez, J.J.: The use of microwave assisted extraction and on-line chromatography-mass spectrometry for determining endocrine-disrupting compounds in sewage sludges. Water Air Soil Pollut. 224, 1–15 (2013)

Alexandru, L., Pizzale, L., Conte, L., Barge, A., Cravotto, G.: Microwave-assisted extraction of edible Cicerbita alpina shoots and its LC-MS phenolic profile. J. Sci. Food Agric. 93, 2676–2682 (2013)

Munaretto, J.S., Ferronato, G., Ribeiro, L.C., Martins, M.L., Adaime, M.B., Zanella, R.: Development of a multiresidue method for the determination of endocrine disrupters in fish fillet using gas chromatography-triple quadrupole tandem mass spectrometry. Talanta 116, 827–834 (2013)

Zheng, J., Liu, B., Ping, J., Chen, B., Wu, H., Zhang, B.: Vortex- and shaker-assisted liquid–liquid microextraction (VSA-LLME) coupled with gas chromatography and mass spectrometry (GC-MS) for analysis of 16 polycyclic aromatic hydrocarbons (PAHs) in offshore produced water. Water Air Soil Pollut. 226, 1–13 (2015)

Danielsson, A.P.H., Moritz, T., Mulder, H., Spégel, P.: Development and optimization of a metabolomic method for analysis of adherent cell cultures. Anal. Biochem. 404, 30–39 (2010)

Racamonde, I., Rodil, R., Quintana, J.B., Sieira, B.J., Kabir, A., Furton, K.G., Cela, R.: Fabric phase sorptive extraction: a new sorptive microextraction technique for the determination of non-steroidal anti-inflammatory drugs from environmental water samples. Anal. Chim. Acta 865, 22–30 (2015)

Alasonati, E., Fabbri, B., Fettig, I., Yardin, C., Busto, M.E.D., Richter, J., Philipp, R., Fisicaro, P.: Experimental design for TBT quantification by isotope dilution SPE-GC-ICP-MS under the European Water Framework Directive. Talanta 134, 576–586 (2015)

Ahmadi, K., Abdollahzadeh, Y., Asadollahzadeh, M., Hemmati, A., Tavakoli, H., Torkaman, R.: Chemometric assisted ultrasound leaching-solid phase extraction followed by dispersive-solidification liquid–liquid microextraction for determination of organophosphorus pesticides in soil samples. Talanta 137, 167–173 (2015)

Walravens, J., Mikula, H., Rychlik, M., Asam, S., Ediage, E.N., Di Mavungu, J.D., Van Landschoot, A., Vanhaecke, L., De Saeger, S.: Development and validation of an ultra-high-performance liquid chromatography tandem mass spectrometric method for the simultaneous determination of free and conjugated Alternaria toxins in cereal-based foodstuffs. J. Chromatogr. A 1372, 91–101 (2014)

Truzzi, C., Illuminati, S., Finale, C., Annibaldi, A., Lestingi, C., Scarponi, G.: Microwave-assisted solvent extraction of melamine from seafood and determination by gas chromatography–mass spectrometry: optimization by factorial design. Anal. Lett. 47, 1118–1133 (2014)

Medina-Dzul, K., Munoz-Rodriguez, D., Moguel-Ordonez, Y., Carrera-Figueiras, C.: Application of mixed solvents for elution of organophosphate pesticides extracted from raw propolis by matrix solid-phase dispersion and analysis by GC-MS. Chem. Pap. 68, 1474–1481 (2014)

Garcia-Jares, C., Celeiro, M., Lamas, J.P., Iglesias, M., Lores, M., Llompart, M.: Rapid analysis of fungicides in white wines from Northwest Spain by ultrasound-assisted emulsification-microextraction and gas chromatography–mass spectrometry. Anal. Methods 6, 3108–3116 (2014)

Pereira, A.G., D'Avila, F.B., Ferreira, P.C.L., Holler, M.G., Limberger, R.P., Froehlich, P.E.: Determination of cocaine, its metabolites and pyrolytic products by LC-MS using a chemometric approach. Anal. Methods 6, 456–462 (2014)

Mauro, D., Ciardullo, S., Civitareale, C., Fiori, M., Pastorelli, A.A., Stacchini, P., Palleschi, G.: Development and validation of a multi-residue method for determination of 18 beta-agonists in bovine urine by UPLC-MS/MS. Microchem. J. 115, 70–77 (2014)

Bussche, J.V., Decloedt, A., Van Meulebroek, L., De Clercq, N., Lock, S., Stahl-Zeng, J., Vanhaecke, L.: A novel approach to the quantitative detection of anabolic steroids in bovine muscle tissue by means of a hybrid quadrupole time-of-flight-mass spectrometry instrument. J. Chromatogr. A 1360, 229–239 (2014)

Guerrero, E.D., Marín, R.N., Mejías, R.C., Barroso, C.G.: Optimization of stir bar sorptive extraction applied to the determination of volatile compounds in vinegars. J. Chromatogr. A 1104, 47–53 (2006)

Regueiro, J., Llompart, M., García-Jares, C., Cela, R.: Determination of polybrominated diphenyl ethers in domestic dust by microwave-assisted solvent extraction and gas chromatography-tandem mass spectrometry. J. Chromatogr. A 1137, 1–7 (2006)

Serôdio, P., Cabral, M.S., Nogueira, J.M.F.: Use of experimental design in the optimization of stir bar sorptive extraction for the determination of polybrominated diphenyl ethers in environmental matrices. J. Chromatogr. A 1141, 259–270 (2007)

Amvrazi, E.G., Tsiropoulos, N.G.: Chemometric study and optimization of extraction parameters in single-drop microextraction for the determination of multiclass pesticide residues in grapes and apples by gas chromatography mass spectrometry. J. Chromatogr. A 1216, 7630–7638 (2009)

Delgado, R., Durán, E., Castro, R., Natera, R., Barroso, C.G.: Development of a stir bar sorptive extraction method coupled to gas chromatography–mass spectrometry for the analysis of volatile compounds in sherry brandy. Anal. Chim. Acta 672, 130–136 (2010)

Emídio, E.S., de Menezes Prata, V., de Santana, F.J.M., Dórea, H.S.: Hollow fiber-based liquid phase microextraction with factorial design optimization and gas chromatography-tandem mass spectrometry for determination of cannabinoids in human hair. J. Chromatogr. B 878, 2175–2183 (2010)

Jofré, V.P., Assof, M.V., Fanzone, M.L., Goicoechea, H.C., Martínez, L.D., Silva, M.F.: Optimization of ultrasound assisted-emulsification-dispersive liquid–liquid microextraction by experimental design methodologies for the determination of sulfur compounds in wines by gas chromatography–mass spectrometry. Anal. Chim. Acta 683, 126–135 (2010)

Khajeh, M., Ghanbari, A.: Application of factorial design and Box-Behnken matrix in the optimization of a microwave-assisted extraction of essential oils from Salvia mirzayanii. Nat. Prod. Res. 25, 1766–1770 (2011)

Tao, Y., Yu, G., Chen, D., Pan, Y., Liu, Z., Wei, H., Peng, D., Huang, L., Wang, Y., Yuan, Z.: Determination of 17 macrolide antibiotics and avermectins residues in meat with accelerated solvent extraction by liquid chromatography-tandem mass spectrometry. J. Chromatogr. B 897, 64–71 (2012)

Tsiallou, T.P., Sakkas, V.A., Albanis, T.A.: Development and application of chemometric-assisted dispersive liquid–liquid microextraction for the determination of suspected fragrance allergens in water samples. J. Sep. Sci. 35, 1659–1666 (2012)

Patil, A.A., Sachin, B.S., Shinde, D.B., Wakte, P.S.: Supercritical CO2 assisted extraction and LC–MS identification of Picroside I and Picroside II from Picrorhiza kurroa. Phytochem. Anal. 24, 97–104 (2013)

Silveira, M.A.K., Caldas, S.S., Guilherme, J.R., Costa, F.P., Guimaraes, B.D., Cerqueira, M.B.R., Soares, B.M., Primel, E.G.: Quantification of pharmaceuticals and personal care product residues in surface and drinking water samples by SPE and LC-ESI-MS/MS. J. Braz. Chem. Soc. 24, 1385–1395 (2013)

Silva, C.P.D., Emídio, E.S., Marchi, M.R.R.D.: UV filters in water samples: experimental design on the SPE optimization followed by GC-MS/MS analysis. J. Braz. Chem. Soc. 24, 1433–1441 (2013)

Hüffer, T., Osorio, X., Jochmann, M., Schilling, B., Schmidt, T.: Multi-walled carbon nanotubes as sorptive material for solventless in-tube microextraction (ITEX2)—a factorial design study. Anal. Bioanal. Chem. 405, 8387–8395 (2013)

Bolzan, C.M., Caldas, S.S., Guimarães, B.S., Primel, E.G.: Dispersive liquid–liquid microextraction with liquid chromatography-tandem mass spectrometry for the determination of triazine, neonicotinoid, triazole, and imidazolinone pesticides in mineral water samples. J. Braz. Chem. Soc. 26, 1902–1913 (2015)

León-Pérez, D., Muñoz-Jiménez, A., Jiménez-Cartagena, C.: Determination of mercury species in fish and seafood by gas chromatography–mass spectrometry: validation study. Food Anal. Methods 8, 2383–2391 (2015)

Suh, J.H., Eom, H.Y., Kim, U., Kim, J., Cho, H.-D., Kang, W., Kim, D.S., Han, S.B.: Highly sensitive electromembrane extraction for the determination of volatile organic compound metabolites in dried urine spot. J. Chromatogr. A 1416, 1–9 (2015)

Elpa, D., Duran-Guerrero, E., Castro, R., Natera, R., Barroso, C.G.: Development of a new stir bar sorptive extraction method for the determination of medium-level volatile thiols in wine. J. Sep. Sci. 37, 1867–1872 (2014)

Aguirre, J., Bizkarguenaga, E., Iparraguirre, A., Fernandez, L.A., Zuloaga, O., Prieto, A.: Development of stir-bar sorptive extraction-thermal desorption-gas chromatography–mass spectrometry for the analysis of musks in vegetables and amended soils. Anal. Chim. Acta 812, 74–82 (2014)

Cortada, C., dos Reis, L.C., Vidal, L., Llorca, J., Canals, A.: Determination of cyclic and linear siloxanes in wastewater samples by ultrasound-assisted dispersive liquid–liquid microextraction followed by gas chromatography–mass spectrometry. Talanta 120, 191–197 (2014)

Petridis, N.P., Sakkas, V.A., Albanis, T.A.: Chemometric optimization of dispersive suspended microextraction followed by gas chromatography–mass spectrometry for the determination of polycyclic aromatic hydrocarbons in natural waters. J. Chromatogr. A 1355, 46–52 (2014)

Ebrahimzadeh, H., Mirbabaei, F., Asgharinezhad, A.A., Shekari, N., Mollazadeh, N.: Optimization of solvent bar microextraction combined with gas chromatography for preconcentration and determination of methadone in human urine and plasma samples. J. Chromatogr. B 947, 75–82 (2014)

Cai, K., Zhao, H.N., Xiang, Z.M., Cai, B., Pan, W.J., Lei, B.: Enzymatic hydrolysis followed by gas chromatography-mass spectroscopy for determination of glycosides in tobacco and method optimization by response surface methodology. Anal. Methods 6, 7006–7014 (2014)

Salgueiro-Gonzalez, N., Turnes-Carou, I., Muniategui-Lorenzo, S., Lopez-Mahia, P., Prada-Rodriguez, D.: Analysis of endocrine disruptor compounds in marine sediments by in cell clean up-pressurized liquid extraction-liquid chromatography tandem mass spectrometry determination. Anal. Chim. Acta 852, 112–120 (2014)

Ezquerro, Ó., Garrido-López, Á., Tena, M.T.: Determination of 2,4,6-trichloroanisole and guaiacol in cork stoppers by pressurised fluid extraction and gas chromatography–mass spectrometry. J. Chromatogr. A 1102, 18–24 (2006)

Romero, J., López, P., Rubio, C., Batlle, R., Nerín, C.: Strategies for single-drop microextraction optimization and validation: application to the detection of potential antimicrobial agents. J. Chromatogr. A 1166, 24–29 (2007)

García, I., Ignacio, M., Mouteira, A., Cobas, J., Carro, N.: Assisted solvent extraction and ion-trap tandem mass spectrometry for the determination of polychlorinated biphenyls in mussels. Comparison with other extraction techniques. Anal. Bioanal. Chem. 390, 729–737 (2008)

Panagiotou, A.N., Sakkas, V.A., Albanis, T.A.: Application of chemometric assisted dispersive liquid–liquid microextraction to the determination of personal care products in natural waters. Anal. Chim. Acta 649, 135–140 (2009)

Cortada, C., Vidal, L., Canals, A.: Determination of geosmin and 2-methylisoborneol in water and wine samples by ultrasound-assisted dispersive liquid–liquid microextraction coupled to gas chromatography–mass spectrometry. J. Chromatogr. A 1218, 17–22 (2011)

Tsiropoulos, N.G., Amvrazi, E.G.: Determination of pesticide residues in honey by single-drop microextraction and gas chromatography. J. AOAC Int. 94, 634–644 (2011)

Cheong, K.W., Tan, C.P., Mirhosseini, H., Chin, S.T., Che Man, Y.B., Hamid, N.S.A., Osman, A., Basri, M.: Optimization of equilibrium headspace analysis of volatile flavor compounds of Malaysian Soursop (Annona muricata): comprehensive two-dimensional gas chromatography time-of-flight mass spectrometry (GC×GC-TOFMS). Food Chem. 125, 1481–1489 (2011)

Wang, H.P., Zrada, M., Anderson, K., Katwaru, R., Harradine, P., Choi, B., Tong, V., Pajkovic, N., Mazenko, R., Cox, K., Cohen, L.H.: Understanding and reducing the experimental variability of in vitro plasma protein binding measurements. J. Pharm. Sci. 103, 3302–3309 (2014)

Bylda, C., Velichkova, V., Bolle, J., Thiele, R., Kobold, U., Volmer, D.A.: Magnetic beads as an extraction medium for simultaneous quantification of acetaminophen and structurally related compounds in human serum. Drug Test. Anal. 7, 457–466 (2015)

Čabala, R., Bursová, M.: Bell-shaped extraction device assisted liquid–liquid microextraction technique and its optimization using response-surface methodology. J. Chromatogr. A 1230, 24–29 (2012)

Campillo, N., Viñas, P., Férez-Melgarejo, G., Hernández-Córdoba, M.: Dispersive liquid–liquid microextraction for the determination of flavonoid aglycone compounds in honey using liquid chromatography with diode array detection and time-of-flight mass spectrometry. Talanta 131, 185–191 (2015)

Viñas, P., Bravo-Bravo, M., López-García, I., Pastor-Belda, M., Hernández-Córdoba, M.: Pressurized liquid extraction and dispersive liquid–liquid microextraction for determination of tocopherols and tocotrienols in plant foods by liquid chromatography with fluorescence and atmospheric pressure chemical ionization-mass spectrometry detection. Talanta 119, 98–104 (2014)

Mokhtari, B., Dalali, N., Pourabdollah, K.: Taguchi L32 orthogonal array design for evaluation of three dispersive microextraction methods: a case study for determination of methyl methacrylate in produced water by DLLME, DLLME-SLW, DLLME-SFO. Arab. J. Sci. Eng. 39, 53–66 (2014)

Shau-Chun, W., Shu-Chi, L.E.E., Chia-Hung, H., Hui-Ju, L., Chih-Min, H., Tung-Hu, T.: Using orthogonal arrays to obtain efficient and reproducible extraction conditions of geniposide and genipin in gardenia fruit with liquid chromatography-mass spectrometry determinations. J. Food Drug Anal. 19, 486–494 (2011)

Viñas, P., López-García, I., Campillo, N., Rivas, R., Hernández-Córdoba, M.: Ultrasound-assisted emulsification microextraction coupled with gas chromatography–mass spectrometry using the Taguchi design method for bisphenol migration studies from thermal printer paper, toys, and baby utensils. Anal. Bioanal. Chem. 404, 671–678 (2012)

Sousa, R., Homem, V., Moreira, J.L., Madeira, L.M., Alves, A.: Optimization and application of dispersive liquid–liquid microextraction for simultaneous determination of carbamates and organophosphorus pesticides in waters. Anal. Methods 5, 2736–2745 (2013)

Cacho, J.I., Campillo, N., Viñas, P., Hernández-Córdoba, M.: Stir bar sorptive extraction with EG-silicone coating for bisphenols determination in personal care products by GC–MS. J. Pharm. Biomed. Anal. 78/79, 255–260 (2013)

Mudiam, M.K.R., Ratnasekhar, C.: Ultra sound assisted one step rapid derivatization and dispersive liquid–liquid microextraction followed by gas chromatography–mass spectrometric determination of amino acids in complex matrices. J. Chromatogr. A 1291, 10–18 (2013)

Jiye, A., Huang, Q., Wang, G.J., Zha, W.B., Yan, B., Ren, H.C., Gu, S., Zhang, Y., Zhang, Q., Shao, F., Sheng, L.: Global analysis of metabolites in rat and human urine based on gas chromatography/time-of-flight mass spectrometry. Anal. Biochem. 379, 20–26 (2008)

Lin, J., Su, M., Wang, X., Qiu, Y., Li, H., Hao, J., Yang, H., Zhou, M., Yan, C., Jia, W.: Multiparametric analysis of amino acids and organic acids in rat brain tissues using GC/MS. J. Sep. Sci. 31, 2831–2838 (2008)

Gu, S.A.J., Wang, G., Zha, W., Yan, B., Zhang, Y., Ren, H., Cao, B., Liu, L.: Metabonomic profiling of liver metabolites by gas chromatography–mass spectrometry and its application to characterizing hyperlipidemia. Biomed. Chromatogr. 24, 245–252 (2010)

Pan, L., Qiu, Y., Chen, T., Lin, J., Chi, Y., Su, M., Zhao, A., Jia, W.: An optimized procedure for metabonomic analysis of rat liver tissue using gas chromatography/time-of-flight mass spectrometry. J. Pharm. Biomed. Anal. 52, 589–596 (2010)

Jiye, A., Trygg, J., Gullberg, J., Johansson, A.I., Jonsson, P., Antti, H., Marklund, S.L., Moritz, T.: Extraction and GC/MS analysis of the human blood plasma metabolome. Anal. Chem. 77, 8086–8094 (2005)

González, P., Racamonde, I., Carro, A.M., Lorenzo, R.A.: Combined solid-phase extraction and gas chromatography–mass spectrometry used for determination of chloropropanols in water. J. Sep. Sci. 34, 2697–2704 (2011)

Castro, L., Ross, C., Vixie, K.: Optimization of a solid phase dynamic extraction (SPDE) method for beer volatile profiling. Food Anal. Methods 8, 2115–2124 (2015)

Hubert, C., Houari, S., Rozet, E., Lebrun, P., Hubert, P.: Towards a full integration of optimization and validation phases: an analytical-quality-by-design approach. J. Chromatogr. A 1395, 88–98 (2015)

Pardo, O., Yusà, V., León, N., Pastor, A.: Determination of bisphenol diglycidyl ether residues in canned foods by pressurized liquid extraction and liquid chromatography-tandem mass spectrometry. J. Chromatogr. A 1107, 70–78 (2006)

Coscollà, C., Yusà, V., Martí, P., Pastor, A.: Analysis of currently used pesticides in fine airborne particulate matter (PM 2.5) by pressurized liquid extraction and liquid chromatography-tandem mass spectrometry. J. Chromatogr. A 1200, 100–107 (2008)

Pardo, O., Yusà, V., León, N., Pastor, A.: Development of a method for the analysis of seven banned azo-dyes in chilli and hot chilli food samples by pressurized liquid extraction and liquid chromatography with electrospray ionization-tandem mass spectrometry. Talanta 78, 178–186 (2009)

Székely, G., Henriques, B., Gil, M., Ramos, A., Alvarez, C.: Design of experiments as a tool for LC–MS/MS method development for the trace analysis of the potentially genotoxic 4-dimethylaminopyridine impurity in glucocorticoids. J. Pharm. Biomed. Anal. 70, 251–258 (2012)

Pardo, O., Yusà, V., León, N., Pastor, A.: Development of a pressurised liquid extraction and liquid chromatography with electrospray ionization-tandem mass spectrometry method for the determination of domoic acid in shellfish. J. Chromatogr. A 1154, 287–294 (2007)

Pardo, O., Yusà, V., Coscollà, C., León, N., Pastor, A.: Determination of acrylamide in coffee and chocolate by pressurised fluid extraction and liquid chromatography-tandem mass spectrometry. Food Addit. Contam. 24, 663–672 (2007)

Schappler, J., Guillarme, D., Prat, J., Veuthey, J.-L., Rudaz, S.: Coupling CE with atmospheric pressure photoionization MS for pharmaceutical basic compounds: optimization of operating parameters. Electrophoresis 28, 3078–3087 (2007)

Székely, G., Henriques, B., Gil, M., Alvarez, C.: Experimental design for the optimization and robustness testing of a liquid chromatography tandem mass spectrometry method for the trace analysis of the potentially genotoxic 1,3-diisopropylurea. Drug Test. Anal. 6, 898–908 (2014)

Wang, J., Schnute, W.C.: Optimizing mass spectrometric detection for ion chromatographic analysis. I. Common anions and selected organic acids. Rapid Commun. Mass Spectrom. 23, 3439–3447 (2009)

Yusà, V., Quintás, G., Pardo, O., Martí, P., Pastor, A.: Determination of acrylamide in foods by pressurized fluid extraction and liquid chromatography-tandem mass spectrometry used for a survey of Spanish cereal-based foods. Food Addit. Contam. 23, 237–244 (2006)

Moberg, M., Bergquist, J., Bylund, D.: A generic stepwise optimization strategy for liquid chromatography electrospray ionization tandem mass spectrometry methods. J. Mass Spectrom. 41, 1334–1345 (2006)

Müller, A., Flottmann, D., Schulz, W., Seitz, W., Weber, W.H.: Alternative validation of a LC-MS/MS-multi-method for pesticides in drinking water. Clean Soil, Air, Water 35, 329–338 (2007)

Müller, A., Flottmann, D., Schulz, W., Seitz, W., Weber, W.: Assessment of robustness for an LC-MS-MS multi-method by response-surface methodology, and its sensitivity. Anal. Bioanal. Chem. 390, 1317–1326 (2008)

Hermo, M.P., Barrón, D., Barbosa, J.: Determination of multiresidue quinolones regulated by the European Union in pig liver samples: high-resolution time-of-flight mass spectrometry versus tandem mass spectrometry detection. J. Chromatogr. A 1201, 1–14 (2008)

Dillon, L.A., Stone, V.N., Croasdell, L.A., Fielden, P.R., Goddard, N.J., Thomas, C.L.P.: Optimization of secondary electrospray ionization (SESI) for the trace determination of gas-phase volatile organic compounds. Analyst 135, 306–314 (2010)

Alves, C., Santos-Neto, A.J., Fernandes, C., Rodrigues, J.C., Lanças, F.M.: Analysis of tricyclic antidepressant drugs in plasma by means of solid-phase microextraction-liquid chromatography-mass spectrometry. J. Mass Spectrom. 42, 1342–1347 (2007)

Hernández-Borges, J., Rodríguez-Delgado, M., García-Montelongo, F.J., Cifuentes, A.: Analysis of pesticides in soy milk combining solid-phase extraction and capillary electrophoresis-mass spectrometry. J. Sep. Sci. 28, 948–956 (2005)

Charles, L., Caloprisco, S., Mohamed, S., Sergent, M.: Chemometric approach to evaluate the parameters affecting electrospray: application of a statistical design of experiments for the study of arginine ionization. Eur. J. Mass Spectrom. (Chichester, Engl) 11, 361–370 (2005)

Maragou, N.C., Rosenberg, E., Thomaidis, N.S., Koupparis, M.A.: Direct determination of the estrogenic compounds 8-prenylnaringenin, zearalenone, α- and β-zearalenol in beer by liquid chromatography-mass spectrometry. J. Chromatogr. A 1202, 47–57 (2008)

Kruve, A., Herodes, K., Leito, I.: Optimization of electrospray interface and quadrupole ion trap mass spectrometer parameters in pesticide liquid chromatography/electrospray ionization mass spectrometry analysis. Rapid Commun. Mass Spectrom. 24, 919–926 (2010)

Dongari, N., Sauter, E.R., Tande, B.M., Kubatova, A.: Determination of celecoxib in human plasma using liquid chromatography with high resolution time of flight-mass spectrometry. J. Chromatogr. B 955, 86–92 (2014)

Srinubabu, G., Ratnam, B.V.V., Rao, A.A., Rao, M.N.: Development and validation of LC-MS/MS method for the quantification of oxcarbazepine in human plasma using an experimental design. Chem. Pharm. Bull. (Tokyo) 56, 28–33 (2008)

Maragou, N., Thomaidis, N., Koupparis, M.: Optimization and comparison of ESI and APCI LC-MS/MS methods: a case study of irgarol 1051, diuron, and their degradation products in environmental samples. J. Am. Soc. Mass Spectrom. 22, 1826–1838 (2011)

Perrenoud, A.G.G., Veuthey, J.-L., Guillarme, D.: Coupling state-of-the-art supercritical fluid chromatography and mass spectrometry: from hyphenation interface optimization to high-sensitivity analysis of pharmaceutical compounds. J. Chromatogr. A 1339, 174–184 (2014)

Laures, A.M.F., Wolff, J.-C., Eckers, C., Borman, P.J., Chatfield, M.J.: Investigation into the factors affecting accuracy of mass measurements on a time-of-flight mass spectrometer using design of experiment. Rapid Commun. Mass Spectrom. 21, 529–535 (2007)

Champarnaud, E., Laures, A.M.F., Borman, P.J., Chatfield, M.J., Kapron, J.T., Harrison, M., Wolff, J.-C.: Trace level impurity method development with high-field asymmetric waveform ion mobility spectrometry: systematic study of factors affecting the performance. Rapid Commun. Mass Spectrom. 23, 181–193 (2009)

De Clercq, N., Julie, V., Croubels, S., Delahaut, P., Vanhaecke, L.: A validated analytical method to study the long-term stability of natural and synthetic glucocorticoids in livestock urine using ultra-high performance liquid chromatography coupled to Orbitrap-high resolution mass spectrometry. J. Chromatogr. A 1301, 111–121 (2013)

Espinosa, M.S., Folguera, L., Magallanes, J.F., Babay, P.A.: Exploring analyte response in an ESI-MS system with different chemometric tools. Chemometr. Intellig. Lab. Syst. 146, 120–127 (2015)

Zachariadis, G.A., Rosenberg, E.: Use of modified Doehlert-type experimental design in optimization of a hybrid electrospray ionization ion trap time-of-flight mass spectrometry technique for glutathione determination. Rapid Commun. Mass Spectrom. 27, 489–499 (2013)

Zhang, L., Borror, C.M., Sandrin, T.R.: A designed experiments approach to optimization of automated data acquisition during characterization of bacteria with MALDI-TOF mass spectrometry. PLoS One 9, e92720 (2014)

Herrero, A., Reguera, C., Ortiz, M.C., Sarabia, L.: Determination of dichlobenil and its major metabolite (BAM) in onions by PTV-GC-MS using PARAFAC2 and experimental design methodology. Chemometr. Intellig. Lab. Syst. 133, 92–108 (2014)

Beser, M.I., Beltran, J., Yusà, V.: Design of experiment approach for the optimization of polybrominated diphenyl ethers determination in fine airborne particulate matter by microwave-assisted extraction and gas chromatography coupled to tandem mass spectrometry. J. Chromatogr. A 1323, 1–10 (2014)

Yusà, V., Quintás, G., Pardo, O., Pastor, A., de la Guardia, M.: Determination of PAHs in airborne particles by accelerated solvent extraction and large-volume injection-gas chromatography–mass spectrometry. Talanta 69, 807–815 (2006)

Muir, B., Quick, S., Slater, B.J., Cooper, D.B., Moran, M.C., Timperley, C.M., Carrick, W.A., Burnell, C.K.: Analysis of chemical warfare agents: II. Use of thiols and statistical experimental design for the trace level determination of vesicant compounds in air samples. J. Chromatogr. A 1068, 315–326 (2005)

Esteve-Turrillas, F.A., Caupos, E., Llorca, I., Pastor, A., de la Guardia, M.: Optimization of large-volume injection for the determination of polychlorinated biphenyls in children's fast-food menus by low-resolution mass spectrometry. J. Agric. Food Chem. 56, 1797–1803 (2008)

León, N., Yusà, V., Pardo, O., Pastor, A.: Determination of 3-MCPD by GC-MS/MS with PTV-LV injector used for a survey of Spanish foodstuffs. Talanta 75, 824–831 (2008)

Leite, N.F., Peralta-Zamora, P., Grassi, M.T.: Multifactorial optimization approach for the determination of polycyclic aromatic hydrocarbons in river sediments by gas chromatography-quadrupole ion trap selected ion storage mass spectrometry. J. Chromatogr. A 1192, 273–281 (2008)

Cheong, M.W., Lee, J.Y.K., Liu, S.Q., Zhou, W., Nie, Y., Kleine-Benne, E., Curran, P., Yu, B.: Simultaneous quantitation of volatile compounds in citrus beverage through stir bar sorptive extraction coupled with thermal desorption-programmed temperature vaporization. Talanta 107, 118–126 (2013)