Abstract

We improve the previously best known upper bounds on the sizes of \(\theta \)-spherical codes for every \(\theta <\theta ^*\approx 62.997^{\circ }\) at least by a factor of 0.4325, in sufficiently high dimensions. Furthermore, for sphere packing densities in dimensions \(n\ge 2000\) we have an improvement at least by a factor of \(0.4325+\frac{51}{n}\). Our method also breaks many non-numerical sphere packing density bounds in smaller dimensions. This is the first such improvement for each dimension since the work of Kabatyanskii and Levenshtein (Problemy Peredači Informacii 14(1):3–25, 1978) and its later improvement by Levenshtein (Dokl Akad Nauk SSSR 245(6):1299–1303, 1979). Novelties of this paper include the analysis of triple correlations, usage of the concentration of mass in high dimensions, and the study of the spacings between the roots of Jacobi polynomials.


1 Introduction

1.1 Spherical codes and packings

Packing densities have been studied extensively, for purely mathematical reasons as well as for their connections to coding theory. The work of Conway and Sloane is a comprehensive reference for this subject [10]. We proceed by defining the basics of this subject. Consider \({\mathbb {R}}^n\) equipped with the Euclidean metric |.| and the associated volume \(\textrm{vol}(.)\). For each real \(r>0\) and each \(x\in {\mathbb {R}}^n\), we denote by \(B_n(x,r)\) the open ball in \({\mathbb {R}}^n\) centered at x and of radius r. For each discrete set of points \(S\subset {\mathbb {R}}^n\) such that any two distinct points \(x,y\in S\) satisfy \(|x-y|\ge 2\), we can consider

\[\mathcal {P}:=\bigcup _{x\in S}B_n(x,1),\]

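the union of non-overlapping unit open balls centered at the points of S. This is called a sphere packing (S may vary), and we may associate to it the function mapping each real \(r>0\) to

\[\delta _{\mathcal {P}}(r):=\frac{\textrm{vol}(\mathcal {P}\cap B_n(0,r))}{\textrm{vol}(B_n(0,r))}.\]

The packing density of \(\mathcal {P}\) is defined as

\[\delta _{\mathcal {P}}:=\limsup _{r\rightarrow \infty }\delta _{\mathcal {P}}(r).\]

Clearly, this is a finite number. The maximal sphere packing density in \({\mathbb {R}}^n\) is defined as

\[\delta _n:=\sup _{\mathcal {P}\subset {\mathbb {R}}^n}\delta _{\mathcal {P}},\]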

a supremum over all sphere packings \(\mathcal {P}\) of \({\mathbb {R}}^n\) by non-overlapping unit balls.

The linear programming method initiated by Delsarte is a powerful tool for giving upper bounds on sphere packing densities [13]. That being said, we only know the optimal sphere packing densities in dimensions 1, 2, 3, 8 and 24 [8, 14, 15, 29]. Very recently, the first author proved an optimal upper bound on the sphere packing density of all but a tiny fraction of even unimodular lattices in high dimensions; see [25, Theorem 1.1].

The best known linear programming upper bounds on sphere packing densities in low dimensions are based on the linear programming method developed by Cohn–Elkies [7] which itself was inspired by Delsarte’s linear programming method. As far as the exponent is concerned, in high dimensions, the best asymptotic upper bound goes back to Kabatyanskii–Levenshtein from 1978 [17] stating that \(\delta _n\le 2^{-(0.599+o(1))n}\) as \(n\rightarrow \infty \). More recently, de Laat–de Oliveira Filho–Vallentin improved upper bounds in very low dimensions using the semi-definite programming method [12], partially based on the semi-definite programming method developed by Bachoc–Vallentin [3] for bounding kissing numbers. The work of Bachoc–Vallentin was further improved by Mittelmann–Vallentin [23], Machado–de Oliveira Filho [22], and very recently after the writing of our paper by de Laat–Leijenhorst [19].

Another recent development is the discovery by Hartman–Mazác–Rastelli [16] of a connection between the spinless modular bootstrap for two-dimensional conformal field theories and the linear programming bound for sphere packing densities. After the writing of our paper, Afkhami-Jeddi–Cohn–Hartman–de Laat–Tajdini [1] numerically constructed solutions to the Cohn–Elkies linear programming problem and conjectured that the linear programming method is capable of producing an upper bound on sphere packing densities in high dimensions that is exponentially better than that of Kabatyanskii–Levenshtein.

A notion closely related to sphere packings in Euclidean spaces is that of spherical codes. By inequalities relating sphere packing densities to the sizes of spherical codes, Kabatyanskii–Levenshtein [17] obtained their bound on sphere packing densities stated above. The sizes of spherical codes are bounded from above using Delsarte’s linear programming method. In what follows, we define spherical codes and this linear programming method.

Given \(S^{n-1}\), the unit sphere in \({\mathbb {R}}^n\), a \(\theta \)-spherical code is a finite subset \(A\subset S^{n-1}\) such that no two distinct \(x,y\in A\) are at an angular distance less than \(\theta \). For each \(0<\theta \le \pi \), we define \(M(n,\theta )\) to be the largest cardinality of a \(\theta \)-spherical code \(A\subset S^{n-1}.\)
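
The definition can be checked directly on small examples. The following is a minimal sketch; the function name and the two sample point sets (a regular hexagon on \(S^1\) and the vertices of the octahedron on \(S^2\)) are illustrative choices, not taken from the paper.

```python
import math
from itertools import combinations

def is_spherical_code(points, theta, tol=1e-9):
    """Return True if all pairs of unit vectors are at angular distance >= theta."""
    for x, y in combinations(points, 2):
        inner = sum(a * b for a, b in zip(x, y))
        inner = max(-1.0, min(1.0, inner))  # guard acos against rounding
        if math.acos(inner) < theta - tol:
            return False
    return True

# Illustrative point sets (assumptions of this sketch).
hexagon = [(math.cos(k * math.pi / 3), math.sin(k * math.pi / 3)) for k in range(6)]
octahedron = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

print(is_spherical_code(hexagon, math.pi / 3))
print(is_spherical_code(octahedron, math.pi / 2))
```

Any such code of size N certifies \(M(n,\theta )\ge N\); the upper bounds discussed in this paper work in the opposite direction.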

The Delsarte linear programming method is applied to spherical codes as follows. Throughout this paper, we work with probability measures \(\mu \) on \([-1,1].\) \(\mu \) gives an inner product on the \({\mathbb {R}}\)-vector space of real polynomials \({\mathbb {R}}[t]\), and let \(\{p_i\}_{i=0}^{\infty }\) be an orthonormal basis with respect to \(\mu \) such that the degree of \(p_i\) is i and \(p_i(1)>0\) for every i. Note that \(p_0=1\). Suppose that the basis elements \(p_k\) define positive definite functions on \(S^{n-1}\), that is,

\[\sum _{x_i,x_j\in A}h_ih_jp_k(\langle x_i,x_j\rangle )\ge 0 \tag{1}\]

for any finite subset \(A\subset S^{n-1}\) and any real numbers \(h_i\in {\mathbb {R}}.\) An example of a probability measure satisfying inequality (1) is

\[d\mu _{\alpha }:=\frac{(1-t^2)^{\alpha }dt}{\int _{-1}^{1}(1-t^2)^{\alpha }dt},\]

where \(\alpha \ge \frac{n-3}{2}\) and \(2\alpha \in {\mathbb {Z}}\). Given \(s\in [-1,1]\), consider the space \(D(\mu ,s)\) of all functions \(f(t)=\sum _{i=0}^{\infty }f_ip_i(t)\), \(f_i\in {\mathbb {R}}\), such that

- (1) \(f_i\ge 0\) for every i, and \(f_0>0,\)

- (2) \(f(t)\le 0\) for \(-1\le t\le s.\)

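Suppose \(0<\theta <\pi \), and \(A=\{x_1,\ldots ,x_N\}\) is a \(\theta \)-spherical code in \(S^{n-1}\). Given a function \(f\in D(\mu ,\cos \theta )\), we consider

\[\sum _{i,j=1}^{N}f(\langle x_i,x_j\rangle ).\]

This may be written in two different ways as

\[Nf(1)+\sum _{i\ne j}f(\langle x_i,x_j\rangle )=f_0N^2+\sum _{k=1}^{\infty }f_k\sum _{i,j=1}^{N}p_k(\langle x_i,x_j\rangle ).\]

Since \(f\in D(\mu ,\cos \theta )\) and \(\langle x_i,x_j\rangle \le \cos \theta \) for every \(i\ne j\), this gives us the inequality

\[N\le \frac{f(1)}{f_0}.\]

We define

\[\mathcal {L}(f):=\frac{f(1)}{f_0}. \tag{2}\]

In particular, this method leads to the linear programming bound

\[M(n,\theta )\le \inf _{f\in D(d\mu _{\frac{n-3}{2}},\cos \theta )}\mathcal {L}(f). \tag{3}\]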
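
As a sanity check of the quantity \(f(1)/f_0\) behind this bound, here is a small exact computation for a textbook case that is not taken from the paper: \(n=3\), \(\theta =\pi /2\), and \(f(t)=t(t+1)\). The sketch assumes \(\alpha =0\), so that the measure is \(dt/2\) on \([-1,1]\) and the orthogonal polynomials are the Legendre polynomials; all helper names are hypothetical.

```python
from fractions import Fraction

def moment(k):
    """k-th moment of the probability measure dt/2 on [-1, 1] (alpha = 0, n = 3)."""
    return Fraction(0) if k % 2 else Fraction(1, k + 1)

def integrate(poly):
    """Integrate a polynomial given by its coefficient list [c0, c1, ...]."""
    return sum(c * moment(k) for k, c in enumerate(poly))

def multiply(p, q):
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

# Legendre polynomials P_0, P_1, P_2: orthogonal for dt/2 and positive at t = 1.
legendre = [
    [Fraction(1)],
    [Fraction(0), Fraction(1)],
    [Fraction(-1, 2), Fraction(0), Fraction(3, 2)],
]

f = [Fraction(0), Fraction(1), Fraction(1)]  # f(t) = t + t^2 = t (t + 1)

# Each f_i has the same sign as the integral of f * P_i against the measure.
projections = [integrate(multiply(f, P)) for P in legendre]
f0 = projections[0]          # exact, because p_0 = 1
f_at_one = sum(f)            # f(1) is the sum of the coefficients
bound = f_at_one / f0

print(projections, bound)
```

Here f is non-positive on \([-1,0]\) and all three projections are positive, so both conditions hold with \(s=\cos (\pi /2)=0\). The resulting value of \(f(1)/f_0\) coincides with the number of vertices of the octahedron, which is a \(\pi /2\)-spherical code in \(S^2\).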

One of the novelties of our work is the construction using triple points of new test functions in Sect. 3 satisfying conditions (1) and (2) of the Delsarte linear programming method. In fact, our functions are infinite linear combinations of coefficients of the matrices appearing in Theorem 3.2 of Bachoc–Vallentin [3]. Bachoc–Vallentin use semi-definite programming to obtain an upper bound on kissing numbers \(M(n,\frac{\pi }{3})\) by summing over triples of points in spherical codes. On the other hand, we average one of the three points over the sphere, and take the other two points from the spherical code. Semi-definite programming is computationally feasible in very low dimensions, and improves upon linear programming bounds [11]. After the writing of our paper, a semi-definite programming method using triple point correlations for sphere packings was developed by Cohn–de Laat–Salmon [6], improving upon linear programming bounds on sphere packings in special low dimensions. In the semi-definite programming methods, the functions are numerically constructed. Furthermore, in high dimensions, there is no asymptotic bound using semi-definite programming which improves upon the linear programming bound of Kabatyanskii–Levenshtein [17], even up to a constant factor. The functions that we non-numerically construct improve upon [17] by a constant factor. In the same spirit, we also improve upon sphere packing density upper bounds in high dimensions.

Upper bounds on spherical codes are used to obtain upper bounds on sphere packing densities through inequalities proved using geometric methods. For example, for any \(0<\theta \le \pi /2\), Sidelnikov [26] proved using an elementary argument that

Let \( 0< \theta < \theta ^{\prime } \le \pi \). We write \(\lambda _n(\theta ,\theta ^{\prime })\) for the ratio of volume of the spherical cap with radius \(\frac{\sin (\theta /2)}{\sin (\theta ^{\prime }/2)}\) on the unit sphere \(S^{n-1}\) to the volume of the whole sphere. Sidelnikov [26] used a similar argument to show that for \(0<\theta <\theta '\le \pi \)

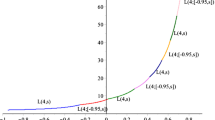

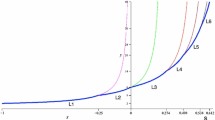

Kabatyanskii–Levenshtein used the Delsarte linear programming method and Jacobi polynomials to give an upper bound on \(M(n,\theta )\) [17]. A year later, Levenshtein [20] found optimal polynomials up to a certain degree and improved the Kabatyanskii–Levenshtein bound by a constant factor. Levenshtein obtained the upper bound

where

Here, \(\alpha =\frac{n-3}{2}\) and \(t^{\alpha ,\beta }_{1,d}\) is the largest root of the Jacobi polynomial \(p^{\alpha ,\beta }_d\) of degree \(d=d(n,\theta )\), a function of n and \(\theta \). We carefully define \(d=d(n,\theta )\) and Levenshtein’s optimal polynomials in Sect. 5.1. Also see Sect. 5.1 for the definition and properties of Jacobi polynomials.

Throughout this paper, \(\theta ^*= 62.997 \cdots ^{\circ }\) is the unique root of the equation

in \((0,\pi /2)\). As demonstrated by Kabatyanskii–Levenshtein in [17], for \(0<\theta < \theta ^*\), the bound \(M(n,\theta )\le M_{\textrm{Lev}}(n,\theta )\) is asymptotically exponentially weaker in n than

obtained from inequality (5). Barg–Musin [4, p.11 (8)], based on the work [2] of Agrell–Vardy–Zeger, improved inequality (5) and showed that

whenever \(\pi>\theta '>2\arcsin \left( \frac{1}{2\cos (\theta /2)} \right) \). Cohn–Zhao [9] improved sphere packing density upper bounds by combining the upper bound of Kabatyanskii–Levenshtein on \(M(n,\theta )\) with their analogue [9, Proposition 2.1] of (9) stating that for \(\pi /3\le \theta \le \pi \),

leading to better bounds than those obtained from (4). Aside from our main results discussed in the next subsection, in Proposition 2.2 of Sect. 2 we remove the angular restrictions on inequality (9) in the large n regime by using a concentration of mass phenomenon. An analogous result removes the restriction \(\theta \ge \pi /3\) on inequality (10) for large n.

Inequalities (9) and (10) give, respectively, the bounds

for \(\theta \) and \(\theta ^*\) restricted as in the conditions for inequality (9), and

Prior to our work, the above were the best bounds for large enough n. In Theorems 1.1 and 1.2, we improve both by a constant factor for large n, the first such improvement since the work of Levenshtein [20] more than forty years ago. We also relax the angular condition in inequality (11) to \(0<\theta <\theta ^*\) for large n. For \(\theta ^*\le \theta \le \pi \), \(M(n,\theta )\le M_{\textrm{Lev}}(n,\theta )\) is still the best bound. We also prove a number of other results, including the construction of general test functions in Sect. 3 that are of independent interest.
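
The threshold angle \(\theta ^*\) can be located numerically. The sketch below rests on an assumption: it takes the defining equation to be \(\cos \theta \log \frac{1+\sin \theta }{1-\sin \theta }-(1+\cos \theta )\sin \theta =0\), the form commonly associated with the Kabatyanskii–Levenshtein bound, since the equation itself is not reproduced in this text. The function names and the bracketing interval are illustrative.

```python
import math

def threshold_equation(theta):
    """Assumed defining equation for theta^*; see the hedge in the lead-in."""
    s, c = math.sin(theta), math.cos(theta)
    return c * math.log((1 + s) / (1 - s)) - (1 + c) * s

def bisect(func, lo, hi, iterations=200):
    """Plain bisection; assumes func changes sign exactly once on [lo, hi]."""
    f_lo = func(lo)
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        f_mid = func(mid)
        if (f_lo > 0) == (f_mid > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# The bracket [0.1, 1.5] lies inside (0, pi/2); the function is positive at the
# left end and negative at the right end.
theta_star = bisect(threshold_equation, 0.1, 1.5)
theta_star_degrees = math.degrees(theta_star)
print(theta_star_degrees, math.cos(theta_star))
```

Under this assumption the root agrees with the value \(62.997\cdots ^{\circ }\) quoted above, and its cosine lies in the range \(\frac{1}{3}\le \cos \theta \le \frac{1}{2}\) appearing in Theorem 1.2.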

1.2 Main results and general strategy

We improve inequalities (11) and (12) with an extra factor 0.4325 for each sufficiently large n. In the case of sphere packings, we obtain an improvement by a factor of \(0.4325+\frac{51}{n}\) for dimensions \(n\ge 2000\). In low dimensions, our geometric ideas combined with numerics lead to improvements that are better than 0.4325. In Sect. 6, we provide the results of our extensive numerical calculations. We now state our main theorems.

Theorem 1.1

Suppose that \(0<\theta <\theta ^*\). Then

where \(c_n\le 0.4325\) for large enough n independent of \(\theta .\)

We also have a uniform version of this theorem for sphere packing densities.

Theorem 1.2

Suppose that \(\frac{1}{3}\le \cos (\theta )\le \frac{1}{2}\). We have

where \(c_{n}(\theta )<1\) for every n. If, additionally, \(n\ge 2000\) we have \(c_{n}(\theta )\le 0.515+\frac{74}{n}\). Furthermore, for \(n\ge 2000\) we have \(c_{n}(\theta ^*)\le 0.4325+\frac{51}{n}\).

By Kabatyanskii–Levenshtein [17], the best bound on sphere packing densities \(\delta _n\) for large n comes from \(\theta =\theta ^*\); comparisons using other angles are exponentially worse. Consequently, this theorem implies that we have an improvement by 0.4325 for sphere packing density upper bounds in high dimensions. Furthermore, note that the constants of improvement \(c_n(\theta )\) are bounded from above uniformly in \(\theta \). The lower bound in \(\frac{1}{3}\le \cos \theta \le \frac{1}{2}\) is not conceptually significant in the sense that a change in \(\frac{1}{3}\) would lead to a change in the bound \(c_n(\theta )\le 0.515+\frac{74}{n}\).
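
For a sense of scale, the two explicit constants of Theorem 1.2 can be tabulated in a few dimensions. This is plain arithmetic on the stated bounds; the function names are hypothetical.

```python
def factor_at_theta_star(n):
    """Upper bound 0.4325 + 51/n on c_n(theta^*), stated for n >= 2000."""
    if n < 2000:
        raise ValueError("the bound is stated only for n >= 2000")
    return 0.4325 + 51 / n

def uniform_factor(n):
    """Upper bound 0.515 + 74/n on c_n(theta) for 1/3 <= cos(theta) <= 1/2, n >= 2000."""
    if n < 2000:
        raise ValueError("the bound is stated only for n >= 2000")
    return 0.515 + 74 / n

for n in (2000, 10000, 100000):
    print(n, factor_at_theta_star(n), uniform_factor(n))
```

Both quantities stay below 1 throughout the stated range and decrease toward 0.4325 and 0.515, respectively, as n grows.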

We prove Theorem 1.2 by constructing a new test function that satisfies the Cohn–Elkies linear programming conditions. The geometric idea behind the construction of our new test functions is the following (see Fig. 1). In [9], for every \(\pi \ge \theta \ge \frac{\pi }{3}\), Cohn and Zhao choose a ball of radius \(r=\frac{1}{2\sin (\theta /2)}\) around each point of the sphere packing so that for every two points \(\varvec{a}\) and \(\varvec{b}\) that are centers of balls in the sphere packing, for every point \(\varvec{z}\) in the shaded region, \(\varvec{a}\) and \(\varvec{b}\) make an angle \(\varphi \ge \theta \) with respect to \(\varvec{z}\). By averaging a function satisfying the Delsarte linear programming conditions for the angle \(\theta \), a new function satisfying the conditions of the Cohn–Elkies linear programming method is produced. The negativity condition easily follows as \(\varphi \ge \theta \) for every point \(\varvec{z}\) in the shaded region. Our insight is that since we are taking an average, we do not need pointwise negativity for each point \(\varvec{z}\) to ensure the negativity condition for the new averaged function. In fact, we can enlarge the radii of the balls by a quantity \(\delta =O(1/n)\) so that the conditions of the Cohn–Elkies linear programming method continue to hold for this averaged function. Since the condition \(\varphi \ge \theta \) is no longer satisfied for every point \(\varvec{z}\), determining how large \(\delta \) may be chosen is delicate. We develop analytic methods for determining such \(\delta \). This requires us to estimate triple density functions in Sect. 4 and to estimate the Jacobi polynomials near their extreme roots in Sect. 5.1. It is known that the latter problem is difficult [18, Conjecture 1]. In Sect. 5.1, we treat this difficulty by using the relation between the zeros of Jacobi polynomials; these ideas go back to the work of Stieltjes [27]. More precisely, we use the underlying differential equations satisfied by Jacobi polynomials, and the fact that the roots of the family of Jacobi polynomials are interlacing. For Theorem 1.1, we use a similar idea but consider how much larger we can make certain appropriately chosen strips on a sphere. See Fig. 3.

We use our geometrically constructed test functions to obtain the last column of Table 1. Table 1 is a comparison of upper bounds on sphere packing densities. This table does not include the computer-assisted mathematically rigorous bounds obtained using the Cohn–Elkies linear programming method or those of semi-definite programming. We now describe the different columns. The Rogers column corresponds to the bounds on sphere packing densities obtained by Rogers [24]. The Levenshtein79 column corresponds to the bound obtained by Levenshtein in terms of roots of Bessel functions [20]. The K.–L. column corresponds to the bound on \(M(n,\theta )\) proved by Kabatyanskii and Levenshtein [17] combined with inequality (5). The Cohn–Zhao column corresponds to the column found in the work of Cohn and Zhao [9]; they combined their inequality (10) with the bound on \(M(n,\theta )\) proved by Kabatyanskii–Levenshtein [17]. We also include the column C.–Z.+L79 which corresponds to combining Cohn and Zhao’s inequality with improved bounds on \(M(n,\theta )\) using Levenshtein’s optimal polynomials [20]. The final column corresponds to the bounds on sphere packing densities obtained by our method. In Table 1, the highlighted entries are the best bounds obtained from these methods. Our bounds break most of the other bounds also in low dimensions. Our bounds are obtained from explicit geometrically constructed functions satisfying the Cohn–Elkies linear programming method. Our method only involves explicit integral calculations; in contrast to the numerical method in [7], we do not rely on any searching algorithm. Moreover, compared to the Cohn–Elkies linear programming method, in \(n=120\) dimensions, we improve upon the sphere packing density upper bound of \(1.164\times 10^{-17}\) obtained by forcing eight double roots.

1.3 Structure of the paper

In Sect. 2, we set up some of the notation used in this paper and prove Proposition 2.2. Section 3 concerns the general construction of our test functions that are used in conjunction with the Delsarte and Cohn–Elkies linear programming methods. In Sect. 5, we prove our main Theorems 1.1 and 1.2. In this section, we use our estimates on the triple density functions proved in Sect. 4. In Sect. 5.1, we describe Jacobi polynomials, Levenshtein’s optimal polynomials, and locally approximate Jacobi polynomials near their largest roots. In the final Sect. 6, we provide a table of improvement factors.

2 Geometric improvement

In this section, we prove Proposition 2.2, improving inequality (9) by removing the restrictions on the angles for large dimensions n. This is achieved via a concentration of mass phenomenon, at the expense of an exponentially decaying error term. First, we introduce some notations that we use throughout this paper.

Let \(0<\theta<\theta '<\pi \) be given angles, and let \(s:=\cos \theta \) and \(s':=\cos \theta '\). Throughout, \(S^{n-1}\) is the unit sphere centered at the origin of \({\mathbb {R}}^n\). Suppose \(\varvec{z}\in S^{n-1}\) is a fixed point.

Consider the hyperplane \(N_{\varvec{z}}:=\{\varvec{w}\in {\mathbb {R}}^n:\langle \varvec{w},\varvec{z}\rangle =0\}\). For each \(\varvec{a},\varvec{b}\in S^{n-1}\) of radial angle at least \(\theta \) from each other, we may orthogonally project them onto \(N_{\varvec{z}}\) via the map \(\Pi _{\varvec{z}}:S^{n-1}{\setminus }\{\pm \varvec{z}\} \rightarrow N_{\varvec{z}}\). For every \(\varvec{a}\in S^{n-1}\),

\[\Pi _{\varvec{z}}(\varvec{a})=\varvec{a}-\langle \varvec{a},\varvec{z}\rangle \varvec{z}.\]

For brevity, when \(\varvec{a}\ne \pm \varvec{z}\) we denote \(\frac{\Pi _{\varvec{z}}(\varvec{a})}{|\Pi _{\varvec{z}}(\varvec{a})|}\) by \(\tilde{\varvec{a}}\) lying inside the unit sphere \(S^{n-2}\) in \(N_{\varvec{z}}\) centered at the origin. Given \(\varvec{a},\varvec{b}\in S^{n-1}{\setminus }\{\pm \varvec{z}\}\), we obtain points \(\tilde{\varvec{a}},\tilde{\varvec{b}}\in S^{n-2}\). We will use the following notation.

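\[u:=\langle \varvec{a},\varvec{z}\rangle ,\quad v:=\langle \varvec{b},\varvec{z}\rangle ,\]

and

\[t:=\langle \varvec{a},\varvec{b}\rangle .\]

It is easy to see that

\[\langle \tilde{\varvec{a}},\tilde{\varvec{b}}\rangle =\frac{t-uv}{\sqrt{(1-u^2)(1-v^2)}}.\]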

Consider the cap \(Cap _{\theta ,\theta '}(\varvec{z})\) on \(S^{n-1}\) centered at \(\varvec{z}\) and of radius \(\frac{\sin (\theta /2)}{\sin (\theta '/2)}\). \(Cap _{\theta ,\theta '}(\varvec{z})\) is the spherical cap centered at \(\varvec{z}\) with the defining property that any two points \(\varvec{a}\), \(\varvec{b}\) on its boundary of radial angle \(\theta \) are sent to points \(\tilde{\varvec{a}}\), \(\tilde{\varvec{b}}\in S^{n-2}\) having radial angle \(\theta '\) (Fig. 2).

It will be convenient for us to write the radius of the cap as \(\sqrt{1-r^2}=\frac{\sin (\theta /2)}{\sin (\theta '/2)}\), from which it follows that

\[r=\sqrt{\frac{s-s'}{1-s'}}.\]

This r is the distance from the center of the cross-section defining the cap \(Cap _{\theta ,\theta '}(\varvec{z})\) to the center of \(S^{n-1}\). In fact,

For \(0<\theta<\theta '<\pi \), we define

and

Then \(R>r\), and we define the strip

Lemma 2.1

The strip \(Str _{\theta ,\theta '}(\varvec{z})\) is contained in \(Cap _{\theta ,\theta '}(\varvec{z})\). Furthermore, any two points in \(Str _{\theta ,\theta '} (\varvec{z}){\setminus }\{\pm \varvec{z}\}\) of radial angle at least \(\theta \) apart are mapped to points in \(S^{n-2}\) (unit sphere in \(N_{\varvec{z}}\)) of radial angle at least \(\theta '\).

Proof

The first statement follows from \(\langle \varvec{a},\varvec{z}\rangle \ge r\) for any point \(\varvec{a}\in Str _{\theta ,\theta '}(\varvec{z})\). The second statement follows from equation (147) and Lemma 42 of [2]. \(\square \)

With this in mind, we are now ready to prove Proposition 2.2. When discussing the measure of strips \(Str _{\theta ,\theta '}(\varvec{z})\), we drop \(\varvec{z}\) from the notation and simply write \(Str _{\theta ,\theta '}\).

Proposition 2.2

Let \(0<\theta<\theta ^{\prime }<\pi .\) We have

where \(c:=\frac{1}{2}\log \left( \frac{1-r^2}{1-R^2}\right) >0\) is independent of n and only depends on \(\theta \) and \(\theta '\).

Proof

Suppose \(\{x_1,\ldots ,x_N\}\subset S^{n-1}\) is a maximal \(\theta \)-spherical code. Given \(x\in S^{n-1}\), let m(x) be the number of such strips \(Str _{\theta ,\theta '}(x_i)\) such that \(x\in Str _{\theta ,\theta '}(x_i)\). Note that \(x\in Str _{\theta ,\theta '}(x_i)\) if and only if \(x_i\in Str _{\theta ,\theta '}(x)\). Therefore, the strip \(Str _{\theta ,\theta '}(x)\) contains m(x) points of \(\{x_1,\ldots ,x_N\}\). From the previous lemma, we know that these m(x) points are mapped to points in \(S^{n-2}\) that have pairwise radial angles at least \(\theta '\). As a result,

using which we obtain

where \(\mu \) is the uniform probability measure on \(S^{n-1}\). Hence,

Note that the masses of \(Str _{\theta ,\theta '}\) and the cap \(Cap _{\theta ,\theta '}\) have the property that

Here, \(\omega _n=\int _{-1}^1(1-t^2)^{\frac{n-3}{2}}dt\). On the other hand, we may also give a lower bound on \(\lambda _n(\theta ,\theta ')\) by noting that

The first inequality follows from

for \(t\in [r,1)\). Combining this inequality for \(\lambda _n(\theta ,\theta ')\) with the above, we obtain

The conclusion follows using (18). \(\square \)

Remark 19

Proposition 2.2 removes the angular condition on inequality (9) of Barg–Musin at the expense of an error term that is exponentially decaying in the dimension n.
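
The concentration of mass behind this remark can be observed numerically. The sketch below uses the standard fact that the uniform measure on \(S^{n-1}\) of a band \(\{\varvec{a}:lo\le \langle \varvec{a},\varvec{z}\rangle \le hi\}\) is proportional to \(\int _{lo}^{hi}(1-t^2)^{\frac{n-3}{2}}dt\). The value of R used here is a hypothetical placeholder rather than the paper's \(R=\cos \gamma _{\theta ,\theta '}\), and the helper names and sample angles are illustrative.

```python
import math

def simpson(func, a, b, steps=2000):
    """Composite Simpson rule on [a, b]; `steps` must be even."""
    h = (b - a) / steps
    total = func(a) + func(b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * func(a + i * h)
    return total * h / 3

def band_mass(n, lo, hi):
    """Uniform probability measure on S^{n-1} (n >= 3) of {a : lo <= <a, z> <= hi}."""
    weight = lambda t: max(0.0, 1 - t * t) ** ((n - 3) / 2)
    return simpson(weight, lo, hi) / simpson(weight, -1.0, 1.0)

def inner_radius(theta, theta_prime):
    """The r of Sect. 2, satisfying 1 - r^2 = sin^2(theta/2) / sin^2(theta'/2)."""
    s, s_prime = math.cos(theta), math.cos(theta_prime)
    return math.sqrt((s - s_prime) / (1 - s_prime))

def leftover_fraction(n, r, R):
    """Share of the cap {<a, z> >= r} lying outside the strip r <= <a, z> <= R."""
    return band_mass(n, R, 1.0) / band_mass(n, r, 1.0)

r = inner_radius(math.radians(50), math.radians(63))
R = 0.8  # hypothetical value with r < R < 1; not computed from gamma
c = 0.5 * math.log((1 - r * r) / (1 - R * R))
for n in (10, 40, 160):
    print(n, leftover_fraction(n, r, R), math.exp(-n * c))
```

The printed leftover share shrinks rapidly with n, which is the sense in which the strip carries almost all of the mass of the cap in high dimensions.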

3 New test functions

In this section, we prove general linear programming bounds on the sizes of spherical codes and sphere packing densities by constructing new test functions.

3.1 Spherical codes

Recall the definition of \(D(\mu ,s)\) from the discussion of the Delsarte linear programming method in the introduction. In this subsection, we construct a function inside \(D(d\mu _{\frac{n-3}{2}},\cos \theta )\) from a given one inside \(D(d\mu _{\frac{n-4}{2}},\cos \theta ^{\prime }),\) where \(\theta '>\theta .\)

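Suppose that \(g_{\theta '}\in D(d\mu _{\frac{n-4}{2}}, \cos \theta ^{\prime }).\) Fix \(\varvec{z}\in S^{n-1}\). Given \(\varvec{a},\varvec{b} \in S^{n-1}\), we define

\[h(\varvec{a},\varvec{b};\varvec{z}):=F(\langle \varvec{a},\varvec{z}\rangle )F(\langle \varvec{b},\varvec{z}\rangle )g_{\theta '}(\langle \tilde{\varvec{a}},\tilde{\varvec{b}}\rangle ),\]

where F is an arbitrary integrable real valued function on \([-1,1]\), and \(\tilde{\varvec{a}}\) and \(\tilde{\varvec{b}}\) are unit vectors on the tangent space of the sphere at \(\varvec{z}\) as defined in the previous section. Using the notation u, v, t of Sect. 2,

\[h(\varvec{a},\varvec{b};\varvec{z})=F(u)F(v)g_{\theta '}\left( \frac{t-uv}{\sqrt{(1-u^2)(1-v^2)}}\right) .\]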

Note that the projection is not defined at \(\pm \varvec{z}\). If either \(\varvec{a}\) or \(\varvec{b}\) is \(\pm \varvec{z}\), we let \(h(\varvec{a},\varvec{b};\varvec{z})=0\).
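
The function \(h(\varvec{a},\varvec{b};\varvec{z})\) is straightforward to evaluate for concrete vectors. The sketch below computes the inner product of the normalized projections both directly and through the expression \(\frac{t-uv}{\sqrt{(1-u^2)(1-v^2)}}\) with \(u=\langle \varvec{a},\varvec{z}\rangle \), \(v=\langle \varvec{b},\varvec{z}\rangle \), \(t=\langle \varvec{a},\varvec{b}\rangle \). The sample F and g are hypothetical stand-ins, not functions from the paper.

```python
import math

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def normalized_projection(a, z):
    """Project the unit vector a onto the hyperplane orthogonal to z, then normalize."""
    u = dot(a, z)
    proj = [ai - u * zi for ai, zi in zip(a, z)]
    norm = math.sqrt(dot(proj, proj))
    return [p / norm for p in proj]

def projected_cosine(a, b, z):
    """Closed form for the inner product of the two normalized projections."""
    u, v, t = dot(a, z), dot(b, z), dot(a, b)
    return (t - u * v) / math.sqrt((1 - u * u) * (1 - v * v))

def h_fixed_z(a, b, z, F, g):
    """F(u) F(v) g(<a~, b~>), set to 0 when a or b equals +-z."""
    u, v = dot(a, z), dot(b, z)
    if abs(u) >= 1 - 1e-12 or abs(v) >= 1 - 1e-12:
        return 0.0
    return F(u) * F(v) * g(projected_cosine(a, b, z))

# Hypothetical sample data: three unit vectors in R^3 and toy choices of F, g.
z = (0.0, 0.0, 1.0)
a = (1 / 3, 2 / 3, 2 / 3)
b = (2 / 3, 2 / 3, 1 / 3)
F = lambda x: 1.0 if 0.3 <= x <= 0.7 else 0.0
g = lambda x: x - 0.5

direct = dot(normalized_projection(a, z), normalized_projection(b, z))
closed = projected_cosine(a, b, z)
print(direct, closed, h_fixed_z(a, b, z, F, g))
```

Averaging such values over rotations, or equivalently over \(\varvec{z}\), is what produces the point pair invariant function considered next.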

Lemma 3.1

\(h(\varvec{a},\varvec{b};\varvec{z})\) is a positive semi-definite function in the variables \(\varvec{a},\varvec{b}\) on \(S^{n-1},\) namely

for every finite subset \(A\subset S^{n-1}\), and coefficients \(a_i \in {\mathbb {R}}.\) Moreover, h is invariant under the diagonal action of the orthogonal group O(n), namely

for every \(k\in O(n)\).

Proof

By (20), we have

By (1) and the positivity of the Fourier coefficients of \(g_{\theta '}\), we have

This proves the first part, and the second part follows from (20) and the O(n)-invariance of the inner product. \(\square \)

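Let

\[h(\varvec{a},\varvec{b}):=\int _{O(n)}h(k\varvec{a},k\varvec{b};\varvec{z})\,d\mu (k), \tag{22}\]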

where \(d\mu (k)\) is the probability Haar measure on O(n).

Lemma 3.2

\(h(\varvec{a},\varvec{b})\) is a positive semi-definite point pair invariant function on \(S^{n-1}.\)

Proof

This follows from the previous lemma. \(\square \)

Since \(h(\varvec{a},\varvec{b})\) is a point pair invariant function, it only depends on \(t=\langle \varvec{a},\varvec{b}\rangle .\) For the rest of this paper, we abuse notation and consider h as a real valued function of t on \([-1,1],\) and write \(h(\varvec{a},\varvec{b})=h(t).\)

3.1.1 Computing \(\mathcal {L}(h)\)

See (2) for the definition of \(\mathcal {L}\). We proceed to computing the value of \(\mathcal {L}(h)\) in terms of F and \(g_{\theta '}.\) First, we compute the value of h(1). Let \( \Vert F \Vert ^2_2:=\int _{-1}^{1} F(u)^2 d\mu _{\frac{n-3}{2}}(u).\)

Lemma 3.3

We have

\[h(1)=g_{\theta '}(1)\Vert F\Vert _2^2.\]

Proof

Indeed, by definition, h(1) corresponds to taking \(\varvec{a}=\varvec{b}\), from which it follows that \(t=1\), \(u=v\) and \(\frac{t-uv}{\sqrt{(1-u^2)(1-v^2)}}=1\). Therefore, we obtain

\(\square \)

Next, we compute the zero Fourier coefficient of h, that is, \(h_0:=\int _{-1}^{1} h(t) d\mu _{\frac{n-3}{2}}(t)\). Let \(F_0=\int _{-1}^{1} F(u) d\mu _{\frac{n-3}{2}}(u) \) and \(g_{\theta ',0}=\int _{-1}^{1} g_{\theta '} d\mu _{\frac{n-4}{2}}(u)\).

Lemma 3.4

We have

\[h_0=g_{\theta ',0}F_0^2.\]

Proof

Let \(O(n-1)\subset O(n)\) be the stabilizer of \(\varvec{z}.\) We identify \(O(n)/O(n-1)\) with \(S^{n-1}\) and write \([k_1]:= k_1\varvec{z}\in S^{n-1}\) for any \(k_1\in O(n).\) Then we write the probability Haar measure of O(n) as the product of the probability Haar measure of \(O(n-1)\) and the uniform probability measure \(d\sigma \) of \(S^{n-1}:\)

where \(k_1'\in O(n-1).\) By Eqs. (20), (22) and the above, we obtain

We note that

and

Therefore,

as required. \(\square \)

Proposition 3.5

We have

\[\mathcal {L}(h)=\mathcal {L}(g_{\theta '})\frac{\Vert F\Vert _2^2}{F_0^2}.\]

Proof

This follows immediately from Lemmas 3.3 and 3.4. \(\square \)

3.1.2 Criterion for \(\varvec{h\in D(d\mu _{\frac{n-3}{2}},\cos \theta )}\)

Finally, we give a criterion which implies \(h\in D(d\mu _{\frac{n-3}{2}},\cos \theta ).\) Recall that \(0<\theta < \theta ',\) and \(0<r<R<1\) are as defined in Sect. 2. Let \(s^{\prime }=\cos (\theta ^{\prime })\) and \(s=\cos (\theta ).\) Note that \(s^{\prime }<s\), \(r=\sqrt{\frac{s-s^{\prime }}{1-s^\prime }}\), and \(R=\cos \gamma _{\theta ,\theta '}\) as in Eq. (15). We define

\[\chi (x):={\left\{ \begin{array}{ll}1 &{} \text {for } r\le x\le R,\\ 0 &{} \text {otherwise}.\end{array}\right. }\]

Proposition 3.6

Suppose that \(g_{\theta '}\in D(d\mu _{\frac{n-4}{2}},\cos \theta ^{\prime })\) is given and h is defined as in (22) for some F. Suppose that F(x) is a positive integrable function giving rise to an h such that \(h(t)\le 0\) for every \(-1\le t\le \cos \theta \). Then

and

Among all positive integrable functions F with compact support inside [r, R], \(\chi \) minimizes the value of \( \mathcal {L}(h),\) and for \(F=\chi \) we have

where \(c=\frac{1}{2}\log \left( \frac{1-r^2}{1-R^2}\right) >0.\)

Proof

The first part follows from the previous lemmas and propositions. Let us specialize to the situation where F is merely assumed to be a positive integrable function with compact support inside [r, R]. Let us first show that \(h(t)\le 0\) for \(t\le s\). We have

where \(h(\varvec{a},\varvec{b};\varvec{z}):= F(u)F(v) g_{\theta '}\left( \frac{t-uv}{\sqrt{(1-u^2)(1-v^2)}}\right) . \) First, note that \(F(u)F(v)\ne 0\) implies that \(\varvec{a}\) and \(\varvec{b}\) belong to \(Str _{\theta ,\theta '}(\varvec{z}).\) By Lemma 2.1, the radial angle between \(\tilde{\varvec{a}}\) and \(\tilde{\varvec{b}}\) is at least \(\theta '\), and so

Therefore

when \(F(u)F(v)\ne 0.\) Hence, the integrand \(h(\varvec{a},\varvec{b};\varvec{z})\) is non-positive when \(t\in [-1,\cos \theta ]\), and so \(h(t)\le 0\) for \(t\le s\).

It is easy to see that when \(F=\chi \),

and

Therefore, by our estimate in the proof of Proposition 2.2 we have

Finally, the optimality follows from the Cauchy–Schwarz inequality. More precisely, since F(x) has compact support inside [r, R], we have

Therefore, \(\mathcal {L}(h)=\frac{g_{\theta '}(1)}{g_{\theta ',0}} \frac{\Vert F \Vert ^2_2}{F_0^2}\ge \frac{\mathcal {L}(g_{\theta '})}{\mu (Str _{\theta ,\theta '}(\varvec{z}))} \) with equality only when \(F=\chi \). \(\square \)
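
The Cauchy–Schwarz step can be illustrated numerically by comparing \(\Vert F\Vert _2^2/F_0^2\) for the indicator of [r, R] with the same ratio for another positive function supported on [r, R]. In this sketch the dimension, the endpoints r and R, and the competing ramp function are arbitrary illustrative choices, and the integrals are approximated by Simpson's rule.

```python
def simpson(func, a, b, steps=2000):
    """Composite Simpson rule on [a, b]; `steps` must be even."""
    h = (b - a) / steps
    total = func(a) + func(b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * func(a + i * h)
    return total * h / 3

def weight(n):
    """Unnormalized density (1 - t^2)^((n-3)/2) of d mu_{(n-3)/2}."""
    return lambda t: max(0.0, 1 - t * t) ** ((n - 3) / 2)

def strip_mass(n, r, R):
    """Measure of [r, R] under d mu_{(n-3)/2}."""
    w = weight(n)
    return simpson(w, r, R) / simpson(w, -1.0, 1.0)

def quality_ratio(F, n, r, R):
    """||F||_2^2 / F_0^2 for a function F supported on [r, R]."""
    w = weight(n)
    omega = simpson(w, -1.0, 1.0)
    norm_sq = simpson(lambda t: F(t) ** 2 * w(t), r, R) / omega
    mean = simpson(lambda t: F(t) * w(t), r, R) / omega
    return norm_sq / mean ** 2

n, r, R = 12, 0.5, 0.8                 # illustrative values only
indicator = lambda t: 1.0              # chi restricted to [r, R]
ramp = lambda t: t - r + 0.1           # another positive function on [r, R]

print(quality_ratio(indicator, n, r, R), 1 / strip_mass(n, r, R))
print(quality_ratio(ramp, n, r, R))
```

The indicator attains the value \(1/\mu ([r,R])\), while the ramp gives a strictly larger ratio, in line with the equality case of the Cauchy–Schwarz inequality.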

3.2 Sphere packings

Suppose \(0<\theta \le \pi \) is a given angle, and suppose \(g_{\theta }\in D(d\mu _{\frac{n-3}{2}},\cos \theta )\). Fixing \(\varvec{z}\in {\mathbb {R}}^n\), for each pair of points \(\varvec{a},\varvec{b}\in {\mathbb {R}}^n\backslash \{\varvec{z}\}\) consider

where F is an even positive function on \({\mathbb {R}}\) that lies in \(L^1({\mathbb {R}}^n)\cap L^2({\mathbb {R}}^n)\). We may then define \(H(\varvec{a},\varvec{b})\) by averaging over all \(\varvec{z}\in {\mathbb {R}}^n\):

Lemma 3.7

\(H(\varvec{a},\varvec{b})\) is a positive semi-definite kernel on \({\mathbb {R}}^n\) and depends only on \(T=|\varvec{a}-\varvec{b}|\).

Proof

The proof is similar to that of Lemma 3.1. \(\square \)

As before, we abuse notation and write H(T) instead of \(H(\varvec{a},\varvec{b})\) when \(T=|\varvec{a}-\varvec{b}|\). The analogue of Proposition 3.6 is then the following.

Proposition 3.8

Let \(0<\theta \le \pi \) and suppose \(g_{\theta }\in D(d\mu _{\frac{n-3}{2}},\cos \theta )\). Suppose F is as above such that \(H(T)\le 0\) for every \(T\ge 1\). Then

where \(\textrm{vol}(B_1^n)\) is the volume of the n-dimensional unit ball. In particular, if \(F=\chi _{[0,r]}\), where \(0\le r\le 1\), is such that it gives rise to an H satisfying \(H(T)\le 0\) for every \(T\ge 1\), then

Proof

The proof of this proposition is similar to that of Theorem 3.4 of Cohn–Zhao [9]. We focus our attention on proving inequality (24). Suppose we have a packing of \({\mathbb {R}}^n\) of density \(\Delta \) by non-overlapping balls of radius \(\frac{1}{2}\). By Theorem 3.1 of Cohn–Elkies [7], we have

Note that \(H(0)=g_{\theta }(1)\Vert F\Vert _{L^2({\mathbb {R}}^n)}^2\), and that

As a result, we obtain inequality (24). The rest follows from a simple computation. \(\square \)

Note that the situation \(r=\frac{1}{2\sin (\theta /2)}\) with \(\pi /3\le \theta \le \pi \) corresponds to Theorem 3.4 of Cohn–Zhao [9], as checking the negativity condition \(H(T)\le 0\) for \(T\ge 1\) follows from Lemma 2.2 therein. The factor \(2^n\) comes from considering functions where the negativity condition is for \(T\ge 1\) instead of \(T\ge 2\).
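
The geometric fact behind the choice \(r=\frac{1}{2\sin (\theta /2)}\) can be checked with the law of cosines: if \(|\varvec{a}-\varvec{b}|\ge 1\) and \(\varvec{z}\) lies within distance r of both points, then the angle at \(\varvec{z}\) is at least \(\theta \). The following sketch scans a grid of admissible distances \(x=|\varvec{z}-\varvec{a}|\) and \(y=|\varvec{z}-\varvec{b}|\); the function names and the grid size are illustrative, and the check is meant only for \(\pi /3\le \theta \le \pi \).

```python
import math

def cohn_zhao_radius(theta):
    return 1 / (2 * math.sin(theta / 2))

def max_cosine_at_z(theta, grid=200):
    """Largest cos(angle a z b) over grid values of x = |z - a|, y = |z - b| in (0, r]."""
    r = cohn_zhao_radius(theta)
    best = -1.0
    for i in range(1, grid + 1):
        x = r * i / grid
        for j in range(1, grid + 1):
            y = r * j / grid
            if x + y < 1:
                continue  # no triangle with |a - b| >= 1 exists for these x, y
            # For fixed x and y the cosine is largest at the shortest allowed |a - b| = 1.
            best = max(best, (x * x + y * y - 1) / (2 * x * y))
    return best

for theta in (math.pi / 3, math.pi / 2, 2 * math.pi / 3, math.pi):
    print(theta, max_cosine_at_z(theta), math.cos(theta))
```

The maximum is attained at \(x=y=r\), where the cosine equals \(1-\frac{1}{2r^2}=\cos \theta \), so no admissible \(\varvec{z}\) sees \(\varvec{a}\) and \(\varvec{b}\) under an angle smaller than \(\theta \).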

Remark 26

In this paper, we consider characteristic functions; however, it is an interesting open question to determine the optimal such F in order to obtain the best bounds on sphere packing densities through this method.

3.3 Incorporating geometric improvement into linear programming

In proving upper bounds on \(M(n,\theta )\), Levenshtein [20, 21], building on Kabatyanskii–Levenshtein [17], constructed feasible test functions \(g_{\theta }\in D(d\mu _{\frac{n-3}{2}},\cos \theta )\) for the Delsarte linear programming problem with \(\mathcal {L}(g_{\theta })=M_{\textrm{Lev}}(n,\theta )\) defined in (7). This gave the bound

Sidelnikov’s geometric inequality (5) gives

for angles \(0<\theta<\theta '<\frac{\pi }{2}\). Applying this for \(0<\theta <\theta ^*\) and combining with inequality (27), Kabatyanskii and Levenshtein obtained

From [17], it is known that this is exponentially better than inequality (27) for \(0<\theta <\theta ^*\). Finding functions \(h\in D(d\mu _{\frac{n-3}{2}},\cos \theta )\) with \(\mathcal {L}(h)<\mathcal {L}(g_{\theta })\) was suggested by Levenshtein in [21, page 117]. In fact, Boyvalenkov–Danev–Bumova [5] give necessary and sufficient conditions for constructing extremal polynomials that improve Levenshtein’s bound. However, their construction does not exponentially improve inequality (27) for \(0<\theta <\theta ^*\). In contrast to their construction, Proposition 3.6 gives the following corollary stating that our construction of the function h gives an exponential improvement in the linear programming problem compared to Levenshtein’s optimal polynomials for \(0<\theta <\theta ^*\). This is not to say that we exponentially improve the bounds given for spherical codes and sphere packings; we provide a single function using which the Delsarte linear programming method gives a better version of the exponentially better inequality (28).

Corollary 3.9

Fix \(0<\theta <\theta ^*.\) Let \(h_{\theta ^*}\in D(d\mu _{\frac{n-3}{2}},\cos \theta )\) be the function associated to Levenshtein’s \(g_{n-1,\theta ^*}\) constructed in Proposition 3.6. Then

where \(o(1)\rightarrow 0\) as \(n\rightarrow \infty \) and \(\delta _{\theta }:=\Delta (\theta )-\Delta (\theta ^*)>0\), with

Proof

Let \(0<\theta <\theta ^{\prime }\le \pi /2\). Recall the notation of Proposition 3.6. Associated to a function \(g_{\theta '}\) satisfying the Delsarte linear programming conditions in dimension \(n-1\) and for angle \(\theta '\), in Proposition 3.6 we constructed an explicit \(h_{\theta '}\in D(d\mu _{\frac{n-3}{2}},\cos \theta )\) such that

where \(c>0\) is a specific constant depending only on \(\theta \) and \(\theta '\). Therefore,

By [17, Theorem 4]

It is easy to show that [17, Proof of Theorem 4]

Hence,

where

Note that

As we mentioned before, \(\theta ^*:= 62.997 \cdots ^{\circ }\) is the unique root of the equation \(\frac{d}{d\theta '} \Delta (\theta ')=0\) [17, Theorem 4] in the interval \(0<\theta '<\pi /2\), which is the unique minimum of \(\Delta (\theta ')\) for \(0<\theta '< \pi /2.\) Hence, for \(0<\theta <\theta ^*,\) taking \(h_{\theta ^*}\) the function constructed in Proposition 3.6 associated to Levenshtein’s optimal polynomial \(g_{n-1,\theta ^*}\) for angle \(\theta ^*\) in dimension \(n-1\), and \(g_{n,\theta }\) Levenshtein’s optimal polynomial for angle \(\theta \) in dimension n, we obtain

for sufficiently large n. This concludes the proof of the corollary. \(\square \)
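The numerical value of \(\theta ^*\) quoted in the proof can be reproduced with a few lines of Python. The sketch below is not taken from the paper: it assumes that the stationarity condition \(\frac{d}{d\theta '}\Delta (\theta ')=0\) reduces to the Kabatyanskii–Levenshtein equation \(\cos \theta '\log \frac{1+\sin \theta '}{1-\sin \theta '}=(1+\cos \theta ')\sin \theta '\), and the helper names `kl_stationarity` and `bisect_root` are hypothetical.

```python
import math


def kl_stationarity(theta: float) -> float:
    """Assumed stationarity function: its root in (0, pi/2) should be theta*.

    g(theta) = cos(theta) * log((1 + sin) / (1 - sin)) - (1 + cos) * sin
    """
    s, c = math.sin(theta), math.cos(theta)
    return c * math.log((1.0 + s) / (1.0 - s)) - (1.0 + c) * s


def bisect_root(f, lo: float, hi: float, tol: float = 1e-13) -> float:
    """Plain bisection; assumes f(lo) and f(hi) have opposite signs."""
    f_lo = f(lo)
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0.0) == (f_lo > 0.0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


# g is positive at 0.5 rad and negative at 1.5 rad, so a root lies between.
theta_star = bisect_root(kl_stationarity, 0.5, 1.5)
theta_star_deg = math.degrees(theta_star)
print(theta_star, theta_star_deg)
```

Under this assumption the root should agree with the values \(62.997^{\circ }\) and 1.0995124 radians that appear in the text.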

3.4 Constant improvement to Barg–Musin and Cohn–Zhao

In this subsection, we sketch the ideas that go into proving Theorem 1.1, which improves inequality (9) of Barg–Musin by a constant factor of at least 0.4325 for every angle \(\theta \) with \(0<\theta <\theta ^*\). The improvement to the Cohn–Zhao inequality (10) for sphere packings is similar. We will complete the technical details in the rest of the paper.

Recall that given any test function \(g_{\theta '}\in D(d\mu _{\frac{n-4}{2}},\cos \theta ^{\prime })\) and F an arbitrary integrable real valued function on \([-1,1]\), we defined

in Eq. (21), using which we defined the function

\(h(\varvec{a},\varvec{b})\) is a point-pair invariant function, and so we viewed it as a function h(t) of \(t:=\langle \varvec{a},\varvec{b}\rangle \). As we saw in the proof of Proposition 3.6, for F positive integrable and compactly supported on [r, R], \(F(u)F(v)\ne 0\) implies that \(\varvec{a},\varvec{b}\in \text {Str}_{\theta , \theta '}(\varvec{z})\), where

is the grey strip illustrated above in Fig. 4. From this, we obtained that

using which we obtained that the integrand in (29) is non-positive, giving us

Our insight is that the integrand in (29) need not be non-positive everywhere in order to have (30). In fact, we will show that when F is the characteristic function with support \([r-\delta ,R]\) for some \(\delta >\frac{c}{n}\), where \(c>0\) is independent of n, and \(g_{\theta '}\) are Levenshtein’s optimal polynomials, we continue to have (30). This corresponds to allowing \(\varvec{a},\varvec{b}\) to be contained in a slight enlargement of the strip \(\text {Str}_{\theta ,\theta '}(\varvec{z})\) (see Fig. 4).

There are two main ingredients that go into determining an explicit lower bound for \(\delta \). The first is understanding the behavior of Levenshtein’s optimal polynomials near their largest roots, which reduces to understanding the behavior of Jacobi polynomials near their largest roots. This is done in Sect. 5.1. The second is estimating the density function of the inner product matrix of triples of uniformly distributed points on high-dimensional spheres. This allows us to rewrite the integral (29) and its sphere packing analogue using different coordinates. This is done in Sect. 4.

4 Conditional density functions

In this section, we rewrite the averaging integral (29) when F is a characteristic function in different coordinates. We also do the same for sphere packings. See Eqs. (31) and (33). In order to prove Theorems 1.1 and 1.2 in Sect. 5, we will also need to estimate certain conditional densities, which is the main purpose of this section.

4.1 Conditional density for spherical codes

Let \({\mathcal {S}}\) be the space of positive semi-definite symmetric \(3\times 3\) matrices with 1 on the diagonal. Let

\[(S^{n-1})^3\rightarrow {\mathcal {S}}\]

be the map that sends triples of points to their pairwise inner products via

\[(\varvec{a},\varvec{b},\varvec{z})\mapsto \begin{bmatrix} 1 & t & u \\ t & 1 & v \\ u & v & 1\end{bmatrix},\]

where \((t,u,v):=(\langle \varvec{a},\varvec{b}\rangle ,\langle \varvec{a},\varvec{z}\rangle ,\langle \varvec{b},\varvec{z}\rangle )\). Let \(\mu (u,v,t)dudvdt\) be the density function of the pushforward of the product of uniform probability measures on \((S^{n-1})^3 \) to the coordinates (u, v, t).

Proposition 4.1

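We have

\[\mu (u,v,t)=C\cdot \det \begin{bmatrix} 1 & t & u \\ t & 1 & v \\ u & v & 1\end{bmatrix}^{\frac{n-4}{2}},\]

where C is a normalization constant such that \(\int _{{\mathcal {S}}} \mu (u,v,t) dudvdt=1\).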

Proof

The density of the conditional measure is given by

where \(\mu (t)=(1-t^2)^{\frac{n-3}{2}}\) and \(\mu (u,v;t)\) is the conditional density of u, v given t. We note that this conditional density function is proportional to

where

We write \(\varvec{z}=\varvec{z}^{\perp }+\varvec{z}^{\Vert }\), where \(\varvec{z}^{\Vert }\) is the projection of \(\varvec{z}\) onto the two dimensional plane spanned by \(\varvec{a}\) and \(\varvec{b}\) and \(\varvec{z}^{\perp }\) is orthogonal to \(\varvec{a}\) and \(\varvec{b}\). Fix \(\varvec{a}, \varvec{b}\) and consider the following map from \(S^{n-1}\)

The above map has Jacobian \(\frac{\det \begin{bmatrix} 1&{}\quad t&{}\quad u \\ t &{}\quad 1 &{}\quad v \\ u &{}\quad v &{}\quad 1\end{bmatrix}^{1/2}}{\sqrt{1-t^2}}\) with respect to the Euclidean metric. We note that the geometric locus of \(\varvec{z}^{\Vert }\) is a rhombus with area \(\frac{\varepsilon ^2}{\sqrt{1-t^2}}\). Moreover, given \(\varvec{z}^{||}\), the geometric locus of \(\varvec{z}^{\perp }\) is a sphere of dimension \(n-3\) and radius

Therefore,

from which it follows that

for some constant \(C>0\). This implies our proposition. \(\square \)
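The geometric step in the proof, namely that the component \(\varvec{z}^{\perp }\) lies on a sphere whose radius is governed by the Gram determinant, can be checked numerically. The following sketch is an illustration only: it assumes the standard identity \(|\varvec{z}^{\perp }|^2=\det G/(1-t^2)\), where G is the \(3\times 3\) matrix of pairwise inner products, and all function names are hypothetical.

```python
import math
import random


def unit_vector(n: int, rng: random.Random) -> list:
    """Uniform random point on S^{n-1}, obtained by normalizing a Gaussian vector."""
    v = [rng.gauss(0.0, 1.0) for _ in range(n)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(p: list, q: list) -> float:
    return sum(x * y for x, y in zip(p, q))


rng = random.Random(0)
n = 12
a, b, z = (unit_vector(n, rng) for _ in range(3))

# Pairwise inner products, in the notation of the text.
t, u, v = dot(a, b), dot(a, z), dot(b, z)

# Determinant of [[1, t, u], [t, 1, v], [u, v, 1]].
gram_det = 1.0 + 2.0 * t * u * v - t * t - u * u - v * v

# Orthonormal basis (a, e2) of span(a, b) via Gram-Schmidt.
b_perp = [bi - t * ai for ai, bi in zip(a, b)]
b_perp_norm = math.sqrt(dot(b_perp, b_perp))
e2 = [x / b_perp_norm for x in b_perp]

# z_perp is the part of z orthogonal to both a and b.
c1, c2 = dot(z, a), dot(z, e2)
z_perp = [zi - c1 * ai - c2 * ei for zi, ai, ei in zip(z, a, e2)]
perp_norm_sq = dot(z_perp, z_perp)

# Assumed identity: squared distance from z to span(a, b).
predicted = gram_det / (1.0 - t * t)
print(perp_norm_sq, predicted)
```

The two printed numbers should agree up to rounding, which is consistent with the exponent of the determinant being driven by the \((n-3)\)-dimensional sphere on which \(\varvec{z}^{\perp }\) lives.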

Recall that \(\theta <\theta ^{\prime }\). Let \(s^{\prime }=\cos (\theta ^{\prime })\) and \(s=\cos (\theta ).\) Note that \(s^{\prime }<s\) and for \(r=\sqrt{\frac{s-s^{\prime }}{1-s^\prime }}\), we have \(s^{\prime }=\frac{s-r^2}{1-r^2}\) and \(0< r<1\). Let \(0<\delta =o(\frac{1}{\sqrt{n}})\) that we specify later, and define

See Fig. 4. \(\varvec{a},\varvec{b}\) are in the shaded areas corresponding to u, v being in the support of \(\chi \).
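The relation between r, s and \(s^{\prime }\) stated above is a short algebraic identity; a quick numerical check may help when experimenting with specific angles. The angles chosen below are arbitrary sample values, not values used in the paper.

```python
import math

# Sample angles with theta < theta_prime <= pi/2 (illustrative choice).
theta = math.radians(50.0)
theta_prime = math.radians(62.997)

s = math.cos(theta)
s_prime = math.cos(theta_prime)

# r as defined in the text.
r = math.sqrt((s - s_prime) / (1.0 - s_prime))

# The text claims s' = (s - r^2) / (1 - r^2) and 0 < r < 1.
s_prime_recovered = (s - r * r) / (1.0 - r * r)
print(r, s_prime, s_prime_recovered)
```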

Recall (13), and denote

Let \(\mu (x;t,\chi )\) be the induced density function on x subject to the conditions of fixed t and \(r-\delta \le u,v\le R\). Precisely, up to a positive constant multiple that depends on \(n,t, R, r-\delta \), we have

where the integral is over the curve \(C_{x,t}\subset {\mathbb {R}}^2\) that is given by \(\frac{t-uv}{\sqrt{(1-u^2)(1-v^2)}}=x\) (Fig. 5), dl is the induced Euclidean metric on \(C_{x,t},\) and

\(\varvec{a}\) and \(\varvec{b}\) are two points with fixed \(t=\langle \varvec{a},\varvec{b} \rangle \). The two outer curves within the shaded regions represent the 2-dimensional locus of points \(\varvec{z}\) having (u, v) on the curve \(C_{x,t}\). Shaded region corresponds to the support of \(\chi (u)\chi (v)\)

We explicitly compute \(\mu ^*(x,l,t)\). We have

from which it follows that

Hence, up to a positive constant multiple that depends on \(n,t, R, r-\delta \),

We may write the test function constructed in Eq. (22) with \(F=\chi \) and any given g as

where \(t=\langle \varvec{a},\varvec{b}\rangle \); see Sect. 3.4. We define for complex x

Proposition 4.2

Suppose that \(|x-s^{\prime }|=o(\frac{1}{\sqrt{n}}).\) We have, up to a positive constant multiple depending on \(n,s,R,r-\delta \),

Proof

Let \(C_x:=C_{x,s}\). Note that

and

Furthermore,

Hence, up to a positive constant multiple depending on \(n,s,R,r-\delta \),

Suppose that \(r\le u,v\le R\). Then, by the definition of R, the equality

occurs when \((u,v)\in \{(r,R),(R,r),(r,r)\}\). Furthermore, when \(|x-s'|=o\left( \frac{1}{\sqrt{n}}\right) \), we must have \((u,v)\in \{(r,R),(R,r),(r,r)\}\) up to \(o\left( \frac{1}{\sqrt{n}}\right) \). Since the integrand \(\chi (u)\chi (v)(s-uv)^{n-4}\) of the last integral above is exponentially larger when \(u,v=r+o\left( \frac{1}{\sqrt{n}}\right) \), the main contribution of the integral comes when u and v are near r up to \(o\left( \frac{1}{\sqrt{n}}\right) \). Writing \(u=r+\tilde{u}\) and \(v=r+\tilde{v},\) where \(\tilde{u}, \tilde{v}=o(\frac{1}{\sqrt{n}}),\) we have

Hence, up to a positive constant multiple depending on \(n,s,R,r-\delta \),

Recall the following inequalities, which follow easily from the Taylor expansion of \(\log (1+x)\)

for \(|a|\le n/2.\) We apply the above inequalities to estimate the integral, and obtain

We approximate the curve \(C_x\) with the following line

It follows that

from which the conclusion follows. \(\square \)

4.2 Conditional density for sphere packings

Let \(s=\cos (\theta )\) and \(r=\frac{1}{\sqrt{2(1-s)}}\), where \(\frac{1}{3}\le s\le \frac{1}{2}\). Let \(0<\delta =\frac{c_1}{n}\) for some fixed \(c_1>0\) that we specify later, and define

Let \(\varvec{a},\varvec{b}\) be two points chosen independently at random in \({\mathbb {R}}^{n}\) with respect to the Euclidean measure such that \(|\varvec{a}|,|\varvec{b}|\le r+\delta ,\) where |.| is the Euclidean norm. Let

\[U:=|\varvec{a}|,\quad V:=|\varvec{b}|,\]

and let \(\alpha \) be the angle between \(\varvec{a}\) and \(\varvec{b}\). The pushforward of the product measure on \({\mathbb {R}}^{n}\times {\mathbb {R}}^{n}\) onto the coordinates \((U,V,\alpha )\) is, up to a positive scalar depending only on n, the measure

Let

\[T:=|\varvec{a}-\varvec{b}|=\sqrt{U^2+V^2-2UV\cos \alpha },\]

which follows from the cosine law. We have

Hence, the pushforward of the product measure on \({\mathbb {R}}^{n}\times {\mathbb {R}}^{n}\) onto the coordinates \((U,V,T)\in {\mathbb {R}}^3\) has the following density function up to a positive scalar depending only on n:

where \(\Delta (U,V,T)\) is the Euclidean area of the triangle with sides U, V, T. If no such triangle exists, then \(\Delta (U,V,T)=0\). Let \(\mu (x;T,\chi )\) be the induced density function on x subject to the conditions of fixed T and \( U,V\le r+\delta \). Precisely, we have, up to a positive constant multiple depending on \(n, r+\delta \) and T, that

where the integral is over the curve \(C_{x,T}\subset {\mathbb {R}}^2\) that is given by \(\frac{U^2+V^2-T^2}{2UV}=x\) (Fig. 6) and dl is the induced Euclidean metric on \(C_{x,T},\) and

\(\varvec{a}\) and \(\varvec{b}\) are two points with \(T=|\varvec{a}-\varvec{b}|\). The two outer curves within the shaded region represent the locus of points \(\varvec{z}\) having (U, V) on the curve \(C_{x,T}\), that is, \(\varvec{z}\) with respect to which \(\varvec{a}\) and \(\varvec{b}\) have angle whose cosine is x. The shaded region corresponds to the support of \(\chi (U)\chi (V)\)

We have

and

Hence,

up to a positive constant multiple depending on \(n, r+\delta \) and T.

From Lemma 3.7, Eq. (23) with \(F=\chi \) and any given g may be written as

We now study the scaling property of \(\mu (x;T,\chi ).\) Let \(\chi _{T}(x):=\chi (\frac{x}{T}).\)

Lemma 4.3

We have

Proof

Note that

and x is invariant by scaling U, V, T. The conclusion follows. \(\square \)
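The scaling argument rests on the fact that \(x=\frac{U^2+V^2-T^2}{2UV}\) is the cosine of the angle opposite the side T and is homogeneous of degree zero. A minimal numerical sketch, with arbitrary sample side lengths and a hypothetical helper name, is:

```python
import math


def cos_angle_from_sides(U: float, V: float, T: float) -> float:
    """Cosine of the angle between sides U and V in a triangle with third side T."""
    return (U * U + V * V - T * T) / (2.0 * U * V)


# Sample lengths and angle (illustrative values only).
U, V, alpha = 0.9, 0.8, math.radians(65.0)

# Third side from the cosine law.
T = math.sqrt(U * U + V * V - 2.0 * U * V * math.cos(alpha))

x = cos_angle_from_sides(U, V, T)

# Scaling all three sides by the same factor leaves x unchanged.
scale = 3.7
x_scaled = cos_angle_from_sides(scale * U, scale * V, scale * T)
print(x, math.cos(alpha), x_scaled)
```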

Let \(\mu (x;\chi ):=\mu (x;1,\chi ),\) and

Proposition 4.4

Suppose that \(|x-s|\le \frac{c_2}{n}\), \(x<1/2\) and \(\delta =\frac{c_1}{n}\). We have

up to a positive scalar multiple making this a probability measure on \([-1,1]\), and where E is a function of x with the uniform bound \(|E|<\frac{(4c_2+2c_1+2)^2}{n}\) for \(n\ge 2000\).

Proof

We have

where \(C_x:=C_{x,1}.\) We note that up to a positive scalar depending only on n,

Hence,

Suppose that U and V are in the support of \(\chi .\) By concentration of mass, we may assume that \(U=r+\tilde{U}\) and \(V=r+\tilde{V},\) with \(\tilde{U}, \tilde{V}\le \frac{c_1}{n}.\) From (32), we have

where

Since \(|x-s|\le \frac{c_2}{n},\) and \(\frac{1}{2}<2r(1-x)^2,\) it follows that \(-\frac{c_1+2c_2}{n}< \tilde{U}, \tilde{V}\le \frac{c_1}{n}\) for \(n\ge 2000\). Hence,

We have

where \(|E_2|< 4\frac{(c_1+2c_2)^2}{n^2}.\) Furthermore,

where \(|E_2'|<|E_2|.\) Hence,

Note that

where \(E_2''\le 4\frac{(c_1+2c_2)^2}{n}\) for \(n\ge 2000\). We parametrize the curve \(C_x\) with V to obtain

We have

where

for some \(V_1\in \left( r-\frac{c_1+2c_2}{n},r+\frac{c_1}{n}\right) ,\) which implies \(\left| \frac{(1-x^2)}{(1-(1-x^2)V_1^2)^{3/2}} \right| <6.\) Hence,

Hence,

where

and

The first equality \(=^+\) above means equal to 0 if \(r+\delta <r_1\). Therefore,

up to a positive scalar multiple, where \(|E|<\frac{(4c_2+2c_1+2)^2}{n}\) for \(n\ge 2000\). This completes the proof of our Proposition. \(\square \)

5 Comparison with previous bounds

We define Jacobi polynomials, state some of their properties, and prove a local approximation result for Jacobi polynomials in Sect. 5.1. In Sect. 5.2, we improve bounds on \(\theta \)-spherical codes. In Sect. 5.3, we improve upper bounds on sphere packing densities. The general constructions of our test functions were provided in Sect. 3. For each of our main theorems, we determine the largest values of \(\delta =O(1/n)\) measuring the extent to which the supports of the characteristic functions \(\chi \) in Propositions 3.6 and 3.8 could be enlarged. See the end of Sect. 3 for an outline of the general strategy.

5.1 Jacobi polynomials and their local approximation

Recall definition (7) of \(M_{\textrm{Lev}}(n,\theta )\). Levenshtein proved inequality (6) by applying Delsarte’s linear programming bound to a family of even and odd degree polynomials inside \(D(d\mu _{\frac{n-3}{2}},\cos \theta ),\) which we now discuss. In order to define these Levenshtein polynomials, we record some well-known properties of Jacobi polynomials (see [28, Chapter IV]) that we will also use in the rest of the paper.

We denote by \(p^{\alpha ,\beta }_{d}(t)\) Jacobi polynomials of degree d with parameters \(\alpha \) and \(\beta \). These are orthogonal polynomials with respect to the probability measure

on the interval \([-1,1]\) with the normalization that gives

\(p^{\alpha ,\beta }_{d}(t)\) has d simple real roots \(t_{1,d}^{\alpha ,\beta }> t_{2,d}^{\alpha ,\beta }> \dots >t_{d,d}^{\alpha ,\beta }.\) When \(\alpha =\beta \), we denote the measure \(d\mu _{\alpha ,\alpha }\) simply as \(d\mu _{\alpha }\).

When proving our local approximation result on Levenshtein’s optimal polynomials in the rest of this subsection, we use the fact that the Jacobi polynomial \(p_d^{\alpha ,\beta }(t)\) satisfies the differential equation

By [21, Lemma 5.89],

It is also well-known that for fixed \(\alpha \), \(t^{\alpha +1,\alpha +1}_{1,d}\rightarrow 1\) as \(d\rightarrow \infty \). Henceforth, let \(d:=d(n,\theta )\) be uniquely determined by \(t^{\alpha +1,\alpha +1}_{1,d-1}< \cos (\theta ) \le t^{\alpha +1,\alpha +1}_{1,d}\). Let [21, Lemma 5.38]

where \(\alpha :=\frac{n-3}{2}\) in our case. Levenshtein proved that \(g_{n,\theta }\in D(d\mu _{\frac{n-3}{2}},\cos \theta ),\) and

By (3), this gives

As part of our proofs of our main theorems in the next subsections, we need to determine local approximations to Jacobi polynomials \(p_d^{\alpha ,\beta }\) in the neighbourhood of points \(s\in (-1,1)\) such that \(s\ge t_{1,d}^{\alpha ,\beta }\). This is obtained using the behaviour of the zeros of Jacobi polynomials. Using this, we obtain suitable local approximations of Levenshtein’s optimal functions near s.
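The objects of this subsection, the largest root \(t_{1,d}^{\alpha ,\beta }\) and the degree \(d=d(n,\theta )\), are easy to explore numerically in small cases. The sketch below is not the authors' code. It evaluates Jacobi polynomials with the classical three-term recurrence in the classical normalization (which may differ from the normalization used in the paper by a constant factor, so the roots are unaffected), and the names `jacobi`, `largest_root`, `count_sign_changes` and `levenshtein_degree` are hypothetical.

```python
import math


def jacobi(d: int, alpha: float, beta: float, x: float) -> float:
    """P_d^{(alpha,beta)}(x) via the classical three-term recurrence."""
    if d == 0:
        return 1.0
    p_prev = 1.0
    p_curr = (alpha + 1.0) + 0.5 * (alpha + beta + 2.0) * (x - 1.0)
    for k in range(2, d + 1):
        c = 2.0 * k + alpha + beta
        a1 = 2.0 * k * (k + alpha + beta) * (c - 2.0)
        a2 = (c - 1.0) * (c * (c - 2.0) * x + alpha * alpha - beta * beta)
        a3 = 2.0 * (k + alpha - 1.0) * (k + beta - 1.0) * c
        p_prev, p_curr = p_curr, (a2 * p_curr - a3 * p_prev) / a1
    return p_curr


def largest_root(d: int, alpha: float, beta: float, step: float = 1e-4) -> float:
    """Largest root of P_d: scan down from x = 1, then bisect.

    Assumes d >= 1 and that `step` is smaller than the gap between the two
    largest roots, which holds for the small degrees used here.
    """
    if d < 1:
        raise ValueError("degree must be at least 1")
    hi, lo = 1.0, 1.0 - step  # P_d(1) > 0 in this normalization
    while jacobi(d, alpha, beta, lo) > 0.0:
        hi, lo = lo, lo - step
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if jacobi(d, alpha, beta, mid) > 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


def count_sign_changes(d: int, alpha: float, beta: float, cells: int = 20000) -> int:
    """Count sign changes of P_d on a midpoint grid of (-1, 1)."""
    vals = [jacobi(d, alpha, beta, -1.0 + (2 * k + 1) / cells) for k in range(cells)]
    return sum(1 for p, q in zip(vals, vals[1:]) if p * q < 0.0)


def levenshtein_degree(n: int, theta: float) -> int:
    """Smallest d with cos(theta) <= t_{1,d}^{alpha+1,alpha+1}, alpha = (n-3)/2."""
    alpha = (n - 3) / 2.0
    target = math.cos(theta)
    d = 1
    while largest_root(d, alpha + 1.0, alpha + 1.0) < target:
        d += 1
    return d


legendre_root = largest_root(2, 0.0, 0.0)        # Legendre P_2 = (3x^2 - 1)/2
num_roots = count_sign_changes(6, 1.5, 1.5)      # a degree 6 example
d_example = levenshtein_degree(10, math.radians(60.0))
print(legendre_root, num_roots, d_example)
```

Because the largest roots increase with d, the loop in `levenshtein_degree` returns the unique d with \(t^{\alpha +1,\alpha +1}_{1,d-1}< \cos (\theta ) \le t^{\alpha +1,\alpha +1}_{1,d}\) whenever \(d\ge 2\). For large n or d a dedicated root finder would be preferable to this simple scan.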

Proposition 5.1

Suppose that \(\alpha \ge \beta \ge 0,\) \(|\alpha -\beta |\le 1\), \(d\ge 0\) and \(s\in [t_{1,d}^{\alpha ,\beta },1)\). Then, we have

where,

with

Proof

Consider the Taylor expansion

of \(p_d^{\alpha ,\beta }\) centered at s. We prove the proposition by showing that for \(s\in [t_{1,d}^{\alpha ,\beta },1)\), the higher degree terms in the Taylor expansion are small in comparison to the linear term. Indeed, suppose \(k\ge 1\). Then, using Eq. (34),

where the last inequality follows from the fact that the roots of a Jacobi polynomial interlace with those of its derivative. However, the last quantity is equal to \(\frac{(d^2/dt^2)p_d^{\alpha ,\beta } (s)}{(d/dt)p_d^{\alpha ,\beta }(s)}\). We proceed to show that

Indeed, we know from the differential equation (35) that

However, since s is to the right of the largest root of \(p_d^{\alpha ,\beta }\), \(p_d^{\alpha ,\beta }(s)\ge 0\). Therefore,

from which inequality (37) follows. As a result, the degree \(k+1\) term compares to the linear term as

Consequently, for every \(k\ge 1\),

As a result, we obtain that

where

with

\(\square \)

5.2 Improving spherical codes bound

We prove a stronger version of Theorem 1.1 that we now state.

Theorem 5.2

Fix \(\theta <\theta ^*\) and suppose \(0<\theta <\theta '\le \pi /2\). Then there is a function \(h\in D(d\mu _{\frac{n-3}{2}},\cos \theta )\) such that

where \(c_n\le 0.4325\) for large enough n independent of \(\theta \) and \(\theta '\).

Proof

Without loss of generality, we may assume that \(\cos (\theta ^{\prime })=t^{\alpha +1,\alpha +\varepsilon }_{1,d}\) for some \(\varepsilon \in \{0,1\}\) and \(\alpha :=\frac{n-4}{2}.\) Indeed, recall from Sect. 5.1 that d is uniquely determined by \(t^{\alpha +1,\alpha +1}_{1,d-1}< \cos (\theta ') \le t^{\alpha +1,\alpha +1}_{1,d}\),

and

We also have

where the above follows from \(\cos (\theta ^{\prime })\le t^{\alpha +1,\alpha +\varepsilon }_{1,d}\) and \(\lambda _n(\theta ,\theta ^{\prime })\) is the ratio of volume of the spherical cap with radius \(\frac{\sin (\theta /2)}{\sin (\theta ^{\prime }/2)}\) on the unit sphere \(S^{n-1}\) to the volume of the whole sphere. Hence,

As before, \(s=\cos (\theta ),\) and \(s'=\cos (\theta ')=t^{\alpha +1,\alpha +\varepsilon }_{1,d}.\) Note that \(s^{\prime }<s\) and for \(r=\sqrt{\frac{s-s^{\prime }}{1-s^\prime }}\), we have \(s^{\prime }=\frac{s-r^2}{1-r^2}\) and \(0< r<1\). Let \(0<\delta =O(\frac{1}{n})\) that we specify later, and define the function F for the application of Proposition 3.6 to be

Recall from (31) that

Applying Proposition 3.6 with the function F as above and the function g, we obtain the inequality in the statement of the theorem with \(c_n\le 1+o(1)\). We now prove the desired bound on \(c_n\) for sufficiently large n.

By Corollary 3.9, for any \(\delta _1>0\), if \(|s'-\cos (\theta ^*)|\ge \delta _1\), then for large n, \(M_{\textrm{Lev}}(n-1,\theta ')/\lambda _n(\theta ,\theta ')\) is exponentially worse than \(M_{\textrm{Lev}}(n-1,\theta ^*)/\lambda _n(\theta ,\theta ^*)\). Therefore, we assume that \(s'=\cos (\theta ')=t_{1,d}^{\alpha +1, \alpha +\varepsilon }\) for some \(\varepsilon \in \{0,1\}\), and that \(s'\) is sufficiently close to \(\cos \theta ^*\). We specify the precision of the difference later. Suppose \(\varepsilon =0\), and so \(s'=t^{\alpha +1,\alpha }_{1,d}\). By Proposition 5.1, we have

where

with

By Proposition 4.2, we have for \(|x-s'|=o\left( \frac{1}{\sqrt{n}}\right) \) the estimate

We need to find the maximal \(\delta >0\) such that

for every \(-1\le t\le s\). We first address the above inequality for \(t=s\). Note that the integrand is negative for \(x<s^{\prime }\) and positive for \(x>s^{\prime }.\) Hence,

Its non-positivity is equivalent to

We proceed to give a lower bound on the absolute value of the integral over \(-1\le x\le s^{\prime }.\) Later, we give an upper bound on the right hand side. By (39), we have

We note that \(2-\frac{e^{\sigma }-1}{\sigma }\) is a concave function with value 1 as \(\sigma \rightarrow 0\) and a root at \(\sigma =1.256431 \cdots \). Hence, for \(\sigma <1.25643\), we have

Note that \(\sigma (x)<1.25643\) implies

Let

Therefore, as \(n\rightarrow \infty \)

We change the variable \(s'-x\) to z and note that

for \(|x-s'|<\lambda .\) Hence, we obtain that as \(n\rightarrow \infty \) and \(|x-s'|<\lambda \),

for \(|x-s'|<\lambda .\) We replace the above asymptotic formulas and obtain that as \(n\rightarrow \infty \), the right hand side of (40) is at least

We now give an upper bound on the absolute value of the integral over \(s'\le x\le 1.\) We note that

Let \(\Lambda := \frac{2(s-s')^{1/2}(1-s')^{3/2}\delta }{(1-s)}\). We have

for \(x-s'>\Lambda .\) We have

where

Therefore,

We choose \(\delta \) such that

For large enough n we may replace the numerical value \(\cos (1.0995124)\) for \(s'\) as we may assume that \(s'\) is close to \(\cos (\theta ^*)\). Furthermore, write \(v:=nz\), and divide by \(\delta \) to obtain

Here, we have also used that \(\Lambda =\frac{2\delta (s-s')^{1/2}(1-s')^{3/2}}{(1-s) }\). Also, note that \(n\lambda =2.196823 \cdots \) when \(s'\) is near \(\cos (1.0995124)\). We have the Taylor expansion around \(v=0\)

with error \(|Er|<2\times 10^{-5}\) if \(n\Lambda <0.92\), which we assume to be the case. Simplifying, we want to find the maximal \(n\Lambda \) such that

where the error Er again satisfies \(|Er|\le 2\times 10^{-5}\). A numerical computation gives us that the inequality is satisfied when \(n\Lambda \le 0.915451 \cdots \). Consequently, if we choose \(\delta =\ell /n\), then we must have

In this case, the cap of radius \(\sqrt{1-r^2}\) becomes \(\sqrt{1-(r-\delta )^2}=\sqrt{1-r^2}\left( 1+\frac{\ell r}{n(1-r^2)}\right) +O(1/n^2)\). Note that \(r=\sqrt{\frac{s-s'}{1-s'}}\), and so

We deduce that,

This computation shows that for sufficiently large n, for any \(0\le \delta \le \frac{0.83837237(1-r^2)}{nr}\), \(h(s)\le 0\). We now show that \(h(t)\le 0\) for \(-1\le t<s\). Note that

If \(s-t\) is of order greater than O(1/n), then \(r(t)<r-\delta \) for large n, and so \(h(t)\le 0\) as the integrand is negative in this case. Therefore, we may assume that \(-1\le t<s\) and \(s-t=O(1/n)\). For such t, replacing s with t in the above calculations shows that the negativity \(h(t)\le 0\) is true for all \(0\le \delta \le \frac{0.83837237(1-r(t)^2)}{nr(t)}.\) Since \(r(t)<r\), \(\frac{0.83837237(1-r(t)^2)}{nr(t)}>\frac{0.83837237(1-r^2)}{nr}\). Consequently, \(h(t)\le 0\) for every \(-1\le t\le s\) whenever \(0\le \delta \le \frac{0.83837237(1-r^2)}{nr}\).

Similarly, one obtains the same conclusion when \(\varepsilon =1\), that is \(s^{\prime }=t^{\alpha +1,\alpha +1}_{1,d}\). Therefore, our improvement to Levenshtein’s bound on \(M(n,\theta )\) for large n is by a factor of \(1/e^{0.83837237 \cdots }=0.432413 \cdots \) for any choice of angle \(0<\theta <\theta ^*\). As the error in our computations is less than \(2\times 10^{-5}\), we deduce that we have an improvement by a factor of 0.4325 for sufficiently large n. \(\square \)
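Several numerical constants in the proof can be re-derived independently. The sketch below checks the root of \(2-\frac{e^{\sigma }-1}{\sigma }\), the value of \(e^{-0.83837237}\), and the way the enlarged cap radius \(\sqrt{1-(r-\delta )^2}\) with \(\delta =\frac{0.83837237(1-r^2)}{nr}\) produces that factor. The choice \(r=0.5\), \(n=10^6\) is an arbitrary illustration, and using the n-th power of the radius ratio as a proxy for the ratio of cap volumes is a heuristic assumption rather than a statement from the paper.

```python
import math


def phi(sigma: float) -> float:
    """The concave function 2 - (e^sigma - 1)/sigma from the proof."""
    return 2.0 - (math.exp(sigma) - 1.0) / sigma


# phi(1.0) > 0 > phi(1.5), so bisection locates the positive root.
lo, hi = 1.0, 1.5
for _ in range(100):
    mid = 0.5 * (lo + hi)
    if phi(mid) > 0.0:
        lo = mid
    else:
        hi = mid
sigma_root = 0.5 * (lo + hi)

# Constant obtained in the proof and the resulting improvement factor.
ell_scaled = 0.83837237
improvement = math.exp(-ell_scaled)

# Enlarging the cap: delta = ell_scaled * (1 - r^2) / (n r).
n = 10**6
r = 0.5
delta = ell_scaled * (1.0 - r * r) / (n * r)
radius_ratio = math.sqrt((1.0 - (r - delta) ** 2) / (1.0 - r * r))

# Heuristic proxy for the growth of the cap volume in dimension n.
growth = radius_ratio**n
print(sigma_root, improvement, growth * improvement)
```

The last printed value should be close to 1, which illustrates why a choice of \(\delta \) of this size corresponds to an improvement factor of about \(e^{-0.83837237}\).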

5.3 Improving bound on sphere packing densities

In this subsection, we give our improvement to Cohn and Zhao’s [9] bound on sphere packings. Recall that in the case of sphere packings, we let \(s=\cos (\theta )\), \(r=\frac{1}{\sqrt{2(1-s)}}\), and \(\alpha =\frac{n-3}{2}\). By assumption, \(\frac{1}{3}\le s\le \frac{1}{2}\). In this case, we define for each \(0<\delta =\frac{c_1}{n}\) the function F to be used in Proposition 3.8 to be

Proof of Theorem 1.2

As in the proof of Theorem 5.2, we consider \(g=g_{n,\theta }\in D(d\mu _{\frac{n-3}{2}},\theta )\). Note that by Proposition 3.8 applied to the function F above and g, we have for the function

of Eq. (33), the inequality

if \(\delta >0\) is chosen such that \(H(T)\le 0\) for \(T\ge 1\). We wish to maximize \(\delta \) under this negativity condition. Let \(n\ge 2000\) and s be a root of the Jacobi polynomial as before. As in the proof of Theorem 1.1, we begin by considering the case where \(\varepsilon =0\), that is, \(s=t^{\alpha +1,\alpha }_{1,d}\), and take g for this s. We show that \(H(1)\le 0\). For other values of T, showing \(H(T)\le 0\) follows similarly by using Lemma 4.3. Note that

To show \(H(1)\le 0\), it suffices to show that

First, we give a lower bound for the left hand side of Eq. (43). As before, Proposition 5.1 allows us to write

where

with

As before,

Note that if \(\sigma (x)<1.25643\), ensuring that the right hand side of the bound on \(1+A(x)\) is non-negative, then

Let

Let

Suppose that \(|x-s|\le \frac{c_2}{n}\) and \(\delta =\frac{c_1}{n}\) for some \(0<c_1<0.81\), \(0<c_2<3.36\). Note that for such a \(c_1\) and \(n\ge 2000\), the assumption \(s\le 1/2\) implies that \(r+\delta \le 1\). By Proposition 4.4, we have \(\mu (x;\chi )=0\) for \(r_1\ge r+\delta .\) Otherwise,

for some constant \(C_n>0\) making \(\mu (x;\chi )\) a probability measure on \([-1,1]\), and where \(|E|<\frac{(4c_2+2c_1+2)^2}{n}\) for \(n\ge 2000\). In particular, for such \(c_1,c_2\), we have \(|E|<\frac{292}{n}\). By letting \(z:=s-x\) and \(v:=\frac{(ns+1)z}{(1-s^{2})}\), Taylor expansion approximations imply that inequality (43) is satisfied for \(\frac{1}{3}\le s\le \frac{1}{2}\) and large n if

By numerics similar to those done for spherical codes, one finds that the maximal such \(c_1<1\) is \(0.66413470 \cdots \). Therefore, for every \(\frac{1}{3}\le s\le \frac{1}{2}\) and \(n\rightarrow \infty \), we have an improvement at least as good as

Note that for such s, as \(n\rightarrow \infty \), this gives us an improvement of at most 0.5148, a universal such improvement factor.

Returning to the case of general \(n\ge 2000\) and \(\frac{1}{3}\le s\le \frac{1}{2}\), given such an n we need to maximize \(c_1<0.81\) such that

By a numerical calculation with Sage, we obtain that the improvement factor for any \(\frac{1}{3}\le s\le \frac{1}{2}\) and any \(n\ge 2000\) is at least as good as

On the other hand, if we fix s such that s is sufficiently close to \(s^*=\cos (\theta ^*)\), then the same kind of calculations as above give us an asymptotic improvement constant of 0.4325, the same as in the case of spherical codes. In fact, for \(n\ge 2000\), we have an improvement factor at least as good as

over the combination of Cohn–Zhao [9] and Levenshtein’s optimal polynomials [20].

The case \(s=t^{\alpha +1,\alpha +1}_{1,d}\) follows in exactly the same way. This completes the proof of our theorem. \(\square \)
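Two of the numerical claims in this proof are simple arithmetic and can be verified directly. The sketch below checks that \((4c_2+2c_1+2)^2<292\) at the extreme values \(c_1=0.81\), \(c_2=3.36\), and evaluates \(e^{-c_1}\) at \(c_1=0.66413470\). Reading the universal factor 0.5148 as \(e^{-c_1}\) is an assumption made here for illustration, since the displayed formula giving the exact dependence on s is not reproduced in this text.

```python
import math

# Extreme admissible constants from the proof.
c1_max, c2_max = 0.81, 3.36

# Constant in the uniform bound |E| < (4 c2 + 2 c1 + 2)^2 / n.
e_constant = (4.0 * c2_max + 2.0 * c1_max + 2.0) ** 2

# Maximal c1 found numerically in the asymptotic regime.
c1_opt = 0.66413470

# Assumed form of the universal improvement factor.
factor = math.exp(-c1_opt)
print(e_constant, factor)
```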

Remark 45

We end this section by saying that our improvements above are based on a local understanding of Levenshtein’s optimal polynomials, and that there is a loss in our computations. By doing numerics, we may do computations without having to rely on such local approximations.

6 Numerics

In Theorem 1.2, we improved the sphere packing densities by a factor of 0.4325 for sufficiently high dimensions. There is a loss in our estimates due to neglecting the contribution of Levenshtein’s polynomials away from their largest roots, as giving rigorous estimates is difficult. In this section, we numerically investigate the behavior of our constant improvement factors for sphere packing densities by considering those neglected terms in dimensions up to 130. As we noted before in the introduction, in low dimensions, there are better bounds on sphere packing densities using semi-definite programming, and so the objective of this section is guessing the improvement over 0.4325 in high dimensions.

Before our work, the best known upper bound on sphere packing densities in high dimensions was obtained using inequalities

where \(\text {Lev}(n,\theta )\) is the linear programming bound using Levenshtein’s optimal polynomials [20, eq.(3),(4)]. Note that \(\text {Lev}(n,\theta )\le M_{\textrm{Lev}}(n,\theta )\) and equality occurs when \(\cos (\theta )=t^{\alpha +1,\alpha +\varepsilon }_{1,d}.\) In this section, we apply Proposition 3.8 to Levenshtein’s optimal polynomials for various angles \(\theta \) (columns of Table 2) and obtain

with maximal r (in Proposition 3.8), where \(\alpha _n(\theta )\) are the entries of the table. Note that in Table 2, the improvement factors appear to gradually become independent of \(\theta \) as n enlarges. We conjecture that they tend to \(\frac{1}{e}\) as \(n\rightarrow \infty \).

Data availability

The data is in Table 2.

References

Afkhami-Jeddi, N., Cohn, H., Hartman, T., de Laat, D., Tajdini, A.: High-dimensional sphere packing and the modular bootstrap. J. High Energy Phys. (12), Paper No. 066, 44 (2020)

Agrell, E., Vardy, A., Zeger, K.: Upper bounds for constant-weight codes. IEEE Trans. Inform. Theory 46(7), 2373–2395 (2000)

Bachoc, C., Vallentin, F.: New upper bounds for kissing numbers from semidefinite programming. J. Amer. Math. Soc. 21(3), 909–924 (2008)

Barg, A., Musin, O.R.: Codes in spherical caps. Adv. Math. Commun. 1(1), 131–149 (2007)

Boyvalenkov, P.G., Danev, D.P., Bumova, S.P.: Upper bounds on the minimum distance of spherical codes. IEEE Trans. Inform. Theory 42(5), 1576–1581 (1996)

Cohn, H., de Laat, D., Salmon, A.: Three-point bounds for sphere packing. arXiv e-prints, arXiv:2206.15373 (2022)

Cohn, H., Elkies, N.: New upper bounds on sphere packings. I. Ann. Math. (2) 157(2), 689–714 (2003)

Cohn, H., Kumar, A., Miller, S.D., Radchenko, D., Viazovska, M.: The sphere packing problem in dimension 24. Ann. Math. (2) 185(3), 1017–1033 (2017)

Cohn, H., Zhao, Y.: Sphere packing bounds via spherical codes. Duke Math. J. 163(10), 1965–2002 (2014)

Conway, J.H., Sloane, N.J.A.: Sphere packings, lattices and groups, Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences], vol. 290, 3rd edn. Springer, New York (1999). With additional contributions by E. Bannai, R. E. Borcherds, J. Leech, S. P. Norton, A. M. Odlyzko, R. A. Parker, L. Queen and B. B. Venkov

de Courcy-Ireland, M., Dostert, M., Viazovska, M.: Six-dimensional sphere packing and linear programming. arXiv e-prints, arXiv:2211.09044 (2022)

de Laat, D., de Oliveira Filho, F.M., Vallentin, F.: Upper bounds for packings of spheres of several radii. Forum Math. Sigma 2, e23 (2014)

Delsarte, P.: Bounds for unrestricted codes, by linear programming. Philips Res. Rep. 27, 272–289 (1972)

Fejes Tóth, L.: Über die dichteste Kugellagerung. Math. Z. 48, 676–684 (1943)

Hales, T.C.: A proof of the Kepler conjecture. Ann. Math. (2) 162(3), 1065–1185 (2005)

Hartman, T., Mazáč, D., Rastelli, L.: Sphere packing and quantum gravity. J. High Energy Phys. (12):048, 66 (2019)

Kabatjanskiĭ, G.A., Levenšteĭn, V.I.: Bounds for packings on the sphere and in space. Problemy Peredači Informacii 14(1), 3–25 (1978)

Krasikov, I.: An upper bound on Jacobi polynomials. J. Approx. Theory 149(2), 116–130 (2007)

Leijenhorst, N., de Laat, D.: Solving clustered low-rank semidefinite programs arising from polynomial optimization. arXiv e-prints, arXiv:2202.12077 (2022)

Levenšteĭn, V.I.: Boundaries for packings in \(n\)-dimensional Euclidean space. Dokl. Akad. Nauk SSSR 245(6), 1299–1303 (1979)

Levenšteĭn, V.I.: Universal bounds for codes and designs. In: Handbook of coding theory, vols. I, II, pp. 499–648. North-Holland, Amsterdam (1998)

Machado, F.C., de Oliveira Filho, F.M.: Improving the semidefinite programming bound for the kissing number by exploiting polynomial symmetry. Exp. Math. 27(3), 362–369 (2018)

Mittelmann, H.D., Vallentin, F.: High-accuracy semidefinite programming bounds for kissing numbers. Exp. Math. 19(2), 175–179 (2010)

Rogers, C.A.: The packing of equal spheres. Proc. Lond. Math. Soc. 3(8), 609–620 (1958)

Sardari, N.T.: The Siegel variance formula for quadratic forms. arXiv e-prints, arXiv:1904.08041 (2019)

Sidel’nikov, V.M.: New estimates for the closest packing of spheres in \(n\)-dimensional Euclidean space. Mat. Sb. (N.S.) 95(137), 148–158, 160 (1974)

Stieltjes, T.J.: Sur les racines de l’équation \(X_n=0\). Acta Math. 9, 385–400 (1887)

Szegö, G.: Orthogonal Polynomials. American Mathematical Society Colloquium Publications, vol. 23. American Mathematical Society, New York (1939)

Viazovska, M.S.: The sphere packing problem in dimension 8. Ann. Math. (2) 185(3), 991–1015 (2017)

Acknowledgements

Both authors are thankful to Alexander Barg, Peter Boyvalenkov, Henry Cohn, Matthew de Courcy-Ireland, Mehrdad Khani Shirkoohi, Peter Sarnak, and Hamid Zargar. N. T. Sardari’s work is partially supported by the National Science Foundation under Grant No. DMS-2015305. N. T. Sardari is also grateful to the Institute for Advanced Study and the Max Planck Institute for Mathematics in Bonn for their hospitality and financial support. M. Zargar was supported by the Max Planck Institute for Mathematics in Bonn, SFB1085 at the University of Regensburg, and the University of Southern California.

Funding

Open access funding provided by SCELC, Statewide California Electronic Library Consortium.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sardari, N.T., Zargar, M. New upper bounds for spherical codes and packings. Math. Ann. 389, 3653–3703 (2024). https://doi.org/10.1007/s00208-023-02738-z
