Abstract

Objectives

The aim of the present review was to evaluate the role of holographic imaging and its visualization techniques in providing more detailed and intuitive anatomy of the surgical area and assist in the precise implementation of surgery.

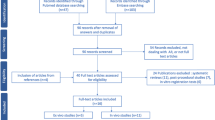

Materials and methods

Medline, Embase, and Cochrane Central databases were searched for literature on the application of holographic imaging in partial nephrectomy (PN), and the history, development, application in PN as well as the future direction were reviewed.

Results

A total of 304 papers that met the search requirements were included and summarized. Over the past decade, holographic imaging has been increasingly used for preoperative planning and intraoperative navigation in PN. At present, the intraoperative guidance method of overlapping and tracking virtual three-dimensional images on the endoscopic view in an augmented reality environment is generally recognized. This method is helpful for selective clamping, the localization of endophytic tumors, and the fine resection of complex renal hilar tumors. Preoperative planning and intraoperative navigation with holographic imaging are helpful in reducing warm ischemia time, preserving more normal parenchyma, and reducing serious complications.

Conclusions

Holographic image-guided surgery is a promising technology, and future directions include artificial intelligence modeling, automatic registration, and tracking.

1 Introduction

The incidence of renal cell carcinoma (RCC) ranks within the top 20 among all solid tumors [1]. With the increasing number of early-stage RCC cases and the improvement of surgical techniques and instruments, the proportion of partial nephrectomy (PN) is increasing [2]. The European Association of Urology guidelines have suggested that the oncological outcomes achieved by PN are comparable to those achieved by radical nephrectomy (RN) for T1 RCC. PN also preserves kidney function better and potentially limits the incidence of cardiovascular disorders [3].

PN is technically demanding and has various challenges. (1) During the operation, the key targets that need to be handled are blocked by perirenal fat or adjacent organs, making them invisible to the naked eye, and they often cannot be found quickly and accurately due to the lack of clear anatomical landmarks for guidance. Delays or mistakes in this process may lead to vascular injury, opening of the collecting system, tumor rupture, or other risks. (2) The information provided by two-dimensional (2D) CT/MR imaging is insufficient. Thus, it is necessary for surgeons to “translate” cross-sectional planar imaging into stereoscopic imaging, which is a process of cognitive reconstruction. This building-in-mind process is particularly difficult for complex lesions or for inexperienced surgeons. (3) Due to an insufficient grasp of the local anatomical details of renal tumors, surgeons sometimes avoid PN for some complex cases and instead use a safer method, such as radical nephrectomy. (4) Similarly, due to the insufficient information obtained before the operation, the PN operation often turns into an “encounter”, such as encountering unknown vascular variants or failing to find endophytic tumors after kidney incision, which introduces risks to the operation.

In recent years, new technological tools have been developed for the reconstruction of three-dimensional (3D) virtual models from standard 2D imaging. Holographic imaging (also known as 3D imaging, augmented reality (AR) imaging, 3D visualization models, and holograms) is reconstructed based on surface rendering techniques from contrast CT or MRI DICOM data using 3D virtual reconstruction technology [4]. Holographic imaging provides more intuitive three-dimensional images, enhances the spatial understanding of the operator, and has a powerful interactive function, guiding the precise implementation of the operation. Previous studies have shown that the application of the holographic imaging technique in laparoscopic partial nephrectomy (LPN) and robotic-assisted partial nephrectomy (RAPN) results in reduced operative time, estimated blood loss, complications, and length of hospital stay [31]. Bertolo et al. compared 3D images with 2D images in terms of their ability to expand the PN indication for complex renal tumors [32], and they reported that more than 20% of surgeons change their decision for these complex renal tumors from RN to PN after reviewing the 3D images. These results support the potential of holographic imaging in surgical planning. Shirk et al. compared 3D VR models with conventional CT/MR imaging for surgical planning and surgical outcomes in RAPN; their results showed a reduction in operative time, estimated blood loss, clamp time, and hospital stay in the 3D group [33].

3.2.4 Navigation in surgery

Holographic imaging navigation superimposes holographic images on the real-time intraoperative endoscopic view of the anatomy, allowing the surgeon to access the targets directly while minimizing damage to surrounding vessels and other structures. Although it is not yet an automatic navigation system, holographic imaging helps the console surgeon perceive the three-dimensionality of the kidney and correctly localize the tumor, resulting in precise and safe tumor resection. Holographic imaging is particularly useful in complex renal tumor cases, such as hilar tumors, in which surgeons must perform tumor resection close to the renal vein/artery.

During holographic imaging navigation surgery, it is currently necessary to expose some anatomical landmarks, such as renal hilar vessels or renal contours, to achieve registration and tracking of holographic images with the intraoperative endoscopic view [17]. In holographic imaging navigation PN, when the virtual renal pedicle is precisely fused with the real renal vascular pedicle, the surgeon is guided to perform safe vascular dissection, to identify and clamp the renal artery or vein branches, and to implement personalized vascular management strategies, such as highly selective renal artery clamping. When the virtual kidney is completely fused with the real kidney, by adjusting the transparency of the model, the location of the endophytic tumor and the tumor relationship with the adjacent blood vessels, calyces, and other intraparenchymal structures can be visualized, which is conducive to more accurate tumor excision [34].
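The effect of adjusting the transparency of a fused model can be illustrated with plain alpha blending. The following is only a minimal sketch and not the method of any navigation system cited here: the function name `blend_overlay`, the nested-list image representation, the `mask` marking where the rendered model covers the frame, and the `opacity` parameter are all assumptions made for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Image = List[List[Pixel]]      # rows of pixels


def blend_overlay(frame: Image, model: Image,
                  mask: List[List[bool]], opacity: float) -> Image:
    """Alpha-blend a rendered model onto an endoscopic frame.

    Hypothetical helper: where mask is True, the output pixel is
    opacity * model + (1 - opacity) * frame; elsewhere the frame
    pixel is kept unchanged.
    """
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must be in [0, 1]")
    blended: Image = []
    for frame_row, model_row, mask_row in zip(frame, model, mask):
        row: List[Pixel] = []
        for f, m, inside in zip(frame_row, model_row, mask_row):
            if inside:
                # Per-channel weighted average of model and frame colours.
                row.append(tuple(round(opacity * mc + (1.0 - opacity) * fc)
                                 for mc, fc in zip(m, f)))
            else:
                row.append(f)
        blended.append(row)
    return blended
```

With `opacity` near 1 the model hides the kidney surface, and with lower values the real tissue shows through the model, which corresponds to the idea of revealing an endophytic tumor beneath the surface of the fused kidney.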

However, there are some concerns regarding holographic imaging navigation. One is the accuracy of registration of holographic images on static anatomical structures because it is not easy to precisely align virtual holographic images and their physical counterparts in spatial and rotational coordinates [35]. In LPN or RAPN, the establishment of pneumoperitoneum deforms the abdominal cavity and changes the spatial relationship of the kidney compared to that before the operation. In addition, the kidney shifts, deforms, rotates, and changes its relative position to neighboring organs due to gravity and the jostling of surgical tools. Based on these factors, the previous rigid matching technology produces a large deviation after organ deformation. Deformable models have been introduced by some researchers, and this problem can be alleviated to some extent by modifying the preoperative model during surgery [36]. There is also a method called nonlinear parametric deformation to simulate the deformation of an organ during surgery [34].
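Why rigid matching deviates after organ deformation can be shown with a small landmark-based example. The sketch below is a deliberately simplified 2D illustration under stated assumptions, not the registration method of any cited study: it assumes paired landmarks are already known, uses the closed-form least-squares rotation and translation for 2D point sets, and all function names (`fit_rigid_2d`, `apply_rigid_2d`, `rms_error`) are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def centroid(points: List[Point]) -> Point:
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def fit_rigid_2d(model: List[Point], target: List[Point]) -> Tuple[float, float, float]:
    """Least-squares rotation angle and translation mapping model onto target.

    Landmarks must be paired: model[i] corresponds to target[i].
    """
    if len(model) != len(target) or len(model) < 2:
        raise ValueError("need at least two paired landmarks")
    cm, ct = centroid(model), centroid(target)
    s_cos = s_sin = 0.0
    for (mx, my), (qx, qy) in zip(model, target):
        ax, ay = mx - cm[0], my - cm[1]   # centred model landmark
        bx, by = qx - ct[0], qy - ct[1]   # centred target landmark
        s_cos += ax * bx + ay * by        # accumulated dot products
        s_sin += ax * by - ay * bx        # accumulated 2D cross products
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that maps the rotated model centroid onto the target centroid.
    tx = ct[0] - (c * cm[0] - s * cm[1])
    ty = ct[1] - (s * cm[0] + c * cm[1])
    return theta, tx, ty


def apply_rigid_2d(points: List[Point], theta: float, tx: float, ty: float) -> List[Point]:
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def rms_error(a: List[Point], b: List[Point]) -> float:
    """Root-mean-square distance between paired points."""
    total = sum((ax - bx) ** 2 + (ay - by) ** 2 for (ax, ay), (bx, by) in zip(a, b))
    return math.sqrt(total / len(a))
```

If the target landmarks are an exact rotated and shifted copy of the model, the residual from `rms_error` is essentially zero. If one landmark is then displaced on its own, as a stand-in for local tissue deformation, no single rotation and translation can absorb the change and the residual stays well above zero, which is the kind of deviation that deformable models aim to reduce.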

Currently, to maximize the accuracy of superposition, it is often necessary to use manual registration. The assistant manipulates the fusion system during the entire procedure to create proper orientation and deformation of the model. Manual registration and tracking are simple methods to “anchor” a 3D model to its counterpart in real time. However, these methods require an additional assistant surgeon to control the AR workstation [18]. This work is labor-intensive, and the accuracy of image fusion depends on the experience of the assistant.

Intraoperative tracking is another major challenge. Maintaining satisfactory real-time accuracy in laparoscopic AR surgery is difficult because the endoscopic view of a surgical scene is highly dynamic, and the assistant often cannot adjust the model to match the endoscopic image in time. As with manual registration, manual tracking is a common method under current conditions, but it is labor-intensive and its efficacy depends on the experience of the assistant.

3.2.5 Surgical training

Holographic imaging can also be used to enhance the education of medical students and fellows, thus aiding their professional development. Rai et al. reported that medical students who use the interactive 3D VR simulator based on PN cases significantly improve their subjective ability to localize the tumor position [37]. Knoedler et al. evaluated the effect of 3D printed physical renal models on enhancing medical trainees’ understanding of kidney tumor characterization and localization [38]; they reported that the overall trainee nephrometry score accuracy is significantly improved with the 3D model vs. CT scan, and there is also more consistent agreement among trainees when using the 3D models compared to CT scans to assess the nephrometry score. Thus, 3D models improve trainees’ understanding and characterization of kidney tumors in patients.

3.3 Impact on PN outcomes

Zhu et al. reported their experience of holographic image navigation in urological laparoscopic and robotic surgery, including 27 partial nephrectomy cases; they reported that this technology reduces tissue injury, decreases complications, and improves the surgical success rate [39].

The application of 3D imaging in PN for complex renal tumors, such as renal hilar tumors, has attracted extensive attention. Wang et al. included 26 cases of renal hilar tumors and found that 3D imaging reconstruction and navigation technology have the advantages of accurate localization, a high complete resection rate, and fewer perioperative complications [40]. Porpiglia et al. reported their results of using 3D imaging during RAPN for complex renal tumors (PADUA ≥ 10) [34]. Compared to 2D ultrasound guidance, the 3D imaging and AR guidance group had a lower rate of global ischemia, a higher rate of enucleation, a lower rate of collecting system violation, a low risk of surgery-related complications, and lower renal blood flow decrease 3 months after the operation. The combination of holographic imaging with da Vinci robotic surgical systems allowed accurate recognition, increased flexibility, and real-time navigation, which made the RAPN easier and safer for renal hilar tumors. Zhang et al. reported their series of combining holographic imaging with RAPN for renal hilar tumor treatment [18]; they reported that this technique reduces the risk of conversion to open surgery or RN for renal hilar tumors, increases the success rate, and decreases complications. Zhang et al. also reported a new technique of combining holographic imaging and clipping tumor bed artery branches outside the kidney to reduce PN-related secondary bleeding, to reduce the need for postoperative interventional embolization, and to shorten the length of hospital stay.

Endophytic kidney tumors present a great challenge as they are not visible on the kidney surface. Porpiglia et al. presented their use of AR images to visualize endophytic tumors [34]. AR technology potentially increases the 3D perception of the lesion’s features and the surgeon’s confidence in tumor excision, and it guides precise resection. Compared to the ultrasound-guided group, Porpiglia et al. observed that the enucleation rate of the 3D AR group was higher (p = 0.02), the percentage of preserved healthy renal parenchyma was higher, and the opening rate of the collecting system was lower (p = 0.0003).

A systematic review has examined the effectiveness of AR-assisted technology in LPN compared to conventional techniques [45]. In addition, a specially developed software, called Indocyanine Green Auto Augmented Reality (IGNITE), allows 3D models to be automatically anchored to real organs and takes advantage of the enhanced views provided by NIRF technology. There are also some other reports of surgical tracking technology [46], and the subsequent development of these technologies deserves our attention.

3.4.2 Artificial intelligence

Artificial intelligence (AI) should be an important direction for future holographic imaging-guided surgery. Recently, it has been reported that kidneys, renal tumors, arteries, and veins can be automatically segmented and 3D-modeled by deep learning [47, 48]. He Y et al. proposed the first deep learning framework, called the Meta Grayscale Adaptive Network (MGANet), which simultaneously segments the kidney, renal tumors, arteries, and veins on CTA images in one inference, resulting in a better 3D integrated renal structure segmentation quality [49]. Houshyar et al. developed and evaluated a convolutional neural network (CNN) to act as a surgical planning aid by determining renal tumor and kidney volumes through segmentation on single-phase CT. The results showed that the end-to-end trained CNNs perform renal parenchyma and tumor segmentation on test cases in an average of 5.6 s. Houshyar et al. concluded that the deep learning model rapidly and accurately segments kidneys and renal tumors on single-phase contrast-enhanced CT scans as well as calculates tumor and renal volumes [50]. Zhang et al. developed a 3D kidney perfusion model based on deep learning techniques to automatically demonstrate the segmentation of renal arteries, and they verified its accuracy and reliability in LPN [51]. It is technically feasible for AI to realize automatic 3D modeling, but the process relies on a large amount of calibrated data for training.
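Once a segmentation mask is available, deriving kidney and tumor volumes from it is simple arithmetic: count the labelled voxels and multiply by the volume of one voxel. The sketch below illustrates only that step and is not the pipeline of Houshyar et al. or any other cited work: the function name `mask_volume_ml`, the nested-list mask layout, and the label convention (0 background, 1 kidney, 2 tumor) are assumptions made for illustration.

```python
from typing import List, Tuple

Mask3D = List[List[List[int]]]   # mask[slice][row][col] holds an integer label

# Assumed label convention for this sketch only.
KIDNEY_LABEL = 1
TUMOR_LABEL = 2


def mask_volume_ml(mask: Mask3D,
                   spacing_mm: Tuple[float, float, float],
                   label: int) -> float:
    """Volume in millilitres of all voxels carrying the given label.

    spacing_mm is the voxel size along (slice, row, col) in millimetres,
    as would typically be read from the image header.
    """
    voxel_mm3 = spacing_mm[0] * spacing_mm[1] * spacing_mm[2]
    count = sum(1 for slc in mask for row in slc for value in row if value == label)
    return count * voxel_mm3 / 1000.0   # 1 mL = 1000 mm^3
```

For example, calling `mask_volume_ml(mask, spacing, TUMOR_LABEL)` and `mask_volume_ml(mask, spacing, KIDNEY_LABEL)` on the same mask gives the two volumes a planning tool could report side by side. The accuracy of such numbers depends entirely on the quality of the segmentation that produced the mask.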

Marker-based and deformation-based registration techniques have been preliminarily reported to achieve more accurate registration [42]. The use of deep learning to automatically recognize image and video information is expected to achieve automatic registration and tracking. Recently, Padovan et al. introduced a deep learning framework through convolutional neural networks and motion analysis, which determines the position and rotation information of target organs in endoscopic video in real time [52]. This work has taken an important step for the application of deep learning to generalize the automatic registration process.

4 Conclusion

PN is a challenging surgical procedure. Holographic imaging helps surgeons to thoroughly understand the individualized anatomy of the kidney and tumor as well as to set up a more optimized surgical plan and to facilitate patient counseling. The implementation of holographic imaging navigation helps the surgeon to accurately identify and locate the target tumor, renal artery/vein and branches, and collecting system, thus reducing complications and conversion to open surgery or RN.

The application of holographic imaging in PN has significant benefits in reducing the warm ischemia time, collecting system opening, blood loss, and incidence of serious complications, but it is similar to traditional technology in conversion to RN, complication rate, changes in glomerular filtration rate, and surgical margins. Holographic imaging in PN is particularly valuable in cases of endophytic renal tumors, complex renal hilar tumors, and super-selective clamping.

At present, the main deficiency in holographic imaging is that automatic 3D modeling and intraoperative automatic registration have not yet been fully realized, and the accuracy of registration still needs to be improved. Deep learning is expected to solve these challenges in the future.

Availability of data and materials

All data are from public papers.

References

Sung H, Ferlay J, Siegel RL, Laversanne M, Soerjomataram I, Jemal A, et al. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin. 2021;71(3):209–49. https://doi.org/10.3322/caac.21660. (PubMed PMID: 33538338).

Bahadoram S, Davoodi M, Hassanzadeh S, Bahadoram M, Barahman M, Mafakher L. Renal cell carcinoma: an overview of the epidemiology, diagnosis, and treatment. G Ital Nefrol. 2022;39(3):2022–vol3.

Ljungberg B, Albiges L, Abu-Ghanem Y, Bensalah K, Dabestani S, Fernández-Pello S, et al. European association of urology guidelines on renal cell carcinoma: the 2019 update. Eur Urol. 2019;75(5):799–810. https://doi.org/10.1016/j.eururo.2019.02.011. (PubMed PMID: 30803729).

Wang L, Zhao Z, Wang G, Zhou J, Zhu H, Guo H, et al. Application of a three-dimensional visualization model in intraoperative guidance of percutaneous nephrolithotomy. Int J Urol. 2022;29(8):838–44. https://doi.org/10.1111/iju.14907. (PubMed PMID: 35545290).

Zhu G, Xing JC, Wong GB, Hu ZQ, Li NC, Zhu H, et al. Application of holographic image navigation in urological laparoscopic and robotic surgery. Chin J Urol. 2020;41(2):131–7. https://doi.org/10.3760/cma.j.issn.1000-6702.2020.02.010.

Michiels C, Khene ZE, Prudhomme T, Boulenger de Hauteclocque A, Cornelis FH, Percot M, et al. 3D-Image guided robotic-assisted partial nephrectomy: a multi-institutional propensity score-matched analysis (UroCCR study 51). World J Urol. 2021. https://doi.org/10.1007/s00345-021-03645-1. Epub 20210402. PubMed PMID: 33811291.

Lanzieri CF, Levine HL, Rosenbloom SA, Duchesneau PM, Rosenbaum AE. Three-dimensional surface rendering of nasal anatomy from computed tomographic data. Arch Otolaryngol Head Neck Surg. 1989;115(12):1434–7. https://doi.org/10.1001/archotol.1989.01860360036013. (PubMed PMID: 2818895).

Levin DN, Hu XP, Tan KK, Galhotra S. Surface of the brain: three-dimensional MR images created with volume rendering. Radiology. 1989;171(1):277–80. https://doi.org/10.1148/radiology.171.1.2928539. (PubMed PMID: 2928539).

Ukimura O, Gill IS. Imaging-assisted endoscopic surgery: Cleveland clinic experience. J Endourol. 2008;22(4):803–10. https://doi.org/10.1089/end.2007.9823. (PubMed PMID: 18366316).

Teber D, Guven S, Simpfendörfer T, Baumhauer M, Güven EO, Yencilek F, et al. Augmented reality: a new tool to improve surgical accuracy during laparoscopic partial nephrectomy? Preliminary in vitro and in vivo results. Eur Urol. 2009;56(2):332–8. https://doi.org/10.1016/j.eururo.2009.05.017. (PubMed PMID: 19477580).

Chen Y, Li H, Wu D, Bi K, Liu C. Surgical planning and manual image fusion based on 3D model facilitate laparoscopic partial nephrectomy for intrarenal tumors. World J Urol. 2014;32(6):1493–9. https://doi.org/10.1007/s00345-013-1222-0. (PubMed PMID: 24337151).

Wake N, Rude T, Kang SK, Stifelman MD, Borin JF, Sodickson DK, et al. 3D printed renal cancer models derived from MRI data: application in pre-surgical planning. Abdom Radiol (NY). 2017;42(5):1501–9. https://doi.org/10.1007/s00261-016-1022-2. (PubMed PMID: 28062895; PubMed Central PMCID: PMCPMC5410387).

Fan G, Meng Y, Zhu S, Ye M, Li M, Li F, et al. Three-dimensional printing for laparoscopic partial nephrectomy in patients with renal tumors. J Int Med Res. 2019;47(9):4324–32 (Epub 20190721. doi: 10.1177/0300060519862058. PubMed PMID: 31327282; PubMed Central PMCID: PMCPMC6753553).

Maddox MM, Feibus A, Liu J, Wang J, Thomas R, Silberstein JL. 3D-printed soft-tissue physical models of renal malignancies for individualized surgical simulation: a feasibility study. J Robot Surg. 2018;12(1):27–33. https://doi.org/10.1007/s11701-017-0680-6. (PubMed PMID: 28108975).

Li G, Dong J, Wang J, Cao D, Zhang X, Cao Z, et al. The clinical application value of mixed-reality-assisted surgical navigation for laparoscopic nephrectomy. Cancer Med. 2020;9(15):5480–9. https://doi.org/10.1002/cam4.3189. (PubMed PMID: 32543025; PubMed Central PMCID: PMCPMC7402835).

Yoshida S, Fukuda S, Moriyama S, Yokoyama M, Taniguchi N, Shinjo K, et al. Holographic surgical planning of partial nephrectomy using a wearable mixed reality computer. Eur Urol Suppl. 2019;18(1):e661–2. https://doi.org/10.1016/S1569-9056(19)30488-9.

Zhang H, Yin F, Yang L, Qi A, Cui W, Yang S, et al. Computed tomography image under three-dimensional reconstruction algorithm based in diagnosis of renal tumors and retroperitoneal laparoscopic partial nephrectomy. J Healthc Eng. 2021;2021:3066930.

Zhang K, Wang L, Sun Y, Wang W, Hao S, Li H, et al. Combination of holographic imaging with robotic partial nephrectomy for renal hilar tumor treatment. Int Urol Nephrol. 2022;54(8):1837–44. https://doi.org/10.1007/s11255-022-03228-y. (Epub 20220514. PubMed PMID: 35568753).

Li L, Zeng X, Yang C, Un W, Hu Z. Three-dimensional (3D) reconstruction and navigation in robotic-assisted partial nephrectomy (RAPN) for renal masses in the solitary kidney: a comparative study. Int J Med Robot. 2022;18(1):e2337. https://doi.org/10.1002/rcs.2337. (Epub 20211010. PubMed PMID: 34591353).

Detmer FJ, Hettig J, Schindele D, Schostak M, Hansen C. Virtual and augmented reality systems for renal interventions: a systematic review. IEEE Rev Biomed Eng. 2017;10:78–94. https://doi.org/10.1109/rbme.2017.2749527. (PubMed PMID: 28885161).

Zeng S, Zhou Y, Wang M, Bao H, Na Y, Pan T. Holographic reconstruction technology used for intraoperative real-time navigation in robot-assisted partial nephrectomy in patients with renal tumors: a single center study. Transl Androl Urol. 2021;10(8):3386–94. https://doi.org/10.21037/tau-21-473. (PubMed PMID: 34532263; PubMed Central PMCID: PMCPMC8421827).

Teishima J, Takayama Y, Iwaguro S, Hayashi T, Inoue S, Hieda K, et al. Usefulness of personalized three-dimensional printed model on the satisfaction of preoperative education for patients undergoing robot-assisted partial nephrectomy and their families. Int Urol Nephrol. 2018;50(6):1061–6. https://doi.org/10.1007/s11255-018-1881-2. (PubMed PMID: 29744824).

Bernhard JC, Isotani S, Matsugasumi T, Duddalwar V, Hung AJ, Suer E, et al. Personalized 3D printed model of kidney and tumor anatomy: a useful tool for patient education. World J Urol. 2016;34(3):337–45. https://doi.org/10.1007/s00345-015-1632-2. (PubMed PMID: 26162845; PubMed Central PMCID: PMCPMC9084471).

Klatte T, Ficarra V, Gratzke C, Kaouk J, Kutikov A, Macchi V, et al. A literature review of renal surgical anatomy and surgical strategies for partial nephrectomy. Eur Urol. 2015;68(6):980–92. https://doi.org/10.1016/j.eururo.2015.04.010. (PubMed PMID: 25911061; PubMed Central PMCID: PMCPMC4994971).

Ficarra V, Novara G, Secco S, Macchi V, Porzionato A, De Caro R, et al. Preoperative aspects and dimensions used for an anatomical (PADUA) classification of renal tumours in patients who are candidates for nephron-sparing surgery. Eur Urol. 2009;56(5):786–93. https://doi.org/10.1016/j.eururo.2009.07.040. (PubMed PMID: 19665284).

Kutikov A, Uzzo RG. The R.E.N.A.L. nephrometry score: a comprehensive standardized system for quantitating renal tumor size, location and depth. J Urol. 2009;182(3):844–53. https://doi.org/10.1016/j.juro.2009.05.035. (PubMed PMID: 19616235).

Wadle J, Hetjens S, Winter J, Mühlbauer J, Neuberger M, Waldbillig F, et al. Nephrometry scores: the Effect of imaging on routine read-out and prediction of outcome of nephron-sparing surgery. Anticancer Res. 2018;38(5):3037–41. https://doi.org/10.21873/anticanres.12559. (PubMed PMID: 29715137).

Porpiglia F, Amparore D, Checcucci E, Autorino R, Manfredi M, Iannizzi G, et al. Current use of three-dimensional model technology in urology: a road map for personalised surgical planning. Eur Urol Focus. 2018;4(5):652–6. https://doi.org/10.1016/j.euf.2018.09.012. (PubMed PMID: 30293946).

Porpiglia F, Amparore D, Checcucci E, Manfredi M, Stura I, Migliaretti G, et al. Three-dimensional virtual imaging of renal tumours: a new tool to improve the accuracy of nephrometry scores. BJU Int. 2019;124(6):945–54. https://doi.org/10.1111/bju.14894. (PubMed PMID: 31390140).

Liu ZS, Wu Z, Wang XG, Zhang KY, Li W, Miao CH, et al. A novel operation difficulty scoring system for renal carcinoma based on holographic imaging. Chin J Urol. 2022;43(5):344–9. https://doi.org/10.3760/cma.j.cn112330-20220310-00105.

Porpiglia F, Fiori C, Checcucci E, Amparore D, Bertolo R. Hyperaccuracy three-dimensional reconstruction is able to maximize the efficacy of selective clamping during robot-assisted partial nephrectomy for complex renal masses. Eur Urol. 2018;74(5):651–60. https://doi.org/10.1016/j.eururo.2017.12.027. (PubMed PMID: 29317081).

Bertolo R, Autorino R, Fiori C, Amparore D, Checcucci E, Mottrie A, et al. Expanding the indications of robotic partial nephrectomy for highly complex renal tumors: urologists’ perception of the impact of hyperaccuracy three-dimensional reconstruction. J Laparoendosc Adv Surg Tech A. 2019;29(2):233–9. https://doi.org/10.1089/lap.2018.0486. (Epub 20181103. PubMed PMID: 30394820).

Shirk JD, Thiel DD, Wallen EM, Linehan JM, White WM, Badani KK, et al. Effect of 3-dimensional virtual reality models for surgical planning of robotic-assisted partial nephrectomy on surgical outcomes: a randomized clinical trial. JAMA Netw Open. 2019;2(9):e1911598. https://doi.org/10.1001/jamanetworkopen.2019.11598. (PubMed PMID: 31532520; PubMed Central PMCID: PMCPMC6751754).

Porpiglia F, Checcucci E, Amparore D, Piramide F, Volpi G, Granato S, et al. Three-dimensional augmented reality Robot-assisted partial nephrectomy in case of complex tumours (PADUA ≥ 10): a new intraoperative tool overcoming the ultrasound guidance. Eur Urol. 2020;78(2):229–38 (Epub 20191230. PubMed PMID: 31898992).

Bernhardt S, Nicolau SA, Soler L, Doignon C. The status of augmented reality in laparoscopic surgery as of 2016. Med Image Anal. 2017;37:66–90. https://doi.org/10.1016/j.media.2017.01.007. (PubMed PMID: 28160692).

Le Roy B, Ozgur E, Koo B, Buc E, Bartoli A. Augmented reality guidance in laparoscopic hepatectomy with deformable semi-automatic computed tomography alignment (with video). J Visc Surg. 2019;156(3):261–2. https://doi.org/10.1016/j.jviscsurg.2019.01.009. (PubMed PMID: 30765233).

Rai A, Scovell JM, Xu A, Balasubramanian A, Siller R, Kohn T, et al. Patient-specific virtual simulation-a state of the art approach to teach renal tumor localization. Urology. 2018;120:42–8. https://doi.org/10.1016/j.urology.2018.04.043. (PubMed PMID: 29960005).

Knoedler M, Feibus AH, Lange A, Maddox MM, Ledet E, Thomas R, et al. Individualized physical 3-dimensional kidney tumor models constructed from 3-dimensional printers result in improved trainee anatomic understanding. Urology. 2015;85(6):1257–61. https://doi.org/10.1016/j.urology.2015.02.053. (PubMed PMID: 26099870).

Schiavina R, Bianchi L, Chessa F, Barbaresi U, Cercenelli L, Lodi S, et al. Augmented reality to guide selective clamping and tumor dissection during robot-assisted partial nephrectomy: a preliminary experience. Clin Genitourin Cancer. 2021;19(3):e149–55. https://doi.org/10.1016/j.clgc.2020.09.005. (PubMed PMID: 33060033).

Wang F, Zhang C, Guo F, Ji J, Lyu J, Cao Z, et al. Navigation of Intelligent/interactive qualitative and quantitative analysis three-dimensional reconstruction technique in laparoscopic or robotic assisted partial nephrectomy for renal hilar tumors. J Endourol. 2019;33(8):641–6 (Epub 20190315. doi: 10.1089/end.2018.0570. PubMed PMID: 30565487).

Yu J, Xie HUA, Wang S. The effectiveness of augmented reality assisted technology on LPN: a systematic review and meta-analysis. Minim Invasive Ther Allied Technol. 2022;31(7):981–91 (PubMed PMID: 35337249).

Puerto-Souza GA, Cadeddu JA, Mariottini GL. Toward long-term and accurate augmented-reality for monocular endoscopic videos. IEEE Trans Biomed Eng. 2014;61(10):2609–20. https://doi.org/10.1109/tbme.2014.2323999. (PubMed PMID: 24835126).

van Oosterom MN, Meershoek P, KleinJan GH, Hendricksen K, Navab N, van de Velde CJH, et al. Navigation of fluorescence cameras during soft tissue surgery-is it possible to use a single navigation setup for various open and laparoscopic urological surgery applications? J Urol. 2018;199(4):1061–8. https://doi.org/10.1016/j.juro.2017.09.160. (PubMed PMID: 29174485).

Kobayashi S, Cho B, Mutaguchi J, Inokuchi J, Tatsugami K, Hashizume M, et al. Surgical navigation improves renal parenchyma volume preservation in robot-assisted partial nephrectomy: a propensity score matched comparative analysis. J Urol. 2020;204(1):149–56. https://doi.org/10.1097/ju.0000000000000709. (PubMed PMID: 31859597).

Amparore D, Checcucci E, Piazzolla P, Piramide F, De Cillis S, Piana A, et al. Indocyanine green drives computer vision based 3D augmented reality robot assisted partial nephrectomy: the beginning of automatic overlapping era. Urology. 2022;164:e312–6. https://doi.org/10.1016/j.urology.2021.10.053. (PubMed PMID: 35063460).

Jackson P, Simon R, Linte C. Surgical tracking, registration, and navigation characterization for image-guided renal interventions. Annu Int Conf IEEE Eng Med Biol Soc. 2020;2020:5081–4. https://doi.org/10.1109/embc44109.2020.9175270. (PubMed PMID: 33019129; PubMed Central PMCID: PMCPMC8159183).

Carlier M, Lareyre F, Lê CD, Adam C, Carrier M, Chikande J, et al. A pilot study investigating the feasibility of using a fully automatic software to assess the RENAL and PADUA score. Prog Urol. 2022;32(8–9):558–66 (Epub 2022 May 17. PMID: 35589469).

Gao Y, Tang Y, Ren D, Cheng S, Wang Y, Yi L, et al. Deep learning plus three-dimensional printing in the management of giant (> 15 cm) sporadic renal angiomyolipoma: an initial report. Front Oncol. 2021;11:724986. https://doi.org/10.3389/fonc.2021.724986. (PMID: 34868918; PMCID: PMC8634108).

He Y, Yang G, Yang J, Ge R, Kong Y, Zhu X, et al. Meta grayscale adaptive network for 3D integrated renal structures segmentation. Med Image Anal. 2021;71: 102055. https://doi.org/10.1016/j.media.2021.102055. (Epub 2021 Apr 5 PMID: 33866259).

Houshyar R, Glavis-Bloom J, Bui TL, Chahine C, Bardis MD, Ushinsky A, et al. Outcomes of artificial intelligence volumetric assessment of kidneys and renal tumors for preoperative assessment of nephron-sparing interventions. J Endourol. 2021;35(9):1411–8. https://doi.org/10.1089/end.2020.1125. (PMID: 33847156).

Zhang S, Yang G, Qian J, Zhu X, Li J, Li P, et al. A novel 3D deep learning model to automatically demonstrate renal artery segmentation and its validation in nephron-sparing surgery. Front Oncol. 2022;12: 997911. https://doi.org/10.3389/fonc.2022.997911. (PubMed PMID: 36313655; PubMed Central PMCID: PMCPMC9614169).

Padovan E, Marullo G, Tanzi L, Piazzolla P, Moos S, Porpiglia F, et al. A deep learning framework for real-time 3D model registration in robot-assisted laparoscopic surgery. Int J Med Robot. 2022;18(3):e2387. https://doi.org/10.1002/rcs.2387. (PubMed PMID: 35246913; PubMed Central PMCID: PMCPMC9286374).

Acknowledgements

None.

Funding

None.

Author information

Contributions

Conception of the work: Yanqun Na, Gang Zhu. Acquisition, analysis, and interpretation of data: Lei Wang. Drafted the work and substantively revised it: Lei Wang, Gang Zhu. The authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Supplementary Material.

Search strategy.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, L., Na, Y. & Zhu, G. Application of holographic imaging in partial nephrectomy: a literature review. Holist Integ Oncol 3, 6 (2024). https://doi.org/10.1007/s44178-024-00073-0

DOI: https://doi.org/10.1007/s44178-024-00073-0