Abstract

Head-mounted, video-based eye tracking is becoming increasingly common and has promise in a range of applications. Here, we provide a practical and systematic assessment of the sources of measurement uncertainty for one such device – the Pupil Core – in three eye-tracking domains: (1) the 2D scene camera image; (2) the physical rotation of the eye relative to the scene camera 3D space; and (3) the external projection of the estimated gaze point location onto the target plane or in relation to world coordinates. We also assess eye camera motion during active tasks relative to the eye and the scene camera, an important consideration as the rigid arrangement of eye and scene camera is essential for proper alignment of the detected gaze. We find that eye camera motion, improper gaze point depth estimation, and erroneous eye models can all lead to added noise that must be considered in the experimental design. Further, while calibration accuracy and precision estimates can help assess data quality in the scene camera image, they may not be reflective of errors and variability in gaze point estimation. These findings support the importance of eye model constancy for comparisons across experimental conditions and suggest additional assessments of data reliability may be warranted for experiments that require the gaze point or measure eye movements relative to the external world.

Introduction

Wearable, video-based eye tracking is becoming increasingly prevalent in behavioral research, particularly as the emphasis shifts towards ecological validity of behavioral studies, the use of virtual and augmented reality, and the interest in eye–head coordination (e.g., Hart et al., 2009) and head contributions to gaze shifts (Cromwell et al., 2004; Siegler & Israël, 2002). There are several head-mounted eye-tracker options used in research today that vary in cost, weight, ease of use, access to unprocessed sensor data, and the extent to which researchers can adapt them to specific experimental demands. The Pupil Core (Pupil Labs, Berlin, Germany) eye-tracking goggles have gained popularity for their relatively low cost and weight, modular and easily adjustable design, and the open-source nature of their software and 3D-printable hardware. As a result, the device has been used in a broad range of studies and investigated for accuracy, precision (Ehinger et al., 2019; Macinnes et al., 2018), and ergonomics and slippage (Binaee et al., 2016; Niehorster et al., 2020a; Santini et al., 2019).

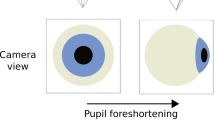

The Pupil Core uses a glint-free, computer vision-based algorithm for eye tracking that optimizes parameters of an eye model to estimate gaze position in the image of the scene camera and gaze direction relative to the scene camera’s three-dimensional projection of that image. There are several applications where the two-dimensional gaze position mapping is useful (Fig. 1, column 2), such as driving/landmark navigation (Lappi et al., 2017), reading (Minakata & Beier, 2021), screen viewing, or art appreciation (Bulling et al., 2018; Walker et al., 2017), because in these cases the participant’s gaze can be projected directly onto the two-dimensional surface being viewed. However, beyond these “gaze on a surface” applications, such devices have significant potential for use in multiple environments and for the assessment of behavior in the three-dimensional world. To that end, the algorithm as designed provides the researcher with a 3D eye model, which is necessary to estimate rotational eye angles relative to the volume in the scene camera image; these angles can be used to calculate eye movements relative to objects in this volume and relative to each other, such as vergence angles (Fig. 1, column 3). With the addition of an external reference sensor (such as an inertial measurement unit), these data can be further processed to assess eye movements relative to head movement (such as the angular vestibuloocular reflex, aVOR) and to the external world, such as saccadic or smooth gaze shifts (Fig. 1, column 4). Further, the Pupil Labs’ glint-free eye-tracking algorithm is representative of the broader glint-free, model-based approach (Swirski & Dodgson, 2013) that can be implemented by any video-based eye-tracking platform.

Fig. 1. Computational domains of the Pupil Core eye-tracking system. Column 1 represents the least computationally intensive data stream available from the device: locations of the pupil center in pixel coordinates of the eye camera images. This data stream can provide relative information regarding pupil movement or size. Importantly, movement of the pupil relative to the camera cannot be distinguished from movement of the camera relative to the pupil. Column 2 represents the next step in processing of the eye movement signal: the pupil position in the eye camera image is transformed into the reference frame of the scene camera using a calibration procedure. This step can provide eye position relative to objects in the 2D scene camera image. Column 3 represents eye rotations in the scene camera reference frame, computed relative to the three-dimensional estimate of the scene seen by the world camera. Additionally, the orientations of the two eyes can be compared with each other. A validation procedure can be used to estimate the error of the eye orientation estimates from a given eye model. Column 4 represents the most computationally intensive step, requiring additional hardware to transform the scene camera coordinate frame into world coordinates. This step is required whenever eye movements are measured relative to an external reference, such as head or body movement in space.

While different experiments rely on different iterations of the eye movement data stream, each data stream relies on a particular set of assumptions and requires additional processing steps (Fig. 1) that may add measurement uncertainty not assessed by the standard calibration accuracy testing protocols. These processing stages include pupil localization during eye and head motion, eye model estimation, binocular gaze mapping, and camera projection and distortion modeling, each of which can introduce errors. Further, for head-mounted eye-tracking devices, there is no consistent way to assess, report, and therefore address measurement noise, which can make outcomes difficult to interpret (Holmqvist et al., 2022), and how best to assess data quality is still not well characterized (Niehorster et al., 2020b). To that end, the goal of this paper is to provide experimenters with a roadmap of the potential pitfalls that may affect eye movement data along the processing pipeline illustrated in Fig. 1, and of how these can be ascertained for a given experimental setup/protocol.

In this work, we focus on the sources of noise in the following domains: (1) the 2D scene camera image (Fig. 1, column 2); (2) the physical rotation of the eye in scene camera 3D space – a representation essential for understanding eye movements relative to the objects in the scene and each other (Fig. 1, column 3); and (3) the external projection of the estimated gaze point location onto the target plane or in relation to world coordinates (Fig. 1, column 4).

Currently, to understand the error in each recording, the Pupil Capture software provides the researcher with qualitative visual feedback of eye position accuracy in the scene camera image (Fig. 1, column 2). An average accuracy estimate of eye rotation relative to the target is also available, but only if additional validation is performed (Fig. 1, column 3). Prior studies have examined several additional sources of error, including errors in pupil detection (Fuhl et al., 2016; Petersch & Dierkes, 2021) and headset slippage during recording (Niehorster et al., 2020a). These latter experiments address the assumption of tracker stability relative to the head during pupil detection and eye model estimation in the eye camera images (Fig. 1, column 1). If the frame moves relative to the eyes, the 3D eye model estimates are invalidated. However, eye model estimation (Fig. 1, column 2) relies not only on accurate pupil detection but also on the three cameras (two eye and one scene) remaining fixed relative to one another. While the Pupil algorithm is designed to account for some slippage of the entire headset relative to the head, to our knowledge, motion between the cameras has not been addressed previously. Thus, in Study 1, we investigate movement between the three cameras as a potential source of error introduced early in the eye-tracking pipeline (Study 1 in Fig. 2A). We find that, depending on the type of natural behavior (Fig. 2B), substantial eye camera motion independent of the scene camera is possible and may need to be considered in experiments, depending on the degree of head/body movement required.

Fig. 2. Experimental design for Studies 1 and 2. A Study 1 examines signal noise due to the movement of the eye cameras relative to the participant and the scene camera. Camera motion was examined while tracking a printout of the eyes (the eyes are fixed, so all motion is due to the camera) and compared to human eye movement in four motion conditions: walk, run, march, and jump. B Study 2 examines signal noise due to successive computational steps required to estimate eye movements in the different reference frames of Fig. 1. Participants were tested with the head either restrained or unrestrained; two of the head-restrained sessions were recorded with the EyeLink 1000 as well as the Pupil Core. All computations were done using one of four grid calibrations (C) or the aVOR calibration (only possible when the head was unrestrained) and tested on a 25-point validation grid. Saccadic and aVOR eye movements were also examined. The aVOR graph illustrates the central marker’s position in the scene camera image during the VOR (vestibuloocular reflex) task. Saccade schematics illustrate the location of the visual stimulus when the saccade task was performed. C Sets of visual stimuli formed by 5, 9, 13, and 9 fixed markers placed in different grid patterns, and the set of 25 fixation marker locations used for validation (all calibration marker locations combined). Placement of these points in the different reference frames (camera sensor, screen, eye rotation angle) is shown in Fig. S2.

In Study 2, we investigate sources of computational noise that can affect the two- and three-dimensional eye position and orientation estimates (Study 2 in Fig. 2A). First, we identify potential errors in gaze estimation in the 2D scene camera image and how they might be affected by different calibration routines (Fig. 2C). We then compare the accuracy and precision metrics reported by the Pupil Labs software after validation with our own estimates in 3D space. Finally, we examine the estimation of individual eye rotations in space.

Study 1: Physical noise due to eye camera motion relative to scene camera

Methods

Participants

Data were collected from four participants (P1-P4, two female, age: 32.8 ± 5.0 years), who had no known vision, oculomotor, or vestibular deficits. All research was performed in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of the Smith-Kettlewell Eye Research Institute.

Experimental protocol: Eye camera mobility evaluation

To evaluate the effect of eye camera mobility, two sets of experiments were performed with four tasks each (see Fig. 2, Study 1). In one set of experiments, the eye tracker recorded the human participants’ pupils, while in the static eye experiment, the eye tracker recorded printed images of eyes placed on a mask worn by the participants (shown in Fig. 3). This second condition allowed us to isolate movements recorded by the eye tracker due to camera motion from biological movements of the eye relative to the camera. Pinholes in the printed eyes allowed participants to see during the tasks. In both sets, the participants wore athletic footwear and performed four tasks: walking, marching, jogging, and jumping in place on a 6.6-m laminate runway. The runway was in a well-lit hallway. During the walking, marching, and running tasks, the participants traversed the runway four times. Ten jumps were performed during the jumping task. During the experiments in which the human pupils were tracked by the eye tracker, participants were asked to fixate a target placed 4.5 m from the end of the runway.

Fig. 3. Participant P2 equipped with the Pupil Core headset, secured via adjustable headgear, with an IMU enclosure mounted to the scene camera of the Core device. The participant wears a mask with printed eyes, and the eye-tracking goggles are adjusted to track the printed eyes.

Equipment

Participants were equipped with the Pupil Core headset secured via adjustable, ratcheting headgear (Fig. 3). The Pupil Core eye tracker is a video-based, glint-free eye-tracking device consisting of two infrared eye cameras oriented towards the eyes and one scene camera oriented towards the visual space in front of the participant. All cameras are placed on a lightweight, head-mounted frame (22.75 g, Pupil Labs, 2022) and can be moved to better capture the pupils or the scene of interest (Fig. 3). The eye cameras were set to record at their maximum rate of 200 fps with an image resolution of 192 × 192 pixels. The scene camera included with the device uses a wide-angle lens (field of view: horizontal 103°, vertical 54°, diagonal 122°). The scene camera was used in high-speed mode (60 fps) with reduced resolution (1280 × 720 pixels).

For the static eye experiments, a set of paper eyes was glued to a plastic face mask, and the mask was firmly attached to the Pupil Core and headgear unit. A wired inertial measurement unit (IMU, LPMS-CURS2, LPMS Research, Tokyo, Japan) with 9 degrees of freedom (three accelerometer, three gyroscope, and three magnetometer axes) was firmly attached to the eye tracker frame using a custom mount (total IMU + enclosure weight = 14.17 g, Fig. 3). The ranges for the accelerometer and gyroscope were ± 4 g and ± 500°/s, respectively, with a 16-bit A/D converter. The magnetometer was not used for the orientation data because the experiments were run in a lab with ferrous equipment nearby.

An additional Bluetooth wireless nine-axis IMU (LPMS Research) was placed on the participants’ right ankle. The Bluetooth IMU was used to confirm the step/jump cycles. The sampling frequency was 100 Hz. No further analysis was done on this data stream.

Data stream synchronization

To synchronize the eye tracker and IMU data streams, two rapid taps on the frame were performed immediately after the start of the recording. This sequence provided a distinctive pattern that could be easily identified in all data streams during data processing. The taps were recorded by the IMU accelerometer as short, high-jerk events and by the eye cameras as short camera shakes, observed as sharp translations in the normalized detected pupil position in the 2D eye camera image.

The eye motion data sensed by the eye tracker and the head motion data sensed by the IMU were synchronized by aligning the first sharp peak in pupil position in the normalized image of the eye camera during the frame tap with the first acceleration peak produced by the tap in the IMU. The second peak was used to verify proper alignment.
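The alignment step can be sketched in Python as follows. This is a minimal illustration, not the authors’ analysis code: it assumes both streams are already loaded as NumPy arrays, treats the first sample whose deviation from the signal’s median exceeds a hand-picked threshold as the first tap (a simplification of peak picking), and uses hypothetical thresholds and synthetic data.

```python
import numpy as np

def first_event_time(t, x, threshold):
    """Time of the first sample deviating from the signal's median by more than threshold."""
    x = np.asarray(x, dtype=float)
    deviation = np.abs(x - np.median(x))
    above = np.flatnonzero(deviation > threshold)
    if above.size == 0:
        raise ValueError("no tap-like event exceeds the threshold")
    return float(np.asarray(t)[above[0]])

def clock_offset(t_eye, pupil_y, t_imu, acc_mag, thr_eye, thr_imu):
    """Seconds to add to eye-tracker timestamps so the first tap coincides with the IMU's."""
    return first_event_time(t_imu, acc_mag, thr_imu) - first_event_time(t_eye, pupil_y, thr_eye)

# Synthetic example (invented data): one tap seen by both sensors on different clocks.
t_eye = np.arange(0.0, 5.0, 0.005)        # ~200 Hz eye-camera clock
pupil_y = np.full(t_eye.size, 0.5)
pupil_y[200] += 0.1                       # tap at t_eye = 1.0 s
t_imu = np.arange(0.0, 10.0, 0.0025)      # 400 Hz IMU clock
acc_mag = np.full(t_imu.size, 9.81)
acc_mag[1400] += 20.0                     # same tap at t_imu = 3.5 s
offset = clock_offset(t_eye, pupil_y, t_imu, acc_mag, thr_eye=0.05, thr_imu=5.0)
```

The second tap can be checked the same way: after shifting the eye-tracker timestamps by the offset, the two second-tap events should coincide to within about one sample.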

Data acquisition

The linear acceleration, angular velocity, and Euler angles measured by the IMU were recorded using the LP Research acquisition software Open Motion Analysis Toolkit for Windows (OpenMAT, LP-RESEARCH, 2022) with a sampling frequency of 400 Hz. Prior to each recording, the gyroscope sensor was calibrated in order to reset any prior accumulated drift in orientation angles.

Pupil position, gaze data, and the eye and scene camera video recordings were logged using the PupilCore v3.2.20 software. The eye tracker recording was started first, followed by the IMU data recording.

Data analysis

For the eye cameras’ mobility evaluation, the IMU and the pupil data recordings were synchronized and stored for offline analysis. Eye camera images were recorded using PupilCore v3.2.20 software. No eye tracker calibration was performed as all eye movement data were analyzed only in the eye camera image and thus did not require a coordinate transformation to the scene camera (outcome of the Pupil Core calibration procedure). Pupil Labs software allows the user to annotate the recording with timestamped labels (annotations) corresponding to experimental events (e.g., start of walk, target appearance, etc.). We used this feature offline to parse the data. The offline pupil data (data in the eye camera sensor image) was annotated with the movement onsets and offsets, and the data and its annotations were exported and used for further analysis. The annotations file was used to parse the data into four trials corresponding to the different movements tested. The normalized pupil location in each eye camera image was used to quantify the relative motion between the eye camera and pupil during each task.
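Parsing a recording into trials from onset/offset annotations amounts to slicing samples by timestamp. The sketch below assumes a hypothetical labeling scheme (labels ending in `_start` and `_end`) and annotations already exported as (label, timestamp) pairs; it is illustrative and does not reproduce Pupil Player’s export format.

```python
import numpy as np

def split_by_annotations(t, data, annotations):
    """Slice samples into named trials using onset/offset annotations.

    t: (n,) sample timestamps; data: (n, ...) samples aligned with t.
    annotations: chronological (label, timestamp) pairs; labels ending in
    "_start" / "_end" (a hypothetical scheme) mark each trial's bounds.
    """
    t = np.asarray(t, dtype=float)
    data = np.asarray(data)
    onsets, trials = {}, {}
    for label, time in annotations:
        if label.endswith("_start"):
            onsets[label[: -len("_start")]] = time
        elif label.endswith("_end"):
            name = label[: -len("_end")]
            start = onsets.pop(name)  # raises KeyError if an offset has no onset
            in_trial = (t >= start) & (t <= time)
            trials[name] = data[in_trial]
    return trials
```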

To remove the natural sampling rate fluctuations of the Pupil Core device and minimize large discontinuities introduced by data interpolation, the pupil path and the linear acceleration signal were resampled to 1000 Hz and then low-pass filtered with a fourth-order, zero-phase digital Butterworth filter with a cutoff frequency of 25 Hz. A slow-frequency trend (moving average over a 2-s window) was removed from the pupil data so that the pupil path was centered. To compare pupil motion in the static (printout) and human eye conditions, the signal was rotated to align the largest motion component with the y axis of the normalized image. There were four blocks of data for each task, except jumping (ten jumps).
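A minimal Python sketch of this preprocessing chain, using NumPy and SciPy, is shown below. Several details are assumptions not specified in the text: linear interpolation for resampling; a fourth-order Butterworth design applied forward and backward with `scipy.signal.filtfilt` (which squares the magnitude response; the text does not say whether “fourth-order” refers to the design or the net response); edge padding for the centered 2-s moving average; and principal-component analysis to identify the largest motion component.

```python
import numpy as np
from scipy.signal import butter, filtfilt

def preprocess_pupil_path(t, xy, fs_out=1000.0, cutoff_hz=25.0, trend_window_s=2.0):
    """Resample, low-pass, detrend, and rotate a 2D pupil path.

    t: (n,) irregular timestamps in seconds; xy: (n, 2) normalized pupil positions.
    Returns the uniform time base and an (m, 2) path whose y column carries
    the largest motion component.
    """
    t = np.asarray(t, dtype=float)
    xy = np.asarray(xy, dtype=float)
    # 1) Uniform 1000 Hz time base; linear interpolation removes sampling jitter.
    t_u = np.arange(t[0], t[-1], 1.0 / fs_out)
    xy_u = np.column_stack([np.interp(t_u, t, xy[:, k]) for k in range(2)])
    # 2) Zero-phase low-pass: 4th-order Butterworth run forward and backward.
    b, a = butter(4, cutoff_hz, btype="low", fs=fs_out)
    xy_f = filtfilt(b, a, xy_u, axis=0)
    # 3) Subtract a centered 2-s moving average; edge padding avoids end artifacts.
    win = int(round(trend_window_s * fs_out))
    kernel = np.ones(win) / win
    pad = (win // 2, win - 1 - win // 2)
    trend = np.column_stack([
        np.convolve(np.pad(xy_f[:, k], pad, mode="edge"), kernel, mode="valid")
        for k in range(2)
    ])
    centered = xy_f - trend
    # 4) Principal axes; eigh sorts eigenvalues ascending, so column 1 is the major axis.
    _, evecs = np.linalg.eigh(np.cov(centered, rowvar=False))
    basis = evecs.copy()
    if np.linalg.det(basis) < 0:  # keep a proper rotation rather than a reflection
        basis[:, 0] *= -1
    return t_u, centered @ basis  # column 0: minor axis, column 1 (y): major axis
```

The same resampling and filtering steps could be applied column-wise to the IMU linear acceleration; only the detrending and rotation are specific to the pupil path.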

During the printed (fixed) eye experiment, poor pupil detection led to several blocks of data with exaggerated eye motion. These were clearly artifacts unrelated to the repetitive motion of the task and were therefore removed (10% of the fixed eye trials). Additional samples were removed due to blinks or missing data. As a result, we used a total of 89.4% of the collected data across both conditions. The remaining data were pooled for each behavior/condition. For each direction, the motion of each eye camera relative to its eye was estimated as the ratio of the maximum range of pupil displacement recorded with the printed eyes to that recorded with the participant’s eyes.
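Given the pooled, preprocessed paths, this ratio reduces to a few lines. The sketch assumes each path is an (n, 2) NumPy array of normalized pupil positions for one eye, task, and condition; the toy values are invented for illustration.

```python
import numpy as np

def displacement_range(path):
    """Maximum range (max - min) of a 2D pupil path along x and y."""
    path = np.asarray(path, dtype=float)
    return path.max(axis=0) - path.min(axis=0)

def camera_motion_ratio(printed_path, human_path):
    """Per-axis ratio of printed-eye to human-eye pupil displacement range."""
    return displacement_range(printed_path) / displacement_range(human_path)
```

A ratio of 0.2 along y, for example, would mean that camera motion alone produced one fifth of the pupil excursion seen with a real eye.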

Peak-to-peak amplitude of the linear acceleration along Earth-vertical (from the eye tracker-mounted IMU) was used to evaluate the magnitude of motion in each task and compare between the participant’s eye and printed eye trials.
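Obtaining the Earth-vertical component requires rotating the sensor-frame acceleration with the IMU orientation. The sketch below assumes Z-Y-X (yaw-pitch-roll) Euler angles that map the sensor frame to a z-up Earth frame; the LPMS convention may differ and should be checked against the sensor documentation before use. Yaw drops out of the vertical component, which is convenient here because the magnetometer, and hence a reliable heading, was not used.

```python
import numpy as np

def earth_vertical_acceleration(acc_sensor, roll_deg, pitch_deg):
    """Project sensor-frame acceleration (n, 3) onto Earth-vertical.

    Assumes R = Rz(yaw) @ Ry(pitch) @ Rx(roll) maps sensor to a z-up Earth frame;
    the vertical component is the third row of R applied to the acceleration.
    """
    a = np.asarray(acc_sensor, dtype=float)
    r = np.radians(roll_deg)
    p = np.radians(pitch_deg)
    return (-np.sin(p) * a[:, 0]
            + np.cos(p) * np.sin(r) * a[:, 1]
            + np.cos(p) * np.cos(r) * a[:, 2])

def peak_to_peak(x):
    """Peak-to-peak amplitude of a 1D signal."""
    return float(np.max(x) - np.min(x))
```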

Results

Eye camera motion relative to the stationary eyes was particularly evident in the jump condition (Fig. 4A; see Fig. S7 in Supplementary Materials for representative raw traces). To determine the proportion of eye movement attributable to camera motion, we first calculated the total eye movement amplitude along the x- and y-axes of the eye camera image for the fixed and human eyes and then computed the ratio of the amplitudes for the two conditions (fixed eye amplitude/human eye amplitude, Fig. 4A). P1 had the largest eye camera movement amplitudes overall, while the largest relative eye camera motion (vertical direction) was observed in the jumping task for the right eye of Participant P3 (right eye, vertical: 20%). Relative camera motion was also observed in the horizontal direction, with the highest value, again during jumping, for Participant P4 (right eye, horizontal: 12%). Overall, the right eye camera tended to move more than the left eye camera (compare circles versus squares in Fig. 4A). For all tasks, there was no significant difference in linear acceleration along the gravitational direction between experiments in which the printed eyes versus the participant’s eyes were tracked (Fig. 4B). As expected, the largest head acceleration was seen during the jumping task (34.09 and 31.84 m/s², printed and human, respectively, P4), while the smallest was seen during the walking task (4.10 and 3.90 m/s², printed and human, respectively, P4). These results expand upon and are consistent with our preliminary findings for the walking condition (Velisar & Shanidze, 2021).

Fig. 4. Camera mobility during four tasks: walking, marching, running, and jumping. A Ratio of fixed to human eye motion in the eye camera image for the right (circles) and left (squares) eyes along the horizontal (filled symbols) and vertical (open symbols) axes. B Peak-to-peak amplitude of the linear acceleration of the head along the gravitational direction for all four tasks during the human eye (filled circles) and fixed eye mask (open circles) experiments. Yellow: P1, orange: P2, maroon: P3, purple: P4.

Study 2: Computational noise

Methods

Participants

For this study, data were collected from two of the participants from Study 1 (two female, P1 age: 38 years, interocular distance: 6.26 cm; P2 age: 33 years, interocular distance: 5.93 cm).

In the Pupil Core data collection software, the interocular distance (IOD) is set at 6 cm. Adjusting the data for different IODs would increase the gaze point depth for wider IODs and decrease it for narrower IODs, relative to the measured gaze point depth. Because all our evaluations used the gaze point estimated directly by the software, we did not adjust for the different IODs. However, since different face shapes may produce better or worse fits of the 3D eye model, we report the participants’ IODs.
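The direction of this effect follows from simple geometry. For symmetric fixation of a target straight ahead, the vergence angle θ and depth D satisfy tan(θ/2) = (IOD/2)/D, so the same measured vergence maps to a proportionally greater depth for a wider IOD. The sketch below assumes this idealized symmetric geometry; it does not reproduce the Pupil Core binocular gaze mapper.

```python
import math

def vergence_from_depth(depth_m, iod_m):
    """Vergence angle (deg) for symmetric fixation of a target straight ahead at depth_m."""
    return math.degrees(2 * math.atan((iod_m / 2) / depth_m))

def depth_from_vergence(vergence_deg, iod_m):
    """Gaze point depth (m) implied by a vergence angle for a given IOD."""
    return (iod_m / 2) / math.tan(math.radians(vergence_deg) / 2)
```

With the 6-cm default, a target at 1 m implies a vergence of about 3.44°; reinterpreting that angle with P1’s 6.26-cm IOD gives a depth of about 1.043 m, and with P2’s 5.93-cm IOD about 0.988 m.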

Participants were fitted with the Pupil Core headset. Their heads were restrained using a chin and forehead rest fitted with the EyeLink 1000 (SR Research, Ontario, Canada) eye tracker in the tower mount configuration. Eye movements were recorded binocularly with the Pupil Core, while the EyeLink also recorded the left eye (the dominant eye for both participants, as determined with Miles’ hole-in-the-hand method; Miles, 1930). Prior to the experiment and the Pupil calibration, the EyeLink was calibrated using its built-in five-point calibration routine. Visual stimuli were presented at a 1-m distance on a projection screen with a projection area of 0.68 × 0.51 m.

To assess how outcomes might change when the head is unrestrained, Participant P2 was also asked to perform the experiment a second time without the EyeLink, first with the head restrained and second with the head unrestrained. In the former, the participant’s head was restrained using a soft, adjustable head restraint strap attached to the back of the headrest. The participant was seated naturally, upright, with a back support. Visual stimuli were displayed on a large projection screen with a projection area of 1.58 × 0.93 m, allowing for a maximum visual angle of 47.4° horizontally and 29° vertically, at a viewing distance of 1.8 m.
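The stated visual angles follow from the screen size and viewing distance via θ = 2·atan(w / 2d), as this small helper shows. It is a sketch for checking stimulus geometry, not part of the original analysis.

```python
import math

def visual_angle_deg(extent_m, distance_m):
    """Full visual angle subtended by a flat extent centered on the line of sight."""
    return math.degrees(2 * math.atan(extent_m / (2 * distance_m)))
```

For the 1.58 × 0.93 m screen at 1.8 m this gives about 47.4° by 29.0°; the same helper gives roughly 37.6° by 28.6° for the 0.68 × 0.51 m screen viewed at 1 m.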

Experimental protocol

Following the two-tap synchronization (see "Data stream synchronization" section in Study 1), several eye rotations (~30 s) were performed and recorded to better inform the Pupil Core pye3d algorithm that estimates the eye model parameters (Pupil Labs, personal communication). At the time of the recording, an initial nine-point calibration grid was used for the Recording Calibration and a 3D binocular mapper was used to calculate the gaze data. The pye3d pupil detection algorithm uses prior high-confidence 2D pupil observations to determine the eyeball center position in the eye camera coordinate frame and is based on a mathematical 3D eye model capturing ocular kinematics and optics (Swirski & Dodgson, 2013). The pye3d algorithm additionally applies refraction correction to account for non-linear ocular optics due to the refraction of light rays that pass through the corneal interfaces (Dierkes et al., 2018, 2019).

Four tasks were performed (see Fig. 2, Study 2). First, participants performed a fixation task using a series of stationary targets projected on the screen at fixed locations in several grid patterns. Fixation targets were black and white bullseye markers akin to the one used by Pupil Labs’ Pupil Capture Screen Calibration algorithm. The central marker (marker #5) was projected such that it was aligned horizontally with the nasion of the participant. The participant was asked to fixate a succession of four sets of visual stimuli formed by 5, 9, 13, and 9 fixed markers shown sequentially in different grid patterns (Fig. 2C, Calibration). The experimenter manually advanced to the next marker once the participant had sufficient time to fixate the prior marker for several seconds. The recordings were used to perform different types of calibrations (training set).

Second, a grid fixation task with 25 marker positions (Fig. 2C, Validation) was performed in a similar manner and used for validation (test set). These 25 markers were the combination of marker positions used across all calibrations and an additional four markers in the central area of the grid. Lastly, two saccade tasks were performed in which the visual stimulus was flashed for 500 ms, alternating to the right and left of center along the horizontal midline, 40 times each: at 5° eccentricity in the first saccade task and at 2° in the second. In other words, the participant was asked to make 10° amplitude saccades (third task) and 4° amplitude saccades (fourth task), respectively (Fig. 2A, Saccades). Thus, the eye tracker’s performance, tuned by each pattern of calibration points, was evaluated on the validation grid data (long static fixations at many spatial locations) and on a standard oculomotor task (saccades: a set of short fixations repeated at the same two spatial locations).
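On a flat screen, an angular eccentricity θ maps to a linear offset of d·tan(θ) from center, so the saccade targets’ screen positions depend on viewing distance. The helper below is an illustrative sketch; distance is left as a parameter because the sessions used 1-m and 1.8-m viewing distances.

```python
import math

def screen_offset_m(eccentricity_deg, distance_m):
    """Distance on a flat screen from center to a target at the given eccentricity."""
    return distance_m * math.tan(math.radians(eccentricity_deg))
```

At 1 m, the ±5° targets sit about 8.7 cm on either side of center and the ±2° targets about 3.5 cm.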

The experiment was also performed with the head unrestrained (Fig. 2A, Head Unrestrained); in this condition, the participant was asked to keep the head as still as possible during the grid fixation and saccade tasks. In the head-unrestrained condition, a fifth task was added in which the participant moved the head in yaw four times (horizontally, right to left), followed by four pitch rotations (vertically, up and down), while fixating the central marker, to elicit an angular vestibuloocular reflex (aVOR). These data were used to perform a single-marker calibration (akin to that available in Pupil Capture) and to evaluate the eye tracker’s performance on a “moving head” task. The central marker’s frame-by-frame position in the scene camera image during the aVOR task is shown in Fig. 2A (aVOR).

Head-mounted eye tracking

Eye movement recording was performed using the Pupil Core tracking system with binocular 3D gaze mapping (see "Equipment" section in Study 1 for more details). For the experiments done at 1.8 m, we used the default camera intrinsics matrix and radial distortion model provided by Pupil Labs (included in the Pupil Capture software); for the Pupil/EyeLink simultaneous recordings done at 1 m, we used a fisheye camera intrinsics matrix and fisheye distortion coefficients determined with the Camera Intrinsics Estimation plug-in offered by Pupil Core. An IMU was attached to the headset as in Study 1 and aligned with the scene camera such that the coordinate frames of both sensors were parallel and the axes aligned in the same direction [Fig. 3, also see (Velisar & Shanidze, 2021)].
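For reference, a pinhole projection with radial-tangential distortion in the OpenCV convention (which we assume the default Pupil Labs radial model follows) can be sketched as below, together with a conversion to normalized image coordinates with the origin at the bottom left, the convention we understand Pupil Labs uses for normalized positions. Both conventions are assumptions to verify against the Pupil Labs documentation. The fisheye model used for the 1-m recordings has a different, angle-based form (as in OpenCV’s `cv2.fisheye` module) and is not covered here. The intrinsics in the test are invented.

```python
import numpy as np

def project_to_image(points_3d, K, dist):
    """Project 3D points in the scene camera frame (z forward) to pixel coordinates.

    K: 3x3 intrinsics; dist: (k1, k2, p1, p2, k3), OpenCV radial-tangential order.
    """
    P = np.asarray(points_3d, dtype=float)
    x = P[:, 0] / P[:, 2]
    y = P[:, 1] / P[:, 2]
    k1, k2, p1, p2, k3 = dist
    r2 = x**2 + y**2
    radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x**2)
    yd = y * radial + p1 * (r2 + 2 * y**2) + 2 * p2 * x * y
    u = K[0, 0] * xd + K[0, 2]
    v = K[1, 1] * yd + K[1, 2]
    return np.column_stack([u, v])

def to_normalized(uv, width, height):
    """Pixels (origin top-left) to normalized coordinates with origin bottom-left (assumed)."""
    uv = np.asarray(uv, dtype=float)
    return np.column_stack([uv[:, 0] / width, 1.0 - uv[:, 1] / height])
```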

Data acquisition

For details on the data synchronization and acquisition pipeline see "Equipment" and "Data stream synchronization" sections in Study 1.

Data structure

Pupil Core

The pupil data stream contains multiple fields, such as the timestamp, the normalized detected pupil position in the 2D eye camera image, and 3D eye model parameters such as the eyeball center, pupil center, pupil radius/diameter, and pupil normal (the direction vector that represents eye orientation, pointing from the eyeball center through the pupil center). The gaze data stream contains similar fields, with all position metrics referenced to the scene camera coordinate frame. Additional fields are the gaze point location in the 3D scene camera coordinate frame and in the 2D normalized, distorted scene camera image.
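Eye rotation angles between fixations and vergence angles can both be obtained from such direction vectors. The sketch below assumes gaze directions expressed in the scene camera frame with x to the right, y down, and z forward (the usual computer-vision camera convention, assumed here rather than taken from the text). The angle between vectors uses the atan2 form, which stays accurate for the small angles typical of vergence.

```python
import numpy as np

def angle_between_deg(u, v):
    """Angle (deg) between two 3D vectors, e.g., successive gaze normals or left vs. right eye."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    return float(np.degrees(np.arctan2(np.linalg.norm(np.cross(u, v)), np.dot(u, v))))

def azimuth_elevation_deg(n):
    """Horizontal and vertical angles of a direction in an x-right, y-down, z-forward frame."""
    x, y, z = np.asarray(n, dtype=float)
    azimuth = np.degrees(np.arctan2(x, z))
    elevation = np.degrees(np.arctan2(-y, np.hypot(x, z)))  # y points down, so negate for "up"
    return float(azimuth), float(elevation)
```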

IMU

Acceleration, angular velocity, and Euler angles (computed by the LPMS algorithm) along the three axes of the sensor were streamed for each timestamp.

EyeLink

The “samples” data stream contains the projection on the display screen of the eye gaze point in pixels. The “events” data stream contains timestamped events such as start and end fixation, blinks, and saccades for each eye.

Data analysis

EyeLink

Raw gaze data from the EyeLink 1000 eye tracker were extracted using custom MATLAB software (Adrian Etter & Marc Biedermann, University of Zurich, engineering@econ.uzh.ch). Fixation periods were detected using the EyeLink’s built-in online parser. For a fixation to be identified, the pupil had to be visible for a minimum of 50 ms, eye velocities had to be below 30°/s, and accelerations could not exceed 8000°/s². We parsed the fixation data corresponding to each marker (using target onset triggers sent by the visual stimulus software). For each marker, we chose the fixation that immediately preceded a target location change (the last fixation for that marker) to account for possible adjustments during target acquisition. EyeLink fixation data are characterized by the start and end times of the fixation and the averaged x/y coordinates of eye location on the display screen (in pixels). We calculated the Euclidean distance between the eye location and the target for each marker presentation during validation, and between the eye locations of the final fixations of successive saccades (saccade starting positions). The Euclidean distance in pixels was then converted to degrees of visual angle using a pixels-per-degree constant based on the distance to and the resolution of the display screen (see "Error in 2D: scene camera image and display screen" section).
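The pixel-to-degree conversion can be sketched as below. The screen resolution is not given in the text, so the 1024-pixel width used in the test is hypothetical, and the constant is the small-angle value at screen center.

```python
import math

def pixels_per_degree(resolution_px, screen_width_m, distance_m):
    """Approximate pixels per degree of visual angle at the screen center."""
    px_per_m = resolution_px / screen_width_m
    return px_per_m * distance_m * math.tan(math.radians(1.0))

def error_deg(eye_xy_px, target_xy_px, ppd):
    """Euclidean eye-target distance in pixels, converted with a constant pixels-per-degree."""
    dx = eye_xy_px[0] - target_xy_px[0]
    dy = eye_xy_px[1] - target_xy_px[1]
    return math.hypot(dx, dy) / ppd
```

A constant pixels-per-degree value is most accurate near the screen center; on a flat screen, each degree spans more pixels at larger eccentricities, so converting each endpoint with an atan-based formula would be more exact for peripheral markers.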

Pupil core

Using Pupil Player v3.2.20, the pupil metrics were recalculated offline to improve pupil detection and eye model estimation. In Pupil Player, we adjusted the pupil detection parameters offline and reset the 3D eye model estimate using the recorded eye rotations, yielding eye models that visually fit better than those estimated during recording. The eye models were then fixed for the rest of the recording to ensure model continuity. The Pupil Core was attached firmly to the head using an adjustable head band to provide a sturdier mount and reduce the chances of headset slippage (Fig. 3). For the saccade task, in which the Pupil Labs’ bullseye marker was not used, the targets were detected manually using Pupil Player’s built-in functionality for manual target detection. For each calibration set, the calibration matrix was calculated (this matrix mathematically characterizes the orientation and position of the eye camera coordinate frame in the scene camera coordinate frame and is thus used to align the eye camera coordinate frames with the scene camera, which is the purpose of calibration with the Pupil Core device; see Fig. S1 in Supplementary Materials), and the 3D binocular mapper was used to compute a set of corresponding gaze data in the scene camera reference frame. The validation set was used during offline gaze mapping to assess the accuracy and precision of the respective calibration (these values are reported in Table 2). The recorded eye movement data were annotated based on the scene camera video recordings. The pupil, gaze, and world video data were exported for further analysis. No other data processing was done in Pupil Player; all subsequent analyses were performed in MATLAB (MathWorks Inc., Natick, MA, USA).
The offline pupil and gaze data, the reference points (marker detection) data, gaze mapper data, calibration matrices, and camera intrinsics matrices are saved in .msgpack files by the Pupil Player. Custom scripts written in Python were used to convert .msgpack files to csv files to be easily imported in MATLAB. For further analysis, we used only pupil data with a confidence index greater than 0.9. Blinks and pupil detection artifacts were removed manually in MATLAB.
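The confidence filter and CSV export can be sketched with the Python standard library. This is not the authors’ conversion script: it assumes the .msgpack records have already been decoded into dictionaries (for example, with the third-party `msgpack` package), and the field names and values in the example are invented.

```python
import csv
import io

def write_confident_rows(records, fieldnames, out_file, min_confidence=0.9):
    """Write records with confidence > min_confidence as CSV rows; return the number kept.

    records: iterable of dicts already decoded from Pupil Player's msgpack files.
    """
    writer = csv.DictWriter(out_file, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    kept = 0
    for record in records:
        if record.get("confidence", 0.0) > min_confidence:
            writer.writerow(record)
            kept += 1
    return kept

# Example with invented records; field names are illustrative only.
records = [
    {"timestamp": 1.0, "confidence": 0.95, "norm_pos_x": 0.50},
    {"timestamp": 1.005, "confidence": 0.60, "norm_pos_x": 0.51},
    {"timestamp": 1.01, "confidence": 0.99, "norm_pos_x": 0.52},
]
buffer = io.StringIO()
kept = write_confident_rows(records, ["timestamp", "confidence", "norm_pos_x"], buffer)
```

When writing to a file on disk instead of a buffer, the csv module documentation recommends opening it with `newline=""`.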

Calibration process evaluation

The calibration estimation algorithm used by the Pupil Core software determines the transformation matrix from each eye camera coordinate frame to the scene camera coordinate frame (Fig. S1A-B, Supplementary Materials) such that the optical axis of each eye intersects the reference (marker) location. It uses bundle adjustment (Triggs et al., 2000) to optimize the orientation of each eye camera relative to the scene camera by minimizing the error between the reference locations and the gaze points during viewing of each calibration target. The standard algorithm does not enforce the viewing distance. We therefore hypothesized that increasing the number of calibration targets, or the breadth of their spatial distribution in the visual field, could yield a more effective calibration (i.e., a better estimate of the relative orientations and positions of the three video camera sensors) and, ultimately, less measurement error.
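To illustrate the kind of objective the calibration minimizes, the sketch below fits only the rotation that best aligns eye camera gaze directions with reference directions, using the closed-form Kabsch (SVD) solution. This is a deliberately simplified stand-in, not Pupil Core’s bundle adjustment. It ignores the eye and camera translations and the viewing depth, and it minimizes the squared vector distance between unit directions rather than the angle itself. All data are synthetic.

```python
import numpy as np


def unit_rows(v):
    """Normalize each row of an (N, 3) array to unit length."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v, axis=1, keepdims=True)


def fit_rotation(eye_dirs, ref_dirs):
    """Rotation R minimizing sum ||R a_i - b_i||^2 over unit directions (Kabsch/SVD).

    Simplified stand-in for calibration: rotation only, no translation or depth.
    """
    a = unit_rows(eye_dirs)
    b = unit_rows(ref_dirs)
    h = a.T @ b                                  # 3x3 cross-covariance
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))      # avoid returning a reflection
    return vt.T @ np.diag([1.0, 1.0, d]) @ u.T


def angular_errors_deg(rot, eye_dirs, ref_dirs):
    """Angle between rotated eye directions and reference directions, per target."""
    pred = unit_rows(eye_dirs) @ rot.T
    cos = np.clip(np.sum(pred * unit_rows(ref_dirs), axis=1), -1.0, 1.0)
    return np.degrees(np.arccos(cos))


# Synthetic example: nine targets, eye camera rotated 10 deg about the y-axis.
theta = np.radians(10.0)
r_true = np.array([[np.cos(theta), 0.0, np.sin(theta)],
                   [0.0, 1.0, 0.0],
                   [-np.sin(theta), 0.0, np.cos(theta)]])
rng = np.random.default_rng(0)
eye = unit_rows(rng.normal(size=(9, 3)) + [0.0, 0.0, 3.0])
ref = eye @ r_true.T
r_fit = fit_rotation(eye, ref)
print(np.round(angular_errors_deg(r_fit, eye, ref), 6))
```

With noise-free data the rotation is recovered exactly. With real data, the residual angles play the role of the calibration error.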

The measurement error produced by the five calibration choreographies evaluated in this study was measured in three different spaces: (1) the 2D scene camera image, in which a projection of the gaze point was compared with the respective reference point (Fig. 1, column 2); (2) the 3D scene camera space, using the eye rotation angles between successive fixation positions, the vergence angles, and the gaze point depth estimates (Fig. 1, column 3); and (3) the world-centered coordinate frame, using the eye positions on the screen and the eye rotations relative to the head (Fig. 1, column 4). Additionally, we evaluated the error in the two-dimensional plane of the display screen using a geometrical homography transformation between the target locations captured by the scene camera and their locations on the display screen. The gaze point in the scene camera image was thus mapped directly onto the display screen (where this was possible).

Comparison of camera orientation estimates (calibration matrices) between calibrations

To evaluate the differences between the calibration matrices obtained in Pupil Player for each fixation grid and give them a physical meaning, we selected a time-matched (closest in time) pair of pupil positions, one for each eye. Each pupil position is represented by the pupil normal, the unit vector on the line that connects the center of the eye to the center of the pupil circle in the 3D eye model (for detail see Supplementary Materials Fig. S1A). We applied every calibration transformation matrix to these pupil normals, thus obtaining the gaze vectors of the same pupil instance under each calibration. We then calculated the angle between the gaze vectors for each pair of calibrations (see "Effects of calibration type on estimated gaze orientation" section in Results and Section B2 in Supplementary Materials).
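The comparison can be sketched as follows. The sketch assumes each calibration is available as a 4 × 4 homogeneous transform from the eye camera frame to the scene camera frame; the matrices below are hypothetical rotations about a single axis. Because a pupil normal is a direction, only the 3 × 3 rotation block of each transform affects it.

```python
import itertools

import numpy as np


def rot_y_h(deg):
    """Hypothetical 4x4 homogeneous transform: rotation about y, no translation."""
    t = np.radians(deg)
    m = np.eye(4)
    m[:3, :3] = [[np.cos(t), 0.0, np.sin(t)],
                 [0.0, 1.0, 0.0],
                 [-np.sin(t), 0.0, np.cos(t)]]
    return m


def angle_deg(u, v):
    """Angle in degrees between two 3D vectors."""
    u = u / np.linalg.norm(u)
    v = v / np.linalg.norm(v)
    return float(np.degrees(np.arccos(np.clip(u @ v, -1.0, 1.0))))


def pairwise_gaze_differences(pupil_normal, calibrations):
    """Apply each calibration's rotation to one pupil normal and compare all pairs.

    Translation does not affect a direction vector, so only the 3x3 block is used.
    """
    gaze = {name: T[:3, :3] @ pupil_normal for name, T in calibrations.items()}
    return {(a, b): angle_deg(gaze[a], gaze[b])
            for a, b in itertools.combinations(gaze, 2)}


calibrations = {"5pt": rot_y_h(0.0), "9pt": rot_y_h(2.0), "star": rot_y_h(-1.5)}
diffs = pairwise_gaze_differences(np.array([0.0, 0.0, 1.0]), calibrations)
for pair, ang in diffs.items():
    print(pair, round(ang, 3))
```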

Measured error in 2D: Scene camera image and display screen

Analogously to the EyeLink data, the average pupil normal orientation and gaze point location in the normalized 2D scene camera image and in the 3D scene camera space were calculated for the fixations that directly preceded the next target presentation. After time-averaging the gaze point location during fixation of a given target location, the distance in pixels between the 2D gaze point and the respective reference point was calculated in the image. The average over the validation set was reported as measured error in the 2D scene camera image (see "Error in 2D: scene camera image and display screen" section in Results).
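A minimal sketch of the 2D error computation follows. It assumes gaze and reference points are given in normalized image coordinates and converted to pixels with a hypothetical 1280 × 720 scene camera resolution. The sample values are made up.

```python
import numpy as np


def fixation_pixel_errors(fixations, refs_norm, width, height):
    """Pixel distance between the time-averaged gaze and each reference point.

    fixations: list of (N_i, 2) arrays of normalized gaze samples recorded during
    the fixation that preceded the next target; refs_norm: (M, 2) normalized
    reference points. A vertical flip between conventions does not change distances.
    """
    scale = np.array([width, height], dtype=float)
    errors = []
    for samples, ref in zip(fixations, refs_norm):
        mean_gaze = np.mean(np.asarray(samples, dtype=float), axis=0)  # time-average
        errors.append(np.linalg.norm((mean_gaze - np.asarray(ref, dtype=float)) * scale))
    return np.array(errors)


fixations = [np.array([[0.51, 0.50], [0.53, 0.50]]),
             np.array([[0.20, 0.78], [0.20, 0.78]])]
refs = np.array([[0.50, 0.50], [0.20, 0.80]])
err_px = fixation_pixel_errors(fixations, refs, width=1280, height=720)
print(err_px, err_px.mean())
```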

For all data collected with the head restrained, we performed an additional analysis that employed a geometrical transformation (homography) between the scene camera image and the display screen, which allowed us to compare outcomes for the EyeLink and Pupil Core devices. This method could not be used in the head-unrestrained condition because it is only valid when the display screen position does not change relative to the scene camera. The target positions detected in the scene camera image (reference points) were undistorted using the distortion coefficients (see Supplementary Materials, Section A3). The undistorted 2D target grid and the 2D display screen target grid were used to calculate a projective 2D homography, which mapped the undistorted gaze point location directly onto the display screen. For the validation set, the Euclidean distance between the gaze point and the target was calculated to estimate the measurement error in meters on the screen (see Fig. 9 in Results for reference). The saccade amplitude was calculated as the Euclidean distance between the starting positions of successive saccades. Using the viewing distance, these distances were then converted to degrees of visual angle (see Figs. 6D and 10A & B, gray dots for reference). This calculation allowed us to compare measurements made with the EyeLink and Pupil Core eye trackers directly.
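The homography step can be sketched with a normalized direct linear transform (DLT). This is a generic textbook estimator chosen for illustration. The original analysis was done in MATLAB, and the exact routine is not specified. The ground-truth mapping, the grids, and the 1 m viewing distance are hypothetical. The degree conversion is also an assumption: it uses the arctangent of the on-screen error divided by the viewing distance.

```python
import numpy as np


def _hartley(pts):
    """Similarity transform that centers points and scales mean radius to sqrt(2)."""
    c = pts.mean(axis=0)
    s = np.sqrt(2.0) / np.mean(np.linalg.norm(pts - c, axis=1))
    return np.array([[s, 0.0, -s * c[0]], [0.0, s, -s * c[1]], [0.0, 0.0, 1.0]])


def _to_h(pts):
    """Append a column of ones (homogeneous coordinates)."""
    return np.c_[pts, np.ones(len(pts))]


def fit_homography(src, dst):
    """Normalized DLT: 3x3 H with dst ~ H [src, 1]^T (needs >= 4 points)."""
    src = np.asarray(src, float)
    dst = np.asarray(dst, float)
    ts, td = _hartley(src), _hartley(dst)
    s = _to_h(src) @ ts.T
    d = _to_h(dst) @ td.T
    rows = []
    for (x, y, _), (u, v, _) in zip(s, d):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    h = np.linalg.inv(td) @ vt[-1].reshape(3, 3) @ ts   # undo the normalization
    return h / h[2, 2]


def apply_homography(h, pts):
    """Map 2D points through H and dehomogenize."""
    p = _to_h(np.asarray(pts, float)) @ h.T
    return p[:, :2] / p[:, 2:3]


def error_deg(error_m, viewing_distance_m):
    """On-screen error to visual angle (assumes a simple arctangent conversion)."""
    return np.degrees(np.arctan(np.asarray(error_m) / viewing_distance_m))


# Hypothetical ground-truth mapping from undistorted image pixels to screen meters.
h_true = np.array([[5e-4, 1e-5, -0.30], [2e-6, 5e-4, -0.20], [1e-7, 2e-7, 1.0]])
img_grid = np.array([[x, y] for y in (120, 360, 600) for x in (200, 640, 1080)], float)
screen_grid = apply_homography(h_true, img_grid)
h_fit = fit_homography(img_grid, screen_grid)
gaze_screen = apply_homography(h_fit, [[655.0, 370.0]])
err_m = np.linalg.norm(gaze_screen[0] - screen_grid[4])   # error vs. central target
print(gaze_screen, err_m, error_deg(err_m, 1.0))
```

Normalizing both point sets before the SVD is a standard way to keep the DLT well conditioned when pixel and meter coordinates differ by orders of magnitude.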

Gaze point estimation

The gaze point represents the intersection of the binocular gaze rays (see Fig. S1C in Supplementary Materials). For two lines to have an intersection point in 3D space, they need to be coplanar (Świrski, 2015). In practice, the two gaze rays are generally not coplanar, so the gaze point must be estimated. We implemented two ways of doing so. The first is “the nearest point of intersection”, which is the point mutually closest to both gaze rays. The second is to estimate the cyclopean gaze plane and project each eye’s gaze ray onto it. The cyclopean gaze plane is defined by the cyclopean gaze ray (the average of the two eyes’ gaze unit vectors) and the eyeline (the line that connects the centers of the two eyeballs). If the projected rays are not parallel, their intersection point can be calculated. Pupil Core software uses the second approach. We used both methods to calculate the gaze point and compared our estimates with the gaze point data calculated directly by the Pupil Core software (see Supplementary Materials, Fig. S5 for comparison). For all results presented in the main text, we used the gaze point calculated by the Pupil Core software (see "Gaze point depth and vergence angle estimation error" and "Saccades" sections in Results).
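Both gaze point estimates described above can be sketched in a few lines. This illustration works under stated assumptions and is not the Pupil Core source code. Eye centers and gaze directions are in meters in a common frame, and the eye positions and target are hypothetical. Because the projected rays in the second method are coplanar, the same closest-point routine returns their exact intersection.

```python
import numpy as np


def unit(v):
    """Return v scaled to unit length."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def nearest_point(p1, d1, p2, d2, eps=1e-12):
    """Midpoint of the shortest segment between lines p1 + s*d1 and p2 + t*d2."""
    p1, p2 = np.asarray(p1, float), np.asarray(p2, float)
    d1, d2 = unit(d1), unit(d2)
    w0 = p1 - p2
    b, d, e = d1 @ d2, d1 @ w0, d2 @ w0
    denom = 1.0 - b * b                  # a = c = 1 for unit directions
    if denom < eps:                      # (near-)parallel rays: no unique point
        return None
    s = (b * e - d) / denom
    t = (e - b * d) / denom
    return 0.5 * ((p1 + s * d1) + (p2 + t * d2))


def cyclopean_plane_point(e_left, g_left, e_right, g_right):
    """Project both gaze rays onto the cyclopean gaze plane, then intersect them.

    The plane contains the eyeline and the cyclopean direction (mean of the two
    unit gaze vectors); the projected rays are coplanar, so they intersect exactly
    unless they are parallel.
    """
    e_left, e_right = np.asarray(e_left, float), np.asarray(e_right, float)
    g_left, g_right = unit(g_left), unit(g_right)
    cyc = unit(g_left + g_right)
    n = unit(np.cross(e_right - e_left, cyc))     # plane normal
    gl = g_left - (g_left @ n) * n
    gr = g_right - (g_right @ n) * n
    return nearest_point(e_left, gl, e_right, gr)


eye_l, eye_r = np.array([-0.032, 0.0, 0.0]), np.array([0.032, 0.0, 0.0])
target = np.array([0.10, 0.05, 1.0])
# Exact gaze rays intersect at the target, so both methods agree.
print(nearest_point(eye_l, target - eye_l, eye_r, target - eye_r))
# Tilt the left ray slightly upward so the rays become skew.
skew_l = target - eye_l + np.array([0.0, 0.01, 0.0])
print(nearest_point(eye_l, skew_l, eye_r, target - eye_r))
print(cyclopean_plane_point(eye_l, skew_l, eye_r, target - eye_r))
```

For skew rays the two methods generally return different points, which is why the choice of method matters when comparing gaze point estimates.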

Error measured in the 3D scene camera coordinate frame

The process of transforming 3D points from the camera 3D coordinate frame (CF) to 2D points in the camera image is referred to as “projection” of points. The reverse process, transforming camera image points to the camera 3D CF, is referred to as “unprojection” of points (Imatest, 2022). It was performed using the camera intrinsics matrix and a pinhole camera model.
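Under the pinhole model, projection and unprojection reduce to multiplication by the intrinsics matrix K or its inverse. The sketch below assumes hypothetical intrinsics and ignores lens distortion, so image points would need to be undistorted first.

```python
import numpy as np

# Hypothetical intrinsics (focal lengths and principal point in pixels).
K = np.array([[800.0, 0.0, 640.0],
              [0.0, 800.0, 360.0],
              [0.0, 0.0, 1.0]])


def project(K, pts3d):
    """3D points in the camera CF -> pixel coordinates (pinhole, no distortion)."""
    uvw = np.atleast_2d(np.asarray(pts3d, float)) @ K.T
    return uvw[:, :2] / uvw[:, 2:3]


def unproject(K, pts2d):
    """Pixel coordinates -> unit direction vectors in the camera CF.

    Depth is not recoverable from a single image, so only a direction is returned.
    """
    pts2d = np.atleast_2d(np.asarray(pts2d, float))
    rays = np.c_[pts2d, np.ones(len(pts2d))] @ np.linalg.inv(K).T
    return rays / np.linalg.norm(rays, axis=1, keepdims=True)


point = np.array([0.1, -0.05, 1.0])
pixel = project(K, point)            # -> [[720., 320.]]
direction = unproject(K, pixel)      # parallel to `point`
print(pixel, direction, point / np.linalg.norm(point))
```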

Using a validation set, the Accuracy Visualizer Plug-in in the Pupil Core software can calculate the average accuracy and precision during the gaze mapping process. We used this process to calculate each calibration’s accuracy and precision over the validation set (Fig. 2C, Validation). The Pupil Core software calculates the accuracy as the angle between the gaze point position vector and the time-matched unprojected reference point in the 3D scene camera CF, averaged over all gaze samples during the validation period. An outlier threshold can be set in the software to eliminate frames in which the eye had not yet moved to the new marker location; the default is 5°. However, excluding all angles greater than the threshold may bias the accuracy estimate (see "Pupil capture accuracy visualizer" section in Results).
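The effect of the outlier threshold can be illustrated with a small synthetic example. The sketch assumes gaze and reference directions are available as 3D vectors in the scene camera CF. Excluding the single 12° sample lowers the reported mean error from 4.5° to 2.0°. The successive-sample precision follows the description in the text; the plug-in’s exact formula may differ.

```python
import numpy as np


def angles_deg(a, b):
    """Row-wise angle in degrees between two (N, 3) arrays of vectors."""
    a = np.asarray(a, float)
    b = np.asarray(b, float)
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return np.degrees(np.arccos(np.clip(np.sum(a * b, axis=1), -1.0, 1.0)))


def thresholded_accuracy(gaze, refs, outlier_deg=5.0):
    """Mean angular error over samples at or below the outlier threshold.

    Returns (mean error of kept samples, fraction of samples kept).
    """
    err = angles_deg(gaze, refs)
    keep = err <= outlier_deg
    return float(err[keep].mean()), float(keep.mean())


def successive_sample_precision(gaze):
    """Mean angle between consecutive gaze samples (as described in the text)."""
    gaze = np.asarray(gaze, float)
    return float(angles_deg(gaze[:-1], gaze[1:]).mean())


def direction(deg):
    """Unit vector rotated `deg` degrees from +z toward +x."""
    t = np.radians(deg)
    return [np.sin(t), 0.0, np.cos(t)]


refs = np.array([[0.0, 0.0, 1.0]] * 4)
gaze = np.array([direction(d) for d in (1.0, 2.0, 3.0, 12.0)])
acc, kept = thresholded_accuracy(gaze, refs)
print(acc, kept, angles_deg(gaze, refs).mean())   # thresholded vs. unthresholded
print(successive_sample_precision(gaze[:3]))
```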

To assess the full distribution of errors (not just the average across all points provided by the Pupil Core software), we implemented a similar algorithm in MATLAB to calculate the measured camera visual angle error. The difference is that we calculated the angle between the averaged gaze point position vector during fixation of each target and the corresponding unprojected reference point (the target location captured by the scene camera). We then averaged these angles over the whole validation set. The mean or median of this measure should be equal or very close to the accuracy reported by the Pupil Core software (compare Tables 2 and 3).

Pupil Core software calculates the precision as the angle between successive gaze points (at the same spatial fixation location) averaged over all the gaze samples during the validation period. During the validation set, the central target was shown several times. We calculated the precision for the central target as the size of the variability (standard deviation) of the measured camera visual angle error for that target (see "Pupil capture accuracy visualizer" and "Gaze estimation accuracy in the 3D camera space" sections in Results).
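Our per-fixation approach, described in the previous two paragraphs, can be sketched as below. The sketch assumes each fixation is given as a set of 3D gaze point position vectors and each reference as an unprojected direction. Accuracy is summarized as the median over the validation set, as in the Results. Precision is the sample standard deviation of the repeated central-target errors. Target labels and error values are synthetic.

```python
import numpy as np


def angles_deg(a, b):
    """Row-wise angle in degrees between two (N, 3) arrays of vectors."""
    a = np.asarray(a, float)
    b = np.asarray(b, float)
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return np.degrees(np.arccos(np.clip(np.sum(a * b, axis=1), -1.0, 1.0)))


def per_fixation_errors(fixations, refs):
    """Angle between the mean gaze vector of each fixation and its reference direction."""
    means = np.array([np.mean(np.asarray(f, float), axis=0) for f in fixations])
    return angles_deg(means, refs)


def direction(deg):
    """Unit vector rotated `deg` degrees from +z toward +x."""
    t = np.radians(deg)
    return [np.sin(t), 0.0, np.cos(t)]


# Hypothetical validation sequence; "c" is the repeated central target.
target_ids = ["c", "a", "c", "b", "c"]
true_err = [1.0, 2.0, 1.4, 3.0, 0.6]
# Two samples per fixation, placed symmetrically around the error angle.
fixations = [[direction(e - 0.5), direction(e + 0.5)] for e in true_err]
refs = np.array([[0.0, 0.0, 1.0]] * len(true_err))

errors = per_fixation_errors(fixations, refs)
accuracy = float(np.median(errors))
central = errors[[i for i, t in enumerate(target_ids) if t == "c"]]
precision = float(np.std(central, ddof=1))   # sample SD, like MATLAB's std
print(accuracy, precision)
```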

Binocular gaze point depth and vergence angle

Vergence angle was calculated as the angle between the two monocular gaze rays. Gaze depth was calculated as the distance from scene camera origin to the estimated gaze point in the scene camera coordinate frame (see "Gaze point depth and vergence angle estimation error" section in Results).
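Vergence and depth as defined above can be computed as sketched below. For comparison, the ideal vergence for a target straight ahead at distance D with interocular distance IPD is 2·arctan(IPD / (2D)). The 64 mm IPD is a hypothetical value. For simplicity, the eyes are centered on the origin here, whereas the study measured depth from the scene camera origin.

```python
import numpy as np


def vergence_deg(g_left, g_right):
    """Angle between the two monocular gaze directions."""
    gl = np.asarray(g_left, float) / np.linalg.norm(g_left)
    gr = np.asarray(g_right, float) / np.linalg.norm(g_right)
    return float(np.degrees(np.arccos(np.clip(gl @ gr, -1.0, 1.0))))


def gaze_depth(gaze_point, origin=(0.0, 0.0, 0.0)):
    """Distance from the reference origin (e.g., the scene camera) to the gaze point."""
    return float(np.linalg.norm(np.asarray(gaze_point, float) - np.asarray(origin, float)))


def ideal_vergence_deg(ipd, distance):
    """Vergence for a target straight ahead at `distance` (same units as ipd)."""
    return float(np.degrees(2.0 * np.arctan(ipd / (2.0 * distance))))


ipd = 0.064                                   # hypothetical interocular distance (m)
eye_l, eye_r = np.array([-ipd / 2, 0.0, 0.0]), np.array([ipd / 2, 0.0, 0.0])
for d in (1.0, 1.8, 0.6):                     # 0.6 m mimics an underestimated depth
    tgt = np.array([0.0, 0.0, d])
    print(d, vergence_deg(tgt - eye_l, tgt - eye_r), ideal_vergence_deg(ipd, d))
```

The 0.6 m case shows the inverse relation reported in the Results: an underestimated depth implies a larger vergence angle.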

Estimating camera extrinsics matrix using the IMU

In order to relate locations or movements of external objects (e.g., target on display screen, or the participant’s head) to the eye positions and orientations measured by the eye tracker in a 3D scene camera coordinate frame (specific to the device), we must find the transformation between the scene camera and world coordinate frames. This step is important for studies looking at three-dimensional eye rotations relative to head motion (e.g., VOR) and visual objects in depth (e.g., vergence).

This transformation can be accomplished in several ways. Commonly, the extrinsic parameters of a camera are estimated using a checkerboard with a known square size. However, the accuracy of this estimation varies with the camera resolution, the print quality of the checkerboard, and other factors. Other options include simultaneous localization and mapping (SLAM) algorithms used in concert with depth cameras, IMUs aligned with the camera’s sensor (as in robotics), or a combination of the two (Hausamann et al., 2020). We opted for the IMU approach because it does not depend on specific locations or illumination levels. We aligned the IMU and the camera sensor as described in Study 1, which allowed us to relate the camera scene to the external world.

Utilizing this approach, we estimated the camera extrinsics matrix using the Euler angles from the IMU that characterize the orientation of the IMU’s local coordinate frame relative to the global (world) coordinate frame. Subsequently, the transformation between the scene camera coordinate frame (CF) and the scene camera image was performed using the camera intrinsics matrix and the camera distortion model (see "Head-mounted eye tracking" section above and Supplementary Materials Tables A1 and A2 for more information). This approach led to minimal offsets between the estimated and physical marker locations (see Table B and Fig. S3 of Supplementary Materials).
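Converting IMU Euler angles to a rotation matrix can be sketched as follows. The intrinsic Z-Y-X (yaw, pitch, roll) convention and the identity IMU-to-camera alignment are assumptions for illustration. IMU vendors use different conventions, and the study’s actual alignment is described in its Supplementary Materials.

```python
import numpy as np


def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def world_from_imu(yaw_deg, pitch_deg, roll_deg):
    """Rotation from the IMU (sensor) frame to the world frame.

    ASSUMPTION: intrinsic Z-Y-X (yaw-pitch-roll) convention; check the device's
    documentation, since conventions differ between IMUs.
    """
    y, p, r = np.radians([yaw_deg, pitch_deg, roll_deg])
    return rot_z(y) @ rot_y(p) @ rot_x(r)


# Hypothetical fixed alignment between IMU and scene camera axes (identity here).
IMU_FROM_CAMERA = np.eye(3)


def world_from_camera(yaw_deg, pitch_deg, roll_deg):
    """Rotation part of the camera extrinsics (camera CF -> world CF)."""
    return world_from_imu(yaw_deg, pitch_deg, roll_deg) @ IMU_FROM_CAMERA


r = world_from_camera(90.0, 0.0, 0.0)
print(np.round(r @ [1.0, 0.0, 0.0], 6))   # camera x-axis maps to world y-axis
```

A marker at a known world position could then be expressed in the camera CF by applying the transpose of this rotation. The translation between frames is ignored in this sketch.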

For the grid fixation and saccade tasks, we verified head stability by using the acceleration and angular velocity signals measured by the eye tracker-mounted IMU. The averaged orientation data (Euler angles) during the task were then used to calculate the transformation matrix from the local (sensor) coordinate frame to global (world) CF. We thus determined the orientation of the screen in the earth-global reference frame, assuming that the x-axis of the IMU sensor was parallel with the plane of the screen (i.e., the participant was facing the screen), and the screen’s vertical was aligned with the direction of gravity. By aligning the eye tracker-mounted IMU and the scene camera CFs, the transformation matrix calculated using the IMU orientation data was used to determine the orientation of the screen, and more precisely the location of each marker in the scene camera CF. At the time of the recording, great attention was given to aligning the central marker of each calibration and validation choreography (grid pattern) horizontally with the nasion. This arrangement was used in the estimation described above.

An optimization routine further minimized the offsets between the estimated and physical marker locations and calculated an additional rotation transformation that was then used to adjust the IMU-based camera extrinsics matrix (see Supplementary Materials, Section A4).

Error measurements on screen

Parallax error, vergence angle, and depth

The cyclopean gaze ray was calculated as the resultant vector of the sum of the two monocular gaze rays. The cyclopean point (the origin of the cyclopean ray) is defined as the midpoint between the two eyeball centers. The screen location of the target projection in the 3D scene camera coordinate frame was determined using the extrinsics matrix. Subsequently, the intersections of each eye’s gaze ray and of the cyclopean ray with the target projection screen in the 3D scene camera coordinate frame were calculated.

The parallax error on screen was calculated as the distance between the intersection of the gaze point position vector (the line connecting the origin of the scene camera to the gaze point) with the screen and the intersection of the cyclopean ray (line connecting the midpoint between the eyes and the gaze point) with the screen (see "On screen, in the world coordinate frame" section in Results).
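The parallax computation can be sketched with a ray-plane intersection. The offset between the scene camera and the cyclopean point (3 cm vertical, 2 cm in depth) is hypothetical. The example reproduces the qualitative pattern: parallax vanishes when the estimated gaze point lies on the screen and grows as the gaze depth is underestimated.

```python
import numpy as np


def intersect_plane(origin, direction, plane_point, plane_normal, eps=1e-12):
    """Intersection of the line origin + t*direction with a plane (None if parallel)."""
    origin = np.asarray(origin, float)
    direction = np.asarray(direction, float)
    n = np.asarray(plane_normal, float)
    denom = direction @ n
    if abs(denom) < eps:
        return None
    t = ((np.asarray(plane_point, float) - origin) @ n) / denom
    return origin + t * direction


def parallax_error(camera_origin, cyclopean_point, gaze_point, plane_point, plane_normal):
    """On-screen distance between the camera->gaze-point line and the
    cyclopean-point->gaze-point line."""
    cam = np.asarray(camera_origin, float)
    cyc = np.asarray(cyclopean_point, float)
    gaze_point = np.asarray(gaze_point, float)
    hit_cam = intersect_plane(cam, gaze_point - cam, plane_point, plane_normal)
    hit_cyc = intersect_plane(cyc, gaze_point - cyc, plane_point, plane_normal)
    return float(np.linalg.norm(hit_cam - hit_cyc))


cam = np.zeros(3)
cyc = np.array([0.0, 0.03, -0.02])     # hypothetical eye-to-camera offset (m)
screen_pt, screen_n = np.array([0.0, 0.0, 1.0]), np.array([0.0, 0.0, 1.0])
for depth in (1.0, 0.8, 0.6):          # estimated gaze point depth (m)
    print(depth, parallax_error(cam, cyc, [0.0, 0.0, depth], screen_pt, screen_n))
```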

Cyclopean and monocular gaze errors on screen

The measured error on the screen is the distance between these intersection points and the respective target location averaged over all markers in the validation set. In addition, the distance between the two monocular gaze ray intersection points with the screen was calculated and reported in the results as L-R distance (see Fig. 9A in Results for schematic and "On screen, in the world coordinate frame" section for Results).
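The three on-screen error metrics and the L-R distance can be sketched for a single marker with a ray-plane intersection. The eye positions, the screen at 1 m, and the gaze directions are hypothetical. The cyclopean direction is taken as the sum of the two unit gaze vectors, as described above. Averaging over the validation set would simply take the mean of the per-marker values.

```python
import numpy as np


def intersect_plane(origin, direction, plane_point, plane_normal, eps=1e-12):
    """Intersection of the line origin + t*direction with a plane (None if parallel)."""
    origin = np.asarray(origin, float)
    direction = np.asarray(direction, float)
    n = np.asarray(plane_normal, float)
    denom = direction @ n
    if abs(denom) < eps:
        return None
    t = ((np.asarray(plane_point, float) - origin) @ n) / denom
    return origin + t * direction


def screen_errors(eye_l, g_l, eye_r, g_r, target, plane_point, plane_normal):
    """On-screen errors of the left, right, and cyclopean rays, plus the L-R distance."""
    eye_l, eye_r = np.asarray(eye_l, float), np.asarray(eye_r, float)
    g_l, g_r = np.asarray(g_l, float), np.asarray(g_r, float)
    target = np.asarray(target, float)
    hit_l = intersect_plane(eye_l, g_l, plane_point, plane_normal)
    hit_r = intersect_plane(eye_r, g_r, plane_point, plane_normal)
    cyc_dir = g_l / np.linalg.norm(g_l) + g_r / np.linalg.norm(g_r)  # sum of unit gaze vectors
    hit_c = intersect_plane(0.5 * (eye_l + eye_r), cyc_dir, plane_point, plane_normal)
    return {
        "left": float(np.linalg.norm(hit_l - target)),
        "right": float(np.linalg.norm(hit_r - target)),
        "cyclopean": float(np.linalg.norm(hit_c - target)),
        "L-R": float(np.linalg.norm(hit_l - hit_r)),
    }


eye_l, eye_r = np.array([-0.032, 0.0, 0.0]), np.array([0.032, 0.0, 0.0])
target = np.array([0.0, 0.0, 1.0])
screen_pt, screen_n = target, np.array([0.0, 0.0, 1.0])
# Left eye aims 1 cm to the right of the target; right eye is on target.
errs = screen_errors(eye_l, np.array([0.01, 0.0, 1.0]) - eye_l,
                     eye_r, target - eye_r, target, screen_pt, screen_n)
print(errs)
```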

Evaluating error in real-world tasks

Saccade amplitude error estimation: Intermittent eye motion between two target locations

The saccade task was treated as a series of short successive fixations. For each fixation, the mean pupil normal vector was calculated. The size of each saccade was calculated as the rotation angle between the gaze rays of successive fixations. The mean and standard deviation were calculated separately over all saccades in each task (small: 4°, large: 10°) and evaluated against the expected rotation angle (4° or 10°). See Fig. S6 in Supplementary Materials for more detail. For the head-restrained experiments, we compared these estimates with the amplitudes reported by the EyeLink and with the Pupil Core saccade amplitudes estimated using the homography projection method (see "Measured error in 2D: scene camera image and display screen" section above for additional information and "Saccades" section in Results).
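A minimal sketch of the saccade amplitude computation follows. It assumes each fixation is given as a set of pupil normal (or gaze) vectors in a common frame; the alternating 0° and 10° fixations are synthetic. The sample standard deviation (`ddof=1`) matches MATLAB’s default `std`.

```python
import numpy as np


def unit(v):
    """Return v scaled to unit length."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def saccade_amplitudes_deg(fixations):
    """Angle between the mean gaze (pupil normal) vectors of successive fixations."""
    means = [unit(np.mean(np.asarray(f, float), axis=0)) for f in fixations]
    return np.array([np.degrees(np.arccos(np.clip(a @ b, -1.0, 1.0)))
                     for a, b in zip(means[:-1], means[1:])])


def direction(deg):
    """Unit vector rotated `deg` degrees from +z toward +x."""
    t = np.radians(deg)
    return [np.sin(t), 0.0, np.cos(t)]


# Hypothetical large-saccade task: fixations alternate between 0 deg and 10 deg.
centers = [0.0, 10.0, 0.0, 10.0, 0.0]
fixations = [[direction(c - 0.2), direction(c + 0.2)] for c in centers]
amps = saccade_amplitudes_deg(fixations)
expected = 10.0
print(amps.mean(), amps.std(ddof=1), amps.mean() - expected)
```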

aVOR amplitude error estimation: Continuous eye and head motion while fixating a stationary target

The gaze and head motion data collected during the VOR calibration was used to evaluate Pupil Core performance in estimating the eye motion amplitude during head motion. We calculated the velocity gain of the eyes relative to the head, for both eyes, using each calibration choreography. In order to compare them, the eyes and head motion have to be represented in the same reference frame. We chose the 3D scene camera CF as the common reference frame.

The head motion angular rotation in yaw and pitch was obtained from the IMU raw gyroscope signal. First, the raw IMU gyroscope data was transformed from the local CF to 3D scene camera CF by the rotation matrix that characterizes the adjustment between scene camera and IMU (see Section A3 in Supplementary Materials).

The angular velocity was digitally filtered with a 3-Hz low-pass second-order Butterworth filter. To obtain the head rotation angle, the unfiltered angular velocity was de-trended with a 5-s moving average and then integrated. The resultant rotation angle signal was filtered with the same parameters as above.
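The de-trending and integration steps can be sketched with NumPy. This illustration is not the authors’ MATLAB code. The 100 Hz sampling rate and the test signal are hypothetical. The moving average is a centered window, and values near the edges are biased by zero padding. Integration uses the cumulative trapezoid rule. The 3 Hz second-order Butterworth filtering is omitted. In Python it could be done with `scipy.signal.butter(2, 3, btype="low", fs=fs)` followed by `scipy.signal.filtfilt`, although the text does not state whether the original filtering was zero-phase.

```python
import numpy as np


def moving_average(x, window):
    """Centered moving average; values within `window` samples of the edges are
    biased because np.convolve pads with zeros."""
    return np.convolve(x, np.ones(window) / window, mode="same")


def detrend_and_integrate(velocity, fs, window_s=5.0):
    """Remove a moving-average trend from angular velocity (deg/s), then integrate
    with the cumulative trapezoid rule to obtain angle (deg)."""
    velocity = np.asarray(velocity, float)
    window = int(round(window_s * fs))
    detrended = velocity - moving_average(velocity, window)
    dt = 1.0 / fs
    angle = np.concatenate([[0.0], np.cumsum(0.5 * (detrended[1:] + detrended[:-1]) * dt)])
    return detrended, angle, window


fs = 100.0                                              # hypothetical IMU rate (Hz)
t = np.arange(0.0, 20.0, 1.0 / fs)
head_vel = 30.0 * np.sin(2 * np.pi * 0.4 * t) + 2.0    # oscillation plus 2 deg/s bias
detrended, angle, w = detrend_and_integrate(head_vel, fs)
print(np.abs(detrended[w:-w] - 30.0 * np.sin(2 * np.pi * 0.4 * t[w:-w])).max())
```

Away from the edges, the 5 s window spans exactly two periods of the 0.4 Hz test signal. It therefore removes the constant bias while leaving the oscillation intact.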

The eye data was interpolated to have a common sampling frequency with the IMU sampling frequency (see "Data analysis" section in Study 1 for details). The eye rotation angle was calculated as the instantaneous angle between the projection of the gaze vector on the XZ plane and the x-axis for yaw and respective YZ plane and the y-axis for pitch, of the 3D scene camera CF and filtered as above. To obtain the eye angular velocity, the rotation angle was differentiated using MATLAB (for Results, see "Continuous motion: Vestibuloocular reflex" section).
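The angle definitions and a velocity gain can be sketched as below. The gain estimator is an assumption, since the text does not specify how gain was computed. Here it is the least-squares slope, through the origin, of eye velocity regressed on head velocity. The function returns the magnitude because the sign depends on axis conventions. The simulated 0.8 gain and the 0.5 Hz head motion are hypothetical.

```python
import numpy as np


def yaw_deg(gaze):
    """Angle between the gaze vector's projection on the XZ plane and the x-axis."""
    gaze = np.asarray(gaze, float)
    return np.degrees(np.arctan2(gaze[:, 2], gaze[:, 0]))


def pitch_deg(gaze):
    """Angle between the gaze vector's projection on the YZ plane and the y-axis."""
    gaze = np.asarray(gaze, float)
    return np.degrees(np.arctan2(gaze[:, 2], gaze[:, 1]))


def velocity_gain(eye_angle_deg, head_velocity_dps, fs):
    """Least-squares slope (through the origin) of eye velocity on head velocity.

    The sign depends on the axis conventions, so its magnitude is returned.
    """
    eye_vel = np.gradient(eye_angle_deg, 1.0 / fs)
    head = np.asarray(head_velocity_dps, float)
    return float(abs(eye_vel @ head / (head @ head)))


fs = 100.0
t = np.arange(0.0, 10.0, 1.0 / fs)
head_angle = 20.0 * np.sin(2 * np.pi * 0.5 * t)           # deg
head_vel = np.gradient(head_angle, 1.0 / fs)                # deg/s
eye_in_head = np.radians(-0.8 * head_angle)                # hypothetical 0.8-gain VOR
gaze = np.c_[np.sin(eye_in_head), np.zeros_like(t), np.cos(eye_in_head)]
print(velocity_gain(yaw_deg(gaze), head_vel, fs))          # close to 0.8
```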

Statistics

Normality was tested using the Lilliefors test. The descriptive statistics reported are the mean and standard deviation for normal distributions and the median and 25th and 75th percentiles for non-normal distributions. Alpha was set at 0.05. All statistical analyses were performed using MATLAB (MathWorks, Natick, MA, USA) and Prism (GraphPad Software, Inc., San Diego, CA, USA). All box plots use median and IQR.

Results

Effects of calibration type on estimated gaze orientation

The purpose of calibration in a system like the Pupil Core is to align the eye camera coordinate frames with the scene camera coordinates and thus relate eye movements in the eye camera to movements in the scene. While Pupil Capture supports a broad range of calibrations, it is unclear whether a particular arrangement provides a better estimate of the 3D camera orientation and thus of gaze. To determine whether differences in the arrangement of calibration targets affect gaze estimation, we performed four head-restrained calibration choreographies: five-, nine-, and 13-point full-screen grids and a nine-point central cross (star, Fig. 2C). We also performed five head-unrestrained choreographies: the four used in the head-restrained calibrations plus a single-marker head movement calibration (aVOR, Fig. 2A, C).

First, calibration matrices were computed using each calibration choreography shown in Fig. 2C. The resulting coordinate frames are shown graphically in the inset of Fig. 5 (lines in colors corresponding to the calibration that produced them). While the differences between transformation matrices resulting from different calibrations may appear small (Fig. S8, Supplementary Materials), we wanted to understand how they might affect gaze estimation in the 3D world. For simplicity, Fig. 5 illustrates these differences for a single representative gaze direction for participant P2 in the session where head restraint was varied.

To do so, we estimated a single, representative gaze location (pupil normal) using each of the calibration choreographies. For each choreography, we computed a gaze vector orientation (red, blue, yellow, and purple lines in Fig. 5) and compared it with the orientation estimated with every other calibration (Table 1; Supplementary Materials, Fig. S8). In other words, the angular difference between gaze orientation estimates was computed for each pair of calibration choreographies. We found that the choice of calibration can substantially change the estimated gaze orientation for a given pupil position (Table 1). Inter-calibration differences ranged from 0.21° to 7.73° in the head-restrained experiments and from 0.34° to 14.03° in the head-unrestrained experiment. The largest difference was between the left eye gaze vectors obtained by applying the star and the nine-point calibration matrices in the head-unrestrained experiment (see Supplementary Materials Table C, ii). Generally, the differences tended to be greater for the left eye than for the right eye.

Eye camera orientations in scene camera coordinates following four different calibrations: 5 points (blue), 9 points (yellow), 13 points (lilac), and nine-point star (red). Dots of corresponding color demonstrate estimated eye camera position using that calibration. Inset shows eye camera coordinate frames estimated using each calibration demarcated with corresponding color lines at the positions shown. Black dots: estimated eye position, with estimated eye rotation (gaze vector) shown in corresponding colors to the calibration used for respective gaze ray estimation. θ is an example difference between eye rotations estimated with two different calibrations (Star & 13-point grids)

Measurement error

While it is evident that the choice of calibration affects gaze position, the data illustrated in Fig. 5 are not sufficient to determine which calibration leads to the most accurate and precise gaze point estimates. Furthermore, it is not clear whether the same calibration approach would be the best choice for experiments in each of the reference frames described in Fig. 1. The following sections examine this question.

Accuracy (error in position estimation) and precision (variability of estimation) have previously been addressed in video-based eye tracking (Ehinger et al., 2019; Macinnes et al., 2018). However, these have largely been reported only relative to the scene camera image (Fig. 1, column 3) and for the combined gaze point across the two eyes.

This framework, while valuable for a broad range of studies, does not address individual eye movement estimation, eye rotation in 3D space, or in relation to physical objects or head movement. Here, we evaluate accuracy and precision first in the traditional, two-dimensional framework and subsequently extend our estimates to 3D space. Further, we examine how the choice of calibration might affect these estimates in each analysis domain: (1) pixel distance in the scene camera image (Fig. 1, column 2), (2) as the angular difference between the binocular gaze point and the unprojected target locations in the scene camera space (Fig. 1, column 3), and (3) distance (meters) on the physical screen (Fig. 1, column 4). Throughout this section, accuracy was calculated as the median accuracy for all validation points and precision was evaluated for the central fixation location only.

Error in 2D: Scene camera image and display screen

To evaluate the measurement error in the scene camera image, we calculated the distance in pixels between the projection of the binocular gaze point and the respective reference point for each validation target fixation under each calibration (see Methods, "Measured error in 2D: scene camera image and display screen" section). The averaged error varied between 15 and 40 pixels across all calibrations and sessions. The largest variability was observed for the head-unrestrained data calibrated using the star calibration (Fig. 6C, red unfilled box). Figure 6A shows the reference points and an example set of gaze point locations in the 2D scene camera image for gaze calibrated with the nine-point calibration choreography and evaluated over the validation set (P2, simultaneous EyeLink session). In this set, there does not appear to be a relationship between the spatial distribution of the reference point locations and the error size. Assuming that the head did not move relative to the screen, we mapped the binocular gaze points onto the screen using a homography transformation and measured the distance between these points and the target locations (see Methods "Measured error in 2D: scene camera image and display screen" section). This procedure allowed us to compare the measured errors of the Pupil Core system with those of the EyeLink. Figure 6B shows the data set in Fig. 6A mapped onto the display screen (red Xs); the EyeLink data are shown in blue. Using the same pixel-per-degree conversion as the EyeLink, the data can be represented in degrees of visual angle (Fig. 6D). As Fig. 6D shows, the errors measured by the Pupil Core are larger than those measured by the EyeLink for all data sets and all calibrations. The averaged errors shown here are comparable with the values reported by the Pupil Core software (Table 2) and with the average errors calculated using the 3D eye rotations (Fig. 7).

Measured error in the 2D scene camera image and on the display screen: A Example set of reference points and gaze point locations in the 2D scene camera image for P2, using the nine-point calibration grid and evaluated over the validation set. The red lines represent the measured error in pixels and correspond to the last yellow box in panel C. B Gaze points estimated by the Pupil Core algorithm (red Xs) from A, mapped onto the display screen using a 2D projective homography, target locations (black and gray) and the respective EyeLink gaze points (blue Xs). The lines, red for Pupil Core and blue for the EyeLink, represent the measured error in pixels on the display screen. The summary statistics for this specific distribution converted to degrees are shown in the last yellow box in panel D (nine-point calibration, P2 with EyeLink). C Measured error in pixels in the 2D scene camera for all four data sets: P2-head restrained (full boxes), head unrestrained (empty boxes), P1 head restrained, EyeLink (hashed left), P2 head restrained, EyeLink (hashed right), and all calibration sets: 5 (blue), 9 (yellow), 13 points (purple), star (red) and aVOR (black). D Measured error in degrees, for the data recorded with Pupil Core in all head restrained experiments. Error for the EyeLink data is shown in green for comparison

Measurement accuracy error in the scene camera 3D space. A Median accuracy estimated across the 25 validation marker locations for each calibration (five-point: blue, nine-point: yellow, 13-point: purple, star: red, and aVOR: black) and head-restrained (filled bars) and head-unrestrained (open bars) conditions. Data for simultaneous EyeLink sessions are shown as bars with hashes to the left (P1) and right (P2). B Accuracy error shown for each of the 25 validation points and calibrations, for the P2, head-restrained experiment. Each dot represents error magnitude (vertical axis) computed with the corresponding calibration (see color legend) for a corresponding marker ID (shown at locations indicated in the inset)

Accuracy and precision

While conclusions about the relative accuracy and variability may be drawn in the scene camera sensor space (which may be helpful, for example in comparison with the known pixel size of the target of interest), it is difficult to intuit the magnitude of these differences relative to actual rotations of the eye, particularly when the head is unrestrained.

Pupil capture accuracy visualizer

We report the accuracy and precision estimates (Fig. 1, column 3) given by the Pupil software (Pupil Labs’ accuracy visualizer plug-in) for gaze points calculated for the validation set using each calibration (see Methods "Error measured in the 3D scene camera coordinate frame" section). The accuracy error and precision in the head-restrained condition were similar across calibrations, with accuracy ranging between 1.6° and 2.2° and precision between 0.1° and 0.2° (Table 2A & B). For the head-unrestrained experiment, the accuracy error increased slightly (range 2.3–2.7°), with the aVOR calibration having the largest error (Table 2B) but good precision (estimated at 0.1°). The average error for the EyeLink data at the time of validation was estimated at 0.4° for both participants (Table 2A).

In the Pupil Labs’ algorithm, any angle larger than 5° is considered an outlier and is discarded before the average accuracy error is computed. Approximately 90% of the data were retained, meaning that about 10% of samples exceeded the threshold. These samples largely reflect the natural behavioral latency between the target position change and the eye movement to the new target position.

Gaze estimation accuracy in the 3D camera space

To calculate the accuracy for all validation target fixations, we implemented an algorithm similar to that above. More precisely, we calculated the angular error between the gaze point position vector and the respective unprojected reference point in the scene camera coordinate frame (Fig. 1, column 3). Reference points from the scene camera image were “unprojected” back into the 3D scene camera coordinates (Methods "Error measured in the 3D scene camera coordinate frame" section). Unlike Pupil Core’s own single-value estimate, this approach allowed us to estimate the error at each spatial location of targets on the screen, for each calibration (for example, see Fig. 7B for the P2 head-restrained experiment, shown as filled bars in Fig. 7A). Using these individual points, we calculated the summary statistics shown in Fig. 7A and Table 3. A similar error distribution was observed for the head-unrestrained condition (open bars in Fig. 7A). Some validation fixation targets had errors above 5°. Overall, the “star” calibration had some of the highest errors for individual targets, although its overall median accuracy was similar to that of the other calibrations. The star calibration had the highest precision (0.1°, P1), while the five-point grid had the lowest at 0.9° (P2, head-restrained).

Gaze point depth and vergence angle estimation error

Figure 8A illustrates the binocular gaze point depth estimates in the 3D scene camera coordinate frame for all targets of the validation set using the nine-point calibration choreography. Figure 8B shows the average gaze point depth estimates for all calibration choreographies and testing sessions. Overall, gaze point depth was severely underestimated for all marker positions and all calibration choreographies. The one exception was the five-point calibration for P2 during the simultaneous EyeLink session (Fig. 8B). Notably, this was also the most variable set. The star calibration tended to be the least variable, but it also produced some of the shortest (most underestimated) depth estimates.

Measurement error in 3D gaze estimation. A Example spatial distribution of the binocular gaze points for the nine-point calibration (P2, head-restrained, no EyeLink condition); bullseye dots on the gray rectangle (screen) represent validation marker locations. Solid dots are estimated gaze point positions relative to the screen in the 3D space of the scene camera. The central marker was shown multiple times (brown dots). B Binocular gaze point (GZP) depth from the origin of the scene camera 3D coordinate frame. Horizontal dashed lines: actual screen depth. C Distribution of gaze depth estimates across target eccentricity from the screen center computed on the 25-point validation set for all five calibration choreographies (P2, head-unrestrained). Inset shows marker distances from center in degrees. D Vergence angles estimated for each validation location using each calibration. Horizontal dashed lines: ideal vergence angles (\(\varphi\)). All bar plots: filled boxes – P2, head-restrained; open boxes – P2, head-unrestrained; hashed to the right – P1, hashed to the left – P2 (last two in the EyeLink condition). All panels show errors using the validation set for each calibration choreography (blue: five-point, yellow: nine-point, purple: 13-point, red: star, black: aVOR). E Schematic illustration of vergence angle (D) and parallax error (Table 4) estimates. Due to an underestimated gaze depth, the gaze rays for each eye appear to intersect at a smaller depth and thus have a greater angle between them and are diverging at the actual screen depth. The offset between the scene camera and actual eye position leads to a parallax error (Gibaldi et al., 2021)

A Schematic representation of the different error metrics (Cyclopean, Left, Right, Left-Right Distance) calculated on screen for the P2 head-restrained condition (B) and for the P2 head-unrestrained, P1 with EyeLink, and P2 with EyeLink conditions (Table 5). C Measurement errors for all participants in the head-restrained conditions, calculated using a homography transformation of the two-dimensional gaze points onto the display screen (gray), and the cyclopean errors (orange) from Table 5. Error calculated from the EyeLink data is shown in green for sessions where available

While the median values and spread provide a general idea of the gaze point depth error, the distribution of these errors depends on the visual angle distance from the center of the screen, as can be seen in Fig. 8A (Fig. 8C; Methods "Gaze point estimation" and "Binocular gaze point depth and vergence angle" sections). Gaze depth was estimated to be closest to the observer when fixating points further from the screen center. This observation was confirmed by a significant negative correlation between marker eccentricity from the center and the depth of the gaze estimate for all calibrations (Spearman r = [– 0.64, – 0.63, – 0.62, – 0.69, – 0.67], p < 0.001; Fig. 8C shows an example correlation for the P2 head-unrestrained condition).

The gaze point depth is inversely related to the vergence angle: fixations estimated to be farther away have a small vergence angle, and those estimated to be closer have a larger one. Fig. 8D shows the impact of poor gaze point depth estimation on the measured vergence angle. Moreover, gaze point depth varied widely across validation marker presentations (see Fig. 8A for an example calibration set), even though all markers lay in the same plane, either 1 or 1.8 m from the scene camera (marked as dashed gray lines in Fig. 8B and illustrated in panel A).
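For a target straight ahead of the observer, this inverse relation follows from simple trigonometry: with interocular distance IOD and fixation depth d, the ideal vergence angle is φ = 2·arctan(IOD / (2d)). The sketch below assumes symmetric fixation on the midline and measures depth from the midpoint between the eyes rather than from the scene camera; the 63-mm IOD is an illustrative value, not a participant measurement, and the function names are hypothetical.

```python
import math


def vergence_deg(iod_m, depth_m):
    """Ideal vergence angle (deg) for symmetric fixation at depth_m on the midline."""
    return math.degrees(2.0 * math.atan(iod_m / (2.0 * depth_m)))


def depth_from_vergence(iod_m, vergence_angle_deg):
    """Inverse relation: fixation depth (m) implied by a vergence angle."""
    return iod_m / (2.0 * math.tan(math.radians(vergence_angle_deg) / 2.0))


iod = 0.063  # illustrative interocular distance (m)
for d in (1.0, 1.8):
    print(f"{d} m -> {vergence_deg(iod, d):.2f} deg")
```

With these assumptions the ideal vergence is roughly 3.6° at 1 m and 2.0° at 1.8 m, so an estimate that places the gaze point at half the true depth nearly doubles the apparent vergence angle.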

On screen, in the world coordinate frame

Head-mounted eye trackers are affected by parallax error because the scene camera is offset from the eye, so the scene camera principal axis and the gaze direction do not coincide (Mardanbegi & Hansen, 2012). Parallax error is larger at near viewing distances and smaller at far ones (Gibaldi et al., 2021). The corresponding parallax error on screen (see the "Saccade amplitude error estimation: Intermittent eye motion between two target locations" section in Methods for the calculation) is shown in Table 4. Comparison with Fig. 8B reveals an inverse relationship between gaze point depth and parallax error. For example, the star calibration produced the shortest gaze point depth estimate (farthest from the actual screen depth) and had the largest parallax error. Note that although this calibration choreography had the most centrally located calibration points, the gaze depth estimates reported here were computed on the validation set, which was the same across all calibrations.
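A simplified top-down (2D) model helps show why a shorter gaze depth estimate goes with a larger parallax error. Assume that the eye and the scene camera lie in the same fronto-parallel plane (z = 0), separated laterally by an offset e, and that the estimated gaze point is placed on the true gaze ray at the estimated depth d. Re-projecting that point from the scene camera onto the screen at depth D then misses the target by e·(1 − D/d), which is zero only when d = D and grows as d shrinks. This is an illustrative sketch under those assumptions, not the calculation described in Methods; the function name and the 2-cm offset are hypothetical.

```python
def parallax_error_on_screen(eye_x, cam_x, target_x, screen_depth, est_depth):
    """Signed on-screen error (m) in a 2D top-down model.

    The eye and scene camera both sit at z = 0 (lateral positions eye_x, cam_x);
    the target lies on the screen plane z = screen_depth.
    """
    # Point on the true gaze ray (eye -> target) at the estimated depth.
    gaze_x = eye_x + (target_x - eye_x) * est_depth / screen_depth
    # Extend the scene camera's ray through that point until it reaches the screen.
    hit_x = cam_x + (gaze_x - cam_x) * screen_depth / est_depth
    return hit_x - target_x


# Hypothetical 2-cm eye-to-camera offset, target 10 cm right of the eye, screen at 1.8 m.
for d in (1.8, 1.2, 0.9):
    err = parallax_error_on_screen(0.0, 0.02, 0.10, 1.8, d)
    print(f"estimated depth {d} m -> error {abs(err) * 100:.1f} cm")
```

In this model the error grows from zero at the true depth to 1 cm and 2 cm as the depth estimate drops to 1.2 m and 0.9 m, independent of where the target lies on the screen.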

The measurement error on screen was calculated in three ways (illustrated in Fig. 9A; see Methods 4.8.2) for both the head-restrained (Fig. 9B) and head-unrestrained conditions (see Table 5 for a comparison of all conditions/participants). First, the error was calculated as the distance between the on-screen intersection point of the cyclopean gaze ray and the respective fixation target location of the validation set under each calibration (Fig. 9, orange). Second, we repeated the calculation for the right and left eye gaze rays individually (Fig. 9, blue and red). Finally, we estimated the distance between the screen intersection points of the right and left eyes (Fig. 9, seafoam). To compare our errors with those measured by the EyeLink, we also performed a homography transformation between the scene camera image and the display screen in pixels and converted the result to physical distances using the pixels-per-meter constant (see the "Measured error in 2D: scene camera image and display screen" section in Methods and the "Error in 2D: scene camera image and display screen" section in Results; Fig. 9C). Errors calculated using this method are superimposed on the cyclopean errors (orange bars from Fig. 9B/Table 5, "Cyclopean" columns). Overall, errors calculated using the homography tended to be smaller, though they were still significantly larger than those reported by the EyeLink.
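The three on-screen metrics reduce to ray–plane intersections. The sketch below assumes that each gaze ray is given by an origin (for example, an eye-sphere center) and a direction in scene camera coordinates, that the screen is described by a point on it and its normal in the same frame, and that the caller supplies the cyclopean ray. Errors are Euclidean distances in metres. The function names and toy geometry are hypothetical and are not part of the Pupil Core software.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def intersect_plane(origin, direction, plane_point, plane_normal):
    """Point where the ray origin + t * direction (t >= 0) meets the plane."""
    denom = _dot(direction, plane_normal)
    if abs(denom) < 1e-12:
        raise ValueError("gaze ray is parallel to the screen plane")
    t = _dot(_sub(plane_point, origin), plane_normal) / denom
    if t < 0:
        raise ValueError("screen plane lies behind the ray origin")
    return tuple(o + t * d for o, d in zip(origin, direction))


def screen_errors(target, rays, plane_point, plane_normal):
    """On-screen errors for each ray plus the left-right distance.

    rays maps 'cyclopean', 'left' and 'right' to (origin, direction) pairs.
    """
    hits = {name: intersect_plane(o, d, plane_point, plane_normal)
            for name, (o, d) in rays.items()}
    errors = {name: math.dist(hit, target) for name, hit in hits.items()}
    # Distance between the two monocular points of regard on the screen.
    errors["left_right_distance"] = math.dist(hits["left"], hits["right"])
    return errors


# Toy geometry in metres: eyes 63 mm apart, screen plane z = 1.8, all rays on target.
target = (0.0, 0.0, 1.8)
screen_point, screen_normal = (0.0, 0.0, 1.8), (0.0, 0.0, 1.0)
cyclopean_eye = (0.0, 0.0, 0.0)
left_eye, right_eye = (-0.0315, 0.0, 0.0), (0.0315, 0.0, 0.0)
rays = {
    "cyclopean": (cyclopean_eye, _sub(target, cyclopean_eye)),
    "left": (left_eye, _sub(target, left_eye)),
    "right": (right_eye, _sub(target, right_eye)),
}
print(screen_errors(target, rays, screen_point, screen_normal))
```

If the left and right directions are made parallel, the left-right distance becomes the assumed interocular distance (63 mm), which is the IOD reference level in Fig. 9A & B.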

As expected, the measurement error was smaller for the cyclopean gaze ray than for the monocular gaze rays for all conditions and calibrations, with medians around 10 cm for the 1.8-m viewing conditions and around 3 cm for the 1-m viewing trials. The on-screen measurement error of the left gaze ray was larger than that of the right gaze ray. For P2, the distance between the monocular points of regard on screen was largest for the star calibration (see Fig. S2 in Supplementary Materials for reference), while all calibrations produced similar distances for P1. If the eyes were indeed looking at the same point, we would expect this distance to be zero. If the gaze rays were parallel, the distance would equal the participant's interocular distance (Fig. 9A & B, gray line labeled IOD). This distance is inversely related to the depth of the binocular gaze point. Thus, the large distance seen in Fig. 9B reflects the large underestimation of the binocular gaze point depth: because the two gaze rays are estimated to intersect much closer than the target, the eyes appear to cross and look farther contralaterally from the marker in the target plane (180-cm depth).
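The inverse relationship follows from similar triangles. Assume, for illustration, that the two eyes are separated by IOD in a plane parallel to the screen at distance D, and that their gaze rays cross on the midline at the estimated depth d. Beyond the crossing point the rays diverge again, so their signed separation s on the screen (positive when the rays are crossed, so that each eye lands contralaterally) and the depth recovered from it are

```latex
s = \mathrm{IOD}\,\frac{D - d}{d}
  = \mathrm{IOD}\left(\frac{D}{d} - 1\right),
\qquad
d = \frac{\mathrm{IOD}\,D}{s + \mathrm{IOD}}.
```

Thus s = 0 when the rays meet on the screen (d = D), s approaches −IOD as the rays become parallel (d → ∞, a separation equal to the IOD in magnitude), and s grows without bound as d shrinks. With a hypothetical IOD of 63 mm and D = 1.8 m, halving the estimated depth to 0.9 m already separates the two monocular points by 63 mm.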

Accounting for error in real-world tasks

Estimating the scale (amplitude) of eye movements is a major goal in many eye-tracking tasks. Here we probe two tasks: saccades and the angular vestibuloocular reflex (aVOR). For saccades, the key metric is the rotation angle between the eye orientations before and after the saccade. Importantly, the relative rotation angles for each eye are invariant to the reference coordinate frame and are thus not affected by the choice of calibration (Fig. 1, column 1). Because the calibration matrix only transforms the reference frame of the eye camera to that of the scene camera (Fig. 1, column 2), the eye rotation is the same in both reference frames. Thus, errors in the relative rotation angles are a consequence of the accuracy and precision of the 3D eye model estimation and cannot be compensated for by the calibration process.
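This invariance can be checked numerically. The sketch below treats the calibration as a pure rotation R from the eye camera frame to the scene camera frame (a translation would not change direction vectors in any case) and shows that the angle between a pre- and a post-saccadic gaze direction is unchanged by R. The rotation axis and angle, and the ±5° gaze directions, are arbitrary illustrative values, not a calibration from this study.

```python
import math


def rotation_matrix(axis, angle_deg):
    """Rotation by angle_deg about axis (Rodrigues' formula), as a 3x3 nested list."""
    n = math.sqrt(sum(a * a for a in axis))
    x, y, z = (a / n for a in axis)
    c, s = math.cos(math.radians(angle_deg)), math.sin(math.radians(angle_deg))
    C = 1.0 - c
    return [
        [c + x * x * C, x * y * C - z * s, x * z * C + y * s],
        [y * x * C + z * s, c + y * y * C, y * z * C - x * s],
        [z * x * C - y * s, z * y * C + x * s, c + z * z * C],
    ]


def rotate(R, v):
    return tuple(sum(R[i][j] * v[j] for j in range(3)) for i in range(3))


def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def angle_between_deg(u, v):
    """Angle between two vectors; the atan2 form stays accurate for small angles."""
    cross_norm = math.sqrt(sum(c * c for c in cross(u, v)))
    dot = sum(a * b for a, b in zip(u, v))
    return math.degrees(math.atan2(cross_norm, dot))


def yaw_direction(yaw_deg):
    """Unit gaze direction rotated yaw_deg about the vertical (y) axis from +z."""
    a = math.radians(yaw_deg)
    return (math.sin(a), 0.0, math.cos(a))


pre, post = yaw_direction(-5.0), yaw_direction(5.0)  # eye camera frame
R = rotation_matrix((1.0, 2.0, 3.0), 20.0)            # hypothetical calibration
print(angle_between_deg(pre, post), angle_between_deg(rotate(R, pre), rotate(R, post)))
```

Both printed angles are 10° up to floating-point rounding: a rotation preserves dot and cross-product magnitudes, so any error in saccade amplitude must originate in the eye model rather than in the calibration.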

Saccades

For each recording session, we examined saccade amplitude for the rotation of the cyclopean gaze and of the left and right eyes (akin to the illustration in Fig. 9A; see the "Measured error in 2D: scene camera image and display screen" and "Saccade amplitude error estimation: Intermittent eye motion between two target locations" sections in Methods). For the sessions in which an EyeLink was used simultaneously to track the eyes, we also estimated saccade amplitude from the EyeLink data and compared it with the Pupil Core data transformed into display screen coordinates by the homography (analogous to the EyeLink mapping procedure; see the "EyeLink" and "Measured error in 2D: scene camera image and display screen" sections in Methods). For each, we computed the relative rotation angle between the starting locations of consecutive saccades. Because calibration affects the estimated gaze position but not the scale of the movement, we neither expected nor observed a difference between calibrations. Thus, Fig. 10 shows data for a single calibration (nine-point). There was significant variation in the median saccade size across sessions (4° and 10° tasks; Fig. 10A & B). For P1, regardless of the eye used (cyclopean, right, or left), saccade sizes tended to be close to the distance between targets and were generally in agreement with the EyeLink measurements.
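The amplitude computation can be sketched as the angle between gaze directions at the starting points of consecutive saccades, summarized by the median. The sketch assumes that fixation-start directions are already available (for example, from a fixation detector). For screen-based data, such as EyeLink or homography-mapped Pupil Core gaze, it converts on-screen positions to directions under the additional assumption that the eye lies on the screen normal through the screen center at a known viewing distance. All function names are illustrative.

```python
import math
import statistics


def angle_between_deg(u, v):
    """Angle between two 3D vectors (deg), via atan2(|u x v|, u . v)."""
    cx = u[1] * v[2] - u[2] * v[1]
    cy = u[2] * v[0] - u[0] * v[2]
    cz = u[0] * v[1] - u[1] * v[0]
    dot = sum(a * b for a, b in zip(u, v))
    return math.degrees(math.atan2(math.sqrt(cx * cx + cy * cy + cz * cz), dot))


def screen_to_direction(x_m, y_m, viewing_distance_m):
    """Direction from the eye to a screen point (eye on the central screen normal)."""
    return (x_m, y_m, viewing_distance_m)


def saccade_amplitudes_deg(fixation_dirs):
    """Amplitude of each saccade = angle between consecutive fixation directions."""
    return [angle_between_deg(a, b) for a, b in zip(fixation_dirs, fixation_dirs[1:])]


# Targets alternating 5 deg left/right of center on a screen 1.8 m away (10 deg task).
half = 1.8 * math.tan(math.radians(5.0))
fixations = [screen_to_direction(s * half, 0.0, 1.8) for s in (-1, 1, -1, 1, -1)]
amps = saccade_amplitudes_deg(fixations)
print(statistics.median(amps))
```

The median is used because a single mis-detected fixation would otherwise shift the summary amplitude.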

Fig. 10 Saccade amplitudes for the small (4°, A) and large (10°, B) saccade tasks for each eye (all sessions) using the nine-point calibration. Dashed lines: ideal amplitudes. Cyclopean gaze (orange), right (red), and left (navy) eye rotation amplitudes are shown, along with saccade amplitudes calculated using a projection onto the display screen (gray) and those measured using the EyeLink 1000 (green). C Gaze point depth estimates for the rightward saccade target locations of the large (10°) saccades, using different calibrations. Dashed lines: actual fixation depth

However, results were more variable for P2. In the head-restrained and head-unrestrained sessions, the right eye model showed a larger median rotation than the left, with an offset of roughly 1.6–2.6°. More precisely, the right-eye offset was 1.99° (head-restrained) and 1.75° (head-unrestrained) for the small (4°) target saccades, and 1.60° and 2.56°, respectively, for the large (10°) target saccades. In the EyeLink session, the relationship between the left and right eyes reversed, with the right eye rotations underestimating the ideal and EyeLink-confirmed saccade amplitudes.

While eye rotations are calibration-invariant, gaze point estimation relies on proper alignment of the eye and camera coordinate frames. When we examined the gaze point depth for each individual fixation in the saccade task (see the "Saccade amplitude error estimation: intermittent eye motion between two target locations" section in Methods), we found that different calibrations did significantly affect gaze point depth estimation for participant P2 in all three sessions. Figure 10C shows gaze depth estimates for the fixations on the right-side targets during the large saccade task (+5° from center) for all participants/conditions/calibrations. For some calibration types, gaze depths were estimated at more than 20 m, yet many estimates were still significantly shorter than the actual viewing distance. This example set of gaze point estimates is representative of both saccade sizes (4°/10°) and target locations (left/right) across calibrations (see Supplementary Materials, Fig. S11). When interpreting this variation in depth, it is important to keep in mind that the participant viewed targets that alternated between only two spatial locations (right and left of center), always in the same fronto-parallel plane. P1 showed significantly less variability, with all fixation depths underestimated at approximately half the actual fixation depth.
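One way to see why depth estimates can scatter so widely is to triangulate a binocular gaze point as the midpoint of the shortest segment between the two gaze rays. This is a common textbook construction used here only for illustration; it is not necessarily the method used internally by the Pupil Core software. When the rays are nearly parallel, a small change in either ray's direction moves their near-intersection a long way in depth. The eye positions and the 0.9° outward error in the example are hypothetical.

```python
import math


def closest_point_between_rays(p1, u, p2, v, eps=1e-12):
    """Midpoint of the shortest segment between lines p1 + t*u and p2 + s*v.

    Returns None for (near-)parallel rays, where no finite depth exists.
    Negative t or s would indicate that the rays diverge in front of the eyes.
    """
    w0 = tuple(a - b for a, b in zip(p1, p2))
    a = sum(x * x for x in u)
    b = sum(x * y for x, y in zip(u, v))
    c = sum(x * x for x in v)
    d = sum(x * y for x, y in zip(u, w0))
    e = sum(x * y for x, y in zip(v, w0))
    denom = a * c - b * b
    if abs(denom) < eps:
        return None
    t = (b * e - c * d) / denom
    s = (a * e - b * d) / denom
    q1 = tuple(pi + t * ui for pi, ui in zip(p1, u))
    q2 = tuple(pi + s * vi for pi, vi in zip(p2, v))
    return tuple((x + y) / 2 for x, y in zip(q1, q2))


def yaw_dir(yaw_deg):
    """Unit direction rotated yaw_deg about the vertical axis from +z."""
    a = math.radians(yaw_deg)
    return (math.sin(a), 0.0, math.cos(a))


half_iod, depth = 0.0315, 1.8
true_half_vergence = math.degrees(math.atan(half_iod / depth))
left, right = (-half_iod, 0.0, 0.0), (half_iod, 0.0, 0.0)

exact = closest_point_between_rays(left, yaw_dir(true_half_vergence),
                                   right, yaw_dir(-true_half_vergence))
# Rotate each eye 0.9 deg outward: the rays become nearly parallel.
biased = closest_point_between_rays(left, yaw_dir(true_half_vergence - 0.9),
                                    right, yaw_dir(-true_half_vergence + 0.9))
print(f"true fixation: {exact[2]:.2f} m, with 0.9 deg error: {biased[2]:.1f} m")
```

Under these assumptions, an outward error of under one degree per eye moves the triangulated point from 1.8 m to well beyond 10 m, which is the same order of magnitude as the largest depth estimates in Fig. 10C.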

Continuous motion: Vestibuloocular reflex

The calibration represents the rotation of the eye model CF to the scene camera CF. To compare head and eye motion, each motion had to be represented in a common space, such as the scene camera CF. Even though the rotation of the eye is invariant to the reference coordinate frame when measured around its rotation axis, the projection of its rotation axis onto the scene camera coordinate frame depends on the orientation of the eye camera coordinate frame relative to the scene camera coordinate frame. Consequently, calibration accuracy could, in principle, influence the VOR gain (see the "aVOR amplitude error estimation: Continuous eye and head motion while fixating a stationary target" section in Methods). The effect observed in this data set was minimal; the results of the linear regression analysis for all calibrations are shown in Supplementary Materials, Table C. Overall, eye-in-head angular velocity exceeded head-in-space velocity by 15–17% for a participant viewing a distant (180 cm) target (Fig. 11E, F).
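The gain estimate can be sketched as an ordinary least-squares slope of eye-in-head angular velocity against head-in-space angular velocity for one axis (yaw or pitch). Because compensatory eye velocity is opposite in sign to head velocity, the sketch reports the negated slope, so that a gain of 1 means perfect compensation; under this definition, eye velocity exceeding head velocity by 15–17% corresponds to gains of about 1.15–1.17. The regression direction, the sign convention, the function names, and the synthetic sinusoidal head motion are all assumptions for illustration.

```python
import math


def ols_slope(x, y):
    """Least-squares slope of y regressed on x (with intercept)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sxx


def vor_gain(head_velocity, eye_in_head_velocity):
    """VOR gain for one axis; negated slope because the eyes counter-rotate."""
    return -ols_slope(head_velocity, eye_in_head_velocity)


# Synthetic 1-Hz yaw head oscillation sampled at 200 Hz (deg/s), with the eyes
# counter-rotating 16% faster than the head plus a small constant offset.
t = [i / 200 for i in range(400)]
head = [60.0 * math.sin(2 * math.pi * ti) for ti in t]
eye = [-1.16 * h + 0.5 for h in head]
print(round(vor_gain(head, eye), 3))  # 1.16
```

Fitting an intercept keeps a constant drift in eye velocity (the 0.5 deg/s offset above) from biasing the gain.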

Fig. 11 VOR: Rotational angle (A, B) and angular velocity (C, D) in yaw (A, C) and pitch (B, D) for the right (red dashed) and left (blue dashed) eye in head, the head (black solid) in space, and the right (red solid) and left (blue solid) gaze in space when the 13-point calibration was used to calculate the gaze. The gain was calculated by linear regression of angular velocity between the head and each eye (right – red; left – blue) for the yaw (E) and pitch (F) motion, respectively

Discussion

Here we present a series of experiments investigating sources of error that might occur during head-unrestrained eye tracking. These experiments span several stages of the eye-tracking pipeline (Fig. 1), and experimenters should assess and mitigate those that are relevant to their specific experiment type. Our data reveal several stages where measurement uncertainties may not be immediately apparent. Motion of the eye cameras relative to the scene camera and the eye during task performance, errors in the estimation of the binocular gaze point in depth, and errors in the eye model estimate can all introduce noise that must be considered individually when planning experiments with the Pupil Core or a similar device, and that should be taken into account in future updates to the data collection and analysis algorithms.

First, motion of the eye cameras relative to each other and to the rest of the device may not only introduce artificial eye movement but also invalidate the coordinate frame transformations computed during calibration (Fig. 4). Second, the choice of calibration grid can affect eye orientation estimates (Fig. 5) in ways that cannot be disentangled without a ground-truth reference. It is nontrivial, however, to determine which data set or calibration approach is optimal.

In our experiment, for example, we found that the data for participant P1 was the most robust to the choice of calibration (Table 1) and had some of the lowest parallax errors, the least variable (though strongly underestimated) gaze depth estimates, the lowest gaze position errors relative to the screen, and the best saccade amplitude estimates. However, this difference between data sets was not apparent in the calibration accuracy and precision estimated by the Pupil software alone, or in the gaze position estimates in the scene camera image, where P1's data was comparable to that of all other sessions. This observation suggests that the metrics commonly used to assess data quality may not be sufficient for certain measurements and that a more careful examination is required.
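For reference, calibration accuracy and precision are commonly defined in angular terms: accuracy as the mean angular offset between gaze and target directions, and precision as the root mean square of the angular distance between successive gaze samples (RMS sample-to-sample). The sketch below follows these common definitions rather than reproducing the Pupil software's exact implementation. Because it works on direction vectors only, it illustrates why such metrics cannot reveal depth errors: two gaze estimates that share a direction but differ greatly in depth receive identical scores. All sample values and function names are made up.

```python
import math


def angle_deg(u, v):
    """Angle between two 3D vectors (deg), via atan2(|u x v|, u . v)."""
    cx = u[1] * v[2] - u[2] * v[1]
    cy = u[2] * v[0] - u[0] * v[2]
    cz = u[0] * v[1] - u[1] * v[0]
    dot = sum(a * b for a, b in zip(u, v))
    return math.degrees(math.atan2(math.sqrt(cx * cx + cy * cy + cz * cz), dot))


def accuracy_deg(gaze_dirs, target_dirs):
    """Mean angular offset between each gaze sample and its target."""
    return sum(angle_deg(g, t) for g, t in zip(gaze_dirs, target_dirs)) / len(gaze_dirs)


def precision_rms_s2s_deg(gaze_dirs):
    """RMS of angular distances between successive gaze samples."""
    d = [angle_deg(a, b) for a, b in zip(gaze_dirs, gaze_dirs[1:])]
    return math.sqrt(sum(x * x for x in d) / len(d))


target = (0.0, 0.0, 1.0)
# Gaze points 1 deg off target, placed once at 1.8 m and once at 20 m depth.
off = math.radians(1.0)
near = [(1.8 * math.sin(off), 0.0, 1.8 * math.cos(off))] * 3
far = [(20.0 * math.sin(off), 0.0, 20.0 * math.cos(off))] * 3
print(accuracy_deg(near, [target] * 3), accuracy_deg(far, [target] * 3))
print(precision_rms_s2s_deg(near), precision_rms_s2s_deg(far))
```

Both gaze sets score about 1° accuracy and zero RMS sample-to-sample error, even though one places the gaze point more than ten times farther away.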

Eye camera motion

Slippage of the headset on the participant's face can change the position of the eye cameras relative to the eye and thus invalidate the estimated eye model, which describes the placement of the eye sphere relative to the eye camera sensor. To account for this possibility, the data capture algorithm is allowed to continuously adjust the eye model. However, this continuous recalculation may lead to instances where the optimizer cannot find a viable solution, resulting in non-physiological estimates of the eye position relative to the camera. Due to the complexity of the signal processing pipeline, these errors may go undetected during recording and thus affect the resulting gaze point estimates. Prior studies have assessed the degree of headset slippage (e.g., Niehorster et al., 2020a) as well as the effect of overall participant motion on recording accuracy (Hooge et al., 2022).
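Because such failures can go unnoticed during recording, a simple offline screen of the fitted eye model may help. The sketch below assumes that per-frame eye-sphere center estimates in eye camera coordinates (in millimetres) have been exported, and flags frames whose center lies outside a plausible distance range from the camera or jumps more than a set amount between consecutive frames. The function name is hypothetical, and the thresholds are placeholders chosen for illustration, not validated physiological limits; they would need to be tuned to the headset geometry.

```python
import math


def flag_implausible_eye_models(centers_mm, min_dist_mm=15.0, max_dist_mm=60.0,
                                max_jump_mm=2.0):
    """Indices of frames whose eye-sphere center looks non-physiological.

    centers_mm: sequence of (x, y, z) eye-sphere centers in eye camera coordinates.
    All thresholds are illustrative placeholders.
    """
    flagged = []
    for i, c in enumerate(centers_mm):
        dist = math.dist(c, (0.0, 0.0, 0.0))
        out_of_range = not (min_dist_mm <= dist <= max_dist_mm)
        # Compare with the previous frame (even if that frame was itself flagged).
        jumped = i > 0 and math.dist(c, centers_mm[i - 1]) > max_jump_mm
        if out_of_range or jumped:
            flagged.append(i)
    return flagged


centers = [(0.0, 0.0, 30.0), (0.0, 0.0, 30.5), (0.0, 0.0, 40.0),
           (0.0, 0.0, 40.2), (0.0, 0.0, 100.0)]
print(flag_implausible_eye_models(centers))  # [2, 4]
```

Frame 2 is flagged for a sudden 9.5-mm jump and frame 4 for lying outside the allowed range; such flags could be used to exclude gaze samples or to trigger a re-fit of the eye model before gaze points are computed.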