Abstract

Communicating with a speaker with a different accent can affect one’s own speech. Despite the strength of evidence for perception-production transfer in speech, the nature of transfer has remained elusive, with variable results regarding the acoustic properties that transfer between speakers and the characteristics of the speakers who exhibit transfer. The current study investigates perception-production transfer through the lens of statistical learning across passive exposure to speech. Participants experienced a short sequence of acoustically variable minimal pair (beer/pier) utterances conveying either an accent or typical American English acoustics, categorized a perceptually ambiguous test stimulus, and then repeated the test stimulus aloud. In the canonical condition, /b/–/p/ fundamental frequency (F0) and voice onset time (VOT) covaried according to typical English patterns. In the reverse condition, the F0xVOT relationship reversed to create an “accent” with speech input regularities atypical of American English. Replicating prior studies, F0 played less of a role in perceptual speech categorization in reverse compared with canonical statistical contexts. Critically, this down-weighting transferred to production, with systematic down-weighting of F0 in listeners’ own speech productions in reverse compared with canonical contexts that was robust across male and female participants. Thus, the map** of acoustics to speech categories is rapidly adjusted by short-term statistical learning across passive listening and these adjustments transfer to influence listeners’ own speech productions.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

The close interaction of speech perception and production is undeniable. Perception of one’s own speech influences speech production (e.g., Bohland et al., 2010; Guenther, 1994). For example, altering speech acoustics and feeding speech back to a talker with minimal delay results in rapid compensatory alterations to productions that are predictable, replicable, and well accounted for by neurobiologically plausible models of speech production (e.g., Guenther, 2016; Houde & Jordan, 1998).

Similarly, perception of another talker’s speech can influence production. Talkers imitate sublexical aspects of perceived speech in speech shadowing tasks (Fowler et al., 2003; Goldinger, 1998; Shockley et al., 2004) and phonetically converge to become more similar to a conversation partner (Pardo et al. 2017). However, results are variable and hard to predict. Shadowers imitate lengthened voice onset times (VOT), but not shortened VOTs (Lindsay et al., 2022; Nielsen, 2011; but see also Schertz & Paquette-Smith, 2023). Phonetic convergence occurs only for some utterances or some acoustic dimensions but not others (Pardo et al., 2013). Talkers may converge across some dimensions but diverge on others (Bourhis & Giles 1977; Earnshaw, 2021; Heath, 2015), making it difficult to predict which articulatory-phonetic dimensions will be influenced (Ostrand & Chodroff, 2021). Phonetic convergence is also variable across talkers’ sex (Pardo et al., 2017), with some studies reporting greater convergence among female participants (Namy et al., 2002), others among males (Pardo, 2006; Pardo et al., 2010), or more complicated male–female patterns of convergence (Miller et al., 2010; Pardo et al., 2017). In sum, the direction and magnitude of changes in speech production driven by perceived speech are dependent on multiple contributors (Babel, 2010; Pardo, 2006,) likely to include social and contextual factors (Bourhis & Giles, 1977; Giles et al., 1991; Pardo, 2006). This has made it challenging to characterize production–perception interactions fully.

Some have argued that a better understanding of the cognitive mechanisms linking speech perception and production will meet this challenge (Babel, 2012; Pardo et al., 2022). Here, we propose an approach that is novel in two ways: (1) Statistical learning. Instead of investigating phonetic convergence at the level of individual words, we manipulate the statistical relationship of two acoustic dimensions, fundamental frequency (F0) and voice onset time (VOT) and study the effect of perceptual statistical learning across these dimensions on listeners’ own speech. (2) Subtlety and implicitness. Acoustic manipulation of the statistical regularities of speech input is barely perceptible and devoid of socially discriminating information, since it is carried on the same voice, therefore allowing us to investigate the basic perception–production transfer without influence of additional (important, but potentially complicating) sociolinguistic factors.

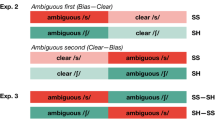

Our approach builds on the well-studied role of statistical learning in speech perception. Dimension-based statistical learning tracks how the effectiveness of acoustic speech dimensions in signaling phonetic categories varies as a function of short-term statistical regularities in speech input (Idemaru & Holt, 2011, 2014, 2020; Idemaru & Vaughn, 2020; Lehet & Holt, 2017; Liu & Holt, 2015; Schertz et al., 2015; Schertz & Clare, 2020; Zhang & Holt, 2018; Zhang et al., 2021). This simple paradigm parametrically manipulates acoustic dimensions, for example, voice onset time (VOT) and fundamental frequency (F0), across a two-dimensional acoustic space to create speech stimuli varying across a minimal pair (beer–pier). The paradigm selectively samples stimuli to manipulate short-term speech regularities, mimicking common communication challenges like encountering a talker with an accent that deviates from local norms. Across Exposure stimuli (Fig. 1A–B, red) the short-term input statistics either match the typical F0 × VOT correlation in English (canonical condition, e.g., with higher F0s and longer VOTs for pier) or introduce a subtle and barely detectable “accent” with a short-term F0 × VOT correlation opposite of that typically experienced in English (reverse condition, e.g., lower F0s with longer VOTs for pier).

Stimulus and trial structure. A Canonical distribution. B Reverse distribution. The test stimuli (blue) have ambiguous VOT and are identical across canonical and reverse conditions. C Trial structure. Exposure phase: Participants listened passively to 8 exposure stimuli, each paired with a visual stimulus. Perceptual categorization phase: After 600 ms, they heard one of two test stimuli with low or high F0 and categorized it as beer or pier. Repetition phase: they heard the same test stimulus again and repeated it aloud. (Color figure online)

Test stimuli are constant across conditions (Fig. 1A–B, blue). They have a neutral, perceptually ambiguous VOT thereby removing this dominant acoustic dimension from adjudicating category identity. But F0 varies across test stimuli. Therefore, the proportion of test stimuli categorized as beer versus pier provides a metric of the extent to which F0 is perceptually weighted in categorization as a function of experienced short-term speech input regularities (Wu & Holt, 2022).

Although the manipulation of short-term input statistics is subtle and unbeknownst to the listeners, the exposure regularity rapidly shifts the perceptual weight of F0 in beer–pier test stimulus categorization (Idemaru & Holt, 2011). Listeners down-weight F0 reliance upon introduction of the accent. This effect is fast and robust against the well-known individual differences in perceptual weights and the variability with which individuals perceptually weight different acoustic dimensions (Kong & Edwards, 2011, 2016; Schertz et al., 2015, 2016). In all, this well-replicated finding (1) demonstrates reliable changes in the perceptual system as a function of brief exposure to subtle changes in the statistical properties of the acoustic input and (2) establishes a statistical learning paradigm as an ideal tool for examining the impact of these changes on speech production.

In the current study, we used dimension-based statistical learning to investigate whether adjustments to the perceptual space influence speech production in systematic ways. Following Hodson et al. (2013), we strove for including the maximal random effects in the models. Most models, however, did not tolerate the maximal random effect structure. For consistency, we report the models with random intercept of both subjects and items, which were tolerated by all models. The former captures variability among subjects; the latter among exposure sequences that changed from trial to trial. To assure that excluding random slopes did not radically alter any of the main conclusions, we also report the output of the models with the largest random effect structure tolerated by each model in Appendix 2.

For perceptual categorization data, a logit mixed-effects logistic regression model included a binary response (beer, pier) as the dependent variable. The model included condition (canonical, reverse), test stimulus F0 (low F0, high F0), and participant sex (male, female) and their two- and three-way interactions as fixed effects, and by-subject and by-item random intercepts included. For speech production data, a continuous z-score normalized F0 dependent measure allowed for a standard (non-logit) linear mixed-effects model. Here, too, fixed effects of condition, test stimulus, sex and its interactions were modeled, with by-subject and by-items modeled as random effects. Dependent categorical variables were center coded (1 vs −1). P values were based on Satterthwaite approximates using the LmerTest package (Version 3.1-3; Kuznetsova et al., 2017). Analyses collapsed data from the three canonical blocks and, separately, from the three reverse blocks.

We conducted the production analyses in two steps: (1) Our first analysis used test stimulus F0 to predict production F0. This analysis is parallel to the perceptual analysis and captures the whole process, which includes the change to perception as well as changes to production. (2) Our second analysis used perceptual responses as the main predictor of production F0. This analysis already partials out the contribution of perceptual changes as a function of exposure to the canonical and reverse distributions, which allows us to isolate the production component of transfer. The data, analysis code, and full tables of the results are available (https://osf.io/cwg4d/).

Results

Perceptual categorization

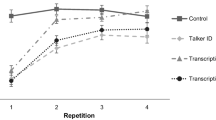

Figure 2 plots categorization responses as a function of canonical and reverse short-term speech regularities. Table 1 presents the results of the analysis.

As expected, there was a main effect of test stimulus F0, such that the test stimulus with the high F0 was more likely to be labeled as pier (z = 9.94, p < .001). Crucially, as in prior studies of dimension-based statistical learning, there was a significant interaction of test stimulus F0 and condition (z = 16.09, p < .001). Passive exposure to short-term speech input regularities impacted the effectiveness of F0 in signaling beer–pier category identity. Neither the main effect of sex, nor its interaction with condition was significant.Footnote 1 There was, however, a significant three-way interaction between sex, condition, and test stimulus F0 (z = 6.39, p < .001). To better understand the nature of this interaction, we conducted separate tests on male and female participants. The results showed significant Condition × Test stimulus F0 interactions for both male and female participants, with a larger coefficient for female participants (ꞵ = 1.22, SE = .09, z = 14.19, p < .001; ꞵ = 1.49, SE = .08, z = 17.54, p < .001, for males and females respectively).

In summary, listeners relied on F0 to guide decisions about speech category identity when local speech input regularities conformed to English norms. When regularities shifted to create an “accent,” F0 was much less effective in signaling the speech categories. This replicates Hodson et al. (2013; Nozari & Dell, 2013; Nozari et al., 2010).

These findings align with positive reports of phonetic convergence on F0 in shadowing tasks (Garnier et al., 2013; Mantell & Pfordresher, 2013; Postma-Nilsenová & Postma, 2013; Sato et al., 2013; Wisniewski et al., 2013). At the same time, they also illustrate how our statistical learning approach can provide a solution to the challenges of capturing and characterizing phonetic convergence. One advantage is dimension selection. A priori predictions about the dimensions expected to exhibit phonetic convergence have proven challenging in the phonetic convergence literature, as beautifully demonstrated by an exhaustive search across more than 300 acoustic-phonetic features (Ostrand & Chodroff, 2021). Our statistical learning approach provides a priori predictions of the dimension impacted by convergence (Wu & Holt, 2022), eliminating the need to selectively—or exhaustively—sample dimensions across which to examine the nature of transfer.

A second advantage is the ability to make directional predictions. Dimension-based statistical learning elicits predictable, directional effects on perception. When short-term speech input provides robust information (here, VOT) to indicate category identity, secondary dimensions that depart from long-term norms of these categories (as, here, for F0 in reverse condition) are down-weighted in their influence on perceptual categorization (Wu & Holt, 2022). This has proven to be the case across consonants (Idemaru & Holt, 2011), vowels (Liu & Holt, 2015), and also prosodic emphasis (Jasmin et al., 2023) categories. This is important in that it emphasizes that the transfer to production is not simply convergence in the sense of imitation. Rather, directional sublexical adjustments in the perceptual system are carried over to the production system. As a result, we would not expect all changes to the acoustics of speech to transfer to production (see, e.g., our VOT analysis). This, in turn, may help to explain why phonetic convergence studies often yield inconsistent reports.

A third advantage is the ability to set aside sociolinguistic factors. Our manipulation of acoustic F0 was barely perceptible, and devoid of socially discriminating information because the voice was constant across conditions. With this approach, we observed transfer in both male and female participants. The consistency of our findings across sex may have been supported by our approach, which allowed us to eliminate sociolinguistic factors that may contribute to the variability of findings reported in the phonetic convergence literature (Pardo et al., 2017). A sizeable literature now exists detailing social and contextual factors eliciting convergence, such as talker attractiveness (Babel, 2012), conversational topic (Walker, 2014), and even cultural primes (Hurring et al., 2022; Walker et al., 2019). Further understanding of how these factors influence convergence will benefit from an understanding of the cognitive mechanisms of transfer (Pardo et al., 2022). Here, we put forward one such an account, in the framework of statistical learning wherein several computational approaches to the perceptual effects have been proffered (Harmon et al., 2019; Kleinschmidt & Jaeger, 2015; Liu & Holt, 2015; Wu, 2020).

At the broadest level, the results demonstrate that subtle statistical regularities experienced in passive listening to another talker’s speech can transfer to influence one’s own speech production. Statistical learning involving short-term regularities in perceived speech impacts sublexical aspects of speech production in a predictable manner, even when the speech targets that elicit production are held constant to prevent mimicry. In sum, by yielding specific a priori predictions of the sublexical aspects of speech expected to be impacted by transfer of statistical learning, dimension-based statistical learning across passive exposure to speech provides a valuable new framework for understanding perception-production transfer.