Abstract

Answering questions related to the legal domain is a complex task, primarily due to the intricate nature and diverse range of legal document systems. Providing an accurate answer to a legal query typically requires specialized knowledge of the relevant domain, which makes the task challenging even for human experts. Question answering (QA) systems are designed to generate answers to questions asked in natural language. QA uses natural language processing to understand questions and search through information to find relevant answers. At present, there is a lack of surveys that discuss legal question answering. To address this gap, we provide a comprehensive survey that reviews 14 benchmark datasets for question answering in the legal field and presents a comprehensive review of state-of-the-art deep learning models for Legal Question Answering. We cover the different architectures and techniques used in these studies and discuss the performance and limitations of these models. Moreover, we have established a public GitHub repository that contains a collection of resources, including the most recent articles related to Legal Question Answering, open datasets used in the surveyed studies, and the source code for implementing the reviewed deep learning models (the repository is available at: https://github.com/abdoelsayed2016/Legal-Question-Answering-Review). The key findings of our survey highlight the effectiveness of deep learning models in addressing the challenges of legal question answering and provide insights into their performance and limitations in the legal domain.


Introduction

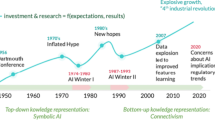

QA [5, 15] is an artificial intelligence (AI) task intended to answer queries posed in natural language, as humans do. Natural language processing (NLP) methods are generally used in QA systems to grasp the meaning of the question, after which techniques such as machine learning and information retrieval are applied to locate the most suitable answers in a large pool of data. Deep learning-based QA is a trending field of AI [89] that employs deep learning techniques to build QA systems. Deep learning is a form of machine learning in which neural networks with multiple layers are used to learn complex patterns in data. In QA, deep learning methods can enhance a system's ability to understand the meaning of a question and locate the most appropriate answer in a large pool of data.

Deep learning has become popular in recent years and has been used to build state-of-the-art QA systems that provide highly accurate answers to a wide range of questions. Examples of deep learning models used for question answering include Generative Pretrained Transformer 3 (GPT-3) [11] and Google's BERT [18, 40, 69, 88]. Deep learning has many significant advantages for question-answering tasks. One of the main benefits of deep learning is that it allows QA systems to handle complex and unstructured data [41, 52], such as natural language text, more effectively than other machine learning techniques. This is because deep learning models can learn to extract and interpret the underlying meaning of a question and its context rather than relying only on pre-defined rules, statistical patterns, or hand-crafted features. Another key benefit of deep learning for QA is that it allows for end-to-end learning, in which the entire system, from input to output, is trained jointly. This can improve the QA system's overall performance and make models easier to train and maintain. Finally, deep learning [54, 55, 67] also enables large-scale unsupervised learning, in which the model learns from vast amounts of unlabeled data. This can be particularly useful for QA systems, as it allows them to learn from various sources and improve their performance over time. In summary, deep learning has made QA systems more accurate and effective and has opened up new possibilities for using AI to answer a wide range of questions. When using deep learning to answer questions, it is important to use neural network architectures specifically designed for QA tasks.

These architectures typically consist of multiple layers of interconnected nodes, which are trained to process the input data and generate a response. Information Retrieval (IR) [91, 93] approaches can be used to find the most relevant documents or passages in a text corpus that contain the information required to answer a given question. Typically, the procedure involves analyzing the question to identify relevant keywords and then searching the collection for relevant documents or passages using those keywords.

For example, one common architecture for QA is the encoder-decoder model [14, 26], in which the input question is first passed through an “encoder” network that converts it into a compact representation. This representation is then passed through a “decoder” network that generates the answer. The encoder and decoder networks can be trained jointly on large amounts of labeled data, in which the correct answers are provided for a given set of questions. Another popular architecture for QA is the transformer model, which uses self-attention mechanisms [2, 32, 64] to let the model weigh different parts of the input according to their relevance. This enables the model to better capture the meaning and context of the question and generate more accurate answers. Overall, these specialized neural network architectures have been key to the success of deep learning for question answering and have enabled the development of highly effective QA systems. While deep learning has made significant progress in question answering, many challenges [22, 72, 82] still need to be addressed to make QA systems even more effective and useful.

To provide a comprehensive overview of typical QA steps, we present Fig. 1, which illustrates the QA Research Framework. This figure combines various QA methods, datasets, and models, highlighting the interplay between these components and their significance in the field.

Overview of legal QA

Legal question answering (LQA) [23, 61] is the process of providing answers to legal questions. This is usually done by a lawyer or another legal professional with expertise in the relevant area of law. Legal question answering may involve various activities, including researching the existing law, interpreting legal statutes and regulations, and applying legal principles and precedents to specific factual situations. LQA aims to provide accurate and reliable information and advice on legal matters to help individuals and businesses navigate the legal system and resolve legal issues. Legal question answering using deep learning [19, 46, 47] is a natural language processing (NLP) task that uses machine learning algorithms to provide answers to legal questions. This approach uses deep learning, a subset of machine learning that involves training neural network models on large amounts of data to learn complex patterns and relationships.

In the context of legal question answering, deep learning algorithms can be trained on a large dataset of legal questions and answers to learn how to generate answers to new legal questions automatically. The algorithms can analyze the input question, identify the relevant legal concepts and issues, and generate an appropriate response based on the learned patterns and relationships in the data.

The legal profession is intricate and dynamic, which makes it an ideal candidate for QA, yet one that also poses many challenges. By automating the process of searching through massive volumes of data, QA technologies can help professionals such as lawyers find the required information more quickly. One of the most important applications of question answering systems in law is legal research, where LQA technologies can be used to retrieve pertinent case law and statutes quickly and to discover potential precedents and points of conflict. Moreover, QA systems can aid with contract review, legal writing, and other legal tasks.

Figure 2 depicts the number of articles published each year that investigate deep learning strategies for different LQA challenges. We obtained these counts from Scopus, a comprehensive bibliographic database, by searching with specific keywords, including legal, question answering, and deep learning. The number of publications has been growing steadily in recent years. From 2014 to 2016, only around 17 relevant publications were published per year. Since 2017, the number of papers has increased significantly as many researchers have tried diverse deep learning models for QA in many application fields. Around 19 relevant articles were published in 2019, which is a significant quantity. Given the diversity of applications and the depth of the challenges, an overview of present works that investigate deep learning approaches in the fast-expanding area of QA is urgently needed. Such an overview can show the commonalities, contrasts, and broad frameworks of using deep learning models to solve QA problems, which allows approaches and ideas to be exchanged across research challenges in many application sectors.

Our contributions

In this paper, we provide a comprehensive review of recent research on legal question-answering systems. We have written the survey in an accessible way, as it is meant for both computer science scholars and legal researchers and practitioners. Our review highlights the key contributions of these studies, which include the development of new taxonomies for legal QA systems, the use of advanced NLP techniques such as deep learning and semantic analysis, and the incorporation of rich resources such as legal dictionaries and knowledge bases. Additionally, we discuss the various challenges that legal QA systems still face and potential directions for future research in this field. Other contributions that we discuss include the use of FrameNet, ensemble models, reinforcement learning, multiple-choice question-answering systems, legal information retrieval, work in languages such as Japanese, Korean, Vietnamese, and Arabic, and techniques such as dependency parsing, lemmatization, and word embedding. Our key contributions include the following:

1. We provide a taxonomy of legal question-answering systems that categorizes them by the type of question and answer, the type of knowledge source they use, and the technique they employ. By classifying systems according to domain, question type, and approach, the taxonomy gives a clear and organized overview of the field and a better understanding of the various approaches used in legal question answering.

2. We provide a comprehensive review of recent developments in legal question-answering systems, highlighting their key contributions and similarities. We discuss a wide range of studies, from early work that focuses on answering yes/no questions from legal bar examinations to recent studies that employ deep learning techniques for more challenging questions. Our review provides an in-depth understanding of the state of the art in legal question answering and highlights the key advancements in the field.

3. We list available datasets for readers to refer to, including notable studies and their key contributions. The extensive list of studies discussed in this paper provides a starting point for further research, and the taxonomy introduced in this paper can serve as a guide for the design of new legal question-answering systems.

The remainder of the paper is structured as follows. In the subsequent subsections, we discuss QA challenges, compare generic and legal QA systems, and examine the ethical and legal aspects of legal Q&A. Section "Related surveys" reviews related surveys in the field of question answering. Section "QA methods" summarizes and explores classical and modern machine learning approaches to question answering. Section "Datasets" outlines the datasets and the availability of source code in the reviewed studies and offers an overview of resources available for replicating and comparing LQA work. In Sect. "Legal QA models", we assess the performance of LQA models, emphasizing their strengths and limitations. Lastly, in Sect. "Discussion", we draw conclusions and suggest future research directions in the field of legal question answering.

QA challenges

One of the main challenges in generic QA is the inherent complexity of natural language. Human language is highly nuanced and context-dependent, and it is often ambiguous, with words and phrases carrying multiple meanings. This can make it difficult for many QA systems to understand a question's meaning accurately and generate the correct answer.

Another challenge is the lack of high-quality, labeled training data [63]. QA systems require large amounts of labeled data to learn from, and labeling typically means manually providing the correct answer to each question, which is labor-intensive and time-consuming. This can limit the performance of QA systems, especially when they are trained on small or noisy datasets. In addition, high-quality training data should be diverse and representative of the question types that the QA system will be expected to answer, and such data is frequently difficult to produce or find. There are also issues with the representation of the data. For example, a QA system that is trained only on a specific domain, such as law or medicine, may not perform well when asked questions from other domains or from the general domain.

There is also the challenge of ensuring that QA systems are trustworthy and provide reliable answers. As AI systems become more widely used, it is important to ensure that they are transparent and accountable and that they do not perpetuate biases or misinformation [13, 65]. Here, too, training data plays a central role: if the data are unrepresentative or of poor quality, the performance of the system may suffer. Reliable and trustworthy QA systems are typically built on strong models that generalize well, meaning that they can respond accurately to questions they have never seen before.

Finally, there are also some general challenges to using QA systems in domain-specific fields such as law and medicine. One major challenge is the complexity and ever-changing nature of the information in these fields, which can make it difficult for QA systems to stay up to date. Additionally, there may be ethical and legal considerations to take into account when using QA systems in these fields, such as data privacy and confidentiality.

Generic vs. legal question answering systems

The key differences between generic and legal QA systems can be summarized as follows:

- Quality and reliability: the output of legal QA systems must be of high quality and reliability, as it can directly affect the outcome of a case.
- Domain expertise: generic QA systems have a broad understanding of various topics, while legal QA systems have a specialized understanding of the legal field.
- Data: legal QA systems require specialized training and testing data that is not found in the training sets of generic QA systems.
- Updating a legal QA system: laws and regulations can be complex and change frequently, so new laws and regulations may need to be added to the training data or the underlying dataset used to develop legal QA models.
- Data privacy and security: legal QA systems deal with sensitive information and need to be designed with strong security measures to protect client privacy and comply with regulations.

For a more detailed comparison between legal Q&A and general Q&A, we refer readers to Table 1, which provides an overview of key differences and similarities in various aspects of legal and general Q&A.

Ethical and legal aspects of legal Q&A

When conducting a thorough examination of the ethical and legal implications of legal Q&A, it is essential to consider the potential consequences of providing inaccurate or unreliable responses. Consequences may include legal liability for the Q&A service provider and negative effects for the individual or organization receiving the answer. To ensure the dependability and accuracy of legal Q&A, it is essential to consider the sources and methods used to provide answers and the role of legal professionals in the process.

Additionally, access to legal information must be considered. Legal Q&A systems have the potential to democratize access to legal information, making it more accessible to those who might not have had access previously. Nevertheless, without proper oversight and regulation, there is a risk that these systems will perpetuate existing prejudices and discrimination. Moreover, using AI and other automated systems to answer legal questions raises ethical concerns, and it is essential to address them when designing and implementing these systems.

The issue of data privacy and security should also be considered when evaluating the ethical and legal implications of legal Q&A. As legal Q&A systems may handle sensitive and confidential information, it is essential that they are designed and operated to ensure the privacy and security of that information.

Finally, a comprehensive examination of the ethical and legal implications of legal Q&A must consider the potential consequences of providing unreliable answers, the issue of access to legal information, the use of artificial intelligence and automated systems, and the protection of data privacy and security. In addition, it requires a thorough comprehension of the legal and regulatory framework within which legal Q&A systems operate.

Related surveys

Many research papers have been published on the topic of QA, and surveying the state of the art in this field can be challenging. In this section, we will introduce some useful survey papers.

Baral [9] provides an overview of the main approaches to QA, including rule-based, information retrieval, and knowledge-based methods. Guda et al. [28] focus on the different types of QA systems, including open-domain, closed-domain, and hybrid systems. A survey by Gupta and Gupta [30] discusses the various techniques used in QA systems, including syntactic and semantic analysis, information extraction, and machine learning. Pouyanfar et al. [68] provide a comprehensive overview of the latest developments in QA research, including new challenges and opportunities in the field. Kolomiyets and Moens [51] provide an overview of question-answering technology from an information retrieval perspective. Their survey focuses on the importance of retrieval models, which are used to represent queries and information documents, and retrieval functions, which are used to estimate the relevance between a query and an answer candidate. It suggests a general question-answering architecture that gradually increases the complexity of the representation of questions and information objects, ranging from simple bag-of-words representations to more complex representations that integrate part-of-speech tags, answer type classification, semantic roles, discourse analysis, translation into a SQL-like language, and logical representations. The survey highlights the importance of reducing natural language questions to keyword-based searches, as well as the use of knowledge bases and reasoning to obtain answers to structured or logical queries derived from natural language questions.

To the best of our knowledge, only one survey on LQA exists: Martinez-Gil [58] describes the research done in recent years on LQA and discusses the advantages and disadvantages of different research activities in the area. Our survey likewise offers a comprehensive overview of existing solutions for legal question answering, but it differs from Martinez-Gil [58] in several ways. First, while Martinez-Gil's [58] study may have focused on a specific aspect or type of legal question answering, our survey aims to provide a comprehensive overview of the field as a whole, encompassing various approaches and domains. Second, our survey employs both quantitative and qualitative analysis to offer a more holistic understanding of the state of the art in legal question answering. Finally, our survey can incorporate more recent literature and developments in the field, as its literature cutoff date is later than that of Martinez-Gil's [58] study.

Finally, a broader perspective on AI approaches to legal studies is provided in the recent tutorial presented by Ganguly et al. [24] at the ECIR 2023 conference. Interested readers are encouraged to refer to this resource for a comprehensive overview of NLP and IR techniques (not necessarily QA) applied to legal documents.

QA methods

We now describe popular methods used in generic, non-domain-specific QA systems to provide the background necessary for understanding the legal QA models discussed in Sect. "Legal QA models".

Question answering (QA) has become an essential tool for extracting information from large amounts of data. Classic machine learning approaches for QA include rule-based systems and information retrieval methods which rely on predefined rules and patterns to match questions with answers. However, these methods lack the ability to understand natural language and adapt to new patterns and changes in the data. On the other hand, modern machine learning approaches such as deep learning and transformer-based models like BERT [18, 69], GPT-2 [70], and GPT-3 [12] leverage advanced algorithms and large amounts of data to train models that can understand natural language and generate accurate responses. These models have been shown to be more effective and robust in handling different language patterns. In this section, we will discuss these approaches in more detail.

Classic machine learning for QA

Rule-based methods: Rule-based methods [31, 77] are a type of classic machine learning approach for QA. They are based on a set of predefined rules and patterns that are used to match questions with answers. These rules are typically created by domain experts or through manual dataset annotation. They are best suited for tasks where the questions and answers can be easily defined using a set of rules, such as in a FAQ [90] system or a medical diagnostic system [75]. However, one of the main limitations of rule-based systems is their lack of ability to understand natural language. They are based on matching keywords or patterns, and they cannot understand the text’s meaning. Additionally, these systems can be brittle to changes in the data, as they cannot adapt to new patterns or variations in the language.
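To make the matching idea concrete, the following is a minimal Python sketch of a rule-based, FAQ-style responder. The rules, patterns, and canned answers are hypothetical placeholders invented for illustration; real systems use rule sets written by domain experts.

```python
import re

# Hypothetical hand-written rules: each pairs a regex pattern with a canned answer.
# Patterns and answers are illustrative placeholders, not from any real system.
RULES = [
    (re.compile(r"\bstatute of limitations\b", re.IGNORECASE),
     "<canned answer about limitation periods>"),
    (re.compile(r"\b(file|submit)\b.*\bappeal\b", re.IGNORECASE),
     "<canned answer about filing an appeal>"),
]

FALLBACK = "Sorry, no rule matches this question."


def answer(question: str) -> str:
    """Return the canned answer of the first rule whose pattern matches."""
    for pattern, canned_answer in RULES:
        if pattern.search(question):
            return canned_answer
    # No pattern matched: rule-based systems cannot generalize to paraphrases.
    return FALLBACK
```

For example, "How do I file an appeal?" triggers the second rule, whereas the paraphrase "What is the deadline to lodge an appeal?" matches no pattern and falls through to the fallback, which illustrates the brittleness described above.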

Information retrieval (IR) based methods: Information Retrieval (IR) based methods [87] are another classic machine learning approach for QA. These methods rely on pre-processing and indexing the data to make it searchable. They then represent the question and the candidate texts, for example as TF-IDF [92] weighted term vectors, and use a similarity measure such as cosine similarity [1] to match the question with the most relevant answer. These methods are best suited for tasks where the questions and answers are already available in a large corpus of text, such as through a search engine [39] or a document retrieval system [16]. However, they are not able to “understand” the meaning of the text, and they can return irrelevant results. These methods are essentially based on matching keywords or patterns, and they are not able to understand the context or the intent of the question. Additionally, these methods require a large amount of labeled data to work effectively.
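The sketch below illustrates a classic IR pipeline in plain Python: passages are tokenized and weighted with TF-IDF, and candidates are ranked by cosine similarity to the question. The smoothed IDF variant, the function names, and the toy passages are assumptions chosen for illustration; production systems use an inverted index rather than scoring every passage.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Lowercase and keep alphanumeric tokens. No stemming, so "terminate"
    # and "terminating" remain different terms.
    return re.findall(r"[a-z0-9]+", text.lower())


def tfidf_vectors(docs):
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    # Smoothed IDF (an assumed variant): rare terms get higher weights.
    idf = {t: math.log((1 + n) / (1 + d)) + 1 for t, d in df.items()}
    vecs = [{t: c * idf[t] for t, c in Counter(doc).items()} for doc in docs]
    return vecs, idf


def cosine(a, b):
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(question, passages, k=1):
    """Return indices of the k passages most similar to the question."""
    vecs, idf = tfidf_vectors([tokenize(p) for p in passages])
    # Question terms that never occur in the corpus carry no weight.
    q_vec = {t: c * idf[t] for t, c in Counter(tokenize(question)).items() if t in idf}
    ranked = sorted(range(len(passages)),
                    key=lambda i: cosine(q_vec, vecs[i]), reverse=True)
    return ranked[:k]
```

With the passages "A contract requires offer, acceptance and consideration.", "A tenant must give written notice before terminating a lease.", and "Copyright protects original works of authorship.", the question "How does a tenant terminate a lease?" returns `[1]` because it shares the terms "tenant" and "lease" with the second passage.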

Modern machine learning for QA

Deep Learning: Deep learning (DL) is a modern machine learning approach for QA that relies on neural networks to understand natural language. These networks are trained on large amounts of data and are able to understand the meaning of the text. Popular architectures include Recurrent Neural Networks (RNN) Rumelhart et al. [73], Long Short-Term Memory (LSTM) Hochreiter and Schmidhuber [34], and Convolutional Neural Networks (CNN) Krizhevsky et al. [53]. These models can be fine-tuned for specific tasks such as QA.

These models are able to generate accurate responses and adapt to new patterns and changes in the data. They are able to understand the context and the intent of the question, and they can provide relevant and natural-sounding responses. Additionally, these models can be trained on a wide range of tasks such as question answering [3, 76], language translation [29], and text summarization [62, 74, 78].

Transformer-based models: Transformer-based models such as BERT [18] and GPT-2 [70] belong to a type of deep learning approach that has been shown to be very effective in a wide range of natural language processing tasks. These models are based on the transformer architecture, which allows them to learn the context of the text and understand the meaning of the words. The key feature of these models is the use of self-attention mechanisms, which enables them to effectively weigh the importance of different parts of the input when making predictions. This allows for understanding the context of a given question and providing a relevant answer.
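The core computation behind self-attention is scaled dot-product attention, \(\mathrm{softmax}(QK^\top/\sqrt{d_k})V\). The following plain-Python sketch computes it for small lists of vectors. As a simplification, the usage feeds the same token vectors in as queries, keys, and values; real transformers first apply learned linear projections and use multiple attention heads.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Q, K, V are lists of row vectors, one row per token."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)  # attention weights sum to 1
        # Output row is the attention-weighted average of the value rows.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


if __name__ == "__main__":
    X = [[1.0, 0.0], [0.0, 1.0]]  # toy token vectors
    print(attention(X, X, X))     # each token attends mostly to itself
```

When all keys are identical, the weights are uniform and each output row is simply the mean of the value rows; differences between keys are what let the model focus on the most relevant tokens.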

BERT is a transformer-based model that was pre-trained on a massive amount of unlabeled text, allowing it to learn the general features of the language. Its pre-training corpus consisted of BooksCorpus [98] (800 M words) and English Wikipedia (2,500 M words). BERT is often fine-tuned on a task-specific dataset to perform various natural language understanding tasks such as question answering, sentiment analysis, and named entity recognition. Its first pre-training task is masked language modeling, in which certain words in the input are randomly masked and the model is trained to predict the original words from their context. The second pre-training task is Next Sentence Prediction, which is similar to the textual entailment task. BERT is applicable to sentence prediction tasks [81], including text completion and generation [94], since it has been trained to predict a missing word or sequence of words from the surrounding context. With its bidirectional design, BERT can draw on contextual information both to the left and to the right of the target word, which makes it an appropriate choice for sentence prediction tasks where context plays a crucial role in generating accurate results.
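The masking step of masked language modeling can be sketched as follows. The 15% selection rate and the 80/10/10 split (replace with `[MASK]`, replace with a random token, keep unchanged) follow the original BERT paper; selecting each position independently with probability 0.15 is a simplification common in reimplementations, and the function name `mask_tokens` is hypothetical.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """BERT-style masked-LM corruption (hypothetical helper).

    Returns (corrupted_tokens, labels): labels[i] holds the original token
    at positions selected for prediction and None elsewhere."""
    if rng is None:
        rng = random.Random()
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # not selected: no prediction loss at this position
        labels[i] = tok  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif r < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, labels
```

Keeping some selected tokens unchanged or randomly replaced means the model cannot rely on the `[MASK]` symbol alone to know which positions to predict, which reduces the mismatch with fine-tuning, where `[MASK]` never appears.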

GPT-2 is another pre-trained transformer model that can be fine-tuned on a task-specific dataset to perform a wide range of natural language understanding and generation tasks, including question answering, text completion, and machine translation. GPT-2 was trained on a massive amount of unlabeled text data, allowing it to generate text similar in style and content to human-written text.

DL models are able to generate more accurate and natural responses than classic approaches, and they can be used in a wide range of applications such as question answering [85], language translation [97], and text summarization [56]. They are able to understand the context and the intent of the question and can provide relevant, natural-sounding responses.

Table 2 compares classic machine learning and modern transformer-based models in several aspects. In terms of data requirements, classic machine learning models require large labeled datasets for training, whereas modern transformer-based models can work with a smaller amount of labeled data. For feature engineering, classic machine learning models require manual feature engineering, whereas modern transformer-based models can automatically learn features from the data. Classic approaches tend to use simple models, such as logistic regression or support vector machines, whereas modern approaches use complex models such as BERT and GPT-2. Classic machine learning models tend to train faster than modern transformer-based models, but at the cost of lower accuracy. Modern transformer-based models, on the other hand, have a strong ability to handle contextual information and unstructured data and generalize better than classic machine learning models.

QA Evaluation Metrics

There are several evaluation metrics commonly used to assess the performance of QA systems. In this section, we discuss some of these metrics and provide the relevant equations.

Accuracy

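Accuracy [27] is a simple metric that measures the percentage of correctly answered questions. It is calculated as follows:

\[ \text{Accuracy} = \frac{\text{Number of correctly answered questions}}{\text{Total number of questions}} \]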

Precision and recall

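Precision and recall [27] are two metrics often used in information retrieval tasks and can be applied to QA systems as well. Precision measures the percentage of correct answers among the answers that were provided, while recall measures the percentage of correct answers among all possible correct answers. These metrics can help evaluate how well the system is able to provide accurate answers and identify relevant information. Precision and recall are calculated as follows:

\[ \text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN} \]

where \(TP\) is the number of correct answers returned by the system, \(FP\) is the number of incorrect answers returned, and \(FN\) is the number of correct answers the system failed to return.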

F1 score

The F1 score [27] is a measure of the system's accuracy that takes both precision and recall into account. It is calculated as the harmonic mean of precision and recall and provides a balanced evaluation of the system's performance. The F1 score is calculated as follows:

\[ F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \]
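The following sketch computes accuracy and set-based precision, recall, and F1 as described above. Treating a system's output as a set of answers compared against a set of gold answers is an assumption made for illustration; extractive QA benchmarks often compute F1 over answer tokens instead.

```python
def accuracy(predictions, references):
    """Fraction of questions whose predicted answer equals the reference."""
    if not references:
        return 0.0
    correct = sum(p == r for p, r in zip(predictions, references))
    return correct / len(references)


def precision_recall_f1(predicted, gold):
    """Set-based precision, recall, and F1 (harmonic mean of P and R).

    predicted: answers returned by the system; gold: all correct answers."""
    predicted, gold = set(predicted), set(gold)
    tp = len(predicted & gold)  # correct answers that were returned
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1
```

For instance, returning {A, B, C} when the correct answers are {A, B, D, E} gives precision 2/3, recall 1/2, and F1 = 4/7 (about 0.571); because F1 is a harmonic mean, it stays low unless both precision and recall are reasonably high.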

Mean reciprocal rank (MRR)

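The MRR [86] is a metric that evaluates the ranking of correct answers. It is the average, over all questions, of the reciprocal of the rank at which the first correct answer appears, so a correct answer that appears further down the ranked list contributes a lower score. The MRR is calculated as follows:

\[ \text{MRR} = \frac{1}{|Q|} \sum_{i=1}^{|Q|} \frac{1}{\text{rank}_i} \]

where \(|Q|\) is the number of questions and \(\text{rank}_i\) is the position of the first correct answer for the \(i\)-th question (the reciprocal is taken as 0 if no correct answer is returned).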
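A minimal sketch of MRR over a batch of questions, assuming each question comes with a ranked list of candidate answers and a set of correct answers; a question with no correct candidate contributes 0.

```python
def mean_reciprocal_rank(ranked_lists, gold_answers):
    """MRR: average of 1/rank of the first correct answer per question.

    ranked_lists[i] is the ranked candidate list for question i, and
    gold_answers[i] is the set of correct answers for that question."""
    if not ranked_lists:
        return 0.0
    total = 0.0
    for candidates, gold in zip(ranked_lists, gold_answers):
        for rank, cand in enumerate(candidates, start=1):
            if cand in gold:
                total += 1.0 / rank  # only the first correct answer counts
                break
    return total / len(ranked_lists)
```

For three questions whose first correct answers appear at ranks 1 and 3 and not at all, MRR = (1 + 1/3 + 0) / 3 = 4/9, or about 0.444.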

BLEU score

The BLEU score [66] is commonly used in natural language processing tasks, including QA. It measures the similarity between the system's output and the human-generated reference answers based on n-gram matches. It is particularly useful for evaluating the system's ability to generate natural and accurate language. For n-gram orders up to \(N\) (typically \(N = 4\)), the BLEU score is calculated as follows:

\[ \text{BLEU} = BP \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right) \]

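where BP is the brevity penalty, which penalizes system outputs that are shorter than the reference answers, and \(p_n\) is the modified n-gram precision, i.e., the proportion of n-grams in the system output that also appear in the reference answers, with each n-gram's count clipped to its maximum count in any single reference. The brevity penalty is \(BP = 1\) if \(c > r\) and \(BP = e^{1 - r/c}\) otherwise, where \(c\) is the length of the system output and \(r\) is the effective reference length. The weights \(w_n\) determine how much each n-gram order contributes; standard BLEU uses uniform weights \(w_n = 1/N\), where \(N\) is the maximum n-gram order (typically 4).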
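For illustration, the sketch below computes unsmoothed sentence-level BLEU with clipped n-gram precisions, uniform weights, and the brevity penalty. It is a simplified teaching version under these assumptions; reported BLEU scores are normally corpus-level and computed with standard tools that also handle tokenization and smoothing.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, references, max_n=4):
    """Unsmoothed sentence-level BLEU with uniform weights w_n = 1/max_n."""
    log_p_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngrams(candidate, n)
        total = sum(cand_counts.values())
        if total == 0:
            return 0.0  # candidate shorter than n tokens
        # Clip each n-gram count by its maximum count in any single reference.
        max_ref = Counter()
        for ref in references:
            for g, c in ngrams(ref, n).items():
                max_ref[g] = max(max_ref[g], c)
        clipped = sum(min(c, max_ref[g]) for g, c in cand_counts.items())
        if clipped == 0:
            return 0.0  # log(0) is undefined, so unsmoothed BLEU is 0
        log_p_sum += (1.0 / max_n) * math.log(clipped / total)
    c = len(candidate)
    # Effective reference length: closest reference length (ties -> shorter).
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(log_p_sum)
```

An output identical to a six-token reference scores 1.0, while dropping its last word keeps every n-gram precision at 1 but incurs a brevity penalty of \(e^{1 - 6/5} = e^{-0.2} \approx 0.819\).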

Exact match (EM)

EM measures [

Discussion

In this section, we will discuss and summarize the latest trends in legal QA processing and propose some possible extensions while also discussing freely available datasets, evaluation metrics, evaluation tools, and language resources and toolkits. We will begin by presenting various legal QA approaches and then delve deeper into the current state of the field.

To gain a better understanding of current trends in Legal QA methods, we first present Fig. 2, which shows the number of publications per year. The figure reveals a steady rise in the total number of approaches since 2014. Several collaborative methods have been developed to leverage the public's efforts in improving the accuracy of legal QA systems. The most recent one was published in 2022: Alotaibi et al. [6] propose a knowledge-augmented BERT2BERT automated question-answering system for jurisprudential legal opinions. It is a QA system based on a retrieval-augmented generative transformer model for jurisprudential legal questions, designed to address the jurisprudential legal rules that govern how Muslims react and interact.

The COLIEE competitions held in 2019 and 2022, together with the upcoming one in 2023, have been instrumental in advancing legal question answering (QA). They provide a standardized platform on which submitted approaches are evaluated on the same dataset, with the same metrics, and even with the same published evaluation tool. The competitions have been running since 2007 and have evolved over time to include a range of subtasks related to legal information extraction and entailment. By participating in them, researchers and practitioners have been able to test and refine their techniques in a standardized environment, paving the way for more effective and accurate legal problem-solving. To give a clearer view of how methods perform on each dataset, Table 4 summarizes the methods according to whether they were developed or tested on public or private datasets. The table gives a clear overview of the features each model considers and helps readers understand the methodology and approach taken by the authors.

After thoroughly analyzing several research papers in the field of Legal QA, we have identified common themes and approaches that can serve as guidelines and potential directions for future research. We present them in the following two subsections.

Guidelines for legal QA

Based on our analysis of the literature, we recommend that future Legal QA research focus on the following guidelines:

-

Use of legal-specific knowledge bases: utilizing legal-specific knowledge can help to improve the accuracy and efficiency of Legal QA systems.

-

Incorporation of domain-specific features: incorporating domain-specific features such as legal concepts, entities, and relations can improve the performance of Legal QA systems.

-

Development of multi-stage models: developing multi-stage models that incorporate both retrieval and extraction stages can help to improve the accuracy and efficiency of Legal QA systems.

-

Data augmentation techniques can be used to artificially expand the size of a given dataset, which can help to improve the performance of machine learning models. In the case of Legal QA, question answer data augmentation involves generating additional training examples by modifying the phrasing or wording of existing questions and answers in the dataset. This approach can help to increase the diversity of the training data and improve the model’s ability to handle variations in the wording of questions and answers.
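
The simplest form of the question rephrasing described in the last guideline can be sketched as dictionary-based word substitution. This is a hypothetical illustration rather than a method from the surveyed papers. The synonym table and function name are invented for the example, and a real system would more likely use a curated legal thesaurus or a paraphrase model. Legal terms are often not interchangeable (for example, "lessee" and "tenant" may carry different meanings in some jurisdictions), so generated variants would need review by a legal expert.

```python
import itertools

# Hypothetical, hand-written synonym table used only for this illustration.
LEGAL_SYNONYMS = {
    "lessee": ["tenant"],
    "lessor": ["landlord"],
    "terminate": ["end", "cancel"],
}


def augment_question(question: str, synonyms: dict[str, list[str]], max_variants: int = 5) -> list[str]:
    """Generate reworded variants of a question by swapping in listed synonyms."""
    tokens = question.split()
    # For each token, the options are the token itself plus any listed synonyms.
    options = [[tok] + synonyms.get(tok.lower(), []) for tok in tokens]
    variants = []
    for combo in itertools.product(*options):
        candidate = " ".join(combo)
        if candidate != question:  # skip the unchanged original
            variants.append(candidate)
        if len(variants) >= max_variants:
            break
    return variants


# Each variant is paired with the original (hypothetical) answer as a new training example.
question, answer = "Can the lessee terminate the lease?", "hypothetical answer text"
augmented = [(q, answer) for q in augment_question(question, LEGAL_SYNONYMS)]
for q, _ in augmented:
    print(q)
```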

Potential extensions

Along with the guidelines, we suggest some potential directions for developing post-processing approaches.

-

While several datasets are commonly used to evaluate Legal QA approaches, only a few of them are freely accessible. These publicly available datasets are valuable resources that enable researchers to compare the effectiveness of their methods and better understand their strengths and limitations. However, even on the same dataset, differences in how the data are divided into training, development, and test sets can make comparisons between approaches unreliable. This highlights the importance of clear and consistent evaluation protocols in Legal QA research, which help to ensure that results are reproducible and comparable across studies.

-

Integration of Explainable AI techniques: One potential extension is to explore the integration of explainable AI techniques such as attention visualization and explanation generation. This can help to provide transparency and interpretability to Legal QA systems, enabling users to understand the reasoning behind the system's outputs.

-

Development of interactive Legal QA systems: Another potential extension is the development of interactive Legal QA systems that allow users to interact with the system and provide feedback on the accuracy and relevance of the system’s outputs. This can help to improve the user experience and enable the system to learn from user feedback.

-

Investigation of Legal QA for specific legal domains: While Legal QA has been primarily focused on open-domain question answering, there is a need to investigate Legal QA for specific legal domains such as intellectual property, tax law, and criminal law. This can help to develop domain-specific Legal QA systems that are tailored to the unique requirements and challenges of each domain.

-

As the majority of existing approaches in Legal QA are tailored to English language, it is crucial to focus on the development of methods and datasets for Legal QA in other languages.
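
One way to make the train/development/test division from the first extension reproducible is to derive each example's split deterministically from its identifier. Anyone holding the same IDs then recovers exactly the same partition. The sketch below is a hypothetical convention, not one prescribed by the surveyed papers; the simplest alternative is to publish the split files alongside the dataset. The function name, fractions, and IDs are assumptions.

```python
import hashlib


def assign_split(example_id: str, dev_fraction: float = 0.1, test_fraction: float = 0.1) -> str:
    """Deterministically assign an example to 'train', 'dev', or 'test' from its ID.

    The same ID always lands in the same split on any machine, so the
    partition can be reproduced from the list of IDs alone.
    """
    digest = hashlib.sha256(example_id.encode("utf-8")).digest()
    # First 4 bytes as an unsigned integer, scaled to a value in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    if bucket < test_fraction:
        return "test"
    if bucket < test_fraction + dev_fraction:
        return "dev"
    return "train"


# Hypothetical question IDs.
ids = [f"q{i:04d}" for i in range(1000)]
splits = {i: assign_split(i) for i in ids}
print(sum(s == "test" for s in splits.values()), "test questions")
```

Because the assignment depends only on the ID and the stated fractions, adding new questions later does not move existing ones between splits.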

Conclusion and future work

Legal Question Answering (LQA) is a rapidly growing research field that aims to develop models capable of answering legal questions automatically.

This survey presents a comprehensive review of recent research on legal question-answering (QA) systems. We highlight the key contributions of these studies, including the development of new taxonomies for legal QA systems, the use of advanced natural language processing (NLP) techniques such as deep learning and semantic analysis, and the incorporation of additional resources such as legal dictionaries and knowledge bases. We also discuss the challenges that legal QA systems still face and potential directions for future research in this field.

This survey details several datasets, including the Competition on Legal Information Extraction/Entailment (COLIEE), Vietnamese Legal Question Answering (VLQA), Contract Understanding Atticus Dataset (CUAD), Intelligent Legal Advisor on German Legal Documents, AILA, and Chinese Judicial Reading Comprehension (CJRC) datasets. The COLIEE dataset comes from a collaborative evaluation task for legal question-answering systems that aims to establish a standard for evaluating QA systems in the legal domain and to promote research in the field. We also discussed other datasets used in legal question-answering studies. The PrivacyQA dataset contains 1,750 questions about the privacy policies of mobile applications, collected from 35 mobile applications on the Google Play Store. The JEC-QA dataset contains 26,367 multiple-choice questions in the legal domain, collected from the National Judicial Examination of China and other examination websites. The Legal Argument Reasoning Task in Civil Procedure dataset contains 918 multiple-choice questions on legal argument reasoning in civil procedure. The Belgian Statutory Article Retrieval Dataset (BSARD), written entirely in French, includes over 1,100 legal questions annotated with relevant articles from a corpus of over 22,600 Belgian law articles. Finally, the Private International Law (PIL) dataset contains questions on the Rome I Regulation EC 593/2008, the Rome II Regulation EC 864/2007, and the Brussels I bis Regulation EU 1215/2012. It aims to model only the neutral legislative information in the three regulations, with no interpretation other than the literal one.

Finally, the survey explains that specialized neural network architectures are typically used for QA tasks. Examples include encoder-decoder models and transformer models that use self-attention mechanisms to capture the meaning and context of the question and generate more accurate answers. These architectures have been key to the success of deep learning for QA and have enabled highly effective QA systems. The use of NLP techniques to answer legal questions has been the subject of several research studies. Kim et al. [44] proposed a QA method for answering yes/no questions from legal bar examinations. Taniguchi and Kano [83] developed a legal yes/no question-answering system for questions about statutes. Sovrano et al. [79] presented a solution for extracting and making sense of complex information stored in legal documents written in natural language. McElvain et al. [60] developed a system that provides one-sentence answers to basic legal questions, regardless of topic or jurisdiction. Taniguchi et al. [84] described a legal question-answering system using FrameNet for the COLIEE 2018 shared task.

Future work in this field could focus on developing more sophisticated models that can answer complex legal questions and incorporate different kinds of legal information, such as case law and statutes. One promising area of research is the integration of pre-trained language models such as BERT and GPT-3 into systems that answer legal questions. Legal question-answering systems may also benefit from other kinds of knowledge, such as legal ontologies and graph-based representations of legal documents. The use of multimodal data, such as images and videos, in legal question-answering systems is another interesting topic to study. Training and testing these systems also requires larger and more diverse datasets, as well as evaluation metrics that take both precision and recall into account. Lastly, more reliable ways to evaluate these systems, such as human evaluation, are needed to make sure they do not give wrong or useless answers to legal questions.