Abstract

Acute lymphoblastic leukemia (ALL) is the most prevalent cancer in children, and despite considerable progress in treatment outcomes, relapses still pose significant risks of mortality and long-term complications. To address this challenge, we employed a supervised machine learning technique, specifically random survival forests, to predict the risk of relapse and mortality using array-based DNA methylation data from a cohort of 763 pediatric ALL patients treated in Nordic countries. The relapse risk predictor (RRP) was constructed based on 16 CpG sites, demonstrating c-indexes of 0.667 and 0.677 in the training and test sets, respectively. The mortality risk predictor (MRP), comprising 53 CpG sites, exhibited c-indexes of 0.751 and 0.754 in the training and test sets, respectively. To validate the prognostic value of the predictors, we further analyzed two independent cohorts of Canadian (n = 42) and Nordic (n = 384) ALL patients. The external validation confirmed our findings, with the RRP achieving a c-index of 0.667 in the Canadian cohort, and the RRP and MRP achieving c-indexes of 0.529 and 0.621, respectively, in an independent Nordic cohort. The precision of the RRP and MRP models improved when incorporating traditional risk group data, underscoring the potential for synergistic integration of clinical prognostic factors. The MRP model also enabled the definition of a risk group with high rates of relapse and mortality. Our results demonstrate the potential of DNA methylation as a prognostic factor and a tool to refine risk stratification in pediatric ALL. This may lead to personalized treatment strategies based on epigenetic profiling.

Similar content being viewed by others

Introduction

Acute lymphoblastic leukemia (ALL) is the most common childhood malignancy, representing 25% of all cancers. ALL exhibits clinical and biological heterogeneity, driven by recurrent genetic aberrations [1]. Treatment advancements have led to 5-year overall survival (OS) rates exceeding 90% [2]. However, relapsed patients face slower progress, with an mortality rate of approximately 45% in Nordic countries [3]. Additionally, ALL treatments carry risks of adverse outcomes, including increased late incidence of secondary malignancies, as well as long-term neurological, cardiac, endocrine, and social/psychological disorders [4]. In this regard, the long-term organic complications associated with an allogeneic stem cell transplantation (allo-HCT) during childhood are broad [5], and therefore optimizing patient selection is key to minimize unwanted toxicity.

Upfront treatment is primarily based on combination chemotherapy. Prognostic factors have been used to estimate the risk of relapse and to adjust treatment intensity accordingly, which has resulted in reduced toxicity without adversely impacting the rate of curation [6]. As the treatment intensity required for cure varies greatly between patients, a risk-adapted strategy is intended to reduce toxicity for those cases that are likely to achieve curation with low-dose chemotherapeutics, while more intense schemes are reserved for high-risk groups [7,8,9,10,11,12,13,14,15]. Prognostic factors for risk stratification include age, white blood cell (WBC) count, immunophenotype, minimal residual disease (MRD), cytogenetic aberrations, and central nervous system (CNS) involvement [2, 7, 9, 16]. Additional factors, like IKZF1 deletion, may enhance future risk prediction [17, 18]. Intensive chemotherapy and cell therapy (allo-HCT and chimeric antigen receptor (CAR)-T cell) are used for relapsed and refractory disease [19,20,21].

Genomic techniques have the potential to improve risk stratification [22, 23], as traditional risk grou** approaches may not be applicable to all circumstances [24,25,26]. High-dimensional data cluster patients and assess their relationship with drug response and survival [27, 28]. However, the complex molecular determinants of leukemia hinder accurate grou**, resulting in misclassification. An optimization problem simplifies the analysis by incorporating clinical outcomes and baseline prognostic information to derive a risk predictor for predicting outcomes in new patients [29]. This approach has paved the way for the development of prognostic and predictive tools in various onco-hematological fields, including myelofibrosis, myelodysplastic neoplasms, and multiple myeloma [30,31,32].

While genetic changes have led to a better understanding of tumor biology, epigenetics has emerged as a valuable avenue to explain tumor phenotypes. The epigenetic landscape is essential in defining tumor types and subtypes, allowing for high-resolution classification and insight into tumor-specific mechanisms [33]. In B-cell malignancies, epigenetic alterations involve distinct cellular processes and B-cell-specific aspects, enabling accurate detection of B-cell tumor-specific aberrations for improved prognostication [26, 34, 35]. ALL cells are known to exhibit CpG island hypermethylation [36], but minimal global loss of methylation, a fact which was particularly marked in T-cell ALL [37]. While the mechanisms underlying the transformation of progenitor B- and T-cells into leukemic cells are not fully understood, these studies cumulatively demonstrate the potential of DNA methylation as a biomarker for lineage and subtype classification, prognostication, and disease progression [38].

In the present study, we trained two machine learning (ML) models based on DNA methylation signatures obtained at ALL diagnosis aimed to refine risk grou**. Our results suggest that DNA methylation profiling at ALL diagnosis could aid in future refinement of risk assignment and may contribute to improved survival and long-term quality of life for pediatric ALL patients.

Materials & methods

Data origin and preprocessing

Pediatric ALL samples from three cohorts were processed as originally described by Nordlund et al. (2013) [26], Busche et al. [39] and Krali et al. [40] The Nordlund et al. dataset was used to build the risk predictors and evaluate their performance internally. This dataset comprises of pre-treatment DNA methylation status of a filtered set of 435,941 CpG sites downloaded from GEO (GSE49031), which assayed 763 diagnostic ALL samples on Infinium Human Methylation 450K BeadChips (450k array) [26]. The following clinical covariates were available: age, sex, Down syndrome status, risk group and cytogenetic subtype. The risk group was defined according to age at diagnosis, WBC count, B- or T-lineage, and genetic aberrations according to the NOPHO-92 or NOPHO-2000 protocols [6, 16]. Patients were assigned to standard, intermediate or high-risk groups and treated accordingly. Relapse free survival (RFS) was established from the time of ALL diagnosis to the date of the first relapse. OS was defined as the time from ALL diagnosis to the moment of death from any cause.

For external validation, we identified and downloaded the following ALL datasets generated on the 450k array: Busche et al. GSE38235 (n = 42) [39], and Krali et al. (https://doi.org/10.17044/scilifelab.22303531) (n = 384) [40]. RFS and OS were defined as indicated above also for the external validation datasets. The dataset by Busche et al. included complete 450k array and clinical data from 42 Canadian BCP-ALL patients treated between 1999 and 2010 at the Sainte-Justine University Health Center (UHC; Montreal, QC, Canada). All patients underwent treatment with uniform Dana-Farber Cancer Institute ALL Consortium protocols DFCI 95–01, 2000–01 or 2005 [41,42,43]. The cohort by Krali et al. included patients treated with the NOPHO-2000 and NOPHO-2008 protocols [12, 40].

Variable selection and model development

The cohort by Nordlund et al. [26] was randomly divided into a training (80% of the cohort, n = 573) and a test (20% of the cohort, n = 190) sets. Univariate Cox regression (survival package) [41] was used to evaluate the association of CpG sites with RFS and OS in the training set. CpGs were selected according to the role of a filter based on a hazard ratio (HR) < 0.1 or > 10 for CpG site selection. CpG selection was based on the univariate association (cox regression) of the DNA methylation beta-values of each CpG site with RFS and OS in the training set. CpGs with q-values < 0.01 or 0.05 were selected for model construction with or without a HR filter. Due to collinearity, a correlation filter was applied to the mortality risk predictor (MRP), in contrast to the relapse risk predictor (RRP), which did not require correlation filtering to reduce dimensionality due to its smaller size. This filter removed CpGs with a Pearson’s correlation > 0.7 with any other variable included in the regression. Multivariate models of survival were constructed using random forests (randomForestSRC package) [44]. The model outputs include a survival function and a cumulative hazard function, which represent patient risk predictions over time. Missing variables were imputed in each dataset separately using a missing data algorithm developed by Ishawarian et al. [42] Random forests were created with 1,000 trees. Hyperparameter optimization of the mtry and nnodes variables was performed using a grid search method. Variable importance was calculated with the permutation importance method (also known as Breiman-Cutler method, implemented in the vimp function) and used to eliminate those CpGs with lower predictive value. In random forests, variable importance is commonly evaluated using a permutation-based method. Initially, the model's out-of-bag (OOB) error is calculated. For each feature, its values are then randomly shuffled in the OOB dataset, and a new OOB error is computed using the perturbed data. The difference between the new and original OOB errors gives the VIMP score for that feature, with higher scores indicating greater importance. A graphical summary of the workflow is represented in Fig. 1.

Graphical representation of the study design. The models were trained with data from 763 ALL patients, all of whom had previously been characterized by genome-wide DNA methylation arrays. The dataset was partitioned into a training set (80% of the patients) and a test set (the remaining 20%). The training set was used to identify CpG sites with DNA methylation status associated with two key outcomes: relapse risk and mortality. The selected CpG sites were used to train Random Survival Forests models. Two models were generated: a Relapse Risk Predictor (RRP) and a Mortality Risk Predictor (MRP). The test set was utilized for internal validation. Finally, the models were further validated on two additional datasets

The discriminative capacity of the random forest models in the training set was evaluated with OOB error estimates of the concordance index (c-index) and with time-dependent areas under the receiving operator (ROC) curve (AUCs). The c-index is a metric for survival prediction and reflects a measure of how well a model predicts the ordering of patients’ event times (e.g., death or relapse). A c-index of 0.5 represents a random model, whereas a c-index of 1 refers to a perfect ranking between real and predicted outcomes. OOB is based on subsampling with replacement to create training samples for the model to learn from. OOB error is the average prediction error on each training sample ** more accurately predicted early relapses, but with a drop in performance after 20 months. The RRP, however, remained superior and more stable even after 20 months. The combination of the RRP with clinical risk grou** provided the best prognostic accuracy, outperforming any of the two separate strategies. Remarkably, the 20-month AUC was 81.4% and 82.5% in the training and test sets. To improve interpretability and enhance applicability, we identified the optimal cut-point of the RRP score in the training set (14.09 points). This was performed in order to divide patients into high- and low-RRP groups. The same cut-off (14.09) was also applied on the test set. The high-RRP group demonstrated a significant difference in relapse rates in comparison with the low-RRP (p-value < 0.001, Fig. 3c–d, Additional file 2: Table S4).

Time-Dependent Area Under the Curve and Kaplan-Meier Plots for Relapse-Free Survival Analysis. a–b Time-dependent Area Under the Curve (AUC) representing the accuracy of the cox models in the prediction of relapse free survival (RFS) in the training (a) and test (b) sets. The red line represents a cox model based on standard of care risk grou**, the blue line represents the cox model based on the relapse risk predictor (RRP), and the purple line represents the cox model integrating both methods. c–d Kaplan-Meier plots depicting the RFS for the patients assigned to the two groups denoted by the relapse risk predictor: high-RRP (coral line) and low-RRP (blue line) in the training (c) and test (d) sets

Mortality risk prediction using random forests

The training and test sets contained 97 and 30 deaths, respectively. In the training set, 174 CpGs were univariately associated with OS (q-value < 0.05) accompanied by HRs < 0.1 or > 10. After collinearity filtering, the MRP signature consisted of 53 CpG sites (Table 3, Additional file 2: Table S5). The MRP achieved c-indexes of 0.751 and 0.754 in the training and test sets, respectively (Additional file 1: Figs. S3 and S4). Similarly to the RRP, addition of cytogenetic subtype (c-indexes, 0.753 and 0.751 for the training and test sets, respectively) or age at diagnosis (c-indexes, 0.752 and 0.753) as a covariate did not alter the performance of the MRP. However, the prognostic impact of cytogenetic classification alone in the entire cohort was low for OS (c-index, 0.597). Furthermore, we again observed that the MRP was also prognostic in the subgroup of patients with T-ALL (c-indexes, 0.702 and 0.597 in the training and test sets). Finally, we observed that the MRP signature could also be used to predict relapse (c-index of 0.694 and 0.643 in the training and test sets, respectively), but the RRP could not be used to predict OS.

Longitudinal assessment of the mortality risk predictor

The scores generated by the MRP were implemented on cross-validated cox models for the calculation of time-dependent AUCs. We compared this model with the conventional clinical risk grou**. Once again, the results of the MRP outperformed the clinical risk grou** strategy in terms of AUCs and bootstrapped c-indexes in both patient groups. In this case, the MRP outperformed the conventional risk grou** strategy at all evaluated time points (Fig. 4a–b). The combination with clinical risk grou** provided the best prognostic accuracy, outperforming any of the two individual strategies. The highest accuracy of the MRP was observed for risk stratification at 40 months post-diagnosis, which rendered AUCs of 83.66% and 88.58% in the training and test sets. We calculated the optimal MRP cut-off (12.31 points) to split the patients into low- and high-MRP groups. The high-MRP group had significantly shorter OS than the low-MRP group in both the train and test datasets (Fig. 4c–d, Additional file 2: Table S4).

Time-Dependent AUCs and OS Kaplan-Meier Plots for Overall Survival Prediction. a-b Time-dependent AUCs representing the accuracy of the different classifiers (cox regression) in the prediction of OS for the training a and test b sets. The red line represents the cox model based on standard risk groups, the blue line represents the cox model based on the mortality risk predictor (MRP) and the purple line represents the cox model integrating both methods. c-d OS Kaplan-Meier plots for the high-MRP (coral line) and the low-MRP (blue line) groups, as determined by the surv_cutpoint MRP optimal cut-off, in the training c and test d sets

Validation

We identified two independent datasets of 450k array data generated from pediatric ALL cohorts, which we used to validate the predictors. In the Busche et al. data set (n = 42) five patients relapsed during follow-up, and two deaths were recorded. Due to the small number of deaths, we used this dataset to validate only the prediction performance of the RRP, achieving a c-index 0.667. In the Krali et al. dataset (n = 384, Additional file 1: Fig. S5, Additional file 2: Table S6) 50 patients relapsed during follow-up and 45 patients died, of which 19 did so due to relapse and 20 were in complete remission. The RRP and MRP were 0.529 and 0.621, respectively for the Krali et al. dataset. In this dataset, the RRP score was weakly associated with relapse risk (p-value 0.064, HR 1.028 (95% CI: 0.9984–1.058 for each risk unit increase), while the MRP score was strongly associated with OS (p-value 1.04 × 10–4, HR 1.073 (95% CI: 1.036–1.112 for each risk unit increase). We observed that the MRP provided its best prognostic accuracy within the standard and infant risk groups (Additional file 2: Table S7). Furthermore, when we applied the MRP on the RFS data, the c-index for predicting risk of relapse in the validation data (0.62 for Krali et al.) was similar to the cross-prediction performance observed in the train and test sets.

We applied the previously defined low- and high-MRP dichotomization to evaluate differences in OS within this cohort. Consistently, increased mortality was observed in the high-MRP group (p-value < 0.001, Fig. 5a, Additional file 2: Table S4). Along the same line, we employed the low- and high-RRP dichotomization, but no significant difference in RFS was observed between the groups (p-value 0.14, Fig. 5b, Additional file 2: Table S4). Hence, we used the MRP groups to investigate if the MRP dichotomization could be predictive of relapse, which resulted in significant differences in RFS between the low-MRP and low-RRP group (p-value < 0.001; Fig. 5c, Additional file 2: Table S4).

Kaplan-Meier plots for overall and relapse-free survival in the independent dataset. a–c Kaplan-Meier plots for a overall survival (OS) and b-c relapse-free survival (RFS) in the independent dataset a OS differences between the high-MRP; (coral line) and low-MRP (blue line) groups. b RFS differences between patients assigned by the model to the high-RRP, (coral line) and low-RRP, (blue line) groups. c RFS differences between patients assigned to the high-MRP (coral line) and low-MRP (blue line) groups

Patient characteristics associated with epigenetic risk

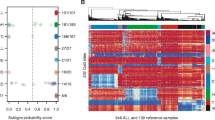

Based on the current ICC system for ALL subty** [47] we grouped the 1,147 ALL patients from the training, test, and validation sets using the latest molecular classification. The frequencies of the cytogenetic profiles were consistent with those described across other ALL cohorts [1, 48,49,50,51]. Using the dichotomized MRP cut-point, each sample set visualized by the MRP grou** (Fig. 6). Patients in the high-MRP group had a tendency to display to the known high risk molecular subtypes (T-ALL, BCR::ABL1, KMT2A-r, hypodiploid, and MEF2D-r), while low-risk molecular subtypes (HeH, ETV6::RUNX1, and PAX5-alteration) were more frequent in the low-MRP group. Patients denoted as B-other were split between the high-MRP and low-MRP groups. Notably, in the independent cohort, patients characterized by standard-risk cytogenetic aberrations, such as high hyperploid and ETV6::RUNX1, were assigned to the high-MRP group.

Clinical outcome and molecular subtypes of patients in the train (left, n = 573), test (center, n = 190) and independent datasets (right, n = 384). The patients were sorted by the mortality risk predictor (MRP) groups (high/low, x-axis). Clinical annotations, including relapse, mortality, clinical risk groups, NOPHO treatment protocol, sex and age are provided as annotation bars, color-coded according to the figure legend. Patient molecular subtypes (y-axis) are denoted as gray vertical lines on the heatmap plots

Discussion

This study provides evidence that DNA methylation signatures analyzed using ML algorithms offer a promising avenue for personalized risk stratification in pediatric ALL patients. Our model effectively predicted patient risk using a small set of CpG sites, demonstrating an improvement over conventional prognostic approaches. Integration of the ML predictors with the conventional clinical risk score resulted in enhanced overall performance. Notably, the mortality risk predictor (MRP) outperformed the relapse risk predictor (RRP), potentially indicating the superior predictive ability of DNA methylation patterns in assessing biological risk. Importantly, for patients initially treated with low or standard-risk protocols who later relapsed and received intensified therapy, a substantial fraction achieved complete response. Hence, risk-associated DNA methylation signatures could help identify highly refractory patients who are unlikely to respond adequately to salvage chemotherapeutics.

Significant research efforts have been devoted to improve ALL prognostication using relevant clinical annotation from large cohorts. For example Enshaei et al. used data from four different trials involving thousands of ALL patients for the development of a continuous risk model based on white cell count at diagnosis, cytogenetics and end-of-induction MRD [47]. Despite promising results, the main limitation of this approach relies on the inclusion of post-induction MRD status, which impedes its application at the moment of diagnosis. Newer therapeutic approaches may try to optimize treatment since the beginning, which might limit the probability of develo** clonal diversity as a driver of chemorrafractoriness [52]. In this regard, several previous reports have proved the usefulness of DNA methylation signatures determined at diagnosis to classify ALL patients into different molecular [26, 40] and prognostic subgroups [35, 53]. The present results indicate that DNA methylation signatures hold prognostic value in pediatric ALL regardless of the use of risk-adapted protocols that include cytogenetics, immunophenotype and MRD assessment.

A pivotal achievement of our investigation is the successful determination of the optimal cut-point for the MRP score. This critical threshold proficiently delineates a poor-prognosis group across all analyzed cohorts, underscoring the robustness and universal applicability of the MRP in risk stratification. While partially aligned with prevailing cytogenetic and molecular classifications, the MRP algorithm reconfigures risk groups with enhanced efficiency. This reclassification not only corroborates the established risk factors but also refines them, thereby presenting a more nuanced and potentially more accurate landscape of risk stratification in pediatric ALL.

The main advantage of our approach relates to the large sample size and the long-follow up of the patients. One limitation of our study, however, is the lower predictive performance of the RRP in the Nordic [40] independent validation set. The training set originated from Nordic patients treated on either the NOPHO-92 or NOPHO-2000 protocols, in which MRD measurements were not used to guide the indication of allo-HCT [6, 16]. On the contrary, MRD analysis was performed at days 29 and 79 post-induction to select candidates for allo-HCT in the NOPHO-2008 protocol [12]. Differences in treatment between the protocols may explain the lower reproducibility of the RRP, and further highlights the importance that MRD analysis plays in treatment stratification. Regardless, the prognostic value of our MRP was replicated in the cohort of patients treated on NOPHO-2008, indicating that our methylation-based MRP identifies patients who will succumb to their disease despite MRD-guided approaches. Future studies evaluating this methodology should pursue its potential enrichment with MRD data for risk stratification. Another relevant issue is the absence of a comparison between prognostic classifications based on integrative genomic profiling data. Such a comparison could be beneficial for evaluating different methods and refining the methodology further [54].

The translation of epigenetic biomarkers into clinical practice has been limited, with only a few successful examples in oncology [55]. DNA methylation is not currently performed in the clinical management for ALL, and consequently its implementation into the clinical routine will need a progressive adaptation [2]. Furthermore, optimizing epigenetic biomarkers for clinical use is not straightforward, and several factors need to be considered, such as genomic region selection, accurate DNA methylation measurements, confounding parameter identification, standardized data analysis, efficient turnaround time, and cost considerations [56]. However, the incorporation of DNA methylation signatures could offer a deeper layer of biological complexity, thereby facilitating more informed clinical decisions and potentially transforming patient care.

In conclusion, our research presents two innovative models utilizing DNA methylation data for predicting relapse and mortality risk (RRP and MRP) in pediatric ALL. These models surpass traditional cytogenetic and clinical prognostic methods in risk stratification. They also demonstrate potential synergies with diagnostic clinical data, enhancing their predictive performance. Our findings reveal that DNA methylation signatures, analyzed through ML, are reliable predictors of patient outcomes in pediatric ALL. Particularly, the MRP's capacity to extend beyond established markers exemplifies its transformative potential in clinical decision-making, suggesting more personalized and effective treatment approaches for pediatric ALL.

Availability of data and materials

DNA methylation data was retrieved from the Gene Expression Omnibus (GEO) datasets GSE49031, GSE38235 and the SciLifeLab data repository (https://doi.org/10.17044/scilifelab.22303531). Clinical annotation for the patients is accessible through reasonable request to the original authors.

Availability of code and materials

The source code for the models employed in this study is not publicly available due to the inclusion of sensitive information. For inquiries and access requests, please contact Jessica Nordlund (jessica.nordlund@medsci.uu.se).

References

Brady SW, Roberts KG, Gu Z, et al. The genomic landscape of pediatric acute lymphoblastic leukemia. Nat Genet. 2022;54(9):1376–89. https://doi.org/10.1038/s41588-022-01159-z.

Pui CH, Yang JJ, Hunger SP, Pieters R, et al. Childhood acute lymphoblastic leukemia: progress through collaboration. J Clin Oncol. 2015;33(27):2938–48. https://doi.org/10.1200/JCO.2014.59.1636.

Oskarsson T, Soderhall S, Arvidson J, et al. Relapsed childhood acute lymphoblastic leukemia in the Nordic countries: prognostic factors, treatment and outcome. Haematologica. 2016;101(1):68–76.

Bhakta N, Liu Q, Ness KK, et al. The cumulative burden of surviving childhood cancer: an initial report from the St. Jude lifetime cohort study (SJLIFE). Lancet. 2017;390(10112):2569–82. https://doi.org/10.1016/S0140-6736(17)31610-0.

Diesch-Furlanetto T, Gabriel M, Zajac-Spychala O, Cattoni A, Hoeben BAW, Balduzzi A. Late effects after haematopoietic stem cell transplantation in ALL, long-term follow-up and transition: a step into adult life. Front Pediatr. 2021;24(9):773895. https://doi.org/10.3389/fped.2021.773895.

Schmiegelow K, Forestier E, Hellebostad M, et al. Long-term results of NOPHO ALL-92 and ALL-2000 studies of childhood acute lymphoblastic leukemia. Leukemia. 2010;24(2):345–54. https://doi.org/10.1038/leu.2009.251.

Inaba H, Mullighan CG. Pediatric acute lymphoblastic leukemia. Haematologica. 2020;105(11):2524–39. https://doi.org/10.3324/haematol.2020.247031.

Terwilliger T, Abdul-Hay M. Acute lymphoblastic leukemia: a comprehensive review and 2017 update. Blood Cancer J. 2017;7(6):e577. https://doi.org/10.1038/bcj.2017.53.

Pieters R, de Groot-Kruseman H, Van der Velden V, et al. Successful therapy reduction and intensification for childhood acute lymphoblastic leukemia based on minimal residual disease monitoring: study ALL10 from the dutch childhood oncology group. J Clin Oncol. 2016;34(22):2591–601. https://doi.org/10.1200/JCO.2015.64.6364.

Maloney KW, Devidas M, Wang C, et al. Outcome in children with standard-risk B-cell acute lymphoblastic leukemia: results of children’s oncology group trial AALL0331. J Clin Oncol. 2020;38(6):602–12. https://doi.org/10.1200/JCO.19.01086.

Steinherz PG, Seibel NL, Sather H, et al. Treatment of higher risk acute lymphoblastic leukemia in young people (CCG-1961), long-term follow-up: a report from the children’s oncology group. Leukemia. 2019;33(9):2144–54. https://doi.org/10.1038/s41375-019-0422-z.

Toft N, Birgens H, Abrahamsson J, et al. Results of NOPHO ALL2008 treatment for patients aged 1–45 years with acute lymphoblastic leukemia. Leukemia. 2018;32(3):606–15. https://doi.org/10.1038/leu.2017.265.

Vora A, Goulden N, Wade R, et al. Treatment reduction for children and young adults with low-risk acute lymphoblastic leukemia defined by minimal residual disease (UKALL 2003): a randomized controlled trial. Lancet Oncol. 2013;14(3):199–209. https://doi.org/10.1016/S1470-2045(12)70600-9.

Mondelaers V, Suciu S, De Moerloose B, et al. Prolonged versus standard native E coli. asparaginase therapy in childhood acute lymphoblastic leukemia and non-Hodgkin lymphoma: final results of the EORTC-CLG randomized phase III trial 58951. Haematologica. 2017;102(10):1727–38. https://doi.org/10.3324/haematol.2017.165845.

Schramm F, Zimmermann M, Jorch N, et al. Daunorubicin during delayed intensification decreases the incidence of infectious complications - a randomized comparison in trial CoALL 08–09. Leuk Lymphoma. 2019;60(1):60–8. https://doi.org/10.1080/10428194.2018.1473575.

Hallböök H, Gustafsson G, Smedmyr B, et al. Treatment outcome in young adults and children >10 years of age with acute lymphoblastic leukemia in Sweden: a comparison between a pediatric protocol and an adult protocol. Cancer. 2006;107(7):1551–61. https://doi.org/10.1002/cncr.22189.

Stanulla M, Cavé H, Moorman AV. IKZF1 deletions in pediatric acute lymphoblastic leukemia: still a poor prognostic marker? Blood. 2020;135(4):252–60. https://doi.org/10.1182/blood.2019000813.

Olsson L, Ivanov Öfverholm I, Norén-Nyström U, et al. The clinical impact of IKZF1 deletions in pediatric B-cell precursor acute lymphoblastic leukemia is independent of minimal residual disease stratification in Nordic society for pediatric hematology and oncology treatment protocols used between 1992 and 2013. Br J Haematol. 2015;170(6):847–58. https://doi.org/10.1111/bjh.13514.

Eapen M, Raetz E, Zhang MJ, et al. Outcomes after HLA-matched sibling transplantation or chemotherapy in children with B-precursor acute lymphoblastic leukemia in a second remission: a collaborative study of the children’s oncology group and the Center for international blood and marrow transplant research. Blood. 2006;107(12):4961–7.

Schroeder H, Gustafsson G, Saarinen-Pihkala UM, et al. Allogeneic bone marrow transplantation in second remission of childhood acute lymphoblastic leukemia: a population-based case control study from the Nordic countries. Bone Marrow Transplant. 1999;23(6):555–60.

Grupp SA, Kalos M, Barrett D, et al. Chimeric antigen receptor-modified T cells for acute lymphoid leukemia. N Engl J Med. 2013;368(16):1509–18.

Wong M, Mayoh C, Lau LMS, et al. Whole genome, transcriptome and methylome profiling enhances actionable target discovery in high-risk pediatric cancer. Nat Med. 2020;26(11):1742–53. https://doi.org/10.1038/s41591-020-1072-4.

Villani A, Davidson S, Kanwar N, et al. The clinical utility of integrative genomics in childhood cancer extends beyond targetable mutations. Nat Cancer. 2023;4(2):203–21. https://doi.org/10.1038/s43018-022-00474-y.

Alvarnas JC, Brown PA, Aoun P, et al. Acute lymphoblastic leukemia. J Natl Compr Canc Netw. 2015;13(10):1240–79. https://doi.org/10.6004/jnccn.2015.0153.

Bataller A, Garrido A, Guijarro F, et al. European LeukemiaNet 2017 risk stratification for acute myeloid leukemia: validation in a risk-adapted protocol. Blood Adv. 2022;6(4):1193–206. https://doi.org/10.1182/bloodadvances.2021005585.

Young TA, Thompson S. The importance of accounting for the uncertainty of published prognostic model estimates. Int J Technol Assess Health Care. 2004;20(4):481–7. https://doi.org/10.1017/s0266462304001394.

Nordlund J, Bäcklin CL, Zachariadis V, et al. DNA methylation-based subtype prediction for pediatric acute lymphoblastic leukemia. Clin Epigenetics. 2015;7(1):11. https://doi.org/10.1186/s13148-014-0039-z.

Tran TH, Langlois S, Meloche C, et al. Whole-transcriptome analysis in acute lymphoblastic leukemia: a report from the DFCI ALL Consortium Protocol 16–001. Blood Adv. 2022;6(4):1329–41. https://doi.org/10.1182/bloodadvances.2021005634.

Löschmann L, Smorodina D. Deep learning for survival analysis. Retrieved 2020;5, 2023, from https://towardsdatascience.com/survival-analysis-predict-time-to-event-with-machine-learning-part-i-ba52f9ab9a46

Mosquera-Orgueira A, Pérez-Encinas M, Hernández-Sánchez A, et al. Machine learning improves risk stratification in myelofibrosis: an analysis of the Spanish registry of myelofibrosis. Hemasphere. 2022;7(1):e818. https://doi.org/10.1097/HS9.0000000000000818.

Mosquera Orgueira A, Perez Encinas M, Diaz Varela NA, et al. Supervised machine learning improves risk stratification in newly diagnosed myelodysplastic syndromes: an analysis of the Spanish group of myelodysplastic syndromes. Blood. 2022;140(Supplement 1):1132–4. https://doi.org/10.1182/blood-2022-159429.

Mosquera Orgueira A, González Pérez MS, Díaz Arias JÁ, et al. Survival prediction and treatment optimization of multiple myeloma patients using machine-learning models based on clinical and gene expression data. Leukemia. 2021;35(10):2924–35. https://doi.org/10.1038/s41375-021-01286-2.

Feinberg AP, Koldobskiy MA, Göndör A. Epigenetic modulators, modifiers and mediators in cancer etiology and progression. Nat Rev Genet. 2016;17(5):284–99. https://doi.org/10.1038/nrg.2016.13.

Oakes CC, Martin-Subero JI. Insight into origins, mechanisms, and utility of DNA methylation in B-cell malignancies. Blood. 2018;132(10):999–1006. https://doi.org/10.1182/blood-2018-02-692970.

Duran-Ferrer M, Clot G, Nadeu F, et al. The proliferative history shapes the DNA methylome of B-cell tumors and predicts clinical outcome. Nat Cancer. 2020;1(11):1066–81. https://doi.org/10.1038/s43018-020-00131-2.

Borssén M, Palmqvist L, Karrman K, et al. Promoter DNA methylation pattern identifies prognostic subgroups in childhood T-cell acute lymphoblastic leukemia. PLoS ONE. 2013;8(6):e65373. https://doi.org/10.1371/journal.pone.0065373.

Hetzel S, Mattei AL, Kretzmer H, et al. Acute lymphoblastic leukemia displays a distinct highly methylated genome. Nat Cancer. 2022;3(6):768–82. https://doi.org/10.1038/s43018-022-00370-5.

Nordlund J, Syvanen AC. Epigenetics in pediatric acute lymphoblastic Leukemia. Semin Cancer Biol. 2017;51:129.

Busche S, Ge B, Vidal R, et al. Integration of high-resolution methylome and transcriptome analyzes to dissect epigenomic changes in childhood acute lymphoblastic leukemia. Cancer Res. 2013;73(14):4323–36. https://doi.org/10.1158/0008-5472.CAN-12-4367.

Krali O, Marincevic-Zuniga Y, Arvidsson G, Enblad AP, Lundmark A, Sayyab S, et al. Multimodal classification of molecular subtypes in pediatric acute lymphoblastic leukemia. Npj Precis Oncol. 2023;7(1):131.

Moghrabi A, Levy DE, Asselin B, et al. Results of the dana-farber cancer institute ALL consortium protocol 95–01 for children with acute lymphoblastic leukemia. Blood. 2007;109(3):896–904. https://doi.org/10.1182/blood-2006-06-027714.

Vrooman LM, Stevenson KE, Supko JG, et al. Postinduction dexamethasone and individualized dosing of Escherichia Coli L-asparaginase each improve outcome of children and adolescents with newly diagnosed acute lymphoblastic leukemia: results from a randomized study–dana-farber cancer institute ALL consortium protocol 00–01. J Clin Oncol. 2013;31(9):1202–10. https://doi.org/10.1200/JCO.2012.43.2070.

Silverman LB, Stevenson KE, Athale UH, et al. Results of the DFCI ALL consortium protocol 05–001 for children and adolescents with newly diagnosed ALL. Blood. 2013;122(21):838. https://doi.org/10.1182/blood.V122.21.838.838.

Ishwaran H, Kogalur U, Blackstone E, Lauer M. Random survival forests. Ann Appl Statist. 2008;2(3):841–60.

Gerds TA, Kattan MW. Medical risk prediction models: with ties to machine learning (1st ed.). Chapman and Hall/CRC, 2021. https://doi.org/10.1201/9781138384484.

Kassambara A, Kosinski M, Biecek P. survminer: Drawing Survival Curves using “ggplot2” [Internet]. 2021. Available from: https://CRAN.R-project.org/package=survminer.

Arber DA, Orazi A, Hasserjian RP, et al. International consensus classification of myeloid neoplasms and acute Leukemias: integrating morphologic, clinical, and genomic data. Blood. 2022;140(11):1200–28. https://doi.org/10.1182/blood.2022015850.

Zaliova M, Stuchly J, Winkowska L, et al. Genomic landscape of pediatric B-other acute lymphoblastic leukemia in a consecutive European cohort. Haematologica. 2019;104(7):1396–406. https://doi.org/10.3324/haematol.2018.204974.

Ryan SL, Peden JF, Kingsbury Z, et al. Whole genome sequencing provides comprehensive genetic testing in childhood B-cell acute lymphoblastic leukemia. Leukemia. 2023;37(3):518–28. https://doi.org/10.1038/s41375-022-01806-8.

Gu Z, Churchman ML, Roberts KG, et al. PAX5-driven subtypes of B-progenitor acute lymphoblastic leukemia. Nat Genet. 2019;51(2):296–307. https://doi.org/10.1038/s41588-018-0315-5.

Jeha S, Choi J, Roberts KG, et al. Clinical significance of novel subtypes of acute lymphoblastic leukemia in the context of minimal residual disease-directed therapy. Blood Cancer Discov. 2021;2(4):326–37. https://doi.org/10.1158/2643-3230.BCD-20-0229.

Enshaei A, O’Connor D, Bartram J, et al. A validated novel continuous prognostic index to deliver stratified medicine in pediatric acute lymphoblastic leukemia. Blood. 2020;135(17):1438–46. https://doi.org/10.1182/blood.2019003191.Erratum.In:Blood.2020Sep17;136(12):1468.

Ma X, Edmonson M, Yergeau D, et al. Rise and fall of subclones from diagnosis to relapse in pediatric B-acute lymphoblastic leukemia. Nat Commun. 2015;19(6):6604. https://doi.org/10.1038/ncomms7604.

Haider Z, Larsson P, Landfors M, et al. An integrated transcriptome analysis in T-cell acute lymphoblastic leukemia links DNA methylation subgroups to dysregulated TAL1 and ANTP homeobox gene expression. Cancer Med. 2019;8(1):311–24. https://doi.org/10.1002/cam4.1917.

Schwab C, Cranston RE, Ryan SL, et al. Integrative genomic analysis of childhood acute lymphoblastic leukemia lacking a genetic biomarker in the UKALL2003 clinical trial. Leukemia. 2023;37(3):529–38. https://doi.org/10.1038/s41375-022-01799-4.

Wagner W. How to translate DNA methylation biomarkers into clinical practice. Front Cell Dev Biol. 2022;23(10):854797. https://doi.org/10.3389/fcell.2022.854797.

Funding

This project was funded by Swedish Childhood Cancer Fund, the Swedish Cancer Foundation, and the Swedish Research Council (to JN). Supercomputing resources were provided by the Supercomputing Center of Galicia (CESGA), the Swedish National Infrastructure for Computing (SNIC) and National Academic Infrastructure for Supercomputing in Sweden (NAISS). SNIC and NAISS are partially funded by the Swedish Research Council. DNA methylation data were generated by the National Genomics Infrastructure SNP&SEQ unit in Uppsala, funded by the Swedish Research Council, SciLifeLab and Knut and Alice Wallenberg Foundation. Open access funding: Uppsala University.

Author information

Authors and Affiliations

Contributions

AMO conceived the study, performed the machine learning analysis and wrote the paper. OK validated the models, performed survival analyzes, generated the figures, and participated in manuscript writing. OK and JN performed the DNA methylation analysis, shared the data, and revised the manuscript. DS obtained the samples and the clinical annotation, performed the DNA methylation and shared the data (Busche et al. (2013)). MH, GL, UNN, and KS provided clinical material and annotation. APR, JADA, MSGP, MMPE, MFS, NAV and participated in manuscript writing and reviewed the paper. All authors approved the final version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Fig. S1

. a) Out-of-bag (OOB) estimations of RFS for each patient in the training set. Each line represents the RFS probability for each patient at different time points. b) Cumulative Risk Probability Score (CRPS) plots for the estimation of RFS in the training set (orange line) and stratified according to each of the relapse risk quartiles derived by the model (black lines). Fig. S2. Heatmaps DNA methylation beta values for each CpG site included in the relapse risk predictor (RRP). DNA methylation values of the 16 CpG dinucleotides included in the RRP in the training (a) and test (b) sets are plotted. Lateral bar plots represent the RRP score an DNAm risk group assigned to each patient. Fig. S3. a) Out-of-bag (OOB) estimations for OS for each patient in the training set. Each line represents the OS probability for each patient at different time points. b) CRPS plots for the estimation of OS in the training set (orange line) and stratified according to each of the mortality risk quartiles derived by the model (black lines). Fig. S4. Heatmap plots representing the DNA methylation beta values of each CpG included in the mortality risk predictor (MRP). DNA methylation values of the 53 CpG sites included in the MRP in the training (a) and test (b) sets are plotted. Lateral bar plots represent the MRP score and the DNAm risk group assigned to each patient. Fig. S5. a) Kaplan–Meier estimate for overall survival (OS) with 95% confidence interval (CI) for the independent dataset. b) Kaplan–Meier estimate for relapse-free survival (RFS) with 95% confidence interval (CI) for the independent dataset.

Additional file 2: Table S1

. Cytogenetic classifications at the time of ALL diagnosis for the patients in the training and test sets. Table S2. C-indexes of the different random forest models evaluated for the prediction of RFS and OS. Table S3. Variable importance values for each of the CpGs in the relapse risk predictor (RRP). CpGs are listed in decreasing order of importance. Table S4. Patient distribution across the low- and high- relapse risk or mortality risk predictor (RRP/MRP) groups after applying cut-offs on the train, test and independent datasets. a) Low and high-RRP groups in response to relapse as outcome, b) low and high-MRP groups in response to relapse as outcome and c) low and high-MRP groups in response to death as outcome. Univariate cox regression was conducted to assess the effect of the RRP/MRP-based dichotomization on patient outcome. Table S5. Variable importance values for each of the CpGs in the final mortality risk predictor (MRP). Variables are depicted in decreasing order of importance. Table S6. Revised molecular subtype annotation analyzed by Krali et al. Table S7. C-indexes of the MRP in the independent dataset.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Mosquera Orgueira, A., Krali, O., Pérez Míguez, C. et al. Refining risk prediction in pediatric acute lymphoblastic leukemia through DNA methylation profiling. Clin Epigenet 16, 49 (2024). https://doi.org/10.1186/s13148-024-01662-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13148-024-01662-6