Abstract

Background

Integrated care involves care provided by a team of professionals, often in non-traditional settings. A common example worldwide is integrated school-based mental health (SBMH), which involves externally employed clinicians providing care at schools. Integrated mental healthcare can improve the accessibility and efficiency of evidence-based practices (EBPs) for vulnerable populations suffering from fragmented traditional care. However, integration can complicate EBP implementation due to overlapping organizational contexts, diminishing the public health impact. Emerging literature suggests that EBP implementation may benefit from the similarities in the implementation context factors between the different organizations in integrated care, which we termed inter-organizational alignment (IOA). This study quantitatively explored whether and how IOA in general and implementation context factors are associated with implementation outcomes in integrated SBMH.

Methods

SBMH clinicians from community-based organizations (CBOs; nclinician = 27) and their proximal student-support school staff (nschool = 99) rated their schools and CBOs (clinician only) regarding general (organizational culture and molar climate) and implementation context factors (Implementation Climate and Leadership), and nine common implementation outcomes (e.g., treatment integrity, service access, acceptability). The levels of IOA were estimated by intra-class correlations (ICCs). We fitted multilevel models to estimate the standalone effects of context factors from CBOs and schools on implementation outcomes. We also estimated the 2-way interaction effects between CBO and school context factors (i.e., between-setting interdependence) on implementation outcomes.

Results

The IOA in general context factors exceeded that in implementation context factors. The standalone effects of implementation context factors on most implementation outcomes were larger than those of general context factors. Similarly, implementation context factors between CBOs and schools showed larger 2-way interaction effects on implementation outcomes than general context factors.

Conclusions

This study preliminarily supported the importance of IOA in context factors for integrated SBMH. The findings shed light on how IOA in implementation and general context factors may be differentially associated with implementation outcomes across a broad array of integrated mental healthcare settings.

Background

Research has established that fragmented mental health services disproportionately impact the most vulnerable children and adolescents [1,2,3]. As a promising solution to increase service accessibility and integration [4], integrated mental healthcare involves a multidisciplinary team of health professionals providing care for clients, often in non-traditional settings (e.g., schools, primary care) [5]. In the US, integrated mental healthcare has gained significant traction [6], partly due to supportive policies (e.g., the Affordable Care Act [7]) and financial investments. Similarly, many countries and regions worldwide have invested in legislation and policies to promote integrated care [8]. Integrated care settings are unique in that they involve overlapping organizational contexts, but little is known about how the two contexts combine and interact to facilitate or impede the uptake and delivery of EBPs.

Implementation research has established that organizational context factors (e.g., general implementation climate) are critical to the development of an enabling and healthy work setting, which impacts individual professionals' EBP implementation outcomes [9,10,11]. However, existing research has largely focused on organizational context factors from standalone service settings (e.g., community clinics). Evidence from this siloed approach may not readily transfer to integrated mental healthcare due to its fundamental nature in which interventions are delivered by professionals situated within overlapping contexts (e.g., community-based organizations, CBOs) [12]. To begin to address this knowledge gap, this study aimed to explore and quantitatively illustrate how setting-specific context factors function synergistically (i.e., inter-organizational alignment) to influence implementation outcomes of EBPs in the most common integrated setting for child and adolescent mental health service delivery: school-based mental healthcare.

Integrated School-Based Mental Healthcare (SBMH)

Schools reduce multiple barriers (e.g., transportation, access to free services) to mental healthcare for children and adolescents (particularly those from disadvantaged, ethnic and socioeconomic minoritized groups), which are commonly experienced in traditional outpatient settings [11]. In the US and globally, SBMH services have grown rapidly, with 50 to 80% of all mental healthcare for children and adolescents now being provided in schools [13]. The most common arrangement for SBMH in the US is integrated or co-located SBMH, wherein services are provided by professionals who are located at school but trained and employed by CBOs external to the education system [14]. This arrangement leads to significant contextual (e.g., organizational structure and size, funding) and administrative differences (e.g., training, service priorities) between CBOs and schools that can influence EBP implementation in integrated SBMH [15]. Existing research shows that integrated SBMH provides several advantages over traditional outpatient care. First, co-location can minimize service fragmentation by reducing duplicated efforts and enhancing professionals' responsiveness to the needs of children and adolescents [14, 16, 17]. Second, co-locating professionals and their proximal school staff in the same building can enhance their collaboration, shared decision-making, and service integration [11]. Given its public health utility and social significance, integrated SBMH is supported by various policies in the US and internationally [18]. However, EBP implementation in integrated SBMH has been highly variable and inconsistent, which undermines its public health impact [19, 20]. Research examining factors that influence EBP implementation in integrated SBMH is critical to address this gap.

Organizational context factors relevant to integrated SBMH

Existing implementation frameworks and models have identified myriad factors that either facilitate or impede EBP implementation in various service settings. While these implementation factors exist across all levels of an implementation ecology, they vary greatly in their mechanisms of change, responsiveness to implementation strategies, and impact on implementation outcomes in common mental healthcare settings for children and adolescents (e.g., CBOs and schools). Furthermore, it remains unknown what implementation factors are most influential for integrated SBMH and similar integrated care settings given their overlapping organizational context. Based on the Exploration, Preparation, Implementation, Sustainment (EPIS) framework [2] and literature on EBP implementation in schools and CBOs, we identified several organizational context factors in the inner setting that are (a) known to proximally influence EBP implementation in schools and CBOs [15, 21, 22], (b) amenable to common implementation strategies (e.g., leadership strategies or cross-system collaboration strategies; [23, 24]), and (c) common and generic organizational factors that are relatively separate from the administrative and contextual differences in the organizations involved in integrated SBMH (e.g., training, funding, organizational structure) [6, 25,26,27,28,29,30]. For instance, general organizational factors, such as organizational culture (shared values, beliefs, and implicit norms that influence staff's behavior) and climate (shared experiences and appraisals of the work environment), are found to be predictive of adoption and use of EBPs in both schools and CBOs [31,32,33,34]. Emerging research has also shed light on the additive and direct effects of implementation context factors on staff's implementation behaviors and outcomes in schools and CBOs. These include implementation climate (shared perceptions of the extent to which implementing EBPs is expected, supported, and rewarded by their organization) and implementation leadership (the attributes and behaviors of leaders that support effective implementation) [16, 35].

Extant implementation literature has examined and consistently endorsed the impacts of context factors on EBP implementation in a single organization or service setting. However, the findings of studies focusing on siloed organizations may not transfer properly to integrated settings such as SBMH. This is partly due to the fundamental nature of integrated SBMH that entails embedding professionals from external CBOs into school settings, which is distinct from traditional care where services are provided by professionals located in disparate settings [20]. Hence, research is needed to extend from siloed settings to simultaneously evaluate context factors from different organizations in integrated SBMH. The findings from this integrated approach are instrumental to our understanding of the interactive context factors for successful EBP implementation as well as the selection and design of corresponding implementation strategies for service quality improvement in integrated care.

Inter-organizational alignment in integrated SBMH

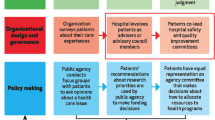

The EPIS framework recognizes the importance of inter-organizational context (i.e., relationships and connections among the inner/outer settings of different organizations). Emergent qualitative evidence suggests that EBP implementation can benefit from similarities between the implementation leaders and stakeholders of overlapping organizations regarding their core values, shared vision, and commitment [36, 37]. We conceptualize implementation-related inter-organizational alignment (IOA) as the degree of similarity in implementation context factors between organizations involved in integrated care. When considering both the level and alignment of context factors simultaneously, two organizations may demonstrate different "IOA profiles." The IOA profiles of the context factors between overlapping organizations in integrated care may be (a) consistently high levels (i.e., "favorable" for implementation outcomes), (b) consistently low levels (i.e., "unfavorable"), or (c) inconsistent levels (one high, one low; Fig. 1). For instance, favorable IOA in implementation climate represents the degree to which staff from different organizations in integrated care settings share similar and favorable expectations and experiences of EBP implementation. Prior research in single healthcare organizations has established that intra-organizational alignment (i.e., consistency within a standalone organization) in organizational communication can reduce staff confusion and facilitate their internalization of the priorities and goals of the organization [38,39,40,41,42]. Thus, we hypothesized that favorable IOA in implementation climate across multiple integrated healthcare organizations would show a similar effect as the intra-organizational alignment in a standalone organization on professionals' implementation behaviors for EBP delivery. To date, there are only qualitative studies that support the importance of IOA in context factors for EBP adoption in inter-organizational collaboration [17, 23, 37, 43]. However, the synergistic effects (i.e., IOA) of context factors between different organizations in integrated care have not been examined quantitatively.

The unique characteristics of integrated SBMH (e.g., co-located care, widely available in the public sector, dual/overlapping administrative relationships between organizations) make it an ideal setting for quantitatively investigating the effects of IOA on EBP implementation [22]. Figure 2 shows our conceptualization of the inter-organizational contexts in integrated SBMH. Most integrated SBMH services are delivered by clinicians who are located at school but trained and employed by CBOs external to the education system [13]. This leads to potential discrepancies in the administration and context factors between schools versus CBOs (e.g., training, funding) that influence EBP implementation [44]. Moreover, research has suggested that CBO-employed clinicians are influenced simultaneously by both the school and CBO organizational contexts [13]. Other research has shown that school-based context factors can predict EBP implementation, while implementation outcomes may be contingent on organizational contexts from both CBO and school [45]. In sum, integrated SBMH represents an ideal setting to explore the hypothetical interactive effects on implementation outcomes between context factors from different organizations involved in integrated care (i.e., CBO and school) [45, 46]. Based on existing literature, we hypothesized a positive interaction effect wherein EBP implementation outcomes in integrated SBMH would be highest when context factors in CBO and school are both high.

Study aims

Improving the accessibility and effectiveness of EBPs in integrated care requires a fine-grained understanding of how the alignment in context factors between different organizations (i.e., IOA) is associated with implementation and client outcomes. Despite the promising theoretical propositions from a few qualitative studies [45], no quantitative study exists yet to illustrate the association between IOA and EBP implementation in integrated care. In this cross-sectional observational study, we aimed to explore how IOA between CBO and school context factors is associated with common implementation outcomes in integrated SBMH. This study followed the pre-registered study procedure and analyses published as a study protocol article [47]. To keep the reporting concise and clear, we placed the content of the ancillary research question (RQ) about clinicians' embeddedness in Additional file 1. Three sequential RQs guided this study.

1. Based on measures reported by clinicians and/or proximal school staff, what are the levels of IOA in implementation context factors between CBOs and schools (general organizational culture and climate, implementation leadership and climate)?
2. What are the standalone main effects of school- versus CBO-based context factors on common implementation outcomes in integrated SBMH (e.g., treatment integrity, improved access, feasibility)?
3. Is the interaction between school- and CBO-based context factors (i.e., IOA) associated with common implementation outcomes in integrated SBMH?

Methods

Participants and settings

Participants were CBO-employed SBMH clinicians and their proximally related school staff (e.g., school nurses, counselors, social workers, or administrators who were involved in supporting or facilitating integrated SBMH) from two large urban school districts in the Midwest and Pacific Northwest (nschool = 27). We recruited CBOs (nCBO = 9) that had (a) administrative relationships with schools that reflect the common arrangements nationally (i.e., external CBOs providing SBMH service via a district or county contract), and (b) longstanding integrated SBMH services with schools to control for the timing and history of organizational partnership between schools and CBOs [47]. In their existing integrated SBMH programming, the participating schools and CBOs were implementing several evidence-based mental health intervention/prevention programs that were commonly used in the education sector and established in the literature on school mental health (e.g., cognitive behavioral therapy, applied behavioral analysis, mindfulness-based interventions, social or parenting skill training groups). In the analytic sample, the CBO clinicians (nclinician = 27) were 92.59% female, 11.11% Hispanic/Latinx, 55.56% Caucasian, 3.7% African American, 7.41% Asian, and everyone held a master’s degree. Their proximal school staff (nschool = 99) were 85.86% female, 9.09% Hispanic/Latinx, 73.47% Caucasian, 14.29% African American, 2.04% Asian, and 79.38% with a master’s degree.

Procedures

IRB approval was obtained from the authors' university. We administered a large-scale online survey to CBO-employed SBMH clinicians and their identified proximal school staff about the context factors and implementation outcomes from their respective organizations. Consent was obtained in the initial section of the survey. To identify each clinician's proximal school staff, the study rolled out in three phases: (a) clinicians were recruited to complete the clinician-version survey, (b) during survey completion, clinicians identified proximal staff from their embedded schools who were responsible for supporting SBMH (e.g., school psychologists, school counselors), and (c) these proximal school staff were recruited by email and/or telephone to complete the school-version survey. Based on organizational research [48], we obtained at least three participants per CBO/school to ensure a reliable assessment of the organizational constructs (e.g., implementation context). To improve response rates, we used backup data collection methods (e.g., weekly reminder emails, telephone follow-ups). For analytic integrity, we used listwise deletion for cases with missingness in implementation outcomes or context factors. In the analytic sample, the response rate was 90% for clinicians and 99% for proximal school staff.

Measures

Implementation outcomes

Treatment integrity

Based on prior organizational research [49], the treatment integrity of EBPs was assessed by a 4-item scale rated by SBMH clinicians on a 5-point Likert scale ranging from 0 "Not at all" to 4 "To a Very Great Extent." A higher score indicates better treatment integrity. Each item assesses a specific dimension of the extent to which a clinician implemented EBPs to students as intended, including Fidelity, Competence, Knowledge, and Adherence. The overall mean score of the four items was computed as a holistic and generalizable indicator of the multidimensional construct of treatment integrity for generic EBPs. In this sample, the internal consistency for this scale was high (Cronbach's α = 0.91).
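The scoring described above (an overall mean across items, with internal consistency summarized by Cronbach's α) can be sketched as follows. This is a minimal illustration using the standard α formula, not the authors' SPSS procedure; the function names and the example ratings are hypothetical and are not study data.

```python
from statistics import mean, variance


def scale_score(item_responses):
    """Overall scale score: the mean of one respondent's item ratings."""
    return mean(item_responses)


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents-by-items matrix (list of lists).

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])                      # number of items
    items = list(zip(*responses))              # transpose to items-by-respondents
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical 0-4 ratings on four items (rows = clinicians); not study data.
ratings = [[4, 3, 4, 4], [2, 2, 1, 2], [3, 3, 3, 2], [1, 0, 1, 1]]
scores = [scale_score(row) for row in ratings]
alpha = cronbach_alpha(ratings)
```

Sample (n − 1) variances are used throughout, which is the usual convention for α.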

Acceptability, Appropriateness, and Feasibility (AAF)

The AAF of generic EBPs delivered by clinicians was assessed with the Acceptability of Intervention Measure, Intervention Appropriateness Measure, and Feasibility of Intervention Measure, respectively [50]. All items were rated by SBMH clinicians on a 5-point Likert scale ranging from 1 "Completely Disagree" to 5 "Completely Agree". Per the measures' instructions, some item wordings were tailored to refer to generic EBPs. In this sample, all three measures demonstrated good internal consistencies (Cronbach's α: acceptability = 0.95, appropriateness = 0.97, and feasibility = 0.89).

Expanded School Mental Health Collaboration Instrument (ESCI)

The proximal school staff completed three subscales of the ESCI to assess their clinicians' service quality in schools [51]. The three subscale scores were used as separate implementation outcomes specific to integrated SBMH in this study, including (a) Support for Teachers and Students (how students and teachers are supported through SBMH programming, eight items), (b) Increased Mental Health Programming (five items), and (c) Improved Access for Students and Families (three items). All items were rated by proximal school staff on a 4-point Likert scale ranging from 1 "never" to 4 "often". In this sample, the three subscales’ Cronbach's α ranged from 0.79 to 0.95.

Implementation Citizenship Behavior Scale (ICBS)

The SBMH clinicians and their proximal school staff completed the ICBS to report their implementation citizenship behavior (i.e., the degree to which one goes "above and beyond their duty" to implement EBPs) [49]. The ICBS includes six items loading onto two subscales: "Helping Others" and "Keeping Informed". In this study, the total score of ICBS was used with a Cronbach's α of 0.91.

Attitudes toward Evidence-Based Practices Scale (EBPAS)

The SBMH clinicians and their proximal school staff completed the school version of EBPAS to report their attitudes toward EBPs [52]. The school version of EBPAS was adapted for use with service providers in the education sector. It consists of 16 items loading onto four subscales: Requirements, Appeal, Openness, and Divergence. In this study, the total score of EBPAS was used, with a Cronbach's α of 0.85.

Explanatory variables: organizational context factors

The SBMH clinicians completed the same measures about the implementation context in two organizations: their employing CBOs and embedded schools. To control for sequential bias, half of the clinicians were randomized to assess their CBO first, while the other half assessed their schools first.

Implementation Leadership Scale (ILS)

The ILS [53] has 12 items rated on a 5-point Likert scale (0 = "not at all" to 4 = "very great extent"), which load onto four subscales: Proactive Leadership, Knowledgeable Leadership, Supportive Leadership, and Perseverant Leadership. When rating implementation leadership in the CBO, the item wordings were tailored for CBOs (e.g., "school" replaced with "agency"). In this sample, the ILS demonstrated excellent internal consistency (school α = 0.98; CBO α = 0.96).

Implementation Climate Scale (ICS)

The ICS [53] assessed the degree to which a school possesses an implementation climate supportive of translating EBPs into routine practice. The ICS includes 18 items loading onto six subscales which form a total score: Focus on EBP, Educational Support for EBP, Recognition for EBP, Rewards for EBP, Selection for EBP, and Selection for Openness. When rating for CBOs, the item wordings were tailored accordingly (e.g., "school" was replaced by "agency"). All items are scored on a 5-point Likert scale (0 = "not at all" to 4 = "very great extent"). In this sample, the ICS demonstrated good internal consistency (school α = 0.94; CBO α = 0.91).

Organizational Social Context (OSC)

The OSC assesses the general (i.e., molar) organizational culture and climate [54]. Given the focus of this study, we selectively administered the Proficiency (15 items) subscale from the General Organizational Culture Scale, as well as the Stress (20 items) and Functionality (15 items) subscales from the General Organizational Climate Scale. Items were rated by clinicians on a 5-point Likert scale ranging from 1 "Never" to 5 "Always". When rating the CBO, the item wordings were tailored for CBO (e.g., "school" replaced with "agency"). In this sample, the three subscales demonstrated good internal consistency (α ranging from 0.71 to 0.93 for schools and from 0.75 to 0.91 for CBOs).

Covariates

To control for potential confounders, the survey collected demographic information from SBMH clinicians and their proximal school staff about their age, gender identity, ethnicity, race, education level, and work experience in their current position (Table 1).

Analysis

We followed the pre-registered analytic procedure [47]. The dataset used for RQ 1 is configured such that the dyads of CBO and school ratings of a context factor (level-1 units) were nested within clinicians (level-2 units). The magnitude of IOA in CBO and school context factors was quantified by the intra-class correlation coefficient [ICCs (2,1), i.e., 2-way mixed effects, single measurement, absolute agreement], which was estimated with random-intercept-only multilevel models (MLMs) using each context factor as the outcome without predictors. We also ran paired-sample t-tests to probe the significance of differences in context factors between CBO and schools. Because the measures of context factors differ in their maximum scores, the ratios of means over maximum scores were computed for each context factor. The ratios enabled us to compare the levels of different types of context factors between schools and CBOs because ICCs cannot indicate the directions of IOA (e.g., high/low in both school and CBO).
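As a rough illustration of the two quantities described above, the sketch below computes an ICC from paired CBO and school ratings and the mean-to-maximum ratio for a context factor. It is a simplification under stated assumptions: it uses the one-way random-effects ANOVA estimator, which corresponds to the variance ratio of a random-intercept-only model with balanced pairs, and it is not the authors' HLM-based estimate. The function names and data are hypothetical.

```python
from statistics import mean


def icc_oneway(ratings):
    """One-way random-effects ICC for n clinicians rated in k settings.

    ratings: list of rows such as [cbo_score, school_score], one row per clinician.
    ICC = (MSB - MSW) / (MSB + (k - 1) * MSW)
    """
    n, k = len(ratings), len(ratings[0])
    grand = mean([x for row in ratings for x in row])
    row_means = [mean(row) for row in ratings]
    # Between-clinician mean square
    ms_between = k * sum((m - grand) ** 2 for m in row_means) / (n - 1)
    # Within-clinician (between-setting) mean square
    ms_within = sum(
        (x - m) ** 2 for row, m in zip(ratings, row_means) for x in row
    ) / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


def mean_to_max_ratio(scores, max_score):
    """Mean score divided by the scale maximum, so that scales with different
    ranges (e.g., a maximum of 4 vs. 5) can be compared on a common footing."""
    return mean(scores) / max_score


# Hypothetical [CBO, school] ratings of one context factor; not study data.
pairs = [[3.2, 2.9], [2.1, 2.4], [3.8, 3.5], [1.5, 2.0]]
alignment = icc_oneway(pairs)
cbo_level = mean_to_max_ratio([p[0] for p in pairs], max_score=4)
```

A high ICC alone does not say whether both settings score high or both score low, which is why the ratio is reported alongside it.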

The dataset used for RQs 2 and 3 was configured so that the SBMH clinicians and their reported context factors and implementation outcomes (level-1 units; nclinician = 27) were nested in CBOs (level-2 units; nCBO = 9). The school-based context factors were aggregates of all personnel in each school (i.e., clinicians and their proximal school staff: nstaff = 99). We fitted random-intercept-only MLMs to account for the nesting of clinicians within CBOs (Additional file 2). The dyads of clinician-rated context factors in CBO and school were entered into MLMs as level-1 explanatory variables for each of the nine implementation outcomes (see Measures). Context factors were centered around their group means to adjust for their moderate level of multicollinearity and to enhance the interpretability of their coefficients [55]. In the MLMs, participant demographics did not account for significant portions of variance in the implementation outcomes. Hence, we excluded them from the final models. For RQ 3, we added 2-way interaction terms between CBO and school context factors to the MLMs from RQ 2. The 2-way interaction models allowed us to examine RQ 3 and our hypothesis that EBP implementation outcomes in integrated SBMH would be highest when context factors in CBO and school are both high. To help readers interpret the interaction effects, we plotted two exemplary interactions (positive and negative; Figs. 3 and 4, respectively).
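The group-mean centering and the construction of the 2-way interaction term can be sketched as below. This only prepares the predictors; it does not fit the multilevel model, which the authors ran in HLM. The helper names and the small dataset are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def group_mean_center(values, groups):
    """Subtract each group's mean (e.g., each CBO's mean) from its members."""
    by_group = defaultdict(list)
    for v, g in zip(values, groups):
        by_group[g].append(v)
    group_means = {g: mean(vs) for g, vs in by_group.items()}
    return [v - group_means[g] for v, g in zip(values, groups)]


def interaction_term(centered_a, centered_b):
    """Element-wise product of two centered predictors (the 2-way term)."""
    return [a * b for a, b in zip(centered_a, centered_b)]


# Hypothetical clinician-level data; not study data.
cbo_id = ["A", "A", "B", "B"]
cbo_leadership = [3.0, 2.0, 1.5, 2.5]     # clinician ratings of their CBO
school_leadership = [2.5, 1.5, 3.0, 2.0]  # clinician ratings of their school

cbo_c = group_mean_center(cbo_leadership, cbo_id)
school_c = group_mean_center(school_leadership, cbo_id)
cbo_x_school = interaction_term(cbo_c, school_c)
```

After centering, each coefficient describes a within-CBO difference, and the product term captures how the association of one setting's factor with the outcome changes across levels of the other.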

Fig. 3 Example of positive/compensatory 2-way interaction effect between CBO versus school context factors (implementation leadership) on implementation outcomes (treatment integrity) in integrated mental healthcare. The predictors (context factors) were group mean centered. Black lines = smoothed regression lines for the three levels of the moderator (CBO-based implementation leadership). Solid line with green dots = high level of moderator (84th percentile), long-dash line with red dots = moderate level of moderator (50th percentile), short-dash lines with blue dots = low level of moderator (16th percentile).

Fig. 4 Example of the negative/suppressive 2-way interaction effect between CBO versus school context factors (general factor of Proficiency) on implementation outcomes (perceived acceptability) in integrated mental healthcare. The predictors (context factors) were group mean centered. Black lines = smoothed regression lines for the three levels of the moderator (CBO-based Proficiency). Solid line with green dots = high level of moderator (84th percentile), long-dash line with red dots = moderate level of moderator (50th percentile), short-dash lines with blue dots = low level of moderator (16th percentile).
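The three moderator levels used in these plots (16th, 50th, and 84th percentiles) and the slope of the school-based factor at each level can be sketched as follows. This assumes a linear model with a product term, in which the simple slope equals the school coefficient plus the interaction coefficient times the moderator value. The coefficients and data are hypothetical and are not taken from the study's tables.

```python
from statistics import quantiles


def moderator_levels(moderator):
    """Low, moderate, and high moderator values (16th, 50th, 84th percentiles)."""
    cuts = quantiles(moderator, n=100, method="inclusive")  # 99 cut points
    return {"low": cuts[15], "moderate": cuts[49], "high": cuts[83]}


def simple_slope(b_school, b_interaction, moderator_value):
    """Slope of the school factor on the outcome at a given moderator value."""
    return b_school + b_interaction * moderator_value


# Hypothetical centered CBO-based moderator and coefficients; not study results.
cbo_moderator = [-1.2, -0.6, -0.3, 0.0, 0.1, 0.4, 0.7, 0.9]
levels = moderator_levels(cbo_moderator)
slopes = {
    name: simple_slope(b_school=0.20, b_interaction=0.15, moderator_value=m)
    for name, m in levels.items()
}
```

With a positive interaction coefficient the slope rises from the low to the high moderator level, as in the positive example; with a negative coefficient the ordering reverses.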

Based on the published study protocol, our effect size estimates were expected to resemble the population-level estimates because our sampling frame approximated the SBMH clinician population in the two participating regions [47]. Hence, we focused on interpreting the effect sizes of context factors, instead of statistical significance, to inform practice and future studies (Table 3). We estimated partial Cohen's d of all fixed effects to compare across explanatory variables, interaction terms, and models [55]. To complement standardized effect sizes, unstandardized fixed effect coefficients were computed with the empirical Bayes method as generalizable effect estimates [56]. Given the multiple hypothesis tests, uncorrected p-values would likely inflate the Type I error rate. Among the MLMs for each implementation outcome, false discovery rate-corrected p-values (i.e., q-values) were calculated using the Benjamini–Hochberg method to control for potential false positives with a level of significance of 0.05 [57]. Analyses were performed with SPSS version 26 and HLM version 6.08. For precision and informativeness for future studies, key statistics were reported to three decimal places. We followed the STROBE checklist for result reporting (Additional file 3). We also visualized the coefficient estimates (e.g., ICCs, fixed effect sizes) to help readers navigate the large number of results (Additional file 4).
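The Benjamini–Hochberg correction mentioned above can be sketched in a few lines. This is a generic implementation of the standard step-up procedure, not the authors' software output; the function name and the p-values are hypothetical.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg q-values in the same order as p_values."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest
    order = sorted(range(m), key=lambda i: p_values[i])
    q_values = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotone q-values
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        q_values[i] = running_min
    return q_values


# Hypothetical p-values for the fixed effects in one outcome's models.
p = [0.01, 0.04, 0.03, 0.005]
q = benjamini_hochberg(p)
significant = [qi <= 0.05 for qi in q]
```

An effect is flagged when its q-value is at or below the chosen level (0.05 here), which controls the expected proportion of false discoveries within that family of tests.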

Results

RQ 1: Levels of inter-organizational alignments

We checked basic statistical assumptions and confirmed the sample adequacy for MLM (e.g., significant correlations among key variables; Table 2). The ICCs represent the degree of alignment in each organizational context factor between CBOs and schools, i.e., IOA. All ICCs reached statistical significance (Table 3 and Additional file 4). In general, the magnitudes of IOA were higher in general context factors (Proficiency: ICC = 0.585; Functionality: ICC = 0.282; Stress: ICC = 0.831) than those in the total scores of Implementation Climate (ICC = 0.342) and Leadership (ICC = 0.167). Regarding implementation context factors, the average level of IOA among the subscales of Implementation Climate (ICC = 0.283) exceeded that of Implementation Leadership (ICC = 0.174; for IOA of all subscales, see Table 3). Among the subscales of Implementation Climate, Selection for openness (ICC = 0.469) and Focus on EBP (ICC = 0.390) showed the highest levels of IOA while Educational support for EBP showed the lowest level (ICC = 0.016). Among the subscales of Implementation Leadership, Proactive Leadership (ICC = 0.394) showed the highest level of IOA while Perseverant Leadership showed the lowest (ICC = 0.030).

The ICCs suggest that the context factors tested in this study did not perfectly align between CBOs and schools. Hence, we followed up with t-tests to probe the significance of the between-setting mean difference in these context factors. The results indicated that the levels of most context factors (total and subscale scores) in CBOs were larger than those in schools, with some of the mean differences reaching statistical significance (e.g., Implementation Climate and Leadership, Stress; Table 3). We compared the ratios of mean over the maximum score for each context factor between schools and CBOs because ICCs cannot reveal whether the levels of a context factor are simultaneously high or low in both settings (Table 3). On average, the levels of general context factors exceeded those of Implementation Leadership, followed by Implementation Climate. Moreover, the levels of Stress and Implementation Leadership in schools exceeded those in CBOs. Conversely, the levels of Implementation Climate, Proficiency, and Functionality in CBOs exceeded those in schools.

Multilevel Models

We reported the fixed effect sizes of implementation context factors and their interaction terms in Tables 4, 5, 6, 7, 8, 9, 10, 11, and 12. For reporting and interpretation, we focused on the levels of IOA in each context factor, as well as the clinically meaningful patterns in the effect size estimates. In systematic order, we compared the effect size and directions of the CBO versus school context factors based on RQs, types of context factors (i.e., general vs. implementation), and implementation outcomes. Theoretically, the results of the standalone main effect MLMs were likely more robust and better powered than the interaction MLMs with more complex configurations because interaction effects almost by definition tend to be small.

RQ 2: Standalone main effect MLMs

Several patterns surfaced from the results of RQ2. We compared the sizes and directions of the standalone associations (i.e., the fixed effect sizes) between setting-specific context factors and implementation outcomes. Additional file 4 provides a visual aid to compare the results across all models. Regarding the size of associations, in both CBOs and schools, the effect sizes of Implementation Climate and Leadership were larger than those of the general context factors (Proficiency, Stress, and Functionality) on most implementation outcomes. For instance, compared to Stress, a difference of one standard deviation (SD) in implementation climate was associated with a bigger difference (in SDs) in Appropriateness in either school or CBO. Between implementation context factors, the effect sizes of Implementation Climate exceeded those of Implementation Leadership for most implementation outcomes, except for Feasibility and Attitudes toward EBPs (Tables 10 and 11). Between settings, the effect sizes of context factors in CBOs (general and implementation) were larger than those in schools for most implementation outcomes, except for Acceptability, Feasibility, and Attitudes toward EBPs (Tables 8, 10, and 11).

There were mixed findings about the directions of the associations between setting-specific context factors and implementation outcomes (Additional file 4). For instance, in CBOs, the implementation context factors showed mostly positive associations (e.g., Treatment Integrity; Table 4). In schools, the implementation context factors showed positive associations with some implementation outcomes (e.g., Acceptability; Table 8) but negative associations with others (e.g., Improved Access; Table 7). Moreover, for most implementation outcomes, general context factors in CBOs showed associations in the opposite direction from those of the same factors in schools. For example, Treatment Integrity, Acceptability, and Appropriateness were positively associated with Proficiency in schools but negatively associated with Proficiency in CBOs (Tables 4, 8, 9).

RQ 3: 2-Way interaction effects of context factors between settings

Due to the limited power, we did not identify any significant 2-way interaction effects of context factors between CBOs and schools (i.e., IOA). By comparing the size and direction of the effect estimates, we identified three patterns based on the types of context factors and implementation outcomes. First, the interaction effects of general context factors between CBOs and schools were larger than those of implementation context factors on most implementation outcomes, except for Treatment integrity and Implementation citizenship behaviors (Tables 4 and 12). Second, for Appropriateness and Feasibility (Tables 9 and 10), the interaction effects of Implementation Leadership between CBOs and schools were smaller than those of Implementation Climate. But the opposite was observed for other implementation outcomes (i.e., the interaction effects of Implementation Leadership were larger than those of Implementation Climate).

Regarding the directions of the interaction effects of context factors between CBO and school, there were mixed findings based on the type of context factors and implementation outcomes. On Treatment Integrity, Acceptability, Appropriateness, and Feasibility, Implementation Leadership showed a positive interaction effect, but Implementation Climate showed a negative interaction effect. On the other hand, both Implementation Leadership and Climate showed negative interaction effects on the three implementation outcomes specific to integrated SBMH (i.e., Support for Teachers and Students, Increased Mental Health Programming, Improved Access for Students and Families; Tables 5, 6, 7). However, all of these interactions should be replicated given the small sample size.
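For readers less familiar with interaction models, the following is a minimal sketch of the general form that a 2-way interaction MLM of this kind can take. The symbols, the nesting index, and the single random intercept are illustrative assumptions and are not taken from the fitted models reported in the tables.

```latex
% Illustrative form only: i indexes respondents, j indexes the (assumed) clustering unit.
% CBO and School denote standardized scores on the same context factor in each setting.
\begin{aligned}
Y_{ij} &= \gamma_{0} + \gamma_{1}\,\mathrm{CBO}_{ij} + \gamma_{2}\,\mathrm{School}_{ij}
          + \gamma_{3}\,\bigl(\mathrm{CBO}_{ij} \times \mathrm{School}_{ij}\bigr) + u_{j} + e_{ij},\\[4pt]
\frac{\partial Y_{ij}}{\partial\,\mathrm{School}_{ij}} &= \gamma_{2} + \gamma_{3}\,\mathrm{CBO}_{ij}.
\end{aligned}
```

Under this form, the sign of \gamma_{3} determines whether the association of the school context factor with the outcome strengthens (positive) or weakens (negative) as the CBO context factor increases, which is how the positive and negative interaction effects above can be read.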

Discussion

Successful implementation of EBPs in integrated mental healthcare requires synergistic efforts of service providers from different organizations and adequate alignment of the implementation contexts of these organizations (i.e., IOA; [47]). To date, little is known about how alignment in implementation context factors between multiple organizations influences EBP implementation in integrated care. This is the first quantitative study to narrow this knowledge gap to inform future investigation and practice about EBP implementation in an integrated mental healthcare setting for children and adolescents (e.g., integrated SBMH). Our findings offered preliminary evidence that (a) supported the importance of IOA in context factors between the overlapping organizations in integrated SBMH, and (b) shed light on the differential influences of IOA on EBP implementation in integrated SBMH depending on the types of context factors (general vs. implementation) and implementation outcomes. These findings could serve as an empirical foundation for future large-scale studies, particularly with regard to study designs and sample planning (e.g., power analysis, starting values for coefficient estimation; [58]) to power more in-depth analyses of the mechanisms through which IOA influences implementation in integrated care (see Limitations and future directions below).

Levels of IOA in organizational context factors

Our findings revealed several intriguing patterns in the levels of IOA in context factors. First, the average levels of IOA between schools and CBOs were higher in general context factors than in implementation context factors. This is consistent with the follow-up t-tests, which revealed smaller discrepancies (i.e., higher IOA) between CBOs and schools in the levels of general context factors than in those of implementation context factors. These findings may be attributable to the different nature of the two service settings. For instance, schools commonly prioritize academics rather than the implementation of EBPs for students' mental health, and SBMH clinicians commonly hold more varied jobs and roles in schools than in CBOs. These differences in organizational priorities and job duties could lead to clinicians’ more mixed experiences of school-based implementation climate, which was reflected in the larger variability (see the standard deviations in Table 3) in their reported context factors in schools than in CBOs. Conversely, clinicians in many CBOs were aware that their organizations prioritized and valued EBP implementation, which may have led to their consistent experience of CBO-based Implementation Climate. This contrast amplified the between-organization discrepancy (i.e., low IOA in Implementation Climate). On the other hand, general context factors represent common social contexts that are likely more pervasive across CBOs and schools than implementation context factors. For instance, Stress showed the highest level of IOA between schools and CBOs, which was consistent with the literature on pervasive staff burnout in both settings [59].

Furthermore, we found that the levels of general context factors exceeded those of implementation context factors in both CBOs and schools. Taking IOA and levels together, the general context factors in CBOs and schools appeared to be both better aligned and higher than the implementation context factors. These results indicate that, compared to the already well-aligned and adequate general context factors, there is more room and need to improve and align the implementation context factors between the overlapping settings in integrated care. Our findings suggest that leaders of integrated care (e.g., SBMH) may strategically allocate resources (e.g., dedicated funding and staffing, leadership meetings between school and CBO, effective organizational communication technology; [60,61,62]) to improve both the alignment and levels of context factors to improve EBP implementation. Furthermore, our findings suggest that leaders should place differential emphases on certain context factors regarding type (general vs. implementation), level of discrepancies (between-individual vs. between-organizational differences), and characteristics of organizations (e.g., schools vs. CBOs). These considerations can inform future research about the differential mechanisms through which the IOA and standalone levels of general and implementation context factors influence implementation outcomes in integrated care. For instance, the level of implementation climate in a school or CBO may influence implementation outcomes only to a certain extent before its standalone effect plateaus, at which point IOA in implementation climate becomes more important because it can introduce a multiplicative interaction effect. Relatedly, future research should explore the optimal cutoffs for the IOA and levels of different context factors to guide data-based decision-making in resource allocation and selection of implementation strategies in integrated care settings. This type of research will require a longitudinal design with a large sample size to enable predictive modeling using ROC analysis and response surface analysis [63].

Standalone effects of CBO versus school context factors

For most implementation outcomes, the effect sizes of the main effects of implementation context factors (e.g., Implementation Climate) exceeded those of general factors (e.g., Proficiency) in both CBOs and schools. This finding corroborates the existing body of research showing that implementation context factors have stronger associations with individual-level behavioral and perceptual implementation outcomes (e.g., Treatment Integrity, Acceptability) than general context factors do, a pattern that holds across service settings in integrated care [64]. It also suggests that leaders of both CBOs and schools in integrated SBMH should adopt implementation strategies that support cross-sector collaboration and foster a positive implementation context in their overlapping organizations [38]. Inter-organizational collaboration strategies might include joint supervision and training or shared data and decision-making [23, 65], which can meet the needs of integrated care settings (e.g., integrated SBMH) for quality and strategic inter-organizational collaboration given their unique features, such as dual administrative relationships and overlapping organizations [47]. For instance, these strategies could promote the coordination and alignment surrounding service planning, programming, and provision, which may indirectly enhance the IOA in implementation context factors (e.g., implementation leadership and climate) between the overlapping settings in integrated care [47, 66, 67].

Moreover, we found that implementation context factors in CBOs showed stronger associations with implementation outcomes compared to the same factors in schools. This implies that SBMH clinicians' behaviors and cognitions related to EBP implementation (e.g., treatment integrity, attitudes toward EBPs) are potentially influenced more by the implementation context in their CBOs than in schools. For example, clinicians’ knowledge about and competency in EBPs (two items measuring treatment integrity) were influenced more by their employers (CBOs), who provide training, supervision, and salary, than by their physical setting (schools), where they provide services. This finding has implications for leaders of CBOs who embed their clinicians in other organizations for integrated care. For instance, leadership-focused implementation strategies (e.g., Leadership and Organizational Change for Implementation, LOCI; [68]) could be used at CBOs to improve their implementation context factors, which are more closely related to the implementation outcomes of integrated care than those in the actual service provision setting (e.g., schools). For future research, our finding highlights the importance of simultaneously examining context factors of the overlapping organizations involved in integrated care [23, 47]. Many prior studies have used a siloed approach to examine organizations separately, which limited their capacity to delineate the collaborative, differential, and interactive features of context factors in the overlapping organizations in integrated care [69, 70].

Two-way interactions between CBO and school context factors

Compared to implementation context factors, general factors (e.g., Stress) in schools and CBOs demonstrated larger 2-way interaction effects in their associations with implementation outcomes. This implies that the effects of school and CBO general context factors depended on each other in explaining the variability in common implementation outcomes in integrated care. These results are consistent with our earlier finding that the levels of IOA between CBOs and schools were higher in general factors than in implementation factors. Due to their different organizational nature and priorities, low levels of IOA in implementation context factors were observed between CBOs and schools. The low IOA (i.e., a large between-organization discrepancy) in implementation context factors may in turn have restricted their interaction effects on individuals' implementation behaviors. Leaders of integrated SBMH can leverage this finding by prioritizing and coordinating their efforts to deliberately improve alignment between CBOs and schools. For instance, at the exploration stage of implementing EBPs in integrated care, leaders can build their inter-organizational communication to run a collaborative campaign in their organizations advocating for the significance of and rewards for implementing EBPs using common messages [71, 72].

Across different implementation outcomes, the mixed directions of the 2-way interactions implied two types of interdependence (e.g., Figs. 3 and 4; Additional file 4). The first type is the compensatory effect, which was mostly found for clinicians' implementation behaviors (e.g., treatment integrity, implementation citizenship behaviors). For instance, the highest levels of Treatment Integrity were found when there were high levels of Implementation Leadership in both settings (CBOs and schools), which aligned with our hypothesis (Fig. 3). The second type is the suppressive effect, which was found for Acceptability and the implementation outcomes specific to integrated SBMH (e.g., Increased Mental Health Programming). For instance, levels of Acceptability were highest when levels of Proficiency were high in schools but low in CBOs (Fig. 4). This finding differed from our theoretical hypothesis, based on prior literature, that implementation outcomes in integrated SBMH would be highest when the levels of context factors in both CBO and school are high. The fact that the 2-way interaction effects showed a mix of positive and negative directions implies that the nature of the interdependence of context factors between organizations in integrated care may be inconsistent and nonlinear, which is not in line with theoretical predictions. Hence, future research should replicate this study with a large and nationally representative sample (i.e., for higher precision in estimation).
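As a rough illustration of how the sign of an interaction term can produce these two patterns, the sketch below computes model-implied outcomes at low and high (minus and plus one SD) levels of a context factor in each setting. All coefficient values and function names are hypothetical and chosen only for illustration; they are not estimates from this study.

```python
# Illustrative only: hypothetical standardized coefficients, not study estimates.

def predicted_outcome(cbo, school, b0, b_cbo, b_school, b_inter):
    """Fixed-effect prediction from a model with a CBO x school interaction term."""
    return b0 + b_cbo * cbo + b_school * school + b_inter * cbo * school


def corner_predictions(b0, b_cbo, b_school, b_inter, low=-1.0, high=1.0):
    """Predicted outcome at each low/high combination, keyed as (CBO level, school level)."""
    levels = {"low": low, "high": high}
    return {
        (cbo_name, school_name): predicted_outcome(
            cbo, school, b0, b_cbo, b_school, b_inter
        )
        for cbo_name, cbo in levels.items()
        for school_name, school in levels.items()
    }


def best_profile(predictions):
    """Return the (CBO level, school level) pair with the highest predicted outcome."""
    return max(predictions, key=predictions.get)


# Positive interaction with positive main effects in both settings:
# the outcome is highest when the factor is high in both settings.
positive = corner_predictions(b0=0.0, b_cbo=0.2, b_school=0.2, b_inter=0.3)

# Negative interaction with opposite-signed main effects:
# the outcome is highest when the factor is low in the CBO but high in the school.
negative = corner_predictions(b0=0.0, b_cbo=-0.2, b_school=0.2, b_inter=-0.3)

if __name__ == "__main__":
    print("positive interaction, best (CBO, school):", best_profile(positive))
    print("negative interaction, best (CBO, school):", best_profile(negative))
```

Plotting the four corner values for each fitted model is one simple way to check which profile of CBO and school levels is associated with the most favorable outcome.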

In contrast, an alternative perspective to IOA may be relevant given the varying levels of IOA in CBO- and school-based context factors and the mixed directions in the associations between IOA and implementation outcomes in integrated SBMH. The extent to which an implementation context factor in one organization (e.g., CBO) is complementary to that in its partner organization involved in integrated care (e.g., school), which we refer to as inter-organizational complementarity (i.e., a special type of inconsistent profile of IOA; Fig. 2), may account for the variance unexplainable by IOA alone in the outcomes of EBP implementation in integrated care. Many past studies have focused on inter-organizational coordination across mental health service sectors (e.g., [73, 74]). However, they have yielded mixed findings, with some studies supporting the positive effect of coordination on access and outcomes of EBP implementation [75, 76] and some studies revealing a negative effect of coordination on service quality [77]. Hence, some have argued that, in addition to optimizing coordination between collaborative organizations, there may be value in recognizing the importance of the diverse, unique, and redundant features and services from standalone organizations that complement each other (e.g., families may appreciate the similar services provided by different organizations as backup options based on their specific needs) [77]. We hypothesize that, depending on the type, needs, and characteristics of integrated care (e.g., integrated SBMH), adequate levels of inter-organizational complementarity may be preferable for certain context factors while IOA may be preferable for other context factors. For instance, an organization with high levels of stress (a dimension of molar organizational climate) may benefit from collaborating with another organization with low levels of stress (i.e., to obtain a high level of inter-organizational complementarity in stress). Conversely, to promote the uptake of new EBPs, the multiple organizations in integrated care need to align their levels of Implementation Climate to an adequate extent (i.e., to obtain a high level of IOA in Implementation Climate). Future research should extend our findings to explore the conditions under which IOA or inter-organizational complementarity is preferred to improve EBP implementation in the overlapping organizations in integrated care.

Limitations and future directions

Several limitations exist in this exploratory study that warrant cautious interpretation of the findings and future research. First, the sample was restricted due to the limited number of integrated SBMH settings available in the participating regions. The models were underpowered by design, so we focused on interpreting effect size estimates instead of making statistical inferences [47]. Given the unique organizational structure in integrated SBMH (e.g., one CBO hosts multiple clinicians, each of whom serves a single school), future studies can extend this work by recruiting nationally representative samples of integrated SBMH settings. Doing so will enable (a) the inclusion of more context factors relevant to EBP implementation in integrated care settings (e.g., alignment in size, structure, service goals), (b) inferential statistics, and (c) advanced modeling (e.g., polynomial regression with a response surface analytic approach; [78]) that are generalizable to other regions and integrated care settings. Moreover, response surface analysis can yield an in-depth understanding of the nonlinear alignment effects of different IOA profiles (e.g., effects of favorable IOA when implementation climate is high in both organizations) and enable a visual examination of the alignment effects of IOA in various context factors [79]. These follow-up studies can extend our findings to further explore how different combinations of levels and alignments of context factors (i.e., IOA profiles) influence implementation outcomes in integrated care.
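To make the suggested approach concrete, the following sketch shows the standard second-order polynomial regression that underlies response surface analysis. The mapping of X and Y to CBO and school levels of a context factor and of Z to an implementation outcome is our illustrative assumption; no such model was fitted in this study.

```latex
% X = CBO level of a context factor, Y = school level of the same factor (both centered),
% Z = an implementation outcome. Illustrative mapping only.
\begin{aligned}
Z &= b_{0} + b_{1}X + b_{2}Y + b_{3}X^{2} + b_{4}XY + b_{5}Y^{2} + e,\\[4pt]
\text{line of congruence } (X = Y):\quad
Z &= b_{0} + (b_{1} + b_{2})\,X + (b_{3} + b_{4} + b_{5})\,X^{2},\\[4pt]
\text{line of incongruence } (X = -Y):\quad
Z &= b_{0} + (b_{1} - b_{2})\,X + (b_{3} - b_{4} + b_{5})\,X^{2}.
\end{aligned}
```

The slope and curvature along the line of congruence describe how the outcome changes as both organizations move from low to high on an aligned factor, whereas the slope and curvature along the line of incongruence describe how the outcome changes with the direction and degree of misalignment.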

Second, due to the limited sample size, this study took a univariate approach to model each implementation outcome separately. However, the moderate to significant correlations among the implementation outcomes may lead to misestimated standard errors. Future research with multivariate MLMs (e.g., simultaneously modeling the linear combination of multiple implementation outcomes) may yield more precise effect estimates [80]. For instance, one can delve into the multidimensional nature of treatment integrity by modeling the four individual items/dimensions simultaneously as a vector of outcome variables (Fidelity, Competence, Knowledge, and Adherence; [81]). Third, we used a cross-sectional design given the exploratory aims of this study. Hence, we can only build explanatory models instead of predictive ones. Future studies should use our findings to design longitudinal studies to predict how changes in IOA in the context factors of multiple organizations can influence subsequent implementation outcomes in integrated care. Relatedly, variation in the timing of the organizational partnership in integrated care may necessitate the activation of different mechanisms through which IOA in context factors influences implementation outcomes specific to a certain implementation phase. Longitudinal designs can help address this type of research question. For instance, at the early stages of implementation of integrated SBMH, schools or CBOs may selectively choose partner organizations based on their geographic distance, similarities in organizational culture or climate, prior or existing partnerships, and organizational relationships. Hence, inter-organizational homophily may contribute to the initial level of IOA in the newly formed partnership of integrated SBMH [82]. Then, ongoing inter-organizational communication and collaboration between schools and CBOs may increase the levels of IOA [72]. For example, a school leader may learn from CBO collaborators about strategic leadership behaviors to promote the use of EBPs in their school (i.e., the level of strategic leadership in a school becomes assimilated to the level of strategic leadership in its partner CBO throughout the process of integrated SBMH).

Conclusions

Successful EBP implementation in integrated mental healthcare for children and adolescents requires proper alignment in the implementation contexts between organizations. This study is the first to quantitatively explore and illustrate a nascent construct, IOA, in organizational context factors in integrated mental healthcare. Our findings shed light on how setting-specific context factors were synergistically associated with key implementation outcomes for EBPs targeting children and adolescents in integrated care. We hope this study can inform leaders and researchers who work in integrated care about the importance of IOA and how to select specific context factors for their implementation improvement efforts.

Availability of data and materials

The de-identified datasets can be requested from the authors.

Abbreviations

- CBO: Community-based organization
- EBP: Evidence-based practice
- EPIS: Exploration, Preparation, Implementation, Sustainment
- ICS: Implementation Climate Scale
- ILS: Implementation Leadership Scale
- IOA: Inter-organizational alignment
- MLM: Multilevel model
- OSC: Organizational social context
- OIC: Organizational implementation context
- SBMH: School-based mental health

References

Howell EM. Access to children's mental health services under Medicaid and SCHIP. Washington, DC, USA: The Urban Institute, B(B-60); 2004. Retrieved from https://policycommons.net/artifacts/636095/access-to-childrens-mental-health-services-under-medicaid-and-schip/1617379/ on 09 May 2024. CID: 20.500.12592/1c6v11.

Kortenkamp K. The well-being of children involved with the child welfare system: a national overview. Washington, DC, USA: The Urban Institute, B(B-43); 2002. Retrieved from https://policycommons.net/artifacts/636899/the-well-being-of-children-involved-with-the-child-welfare-system/1618208/ on 09 May 2024. CID: 20.500.12592/9077qm.

Burns BJ, Phillips SD, Wagner HR, Barth RP, Kolko DJ, Campbell Y, Landsverk J. Mental health need and access to mental health services by youths involved with child welfare: a national survey. J Am Acad Child Adolesc Psychiatry. 2004;43(8):960–70.

Asarnow JR, Rozenman M, Wiblin J, Zeltzer L. Integrated medical-behavioral care compared with usual primary care for child and adolescent behavioral health: a meta-analysis. JAMA Pediatr. 2015;169:929–37.

Butler M, Kane RL, McAlpine D, Kathol RG, Fu SS, Hagedorn H, et al. Integration of mental health/substance abuse and primary care. Evid Rep Technol Assess. 2008;173:1–362.

Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm Policy Ment Health. 2011;38:4–23.

Croft B, Parish SL. Care integration in the patient protection and affordable care act: implications for behavioral health. Adm Policy Ment Health. 2013;40:258–63.

World Health Organization. WHO global strategy on people-centred and integrated health services: interim report. Geneva, Switzerland: World Health Organization; 2015.

Beidas RS, Kendall PC. Training therapists in evidence-based practice: a critical review of studies from a systems-contextual perspective. Clin Psychol Sci Pract. 2010;17:1–30.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

Glisson C, Landsverk J, Schoenwald S, Kelleher K, Hoagwood KE, Mayberg S, et al. Assessing the organizational social context (OSC) of mental health services: implications for research and practice. Adm Policy Ment Health. 2008;35:98.

Pullmann MD, Bruns EJ, Daly BP, Sander MA. Improving the evaluation and impact of mental health and other supportive school-based programs on students’ academic outcomes. Adv School Ment Health Promot. 2013;6(4):226–30.

Foster S, Rollefson M, Doksum T, Noonan D, Robinson G, Teich J. School mental health services in the United States, 2002–2003 (DHHS Publication No.(SMA) 05-4068). Center for Mental Health Services, Substance Abuse and Mental Health Services Administration. https://files.eric.ed.gov/fulltext/ED499056.pdf. 2005.

Skowyra K, Cocozza JJ. A blueprint for change: improving the system response to youth with mental health needs involved with the juvenile justice system. NY: National Center for Mental Health and Juvenile Justice Delmar; 2006.

Fazel M, Hoagwood K, Stephan S, Ford T. Mental health interventions in schools in high-income countries. Lancet Psychiatry. 2014;1:377–87.

Ehrhart MG, Aarons GA, Farahnak LR. Assessing the organizational context for EBP implementation: the development and validity testing of the implementation climate scale (ICS). Implement Sci. 2014;9:157.

Palinkas LA, Fuentes D, Finno M, Garcia AR, Holloway IW, Chamberlain P. Inter-organizational collaboration in the implementation of evidence-based practices among public agencies serving abused and neglected youth. Adm Policy Ment Health. 2014;41:74–85.

Elrashidi MY, Mohammed K, Bora PR, Haydour Q, Farah W, DeJesus R, Ebbert JO. Co-located specialty care within primary care practice settings: a systematic review and meta-analysis. Healthcare. 2018;6(1):52–66.

Rones M, Hoagwood K. School-based mental health services: a research review. Clin Child Fam Psychol Rev. 2000;3:223–41.

Owens JS, Lyon AR, Brandt NE, Warner CM, Nadeem E, Spiel C, et al. Implementation science in school mental health: key constructs in a developing research agenda. Sch Ment Heal. 2014;6:99–111.

Eber L, Weist MD, Barrett S. An introduction to the interconnected systems framework. In: Advancing education effectiveness: an interconnected systems framework for positive behavioral interventions and supports (PBIS) and school mental health. Eugene, Oregon: University of Oregon Press; 2013. p. 3–17.

Forman SG, Fagley NS, Chu BC, Walkup JT. Factors influencing school psychologists’ “willingness to implement” evidence-based interventions. Sch Ment Heal. 2012;4:207–18.

Bunger AC, Chuang E, Girth AM, Lancaster KE, Smith R, Phillips RJ, Martin J, Gadel F, Willauer T, Himmeger MJ, Millisor J. Specifying cross-system collaboration strategies for implementation: a multi-site qualitative study with child welfare and behavioral health organizations. Implement Sci. 2024;19(1):13.

Aarons GA, Ehrhart MG, Farahnak LR, Hurlburt MS. Leadership and organizational change for implementation (LOCI): a randomized mixed method pilot study of a leadership and organization development intervention for evidence-based practice implementation. Implement Sci. 2015;10:11.

Williams NJ, Ehrhart MG, Aarons GA, et al. Linking molar organizational climate and strategic implementation climate to clinicians’ use of evidence-based psychotherapy techniques: cross-sectional and lagged analyses from a 2-year observational study. Implement Sci. 2018;13:85.

Williams NJ, Wolk CB, Becker-Haimes EM, et al. Testing a theory of strategic implementation leadership, implementation climate, and clinicians’ use of evidence-based practice: a 5-year panel analysis. Implement Sci. 2020;15:10.

Glisson C, Schoenwald SK, Hemmelgarn A, Green P, Dukes D, Armstrong KS, et al. Randomized trial of MST and ARC in a two-level evidence-based treatment implementation strategy. J Consult Clin Psychol. 2010;78:537–50.

Beidas RS, Edmunds J, Ditty M, Watkins J, Walsh L, Marcus S, et al. Are inner context factors related to implementation outcomes in cognitive-behavioral therapy for youth anxiety? Adm Policy Ment Health. 2014;41:788–99.

Guerrero EG, He A, Kim A, Aarons GA. Organizational implementation of evidence-based substance abuse treatment in racial and ethnic minority communities. Adm Policy Ment Health. 2014;41:737–49.

Zhang Y, Cook CR, Fallon L, Corbin C, Ehrhart M, Brown E, Locke J, Lyon A. The Interaction between general and strategic leadership and climate on their cross-level associations with implementer attitudes toward universal prevention programs for youth mental health: a multilevel cross-sectional study. Adm Policy Mental Health. 2022. https://doi.org/10.1007/s10488-022-01248-5.

Aarons GA, Palinkas LA. Implementation of evidence-based practice in child welfare: service provider perspectives. Adm Policy Mental Health. 2007;34:411–9.

Grol R, Grimshaw J. From best evidence to best practice: effective implementation of change in patients’ care. Lancet. 2003;362(9391):1225–30.

Nelson TD, Steele RG. Predictors of practitioner self-reported use of evidence-based practices: practitioner training, clinical setting, and attitudes toward research. Adm Policy Mental Health. 2007;34:319–30.

Nelson TD, Steele RG, Mize JA. Practitioner attitudes toward evidence-based practice: themes and challenges. Adm Policy Mental Health. 2006;33:398–409.

Aarons G, Ehrhart M, Farahnak L, Sklar M. The role of leadership in creating a strategic climate for evidence-based practice implementation and sustainment in systems and organizations. Front Public Health Serv Syst Res. 2014;3:3.

Aarons GA, Fettes DL, Sommerfeld DH, Palinkas LA. Mixed methods for implementation research: application to evidence-based practice implementation and staff turnover in community-based organizations providing child welfare services. Child Maltreat. 2012;17:67–79.

Sobo EJ, Bowman C, Gifford AL. Behind the scenes in health care improvement: the complex structures and emergent strategies of implementation science. Soc Sci Med. 2008;67:1530–40.

Aarons GA, Ehrhart MG, Farahnak LR, Sklar M. Aligning leadership across systems and organizations to develop a strategic climate for evidence-based practice implementation. Annu Rev Public Health. 2014;35:255–74.

Klein KJ, Sorra JS. The challenge of innovation implementation. Acad Manag Rev. 1996;21:1055–80.

Weiner BJ, Belden CM, Bergmire DM, Johnston M. The meaning and measurement of implementation climate. Implement Sci. 2011;6:78.

Ehrhart MG, Schneider B, Macey WH. Organizational Climate and Culture: An Introduction to Theory, Research, and Practice. London: Routledge; 2013.

Zohar D, Luria G. A multilevel model of safety climate: cross-level relationships between organization and group-level climates. J Appl Psychol. 2005;90:616–28.

Weinberg LA, Zetlin A, Shea NM. Removing barriers to educating children in foster care through interagency collaboration: a seven county multiple-case study. Child Welf Arlingt. 2009;88:77–111.

Fazel M, Hoagwood K, Stephan S, Ford T. Mental health interventions in schools in high-income countries. Lancet Psychiatry. 2014;1:377–87.

Lyon AR, Ludwig K, Romano E, Koltracht J, Stoep AV, McCauley E. Using modular psychotherapy in school mental health: provider perspectives on intervention-setting fit. J Clin Child Adolesc Psychol. 2014;43:890–901.

Unützer J, Chan Y-F, Hafer E, Knaster J, Shields A, Powers D, et al. Quality improvement with pay-for-performance incentives in integrated behavioral health care. Am J Public Health. 2012;102:e41–5.

Lyon AR, Whitaker K, Locke J, Cook CR, King KM, Duong M, Davis C, Weist MD, Ehrhart MG, Aarons GA. The impact of inter-organizational alignment (IOA) on implementation outcomes: evaluating unique and shared organizational influences in education sector mental health. Implement Sci. 2018;13:1–1.

Glisson C, Green P, Williams NJ. Assessing the organizational social context (OSC) of child welfare systems: implications for research and practice. Child Abuse Negl. 2012;36:621–32.

Ehrhart MG, Aarons GA, Farahnak LR. Going above and beyond for implementation: the development and validity testing of the implementation citizenship behavior scale (ICBS). Implement Sci. 2015;10:65.

Weiner BJ, Lewis CC, Stanick C, Powell BJ, Dorsey CN, Clary AS, Boynton MH, Halko H. Psychometric assessment of three newly developed implementation outcome measures. Implement Sci. 2017;12:1–2.

Mellin EA, Taylor L, Weist MD. The expanded school mental health collaboration instrument [school version]: development and initial psychometrics. Sch Ment Heal. 2014;6:151–62.

Cook CR, Davis C, Brown EC, Locke J, Ehrhart MG, Aarons GA, Lyon AR. Confirmatory factor analysis of the evidence-based practice attitudes scale with school-based behavioral health consultants. Implement Sci. 2018;13(1):1–8.

Lyon AR, Cook CR, Brown EC, Locke J, Davis C, Ehrhart M, Aarons GA. Assessing organizational implementation context in the education sector: confirmatory factor analysis of measures of implementation leadership, climate, and citizenship. Implement Sci. 2018;13:1–4.

Glisson C, Landsverk J, Schoenwald S, Kelleher K, Hoagwood KE, Mayberg S, et al. Assessing the organizational social context (OSC) of mental health services: Implications for research and practice. Adm Policy Mental Health. 2008;35(1–2):98.

Hoffman L, Walters RW. Catching up on multilevel modeling. Annu Rev Psychol. 2022;73:659–89.

Wang Y, Xiao S, Lu Z. An efficient method based on Bayes’ theorem to estimate the failure-probability-based sensitivity measure. Mech Syst Signal Process. 2019;115:607–20.

Wason JM, Robertson DS. Controlling type I error rates in multi-arm clinical trials: a case for the false discovery rate. Pharm Stat. 2021;20(1):109–16.

Lee EC, Whitehead AL, Jacques RM, Julious SA. The statistical interpretation of pilot trials: should significance thresholds be reconsidered? BMC Med Res Methodol. 2014;14(1):41. https://doi.org/10.1186/1471-2288-14-41.

O’Brennan L, Pas E, Bradshaw C. Multilevel examination of burnout among high school staff: Importance of staff and school factors. Sch Psychol Rev. 2017;46(2):165–76.

Zhang C, Xue L, Dhaliwal J. Alignments between the depth and breadth of inter-organizational systems deployment and their impact on firm performance. Inform Manage. 2016;53(1):79–90.

Rey-Marston M, Neely A. Beyond words: testing alignment among inter-organizational performance measures. Meas Bus Excell. 2010;14(1):19–27.

Aarons GA, Ehrhart MG, Farahnak LR, Hurlburt MS. Leadership and organizational change for implementation (LOCI): a randomized mixed method pilot study of a leadership and organization development intervention for evidence-based practice implementation. Implement Sci. 2015;10(1):1–12.

Roelen CA, Stapelfeldt CM, Heymans MW, van Rhenen W, Labriola M, Nielsen CV, Bültmann U, Jensen C. Cross-national validation of prognostic models predicting sickness absence and the added value of work environment variables. J Occup Rehabil. 2015;25:279–87.

Zhang Y, Cook C, Fallon L, Corbin C, Ehrhart M, Brown E, Locke J, Lyon A. The interaction between general and strategic leadership and climate on their multilevel associations with implementer attitudes toward universal prevention programs for youth mental health: a cross-sectional study. Adm Policy Mental Health. 2023;50(3):427–49.

Kim B, Sullivan JL, Brown ME, Connolly SL, Spitzer EG, Bailey HM, Sippel LM, Weaver K, Miller CJ. Sustaining the collaborative chronic care model in outpatient mental health: a matrixed multiple case study. Implement Sci. 2024;19(1):16.

Bolland JM, Wilson JV. Three faces of integrative coordination: a model of interorganizational relations in community-based health and human services. Health Serv Res. 1994;29:341–66.

Provan KG, Milward HB. A preliminary theory of interorganizational network effectiveness: a comparative study of four community mental health systems. Adm Sci Q. 1995;40:1–33.

Aarons GA, Ehrhart MG, Moullin JC, Torres EM, Green AE. Testing the leadership and organizational change for implementation (LOCI) intervention in substance abuse treatment: a cluster randomized trial study protocol. Implement Sci. 2017;12(1):29.

Adelman H, Taylor L. Moving prevention from the fringes into the fabric of school improvement. J Educ Psychol Consult. 2000;11:7–36.

Jennings J, Pearson G, Harris M. Implementing and maintaining school-based mental health services in a large, urban school district. J Sch Health. 2000;48:201.

Monge PR, Fulk J, Kalman ME, Flanagin AJ, Parnassa C, Rumsey S. Production of collective action in alliance-based interorganizational communication and information systems. Organ Sci. 1998;9(3):411–33.

Shumate M, Atouba Y, Cooper KR, Pilny A. Interorganizational communication. In: The International Encyclopedia of Organizational Communication. 2017. p. 1–24.

Stroul BA, Friedman RM. A system of care for severely emotionally disturbed children and youth. 1986. https://eric.ed.gov/?id=ED330167. Accessed 7 Dec 2017.

Jones N, Thomas P, Rudd L. Collaborating for mental health services in Wales: a process evaluation. Public Adm. 2004;82:109–21.

Chuang E, Wells R. The role of inter-agency collaboration in facilitating receipt of behavioral health services for youth involved with child welfare and juvenile justice. Child Youth Serv Rev. 2010;32:1814–22.

Cottrell D, Lucey D, Porter I, Walker D. Joint working between child and adolescent mental health services and the department of social services: the Leeds model. Clin Child Psychol Psychiatry. 2000;5:481–9.

Glisson C, Hemmelgarn A. The effects of organizational climate and interorganizational coordination on the quality and outcomes of children’s service systems. Child Abuse Negl. 1998;22:401–21.

Shanock LR, Baran BE, Gentry WA, Pattison SC, Heggestad ED. Polynomial regression with response surface analysis: a powerful approach for examining moderation and overcoming limitations of difference scores. J Bus Psychol. 2010;25:543–54.

Tsai CY, Kim J, Jin F, Jun M, Cheong M, Yammarino FJ. Polynomial regression analysis and response surface methodology in leadership research. Leadersh Q. 2022;33(1):101592.

Park R, et al. Comparing the performance of multivariate multilevel modeling to traditional analyses with complete and incomplete data. Methodology. 2015;11(3):100.

Carroll C, Patterson M, Wood S, Booth A, Rick J, Balain S. A conceptual framework for implementation fidelity. Implement Sci. 2007;2:1–9.

Sommerfeldt EJ, Pilny A, Saffer AJ. Interorganizational homophily and social capital network positions in Malaysian civil society. Commun Monogr. 2023;90(1):46–68.

Acknowledgements

The authors thank the schools and community-based organizations that agreed to participate in and support this research. The authors also thank Taylor Ullrich for formatting and checking references, as well as Drs. Kelly Whitaker and Mark Sander for supporting the corresponding research grant.

Funding

This project is supported by the National Institute of Mental Health (NIMH) grant R21MH110691 (PI: ARL). The content of this article is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute of Mental Health.

Author information

Contributions

YZ developed the manuscript, completed the analysis, interpreted the results, and created all submission materials. ARL is the project PI who supervised the research team. ARL developed the corresponding research grant in collaboration with JL, CRC, and KK and with consultation from MGE. ML supported the development and editing of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This project received approval from the Institutional Review Board (IRB) at ARL's institution, the University of Washington.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Zhang, Y., Larson, M., Ehrhart, M.G. et al. Inter-organizational alignment and implementation outcomes in integrated mental healthcare for children and adolescents: a cross-sectional observational study. Implementation Sci 19, 36 (2024). https://doi.org/10.1186/s13012-024-01364-w

DOI: https://doi.org/10.1186/s13012-024-01364-w