Abstract

Background

Laryngopharyngeal cancer (LPC) includes laryngeal and hypopharyngeal cancer, whose early diagnosis can significantly improve the prognosis and quality of life of patients. Pathological biopsy of suspicious cancerous tissue under the guidance of laryngoscopy is the gold standard for diagnosing LPC. However, this subjective examination largely depends on the skills and experience of laryngologists, which increases the possibility of missed diagnoses and repeated unnecessary biopsies. We aimed to develop and validate a deep convolutional neural network-based Laryngopharyngeal Artificial Intelligence Diagnostic System (LPAIDS) for the automatic real-time identification of LPC in both laryngoscopy white-light imaging (WLI) and narrow-band imaging (NBI) images, to improve the diagnostic accuracy of LPC by reducing diagnostic variation among non-expert laryngologists.

Methods

All 31,543 laryngoscopic images from 2382 patients were categorised into training, validation, and test sets to develop, validate, and internally test LPAIDS. Another 25,063 images from five other hospitals were used for external testing. Overall, 551 videos were used to evaluate the real-time performance of the system, and 200 randomly selected videos were used to compare the diagnostic performance of LPAIDS with that of laryngologists. Two deep-learning models using either WLI (model W) or NBI (model N) images were constructed for comparison with LPAIDS.

Results

LPAIDS had a higher diagnostic performance than models W and N, with accuracies of 0·956 and 0·949 in the internal image and video tests, respectively. The robustness and stability of LPAIDS were validated in external sets, with area under the receiver operating characteristic curve values of 0·965–0·987. In the laryngologist-machine competition, LPAIDS achieved an accuracy of 0·940, which was comparable to that of expert laryngologists and higher than that of other laryngologists with varying qualifications.

Conclusions

LPAIDS provided high accuracy and stability in detecting LPC in real time, showing great potential to improve the diagnostic accuracy of LPC by reducing diagnostic variation among non-expert laryngologists.

Background

Laryngopharyngeal cancer (LPC), including laryngeal cancer (LCA) and hypopharyngeal cancer, is the second most common malignancy among head and neck tumours, with more than 130,000 deaths reported in 2020 [1]. Laryngoscopic biopsy is the gold standard for diagnosing LPC [2, 3]. In-office transnasal flexible electronic endoscopy allows direct examination of the laryngopharynx, making it the most effective tool for detecting LPC [4, 5]. However, the limited resolution and contrast of white light can cause superficial mucosal cancers to be overlooked or missed, even by experienced endoscopists [6, 7]. As a result, patients may be diagnosed at a later stage and have to undergo multimodal treatment, resulting in poor prognosis and reduced quality of life [8–10]. Furthermore, a precautionary biopsy is usually prescribed to avoid missing early-stage cancer, resulting in overtreatment and emotional stress for patients [11]. Recently, endoscopic systems with narrow-band imaging (NBI), which improves the clarity and identification of epithelial and subepithelial microvessels, have played a critical role in the early diagnosis of LPC with high specificity and sensitivity [12–14]. However, because mastering this technology requires relatively long professional training and accumulated clinical experience, suspicious LPCs are at high risk of being missed during endoscopic examinations in hospitals with inexperienced laryngologists, in underdeveloped regions, and in countries with large numbers of patients [15, 16].

Recently, artificial intelligence (AI) has shown great potential in assisting doctors in various medical fields with their diagnoses [17–19]. In particular, deep learning techniques based on deep convolutional neural networks (DCNN) have demonstrated extraordinary capabilities for medical image classification, detection, and segmentation [20, 21]. Benefiting from its super-resolution performance on microscopic images, AI can automatically infer complex microscopic imaging structures (e.g., abnormalities in the extent and colour intensity of mucosal tubular branches) and identify quantitative pixel-level features [22] that are usually indistinguishable to the human eye. Several studies have demonstrated the feasibility and effectiveness of deep learning for lesion detection and the pathological classification of endoscopic images. Unfortunately, the existing research still has several limitations, particularly concerning laryngoscopy. Despite the real-time nature of endoscopy, current research is limited to detection in single still images [23, 24], and studies integrating AI into dynamic videos are lacking. Additionally, most existing studies focus on a single light source, using either white-light imaging (WLI) or NBI images [33–36].

Several preliminary studies have verified the feasibility of this method in the auxiliary diagnosis of LCA. Ren et al. established a CNN-based classifier to classify laryngeal disease [23]. Furthermore, Cho et al. applied a deep learning model to discriminate various laryngeal diseases except for malignancy [37]. Both reported high accuracy rates. However, in these two retrospective single-institutional studies, the validation set was a small, randomly selected subset of all collected images. This suggests that images from the same patient may have been distributed across both the training and validation sets, leading to an overestimation of the test results. In contrast, the training and testing of our model adopted time-series sets: all training, validation, and testing images were collected in different periods and were therefore completely independent, simulating the datasets of prospective clinical trials and yielding more objective and convincing results.

Xiong et al. developed a model based on a DCNN using WLI images to diagnose LCA with an accuracy of 0·867 [25]. Additionally, He et al. developed a CNN model using NBI images to identify patients with LCA, with an AUC of 0·873 in an independent test set [38]. Both studies relied on a single imaging mode, which may omit focal features of the lesion and weaken the performance of AI-assisted diagnosis. Furthermore, both studies were only applicable to the detection of still images, which limits their practicality in clinical applications. The clinical application of AI requires the ability to analyse and diagnose complex situations in real time. Videos contain multiple views of the lesion and more complex diagnostic settings that are closer to the actual clinical environment. A pilot study by Azam et al. used 624 video frames of LCA to develop a YOLO ensemble model for the automatic detection of LCA in real time [24]. That study focused on the automatic segmentation of tumour lesions using only LCA video frames, achieved an accuracy of 0·66 in 57 testing images, and verified the real-time processing performance of the model on six videolaryngoscopies. Owing to the small sample size and lack of controls, these results and their feasibility in clinical application for the auxiliary diagnosis of LCA should be treated cautiously. The system we developed required only 26 ms to analyse one video frame and could identify an average of 38 video frames per second, meeting the performance requirements for real-time detection. Furthermore, our approach achieved a diagnostic accuracy of 0·949 in an independent video test set with 551 videos, demonstrating real-time dynamic recognition ability. Therefore, our system is more reliable for diagnosing LPC in real time and has a higher clinical utility than previously reported models.
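As a quick arithmetic check of the two figures quoted above, the following Python sketch converts the 26 ms per-frame latency into a frame rate and computes a normal-approximation (Wald) 95% confidence interval for an accuracy of 0·949 over 551 videos. The function names are illustrative. Both the strictly sequential processing model and the Wald method are assumptions; the text here does not state how the reported intervals were derived.

```python
import math


def frames_per_second(latency_ms):
    """Throughput if frames are processed strictly one after another (assumption)."""
    return 1000.0 / latency_ms


def wald_interval(p_hat, n, z=1.96):
    """Normal-approximation (Wald) confidence interval for a proportion.

    The choice of the Wald method is an assumption made for illustration.
    """
    half_width = z * math.sqrt(p_hat * (1.0 - p_hat) / n)
    return max(0.0, p_hat - half_width), min(1.0, p_hat + half_width)


fps = frames_per_second(26.0)          # 26 ms per frame, as stated in the text
low, high = wald_interval(0.949, 551)  # accuracy and video count, as stated in the text
print(f"{fps:.1f} frames/s, 95% CI {low:.3f}-{high:.3f}")
```

Under these assumptions, the latency corresponds to roughly 38 frames per second, and the interval lands close to the 0·931–0·968 reported for the video test set.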

Our system achieved satisfactory diagnostic performance, with high accuracy on both the image test sets (0·956 [95% CI 0·951–0·960]) and the video test sets (0·949 [95% CI 0·931–0·968]), which depended on the subsequent improvement to the U-Net. We extracted separate features from the WLI and NBI images, each independently representing a different data type, and then fused the two features. Compared with models that simply used mixed images, LPAIDS produced more accurate predictions in either WLI or NBI images. Furthermore, the two features are integrated by linear layers, which takes less time than feature extraction from multimodal data. This fast integration ensures that LPAIDS can meet the demanding requirements of real-time use. The stability and robustness of the model were validated using five other independent external validation sets. Moreover, the diagnostic performance of our system was comparable to that of experts and higher than that of non-experts. We used the Cohen kappa coefficient to assess the consistency of the system and the laryngologists. We found that the expert achieved significant intra-observer consistency (k = 0·948), which was higher than that of senior laryngologists (k: 0·755–0·811), laryngologist residents (k: 0·667–0·711), and trainees (k: 0·514–0·610).
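For readers unfamiliar with the Cohen kappa coefficient used above, the following minimal Python sketch shows how it is computed from two sets of ratings: observed agreement is corrected for the agreement expected by chance. The function name and the ten example labels are hypothetical and are not taken from the study data.

```python
from collections import Counter


def cohen_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two equally long sequences of categorical ratings."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    # Proportion of cases on which the two ratings agree.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance, from the marginal label frequencies.
    count_a = Counter(ratings_a)
    count_b = Counter(ratings_b)
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical example: one reader labelling the same ten videos twice
# (1 = cancer, 0 = non-cancer), i.e. an intra-observer comparison.
first_read = [1, 1, 0, 0, 1, 0, 1, 0, 1, 1]
second_read = [1, 1, 0, 0, 1, 0, 0, 0, 1, 1]
kappa = cohen_kappa(first_read, second_read)
print(round(kappa, 3))
```

In this toy example the two readings agree on nine of ten videos, but half of that agreement would be expected by chance, so kappa is lower than the raw agreement rate.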

Despite these promising results, some limitations remain. First, this was a retrospective study, which may have a certain degree of selection bias, and the excellent performance of LPAIDS may not entirely reflect actual clinical application. Time-series sets were used in the study design to mitigate this problem. Additionally, we have designed a multicentre prospective randomised controlled trial to verify the applicability of this system in a clinical setting. Second, our dataset mostly comprises high-quality laryngoscopy images, which may limit the scope of use of this system. However, our test set used images acquired by different endoscopy systems from various institutions, such as Olympus and Xion, which account for most of the endoscopy market. We will collect more images of varying quality to enhance the generalisation ability of our system. Third, although we used a video test to demonstrate the real-time detection performance of the system, the clipped videos only contained lesions, and the real-time application ability in actual clinical practice remains to be evaluated. We will further work on embedding the system into the endoscopic system to output prediction results while laryngoscopy is being performed and to evaluate the model's reliability.
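The time-series design mentioned above can be sketched as a split on examination date. This minimal Python example is illustrative only: the record layout, cut-off dates, and function names are hypothetical and do not describe the study's actual pipeline. Because a patient may return in a later period, the sketch also checks whether any patient ID appears in more than one subset.

```python
from datetime import date


def split_by_period(records, train_end, val_end):
    """Split (patient_id, exam_date, image_path) records by examination date."""
    train, val, test = [], [], []
    for record in records:
        exam_date = record[1]
        if exam_date <= train_end:
            train.append(record)
        elif exam_date <= val_end:
            val.append(record)
        else:
            test.append(record)
    return train, val, test


def shared_patients(*subsets):
    """Return patient IDs that appear in more than one subset."""
    seen, overlap = set(), set()
    for subset in subsets:
        ids = {record[0] for record in subset}
        overlap |= seen & ids
        seen |= ids
    return overlap


# Hypothetical records; IDs, dates, and file names are made up.
records = [
    ("P001", date(2018, 3, 1), "a.png"),
    ("P001", date(2018, 3, 1), "b.png"),
    ("P002", date(2019, 6, 10), "c.png"),
    ("P003", date(2020, 1, 5), "d.png"),
    ("P001", date(2020, 2, 1), "e.png"),  # returning patient
]
train, val, test = split_by_period(records, date(2018, 12, 31), date(2019, 12, 31))
print(len(train), len(val), len(test), shared_patients(train, val, test))
```

A date-based split keeps the periods apart but does not by itself guarantee that patients are disjoint across subsets, which is why the overlap check flags the returning patient in this example.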

Conclusion

We developed a DCNN-based system for the real-time detection of LPCs. The system could recognise the WLI and NBI imaging modalities simultaneously, achieving high accuracy and sensitivity in independent image and video test sets. Its diagnostic efficiency was equivalent to that of experts and better than that of non-experts. However, this study still needs multicentre prospective verification to provide high-level evidence for detecting LPC in actual clinical practice. We believe that LPAIDS has excellent potential for aiding the diagnosis of LPC and reducing the burden on laryngologists.

Availability of data and materials

Owing to patient privacy, all the datasets generated or analyzed in this study are available from the corresponding author (The First Affiliated Hospital of Sun Yat-sen University; leiwb@mail.sysu.edu.cn) upon reasonable request.

Abbreviations

- AI: Artificial intelligence
- AUC: Area under the ROC curve
- CI: Confidence interval
- DCNN: Deep convolutional neural networks
- FAHSU: First Affiliated Hospital of Shenzhen University
- FAHSYSU: First Affiliated Hospital of Sun Yat-sen University
- IOU: Intersection-over-union
- LCA: Laryngeal cancer
- LPAIDS: Laryngopharyngeal Artificial Intelligence Diagnostic System
- LPC: Laryngopharyngeal cancer
- NBI: Narrow-band imaging
- NHSMU: Nanfang Hospital of Southern Medical University
- NPV: Negative predictive value
- PPV: Positive predictive value
- ReLU: Rectified linear unit
- ROC: Receiver operating characteristic
- SAHSYSU: Sixth Affiliated Hospital of Sun Yat-sen University
- SYMSYSU: Sun Yat-sen Memorial Hospital of Sun Yat-sen University
- TAHSYSU: Third Affiliated Hospital of Sun Yat-sen University
- WLI: White light imaging

References

Sung H, Ferlay J, Siegel RL, et al. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin. 2021;71(3):209–49.

Steuer CE, El-Deiry M, Parks JR, Higgins KA, Saba NF. An update on larynx cancer. CA Cancer J Clin. 2017;67(1):31–50.

Marioni G, Marchese-Ragona R, Cartei G, et al. Current opinion in diagnosis and treatment of laryngeal carcinoma. Cancer Treat Rev. 2006;32(7):504–15.

Mannelli G, Cecconi L, Gallo O. Laryngeal preneoplastic lesions and cancer: challenging diagnosis. Qualitative literature review and meta-analysis. Crit Rev Oncol Hematol. 2016;106:64–90.

Krausert CR, Olszewski AE, Taylor LN, et al. Mucosal wave measurement and visualization techniques. J Voice. 2011;25(4):395–405.

Ni XG, Zhang QQ, Wang GQ. Narrow band imaging versus autofluorescence imaging for head and neck squamous cell carcinoma detection: a prospective study. J Laryngol Otol. 2016;130(11):1001–6.

Zwakenberg MA, Halmos GB, Wedman J, et al. Evaluating laryngopharyngeal tumor extension using narrow band imaging versus conventional white light imaging. Laryngoscope. 2021;131(7):E2222–31.

Brennan MT, Treister NS, Sollecito TP, et al. Epidemiologic factors in patients with advanced head and neck cancer treated with radiation therapy. Head Neck. 2021;43(1):164–72.

Driessen CML, Leijendeckers J, Snik AD, et al. Ototoxicity in locally advanced head and neck cancer patients treated with induction chemotherapy followed by intermediate or high-dose cisplatin-based chemoradiotherapy. Head Neck. 2019;41(2):488–94.

Marur S, Forastiere AA. Head and neck squamous cell carcinoma: update on epidemiology, diagnosis, and treatment. Mayo Clin Proc. 2016;91(3):386–96.

Gugatschka M, Kiesler K, Beham A, et al. Hyperplastic epithelial lesions of the vocal folds: combined use of exfoliative cytology and laryngostroboscopy in differential diagnosis. Eur Arch Otorhinolaryngol. 2008;265(7):797–801.

Cosway B, Drinnan M, Paleri V. Narrow band imaging for the diagnosis of head and neck squamous cell carcinoma: a systematic review. Head Neck. 2016;38(Suppl 1):E2358–67.

Kim DH, Kim Y, Kim SW, Hwang SH. Use of narrowband imaging for the diagnosis and screening of laryngeal cancer: a systematic review and meta-analysis. Head Neck. 2020;42(9):2635–43.

Ni XG, Wang GQ. The role of narrow band imaging in head and neck cancers. Curr Oncol Rep. 2016;18(2):10.

Chen J, Li Z, Wu T, Chen X. Accuracy of narrow-band imaging for diagnosing malignant transformation of vocal cord leukoplakia: a systematic review and meta-analysis. Laryngoscope Investig Otolaryngol. 2023;8(2):508–17.

Ni XG, Wang GQ, Hu FY, et al. Clinical utility and effectiveness of a training programme in the application of a new classification of narrow-band imaging for vocal cord leukoplakia: a multicentre study. Clin Otolaryngol. 2019;44(5):729–35.

Liang H, Tsui BY, Ni H, et al. Evaluation and accurate diagnoses of pediatric diseases using artificial intelligence. Nat Med. 2019;25(3):433–8.

Mathenge WC. Artificial intelligence for diabetic retinopathy screening in Africa. Lancet Digit Health. 2019;1(1):e6–7.

Dey D, Slomka PJ, Leeson P, et al. Artificial intelligence in cardiovascular imaging: JACC State-of-the-Art review. J Am Coll Cardiol. 2019;73(11):1317–35.

Zhang B, Jin Z, Zhang S. A deep-learning model to assist thyroid nodule diagnosis and management. Lancet Digit Health. 2021;3(7):e410.

Foersch S, Eckstein M, Wagner DC, et al. Deep learning for diagnosis and survival prediction in soft tissue sarcoma. Ann Oncol. 2021;32(9):1178–87.

Luo H, Xu G, Li C, et al. Real-time artificial intelligence for detection of upper gastrointestinal cancer by endoscopy: a multicentre, case-control, diagnostic study. Lancet Oncol. 2019;20(12):1645–54.

Ren J, Jing X, Wang J, et al. Automatic recognition of laryngoscopic images using a deep-learning technique. Laryngoscope. 2020;130(11):E686–93.

Azam MA, Sampieri C, Ioppi A, et al. Deep learning applied to white light and narrow band imaging videolaryngoscopy: toward real-time laryngeal cancer detection. Laryngoscope. 2022;132(9):1798–806.

Xiong H, Lin P, Yu JG, et al. Computer-aided diagnosis of laryngeal cancer via deep learning based on laryngoscopic images. EBioMedicine. 2019;48:92–9.

Kwon I, Wang SG, Shin SC, et al. Diagnosis of early glottic cancer using laryngeal image and voice based on ensemble learning of convolutional neural network classifiers. J Voice. 2022. https://doi.org/10.1016/j.jvoice.2022.07.007.

Inaba A, Hori K, Yoda Y, et al. Artificial intelligence system for detecting superficial laryngopharyngeal cancer with high efficiency of deep learning. Head Neck. 2020;42(9):2581–92.

Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. International Conference on Medical Image Computing and Computer-Assisted Intervention; 2015.

Stachler RJ, Francis DO, Schwartz SR, et al. Clinical practice guideline: hoarseness (Dysphonia) (Update). Otolaryngol Head Neck Surg. 2018;158(1):S1–42.

Zwakenberg MA, Dikkers FG, Wedman J, Halmos GB, van der Laan BF, Plaat BE. Narrow band imaging improves observer reliability in evaluation of upper aerodigestive tract lesions. Laryngoscope. 2016;126(10):2276–81.

Vilaseca I, Valls-Mateus M, Nogués A, et al. Usefulness of office examination with narrow band imaging for the diagnosis of head and neck squamous cell carcinoma and follow-up of premalignant lesions. Head Neck. 2017;39(9):1854–63.

Irjala H, Matar N, Remacle M, Georges L. Pharyngo-laryngeal examination with the narrow band imaging technology: early experience. Eur Arch Otorhinolaryngol. 2011;268(6):801–6.

He X, Wu L, Dong Z, et al. Real-time use of artificial intelligence for diagnosing early gastric cancer by magnifying image-enhanced endoscopy: a multicenter diagnostic study (with videos). Gastrointest Endosc. 2022;95(4):671-8.e4.

Lu Z, Xu Y, Yao L, et al. Real-time automated diagnosis of colorectal cancer invasion depth using a deep learning model with multimodal data (with video). Gastrointest Endosc. 2022;95(6):1186-94.e3.

Xu M, Zhou W, Wu L, et al. Artificial intelligence in the diagnosis of gastric precancerous conditions by image-enhanced endoscopy: a multicenter, diagnostic study (with video). Gastrointest Endosc. 2021;94(3):540-8.e4.

Tang D, Wang L, Ling T, et al. Development and validation of a real-time artificial intelligence-assisted system for detecting early gastric cancer: a multicentre retrospective diagnostic study. EBioMedicine. 2020;62:103146.

Cho WK, Lee YJ, Joo HA, et al. Diagnostic accuracies of laryngeal diseases using a convolutional neural network-based image classification system. Laryngoscope. 2021;131(11):2558–66.

He Y, Cheng Y, Huang Z, et al. A deep convolutional neural network-based method for laryngeal squamous cell carcinoma diagnosis. Ann Transl Med. 2021;9(24):1797.

Acknowledgements

Not applicable.

Funding

This work was supported by the Basic and Applied Research Foundation of Guangdong Province (No. 2022B1515130009), the National Natural Science Foundation of China (No. 81972528 and 82273053), 5010 Clinical Research Program of Sun Yat-sen University (No. 2017004) and Natural Science Foundation of Guangdong Province (No. 2021A1515011963 and 2022A1515011275).

Author information

Contributions

J.T.R. and W.B.L. conceived and supervised this study. Y.L. and W.X.G. designed the experiments. Y.L., W.X.G., H.J.Y., and G.Q.L. conducted the experiments. Y.H.W., H.C.T., X.L., W.J.T., J.Y., R.M.H., Q.C., Q.Y., T.R.L. and B.P.M. collected the data. Y.L., H.J.Y., and W.B.G. labeled the images. W.X.G., R.X.W., and J.T.R. established the deep learning model. Y.L., W.X.G., H.J.Y., and G.Q.L. analyzed and interpreted the data. Y.L. and W.X.G. drafted the manuscript. J.T.R. and W.B.L. revised and edited the manuscript. All authors reviewed and approved the final version of the manuscript.

Ethics declarations

Ethics approval and consent to participate

The study design was reviewed and approved by the Ethics Committee of the First Hospital Affiliated to Sun Yat-sen University (approval no. [2021]416). For patients whose laryngoscopy images or videos were retrospectively obtained from the databases of each participating hospital, informed consent was waived by the institutional review boards of the participating hospitals.

Consent for publication

Not applicable.

Competing interests

All the authors have no conflicts of interest to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

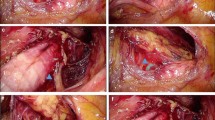

Additional file 1: Figure S1. Confusion matrices of LPAIDS in identifying laryngopharyngeal cancer in multicentre imaging datasets. Figure S2. Common false positive cases. Table S1. Performance of the LPAIDS versus laryngologists in 200 videos. Table S2. Inter-observer and intra-observer agreement of the LPAIDS and laryngologists in the human-machine competition dataset

Additional file 2: Video S1. Laryngeal cancer (glottic carcinoma) detection of LPAIDS in the WLI video

Additional file 3: Video S2. Laryngeal cancer (glottic carcinoma) detection of LPAIDS in the NBI video

Additional file 4: Video S3. Laryngeal cancer (glottic carcinoma) detection of LPAIDS in the WLI video

Additional file 5: Video S4. Laryngeal cancer (glottic carcinoma) detection of LPAIDS in the NBI video

Additional file 6: Video S5. Laryngeal cancer (supraglottic carcinoma) detection of LPAIDS in the WLI video

Additional file 7: Video S6. Laryngeal cancer (supraglottic carcinoma) detection of LPAIDS in the NBI video

Additional file 8: Video S7. Hypopharyngeal cancer detection of LPAIDS in the WLI video

Additional file 9: Video S8. Hypopharyngeal cancer detection of LPAIDS in the NBI video

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Li, Y., Gu, W., Yue, H. et al. Real-time detection of laryngopharyngeal cancer using an artificial intelligence-assisted system with multimodal data. J Transl Med 21, 698 (2023). https://doi.org/10.1186/s12967-023-04572-y

DOI: https://doi.org/10.1186/s12967-023-04572-y