Abstract

Background

Verbal autopsy (VA) is increasingly being considered as a cost-effective method to improve cause of death information in countries with low quality vital registration. VA algorithms that use empirical data have an advantage over expert derived algorithms in that they use responses to the VA instrument as a reference instead of physician opinion. It is unclear how stable these data driven algorithms, such as the Tariff 2.0 method, are to cultural and epidemiological variations in populations where they might be employed.

Methods

VAs were conducted in three sites as part of the Improving Methods to Measure Comparable Mortality by Cause (IMMCMC) study: Bohol, Philippines; Chandpur and Comilla Districts, Bangladesh; and Central and Eastern Highlands Provinces, Papua New Guinea. Similar diagnostic criteria and cause lists as the Population Health Metrics Research Consortium (PHMRC) study were used to identify gold standard (GS) deaths. We assessed changes in Tariffs by examining the proportion of Tariffs that changed significantly after the addition of the IMMCMC dataset to the PHMRC dataset.

Results

The IMMCMC study added 3512 deaths to the GS VA database (2491 adults, 320 children, and 701 neonates). Chance-corrected cause specific mortality fractions for Tariff improved with the addition of the IMMCMC dataset for adults (+ 5.0%), children (+ 5.8%), and neonates (+ 1.5%). 97.2% of Tariffs did not change significantly after the addition of the IMMCMC dataset.

Conclusions

Tariffs generally remained consistent after adding the IMMCMC dataset. Population level performance of the Tariff method for diagnosing VAs improved marginally for all age groups in the combined dataset. These findings suggest that cause-symptom relationships of Tariff 2.0 might well be robust across different population settings in developing countries. Increasing the total number of GS deaths improves the validity of Tariff and provides a foundation for the validation of other empirical algorithms.

Background

Reliable knowledge of the distribution of causes of death (COD) in populations is critically important for national and sub-national public health surveillance and planning [1]. However, a substantial proportion of deaths in developing countries occur outside health facilities, leading to low quality COD data [1,2,3,4]. Verbal autopsy (VA) has emerged as a cost-effective solution to determining COD in rural areas with limited contact with medical services [5, 6].

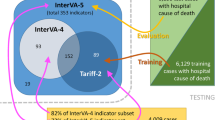

VA involves interviewing the family of the deceased to collect information regarding the signs and symptoms surrounding the death. This information can be analyzed by physicians or computer algorithms to assign an individual COD which can then be aggregated to yield population level COD estimates. Currently, most computer algorithms for classifying the COD from verbal autopsy interviews (VAI) rely on a matrix describing the relationship between a set of predictors and a set of causes [7,8,9,10,11,12]. These associations can be determined either purely empirically, purely through expert opinion, or through a combination of both. Previous studies have shown that methods that rely purely on empirically derived associations, such as random forest and Tariff 2.0, outperform both physician coding and methods in which the associations are derived by expert opinion, such as InterVA [7, 12, 13].

Methods that use empirically derived associations require high quality COD data where the true underlying cause is known as accurately as possible (‘gold standards’) [5]. Automated diagnostic algorithms process data in which the true COD is known with a reasonable degree of certainty to identify predictive patterns in the responses from VAIs. Misclassifications in the underlying COD will likely result in the algorithms learning patterns that are wrong and would result in low-quality COD predictions.

The Population Health Metrics Research Consortium (PHMRC) previously collected VAs matched with COD based on medical record review with strict ex-ante diagnostic criteria from six sites in four different countries [14]. Only cases with definitive clinical diagnostic results were included in this study. This ensured that the underlying COD was known with the highest possible degree of certainty. These data were made publicly available in 2013. In this paper, we report on a new study that collected additional gold-standard VAs, adhering to the same strict diagnostic criteria and procedures as for the PHMRC study [15]. These data, collected as part of the Improving Methods to Measure Comparable Mortality by Cause (IMMCMC) study, include gold standard VAs from three sites, two of which were not included in the previous study. We report on the effect of including these data on the stability of the cause-symptom relationship that underlies the Tariff 2.0 diagnostic method.

Methods

Data

The original PHMRC study sites included Andhra Pradesh, India; Bohol, Philippines; Dar es Salaam, Tanzania; Mexico City, Mexico; Pemba Island, Tanzania; and Uttar Pradesh, India [14]. In total, 7836 adults, 2075 children, 1629 neonates, and 1002 stillbirths were collected. The study attempted to gather a similar number of cases (at least 100) for each major COD (as reflected in the Global Burden of Disease Study) in representative low and middle income country sites.

The IMMCMC study gathered gold standard VAs between 2011 and 2014 using the PHMRC long form VAI. Cases were identified using the same diagnostic criteria and cause list as the PHMRC study. The IMMCMC study was conducted at three sites: Chandpur and Comilla Districts in Bangladesh, Central and Eastern Highlands Provinces in Papua New Guinea, and Bohol Province in the Philippines. The data collection methodology is described in Additional file 1. Gold standards have been classified as having attained level 1, 2A, 2B, or 3 level of certainty, depending on the amount of information contained in the medical records [14]. Levels 1 and 2 represent cases where the certainty about the diagnosis of the underlying COD is greatest. In this report, we only analyze the 3512 cases that met gold standard level 1 or 2 criteria. This included 2491 adults, 320 children and 701 neonates. The majority of the cases were from Bohol, Philippines, which contributed 2384 cases; 1070 VAs were from Bangladesh and 58 were from Papua New Guinea.

The cause composition of the IMMCMC dataset is less balanced than the PHMRC data because the IMMCMC gathered all deaths at the study sites while the PHMRC study attempted to gather at least 100 cases for pre-determined causes. Table 1 shows the number of cases in each dataset by age module and cause. The IMMCMC database contributed a substantial number of additional deaths due to stroke, pneumonia, acute myocardial infarction, road traffic accidents, stillbirth, and preterm delivery.

Tariff Method

Tariff 2.0 is based on a set of Tariffs derived from a matrix of cause-symptom pair endorsement rates [16]. A cause-symptom pair is endorsed if, for a death with a known true COD, the interviewee responded “yes” to a particular question or reported a duration greater than a pre-specified cutoff on questions that ask about length of time. Other values, including “Don’t Know”, are considered unendorsed and are counted in the denominator of the endorsement rate. The Tariff for any cause-symptom pair is calculated from the endorsement rate of that given cause-symptom pair and the distribution of endorsement rates for the symptom across all causes. Specifically, it is:

$$\text{Tariff}_{i,j} = \frac{x_{i,j} - \text{Median}_i}{\text{IQR}_i}$$

where $x_{i,j}$ is the endorsement rate for symptom $i$ across all cases in which the true cause was $j$, $\text{Median}_i$ is the median endorsement rate of symptom $i$ across all causes, and $\text{IQR}_i$ is the interquartile range of endorsement rates of symptom $i$ across all causes. For example, if 50% of respondents answered “yes” to chest pain for Acute Myocardial Infarction, and the median and interquartile range for chest pain across all causes were 18 and 20%, respectively, then the Tariff for chest pain for Acute Myocardial Infarction would be (50 − 18) / 20 = 1.6.
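The Tariff definition and the chest pain example can be checked numerically. The following is a minimal Python sketch, not the Tariff 2.0 implementation: the function names are hypothetical, "Don't Know" is represented as `None`, and the quartile convention (`method="inclusive"`) is an assumption, since the text does not state which one was used. The five endorsement rates are invented so that the median is 18 and the IQR is 20, matching the worked example.

```python
from statistics import median, quantiles


def endorsement_rate(responses):
    """Share of interviews endorsing a symptom.

    Only True counts as endorsed; False and None ("Don't Know")
    remain in the denominator, as described in the text.
    """
    return sum(1 for r in responses if r is True) / len(responses)


def tariff(rate, rates_across_causes):
    """(rate - median) / IQR of the symptom's endorsement rates over all causes.

    Assumption: quartiles use the "inclusive" convention.
    """
    q1, _, q3 = quantiles(rates_across_causes, n=4, method="inclusive")
    return (rate - median(rates_across_causes)) / (q3 - q1)


# Illustrative chest pain endorsement rates (in percent) for five causes,
# chosen so that the median is 18 and the IQR is 28 - 8 = 20.
chest_pain_rates = [5, 8, 18, 28, 50]
print(tariff(50, chest_pain_rates))  # 1.6
```

Working in percentages or in proportions gives the same Tariff, because the numerator and denominator scale together.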

Tariffs are then tested for significance using a Monte Carlo experiment. The original symptom data are resampled with replacement, stratified by cause, to create 500 datasets. In each dataset, the total number of observations for each cause is constant, but the given rows, and thus the endorsements and endorsement rates, vary. Tariffs are calculated from each of these datasets and are then used to create 99% uncertainty intervals around each Tariff estimate. Tariffs where the uncertainty interval includes zero, i.e. where we are not certain about the directionality of the association between the symptom and the cause, are removed. Lastly, Tariffs are rounded to the nearest 0.5. This prevents over-fitting by treating similar values as containing the same amount of information instead of prioritizing minor, insignificant differences in predictive value.
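The resampling procedure can be sketched for a single symptom as follows. This is an illustrative sketch under stated assumptions, not the study's code: the function names, the percentile-based interval, the "inclusive" quartile convention, and the handling of a zero IQR are all assumptions, and the example data are invented.

```python
import random
from statistics import median, quantiles


def tariffs_for_symptom(endorsed_by_cause):
    """Map {cause: [0/1 endorsement per death]} to {cause: Tariff} for one symptom."""
    rates = {c: sum(v) / len(v) for c, v in endorsed_by_cause.items()}
    q1, _, q3 = quantiles(rates.values(), n=4, method="inclusive")
    med, iqr = median(rates.values()), q3 - q1
    # Assumption: a zero IQR yields a Tariff of 0 rather than an error.
    return {c: (r - med) / iqr if iqr > 0 else 0.0 for c, r in rates.items()}


def significant_tariffs(endorsed_by_cause, draws=500, level=0.99, seed=0):
    """Keep Tariffs whose bootstrap interval excludes zero, rounded to 0.5."""
    rng = random.Random(seed)
    point = tariffs_for_symptom(endorsed_by_cause)
    samples = {c: [] for c in endorsed_by_cause}
    for _ in range(draws):
        # Resample rows with replacement within each cause, so the
        # number of observations per cause stays constant.
        boot = {c: rng.choices(v, k=len(v)) for c, v in endorsed_by_cause.items()}
        for c, t in tariffs_for_symptom(boot).items():
            samples[c].append(t)
    tail = (1 - level) / 2
    kept = {}
    for c, vals in samples.items():
        vals.sort()
        lo = vals[int(tail * (draws - 1))]
        hi = vals[round((1 - tail) * (draws - 1))]
        if lo > 0 or hi < 0:  # interval excludes zero
            # Round to the nearest 0.5 (ties follow Python's round-half-even).
            kept[c] = round(point[c] * 2) / 2
    return kept


# Invented data: five causes with 500 deaths each.
data = {
    "A": [1] * 450 + [0] * 50,
    "B": [1] * 50 + [0] * 450,
    "C": [1] * 100 + [0] * 400,
    "D": [1] * 150 + [0] * 350,
    "E": [1] * 125 + [0] * 375,
}
print(significant_tariffs(data))
```

In this toy example, cause E sits at the median endorsement rate, so its Tariff is zero in most resamples and it is dropped, while the strongly positive and strongly negative associations survive.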

Analysis

We assessed changes in the Tariffs by comparing the proportion of significant Tariffs before and after the addition of the IMMCMC dataset. We also measured the proportion of Tariffs that changed significantly or changed directionality (i.e. from positive to negative). We performed the out-of-sample validation procedure described in Murray et al. to assess changes in individual and population-level COD performance [17]. Individual-level performance was assessed using chance-corrected concordance (CCC). CCC is a measure of agreement between the predicted and gold standard cause assignment, adjusted for chance. Population-level performance was assessed using cause-specific mortality fraction (CSMF) accuracy and chance-corrected cause-specific mortality fraction (CCCSMF) accuracy [18]. CSMF accuracy is a summary measure of performance between the predicted and gold standard cause assignment, and CCCSMF adjusts for chance.
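The three performance metrics can be sketched in a few lines of Python. The formulas below follow our reading of the definitions in the cited metrics papers [17, 18] and should be verified against them; in particular, the constant 0.632 (approximately 1 − 1/e, the expected CSMF accuracy of random assignment) is taken from memory of [18]. The function names are hypothetical.

```python
from collections import Counter


def ccc(true, pred):
    """Return (mean CCC, per-cause CCC).

    Per cause: (sensitivity - 1/N) / (1 - 1/N), with N the number of causes.
    """
    causes = sorted(set(true))
    n = len(causes)
    per_cause = {}
    for c in causes:
        idx = [i for i, t in enumerate(true) if t == c]
        sensitivity = sum(pred[i] == c for i in idx) / len(idx)
        per_cause[c] = (sensitivity - 1 / n) / (1 - 1 / n)
    return sum(per_cause.values()) / n, per_cause


def csmf_accuracy(true, pred):
    """1 - sum|CSMF_true - CSMF_pred| / (2 * (1 - min(CSMF_true)))."""
    total = len(true)
    t, p = Counter(true), Counter(pred)
    abs_err = sum(abs(t[c] - p[c]) / total for c in set(t) | set(p))
    min_true = min(count / total for count in t.values())
    return 1 - abs_err / (2 * (1 - min_true))


def cccsmf_accuracy(true, pred):
    """Rescale CSMF accuracy so that random assignment scores about 0."""
    return (csmf_accuracy(true, pred) - 0.632) / (1 - 0.632)


gold = ["stroke", "stroke", "pneumonia", "pneumonia"]
predicted = ["stroke", "pneumonia", "pneumonia", "pneumonia"]
print(ccc(gold, predicted)[0])         # 0.5
print(csmf_accuracy(gold, predicted))  # 0.5
```

CCC is computed per death and averaged over causes, whereas the CSMF measures compare only the aggregated cause fractions, which is why the two can move in different directions.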

Results

Adding the IMMCMC dataset did not change the directionality of the association between any cause-symptom pairs. In other words, the sign of all 2852 significant Tariffs was the same before and after adding the new data.

In the original study using only PHMRC data, 2852 of the 19,401 (14.7%) cause-symptom pairs across the three modules were statistically significant. After combining the PHMRC and IMMCMC datasets and recalculating Tariffs, 2563 of the original 2852 (89.9%) values remained significant. 97.2% of Tariffs did not change significantly after the addition of the IMMCMC dataset; less than 3% did.
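The comparison of Tariff sets before and after adding data amounts to simple bookkeeping, sketched below in Python. The data structure (a dictionary keyed by cause-symptom pair, with non-significant pairs absent) and the function name are assumptions for illustration; the toy values are invented. The final lines reproduce the reported percentages from the counts given in the text.

```python
def compare_tariffs(before, after):
    """Compare two Tariff sets keyed by (cause, symptom).

    Pairs that are not significant are assumed to be absent from the dict.
    """
    retained = [k for k in before if k in after]
    flipped = [k for k in retained if before[k] * after[k] < 0]
    return {
        "retained_share": len(retained) / len(before),
        "sign_flips": len(flipped),
    }


# Invented toy Tariffs, for illustration only.
before = {("AMI", "chest pain"): 1.5, ("stroke", "paralysis"): 4.0}
after = {("AMI", "chest pain"): 2.0}
print(compare_tariffs(before, after))  # {'retained_share': 0.5, 'sign_flips': 0}

# Counts reported in the text.
print(round(100 * 2852 / 19401, 1))  # 14.7
print(round(100 * 2563 / 2852, 1))   # 89.9
```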

Table 2 shows the cause-specific change in performance measured by CCC, with individual causes ranked in decreasing order according to the magnitude of the difference in CCC before and after the addition of the new cases. For adults, CCC was highest for injuries and lowest for residual categories for both the PHMRC and combined datasets. For children, CCC was highest for injuries and lowest for infectious diseases and residual categories for both the PHMRC and combined datasets. For neonates, CCC was much higher for stillbirth than all other causes in both datasets. CCC for pneumonia and birth asphyxia were low for both datasets. In short, addition of the new cases did not alter the comparative performance of the Tariff method for various causes of death, as measured by CCC. Interestingly, nearly all adult and child causes experienced an increase in CCC, with the largest decrease for other injuries. Neonate causes mainly experienced an increase or little change, except for a decrease in meningitis/sepsis.

While the changes in Tariffs with the addition of new data were generally small, it is important to understand for which cause-symptom pairs the incorporation of new data had the greatest effect. Table 3 shows the ten largest increases and decreases in Tariffs (all Tariffs are shown in Additional file 2). Tariffs represent the strength (positive or negative) of the relationship between a particular cause and a given symptom, so large increases in Tariffs indicate increased importance of that symptom for the given cause, while large decreases signify the opposite. The largest changes in Tariffs were mainly associated with injury and maternal deaths, where Tariffs would be expected to be high because symptoms for these conditions are likely to be more clearly distinguishable and remembered. All symptoms associated with large increases in Tariffs had a strong association with a particular cause. The same pattern was also observed for large decreases. Although the absolute change in Tariffs for these pairs might have been large, the strength of the association was sufficiently clear that the change did not distort the predictive ability of the algorithm in selecting the correct underlying COD.

This conclusion is confirmed by Table 4 which shows the overall change in predictive performance for Tariff 2.0 before and after the IMMCMC dataset was added to the PHMRC dataset (full performance details are shown in Additional file 3). In fact, overall diagnostic predictive accuracy for adults and children increased marginally for both populations (CSMF) and individuals (CCC), and for neonatal CSMFs, but decreased slightly when assessing diagnostic accuracy for individual neonatal deaths (CCC).

Discussion

Automated diagnostic methods such as Tariff 2.0 have the potential to revolutionize national mortality surveillance systems by facilitating huge improvements in the availability and quality of data on causes of death in hitherto underserved populations. But are these methods reliable and generalizable, and are they likely to perform similarly in different populations? This study has confirmed the robustness of the Tariff 2.0 method when new gold-standard data from different populations were incorporated. The addition of the IMMCMC data to the publicly available PHMRC dataset confirmed the results of the original Tariffs derived solely from the PHMRC dataset, and led to a slight overall improvement in the diagnostic performance of the algorithm.

Adding additional deaths to the PHMRC dataset to calculate Tariffs further clarified the relationship between various symptoms and causes. No Tariffs changed direction (i.e. went from positive to negative, or the converse) and the vast majority of Tariffs that were significant using the PHMRC dataset were also significant when using the combined dataset. Some Tariffs which were statistically significant in the original PHMRC data were not, when using the combined dataset. These differences likely reflect instances where the Tariffs were over-fit to ‘noise’ in the raw data, and the addition of new data served to create more generalizable Tariffs.

Given that the majority of causes experienced an increase in CCC, most changes in the cause-symptom relationship as a result of adding new data led to improved predictive performance. Decreases in the CCC for some causes were likely due to spurious associations between symptom and cause that were a result of relatively few deaths present in both the PHMRC and IMMCMC datasets for certain causes. For example, only 6 neonatal pneumonia deaths (7% increase) and 32 meningitis/sepsis deaths (19% increase) were added to the PHMRC dataset from the IMMCMC study. Rather, 80–90% of neonatal deaths were attributed to stillbirth, preterm delivery, or birth asphyxia. The similar symptoms of pneumonia and meningitis/sepsis, together with the comparatively few cases, provided insufficient information for Tariff to distinguish between the causes, resulting in a decrease in CCC for neonates. A greater number of deaths attributed to more causes in the adult and child modules contributed to the increased diagnostic performance of Tariff when applied to the combined dataset.

Large changes in the Tariffs tended to be limited to certain maternal and injury causes. These changes reflect the addition of a diversity of new cases that were not in the PHMRC dataset and suggest the likelihood of cultural differences affecting responses to some questions pertinent to maternal and injury deaths. Difficulties with describing the intent of the question, or with communication skills, are also likely to affect the quality of the interview process. Given the multiple requirements of interviewers when conducting a VAI, it is hardly surprising that incomplete or misleading data will be collected in some cases. This makes it much harder for the algorithm to correctly predict the most probable COD, and likely leads to unsubstantiated changes in Tariffs.

The diversity of study populations present in the combined dataset suggests that the associations generated by the algorithm are likely to be generalizable. Otherwise, the associations may reflect a cultural bias in which symptoms are noticed, communicated, remembered and reported from a limited set of study populations. Previous studies have shown that respondents often report different information at repeat visits regarding the same death, but key symptoms are often remembered and are sufficient to properly classify the COD [19, 20]. With a large enough training data set, algorithms should be able to distinguish between these key predictors and background noise. It is also necessary to include VAs which include missing data or where the pattern of responses may not seem consistent with the true COD, as long as they were collected under real survey conditions. These observations represent ‘noise’ in the data that are propagated when the algorithm is applied to deaths notified to vital registration systems. Properly calibrated computer algorithms such as Tariff 2.0 will be able to account for this ‘noise’ and adjust the predictions accordingly.

The addition of deaths from the IMMCMC study to the PHMRC GS database is an important step in the continual validation of empirical VA algorithms. There has been some criticism of empirical methods that are derived and tested on gold standard datasets, but it is important to recognize the benefits of a GS database [9, 21,22,23]. First, GS deaths provide evidence that the responses provided during a VA do not necessarily make sense in a clinical context. For example, 147 deaths in the PHMRC dataset were reported as stillbirth but described as neonatal deaths, which is impossible because stillbirth implies the birth did not occur [21]. Second, GS deaths provide a basis for assessing the validity for text items in open-ended responses. The potential of these “open narrative” responses to improve diagnostic accuracy has yet to be fully realized [16]. Third, deaths that occur in-hospital are different than deaths that occur at home because the terminal events are prolonged by therapeutic activity, but the signs and symptoms which precipitated the hospital admission are what are asked in a VA [9]. Some diseases may have different presentations at home than in the hospital: e.g. families generally have much less chance to observe a woman dying in labor or a neonate dying in a special nursery in a hospital than they would have of observing these events at home. Collecting GS data for such conditions would set standards for such data collection environments. While GS databases have limitations, they provide a valuable basis for VA validation research and implementation. They also set the foundation for adding additional cases of deaths that can provide empirical evidence about the generalizability of VA methods.

We have categorized the IMMCMC dataset as gold standard, but we recognize its limitations. The sampling strategy of collecting deaths in the IMMCMC study was different from that of the PHMRC study. All deaths in study hospitals were collected for the IMMCMC dataset, while approximately 100 deaths per cause were intended for collection in the PHMRC dataset. This difference may bias the cause-symptom relationship of less frequent causes in the IMMCMC dataset towards that of the PHMRC dataset. Furthermore, while the IMMCMC dataset added 3512 death cases, some causes (e.g. AIDS and lung cancer) had fewer than 20 deaths. Changes in the Tariffs for these causes may simply reflect noise. Last, most of the additional deaths came from a site that was also a PHMRC site (Bohol, Philippines), so the results of the combined database are not as generalizable to the rest of the world as they would have been had the deaths come from regions that are absent from both datasets, such as South America, or that have low representation, such as Africa; they do, however, support broader generalizability in Asia and the Pacific.

Conclusions

Additional observations for training data are useful for refining the association between symptoms and causes. While the original dataset collected in the PHMRC study is sufficiently large to derive confidence in the Tariffs for most symptom-cause pairs that underlie the Tariff diagnostic method, adding new data further clarifies the complex associations between symptoms and causes reported during a VA interview. The addition of the IMMCMC dataset to the PHMRC database increased the cause-specific performance metrics for most causes and overall performance increased for adults, children, and neonates, at least at the population level. Including new observations changed the Tariffs of some key symptoms, which may indicate cultural differences in respondents or noisy data, but overall the inclusion of new data did not alter previous findings about the diagnostic accuracy of the Tariff method for VAs, nor its predictive performance. While the findings of this study suggest that the Tariffs are relatively invariant to cultural differences in respondent populations, this needs to be more firmly established on the basis of a large dataset of gold standard cases from a wide variety of locations. This is a priority for VA research, particularly as the method is gaining increasing popularity for widespread use in vital registration systems.

Availability of data and materials

All data generated or analysed during this study are included in this published article and its supplementary information files.

Abbreviations

- CCC: Chance-corrected concordance
- CCCSMF: Chance-corrected cause-specific mortality fraction
- COD: Cause of death
- CSMFs: Cause-specific mortality fractions
- IMMCMC: Improving Methods to Measure Comparable Mortality by Cause
- PHMRC: Population Health Metrics Research Consortium
- VA: Verbal autopsy
- VAI: Verbal autopsy instrument

References

AbouZahr C, de Savigny D, Mikkelsen L, Setel PW, Lozano R, Nichols E, et al. Civil registration and vital statistics: progress in the data revolution for counting and accountability. Lancet (London, England). 2015;386:1373–85.

Lopez AD, Salomon J, Ahmad O, Murray CJ, Mafat D. Life tables for 191 countries : data, methods and results. GPE discus. Geneva: World Health Organization; 2001.

Mathers CD, Fat DM, Inoue M, Rao C, Lopez AD. Counting the dead and what they died from: an assessment of the global status of cause of death data. Bull World Health Organ. 2005;83:171–7.

Mikkelsen L, Phillips DE, AbouZahr C, Setel PW, de Savigny D, Lozano R, et al. A global assessment of civil registration and vital statistics systems: monitoring data quality and progress. Lancet. 2015;386:1395–406.

Soleman N, Chandramohan D, Shibuya K. Verbal autopsy: current practices and challenges. Bull World Health Organ. 2006;84:239–45.

Setel PW, Sankoh O, Rao C, Velkoff VA, Mathers C, Gonghuan Y, et al. Sample registration of vital events with verbal autopsy: a renewed commitment to measuring and monitoring vital statistics. Bull World Health Organ. 2005;83:611–7.

James SL, Flaxman AD, Murray CJ, Population Health Metrics Research Consortium (PHMRC). Performance of the Tariff method: validation of a simple additive algorithm for analysis of verbal autopsies. Popul Health Metr. 2011;9:31.

Byass P, Chandramohan D, Clark SJ, D’Ambruoso L, Fottrell E, Graham WJ, et al. Strengthening standardised interpretation of verbal autopsy data: the new InterVA-4 tool. Glob Health Action. 2012;5:1–8.

McCormick TH, Li ZR, Calvert C, Crampin AC, Kahn K, Clark SJ. Probabilistic cause-of-death assignment using verbal autopsies. J Am Stat Assoc. 2016;111:1036–49.

King G, Lu Y. Verbal autopsy methods with multiple causes of death. Stat Sci. 2008;23:78–91.

Murray CJ, James SL, Birnbaum JK, Freeman MK, Lozano R, Lopez AD. Simplified symptom pattern method for verbal autopsy analysis: multisite validation study using clinical diagnostic gold standards. Popul Health Metr. 2011;9:30.

Flaxman AD, Vahdatpour A, Green S, James SL, Murray CJ, Population Health Metrics Research Consortium (PHMRC). Random forests for verbal autopsy analysis: multisite validation study using clinical diagnostic gold standards. Popul Health Metr. 2011;9:29.

Murray CJ, Lozano R, Flaxman AD, Serina P, Phillips D, Stewart A, et al. Using verbal autopsy to measure causes of death: the comparative performance of existing methods. BMC Med. 2014;12:5.

Murray CJ, Lopez AD, Black R, Ahuja R, Ali SM, Baqui A, et al. Population health metrics research Consortium gold standard verbal autopsy validation study: design, implementation, and development of analysis datasets. Popul Health Metr. 2011;9:27.

Population Health Metrics Research Consortium. PHMRC Gold Standard Verbal Autopsy Data 2005–2011. 2013.

Serina P, Riley I, Stewart A, James SL, Flaxman AD, Lozano R, et al. Improving performance of the Tariff method for assigning causes of death to verbal autopsies. BMC Med. 2015;13:291.

Murray CJ, Lozano R, Flaxman AD, Vahdatpour A, Lopez AD. Robust metrics for assessing the performance of different verbal autopsy cause assignment methods in validation studies. Popul Health Metr. 2011;9:28.

Flaxman AD, Serina PT, Hernandez B, Murray CJL, Riley I, Lopez AD, et al. Measuring causes of death in populations: a new metric that corrects cause-specific mortality fractions for chance. Popul Health Metr. 2015;13:28.

Serina P, Riley I, Hernandez B, Flaxman AD, Praveen D, Tallo V, et al. What is the optimal recall period for verbal autopsies? Validation study based on repeat interviews in three populations. Popul Health Metr. 2016;14:40.

Serina P, Riley I, Hernandez B, Flaxman AD, Praveen D, Tallo V, et al. The paradox of verbal autopsy in cause of death assignment: symptom question unreliability but predictive accuracy. Popul Health Metr. 2016;14:41.

Byass P. Usefulness of the population health metrics research consortium gold standard verbal autopsy data for general verbal autopsy methods. BMC Med. 2014;12:23.

Miasnikof P, Giannakeas V, Gomes M, Aleksandrowicz L, Shestopaloff AY, Alam D, et al. Naive Bayes classifiers for verbal autopsies: comparison to physician-based classification for 21,000 child and adult deaths. BMC Med. 2015;13:286.

Kalter HD, Perin J, Black RE. Validating hierarchical verbal autopsy expert algorithms in a large data set with known causes of death. J Glob Health. 2016;6:010601.

Acknowledgements

Not applicable.

Funding

This work was supported by a National Health and Medical Research Council of Australia project grant, Improving methods to measure comparable mortality by cause (Grant no. 631494). The funder had no role in study design, data collection and analysis, decision to publish or preparation of the manuscript.

Author information

Contributions

HRC, ADF, IDR, and ADL participated in designing the study. HRC, IDR, and NA participated in data collection. JCJ and RHH performed the statistical analyses. JCJ and RHH wrote the first draft of the manuscript. All the authors edited the manuscript versions. All the authors were involved in the interpretation of the results, and read, commented and approved the final version of the manuscript.

Ethics declarations

Ethics approval and consent to participate

The methods of this study were approved by the Medical Research Ethics Committee of the University of Queensland, Australia; the Institutional Review Board of the Research Institute of Tropical Medicine, Philippines; and the Ethical Review Committee of the International Centre for Diarrhoeal Disease Research, Bangladesh. All data were collected with informed verbal consent from participants before beginning the interview. This method of consent was approved by the review boards at each site.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1.

Improving Methods to Measure Comparable Mortality by Cause Study Sites. Description of data collection sites in the Philippines, Bangladesh, and Papua New Guinea.

Additional file 2.

Tariffs and Endorsements Rates by Cause, Symptom, and Site. Tariffs and endorsement rates with confidence intervals for each cause and symptom at all study sites from the IMMCMC and PHMRC studies. (CSV 3651 kb)

Additional file 3.

Tariff 2.0 Performance Metrics. CCC, CSMF accuracy, CCCSMF accuracy, and cause predictions across all 500 Dirichlet splits for each age module and study.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Chowdhury, H.R., Flaxman, A.D., Joseph, J.C. et al. Robustness of the Tariff method for diagnosing verbal autopsies: impact of additional site data on the relationship between symptom and cause. BMC Med Res Methodol 19, 232 (2019). https://doi.org/10.1186/s12874-019-0877-7

DOI: https://doi.org/10.1186/s12874-019-0877-7