Abstract

An abnormal growth or fatty mass of cells in the brain is called a tumor. They can be either healthy (normal) or become cancerous, depending on the structure of their cells. This can result in increased pressure within the cranium, potentially causing damage to the brain or even death. As a result, diagnostic procedures such as computed tomography, magnetic resonance imaging, and positron emission tomography, as well as blood and urine tests, are used to identify brain tumors. However, these methods can be labor-intensive and sometimes yield inaccurate results. Instead of these time-consuming methods, deep learning models are employed because they are less time-consuming, require less expensive equipment, produce more accurate results, and are easy to set up. In this study, we propose a method based on transfer learning, utilizing the pre-trained VGG-19 model. This approach has been enhanced by applying a customized convolutional neural network framework and combining it with pre-processing methods, including normalization and data augmentation. For training and testing, our proposed model used 80% and 20% of the images from the dataset, respectively. Our proposed method achieved remarkable success, with an accuracy rate of 99.43%, a sensitivity of 98.73%, and a specificity of 97.21%. The dataset, sourced from Kaggle for training purposes, consists of 407 images, including 257 depicting brain tumors and 150 without tumors. These models could be utilized to develop clinically useful solutions for identifying brain tumors in CT images based on these outcomes.

Similar content being viewed by others

Introduction

In the realm of medical image processing, the ability to classify brain tumor images holds immense importance. This capability assists medical professionals in making precise diagnoses and formulating effective treatment plans. Magnetic resonance imaging (MRI) stands out as one of the primary imaging technologies used to examine brain tissue [1]. Nevertheless, the current gold standard for diagnosing and classifying brain tumors in medical practice remains histopathological examination of biopsy specimens. However, this approach is fraught with challenges—it is laborious, time-consuming, and susceptible to human errors. These limitations underscore the urgency of develo** a fully automated technique for the multi-classification of brain tumors based on deep learning [2]. Over recent years, the medical image classification field has garnered significant attention, with convolutional neural networks emerging as the most widely employed neural network model for tackling image classification tasks [3]. A brain tumor represents an aberrant tissue where cells proliferate rapidly and uncontrollably, leading to tumor growth. Deep learning has been proposed as a solution to the challenges associated with brain tumor recognition and treatment. Notably, segmentation approaches have been instrumental in isolating abnormal tumor regions within the brain. For brain tumor-related tasks, reliable advanced artificial intelligence and neural network classification methods can play a pivotal role in early disease detection [4]. In recent times, the field of medical science has witnessed remarkable growth and success, largely driven by technological advancements. The transformative power of technology is revolutionizing the medical field. Artificial intelligence, a discipline focused on creating machines capable of independent learning without human intervention, has played a crucial role in this transformation. Machine learning has enabled the construction of computers that can emulate human thought processes and learn from experience. Real-world applications now leverage natural language understanding and deep learning techniques to address a wide array of challenges, including optimizing complex systems, categorizing vast digital datasets, identifying patterns, and advancing the development of self-driving cars [5]. Recent advancements in medical imaging, made possible by deep learning, have significantly improved the ability to diagnose a wide range of diseases. The most common and widely used machine learning method for visual learning and image recognition is the CNN architecture [6]. The human brain is safeguarded by a sturdy skull. Any expansion within this confined space can lead to significant complications. When tumors, whether normal or malignant, develop within the skull, they cause an increase in intracranial pressure. Consequently, permanent brain damage and even mortality can occur. Globally, approximately 700,000 people are affected by brain tumors, with an estimated 86,000 new cases diagnosed in 2019. In response to this challenge, researchers and scientists have been diligently working to develop more advanced tools and methods for the early detection of brain tumors [7].

The human brain, one of the most complex organs in the body, is composed of billions of individual cells that interact with each other. It is believed that the progression of unregulated cell division is responsible for the development of brain tumors. This process results in the formation of abnormal cell growth within or around the brain, which can be further categorized as either normal or malignant. The likelihood of develo** a brain tumor during one's lifetime continues to increase. Abnormal cell growth, which affects both healthy and unhealthy cells, can impair the brain's proper functioning. According to a research organization focused on cancer in the UK, 5250 people die each year from brain-related conditions. Furthermore, the World Health Organization (WHO) reports that brain tumors account for less than 2% of all human cancers. The current WHO classification for brain tumors is exclusively based on histopathology, which significantly limits its applicability in clinical settings [8].

Our current way of life wouldn't be possible without the contributions of information technology. The field that focuses on creating machines capable of autonomous learning, without human intervention, is known as artificial intelligence. Thanks to machine learning, people can now develop computers that can think and learn from experiences much like humans do. Natural language processing and deep learning play integral roles in numerous real-world applications today. For instance, they are employed to solve complex optimization problems, classify vast volumes of digital data by identifying relevant patterns, and enable self-driving cars. Deep learning, a subfield of machine learning, involves inputting information into a deep learning model, which then autonomously learns without human interference [9]. The process of diagnosing a brain tumor and determining its grade is often labor-intensive and time-consuming. Typically, patients request an MRI when the brain tumor reaches a certain size and causes various troublesome symptoms. After reviewing the patient's brain scans, if there is suspicion of a tumor, the next step is to perform a brain biopsy. Unlike magnetic resonance imaging, the biopsy procedure is invasive, and in some cases, the results may not be clear for up to a month. Professionals working with MRI employ techniques such as perfusion and biopsy to grade tumors and confirm their findings. It's worth noting that, in addition to biopsies, several innovative procedures for grading brain tumors have been developed in recent years [10]. This paper presents a novel CNN-based model for classifying brain tumors into two categories: malignant and normal. The CNN model is trained and developed using a large dataset. To enhance the accuracy of the proposed model, preprocessing techniques such as normalization and data augmentation are implemented on the dataset. Therefore, automated systems like this one are valuable for saving time and improving efficiency in clinical institutions. The proposed brain tumor grade classification model consists of five sections: “Introduction” section deals with the various types of tumors, different brain tumor grades, and their diagnosing tools. “Related work” section discusses state-of-the-art methods for brain tumor grade classification and their classification techniques. “Materials and methods” section illustrates the utilization of the dataset and the proposed model's classification architectures. “Experimental results and discussion” section discusses the outcomes of the proposed brain tumor classification hyperparameters and compares them to the outcomes of state-of-the-art methods. “Conclusion and future work” section summarizes the proposed work, concludes, and outlines the scope of future work in brain tumor grade classification.

Related work

Our current way of life wouldn't be possible without the contributions of information technology. The field that focuses on creating machines capable of autonomous learning, without human intervention, is known as artificial intelligence. Thanks to machine learning, people can now develop computers that can think and learn from experiences much like humans do. Natural language processing and deep learning play integral roles in numerous real-world applications today. For instance, they are employed to solve complex optimization problems, classify vast volumes of digital data by identifying relevant patterns, and enable self-driving cars. Deep learning, a subfield of machine learning, involves inputting information into a deep learning model, which then autonomously learns without human interference [11]. The process of diagnosing a brain tumor and determining its grade is often labor-intensive and time-consuming. Typically, patients request an MRI when the brain tumor reaches a certain size and causes various troublesome symptoms. After reviewing the patient's brain scans, if there is suspicion of a tumor, the next step is to perform a brain biopsy. Unlike magnetic resonance imaging, the biopsy procedure is invasive, and in some cases, the results may not be clear for up to a month. Professionals working with MRI employ techniques such as perfusion and biopsy to grade tumors and confirm their findings. It's worth noting that, in addition to biopsies, several innovative procedures for grading brain tumors have been developed in recent years [12].

We present a novel two-stage graph coarsening method rooted in graph signal processing and its application within the GCNN architecture. In the first coarsening stage, we employ graph wavelet transform (GWT)-based features to construct a coarsened graph while preserving the original graph's topological properties. This is achieved through the use of the graph wavelet transform. In the second phase, the coarsening problem is treated as an optimization challenge. At each level, we obtain the reduced Laplacian operator by restricting the initial Laplacian operator to a predefined subspace that maximizes topological similarity. This restriction of the initial Laplacian operator to the specified subspace yields the reduced Laplacian operator for each level. The results demonstrate that, whether used for general coarsening or as a pooling operator within the GCNN architecture, the proposed coarsening method outperforms current best practices [13].

The algorithms encompass graph embedding and graph regularization models, with their primary aim being to leverage the local geometry of data distribution. Graph Convolutional Networks (GCN), which successfully extend Convolutional Neural Networks (CNNs) to handle graphs with arbitrarily defined structures by incorporating Laplacian-based structural information, represent one of the most prominent approaches in Multiple-Source Self-Learning (MSSL) [14]. For the classification of various types of brain tumors, including both normal and abnormal magnetic resonance (MR) images, we propose the use of a differentially deep Convolutional Neural Network (CNN) model. This model generates additional differential image features from the original CNN feature maps by employing divergence operators within the differential deep-CNN architecture. The differential deep-CNN model offers several advantages, including the ability to analyze pixel direction through contrast calculations and the capability to categorize a large image database with high accuracy and minimal technical issues. As a result, the suggested strategy delivers outstanding overall performance [15]. Utilizing computer-aided mechanisms instead of traditional manual diagnostic procedures allows for superior results. Typically, this involves feature extraction using a Convolutional Neural Network (CNN), followed by data classification using a fully connected network. The proposed work employs "deep neural networks" and incorporates a CNN-based model to classify MRI results as either "tumor detected" or "tumor not detected." The model achieves an average accuracy of 96.08%, with an f-score value of 97.3 on average [16].

Due to the risk of overfitting in the development of deep Convolutional Neural Networks, it is rare for small datasets to benefit from such models. We propose a modified deep neural network and apply it to a small dataset. Additionally, we discuss the implications of our findings. Our approach entails using the VGG16 architecture with CNN as the classifier. We evaluate our proposed method's performance by testing it on the VGG16 dataset and measuring its precision, recall, and F-score to assess its effectiveness [17].

A novel hybrid model, combining the VGG16 Convolutional Neural Network (CNN) and Neural Autoregressive Distribution Estimation (NADE), referred to as VGG16-NADE, was developed. The study encompassed a dataset comprising 3,064 MRI images of brain tumors, categorized into three groups. To classify the T1-weighted contrast-enhanced MRI images, we employed the VGG16-NADE hybrid framework and compared it to other methods. The results indicated that the developed hybrid VGG16-NADE model outperforms other models in terms of accuracy, specificity, sensitivity, and the F1 score. The suggested hybrid VGG16-NADE model achieved a prediction accuracy of 96.01%, precision of 95.72%, recall of 95.64%, F-measure of 95.68%, a receiver operating characteristic (ROC) of 0.91, an error rate of 0.075, and a Matthews correlation coefficient (MCC) of 0.3564 [18].

The findings of this study demonstrate that an MRI can effectively detect brain tumors in two steps. The initial step involves an image enhancement procedure utilizing clip limit adaptable histogram equalization (CLAHE) to segment the brain MRI. The subsequent step entails identifying the type of brain tumor depicted in the MRI, employing the Visual Geometry Group-16 Layer (VGG-16) model. In specific instances, CLAHE was employed, such as applying it to the FLAIR image to enhance the green color and using it in the Red, Green, and Blue (RGB) color space. The experimental results revealed the highest performance, achieving an accuracy of 90.37%, precision of 90.22%, and recall of 87.61%. Notably, both the CLAHE approach in the RGB channel and the VGG-16 model consistently distinguished oligodendroglioma subclasses in RGB enhancement, with a precision rate of 91.08% and a recall rate of 95.97% [19].

The process of image segmentation and the transformation into models depend on their functionality, which, in turn, relies on various algorithms and the degree of technological advancement in application techniques. Through segmentation techniques, it is now possible to create three-dimensional models of a patient's body, enabling study without risking the patient's life. In this study, a combination of two methods for addressing segmentation challenges is discussed, followed by an explanation of how these methods are integrated into a hybrid algorithmic structure. Convolutional neural networks (CNNs), also known as active contour and deep multi-planar, are utilized to convert DICOM medical images (Digital Imaging and Communication Systems in Medicine) into 3D models. The data from the active contour method serves as input for deep learning [20]. In the field of medical image processing, the author proposes a Convolutional Neural Network Database Learning with Neighboring Network Limitation (CDBLNL) approach for brain tumor image classification. The suggested system architecture employs multilayer-based metadata learning, incorporating a CNN layer to provide reliable data. The approach uses two datasets (BRATS and REMBRANDT) and achieves a 97.2% accuracy result in brain MRI categorization [21]. This study suggests using cervigram images for cervical cancer detection. The Associated Histogram Equalization (AHE) approach enhances cervical image edges, while the finite ridgelet transform creates a multi-resolution image. This modified multi-resolution cervical image provides ridgelets, gray-level run-length matrices, moment invariants, and an enhanced local ternary pattern. A feed-forward, backward-propagation neural network trains and tests these derived features to identify cervical images as normal or abnormal. Morphological methods are employed on aberrant cervical images to detect and segment carcinoma. The cervical cancer detection method demonstrates 98.11% sensitivity, 98.97% specificity, 99.19% accuracy, a PPV of 98.88%, an NPV of 91.91%, an LPR of 141.02%, an LNR of 0.0836, 98.13% precision, 97.15% FPs, and 90.89% FNs [22]. Compared to these state-of-the-art classifiers, the author's suggested Particle Swarm Optimization (PSO) technique, applied to selected characteristics of the NSL-KDD dataset, reduced the false alarm rate while increasing the detection rate and accuracy of the IDS. Measures of IDS performance such as accuracy, precision, false-positive rate, and detection rate are included in this analysis. Out of a total set of 41 characteristics, 10 were selected, which exhibited low computational complexity, 99.32% efficiency, and a 99.26% detection rate in the experiment [23]. In order to find the best feature subsets in the NSL-KDD dataset, the author suggests a new feature selection approach based on a genetic algorithm (GA). To further improve DR (Detection Rate) and ACC (Accuracy), hybrid classification utilizing logistic regression (LR) and decision tree (DT) has been performed. This study optimized the selected optimal features by applying and comparing the results of multiple meta-heuristic techniques. According to the data, the Grey Wolf Optimization (GWO) method achieves the highest accuracy (99.44%) and detection rate (99.36%) when only 20% of the original characteristics are used [24]. "For detecting tumors in MRI scans, a modified version of the pre-trained InceptionResNetV2 model is utilized. Once a tumor is located, its stage (which may be glioma, meningioma, or pituitary cancer) is determined using a combination of InceptionResNetV2 and Random Forest Tree (RFT). To address the limited size of the dataset, we employ Cyclic Generative Adversarial Networks (C-GAN). The experimental findings indicate that the proposed models for tumor detection and classification are highly accurate (99% and 98%, respectively) [25]. Author used a smart combination of deep learning techniques to reduce unwanted speckles in breast ultrasound pictures. We first improved the image contrast, then made fine details clearer with special filters. To fix overly sharp areas, we applied a filter, and we also added a feature to protect important edges in the pictures. After training our model a hundred times, we achieved excellent results. Both the error rate and the number of false identifications were less than 1.1%. This shows that our model, called LPRNN, is good at reducing speckles without losing important parts of the images [26]. Author used two pre-trained CNN models, VGG16 and VGG19, to extract features from the data. Then, we applied a correntropy-based learning strategy with the extreme learning machine (ELM) to identify the most important features. We enhanced these features further using a robust covariant technique based on partial least squares (PLS) and combined them into one matrix. Finally, we used this combined matrix as input for the ELM to classify the data. To test our method, we ran experiments on the BraTS datasets. The results were impressive, with accuracy rates of 97.8%, 96.9%, and 92.5% for BraTs2015, BraTs2017, and BraTs2018, respectively [27].

Materials and methods

While there has been a significant amount of research on brain tumors, only a limited amount of work has been published comparing four deep learning models—VGG16, VGG19, ResNet101, and DenseNet201—in order to draw conclusions regarding the distinctions between tumor types. Next, we generate accuracy, loss, and learning curve graphs, and establish testing procedures to visualize and compare the performance of these models.

Materials

The proposed method utilizes the publicly available dataset "Brain MRI Images for Brain Tumor Identification," created by Abd El Kader on February 15, 2020, which can be accessed at (https://www.kaggle.com/navoneel/brain-mri-images-for-brain-tumor-detection/). The dataset comprises two main subsets: brain tumor images (n = 257) and normal images (n = 150), all with dimensions of 467 × 586 × 3. This dataset is divided into two sections: one designated as the training section and the other as the testing section. Table 1 provides an overview of the dataset categories, while Table 2 and Fig. 1 showcase sample images from the dataset.

Brain tumor prediction using pretrained CNN models

Convolutional neural network (CNN) models have repeatedly demonstrated their ability to produce high-quality results in various healthcare research and application areas. However, building these pretrained CNN models from scratch for predicting neurological conditions using computed tomography (CT) data has always been a challenging task [28]. These pretrained models are inspired by the concept of "transfer learning," where a deep learning model trained on a large dataset is employed to address a problem in a smaller dataset [29]. Transfer learning leverages the idea that one dataset can be used to train another, eliminating the need for a large dataset and the lengthy training periods often required by many deep learning models. In this paper, four deep learning models are utilized: DenseNet101, DenseNet201, VGG16, and VGG19. These models were initially trained on ImageNet and subsequently fine-tuned using examples of cancerous tissue. After pretraining, a fully connected layer is added to the last layer [30]. Tables 3 and 4 provide architectural descriptions and functional block details for each design. Table 4 presents the parameters, while Figs. 2 and 3 illustrate the functional block diagrams of VGG16, DenseNet101, VGG19, and DenseNet201.

DenseNet101 comprises 10.2 million trainable parameters and includes one convolutional layer, one max pooling layer, three transition layers, one average pooling layer, one fully connected layer (FCL), and one Softmax layer. It also features four dense block layers, with the third and fourth dense blocks each containing one convolution layer with a stride of 1 × 1 and the third and fourth dense blocks each having a stride of 3 × 3, respectively [31]. The DenseNet201 model also consists of 10.2 million trainable parameters and consists of one convolution layer, one max pooling layer, three transition layers, one average pooling layer, one fully connected layer, and one softmax layer. Additionally, it incorporates four dense block layers, with the third and fourth dense block layers each housing two convolution layers with stride ratios of 1 × 1 and 3 × 3, respectively [32]. VGG16 boasts 138 million trainable parameters and includes thirteen convolutional layers, five max pooling layers, three fully connected layers, and one softmax layer.

Methods

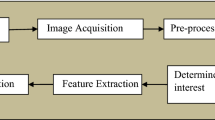

Figure 4 illustrates the proposed model for brain tumor identification. This model categorizes brain tumor images into two distinct categories: normal and malignant. To ensure numerical stability and enhance the performance of deep learning models, the dataset underwent a normalization pre-processing approach. The computed tomography images initially exist in either monochrome or grayscale formats, with pixel values ranging from 0 to 255. Normalizing these input images is a crucial step, as it significantly accelerates the training of deep learning models.

Having a substantial amount of data is essential when attempting to improve the performance of a deep learning model. However, access to these datasets often faces various constraints. Consequently, to overcome these challenges, data augmentation methods are employed to increase the total number of sample photos within the dataset. Various techniques for enhancing the data, including flip**, rotating, adjusting intensities, and zooming, are implemented. In Fig. 5, you can observe examples of the horizontal flip** technique as well as the vertical flip** approach.

Figure 6 depicts a rotation augmentation approach that is implemented in a clockwise direction by an angle of 90° each. Using intensity factor values such as 0.2 and 0.4 as examples, the intensity data augmentation technique that is illustrated in Fig. 7 is also applied to the image dataset.

Table 5 presents training images captured both before and after the augmentation process. Additionally, the input dataset exhibits an uneven distribution of classes. Data augmentation strategies are employed to address this identified imbalance issue. After applying these data augmentation techniques, the sample dataset for each class is increased by 70%, resulting in the dataset being expanded to a total of 2850 images.

Experimental results and discussion

An experimental study is being conducted to detect brain tumors from CT scans using four pretrained hybrid CNN models: VGG16, DenseNet 101, DenseNet 201, and VGG19. These hybrid classifiers were implemented using CT images from the brain tumor dataset. For training and testing, a total of 205 images were used for training, while 52 images were reserved for testing. The initial dimensions of the brain scans were reduced from 467 × 586 to 224 × 224 to facilitate transfer learning. The models were trained with a batch size of 16, determined through empirical methods. Each model underwent a total of 20 training epochs, with the learning rate determined empirically. The execution time of our study was longer due to the complexity and high frequency of layers in the network, which justified the good accuracy we obtained. The longer execution time in the current study can be attributed to the number of hidden layers, pooling layers, and batch sizes. It should be noted that training deeper networks requires more time than training shallower or simpler networks. Training was carried out using the Adam optimizer. Various performance indicators, including accuracy, precision, sensitivity, specificity, and the F2 score, were used to assess the performance of each model.

Performance metrics

The following measurements have been used to evaluate the model that was suggested: Sensitivity, which refers to the percentage of true positives that can be identified without error; Specificity, which reflects the percentage of false negatives that are accurately identified; Precision can be defined as the ratio of correct positive forecasts to the total number of positive predictions, whereas accuracy refers to the proportion of true positives as well as true negatives. The Eqs. 1, 2, 3, 4, and 5 each have a parameter that is described by that equation.

where true positives (α) are the correctly classified positive cases, true negatives (ø) are the correctly classified negatives, false positives (µ) are the incorrectly classified positives, false negatives (β) are the incorrectly classified negatives.

A comparison of the training results obtained from the several models

To obtain a range of performance parameters, four distinct models, each with a unique combination of epochs and batch sizes, are employed. These parameters encompass training loss, error rate, testing loss, and testing accuracy. Specifically, the four different models—VGG16, DenseNet 101, DenseNet 201, and VGG19—were each tested with 20 epochs and a batch size of 16. The training of these deep learning models is carried out using the Adam optimizer. Table 6 illustrates that among these models, VGG19 exhibits the highest performance during testing with a batch size of 16. It achieved a precision of 99.5%, sensitivity of 95.86%, specificity of 99.5%, an accuracy rate of 99.11%, and an F2 score of 97.21%. Furthermore, as shown in Table 7, VGG-19 outperforms the other models during the training phase, evidenced by its lower testing loss and the highest testing accuracy. VGG19 consists of 19 layers, which is similar in number to DenseNet101 and DenseNet201. It features approximately 8 million parameters, which is fewer than DenseNet101 and DenseNet201. While DenseNet101 and DenseNet201 share similar functionalities, DenseNet201 has a greater number of layers, resulting in longer processing times. After 20 iterations, the performance parameters of each model remain consistent with one another. Figure 8 presents a comparison of various CNN models with a batch size of 16, while Fig. 9 displays the confusion matrix for the models VGG-16 and DenseNet101, as well as VGG-19 and DenseNet201, with a batch size of 16 shown in Table 8.

Various pretrained model confusion matrices

Figure 9 displays the confusion matrices for all deep learning models with a batch size of 16. These matrices represent both accurate and inaccurate predictions equally. Each column is labeled with the class name to which it belongs, such as "normal" and "malignant." The accuracy of image classifications by a particular model can be determined from the diagonal values. This confusion matrix serves as the basis for assessing the accuracy of each model for batch sizes of 16. Figure 11 presents a graphical analysis of the accuracy of all the models. In Fig. 9, it is evident that VGG19 and DenseNet201 are the top performers in terms of accuracy obtained, achieving 99.11% and 97.13%, respectively, for a batch size of 16. These results indicate that VGG19 is the best-performing model among those tested for batch sizes of 16. Figure 12 depicts the learning rate curves for VGG19 and DenseNet201 at a batch size of 16. The learning rate curve indicates the speed at which a model learns, which can vary from slow to rapid. There is a point where the loss ceases to decrease and starts to increase as the learning rate rises. To achieve optimal results, the learning rate should be positioned to the left of the lowest point on the graph.

If we examine Fig. 10 for VGG19's learning rate, we can observe that the lowest loss occurs around point 0.001. This suggests that the optimal learning rate for VGG19 should be between 0.0001 and 0.001. Similarly, the lowest loss point for DenseNet201 can be observed at 0.00001 in Fig. 10, which illustrates the learning rate. Therefore, the ideal learning rate for DenseNet201 falls between 0.000001 and 0.00001, with the loss being inversely proportional to the learning rate. Figure 11 displays the loss convergence map for the VGG19 and DenseNet201 CNN models at a batch size of 16.

The loss initially decreased as the models learned from the data, continuing until they reached a point where further improvement during training was no longer possible. Testing losses were computed for each epoch, revealing consistently small values that increased as the number of epochs progressed. Figure 11 illustrates that for a batch size of 16, both VGG19 and DenseNet201 consistently achieve their lowest loss at every epoch. Specifically, after processing 120 batches, VGG-19 exhibits lower loss than DenseNet-201. In comparison to DenseNet201, the testing and training loss for VGG19 range from 0 to 0.2, while for DenseNet201, it ranges from 0.2 to 0.4. It is evident that VGG19 outperforms DenseNet201 at a batch size of 16 in terms of training and testing loss.

Performance metric evaluation

As demonstrated in Table 9, the results of the proposed model are compared to those of state-of-the-art models using CT scans. Thanks to the pre-processing techniques applied to the dataset, the proposed model was able to produce a good set of results. In order to further enhance the accuracy of the proposed model, data augmentation and normalization strategies have been implemented for VGG19 and DenseNet201. The designed model achieves better results when using the ADAM optimizer with a batch size of 16. Table 9 provides a comparison of classification accuracy between the proposed model and other state-of-the-art models. The analysis in Table 9 shows that the proposed model outperforms the state-of-the-art methods in terms of all parameters, achieving a classification accuracy of 99.11%, surpassing other existing methods, despite the size of the image dataset. Figure 12 visually represents the comparison of classification accuracy between the proposed brain tumor classification model and other state-of-the-art models.

Potential applications of proposed model

Deep learning-based brain tumor classification has numerous potential applications across various domains, including healthcare, medical research, and image analysis. Here are some key potential applications,

-

Deep learning models can aid radiologists and clinicians in accurately diagnosing brain tumors from medical imaging data such as MRI scans. This can lead to earlier detection and better treatment planning for patients.

-

Deep learning algorithms can be used to segment brain tumors from surrounding healthy tissue in medical images. This is valuable for precise surgery planning and radiation therapy. Deep learning can provide decision support for clinicians by suggesting treatment options based on a patient's tumor characteristics and medical history.

-

Deep learning can assist in patient selection for clinical trials, ensuring that participants meet specific criteria related to tumor types and characteristics.

Conclusion and future work

In this study, our aim was to thoroughly evaluate the capabilities of four powerful deep learning models: VGG16, DenseNet101, VGG19, and DenseNet201. We sought to assess their effectiveness in distinguishing malignant tumors. VGG19 and DenseNet201 emerged as top performers, particularly when used with a batch size of 16. We subjected these models to rigorous training, systematic analysis, and presented the synthesis of our findings. Furthermore, we delved into the realm of optimization to maximize the potential of the VGG19 model. By fine-tuning batch sizes, optimizing with the Adam optimizer, and adjusting the number of epochs, we achieved exceptional results. Specifically, the VGG19 model, when combined with the Adam optimizer and a batch size of 16, achieved an impressive accuracy of 99.11% and a sensitivity of 95.86%. Similarly, the DenseNet201 model, under the same conditions, delivered competitive results with an accuracy of 97.13% and a sensitivity of 94.32%. These comparative findings hold promise for providing valuable support to radiologists seeking a reliable second opinion tool or simulator, potentially offering a cost-effective alternative in the field of tumor diagnosis. Our central mission throughout this study has been to pioneer methods for early malignancy detection, envisioning a tool that could empower radiologists in their diagnostic endeavors. The insights gained from this research contribute significantly to the advancement of precision-driven diagnostic models within the realm of deep learning. However, it is important to acknowledge a notable limitation in our research. We limited our training and testing efforts exclusively to a single axial dataset comprising brain malignant samples. Recognizing the potential for greater generalization and robustness, we anticipate future expansions of our model to include coronal and sagittal datasets in both the training and testing phases. Additionally, our ongoing pursuit of excellence motivates us to explore a wide array of pretrained models and innovative optimization strategies, promising to further enhance the efficiency and reliability of our model.

Availability of data materials

The datasets used during the current study are available from the corresponding author on reasonable request.

References

Gu X, Shen Z, Xue J, Fan Y, Ni T. Brain tumor MR image classification using convolutional dictionary learning with local constraint. Front Neurosci. 2021;15(1):1–12.

Irmak E. Multi-classification of brain tumor MRI images using deep convolutional neural network with fully optimized framework. Iran J Sci Technol Trans Electric Eng. 2021;45(1):1015–36.

Das S, Aranya RR, Labiba NN. Brain tumor classification using convolutional neural network. In: 1st International Conference on advances in science, engineering and robotics technology; 2019. 19257049:1–6. https://doi.org/10.1109/ICASERT.2019.8934603.

Younis A, Qiang Li, Nyatega CO, Adamu MJ, Kawuwa HB. Brain tumor analysis using deep learning and VGG-16 ensembling learning approaches. Appl Sci. 2022;2(12):1–20.

Padmini B, Johnson CS, Ajay Kumar B, Rajesh Yadav G. Brain tumor detection by using CNN and Vgg-16. Int Res J Modern Eng Technol Sci. 2022;4(6):4840–3.

Mandal S, Pradhan A, Vishwakarma S. VGG-16 convolutional neural networks for brain tumor detection. Shodh Samagam. 2022;5(1):78–84.

Majib MS, Rahman MM, Sazzad TS, Khan NI, Dey SK. VGG-SCNet: a VGG net-based deep learning framework for brain tumor detection on MRI images. IEEE Access. 2021;9:116942–52.

Minarno AE, Sasongko BY, Munarko Y, Nugroho HA, Ibrahim Z. Convolutional neural network featuring VGG-16 model for glioma classification. Int J Inform Visual. 2022;6(3):660–6.

Chandra S, Priya S, Maheshwari D, Naidu R. Detection of brain tumor by integration of VGG-16 and CNN model. Int J Creat Res Thoughts. 2020;8(7):298–304.

Anaraki AK, Ayati M, Kazemi F. Magnetic resonance imaging-based brain tumor grades classification and grading via convolutional neural networks and genetic algorithms. Biocybern Biomed Eng. 2018;39(1):63–74.

Lei X, Pan H, Huang X. A dilated CNN model for image classification. IEEE Access. 2019;7(1):124087–95.

Padole H, Joshi SD, Gandhi TK. Graph wavelet-based multilevel graph coarsening and its application in graph-CNN for Alzheimer’s disease detection. IEEE Access. 2020;8:60906–17.

Sichao Fu, Liu W, Tao D, Zhou Y, Nie L. HesGCN: Hessian graph convolutional networks for semi-supervised classification. Inf Sci. 2019;2(1):1–28.

Kader IAE, Guizhi Xu, Shuai Z, Saminu S, Javaid I, Ahmad IS. Differential deep convolutional neural network model for brain tumor classification. Brain Sci. 2021;2(11):1–16.

Choudhury CL, Mahanty C, Kumar R. Brain tumor detection and classification using convolutional neural network and deep neural network. 2020;19806176:1–6. https://doi.org/10.1109/ICCSEA49143.2020.9132874.

Singh A, Deshmukh R, Jha R, Shahare N, Verma S. Brain tumor classification using CNN and VGG16 model. Int J Adv Res Innov Ideas Educ. 2020;6(2):1331–6.

Sowrirajan SR, Balasubramanian S, Raj RSP. MRI brain tumor classification using a hybrid VGG16-NADE model. Braz Arch Biol Technol. 2023;66(1):1–18.

Aulia S, Rahmat D. Brain tumor identification based on VGG-16 architecture and CLAHE method. Int J Inform Visual. 2022;6(1):96–102.

Mamdouh R, El-Khamisy N, Amer K, Riad A, El-Bakry HM. A new model for image segmentation based on deep learning. Int J Online Biomed Eng. 2021;17(7):28–46.

Saravanan S, Vinoth Kumar V, Sarveshwaran V, Alagiri Indirajithu D, Elangovan Allayear SM. Computational and mathematical methods in medicine glioma brain tumor detection and classification using convolutional neural network. Comput Math Methods Med. 2022;4380901:1–12.

Srinivasan S, Raju ABK, Mathivanan SK, Jayagopal P. Local-ternary-pattern-based associated histogram equalization technique for cervical cancer detection. Diagnostics. 2023;3(13):1–15.

Srinivasan S, Rajaperumal RN, Mathivanan SK, Jayagopal P, Krishnamoorthy S. Detection and grade classification of diabetic retinopathy and adult vitelliform macular dystrophy based on ophthalmoscopy images. Electronics. 2023;3(12):1–14.

Kunhare N, Tiwari R, Dhar J. Particle swarm optimization and feature selection for intrusion detection system. Springer India. 2020;45(1):1–14.

Kunhare N, Tiwari R, Dhar J. Intrusion detection system using hybrid classifiers with meta-heuristic algorithms for the optimization and feature selection by genetic algorithm. Comput Electr Eng. 2022;103(1):1–21.

Gupta RK, Bharti S, Kunhare N, Sahu Y, Pathik N. Brain tumor detection and classification using cycle generative adversarial networks. Interdiscip Sci Comput Life Sci. 2022;14(2):485–502.

Vimala BB, Srinivasan S, Mathivanan SK, Muthukumaran V, Babu JC, Herencsar N, Vilcekova L. Image noise removal in ultrasound breast images based on hybrid deep learning technique. Sensors. 2023;3(23):1–16.

Khan MA, Ashraf MA. Multimodal brain tumor classification using deep learning and robust feature selection: a machine learning application for radiologists. Diagnostics. 2020;10(8):1–12.

Xu Y, Jia Z, Ai Y. Deep convolutional activation features for large scale brain tumor histopathology image classification and segmentation. In: IEEE international conference on acoustics, speech and signal processing, South Brisbane; 2015.

Sadad T, Rehman A, Munir S. Brain tumor detection and multi-classification using advanced deep learning techniques. Microsc Res Tech. 2021;84(6):1296–308.

Tandel GS, Balestrieri A, Jujaray T, Khanna NN, Saba L, Suri JS. Multiclass magnetic resonance imaging brain tumor classification using artificial intelligence paradigm. Comput Biol Med. 2020;122(1):1–11.

Srinivas Rao B. A hybrid CNN-KNN model for MRI brain tumor classification. Int J Recent Technol Eng. 2019;8(2):5230–5.

Ayadi W, Elhamzi W, Atri M. A new deep CNN for brain tumor classification. In: 20th International conference on sciences and techniques of automatic control and computer engineering, Monastir, Tunisia; 2020. p. 266–270.

Acknowledgements

This research was financially supported by Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2023R235), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia. Acknowledgements: The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University (KKU) for funding this research through the Research Group Program Under the Grant Number: (R.G.P.2/515/44).

Funding

This research was financially supported by Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2023R235), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia. Acknowledgements: The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University (KKU) for funding this research through the Research Group Program Under the Grant Number: (R.G.P.2/515/44).

Author information

Authors and Affiliations

Contributions

All authors contributed equally to the conceptualization, formal analysis, investigation, methodology, and writing and editing of the original draft. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Institutional Review Board

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Rohini, A., Praveen, C., Mathivanan, S.K. et al. Multimodal hybrid convolutional neural network based brain tumor grade classification. BMC Bioinformatics 24, 382 (2023). https://doi.org/10.1186/s12859-023-05518-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12859-023-05518-3