Abstract

With the ever-growing amount of data collected by the ATLAS and CMS experiments at the CERN LHC, fiducial and differential measurements of the Higgs boson production cross section have become important tools to test the standard model predictions with an unprecedented level of precision, as well as to search for deviations that could reveal the presence of physics beyond the standard model. These measurements are generally designed to be easily comparable to any present or future theoretical prediction, and to achieve this goal it is important to keep the model dependence to a minimum. Nevertheless, reducing the model dependence usually comes at the expense of the measurement precision, preventing the full potential of the signal extraction procedure from being exploited. In this paper a novel methodology based on the machine learning concept of domain adaptation is proposed, which allows a complex deep neural network to be used in the signal extraction procedure while ensuring a minimal dependence of the measurements on the theoretical modeling of the signal.


1 Introduction

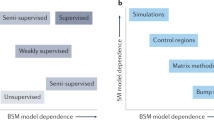

High energy physics (HEP) experiments report their results in a number of different ways, such as showing exclusion limits on particular theoretical models, presenting the measured production cross section of the process of interest, or measuring parameters of a given model. Different ways of reporting the results are usually characterized by different degrees of underlying assumptions, which make the measurements more (fewer assumptions) or less (more assumptions) usable for reinterpretation.

Measurements of fiducial differential production cross sections are usually designed to minimize the underlying model assumptions, such that they can be easily reinterpreted to set constraints on, in principle, any theoretical model. Typically these are measurements of cross sections in bins of the observable of interest (differential), with limited extrapolation to a restricted portion of the phase space (fiducial volume) at particle level, i.e. prior to the simulation of the interaction with the detector. This phase space is defined to match the experimental event selection as closely as possible, so that the extrapolation introduces as little model dependence as possible (certainly much less than would arise from the assumptions needed if the measurement were reported in the full phase space).

These measurements are of particular interest in the fields of standard model (SM) and Higgs boson physics at the CERN Large Hadron Collider (LHC), and the ATLAS and CMS experiments have already published many measurements of this kind.

In the case of Higgs boson cross section measurements, a complementary approach, known as the Simplified Template Cross Section (STXS) framework [2], was formulated in the past few years. […] The unfolding procedure, necessary for taking into account the smearing introduced by the detector and for the extrapolation of the result to the fiducial region, can also be a source of possible bias. The response matrix quantifying the smearing between the reconstructed- and particle-level phase spaces may indeed depend on the physics model. If the response matrix is nearly diagonal, however, this dependence is expected to be small and can be neglected.

Finally, another contribution originates from the definition of the analysis phase space itself. In fact, the event selection efficiency, as well as the acceptance factor, may be different depending on the considered signal model. The former refers to the probability of reconstructing a signal event in the fiducial volume, and hence depends on the detector smearing, whereas the latter can be estimated a posteriori using only particle-level quantities and therefore be accounted for.

The approach presented in this study is focused on the reduction of the model dependence originating from the signal extraction procedure as described in Sect. 3. The other sources of model dependence described above are usually tackled by means of different approaches and are not covered by this study.

3 Model dependence reduction as a problem of domain adaptation

In order to preserve the model independence of the differential and fiducial analyses, it is necessary to define a learning algorithm that classifies events correctly and at the same time does not significantly distinguish different physics models assumed to generate signal events.

The latter goal falls within the scope of what is usually called domain adaptation (DA) [3], a branch of ML concerned with how an algorithm performs when evaluated on a data set statistically different from the one it was trained on. Several techniques have been proposed to build DNNs whose performance is robust when applied to a range of different data sets, called domains [4, 5].

In the use case of \(\mathrm {H}\rightarrow \mathrm {W^+}\mathrm {W^-}\) STXS measurements, the different data sets correspond to events generated assuming different signal models, and DA is achieved if the network is agnostic with respect to the considered signal hypothesis, which in practice means that the shape of the output discriminant is the same regardless of the model assumption.

3.1 Implementation of an adversarial deep neural network

The implementation of DA developed in this work is based on an adversarial deep neural network (ADNN). The ADNN is a system of two networks, a classifier (C) and an adversary (A), which are trained competitively to perform different tasks: the classifier aims to determine whether an event is signal- or background-like, and is trained on a data sample including events from different domains, i.e., different signal models. The goal of the adversary, on the other hand, is to guess the physics model of a signal event, predicting the domain from the second-to-last layer of the classifier.

The two networks are trained so that C learns to discriminate between signal and background, but is penalized if the optimized data representation contains too much information on the domain of origin of signal events. This training approach favours features of the classifier input variables that provide discriminating power for the main learning task (signal-to-background separation) without relying on the variations of the inputs due to the different signal models. This goal is achieved with a two-step training procedure on a labeled data sample, described in the following.

In each epoch the classifier is first trained with a combined loss function, defined as

\[ {\mathcal {L}} = {\mathcal {L}}(C) - \alpha \, {\mathcal {L}}(A), \]

where \({\mathcal {L}}(C)\) is the categorical cross-entropy (CCE) loss function of the classifier, used because the signal has to be discriminated from more than one background process, \({\mathcal {L}}(A)\) is the CCE loss function of the adversary, and \(\alpha \) is a configurable hyperparameter that regulates how strongly the network is penalized for learning the signal model. During this first step the weights of A are kept frozen and the minimization is performed only with respect to the weights of C. Subsequently, in the same epoch, the adversary is trained with the \({\mathcal {L}}(A)\) loss function. Algorithm 1 schematizes the training procedure.
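The two-step procedure can be sketched in plain Python. This is a minimal, framework-agnostic sketch and not the authors' Algorithm 1: it assumes the combined classifier objective takes the usual adversarial form \({\mathcal {L}}(C) - \alpha \, {\mathcal {L}}(A)\), uses natural-logarithm cross-entropy, and represents the actual gradient updates by two hypothetical callables, `classifier_step` and `adversary_step`, which in a Keras/TensorFlow implementation would update only the weights of C (with A frozen) and only the weights of A, respectively.

```python
import math
from typing import Callable, Sequence


def categorical_cross_entropy(labels: Sequence[int],
                              probs: Sequence[Sequence[float]],
                              eps: float = 1e-7) -> float:
    """Mean categorical cross-entropy (natural log) for integer class labels."""
    total = 0.0
    for label, p in zip(labels, probs):
        # Clip the predicted probability of the true class to avoid log(0).
        total -= math.log(min(max(p[label], eps), 1.0))
    return total / len(labels)


def combined_loss(loss_c: float, loss_a: float, alpha: float) -> float:
    """Assumed classifier objective L(C) - alpha * L(A): minimizing it improves
    the signal/background separation while making the adversary's task harder."""
    return loss_c - alpha * loss_a


def train_adversarial(n_epochs: int,
                      classifier_step: Callable[[], None],
                      adversary_step: Callable[[], None]) -> None:
    """Two-step schedule per epoch; the hypothetical callables do the updates."""
    for _ in range(n_epochs):
        # Step 1: update only C's weights on L(C) - alpha * L(A); A is frozen.
        classifier_step()
        # Step 2: update only A's weights on its own loss L(A).
        adversary_step()
```

The ordering inside the loop is the essential design choice: C always sees the adversary from the end of the previous epoch, and A is then retrained against the updated representation of C.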

The hyperparameters defining the ADNN need to be optimized so that C is able to discriminate events while simultaneously preventing A from inferring the domain. If and when an equilibrium between the performances of the two networks is reached, the output of the classifier is independent of the signal model.

The general structure of an ADNN with three output nodes for both the classifier and the adversary is shown as an example in Fig. 1: the classifier takes as input a set of m features \(\{x_i\}\) and has to discriminate the signal from two possible background processes; its second-to-last layer (commonly referred to as the representation) constitutes the input layer of the adversary, which is trained to predict the model used to generate each training event, randomly chosen among the SM signal hypothesis and a number of possible BSM scenarios. In principle, the alternative scenarios should cover the full spectrum of relevant BSM models.

The structures of the classifier and the adversary can be defined using different hyperparameter values: the architecture of the entire ADNN needs to be optimized both for the separation of signal from background and for the decorrelation of the classifier output scores from the domain to which signal events belong, as will be described in Sect. 4.2. Such an optimization can be accomplished by maximizing the categorical accuracy of the classifier while simultaneously minimizing the two-sample Kolmogorov–Smirnov (K–S) test statistic between the classifier output distributions evaluated on signal events simulated under the different considered hypotheses. The categorical accuracy is the fraction of events correctly classified; for a model trained on a dataset with the same number of events in each class, it is directly related to the diagonal elements of the confusion matrix. When an imbalanced dataset is used, this relation does not hold, and other metrics based on the confusion matrix can be employed to evaluate the performance. The K–S test statistic provides a measure of compatibility, quantifying the distance between two distributions. One may therefore minimize an objective function defined as the average of the K–S test statistics computed between the classifier output shapes of signal events simulated under the SM and under each of the considered alternative hypotheses; this objective function will be referred to as the average K–S test statistic in the following. If no residual model dependence is left in the classifier outputs, the distributions of signal events generated under different model assumptions are expected to be compatible within the statistical accuracy. In this way, the best estimate of the hyperparameters defining the ADNN can be found.
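The average K–S objective can be written down explicitly. The plain-Python sketch below computes the two-sample K–S statistic as the maximum distance between the two empirical cumulative distributions and averages it over the alternative hypotheses; the dictionary layout and the `"SM"` key are illustrative assumptions, and event weights are ignored. In practice `scipy.stats.ks_2samp` returns the same statistic together with a p value.

```python
from bisect import bisect_right
from typing import Dict, Sequence


def ks_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: max |F_a(x) - F_b(x)|."""
    a_sorted, b_sorted = sorted(a), sorted(b)
    n_a, n_b = len(a_sorted), len(b_sorted)
    d = 0.0
    # Both empirical CDFs only jump at sample points, so the supremum of
    # their difference is reached at one of the pooled sample values.
    for x in a_sorted + b_sorted:
        cdf_a = bisect_right(a_sorted, x) / n_a
        cdf_b = bisect_right(b_sorted, x) / n_b
        d = max(d, abs(cdf_a - cdf_b))
    return d


def average_ks(scores_by_model: Dict[str, Sequence[float]],
               reference: str = "SM") -> float:
    """Average K-S statistic between the reference (SM) classifier-output
    distribution and each alternative signal hypothesis."""
    ref = scores_by_model[reference]
    others = [s for name, s in scores_by_model.items() if name != reference]
    return sum(ks_statistic(ref, s) for s in others) / len(others)
```

Fed with the VBF-output of validation signal events for each of the seven hypotheses, `average_ks` returns the quantity to be minimized.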

4 The H\(\rightarrow \)W\(^+\)W\(^-\) case

In this section the application of the adversarial method to the \(\mathrm {H}\rightarrow \mathrm {W^+}\mathrm {W^-}\) STXS cross section measurement at the LHC is shown, as a suitable case study in which all the issues connected with the model dependence are present. It can be seen as a simplified version of a possible SM analysis, performed purely at particle level on a simulated data sample of pp collisions at a centre-of-mass energy of 13 TeV, corresponding to an integrated luminosity of 138 fb\(^{-1}\).

The analysis targets events in which a Higgs boson is produced via vector boson fusion (VBF) and subsequently decays to a pair of opposite-sign W bosons, each decaying in turn to an electron or muon and a neutrino.

The presence of neutrinos in the final state prevents the full kinematic reconstruction of the event, and thus the measurement of the invariant mass of the system, removing the possibility of using a readily available model-independent discriminating variable.

The VBF mechanism is interesting because a precision measurement of its cross section allows tight constraints to be placed on the Higgs boson couplings to vector bosons, and thus provides an important test of the SM predictions. Several BSM theories indeed predict that the HWW vertex, and more generally the HVV vertex, has different CP properties, by introducing anomalous couplings between vector bosons and the Higgs boson. These models are summarized by the following equation, which represents the general scattering amplitude describing the interaction between a spin-0 Higgs boson (H) and two spin-1 gauge bosons (\({\mathrm {V}_1 \mathrm {V}_2}\)):

where \(f^{(\mathrm {i})\upmu \upnu } = \epsilon ^\upmu _{\mathrm {V}_\mathrm {i}}q^\upnu _{\mathrm {V}_\mathrm {i}} - \epsilon ^\upnu _{\mathrm {V}_\mathrm {i}}q^\upmu _{\mathrm {V}_\mathrm {i}}\) and \(\tilde{f}^{(\mathrm {i})}_{\upmu \upnu } = \frac{1}{2} \epsilon _{\upmu \upnu \uprho \upsigma } f^{(\mathrm {i}), \uprho \upsigma }\) are the field strength tensor and the dual field strength tensor of a gauge boson with momentum \(q_{\mathrm {V}_\mathrm {i}}\), polarization \(\epsilon _{\mathrm {V}_\mathrm {i}}\) and pole mass \(m_{\mathrm {V}_\mathrm {i}}\) [6]. \(\Lambda ^{\mathrm {VV}}_{1}\) is the energy scale of the BSM physics and is a free parameter of the model. For the sake of clarity, let \(L_1\) be

The leading order (LO) SM-like contribution corresponds to \(a^{\mathrm {VV}}_1 = 1\) with VV = ZZ, WW, and \(L_1, a_2, a_3 = 0\). These are CP-even interactions. There cannot be LO couplings to massless gauge bosons, so there are no contributions from photons and gluons at this order. Any other VV couplings are ascribed to anomalous couplings, which can be either small SM corrections due to loop effects or new BSM phenomena. In particular, \(a^{\mathrm {VV}}_2 = 1\) describes loop-induced CP-even couplings (such as HZ\({\gamma }\), H\({\gamma \gamma }\) and Hgg), which are parametrically suppressed by the coupling constants \(\alpha \) and \(\alpha _\mathrm {s}\). These interactions are described by the model that will be referred to as the H0PH physics model in the following.

The \(L_1\) term is associated with CP-even HVf\(\bar{\text {f}}\) and Hf\(\bar{\text {f}}\)f\(\bar{\text {f}}\) interactions, which are predicted by the physics model denoted H0L1.

Finally, \(a^{\mathrm {VV}}_3 = 1\) accounts for CP-odd couplings, which in the SM are induced only at the three-loop level. Such processes are described by the so-called H0M model.

The \(a^{\mathrm {VV}}_1\) coupling is assumed to be zero by the H0PH, H0L1 and H0M models.

In addition, three further models are defined as mixtures of the SM and one of the BSM hypotheses above. Such models correspond to having \(a^{\mathrm {VV}}_1\) and one of the BSM operators both equal to 0.5, and are labeled by adding the “f05” tag to the model names introduced above.

The measurement is performed within the STXS phase space regions, defined at particle level. For the VBF production mechanism, three STXS bins are defined in a phase space region with at least two jets. In addition to requiring the \(p_{\mathrm {T}}\) of the subleading jet to be higher than 30 GeV and the absolute value of the Higgs boson rapidity (\(\vert { y_\mathrm {H}}\vert \)) to be less than 2.5, the VBF STXS bins are delineated by one of the following cuts on \(m_{\mathrm {jj}}\) and \(p_{\mathrm {T}}^{\mathrm {H}}\):

-

\(350 < m_{\mathrm {jj}} \le 700\) GeV and \(p_{\mathrm {T}}^\mathrm {H} < 200 \) GeV,

-

\( m_{\mathrm {jj}} > 700\) GeV and \(p_{\mathrm {T}}^\mathrm {H} < 200 \) GeV,

-

\(m_{\mathrm {jj}} > 350 \) GeV and \(p_{\mathrm {T}}^\mathrm {H} > 200 \) GeV.

In addition to these requirements, a global selection that mimics the reconstructed-level analysis selection criteria has been applied to define a set of signal-enriched phase space regions. However, in the last two bins the number of events was found to be too low to constitute a sufficiently large training sample, and it was therefore decided to merge them. Although the two bins cover very distant regions of phase space, the merged category provides a useful test of the performance of the adversarial approach.

To simplify the notation, the phase space where \(350 < m_{\mathrm {jj}} \le 700\) GeV, \(p_{\mathrm {T}}^\mathrm {H} < 200 \) GeV and \(\vert { y_\mathrm {H}}\vert <2.5\) will be referred to as category 1, or simply C1, whereas the region where \( m_{\mathrm {jj}} > 700\) GeV, \(p_{\mathrm {T}}^\mathrm {H} < 200 \) GeV and \(\vert { y_\mathrm {H}}\vert <2.5\), or \(m_{\mathrm {jj}} > 350 \) GeV, \(p_{\mathrm {T}}^\mathrm {H} > 200 \) GeV and \(\vert { y_\mathrm {H}}\vert <2.5\), will be named category 2, or C2. The analysis is thus performed within the particle-level categories summarized in Table 1.
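The category definitions can be expressed as a small selection function. This is a sketch under stated assumptions: the function and argument names are hypothetical, all momenta and masses are in GeV, the global selection of Table 1 is assumed to have been applied beforehand, and the boundaries (strict or inclusive inequalities) follow the text literally, so an event with \(p_{\mathrm {T}}^\mathrm {H}\) exactly equal to 200 GeV falls in neither category.

```python
from typing import Optional


def vbf_category(mjj: float, pt_h: float, y_h: float,
                 pt_j2: float) -> Optional[str]:
    """Return 'C1', 'C2' or None for a particle-level event (units: GeV)."""
    # Requirements common to all VBF STXS bins (hypothetical helper).
    if pt_j2 <= 30.0 or abs(y_h) >= 2.5:
        return None
    if 350.0 < mjj <= 700.0 and pt_h < 200.0:
        return "C1"
    # C2 merges the two remaining VBF STXS bins.
    if (mjj > 700.0 and pt_h < 200.0) or (mjj > 350.0 and pt_h > 200.0):
        return "C2"
    return None
```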

The adopted baseline selection is inspired by those developed by the CMS Collaboration in previous works [7]: at least two jets with \(p_{\mathrm {T}}>30\) GeV each and a dijet invariant mass higher than 120 GeV are required; the leading and subleading leptons (\(\ell _1\) and \(\ell _2\), respectively) must pass \(p_{\mathrm {T}}\) thresholds dictated by the trigger requirements (\(p_{\mathrm {T}}^{\ell _1}>25\) GeV and \(p_{\mathrm {T}}^{\ell _2}>13\) GeV), while the \(p_{\mathrm {T}}\) of a third lepton, if present, is required to be below 10 GeV in order to suppress minor backgrounds, such as WZ and triboson production. Moreover, the dilepton invariant mass is required to be higher than 12 GeV to reduce the contribution from quantum chromodynamics (QCD) processes and low-mass resonances. Requiring a lepton pair of different flavours suppresses Drell–Yan (DY) production of \(\mathrm {ee}\) and \(\upmu \upmu \) pairs, while the requirements on the dilepton transverse momentum (\(p_{\mathrm {T}}^{\ell \ell }\)) and on the transverse mass of the system (\(m_\mathrm {T}^\mathrm {H}\)), defined as

\[ m_\mathrm {T}^\mathrm {H} = \sqrt{2\, p_{\mathrm {T}}^{\ell \ell }\, E_\mathrm {T}^{\mathrm {miss}} \left[ 1 - \cos \Delta \phi (\vec {p}^{\ \ell \ell }_\mathrm {T}, \vec {E}^{\ \mathrm {miss}}_\mathrm {T}) \right] }, \]

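are necessary to reduce the DY production of \({\uptau \uptau }\) pairs. Events are required to have \(E_\mathrm {T}^{\mathrm {miss}}\) higher than 20 GeV because of the presence of neutrinos in the final state. The \(m_\mathrm {T}^{\ell _2}\) variable, defined as

\[ m_\mathrm {T}^{\ell _2} = \sqrt{2\, p_{\mathrm {T}}^{\ell _2}\, E_\mathrm {T}^{\mathrm {miss}} \left[ 1 - \cos \Delta \phi (\vec {p}^{\ \ell _2}_\mathrm {T}, \vec {E}^{\ \mathrm {miss}}_\mathrm {T}) \right] }, \]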

is required to be above 30 GeV, helping to reduce the non-prompt lepton background. Finally, the absolute values of the pseudorapidities of the leading and subleading jets (\(\eta _{\mathrm {j}_1}\) and \(\eta _{\mathrm {j}_2}\), respectively) must be smaller than 4.7, to mimic the detector acceptance. The effect of the b-tagging algorithm used in real analyses to identify jets originating from b quarks is taken into account by applying a b-tagging efficiency to MC events at particle level: a b-jet tagging efficiency \(\epsilon = 94\%\) is assumed, and all MC simulated events are weighted by \((1-\epsilon )^{n_\mathrm {b}}\), where \(n_\mathrm {b}\) is the number of jets in the event that contain a b hadron.
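The two transverse-mass variables and the b-tagging weight are simple per-event functions. The sketch below assumes the standard definition \(m_\mathrm {T} = \sqrt{2\, p_\mathrm {T}^{a} p_\mathrm {T}^{b} (1-\cos \Delta \phi )}\) for both \(m_\mathrm {T}^\mathrm {H}\) (with \(a = \ell \ell \)) and \(m_\mathrm {T}^{\ell _2}\) (with \(a = \ell _2\)), where \(b\) is the missing transverse momentum; the function names are hypothetical.

```python
import math


def transverse_mass(pt_a: float, pt_b: float, dphi: float) -> float:
    """m_T = sqrt(2 pT_a pT_b (1 - cos dphi)) for two transverse vectors (GeV)."""
    # max() guards against tiny negative values from floating-point rounding.
    return math.sqrt(max(0.0, 2.0 * pt_a * pt_b * (1.0 - math.cos(dphi))))


def bveto_weight(n_b: int, eff: float = 0.94) -> float:
    """Per-event weight (1 - eff)^n_b emulating a b-jet veto at particle level."""
    return (1.0 - eff) ** n_b
```

With \(\epsilon = 94\%\), an event with one jet containing a b hadron keeps a weight of only 0.06, so the weighting acts as a soft veto on top-quark-like events rather than a hard rejection.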

Two adversarial DNNs have been trained, sharing the same architecture described below, within the C1 and C2 phase spaces, respectively.

For the classifier, three target classes have been defined: one for VBF and two for the backgrounds that have to be separated from the signal, labeled ggH and BKG.

The label ggH refers to Higgs boson production via gluon-gluon fusion, while BKG includes both top quark events and non-resonant WW production.

The input variables \(x_1, ..., x_m\) of this network are the measurable kinematic variables of an event, which are detailed in the following. The activation function of the hidden layers is the rectified linear activation function (ReLU), while the output layer uses the softmax function.

The hyperparameters \(n_\mathrm {l}^\mathrm {C}\), \(\eta ^\mathrm {C}\) and \(n_{\mathrm {nodes}}\), which correspond to the number of hidden layers, the learning rate and the number of nodes in the hidden layers, respectively, have been optimized through the procedure detailed below.

Since six alternative physics models to the SM have been considered, the adversary has seven classes, labeled VBF_SM, VBF_H0M, VBF_H0PH, VBF_H0L1, VBF_H0Mf05, VBF_H0PHf05, and VBF_H0L1f05. The first label refers to VBF signal events generated assuming the SM hypothesis, the second to signal events generated according to the H0M model, and so on.

The input layer of this network is the second-to-last layer of the classifier. The ReLU activation function is used for the hidden layers, while the softmax function is used for the output layer.

As for the classifier, the number of hidden layers \(n_\mathrm {l}^\mathrm {A}\), the learning rate \(\eta ^\mathrm {A}\) and the number of nodes in each hidden layer \(n_{\mathrm {nodes}}\) have been optimized. Note that the same number of nodes per hidden layer has been chosen for both C and A.
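The wiring between the two networks can be illustrated with a forward pass in plain Python. This is a toy sketch with randomly initialized, untrained weights and hypothetical helper names (no deep-learning framework): it only shows that the adversary receives the output of the last hidden layer of the classifier, i.e. its second-to-last layer, that both networks use ReLU hidden layers and softmax outputs, and that both can share the same number of nodes per hidden layer.

```python
import math
import random
from typing import List, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights[out][in], biases[out])
Net = Tuple[List[Layer], Layer]                # (hidden layers, output layer)


def dense(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Randomly initialized fully connected layer (untrained)."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def affine(layer: Layer, x: List[float]) -> List[float]:
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(v: List[float]) -> List[float]:
    return [max(0.0, z) for z in v]


def softmax(v: List[float]) -> List[float]:
    m = max(v)  # subtract the maximum for numerical stability
    e = [math.exp(z - m) for z in v]
    s = sum(e)
    return [z / s for z in e]


def build_mlp(n_in: int, n_hidden: int, n_nodes: int, n_out: int,
              rng: random.Random) -> Net:
    """n_hidden ReLU layers of n_nodes each, followed by a softmax output layer."""
    sizes = [n_in] + [n_nodes] * n_hidden
    hidden = [dense(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]
    return hidden, dense(sizes[-1], n_out, rng)


def run_mlp(net: Net, x: List[float]) -> Tuple[List[float], List[float]]:
    """Return (representation, scores); the representation is the output of
    the last hidden layer, i.e. the second-to-last layer of the network."""
    hidden, output = net
    h = x
    for layer in hidden:
        h = relu(affine(layer, h))
    return h, softmax(affine(output, h))


def adnn_forward(classifier: Net, adversary: Net, x: List[float]):
    """Classifier scores for x, and adversary scores computed from C's representation."""
    representation, class_scores = run_mlp(classifier, x)
    _, domain_scores = run_mlp(adversary, representation)
    return representation, class_scores, domain_scores
```

For the configuration described above, the classifier would have three outputs (VBF, ggH, BKG) and the adversary seven (one per signal model), with the adversary input size equal to \(n_{\mathrm {nodes}}\). In a framework implementation the same structure lets the gradient of \({\mathcal {L}}(A)\) flow back into the classifier layers during the classifier step.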

4.1 Training procedure

The training has been carried out on MC simulations and the following samples have been chosen: SM ggH production, SM non-resonant WW and top quark production, which constitute the background event samples, and finally the VBF signal. The latter has been generated separately according to each of the seven considered physical hypotheses.

MC samples are simulated at various orders in perturbative QCD (pQCD), using different event generators.

Gluon-gluon fusion and vector boson fusion processes are generated with powheg v2 [8] at next-to-leading order (NLO) accuracy in QCD. The subsequent decay of the Higgs boson into two W bosons is performed using jhugen v7.1.4, which also simulates the leptonic decay of the vector bosons. The BSM samples, which describe anomalous HVV couplings, are generated with powheg v2. For the background processes, the non-resonant WW sample is simulated using two different generators, depending on the production process: \(\mathrm {q}\bar{\mathrm {q}}\rightarrow \mathrm {WW}\) is generated with powheg v2 at NLO accuracy, while \(\mathrm {gg }\rightarrow \mathrm {WW}\) events are simulated with mcfm v7.0.1 at LO accuracy. Single top and top-antitop pair production are generated with powheg v2 at NLO accuracy. In order to simulate initial- and final-state radiation, hadronization, and the underlying event, all the event generators are interfaced to pythia 8.1 [9].

The events entering the training are selected through the kinematic requirements corresponding to the global selection listed in Table 1.

The training procedure has been performed using the Keras [10] and TensorFlow [11] libraries.

A set of input variables that highlight the signal characteristics with different degrees of discriminating power with respect to the other processes has been defined. The input variables chosen for the training are defined at particle-level and are listed below:

-

\(p_{\mathrm {T}_{\mathrm {j}_1}}\), \(p_{\mathrm {T}_{\mathrm {j}_2}}\): the magnitudes of the transverse momenta of the leading and subleading jets, respectively;

-

\(\eta _{\mathrm {j}_1}\): the pseudorapidity of the leading jet \(j_1\). Since it typically originates from the hadronization of a quark carrying a large fraction of the proton momentum, \(j_1\) in VBF events tends to be emitted at large \(\vert {\eta }\vert \), whereas background events are characterized by a leading jet in the central region, i.e., at low \(\vert {\eta }\vert \);

-

\(\eta _{\mathrm {j}_2}\): the pseudorapidity of the subleading jet. Its distribution is similar to \(\eta _{j_1}\) for the VBF process, while background events show homogeneous shapes throughout the range of possible values of this variable;

-

\(\vert {\Delta \eta _{\mathrm {jj}}}\vert \): the separation in \(\eta \) between the two jets in the final state. The signal shows a larger pseudorapidity gap with respect to the backgrounds;

-

\(m_{\mathrm {jj}}\): the invariant mass of the dijet system. It has a good discrimination power since it is typically larger for the signal than for the background;

-

\(p_{\mathrm {T}}^{\ell \ell }\), \(p_{\mathrm {T}}^{\ell _1}\), \(p_{\mathrm {T}}^{\ell _2}\): the magnitudes of the transverse momenta of the dilepton system, the leading lepton, and the subleading lepton, respectively;

-

\(\eta _{\ell _1}\), \(\eta _{\ell _2}\): the pseudorapidity of the leading and subleading lepton, respectively. Similarly to \(p_{\mathrm {T}}^{\ell \ell }\), \(p_{\mathrm {T}}^{\ell _1}\) and \(p_{\mathrm {T}}^{\ell _2}\), these variables are not expected to have a high discrimination capability individually, but are included to exploit their correlation with other observables;

-

\(m_{\ell \ell }\): the invariant mass of the lepton pair. Due to the spin correlation effect in the \(\mathrm {H}\rightarrow \text {WW}\rightarrow 2\ell 2\nu \) decay chain, this variable is peaked at low values for the VBF and ggH mechanisms, while showing a broadened shape for non-resonant events;

-

\(\Delta \phi _{\ell \ell }\): the azimuthal separation between the two leptons in the final state. For signal events it tends to be smaller than for non-resonant events, since the spin correlation tends to emit the two charged leptons in nearly the same direction;

-

\(\Delta R_{\ell \ell }\): the angular distance \(\Delta R = \sqrt{(\Delta \eta )^2 + (\Delta \phi )^2}\) between the two leptons in the final state. Similarly to \(\Delta \phi _{\ell \ell }\), this variable has an almost flat distribution for events without a resonance;

-

\(m_{\ell \mathrm {j}}\): the invariant mass of the system consisting of the \(\ell \)-th lepton and j-th jet, where \(\ell =\{\ell _1, \ \ell _2\}\) and \(j=\{j_1, \ j_2\}\). There are four possible combinations which have limited, but not completely negligible, discrimination power;

-

\(C_{\mathrm {tot}}=\log \Bigl (\sum \limits _{\ell }\vert {(2\eta _{\ell }-\sum \limits _{\mathrm {j}}\eta _\mathrm {j})}\vert /\vert {\Delta \eta _{\mathrm {jj}}}\vert \Bigr )\), where \(\ell =\{\ell _1, \ \ell _2\}\), \(j=\{j_1, \ j_2\}\): it measures how centrally the charged leptons are emitted with respect to the dijet system;

-

\(E_\mathrm {T}^{\mathrm {miss}}\): the missing transverse energy;

-

\(m^\mathrm {H}_\mathrm {T}\): the transverse mass of the system. Background events are more likely to present a small value of this variable;

-

\(m^{\mathrm {vis}}=\sqrt{(p^{\ell \ell } +E_\mathrm {T}^{\mathrm {miss}})^2-(\vec {p}^{\ \ell \ell }+\vec {E}^{\ \mathrm {miss}}_\mathrm {T})^2}\): the visible mass, which includes the longitudinal momentum of the dilepton system. It tends to have a different shape for events containing the production of a Higgs boson;

-

\(\Delta \phi (\vec {p}^{\ \ell \ell }_\mathrm {T}, \vec {E}^{\ \mathrm {miss}}_\mathrm {T})\): the azimuthal opening angle between \(\vec {p}^{\ \ell \ell }_\mathrm {T}\) and \(\vec {E}^{\ \mathrm {miss}}_\mathrm {T}\). It has a good discriminating power and it is included in the definition of \(m^\mathrm {H}_\mathrm {T}\);

-

\(H_\mathrm {T}\): the scalar sum of the transverse momenta of all jets in the event. It gives a measure of the hadronic activity of the event.
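A few of the derived inputs above involve small but error-prone steps, such as wrapping \(\Delta \phi \) into \([0, \pi ]\). The helpers below are a plain-Python sketch with hypothetical names; the natural logarithm is assumed for \(C_{\mathrm {tot}}\), since the base is not stated, and the function raises an error when \(\Delta \eta _{\mathrm {jj}} = 0\) or when both leptons lie exactly at the dijet pseudorapidity midpoint.

```python
import math


def delta_phi(phi1: float, phi2: float) -> float:
    """Azimuthal separation wrapped into [0, pi]."""
    d = abs(phi1 - phi2) % (2.0 * math.pi)
    return 2.0 * math.pi - d if d > math.pi else d


def delta_r(eta1: float, phi1: float, eta2: float, phi2: float) -> float:
    """Angular distance Delta R = sqrt(Delta eta^2 + Delta phi^2)."""
    return math.hypot(eta1 - eta2, delta_phi(phi1, phi2))


def c_tot(eta_l1: float, eta_l2: float, eta_j1: float, eta_j2: float) -> float:
    """Lepton centrality log(sum_l |2 eta_l - (eta_j1 + eta_j2)| / |Delta eta_jj|).

    Natural log assumed. Raises ZeroDivisionError if |Delta eta_jj| = 0 and
    ValueError if both leptons sit exactly at the dijet midpoint.
    """
    deta_jj = abs(eta_j1 - eta_j2)
    total = sum(abs(2.0 * eta - (eta_j1 + eta_j2)) for eta in (eta_l1, eta_l2))
    return math.log(total / deta_jj)
```

Smaller (more negative) values of `c_tot` correspond to leptons closer to the centre of the rapidity gap between the two jets, the configuration expected for VBF signal events.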

4.2 Optimization and performance

As anticipated, since the kinematic properties of signal and background events depend on the values of \(m_{\mathrm {jj}}\) and \(p_{\mathrm {T}}^\mathrm {H}\), two different ADNNs have been implemented in order to add flexibility to the model and to test it in two different phase space regions: the first network is trained on events with

\[ 350 < m_{\mathrm {jj}} \le 700 \text { GeV} \quad \text {and} \quad p_{\mathrm {T}}^\mathrm {H} < 200 \text { GeV}, \tag{6} \]

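while the second one is trained on events with

\[ \left( m_{\mathrm {jj}} > 700 \text { GeV} \ \text {and}\ p_{\mathrm {T}}^\mathrm {H} < 200 \text { GeV} \right) \quad \text {or} \quad \left( m_{\mathrm {jj}} > 350 \text { GeV} \ \text {and}\ p_{\mathrm {T}}^\mathrm {H} > 200 \text { GeV} \right), \tag{7} \]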

namely the phase space regions C1 and C2 defined in the previous section. Therefore, requirements 6 and 7 are added to the selection criteria of the training events of the first and the second ADNN, respectively. Training two different ADNNs in C1 and C2 is not strictly necessary, but it allows the adversarial approach to be tested in more than one phase space region.

The training sample of the ADNN for requirement 6 consists of about 65,000 events, while the ADNN for requirement 7 is trained on about 47,000 events. Both training samples are equally divided among the three considered physics processes, i.e. VBF, ggH and background events. The VBF signal sample is in turn made up of events from the seven possible domains in equal proportions. The background sample consists of non-resonant WW (including the gluon-induced process and the electroweak production), \(\mathrm {t}\bar{\mathrm {t}}\) and \(\mathrm {tW}\) events, in the proportions predicted by the SM.

Both training samples have been divided into two subsets: 80% of the events are used for training, whereas the remaining 20% are reserved for validation.
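The balanced composition of the training samples and the 80/20 split amount to simple arithmetic. The sketch below uses hypothetical helper names and the approximate sample sizes quoted above: with about 65,000 events in C1, each of the three process classes gets roughly 21,700 events and each of the seven VBF domains roughly 3,100, while the 80/20 split leaves about 52,000 events for training and 13,000 for validation. A simple random split with a fixed seed is assumed, since the text does not specify how the subsets are drawn.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def balanced_counts(n_total: int, n_processes: int = 3,
                    n_domains: int = 7) -> Tuple[int, int]:
    """Events per process class, and per signal model within the VBF class."""
    per_process = n_total // n_processes
    return per_process, per_process // n_domains


def train_validation_split(events: Sequence[T], train_fraction: float = 0.8,
                           seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle with a fixed seed, then split into training and validation subsets."""
    shuffled = list(events)
    random.Random(seed).shuffle(shuffled)
    n_train = round(train_fraction * len(shuffled))
    return shuffled[:n_train], shuffled[n_train:]
```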

The hyperparameters of the ADNNs have been optimized following the procedure explained in Sect. 3.1. The optimized hyperparameters are \(n_\mathrm {l}^\mathrm {C}\), \(\eta ^\mathrm {C}\), \(n_\mathrm {l}^\mathrm {A}\), \(\eta ^\mathrm {A}\), \(n_{\mathrm {nodes}}\) and \(\alpha \) for both ADNNs; the optimization was performed with the Optuna software [12], which allows the classifier categorical accuracy to be maximized and the average K–S test statistic to be minimized simultaneously. Both objective functions have been evaluated on the validation set at the end of each optimization trial. First, 100 training trials with 800 epochs each have been executed, varying the values of all the hyperparameters within defined ranges according to a Bayesian optimization approach [13]. Then, the trial providing the best combination of high categorical accuracy and low average K–S test statistic has been chosen as the best trial, and the \(n_\mathrm {l}^\mathrm {C}\), \(n_\mathrm {l}^\mathrm {A}\) and \(n_{\mathrm {nodes}}\) parameters have been fixed to their best values. Finally, a second set of training trials has been run in order to optimize the learning rates and the \(\alpha \) parameter.
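Choosing a trial that balances high categorical accuracy and low average K–S statistic is a two-objective problem. Optuna supports this directly, for example via `optuna.create_study(directions=["maximize", "minimize"])`, and exposes the Pareto-optimal trials through `study.best_trials`. The plain-Python sketch below reproduces that Pareto filtering on hypothetical trial records; how the final trial is picked among the Pareto-optimal ones is not specified in the text and is left as a judgment call.

```python
from typing import List, NamedTuple


class Trial(NamedTuple):
    number: int
    accuracy: float  # categorical accuracy on the validation set (maximize)
    avg_ks: float    # average K-S statistic on the validation set (minimize)


def dominates(a: Trial, b: Trial) -> bool:
    """True if `a` is no worse than `b` in both objectives and strictly better in one."""
    no_worse = a.accuracy >= b.accuracy and a.avg_ks <= b.avg_ks
    better = a.accuracy > b.accuracy or a.avg_ks < b.avg_ks
    return no_worse and better


def pareto_front(trials: List[Trial]) -> List[Trial]:
    """Trials not dominated by any other trial: the candidates for the best trial."""
    return [t for t in trials if not any(dominates(o, t) for o in trials if o is not t)]
```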

The ranges scanned for the hyperparameters are reported in Table 2, while the hyperparameter values corresponding to the chosen best trial for both ADNNs, together with the categorical accuracy and the average K–S test statistic, are summarized in Table 3. The p values of the K–S tests for the ADNN trained in C1 (C2) range from 6% (7%), for the model with the worst compatibility with the SM, to 50% (59%) for the best, with a median value across the six models of 34% (41%).

Once the hyperparameters have been set to their best estimates, the networks have been retrained with the number of training epochs increased to 1200 and 1400 for the first and the second ADNN, respectively, and with a batch size equal to the entire training sample.

As an example, results on the performance of the ADNN trained in C1 are reported in the following. The same set of results has been studied for the second ADNN, leading to the same conclusions.

Figure 2 shows the loss functions of the adversary and of the classifier, together with the combined loss function, as a function of the number of epochs. The plateau reached by the adversary loss at the end of the training is expected: it indicates that the adversary performs no better than random guessing.
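A useful reference value for this plateau follows from the composition of the training sample. Assuming the adversary loss is the natural-logarithm CCE computed on unweighted events, with the seven signal domains equally populated, an adversary that extracts no domain information can do no better than assigning probability 1/7 to every domain, which gives

\[ {\mathcal {L}}(A)\big |_{\text {random}} = -\sum _{k=1}^{7} \frac{1}{7}\ln \frac{1}{7} = \ln 7 \approx 1.95 . \]

An adversary loss that flattens out near this value would therefore be consistent with random guessing; with event weights or unequal domain fractions, the reference value changes accordingly.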

Normalized distributions of the VBF-output for signal events simulated under the seven considered hypotheses, evaluated on the training sample in C1 (top). The uncertainty associated with the limited size of the simulated samples is shown only for the SM distribution. The distributions for the SM, H0M and H0PH models are also compared between the training and validation samples (bottom): the solid lines correspond to the distributions obtained on the training set, while the dots with statistical error bars correspond to the validation sample.

In order to check the effectiveness of the procedure, the distributions of the VBF-output for signal events from all domains have been compared separately for each of the two ADNNs. The results are shown in Fig. 3. For the sake of clarity, the uncertainty associated with the limited size of the simulated samples is drawn only for the SM VBF distribution; similar uncertainties affect the BSM distributions, since the number of events for each signal hypothesis in the training sample is the same. The shapes of the distributions are in fair agreement with each other, confirming that the classifier is unable to recognize the domain of origin, i.e., the signal model, of the VBF events. The impact of the remaining shape differences is addressed in the bias studies presented in Sect. 4.4. Moreover, in order to check for overtraining, the distributions obtained from the validation sample have been compared to those obtained from the training sample; for the sake of clarity, only three signal models (SM, H0M and H0PH) are shown. Since the shapes of the training distributions are compatible with the validation ones within the statistical accuracy, it was concluded that the algorithm does not show signs of overtraining.

The signal-to-background discriminating power of the ADNN is highlighted in Fig. 4, where only the SM VBF contribution is shown. The VBF-output discriminates well between background and signal events, whereas it is less effective at distinguishing the VBF production mode from ggH. The performance on the validation sample is also shown, superimposed on the training one, using dots with statistical error bars.

Each event is assigned to the class with the highest output score.

The classification accuracy of the ADNN is quantified by the confusion matrix reported in Fig. 5. The matrix shows that 71%, 52% and 91% of the VBF, ggH and background events, respectively, are correctly assigned to the corresponding class. The ADNN trained in C2 has approximately the same overall discriminating power, correctly classifying about 65% of both VBF and ggH events and 90% of background events.
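The class assignment and the confusion matrix described above can be sketched as follows. The sketch assumes that the network returns one score per class (VBF, ggH, background). It also assumes that rows of the matrix are true classes normalized to unity, so that each diagonal entry is the fraction of correctly classified events quoted in the text. The scores and labels are hypothetical.

```python
CLASSES = ("VBF", "ggH", "background")

def assign_class(scores):
    """Return the index of the highest score (ties go to the first class)."""
    return max(range(len(scores)), key=lambda k: scores[k])

def confusion_matrix(true_labels, score_rows, n_classes=len(CLASSES)):
    """Row-normalized confusion matrix: entry [t][p] is the fraction of
    events of true class t that are assigned to class p."""
    counts = [[0] * n_classes for _ in range(n_classes)]
    for t, scores in zip(true_labels, score_rows):
        counts[t][assign_class(scores)] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        matrix.append([c / total if total else 0.0 for c in row])
    return matrix

# Hypothetical network outputs for four events (true labels: VBF, VBF, ggH, background).
labels = [0, 0, 1, 2]
scores = [[0.7, 0.2, 0.1], [0.3, 0.5, 0.2], [0.2, 0.6, 0.2], [0.1, 0.1, 0.8]]
m = confusion_matrix(labels, scores)
for name, row in zip(CLASSES, m):
    print(name, [round(x, 2) for x in row])
```

In this toy example, half of the VBF events are classified correctly, which corresponds to a diagonal entry of 0.5.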

Once the networks have been trained and the model parameters fixed to their best values, the ADNNs have been tested on the events selected by the analysis requirements. Figure 6 shows the normalized distributions of the VBF-output of both ADNNs evaluated on all the considered signal models. The distributions for signal and background events are reported in Fig. 7, with each ADNN evaluated in the corresponding STXS bin. Both figures show the expected contributions of the various processes for a total integrated luminosity of 138 fb\(^{-1}\). The following binning scheme is adopted: [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 1.0].
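The following sketch fills a VBF-output histogram with the binning quoted above, normalizing the sample to an integrated luminosity of 138 fb\(^{-1}\). It assumes unweighted generated events, each carrying the weight σ·𝓛/N_gen, and it ignores generator weights and efficiency corrections that a real analysis would include. The cross section and scores in the usage example are hypothetical.

```python
import bisect

BIN_EDGES = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 1.0]  # binning quoted in the text
LUMI_FB = 138.0  # integrated luminosity in fb^-1

def event_weight(cross_section_fb, n_generated, lumi_fb=LUMI_FB):
    """Per-event weight such that the weights of a sample sum to sigma x L."""
    return cross_section_fb * lumi_fb / n_generated

def fill_histogram(values, weights, edges=BIN_EDGES):
    """Sum weights per bin; bins are [low, high) except the last, which includes its upper edge."""
    hist = [0.0] * (len(edges) - 1)
    for x, w in zip(values, weights):
        if x < edges[0] or x > edges[-1]:
            continue  # outside the histogram range
        index = min(bisect.bisect_right(edges, x) - 1, len(hist) - 1)
        hist[index] += w
    return hist

def normalize(hist):
    """Scale a histogram to unit area, as in the normalized distributions."""
    total = sum(hist)
    return [h / total for h in hist]

# Hypothetical sample: 4 generated events of a process with sigma = 2 fb.
scores = [0.05, 0.15, 0.85, 1.0]
w = event_weight(2.0, len(scores))  # 2 fb x 138 fb^-1 / 4 = 69 expected events per MC event
yields = fill_histogram(scores, [w] * len(scores))
shape = normalize(yields)
```

The wider last bin, [0.8, 1.0], is handled like any other bin. Only the upper edge 1.0 is treated specially, so that a score of exactly 1.0 is not lost.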

Distributions of the VBF-output of both ADNNs in the corresponding STXS bin. The VBF signals are shown superimposed on the background templates, which are drawn stacked on top of each other. A logarithmic scale is used. The dashed uncertainty band corresponds to the total systematic uncertainty in the background templates

To compare the effectiveness of the ADNN with that of a standard feed-forward DNN used for discrimination, a simpler DNN without the adversarial term and trained only on the SM signal has also been implemented. The entire analysis procedure has been repeated using two such DNNs, one for each of the considered bins, for the event categorization and for the template fit. These networks take the same kinematic variables as input. Their learning rate, number of hidden layers and number of nodes per hidden layer were optimized by maximizing the categorical accuracy. As for the ADNNs, 100 training trials of 300 epochs each were run, with the hyperparameters varied according to the Bayesian approach within the same ranges reported in Table 2.

The best trial for the DNN trained in C1 yields a categorical accuracy of 72% with a single hidden layer of 60 nodes and a learning rate of 0.00055. The DNN trained in C2 instead has one hidden layer of 86 nodes, a learning rate of 0.00079 and a categorical accuracy of \(78\%\).

Since the adversarial term is missing, the DNN performance is expected to depend strongly on the assumed signal physics model. This behavior is indeed observed in Fig. 8, which shows the normalized distributions of the VBF-output for SM signal events and for events from the alternative signal models. The different shapes arise from input features whose distributions depend strongly on the theoretical signal modeling, such as \(m_\mathrm{{T}}^\mathrm{{H}}\), \(\Delta \eta _{jj}\), \(H_T\) and the \(p_T\) of the jets.

As a further study, a DNN has been trained on a VBF signal sample built from a mixture of SM and BSM models in equal proportions, together with the SM gluon–gluon fusion and background events. This approach is intermediate in complexity between the DNN trained on the SM only and the ADNN. The structure of this DNN has been defined with the same optimization strategy used for the DNN trained on SM events only. In the C1 category, for example, the resulting architecture has 2 hidden layers of 77 nodes each and was trained for 250 epochs with a learning rate of 0.00077. The distributions of the VBF-output for both SM and BSM signal events are shown in Fig. 9. The shapes differ significantly, albeit less than for the SM-trained DNN, showing a residual dependence on the physics model assumed for the signal process. In particular, the network discriminates the BSM signal better than the SM one. Therefore, even a DNN trained on a mixture of different signal hypotheses cannot provide a model independent classification at a level comparable to that achieved with the ADNN.
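A VBF training sample built from several signal models in equal proportions can be assembled by drawing the same number of events from each model. The sketch below shows one way to do this. The dictionary layout, the model names and the use of random subsampling instead of per-event weights are assumptions for illustration, not the authors' implementation.

```python
import random

def build_mixed_vbf_sample(samples_by_model, n_total, seed=0):
    """Draw the same number of events from each VBF signal model and shuffle them.

    samples_by_model: hypothetical dict mapping a model name (e.g. "SM", "H0M")
    to a list of events; any event representation works."""
    rng = random.Random(seed)  # fixed seed for a reproducible mixture
    per_model = n_total // len(samples_by_model)
    mixed = []
    for name, events in samples_by_model.items():
        if len(events) < per_model:
            raise ValueError(f"not enough events for model {name}")
        mixed.extend(rng.sample(events, per_model))  # sampling without replacement
    rng.shuffle(mixed)
    return mixed

# Hypothetical event IDs for three signal models.
samples = {"SM": list(range(100)), "H0M": list(range(100, 200)), "H0PH": list(range(200, 300))}
mixed = build_mixed_vbf_sample(samples, 90)
```

All events in the mixture carry the same VBF class label. Only their proportions per model are fixed, which is what distinguishes this approach from the ADNN, where the model of origin is also exposed to an adversary.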

4.3 Agnosticism against unseen signal models

When building a discriminator with the ADNN approach one needs to mind the fact that the model chosen by Nature is unknown and in general different from the ones the discriminator has been trained on. This is indeed the primary reason why one wants the discriminator performance to be model independent in the first place. It is thus important to establish a procedure to evaluate the degree of model independence of the discriminator with respect to signal models unseen in the training. In the case under study one may expect that an unknown mixture between CP-even and CP-odd couplings is found in Nature. A check of the model independence against a generic mixture that was not used in the training is needed to assess the residual model dependence.

As an application of this procedure in the \(\mathrm {H}\rightarrow \mathrm {W^+}\mathrm {W^-}\) case study, an ADNN was trained excluding one of the mixed models, and the discriminator shape for the models used in the training was compared with that for the excluded one. The results obtained in C1 are shown in Fig. 10, where the discriminator shape for the model excluded from the training (thicker line) is compared to those of the models used in the training. In this case, training against the pure models is sufficient for the discriminator to be agnostic with respect to the mixed model.
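The leave-one-out check can be summarized by a single number for each comparison. The sketch below is an assumption, not the authors' procedure. It compares the excluded model with each model used in training through the maximum distance between binned cumulative distributions, a binned Kolmogorov–Smirnov-like statistic, and compares that with the largest spread among the trained models themselves. The model names and histograms are hypothetical.

```python
def normalized(hist):
    total = sum(hist)
    return [h / total for h in hist]

def cdf_distance(hist_a, hist_b):
    """Maximum absolute difference between the cumulative distributions of two
    binned shapes (a binned Kolmogorov-Smirnov-like statistic)."""
    cum_a = cum_b = dist = 0.0
    for a, b in zip(normalized(hist_a), normalized(hist_b)):
        cum_a += a
        cum_b += b
        dist = max(dist, abs(cum_a - cum_b))
    return dist

def leave_one_out_report(shapes, excluded):
    """Return (largest distance between the excluded model and any trained model,
    largest distance between two trained models). Needs at least two trained models."""
    trained = [name for name in shapes if name != excluded]
    d_excluded = max(cdf_distance(shapes[excluded], shapes[n]) for n in trained)
    d_trained = max(cdf_distance(shapes[a], shapes[b])
                    for i, a in enumerate(trained) for b in trained[i + 1:])
    return d_excluded, d_trained

# Hypothetical discriminator histograms; "MIX" plays the role of the excluded mixed model.
shapes = {"SM": [10, 20, 30, 40], "H0M": [11, 19, 31, 39],
          "H0PH": [10, 21, 29, 40], "MIX": [10, 20, 30, 40]}
d_exc, d_tr = leave_one_out_report(shapes, "MIX")
```

If the excluded model is no farther from the trained models than they are from each other, the discriminator can be considered agnostic with respect to it. Otherwise, the set of training models would need to be enlarged.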

This result may be peculiar to the case under study, but it is important to stress that it is always possible to test the ADNN performance against unseen models, and, in case of unsatisfactory results, enrich the set of models in the training to achieve the desired level of model independence. This is particularly interesting when paired with the fact that any deviation from the SM can be modeled in the effective field theory approach by a finite set of operators, and that one can, at least in principle, train a discriminator to be agnostic with respect to all of them (or, more realistically, all the ones that have an influence on the observables that are being measured).

4.4 Signal extraction and bias estimation

Attention now turns to the estimation of the residual bias in the measurement, in order to quantify the reduction made possible by the ADNN approach presented in this paper.

The signal extraction is performed through a binned maximum likelihood fit. The number of observed events \(N_{\mathrm {i,obs}}\) in the i-th bin is expected to follow a Poisson distribution with mean \(\mu s_\mathrm {i} + b_\mathrm {i}\), where \(s_\mathrm {i}\) and \(b_\mathrm {i}\) are the expected signal and background yields in the bin, respectively. The signal strength modifier \(\mu \) quantifies the agreement between the measured number of signal events and the prediction of the considered physics hypothesis. It is defined as the ratio between the measured signal cross section and the expected one:

\[ \mu = \frac{\sigma _{\mathrm {meas}}}{\sigma _{\mathrm {exp}}}. \]

To account for systematic uncertainties affecting the MC simulation, each source of uncertainty is modeled as an individual nuisance parameter \(\nu \). The likelihood function is then extended with a corresponding constraint term \({\mathcal {N}}(\varvec{\nu })\). Only uncertainties in the theoretical modeling of the signal and background processes, i.e., scale and parton distribution function uncertainties, have been considered.

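The likelihood function used for the template fit is therefore:

\[ L(\mu , \varvec{\nu }) = \prod _\mathrm {i} \frac{\left( \mu s_\mathrm {i}(\varvec{\nu }) + b_\mathrm {i}(\varvec{\nu })\right) ^{N_{\mathrm {i,obs}}}}{N_{\mathrm {i,obs}}!}\, e^{-\left( \mu s_\mathrm {i}(\varvec{\nu }) + b_\mathrm {i}(\varvec{\nu })\right) }\; {\mathcal {N}}(\varvec{\nu }). \]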

Although the nuisance parameters are determined in the fit, they are not of direct interest for this analysis: the only parameter of interest is the signal strength modifier \(\mu \).
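A minimal sketch of the binned Poisson likelihood described above, with a unit-Gaussian constraint on the nuisance parameter, is given below. The nuisance is profiled at each value of \(\mu \). For illustration, only one hypothetical nuisance is modeled, which scales the background as (1 + δ)^ν. The scale and PDF nuisances of the actual analysis also change template shapes, which this toy does not reproduce. The minimizer is a plain golden-section search chosen to keep the example dependency-free, and all names and numbers are assumptions.

```python
import math

def nll(mu, nu, observed, signal, background, bkg_rel_unc):
    """-ln L for Poisson bins times a unit-Gaussian constraint on nu.

    The nuisance nu scales the background as (1 + bkg_rel_unc)**nu, a
    log-normal-like normalization effect chosen only for illustration.
    Terms that do not depend on (mu, nu), such as ln(N!), are dropped."""
    value = 0.5 * nu * nu  # -ln of the Gaussian constraint N(nu; 0, 1), up to a constant
    bkg_scale = (1.0 + bkg_rel_unc) ** nu
    for n, s, b in zip(observed, signal, background):
        expected = mu * s + b * bkg_scale
        value += expected - n * math.log(expected)
    return value

def golden_min(f, lo, hi, tol=1e-7):
    """Golden-section search for the minimum of a unimodal function on [lo, hi]."""
    g = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    while b - a > tol:
        c, d = b - g * (b - a), a + g * (b - a)
        if f(c) < f(d):
            b = d
        else:
            a = c
    return 0.5 * (a + b)

def fit_mu(observed, signal, background, bkg_rel_unc):
    """Maximum-likelihood mu, profiling the nuisance parameter at each mu."""
    def profiled(mu):
        nu_hat = golden_min(
            lambda nu: nll(mu, nu, observed, signal, background, bkg_rel_unc), -5.0, 5.0)
        return nll(mu, nu_hat, observed, signal, background, bkg_rel_unc)
    return golden_min(profiled, 0.0, 5.0)

# Hypothetical templates in five bins of the discriminator.
signal = [1, 2, 4, 8, 15]
background = [200, 120, 60, 25, 10]
asimov = [s + b for s, b in zip(signal, background)]
mu_hat = fit_mu(asimov, signal, background, 0.05)  # close to 1 by construction
```

The background yields must be strictly positive so that the logarithm is defined at \(\mu = 0\).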

With the goal of comparing the performance of the ADNN and of the DNN trained on the SM only, the uncertainties in the measured VBF cross section were evaluated using the VBF-output of the two types of networks. To do so, only the SM contribution was considered as the signal process. A data set was constructed by setting the observed number of events in each bin equal to the sum of the expected signal and background yields, with a Poisson uncertainty. This yields a pseudo-data set that exactly matches the sum of all MC histograms, for which the template fit returns \(\mu = 1\) by construction. A data set with these properties is called an Asimov data set. As a first step, the fit is therefore carried out on the Asimov data set corresponding to the SM cross sections, using the template distributions of the VBF-output of the classifier in both C1 and C2. Table 4 summarizes the uncertainties in the signal strength parameters, corresponding to \(68\%\) confidence level (CL) intervals, obtained with the VBF production mechanism as the signal process. The uncertainty associated with the limited size of the MC samples is roughly 23–27% (25%) of the total uncertainty in C1 (C2) for both the ADNN and the DNN, whereas the systematic contribution is 20–40% (10–15%) of the total uncertainty in C1 (C2). In both categories, the total signal strength uncertainty obtained with the ADNN is comparable to that obtained with the DNN within the MC sample size uncertainties. Therefore, the adversarial term does not lead to a sizable loss of performance. This is expected, since the ADNN and the DNN have similar power in discriminating signal from backgrounds.
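The Asimov construction and the resulting 68% CL interval can be illustrated with a statistics-only toy, without nuisance parameters. The sketch below builds the Asimov data set and extracts the interval from the points where −ln L rises by 0.5 above its minimum. It compares that interval with the Gaussian (curvature) approximation. For a binned Poisson likelihood at the Asimov point, the curvature is Σᵢ sᵢ²/(sᵢ + bᵢ). This shows why bins with a high signal-to-background ratio dominate the precision. The templates are hypothetical, and the background must be positive in every bin.

```python
import math

def asimov_dataset(signal, background, mu=1.0):
    """Pseudo-data equal to the expected yields (no statistical fluctuations)."""
    return [mu * s + b for s, b in zip(signal, background)]

def poisson_nll(mu, observed, signal, background):
    """-ln L of a binned Poisson likelihood, constant terms dropped (no nuisances)."""
    return sum(mu * s + b - n * math.log(mu * s + b)
               for n, s, b in zip(observed, signal, background))

def curvature_uncertainty(signal, background):
    """Gaussian approximation of the stat-only 68% CL half-width on mu at the
    Asimov point mu = 1: 1 / sqrt(sum_i s_i^2 / (s_i + b_i))."""
    return 1.0 / math.sqrt(sum(s * s / (s + b) for s, b in zip(signal, background)))

def scan_interval(observed, signal, background, lo=0.0, hi=3.0, steps=30001):
    """68% CL interval from the points where -ln L rises by 0.5 above its minimum."""
    grid = [lo + (hi - lo) * k / (steps - 1) for k in range(steps)]
    values = [poisson_nll(m, observed, signal, background) for m in grid]
    vmin = min(values)
    inside = [m for m, v in zip(grid, values) if v - vmin <= 0.5]
    return min(inside), max(inside)

# Hypothetical templates in five bins of the discriminator.
signal = [1, 2, 4, 8, 15]
background = [200, 120, 60, 25, 10]
asimov = asimov_dataset(signal, background)
sigma = curvature_uncertainty(signal, background)
lo, hi = scan_interval(asimov, signal, background)
print(f"curvature: +/-{sigma:.3f}, scan: [{lo:.3f}, {hi:.3f}]")
```

The scanned interval is slightly asymmetric around 1 because of the Poisson likelihood. Its average half-width, however, agrees closely with the curvature estimate.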

Subsequently, an Asimov pseudo-data set is generated assuming one of the considered BSM signal hypotheses together with all background contributions. This data set is then fitted using the SM signal and background MC templates. For each category, the fit provides the estimator \(\hat{\mu }\), which is equal to the number of measured BSM signal events divided by the number of expected SM signal events:

\[ \hat{\mu } = \frac{\hat{N}_{\mathrm {BSM}}}{N_{\mathrm {SM}}}, \]

where \(\hat{N}_{\mathrm {BSM}}\) denotes the number of BSM signal events measured by the fit.

In this case study, \(\hat{\mu }\) corresponds to the ratio between the measured BSM cross section and the expected SM one. Let \(\tilde{\mu }\) be defined as the ratio of the BSM and SM cross sections:

\[ \tilde{\mu } = \frac{\sigma _{\mathrm {BSM}}}{\sigma _{\mathrm {SM}}}. \]

Therefore, \(\tilde{\mu }\) is the signal strength that one would expect to measure if the fit were not biased by shape differences between the SM template and the BSM signal. The (B)SM cross section is given by the number \(N_{\mathrm {(B)SM}}\) of (B)SM events entering the considered category divided by the integrated luminosity \({\mathcal {L}}\), so that \(\tilde{\mu } = N_{\mathrm {BSM}}/N_{\mathrm {SM}}\). Finally, the total bias as a fraction of the expected value \(\tilde{\mu }\) is introduced:

\[ \mathrm {bias} = \frac{\hat{\mu } - \tilde{\mu }}{\tilde{\mu }}. \]
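The bias study can be reproduced in a statistics-only toy. An Asimov data set is built from a hypothetical BSM signal shape plus background and fitted with the SM signal template. \(\hat{\mu }\) is then compared with \(\tilde{\mu } = N_{\mathrm {BSM}}/N_{\mathrm {SM}}\). The fit solves the likelihood equation Σᵢ sᵢ (nᵢ/(μ sᵢ + bᵢ) − 1) = 0 by bisection. The left-hand side decreases monotonically in μ, so the root is unique if it lies in the search range. Nuisance parameters are omitted, and all templates are assumptions.

```python
def fit_signal_strength(observed, signal_template, background, lo=0.0, hi=100.0, tol=1e-9):
    """Stat-only binned Poisson ML fit of mu: bisection on the score
    sum_i s_i * (n_i / (mu * s_i + b_i) - 1), which decreases with mu."""
    def score(mu):
        return sum(s * (n / (mu * s + b) - 1.0)
                   for n, s, b in zip(observed, signal_template, background))
    a, c = lo, hi
    while c - a > tol:
        mid = 0.5 * (a + c)
        if score(mid) > 0:
            a = mid  # likelihood still increasing: root lies above mid
        else:
            c = mid
    return 0.5 * (a + c)

def relative_bias(bsm_signal, sm_signal, background):
    """Fit an Asimov data set built from the BSM signal with the SM template.
    Returns (mu_hat, mu_tilde, (mu_hat - mu_tilde) / mu_tilde)."""
    asimov = [s + b for s, b in zip(bsm_signal, background)]
    mu_hat = fit_signal_strength(asimov, sm_signal, background)
    mu_tilde = sum(bsm_signal) / sum(sm_signal)  # N_BSM / N_SM (same luminosity)
    return mu_hat, mu_tilde, (mu_hat - mu_tilde) / mu_tilde

sm = [1, 2, 4, 8, 15]  # hypothetical SM template, peaking at high discriminator values
bkg = [200, 120, 60, 25, 10]
same_shape = relative_bias([3 * x for x in sm], sm, bkg)  # BSM = 3 x SM, same shape
other_shape = relative_bias([15, 8, 4, 2, 1], sm, bkg)    # same total, opposite shape
```

When the BSM signal has the same shape as the SM template, \(\hat{\mu }\) equals \(\tilde{\mu }\) and the bias vanishes. When the BSM events populate background-like bins, the fit underestimates the signal. This is the shape effect that the ADNN is designed to suppress.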

The bias quantities estimated with the DNN trained on SM events and the ADNN are shown in Table 5 for all the BSM hypotheses except the H0L1. Given its small cross section, no events generated according to the H0L1 model enter the analysis phase space.

As already noted, these BSM models describe HVV couplings that differ substantially from the SM ones. This is also reflected in the fitted values of the \(\hat{\mu }\) parameter, which are much larger than one.

Using the ADNN in the analysis strongly reduces the bias in both the C1 and C2 regions with respect to the DNN. This behavior is expected since, as illustrated in Fig. 8, the distributions of the DNN VBF-output for all the BSM signal events differ greatly from that for SM signal events. Quantitatively, the DNN bias is roughly 20–80% of the expected value \(\tilde{\mu }\), depending on the BSM hypothesis used to generate the Asimov data set and on the category in which the analysis is carried out. The C2 category shows a larger model dependence than the C1 region. This is explained by the difference in the VBF-output shape between SM and BSM events being even more pronounced in C2 than in C1. On the other hand, the ADNN reduces the bias to less than 10% of \(\tilde{\mu }\) for almost all the BSM models. Moreover, the ADNN biases are comparable in magnitude to the statistical uncertainty in \(\hat{\mu }\) related to the finite size of the MC samples, whereas for the DNN the biases are 5–10 times larger than such uncertainties. As for the systematic component, in both categories it accounts for 75–95% of the total uncertainty, depending on the BSM model used to generate the Asimov template.

The same results are summarized in Fig. 11, where the bias estimated for each BSM hypothesis is reported for both the C1 and C2 categories.

Figure 12 shows the resulting bias as a function of the expected signal strength modifier \(\tilde{\mu }\), estimated with both the DNN and the ADNN in each of the two categories. For both networks, the bias is a roughly constant fraction of \(\tilde{\mu }\). Using the ADNN reduces this fraction to values below 10% in both categories.

5 Conclusion

In this work, an implementation of the domain adaptation technique has been proposed for defining a learning algorithm that is independent of the data sample on which it is trained. The implementation is based on a system of two neural networks trained in a competitive way with an adversarial technique (ADNN).

To confirm the effectiveness of this approach, it was applied to the use case of the \(\mathrm {H}\rightarrow \mathrm {W^+}\mathrm {W^-}\) STXS cross section measurement at the LHC, with the aim of reducing the model dependence of the final results introduced by the signal extraction procedure. The measurement targets the VBF process as the signal process and has been performed at particle level, using a simulated sample of proton-proton collisions corresponding to an integrated luminosity of 138 fb\(^{-1}\). Compared to a standard feed-forward deep neural network, the ADNN allowed the VBF cross section to be measured with the same level of precision, while significantly reducing the measurement bias due to the signal modeling assumptions.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: This manuscript describes a general approach to provide model independent cross section measurements in High Energy Physics. The synthetic data described in the text have been used only with the purpose of validating the method for a potentially interesting use case, therefore the value of publishing the data is deemed to be limited.]

References

N. Berger et al., Simplified template cross sections – stage 1.1. LHCHXSWG-2019-003 (2019). arXiv:1906.02754

M. Kuusela, V.M. Panaretos, Statistical unfolding of elementary particle spectra: empirical Bayes estimation and bias-corrected uncertainty quantification. Ann. Appl. Stat. (2015). https://doi.org/10.1214/15-aoas857

S. Ben-David, J. Blitzer, K. Crammer, A. Kulesza, F. Pereira, J. Vaughan, A theory of learning from different domains. Mach. Learn. 79, 151–175 (2010). https://doi.org/10.1007/s10994-009-5152-4

Y. Ganin, E. Ustinova, H. Ajakan, P. Germain, H. Larochelle, F. Laviolette, M. Marchand, V. Lempitsky, Domain-adversarial training of neural networks. J. Mach. Learn. Res. 17, 2030–2096 (2016). arXiv:1505.07818

G. Louppe, M. Kagan, K. Cranmer, Learning to pivot with adversarial networks (2016). arXiv:1611.01046

A.V. Gritsan, J. Roskes, U. Sarica, M. Schulze, M. Xiao, Y. Zhou, New features in the JHU generator framework: constraining Higgs boson properties from on-shell and off-shell production. Phys. Rev. D (2020). https://doi.org/10.1103/physrevd.102.056022

CMS Collaboration, Measurements of the Higgs boson production cross section and couplings in the W boson pair decay channel in proton–proton collisions at \({\sqrt{s}}\) = 13 TeV. Submitted to EPJC (2022). arXiv:2206.09466

P. Nason, A new method for combining NLO QCD with shower Monte Carlo algorithms. JHEP 11, 040 (2004). https://doi.org/10.1088/1126-6708/2004/11/040

T. Sjöstrand, S. Mrenna, P.Z. Skands, A brief introduction to PYTHIA 8.1. Comput. Phys. Commun. 178, 852–867 (2008). https://doi.org/10.1016/j.cpc.2008.01.036

F. Chollet et al., Keras (2015). https://github.com/fchollet/keras

M. Abadi, A. Agarwal, P. Barham, E. Brevdo, Z. Chen, C. Citro, G. S. Corrado, A. Davis, J. Dean, M. Devin, et al., Tensorflow: large-scale machine learning on heterogeneous distributed systems (2016). arXiv:1603.04467

T. Akiba, S. Sano, T. Yanase, T. Ohta, M. Koyama, Optuna: a next-generation hyperparameter optimization framework, in Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (2019). https://doi.org/10.48550/1907.10902. arXiv:1907.10902

A. Klein, S. Falkner, S. Bartels, P. Hennig, F. Hutter, Fast Bayesian optimization of machine learning hyperparameters on large datasets, in Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, (2017) p. 528–536. https://doi.org/10.48550/1605.07079. arXiv:1605.07079

Acknowledgements

The authors acknowledge the CMS Collaboration, in particular the computing, Monte Carlo, and \(\mathrm {H}\rightarrow \mathrm {WW}\) working groups, for providing the computing resources used for the Monte Carlo event simulation of the physics processes studied in this paper.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3. SCOAP3 supports the goals of the International Year of Basic Sciences for Sustainable Development.

About this article

Cite this article

Camaiani, B., Seidita, R., Anderlini, L. et al. Model independent measurements of standard model cross sections with domain adaptation. Eur. Phys. J. C 82, 921 (2022). https://doi.org/10.1140/epjc/s10052-022-10871-3


DOI: https://doi.org/10.1140/epjc/s10052-022-10871-3