Abstract

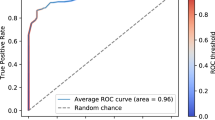

The UK COVID-19 Vocal Audio Dataset is designed for the training and evaluation of machine learning models that classify SARS-CoV-2 infection status or associated respiratory symptoms using vocal audio. The UK Health Security Agency recruited voluntary participants through the national Test and Trace programme and the REACT-1 survey in England from March 2021 to March 2022, during dominant transmission of the Alpha and Delta SARS-CoV-2 variants and some Omicron variant sublineages. Audio recordings of volitional coughs, exhalations, and speech were collected in the ‘Speak up and help beat coronavirus’ digital survey alongside demographic, symptom and self-reported respiratory condition data. Digital survey submissions were linked to SARS-CoV-2 test results. The UK COVID-19 Vocal Audio Dataset represents the largest collection of SARS-CoV-2 PCR-referenced audio recordings to date. PCR results were linked to 70,565 of 72,999 participants and 24,105 of 25,706 positive cases. Respiratory symptoms were reported by 45.6% of participants. This dataset has additional potential uses for bioacoustics research, with 11.3% of participants self-reporting asthma and 27.2% having linked influenza PCR test results.


Background & Summary

The scale and impact of the COVID-19 pandemic have created a need for rapid and affordable point-of-care diagnostics and screening tools for infection monitoring. The possibility of accurate and generalisable detection of COVID-19 from voice and respiratory sounds using audio classification on a smart device has been hypothesised as a way to provide a non-invasive, affordable and scalable option for COVID-19 screening for both personal and public health monitoring1. However, prior machine learning studies to determine the feasibility of COVID-19 detection from audio have largely relied on datasets which are too small or unrepresentative to produce a generalisable model, or which include self-reported COVID-19 status rather than gold standard PCR (Polymerase Chain Reaction) testing for SARS-CoV-2 infection (see Table 1). These datasets have a relatively small proportion of positive cases, and include inadequate metadata for statistical evaluation. They largely do not enable studies using them to meet diagnostic reporting criteria (for example the STARD 2015 (ref. 2) and forthcoming STARD-AI (ref. 3) criteria), such as reporting the interval between reference test and recording, random sampling, or avoiding case-control designs in which positives and negatives are sourced from different recruitment channels.

Following the publication of initial studies reporting accurate classification of SARS-CoV-2 infection from vocal and respiratory audio4,5, the UK Health Security Agency (UKHSA, formerly NHS Test and Trace, the Joint Biosecurity Centre, and Public Health England) were commissioned to collect a dataset to allow for the independent evaluation of these studies. Dataset analysis was carried out by The Alan Turing Institute and Royal Statistical Society (Turing-RSS) Health Data Lab (https://www.turing.ac.uk/research/research-projects/turing-rss-health-data-lab). A dataset larger than the majority of existing datasets was needed to provide sufficient instances of various recording environments and mobile devices (information which is not collected), and to provide sufficient instances for the thousands of features or representations typically produced from short vocal audio samples6. Such a dataset also needed to be sufficiently large and diverse to validate model performance across various participant demographic groups and presentations of SARS-CoV-2 infection.

UKHSA developed an online survey to collect a novel SARS-CoV-2 bioacoustics dataset (Fig. 1a,b) in England from 2021-03-01 to 2022-03-07 (Fig. 1d), during dominant transmission of the Alpha and Delta SARS-CoV-2 variants and some Omicron variant sublineages7. Participants were recruited after undergoing testing for SARS-CoV-2 infection as part of the national “Real-time Assessment of Community Transmission” (REACT-1) surveillance study (https://www.imperial.ac.uk/medicine/research-and-impact/groups/react-study/studies/the-react-1-programme/) and the NHS Test and Trace (T&T) symptomatic testing programme in the community (known as Pillar 2)8. To facilitate independent validation of existing models, audio samples common across existing studies were collected in the online survey, including: volitional (forced) cough, an exhalation sound, and speech. These were linked to SARS-CoV-2 testing data (method, results, date) for the test undertaken by the participant either as part of REACT-1 or T&T. Further data on participant demographics (age, gender, ethnicity, first language, location) and symptoms (type and date of onset) were collected in the online survey to monitor potential bias.

Fig. 1 Study recruitment: (a) Illustration of dataset components. Participants of the REACT-1 study, or NHS Test and Trace patients, underwent a test for SARS-CoV-2 infection. In this illustration the swab is healthcare worker-administered; however, the majority of swab samples were self-administered in this study. Individuals from both cohorts were contacted and prompted to complete a digital survey, including recording a volitional cough and other respiratory sounds. SARS-CoV-2 test result data and associated information were combined with survey results and audio recordings, and de-identified. This data descriptor document describes the process of producing the combined dataset, and its contents. This illustration was created by Scriberia with The Turing Way community (used under a CC-BY 4.0 licence https://doi.org/10.5281/zenodo.6821117). (b) Screenshots of the ‘Speak up and help beat COVID’ digital survey. (c) Participant survey completion rate by survey question across both recruitment modes. Completion counts were only collected for the ‘beta’ survey phase, described in Methods - Data Collection (59,431 participants, 81.4% of the total dataset). (d) Participant records as a percentage of dataset total by week of survey submission, recruitment source, and SARS-CoV-2 test result. Individual REACT-1 survey rounds can be seen as peaks at irregular intervals. (e) Time of symptom onset, SARS-CoV-2 test swabbing (test start date), and SARS-CoV-2 test processing in relation to time of study survey submission in days, for each recruitment source. Percentages are shown relative to the dataset total for each recruitment source. Symptom onset records are shown only where symptoms were reported. Participants had been made aware of their SARS-CoV-2 test results on or shortly after the test processed date. REACT-1 participants who had an influenza test would have completed the test swab on the same date as their SARS-CoV-2 test, but would not have been made aware of the result.

The UK COVID-19 Vocal Audio Dataset9 is designed for studies examining the possibility of classification of SARS-CoV-2 infection from vocal audio, including for the training and evaluation of machine learning models using PCR as a gold-standard reference test10. The inclusion of influenza status (for REACT-1 participants in REACT rounds 16–18) and symptom and respiratory condition metadata may provide additional uses for bioacoustics research.

All summary statistics described in this manuscript reflect the open access version of the UK COVID-19 Vocal Audio Dataset9 unless otherwise stated. Differences between the protected and open access dataset are described in Methods - Data Anonymisation. The protected version of the UK COVID-19 Vocal Audio Dataset is fully documented in our pre-print data descriptor.

Data anonymisation

To enable wider accessibility, an open access version of the UK COVID-19 Vocal Audio Dataset9 was produced to protect participant anonymity according to the ISB1523: Anonymisation Standard for Publishing Health and Social Care Data (https://digital.nhs.uk/data-and-information/information-standards/information-standards-and-data-collections-including-extractions/publications-and-notifications/standards-and-collections/isb1523-anonymisation-standard-for-publishing-health-and-social-care-data). The audio recordings of read sentences were removed in the open access version of the dataset, on the basis that non-distorted speech data can constitute sensitive biometric personal information, carrying a risk of participant reidentification. Additionally, several participant metadata variables were either removed, binned, obfuscated, or pseudonymised to meet the requirement of K-3 anonymity after combining all variables relating to personal data. The total number of participants remained unchanged.

Specifically, audio metadata relating to the audio recordings of read sentences were removed, including audio transcripts. Participant metadata variables relating to ethnicity, first language, vaccination status, height, weight, and COVID-19 test laboratory code were removed. Participant age was binned into age groups, and survey recruitment source variables were binned into general recruitment source groups. All dates were indexed to a random date and obfuscated with ± 10 days random noise.
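
As an illustration of the date handling described in this section, the following minimal sketch indexes dates to a shared reference date and adds one noise offset per participant, so that within-participant time differences are preserved. It is not the procedure used to produce the dataset: the field names (`test_date`, `submission_date`), the uniform integer noise distribution, and the choice to output integer day offsets are all assumptions made for the example.

```python
import random
from datetime import date, timedelta

MAX_NOISE_DAYS = 10  # the +/- 10 day noise bound stated in the text


def obfuscate_dates(records, reference_date, rng):
    """Return each date as a day offset from reference_date plus noise.

    records maps a participant id to a dict of {field name: date}.
    One noise value is drawn per participant and applied to all of
    that participant's dates, so differences between them are kept.
    The uniform integer noise is an assumption, not the documented method.
    """
    obfuscated = {}
    for participant_id, fields in records.items():
        noise = rng.randint(-MAX_NOISE_DAYS, MAX_NOISE_DAYS)
        obfuscated[participant_id] = {
            name: (value - reference_date).days + noise
            for name, value in fields.items()
        }
    return obfuscated


# Hypothetical example records; these are not values from the dataset.
rng = random.Random(0)
reference_date = date(2021, 1, 1) + timedelta(days=rng.randrange(365))
records = {
    "p1": {"test_date": date(2021, 6, 1), "submission_date": date(2021, 6, 4)},
    "p2": {"test_date": date(2021, 11, 20), "submission_date": date(2021, 11, 21)},
}
shifted = obfuscate_dates(records, reference_date, rng)

for participant_id, fields in shifted.items():
    gap = fields["submission_date"] - fields["test_date"]
    print(participant_id, "days from test to submission:", gap)
```

Because the same offset is applied to every date for a participant, intervals such as test-to-submission remain exact, whereas comparisons of absolute dates between participants carry the added noise.
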
All dates included in the metadata are indexed to the same random date for comparison, and all dates for each participant have the same level of noise applied, to allow for the calculation of time differences at the participant level. Geographical information was originally collected at the local authority (sub-regional administrative division) level, and was later aggregated to region (first level of national sub-division) level and pseudonymised to avoid the risk of participant disclosure. A flagged COVID-19 test laboratory code in the protected dataset indicated a laboratory with reported false COVID-19 test results. All results from this laboratory have been set to None for the open dataset version, slightly altering overall counts of positive, negative and invalid results. All summary statistics presented in this article reflect the open access version of the UK COVID-19 Vocal Audio Dataset9 unless otherwise stated. A data dictionary for the open access version of the UK COVID-19 Vocal Audio Dataset metadata is provided in Supplementary Tables S3, S4.

Ethics

This study has been approved by The National Statistician’s Data Ethics Advisory Committee (reference NSDEC(21)01), the Cambridge South NHS Research Ethics Committee (reference 21/EE/0036) and the Nottingham NHS Research Ethics Committee (reference 21/EM/0067).

Data Records

The open access version of the UK COVID-19 Vocal Audio Dataset has been deposited in a Zenodo repository (https://doi.org/10.5281/zenodo.10043977)9, and is available under an Open Government Licence (v3.0, https://www.nationalarchives.gov.uk/doc/open-government-licence/version/3/). Additional data records as part of the protected dataset version may be requested from UKHSA (DataAccess@ukhsa.gov.uk), and will be granted subject to approval and a data sharing contract. To learn about how to apply for UKHSA data, visit: https://www.gov.uk/government/publications/accessing-ukhsa-protected-data/accessing-ukhsa-protected-data.

There were 72,999 participants included in the final dataset, with one submission per participant. This included 25,706 participants linked to a positive SARS-CoV-2 test. The majority of submissions (70,565, 96.7%) were linked to results derived from PCR tests (RT-PCR, q-PCR, ePCR), followed by lateral flow tests (1,925, 2.6%) and LAMP (loop-mediated isothermal amplification) tests (244, 0.3%). Of all test results, 257 (0.4%) were inconclusive with an unknown or void result. This dataset represents the largest collection of PCR-referenced audio recordings for SARS-CoV-2 infection to date, with approximately 2.6 times as many participants with PCR-referenced audio recordings as the Tos COVID-19 dataset (27,101)46.

Comparison of study participant demographics with reference cohorts, including NHS Test and Trace users who took a PCR or LAMP test 25-02-2021 to 02-03-2022 (ref. 33) and participants in REACT-1 rounds 13–18 (refs. 34–37). Data for England only. Where study data demographic distributions are compared to NHS Test and Trace and REACT-1, only the study data subset from each respective recruitment channel is used. NHS Test and Trace and REACT-1 data subset plots also compare the split of SARS-CoV-2 positive and negative cases between this study and the cohort reference. Category groups are created according to how baseline data is reported. ‘Unknown’ categories are not displayed. NHS Test and Trace ethnicity data by week is not publicly available. *Y&H = Yorkshire and The Humber. Granular age data, ethnicity group and UK region data are available only in the protected version of the dataset, and are presented here for context.
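
The proportions quoted in this section can be rechecked from the reported counts. The short sketch below recomputes the test-type shares and the size ratio against the Tos COVID-19 dataset; all figures are those stated in the text, and the variable names are chosen for illustration only.

```python
# Counts as reported in the Data Records text.
TOTAL_PARTICIPANTS = 72_999
LINKED_COUNTS = {
    "PCR": 70_565,
    "lateral flow": 1_925,
    "LAMP": 244,
    "inconclusive result": 257,
}
TOS_PCR_PARTICIPANTS = 27_101  # PCR-referenced participants in the Tos dataset


def percentage(count, total=TOTAL_PARTICIPANTS):
    """Share of the total as a percentage, rounded to one decimal place."""
    return round(100 * count / total, 1)


shares = {name: percentage(count) for name, count in LINKED_COUNTS.items()}
pcr_ratio = round(LINKED_COUNTS["PCR"] / TOS_PCR_PARTICIPANTS, 1)

for name, value in shares.items():
    print(f"{name}: {value}%")
print(f"PCR-referenced participants relative to Tos: {pcr_ratio}x")
```
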

The substantial majority of participants (94.5%) reported English as their first language or the language most commonly spoken at home (if they have two or more first languages). Therefore, any analysis of speech data may only be valid in English speakers and should be tested in other populations before language-generalisable results are reported. Regional accents may have an effect on speech models. Recruitment is relatively balanced by administrative region, particularly for REACT-1-recruited participants. As a result, the audio data may contain a representative sample of regional English accents. Most study participants were recruited in England, so more speech data would be needed to evaluate accents which are more common outside of England.

The participant metadata variables not captured directly in the digital survey (digital survey questions and related variables listed in Supplementary Table S1) were shared by the relevant recruitment channel (see Methods - Survey Design), where format and prompt vary. Efforts have been made to standardise data format between recruitment channels; these are listed in the participant metadata dictionary (Supplementary Table S3). Users should note that some calculated variables, such as symptom_onset, continue to have values of distinct distributions despite this standardisation, due to the variation in recruitment methods (patients seeking a test vs survey population). T&T- and REACT-derived demographic variables offered limited multiple-choice options, including limited ethnicity and gender categories, meaning some demographic analyses are not possible.
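
One way to act on the caution about symptom_onset is to summarise such variables separately for each recruitment channel before pooling them. The sketch below is a minimal illustration under stated assumptions: the `recruitment_source` column name, the group labels, the numeric encoding of symptom_onset (taken here as days relative to survey submission) and the example rows are all hypothetical, not values or names confirmed by the dataset documentation.

```python
from collections import defaultdict
from statistics import median


def summarise_by_channel(rows, value_key="symptom_onset",
                         group_key="recruitment_source"):
    """Summarise a numeric variable separately for each recruitment channel.

    Rows with a missing value (None) are skipped rather than imputed.
    """
    groups = defaultdict(list)
    for row in rows:
        value = row.get(value_key)
        if value is not None:
            groups[row[group_key]].append(value)
    return {
        group: {
            "n": len(values),
            "median": median(values),
            "min": min(values),
            "max": max(values),
        }
        for group, values in groups.items()
    }


# Hypothetical rows for illustration only; not taken from the dataset.
rows = [
    {"recruitment_source": "Test and Trace", "symptom_onset": -3},
    {"recruitment_source": "Test and Trace", "symptom_onset": -2},
    {"recruitment_source": "Test and Trace", "symptom_onset": -4},
    {"recruitment_source": "REACT", "symptom_onset": -10},
    {"recruitment_source": "REACT", "symptom_onset": -1},
    {"recruitment_source": "REACT", "symptom_onset": None},
]
summary = summarise_by_channel(rows)
for channel, stats in summary.items():
    print(channel, stats)
```

Reporting per-channel summaries like these makes it visible when a pooled distribution is really a mixture of two differently recruited populations.
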