Abstract

Effective science instruction and the associated student learning rely on a strong foundation of teacher content knowledge. This study of the Praxis® General Science Content Knowledge Test from May 2006 to June 2016 investigates the content knowledge of 28,688 general science teacher candidates. Examinees performed well on Life Science topics while Earth & Space Science was identified as an area in need of support. Analysis of the assessment revealed differences in achievement associated with undergraduate major, gender, and ethnicity. Test-takers with STEM majors demonstrated stronger content knowledge than their out-of-field counterparts, men outperformed women, and White test-takers lost fewer scaled points than Black and Hispanic candidates. To strengthen recruitment and retention efforts, recommendations include reviewing our findings for alignment with state standards. This will facilitate development of comprehensive content knowledge professional learning experiences that can anchor focused support on those topics where test-takers tend to demonstrate the lowest proficiency.

1 Introduction

Middle school science experiences play a pivotal role in forming students’ STEM identities as they progress through academic and career trajectories. Middle school science teachers are unique in that they require broader content knowledge (CK) across topics than their high school counterparts who primarily focus on one discipline. This means that they must be able to make abstract science concepts relatable to students with diverse learning needs [1]. At the center of science teacher research are efforts to improve student learning. For this to happen teachers must understand the content they teach. Teacher licensure testing is designed to ensure a baseline quality of teacher content knowledge [2, 3].

Due to a national shortage of certified science teachers in the United States, many states have been forced to adopt policies that allow teaching assignments across disciplines without requiring teachers to demonstrate mastery in a specific field. Although the majority of states require discipline-specific endorsements, many states continue to allow secondary science teachers to teach across disciplines with a general science certification [4]. An area of particular concern is that new science teachers are assigned to teach out-of-field more frequently than those with more experience, which negatively impacts their development and leads to attrition [5, 6]. Because teachers are primarily responsible for student learning outcomes, it is imperative that they are supported in CK development. In this study, we focus on the CK of prospective general science teachers through analysis of the Praxis® General Science Content Knowledge Test (GSCKT) with the objective of informing professional learning (PL) experiences.

2 Purpose of the study

Licensure testing is common, and nearly all states include it as part of the requirements for teaching in public schools. Across the United States, the Praxis® II content knowledge tests are most commonly administered to assess the knowledge and competencies of an entry-level teacher [3]. The GSCKT is a 2.5-hour computer-delivered examination. It includes 135 selected-response questions assessing integration of basic topics in chemistry, physics, life science, and Earth science in alignment with the National Science Education Standards and National Science Teachers Association standards. Although content domains are determined by practitioners in each field [7], overall general science (GS) content categories include (1) Science Methodology, Techniques, and History, (2) Physical Science, (3) Life Science, (4) Earth and Space Science, and (5) Science, Technology, and Society [8]. The original assessments are not publicly available; however, sample questions and content topics are available in the GSCKT Study Companion [8]. While ETS conducts a multi-state standard-setting study for each assessment, each state determines its own passing score. This means that although a given scaled score is considered equivalent across states, the passing score may differ. Multiple test forms are offered throughout the year, and questions vary between test forms [7].

As part of the commitment to offer high quality tests with minimal bias, ETS evaluates the assessment using differential item functioning (DIF). DIF analysis allows test developers to determine whether people in different groups (typically defined by gender or race) perform differently on test items. Groups of people are matched using the content and skills scores from the test or section of the test. DIF occurs when people within matched groups perform differently on a test item. Each question is assigned a category. Category A questions show the least difference between matched groups, Category B questions show small to moderate differences, and Category C questions show the greatest differences. Test developers select questions from Category A whenever possible. Category B questions are only used if there are not enough Category A questions, with preference given to those with the smallest DIF values. Developers only use Category C questions if they are considered essential and must document the reasons why those questions are selected [9]. Similar to the methodology of ETS [9], this study included a DIF analysis. Type I error was controlled during hypothesis testing through application of a False Discovery Rate procedure [10], which retains more statistical power than stricter family-wise corrections. Subjects were grouped into quartiles and a logistic regression was run that included gender and race. Our analysis does not account for sample sizes. Results from these analyses are reported in Supplemental Materials Tables B and C.
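The False Discovery Rate control cited here [10] can be illustrated with the Benjamini-Hochberg step-up procedure. The sketch below is a minimal illustration and not the study's own code: the function name `benjamini_hochberg`, the FDR level `q = 0.05`, and the p-values are all assumptions chosen for demonstration.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Flag which hypotheses are rejected at false discovery rate q.

    Hypothetical helper for illustration; q = 0.05 is an assumed level.
    """
    m = len(p_values)
    # Rank the tests from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank k whose p-value satisfies p_(k) <= (k / m) * q.
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            max_rank = rank
    # Reject every hypothesis ranked at or below that cutoff.
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= max_rank:
            rejected[idx] = True
    return rejected


# Made-up p-values, e.g. one per test item in a DIF screen.
item_p_values = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205]
fdr_flags = benjamini_hochberg(item_p_values)
# Bonferroni correction at the same level, for comparison.
bonferroni_flags = [p <= 0.05 / len(item_p_values) for p in item_p_values]
print(sum(fdr_flags), sum(bonferroni_flags))
```

With these made-up values the step-up procedure flags two items, while a Bonferroni correction at the same level flags only one, which is the sense in which FDR control preserves power.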

Teacher learning experiences are diverse and include everything from formal topic-specific seminars to informal collegial conversations in school buildings. They begin through teacher preparation programs and continue once teachers enter the classroom [11]. The content focus paired with how students learn that content is considered among top characteristics of effective teacher learning experiences influencing student achievement [2, 11]. Science instruction includes learning new ideas while unlearning old ones, therefore knowledge of and ability to reveal common misconceptions is central to designing quality learning experiences. Because middle school science teachers are frequently required to teach across disciplines it is important to leverage teacher training and PL opportunities that emphasize gaps in teachers’ content knowledge [2].

3 Research questions

This study investigates the following research questions: (1) What are the correlations between personal and professional characteristics of Praxis® General Science: Content Knowledge Test examinees and scaled score performance in the last decade? (2) How have examinees performed as a whole in each category on the Praxis® General Science: Content Knowledge Test? (3) What are the correlations between personal and professional characteristics of Praxis® General Science: Content Knowledge Test examinees and category performance in the last decade? What have been the relative category performances of examinees of varying characteristics?

4 Conceptual framework

It is our assertion that strong foundational CK has a positive impact on licensure examination performance. Teacher knowledge has a direct impact on instructional design and is considered among the most influential factors contributing to student achievement [2, 3, 12]. Figure 1 presents a model depicting the relationship between science teacher professional knowledge and skills and student learning. Quality instruction is influenced by science teacher identity as well as science teacher CK. Targeted professional learning experiences designed for the needs of GS teachers improve instructional quality in order to maximize student learning.

Fig. 1 Model of science teacher professional knowledge and skills. Science teacher knowledge is influenced by topic specific knowledge as measured by the Praxis® General Science Content Knowledge Test. For professional learning to improve the quality of science instruction, it must be sustained and intensive, variable inclusive, and include collective participation across the building and/or district. Adapted from Opfer & Pedder [15], Menter & McLaughlin [16], and Ha et al. [17].

4.1 Science teacher identity

Teachers’ self-efficacy and multiple identities (personal and professional characteristics as presented within the dataset) associated with unique backgrounds influence development as they grow in their practice [13, 14]. Because of this, science instruction is impacted by the beliefs teachers hold about science teaching [14]. Strong content knowledge, in-field or out-of-field placement, access to mentors and learning communities are among factors contributing to recruitment and retention of STEM teachers [13].

Science teacher identity is dynamic and complex. It is a socially constructed ongoing process that describes the teacher within a personal and/or professional context [18, 19]. Student populations are becoming increasingly diverse, yet science learning environments tend to be Eurocentric [20, 21]. This results in overrepresentation of White middle-class candidates with monocultural perspectives within the STEM fields. Understanding that preservice teachers of color experience burdens including subliminal racism or impostor syndrome, it is thereby important to consider recruitment, retention, and professional learning efforts that foster development of underrepresented science teacher candidates [20, 21].

4.2 Quality instruction: teacher content knowledge & student learning

Quality science instruction includes learning experiences that challenge students to think deeply about phenomena and processes while critiquing and evaluating claims, constructing scientific explanations, and supporting arguments with evidence [22]. Relevant to student experiences, quality instruction supports authentic participation in science practices and exploration of student interests [23]. Instructional quality, when thought of as a continuum, is influenced by licensure policies and practices. Learning to teach is dependent upon both CK and pedagogical content knowledge (PCK). CK differs from PCK in that PCK is specific to the knowledge teachers use to transfer content to students and incorporates an understanding of how students learn the content and skills specific to the discipline [24,25,26]. Teacher CK impacts instructional design and is central to PCK. It is considered among the most influential factors contributing to student achievement [3, 12]. Much like their students, science teachers also enter the classroom with misconceptions about the content they teach [27].

4.3 Differentiated professional learning

High quality, differentiated PL is central to improving instruction, organizing curriculum, facilitating clear communication of ideas, and creating 3D science learning experiences. Within the context of the Next Generation Science Standards, this incorporates science & engineering practices, disciplinary core ideas, and crosscutting concepts [22]. An integral feature of a professional development (PD) theory of action is how to facilitate transfer of new ideas into systems of practice inside the classroom when the knowledge and skills gained through PD are commonly acquired outside of the classroom [28]. Understanding what teachers need to know, how they have to know it, and how to help them learn it [24] has the potential to increase CK, PCK, and overall confidence in teaching. Because of this, teachers’ initial CK must be taken into consideration when planning for instructional support [2, 25].

5 Methodology

5.1 Research paradigm

This study was part of a project investigating personal and professional characteristics associated with outcomes on the Praxis® Content Knowledge Tests [29,30,31,32,33]. Here we present findings about examinee performance on the Praxis® GSCKT as a whole and at the category level. In order to gain insight into examinee performance on the assessment, we followed a methodology similar to Ndembera et al. [30], which is reiterated below: (1) regression of the examination as a whole; (2) categorical percent correct; (3) regression at the category level; (4) ANOVA at the category level; and (5) scaled points lost per category. As deidentified human data is used in this study, all methods were carried out in accordance with relevant guidelines and regulations. This study was approved by the Institutional Review Board.

5.2 Data sample & collection procedure

The data analyzed in this study included all examinees who sat for the Praxis® GSCKT from 2006 to 2016 and was obtained from ETS as part of a National Science Foundation project to assess subject matter knowledge of beginning STEM teachers in the US. Because test-takers may take the exam more than once, the data was restricted to the highest score recorded for each examinee, resulting in a study population of 28,688. Examinee data included self-reported demographic characteristics in response to survey questions asking about personal and professional characteristics as part of the examination. Selected demographics are presented in Table 1; full descriptive statistics are found in Supplemental Table A. Examinees selected from male/female options when reporting their biological sex. It should be noted that non-binary options were not available within the survey. Understanding that these terms reference biological sex rather than gender, we use terms such as man and woman when not discussing results directly. In order to maintain consistency with reporting on the Praxis® ESS CKT, we use male/female options when discussing our results.

After exclusions were applied, the majority of test-takers were female, comprising 60.1% of the testing population as compared to 39.5% male. Of those who responded, White test-takers comprised 77.7% of the study population, while Black and Hispanic test-takers represented 8.3% and 2.5% respectively. Biology undergraduate majors represented the largest testing population, comprising 29.2%, followed by other non-STEM (24.8%), other STEM (11.8%), physical science (9.4%) and Earth & space science (3.8%). Of the testing population, 77% held undergraduate grade point averages (GPA) above 3.0. Regarding teaching experience, 59.3% reported that they had not yet entered the teaching field, 17.4% had completed more than 3 years of teaching, and 17.3% had 1–3 years of teaching. It can be inferred that test-takers with prior teaching experience registered for the assessment as part of an additional certification.

5.3 Data treatment

5.3.1 Regression model selection: scaled score

Over the decade studied, 13 test forms were administered, each with unique variation in number and difficulty of exam items. In order to adjust for exam difficulty when comparing relative performance between candidates and across years, ETS converts raw scores to scaled scores that range from 100 to 200 [7]. A stepwise linear regression was constructed utilizing tenfold cross validation to estimate the association between self-reported variables (Supplemental Materials Table A) and GSCKT performance. The regression model combined repeated cross-sectional data and was limited to two-way interactions. This facilitated the disaggregation of population subgroups and identification of associations between self-reported demographic variables and scaled score on the GSCKT [34]. A descriptive analysis was then performed on the characteristics most strongly associated with GSCKT performance for comparison within groups.

5.3.2 Estimation of categorical percent correct

Information on the highest number of points each test taker earned per category was provided in the ETS dataset. Because it did not include the number of test items for each category, we used the highest reported number of items to represent the total number of questions. The following equation was used to estimate the categorical percentage score for each examinee and was repeated for each of the 13 versions of the assessment included in the study. Estimated percent correct per category was based on a weighted average of the percent correct per test form taking into consideration the number of examinees per test form.

Percent Correct = (number of correctly answered questions)/(highest items reported correct) × 100 [30, 33].
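The estimation described in this section can be sketched as follows. This is a minimal illustration under stated assumptions rather than the study's code: the function name `estimated_percent_correct`, the record layout, and the sample points are hypothetical, and the highest observed score on each form stands in for the unknown item count, as the text describes.

```python
from collections import defaultdict


def estimated_percent_correct(records):
    """Estimate percent correct for ONE category across test forms.

    records: iterable of (form_id, points_earned) pairs (assumed layout).
    """
    points_by_form = defaultdict(list)
    for form_id, points in records:
        points_by_form[form_id].append(points)

    weighted_sum = 0.0
    total_examinees = 0
    for points in points_by_form.values():
        # Proxy for the number of items: highest points reported on this form.
        max_items = max(points)
        form_mean = sum(p / max_items * 100 for p in points) / len(points)
        # Weight each form's mean by its number of examinees.
        weighted_sum += form_mean * len(points)
        total_examinees += len(points)
    return weighted_sum / total_examinees


# Made-up category points for two hypothetical test forms.
sample = [("A", 20), ("A", 15), ("A", 10), ("B", 24), ("B", 18)]
estimate = estimated_percent_correct(sample)
print(round(estimate, 1))
```

Because the denominator is the highest observed score rather than the true item count, the estimate is only as good as the assumption that at least one examinee per form answered every item in the category correctly.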

5.3.3 Regression model selection: category analysis

Associations between self-reported test-taker characteristics and category performance on the Praxis® GSCKT were identified through a stepwise linear regression using a tenfold cross validation procedure. Results from the regression model informed the ANOVA model selection.

5.3.4 ANOVA model selection: category analysis

The regression model was extended through Analysis of Variance (ANOVA) calculations using SAS software, Version 9.4, to determine which variables were most strongly associated with variance (η²) in category level performance. For each category, the three variables explaining the greatest η² were analyzed to estimate scaled points lost.
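As a reminder of what the effect size measures, η² is the share of total variance attributable to a grouping variable (between-group sum of squares divided by total sum of squares). The sketch below computes it for a single factor in plain Python. It is a simplified illustration, not the multi-variable SAS model used in the study, and the function name `eta_squared` and the scores are hypothetical.

```python
def eta_squared(groups):
    """One-way eta squared: SS_between / SS_total.

    groups: dict mapping a group label to a list of scores (assumed layout).
    """
    all_scores = [score for scores in groups.values() for score in scores]
    grand_mean = sum(all_scores) / len(all_scores)
    # Total variation of every score around the grand mean.
    ss_total = sum((score - grand_mean) ** 2 for score in all_scores)
    # Variation of each group mean around the grand mean, weighted by size.
    ss_between = sum(
        len(scores) * (sum(scores) / len(scores) - grand_mean) ** 2
        for scores in groups.values()
    )
    return ss_between / ss_total


# Made-up category scores for two hypothetical groups of examinees.
toy_scores = {"group_1": [1, 2, 3], "group_2": [4, 5, 6]}
effect = eta_squared(toy_scores)
print(round(effect, 3))
```

Multiplying the result by 100 gives the "percent of variance explained" form in which η² values are reported in the results.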

5.3.5 Estimation of scaled points lost: category analysis

Scaled points lost were calculated to determine examinees’ relative performance at the category level. Exam difficulty is accounted for in ETS reporting on performance on the assessment as a whole, not at the category level. Here scaled points lost for each category are reported using the equation:

Scaled points lost (C1) = m × (total number of questions in C1) − m × (number of correctly answered questions in C1)

where C1 denotes a given category and m is the slope between scaled score and total questions correct on the exam [30, 33]. Demographic comparisons were made within categories (e.g., by undergraduate major within Physical Science) but not across categories (e.g., undergraduate major in Physical Science vs. Earth and Space Science).
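The calculation can be sketched in a few lines. This is an illustration under stated assumptions, not the study's code: the text does not say how the slope m was estimated, so an ordinary least-squares fit is assumed here, and the function names and all numbers are hypothetical.

```python
def ols_slope(x, y):
    """Least-squares slope of y on x (assumed estimator for m)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    return s_xy / s_xx


def scaled_points_lost(slope, total_questions, correct_answers):
    """Mirror the formula: m * (total in category) - m * (correct in category)."""
    return slope * total_questions - slope * correct_answers


# Made-up whole-exam data: questions answered correctly vs. scaled score.
raw_correct = [60, 80, 100, 120]
scaled_score = [125, 140, 155, 170]
m = ols_slope(raw_correct, scaled_score)

# Hypothetical category with 27 questions, 19 answered correctly.
lost = scaled_points_lost(m, total_questions=27, correct_answers=19)
print(m, lost)
```

Expressing missed questions in scaled points puts category results on the same scale as the overall score, although, as noted above, form difficulty is not adjusted for at the category level.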

6 Results

6.1 Stepwise model

The stepwise linear regression yielded several statistically significant relationships between reported personal and professional characteristics and performance on the GSCKT. Undergraduate major, ethnicity and gender were identified by the regression model (Table 2) as the top demographic variables associated with performance. The independent variables in the model are significantly associated with test-taker performance, as confirmed by the F values and associated P < 0.0001 values. Reported R² and η² values can be expressed as a percentage and provide information about the proportion of variance in the scaled score accounted for in the sample. Performance was relatively consistent across the decade studied; therefore, results are presented as an average. Variability of the mean scaled scores is indicated by whiskers, and points outside the whiskers represent outliers (see Fig. 2).

6.2 Scaled score

Table 2 and Fig. 2 present results from the analysis of the Praxis® GSCKT as a whole, as represented by average scaled score, and offer insight into research question 1. Undergraduate major (Fig. 2) explained 11% of the overall variance (Table 2) in the GSCKT. Test-takers with physical science degrees demonstrated the highest performance on the assessment, followed by Earth & space science, biology, and other STEM majors, with average scaled scores of 175, 171, 167, and 166 respectively. Other STEM included majors such as engineering, mathematics, and computer science. Non-STEM majors demonstrated the lowest performance on the assessment. Ethnicity (Fig. 2) explained 7% of the overall variance (Table 2) in the assessment; there are differences in achievement between White and Black or Hispanic test-takers. The greatest variability was found among Black and Hispanic test-takers, with average scaled scores of 144 and 158 respectively. Over the decade studied, the mean scaled score of White examinees exceeded that of Black examinees by 20 scaled points and that of Hispanic examinees by 5 scaled points. In order to determine the extent to which the assessment serves as a barrier to the teaching field, additional information, including other interacting factors, is needed about the states in which Black examinees are likely to test. Gender explained 6% of the overall variance (Table 2) in the GSCKT, with males scoring an average of 8 scaled points higher than females within the study sample.

6.3 Category analysis

Table 2 presents results from the category analysis of the Praxis® GSCKT. To provide additional context for research question 2, our results and analysis focus on the Physical Science, Life Science, and Earth & Space Science categories because those most closely align with the undergraduate majors represented. Life Science and Earth & Space Science topics each comprised 20% of the exam. Estimated percent correct for Life Science and Earth & Space Science was 75% and 67%, respectively. Physical Science consists of questions assessing chemistry and physics topics. While it makes up the largest portion of the exam at 38%, it had the lowest estimated percent correct (64%).

The ANOVA model presented in Table 3 was developed as part of research question 3. Our correlational analysis of the stepwise linear regression at the category level revealed several statistically significant relationships. Table 3 presents examinee characteristics most strongly correlated with category performance on the Praxis® GSCKT. For the three major categories assessed (Table 3), the reported F values and accompanying p < 0.0001 values confirm the significance of the relationship between demographic variables represented within the model and score variability at the category level. The large η² effect sizes presented in Table 3 indicate strong relationships between reported demographic variables and category level performance. These data were further analyzed at the category level to make comparisons in scaled points lost and are presented graphically in Fig. 3.

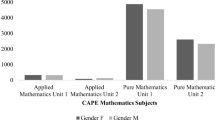

Undergraduate major, ethnicity, and gender were the three characteristics most strongly associated with performance in Physical Science (Fig. 3) explaining 12.1%, 4.4%, and 3.3% of the total variance (Table 3, bold values) at the category level. The fewest scaled points were lost by Physical science majors (11.9) who outperformed non-STEM majors by 12 scaled points. White, Hispanic, and Black test-takers lost an average of 18.6, 19.9 and 25.8 scaled points respectively. Male test-takers lost 3 fewer scaled points than female test-takers.

Undergraduate major, ethnicity, and undergraduate GPA were most strongly associated with performance in the Life Science category of the assessment (Fig. 3) explaining 11.7%, 3.5%, and 1.7% of the total variance (Table 3, bold values). Biology (4.8) and physical science (7.0) majors lost the fewest scaled points. White, Hispanic, and Black test-takers lost an average of 6.2, 6.5, and 9.4 scaled points respectively. Test-takers with undergraduate GPAs of 3.5–4.0 lost an average of 5.8 scaled points, outperforming those with undergraduate GPAs below 2.99 (7.5 scaled points).

In the category assessing Earth & Space Science (Fig. 3), undergraduate major, ethnicity, and gender together explained 13.8% of the overall variance (Table 3, bold values), with undergraduate major accounting for 4.7% of the overall variance. ESS (4.9) and physical science (7.9) majors lost the fewest scaled points. In alignment with the other categories and the test as a whole, non-STEM majors lost the most points (10.0). White test-takers lost an average of 8.3 scaled points, Hispanic test-takers lost an average of 10.1 scaled points, and Black test-takers lost an average of 12.8 scaled points. Males and females performed similarly, losing an average of 7.6 and 9.6 scaled points respectively.

7 Limitations

While this study presents one of the largest-scale analyses of general science teacher CK, it should be noted that it is limited to those states that administer the Praxis® GSCKT and does not support an overall generalization about any demographic group. The deidentified nature of the survey data does not allow for investigation of factors that impact preservice and early career teachers. Examinees’ self-reported personal and professional characteristics may also have contributed to limitations within the study. Data presented in this study is limited to 2006–2016; as a result, there may have been changes in test-taker populations and performance on the assessment since that time.

8 Discussion & implications for practice

While general science teachers often earn degrees to specialize in one science content area, they are responsible for demonstrating foundational knowledge across disciplines. Understanding how science teachers' knowledge progresses over time is essential as professional developers design and facilitate targeted learning experiences [35]. Our findings revealed differences in performance on the assessment as a whole and within sub-discipline categories most commonly associated with both professional characteristics including undergraduate major and undergraduate GPA and personal characteristics such as gender and ethnicity. The estimated percent correct (Table 3) was lowest for the Physical Science category. This category combines chemistry and physics topics, thus warranting details about the questions and test-takers themselves in order to offer context about performance.

Ethnic representation of the testing population did not match the overall makeup of the United States within the testing window (US Census Bureau, 2018): those who identify as Black or Hispanic make up 13.4% and 18.3% of the US population, respectively, but only 8.3% and 2.5% of the population studied.

Across Physical Science, Life Science, and Earth & Space Science categories, test-takers demonstrated strongest performance in the category that best aligned with their undergraduate major (Fig. 3). Examinees across disciplines lost the fewest scaled points and performed most similarly in the category assessing Life Science topics.

As seen in previous research [29,30,31,32,33], males outperformed females on the assessment as a whole and across categories. Although Black-White and Hispanic-White DIF analyses identified relatively few Category C questions per test form (Supplemental Table C), similar trends were identified in regard to ethnicity, with White test-takers scoring above their Black and Hispanic counterparts.

8.1 Recruitment & retention

With the growing science teacher shortage and high teacher turnover, pre-service and in-service teachers must be supported in order to promote confidence in teaching. Although many undergraduates enrolled in STEM majors may not have considered education as a career option, it is critical to expose them to the field of teaching early in their post-secondary educational program. Programs are encouraged to offer inquiry-based learning through early field experiences [36, 37]. Identification of students with an affinity towards STEM disciplines as early as high school can help strengthen these efforts. Placing preservice teachers with strong mentors during field experience will strengthen recruitment efforts and facilitate development of effective science educators [38]. In this way, preservice teachers will be more likely to include coursework that aligns with state certification requirements as they progress in their studies [38]. We assert that these early exposures will also facilitate diversification of the field.

8.2 Professional learning

Middle school science teachers with CK across disciplines are able to achieve larger gains in student achievement than those with gaps in CK and accompanying understanding of common misconceptions [2]. Understandably, test takers demonstrated strongest performance in categories aligned with their academic backgrounds. Coordination between education reformers including researchers, teacher educators, policymakers, and administrators is encouraged with a focus on supporting CK as part of PCK [2, 39, 40]. We present a call to action for teacher preparation program faculty to collaborate with associated science departments at their institutions to ensure standardization of coursework for licensure. Building and district administrators are encouraged to support professional learning communities (PLCs) whereby teachers in the field have agency in the direction of collaboration focused on student outcomes through improved teacher practice. Central to this work is science CK and its impact on PCK [1].

Data availability

In order to preserve the privacy of test-takers within the study, raw data are not publicly available. Deidentified data are available upon request.

Abbreviations

- CK: Content knowledge
- GSCKT: General science content knowledge test
- PL: Professional learning
- STEM: Science, technology, engineering, mathematics
- ETS: Educational Testing Service
- DIF: Differential item functioning
- PCK: Pedagogical content knowledge
- ANOVA: Analysis of variance
- GPA: Grade point average

References

Mesa JC, Pringle RM. Change from within: middle school science teachers leading professional learning communities. Middle School J. 2019;50(5):5–14. https://doi.org/10.1080/00940771.2019.1674767.

Sadler PM, Sonnert G, Coyle HP, Cook-Smith N, Miller JL. The influence of teachers’ knowledge on student learning in middle school physical science classrooms. Am Educ Res J. 2013;50(5):1020–49.

Goldhaber D, Hansen M. Race, gender, and teacher testing: How informative a tool is teacher licensure testing? Am Educ Res J. 2010;47(1):218–51.

National Council on Teacher Quality. The All-Purpose Science Teacher: An Analysis of Loopholes in State Requirements for High School Science Teachers. Washington: ERIC Clearinghouse; 2010.

Nixon RS, Luft JA, Ross RJ. Prevalence and predictors of out-of-field teaching in the first five years. J Res Sci Teach. 2017;54(9):1197–218.

Shah L, Jannuzzo C, Hassan T, Gadidov B, Ray HE, Rushton GT. Diagnosing the current state of out-of-field teaching in high school science and mathematics. PLoS ONE. 2019;14(9): e0223186.

Educational Testing Service. Technical manual for the Praxis® tests and related assessments. Princeton: Educational Testing Service; 2018.

Educational Testing Service. The Praxis® Study Companion General Science (5436). Princeton: Educational Testing Service; 2022.

Zieky M. A DIF primer. Princeton: Educational Testing Service; 2003.

Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J Roy Stat Soc: Ser B (Methodol). 1995;57(1):289–300.

Desimone LM. Improving impact studies of teachers’ professional development: toward better conceptualizations and measures. Educ Res. 2009;38(3):181–99.

Keller MM, Neumann K, Fischer HE. The impact of physics teachers’ pedagogical content knowledge and motivation on students’ achievement and interest. J Res Sci Teach. 2017;54(5):586–614.

Cochran-Smith M. A tale of two teachers. Kappa Delta Pi Record. 2012;48(3):108–22. https://doi.org/10.1080/00228958.2012.707501.

Lotter C, Smiley W, Thompson S, Dickenson T. The impact of a professional development model on middle school science teachers’ efficacy and implementation of inquiry. Int J Sci Educ. 2016;38(18):2712–41.

Opfer VD, Pedder D. Conceptualizing teacher professional learning. Rev Educ Res. 2011;81(3):376–407.

Menter I, McLaughlin C. What do we know about teachers’ professional learning. Making a difference: turning teacher learning inside out. Cambridge: Cambridge University Press; 2015. p. 31–52.

Ha M, Baldwin BC, Nehm RH. The long-term impacts of short-term professional development: science teachers and evolution. Evol Educ Outreach. 2015;8(1):1–23.

Beauchamp C, Thomas L. Understanding teacher identity: an overview of issues in the literature and implications for teacher education. Camb J Educ. 2009;39(2):175–89.

Polizzi SJ, Zhu Y, Reid JW, Ofem B, Salisbury S, Beeth M, Rushton GT. Science and mathematics teacher communities of practice: social influences on discipline-based identity and self-efficacy beliefs. Int J STEM Educ. 2021;8(1):1–18.

Cheruvu R, Souto-Manning M, Lencl T, Chin-Calubaquib M. Race, isolation, and exclusion: what early childhood teacher educators need to know about the experiences of pre-service teachers of color. Urban Rev. 2015;47(2):237–65.

Mensah FM, Jackson I. Whiteness as property in science teacher education. Teach Coll Rec. 2018;120(1):1–38.

National Research Council. A framework for K-12 science education: Practices, crosscutting concepts, and core ideas. Washington: National Academies Press; 2012.

Jones TR, Burrell S. Present in class yet absent in science: The individual and societal impact of inequitable science instruction and challenge to improve science instruction. Sci Educ. 2022;106(5):1032–53.

Ball DL. Bridging practices: Intertwining content and pedagogy in teaching and learning to teach. J Teach Educ. 2000;51(3):241–7.

Minor EC, Desimone L, Lee JC, Hochberg ED. Insights on how to shape teacher learning policy: the role of teacher content knowledge in explaining differential effects of professional development. Educ Policy Analysis Archives/Archivos Analíticos de Políticas Educativas. 2016;24:1–34.

Shulman LS. Those who understand: Knowledge growth in teaching. Educ Res. 1986;15(2):4–14.

Kartal T, Öztürk N, Yalvaç HG. Misconceptions of science teacher candidates about heat and temperature. Procedia Soc Behav Sci. 2011;15:2758–63.

Kennedy MM. How does professional development improve teaching? Rev Educ Res. 2016;86(4):945–80.

Ndembera R, Hao J, Fallin R, Ray HE, Shah L, Rushton GT. Demographic factors that influence performance on the praxis earth and space science: content knowledge test. J Geosci Educ. 2021. https://doi.org/10.1080/10899995.2020.1813866.

Ndembera R, Ray HE, Shah L, Rushton GT. Analysis of category level performance on the Praxis® earth and space science: content knowledge test: implications for professional learning. J Geosci Educ. 2022. https://doi.org/10.1080/10899995.2022.2138067.

Shah L, Hao J, Rodriguez CA, Fallin R, Linenberger-Cortes K, Ray HE, Rushton GT. Analysis of Praxis physics subject assessment examinees and performance: who are our prospective physics teachers? Phys Rev Phys Educ Res. 2018;14(1):010126.

Shah L, Hao J, Schneider J, Fallin R, Linenberger Cortes K, Ray HE, Rushton GT. Repairing leaks in the chemistry teacher pipeline: a longitudinal analysis of Praxis chemistry subject assessment examinees and scores. J Chem Educ. 2018;95(5):700–8.

Shah L, Schneider J, Fallin R, Linenberger Cortes K, Ray HE, Rushton GT. What prospective chemistry teachers know about chemistry: an analysis of praxis chemistry subject assessment category performance. J Chem Educ. 2018;95(11):1912–21.

Rafferty A, Walthery P, King-Hele S. Analysing change over time: repeated cross sectional and longitudinal survey data. 2015.

Schneider RM, Plasman K. Science teacher learning progressions: a review of science teachers’ pedagogical content knowledge development. Rev Educ Res. 2011;81(4):530–65.

Luft JA, Wong SS, Semken S. Rethinking recruitment: The comprehensive and strategic recruitment of secondary science teachers. J Sci Teacher Educ. 2011;22(5):459–74.

Taskin-Can B. careers with their attitudes and perceptions about the profession. The purpose of this study is to identify how a four-semester teacher education program. J Baltic Sci Educ. 2011;10(4):219–28.

Dailey D, Bunn G, Cotabish A. Answering the call to improve STEM education: a STEM teacher preparation program. J National Assoc Alternative Certification. 2015;10(2):3–16.

Desimone LM, Bartlett P, Gitomer M, Mohsin Y, Pottinger D, Wallace JD. What they wish they had learned. Phi Delta Kappan. 2013;94(7):62–5.

Ball DL, Thames MH, Phelps G. Content knowledge for teaching: what makes it special. J Teach Educ. 2008;59(5):389–407.

Author information

Contributions

GR and HR are the co-principal investigators for the project (NSF Awards #1557292 and #1557285); they procured NSF funding and oversaw project design, analysis, and interpretation of data. RN contributed to project design, analysis, and interpretation of data, and facilitated the writing, drafting, and revision of the manuscript. LS contributed to project design and initial analysis.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ndembera, R., Ray, H.E., Shah, L. et al. Which factors contribute to standardized test scores for prospective general science teachers: an analysis of the PraxisⓇ general science content knowledge test. Discov Educ 3, 25 (2024). https://doi.org/10.1007/s44217-024-00109-7

DOI: https://doi.org/10.1007/s44217-024-00109-7