Abstract

Seismic damage to building services systems, that is, mechanical, electrical, and plumbing systems in buildings related to energy and indoor environments, affects the functionality of buildings. Assessing post-earthquake functionality is useful for enhancing the seismic resilience of buildings via improved design. Such assessments require a model for predicting the time required to restore building services. This study analyzes the downtime data for 250 instances of damage to building services components caused by the 2016 Kumamoto earthquake in Japan, presumably obtained from buildings with minor or no structural damage. The objectives of this study are (1) to determine the empirical downtime distribution of building services components and (2) to assess the dependence of the downtime on explanatory variables. A survival analysis, which is a statistical technique for analyzing time-to-event data, reveals that (1) the median downtime of building services components was 90 days and, 7 months after the earthquake, the empirical non-restoration probability was approximately 32%, (2) the services type and the building use are explanatory variables having a statistically significant effect on the downtime of building services components, (3) the log-logistic regression model reasonably captures the trend of the restoration of building services components, (4) medical and welfare facilities and hotels restored building services components relatively quickly, and (5) the 7-month restoration probability was observed to be highest for electrical systems, followed by sanitary systems, then heating, ventilation, and air conditioning systems, and finally life safety systems. These results provide useful information to support the resilience-based seismic design of buildings.

Introduction

The enhancement of the seismic resilience of buildings is increasingly receiving attention in response to the significant consequences of large earthquakes [1]. As demonstrated by recent large earthquakes [2,3,4,5,6], damage to buildings not only results in casualties and direct economic losses but also disrupts functionality, resulting in long-term impacts on communities. Even if there is no serious damage done to the structural components, damage to nonstructural components, including equipment and systems, affects the functionality of buildings [7, 8]. These experiences have encouraged performance-based seismic designs of buildings to include assessments of the post-earthquake functional loss and recovery [1, 9,10,11]. Such seismic resilience quantifications of buildings can ensure the goal of functional recovery, in addition to the conventional goal of life and structural safety; however, such quantifications are still challenging compared with those of infrastructure (lifelines and networks), which have been extensively performed [e.g., 12,13,14,15,16,17].

As nonstructural building components, building services constitute systems essential to the continuous use of buildings following an earthquake. There are a wide variety of building services, including electrical; heating, ventilation, and air conditioning (HVAC); sanitary; vertical transport; and life safety (i.e., fire safety in indoor environments [18]) systems. Numerous studies [19,20,21,22,23], including full-scale experiments, numerical analyses, and earthquake damage surveys, have demonstrated that building services components are typically vulnerable to building shaking, yet they can be protected via improved building design [24]. A post-earthquake functionality evaluation of the building services is therefore an important element in measuring the seismic resilience of buildings [25] to support engineering decision-making.

In post-earthquake functionality analyses, the time to recovery of systems, also called the downtime, needs to be appropriately estimated and is typically defined as the time required to restore a specific system or set of systems to a normal level of performance [26]. Accordingly, several functional recovery models have been developed for complex systems in buildings [8, 11, 24, 27,28,29,30,31]. Most of these models [8, 11, 24, 27, 30, 31] use fault tree approaches to consider logical relationships between the damage to structural and nonstructural components and the overall impact on the building functionality, calculating the probabilistic downtime based on the FEMA P-58 component fragility and repair-time database [32, 33] or the REDi methodology [34]. However, the downtime of building systems includes both rational and irrational components [35], with the latter components, which strongly relate to decision-making, having a highly uncertain nature. Specifically, the rational components include the time required to perform the actual repair of damaged components whereas the irrational components include the time required to perform inspections, secure funding for repair work, commit engineers to develop repair strategies, obtain permits, and hire and mobilize repair workers. As a result of the unpredictable nature of this irrational component, the downtime can significantly vary depending on the earthquake event and region. However, there are insufficient data available to discuss such inter-event or regional variability. In addition, it is unclear whether the FEMA P-58 and REDi methodologies can explain the actual downtime after an earthquake, and validation data is needed to discuss this. Therefore, conducting empirical case studies is essential; that is, the actual downtime distribution for each event needs to be investigated individually.

Accordingly, this study investigates the downtime of building services components damaged by earthquakes to provide information to support the practice of the resilience-based seismic design of buildings. The downtime is here defined as the time required following an earthquake to restore building services components to pre-earthquake conditions or near pre-earthquake conditions via repair or replacement. The key objectives of the study are (1) to determine the empirical distribution of the downtime using data from a past large earthquake and (2) to assess the dependence of the downtime on explanatory variables. To achieve these objectives, this study focuses on the 2016 Kumamoto earthquake in Japan using building services restoration survey data provided by the Japanese Association of Building Mechanical and Electrical Engineers [36]. Survival analysis methods, which are commonly used for statistical analyses of time-to-event data, are employed. The analysis investigates the effects of four factors on the downtime, i.e., the building use, services type, restoration type, and seismic intensity.

Note that this study focuses on a specific region and time frame and the expected results cannot be generalized to other events, because region-specific conditions exist. Specifically, a case study specific to the Kumamoto earthquake in Japan is conducted in this study. Nevertheless, the methodology used here is applicable to different events and may enable comparisons among them. In addition, the analysis is limited to the total duration of the restoration processes because detailed information such as the rational and irrational downtime components were not included in the survey data; therefore, various uncertainties involved in the restoration processes are incorporated into the downtime. Furthermore, the survey data include data for which the exact downtime is unknown as a result of censoring (i.e., a component was not restored until the end of the survey); therefore, the determined downtime distribution is limited to a specific period after the earthquake and the distribution outside this period needs to be further investigated. However, even these limited survey data are valuable in terms of deepening our understanding of the actual situation.

The remainder of this paper is organized as follows. Section 2 outlines the building services restoration survey data and the preprocessing for the survival analysis. Section 3 describes the survival analysis procedures and the implemented models. Section 4 discusses the analysis results. Finally, Section 5 presents the study conclusions and potential future work.

Data

The building services restoration survey data [36] concerned 326 instances of damage to building services components as a result of the 2016 Kumamoto earthquake, which struck Kumamoto, Japan, and its surrounding areas on April 16, 2016 (1:25 a.m. local time) with a magnitude of 7.3 (Fig. 1). The Kumamoto region, situated in the center of Kyushu Island in the southwest of Japan, is centered on Kumamoto City, the capital of Kumamoto Prefecture, and encompasses the surrounding municipalities. Kumamoto City had a population of over 740,000 and comprised urban areas with numerous residential and commercial buildings in the center, surrounded by agricultural production areas. The data were collected for 84 buildings by the Japanese Association of Building Mechanical and Electrical Engineers from October to November in 2016 via a questionnaire survey. The association asked its members to report on the buildings to which they belonged using self-administered questionnaires. The author was provided with the data in a form that did not include information concerning the building name and location, making it impossible to identify the buildings. As summarized in Table 1, the survey data included (1) information concerning each instance of damage, including the component name, services type, damage condition, number of days required for restoration (i.e., downtime), and restoration type, and (2) information concerning the building in which the instance occurred, including the use, construction year and type, number of stories, and estimated intensity of ground shaking experienced by the building according to the Japan Meteorological Agency (JMA) seismic intensity scale. Note that the JMA seismic intensity scale [37], which is commonly used in Japan, categorizes the intensity of ground motion caused by earthquakes into 10 degrees, comprising 0 (imperceptible), 1, 2, 3, 4, 5 lower, 5 upper, 6 lower, 6 upper, and 7. For seismic stations, this intensity is determined from observed three-component acceleration waveforms by applying specific filters.

Estimated Japan Meteorological Agency (JMA) seismic intensity distribution for the 2016 M7.3 Kumamoto earthquake provided by QuiQuake [49]

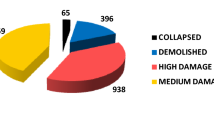

Information concerning structural damage to the buildings was not included in the survey data because it was not requested by the questionnaire. As shown in Fig. 2, steel (S) structures and reinforced concrete (RC)/steel-framed reinforced concrete (SRC) structures account for approximately 70% and 24%, respectively, of the buildings surveyed. Approximately 93% of the buildings, excluding those with an unknown year of construction, were constructed in or after 1983, ensuring seismic performance in line with the Japanese building code, which was revised in 1981. According to the statistics of the building damage caused by the 2016 Kumamoto earthquake [38], even in the area around the seismic station with a JMA seismic intensity of 7, the ratio of the buildings diagnosed as having complete or major structural damage to those constructed after the building code revision was 8% for S structures (n = 219) and 0% for RC structures (n = 37). In addition, 60% of the S structures and 89% of the RC structures constructed after the 1981 revision experienced no structural damage. Considering that the buildings in the survey data were subjected to ground motions with intensities lower than 7 (approximately 85% corresponded to 6 upper or 6 lower), as shown in Fig. 2, minor or no structural damage was highly likely for the surveyed buildings. Namely, the results of this study are expected to represent the services restoration tendency specific to buildings with minor or no structural damage. Therefore, the repair of structural components may not have had a significant impact on the downtime of the building services components.

The component name and damage condition constituted open-ended responses in the survey. Response examples included “a concealed ceiling-packaged air conditioner and surrounding pipes” and “falling as a result of failed ceiling suspension support material.” The services and restoration types corresponded to such open-ended information. The services types were categorized as electrical (including power receiving, transforming, storage, emergency generation, and distribution), HVAC, sanitary (including water supply, drainage, and plumbing), vertical transport, or life safety (including fire protection) systems. The restoration types were categorized as part replacement, equipment repair, equipment replacement, fixed-part repair, or total system replacement; these types can be considered as reflecting differences in the level of damage to the building services components.

The downtime was adopted as a response variable taking the date of the 2016 Kumamoto earthquake as the origin and a single day as the minimum unit. As shown in Fig. 3, the downtime data included right-censored data, for which a component was not restored until a respondent completed the questionnaire (i.e., the exact downtime was unknown). These censored data were incorporated into the analysis and were not treated as missing data because the survival analysis methods described below can deal with censored data.

Specifically, given a sample of \(n\) instances of damage to building services components, the \(i\) -th instance is associated with the exact downtime \({T}_{i}^{*}\), which is assumed to be a non-negative continuous random variable, and the right-censoring time \({C}_{i}\), which is assumed to be a non-negative fixed value, for \(i=1,\dots ,n\). The minimum of the exact downtime and the censoring time \({T}_{i}=\text{min}\left({T}_{i}^{*},{C}_{i}\right)\) can be observed. To represent whether the observed time is \({T}_{i}={T}_{i}^{*}\) or \({T}_{i}={C}_{i}\), the censoring indicator \({\Delta }_{i}\) is defined as

\[{\Delta }_{i}=\begin{cases}1 & \text{if } {T}_{i}^{*}\le {C}_{i}\\ 0 & \text{if } {T}_{i}^{*}>{C}_{i}\end{cases} \tag{1}\]

and a set of downtime data is therefore expressed as \(\left({\Delta }_{1},{T}_{1}\right),\dots ,\left({\Delta }_{n},{T}_{n}\right)\).

Downtime data stating “under restoration work,” “under suspension,” or “not restored” were treated as right-censored data (\({\Delta }_{i}=0\)). For these instances, the censoring time \({C}_{i}\) was fixed at 210 days, which was the approximate number of days until the end of the survey, because the questionnaire completion date was unknown. Similarly, downtime data stating “unknown” or nothing (i.e., empty data), which accounted for approximately 28% of all instances, were treated as right-censored data (\({\Delta }_{i}=0\),\({C}_{i}=210\)), even though it is possible that the downtime had not been recorded or recalled despite the component being restored before completion of the questionnaire. Including these data with different interpretations as right-censored data was a conservative assumption made to avoid an underestimation of the downtime as a result of the exclusion of missing data. Data roughly stating the downtime were converted into unique values, e.g., “2 months” was converted to “60 days.”
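
The encoding rules above can be sketched in a few lines of Python. This is only an illustrative sketch, not the preprocessing script used in the study: the function name `encode_downtime`, the accepted response spellings, and the 7-day week are assumptions, whereas the 210-day censoring time and the 30-day month follow the text.

```python
import re

CENSOR_DAY = 210  # approximate number of days from the earthquake to the end of the survey

# Responses treated as right-censored in the text (not restored, unknown, or empty).
CENSORED_RESPONSES = {"under restoration work", "under suspension",
                      "not restored", "unknown", ""}

# Conversion of roughly stated durations; "month" = 30 days follows the text,
# "week" = 7 days is an assumption made for this sketch.
DAYS_PER_UNIT = {"day": 1, "week": 7, "month": 30}


def encode_downtime(response):
    """Return (delta, time): delta = 1 if restoration was observed, 0 if right-censored."""
    text = (response or "").strip().lower()
    if text in CENSORED_RESPONSES:
        return 0, CENSOR_DAY
    match = re.fullmatch(r"(\d+)\s*(day|week|month)s?", text)
    if match is None:
        raise ValueError(f"unrecognized downtime response: {response!r}")
    return 1, int(match.group(1)) * DAYS_PER_UNIT[match.group(2)]
```

For example, `encode_downtime("2 months")` gives an observed downtime of 60 days, while `encode_downtime("unknown")` gives a censored record at 210 days.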

Four items with categorical data, i.e., the services type, restoration type, building use, and JMA seismic intensity, were considered as possible explanatory variables. Of the remaining items with categorical data, the construction year and type were not considered because, as mentioned above, these factors are linked to the possibility that the buildings considered in this study had similar structural damage states (minor or no structural damage). The number of stories, which is related to the size of the building, was not considered because the number of stories alone cannot characterize the impact of the building size on the downtime, and essential information such as the floor area was not included in the survey data. Figure 4 summarizes the number of instances that were restored and censored for each item considered. The censored instances accounted for approximately 36% of all instances but their percentages ranged from 0 to 95% when the instances were grouped by item, even though some groups had few instances. The number of instances was roughly balanced among the different groups for some items, such as the services type and building use, whereas instances were concentrated in a specific group for other items, such as the restoration type. A total of 250 instances were subjected to analysis after excluding groups with 10 or fewer instances. These groups were excluded in order to measure empirical survival functions (described below) on the order of 10% or less.

Methods

Survival analysis methods were used to analyze the downtime data. These methods consist of a collection of statistical methods for investigating the time until an event of interest occurs as a response variable and are able to deal with censored data. Such methods are often used in clinical trials to analyze time-to-death data and to compare the capacities of different treatments. In this study, the event of interest was restoration; however, key terminologies used in survival analyses, such as the survival function and the hazard function, were adopted as-is in this paper. As described in Sect. 2, the response variable \({T}^{*}\) was defined as a non-negative continuous random variable representing the time to the event of an individual instance from a homogeneous population. Meanwhile, all explanatory variables considered were operationalized as categorical variables; specifically, categorical data on a nominal scale were expressed using dummy variables of 0 or 1.

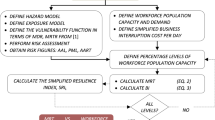

The analysis procedures adopted in this study were as follows. First, the probability distribution of the downtime was specified using the survival function, the probability density function, and the hazard function. Next, the downtime data were summarized using the Kaplan–Meier curve [39], which is a non-parametric estimate of the survival function. To narrow down the explanatory variables, the estimated curves were then compared individually between groups for each explanatory variable using the log-rank test, which is a non-parametric test widely used in assessing the statistical significance of a difference in survival distributions. The dependence of the downtime on the explanatory variables was then assessed using parametric regression models, which predefine the form of the survival function and allow its parameters to be a function of a single or multiple explanatory variables. Finally, the best regression model was determined using Akaike’s information criterion (AIC) [40] to identify the explanatory variables that had a statistically significant effect on the downtime. These procedures were performed using R [41], which is a free software environment widely used for statistical computing and graphics. In particular, plotting of the Kaplan–Meier curve and the log-rank test were performed using EZR [42], which is a modified graphical user interface for R designed to add statistical functions used in biostatistics, and the parametric regression was performed using flexsurv [43], which is an R package for the fully parametric modeling of time-to-event data.
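
To illustrate the group comparison step, the following is a minimal Python sketch of the log-rank test for two groups. It is not the EZR routine used in the study, which also handles more than two groups; the function name and argument layout are assumptions made for illustration.

```python
import math


def logrank_two_groups(times, events, groups):
    """Two-group log-rank test; returns (chi-square statistic, p-value).

    times: observed times; events: 1 = event observed, 0 = right-censored;
    groups: 0 or 1 for each instance.
    """
    event_times = sorted({t for t, e in zip(times, events) if e == 1})
    observed = expected = variance = 0.0
    for t in event_times:
        # Numbers at risk just before t, overall and in group 1.
        n = sum(1 for u in times if u >= t)
        n1 = sum(1 for u, g in zip(times, groups) if u >= t and g == 1)
        # Events occurring exactly at t, overall and in group 1.
        d = sum(1 for u, e in zip(times, events) if u == t and e == 1)
        d1 = sum(1 for u, e, g in zip(times, events, groups)
                 if u == t and e == 1 and g == 1)
        observed += d1
        expected += d * n1 / n
        if n > 1:
            variance += d * (n1 / n) * (1 - n1 / n) * (n - d) / (n - 1)
    if variance == 0.0:
        return 0.0, 1.0  # no information to separate the groups
    chi2 = (observed - expected) ** 2 / variance
    # Upper tail probability of a chi-square variable with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value
```

A small p-value indicates that the two survival distributions differ more than would be expected by chance.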

Key concepts in the analysis are briefly described in the following subsections. Additional details can be found in the literature [44].

Time-to-event distribution

An essential element in survival analyses is the survival function, which is commonly used to characterize the time-to-event distribution. The survival function \(S\left(t\right)\) is defined by the probability of the time to the event \({T}^{*}\) exceeding a given value \(t\); i.e., the probability that the event has not occurred before at a given time:

\[S\left(t\right)=P\left({T}^{*}>t\right) \tag{2}\]

where \(S\left(t\right)\) is initiated at a probability of 1 and monotonically decreases with time. In this study, the survival function gives the non-restoration probability at a given time.

The cumulative distribution function of the time to the event \(F\left(t\right)\) is associated with the survival function according to:

\[F\left(t\right)=P\left({T}^{*}\le t\right)=1-S\left(t\right) \tag{3}\]

where \(F\left(t\right)\) is initiated at a probability of zero and monotonically increases with time. In this study, the cumulative distribution function gives the restoration probability at a given time; this is often called the restoration curve in disaster research.

An alternative representation of the time-to-event distribution is the hazard function, which gives the instantaneous rate of occurrence of the event. The hazard function \(\lambda \left(t\right)\) is defined as:

\[\lambda \left(t\right)=\underset{dt\to 0}{\text{lim}}\frac{P\left(t\le {T}^{*}<t+dt\mid {T}^{*}\ge t\right)}{dt} \tag{4}\]

where the numerator represents the conditional probability that the event will occur in the interval \(\left[t,t+dt\right)\), under the condition that it has not occurred before, and the denominator represents the width of the interval.

Using the probability density function \(f\left(t\right)\), Eq. (4) is rewritten as:

\[\lambda \left(t\right)=\frac{f\left(t\right)}{S\left(t\right)} \tag{5}\]

Because the survival function is a differentiable function written as \(S\left(t\right)={\int }_{t}^{\infty }f\left(t\right)dt\), Eq. (5) is rewritten as:

\[\lambda \left(t\right)=-\frac{1}{S\left(t\right)}\frac{dS\left(t\right)}{dt}=-\frac{d}{dt}\text{ln}S\left(t\right) \tag{6}\]

Solving this differential equation, \(S\left(t\right)\) is expressed using \(\lambda \left(t\right)\) alone as:

\[S\left(t\right)=\text{exp}\left(-{\int }_{0}^{t}\lambda \left(u\right)du\right) \tag{7}\]

Therefore, \(S\left(t\right)\), \(f\left(t\right)\), and \(\lambda \left(t\right)\) have a relationship whereby, once one is determined, the other two can also be determined.
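
As a numerical illustration of this relationship, the sketch below recovers the survival function and the density from a given hazard function by integrating the hazard with the trapezoidal rule. The function names are assumptions made for illustration, and the hazard is assumed to be finite at time zero.

```python
import math


def survival_from_hazard(hazard, t, steps=10_000):
    """Approximate S(t) = exp(-integral of hazard(u) from 0 to t) by the trapezoidal rule."""
    if t <= 0:
        return 1.0
    h = t / steps
    total = 0.5 * (hazard(0.0) + hazard(t))
    total += sum(hazard(i * h) for i in range(1, steps))
    return math.exp(-total * h)


def density_from_hazard(hazard, t):
    """f(t) = hazard(t) * S(t), which follows from hazard(t) = f(t) / S(t)."""
    return hazard(t) * survival_from_hazard(hazard, t)
```

With a constant hazard of 0.01 per day, for instance, the survival function reduces to the exponential form and `survival_from_hazard(lambda u: 0.01, 100.0)` is close to exp(-1).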

Empirical survival function

The Kaplan–Meier curve [39] is an estimate of the survival function without assuming that the time-to-event data follow specific distributions. This curve is often used to summarize time-to-event data including right-censoring in terms of the empirical survival function.

Consider a sample of \(n\) instances from a homogeneous population with an (unknown) survival function. Let \({T}_{(1)}<{T}_{(2)}<\cdots <{T}_{({J}_{n})}\) be the event-observed times (i.e., \({T}_{i}\) given \({\Delta }_{i}=1\)) sorted by size excluding ties. Suppose that \({d}_{j}\) instances are observed at \({T}_{(j)}\) and that \({m}_{j}\) instances are censored in the interval \(\left[{T}_{(j)},{T}_{(j+1)}\right)\). Let \({r}_{j}=\left({m}_{j}+{d}_{j}\right)+\left({m}_{j+1}+{d}_{j+1}\right)+\cdots +\left({m}_{{J}_{n}}+{d}_{{J}_{n}}\right)\) denote the number of instances at risk at a time just prior to \({T}_{(j)}\). The Kaplan–Meier curve \(\widehat {S} {(t)}\) is:

\[\widehat{S}\left(t\right)=\begin{cases}1 & \text{if } 0\le t<{T}_{(1)}\\ \prod_{j:{T}_{(j)}\le t}\left(1-\frac{{d}_{j}}{{r}_{j}}\right) & \text{if } {T}_{(1)}\le t\le {T}_{\text{max}}\end{cases} \tag{8}\]

where \({T}_{\text{max}}=\text{max}\left({T}_{1},{T}_{2},\dots ,{T}_{n}\right)\). Equation (8) is a step function that decreases at each event-observed time and never decreases to zero if \({m}_{{J}_{n}}>0\), where the largest time recorded \({T}_{\text{max}}\) is the censoring time. In this study, the Kaplan–Meier curve gives the empirical non-restoration probability at a given time.
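
The product-limit calculation described above can be sketched in Python as follows. This is a minimal illustration rather than the EZR implementation used in the study; the function names and the toy data in the usage note are assumptions.

```python
def kaplan_meier(times, events):
    """Return [(t_j, S_hat(t_j))] at each distinct event-observed time.

    times: observed times T_i; events: censoring indicators
    (1 = restoration observed, 0 = right-censored).
    """
    curve = []
    survival = 1.0
    for t in sorted({t for t, e in zip(times, events) if e == 1}):
        # r_j: instances still at risk just before t (observed or censored at t or later).
        at_risk = sum(1 for u in times if u >= t)
        # d_j: restorations observed exactly at t.
        restored = sum(1 for u, e in zip(times, events) if u == t and e == 1)
        survival *= 1.0 - restored / at_risk
        curve.append((t, survival))
    return curve


def survival_at(curve, t):
    """Evaluate the step function at time t (equal to 1 before the first event time)."""
    value = 1.0
    for t_j, s_j in curve:
        if t_j <= t:
            value = s_j
    return value
```

For a toy sample with restorations at 30, 60, 60, and 90 days and two instances censored at 210 days, the curve steps down to 5/6, 1/2, and 1/3 and never reaches zero, because the largest recorded time is a censoring time.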

Parametric regression models

Parametric regression modeling correlates the time to the event to explanatory variables using typical probability distributions by allowing the model parameters to be a function of the explanatory variables. In this study, the Weibull distribution and the log-logistic distribution, which are often used in survival analyses, were considered. Both distributions have two parameters taking any positive values; one, \(a\), determines the shape of the distribution, called the shape parameter, and the other, \(b\), determines the location of the distribution, called the location parameter. They thereby flexibly model the time to the event. In particular, the Weibull distribution can deal with a hazard function that is monotonically decreasing for \(a<1\), constant over time for \(a=1\), and monotonically increasing for \(a>1\), whereas the log-logistic distribution can deal with a hazard function that is monotonically decreasing for \(a\le 1\) and monotonically increases initially, reaches a maximum at a specific time, and then decreases to zero (i.e., a unimodal function) for \(a>1\).
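
The hazard shapes described above can be checked numerically with a short Python sketch. It assumes the common parameterization in which the time is divided by \(b\) and raised to the power \(a\) (the form used by many survival analysis packages); the function names are assumptions made for illustration.

```python
def weibull_hazard(t, a, b):
    """Weibull hazard with shape a and parameter b (assumed parameterization)."""
    return (a / b) * (t / b) ** (a - 1)


def loglogistic_hazard(t, a, b):
    """Log-logistic hazard with shape a and parameter b (assumed parameterization)."""
    return (a / b) * (t / b) ** (a - 1) / (1.0 + (t / b) ** a)


def loglogistic_hazard_peak(a, b):
    """Time at which the log-logistic hazard is maximal (exists only for a > 1)."""
    if a <= 1:
        raise ValueError("the hazard decreases monotonically for a <= 1")
    # Setting the derivative of the hazard to zero gives (t / b) ** a = a - 1.
    return b * (a - 1) ** (1.0 / a)
```

Under this parameterization, a log-logistic hazard with \(a=2\) peaks exactly at \(t=b\), whereas a Weibull hazard with \(a=1\) is constant at \(1/b\).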

The probability density, hazard, and survival functions of the Weibull distribution are respectively expressed as:

\[f\left(t\right)=\frac{a}{b}{\left(\frac{t}{b}\right)}^{a-1}\text{exp}\left(-{\left(\frac{t}{b}\right)}^{a}\right) \tag{9}\]

\[\lambda \left(t\right)=\frac{a}{b}{\left(\frac{t}{b}\right)}^{a-1} \tag{10}\]

\[S\left(t\right)=\text{exp}\left(-{\left(\frac{t}{b}\right)}^{a}\right) \tag{11}\]

The probability density, hazard, and survival functions of the log-logistic distribution are respectively expressed as:

\[f\left(t\right)=\frac{\left(a/b\right){\left(t/b\right)}^{a-1}}{{\left(1+{\left(t/b\right)}^{a}\right)}^{2}} \tag{12}\]

\[\lambda \left(t\right)=\frac{\left(a/b\right){\left(t/b\right)}^{a-1}}{1+{\left(t/b\right)}^{a}} \tag{13}\]

\[S\left(t\right)=\frac{1}{1+{\left(t/b\right)}^{a}} \tag{14}\]

Assume that both the shape and location parameters depend on a vector of explanatory variables \(\mathbf{Z}=({Z}_{1},{Z}_{2},\dots ,{Z}_{p})\). Given that the parameters are defined to be positive, the logarithm of the parameters is modeled by a linear combination of explanatory variables such that

\[\text{ln}a={a}_{0}+{\mathbf{\beta} }{\prime}{\mathbf{Z}}^{\text{T}} \tag{15}\]

\[\text{ln}b={b}_{0}+{\mathbf{\beta} }^{^{\prime\prime} }{\mathbf{Z}}^{\text{T}} \tag{16}\]

where \({a}_{0}\) and \({b}_{0}\) are constants, \({\mathbf{\beta} }{\prime}=({{\beta }{\prime}}_{1},\dots ,{{\beta }{\prime}}_{p})\) and \({\mathbf{\beta} }^{^{\prime\prime} }=({{\beta }^{^{\prime\prime} }}_{1},\dots ,{{\beta }^{^{\prime\prime} }}_{p})\) are vectors of coefficients, and \({\text{T}}\) denotes the transpose.

The parameter values are estimated by maximizing the log-likelihood \(\text{ln}L\) with respect to the parameters \(\mathbf{\theta} =\left\{{a}_{0},{b}_{0},{\mathbf{\beta} }{^\prime},{\mathbf{\beta} }^{^{\prime\prime} }\right\}\). The likelihood for the parameters \(L\) is

\[L=\prod_{i=1}^{n}f{\left({T}_{i}\mid {a}_{i},{b}_{i}\right)}^{{\Delta }_{i}}S{\left({T}_{i}\mid {a}_{i},{b}_{i}\right)}^{1-{\Delta }_{i}} \tag{17}\]

where \({a}_{i}\) and \({b}_{i}\) relate to \(\mathbf{\theta}\) and \(\mathbf{Z}_{i}\) via Eqs. (15) and (16). Note that Eq. (17) is applicable when the censoring times are fixed. The log-likelihood \(\ln L\) can also be written concisely in terms of hazards [43].
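
A minimal Python sketch of the censored log-likelihood for the log-logistic model is given below. It is not the flexsurv implementation used in the study: it assumes the log-logistic form in which the time is divided by \(b\) and raised to the power \(a\), assumes that the logarithm of each parameter is an intercept plus a linear combination of 0/1 dummy variables, and uses illustrative function and argument names.

```python
import math


def loglogistic_log_density(t, a, b):
    """ln f(t) for the log-logistic distribution (assumed parameterization)."""
    return (math.log(a / b) + (a - 1) * math.log(t / b)
            - 2.0 * math.log1p((t / b) ** a))


def loglogistic_log_survival(t, a, b):
    """ln S(t) for the log-logistic distribution (assumed parameterization)."""
    return -math.log1p((t / b) ** a)


def log_likelihood(data, a0, b0, beta_a, beta_b):
    """Censored log-likelihood with log-linear shape and location parameters.

    data: iterable of (delta, time, z), where delta is the censoring indicator
    and z is a list of 0/1 dummy variables for one instance.
    """
    total = 0.0
    for delta, time, z in data:
        a = math.exp(a0 + sum(c * v for c, v in zip(beta_a, z)))
        b = math.exp(b0 + sum(c * v for c, v in zip(beta_b, z)))
        if delta == 1:
            # Restoration observed: the density contributes.
            total += loglogistic_log_density(time, a, b)
        else:
            # Right-censored: only the survival probability contributes.
            total += loglogistic_log_survival(time, a, b)
    return total
```

In practice, this function would be passed to a numerical optimizer that searches for the parameter values maximizing it; the optimization step is omitted here.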

The quality of the regression models is evaluated using the AIC [40] defined as:

\[\text{AIC}=-2\text{ln}{L}^{*}+2k \tag{18}\]

where \(\text{ln}{L}^{*}\) is the maximum log-likelihood and \(k\) is the number of parameters. Models with a smaller AIC are preferred: a larger maximum log-likelihood, which indicates a better fit to the data, lowers the AIC, whereas each additional parameter raises the penalty term because models with more parameters are more likely to overfit the data.
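
The model selection step can be sketched in Python as follows, using the standard definition of the AIC (minus twice the maximum log-likelihood plus twice the number of parameters). The function names and the dictionary layout are assumptions made for illustration.

```python
def aic(max_log_likelihood, n_parameters):
    """Akaike's information criterion: -2 ln L* + 2k."""
    return -2.0 * max_log_likelihood + 2.0 * n_parameters


def best_model(candidates):
    """Return the name of the candidate model with the smallest AIC.

    candidates: dict mapping a model name to (maximum log-likelihood, number of parameters).
    """
    return min(candidates, key=lambda name: aic(*candidates[name]))
```

A model with more parameters is selected only if its gain in maximum log-likelihood exceeds the number of parameters it adds.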

Results and discussion

Overall downtime distribution

First, to grasp the overall picture of the restoration of building services components, the Kaplan–Meier curve was determined without grouping the data (Fig. 5). The obtained curve demonstrates that there were appreciable delays in the restoration of the building services components relative to the restoration of the lifeline infrastructure, such as the restoration of the electrical power, water, and gas supply systems, which were almost completely restored within 5–25 days following the earthquake [45,46,47]. The extremely large variation in the downtime was manifested in the obtained curve. The median downtime of the building services components was 90 days. The empirical non-restoration probability was approximately 32% at the end of the survey, i.e., approximately one-third of the instances remained unrestored even 7 months after the earthquake. This prolonged downtime may have been related to complex circumstances, such as the difficulty of securing funding for repair work, in addition to the long time taken to mobilize repair workers. The slope of the obtained curve was initially steep and then decreased gradually with time, indicating that the empirical probability density function peaked at an early time.

Between-group differences in the downtime distribution

Next, to narrow down the explanatory variables, Kaplan–Meier curves were determined by grouping the data (Fig. 6). There was a clear group difference for the services type and the building use, in contrast with the case for the restoration type and the JMA seismic intensity. This result was statistically supported by the results of a log-rank test. That is, p-values determined from the log-rank statistic were 0.031 for the services type, 0.558 for the restoration type, \(1.03\times {10}^{-12}\) for the building use, and 0.559 for the JMA seismic intensity, indicating statistically significant differences between the groups for the services type and the building use; however, no statistically significant differences between the groups for the restoration type and the JMA seismic intensity were observed. Note that the log-rank test for the JMA seismic intensity indicates only that there was no statistically significant difference between the intensities of 6 upper and 6 lower; the possible difference from even lower intensities or from intensity 7 cannot be discussed here. The log-rank test for the restoration type suggests that the uncertainty in the time components related to decision-making may have played a more dominant role in the total duration distribution of the restoration processes, compared to the variability in the actual repair time of the building services components. However, the statistical power, i.e., the probability of the test detecting a statistically significant difference between two groups, was calculated to be up to 0.35 for the restoration type and 0.13 for the JMA seismic intensity, focusing on the empirical non-restoration probability at the end of the survey, even if the significance level is set at 10%. Therefore, the small overall sample size is also a possible reason why significant differences were not detected.

A notable tendency of the obtained curves for the services type was that the curve for the sanitary systems alone initially descended remarkably and subsequently did not change greatly, whereas the curve for the electrical systems was above that for the sanitary systems during the first 90 days and then subsequently lower, resulting in the non-restoration probability of the electrical systems being lowest at the end of the survey. This suggests that the highest priority in making decisions was the quick restoration of only the sanitary systems that were critical to resuming business operations, whereas the restoration of the remaining non-essential sanitary systems may have been postponed for various reasons.

The group comparison for the building use demonstrates that medical and welfare facilities dealt with the restoration of the building services components most quickly, indicating a high recovery ability in response to healthcare demands. Hotels quickly restored their building services components, indicating decisions to accommodate incoming disaster response workers in the affected areas. Meanwhile, restoration progressed slowly in commercial, entertainment, and production facilities and even more slowly in offices and educational facilities.

Statistically significant factors of the downtime

To comprehensively assess the dependence of the downtime on the services type and the building use, parametric regression models adopting either or both of these factors as explanatory variables were tested. The restoration type and the JMA seismic intensity were not considered as explanatory variables because, as previously stated, the p-values obtained via the log-rank test did not indicate statistically significant between-group differences. Regarding the building use, the educational facilities and hotels were excluded because of their small sample sizes. The regression results summarized in Table 2 show that a log-logistic model adopting both the services type and the building use as explanatory variables was the best model, having the smallest AIC. The AIC varied greatly depending on the combination of explanatory variables relative to the assumed probability distribution. The results reveal that the services type and the building use simultaneously had a statistically significant effect on the downtime of the building services components after the earthquake.

Figure 7 compares the best model predictions with the Kaplan–Meier curves. Although the data were not necessarily sufficient for a stratified analysis, the best regression model reasonably captured the trend of the restoration of the building services components. The model predictions suggest that (1) medical and welfare facilities restore building services components much more rapidly than commercial, entertainment, and production facilities and offices, (2) the restoration of sanitary systems is initially concentrated and subsequently stagnates, whereas the restoration of electrical systems is completed most quickly in the long term, and (3) decisions concerning the restoration of building services components are generally made in the order of priority of electrical, sanitary, HVAC, and life safety systems in the long term.

Restoration curves

Finally, the developed restoration model for the building services components was compared with the existing restoration model for lifeline infrastructure. As described in Sect. 3, the survival function determines the cumulative distribution function of the downtime \(F\left(t\right)=1-S\left(t\right)\), and \(F\left(t\right)\) is commonly used as the restoration curve. Figure 8 shows that the restoration curves of the building services components were shifted by many days to the right and had long right tails relative to the existing Japanese lifeline infrastructure restoration curves (calculated for a JMA seismic intensity of 6) [48], which are also regression models based on restoration data from past large earthquakes. In contrast to the lifeline infrastructure, which is almost completely restored within 1 month after a large earthquake, there were extremely large variations in the downtime in the restoration curves of the building services components. These results demonstrate that seismic damage to building services components results in the long-term functional stagnation of buildings; therefore, strategies to prevent damage to building services components and shorten the component downtime are important to improve the seismic resilience of buildings.

Comparison of the building services component restoration curves with the lifeline infrastructure restoration curves [48]
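The relation \(F\left(t\right)=1-S\left(t\right)\) can be made concrete with a minimal sketch of the Kaplan–Meier estimator for right-censored downtimes, followed by the conversion to an empirical restoration curve. The data and function names below are hypothetical and serve only to illustrate the calculation.

```python
def kaplan_meier(times, restored):
    """Kaplan-Meier estimate of the survival (non-restoration) function.

    times    : observed durations in days
    restored : True if restoration was observed, False if right-censored
    Returns a list of (t, S(t)) at each time with at least one restoration.
    """
    data = sorted(zip(times, restored))
    at_risk = len(data)
    surv = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        events = 0       # restorations observed at time t
        leaving = 0      # all observations leaving the risk set at time t
        while i < len(data) and data[i][0] == t:
            events += 1 if data[i][1] else 0
            leaving += 1
            i += 1
        if events:
            surv *= 1.0 - events / at_risk
            curve.append((t, surv))
        at_risk -= leaving
    return curve

def restoration_curve(curve):
    """Convert S(t) to the restoration curve F(t) = 1 - S(t)."""
    return [(t, 1.0 - s) for t, s in curve]

def median_downtime(curve):
    """First time at which S(t) drops to 0.5 or below, or None if never."""
    for t, s in curve:
        if s <= 0.5:
            return t
    return None

# Hypothetical downtimes in days; 210 days marks the end of observation.
times = [10, 30, 30, 60, 75, 210, 210]
restored = [True, True, True, False, True, False, False]

km = kaplan_meier(times, restored)
for t, f in restoration_curve(km):
    print(f"day {t}: restored fraction {f:.3f}")
print("median downtime:", median_downtime(km))
```

Censored observations reduce the number at risk without lowering S(t), which is why the restoration curve does not reach 1 when some components remain unrestored at the end of the observation period.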

Conclusions

This study investigated the empirical distribution of the downtime of building services components damaged by the 2016 Kumamoto earthquake in Japan and its dependence on explanatory variables using survival analysis methods. The key results of the study were the following. (1) The downtime distribution of the building services components had extremely large variations, being shifted to the right relative to that of the lifeline infrastructure. (2) The median downtime of the building services components was 90 days and the empirical non-restoration probability 7 months after the earthquake was approximately 32%. (3) The services type and the building use were explanatory variables having a statistically significant effect on the downtime of the building services components. (4) The log-logistic regression model with the services type and the building use as explanatory variables reasonably captured the restoration trend of the building services components. (5) Medical and welfare facilities and hotels quickly restored their building services components compared with other building uses. Finally, (6) the 7-month restoration probability was observed to be highest for electrical systems, followed by sanitary systems, then HVAC systems, and finally life safety systems.

Note that the effect of differences in the structural damage state on the downtime of the building services components could not be investigated because the data used in this study did not include information concerning the structural damage. However, the abovementioned results were highly likely derived from buildings with similar structural damage states, specifically buildings with minor or no structural damage. This inference is based on the following three factors. (1) Most buildings covered by the survey data were steel (S) or reinforced concrete (RC) structures designed based on the 1981 revised Japanese building code. (2) The buildings were subjected to ground motions with JMA seismic intensities of 6 upper or 6 lower. (3) The building damage statistics show that, even in the area around the seismic station with a JMA seismic intensity of 7, only several percent of the buildings constructed after the building code revision were diagnosed as having complete or major structural damage and the majority suffered no structural damage. Therefore, the repair of structural components may not have had much impact on the downtime of the building services components. Because this study could not include structural damage states as a parameter in the developed restoration curves of the building services components, the generalization of the developed restoration curves to models covering more severe structural damage states, such as major and moderate structural damage, requires further research.

The results of this study provide practical information for advancing the resilience-based seismic design of buildings. In particular, the developed restoration curves of the building services components serve as a component of a framework for quantifying the seismic resilience of buildings, which extends typical seismic performance assessments to include assessments of the post-earthquake functional recovery. Specifically, the framework typically assumes or predicts ground motion intensities or waveforms for possible earthquakes, evaluates structural responses to obtain engineering demand parameters (e.g., the peak floor acceleration and the maximum inter-story drift ratio), evaluates the probability of damage to nonstructural components (including services components) using fragility functions, and links the probabilistic damage to restoration curves. The proposed restoration curves can be applied conditional on such damage predictions. However, the results were obtained from limited data and further investigations are necessary. First, the survey data included observations for which the exact downtime was unknown as a result of right-censoring, so the downtime distribution beyond 7 months after the earthquake could not be investigated. Second, the data included only the total duration of the restoration processes, so the rational and irrational downtime components could not be investigated separately. Third, the dependence of the downtime on other possible explanatory variables, such as the structural damage state and the building size, needs to be investigated, in addition to the explanatory variables adopted in this study. Potential confounding variables also need to be discussed. Finally, the data were derived from a single earthquake event and the results cannot necessarily be generalized to other earthquake events. Comprehensive data collection and analyses need to be conducted as future work to overcome these limitations.
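The last two steps of the framework outlined above, namely the fragility evaluation and the link to a restoration curve, can be sketched as follows. The sketch assumes a single component with one damage state, a lognormal fragility function, and a log-logistic restoration curve. These modeling choices and every parameter value are hypothetical and are not results of this study.

```python
import math

def lognormal_fragility(edp, theta, beta):
    """P(damage | EDP) as a lognormal CDF with median theta and dispersion beta."""
    return 0.5 * (1.0 + math.erf(math.log(edp / theta) / (beta * math.sqrt(2.0))))

def loglogistic_restoration(t, alpha, shape):
    """Restoration curve F(t) = 1 - S(t) for a log-logistic downtime."""
    z = (t / alpha) ** shape
    return z / (1.0 + z)

def prob_functional(t, edp, theta, beta, alpha, shape):
    """Probability that the component is functional t days after the earthquake.

    The component is functional if it was never damaged, or if it was damaged
    and has been restored by time t.
    """
    p_damage = lognormal_fragility(edp, theta, beta)
    return (1.0 - p_damage) + p_damage * loglogistic_restoration(t, alpha, shape)

# Hypothetical inputs: peak floor acceleration (g) and model parameters.
PFA = 0.8          # engineering demand parameter from a structural analysis
THETA = 1.0        # median capacity of the component (g)
DISPERSION = 0.5   # lognormal dispersion of the fragility function
ALPHA = 90.0       # median downtime of a damaged component (days)
SHAPE = 1.2        # log-logistic shape parameter

for day in (0, 30, 90, 210):
    p = prob_functional(float(day), PFA, THETA, DISPERSION, ALPHA, SHAPE)
    print(f"day {day}: P(functional) = {p:.3f}")
```

A fuller assessment would repeat this calculation for multiple components and damage states and would propagate the uncertainty in the ground motion and the structural response.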

Data availability

The data that support the findings of this study were provided by the Japanese Association of Building Mechanical and Electrical Engineers, which has not granted permission to share them.

References

Castillo JGS, Bruneau M, Elhami-Khorasani N (2022) Seismic resilience of building inventory towards resilient cities. Resilient Cities Structures 1(1):1–12. https://doi.org/10.1016/j.rcns.2022.03.002

Hengjian L, Kohiyama M, Horie K, Maki N, Hayashi H, Tanaka S (2003) Building damage and casualties after an earthquake. Nat Hazards 29:387–403. https://doi.org/10.1023/A:1024724524972

Potter SH, Becker JS, Johnston DM, Rossiter KP (2015) An overview of the impacts of the 2010–2011 Canterbury earthquakes. Int J Disaster Risk Reduction 14:6–14. https://doi.org/10.1016/j.ijdrr.2015.01.014

Botzen WJW, Deschenes O, Sanders M (2019) The economic impacts of natural disasters: a review of models and empirical studies. Rev Environ Econ Policy 13(2):167–188. https://doi.org/10.1093/reep/rez004

Achour N, Miyajima M (2020) Post-earthquake hospital functionality evaluation: The case of Kumamoto Earthquake 2016. Earthq Spectra 36:1670–1694. https://doi.org/10.1177/8755293020926180

Ivanov ML, Chow WK (2023) Structural damage observed in reinforced concrete buildings in Adiyaman during the 2023 Turkiye Kahramanmaras Earthquakes. Structures 58:105578. https://doi.org/10.1016/j.istruc.2023.105578

Miranda E, Mosqueda G, Retamales R, Pekcan G (2012) Performance of nonstructural components during the 27 February 2010 Chile Earthquake. Earthq Spectra 28:453–471. https://doi.org/10.1193/1.4000032

Jacques CC, McIntosh J, Giovinazzi S, Kirsch TD, Wilson T, Mitrani-Reiser J (2014) Resilience of the Canterbury hospital system to the 2011 Christchurch earthquake. Earthq Spectra 30:533–554. https://doi.org/10.1193/032013EQS074M

Singh RR, Bruneau M, Stavridis A, Sett K (2022) Resilience deficit index for quantification of resilience. Resilient Cities and Structures 1(2):1–9. https://doi.org/10.1016/j.rcns.2022.06.001

Castillo JGS, Bruneau M, Elhami-Khorasani N (2022) Functionality measures for quantification of building seismic resilience index. Eng Struct 253:113800. https://doi.org/10.1016/j.engstruct.2021.113800

Mohammadgholibeyki N, Koliou M, Liel AB (2023) Assessing building’s post-earthquake functional recovery accounting for utility system disruption. Resilient Cities and Structures 2(3):53–73. https://doi.org/10.1016/j.rcns.2023.06.001

Cimellaro GP, Solari D, Bruneau M (2014) Physical infrastructure interdependency and regional resilience index after the 2011 Tohoku earthquake in Japan. Earthquake Eng Struct Dynam 43(12):1763–1784. https://doi.org/10.1002/eqe.2422

Liu M, Scheepbouwer E, Gerhard D (2017) Statistical model for estimating restoration time of sewerage pipelines after earthquakes. J Perform Constr Facil 31(5):1–10. https://doi.org/10.1061/(ASCE)CF.1943-5509.0001064

Didier M, Baumberger S, Tobler R, Esposito S (2018) Seismic resilience of water distribution and cellular communication systems after the 2015 Gorkha earthquake. J Struct Eng 144:1–11. https://doi.org/10.1061/(ASCE)ST.1943-541X.0002007

Kammouh O, Cimellaro GP, Mahin SA (2018) Downtime estimation and analysis of lifelines after an earthquake. Eng Struct 173:393–403. https://doi.org/10.1016/j.engstruct.2018.06.093

Cassottana B, Balakrishnan S, Aydin NY, Sansavini G (2023) Designing resilient and economically viable water distribution systems: a multi-dimensional approach. Resilient Cities and Structures 2:19–29. https://doi.org/10.1016/j.rcns.2023.05.004

Hou B, Huang J, Miao H, Zhao X, Wu S (2023) Seismic resilience evaluation of water distribution systems considering hydraulic and water quality performance. Int J Disaster Risk Reduction 93:103756. https://doi.org/10.1016/j.ijdrr.2023.103756

Ivanov ML, Chow WK (2023) Fire safety in modern indoor and built environment. Indoor and Built Environment 32(1):3–8. https://doi.org/10.1177/1420326X221134765

Taghavi S, Miranda E (2003) Response assessment of nonstructural building elements. PEER Report 2003/05. Pacific Earthquake Engineering Research Center, Berkeley, CA

Pantoli E, Chen MC, Wang X, Astroza R, Ebrahimian H, Hutchinson TC, Conte JP, Restrepo JI, Marin C, Walsh KD, Bachman RE, Hoehler MS, Englekirk R, Faghihi M (2016) Full-scale structural and nonstructural building system performance during earthquakes: Part II–NCS damage states. Earthq Spectra 32:771–794. https://doi.org/10.1193/012414eqs017m

Dhakal RP, Pourali A, Tasligedik AS, Yeow T, Baird A, MacRae G, Pampanin S, Palermo A (2016) Seismic performance of non-structural components and contents in buildings: an overview of NZ research. Earthq Eng Eng Vib 15:1–17. https://doi.org/10.1007/s11803-016-0301-9

Nishino T, Suzuki J, Nagao N, Notake H (2023) Investigation of damage to fire protection systems in buildings due to the 2016 Kumamoto earthquake: derivation of damage models for post-earthquake fire risk assessments. J Asian Architecture Building Eng 22:2123–2142. https://doi.org/10.1080/13467581.2022.2099401

Qi L, Kurata M, Huang J, Kawamata Y, Aida S, Cho K, Kanao I, Takaoka M (2023) Seismic damage and functional loss of ceiling systems: Observation in shaking table test of hospital specimen. Earthquake Eng Struct Dynam 52:2888–2909. https://doi.org/10.1002/eqe.3900

Terzic V, Villanueva PK (2021) Method for probabilistic evaluation of post-earthquake functionality of building systems. Eng Struct 241:112370. https://doi.org/10.1016/j.engstruct.2021.112370

Mieler MW, Mitrani-Reiser J (2018) Review of the state of the art in assessing earthquake-induced loss of functionality in buildings. J Struct Eng 144(3):04017218. https://doi.org/10.1061/(ASCE)ST.1943-541X.0001959

Bruneau M, Chang SE, Eguchi RT, Lee GC, O’Rourke TD, Reinhorn AM, Shinozuka M, Tierney K, Wallace WA, Winterfeldt DV (2003) A framework to quantitatively assess and enhance the seismic resilience of communities. Earthq Spectra 19:733–752. https://doi.org/10.1193/1.1623497

Porter K, Ramer K (2012) Estimating earthquake-induced failure probability and downtime of critical facilities. J Bus Contin Emer Plan 5:352–364

Khanmohammadi S, Farahmand H, Kashani H (2018) A system dynamics approach to the seismic resilience enhancement of hospitals. Int J Disaster Risk Reduction 31:220–233. https://doi.org/10.1016/j.ijdrr.2018.05.006

Cremen G, Seville E, Baker JW (2020) Modeling post-earthquake business recovery time: An analytical framework. Int J Disaster Risk Reduction 42:101328. https://doi.org/10.1016/j.ijdrr.2019.101328

Hutt CM, Vahanvaty T, Kourehpaz P (2022) An analytical framework to assess earthquake-induced downtime and model recovery of buildings. Earthq Spectra 38(2):1283–1320. https://doi.org/10.1177/87552930211060856

Cook DT, Liel AB, Haselton CB, Koliou M (2022) A framework for operationalizing the assessment of post-earthquake functional recovery of buildings. Earthq Spectra 38(3):1972–2007. https://doi.org/10.1177/87552930221081538

Federal Emergency Management Agency (2018) FEMA P-58-1: Seismic performance assessment of buildings, volume 1 – methodology. Federal Emergency Management Agency, Washington, DC

Federal Emergency Management Agency (2018) FEMA P-58-2: Performance Assessment Calculation Tool (PACT). Federal Emergency Management Agency, Washington, DC

Almufti I, Willford M (2013) REDi™ rating system: resilience-based earthquake design initiative for the next generation of buildings. Arup, San Francisco, CA

Comerio MC (2006) Estimating downtime in loss modeling. Earthq Spectra 22:349–365. https://doi.org/10.1193/1.2191017

Japanese Association of Building Mechanical and Electrical Engineers (2017) Survey report on damage to building services caused by the 2016 Kumamoto earthquake. Japanese Association of Building Mechanical and Electrical Engineers, Tokyo. https://www.jabmee.or.jp/wp-content/uploads/2019/08/kumamotojisin.pdf. Accessed 15 July 2023

Japan Meteorological Agency (1996) JMA seismic intensity: basic knowledge and its application. Gyosei, Tokyo

National Institute for Land and Infrastructure Management (NILIM) and Building Research Institute (BRI) (2016) Quick report of the field survey on the building damage by the 2016 Kumamoto earthquake. Technical Note of National Institute for Land and Infrastructure Management, No. 929 (Building Research Data, No. 173), Chapter 5.2, pp 1–10

Kaplan EL, Meier P (1958) Nonparametric estimation from incomplete observations. J Am Stat Assoc 53:457–481. https://doi.org/10.2307/2281868

Akaike H (1974) A new look at the statistical model identification. IEEE Trans Autom Control 19(6):716–723. https://doi.org/10.1109/TAC.1974.1100705

R Core Team (2016) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/. Accessed 15 July 2023

Kanda Y (2013) Investigation of the freely available easy-to-use software ‘EZR’ for medical statistics. Bone Marrow Transplant 48:452–458. https://doi.org/10.1038/bmt.2012.244

Jackson C (2016) flexsurv: a platform for parametric survival modeling in R. J Stat Softw 70(8):1–33. https://doi.org/10.18637/jss.v070.i08

Kalbfleisch JD, Prentice RL (2002) The statistical analysis of failure time data. Wiley Series in Probability and Statistics. John Wiley & Sons, New York

Industrial Structure Council, Ministry of Economy, Trade and Industry (2016) Damage to electric power facilities caused by the 2016 Kumamoto earthquake. https://www.meti.go.jp/shingikai/sankoshin/hoan_shohi/denryoku_anzen/013.html. Accessed 15 July 2023

Saibu Gas (2016) Press releases related to the 2016 Kumamoto earthquake. https://www.saibugas.co.jp/disaster/index.php. Accessed 15 July 2023

Digital Archives of Kumamoto Disasters (2019) Changes in water outage after the Kumamoto earthquake. https://www.kumamoto-archive.jp/post/58-99991jl0004ft8. Accessed 15 July 2023

Nojima N, Kato H (2014) Modification and validation of an assessment model of post-earthquake lifeline serviceability based on the Great East Japan earthquake disaster. J Disaster Res 9(2):108–120. https://doi.org/10.20965/jdr.2014.p0108

National Institute of Advanced Industrial Science and Technology (2013) QuiQuake: Quick Estimation System for Earthquake Map Triggered by Observed Records. https://gbank.gsj.jp/QuiQuake/index.en.html. Accessed 6 February 2023

Acknowledgements

The author is grateful to the Japanese Association of Building Mechanical and Electrical Engineers for generously providing the building services restoration survey data.

Funding

This work was supported by an academia–industry collaborative research program involving Kyoto University, Tokyo Polytechnic University, Shimizu Corporation, and Ohsaki Research Institute.

Author information

Contributions

T.N. designed the study, analyzed the data, discussed the results, created the figures, and wrote the manuscript.

Ethics declarations

Ethics approval

Not applicable.

Competing interests

The author has no competing interests to declare that are relevant to the content of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nishino, T. Post-earthquake building services downtime distribution: a case study of the 2016 Kumamoto, Japan, earthquake. Archit. Struct. Constr. (2024). https://doi.org/10.1007/s44150-024-00113-3

DOI: https://doi.org/10.1007/s44150-024-00113-3