Abstract

The opportunity to automate and monitor the execution of legal contracts is gaining increasing interest in Business and Academia, thanks to the advent of smart contracts, blockchain technologies, and the Internet of Things. A critical issue in develo** smart contract systems is the formalization of legal contracts, which are traditionally expressed in natural language with all the pitfalls that this entails. This paper presents a systematic literature review of papers for the main steps related to the transformation of a legal contract expressed in natural language into a formal specification. Key research studies have been identified, classified, and analyzed according to a four-step transformation process: (a) structural and semantic annotation to identify legal concepts in text, (b) identification of relationships among concepts, (c) contract domain modeling, and (d) generation of a formal specification. Each one of these steps poses serious research challenges that have been the subject of research for decades. The systematic review offers an overview of the most relevant research efforts undertaken to address each step and identifies promising approaches, best practices, and existing gaps in the literature.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The advent of software that partially monitors, automates, and controls the execution of legal contracts has gained increasing interest in Academia, Government, and Industry, thanks to the advent of smart contracts. Such software systems have become possible thanks to blockchain and Internet-of-Things (IoT) technologies [1]. A first step towards a systematic software engineering (SE) process for building such software systems is to translate automatically or semi-automatically legal contract text written in natural language (NL) into formal specifications that precisely define the terms and conditions (requirements) that a smart contract needs to monitor and control. The use of a formal specification is required to ensure that the smart contract is executed as intended by contracting parties [1]. Moreover, a formal specification entails that smart contract developers construct their system on the basis of an unambiguous account of the legal contract to be monitored, automated, and controlled [2].

This study aims to conduct a systematic literature review (SLR) on the process of translating a legal contract into a formal specification. Existing research tends to cover only part of the transformation process with a limited number of studies dealing with the full process. To make the review more focused, we have decomposed the translation problem into four sub-problems: (a) Structural and semantic annotation of legal text based on a legal ontology; (b) Discovery of relationships for concepts identified and annotated in step (a); (c) Formalization of terms used in the NL text into a domain model; (d) Generation of formal expressions that capture the terms and conditions of the contract. This refinement of the translation problem is intended to facilitate the discovery of relevant works in the literature that deal with one or more of the sub-problems, rather that focus only on studies that tackle the full translation problem.

Let us illustrate the four sub-problems with an example. Consider a simple sales contract between a meat producer and a supermarket chain that has clauses, as shown in Table 1.

Here, terms in square brackets […] identify parameters of the contract, to be determined for each contract execution, while wiggly brackets {…} define quantities to be determined at execution time. The semantic and structural annotation step identifies structurally three obligations and a power. Powers are rights contract parties have to cancel, suspend, or create new obligations. Moreover, the contract identifies roles of parties, namely [Buyer] and [Seller], as well as asset meat. Accordingly, the output of a tool that addresses the first sub-problem may look, as shown in Table 2.

A semantic annotation requires a common vocabulary (ontology) for legal contracts [3]. A structural annotation requires a grammar for the syntactic structure of legal contracts [4].

To address the second sub-problem, we need to find relationships for each one of the concepts identified in step one. For example, each obligation must have a debtor who is obliged to fulfill it, and a creditor (beneficiary). The debtors and creditors of O1 and O2 are obviously Seller and Buyer, respectively, while in O3 roles are reversed. Note that in O2 and O3, there is no mention of a creditor, so this has to be inferred from context. Finally, for the power the creditor is the Buyer and the debtor the Seller. In addition, each obligation/power must have a trigger that initiates it, an antecedent that serves as precondition, and a consequent that signals successful completion of the obligation/power. P1 has a trigger ‘If delivery is late’, while others take a trigger ‘true’, indicated by T, and are initiated when contract execution starts. O1 has antecedent ‘true’ and consequent ‘deliver [qnt] quantity of meat of AAA quality to the warehouse of the < \role [Buyer] > before [delD] date’, while O2 has antecedent ‘while meat is transported’ and consequent ‘meat will be transported in accordance with meat transportation standards. The output of the second step is a conceptual model of concepts and relationships, where for each obligation, we list trigger, debtor, creditor, antecedent, consequent, and for each power, we list trigger, debtor, creditor, antecedent, and consequent. The result is included in Table 3.

The third sub-problem concerns formalizing terms used in the contract in relation to the legal contract ontology used in step (a). In particular, ‘meat’ is an instance of class BeefC, representing a portion of beef, which is a specialization of MeatC, which has as instances quantities of meat, including beef, chicken, etc. In turn, MeatC is a specialization of FoodC, which is a specialization of Asset. Asset is one of the concepts in the contract ontology. Along the same lines, ‘warehouse’ refers to an address that is the target of the delivery, so it can be formalized as attribute warehAddr of class BuyerC, which is a specialization of Role, another class in the contract ontology. The result of this step is a domain model for the contract being formalized. Formalization of terms is intended to eliminate ambiguity. For example, ‘meat’ is multiply ambiguous (could it be chicken or lamb?). Likewise, does ‘delivery’ in ‘‘If delivery is late’’ refer to the delivery action, or more likely to the event ‘delivered’. The formalization is based on an ontology for contracts that includes primitive concepts such as Role, Obligation, Power, Asset, Event, etc. and the relationships mentioned above, such as debtor, creditor, antecedent, and consequent.

The fourth sub-problem concerns translating NL expressions such as ‘meat is transported’ into expressions in a formal specification language. Symboleo [5] is such a language where true/false statements are defined in terms of events, instantaneous happenings, and situations, states-of-affairs that occur over a period of time. For example, the trigger of P1 “If delivery is late” is translated to “happens(delivered(Seller, meat, Buyer.delAddr), t) and (t after delD)” where delivered(…) is an event that happens at time t. The antecedent of O2 is translated to “occurs(transport(meat), int)”, which says that the situation transport(meat) has occurred during interval int, while the consequent is translated into “occurs(MTS(meat), int)”, which says that the situation meat-transported according to transportation standards (MTS) occurred during int, the interval of the transportation situation.

The SLR provides three main contributions. First, it proposes a process for translating a contract in natural language into a formal specification and uses it to define research questions that guide the review process. Second, it identifies the most significant papers for each research question that can guide researchers interested in the topic. Finally, it provides a direction for future research by pointing out main challenges and open problems that would require further research. The importance of the SLR is underlined by the increasing number of recent publications covering aspects of the translation process, as well as the increasing interest in smart contract applications, Also, the lack of systematic literature reviews on the topic, as well as related topics.

The rest of the paper is structured as follows. “Preliminaries” defines the terminology adapted in this review. “Research questions and scope” defines research questions to be answered by the SLR and its scope, that identifies the works included in the study. “Classification scheme” describes the classification scheme adopted for selected papers, while “Search and screening of selected papers” describes the search. “Classification of selected papers” classifies the papers identified based on research questions, and a further taxonomy. “Synopsis of the papers” describes the papers that have been analyzed, whereas the findings for each research question are summarized in “Summary of findings”. “Threats to validity” defines threats to validity for this study, while “Related work” discusses related work. Finally, “Conclusions” concludes and discusses future work.

Preliminaries

As already hinted in the introduction, throughout this study, we use terms, such as ‘legal contract’, ‘legal document’, etc. These terms are elaborated in Table 4. Although the focus of the SLR concerns translation of contracts, legal documents have been included in the study, as well, since they concern similar concepts and their translation into a formal specification involves similar steps. Moreover, the limited number of papers focused on legal contracts suggested the need to widen the scope to also include legal documents.

The SLR follows the general methodology proposed in [10] and made more concrete in [11] and [12] for a Requirements Engineering topic. The methodology has as follows:

Step 1: Define research questions (RQs) that the SLR is intended to answer, as well as the scope of the SLR. Outcome: RQs, document libraries to be used, inclusion and exclusion criteria, search method (keyword search, snowballing, etc.), queries for each RQ

Step 2: Determine classification scheme for paper types, topics, and keywords for each scheme. Outcome: classification scheme

Step 3: Conduct search and screen retrieved papers with respect to relevance and inclusion/exclusion criteria. Outcome: selected papers

Step 4: Classify selected papers. Outcome: classification of selected papers

Step 5: Answer research questions on the basis of the classification. Outcome: SLR results.

Research Questions and Scope

The RQs identified concern the translation of NL text into a formal specification for legal documents, as well as the four sub-problems discussed in “Introduction”. The RQs represent the decomposition of the general process that we established (RQ0), aiming to understand how the different steps have been executed (RQ2 to RQ5). Since the topic of legal ontologies underlies several research questions, it has been added as a sixth research question (RQ1). For each of them, the SLR is intended to mine main approaches and research results that together characterize the state-of-the-art (Table 5).

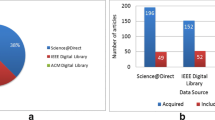

The scope of the study includes the publication datasets of ACM, IEEE, and Springer where the vast majority of publications for the subject of the SLR have appeared. Beyond this, scope is determined by the RQs and is defined by search queries (SQ), one for each RQ (Table 6), as well as inclusion criteria.

Inclusion criteria are provided in Table 7.

Classification Scheme

The classification scheme adopted for selected papers uses two criteria: (a) Which research questions does each paper address; (b) The type of each paper, e.g., proposal, implementation, evaluation, etc. The former criterion has already been discussed in the introduction. For the latter, we adopt the classification scheme used in [11], with amendments, since the subject of our review is substantially different from that in [11] (Table 8). This criterion allows us to classify papers according to the nature of their contribution(s).

The categories in each classification are not mutually exclusive. For example, a publication may tackle at the same time the sub-problems of semantic annotation and relationship mining, or may include a proposal, an implementation, and an empirical evaluation. Of course, it is not expected from any publication to cover all or most categories for either criterion.

Search and Screening of Selected Papers

The systematic search based on the search queries in Table 6 took place between October 2020 and June 2021. Initially, relevant papers were identified by searching the ACM, Springer, and IEEE scholarly repositories with our adopted search queries. In a first attempt, we implemented RQ queries into the search facilities provided for advanced research for each repository. However, the impossibility to consistently apply the search queries for each database—arising from significant differences in the different search fields for advanced research—led to the decision to rely on ACM Guide to Computing Literature that allows the identification of papers published as well from Springer and IEEE. Moreover, we decided against using Google Scholar, because it does not support searching over abstracts only. The RQ search queries have been applied to the abstracts for all queries, except RQ1 on legal ontologies. The application of the queries for RQ1 to the abstracts resulted in a disproportionated number of results compared to the results of the other RQs. To obtain a reasonable result for RQ1, the search questions were applied to the title and including works with at least one citation. The results of the SQs were analyzed by the three expert authors to subsequently apply a variant to the SLR process to make sure that all relevant papers were included. As the result of the analysis underlined the lack of important publications dealing with one or more RQs, as well as the recent proceedings of the main conferences in the domain (i.e., ER, PoEM, RE, and REFSQ) from 2019 to 2021 which may not have been included yet in the ACM repository. As such, SQs have been refined and new papers have been added to the total number of papers being analyzed, also considering they have been published very recently. The refinement of the SQs and the indications of the three expert authors allowed the identification of the following works to ensure that main approaches to formalize specification languages for contracts were included [7, 39, 53, 17] rely on a simplified NL, based on laws that are codified into pre-defined structures, to propose a process that transforms a legal document into an ontology based on RDF. The NLP system translates a legal document into tuples for a relational database by relying on different ontological and linguistic knowledge levels. The process supports the identification of structural, lexical, and domain ontology elements. It is intended for the management of notary documents and has been experimentally tested over a collection of around 100 legal documents with encouraging results. The use of simplified NL may imply a decrease in semantic richness in the translation of a legal document into a domain model.

Other approaches focus on building domain models that can potentially be reused with different languages. Notably, Francesconi et al. [42] suggest an approach for knowledge acquisitions and ontology modelling based on an existing ontology that is refined for a specific legal document with NLP techniques. They focus on the existing relations between the two layers—lexical and ontological—to define multilingual ontological requirements. The challenge of adapting ontologies or domain models for different languages is considered also by [94] in TextToOnto using extensible languages processing frameworks, such as GATE. The open-source tool has been created to support legal experts in identifying legal ontologies from text. Despite the difficulties required by the translation in different languages, they may support interoperability and the adoption of ontologies and domain models.

RQ5: What Kinds of Techniques Have Been Studied for Translating NL Expressions into Formal Ones for Legal Documents?

The translation of legal documents into formal expressions has received less attention than other RQs. Research on this RQ date as far back as 1993 [57], relying on Conceptual Graph formalism based on Sowa for knowledge representation. The use of formal logic expressive enough to represent legal contracts is a recurring challenge. Among the first attempts to translate NL expressions into a formal specification for a contract, Governatori [7] proposes the Business Contract Language (BCL) based on Propositional Deontic Logic. This work describes the process of deriving a formal system from contract provisions that account for the identification of ambiguities in a contract, determine the existence of missing or implied statements, and analyze the expected behaviours of the parties and existing relationships between parts of the contracts (e.g., clauses). In the formal system, a contract is represented as a set of deontic terms for obligations, prohibitions, and permissions. Other approaches focus on the pre-treatment of text. Montazeri [69] proposes a methodology for extracting production rules from legal texts that generate a raw translation and refactors the rules to enhance understandability. The approaches of [82] and [69] focus on deriving formal requirements from legal text and are accordingly more general than the subject matter of this review.

A summary has been included in Table 10 detailing for each paper analyzed, the methodologies, tools, and resources adopted from the literature and proposed. The papers are ordered according to their appearance in this section and for the main RQ they address.

Summary of Findings

The SLR supports the identification of the main research efforts and existing gaps concerning the six RQs. Below, we summarize the results of our work and underline the most recurring, novel, and cited works.

RQ0: What are the Main Approaches for Translating Legal Documents into Formal Specifications?

A limited number of works have considered the full objective of translating legal documents into formal specifications. More generally, limited interest in formal specifications has been identified despite an increase in the last years with the development of smart contract technologies. As such, regarding the overall transformation process, it should be considered the possibility to complement different approaches concerning the four steps presented in the SLR. Most of the few approaches that perform the full process at first identify concepts and relationships in a legal text to subsequently formally represent them with formal logics described in works related to RQ5. The most recurring approach relies on the annotation of the concepts using an ontology to generate a skeleton of specifications and formal expressions in [2, 5, 80]. Other approaches are tailored to the generation of models of law [99], Internet contracts [49], or rely on conditional structures [52] to extract and model contract elements. In recent work, the full process to generate formal specifications for a smart contract is presented [39]. The translation process is often simplified through the use of normalized or controlled NL to better identify and represent requirements for formal specifications [30, 64, 89, 90]. Finally, significant initiatives have been identified that aim at providing tools supporting management and formal representation of legal documents and contracts [22, 46]. However, none of them covers the full process of translating a legal text into formal specifications, and they generally require significant and time-consuming manual support. Moreover, the ability to generate a formal language expressive enough to represent the nuances of legal texts would still need significant effort.

RQ1: What Legal Ontologies Have Been Used for the Translation?

Significant work has been done to apply, create, or manage a legal ontology for legal documents or contracts. Existing approaches can be divided into two categories frequently considered together: ontology elements are identified from text, or vice versa, text is annotated based on an existing ontology. The SLR identified significant misalignment in defining the concept of ontology itself, as it is frequently interchangeably used with terms such as ‘domain model’ or ‘conceptual model’. Generally, ontologies offer a higher degree of reusability for different contracts, whereas domain and conceptual models are specialized for a given type of contract (e.g., for renting or employment). The creation of ontologies or domain models from text has been investigated by [16, 17, 37, 60, 81]. Other approaches rely on multi-tier ontologies to move from the abstract to the specific elements, where the specific elements could also represent a domain model [39, 54]. The multi-tier ontology allows dealing with the different levels of abstraction required at the different layers (e.g., technical implementation, business logic) and eases communication among different audiences in contract automation (e.g., lawyers and programmers). A frequently used tool for modelling ontologies is Protégé, an ontology editor and knowledge acquisition system that has been used by [18, 41, 98]. The use of Protégé allows relying on a free, open-source platform that is supported by a broad community of users. Significant research has taken place to focus on specializing an existing ontology for different contexts to increase the comparability and reusability of ontologies. This has been mainly performed with the support of OWL, a language to represent ontologies that have been widely used [18, 28, 41, 42, 86, 99]. Other approaches use tools compatible with OWL [4, 22] and most notably [37] that proposes METHONTOLOGY, which has been adapted to different domains without significant technical support. DAML + OIL, the predecessor of OWL, is used by [49] in a popular work with a rule-based technique to automate the execution of business contracts along their lifecycle. Ontologies are similarly implemented using LKIF by [28]. A legal ontology frequently applied is CLO, which gained traction also thanks to [43] that relies on DOLCE and a JurWordNet lexicon. Similarly, there are ontologies adapted from CLO [42] or its evolution UFO-L CLO [2, 5, 6, 48, 80]. The SLR seems to suggest the importance of using a common standard to express an ontology, frequently implemented in OWL, and based on an existing ontology, such as CLO to rely on a solid, well-documented, and comparable basis. Moreover, the review suggests the existence of the open question of having legal ontologies that have sufficient expressiveness to properly represent legal documents, also considering complementary tools for contract management (e.g., ArchiMate [48]), to increase their adoption and use by legal practitioners.

RQ2: What Annotation Approaches are Used for Semantic Annotation of Legal Text?

Significant research effort has been undertaken concerning semantic annotation of legal text, although some challenges still have to be overcome to efficiently identify concepts from legal texts. Frequently, annotation is implicitly considered as the foundational element to perform the general process of translating a legal contract into a formal specification. Two main approaches have been identified; the first and most widespread refers to the implementation of NLP based rules, used in a varied range of tools to support computers in understanding and modelling NL documents [2, 4, 9, 18, 45, 50, 52, 56, 70]. The second type of approaches refer to the use of ML techniques, not necessarily specialized for NL, that apply algorithms to learn and improve from experience [25, 71, 75, 81, 87] and that frequently rely on SVM. Although NLP is a research area of ML, frequently, the approaches proposed by the authors are generally classified under one category or another, or seldom with complementary approaches (e.g., [47]). The approaches referring to ML, are more recent due to the computational power required for supervised and unsupervised learning. In ontology-based semantic annotation—the most widespread approach—the process identifies instances of the concepts defined in the ontology, whereas structure annotation is commonly referred to on the definition of grammar. A legal text is parsed to perform syntactic analysis based on grammar rules to identify the structure of a phrase and how words relate to each other and mainly involves tokenization and PoS (Part of Speech) tagging [4, 17, 42, 45, 47, 81, 87, 94]. Structural annotation is mostly performed relying on the definition of a grammar to identify structure elements of a contract and, less frequently on ML techniques [35]. Rules IF_THEN are used by [52, 70] to identify semantic content. Accuracy in structural annotation performs better than in semantic annotation as it implies a lower level of ambiguity. Finally, for annotation, it is worth underlying significant tools such as Akoma Ntoso [22] and LegalRuleML [46] that take a holistic approach in the lifecycle of a contract and that use XML markup. The interest in those approaches mostly relies on the open-source, collaborative approach that lays the foundations for collaboratively developed, solidly tested tools and with a broader spectrum of uses, differently from the majority of works identified in the research.

RQ3: What are Main Approaches for Mining Relationships from Annotated Text?

The identification of relationships in legal text has been frequently considered as a sub-task of semantic annotation; in this case, the proposed approaches share significant commonalities with the annotation process. The mining of relationships in the legal context has been studied with two objectives. The first objective relates to the identification of relationships within a phrase (e.g., noun, verbs, and adjectives) [4, 52, 70], whereas the second refers to the identification of relationships between different sections of legal text (e.g., legal provisions, clauses, etc.) [46, 56, 65]. As such, the identification of relationships within a phrase appears to support more significantly the objective of generating formal specifications compared to the identification of relationships among different sections of a text. The latter approach is useful as a complementary analysis to identify dependencies among different parts of the text to infer contextual knowledge. The identification of relationships among different parts of legal text has been performed for legal reasoning in [26, 67, 71], or for the identification of structural parts of a document (e.g., title, clause, and section) in [45, 82]. Legal reasoning—which received significant academic interest—seems more tailored for the analysis of a legal document as a whole, rather than the generation of formal specifications from NL. Among other approaches for mining relationships from text, checklist and templates [50, 64] or the identification of causality in text [40] represent a useful intermediate step to support the identification of relationships. However, none of them fully cover the requirements to identify the relationships between the concepts identified in semantic annotation. The proposed approaches tend to be fragmented, applicable only to certain concepts and frequently based on manual identification, suggesting the need for further research in approaches tailoring specifically the identification of relationships.

RQ4: What are Main Techniques for Formalizing NL Terms into a Domain Model?

The formalization of NL terms into a domain model is frequently presented as part of the effort of generating an ontology from a legal contract; as such, there frequently is overlap** with RQ2. In several cases, the construction of a domain model has been identified as a subtask in the process of building an ontology using patterns and conditional rules [16, 17, 37] or in the context of multilingual ontologies [42] that relies on formal logic or graph-theoretic representations. The proposed approaches apply supervised ML techniques, and are represented in first-order logic [86] or rely on statistical measures to identify the relatedness of terms [61]. An alternative approach could be represented by the adoption of an existing ontology that is subsequently refined into a domain model for a specific contract as suggested in [42]. A manual approach relies on the use of checklists and templates [64]. Still, unresolved remains the development of a lexicon, or more generally the development of tools to automatically or semi-automatically generate a domain model from NL terms. Finally, an increased conceptual alignment in the scientific community concerning the concepts of domain models, conceptual models and ontologies would create a better understanding and applicability of the existing approaches.

RQ5: What Kinds of Techniques Have Been Studied for Translating NL Expressions into Formal Ones for Legal Documents?

A challenge recurringly mentioned in the SLR refers to the difficulty in conveying the expressiveness of legal documents into formal expressions. The translation of NL expressions into formal ones is mainly based on knowledge representation languages and frequently relies on Deontic Logics (e.g., obligations and permissions) [7]. One of the first efforts for legal documents relates to propositional logic in BCL and represents the more complete and appreciated research effort [7]. BCL includes a formal system that allows identification of ambiguities, determination of missing or implied statements, analysis of the expected behaviours of the parties, and existing relationships between different parts of the contract. Propositional logic is subsequently adopted by [67]. However, propositional logic has important limitations with respect to expressiveness and does not support the formalization of many elements of legal contracts. First-Order Logic, on the other hand, has been used by [41, 86]; its limitations concern the representation of categories and quantification of relations. Event Calculus—that similarly to smart contracts relies on an event-based logic—allows the representation of temporal events and their inter-relationships, and is used by [80]. Sleimi et al. [90] rely on templates for an intermediate step to formalize NL from legal documents. The approaches of [67, 69] and relies on production rules. The use of templates, normalized text, or production rules—despite being performed mainly manually—seems to represent a necessary intermediate step to increase accuracy in generating formal specifications. The last years saw the development of approaches concerning the generation of specifications for smart contracts [39] or the drafting of contracts in NL-like specifications that can be directly implemented into a smart contract [53], but the latter approach entails a lower level of formalisation. On the other hand, a higher degree of understandability may ensure that the formal specification properly reflects the intentions of the contracting parties during the lifecycle of the contract. In general, selected papers have focused on proposals of specification languages, whereas more significant efforts need to be devoted to techniques—including automated ones—to translate NL expressions into such specification languages.

Threats to Validity

The SLR was conducted following the guidelines proposed by Kitchenham [10]. These guidelines also call for consideration of threats to validity. Such threats are discussed in this section.

Study Completeness

This threat relates to the question “How complete is the list of papers considered in the study?” The threat is mainly related to the search queries used for each RQ to retrieve relevant papers. Given the significant number of studies and different approaches which could potentially contribute to a given RQ, it is challenging to define search queries that are neither too strict nor too broad. The selection of papers was performed to identify the most relevant works based on the number of citations, favouring implementations and authors with a track record on the topic of this SLR. Even so, the process implied a certain level of subjectivity, and therefore, the selection of the papers may have been different if performed by different authors. To mitigate this threat, queries and selected papers were presented to three experts on the topic of the SLR who suggested missing papers. As a result, queries were revised to ensure that suggested relevant papers would be selected in a second round of searching.

Another threat is related to the choice of the ACM Guide to Computing Literature as the repository used to determine relevant works. Even though the repository contains papers published in the three most important publishers in the field (i.e., ACM, IEEE, and Springer), other significant works may have been produced with a different publisher or may have not been included in the repository. Similar considerations apply for recent papers which have been included as the most relevant papers presented in 2019–2021, and for those illustrating formal specification languages that had not been identified by the first search. The point is: as executed, the SLR did address the completeness threat by including recent papers and papers on formal specification languages and legal ontologies that were not accounted for by the RQ queries. Moreover, the SLR is based on a proposed decomposition of the overall process of generating formal specifications from a text in natural language. Such decomposition is subjective and other authors may have proposed other approaches and steps.

Classification and Presentation

The classification of papers according to the RQs and the types of issues they address also contains elements of subjectivity. To mitigate this threat, a sample of the papers of Table 9 were discussed between two authors and a few discrepancies, mostly on the interpretation of RQs, have been highlighted. However, the classification of papers into RQs entails significant levels of subjectivity. This subjectivity was exacerbated by the use of different terminologies among different scientific communities in the domain. For example, the terms ‘ontology’ and ‘conceptual model’ are frequently used interchangeably in the RE community. Moreover, the framework proposed in the SLR is based on RQs that correspond to subproblems of the process of generating specifications from legal documents. Papers that did not match this problem decomposition were hard to classify. Here, we relied on the experience of the three senior authors of this study which have been working on the topic for more than a decade.

Synopses of Papers

The synopses of the most relevant papers included in the SLR is, of course, subjective as it also depends on the background and understanding of the reader. The synopses were generated by the first author. To mitigate this threat, synopses were spot-checked by other authors for accuracy and presentation consistency.

Related Work

This section is intended to cover papers that are themselves reviews one of the topics identified by the SQs in the SLR and have been classified as meta-studies. It does not cover non-review papers that are addressing problems on the translation process itself. These papers are discussed here. No review was identified that specifically refers to the translation of contracts in natural language into formal specifications.

Regarding ontologies, Valente [92] reviews different types of legal ontologies in the domain of Artificial Intelligence (AI) and law. The author proposes a classification based on the goal of the ontology, the need to structure information, legal reasoning, search and extraction of semantics, and understanding a domain. Valente focuses on hel** to understand the implications of the use of different ontology types. Ontologies with light structures frequently rely on graphical representation and taxonomies, whereas highly structured ontologies are usually based on formal representation languages. Breuker [31] identifies the main concepts recurring in different legal ontologies to be able to reuse or combine them for different domains. The reuse of legal ontologies may be useful for information retrieval purposes, for annotation and tagging, to enhance the ability to query a legal document for related terms (e.g., identification of synonyms), or to avoid ambiguity.

Wyner et al. [97] explore different annotation approaches to extract an argument from legal texts to support decision-making by judges. The focus of the authors is on the use of context-free grammars to extract arguments together with NLP tools and ontologies to identify parties of a legal case and their relationships. A significant gap is identified in the meta-study and concerns the lack of any support for the time-consuming task of extracting an ontology from a legal text.

Otto et al. [77] review studies on compliance of legal text and survey the main research efforts in modelling and using legal text for system development, which are based on various forms of logic, goal modelling, and semi-structured representations. The focus of this paper is to determine the applicable regulations and to create compliance policies concerning those regulations. Based on the analysis, the authors propose a broad set of requirements for a system aiming to support compliance auditors.

Boella et al. [27] review the different approaches to represent legal knowledge to support requirements engineering activities. The authors underline the importance of the use of legal experts in the process as existing tools and approaches do not fully account for the implications of the law. Most of the proposed approaches adopt a ‘textualist’ view of the law, frequently associating a norm to a statement and lacking a holistic view of the nature of the law. Formal approaches are rarely used by law practitioners as they do not fully reflect the expressiveness of legal text and NL.

Conclusions

We present an SLR on the process of translating contracts expressed in NL into formal specifications. The SRL relies on a framework to perform the process which is based on four steps: (a) structural and semantic annotation, (b) identification of relationships, (c) domain modeling, and (d) generation of a formal specification. Furthermore, the SLR investigates legal ontologies and the implication of their use in the four steps. Much work has been performed concerning annotation of legal text, both structural and semantic and the use of ontologies. Remaining steps have received significantly less attention. In this respect, the SLR has identified existing research gaps for the problem at hand.

For future research, the study suggests focusing on three main open challenges. First, for each of the four steps, the tools and the approaches proposed tend to have a significant level of domain dependence. That implies that their ability to support a translation step significantly decreases when applied to a different domain. As a result, a significant number of tools and approaches are proposed but a very limited amount among them can be efficiently applied to different domains. Second, the challenge of building tools that automatically identify semantic and structure elements from the contract text with near expert performance. Finally, it is essential to ensure that formal specifications fully capture the intent of legal documents to enhance adoption by legal practitioners as well as the quality of software systems that support the practice of Law. Further involvement of legal professionals in develo** the tools and the approaches proposed in this SLR would be highly beneficial. With the ability to better define specifications for a developer that properly reflect the content of the contract and support the identification of activities that should be performed offline for elements for which contract automation presently has significant limitations (e.g., litigation). The ability to overcome such challenges could benefit from the recent interest and developments of smart contracts and blockchain for automated contract execution. In our future work, we are planning to test the use of a contract template that is implemented using Symboleo [5], a formal specification language for legal contracts.

References

Christidis K, Devetsikiotis M. Blockchains and smart contracts for the Internet of Things. IEEE Access. 2016;4:2292–303.

Soavi M, Zeni N, Mylopoulos J, Mich L. ContracT–from legal contracts to formal specifications: preliminary results. In: IFIP working conference on the practice of enterprise modeling; 2020. pp. 124–137.

Guarino N, Oberle D, Staab S. What is an ontology. In: Handbook on ontologies; 2009. pp. 1–17.

Zeni N, Kiyavitskaya N, Mich L, Cordy JR, Mylopoulos J. GaiusT: supporting the extraction of rights and obligations for regulatory compliance. Requir Eng. 2015;20(1):1–22.

Sharifi S, Parvizimosaed A, Amyot D, Logrippo L, Mylopoulos J. Symboleo: towards a specification language for legal contracts. In: 2020 IEEE 28th international requirements engineering conference (RE); 2020.

Griffo C, Almeida JP, Guizzardi G. Towards a legal core ontology based on Alexy’s theory of fundamental rights. In: Multilingual workshop on artificial intelligence and law, ICAIL; 2015.

Governatori G, Milosevic Z. A formal analysis of a business contract language. Int J Coop Inf Syst. 2006;15(4):659–85.

I. S. C. Committee. IEEE Standards Glossary of Software Engineering Terminology (IEEE Std 610.12 1990). Los Alamitos: IEEE; 1990.

Kiyavitskaya N, Zeni N, Breaux TD, Antón AI, Cordy JR, Mich L, Mylopoulos J. Extracting rights and obligations from regulations: toward a tool-supported process. In: Proceedings of the twenty-second IEEE/ACM international conference on Automated software engineering; 2007.

Kitchenham B, Pfleeger S, Pickard L, Jones P, Hoaglin D, Emam KE, Rosenberg J. Preliminary guidelines for empirical research in software engineering. IEEE Trans Softw Eng. 2002;28(8):721–34.

Horkoff J, Li T, Li F-L, Salnitri M, Cardoso E, Giorgini P, Mylopoulos J. Using goal models downstream: a systematic roadmap and literature review. Int J Inf Syst Model Des. 2015;6(2):1–42.

Horkoff J, Aydemir FB, Cardoso E, Li T, Maté A, Paja E, Salnitri M, Piras L, Mylopoulos J, Giorgini P. Goal-oriented requirements engineering: an extended systematic map** study. Requir Eng. 2019;24(2):133–60.

Agnoloni T, Tiscornia D. Semantic web standards and ontologies for legislative drafting support. In: ePart'10 Proceedings of the 2nd IFIP WG 8.5 international conference on Electronic participation; 2010.

Ajani G, Lesmo L, Boella G, Mazzei A, Rossi P. Terminological and ontological analysis of European directives: multilinguism in law. In: Proceedings of the 11th international conference on Artificial intelligence and law; 2007.

Alsaadi M, Lisitsa A, Qasaimeh M. Minimizing the ambiguities in medical devices regulations based on software requirement engineering techniques. In: Proceedings of the second international conference on data science, e-learning and information systems; 2019. pp. 1–5.

Amardeilh F, Laublet P, Minel J-L. Document annotation and ontology population from linguistic extractions. In: Proceedings of the 3rd international conference on knowledge capture; 2005.

Amato F, Mazzeo A, Penta A, Picariello A. Building RDF ontologies from semi-structured legal documents. In: 2008 international conference on complex, intelligent and software intensive systems; 2008.

Araujo DAD, Rigo SJ, Müller C, Chishman RLDO. Automatic information extraction from texts with inference and linguistic knowledge acquisition rules. In: Web Intelligence/IAT Workshops; 2013.

Ashley KD. Ontological requirements for analogical, teleological, and hypothetical legal reasoning. In: Proceedings of the 12th international conference on artificial intelligence and law; 2009.

Ashley KD, Walker VR. Toward constructing evidence-based legal arguments using legal decision documents and machine learning. In: Proceedings of the fourteenth international conference on artificial intelligence and law; 2013.

Azzopardi S, Pace GJ, Schapachnik F. On observing contracts: deontic contracts meet smart contracts. In: Legal knowledge and information systems; 2018. pp. 21–30.

Barabucci G, Cervone L, Palmirani M, Peroni S, Vitali F. Multi-layer markup and ontological structures in Akoma Ntoso. In: AICOL-I/IVR-XXIV'09 Proceedings of the 2009 international conference on AI approaches to the complexity of legal systems: complex systems, the semantic web, ontologies, argumentation, and dialogue; 2009.

Bench-Capon TJM, Visser PRS. Ontologies in legal information systems; the need for explicit specifications of domain conceptualisations. In: Proceedings of the 6th international conference on Artificial intelligence and law; 1997.

Bench-Capon TJ, Visser PR. Open texture and ontologies in legal information systems. In: Database and expert systems applications. 8th international conference, DEXA'97; 1997.

Biagioli C, Francesconi E, Passerini A, Montemagni S, Soria C. Automatic semantics extraction in law documents. In: Proceedings of the 10th international conference on Artificial intelligence and law; 2005.

Boella G, Governatori G, Rotolo A, Torre LVD. Lex minus dixit quam voluit, lex magis dixit quam voluit: a formal study on legal compliance and interpretation. In: AICOL-I/IVR-XXIV'09 Proceedings of the 2009 international conference on AI approaches to the complexity of legal systems: complex systems, the semantic web, ontologies, argumentation, and dialogue; 2009.

Boella G, Humphreys L, Muthuri R, Rossi P, Torre LWN. A critical analysis of legal requirements engineering from the perspective of legal practice. In: Requirements engineering and law (RELAW), 2014 IEEE 7th international workshop on; 2014.

Boer A, Winkels R, Vitali F. Metalex XML and the legal knowledge interchange format. In: Computable models of the law. Berlin: Springer; 2008. pp. 21–41.

Branting K, Weiss B, Brown B, Pfeifer C, Chakraborty A, Ferro L, Pfaff M, Yeh A. Semi-supervised methods for explainable legal prediction. In: Proceedings of the seventeenth international conference on artificial intelligence and law; 2019.

Breaux TD, Gordon DG. Regulatory requirements traceability and analysis using semi-formal specifications. In International working conference on requirements engineering: Foundation for software quality. In: International working conference on requirements engineering: foundation for software quality; 2013. pp. 131–157.

Breuker J, Valente A, Winkels R. Use and reuse of legal ontologies in knowledge engineering and information management. Law and the Semantic Web, Springer. 2005. pp. 36–64.

Bueno TCD, Wangenheim CG, Mattos EDS, Hoeschl HC, Barcia RM. JurisConsulto: retrieval in jurisprudencial text bases using juridical terminology. In: Proceedings of the 7th international conference on Artificial intelligence and law; 1999.

Capuano N, Maio CD, Salerno S, Toti D. A methodology based on commonsense knowledge and ontologies for the automatic classification of legal cases. In: Proceedings of the 4th international conference on web intelligence, mining and semantics (WIMS14); 2014.

Ceci M, Palmirani M. Ontology framework for judgment modelling. In: AICOL'11 Proceedings of the 25th IVR congress conference on AI approaches to the complexity of legal systems: models and ethical challenges for legal systems, legal language and legal ontologies, argumentation and software agents; 2011.

Chalkidis I, Androutsopoulos I. A deep learning approach to contract element extraction. In: JURIX; 2017. pp. 155–164.

Chieze E, Farzindar A, Lapalme G. An automatic system for summarization and information extraction of legal information. Semantic processing of legal texts, Springer. 2010. pp. 216–234.

Corcho O, Fernández-López M, Gómez-Pérez A, López-Cima A. Building legal ontologies with METHONTOLOGY and WebODE. Law and the Semantic Web, Springer. 2005. pp. 142–157.

Despres S, Szulman S. TERMINAE method and integration process for legal ontology building. In: IEA/AIE'06 Proceedings of the 19th international conference on advances in applied artificial intelligence: industrial, engineering and other applications of applied intelligent systems; 2006.

Dwivedi V, Norta A, Wulf A, Leiding B, Saxena S, Udokwu C. A formal specification smart-contract language for legally binding decentralized autonomous organizations. In: IEEE access; 2021.

Fischbach J, Frattini J, Spaans A, Kummeth M, Vogelsang A, Mendez D, Unterkalmsteiner M. Automatic detection of causality in requirement artifacts: the CiRA Approach. In: REFSQ; 2021.

Fornara N, Colombetti M. Ontology and time evolution of obligations and prohibitions using semantic web technology. In: DALT'09 Proceedings of the 7th international conference on Declarative Agent Languages and Technologies; 2009.

Francesconi E, Montemagni S, Peters W, Tiscornia D. Integrating a bottom–up and top–down methodology for building semantic resources for the multilingual legal domain. Semantic processing of legal texts, Springer. 2010. pp. 95–121.

Gangemi A, Sagri M-T, Tiscornia D. A constructive framework for legal ontologies. Law and the Semantic Web, Springer. 2005. pp. 97–124.

Gao X, Singh MP, Mehra P. Mining business contracts for service exceptions. IEEE Trans Serv Comput. 2011;5(3):333–44.

García-Constantino M, Atkinson K, Bollegala D, Chapman K, Coenen F, Roberts C, Robson K. CLIEL: context-based information extraction from commercial law documents. In: Proceedings of the 16th edition of the international conference on articial intelligence and law; 2017.

Governatori G, Hashmi M, Lam H-P, Villata S, Palmirani M. Semantic business process regulatory compliance checking using LegalRuleML. In: EKAW 2016 20th international conference on knowledge engineering and knowledge management, vol. 10024, 2016.

Grabmair M, Ashley KD, Chen R, Sureshkumar P, Wang C, Nyberg E, Walker VR. Introducing LUIMA: an experiment in legal conceptual retrieval of vaccine injury decisions using a UIMA type system and tools. In: Proceedings of the 15th international conference on artificial intelligence and law; 2015.

Griffo C, Almeida JP, Guizzardi G, Nardi JC. From an ontology of service contracts to contract modeling in enterprise architecture. In: IEEE 21st international enterprise distributed object computing conference (EDOC); 2017.

Grosof BN, Poon TC. SweetDeal: representing agent contracts with exceptions using XML rules, ontologies, and process descriptions. In: Proceedings of the 12th international conference on World Wide Web; 2003.

Grover C, Hachey B, Hughson I, Korycinski C. Automatic summarisation of legal documents. In: Proceedings of the 9th international conference on Artificial intelligence and law; 2003.

Hasan MM, Aganostopoulos D, Loucopoulos P, Nikolaidou M. Regulatory requirements compliance in e-government system development: an ontology framework. In: 10th international conference on theory and practice of electronic governance. 2017. pp. 441–449.

Hashmi M. A methodology for extracting legal norms from regulatory documents. In: Science & engineering faculty; 2015.

He X, Qin B, Zhu Y, Chen X, Liu Y. SPESC: A Specification Language for Smart Contracts. In: 2018 IEEE 42nd annual computer software and applications conference (COMPSAC); 2018.

Kabilan V, Johannesson P. Semantic representation of contract knowledge using multi tier ontology. In: SWDB, 2003. pp. 395–414.

Kayed A. Building e-laws ontology: new approach. In: OTM'05 proceedings of the 2005 OTM confederated international conference on on the move to meaningful internet systems; 2005.

Kiyavitskaya N, Zeni N, Breaux TD, Antón AI, Cordy JR, Mich L, Mylopoulos J. Automating the extraction of rights and obligations for regulatory compliance. In: ER '08 proceedings of the 27th international conference on conceptual modeling; 2008.

Konstantinou V, Sykes J, Yannopoulos GN. Can legal knowledge be derived from legal texts? In: Proceedings of the 4th international conference on Artificial intelligence and law. 1993. pp. 218–227.

Lagioia F, Micklitz HW, Panagis Y, Sartor G, Torroni P. Automated detection of unfair clauses in online consumer contracts. In: Legal knowledge and information systems: JURIX 2017: the thirtieth annual conference, vol. 302; 2017.

Lagioia F, Ruggeri F, Drazewski K, Lippi M, Micklitz HW, Torroni P, Sartor G. Deep learning for detecting and explaining unfairness in consumer contracts. In: Legal knowledge and information systems, IOS Press; 2019. pp. 43–52.

Lame G. Using NLP techniques to identify legal ontology components: concepts and relations. Law and the Semantic Web, Springer. 2005.

Lame G, Desprès S. Updating ontologies in the legal domain. In: Proceedings of the 10th international conference on Artificial intelligence and law. 2005.

Lau GT, Law KH, Wiederhold G. Similarity analysis on government regulations. In: Proceedings of the ninth ACM SIGKDD international conference on Knowledge discovery and data mining; 2003.

Lau GT, Law KH, Wiederhold G. Legal information retrieval and application to e-rulemaking. In: Proceedings of the 10th international conference on Artificial intelligence and law. 2005.

Lee S-W, Gandhi R, Muthurajan D, Yavagal D, Ahn G-J. Building problem domain ontology from security requirements in regulatory documents. In: Proceedings of the 2006 international workshop on Software engineering for secure systems. 2006.

Lesmo L, Mazzei A, Radicioni DP. Extracting semantic annotations from legal texts. In: Proceedings of the 20th ACM conference on hypertext and hypermedia. 2009.

Levy F, Guisse A, Nazarenko A, Omrane N, Szulman S. An environment for the joint management of written policies and business rules. In: 2010 22nd IEEE international conference on tools with artificial intelligence; 2010.

Libal T, Pascucci M. Automated reasoning in normative detachment structures with ideal conditions. In: Proceedings of the seventeenth international conference on artificial intelligence and law. 2019.

Liu G, Buntine W, Yang X, Fu W. Research on domain-oriented latent policy lineage mining method. In: 2015 eighth international conference on internet computing for science and engineering (ICICSE). 2015.

Maxwell JC, Anton AI. Develo** production rule models to aid in acquiring requirements from legal texts. In: 2009 17th IEEE international requirements engineering conference. 2009.

Mazzei A, Radicioni DP, Brighi R. NLP-based extraction of modificatory provisions semantics. In: Proceedings of the 12th international conference on artificial intelligence and law. 2009.

Moens M-F, Boiy E, Palau RM, Reed C. Automatic detection of arguments in legal texts. In: Proceedings of the 11th international conference on Artificial intelligence and law, 2007.

Montazeri SM, Roy NKS, Schneider G. From contracts in structured English to CL specifications, vol. 68. 2011, pp. 55–69. ar** from the jungle of rights expression languages. In: Proceedings of the 11th international conference on Artificial intelligence and law. 2007.

Neill JO, Buitelaar P, Robin C, Brien LO. Classifying sentential modality in legal language: a use case in financial regulations, acts and directives. In: Proceedings of the 16th edition of the international conference on articial intelligence and law. 2017.

Osborn J, Sterling L. JUSTICE: a judicial search tool using intelligent concept extraction. In: 7th international conference on Artificial intelligence and law. 1999. pp. 173–181.

Otto P, Anton A. Addressing legal requirements in requirements engineering. In: 15th IEEE international requirements engineering conference (RE 2007). 2007.

Palmirani M, Brighi R. Model regularity of legal language in active modifications. In AICOL-I/IVR-XXIV'09 Proceedings of the 2009 international conference on AI approaches to the complexity of legal systems: complex systems, the semantic web, ontologies, argumentation, and dialogue. 2009.

Palmirani M, Governatori G, Rotolo A, Tabet S, Boley H, Paschke A. LegalRuleML: XML-based rules and norms. In: International workshop on rules and rule markup languages for the semantic web. Berlin: Springer; 2011.

Parvizimosaed A, Sharifi S, Amyot D, Logrippo L, Mylopoulos J. Subcontracting, assignment, and substitution for legal contracts in Symboleo. In: ER; 2020. pp. 271–285.

Quaresma P, Gonçalves T. Using linguistic information and machine learning techniques to identify entities from juridical documents. Semantic processing of legal texts, Springer. 2010. pp. 44–59.

Rabinia A, Ghanavati S, Humphreys L, Hahmann T. A methodology for implementing the formal legal-GRL framework: a research preview. In: International working conference on requirements engineering: foundation for software quality. 2020.

Rao PRK, Devi SL. Automatic identification of conceptual structures using deep boltzmann machines. In: Proceedings of the 7th forum for information retrieval evaluation on; 2015.

Rodrigues CMO, Azevedo RR, Freitas FLG, Silva EP, Barros PVS. An ontological approach for simulating legal action in the Brazilian penal code. In: Proceedings of the 30th annual ACM symposium on applied computing. 2015.

Roegiest A, Hudek AK, McNulty A. A dataset and an examination of identifying passages for due diligence. In: The 41st international ACM SIGIR conference on research & development in information retrieval. 2018.

Saias J, Quaresma P. A methodology to create legal ontologies in a logic programming information retrieval system. Law and the Semantic Web, Springer. Law and the Semantic Web, Springer. 2005. pp. 185–200.

Sainani A, Anish PR, Joshi V, Ghaisas S. Extracting and classifying requirements from software engineering contracts. In: 2020 IEEE 28th international requirements engineering conference (RE). 2020.

Sannier N, Adedjouma M, Sabetzadeh M, Briand L. An automated framework for detection and resolution of cross references in legal texts. Requir Eng. 2017;22(2):215–37.

Sleimi A, Ceci M, Sannier N, Sabetzadeh M, Briand L, Dann J. A query system for extracting requirements-related information from legal texts. In: 2019 IEEE 27th international requirements engineering conference (RE). 2019.

Sleimi A, Ceci M, Sabetzadeh M, Briand LC, Dann J. Automated recommendation of templates for legal requirements. In: 2020 IEEE 28th international requirements engineering conference (RE). 2020.

Schwitter R, Tilbrook M. Dynamic semantics at work. In: New frontiers in artificial intelligence. Berlin: Springer; 2003. pp. 416–424.

Valente A. Types and roles of legal ontologies. Law and the Semantic Web, Springer. 2005. pp. 65–76.

Villata S. Digital enforceable contracts (DEC): Making smart contracts smarter. In: Legal knowledge and information systems: JURIX 2020: the thirty-third annual conference, Brno, Czech Republic; 2020.

Völker J, Langa SF, Sure Y. Supporting the construction of Spanish legal ontologies with Text2Onto. 2008. pp. 105–112.

Walker VR, Han JH, Ni X, Yoseda K. Semantic types for computational legal reasoning: propositional connectives and sentence roles in the veterans' claims dataset. In: Proceedings of the 16th edition of the international conference on articial intelligence and law. 2017.

Weber-Jahnke JH, Onabajo A. Finding defects in natural language confidentiality requirements. In: 2009 17th IEEE international requirements engineering conference. 2009.

Wyner A, Mochales-Palau R, Moens M-F, Milward D. Approaches to text mining arguments from legal cases. Semantic processing of legal texts, Springer. 2010. pp. 60–79.

Yan Y, Zhang J, Yan M. Ontology modeling for contract: using OWL to express semantic relations. In: 2006 10th IEEE international enterprise distributed object computing conference (EDOC'06). 2006.

Zeni N, Seid EA, Engiel P, Mylopoulos J, Nómos T. NómosT: building large models of law with a tool-supported process. Data Knowl Eng. 2018;117:407–18.

Funding

Open access funding provided by Università degli Studi di Trento within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Soavi, M., Zeni, N., Mylopoulos, J. et al. From Legal Contracts to Formal Specifications: A Systematic Literature Review. SN COMPUT. SCI. 3, 345 (2022). https://doi.org/10.1007/s42979-022-01228-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42979-022-01228-4