Abstract

Vehicle detection is a crucial task in many applications. In recent years, the number of vehicles on the road has increased rapidly, making efficient traffic management a growing challenge. To address this, this study proposes an improved version of the popular You Only Look Once (YOLO) model, YOLOv5, to enhance the accuracy of vehicle detection. The vehicle detection accuracy of both the original YOLOv5 and the proposed YOLOv5 was evaluated using key metrics: precision, recall, and mean Average Precision (mAP) at an Intersection over Union (IoU) threshold. The experimental results show that the original YOLOv5 model achieved an mAP of 61.4%, while the proposed model achieved an mAP of 67.4%, an improvement of 6.0 percentage points. The improvement is attributed to architectural modifications, which involved adding an extra layer to the backbone. The results indicate the potential of the proposed YOLOv5 for real-world applications such as autonomous driving and traffic monitoring; future work may involve further fine-tuning, applications in robotics and security systems, and broader object detection domains.

Article Highlights

- The article explores advances in vehicle detection through the application of a modified version of YOLOv5, a popular object detection algorithm. The modification aims to enhance the accuracy, speed, and overall performance of vehicle detection systems.

- A detailed explanation of the modifications made to YOLOv5 to improve its performance in vehicle detection scenarios, highlighting the changes to the network architecture.

- The results show the improved performance of the modified YOLOv5 in vehicle detection tasks.

1 Introduction

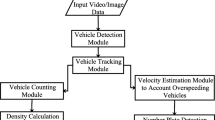

In today's fast-paced world, the ability to identify and track vehicles accurately has become increasingly important. Whether for managing traffic, enhancing security, or enabling self-driving cars, robust vehicle detection systems are clearly needed [1]. Ideally, cameras would recognize and monitor vehicles instantly and with high precision, regardless of the conditions. Among the various object detection models, You Only Look Once version 5 (YOLOv5) [2] has emerged as a powerful and efficient solution, known for its real-time capability and high accuracy [3]. In this context, this study aims to push the boundaries of vehicle detection by exploring and modifying YOLOv5 to improve the accuracy and speed of vehicle detection systems [4]. YOLOv5 [5] is widely used in computer vision for its speed and accuracy: it can process images or video frames in real time, which makes it suitable for many applications.

Vehicle detection is a critical component of many modern technologies, with applications in traffic management, security surveillance, autonomous vehicles, and more [6]. Identifying vehicles precisely and efficiently within a frame of visual data is central to the success of these systems, so researchers and developers continue to improve the performance of object detection models, especially for vehicles [7]. YOLOv5, with its ability to process images and video frames in real time, is a promising framework for addressing these challenges. This paper examines how YOLOv5 works, the specifics of vehicle detection, and the real-world challenges these systems face. Rather than using YOLOv5 as it is, we modify and adjust it to improve its performance, particularly for detecting vehicles [8]. By demonstrating the potential of a modified YOLOv5, this work offers insights and practical solutions that can benefit a wide range of applications, from traffic management and public safety to the ongoing development of autonomous vehicles [9].

In this work, an enhanced network derived from the YOLOv5s model is introduced. The performance of the modified YOLOv5 is improved through the following enhancements: (i) adding a new layer to the backbone and head of the YOLOv5 network, (ii) integrating the inception model structure, and (iii) incorporating a spatial pyramid pooling layer. The proposed model captures image features effectively, leading to better object detection performance. The remainder of the paper is organized as follows. Section 2 reviews related work on vehicle detection, including real-world scenarios and environmental factors. Section 3 describes the original YOLOv5 model, the modifications made to it, and their expected impact on detection performance. Section 4 presents and discusses the results, and Section 5 concludes the paper.

2 Related work

In recent years, research and development in vehicle detection has surged, driven by the growing demand for more accurate and efficient systems in various applications. This section reviews key studies and developments related to our work. To place the work in context, it is essential to review the evolution of the YOLO versions, particularly YOLOv5 [10], and their role in object detection. Earlier iterations, such as YOLOv3 and YOLOv4 [11], laid the foundation for real-time object detection on which later versions build. Many researchers have modified existing object detection models to improve their performance on specific tasks; Table 1 summarizes modifications made to YOLOv4, YOLOv3, and other models, which offer useful reference points for vehicle detection.

Beyond YOLO, there are other state-of-the-art vehicle detection approaches, including Faster R-CNN, SSD [21], and RetinaNet. The machine learning and deep learning communities have developed numerous techniques for improving object detection, including data augmentation, transfer learning, and fine-tuning. These techniques can be leveraged in our research to enhance the performance of YOLOv5 [22].

Although YOLOv7 is mentioned in the related work, YOLOv5 was used as the baseline. One likely reason is that it supports a comparative analysis against other YOLO models, allowing the proposed method to be compared directly with a strong, widely used baseline. Another is that YOLOv5 was readily available and accessible to the researchers, whereas YOLOv7 may not have been publicly released or well documented when the study began, which would have made it difficult to replicate.

By analyzing these aspects of related work, this study aims to build on existing knowledge and contribute to the ongoing evolution of vehicle detection technology. The literature reviewed here serves as the foundation for our exploration of enhanced vehicle detection using a modified YOLOv5 model.

3 Proposed model

In this work, we adapt the YOLOv5 model to the detection of vehicles in images and videos. The process involves training YOLOv5 on a carefully annotated dataset of images and videos featuring vehicles and then deploying it to identify vehicles in new visual data. YOLOv5 [23] offers several advantages for vehicle detection, notably its real-time speed and accuracy, and our vehicle detection system is built on a modified version of it.

3.1 Architectural design of YOLOv5s

YOLOv5s (small) is a real-time object detection model designed for both accuracy and speed. It belongs to the larger YOLO family of detection algorithms and is a lighter and faster variant of YOLOv5 [24]. Object detection is the classic computer vision task of determining which objects are present in an image and where they are located. It is more complex than image classification, which recognizes objects but does not specify their location; in addition, an image may contain many objects, some of which do not belong to any target class. The YOLOv5 family consists of three major building blocks: (i) Backbone, (ii) Neck, and (iii) Head [25]. The backbone uses CSPDarknet, a Darknet network built from cross-stage partial (CSP) blocks, to extract features from images. The neck fuses these features across scales, and the head generates detection predictions (bounding boxes, objectness, and class scores) from anchor boxes. Combining the three parts yields the complete YOLOv5 network; a simplified block diagram of YOLOv5s is given in Fig. 1.
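To make the head's output concrete, the following sketch counts the candidate predictions YOLOv5s produces for a 416 × 416 input. It assumes the standard YOLOv5 configuration of three detection scales with strides 8, 16, and 32 and three anchors per scale; the function name is hypothetical, and the six classes are those of the dataset described in Sect. 3.3.

```python
def yolov5_output_shape(img_size: int, num_classes: int,
                        strides=(8, 16, 32), anchors_per_scale: int = 3):
    """Return (number of predictions, values per prediction) for a square input.

    Assumes the standard YOLOv5 head: one output grid per stride, a fixed
    number of anchors per grid cell, and a vector of 4 box coordinates,
    1 objectness score and `num_classes` class scores per anchor.
    """
    # Each stride s turns an img_size x img_size input into an (img_size/s)^2 grid.
    grid_cells = sum((img_size // s) ** 2 for s in strides)
    num_predictions = anchors_per_scale * grid_cells
    values_per_prediction = 4 + 1 + num_classes
    return num_predictions, values_per_prediction


if __name__ == "__main__":
    # 416 x 416 input, six vehicle classes.
    print(yolov5_output_shape(416, 6))  # (10647, 11)
```

Every one of these candidate boxes is scored for every image, regardless of how many vehicles it contains; low-confidence candidates are discarded afterward during post-processing.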

3.2 Proposed modified YOLOv5

The proposed version of YOLOv5 introduces a novel approach by expanding the architecture with an extra convolutional layer added to both the backbone and head sections. This enhancement augments the model's capabilities and sets it apart from the original YOLOv5.

Backbone Modifications: In the standard YOLOv5 backbone, feature extraction relies on a sequence of convolutional layers. In the modified version, an additional convolutional layer is introduced into the backbone. This extra layer is intended to capture more intricate patterns from the input images and to enrich the representation of the extracted features, giving the model more capacity for feature extraction and improving its overall performance.

Head Modifications: The head of YOLOv5 typically includes convolutional layers responsible for predicting bounding boxes, class probabilities, and other relevant information. In the modified version, an extra convolutional layer is introduced into the head. This additional layer can enable the model to learn more complex spatial relationships or encode higher-level features.

By adding an extra convolutional layer to both the backbone and the head, the proposed YOLOv5 model [26] can potentially detect objects more accurately. The added layers increase the model's expressive power and its capacity to capture fine details in the input data. The architecture of the proposed YOLOv5 is shown in Fig. 2. The specific configuration, hyperparameters, and design choices of the added convolutional layers can vary with the requirements and goals of the modification, and experimentation and fine-tuning may be needed to determine the optimal architecture and parameters for the proposed YOLOv5 model.
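The capacity added by an extra layer can be estimated directly. The sketch below counts the trainable parameters of one YOLOv5-style Conv block, which consists of a bias-free Conv2d followed by BatchNorm2d and SiLU. The channel widths and kernel size in the example are illustrative assumptions, since the exact configuration of the added layers is not specified here.

```python
def conv_block_params(c_in: int, c_out: int, kernel: int, groups: int = 1) -> int:
    """Trainable parameters of a YOLOv5-style Conv block (Conv2d + BatchNorm2d + SiLU).

    The convolution has no bias term; BatchNorm2d contributes a learnable scale
    and shift per output channel; SiLU has no parameters.
    """
    conv_weights = (c_in // groups) * c_out * kernel * kernel
    bn_params = 2 * c_out
    return conv_weights + bn_params


if __name__ == "__main__":
    # Hypothetical extra 3x3 layer that keeps 256 channels.
    print(conv_block_params(256, 256, 3))  # 590336
```

For comparison, YOLOv5s has about 7 million parameters in total, so a single 3 × 3 layer at this width would add roughly 8% to the model size, with a corresponding increase in computation.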

3.3 Dataset and preprocessing

The YOLOv5 models were trained and evaluated on a vehicle dataset configured through Roboflow. The dataset comprises 3001 images, with 2600 images for training, 251 for validation, and 125 for testing. All images are resized to 416 × 416 pixels. The dataset contains six classes: Bus, Truck, Motorcycle, Ambulance, Bicycle, and Car; samples are shown in Table 2. To support accurate detection, the dataset includes vehicle images captured from multiple angles, including front, rear, side, and top views [27]. Each class must be adequately represented for the vehicle detection model to be trained effectively.
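A YOLOv5 dataset is typically described by a small data configuration that lists the split directories, the number of classes, and the class names. The sketch below builds such a configuration as YAML text without third-party libraries and reports the split proportions. The directory paths are hypothetical placeholders, and the class order is assumed to follow the listing above; in practice it must match the class indices in the label files. The three split sizes listed above sum to 2976 images, so the proportions are computed from that sum.

```python
CLASSES = ["Bus", "Truck", "Motorcycle", "Ambulance", "Bicycle", "Car"]
SPLITS = {"train": 2600, "val": 251, "test": 125}  # image counts from Sect. 3.3


def data_yaml(root: str, classes: list[str]) -> str:
    """Return a YOLOv5-style data config as YAML text (paths are hypothetical)."""
    names = ", ".join(f"'{c}'" for c in classes)
    return "\n".join([
        f"train: {root}/train/images",
        f"val: {root}/valid/images",
        f"test: {root}/test/images",
        f"nc: {len(classes)}",
        f"names: [{names}]",
    ])


def split_fractions(splits: dict[str, int]) -> dict[str, float]:
    """Fraction of the listed images that falls in each split."""
    total = sum(splits.values())
    return {name: count / total for name, count in splits.items()}


if __name__ == "__main__":
    print(data_yaml("datasets/vehicles", CLASSES))
    print(sum(SPLITS.values()))  # 2976
    print({k: round(v, 3) for k, v in split_fractions(SPLITS).items()})
```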

Detection models typically include object classifiers trained to recognize specific object categories based on the extracted features. These classifiers are trained on labeled data containing examples of different object categories, enabling them to learn to differentiate between them. There are often differences in the features of different categories of objects within the context of vehicles. For example, vehicles themselves have distinct features such as shape, size, color, and texture, while other objects like pedestrians, cyclists, or road signs possess different characteristics. These variations in features are crucial for accurately detecting and recognizing different objects in the scene. Detection algorithms typically consider these differences and potential mutual interferences during design and development.

3.4 Confusion matrix

In object detection, the goal is to identify which objects are present in an image and to determine their locations accurately. Each prediction is evaluated as a binary outcome that indicates whether an object has been successfully detected or not. Figure 3 shows the confusion matrix of the original YOLOv5 model, and Fig. 4 shows that of the proposed YOLOv5 model, summarizing which objects were detected correctly. However, the model's predictions do not always match the objects actually present in the image. Such mismatches produce false positives, where the model reports an object that is not there, and false negatives, where it fails to detect an object that is present in the input image [28]. Improving the accuracy of object detection models is therefore challenging and requires careful attention to factors such as dataset quality, model architecture, and training parameters.
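Whether a prediction counts as a true or false positive is usually decided by its overlap with the ground truth. The following sketch greedily matches predictions (highest confidence first) to ground-truth boxes of the same class using Intersection over Union (IoU); unmatched predictions become false positives and unmatched ground-truth boxes become false negatives. The 0.5 IoU threshold and the (x1, y1, x2, y2) box format are assumptions for illustration, and YOLOv5's own confusion-matrix code may differ in detail.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_outcomes(preds, gts, iou_thr: float = 0.5):
    """Return (TP, FP, FN) for one image.

    preds: list of (box, class_id, confidence); gts: list of (box, class_id).
    Each ground-truth box can be matched by at most one prediction.
    """
    matched = [False] * len(gts)
    tp = fp = 0
    for box, cls, _conf in sorted(preds, key=lambda p: p[2], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, (gt_box, gt_cls) in enumerate(gts):
            if matched[j] or gt_cls != cls:
                continue  # already used, or a different class
            overlap = iou(box, gt_box)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[best_j] = True
            tp += 1
        else:
            fp += 1
    fn = matched.count(False)  # objects no prediction claimed
    return tp, fp, fn


if __name__ == "__main__":
    gts = [((0, 0, 10, 10), 0), ((20, 20, 30, 30), 5)]
    preds = [((1, 1, 10, 10), 0, 0.9),    # good match for the first object
             ((50, 50, 60, 60), 5, 0.8),  # overlaps nothing: false positive
             ((0, 0, 10, 10), 0, 0.7)]    # duplicate of a matched object
    print(count_outcomes(preds, gts))  # (1, 2, 1)
```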

3.5 Precision, recall, and F1 score

To evaluate the results of this work, the overall mean accuracy for vehicle detection was calculated as the mean Average Precision [29]:

$$\mathrm{mAP} = \frac{1}{n}\sum_{k=1}^{n} \mathrm{AP}_k$$

where $n$ is the number of classes and $\mathrm{AP}_k$ is the average precision for class $k$.
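The mAP averages the per-class average precision $\mathrm{AP}_k$ over the $n$ classes. The sketch below computes AP as the area under a precision-recall curve, using all-point interpolation over the precision envelope, and then averages over classes. It assumes each detection has already been labeled as a true or false positive (for example, by IoU matching at a fixed threshold); the function names are illustrative, and the interpolation used by the YOLOv5 repository's own evaluation code may differ slightly, so results can differ in the last digits.

```python
def average_precision(scores, is_tp, num_gt):
    """AP for one class as the area under the interpolated precision-recall curve.

    scores: confidence of each detection; is_tp: whether each detection is a
    true positive; num_gt: number of ground-truth objects of this class.
    """
    if num_gt == 0:
        return 0.0  # convention chosen here; some tools skip such classes
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recall, precision = [], []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recall.append(tp / num_gt)
        precision.append(tp / (tp + fp))
    # Add sentinels, then make precision non-increasing from right to left.
    mrec = [0.0] + recall + [1.0]
    mpre = [1.0] + precision + [0.0]
    for j in range(len(mpre) - 2, -1, -1):
        mpre[j] = max(mpre[j], mpre[j + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((mrec[j] - mrec[j - 1]) * mpre[j] for j in range(1, len(mrec)))


def mean_average_precision(per_class_ap):
    """mAP = (1/n) * sum of AP_k over the n classes."""
    return sum(per_class_ap) / len(per_class_ap)


if __name__ == "__main__":
    ap = average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2)
    print(round(ap, 4))  # 0.8333
    print(round(mean_average_precision([ap, 0.5]), 4))  # 0.6667
```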

Precision:

Precision measures the proportion of correctly predicted positive instances (true positives) among all instances predicted as positive. In object detection, precision indicates how accurate the model's detections are. It evaluates the model's ability to avoid false positives, which matters in applications where misidentifications can have significant consequences: in autonomous driving or medical diagnosis, for example, an incorrect identification can affect downstream decisions. Precision therefore highlights how reliably the model predicts the positive class.

Recall:

Recall, also known as sensitivity, measures the proportion of correctly predicted positive instances (true positives) among all actual positive instances. In object detection, recall indicates the model's ability to find all relevant objects in the scene, that is, to avoid false negatives (objects that go undetected). Recall is important where missed detections can lead to critical errors or safety hazards, such as surveillance or search-and-rescue operations. Recall thus reflects the completeness of detection: it is the true positive rate, the fraction of actual objects that the model detects correctly.

F1-score:

The F1-score is the harmonic mean of precision and recall and provides a balanced measure of a model's performance. Because it accounts for both false positives and false negatives, it is suitable for assessing overall detection performance. It is especially useful when positive and negative instances are imbalanced in the dataset, since it weights precision and recall equally. In our evaluation, TP, FN, and FP denote true positives, false negatives, and false positives, respectively [30], and the three metrics are computed as

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}, \qquad F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

Unlike the arithmetic mean, the harmonic mean used for the F1-score [31] is pulled toward the smaller of precision and recall, so a model cannot score well by excelling at only one of them. The F1-score ranges from zero to one, with higher values indicating greater detection accuracy.
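Precision, recall, and F1 follow directly from the TP, FP, and FN counts. The sketch below computes them for one set of counts; the example counts are made up for illustration, and returning 0.0 when a denominator is zero is a convention chosen here.

```python
def precision_recall_f1(tp: int, fp: int, fn: int):
    """Precision, recall and F1 from detection counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=4)
    print(round(p, 3), round(r, 3), round(f1, 3))  # 0.8 0.667 0.727
```

The harmonic mean is visible in this example: F1 = 0.727 lies closer to the lower recall (0.667) than the arithmetic mean of 0.733 would.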

3.6 Time complexity for YOLOv5

The time complexity of YOLOv5 is typically O(N), but additional factors affect the actual inference time, so several aspects must be considered when evaluating its performance for a specific application. With respect to input image size, YOLOv5s can be considered to have a computational complexity of O(N), where N is the number of pixels in the image, because the convolutional workload, and therefore the processing time, grows in proportion to the number of pixels.
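The linear dependence on pixel count can be illustrated with a quick calculation. Assuming the convolutional cost per image is proportional to the input area, changing a square input from 416 to 640 pixels per side should multiply that cost by about (640/416)². The sketch ignores fixed overheads such as image loading and post-processing.

```python
def relative_cost(side_from: int, side_to: int) -> float:
    """Approximate ratio of convolutional cost when a square input is resized.

    Assumes cost is proportional to the number of pixels, N = side * side.
    """
    return (side_to * side_to) / (side_from * side_from)


if __name__ == "__main__":
    print(round(relative_cost(416, 640), 2))  # 2.37
```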

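With respect to the number of detected objects (vehicles) M, the network's forward pass does not depend on M, because YOLOv5s [32] always predicts over a fixed grid of anchor positions. Only post-processing depends on the number of candidate boxes: confidence filtering is linear in that number, while greedy non-maximum suppression (NMS) compares boxes pairwise and can cost up to O(M²) in the worst case.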

These complexities are approximate and depend on factors such as hardware, optimizations, and implementation details. In addition, YOLOv5s is a lightweight variant designed for fast inference.
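Post-processing is the part of inference whose cost depends on the number of candidate boxes. The sketch below is a plain greedy non-maximum suppression (NMS): it keeps the highest-scoring box, discards the remaining boxes that overlap it by more than a threshold, and repeats. The pairwise IoU comparisons make it quadratic in the number of candidates in the worst case. The 0.45 IoU threshold is an assumption for illustration; YOLOv5 itself delegates this step to torchvision's NMS rather than a pure-Python loop.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_thr=0.45):
    """Return indices of kept boxes, highest score first.

    Worst case O(M^2) IoU computations for M candidate boxes.
    """
    remaining = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while remaining:
        best = remaining.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept box too much.
        remaining = [i for i in remaining if iou(boxes[best], boxes[i]) <= iou_thr]
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(greedy_nms(boxes, scores))  # [0, 2]
```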

3.7 Limitations or uncertainty

Data Bias: The validation dataset may not fully represent the diversity of real-world scenarios, leading to biased evaluations. For example, if the dataset primarily consists of certain types of objects or scenes, the model's performance may be overestimated or underestimated for other types of data.

Annotation Errors: Errors or inconsistencies in the ground truth annotations can introduce uncertainty into the evaluation process. It's important to carefully review and validate the annotations to minimize the impact of annotation errors on the evaluation results.

Addressing these limitations requires careful experimental design, rigorous data preprocessing, and transparent reporting of validation methods and results. In addition, sensitivity analyses and robustness checks can show how strongly the findings depend on potential sources of bias or uncertainty.

4 Result and discussion

The analysis compared versions of the YOLOv5 model for vehicle detection, focusing on accuracy. Training was performed on the dataset of 3001 images for 50 epochs, and the models were evaluated with mAP, recall, and precision. The results show that the YOLOv5 [33] models can accurately identify and classify vehicles in the images.

Table 3 outlines the evaluation metrics including mAP, precision, and recall for all classes, specifically aimed at assessing the performance of the original YOLOv5 model. Correspondingly, Table 4 provides the performance metrics tailored to the Modified_YOLOv5 model.

4.1 Modified YOLOv5

Figure 5 (a, b, c) shows the training behavior of the modified YOLOv5s algorithm for object detection, plotting accuracy and losses over the same epochs. Accuracy improves steadily on both the training and validation sets. Beyond the optimal number of epochs, however, accuracy may plateau or decline because of overfitting. Training loss decreases as training progresses; validation loss should also decrease but may rise once the model overfits. Performance improves with increasing epochs until the optimal epoch count is reached, so monitoring these metrics throughout the 50 epochs is essential. Together, these plots show how the performance of the modified YOLOv5s evolves during training.
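Choosing the optimal epoch from such curves can be automated. The following sketch, which is illustrative rather than the procedure used in this study, returns the epoch with the highest validation mAP and the epoch after which validation loss rose for a given number of consecutive epochs, a simple overfitting signal. The patience value and the example curves are hypothetical.

```python
def best_epoch(val_map):
    """Index of the epoch with the highest validation mAP (first one on ties)."""
    return max(range(len(val_map)), key=lambda e: val_map[e])


def overfitting_onset(val_loss, patience=3):
    """Epoch after which validation loss increased `patience` times in a row.

    Returns None if no such run of increases occurs.
    """
    rises = 0
    for e in range(1, len(val_loss)):
        rises = rises + 1 if val_loss[e] > val_loss[e - 1] else 0
        if rises == patience:
            return e - patience
    return None


if __name__ == "__main__":
    val_map = [0.20, 0.41, 0.55, 0.61, 0.60, 0.59]
    val_loss = [1.00, 0.80, 0.70, 0.65, 0.66, 0.68, 0.71]
    print(best_epoch(val_map))          # 3
    print(overfitting_onset(val_loss))  # 3
```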

4.2 Original YOLOv5

Figure 6 (a, b, c) illustrates the training progress of the original YOLOv5s model. Panel (a) plots accuracy (mAP), recall [34], and precision on the validation set against the number of epochs. Accuracy starts low and increases with the number of epochs but may plateau or decrease, which indicates overfitting. The figure helps identify the optimal number of epochs and monitor the model's performance during training.

In YOLOv5s, the loss function [35] measures the model's performance on the training data. Figure 6 (b) shows the training loss and Fig. 6 (c) shows the validation loss, both plotted against the number of epochs. These plots help monitor the model's progress during training and detect overfitting. Typically, both losses decrease as the number of epochs increases, indicating that the model is learning and improving.

4.3 Comparison results of the models

The analysis compares the original YOLOv5s and the modified YOLOv5 models. Modified_YOLOv5 performs best, with an mAP of 67.40% compared with 61.40% for YOLOv5s. Other factors, such as speed, memory usage, and ease of use, should also be considered when choosing an object detection algorithm. Figure 7 compares the performance of the two models.
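The size of the improvement can be stated in two ways. The difference between the two mAP values is an absolute gain in percentage points; dividing it by the baseline gives the relative gain. The helper name below is illustrative.

```python
def map_gain(baseline: float, improved: float):
    """Absolute gain (percentage points) and relative gain (%) between two mAP values given in %."""
    absolute = improved - baseline
    relative = 100.0 * absolute / baseline
    return absolute, relative


if __name__ == "__main__":
    abs_pp, rel_pct = map_gain(61.4, 67.4)
    print(f"{abs_pp:.1f} pp, {rel_pct:.1f}% relative")  # 6.0 pp, 9.8% relative
```

The 6.0-point gain thus corresponds to roughly a 9.8% relative improvement over the original YOLOv5s.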

The paper outlines a vehicle search procedure based on the modified_YOLOv5 algorithm. The procedure first identifies the target object, then matches it and determines its exact location. Once the object's location is determined, it is labeled with size information. Finally, all detected vehicles are organized in a logical order. Figure 8 shows the results of the vehicle detection task, with various types of vehicles detected within the detection frame. This output highlights the algorithm's effectiveness in accurately detecting and recognizing different vehicle types, and the approach can also be applied to IoT-based security systems [36].
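The last two steps of this procedure, labeling each located vehicle with its size and arranging the detections in order, can be sketched as follows. The `Detection` record, the label format, and the top-to-bottom, then left-to-right ordering are hypothetical choices for illustration, since the exact ordering used in the paper is not specified.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    cls: str
    conf: float
    x1: float
    y1: float
    x2: float
    y2: float


def label(det: Detection) -> str:
    """Text label with class, confidence and box size in pixels."""
    w, h = det.x2 - det.x1, det.y2 - det.y1
    return f"{det.cls} {det.conf:.2f} ({w:.0f}x{h:.0f} px)"


def ordered_labels(dets):
    """Labels sorted top-to-bottom, then left-to-right, by box top-left corner."""
    return [label(d) for d in sorted(dets, key=lambda d: (d.y1, d.x1))]


if __name__ == "__main__":
    dets = [Detection("Car", 0.91, 200, 50, 320, 130),
            Detection("Bus", 0.87, 10, 40, 180, 160)]
    for text in ordered_labels(dets):
        print(text)
```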

4.4 Comparison with previous YOLO models

To validate our study, we compared its performance with that of previous YOLO models. In independent experiments on the same dataset, we measured the mean Average Precision (mAP) of each model; the results are summarized in Table 5 and Fig. 9. The comparison shows that modified_YOLOv5 is the best-performing model in our study.

5 Conclusion

This paper examines a model designed to improve the detection of vehicles, which pose challenges for object recognition. Using the YOLOv5 architecture as the experimental foundation, we compared the original YOLOv5 model with an enhanced version, YOLOv5_Modified. After training, the best-performing YOLOv5_Modified weights were selected based on validation results and then evaluated on the test set. The results show a 6.0 percentage-point increase in mean Average Precision (mAP) over the original YOLOv5 model, demonstrating the improved efficacy of YOLOv5_Modified. For a more precise comparison, key metrics were also calculated for previous YOLO versions.

Vehicle detection is a specialized subfield of object detection, and several studies have highlighted its unique challenges, including vehicle occlusion, variations in lighting conditions, and changes in viewpoint. Understanding these challenges is crucial for designing effective modifications to YOLOv5. This study has improved vehicle detection and also provides insights for the broader fields of computer vision and object detection. Our work highlights the adaptability of YOLOv5 and the importance of addressing the specific challenges of vehicle detection. The results of this research may benefit applications ranging from traffic management to road safety and autonomous transportation.

Data availability

All data generated or analyzed during this research are included in the presented Tables and Figures in this manuscript.

References

Bagloee SA, Tavana M, Asadi M, et al. Autonomous vehicles: challenges, opportunities, and future implications for transportation policies. J Mod Transport. 2016;24:284–303. https://doi.org/10.1007/s40534-016-0117-3.

Rahman R, Azad ZB, Hasan MB. Densely-populated traffic detection using YOLOv5 and non-maximum suppression ensembling. In: Proceedings of the international conference on big data, IoT, and machine learning. Singapore: Springer, 2022;95:43. https://doi.org/10.1007/978-981-16-6636-0_43.

Haq HB, Akram W, Irshad M, Kosar A, Abid M. Enhanced real-time facial expression recognition using deep learning. Acadlore Trans AI Mach Learn. 2024;3:24–35.

Najm M, Hussain Ali Y. Automatic vehicles detection, classification and counting techniques/survey. Iraqi J Sci. 2020. https://doi.org/10.24996/ijs.2020.61.7.30.

Jung H-K, Choi G-S. Improved YOLOv5: efficient object detection using drone images under various conditions. Appl Sci. 2022;12:7255. https://doi.org/10.3390/app12147255.

Saoudi O, Singh I, Mahyar H. Autonomous vehicles: open-source technologies, considerations, and development. Adv Artif Intell Mach Learn. 2023;03:669–92. https://doi.org/10.54364/AAIML.2023.1145.

Sonko S, et al. A comprehensive review of embedded systems in autonomous vehicles: trends, challenges, and future directions. World J Adv Res Rev. 2024;21:2009–20. https://doi.org/10.30574/wjarr.2024.21.1.0258.

Ibrahim M, Safa N. Detecting message spoofing attacks on smart vehicles. Comput Fraud Sec. 2023. https://doi.org/10.12968/S1361-3723(23)70054-7.

Hossain MM, Swarna RA, Mostafiz R, Shaha P, Pinky LY, Rahman MM, Wahidur Rahman Md, Selim Hossain Md, Elias Hossain Md, Iqbal S. Analysis of the performance of feature optimization techniques for the diagnosis of machine learning-based chronic kidney disease. Mach Learn Appl. 2022. https://doi.org/10.1016/j.mlwa.2022.100330.

Wang C, Zhang Y, Zhou Y, et al. Automatic detection of indoor occupancy based on improved YOLOv5 model. Neural Comput & Applic. 2023;35:2575–99. https://doi.org/10.1007/s00521-022-07730-3.

Nepal U, Eslamiat H. Comparing YOLOv3, YOLOv4 and YOLOv5 for autonomous landing spot detection in faulty UAVs. Sensors. 2022;22:464.

Kasper-Eulaers M, et al. Short communication: detecting heavy goods vehicles in rest areas in winter conditions using YOLOv5. Algorithms. 2021;14:114. https://doi.org/10.3390/a14040114.

Malta A, Mendes M, Farinha T. Augmented reality maintenance assistant using YOLOv5. Appl Sci. 2021;11(11):4758.

Wan J, Chen B, Yu Y. Polyp detection from colorectum images by using attentive YOLOv5. Diagnostics. 2021;11(12):2264.

Yao J, Qi J, Zhang J, Shao H, Yang J, Li X. A real-time detection algorithm for kiwifruit defects based on YOLOv5. Electronics. 2021;10(14):1711.

Jia W, Xu S, Liang Z, Zhao Y, Min H, Li S, Yu Y. Real-time automatic helmet detection of motorcyclists in urban traffic using improved YOLOv5 detector. IET Imag Proc. 2021;15(14):3623–37.

Patel K, Bhatt C, Mazzeo PL. Improved ship detection algorithm from satellite images using YOLOv7 and graph neural network. Algorithms. 2022;15(12):473.

Wang Y, Hao Z, Zuo F, Pan S. A fabric defect detection system based improved YOLOv5 detector. J Phys Conf Ser. 2021;2010(1):012191.

Wang Y, Wang H, Xin Z. Efficient detection model of steel strip surface defects based on YOLO-V7. IEEE Access. 2022;10:133936–44.

Hussain M, Al-Aqrabi H, Munawar M, Hill R, Alsboui T. Domain feature mapping with YOLOv7 for automated edge-based pallet racking inspections. Sensors. 2022;22(18):6927.

Hossain MS, Rahman MH, Rahman MS, Hosen ASMS, Seo C, Cho GH. Intellectual property theft protection in IoT based precision agriculture using SDN. Electronics. 2021. https://doi.org/10.3390/electronics10161987.

Ultralytics, YOLOv5, 2020. https://github.com/ultralytics/yolov5. Accessed 15 Mar 2024.

Grekov AN, et al. Application of the YOLOv5 model for the detection of microobjects in the marine environment. arXiv. 2022. https://doi.org/10.48550/arXiv.2211.15218.

Lin TY, et al. Focal loss for dense object detection. In: Proceedings of the IEEE international conference on computer vision. 2017;2980–88.

Ultralytics LLC. YOLOv5: object detection with efficientdet backbone. GitHub. 2020. https://github.com/ultralytics/yolov5#introduction.

Bochkovskiy A, Wang CY, Liao HYL. YOLOv5: improved real-time object detection. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops. 2020;1450–59.

Pramanik A, Sarkar S, Maiti J. A real-time video surveillance system for traffic pre-events detection. Accid Anal Prev. 2021. https://doi.org/10.1016/j.aap.2021.106019.

Zhou S, Bi Y, Wei X, et al. Automated detection and classification of spilled loads on freeways based on improved YOLO network. Mach Vis Appl. 2021;32:44.

Neupane D, Seok J. A review on deep learning-based approaches for automatic sonar target recognition. Electronics. 2020;9:1972. https://doi.org/10.3390/electronics9111972.

Rahman R, Azad Z, Hasan MB. Densely-populated traffic detection using YOLOv5 and non-maximum suppression ensembling. In: Arefin MS, Kaiser MS, Bandyopadhyay A, Ahad MAR, Ray K (Eds) Proceedings of the international conference on big data, IoT, and machine learning: BIM 2021. Springer: Singapore. 2022;567–78. https://doi.org/10.1007/978-981-16-6636-0_43.

Li S, et al. YOLO-FIRI: improved YOLOv5 for infrared image object detection. IEEE Access. 2021;9:3120870.

Shen L, You L, Peng B, Zhang C. Group multi-scale attention pyramid network for traffic sign detection. Accid Anal Prev. 2021. https://doi.org/10.1016/j.aap.2021.106019.

Girshick R. Fast R-CNN. In: 2015 IEEE International Conference on Computer Vision (ICCV). 2015;1440–8. https://doi.org/10.1109/ICCV.2015.169.

Ren S, He K, Girshick R, Sun J. Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Mach Intell. 2017;39(6):1137–49. https://doi.org/10.1109/TPAMI.2016.2577031.

Redmon J, et al. You only look once: unified, real-time object detection. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2016;779–88. https://doi.org/10.1109/CVPR.2016.91.

Zahan A, Hossain MS, Rahman Z, Shezan SA. Smart home IoT use case with elliptic curve based digital signature: an evaluation on security and performance analysis. Int J Adv Technol Eng Exploration. 2020;7(62):11–9. https://doi.org/10.19101/IJATEE.2019.650070.

Acknowledgements

The authors extend their sincere appreciation to the Department of Electronics and Communication Engineering, Hajee Mohammad Danesh Science and Technology University (HSTU) Bangladesh for their consistent encouragement and support in the realm of academic research.

Funding

The authors have not disclosed any funding.

Author information

Contributions

MMR and MDH contributed to the study conception, data collection, methodology, results, and formal analysis. Investigation, supervision, result data, and analysis were performed by MMH and MSH. The first draft of the manuscript was written by MMR, MSH, and MDH, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

No experiments involving human subjects were conducted in this research.

Competing interests

The authors declare that there is no conflict of interest in this research. Moreover, no funds were received for this study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rana, M.M., Hossain, M.S., Hossain, M.M. et al. Improved vehicle detection: unveiling the potential of modified YOLOv5. Discov Appl Sci 6, 332 (2024). https://doi.org/10.1007/s42452-024-06029-3
