Abstract

One major challenge in event detection is the scarcity of annotated data. In few-shot settings, making effective use of label semantic information can mitigate the impact of limited samples on model training. This paper therefore proposes SALM-Net (Semantic Attention Labeling & Matching Network). First, a Label Semantic Encoding (LSE) module is designed to obtain semantic encodings of labels. Next, a contrastive learning fine-tuning module fine-tunes the label semantic encodings produced by the LSE module. Finally, an attention module matches text encodings with the label semantic encodings of events and arguments to produce the event detection results. Experiments on the publicly available ACE2005 dataset, including comparisons with state-of-the-art algorithms, validate the effectiveness of the proposed algorithm.

Article Highlights

1. Innovative event detection: Introduces SALM-Net, an event detection model that improves accuracy by exploiting the semantic meaning of labels rather than relying only on surface-level features.

2. Semantic encoding advancement: Uses Label Semantic Encoding (LSE) to represent labels through their definitions, enhancing the model's ability to interpret and classify events accurately across contexts.

3. Enhanced learning with limited data: Demonstrates effective learning from limited samples, using contrastive learning and attention mechanisms to improve model training and event detection performance.


1 Introduction

Event detection, as a crucial research area in natural language processing, aims to accurately identify and categorize specific events from text [1]. Event detection tasks are essential for information extraction [2], knowledge graph construction [3], text summarization, and many other applications. Despite extensive research efforts in recent years, this task still faces numerous challenges [4, 5]. Firstly, the semantic complexity of events and the diversity of text require algorithms with high generalization capabilities to capture various possible expressions. Secondly, many events are semantically related or similar, which increases the difficulty of the classification task, as traditional representations such as one-hot encoding cannot capture these subtle semantic differences [6]. Furthermore, since events may be intertwined with multiple entities and relationships, accurate event identification and classification require a deep understanding of context [7].

To the best of our knowledge, existing event detection algorithms have not adequately leveraged the semantic information contained in event labels [8]. In previous algorithms, labels are typically converted into one-hot vectors during classification and used as supervisory signals when computing the loss. In an N-class problem, the cosine similarity between the one-hot vectors of any two distinct labels is zero. For instance, in a classification task with the three labels "cat," "dog," and "car," every pair of one-hot vectors has a cosine similarity of zero. Yet although "cat" and "dog" are different labels, both belong to the animal category, so the similarity between "cat" and "dog" should be higher than that between "cat" and "car."
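The argument can be checked numerically with a short Python sketch. The one-hot vectors follow directly from the text; the dense vectors are hypothetical toy values chosen by hand to mimic embeddings in which "cat" and "dog" share an animal dimension, not outputs of any trained model.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# One-hot label vectors for the 3-class example in the text.
one_hot = {"cat": [1, 0, 0], "dog": [0, 1, 0], "car": [0, 0, 1]}

# Hypothetical dense label embeddings (hand-picked toy values, roughly
# [animal, pet, vehicle]); not produced by any real encoder.
dense = {"cat": [0.9, 0.8, 0.1], "dog": [0.8, 0.9, 0.1], "car": [0.1, 0.0, 0.9]}

one_hot_cat_dog = cosine(one_hot["cat"], one_hot["dog"])
one_hot_cat_car = cosine(one_hot["cat"], one_hot["car"])
dense_cat_dog = cosine(dense["cat"], dense["dog"])
dense_cat_car = cosine(dense["cat"], dense["car"])

print(one_hot_cat_dog, one_hot_cat_car)  # 0.0 0.0: one-hot cannot rank the pairs
print(dense_cat_dog > dense_cat_car)     # True: dense vectors can
```

With one-hot vectors every distinct pair is equally dissimilar, whereas dense vectors can encode that "cat" is closer to "dog" than to "car".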

Models that treat labels as one-hot vectors encode only the event sentences, not the event labels themselves [9]. In this paper, such a model is referred to as a single-encoding classification model, as shown in Fig. 1a. This approach discards the semantic information carried by the labels.

In information extraction, question-answering-based methods are a common approach [10, 11]. In named entity recognition, for example, Li et al. [12] pair each entity label with a natural language question that introduces prior information. When extracting "location" entities, the question might be "find the location in the text." The question is concatenated with the sentence to be processed and fed into an encoder, whose output is then used to predict the boundaries of the named entities. Question-answering-based extraction works well because the questions already carry prior semantic information [13], an idea closely related to recent prompt learning [14]. Besides introducing prior knowledge, the approach handles one label at a time, since each question encodes prior knowledge for a single label; this avoids boundary overlap between the results for different labels [15].

In information retrieval, for instance in search engines, a query retrieves its target web pages [16] because those pages closely match the query semantically [17]. Semantic matching is typically computed as the similarity between the vector representations of the query and the target page [18]. In this paper, a model that matches two such vectors is called a dual-encoding matching model, as illustrated in Fig. 1b.

In the context of event detection tasks, this paper combines ideas from information extraction methods based on question-answering and semantic similarity computation techniques from the information retrieval domain. Firstly, a natural language definition for an event label is introduced as prior knowledge, and then, this definition is used to obtain the semantic information of the event label. Subsequently, this semantic information is matched with the encoding of the event sentence to determine the label for the event sentence based on semantic similarity. To obtain more accurate label semantic information, this paper employs contrastive learning to optimize the semantic representation of event labels.

Specifically, this paper makes the following contributions:

1) Replacing one-hot label vectors with low-dimensional dense vectors. To capture the semantic information of labels, a pre-trained language model encodes the natural language definitions of the labels.

2) Using contrastive learning to pull the semantic encoding of each event label closer, in the vector space, to the encodings of event sentences with the same label, so that concrete instances improve the representational quality of the label semantic encodings.

3) Employing multi-layer attention for semantic matching among events, arguments, and event sentences, thereby improving event detection performance.

4) Modeling event detection as a multi-class, multi-label classification of event sentences without trigger word extraction. A separate fully connected classifier is defined for each event label, which handles sentences containing multiple events and mitigates the poor classifier convergence caused by sample imbalance.

2 Related works

Event detection is one of the classic tasks in natural language processing. Its goal is to identify the specific types of events mentioned in a given text. Traditional event detection algorithms follow the supervised learning paradigm and require varying amounts of annotated data. Based on their implementation, current event detection algorithms fall into three main types: pattern matching-based methods, machine learning-based methods, and deep learning-based methods.

Pattern matching-based methods were the first proposed for event detection and date back to Riloff's work in 1993 [34]. The AutoSlog system proposed in that work uses a domain-specific trigger word dictionary to detect potential events; the dictionary is constructed automatically from linguistic templates based on part-of-speech tagging. Such methods developed rapidly in specific domains such as biomedicine [35] and finance [36]. However, designing and maintaining matching templates requires expert intervention, and because templates depend heavily on domain-specific textual expressions, the generality of these methods is greatly limited [37].

To alleviate reliance on predefined templates, many scholars have attempted to use machine learning methods for event detection tasks [38, 39]. The basic approach of machine learning-based event detection is to extract features from texts and then train various classifiers using the annotated information of samples. Compared to pattern matching-based methods, the advantage of machine learning lies in reducing the workload of designing and constructing templates, as well as offering better generalizability and universality. However, the classic machine learning models used are not very effective in fitting various complex nonlinear relationships and are also quite sensitive to feature selection.

With deep learning achieving dominant performance in many fields [40], deep learning-based event detection methods have been proposed in succession [41,42,43]. Their main characteristics are higher-dimensional word embeddings and deeper network structures, which compensate for the limited nonlinear fitting capability of classic machine learning models and reduce the dependency on feature engineering. The DMCNN model [44] uses convolutional neural networks to extract lexical-level and sentence-level features automatically. To better capture the complex relationships between local and global context in documents, Zhao [45] and Liu [47] introduced the DMB-PN model in 2020 for few-shot event detection, combining dynamic memory networks with prototypical networks for trigger word identification and event type classification. Existing methods often focus on feature extraction and improved learning algorithms but tend to overlook the relationships between samples. Furthermore, current few-shot event detection methods still rely on trigger word extraction, which may degrade performance on complex events.

In response to these issues, this paper proposes a new event detection framework, combining question-answering-based information extraction methods with semantic similarity calculation techniques from the field of information retrieval. Our method optimizes the semantic representation of event labels by acquiring semantic information of event labels through natural language definitions and further refining these semantic representations using contrastive learning.

3 Methods

3.1 Overall algorithm design

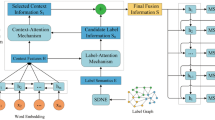

In this study, we propose an event detection algorithm based on label semantic embedding that addresses the limitations of traditional event detection methods in few-shot learning and complex semantic understanding. The algorithm rests on three theoretical foundations: deep semantic understanding, contrastive learning, and attention mechanisms. First, the precise identification and classification of events depend not only on surface-level lexical matching but also on a deep understanding of the underlying semantic structure of language. The algorithm therefore employs a BERT encoder to extract deep semantic information from text, enabling the model to capture subtle nuances and rich contextual information more accurately. Second, pulling semantically similar samples closer in the vector space while pushing dissimilar samples apart can significantly enhance the model's ability to capture semantic information from a limited number of samples. Based on this observation, we incorporate contrastive learning to optimize the semantic representation of event labels, improving the accuracy and robustness of event type determination. Finally, we integrate multi-level attention mechanisms to model the complex relationships among events, arguments, and sentences at a finer granularity. Attention makes the algorithm more flexible and allows the model to allocate weights dynamically across semantic levels, so that it focuses more effectively on the key information for event detection.

Let the input sentence be \(S = [w_{1} ,w_{2} , \ldots ,w_{n} ]\). The main steps of the event detection algorithm based on label semantic embedding are as follows:

1. Encode the text to be extracted with a BERT encoder to obtain a context-aware encoding.

2. Feed the definitions of named entity labels, event labels, and argument labels to the LSE module to obtain a semantic encoding for each label.

3. Feed the text encoding and the event label semantic encodings into the contrastive learning fine-tuning module, which fine-tunes them with SupConLoss to align the text encoding with the event label semantic encoding in the semantic space.

4. Add the entity label semantic encoding element-wise to the text encoding, then fuse the result with the argument label and event label semantic encodings through multi-layer attention weighting, yielding event label semantic encodings that contain both contextual and argument semantic information.

5. Feed the event label semantic encodings to multiple independent binary classifiers to obtain the event detection results.

The overall architecture of the algorithm is depicted in Fig. 2. The algorithm comprises the following main modules: the label semantic encoding module, the contrastive learning fine-tuning module, the attention module, and the multi-binary classification module.

3.2 Label semantic encoding module

The objective of the label semantic encoding module is to obtain low-dimensional dense vectors that encapsulate semantic information for a given label. These semantic label vectors, which contain semantic information, can be used for semantic similarity matching with other label vectors or sentence vectors, resulting in similarity scores that support downstream calculations of attention scores and classification. The specific workflow of this module is as follows:

Suppose we need the semantic encoding of label A, which may be an event, entity, or argument label. We start from the natural language definition of label A, which should comprehensively describe the characteristics of that label. For example, a possible definition for the label "car" is "a machine used for transportation." We denote this natural language definition as \(Def_{A}\).

To obtain the semantic information from a given short natural language text, we employ BERT [

In the original BERT model, the [CLS] vector is intended for classification tasks. However, recent research has shown that the [CLS] vector does not yield satisfactory sentence representations [22]. In this paper, we therefore use the average pooling representation (RepA) of BERT's last layer as the representation of the entire sentence.
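A minimal sketch of masked average pooling in plain Python, assuming the last-layer token vectors and a 0/1 attention mask are already available as lists. In practice these would be tensors from a BERT implementation; whether the [CLS] and [SEP] positions enter the average is our assumption (here they do, as long as their mask entries are 1).

```python
def mean_pool(token_vectors, attention_mask):
    """Average last-layer token vectors, skipping padding positions.

    token_vectors:  n vectors (lists of d floats), e.g. BERT's last layer
    attention_mask: n ints, 1 for real tokens and 0 for padding
    Returns a single d-dimensional sentence (or label-definition) vector.
    """
    dim = len(token_vectors[0])
    total = [0.0] * dim
    count = 0
    for vec, keep in zip(token_vectors, attention_mask):
        if keep:  # only real tokens contribute to the average
            for j in range(dim):
                total[j] += vec[j]
            count += 1
    if count == 0:
        raise ValueError("attention_mask selects no tokens")
    return [t / count for t in total]


# Toy example: two real tokens and one padding token (values are made up).
pooled = mean_pool([[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]], [1, 1, 0])
print(pooled)  # [2.0, 3.0]
```

Masking matters because padded batches would otherwise mix meaningless padding vectors into the label or sentence representation.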

Hence, for label A, the overall process of obtaining label semantic embeddings, as described in Eqs. (1) to (3), can be summarized as follows:
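The original Eqs. (1) to (3) did not survive extraction. One plausible reconstruction, consistent with the input format [CLS] + Def_A + [SEP] and the average pooling described in Sect. 4.1.2, is given below; the symbols \(m\), \(H_{A}\), and \(e_{A}\) are our own notation, and including the [CLS] and [SEP] positions in the average is an assumption.

```latex
Def_{A} = [d_{1}, d_{2}, \ldots, d_{m}]
H_{A} = \mathrm{BERT}\left([\mathrm{CLS}], d_{1}, \ldots, d_{m}, [\mathrm{SEP}]\right) \in R^{(m + 2) * d_{emb}}
e_{A} = \frac{1}{m + 2} \sum_{j = 1}^{m + 2} H_{A}[j] \in R^{d_{emb}}
```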

For event labels, named entity labels, and argument labels, this section provides example definitions. These definitions are sourced from the standard definitions in the data annotation manual, as shown in Table 1.

3.3 Contrastive learning fine-tuning module

The semantic encoding of a given event label should capture the semantics of that label. This information should come not only from the label's natural language definition but also from the many event sentences of that type in the corpus. In the final classification stage, the label semantic encoding is matched against the sentence encoding, so if the encodings of sentences of the same type lie close together in the spherical space, cosine similarity yields a better match.

Contrastive learning can bring pairs of similar positive samples closer to each other in vector space while pushing pairs of dissimilar samples farther apart. This property helps the label semantic encoding learn semantics from the corpus. The primary role of the contrastive learning fine-tuning module is to align the label semantic encoding with the encoding of similar event sentences in spherical space using contrastive learning methods. The structure of the contrastive learning fine-tuning module is illustrated in Fig. 3.

3.4 Label definition encoding

In this section, we opt for the same BERT encoder as used in the LSE module to ensure consistency in the semantic space.

Given the input sentence \(S = [w_{1} ,w_{2} , \ldots ,w_{n} ]\), we first obtain its encoding from the last layer of the BERT encoder.

To obtain a global representation of the event sentence, we apply global average pooling over the last-layer output of the BERT encoder, consistent with Sect. 3.2:

After the average pooling, we obtain the global embedding \(x^{avg}\) for the event sentence. Semantic encodings for event labels are obtained using the LSE module.

3.5 Named entity label information

Like event labels, named entity labels are encoded with the label semantic encoding module. The ACE2005 dataset already provides named entity annotations; the entity information of a sequence is denoted as \(EntityType\), where \(e_{i}\) is the named entity label of the i-th token. Using the named entity definitions table in Sect. 3.2, we obtain the sequence of entity definitions \(Entity_{seq}\), where \(entity_{i}\) is the definition of the i-th entity. \(Entity_{seq}\) is then fed to the LSE module to obtain the entity encodings of the sequence, denoted as \(X_{entity}\).

Because both types of vectors are produced by the same text encoder, we assume they already lie in the same semantic space and fuse them by direct element-wise addition:
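The fusion step can be sketched as follows, assuming the LSE module has already produced one vector per entity label (stored here in a dictionary keyed by entity type) and that tokens outside any entity, or with an unknown type, receive a zero vector. The function name and the zero-vector fallback are hypothetical choices, not taken from the paper.

```python
def fuse_entity_semantics(token_encodings, token_entity_types, entity_label_encodings):
    """Element-wise addition of entity-label semantics to each token encoding.

    token_encodings:        n vectors from the BERT sentence encoder
    token_entity_types:     n entity-type strings, one per token (e.g. "PER", "O")
    entity_label_encodings: dict mapping entity type -> LSE vector (same dimension)
    """
    dim = len(token_encodings[0])
    zero = [0.0] * dim
    fused = []
    for x_i, e_i in zip(token_encodings, token_entity_types):
        # Assumption: types without an LSE encoding contribute a zero vector.
        ent = entity_label_encodings.get(e_i, zero)
        fused.append([a + b for a, b in zip(x_i, ent)])
    return fused


fused = fuse_entity_semantics(
    [[1.0, 1.0], [2.0, 2.0]],      # toy token encodings
    ["PER", "O"],                  # toy entity types
    {"PER": [0.5, -0.5]},          # toy LSE encoding for PER
)
print(fused)  # [[1.5, 0.5], [2.0, 2.0]]
```

Because the LSE vector depends only on the label, it can be computed once per entity type and reused for every token of that type.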

3.6 Dual-layer attention

Once the sequence representation incorporates entity semantics, the sequence information must be aggregated or filtered. Previous work has often used graph neural networks for this aggregation. For instance, the JMEE model [23] fuses additional syntactic information into trigger word representations to improve classification performance. However, this approach requires the syntactic dependency relations of each sentence, which must be produced by third-party parsing tools. This introduces additional annotations, and errors in the output of the dependency parser may propagate to the event detector.

Attention can be viewed as a graph attention network [24] over a fully connected graph, in which every word is connected to every other word through soft, learned edge weights. Attention therefore provides the aggregation capacity of a graph neural network without requiring an externally parsed dependency tree, which makes it the more reasonable choice here.

In closed-domain event detection, each event type has a fixed set of argument roles, and arguments are a subset of the entities. We therefore first use the semantic embeddings of argument labels to apply attention weighting to the sequence representation. This matches argument semantics against the entity semantics contained in the sequence and aggregates entity information into the argument label semantic encodings. The event label semantic encodings then apply a second round of attention weighting to the argument label semantic encodings. The dual-layer attention mechanism is illustrated in Fig. 4.

First, we use the LSE module to obtain semantic encodings for all argument labels, as given by the following equation, where q is the number of argument labels and \(arg_{i}\) is the definition of the i-th argument label.

Next, we implement the first attention layer by defining the parameter matrices \(W_{1}^{Q} \in R^{{d_{emb} * d_{hidden} }}\), \(W_{1}^{K} \in R^{{d_{emb} * d_{hidden} }}\), \(W_{1}^{V} \in R^{{d_{emb} * d_{value} }}\), and \(W_{1}^{O} \in R^{{d_{value} * d_{emb} }}\), with \(Arg \in R^{{(q + 1) * d_{emb} }}\). The argument label semantic encodings serve as queries, while the sequence encoding serves as keys and values. The attention of a single head is computed as follows. After attention-weighted summation, we obtain the argument label semantic encoding \(Ar\hat{g}\), which combines information from the sequence and the entities:
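A plain-Python sketch of one attention head, assuming standard scaled dot-product attention with the parameter shapes listed above; scaling by \(\sqrt{d_{hidden}}\) is our assumption, since the original formula was not preserved. The same function serves both layers: the first uses Arg as queries and the entity-fused sequence encoding as keys and values, and the second uses Event as queries and \(Ar\hat{g}\) as keys and values.

```python
import math


def matmul(a, b):
    """(r x k) @ (k x c) for lists of lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_head(queries, keys_values, w_q, w_k, w_v, w_o):
    """Single-head scaled dot-product attention.

    queries:     (q+1) x d_emb, e.g. argument-label encodings Arg
    keys_values: n x d_emb, e.g. the entity-fused sequence encoding
    w_q, w_k:    d_emb x d_hidden
    w_v:         d_emb x d_value
    w_o:         d_value x d_emb
    Returns a (q+1) x d_emb matrix, one updated vector per query label.
    """
    q = matmul(queries, w_q)
    k = matmul(keys_values, w_k)
    v = matmul(keys_values, w_v)
    scale = math.sqrt(len(w_q[0]))
    scores = matmul(q, transpose(k))
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(matmul(weights, v), w_o)


# Toy usage with d_emb = d_hidden = d_value = 2 and identity projections.
identity = [[1.0, 0.0], [0.0, 1.0]]
arg_labels = [[1.0, 0.0]]            # one hypothetical argument-label encoding
sequence = [[1.0, 0.0], [0.0, 1.0]]  # two hypothetical token encodings
arg_hat = attention_head(arg_labels, sequence, identity, identity, identity, identity)
print(arg_hat)  # the token most similar to the query gets the larger weight
```

Stacking two calls (argument labels over the sequence, then event labels over the result) gives the dual-layer structure; a multi-head version would run several heads with separate projections and concatenate their outputs.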

The second attention layer is implemented in essentially the same way as the first. First, the parameter matrices \(W_{2}^{Q} \in R^{{d_{emb} * d_{hidden} }}\), \(W_{2}^{K} \in R^{{d_{emb} * d_{hidden} }}\), \(W_{2}^{V} \in R^{{d_{emb} * d_{value} }}\), and \(W_{2}^{O} \in R^{{d_{value} * d_{emb} }}\) are defined. Then the semantic encodings of all event labels, denoted as Event, are obtained through the LSE module.

where p is the number of event labels. Event serves as the query, while \(Ar\hat{g}\) serves as the keys and values for attention computation. The attention is computed as follows. The result is the event label semantic matrix \(schema \in R^{{(p + 1) * d_{emb} }}\), which integrates information from the sequence, entity labels, and argument labels and has the same shape as Event.
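The attention formulas for both layers did not survive extraction. Assuming standard scaled dot-product attention as in [25], they can be written as follows, where \(\tilde{X}\) (our notation) denotes the entity-fused sequence encoding:

```latex
Ar\hat{g} = \mathrm{softmax}\left(\frac{(Arg\, W_{1}^{Q})(\tilde{X} W_{1}^{K})^{\top}}{\sqrt{d_{hidden}}}\right)(\tilde{X} W_{1}^{V})\, W_{1}^{O} \in R^{(q + 1) * d_{emb}}
schema = \mathrm{softmax}\left(\frac{(Event\, W_{2}^{Q})(Ar\hat{g}\, W_{2}^{K})^{\top}}{\sqrt{d_{hidden}}}\right)(Ar\hat{g}\, W_{2}^{V})\, W_{2}^{O} \in R^{(p + 1) * d_{emb}}
```

The shapes check out: the softmax term is \((p + 1) * (q + 1)\) in the second layer, so multiplying by the \((q + 1) * d_{value}\) values and the \(d_{value} * d_{emb}\) output projection returns one \(d_{emb}\)-dimensional vector per event label.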

The attention described above is for a single head and extends directly to multi-head attention, following the Transformer [25]. We further add X[0] and Event to schema through residual connections and pass the result to the multi-binary classification module.

3.7 Multi-binary classification module

An event sentence may contain multiple events, so the conventional multi-class cross-entropy loss is unsuitable for event detection. Using a Sigmoid activation as the model's final layer and classifying with a threshold is also problematic: choosing the threshold requires extensive experimentation, and a chosen threshold adapts poorly when the training data change, which limits its use in the rapidly evolving field of NLP. In addition, because event labels are extremely imbalanced, a single classification network may not train minority classes effectively. A common approach to multi-class, multi-label problems is to use an independent binary classification network for each label [26].

The model therefore employs a binary classification network for each event label. Each network determines whether the current event sentence contains its event label, outputting 1 if the label is present and 0 otherwise. Each binary classification network is optimized with binary cross-entropy loss, so that every event label is decoded independently without interfering with the others.
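The per-label decoding can be sketched as below. For illustration only, we assume that classifier i scores the i-th row of schema with a weight vector and a scalar bias, and that a non-negative logit (a sigmoid probability of at least 0.5) means the label is present. The paper specifies its own parameter shapes for each classifier, which this simplified sketch does not reproduce; the function name is hypothetical.

```python
def decode_events(schema_rows, classifiers, label_names):
    """Run one independent binary classifier per event label.

    schema_rows: one fused semantic vector per event label
    classifiers: one (w, b) pair per label; w is a weight vector, b a scalar bias
    label_names: event label names, aligned with schema_rows
    Returns the labels whose classifier outputs 1.
    """
    predicted = []
    for row, (w, b), name in zip(schema_rows, classifiers, label_names):
        logit = sum(wi * xi for wi, xi in zip(w, row)) + b
        # logit >= 0 is equivalent to sigmoid(logit) >= 0.5
        if logit >= 0.0:
            predicted.append(name)
    return predicted


labels = ["Attack", "Die"]
rows = [[1.0, 0.0], [0.0, 1.0]]                   # toy schema rows
heads = [([2.0, 0.0], -1.0), ([0.0, -1.0], 0.0)]  # toy per-label parameters
print(decode_events(rows, heads, labels))  # ['Attack']
```

Because each label has its own parameters and its own decision, a sentence can yield zero, one, or several event labels.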

The loss of each label can be weighted according to how frequently the corresponding event occurs. The loss is computed as follows.

The true label for each type of event contained in the event sentence is denoted as L, and the binary classification network parameters for the i-th label are represented as \(w_{i} \in R^{{d_{emb} * (p + 1)}}\) and \(b_{i} \in R^{p + 1}\).

3.8 Loss function

Because the ACE2005 dataset is labeled, this section adopts SupConLoss [27] as the contrastive learning loss to exploit the label information. SupConLoss is a supervised contrastive loss that maximizes the log-probability of the similarity between positive pairs, where similarity between vectors is measured by cosine similarity. The original SupConLoss is defined as follows:
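For reference, the supervised contrastive loss as defined in [27] takes the form below, where \(I\) is the set of samples in the batch and \(A(i) = I \setminus \{i\}\); the representations \(z\) are L2-normalized, so each dot product equals a cosine similarity.

```latex
\mathcal{L}^{sup} = \sum_{i \in I} \frac{-1}{|P(i)|} \sum_{p \in P(i)} \log \frac{\exp(z_{i} \cdot z_{p} / \tau)}{\sum_{a \in A(i)} \exp(z_{i} \cdot z_{a} / \tau)}
```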

where i indexes an anchor sample, P(i) is the set of all samples in the same batch that share the class of i, \(z_{i}\) is the representation of the anchor, \(z_{p}\) is the representation of a positive sample of the same class, and \(z_{a}\) is the representation of any other sample in the batch. τ is the temperature coefficient; a smaller τ sharpens the similarity distribution and places more weight on hard samples, making them easier to distinguish.

As shown in Fig. 5, positive and negative samples are selected within each batch: two samples in the same batch form a positive pair if they share the same label, and all other pairs in the batch are treated as negative pairs for loss calculation. At the beginning of each batch, the semantic encoding of each corresponding event label is inserted. Each label semantic encoding thus acts as an instance of its label and participates in positive and negative pairs with the other instances in the same batch.
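The batch construction and loss can be sketched in plain Python as follows. The sketch assumes each sentence carries a single event label, prepends one LSE encoding per label present in the batch, and averages the loss over anchors that have at least one positive rather than summing over all anchors. The function names are hypothetical, and the default temperature matches the value of 0.08 reported in Sect. 4.1.2.

```python
import math


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def batch_with_label_anchors(sentence_encodings, sentence_labels, label_encodings):
    """Prepend the LSE encoding of every event label present in the batch."""
    present = sorted(set(sentence_labels))
    features = [label_encodings[lab] for lab in present] + list(sentence_encodings)
    labels = present + list(sentence_labels)
    return features, labels


def supcon_loss(features, labels, tau=0.08):
    """Supervised contrastive loss averaged over anchors with >= 1 positive."""
    z = [l2_normalize(f) for f in features]
    n = len(z)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [p for p in range(n) if p != i and labels[p] == labels[i]]
        if not positives:
            continue
        # cosine similarity / tau to every other sample a in A(i)
        sims = {a: sum(x * y for x, y in zip(z[i], z[a])) / tau for a in range(n) if a != i}
        peak = max(sims.values())
        log_denom = peak + math.log(sum(math.exp(s - peak) for s in sims.values()))
        total += -sum(sims[p] - log_denom for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


label_enc = {"Attack": [1.0, 0.0], "Die": [0.0, 1.0]}  # toy LSE encodings
sentences = [[0.9, 0.1], [0.1, 0.9]]                    # toy sentence encodings
feats, labs = batch_with_label_anchors(sentences, ["Attack", "Die"], label_enc)
aligned = supcon_loss(feats, labs)
feats_bad, labs_bad = batch_with_label_anchors(sentences, ["Die", "Attack"], label_enc)
misaligned = supcon_loss(feats_bad, labs_bad)
print(aligned < misaligned)  # True: sentences near their own label give lower loss
```

Inserting the label encodings into the batch is what lets the loss pull each label vector toward the sentences of its class, rather than only pulling sentences toward each other.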

Applying SupConLoss to the event extraction task gives the following loss:

In addition, class imbalance is a significant issue in deep learning: on datasets with skewed distributions, models may overfit or underfit. A common remedy is to rebalance the labels through oversampling and undersampling so that each label has roughly the same number of samples. However, the ACE2005 event extraction dataset has a severe long-tail distribution, and some labels have so few training samples that oversampling would only produce many duplicate sentences, which adds little information. We therefore constrain the loss of each label at the loss function level.

In this paper, we set a hyperparameter α (α < 1) for labels with much larger data volumes, such as ATTACK, TRANSPORT, DIE, and MEET (denoted as set A), to limit the influence of their abundant data. Likewise, we set another hyperparameter β (β > 1) for labels with very little data, such as PARDON, EXTRADITE, and ACQUIT (denoted as set B), to counter the resulting underfitting.

The loss function uses binary cross-entropy:
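A sketch of the weighted binary cross-entropy, assuming the per-label losses are summed and using the numerically stable logits form of BCE. The values alpha = 0.5 and beta = 2.0 are placeholders, since the weights used in the paper are not reported in this section; the function name is hypothetical.

```python
import math


def weighted_bce(logits, targets, label_names, set_a, set_b, alpha=0.5, beta=2.0):
    """Sum of per-label binary cross-entropy terms with frequency-based weights.

    Labels in set_a (frequent, e.g. ATTACK) are scaled by alpha < 1,
    labels in set_b (rare, e.g. PARDON) by beta > 1, all others by 1.
    alpha=0.5 and beta=2.0 are placeholder values, not the paper's settings.
    """
    total = 0.0
    for z, y, name in zip(logits, targets, label_names):
        # stable BCE with logits: max(z, 0) - z*y + log(1 + exp(-|z|))
        loss = max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
        weight = alpha if name in set_a else beta if name in set_b else 1.0
        total += weight * loss
    return total


set_a = {"ATTACK", "TRANSPORT", "DIE", "MEET"}
set_b = {"PARDON", "EXTRADITE", "ACQUIT"}
# With a logit of 0 the unweighted BCE is log 2 for either target value.
print(weighted_bce([0.0, 0.0, 0.0], [1, 1, 0], ["ATTACK", "PARDON", "ELECT"], set_a, set_b))
```

Down-weighting the head labels and up-weighting the tail labels changes how strongly each independent classifier is updated, without duplicating any training sentences.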

The overall loss function for the event detection algorithm is defined as:

4 Experiment and result analysis

In this section, we conduct experiments and analyze the results of the event detection algorithm based on label semantic encoding proposed in the previous sections. We will first introduce the experimental setup, then present the experimental results, analyze the results, and finally, conduct an ablation analysis of the proposed improvements.

4.1 Experimental setup

4.1.1 Dataset

We used the ACE2005 English event extraction dataset [28] for model training and validation. The dataset consists of 599 annotated documents with 34 event labels in total: 33 event categories and one empty class. 529 documents are used as the training set and 40 documents as the test set.

4.1.2 Hyperparameter settings

The hyperparameters of the proposed model are listed in Table 2. Based on experimental comparison, the best hyperparameter combination was: BERT learning rate = 2e-5, linear layer learning rate = 1e-4, batch size = 48, hidden dimension = 128, optimizer = AdamW, scheduler = LinearLR.

The experiments were conducted using Python 3.7 and PyTorch 1.6.0 on a single NVIDIA RTX 2080 Ti GPU, running for 50 epochs, and the best result was reported.

In our implementation, the BERT encoder is the main architecture for extracting deep semantic information from label definitions. We use bert-base-uncased, which has 12 Transformer layers, each with 768 hidden units and 12 attention heads. For each label definition, we construct the input sequence [CLS] + Def_A + [SEP], where Def_A is the tokenized label definition. The BERT encoder outputs a feature representation of shape (batch_size, sequence_length, 768). For pooling, we do not use the output of the [CLS] token; instead, we average the last-layer outputs over the sequence-length dimension to obtain a global representation of the input text, of shape (batch_size, 768). This average-pooled vector produced by the LSE module serves as the semantic encoding of the label. In SupConLoss, the temperature parameter, which scales the similarities between vectors and thus controls the model's sensitivity, is set to 0.08.

4.1.3 Evaluation metrics

Consistent with previous work, this section uses precision (P), recall (R), and F1-score (F1) as evaluation metrics. Since the experiments do not predict trigger words but only predict event labels contained in event sentences, the metrics are calculated as follows:

Let the size of the intersection between the true labels and the predicted labels be denoted as \(\left| Correct \right|\), the number of event labels predicted by the model as \(\left| Pred \right|\), and the number of true labels of the event sentences as \(\left| Gold \right|\), with a total count of k.

The formulas for precision, recall, and F1-score are as follows:
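Because the formulas were not preserved, the following sketch assumes the common micro-averaged definitions: precision is the total \(\left| Correct \right|\) divided by the total \(\left| Pred \right|\), recall is the total \(\left| Correct \right|\) divided by the total \(\left| Gold \right|\), both summed over the event sentences, and F1 is their harmonic mean. The function name is hypothetical.

```python
def micro_prf(gold_sets, pred_sets):
    """Micro-averaged precision, recall and F1 over event sentences.

    gold_sets: one set of true event labels per sentence
    pred_sets: one set of predicted event labels per sentence
    """
    correct = sum(len(g & p) for g, p in zip(gold_sets, pred_sets))
    n_pred = sum(len(p) for p in pred_sets)
    n_gold = sum(len(g) for g in gold_sets)
    precision = correct / n_pred if n_pred else 0.0
    recall = correct / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = [{"Attack", "Die"}, {"Meet"}]
pred = [{"Attack"}, {"Meet", "Transport"}]
p, r, f = micro_prf(gold, pred)
print(round(p, 3), round(r, 3), round(f, 3))  # 0.667 0.667 0.667
```

Since no trigger words are predicted, a prediction counts as correct whenever the sentence-level event label matches a gold label.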

4.2 Experiment results

To validate the effectiveness of the event detection algorithm based on label semantic encoding, this section compares it with several baseline models: DMCNN [29], TBNNAM [30], BERT-QA [31], MQAEE [32], JMEE [28], and MRLS [33]. First, a brief introduction to these baseline models is provided:

1. DMCNN: This model uses both word-level and sentence-level features. To retain information about multiple events in the same sentence, it employs a dynamic multi-pooling strategy;

2. TBNNAM: This model considers trigger word detection unnecessary, arguing that annotating trigger words significantly increases labeling costs. It therefore skips trigger word extraction and directly treats an event sentence and an event type as a sample pair for binary classification. Results show that, despite not using trigger word information, it still outperforms some models that rely on trigger word extraction;

3. BERT-QA: This model casts event detection as a question-answering task. For a given event label, it formulates a question to extract the trigger words of that event category;

4. MQAEE: Like BERT-QA, this model uses a question-answering approach for event detection. Unlike BERT-QA, however, it organizes event detection as a multi-turn question-answering task, mitigating the drawback of fixed question templates;

5. JMEE: This model builds a graph neural network on syntactic dependency trees and is optimized specifically for sentences that contain multiple events;

6. MRLS: Many dependency-based event detection algorithms overlook multi-hop connections between words, which causes semantic loss. MRLS treats the syntactic dependency tree as a heterogeneous graph, models multi-hop dependency relation types, and enhances the ability of word representations to express events.

As shown in Table 3, the proposed event detection algorithm based on label semantic encoding outperforms all of the baseline models. Among the question-answering-based methods, it improves on BERT-QA by 5.1 percentage points in recall and 5 percentage points in F1 score, and on MQAEE by 3.6 percentage points in F1 score, again with a substantial gain in recall. Compared with the dependency-tree-based methods JMEE and MRLS, it improves recall by 6.8 and 4.4 percentage points and F1 score by 3.7 and 3.6 percentage points, respectively. Overall, the improvements are substantial.

The results show that the performance gains come primarily from improvements in recall. We attribute this to the classification phase, which uses multiple binary classification networks: each network computes the semantic similarity between the input and one event type, so every event category receives its own independent decision and is more likely to be recalled.
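The recall argument can be made concrete with a minimal sketch of one binary decision per event type. Each event type gets its own sigmoid probability computed from the cosine similarity between a sentence representation and that type's label encoding, and every type above the threshold is kept, so a sentence can be assigned several event types at once. A single softmax followed by argmax would return only one type. The use of cosine similarity and the fixed `scale`, `bias`, and `threshold` values are illustrative assumptions. In a trained model such parameters would be learned or tuned, and this sketch is not presented as SALM-Net's exact classifier.

```python
from __future__ import annotations

import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def multi_binary_detect(
    sentence_vec: list[float],
    label_vecs: dict[str, list[float]],
    scale: float = 10.0,      # hypothetical, would normally be learned
    bias: float = -5.0,       # hypothetical, would normally be learned
    threshold: float = 0.5,
) -> dict[str, float]:
    """Make one independent binary decision per event type.

    Returns every event type whose sigmoid probability exceeds the threshold,
    mapped to that probability.
    """
    detected: dict[str, float] = {}
    for event_type, label_vec in label_vecs.items():
        logit = scale * cosine(sentence_vec, label_vec) + bias
        prob = 1.0 / (1.0 + math.exp(-logit))
        if prob > threshold:
            detected[event_type] = prob
    return detected
```

With `sentence_vec = [1.0, 1.0, 0.0]` and one-hot label encodings for `Attack`, `Die`, and `Meet`, both `Attack` and `Die` pass the threshold (cosine similarity about 0.71, probability about 0.89), while `Meet` does not.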

4.3 Ablation experiments

To verify the effectiveness of the improvements proposed in this chapter, ablation experiments are conducted to evaluate the contribution of each one.

First, ablation experiments are performed on the label semantic encoding module, taking the TBNNAM model as an example. Most previous work obtains label encodings by random initialization, so we investigate how the method used to generate label semantic encodings affects performance; the control group uses randomly initialized vectors. Because both the entity label encodings and the event label encodings in this chapter are produced by the label semantic encoding module, separate ablation experiments are conducted for the event label encodings and the entity label encodings. The results of the ablation experiments are shown in Table 4.

Table 4 shows that removing the entity semantic encodings lowers the model's F1 score by 2.9 percentage points, whereas replacing them with randomly initialized vectors lowers it by only 1.7 percentage points. Entity information alone, even with randomly initialized encodings, therefore contributes 1.2 percentage points (2.9 − 1.7) to the overall model, and the semantic information carried by the entity labels contributes a further 1.7 percentage points. This demonstrates both that entity information is useful for the event detection task as a whole and that applying the LSE module to entity labels is effective.
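Written out with hypothetical symbols (F_full for the full model's F1 score, F_rand for the variant with randomly initialized entity label vectors, and F_none for the variant without entity encodings, all in percentage points), the decomposition of the entity ablation results is:

```latex
\begin{aligned}
F_{\text{full}} - F_{\text{none}} &= 2.9, \qquad F_{\text{full}} - F_{\text{rand}} = 1.7,\\
\underbrace{F_{\text{rand}} - F_{\text{none}}}_{\text{entity information}}
  &= (F_{\text{full}} - F_{\text{none}}) - (F_{\text{full}} - F_{\text{rand}}) = 2.9 - 1.7 = 1.2,\\
\underbrace{F_{\text{full}} - F_{\text{rand}}}_{\text{entity label semantics}} &= 1.7 .
\end{aligned}
```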

If the event label semantic encodings are replaced with randomly initialized vectors, the model's F1 score drops by 16.4 percentage points and the model converges more slowly during training. This is because the attention module computes its attention values from the label semantic vectors: the more semantically similar a word's encoding is to a label encoding, the higher the attention weight that word receives. Random vectors carry no semantic content, so this correspondence is lost; in the extreme case, nearly equal weights are assigned to every word in the sequence, which renders the attention module ineffective.
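A minimal sketch of label-driven attention makes this argument concrete. Here a single label encoding scores every token encoding with a scaled dot product, and the scores are normalized with a softmax, so tokens whose encodings resemble the label receive most of the weight. Scaled dot-product scoring and the function name are assumptions for illustration, not necessarily the exact formulation of SALM-Net's attention module. In the limiting case where the label vector carries no information at all (here a zero vector), every score is equal and each of the n tokens receives weight 1/n, which is the degenerate behavior described above.

```python
from __future__ import annotations

import math


def label_attention(label_vec: list[float], token_vecs: list[list[float]]) -> list[float]:
    """Softmax attention weights of one label encoding over a sentence's tokens.

    Scores are scaled dot products, so tokens whose encodings are more similar
    to the label encoding receive larger weights.
    """
    d = len(label_vec)
    scores = [sum(l * t for l, t in zip(label_vec, tok)) / math.sqrt(d) for tok in token_vecs]
    m = max(scores)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]
```

With token encodings `[[0.1, 0.0], [2.0, 1.0], [0.0, 0.2]]`, the label encoding `[2.0, 1.0]` puts about 0.94 of the weight on the second token, while the zero vector `[0.0, 0.0]` spreads it evenly as `[1/3, 1/3, 1/3]`.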

When the contrastive learning fine-tuning module is removed, the model's F1 score drops by 0.5 percentage points, with a larger decrease in recall. This indicates that contrastive fine-tuning helps the label semantic encodings acquire more generalizable representations, allowing more events to be recalled. The slower convergence observed when event label encodings are replaced with random vectors also has a structural cause: in the final classification module, the event label semantic encodings are residual-connected with the ‘schema’. Replacing them with randomly initialized vectors is therefore equivalent to adding noise to the ‘schema’, which makes the model harder to fit. Consequently, the model needs more training time, and both its convergence speed and its final performance degrade.
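The section does not spell out the contrastive objective, so the following is only a sketch of one common choice, an InfoNCE-style loss, applied to label encodings. A sentence encoding is pulled toward the label encoding of its gold event type (the positive) and pushed away from the other label encodings (the negatives). The cosine similarity, the temperature value, the function names, and the use of sentence encodings as anchors are assumptions, not details confirmed by the paper.

```python
from __future__ import annotations

import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def label_contrastive_loss(
    sentence_vec: list[float],
    gold_type: str,
    label_vecs: dict[str, list[float]],
    temperature: float = 0.1,  # hypothetical value
) -> float:
    """InfoNCE-style loss: -log softmax probability of the gold label encoding.

    The gold event type's label encoding is the positive; every other label
    encoding acts as a negative. The loss is always >= 0.
    """
    logits = {t: cosine(sentence_vec, v) / temperature for t, v in label_vecs.items()}
    m = max(logits.values())
    # log-sum-exp over all labels, computed stably by factoring out the max.
    log_denominator = m + math.log(sum(math.exp(x - m) for x in logits.values()))
    return log_denominator - logits[gold_type]
```

For a sentence encoding `[1.0, 0.1]` and label encodings `Attack = [1.0, 0.0]` and `Meet = [0.0, 1.0]`, the loss is close to zero when `Attack` is the gold type and large (about 9) when `Meet` is; minimizing it over training data is what would move the label encodings toward their event sentences.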

Because the entire event detection algorithm is built around event label encodings, deleting them would leave the attention module and the multi-binary classification module without the inputs they need for computation, so a deletion experiment for event label encodings cannot be conducted. Replacing the event label semantic encodings with random vectors, however, directly demonstrates the effectiveness of the LSE module. The LSE module takes natural-language definitions of specific labels as input, so ablation experiments on the label definitions are also performed to assess how the form of the definition affects the results.

Specifically, the standard definitions of the event labels were replaced with two alternatives: the text of the event label name, and a string formed by concatenating the words that appear most frequently in the corresponding event sentences. The results of these ablation experiments are presented in Table 5.
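The second substitute definition, a string of the most frequent words in an event type's sentences, could be built as in the following sketch. The lowercase regex tokenization, the small stop-word list, the function name, and the default `k = 5` are assumptions made for illustration. The section does not say how many words were used or whether function words were filtered.

```python
from __future__ import annotations

import re
from collections import Counter

# Hypothetical stop-word list; function words would otherwise dominate the counts.
STOPWORDS = frozenset({"a", "an", "the", "of", "in", "on", "at", "to", "and", "or",
                       "was", "were", "is", "are", "by", "for", "with"})


def frequent_word_definition(sentences: list[str], k: int = 5,
                             stopwords: frozenset[str] = STOPWORDS) -> str:
    """Concatenate the k most frequent non-stop-words across an event type's sentences."""
    counts: Counter[str] = Counter()
    for sentence in sentences:
        tokens = re.findall(r"[a-z']+", sentence.lower())
        counts.update(t for t in tokens if t not in stopwords)
    # most_common breaks ties by first occurrence, so the output is deterministic.
    return " ".join(word for word, _ in counts.most_common(k))
```

For example, `frequent_word_definition(["Troops attacked the city", "The army attacked the border", "Rebels attacked troops"], k=2)` returns `"attacked troops"`, which would then be fed to the LSE module in place of the standard definition.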

As Table 5 shows, the standard definitions provided in the annotation manual yield the highest F1 score, exceeding the label-name variant by 1.1 percentage points and the most-frequent-words variant by 2.1 percentage points. Because these margins are small, the LSE module appears to be robust to the form in which event definitions are given.

In cases where there are no standard definitions and only label names are available, using the label names as definitions proves to be a viable alternative. If neither standard definitions nor label names are known, constructing a string from the most frequently appearing words can also yield good results.

To validate the role of the attention module, an ablation experiment was conducted in which the two-layer attention was replaced by a single attention layer that directly uses the event label semantic encodings to weight the sequence encodings (single-layer attention). As Table 6 shows, the two-layer attention mechanism improves the F1 score by 1.4 percentage points over single-layer attention, supporting the effectiveness of two-layer attention modeling in the attention module.

Figure 6 shows a heat map of the two-layer attention weights that the event labels assign to the words of an event sentence. For a sentence containing an ATTACK event, the words "the war" receive the highest attention from the ATTACK label's semantic embedding, which is consistent with the intended design of the attention module.

4.4 Error analysis

We conducted a detailed error analysis of our event detection algorithm to identify the model's weaknesses and limitations in various scenarios. The errors were grouped into categories, and the findings are presented in Table 7.

The error analysis identified five areas in which the model shows limitations. Semantic overlap errors (error rate 4.2%), one of the most significant challenges, arise when different event types have overlapping semantics, for example when "Attack" events are confused with "Defend" events. Contextual dependency errors (3.7%) occur when the model fails to capture essential contextual clues, leading to misclassifications such as labeling a "Meeting" event as "Negotiation". Long-distance dependency errors (5.1%) occur in sentences with complex structures and multiple events, where the model often fails to identify all of the events. Rare event type errors are the most frequent category (6.3%) and stem mainly from the model's weaker performance on infrequent event types, as illustrated by its poor recognition of "Pardon" events. Finally, errors caused by ambiguous or unclear event labels (2.8%) occur when vague or unclear label definitions prevent the model from detecting events accurately. Together, these findings show where the model needs further refinement and suggest directions for improving its accuracy and robustness in event detection.

Figure 7 compares the error rates of several models across these error types. SALM-Net has lower error rates for most types of errors, indicating that it handles these cases more effectively.

Several remedies are possible for the five error types described above. Rare event type errors and errors caused by ambiguous or unclear event labels could be reduced through data augmentation and the construction of high-quality datasets. In addition, multi-task learning, in which the model is trained to perform several related tasks, could strengthen its overall understanding of semantics, context, and structural complexity.

5 Conclusion

In this study, we introduced SALM-Net, a novel BERT-based approach to event detection that substantially improves performance through label semantic encoding. SALM-Net uses BERT as its encoder, whose self-supervised pre-training enables it to capture deep semantic relationships within text. By encoding the input text into a lower-dimensional vector space and incorporating semantic embeddings of the labels, our method accurately captures and identifies event sentences. Experimental results show that our algorithm outperforms several baseline models, including DMCNN, TBNNAM, BERT-QA, MQAEE, JMEE, and MRLS, in both recall and F1 score. This improvement validates the effectiveness of our algorithm for event detection and underscores the importance of label semantic encoding for model performance. This research offers an efficient approach to event detection with practical value for applications such as information extraction, knowledge graph construction, and event-driven analysis. Building on the findings of the error analysis, future work will aim to improve SALM-Net's event detection performance in low-resource environments, focusing on the semantic encoding process, including optimizing the transformer structure [48] and improving sample utilization efficiency [49].