Abstract

The need for technology that replaces traditional engineering methods, which generate gases that degrade the environment, has emerged in an era of declining ecosystems, as global warming has a negative influence on the earth system’s ozone layer. In this study, the use of artificial intelligence (AI) approaches in the optimization of sustainable structural materials was investigated, with the aim of ensuring that concrete construction projects for buildings have no negative environmental effects. The intelligent learning algorithms of fuzzy logic, ANFIS, ANN, GEP and other nature-inspired algorithms were reviewed because they are used in forecasting the behavior of agro-waste-based green geopolymer concrete systems. A systematic literature search was conducted to identify relevant studies published in various databases. The included studies were critically reviewed to analyze the types of AI techniques used, the research methodologies employed, and the main findings reported. To carefully sort the crucial components of the aluminosilicate precursor and alkaline activator blend and to optimize its engineering behavior, laboratory work must be carried out through mixture experiment design and raw materials selection. Such experimental activities often fall short of the standards set by civil engineering design guidelines for sustainable construction. In some instances, specific shortcomings in the design of experiments or human error may degrade measurement accuracy and cause unforeseen discharge of pollutants. Most errors in repetitive experimental tests have been eliminated by using adaptive AI learning techniques. As an extensive guideline for upcoming investigators in this cutting-edge and developing field of AI, the pertinent intelligent modelling tools used at various times, under varying experimental testing methodologies, and with different source materials are addressed in this review.
The findings of this review study demonstrate the benefits, challenges and growing interest in utilizing AI techniques for optimizing geopolymer-concrete production. The review identified a range of AI techniques, including machine learning algorithms, optimization models, and performance evaluation measures. These techniques were used to optimize various aspects of geopolymer-concrete production, such as mix design, curing conditions, and material selection.

1 Introduction

The production processes of conventional concrete ingredients, such as cement and coarse and fine aggregates, along with the reactions that occur during cement hydration, have a substantial influence on the depletion of natural resources, sustainability and greenhouse gas emissions [1]. According to recent studies, the ozone layer is being damaged as a result of cement utilization, which contributes about 7–10% of the world’s CO2 emissions. Geopolymer concrete (GPC), one of the most significant developments in the concrete sector, then emerged as a means of lowering CO2 emissions from civil engineering projects [2, 27].

In this instance, ambiguity and uncertainty do not refer to random, stochastic, or probabilistic changes. Fuzzy logic (FL) is a methodical approach to problem solving that handles both linguistic variables and numerical data, helping to control sophisticated systems without relying heavily on complicated mathematical descriptions, although it still requires an understanding of real-world system behavior [28]. This is crucial because standard logic systems cannot control complex systems that are described by vague or subjective notions. Fuzzy logic is a form of multi-valued logic that deals with approximate reasoning as opposed to exact and rigid reasoning. Unlike Boolean and conventional logic systems, where the outcome can only be true or false, it deals methodically with the idea of partial truth, in which the truth value ranges from completely false to completely true [29].

A method of word-based mathematical computing known as fuzzy logic is built on the concepts of a fuzzy set. It describes a multiple-valued logic system in which the varied degrees of truth are depicted by values between 0 and 1, and the truth value specification of a complex logical assertion is determined by the truth values of its elements [30]. FL recognizes not only white and black or evident possibilities but also the limitless range of variances amongst them, enabling computers to analyze disparities of data akin to the reasoning process of the human brain. FL assigns numbers or values to these distinctions, doing away with the ambiguity seen in crisp logic and allowing for the computing of precise remedies to complicated systems [31]. The fuzzy technique uses linguistic variables to calculate numbers. To assess complicated input–output data linkages, these parameters are correctly connected with their respective membership functions and simulated using the fuzzy inference system (FIS). The capacity to cope with the partial belongingness of components of various subsets with regard to the discourse universe is the central concept of FL [32]. In order to effectively evaluate the behavior of complex systems, response variables with an intermediary range of values are created to represent the extent of belongingness. Whether the components of the set are distinct or continuous, the membership functions aid in characterizing the degree of fuzziness in the set [33].

Classical set constitutes variable such that element x has a property \(\Delta\) from the universal discourse X and is expressed mathematically in Eq. 1 below;

Though for fuzzy set, we designate x with a membership function parameter denoted by \(\mu\) in the set A from a universal discourse X as expressed in Eq. 2 [34].

2.1.1 Membership function

A collection of logical operators known as fuzzy logic is an adaptation of multi-valued logic and is described by an interactive algebra of rationalization that is inexact rather than accurate. Fuzzy logic offers a novel approach to solving categorization or core issues as well as a mechanism to do word-based computations [35].

Let \(\mu_{{\mathop A\limits_{\sim } }} \left( x \right):X \to [0, 1]\) for \(x \in X\) be the membership function, which signifies the grade of belongingness of x to the fuzzy set \(\mathop A\limits_{\sim }\). The fuzzy set \(\mathop A\limits_{\sim }\) is then defined as the set of ordered pairs of an element x of the universe of discourse X and its membership function value \(\mu_{{\mathop A\limits_{\sim } }} \left( x \right)\), as given by the mathematical expression in Eq. 3 [36].

Membership functions are used to describe fuzzy sets graphically, and the membership level, a real number between 0 and 1, quantifies the degree to which an element of the universe of discourse (X) belongs to a fuzzy set, as given in Eq. 4 [37].

If \(\mu_{{\mathop A\limits_{\sim } }} \left( x \right) = 0\), the element x does not belong to the fuzzy set \(\mathop A\limits_{\sim }\); however, if \(\mu_{{\mathop A\limits_{\sim } }} \left( x \right) = 1\), the element belongs fully to the fuzzy set \(\mathop A\limits_{\sim }\).
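The mapping of an element to a membership grade in [0, 1] can be illustrated with a minimal Python sketch. The triangular and Gaussian shapes, the function names and the compressive-strength breakpoints below are illustrative assumptions and are not taken from the reviewed studies.

```python
import math


def triangular_mf(x, a, b, c):
    """Triangular membership: 0 outside (a, c), rising linearly to 1 at the peak b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def gaussian_mf(x, mean, sigma):
    """Gaussian membership: 1 at the mean, decaying smoothly towards 0."""
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2)


# Hypothetical fuzzy set "medium strength" on a compressive-strength axis (MPa).
for strength in (20.0, 30.0, 35.0, 40.0, 50.0):
    grade = triangular_mf(strength, a=20.0, b=35.0, c=50.0)
    print(f"{strength:5.1f} MPa -> membership grade {grade:.2f}")
```

A grade of 0 means the element lies outside the fuzzy set, a grade of 1 means full membership, and intermediate values express partial belongingness.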

2.1.2 Process of fuzzy inference system (FIS)

A FIS is a decision-making system that maps sets of input data to crisp output results on the basis of fuzzy logic principles. This is accomplished by using fuzzy logic to formalize and analyze human language in the form of linguistic variables [38]. The FIS processes input data so that outputs are produced in accordance with predetermined rule bases. The core processing engine is based on fuzzy arithmetic, but both the inputs and the produced output are real-valued [39]. The technique of creating an input–output mapping using a fuzzy logic framework is known as fuzzy inference. By applying fuzzy inference to the input values and if–then rules, the inference engine replicates human reasoning. Figure 1 displays the elements of a FIS [40].

Fig. 1 Processing mechanism of FIS [34]

Fuzzification

Fuzzification aids in the processing of crisp real numbers into fuzzy values by converting the elements of input data sets to membership levels by pairing up with their associated sequence of membership functions. Its goal is to effectively use parameters of membership functions to convert the inputs from a group of sensors (or attributes of those sensors) to results ranging from 0 to 1. This process uses a fuzzification function to correctly convert the crisp input data into linguistic terms [41].

Intuition: In order to create fuzzy sets from crisp data using this approach, lexical and situational information about the field of study as well as grammatical truth values on the sought-after content are needed. This method makes use of the intrinsic comprehension and intellect of humans [42].

Inference: This approach is used to carry out deductive reasoning, which entails drawing a conclusion from a collection of information and facts.

Ranking Order: This technique gets the membership organizational classification by having one or more team members evaluate their perceptions, poll them, and use comparative studies [43].

Fuzzy rule base

Any potential fuzzy relationships between the input and output data points are derived using logic operations developed from subject-matter expertise, pertinent literature, and a thorough evaluation of the system database [44]. This framework comprises fuzzy rules. Formulating a set of if–then control rules with logical operators requires rule evaluation, which aids in calculating and assessing the appropriate output truth values. Here, a collection of if–then rules is applied to the input parameters, and the results are combined to provide a set of fuzzy outputs [45]. A compound rule can be decomposed into a list of simple canonical rules by using the fundamental properties and operations defined for fuzzy sets [46]. These rules are based on fuzzy logic computation and on the expression of human language in fuzzy sets. A fuzzy rule is described as an if-input, then-output statement over fuzzy variables that are linked using the logic operators “OR”, “AND” and “NOT”. It is an if–then conditional statement with the structure shown in Eq. 5 [47].

Here, A and B are linguistic values defined by fuzzy sets on the universes of discourse (X) and (Y), respectively, through suitable membership functions, and x and y are the corresponding fuzzy variables.
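How the antecedent of such a rule is evaluated can be sketched in Python. The sketch assumes the common min, max and complement definitions of “AND”, “OR” and “NOT”, which the text does not specify, and a rule of the usual form IF x is A THEN y is B. The variable names and membership grades are hypothetical.

```python
def fuzzy_and(mu_a, mu_b):
    """Fuzzy AND, assumed here to be the minimum of the two grades."""
    return min(mu_a, mu_b)


def fuzzy_or(mu_a, mu_b):
    """Fuzzy OR, assumed here to be the maximum of the two grades."""
    return max(mu_a, mu_b)


def fuzzy_not(mu_a):
    """Fuzzy NOT, assumed here to be the complement 1 - mu."""
    return 1.0 - mu_a


# Hypothetical membership grades obtained from fuzzification.
mu_activator_high = 0.7
mu_curing_temp_high = 0.4

# Rule 1: IF activator is high AND curing temperature is high THEN strength is high.
rule1_strength = fuzzy_and(mu_activator_high, mu_curing_temp_high)

# Rule 2: IF activator is NOT high OR curing temperature is high THEN strength is medium.
rule2_strength = fuzzy_or(fuzzy_not(mu_activator_high), mu_curing_temp_high)

print("Rule 1 truth value:", rule1_strength)
print("Rule 2 truth value:", rule2_strength)
```

The resulting truth value of each antecedent is what the inference engine subsequently applies to the consequent fuzzy set of that rule.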

Aggregation of fuzzy rules

To properly link the collection of variables and data patterns, the majority of fuzzy rule-based frameworks use many rules. Aggregation of rules is the method of producing the overall consequence from each single consequence impacted by each rule in the fuzzy system rule base. The collection of linguistic expressions known as fuzzy rules demonstrates how the FIS should decide on an output control or input categorization [48].

Fuzzy inference engine

The primary purpose of the fuzzy inference engine is to imitate human thought processes via the assessment of inputs and IF–THEN fuzzy rules. It takes into account every rule in the fuzzy rule base, executes fuzzy rules aggregation, and then figures out how to convert collections of input parameters into output outcomes [49].

Defuzzification

Defuzzification converts the fuzzy truth values into crisp output results, turning the output of the inference engine into a quantifiable number. Using membership functions similar to those employed by the fuzzifier, the defuzzification module transforms the fuzzy set produced by the decision-making unit or inference engine into a crisp value [50]; that is, it transforms an imprecise quantity into an exact, crisp one. The following factors determine which defuzzification method is most suitable:

Continuity: The output shouldn't vary much if there is a little modification in the fuzzy operation.

Dis-ambiguity: With different input data, a distinct amount should be produced.

The centroid of area (C.A) approach, expressed mathematically in Eq. 6, is used for defuzzification [43].

where \(\mu \left( {M_{i} } \right)\) is the membership value of the output in the ith subset and \(O_{i}\) is the corresponding output value.
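A discrete form of centroid defuzzification can be sketched in Python as a membership-weighted average of the output values. This weighted-average form is an assumption chosen to be consistent with the symbols \(\mu \left( {M_{i} } \right)\) and \(O_{i}\) defined above; the function name and the sample numbers are hypothetical.

```python
def centroid_defuzzify(outputs, memberships):
    """Crisp output as the membership-weighted average of the output values.

    outputs     -- output values O_i of the fuzzy subsets
    memberships -- membership values mu(M_i) of the same subsets
    """
    total_membership = sum(memberships)
    if total_membership == 0:
        raise ValueError("no rule fired: all membership values are zero")
    weighted_sum = sum(mu * o for mu, o in zip(memberships, outputs))
    return weighted_sum / total_membership


# Hypothetical aggregated output: strength levels (MPa) and their membership values.
strength_levels = [20.0, 35.0, 50.0]
membership_values = [0.2, 0.7, 0.1]
print("Crisp strength estimate:", centroid_defuzzify(strength_levels, membership_values))
```

Because every fired subset contributes in proportion to its membership value, a small change in the fuzzy output produces only a small change in the crisp result, which matches the continuity criterion listed above.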

2.1.3 Two types of fuzzy inference method

Mamdani FIS

The Mamdani technique, first introduced by Ebrahim Mamdani in 1976, uses a limited number of parameters, namely one output and two inputs [51]. In this FIS, the consequence of the IF–THEN fuzzy rule is expressed as a fuzzy region, which means that the output and input factors are split into fuzzy sets and the response factor has a non-continuous region. Each rule’s output in a Mamdani FIS is a fuzzy set with membership degrees. Because its rules are intuitive and easy to understand, the Mamdani FIS is mostly used in expert system applications where the rule base is built from expert knowledge. Moreover, the outcome of each rule is a fuzzy set produced by the implication method of the FIS and the output membership function after the fuzzy operators (OR/AND) have been applied. The next step is aggregating these output fuzzy sets into a single fuzzy set. Figure 2 illustrates the four successive phases of the Mamdani FIS [52].

Fig. 2 Functional processing steps of Mamdani FIS [51]

Sugeno FIS

This approach is often referred to as the Takagi–Sugeno-Kang fuzzy inference technique. It was introduced in 1985 and resembles the Mamdani approach in a number of ways [51]. While the first and second phases of the fuzzy inference procedure, which involve input fuzzification and fuzzy operator processes, are identical, the Sugeno fuzzy inference approach differs significantly from the Mamdani approach because the membership function of the response parameters in Sugeno is either constant or linear [53].

The fuzzy rule for this method takes the following expression in Eq. 7.

The output Y is a constant (i.e., a = b = 0) for a zero-order Sugeno model.
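Sugeno-type inference can be sketched in Python under the assumption that each rule has the commonly used form IF x is A AND y is B THEN z = ax + by + c, and that the crisp output is the firing-strength-weighted average of the rule outputs. The coefficients a, b and c follow the notation above; the firing strengths and numerical values are hypothetical.

```python
def sugeno_inference(x, y, rules):
    """Weighted average of linear rule outputs.

    rules -- list of (firing_strength, (a, b, c)) pairs, where each rule
             output is z = a*x + b*y + c (assumed first-order form).
    """
    weighted_sum = 0.0
    total_strength = 0.0
    for firing_strength, (a, b, c) in rules:
        z = a * x + b * y + c
        weighted_sum += firing_strength * z
        total_strength += firing_strength
    if total_strength == 0:
        raise ValueError("no rule fired: all firing strengths are zero")
    return weighted_sum / total_strength


# Zero-order model: a = b = 0, so each rule output is the constant c.
zero_order_rules = [(0.5, (0.0, 0.0, 10.0)), (0.5, (0.0, 0.0, 20.0))]
print("Zero-order output:", sugeno_inference(1.0, 2.0, zero_order_rules))

# First-order model: each rule output is linear in the inputs.
first_order_rules = [(0.8, (1.0, 2.0, 3.0)), (0.2, (0.5, 0.5, 0.0))]
print("First-order output:", sugeno_inference(1.0, 2.0, first_order_rules))
```

Since the rule outputs are already crisp numbers, no separate defuzzification of an output fuzzy set is needed, which is the main practical difference from the Mamdani approach.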

2.2 Artificial neural network (ANN)

Artificial neural networks (ANNs) are frameworks of closely coupled adaptive processing units capable of carrying out massively parallel computations for knowledge representation and data processing. By adopting a simplified signal propagation method to mimic certain fundamental functions of the human central nervous system, McCulloch and Pitts created the first ANN model, paving the way for early neural computing [54, 55]. The earliest academic publication on neural network applications in civil and structural engineering was written by Adeli and Yeh [56]. In recent years, neural networks have been extensively employed in civil engineering for making expert decisions, managing building projects, analyzing failures, evaluating geotechnical stability, and optimizing the use of geotechnical and structural materials [57].

In a simple sense, an ANN is a technological reconstruction of a biological nervous system. Its primary task is to establish an appropriate learning method, mimic certain intelligent brain functions, and construct a useful artificial neural network model in accordance with the principles of the human neural network and the requirements of practical implementation [58]. Ultimately, it is applied to address real-world problems. An ANN is a computational intelligence model that aims to mimic the functionality of biological neural networks [59]. ANNs are made up of interconnected artificial neurons and process data using a connectionist approach. By continually adjusting connection weights in accordance with the inputs and outputs, ANNs learn to associate inputs with outputs. They may be used to identify patterns in data or to model intricate relationships between inputs and outcomes [60]. Modifying the model architecture and connection weights can reveal complex relationships between inputs and outcomes. Notwithstanding these benefits, ANNs have a significant drawback in that they cannot produce a closed-form equation. There are six phases in the ANN model development process: the input and output variables are chosen, the data are gathered and divided into training and validation groups, the network architecture is chosen, the connection weights are optimized, training is terminated based on predetermined criteria, and the performance of the ANN is validated [61].

ANNs are a kind of AI system designed to simulate the activity of the human brain. A typical ANN architecture consists of an input layer and an output layer, along with one or more hidden layers. Each hidden layer possesses a predetermined number of nodes depending on the complexity of the relationship, whereas the input and output layers each have a number of nodes matching the expertly selected input and output parameters [62]. Each node sums the inputs from the connected nodes in the preceding layer, each multiplied by the weight of its link, and then applies an activation function to the sum to produce an output, which serves as the input for the connected nodes in the subsequent layer. Information propagates from the input layer to the output layer through the hidden layers of the ANN. During the training or learning phase, the weights of the links are tuned using a predefined training database to reduce the estimation error [63]. Moreover, the performance of the developed ANN must be evaluated after training using an independent validation database. To capture the relationship between the model input parameters and the related target response, ANNs learn from the examples of data provided to them and use these examples to adjust their weights [64].

As a result, unlike other experimental and analytical approaches, ANNs require no prior information on the nature of the relationship between the input and output parameters. The ANN computing concept is comparable to a variety of traditional statistical approaches in that both seek to describe the relationship between a historical collection of model inputs and the related outputs [65]. An ANN with one input and one output, no hidden layer, and a linear transfer function yields a model architecture similar to a basic linear regression model. ANNs modify their weights as instances of model inputs and outputs are repeatedly presented, in order to reduce the prediction error between the actual and ANN model results [66].

The networks of nerve cells that make up the human brain serve as the inspiration for ANNs. Although they represent a greatly simplified version of the human nervous system, these biologically inspired expert systems may offer fresh approaches to solving challenging problems [67]. In contrast to conventional computers, which process data sequentially, ANNs use parallel computation inspired by how the human brain functions, giving computers the ability to analyze enormous amounts of data at once. Problems whose solutions call for knowledge that is not easy to articulate, but for which sufficient data or evidence exist, are a good fit for ANNs [68]. The capacity of an ANN to adapt when given a new dataset and to learn from experience without prior knowledge of the underlying relationships provides the basis of its modeling abilities, allowing it to approximate any functional relationship with a tolerable degree of accuracy [69]. According to reports, ANNs may identify trends in observations and overcome the difficulties of choosing between linear, power or polynomial models for a given problem. A characteristic of several of these effective uses of ANNs in prediction and modeling is that the values being modelled are controlled by multidimensional interdependencies and the available data [70].

According to Haykin [64], an ANN is a massively parallel distributed system composed of basic processing elements that has a built-in propensity for storing experiential knowledge and making it available for use. In the brain, neurons serve as the fundamental computational units, and in ANNs, artificial neurons serve as the fundamental processing components [71]. The neural network is formed by the interconnections between these processing components. The weight of each link indicates how strong the interaction between the connected neurons is. The knowledge of the neural network is stored in the connection weights; as a result, during the training stage with a continuous supply of data, the weights within the network are gradually adjusted [72]. The actual and predicted values are then compared in an effort to minimize the network error. A learning technique known as error back-propagation is responsible for the ongoing adjustment of the synaptic weights. Using differentiable activation functions to learn a training set of input–output instances, back-propagation offers a computationally efficient way of altering the weights in a feed-forward network. An ANN is referred to as a “black box system” since users cannot readily interpret its connection weights, settings, or operations. Moreover, a small database might result in inaccurate classification [73].

2.2.1 The artificial neuron basics

The basic building blocks of the nervous system and brain are biological neurons, also known as nerve cells. Neurons are the cells that receive sensory information from the outside environment through dendrites, process it, and then transmit it to other neurons via axons [74]. The cell body, also known as the soma, is the portion of the neuron that contains the nucleus and carries out the biochemical processes necessary for the neuron to survive. Every neuron has tiny, tube-shaped extensions called dendrites that surround the cell body. They branch out around the cell body in a tree-like formation and take in incoming impulses [75]. The axon is a long, slender, tubular structure that acts like a communication line. The connections between neurons are arranged in a complex spatial pattern. The axon originates at the cell body and ends at the nerve fiber that carries impulses away from the cell body. Synapses are highly complex structures located at the terminal of the axon. They are the junctions across which neurons communicate with one another, and the synapses of other neurons serve as the input points of the dendrites [76]. As seen in Fig. 3, the soma processes these incoming signals and converts the processed value into an output that is sent to other neurons through the axon and synapses [77].

Fig. 3 Biological neuron [71]

By replicating the operation of a biological neuron, McCulloch and Pitts developed the first artificial neuron model, on which the perceptron that is still in use today is based [78]. A perceptron is a single-layer neural network with a single output, as seen in Fig. 4. It aggregates the bias and the inputs according to their respective weights before making a decision based on the outcome of the aggregation [79]. For a single observation, the input variables to the network are represented as \(x_0, x_1, x_2, x_3, \ldots, x_n\). Each of these inputs is multiplied by a connection weight, also known as a synapse. The weights are denoted by \(w_0, w_1, w_2, w_3, \ldots, w_n\), and the weight of a connection reveals its strength. The bias is denoted as b. The bias shifts the activation function by a specified intercept, so that the response is not constrained to pass through the origin [80]. In the simplest scenario, the products are added up, the total is fed into an activation function that generates a result, and that result is sent as the output. The summation or transfer function is described mathematically in Eq. 8 [81].
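The computation performed by a single artificial neuron can be sketched in Python as a weighted sum of the inputs plus the bias, passed through an activation function. The sigmoid and step activations, the function names and the numerical values are illustrative assumptions.

```python
import math


def sigmoid(z):
    """Smooth activation that squashes z into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def step(z):
    """Threshold activation: fires (1.0) when the weighted sum is non-negative."""
    return 1.0 if z >= 0 else 0.0


def neuron_output(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus the bias, passed through the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


# Hypothetical observation with three inputs and their connection weights.
x = [0.5, 1.0, -1.5]
w = [0.8, -0.2, 0.4]
b = 0.1

print("Sigmoid output:", neuron_output(x, w, b, sigmoid))
print("Step output:   ", neuron_output(x, w, b, step))
```

With the step activation the neuron makes a binary decision, whereas the sigmoid yields a graded output that remains differentiable, which is what gradient-based training requires.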

2.2.2 ANN model

Setting the ANN architecture requires determining the number of hidden layers and the number of nodes in each layer. By using a nonlinear activation function, an ANN can model the intricate behavior of the system under consideration. The input and output layers contain as many nodes as there are input and output parameters, respectively. The best number of nodes for each hidden layer may be selected iteratively. Using too few nodes degrades prediction performance, while using too many results in overfitting [82]. The ANN algorithm flowchart showing the intelligent modeling steps is shown in Fig. 5.

Fig. 5 ANN algorithm flowchart [83]

2.2.3 Choosing the suitable inputs and outputs

ANNs have been widely employed in recent years to model a variety of engineering problems because of their nonlinearity and the demonstrated accuracy of their predictions. An ANN is a data-driven method, as opposed to statistical modeling, and hence does not need prior knowledge of the underlying relationships between variables. These nonlinear parametric models can also approximate any continuous relationship between input and output [84]. The most common approach in structural and materials engineering relies heavily on prior knowledge of the system in question when choosing relevant inputs. As a result, the input and output parameters of an ANN model are determined by how well the underlying factors that affect the behavior of the target variable are understood [85].

2.2.4 Division of dataset

Cross-validation, which requires splitting the entire dataset into three subsets (training, testing, and validation datasets), is employed in practically all civil engineering models that utilize ANNs to prevent overfitting. The allocation proportion among the three subsets can also have a significant impact on prediction performance. Consequently, it is crucial to split the dataset such that the three subsets represent the same statistical population and have equivalent statistical properties [83].
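A three-way division of a dataset can be sketched in Python as follows. Random shuffling before splitting is one simple way of drawing the three subsets from the same population; the 70/15/15 proportions, the fixed seed and the function name are assumptions made for illustration, not values recommended by the reviewed studies.

```python
import random


def split_dataset(samples, train_frac=0.70, test_frac=0.15, seed=0):
    """Shuffle the samples and split them into training, testing and validation sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # fixed seed for a reproducible split
    n_train = round(len(shuffled) * train_frac)
    n_test = round(len(shuffled) * test_frac)
    train = shuffled[:n_train]
    test = shuffled[n_train:n_train + n_test]
    validation = shuffled[n_train + n_test:]  # whatever remains
    return train, test, validation


# Hypothetical dataset of 100 mix-design records identified by index.
records = list(range(100))
train, test, validation = split_dataset(records)
print(len(train), len(test), len(validation))
```

After splitting, summary statistics such as the mean and range of each variable can be compared across the three subsets to check that they have equivalent statistical properties.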

2.2.5 Network architecture

To define the network topology, the architecture of the model and the information propagation method of the ANN must be expertly chosen. Although there are other ANN variants, the multilayer perceptron trained with the back-propagation approach is the one employed for modeling and prediction. Many structural materials optimization problems have so far been solved using feed-forward networks [86].

2.2.6 Optimization of model

Model optimization, which searches for the most appropriate weights for the ANN connections, is often accomplished with the back-propagation algorithm. It is the most popular optimization rule for feed-forward networks and has been used successfully in many structural materials development problems. Because back-propagation is essentially based on the gradient descent rule, it may become trapped in local optima [87].

2.2.7 Ending criteria

The termination criteria of the ANN model specify when to stop the training phase. After training, a validation step is required to make sure that overfitting has not occurred. The gathered dataset should therefore be split into three categories, namely training, testing and validation. The training set is used to optimize the connection weights during training [88]. Once the forecasting error on the training dataset is low, the network is said to have been trained. When the errors for the training and testing datasets are nearly identical, the resulting ANN is regarded as generalized (not overfitted), and the training process should be halted at this point. Extending the training beyond this point will result in overfitting, which will raise the error on the testing dataset [89].
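One common way of implementing such a stopping rule is to monitor the testing error after every epoch and stop once it has failed to improve for a fixed number of epochs. The Python sketch below assumes this patience-based heuristic, which is not prescribed by the text; the function name, the patience value and the error history are hypothetical.

```python
def early_stopping_epoch(test_errors, patience=3):
    """Return the epoch with the lowest testing error seen before the error
    fails to improve for `patience` consecutive epochs."""
    best_epoch = 0
    best_error = float("inf")
    for epoch, error in enumerate(test_errors):
        if error < best_error:
            best_error = error
            best_epoch = epoch
        elif epoch - best_epoch >= patience:
            break  # testing error has stopped improving: halt training
    return best_epoch


# Hypothetical testing-error history: it falls at first, then rises as the
# network starts to overfit the training data.
history = [0.90, 0.60, 0.40, 0.35, 0.36, 0.40, 0.45, 0.52]
print("Stop and keep the weights from epoch:", early_stopping_epoch(history))
```

The weights saved at the returned epoch are the ones retained, since later epochs reduce the training error only at the cost of a higher testing error.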

2.2.8 Validation and behavior evaluation

To confirm the generalization of the ANN once the training step is complete, the ANN should be verified using an independent dataset. Instead of memorizing the training dataset, the trained ANN should capture the non-linear relationship between inputs and outputs [90]. The findings are useful for assessing network performance since the ANN is measured against an unseen dataset. Metrics such as the mean square error (MSE) and mean absolute error (MAE) are often used to gauge how well networks predict outcomes. Whereas MAE indicates the average magnitude of the error, MSE gives considerably more weight to large errors than to small ones [91].
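The two error measures can be computed with a few lines of Python. The sketch uses the standard definitions of the mean square error and the mean absolute error; the measured and predicted strength values are hypothetical.

```python
def mean_squared_error(actual, predicted):
    """Average of the squared differences; large errors dominate the result."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def mean_absolute_error(actual, predicted):
    """Average of the absolute differences; every error counts in proportion."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical measured and predicted compressive strengths (MPa).
measured = [30.0, 40.0, 50.0]
predicted = [32.0, 40.0, 44.0]

print("MSE:", mean_squared_error(measured, predicted))
print("MAE:", mean_absolute_error(measured, predicted))
```

In this example the single 6 MPa error contributes 36 of the 40 units of squared error but only 6 of the 8 units of absolute error, which illustrates why MSE penalizes large deviations more heavily.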

2.3 Adaptive neuro fuzzy inference system (ANFIS)

The Takagi–Sugeno FIS is the basis for the ANFIS technology, a kind of ANN created in the early 1990s. ANFIS is a component of the hybridized system known as neural fuzzy networks. It belongs to a class of adaptive networks that combine fuzzy logic (FL) and artificial neural networks (ANN) to provide results with a considerably greater degree of accuracy and performance for predetermined input variables [92, 93]. The back propagation technique is used in the ANFIS methodology to carefully choose the rule base using neural network approaches. It has a hybrid optimization algorithm that controls the learning process through membership functions and processing parameters selection by combining the least squares statistical approach with the back propagation algorithm of ANN. These elements offer the ANFIS network the ability to generalize supplied data sets in order to analyze complicated and real-life challenges [94]. To create a self-learning, adaptive, and self-organizing neuro-fuzzy controller, fuzzy rules are developed through the possible trends or features derived from the system data. It is an adaptive network of directed connections and nodes that develops related learning rules in accordance with necessary membership function requirements generated from the datasets, maintaining adequate generalization of data [95]. ANFIS modifies and prescribes suitable MF and related sets of fuzzy-if-then-rules from a specific output-input model connection using the learning capacity of neural networks. It has the ability to combine the advantages of neural networks and fuzzy logic in a single framework since it incorporates both of these concepts. Its inference system is a collection of IF-THEN fuzzy rules with the capacity to learn and estimate nonlinear functions. ANFIS is regarded as a universal approximation tool as a result and the algorithm flowchart is shown in Fig. 6 [96].

Fig. 6 ANFIS algorithm flowchart [15]

2.3.1 Design network architecture of ANFIS

For the ANFIS model, fuzzy inputs were created from the crisp input data using the membership function. The ANFIS network used the Gaussian and generalized bell-shaped membership functions. Fuzzified inputs in the form of linguistic variables were fed into the ANN interface through the membership function parameters so that the network could learn from the signals it receives and effectively generalize the datasets [97]. The defuzzifier unit then converts the linguistic output produced by the ANN framework into a crisp output. The ANFIS functional topology includes several adaptive layers that enhance its modelling process. Each layer comprises nodes connected to a network of transfer functions used to handle the fuzzy inputs. The five network layers that make up the architecture of the adaptive network are shown in Fig. 7 [98]. Equations (9) and (10) provide the algebraic expressions for a first-order Takagi–Sugeno fuzzy model with two IF-THEN rules in the common rule set, for a process described by two inputs I1 and I2, one target response variable, learnable parameters \(p_i\), \(q_i\) and \(r_i\), and membership functions A1, A2, B1 and B2 [99].

Functional units of ANFIS architecture [15]

Layer 1: This layer is referred to as the fuzzification layer. Here, a crisp input is supplied to node i, which is associated with the linguistic label \(A_{i}\) or \(B_{i-2}\). The MF output \({\mathrm{o}}_{\mathrm{i}}^{1}\)(X) thus gives the degree of membership of a given input parameter. The output of each node is calculated using Eqs. (11) and (12). Neha et al. [100] and Alaneme et al. [53] utilized the Gaussian and bell-shaped MF, respectively.

Layer 2: The nodes in this layer, denoted \({\mathrm{o}}_{\mathrm{i}}^{2}\), are fixed. Each node's output is the product of all its incoming signals and gives the rule-matching factor \({\mathrm{W}}_{\mathrm{i}}\), also known as the firing strength, as shown for each node in Eq. 13 [101].

Layer 3: Every node in this layer calculates the ratio of a single rule's firing strength to the sum of the firing strengths of all rules, as shown in Eq. 14, which normalizes the firing strengths [102]. The output of this layer is denoted \({\overline{\mathrm{W}} }_{\mathrm{i}}\).

Layer 4: This layer calculates the output y of each individual rule by applying the consequent part of the linguistic rules in the rule base. Each node in this layer is connected to the corresponding layer 3 normalization node and receives its input signal from there. All layer 4 nodes are adaptive nodes, and their node operation is given in Eq. 15 [103].

Layer 5: This layer, also referred to as the defuzzification layer, contains only a single node, which sums all incoming outputs to provide the crisp numerical output expressed in Eq. 16 [104].
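The five layers can be traced in a few lines of code. The following is a minimal sketch of a forward pass through a two-input, two-rule first-order Sugeno ANFIS, assuming Gaussian membership functions and the product as the rule-combination operator, as in the standard ANFIS formulation. The function names, parameter layout and numerical values are hypothetical, and no training step (least squares or back-propagation) is included.

```python
import math


def gaussian_mf(x, centre, sigma):
    """Layer 1 helper: Gaussian membership grade of a crisp input x."""
    return math.exp(-0.5 * ((x - centre) / sigma) ** 2)


def anfis_forward(i1, i2, mf_a, mf_b, consequents):
    """Forward pass of a two-input, two-rule first-order Sugeno ANFIS.

    mf_a, mf_b  : lists of (centre, sigma) for A1, A2 and for B1, B2
    consequents : list of (p, q, r) tuples, one per rule
    Returns the crisp output and the normalised firing strengths.
    """
    # Layer 1: fuzzification of the crisp inputs
    mu_a = [gaussian_mf(i1, c, s) for c, s in mf_a]
    mu_b = [gaussian_mf(i2, c, s) for c, s in mf_b]

    # Layer 2: firing strength of each rule (product of incoming grades)
    w = [ma * mb for ma, mb in zip(mu_a, mu_b)]

    # Layer 3: normalise each firing strength by the sum over all rules
    total = sum(w)
    w_bar = [wi / total for wi in w]

    # Layer 4: normalised firing strength times the linear rule consequent
    rule_out = [
        wb * (p * i1 + q * i2 + r)
        for wb, (p, q, r) in zip(w_bar, consequents)
    ]

    # Layer 5: single summation node producing the crisp output
    return sum(rule_out), w_bar


# Hypothetical parameters, chosen only to make the arithmetic easy to follow
y, w_bar = anfis_forward(
    0.5, 0.5,
    mf_a=[(0.0, 1.0), (1.0, 1.0)],
    mf_b=[(0.0, 1.0), (1.0, 1.0)],
    consequents=[(1.0, 0.0, 0.0), (0.0, 1.0, 1.0)],
)
```

With these symmetric inputs both rules fire equally, so the output is simply the average of the two rule consequents.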

2.4 Gene expression programming (GEP)

GEP is a class of metaheuristic algorithms that draws its inspiration from biological evolution. It is a complete genotype/phenotype system in which expression trees (ET) of varied sizes and shapes are encoded in linear chromosomes of fixed length [105]. GEP is a branch of genetic programming that was created by Ferreira. It is made up of five components: the fitness function, the terminal set, the function set, the termination condition, and the control parameters. Genetic programming employs a parse-tree representation that may vary in size and shape during the evolution of the computer program, whereas GEP encodes the solution in a character string of fixed length [106]. Because the genetic operators act at the chromosome level, GEP makes genetic diversity very easy to create. Moreover, the multigenic nature of GEP allows for the construction of intricate, nonlinear programs made up of numerous modules [107]. In the GEP method, fixed-length chromosomes are first generated at random for each individual in the population. The chromosomes are then expressed, and the fitness of each individual is evaluated. Afterwards, individuals are selected for reproduction on the basis of their fitness. The new individuals are put through the same process again and again until a solution is found. Variation is introduced into the population by subjecting the selected programs to genetic operators such as rotation, mutation, and crossover. GEP is thus a technique for evolving computer programs and models. These programs usually take the form of expression trees that vary in shape and size and are encoded in the chromosomes [108].

The functional schematic for the sequence of processes in the GEP technique is shown in Fig. 8, which illustrates how GEP chromosomes, which are multigenic and encode several ETs or sub-programs, can be organized into a much more sophisticated program [109]. Thus, much like the DNA/protein system of life on Earth, the gene/tree system of GEP is free to explore additional levels of organization in addition to all the pathways in the solution space. The chromosomes and the ETs, which constitute the genotype and the phenotype, respectively, are the two key actors in GEP [110]. The genotype consists of chromosomes that are linear, compact, relatively small, and easy to manipulate genetically, while the phenotype is simply the expression of the corresponding chromosome. The ETs are the entities upon which selection acts, and they are chosen to reproduce with modification according to their fitness. The interplay between chromosomes and ETs in GEP implies an unambiguous translation mechanism for converting the language of chromosomes into the language of ETs [111].

GEP Algorithm Flowchart [112]

GEP allows many genes to be present on a single chromosome. Each gene holds two kinds of information: the first is kept in the gene's head and contains the data required to create the entire GEP model, while the second is stored in the gene's tail and is utilized to create future GEP models. For every problem, the length of the head, h, is chosen, while the length of the tail, t, is determined by h and by the number of arguments, n, of the function with the most arguments (also known as the maximum arity), using the formula presented in Eq. 17 [113]: \(t = h(n - 1) + 1\).
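The head/tail relationship and the translation of a linear gene into an expression tree can be illustrated with a short sketch. It assumes the breadth-first (Karva) reading order commonly used in GEP and the tail-length relation t = h(n − 1) + 1; the function set, the variable names `a` and `b`, and the example gene are hypothetical.

```python
import operator

# Hypothetical function set: symbol -> (arity, implementation)
FUNCTIONS = {
    "+": (2, operator.add),
    "-": (2, operator.sub),
    "*": (2, operator.mul),
}


def tail_length(head_length, max_arity):
    """Tail length t = h(n - 1) + 1 for head length h and maximum arity n."""
    return head_length * (max_arity - 1) + 1


def evaluate_gene(gene, variables):
    """Decode a gene breadth-first into an expression tree and evaluate it."""
    arities = [FUNCTIONS[s][0] if s in FUNCTIONS else 0 for s in gene]

    # Count how many leading symbols the expression tree actually uses.
    used, needed = 0, 1
    while used < needed:
        needed += arities[used]
        used += 1

    # Assign children to each used symbol in level order.
    children, next_child = [], 1
    for i in range(used):
        children.append(list(range(next_child, next_child + arities[i])))
        next_child += arities[i]

    # Children always sit to the right of their parent, so evaluate backwards.
    values = [None] * used
    for i in reversed(range(used)):
        symbol = gene[i]
        if symbol in FUNCTIONS:
            func = FUNCTIONS[symbol][1]
            values[i] = func(*(values[c] for c in children[i]))
        else:
            values[i] = variables[symbol]
    return values[0]


h = 3                                  # head length chosen for the problem
t = tail_length(h, max_arity=2)        # tail holds terminals only
gene = "+*a" + "bbab"                  # head (3 symbols) + tail (4 symbols)
result = evaluate_gene(gene, {"a": 2, "b": 3})   # encodes (b * b) + a
```

The tail length guarantees that even a head made up entirely of two-argument functions can be completed with terminals, so every gene decodes to a valid tree; here only the first five symbols are expressed and the last two remain unused.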

The procedure shown in Fig. 8 begins with the random generation of the chromosomes of an initial population of individuals. These chromosomes are then expressed, and the fitness of every individual is assessed against a set of fitness cases. After that, individuals are selected according to their fitness to reproduce with modification [112]. The new individuals are subjected to the same evolutionary process: expression of the genomes, confrontation with the selection environment, selection, and reproduction with modification. The procedure is repeated for a predetermined number of generations or until a satisfactory solution has been identified. The explanatory variables that are taken into account as model inputs make up the terminal set [114].

Hence, defining the terminal set is the initial step in using the GEP approach. In each iteration of the GEP technique, chromosomes are updated and optimized according to the fitness function and genetic operators, as in the GA, in order to discover the best program [115]. This procedure is repeated until the convergence conditions are met. GEP is a method that uses populations (in this case, populations of models or solutions), selects and reproduces them based on fitness, and introduces genetic diversity using one or more genetic operators such as mutation or crossover [116]. The basic difference between the three algorithms, GA, GP, and GEP, lies in the nature of the individuals. In GA, the individuals are symbolic strings of fixed length (chromosomes); in GP, the individuals are non-linear entities of various sizes and shapes (parse trees); whereas in GEP, the individuals are encoded as symbolic strings of fixed length (chromosomes) that are then expressed as non-linear entities like those of GP. Therefore, GEP is an amalgamation of GP and GA [117].

2.5 Application of artificial intelligence techniques in the optimization of green geopolymer concrete materials

The application of artificial intelligence techniques in the optimization of green geopolymer concrete materials involves using AI algorithms and models to analyze and optimize various parameters such as mix design, curing conditions, material selection, and overall performance of the concrete. AI techniques, including ANFIS, genetic algorithms, and neural networks, can be employed to explore a wide range of variables and their interactions to achieve optimal results. In recent times, soft-computing tools have been applied in the development of eco-efficient geopolymer concrete as a viable alternative to the conventional concrete to minimize the use of cement and have demonstrated some degree of success in the optimization of the mixture variables and curing conditions effects on its mechanical behavior [118].

A summary of some reviewed applications with varying optimization algorithms and source materials is presented in Table 1. The results obtained from the relevant literature indicate robust performance, validated by statistical measures, in predicting the response variables and optimizing the factor variables.

2.6 Different approaches in using artificial intelligence for the design of geopolymer concrete

Artificial intelligence (AI) techniques have been applied in various aspects of geopolymer research and development. While AI techniques offer promising potential in the field of geopolymers, their successful application often relies on the availability of high-quality data for training and validation [126, 129, 130]. Additionally, expert knowledge should complement AI techniques to ensure accurate and reliable results. Some common approaches to using AI for geopolymer concrete design are as follows.

i. Machine learning-based approaches

Machine learning algorithms can be employed to optimize and enhance the design of geopolymer concrete. These approaches involve training AI models on large datasets that contain information about mix proportions, precursor materials, curing conditions, and desired properties. The trained models can then predict optimal mix designs based on specified performance criteria. One example is the genetic algorithm, which can be used to explore and optimize the space of possible mix designs for geopolymer concrete. Such algorithms iteratively generate and evaluate different combinations of mix proportions, activator compositions, and curing conditions to find the solution that best meets the desired performance objectives. Another machine learning-based approach is the ANN, which can learn the complex relationships between mix design parameters and concrete properties by training on historical data. Once trained, ANNs can predict the mix proportions required to achieve specific target properties, such as compressive strength, workability, or durability [129, 131].

ii. Expert systems

Expert systems use knowledge-based rules and reasoning techniques to guide the design process of geopolymer concrete. These systems incorporate expert knowledge and guidelines into an AI framework, allowing for the generation of optimized mix designs. Expert systems can consider various factors such as desired performance criteria, available materials, and environmental constraints to provide informed design recommendations. Palomo et al. [132] proposed an expert system for geopolymer concrete mix design, incorporating expert knowledge and decision-making rules to guide the selection of raw materials, mix proportions, and curing conditions. The study discusses the development and implementation of the expert system and presents case studies demonstrating its effectiveness in achieving desired performance objectives in geopolymer concrete mix design. In addition, Tchakouté et al. [133] developed an expert system based on fuzzy logic and genetic algorithms for the mix design of geopolymer concrete, considering factors such as workability, strength, and durability requirements.

iii. Multi-objective optimization

Multi-objective optimization approaches aim to find a balance between multiple conflicting objectives in geopolymer concrete design. These approaches consider multiple performance criteria simultaneously, such as compressive strength, workability, and environmental impact. AI algorithms, such as genetic algorithms or particle swarm optimization, are used to explore the design space and identify optimal mix designs that meet the desired balance of objectives. Al Bakri et al. [134] conducted a study on the optimization of geopolymer concrete using particle swarm optimization (PSO). They used PSO to optimize the mix proportions of geopolymer concrete by considering parameters such as the ratio of sodium silicate to sodium hydroxide, water-to-binder ratio, and the ratio of fly ash to alkaline activator. The PSO algorithm successfully optimized the mix design to achieve the desired strength and workability of geopolymer concrete. Also, Bakhshpoori et al. [135] proposed a multi-objective optimization approach for geopolymer concrete mix design using PSO. The study aimed to simultaneously optimize multiple objectives, including compressive strength, workability, and cost. PSO was used to explore the design space and identify a set of Pareto-optimal solutions, providing a range of optimal mix designs that balance different performance criteria.

iv. Hybrid approaches

Hybrid approaches combine multiple AI techniques to enhance the design of geopolymer concrete. For example, a combination of machine learning algorithms and genetic algorithms can be used to optimize mix designs based on historical data and search for the best mix proportions and curing conditions. These hybrid approaches leverage the strengths of different AI techniques to improve the efficiency and accuracy of the design process [16, 136]. Yeşilmen et al. [137] proposed a hybrid model combining artificial neural networks and particle swarm optimization to predict the compressive strength of geopolymer concrete. The model optimized the mix design parameters to achieve desired strength levels. Additionally, Alengaram et al. [138] proposed a hybrid approach combining genetic algorithms (GA) and artificial neural networks (ANN) for the optimization of geopolymer concrete mix design. The GA was used to search for the optimal mix proportions, while the ANN was employed to predict the compressive strength of geopolymer concrete based on mix parameters. The hybrid approach demonstrated improved accuracy and efficiency in optimizing mix designs for desired strength requirements.
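The notion of a Pareto-optimal set, mentioned above under multi-objective optimization, can be made concrete with a small sketch. It assumes two objectives per candidate mix, compressive strength (to be maximised) and cost (to be minimised), and filters out every candidate that is dominated by another. The candidate values are invented for illustration and are not taken from any of the cited studies; in practice the candidates would be generated by a search algorithm such as PSO or GA.

```python
def dominates(a, b):
    """Return True if mix a dominates mix b.

    Each mix is a (strength, cost) pair. Mix a dominates b when it is no
    worse in both objectives and strictly better in at least one.
    """
    strength_a, cost_a = a
    strength_b, cost_b = b
    no_worse = strength_a >= strength_b and cost_a <= cost_b
    strictly_better = strength_a > strength_b or cost_a < cost_b
    return no_worse and strictly_better


def pareto_front(candidates):
    """Keep only the candidates that no other candidate dominates."""
    return [
        c for c in candidates
        if not any(dominates(other, c) for other in candidates)
    ]


# Hypothetical (strength in MPa, cost in arbitrary units) pairs
mixes = [(40, 100), (35, 80), (30, 90), (45, 120)]
front = pareto_front(mixes)
```

Here the mix (30, 90) is removed because (35, 80) is both stronger and cheaper, while the remaining three mixes each represent a different trade-off between strength and cost.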

2.7 Use of AI in geopolymer concrete development

2.7.1 Mix design optimization

AI techniques, such as genetic algorithms or machine learning algorithms, can be used to optimize geopolymer mix designs. These algorithms can analyze large datasets considering various parameters and objectives including precursor materials, activator composition, curing conditions, desired workability and durability properties, to determine the optimal mix proportions and activator ratios for achieving specific performance objectives. Jayaprakash et al. [139] developed a hybrid model combining ANNs and genetic algorithms for geopolymer concrete mix design, considering parameters such as activator composition, curing conditions, and mechanical properties. Also, Gupta et al. [140] developed an ANN model to predict the slump value of concrete based on mix design parameters, enabling efficient mix proportioning. Yadav and Singh [141] developed a fuzzy logic model to optimize the mix design of self-compacting concrete, considering variables like cement content, water-to-cement ratio, and superplasticizer dosage.

Moreover, Li et al. [142] employed a multi-objective genetic algorithm to optimize the mix design of high-performance geopolymer concrete, focusing on achieving high strength, low porosity, and enhanced durability. Zhang et al. [143] conducted data mining and statistical analysis on a large dataset of geopolymer concrete mixes to identify the relationships between mix parameters, activator composition, and mechanical properties. The findings of these studies emphasize the benefits and applicability of AI techniques in mixture design optimization: they perform non-linear generalization of datasets, which makes it possible to analyze complex geopolymer behavior and to determine the fractions of constituents that give the best performance for engineering purposes.

2.8 Strength prediction and property estimation

AI models, including artificial neural networks (ANNs) and fuzzy logic systems, have been employed to predict the compressive strength or other mechanical properties of geopolymer materials by training on the datasets containing information about mix proportions, curing conditions, and material properties. These models can assist in estimating the performance of geopolymer-based materials before physical testing, enabling faster and more efficient material selection and design. Ozbay et al. [144] applied artificial neural networks (ANNs) to predict the compressive strength of concrete based on mix proportions and material properties. Sonawane et al. [145] employed a genetic algorithm to optimize the mix design of geopolymer concrete, focusing on achieving desired compressive strength and durability. In a similar fashion, Şahmaran et al. [146] developed an artificial neural network (ANN) model to predict the compressive strength of geopolymer concrete based on mix parameters such as sodium silicate content, sodium hydroxide concentration, curing temperature, and curing time. Also, Kamseu et al. [147] developed a hybrid model using a combination of artificial neural networks and genetic algorithms to predict the compressive strength of geopolymer concrete considering parameters such as alkali activator content, curing temperature, and curing time. Additionally, Chen et al. [148] proposed a fuzzy logic model for predicting the compressive strength of geopolymer concrete incorporating factors such as sodium hydroxide concentration, sodium silicate modulus, curing temperature, and curing time. Furthermore, Arivoli et al. [149] employed data mining techniques to identify significant factors influencing the compressive strength of geopolymer concrete. The study extracted patterns from a large dataset to develop predictive models for strength estimation.

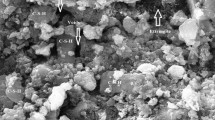

2.8.1 Material characterization and microstructure analysis

AI algorithms can aid in the characterization and analysis of geopolymer concrete microstructures. Machine learning techniques can process data from techniques such as X-ray diffraction (XRD), scanning electron microscopy (SEM), or thermogravimetric analysis (TGA) to identify and quantify different phases, analyze pore and crystalline structure, and determine the composition and distribution of geopolymer gels in order to evaluate the degree of geopolymerization in the concrete. This information can guide the optimization of mix design parameters for improved performance [150]. Rajasekaran et al. [151] employed machine learning techniques, specifically random forest regression and support vector regression, to predict the compressive strength of geopolymer concrete based on features extracted from XRD patterns and SEM images. The study demonstrated the effectiveness of machine learning in correlating microstructural characteristics with mechanical properties. Long et al. [152] proposed a deep learning model based on a convolutional neural network and a recurrent neural network for the quantitative analysis of geopolymer microstructure. The model was trained on SEM images to estimate the porosity, gel content, and particle size distribution of geopolymers, showcasing the potential of deep learning in microstructure characterization. Moreover, Rostami et al. [153] proposed a hybrid model combining image analysis, feature extraction, and machine learning algorithms to characterize the microstructure of geopolymer-based materials. The study utilized a combination of SEM images and X-ray microtomography to extract morphological and textural features and employed a random forest regression model to predict the compressive strength of geopolymer materials based on these features.

2.9 Critical analysis on advantages and disadvantages of AI methods

The application of AI methods such as artificial neural networks (ANN), genetic algorithms (GA), and adaptive neuro-fuzzy inference systems (ANFIS) in the optimization of green geopolymer concrete materials offers both advantages and disadvantages. Here are some potential advantages and disadvantages of each technique:

2.9.1 Advantages of ANN

1. Nonlinear Modeling: ANN can capture complex nonlinear relationships between input variables and output responses, making it suitable for modeling the behavior of green geopolymer concrete materials [127].

2. Prediction Accuracy: ANN models can provide accurate predictions of material properties and behavior based on training data, allowing for better optimization of green geopolymer concrete materials [126].

3. Adaptability: ANN models can adapt and learn from new data, allowing for continuous improvement and optimization of green geopolymer concrete materials.

2.9.2 Disadvantages of ANN

1. Data Requirements: ANN models require a large amount of high-quality data for training, which may not always be readily available for green geopolymer concrete materials.

2. Black Box Nature: ANN models often lack interpretability, making it challenging to understand the underlying mechanisms influencing the optimization of green geopolymer concrete materials.

3. Model Complexity: ANN models can become complex, with a large number of parameters to be determined, requiring extensive computational resources and time for training and optimization.

2.9.3 Advantages of gene expression programming (GEP)

1. Flexibility: GEP allows for the creation of complex programs that can handle multiple variables, constraints, and objectives in the optimization of green geopolymer concrete materials [119].

2. Adaptability: GEP uses evolutionary processes to improve the performance of programs over time, allowing for the exploration of a wide range of solutions and the adaptation to changing optimization criteria [124].

3. Global Optimization: GEP's evolutionary nature enables it to search for global optima by considering multiple candidate solutions simultaneously, potentially leading to improved optimization results.

4. Ability to Handle Nonlinear Relationships: GEP can capture and represent complex nonlinear relationships between input variables and desired optimization objectives in the geopolymer concrete material optimization process [123].

2.9.4 Disadvantages of gene expression programming (GEP)

1. Computational Intensity: GEP involves extensive computational operations, including the evaluation and evolution of large populations of candidate solutions, which can be time-consuming and require significant computational resources [122].

2. Complexity and Interpretability: Evolved programs in GEP can become complex, making it challenging to interpret and understand the underlying relationships and mechanisms driving the optimization process.

3. Difficulty in Parameter Tuning: GEP requires careful tuning of various parameters, such as mutation rate, crossover rate, and population size, to achieve optimal results, which can be a non-trivial task.

4. Data Requirements: GEP relies on a sufficient amount of high-quality data to adequately represent the optimization problem, including the behavior and performance of green geopolymer concrete materials [34].

2.9.5 Advantages of adaptive neuro-fuzzy inference systems (ANFIS)

1. Hybrid Modeling: ANFIS combines the advantages of fuzzy logic and neural networks, allowing for both linguistic and numerical representations of the optimization problem [128].

2. Interpretability: ANFIS models can provide interpretable rules and linguistic descriptions, enabling a better understanding of the optimization process for green geopolymer concrete materials.

3. Learning Capability: ANFIS models can learn from data and adapt to changing conditions, ensuring continuous improvement and optimization [53].

2.9.6 Disadvantages of adaptive neuro-fuzzy inference systems (ANFIS)

1. Complexity: Developing and training ANFIS models can be complex, requiring expertise in both fuzzy logic and neural networks [121].

2. Data Requirements: ANFIS models require sufficient and representative training data to effectively capture the underlying relationships in the optimization problem.

3. Scalability: ANFIS models may face challenges when dealing with large-scale optimization problems, as the complexity and computational requirements can increase significantly [123].

3 Statistical measures for performance evaluation

To verify the model's predictive ability, the effectiveness of the proposed smart intelligent model is assessed using statistical evaluation metrics such as the coefficient of determination (\(r^{2}\)) and loss function parameters such as root mean square error (RMSE), mean absolute error (MAE), mean squared error (MSE), and mean absolute percentage error (MAPE) [154, 155]. To determine how effectively an ANN represents the data for generalization, a loss function is used to compare the target and predicted output signals, and training seeks to keep the difference between them as small as possible. In statistics, a loss function is commonly employed for parameter estimation, where the quantity of interest is a function of the difference between the predicted and actual values for a given instance of data. The concept is as old as Laplace and was reintroduced into statistics by Abraham Wald around the middle of the twentieth century [156, 157].

3.1 Coefficient of determination (\(r^{2}\))

The coefficient of determination, \(r^{2}\), is a statistical metric that indicates how much of the variation in one variable is explained by variation in another when forecasting the outcome of an event. Specifically, this coefficient, which is widely relied upon when making projections, evaluates the strength of the linear relationship between two variables [158]. \(r^{2}\) expresses the extent to which the variance of one variable is explained by its relationship with another, as shown in Eq. 18. This association is expressed as a number between 0.0 and 1.0 (0–100%) [159].
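As a worked illustration, the coefficient of determination can be computed from the residual and total sums of squares. The sketch below assumes the common definition 1 − SS_res/SS_tot, which may differ in form from Eq. 18; the function name and the strength values are hypothetical.

```python
def r_squared(actual, predicted):
    """Coefficient of determination, computed as 1 - SS_res / SS_tot."""
    mean_actual = sum(actual) / len(actual)
    # Residual sum of squares: unexplained variation
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    # Total sum of squares: variation of the observations about their mean
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


# Hypothetical measured and predicted compressive strengths (MPa)
measured = [30.0, 40.0, 50.0]
predicted = [32.0, 38.0, 50.0]
score = r_squared(measured, predicted)   # 1 - 8/200
```

A model that reproduces the measurements exactly scores 1, and a model that always predicts the mean of the measurements scores 0.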

3.2 Root mean square error (RMSE)

RMSE is a measure of the difference between observed outcomes and the values predicted or estimated by a model. It is obtained by averaging the squared residuals to give the mean squared error and then taking the square root; it indicates how far, on average, the model's predictions deviate from the observed data [160]. The purpose of RMSE is to combine the magnitudes of the prediction errors for numerous data points into a single measure of predictive capacity. As RMSE is scale-dependent, it should only be used to compare the prediction errors of several models for a single dataset and not across datasets [161]. RMSE is among the most reliable metrics for evaluating the goodness of fit of a generalized regression model. It also expresses how close the data points are to the fitted line, being a root-mean-square measure of the vertical distance of the data points from that line. The result is always non-negative and is expressed in the units of the response variable, with a value of 0 (almost never attained in practice) signifying a perfect fit to the data [162]. A smaller RMSE is generally preferable to a larger one. Nevertheless, since the indicator depends on the scale of the numbers employed, comparisons across different kinds of data would be invalid. The mathematical formula is presented in Eq. 19, where n represents the sample size, \(A_{j}\) represents the measured or actual values, and \(E_{j}\) represents the estimated or predicted values [163, 164].
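The scale dependence of RMSE is easy to demonstrate. The sketch below assumes the usual definition, the square root of the mean squared difference between \(A_{j}\) and \(E_{j}\), and evaluates the same hypothetical predictions once in MPa and once in kPa; the data are invented for illustration.

```python
import math


def rmse(actual, estimated):
    """Root mean square error between actual (A_j) and estimated (E_j) values."""
    n = len(actual)
    squared_errors = ((a - e) ** 2 for a, e in zip(actual, estimated))
    return math.sqrt(sum(squared_errors) / n)


# Hypothetical compressive strengths in MPa
actual_mpa = [30.0, 40.0, 50.0]
estimated_mpa = [32.0, 38.0, 50.0]
rmse_mpa = rmse(actual_mpa, estimated_mpa)

# The same data expressed in kPa: the error grows by the same factor of 1000
actual_kpa = [a * 1000.0 for a in actual_mpa]
estimated_kpa = [e * 1000.0 for e in estimated_mpa]
rmse_kpa = rmse(actual_kpa, estimated_kpa)
```

Because the value changes with the unit of measurement, RMSE figures are only comparable between models evaluated on the same dataset and in the same units.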

3.3 Mean absolute percentage error (MAPE)

In machine learning regression applications, MAPE is a loss function used to quantify the predictive performance of a model. It expresses the magnitude of the model's error as a percentage of the observed, or actual, values [165]. The error is the difference between the actual value and the value estimated by the model, and MAPE quantifies this inaccuracy in percentage terms, as presented in Eq. 20. MAPE is thus an indicator of the effectiveness of a prediction method: the accuracy of the predicted quantities relative to the actual values is determined by averaging the absolute percentage errors over all items in the dataset. Because each error is divided by the actual value, MAPE can only be applied to datasets whose actual values are non-zero [166].
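A minimal sketch of the calculation follows, assuming the usual definition of MAPE as the mean of the absolute errors divided by the actual values, expressed as a percentage. The explicit check for zero actual values, the function name and the data are choices made for this illustration.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, in percent."""
    if any(a == 0 for a in actual):
        # Division by a zero actual value is undefined
        raise ValueError("MAPE is undefined when an actual value is zero")
    n = len(actual)
    return 100.0 / n * sum(abs((a - p) / a) for a, p in zip(actual, predicted))


# Hypothetical measured and predicted strengths (MPa): each is off by 10 %
error_percent = mape([50.0, 100.0], [45.0, 110.0])
```

Both predictions miss by one tenth of the measured value, so the result is about 10 %, regardless of the very different absolute errors (5 MPa and 10 MPa).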

3.4 Mean squared error (MSE)

The MSE loss function, which is among the most widely used, calculates the average of the squared differences between the actual and predicted outcomes. Several of its characteristics make it particularly appropriate for computing loss. Because the difference is squared, it does not matter whether the predicted value is higher or lower than the target value; however, large errors are penalized heavily [167]. MSE, given in Eq. 21, is convex with a single global minimum, which makes it simpler to use gradient descent optimization to obtain the weight values. As MSE is the second moment of the error about the origin, it takes into account both the estimator's bias (how far the average estimated value is from the true value) and its variance (the degree to which predictions differ from one dataset to another). Since this loss function is particularly sensitive to outliers, the loss grows dramatically if a predicted value is much larger or smaller than its target value [168].
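The statement that MSE accounts for both bias and variance corresponds to the standard decomposition below. The symbols \(\hat{\theta}\) (the estimator) and \(\theta\) (the true value) are assumptions introduced here and do not appear in the original equations.

```latex
\[
\operatorname{MSE}(\hat{\theta})
  = \mathbb{E}\!\left[(\hat{\theta} - \theta)^{2}\right]
  = \operatorname{Var}(\hat{\theta})
  + \bigl(\operatorname{Bias}(\hat{\theta})\bigr)^{2},
\qquad
\operatorname{Bias}(\hat{\theta}) = \mathbb{E}[\hat{\theta}] - \theta .
\]
```

An unbiased estimator therefore has an MSE equal to its variance, while a biased one is penalized additionally by the square of its bias.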

3.5 Mean absolute error (MAE)

In MAE, errors are not weighted more or less heavily according to their size; instead, the score rises in proportion to the magnitude of the errors. The MAE score is calculated by averaging the absolute errors, as presented in Eq. 22; that is, it is the mean of the absolute deviations between the target and predicted outputs. In certain circumstances, this loss function is used in place of the MSE [169]. Because MSE squares the distance, outliers may have a significant impact on the loss, and MAE is therefore employed when the training data contain many outliers. MAE nevertheless has certain drawbacks. For example, since the gradient of the function is undefined at zero, gradient descent optimization may fail as the mean distance approaches zero [170].
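The different sensitivity of MSE and MAE to outliers can be seen in a small numerical comparison. The sketch assumes the usual definitions of both measures; the function names and the data, including the single deliberately poor prediction, are hypothetical.

```python
def mse(actual, predicted):
    """Mean squared error."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


actual = [30.0, 40.0, 50.0, 60.0]
clean = [31.0, 39.0, 51.0, 59.0]          # every prediction off by 1
with_outlier = [31.0, 39.0, 51.0, 40.0]   # last prediction off by 20

mse_clean, mae_clean = mse(actual, clean), mae(actual, clean)
mse_out, mae_out = mse(actual, with_outlier), mae(actual, with_outlier)
```

With these numbers, one poor prediction raises MAE from 1 to 5.75 but raises MSE from 1 to 100.75, which is why MAE is often preferred when the data contain outliers.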

As a result, a loss function referred to as the Huber loss, which combines the benefits of MSE and MAE, was established, as mathematically expressed in Eq. 23 [168].

The squared-error (MSE) form is employed when the absolute difference between the actual and estimated result is less than or equal to a threshold value denoted δ; otherwise, when the error is larger, the absolute-error (MAE) form is used [94, 171].
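The switching behaviour can be sketched in code. The example assumes the standard form of the Huber loss, quadratic for errors up to δ and linear beyond it, which may be written differently in Eq. 23; the default value of `delta` and the data are hypothetical.

```python
def huber_loss(actual, predicted, delta=1.0):
    """Mean Huber loss: quadratic for small errors, linear for large ones."""
    total = 0.0
    for a, p in zip(actual, predicted):
        error = abs(a - p)
        if error <= delta:
            # Small error: behaves like (half) the squared error
            total += 0.5 * error ** 2
        else:
            # Large error: grows linearly, like the absolute error
            total += delta * (error - 0.5 * delta)
    return total / len(actual)


# One small error (0.5) and one large error (3.0), with delta = 1
loss = huber_loss([0.0, 0.0], [0.5, 3.0], delta=1.0)
```

The two branches meet at an error of exactly δ, so the loss is continuous, and because the large error contributes only linearly it does not dominate the result as it would under MSE.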

4 Established gaps in literature

A critical assessment has been presented of relevant scholarly research on the application of artificial intelligence methods to appraise the use of industrial and agro-waste derivatives for the development of green geopolymer concrete with enhanced mechanical strength and durability performance. The works reviewed show that several soft-computing techniques were adapted and/or combined as hybridized algorithms for the optimization of various concrete properties involving combinations of different waste derivatives. The findings of the reviewed literature depict satisfactory and robust performance of the developed smart intelligent models in generalizing and predicting the target response parameters, as validated using several statistical measures and loss function parameters [172,173,174]. Furthermore, based on the review outcomes, the grey areas in the optimization and modelling of geopolymer concrete behavior merit scrutiny, taking into consideration the multiple constraints of mixture proportions, alkaline binder concentration, and curing conditions needed to obtain desired results. One identified gap is the investigation of the durability and mechanical strength properties of ternary blends of agro-based geopolymer concrete using a combination of unitary and hybridized supervised learning algorithms such as ANFIS, ANN, and GEP. These techniques would provide a dependable approach for optimizing complex systems, such as geopolymer developed from a ternary mixture of agro-waste derivative ashes, and for identifying the combination levels that yield improved engineering properties [175].

5 Conclusion

This review of the application of artificial intelligence (AI) techniques in the production of geopolymer concrete highlights the following key points:

The use of artificial intelligence modelling tools to optimize the strength characteristics and prediction performance of agro-waste-based geopolymer was evaluated in this critical review. Although the literature survey recognizes that geopolymer concrete and structural material optimization problems are complex, ill-defined, ambiguous, and lacking in detail, these difficulties have been effectively addressed by solutions that draw on the expertise and knowledge of professionals, supported by actual field data.

Through the deployment of AI and machine learning strategies, both the precision of mixture experiment design and the utilization of pozzolanic materials derived from agro wastes, aimed at reducing the greenhouse gases associated with cement production and use, are optimized. AI techniques contribute to the sustainability of geopolymer concrete production by enabling the incorporation of alternative binders, recycled materials, and waste products. This reduces the environmental impact and promotes resource efficiency.

In addition, these cutting-edge approaches would significantly reduce the contribution of structural concrete development procedures to global warming. The use of artificial intelligence together with nature-inspired algorithms to solve a variety of intractable tasks benefits both the production of eco-efficient geopolymer concrete and civil engineering in general. This approach can be seen as an advancement in the development of sustainable structural materials that have zero carbon footprints.

Furthermore, while the application of AI techniques in geopolymer concrete production shows great potential, there are still challenges to overcome. These include data availability, computational complexity, integration with existing production processes, and practical implementation frameworks. Further research is needed to address these challenges and develop standardized approaches for the industry. The findings of the review have practical implications for researchers, engineers, and industry professionals involved in geopolymer concrete production. AI techniques offer opportunities for optimizing material selection, mix design, curing conditions, and overall production processes to achieve desired performance and sustainability goals.

Data availability

All data generated or analyzed during this study are included in this published article.

References

Alaneme GU, Mbadike EM (2021) Experimental investigation of Bambara nut shell ash in the production of concrete and mortar. Innov Infrastruct Solut 6:66. https://doi.org/10.1007/s41062-020-00445-1

Zakka WP, Lim NHAS, Khun MC (2021) A scientometric review of geopolymer concrete. J Clean Prod 280:124353

Jiang X, Zhang Y, Xiao R, Polaczyk P, Zhang M, Hu W, Bai Y, Huang B (2020) A comparative study on geopolymers synthesized by different classes of fly ash after exposure to elevated temperatures. J Clean Prod 270:122500

Yang H, Liu L, Yang W, Liu H, Ahmad W, Ahmad A, Aslam F, Joyklad P (2022) A comprehensive overview of geopolymer composites: a bibliometric analysis and literature review. Case Stud Constr Mater 16:e00830

Provis JL, Bernal SA (2014) Geopolymers and related alkali-activated materials. Annu Rev Mater Res 44:299–327

Verma M, Dev N (2017) Review on the effect of different parameters on behavior of geopolymer concrete. Int J Innov Res Sci Eng Technol 6:11276–11281

Bondar D, Lynsdale CJ, Milestone NB, Hassani N, Ramezanianpour AA (2011) Engineering properties of alkali activated natural pozzolan concrete. ACI Mater J 108:64–72

Alawi A, Milad A, Barbieri D, Alosta M, Alaneme GU, Bux Q (2023) Eco-friendly geopolymer composites prepared from agro-industrial wastes: a state-of-the-art review. CivilEng 4(2):433–453. https://doi.org/10.3390/civileng4020025

Fernández-Jiménez A, Cristelo N, Miranda T, Palomo A (2017) Sustainable alkali activated materials: precursor and activator derived from industrial wastes. J Clean Prod 162:1200–1209

Dao DV, Ly HB, Trinh SH, Le TT, Pham BT (2019) Artificial intelligence approaches for prediction of compressive strength of geopolymer concrete. Materials 12:983

Ahmad A, Ostrowski KA, Maslak M, Farooq F, Mehmood I, Nafees A (2021) Comparative study of supervised machine learning algorithms for predicting the compressive strength of concrete at high temperature. Materials 14:4222

Song H, Ahmad A, Farooq F, Ostrowski KA, Maslak M, Czarnecki S, Aslam F (2021) Predicting the compressive strength of concrete with fly ash admixture using machine learning algorithms. Constr Build Mater 308:125021

Nguyen H, Vu T, Vo TP, Thai HT (2021) Efficient machine learning models for prediction of concrete strengths. Constr Build Mater 266:120950

Nafees A, Amin MN, Khan K, Nazir K, Ali M, Javed MF, Aslam F, Musarat MA, Vatin NI (2022) Modeling of mechanical properties of silica fume-based green concrete using machine learning techniques. Polymers 14:30

Alaneme GU, Mbadike EM, Iro UI, Udousoro IM, Ifejimalu WC (2021) Adaptive neuro-fuzzy inference system prediction model for the mechanical behaviour of rice husk ash and periwinkle shell concrete blend for sustainable construction. Asian J Civ Eng 22:959–974. https://doi.org/10.1007/s42107-021-00357-0

Onyelowe KC, Alaneme GU, Onyia ME, Van Bui D, Diomonyeka MU, Nnadi E, Ogbonna C, Odum LO, Aju DE, Abel C, Udousoro IM, Onukwugha E (2021) Comparative modeling of strength properties of hydrated-lime activated rice-husk-ash (HARHA) modified soft soil for pavement construction purposes by artificial neural network (ANN) and fuzzy logic (FL). J Kejuruter 33(2):365–384. https://doi.org/10.17576/jkukm-2021-33(2)-20

Aslam F, Farooq F, Amin MN, Khan K, Waheed A, Akbar A, Javed MF, Alyousef R, Alabdulijabbar H (2020) Applications of gene expression programming for estimating compressive strength of high-strength concrete. Adv Civ Eng 2020:8850535

Ahmad A, Ahmad W, Chaiyasarn K, Ostrowski KA, Aslam F, Zajdel P, Joyklad P (2021) Prediction of geopolymer concrete compressive strength using novel machine learning algorithms. Polymers 13:3389

Ilyas I, Zafar A, Afzal MT, Javed MF, Alrowais R, Althoey F, Mohamed AM, Mohamed A, Vatin NI (2022) Advanced machine learning modeling approach for prediction of compressive strength of FRP confined concrete using multiphysics genetic expression programming. Polymers 14:1789

Nafees A, Khan S, Javed MF, Alrowais R, Mohamed AM, Mohamed A, Vatin NI (2022) Forecasting the mechanical properties of plastic concrete employing experimental data using machine learning algorithms: DT, MLPNN, SVM, and RF. Polymers 14:1583

Onyelowe KC, Fazal EJ, Michael EO, Ifeanyichukwu CO, Alaneme GU, Chidozie I (2021) Artificial intelligence prediction model for swelling potential of soil and quicklime activated rice husk ash blend for sustainable construction. J Kejuruter 33(4):845–852. https://doi.org/10.17576/jkukm-2021-33(4)-07

Sun J, Ma Y, Li J, Zhang J, Ren Z, Wang X (2021) Machine learning-aided design and prediction of cementitious composites containing graphite and slag powder. J Build Eng 43:102544

Song H, Ahmad A, Ostrowski KA, Dudek M (2021) Analyzing the compressive strength of ceramic waste-based concrete using experiment and artificial neural network (ANN) approach. Materials 14:4518

Öztas A, Pala M, Özbay EA, Kanca E, Caglar N, Bhatti MA (2006) Predicting the compressive strength and slump of high strength concrete using neural network. Constr Build Mater 20:769–775

Gopalakrishnan K, Kim S, Ceylan H, Khaitan SK (2010) Natural selection of asphalt mix stiffness predictive models with genetic programming. Proceedings of the ANNIE 2010. Artificial Neural Networks in Engineering, St. Louis, pp 1–3

Ahmad W, Ahmad A, Ostrowski KA, Aslam F, Joyklad P, Zajdel P (2021) Application of advanced machine learning approaches to predict the compressive strength of concrete containing supplementary cementitious materials. Materials 14:5762

Topçu IB, Sarıdemir M (2008) Prediction of compressive strength of concrete containing fly ash using artificial neural networks and fuzzy logic. Comput Mater Sci 41:305–311

Akkurt S, Tayfur G, Can S (2004) Fuzzy logic model for prediction of cement compressive strength. Cem Concr Res 34(8):1429–1433. https://doi.org/10.1016/j.cemconres.2004.01.020

Adoko AC, Wu L (2011) Fuzzy inference systems-based approaches in geotechnical engineering: a review. Electron J Geotechn Eng 16:1543–1558

Ahmad SSS, Othman Z, Kasmin F, Borah S (2018) Modeling of concrete strength prediction using fuzzy type-2 techniques. J Theor Appl Info Technol 96:7973–7983

Alaneme GU, Onyelowe KC, Onyia ME, Van Bui D, Mbadike EM, Dimonyeka MU, Attah IC, Ogbonna C, Iro UI, Kumari S, Firoozi AA, Oyagbola I (2020) Modelling of the swelling potential of soil treated with quicklime-activated rice husk ash using fuzzy logic. Umudike J Eng Technol (UJET) 6(1):1–22

Reza KR, Sayyed MH, Noorollah M (2018) A fuzzy inference system in constructional engineering projects to evaluate the design codes for RC buildings. Civil Eng J 4(9):2155–2172

Zadeh L (1992) Fuzzy logic for the management of uncertainty. Wiley, New York

Alaneme GU, Mbadike EM (2021) Optimization of strength development of bentonite and palm bunch ash concrete using fuzzy logic. Int J Sustain Eng 14(4):835–851. https://doi.org/10.1080/19397038.2021.1929549

Alaneme GU, Dimonyeka MU, Ezeokpube GC et al (2021) Failure assessment of dysfunctional flexible pavement drainage facility using fuzzy analytical hierarchical process. Innov Infrastruct Solut 6:122. https://doi.org/10.1007/s41062-021-00487-z

Özcan F, Atis CD, Karahan O, Uncuoglu E, Tanyildizi H (2009) Comparison of artificial neural network and fuzzy logic models for prediction of long-term compressive strength of silica fume concrete. Adv Eng Softw 40:856–863

Obianyo JI, Okey OE, Alaneme GU (2022) Assessment of cost overrun factors in construction projects in Nigeria using fuzzy logic. Innov Infrastruct Solut 7:304. https://doi.org/10.1007/s41062-022-00908-7

Zeng J, An M, Smith NJ (2007) Application of a fuzzy based decision making methodology to construction project risk assessment. Int J Project Manag 25(6):589–600

Gündüz M, Nielsen Y, Özdemir M (2013) Fuzzy assessment model to estimate the probability of delay in Turkish construction projects. J Manag Eng 31(4):1–14

Nasrollahzadeh Y, Basiri MM (2014) Prediction of shear strength of FRP reinforced concrete beams using fuzzy inference system. Exp Syst Appl 41:1006–1020. https://doi.org/10.1016/j.eswa.2013.07.045

Sayed T, Tavakolie A, Razavi A (2003) Comparison of adaptive network based fuzzy inference systems and B-spline neuro-fuzzy mode choice models. J Comput Civ Eng 17(2):123–130. https://doi.org/10.1061/(ASCE)0887-3801(2003)17:2(123)

Tavakolan M, Etemadinia H (2017) Fuzzy weighted interpretive structural modeling: improved method for identification of risk interactions in construction projects. J Constr Eng Manag 143(2004):1–14

Chanas S, Zieliński P (2001) Critical path analysis in the network with fuzzy activity times. Fuzzy Sets Syst 122:195–204

Mazer WM, Geimba DL (2011) Numerical model based on fuzzy logic for predicting penetration of chloride ions into the reinforced concrete structures–first estimates. In: De Freitas VP, Corvacho H, Lacasse M (eds) XII DBMC international conference on durability of building materials and components. FEUP Edições, Porto

Zimmerman J (2001) Fuzzy set theory and its applications. Kluwer Academic Publishers, Norwell

Demir F (2005) Prediction of compressive strength of concrete using ANN and Fuzzy logic. Cem Concr Res 35:1531–1538

Magavalli V, Manalel PA (2014) Modelling of compressive strength of admixture-based self-compacting concrete using Fuzzy logic and ANN. Asian J Appl Sci 7:536–551

Sen Z (1998) Fuzzy algorithm for estimation of solar irradiation from sunshine duration. Sol Energy 63(1):39–49

Sarıdemir M (2009) Predicting the compressive strength of mortars containing metakaolin by artificial neural networks and fuzzy logic. Adv Eng Softw 40:920–927

Mamdani EH (1975) Fuzzy logic control of aggregate production planning. Int J Man-Mach Stud 7:1–13

Klir GJ, Yuan B (2001) Fuzzy sets and fuzzy logic: theory and applications. Prentice Hall, Englewood Cliffs

Alaneme GU, Mbadike EM, Attah IC, Udousoro IM (2022) Mechanical behaviour optimization of saw dust ash and quarry dust concrete using adaptive neuro-fuzzy inference system. Innov Infrastruct Solut 7:122. https://doi.org/10.1007/s41062-021-00713-8

McCulloch WS, Pitts W (1943) A logical calculus of the ideas immanent in nervous activity. Bull Math Biophys 52(1–2):99–115. https://doi.org/10.1016/S0092-8240(05)80006-0

Akande KO, Owolabi TO, Twaha S, Olatunji SO (2014) Performance comparison of SVM and ANN in predicting compressive strength of concrete. IOSR J Comput Eng 16:88–94

Adeli H, Yeh C (1989) Perceptron learning in engineering design. Microcomput Civil Eng 4:247–256

Shafabakhsh GH, Ani OJ, Talebsafa M (2015) Artificial neural network modeling (ANN) for predicting rutting performance of nano-modified hot-mix asphalt mixtures containing steel slag aggregates. Constr Build Mater 85:136–143

Ujong JA, Mbadike EM, Alaneme GU (2022) Prediction of cost and duration of building construction using artificial neural network. Asian J Civ Eng. https://doi.org/10.1007/s42107-022-00474-4

Juez FJDC, Lasheras FS, Roqueñí N, Osborn J (2012) An ANN-based smart tomographic reconstructor in a dynamic environment. Sensors 12:8895–8911

Wang YR, Gibson GE Jr (2010) A study of preproject planning and project success using ANNs and regression models. Autom Constr 19(3):341–346

Tizpa P, Chenari RJ, Fard MK, Machado SL (2014) ANN prediction of some geotechnical properties of soil from their index parameters. Arab J Geosci 8:2911

Duan ZH, Kou SC, Poon CS (2013) Prediction of compressive strength of recycled aggregate concrete using artificial neural networks. Constr Build Mater 40:1200–1206