Abstract

In this paper, we propose applying the linear mixed effects model (LMEM), for the first time in this discipline, to evaluate wastewater treatment plant (WWTP) data. Because the most widely used statistical analyses cannot handle data dependency or a combination of fixed and random factors, a new approach was essential. We used the LMEM method to analyze three groups of 19 pharmaceuticals and personal care products (PPCPs) from a California municipal WWTP. We studied the relationships between five treatments, seasons, and three groups of PPCPs. The main contributions of this paper are to investigate three-way and two-way factor interactions and to propose the autoregressive lag 1 structure, AR(1), as a suitable variance-covariance structure for data dependency. While the seasons did not affect the mean concentration levels for the treatments and groups (\(p=0.2540\)), the mean concentration levels for the seasons differed by groups (\(p<0.0001\)) and by treatments (\(p=0.0027\)).

Article highlights

- The Linear Mixed Effects Model with three-way and two-way interactions is used to analyze wastewater treatment plant data.

- The Method of Detection Limit values are used to impute missing values caused by non-detection.

- For the first time in this discipline, a specific correlation structure for data dependency is proposed.

1 Introduction

In recent decades, countries have made efforts to reduce the discharge of traditional contaminants (metals, nutrients, or persistent organic pollutants, also known as “forever chemicals”) into aquatic environments. Some developed countries have reported significant decreases in contaminants such as nitrogen and phosphorus nutrients [1, 2], metals like the organotin biocide tributyltin (TBT) [3] and mercury [4], and organic compounds like the pesticide DDT (1,1,1-trichloro-2,2\(^\prime\)bis(p-chlorophenyl)ethane or dichlorodiphenyl-trichloro ethane) [5, 6].

Besides traditional contaminants, compounds of emerging concern (CECs) also enter the aquatic environment [7]. The CECs include active PPCPs, microfibers, polar pesticides, micro- and nano-plastics, industrial chemicals such as bisphenol A (BPA), food additives, and lifestyle goods like caffeine. The frequent detection of CECs in aquatic environments (wastewater, storm water, surface water, and sediment) poses a threat to human health and ecosystems [8,9,10]. Despite the strong link between human health and ocean health, little is known about how the two interact [11, 12].

In the last few decades, increasing investment in the health care sector, a growing world population, aging societies, growing market availability, and the use of veterinary pharmaceuticals in intensive animal farming and in industrially processed food and feeds have resulted in significant usage of PPCPs [13]. Advances in technology for detecting some PPCPs at concentrations of micrograms per liter or picograms per liter in aquatic and terrestrial environments explain the increase in scientific publications on the occurrence of PPCPs [14].

There are over 4000 pharmaceuticals primarily used for preventing or treating human and animal disease, for veterinary health care, or for the growth of livestock in animal farms [15]. The three main sources of PPCPs released into the environment are point, disperse, and non-point. The point source is the pharmaceutical industry, where PPCPs are released during the manufacture of drugs. Unfortunately, improperly managed PPCPs from businesses, medical facilities, or homes are released into the environment without receiving adequate care [16]. The WWTPs are the main sources of discharged wastewater and sewage sludge.

Treated, partially treated, or untreated effluents from WWTPs and sewage effluent are widely used for irrigation [17], and sewage sludge is widely used to fertilize agricultural land [18, 19]. Unfortunately, chemical standards to assess environmental risk do not cover effluents used for irrigation. Additionally, using reclaimed water (clean, transparent, and odorless treated wastewater) for irrigation on golf courses and other recreational areas can be an additional source of pharmaceutical contaminants, according to [20]. The PPCPs in reclaimed water could be absorbed by plant roots and eventually travel up the food chain [21]. Fertilizing soil with natural fertilizers made from animal excreta or organic fertilizers like manure and slurry is another significant widespread source of PPCPs [22]. Finally, a non-point source is created when snow or rain naturally travels across the landscape, picks up contaminants like fertilizers and bacteria from pet waste, and deposits them into lakes, rivers, coastal waterways, and groundwater.

In recent years, there have been extensive publications on occurrences of PPCPs in surface waters, sea waters, and ground waters [15, 23,24,25,26,27]. The existence of the PPCPs in aquatic habitats, their bioaccumulation in marine organisms, and the development of microbes resistant to antibiotics pose potential dangers to human and animal health. Because the WWTPs are the most significant source of PPCPs in water, the study of various removal treatment processes and the effluent quality of PPCPs have gained importance in many countries [28, 29].

While the effluent of municipal sewage treatment plants is often investigated as a source of organic wastewater compounds in the environment, the solid end-product of wastewater treatment (i.e., digested municipal sludge or biosolids) has only begun to get attention [30]. Therefore, it is essential to gain additional knowledge concerning the chemical composition of biosolids because digested sewage sludge is applied as fertilizer in agriculture, forestry, and landscaping. In future studies, we recommend that scientists acquire such information while collecting data from the WWTP.

Because the WWTPs are the most significant source of PPCPs in water, the effluent quality of various removal treatment procedures must be studied using statistically valid methods. This field’s most widely used statistical procedures are ANOVA and paired or classical t-tests. These methods have significant limitations. The t-test is appropriate when only one treatment method, such as final effluent, is tested and observations are independent. The test is invalid if the data collection does not assure the independence of the observations [29]. For example, if the WWTP discharges water into a lake and data are collected from multiple sites, the data are likely correlated and we should not rely on the t-test result. Furthermore, if the study investigates many treatment procedures, as in our case, the independent t-test is invalid. A paired t-test based on the percentage of PPCPs removed is used in some research. This approach is reliable and widely used. The paired t-test, on the other hand, requires an equal number of points from each treatment group. When one of the samples in a pair is missing, the entire pair is removed from the data, reducing the number of observations and, as a result, making the test less powerful if the sample size is small. Consequently, there is a significant possibility that the test will miss the presence of PPCPs in the effluents.
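The loss of observations under pairwise deletion can be illustrated with a minimal Python sketch. The concentration values and the helper name `complete_pairs` are hypothetical and are not taken from the study data; the sketch only counts how many measurements survive when incomplete pairs are dropped.

```python
# Hypothetical monthly concentrations (ng/L); None marks a missing sample.
influent = [120.0, 95.0, None, 110.0, 130.0, 105.0]
effluent = [80.0, None, 70.0, 75.0, 90.0, 60.0]


def complete_pairs(a, b):
    """Keep only the positions where both samples of a pair are present."""
    return [(x, y) for x, y in zip(a, b) if x is not None and y is not None]


pairs = complete_pairs(influent, effluent)

# Measurements actually taken versus measurements a paired t-test can use.
n_measured = sum(v is not None for v in influent + effluent)
n_used = 2 * len(pairs)

print(f"pairs kept: {len(pairs)}, measured: {n_measured}, used: {n_used}")
```

In this toy case two valid measurements are discarded only because their partners are missing, which is the mechanism that lowers power in small samples.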

The ANOVA test, which is also often employed, assumes that all observations are independent of one another, and that each factor’s variance is the same [31]. If, for instance, the WWTP data collection is carried out as in Fig. 1, at least one assumption is violated.

The repeated measures ANOVA works best with samples that are collected over time. However, if the assumption that the variances of the differences between all group pairs are identical (i.e., sphericity) is not fulfilled, the likelihood of a Type I error could increase. If the data have a moderate sample size, we can apply Mauchly’s test to assess sphericity [32]. In small samples, the test may fail to detect departures from sphericity, and in large samples, it might over-detect them. If the sphericity assumption is not satisfied, we can modify the degrees of freedom in F-tests using either Greenhouse-Geisser or Huynh-Feldt corrections [33, 34].

This paper proposes a linear mixed effects model (LMEM) method to overcome these challenges by incorporating fixed and random effects and allowing data dependency via a variance-covariance matrix. The LMEM provides answers for analyzing interactions between treatments and seasons that have never been addressed in this discipline before in the context of mixed effects. The LMEM has the property that if the data meet the assumptions of a widely used statistical procedure, such as the t-test, then the LMEM’s results agree with those of that procedure.

2 Methodology

The LMEM allows us to investigate the fixed and random factors of interest while simultaneously accounting for variability within and across participants and items. Whereas fixed effects models account for population-level effects (i.e., average trends), random effects models account for trends across levels of a grouping factor (e.g., participants or items). That is, the mixed effects model captures the behavior of certain participants or items that differ from the overall trend.

When observations within participants are nested, repeated measures ANOVA is preferable to standard ANOVAs and multiple regression. The repeated measures ANOVA models either person-level or item-level variability but not both types of variability simultaneously. Because observations within a condition are collapsed across either participants or items in repeated measures ANOVA, important information about variability among participants or items is lost, which reduces statistical power and may cause an existing effect to go undetected [35].

Also, the LMEM handles missing data and unbalanced designs better than ANOVA. While each observation in the LMEM approach reflects one of several responses within an individual, all responses from a participant in ANOVA are part of the same observation. In other words, if one response is missing in ANOVA, the entire observation is removed and none of the data from that individual (or item) are included. In the LMEM, individuals (or items) with more missing cases simply have less effect on the parameter estimates [36].

The complexity of the error term is the main difference between studies of independent data (e.g., between-subjects analyses) and dependent data (e.g., within-subjects analyses). When the data are independent, the error term is quite straightforward because there is only one source of random error. When the data are dependent, however, the error term has multiple components, such as: (1) differences between subjects, known as the (by-subject) random intercept, (2) differences between subjects in how they are affected by predictor variables, known as the (by-subject) random slope, and (3) random error, which captures all other types of error, such as unreliable measurement. These random error terms are incorporated into the LMEM.

Finally, it is worth noting that ANOVA, t-tests, and multiple regression are all special cases of the general linear model (GLM), which is itself a special case of the LMEM. That is, the GLM is the LMEM without the random effects.

2.1 The LMEM model

The LMEM method is the best choice for analyzing our WWTP data since observations were collected monthly for each treatment process (multilevel/hierarchical, longitudinal), indicating a correlation structure. Also, some groups of compounds had more missing observations than others. Furthermore, we had fixed and random factors to consider when developing the model.

In RStudio, we used the lme function for linear mixed effects models (provided by the nlme package in R). The lme function fits a linear mixed-effects model in the formulation described by Laird and Ware [37]. The “correlation” option describes the within-group correlation structure. We explored numerous autocorrelation structure models. Using the Likelihood Ratio Test and the Akaike information criterion (AIC), we concluded that the Auto Regressive Lag 1, AR(1), model was the best correlation structure for our data set. For fitting models, lme offers maximum likelihood (MLE) and restricted maximum likelihood (REML) estimation. We used the MLE method.
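To make the AR(1) structure and the AIC comparison more concrete, the following Python sketch builds an AR(1) correlation matrix and compares two candidate fits by AIC. It is only an illustration and not the R code used in the study: the function names, the value of `phi`, and the log-likelihoods and parameter counts are hypothetical.

```python
def ar1_correlation(n_times, phi):
    """AR(1) correlation matrix: entry (i, j) equals phi ** |i - j|."""
    return [[phi ** abs(i - j) for j in range(n_times)] for i in range(n_times)]


def aic(log_likelihood, n_params):
    """Akaike information criterion; smaller values are preferred."""
    return 2 * n_params - 2 * log_likelihood


# Correlation decays geometrically with the lag between time points.
corr = ar1_correlation(4, 0.5)
for row in corr:
    print(row)

# Hypothetical fits: (log-likelihood, number of estimated parameters).
candidates = {"independent": (-250.0, 3), "AR(1)": (-240.0, 4)}
scores = {name: aic(ll, k) for name, (ll, k) in candidates.items()}
best = min(scores, key=scores.get)
print(scores, best)
```

With these made-up numbers the AR(1) candidate has the smaller AIC, because the gain in log-likelihood outweighs the penalty for the one extra correlation parameter.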

We fit the model

\[\textbf{y} = \textbf{X}{{\varvec{\beta }}} + \textbf{Z}\textbf{u} + {{\varvec{\epsilon }}} \qquad (1)\]

where \(\textbf{y}\) is a \(N\times 1\) column vector of the outcome variable, \(\textbf{X}\) is a \(N\times p\) matrix of the p predictor variables and their interactions, \({{\varvec{\beta }}}\) is a \(p\times 1\) column vector of the fixed-effects regression coefficients, \(\textbf{Z}\) is a \(N\times (qk)\) matrix for the q random effects and k groups, \(\textbf{u}\) is a \((qk)\times 1\) column vector of q random effects for k groups, and \({{\varvec{\epsilon }}}\) is a \(N\times 1\) column vector of the random errors, i.e., the part of \(\textbf{y}\) that is not explained by the model \(\textbf{X}{{\varvec{\beta }}}+\textbf{Z}\textbf{u}\). In our data, the response variable \(\textbf{y}\) is the concentration level, \(\textbf{X}\) denotes the design matrix for the study factors of group, treatment, season and their two- and three-way interactions, \(\textbf{Z}\) is the design matrix for the random individual-specific effect of month, and \({{\varvec{\epsilon }}}\) is the within-individual measurement error.
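As a supplementary note, mixed models of this form are commonly completed with distributional assumptions such as the following. The covariance matrices \(\textbf{G}\) and \(\textbf{R}\) are not defined in the text above and are introduced here only for illustration:

\[\textbf{u} \sim N(\textbf{0}, \textbf{G}), \qquad {{\varvec{\epsilon }}} \sim N(\textbf{0}, \textbf{R}), \qquad \text{Var}(\textbf{y}) = \textbf{Z}\textbf{G}\textbf{Z}^{\top } + \textbf{R},\]

with \(\textbf{u}\) and \({{\varvec{\epsilon }}}\) independent. Under these assumptions, dropping the random effects and taking \(\textbf{R} = \sigma ^2 \textbf{I}\) gives the general linear model, whereas a structured \(\textbf{R}\) (for example AR(1)) allows the errors to be correlated.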

3 Results and discussion

3.1 Sample data

California generates over 4 billion gallons of wastewater daily, which is managed by more than 900 WWTPs. A dataset from one of these WWTPs in California was examined in this study. Phonsiri (2018) (see Sect. 3.3 in [38]) collected data once a month on the 17th or 18th of each month from April 2017 to March 2018. The flowchart of the treatment processes with sample data locations is shown in Fig. 1.

There are preliminary, primary, and secondary treatment procedures used to remove PPCPs in the WWTP. Large objects like branches, and other items that could clog or harm the treatment plant’s machinery are screened out during the initial stage of the treatment process, known as preliminary treatment or influent screening. Following this screening, wastewater enters a grit chamber where gravity causes any small particles, like sand, eggshells, and coins, to settle to the bottom (treatment Influent, I). The wastewater is prepared for initial treatment procedures following the grit chamber (treatment Primary Effluent, P.E). To facilitate the physical process where heavy particles sink and light particles float, the wastewater is fed into primary settling or clarifier. The wastewater is now prepared for biological or secondary treatment after this procedure. Both the Activated Sludge (treatment A) and secondary Trickling Filter Effluent (treatment T) use microorganisms that feed on organic materials to remove pollutants. A secondary sedimentation tank receives the wastewater from treatment T after the organisms have absorbed and digested the organic contents. The mixture of 20% from treatment T and 80% from treatment A–known as the treatment Final Effluent, F.E–is the last phase and is used for reclaimed water.

We studied five treatments (I, P.E, T, A, and F.E). We regarded F.E as a treatment, even though it is only a combination of two existing treatments (i.e., not a chemical process), in order to compare it with the baseline treatment I. Also, to explore seasonal fluctuations and the effect of seasons on treatment processes, we grouped each data point into the relevant season.

Our main objective is to investigate similar chemical compounds together rather than individually. Thus, the 19 PPCPs are divided into three groups: (1) hormones, (2) analgesic and anti-inflammatory medicines, and (3) antibiotics. Table 1 displays compound names and their groupings.

3.2 Handling missing values

Not applicable (NA) and Non-detect (ND) values are two types of missing values found in environmental data collection. For statistical analysis to be successful, it is critical to handle missing values correctly.

We omitted the NA missing values from the data as we had no information about why they were missing. We were aware, however, that the ND missing data were caused by the method of detection limit (MDL). Regardless of technological advancements, the instrument detection limit, or MDL, may prevent reliable readings of contaminants below the threshold. The MDL is defined as the amount of a material with a 95% chance of having an analyte concentration greater than zero.

Maximum likelihood estimation, extrapolation, and simple replacement are the common methods for imputing ND values. The most popular technique for replacing ND values is called “simple replacement,” in which missing values are replaced by zero, the MDL, or a fraction of the MDL. The MDL fractions that are most frequently used are 1/2 and \(1/\sqrt{2}\). According to Zhang et al. [39], the confidence intervals based on the fraction of 1/2 of the MDL lead to the same conclusion as the maximum likelihood method. Also, using zero values tends to bias estimates downward, while using MDL values tends to bias estimates upward. We therefore substituted \(\text {MDL}/2\) for the ND values. Table 2 displays the MDL values from Phonsiri (2018).
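The handling of NA and ND values described in this section can be sketched in a few lines of Python. This is an illustrative sketch only: the function name `impute_nd`, the sample readings, and the MDL of 2.0 ng/L are hypothetical and do not come from Table 2.

```python
import math


def impute_nd(values, mdl, fraction=0.5):
    """Drop 'NA' entries and replace 'ND' entries with fraction * mdl."""
    cleaned = []
    for v in values:
        if v == "NA":
            continue  # reason for missingness unknown, so the value is omitted
        cleaned.append(fraction * mdl if v == "ND" else float(v))
    return cleaned


# Hypothetical readings for one compound (ng/L) with an assumed MDL of 2.0.
raw = [12.0, "ND", 8.5, "NA", "ND", 20.0]
clean = impute_nd(raw, mdl=2.0)
print(clean)

# The alternative fraction 1/sqrt(2) mentioned in the text.
alt = impute_nd(raw, mdl=2.0, fraction=1 / math.sqrt(2))
print(alt)
```

Keeping the fraction as a parameter makes it easy to check how sensitive downstream summaries are to the choice between 1/2 and \(1/\sqrt{2}\).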

3.3 Descriptive statistics

We analyzed the data in RStudio [40], an integrated development environment for R, which is free software.

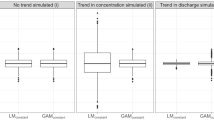

The literature in this field typically reports summary data along with confidence intervals as in Tables 3, 4, or displays boxplots as in Figs. 2, 3. Because dependence between observations and two-way or three-way interactions were not investigated at this point, one should always take precautions when making inferences using confidence intervals.

We only use the summary statistics table to decide whether to compute z-scores (standardized values). Table 3 shows that the concentration levels (measured in ng/L) for distinct groups have a wide range and spread. As a result, we should standardize the data so that all concentration levels fall within the same range. Standardization allows different measures to be compared and helps prevent bias in the statistical analysis. We calculated z-scores for each compound value by subtracting the compound’s mean and dividing by its standard deviation. The new measurements have a mean of 0 and a standard deviation of 1.
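The standardization step can be sketched in Python as follows. The concentrations are hypothetical, and the use of the sample standard deviation (denominator n - 1) is an assumption, since the text does not say which version was used.

```python
import statistics


def z_scores(values):
    """Standardize values: subtract the mean, divide by the sample SD."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD (n - 1); an assumption
    return [(v - mean) / sd for v in values]


# Hypothetical concentrations (ng/L) for a single compound.
conc = [10.0, 20.0, 30.0, 40.0, 50.0]
z = z_scores(conc)
print(z)
print(statistics.mean(z), statistics.stdev(z))
```

Applying the function compound by compound puts compounds with very different concentration ranges on a common scale before they are pooled into groups.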

Figure 2 shows the distributions of chemical removal for groups across several treatment techniques. The mean value is denoted by the “dot” between \(Q_1\) and \(Q_3\) values. We observed that the distributions were right-skewed, Group 2 had the highest mean concentration levels for all treatments, and Group 1 had the most outliers among three groups.

For comparison, we used the median values. Because the P.E. sample was split into two samples for treatments T and A, we compared treatments P.E. and T, P.E. and A, and T and A. We observed minor differences in treatments in Group 1. Treatment P.E outperformed treatment I in Group 3, but not in Groups 1 and 2. In Group 3, only treatment T outperformed treatment P.E, and treatment T surpassed treatment A in Groups 2 and 3. Treatments T and A were more efficient in removing PPCPs than treatment P.E in Group 2. Overall, the comparison of treatments I and F.E demonstrated that the final treatment procedure F.E was successful in removing PPCPs from Groups 2 and 3.

We were also interested in investigating seasonal variations among treatments and groups because consumption rates, precipitation amounts, and socio-geographical variables (such as tourism in the summer and winter) affect the concentration levels of PPCPs in WWTPs. The results are displayed in Table 4 and Fig. 3. The treatment distributions were right-skewed across all groups and seasons, with the exception of treatment I in the spring and treatment T in the winter.

Group 1: The baseline concentration levels (i.e., treatment I) were highest in the fall and winter, respectively, and similar in the other seasons. Treatment A outperformed Treatment T in the fall but performed similarly to treatment F.E. In the spring and summer, none of the treatments outperformed Treatment I. Treatments T and A outperformed treatment P.E for reducing PPCPs in the winter, while treatments A and F.E worked equally well.

Group 2: None of the treatments in the fall outperformed treatment I. Treatment P.E performed similarly to other treatments in the spring, however, it was more successful than treatment I in removing contaminants. Surprisingly, treatment F.E. was not as successful as treatment I and was the second worst treatment after treatment A. Although treatments P.E. and T were superior to treatment I, they both removed PPCPs with comparable results.

Group 3: While removal performance from treatments I and P.E was equivalent in the fall, treatment T marginally outperformed treatment P.E. Although treatment A was the worst, its blend with treatment T, i.e., treatment F.E, was the best. The seasons with the most PPCP concentration levels were spring followed by winter. Geographical regions, seasonal variations in infectious illnesses, or variations in antibiotic prescription patterns could all be contributing factors to the elevated concentration levels. The best treatment in the spring was F.E followed by P.E. All treatments outperformed treatment I, and treatment T was superior to treatment A. The best treatment over the summer was treatment T, which was an improvement over treatment I. The treatments P.E., A, and F.E. were inferior to treatment I. In the winter, Treatment F.E and Treatment T performed the worst, with Treatment A outperforming them all. Treatment P.E., the initial chemical treatment, was more successful at eradicating PPCPs than treatment I.

In summary, we found that the treatment processes had varied effects on different groups (i.e., two-way interaction between treatment processes and groups) and two-way interaction effects between groups and seasons. However, because data dependency was not addressed, we should be cautious about accepting the results from boxplots as valid inferences.

3.4 Data analysis

We visually investigated, using three-way plots, whether the interaction between two factors differed depending on the third factor’s level. Figure 4 shows that there might be a weak three-way interaction since the effects of the treatment procedures in groups differ slightly between seasons. There appears to be a treatment-season interaction because each treatment process behaves differently in different seasons. Also, there may be a two-way interaction between group and season because each group behaves differently in different seasons. However, we do not observe that the treatment processes vary among groups. We will use the preliminary findings from the graph in building the model.

We may rewrite the model Eq. (1) in R-syntax as

with AR(1) as the correlation structure for the errors. The term \((1\mid Month)\) incorporates the dependency of the data by including month as a random component in the model. We chose to fit the model using z-scores rather than raw concentration levels because the raw values vary widely across compounds.
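For readers who prefer scalar notation, one way to write a model with these ingredients is sketched below. The index and symbol names (\(g\) for group, \(t\) for treatment, \(s\) for season, \(m\) for month, and the Greek letters) are our own illustrative choices and do not appear in the original analysis:

\[z_{gtsm} = \mu + \alpha _g + \tau _t + \gamma _s + (\alpha \tau )_{gt} + (\alpha \gamma )_{gs} + (\tau \gamma )_{ts} + (\alpha \tau \gamma )_{gts} + u_m + \epsilon _{gtsm},\]

where \(z_{gtsm}\) is the standardized concentration, \(u_m \sim N(0, \sigma _u^2)\) is the random intercept for month, and the errors follow an AR(1) pattern, so that two errors that are \(h\) steps apart in the ordering used by the correlation structure have correlation \(\phi ^{h}\).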

First, we investigated whether there was three-way interaction between groups, treatments, and seasons.

Table 5 shows that the mean concentration levels for the treatments and groups were unaffected by seasons (\(p = 0.2540\)). Because there was no three-way interaction, we investigated whether one component influenced the effect of the other (i.e., two-way interactions). Although the mean concentration levels for treatments did not differ significantly across groups (\(p = 0.9992\)), the mean concentration levels for seasons differed by groups (\(p <0.0001\)) and treatments (\(p =0.0027\)).

Because there were two-way interactions, the effect of simultaneous changes cannot be determined by examining the main effects separately. That is, assessing the effects of treatment, group, and season separately is meaningless. Given that we had significant interactions, the next step is to investigate where the important differences are. In the next section, Tukey’s HSD (Honestly Significant Difference) and Bonferroni tests are used to determine means that are significantly different from each other.

3.5 Contrasts of means with Tukey’s HSD and Bonferroni procedures

As post hoc tests, we implemented Tukey’s HSD (Honestly Significant Difference) and Bonferroni, also known as the Dunn-Bonferroni procedures. While the Tukey’s HSD test provides a true \(\alpha\) correction value for the number of comparisons while maintaining statistical power, the Bonferroni test controls the familywise error rate by computing a new pairwise \(\alpha\) to keep the familywise \(\alpha\) value at the specified value. We present only the pairs of means that were statistically significant at \(\alpha =0.05\) in Tables 6, 7, 8, 9.
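The Bonferroni adjustment described above can be illustrated with a short Python sketch. The raw p-values are hypothetical and are not taken from Tables 6, 7, 8, 9; the function name `bonferroni` is our own.

```python
def bonferroni(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical raw p-values from three pairwise comparisons.
raw_p = [0.004, 0.020, 0.300]
adjusted = bonferroni(raw_p)

# Comparing adjusted p-values with alpha is equivalent to comparing
# raw p-values with the stricter pairwise threshold alpha / m.
alpha = 0.05
significant = [p < alpha for p in adjusted]
print(adjusted, significant)
```

In this toy example the second comparison is significant before adjustment but not after it, which shows how the correction trades power for control of the familywise error rate.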

Because Tukey’s HSD and the Bonferroni tests made the same number of comparisons, Table 6 shows the same findings from both procedures for comparisons between seasons and groups of compounds. There were statistical differences in the fall between Groups 1 and 3 (\(p = 0.0004\)) and Groups 2 and 3 (\(p < 0.0001\)). The only difference in spring was between Groups 1 and 3 (\(p = 0.0353\)).

The comparisons between treatments and seasons are reported in Table 7. Except for the p-values, both approaches produced identical estimates for a given comparison. The p-values were different because the number of tests differed between the two procedures.

In the fall, both tests found statistical significance between the treatments P.E and F.E (\(p =0.0287\) for Tukey’s HSD and \(p=0.0351\) for Bonferroni). In winter, the treatment A, a chemical procedure, removed more pollutants than the baseline treatment I (\(p=0.0229\) for Tukey’s HSD and \(p=0.0275\) for Bonferroni).

3.6 Comparison of two-sample t-tests with LMEM

We chose (Welch) two-sample t-tests with unequal sample variances to compare widely used statistics in the analysis of WWTP data with the LMEM method. We should not rely on the findings of the t-tests because the WWTP data is dependent. Because we observed two-way interactions in the LMEM model between treatments and seasons and groups and seasons, we investigated the same relations in the two sample t-tests (Tables 8, 9).
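For reference, the Welch statistic and its approximate degrees of freedom (the Welch-Satterthwaite formula) can be computed as in the following Python sketch. The two samples are hypothetical, and the sketch stops at the test statistic and degrees of freedom rather than computing a p-value.

```python
import math
import statistics


def welch_t(a, b):
    """Return Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    na, nb = len(a), len(b)
    # Per-sample variance of the mean (sample variance divided by n).
    va = statistics.variance(a) / na
    vb = statistics.variance(b) / nb
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    return t, df


# Hypothetical standardized concentrations for two groups.
group_a = [1.0, 2.0, 3.0, 4.0, 5.0]
group_b = [2.0, 4.0, 6.0, 8.0, 10.0]
t_stat, df = welch_t(group_a, group_b)
print(t_stat, df)
```

Because the degrees of freedom depend only on the two samples being compared, they are typically much smaller than the residual degrees of freedom of a model fitted to the whole dataset.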

The degrees of freedom were the key difference between the two methods, as seen in Tables 8, 9. The LMEM approach had significantly more degrees of freedom than the t-test. There was also no consistent trend in the estimates of the contrasts or their standard errors. For example, we cannot assert that the LMEM’s standard error estimates were always lower than those of the t-tests. Although the t-tests discovered the same group differences as the LMEM approach in the fall and spring, they also discovered differences between groups 1 and 3 in the summer and groups 2 and 3 in the winter, spring, and summer (Table 8). Also, in comparing treatments and seasons, there were no similar results between the LMEM and the t-tests (Table 9).

In conclusion, because the LMEM addresses the data relation through the variance-covariance matrix, incorporates random effects with fixed effects, and handles missing values better, we advocate relying on its conclusions.

4 Conclusion

In this research, we investigated five treatment techniques as well as the seasonal effects of removing PPCPs from the WWTP. Seasons had no effect on the mean concentration levels for the treatments or groups, revealing that there was no three-way interaction. We observed, however, that one factor influenced the other (i.e., two-way interactions). While there was no statistically significant difference in treatment mean concentration levels across groups (no treatment-group interaction), treatment mean concentration levels differed by season (treatment-season interaction), and group mean concentration levels differed by season (group-season interaction). Based on the estimations, we discovered that the final effluent achieved its goal of eliminating compounds when compared to the treatment P.E in the fall. Also, the treatment A is preferred over the baseline treatment I in the winter.

To conclude, we recommend that researchers analyze the WWTP dataset using the LMEM technique. It is simple and gives reliable results because the model adequately handles the data correlation structure, mixed effects factors, and missing values. Furthermore, researchers can explore factor inter-relationships through interactions.

References

Harding LW, Gallegos CL, Perry ES, Miller WD, Adolf JE, Mallonee ME et al (2015) Long-term trends of nutrients and phytoplankton in chesapeake bay. Estuar. Coas. 39:664–681. https://doi.org/10.1007/s12237-015-0023-7

Andersen JH, Carstensen J, Conley DJ, Dromph K, Fleming-Lehtinen V, Gustafsson BG et al (2017) Long-term temporal and spatial trends in eutrophication status of the baltic sea. Biol. Rev. 9:135–149. https://doi.org/10.1111/brv.12221

Schøyen M, Green NW, Hjermann DO, Tveiten L, Beylich B, Øxnevad S et al (2019) Levels and trends of tributyltin (tbt) and imposex in dogwhelk (nucella lapillus) along the norwegian coastline from 1991 to 2017. Mar. Envir. Res. 144:1–8. https://doi.org/10.1016/j.marenvres.2018.11.011

Bowman KL, Lamborg CH, Agather AM (2020) A global perspective on mercury cycling in the ocean. Sci. Total Envir. 710:136–166. https://doi.org/10.1016/j.scitotenv.2019.136166

Sericano JL, Wade TL, Sweet ST, Ramirez J, Lauenstein GG (2013) Temporal trends and spatial distribution of DDT in bivalves from the coastal marine environments of the continental United States, 1986–2009. Mar. Pollut. Bull. 81:303–316. https://doi.org/10.1016/j.marpolbul.2013.12.049

Robinson KJ, Hall AJ, Scholl G, Debier C, Thomé J-P, Eppe G et al (2019) Investigating decadal changes in persistent organic pollutants in scottish grey seal pups. Aquat. Conse. Mar. Fresh. Ecos. 29:86–100. https://doi.org/10.1002/aqc.3137

Baalbaki Z, Sultana T, Maere T, Vanrolleghem PA, Metcalfe CD, Yargeau V (2016) Fate and mass balance of contaminants of emerging concern during wastewater treatment determined using the fractionated approach. Sci. Total Envir. 573:1147–1158. https://doi.org/10.1016/j.scitotenv.2016.08.073

Brack W, Dulio V, Slobodnik J (2012) The norman network and its activities on emerging environmental substances with a focus on effect-directed analysis of complex environmental contamination. Envir Sci Eur. https://doi.org/10.1186/2190-4715-24-29

Petrie B, Barden R, Kasprzyk-Hordern B (2014) A review on emerging contaminants in wastewaters and the environment: current knowledge, understudied areas and recommendations for future monitoring. Water Res. 72:3–27. https://doi.org/10.1016/j.watres.2014.08.053

Borja A, White MP, Berdalet E, Bock N, Eatock C, Kristensen P et al (2020) Moving toward an agenda on ocean health and human health in europe. Front. Mar. Sci. 7:37. https://doi.org/10.3389/fmars.2020.00037

Depledge MH, White MP, Maycock B, Fleming LE (2019) Time and tide: our future health and well-being depend on the oceans. B. M. J. 366:4671. https://doi.org/10.1136/bmj.l4671

Fleming LE, Maycock B, White MP, Depledge MH (2019) Fostering human health through ocean sustainability in the 21st century. Peop. Nat. 1(3):276–283

Van Boeckel TP, Gandra S, Ashok A, Caudron Q, Grenfell BT, Levin SA, Laxminarayan R (2014) Global antibiotic consumption 2000 to 2010: An analysis of national pharmaceutical sales data. Lancet. Infect. Dis. 14:742–750. https://doi.org/10.1016/S1473-3099(14)70780-7

Zrnčić M, Gros M, Babić S, Kaštelan-Macan M, Barcelo D, Petrović M (2014) Analysis of anthelmintics in surface water by ultra high performance liquid chromatography coupled to quadrupole linear ion trap tandem mass spectrometry. Chem. 99:224–232. https://doi.org/10.1016/j.chemosphere.2013.10.091

Boxall ABA, Rudd MA, Brooks BW, Caldwell DJ, Choi K, Hickmann S et al (2013) Pharmaceuticals and personal care products in the environment: what are the big questions? Envir. Heal. Persp. 120:1221–1229. https://doi.org/10.1289/ehp.1104477

Fick J, Soderstrom H, Lindberg RH, Phan C, Tysklind M, Larsson DGJ (2009) Contamination of surface, ground and drinking water from pharmaceutical production. Envir. Toxic. Chem. 28(12):2522–2527. https://doi.org/10.1897/09-073.1

Dalkmann P, Siebe C, Amelung W, Schloter M, Siemens J (2014) Does long-term irrigation with untreated wastewater accelerate the dissipation of pharmaceuticals in soil? Envir. Sci. 48:4963–4970. https://doi.org/10.1021/es501180x

Kinney CA, Furlong ET, Werner SL, Cahill JD (2006) Presence and distribution of wastewater-derived pharmaceuticals in soil irrigated with reclaimed water. Envir. Toxic. Chem. 25(2):317–326. https://doi.org/10.1897/05-187R.1

Gworek B, Kijeńska M, Wrzosek J, Graniewska M (2021) Pharmaceuticals in the soil and plant environment: a review. Water Air Soil Pollut Inter J Environ Pollut. https://doi.org/10.1007/s11270-020-04954-8

Salgot M, Priestley GK, Folch M (2012) Golf course irrigation with reclaimed water in the Mediterranean: a risk management matter. Water 4(2):389–429. https://doi.org/10.3390/w4020389

Calderon-Preciado D, Jimenez-Cartagena C, Matamoros V, Bayona JM (2011) Screening of 47 organic microcontaminants in agricultural irrigation waters and their soil loading. Water Res. 45(1):221–231. https://doi.org/10.1016/j.watres.2010.07.050

Heberer T (2002) Occurrence, fate, and removal of pharmaceutical residues in the aquatic environment: a review of recent research data. Toxic. Lettr. 131(1–2):5–17. https://doi.org/10.1016/s0378-4274(02)00041-3

Farré M, Pérez S, Kantiani L, Barceló D (2008) Fate and toxicity of emerging pollutants, their metabolites and transformation products in the aquatic environment. Tren. Anal. Chem. 27:991–1007. https://doi.org/10.1016/j.trac.2008.09.010

Watkinson AJ, Murby EJ, Kolpin DW, Costanzo SD (2009) The occurrence of antibiotics in an urban watershed: from wastewater to drinking water. Sci. Total Envir. 407:2711–2723. https://doi.org/10.1016/j.scitotenv.2008.11.059

Borecka M, Siedlewicz G, Haliński ŁP, Sikora K, Pazdro K, Stepnowski P, Białk-Bielińska A (2015) Contamination of the southern Baltic Sea waters by the residues of selected pharmaceuticals: method development and field studies. Mar. Pollut. Bull. 94:62–71. https://doi.org/10.1016/j.marpolbul.2015.03.008

Caban M, Lis E, Kumirska J, Stepnowski P (2015) Determination of pharmaceutical residues in drinking water in Poland using a new SPE-GC-MS(SIM) method based on Speedisk extraction disks and DIMETRIS derivatization. Sci. Total Envir. 538:402–411. https://doi.org/10.1016/j.scitotenv.2015.08.076

Beek T, Weber FA, Bergmann A, Hickmann S, Ebert I, Hein A, Küster A (2016) Pharmaceuticals in the environment-global occurrences and perspectives. Envir. Toxic. Chem. 35(4):823–835. https://doi.org/10.1002/etc.3339

Zorita S, Mårtensson L, Mathiasson L (2009) Occurrence and removal of pharmaceuticals in a municipal sewage treatment system in the south of Sweden. Sci. Total Envir. 407:2760–2770. https://doi.org/10.1016/j.scitotenv.2008.12.030

Arlos MJ, Bragg LM, Parker WJ, Servos MR (2014) Distribution of selected antiandrogens and pharmaceuticals in a highly impacted watershed. Water Res. 72:40–50. https://doi.org/10.1016/j.watres.2014.11.008

Seleiman MF, Santanen A (2020) Recycling sludge on cropland as fertilizer—advantages and risks. Res. Conser. and Recyc. 155:104647

Chebor J, Kiprop EK, Mwamburi LA (2017) Effect of seasonal variation on performance of conventional wastewater treatment system. J. App. Envir. Micro. 5(1):1–7. https://doi.org/10.12691/jaem-5-1-1

Mauchly JW (1940) Significance test for sphericity of a normal n-variate distribution. An. Math. Stat. 11:204–209

Greenhouse SW, Geisser S (1959) On methods in the analysis of profile data. Psycho. 24:95–112

Huynh H, Feldt LS (1976) Estimation of the box correction for degrees of freedom from sample data in randomised block and split-plot designs. J. Educ. Stat. 1:69–82

Barr DJ (2008) Analyzing visual world eyetracking data using multilevel logistic regression. J. Memory. Lang. 59(4):457–474

Raudenbush SW, Bryk AS (2002) Hierarchical linear models: applications and data analysis methods. Sage

Laird NM, Ware JH (1982) Random-effects models for longitudinal data. Biome. 38(4):963–974. https://doi.org/10.2307/2529876

Phonsiri V (2018) Occurrence and detection of variety of human and animal pharmaceuticals in engineered wastewater system. M.S. Dissertation in Environmental Engineering, California State University, Fullerton, CA. figshare http://hdl.handle.net/20.500.12680/fb494946z

Zhang Z, Lennox WC, Panu US (2004) Effect of percent non-detects on estimation bias in censored distributions. J. Hydro. 297(1–4):74–94. https://doi.org/10.1016/j.jhydrol.2004.04.017

Team R (2019) RStudio: integrated development for R. RStudio Inc.

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information

Contributions

The author prepared the manuscript and analyzed the data.

Ethics declarations

Competing interest

The author has no relevant financial or non-financial interests to disclose.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bourget, G. Statistical analysis of wastewater treatment plant data. SN Appl. Sci. 5, 130 (2023). https://doi.org/10.1007/s42452-023-05357-0
