Abstract

In this study, a parameter-uniform numerical scheme is built and analyzed to treat a singularly perturbed parabolic differential equation involving large spatial delay. The solution of the considered problem has two strong boundary layers due to the effect of the perturbation parameter, and the large delay causes a strong interior layer. The behavior of the layers makes it difficult to solve such problem analytically. To treat the problem, we developed a numerical scheme using the weighted average (\(\theta\)-method) difference approximation on a uniform time mesh and the central difference method on a piece-wise uniform spatial mesh. We established the Stability and convergence analysis for the proposed scheme and obtained that the method is uniformly convergent of order two in the temporal direction and almost second order in the spatial direction. To validate the applicability of the proposed numerical scheme, two model examples are treated and confirmed with the theoretical findings.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A differential equation is said to be a delay differential equation (DDE) if the evolution of a dependent variable or its derivatives appears depending on the values of the previous state. DDE can manifest itself in different forms, such as constant delay, variable delay, state-dependent delay, distributed delay and so on [1]. In science and engineering, DDEs are used to model a variety of real-world phenomena, such as variation problems in control theory [2], stochastic neuronal movement depolarization model [3], mathematical modelling of HIV-1 infection [4], to model COVID-19 pandemic [5] and others.

A class of differential equations, which involves a small positive parameter \(\varepsilon\) (often \(0<\varepsilon \ll 1\)) multiplied by the term with highest order derivative and one or more shifting parameters is said to be a singularly perturbed delay differential equation. The delay parameter may be considered as larger or smaller than the perturbation parameter. If the delay parameter exceeds the perturbation parameter, then the problem is said to be a singularly perturbed problem with large delay. On the other hand, if the delay parameter is less than the perturbation parameter, then the problem is said to be a singularly perturbed problem with small delay [6,7,8].

The presence of the perturbation parameter causes the problem’s solution to change rapidly in certain regions and slowly in other regions of the domain. Regions where the solution changes rapidly are known as the inner region and those where the solution changes slowly are said to be the outer regions. Perturbation problems may be classified as singular and regular. A regular perturbation problem is a problem whose solution varies smoothly as \(\varepsilon\) approaches zero, whereas singular perturbation problem is the one in which the solution changes precipitously in some way as \(\varepsilon\) approaches zero. Thus, in the study of singular perturbation problems, naively considering \(\varepsilon\) to be zero changes the very nature of the problem. The rapid changing behavior of the layers makes it difficult to find an analytical solution. Also, classical numerical methods do not give a simplified and satisfactory result as they do not take the characteristics of the solutions in the boundary or interior layer into account, which causes a significant discrepancy between the numerical solution and the actual solution. To overcome these kinds of computational problems, there is a need of parameter independent numerical methods [9].

Numerous research works are available in literature to obtain suitable numerical techniques for singularly perturbed problems,. For instance, Kumar [10] proposed a collocation method for singularly perturbed turning point problems involving boundary/interior layers. Kumar and Kumari [11] solved singularly perturbed problems with integral boundary condition by constructing a parameter-uniform collocation scheme. Cimen and Amiraliyev [12] solved singularly perturbed problems based on a piecewise uniform mesh of Shishkin type. Cimen [13] treated singularly perturbed differential equation with delay and advance by constructing a scheme by the method of integral identities with the use of interpolating quadrature rules. Kumar and Kanth [14] solved time dependent singularly perturbed differential equation using tension spline on a non-uniform Shishkin mesh. Adilaxmi and Reddy [15] solved singularly perturbed differential-difference equations applying an initial value technique via fitted nonstandard finite difference method. Musharary and Mohapatra [16] treated singularly perturbed problems with mixed arguments by constructing a hybrid parameter-uniform numerical schemes. Shakti and Mohapatra [17] presented monotone hybrid numerical scheme for a singularly perturbed convection-diffusion problem on different types of nonuniform meshes. Bansal and Sharma [18] formulated a parameter-uniform numerical scheme using fitted mesh method to solve a singularly perturbed convection-diffusion problem with shift arguments. Woldaregay and duressa [19] treated singularly perturbed problems involving both shift and advance parameters by develo** a parameter-uniform numerical scheme using nonstandard finite difference method in x-direction and implicit Runge–Kutta method in t-direction. Ejere et al. [20] solved a singularly perturbed differential equation with negative shift by proposing a fitted numerical scheme via domain decomposition. Bansal and Sharma [21] developed a uniformly convergent numerical scheme for singularly perturbed reaction-diffusion problems by using implicit Euler method in the time direction and central finite difference method in the spatial direction. Kumar and Kumari [22] treated singularly perturbed reaction–diffusion problems with large delay by develo** a parameter-uniform numerical scheme using the Crank–Nicolson method in time direction and the central finite difference method in the spatial direction. Singularly perturbed differential equations involving temporal delay are well solved in various research works, some of which can be referred in [23,24,25,26] for the detail discussions.

In this research work, we are motivated to develop a uniformly convergent numerical scheme to treat a singularly perturbed differential equations involving large delay in the spatial variable. To treat the problem, we developed a numerical scheme using the \(\theta\)-method finite difference approximation on a uniform time discretization and using the central finite difference operator on a piece-wise uniform spatial discretization. For the developed numerical scheme, we have established the stability and convergence analysis, which show that our method is convergent, regardless of the perturbation parameter. To demonstrate the validity and applicability of the obtained method, two numerical examples are treated and confirmed with the theoretical findings.

This paper is organized as follows: In Sect. 2, we describe the continuous problem. The numerical method is briefly discussed in Sect. 3. To validate the proposed numerical scheme, we treated and discussed model examples in Sect. 4. The conclusion of the paper is given in Sect. 5. Through out this study, C is considered as a generic positive constant which is independent of the perturbation parameter and the mesh numbers. For a given continuous function u(x,t), we defined the maximum norm as \(\Vert u(x,t)\Vert =\smash {\displaystyle \max _{(x,t)\in \bar{\Omega }}}|u(x,t)|\).

2 Continuous problem

Consider a singularly perturbed problem on \(\Omega = \Gamma \times \Lambda = (0,2)\times (0,T]\) and \(\partial \Omega =\{(x,0)\cup (0,t)\cup (2,t): x \in \bar{\Gamma }=[0, 2], t\in \bar{\Lambda }=[0, T]\}\) for a finite time T as

subjected to the initial condition \(u(x,0)=u_{0}(x),\ \ \forall x \in \bar{\Gamma }\) and the boundary conditions \(u(x,t)=\gamma (x,t), \ \ \forall (x,t) \in \Omega ^{-}, \ u(2,t)=\zeta (t), \ \ \forall (2,t) \in \Omega ^{+}\), where \(\Omega ^{-}=\{(x,t): x\in [-1,0], \ t \in \bar{\Lambda }\}\), \(\Omega ^{+}=\{(2,t): t \in \bar{\Lambda }\}\) and \(0 < \varepsilon \ll 1\).

2.1 Basic assumptions of the continuous problem

We assume that the functions involved in the continuous problem are all smooth enough and moreover, for arbitrary positive number \(\beta\), the functions p(x) and q(x) satisfy the conditions

Considering the interval boundary conditions, (1) can be written as

with \(u(x,0)=u_{0}(x)\) for \(x\in \bar{\Gamma }\), \(u(x,t)=\gamma (x,t)\) for \((x,t)\in \Omega ^{-}\), \(u(2,t)=\zeta (t)\) for \(t\in \bar{\Lambda }\), \(u(1^{-},\,t)=u(1^{+},\,t)\) and \(u_{x}(1^{-},\, t)=u_{x}(1^{+},\, t)\) .

Letting \(\varepsilon =0\), we obtain the reduced form of Eq. (3) as

From the reduced problem, we observe that \(u_{0}(x,t)\) does not necessarily satisfy \(u_{0}(0,t)=\gamma (0,t)\) and \(u_{0}(2,t)=\zeta (t)\). This shows that the solution involves boundary layers at the end of the domain. At \(x=1\), since \(u_{0}(1^{-}, t)=\frac{f(1, t)-q(1)\gamma (0^{-}, t)-(u_{0})_{t}(1, t)}{p(1)}\) and \(u_{0}(1^{+}, t)=\frac{f(1, t)-q(1)u_{0}(0^{+},t)-(u_{0})_{t}(1, t)}{p(1)}\), it is not necessary that \(u_{0}(1^{-},t)\) is equal to \(u_{0}(1^{+},t)\). Hence, at \(x=1\) the solution involves strong interior layer. For more information we may refer [22, 27]. The compatibility requirement is also imposed to be satisfied by the problem under consideration, which means that at the points \((-1,0), (0,0), (1,0)\) and (2, 0) we have

As a result, if the aforementioned conditions are met, the existence and uniqueness of the solution of the continuous problem on a given domain can be determined. Thus, as described in [28], for \((x,t) \in \bar{\Omega }\), we have \(|u(x,t)-u_{0}(x)|\le Ct\).

2.2 Properties of the solution and its derivatives

In this subsection, we describe the stability and bound of the analytical solution and its derivatives for the continuous problem, which are important in the analysis of the discrete problem [29].

Lemma 1

Let \(\psi\) be a given sufficiently smooth function on \(\bar{\Omega }\). If \(\psi (x,t) \ge 0\), \((x,t)\in \partial \Omega\) and \(L_{\varepsilon }\psi (x,t)\ge 0\), \((x, t) \in \Omega\), then we have \(\psi (x,t)\ge 0\), \((x, t) \in \bar{\Omega }\).

Proof

Let \((\hat{x},\hat{t}) \in \bar{\Omega }\) be given and assume that \(\psi (\hat{x},\hat{t})=\smash {\min _{\bar{\Omega }}}\psi (x,t)<0\). From the hypothesis, \((\hat{x},\hat{t}) \notin \partial \Omega\) and from the derivative tests, \(\psi _{x}(\hat{x},\hat{t})=0\) and \(\psi _{xx}(\hat{x},\hat{t}) > 0\).

Case 1

On (0, 1] by (3), \(L_{\varepsilon }\psi (\hat{x},\hat{t})= u_{t} -\varepsilon \psi _{xx}+p(\hat{x})\psi (\hat{x},\hat{t}) < \ 0\).

Case 2

On (1, 2) by (3), \(L_{\varepsilon }\psi (\hat{x},\hat{t})=u_{t}-\varepsilon \psi _{xx}+p(\hat{x})\psi (\hat{x},\hat{t})+q(\hat{x})\psi (\hat{x}-1,\hat{t}) \le -\varepsilon \psi _{xx}(\hat{x},\hat{t})+2\alpha \psi (\hat{x},\hat{t}) < \ 0.\)

From the two cases, we see that \(L_{\varepsilon }\psi (\hat{x},\hat{t})< 0\), which is inconsistent with the hypothesis and proves that our assumption is incorrect. As a result, \(\psi (\hat{x},\hat{t})\ge 0\), which gives \(\psi (x,t)\ge 0\), \((x,t) \in \bar{\Omega }\). \(\square\)

Lemma 2

Let u(x, t) be the solution of Eq. (1). Then, we can estimated it as

where \(\Vert u\Vert _{\partial \Omega }=\max \{|u(0,t)|, |u(2,t)|\}\).

Proof

Define barrier functions as \(\omega ^{\pm }(x,t)=\max \left\{ \Vert u\Vert _{\partial \Omega }, \frac{1}{2\beta }\Vert L_{\varepsilon }u\Vert \right\} \pm u(x,t)\). Then, we have

For \(x\in (0,1]\), we have

For \(x\in (1,2)\), we have

Applying Lemma 1, \(\omega ^{\pm }(x,t) \ge 0\), \((x,t)\in \bar{\Omega }\), which gives the required stability estimate. \(\square\)

Lemma 3

For \((x,t) \in \bar{\Omega }\), the derivatives of the solution of Eq. (1) can be estimated as

Proof

In Eq. (4) for \(m=0\), we have \(|u(x,t)|\le C\), which implies Lemma 2. For \(m=1\), rearranging (1) gives

As described by [30], if we assume that all of the data considered in (1) are zero, we have \(u(x,0)=u_{0}=0\), \(u(x,t)=\gamma (x,t)=0\), \(u(2,t)=\zeta (t)=0\), so that (7) becomes

For \((x,t)\in \Omega ^{-}\), we have \(u(x-1,0)=\gamma (x-1,0)=0\) and for \((x,t)\in \Omega ^{+}\), we have \(u(x-1,0)=\zeta (0)=0\). Hence, (8) can be reduced to \(u_{t}(x,0)=f(x)\) and since f is smooth function, we have \(|u(x,0)|\le C\), \(x\in \partial \Gamma\), which gives

Similarly, for \(m=2\), assuming that the initial and boundary values considered in (1) are identically zero and differentiating with respect to t, we get \(u_{tt}-\varepsilon u_{xxt}+p(x)u_{t}(x,t)+q(x)u_{t}(x-1,t)=f_{t}(x)\) and along \(t=0\), it becomes

Since \(u_{xxt}(x,0)=f_{xx}(x)\) and \(u_{t}(x-1,0)=0\), (10) becomes \(u_{tt}(x,0)=\varepsilon f_{xx}(x)-p(x)f(x)+f_{t}(x)\), which implies that \(|u_{tt}(x,0)|\ \le \ C\) on \(\partial \Omega\) as f is smooth function. Hence, we have

Analogously, we can bound the derivatives of the solution in the spatial variable. That is, for \(m=0\), we obtain the stability estimate as in Lemma 2. For \(m=1\), let \(x \in \Gamma\) and consider a neighborhood \(I=(a, a+\sqrt{\varepsilon })\), \(\forall x \in I\). Then, applying the mean value theorem for some \(a\in \bar{I}\) and \(t\in (0,T]\), we can get

Now, for any x in \(\bar{I}\), we can get

Using (11), we can get \(|u_{x}(x,t)|\le C\varepsilon ^{\frac{-1}{2}}\left( \Vert u\Vert _{\partial \Omega }+\Vert f\Vert +\Vert f_{t}\Vert \right)\). Rearranging the terms in (1), we ge \(u_{xx}=\varepsilon ^{-1}\left( u_{t}+p(x)u(x,t)+q(x) u(x-1,t)-f(x)\right)\). From this result and (9), it is possible to write the bound of \(u_{xx}(x,t)\) as

In a similar procedure, the estimation in (6) can be confirmed and for more detail, we refer [31]. \(\square\)

3 Discrete problem

In this section, we develop a discrete problem for the model problem (1) in two steps, first based on the time variable and then based on the spatial variable to obtain a fully discrete problem.

3.1 Temporal semi-discretization

Let M denotes the number of mesh on [0, T]. Then, define a uniform mesh \(\Omega ^{M}_{t}\) as \(\Omega ^{M}_{t}=\left\{ t_{j}=j\Delta t, \ j+1=1(1) M, \ \Delta t=\frac{T}{M}\right\}\) and let’s apply the \(\theta\)-method for which \(\theta \in [0,1]\). For \(\theta \in [0,1/2)\), we obtain explicit method and in this case the method is unstable. For \(\theta =1/2\), we obtain the Crank–Nicolson method and for \(\theta \in (1/2,1]\), we obtain an implicit scheme. In general the \(\theta\)-method is numerically stable for \(\theta \in [1/2,1]\) [32]. Applying the \(\theta\)-method finite difference approximation on \(\Omega ^{M}_{t}\), (1) becomes

where

and

subjected to \(U^{0}(x)=u_{0}(x), \ x \in \bar{\Gamma }\), \(U^{j+1}(x)=\gamma (x,t_{j+1}) , \ x \in [-1,0], \ j+1=1(1)M\) and \(U^{j+1}(2, t_{j+1})=\zeta (t_{j+1}),\ j+1=1(1)M\).

Lemma 4

(Semi-discrete maximum principle) Let the mesh function \(\varphi ^{j+1}(x)\), \(j+1=1(1)M\) satisfies \(\varphi ^{j+1}(x)\ge 0\), \(x\in \partial \Gamma\) and \((1+\Delta t \theta L^{M}_{\varepsilon ,x})\varphi ^{j+1}(x)\ge 0\), \(x \in \Gamma\). Then \(\varphi ^{j+1}(x) \ge 0\), \(x \in \bar{\Gamma }\).

Proof

For some \(\hat{x} \in \Gamma\), assume that \(\varphi ^{j+1}(\hat{x})=\smash {\min _{x \in \bar{\Gamma }}}\varphi ^{j+1}(x)<0\). Then, by the extreme value properties, \(\varphi ^{j+1}_{x}(\hat{x})=0\) and \(\varphi ^{j+1}_{xx}(\hat{x})\ge 0\), and from the given hypothesis, \(\hat{x} \notin \{0,2\}\).

Case 1: For \(\hat{x} \in (0,1]\), we have

Case 2: For \(\hat{x} \in (1,2)\), we have

From these two cases we see that \((1+\Delta t \theta L^{M}_{\varepsilon })\varphi ^{j+1}(\hat{x})\le 0\), for some \(\hat{x} \in \Gamma\), which contradicts the given condition. This implies that \(\varphi ^{j+1}(x) \ge 0\), for all \(x \in \bar{\Gamma }\).

Additionally, the maximum principle is satisfied by the operator \(1+\Delta t \theta L^{M}_{\varepsilon ,x}\) and as a result, we have

\(\square\)

Lemma 5

Let the solution to the semi-discrete problem (12) be \(U^{j+1}(x)\), \(j+1=1(1)M\). Then, it can be estimated as

Proof

To verify this estimate, define

At \(x=0\), we have \(\psi _{\pm }^{j+1}(0) \ge \ \frac{\Delta t}{1+2\beta \theta \Delta t}\Vert g\Vert \ge \ 0\).

At \(x=2\), we have \(\psi _{\pm }^{j+1}(2) \ge \ \frac{\Delta t}{1+2\beta \theta \Delta t}\Vert g\Vert \ge \ 0\).

For \(x\in (0,1]\), from (12), we have

\(\square\)

For \(x\in (1,2)\), from (12), we have

As a result, using Lemma 4, \(\psi _{\pm }^{j+1}(x)\ge 0\), which implies the required stability estimate.

Lemma 6

Let \(\left|\frac{\partial ^{m} u(x,t)}{\partial t^{m}} \right|\le C\) for \((x,t) \in \bar{\Omega }\) and \(m=0,1,2\). Then, the local error is estimated as

Proof

The local truncation error can be determined by selecting an appropriate base point, where the Taylor series is expanded. The convenient base point for a fully implicit method is the point \((x_{i}, t_{j+1})\). However, the center point, \((x_{i},t_{j+\frac{1}{2}})\) is a convenient base point for any value of \(\theta \in [\frac{1}{2},1)\) and using this base point, the Taylor series expansion yield

Taking the difference between (12) and (15), we obtain the local error satisfying

Thus, applying Lemma 4, we can obtain the required estimate. \(\square\)

Lemma 7

Assuming that Lemma 6 holds true, the global truncation errors \(E^{j+1}\) can be estimated as

Proof

By the local error estimate up to the \((j+1)^{th}\) time level for \(\theta =\frac{1}{2}\), we have

By a similar procedures for \(\frac{1}{2}<\theta \le 1\), we have

\(\square\)

Remark 1

To obtain an improved error estimate, we decompose \(U^{j+1}(x)\) as \(U^{j+1}(x)=V^{j+1}(x)+W^{j+1}(x)\), where \(V^{j+1}(x)\) is the smooth component satisfying the problem

and \(W^{j+1}(x)\) is the singular component satisfying the problem

Lemma 8

The derivatives of \(U^{j+1}(x),\, j+1=1(1)M\) and its components can be estimated as

where \(a_{1}(x, \beta )=e^{-\sqrt{\frac{\beta }{\varepsilon }}x}+e^{-\sqrt{\frac{\beta }{\varepsilon }}(1-x)}\), \(a_{2}(x, \beta )=e^{-\sqrt{\frac{\beta }{\varepsilon }}(x-1)}+e^{-\sqrt{\frac{\beta }{\varepsilon }}(2-x)}\) and \(m=0, 1, 2, 3\) \(.\)

Proof

For the proof, we refer [27, 33]. \(\square\)

3.2 Spatial discretization

Here, we start by splitting the spatial domain [0, 2] into two sub-intervals [0, 1] and (1, 2]. For N mesh numbers, consider \(\bar{\Omega }^{N}_{x}\) as a piece-wise uniform spatial mesh. Then, each sub-intervals can be further divided as \([0,1]=[0,\sigma ]\cup (\sigma , 1-\sigma ]\cup (1-\sigma , 1]\) and \((1,2]=(1,1+\sigma ]\cup (1+\sigma ,2-\sigma ]\cup (2-\sigma ,2]\), Where \(\sigma\) is a transition parameter, which separates the non-uniform into uniform meshes and given as \(\sigma =\min \left\{ \frac{1}{4},2\sqrt{\frac{\varepsilon }{\beta }}\ln N\right\}\). We consider \(\frac{N}{8}\) mesh numbers on each of the fine mesh regions \([0,\sigma ]\), \((1-\sigma ,1)\) , \((1,1+\sigma )\) and \((2-\sigma ,2)\), and \(\frac{N}{4}\) mesh numbers on each of the coarse mesh regions \((\sigma ,1-\sigma ]\) and \((1+\sigma ,2-\sigma ]\). The nodal mesh points are \(x_{i}=x_{i-1}+h_{i} , \ i=1,2, ... , N\) , where the mesh interval \(h_{i}\)’s are given by

Now, let’s define the standard finite difference operators as

Substituting the differential operator in Eq. (12) by the standard finite difference operators, we obtain a fully-discretized problem as

where

and

From Eq. (17), we obtain a system of equations of the form

where \(\begin{aligned}&r_{i}^{-}=\frac{-2\varepsilon \theta }{h_{i}(h_{i}+h_{i+1})}, \, r_{i}^{0}=\frac{2\varepsilon \theta }{h_{i+1}(h_{i}+h_{i+1})}+\frac{2\varepsilon \theta }{h_{i}(h_{i}+h_{i+1})}+\frac{1}{\Delta t}\\&+\theta p_{i},\, r_{i}^{+}=\frac{-2\varepsilon \theta }{h_{i+1}(h_{i}+h_{i+1})},\, G_{i}=(1-\theta ) [\varepsilon D^{+}_{x}D^{-}_{x}U^{j}_{i}-p_{i}U^{j}_{i} -q_{i}\gamma^{j}\\&(x_{i}-1) +f^{j}(x_{i})] -\theta q_{i}\gamma ^{j+1}(x_{i}-1) +\theta f^{j+1}(x_{i})+(1/\Delta t)U^{j}_{i}.\end{aligned}\)

Similarly, we can obtain

where \(r_{i}^{-}\), \(r_{i}^{0}\) and \(r_{i}^{+}\) are provided as above and \(G^{*}_{i}=(1-\theta )[\varepsilon D^{+}D^{-}U^{j}_{i}- p_{i}U^{j}_{i}- q_{i}U^{j}_{N/2-1}+f^{j}(x_{i})] +(1/\Delta t)U^{j}_{i} +\theta f^{j+1}(x_{i})\).

Lemma 9

(Discrete Maximum Principle) Let \(\varphi ^{j+1}_{i}\) be a given mesh function, which satisfies \(\varphi ^{j+1}_{0}\ge 0\) and \(\varphi ^{j+1}_{N}\ge 0\), \(j+1=1(1)M\). If \(L^{N,M}_{\varepsilon } \varphi ^{j+1}_{i}\ge 0\), \(i=1(1)N-1\), then \(\varphi ^{j+1}_{i}\ge 0\), \(i=0(1)N\).

Proof

Assume that \(\varphi ^{j+1}_{\iota }=\smash {\displaystyle \min _{i=0,1,...,N}}\varphi ^{j+1}_{i} <0\) for some \(\iota \in \{0,1,...,N\}\) .

Case 1

For \(i=1(1)\frac{N}{2}\), we have

Case 2

For \(i=\frac{N}{2}+1(1)N-1\), we have

From the two cases, we obtained a contradiction to the given hypothesis, which implies that \(\varphi ^{j+1}_{i}\ge 0\) for all \(i=0(1)N\), \(j+1=1(1)M\). \(\square\)

Lemma 10

(Stability of the discrete problem) Let \(U^{j+1}_{i}\), \(i=0(1)N, \, j+1=1(1)M\) be a solution of the fully-discrete problem (17). Then, it is estimated as

Proof

To confirm this estimate, define two barrier functions as

The estimate holds true for \(i\in \{0,N\}\), and for other values of i, we examine the two cases below.

Case 1

For \(i=1(1)\frac{N}{2}\), we get

Case 2

For \(i=\frac{N}{2}+1(1)N-1\), we get

So, applying Lemma 9, the required stability estimate can be obtained. \(\square\)

Remark 2

To obtain an improved error estimate for the discrete scheme, the solution \(U^{j+1}_{i}\) can be decomposed into its regular component \(V^{j+1}_{i}\) and singular component \(W^{j+1}_{i}\) as \(U^{j+1}_{i}=V^{j+1}_{i}+W^{j+1}_{i}\). The regular component satisfies he problem

where

And the singular component, \(W^{j+1}_{i}\) satisfies the problem

Theorem 1

Suppose that \(U^{j+1}(x_{i})\) is the solution of (12) and \(U^{j+1}_{i}\) is the solution of (17). Then, the local truncation error at the \((j+1)^{th}\) time level is given by

Proof

The local truncation error at each mesh point \((x_{i}, t_{j+1})\) is given as \(e^{j+1}_{i}= U^{j+1}(x_{i})-U^{j+1}_{i}\). So, we can treat the error estimates in the regular and singular components separately. Using the classical argument for \(i=1(1)\frac{N}{2}-1\), the local error of the regular component is

Using the results in chapters 4 and 6 of [34], we obtain

And for \(m=0(1)4\), the regular component satisfies

which implies that \({\left\{ \begin{array}{ll} |V'''(x_{i},t_{j+1})|\le C(1+\varepsilon ^{-1/2}) , \\ |V^{(4)}(x_{i},t_{j+1})|\le C(1+\varepsilon ^{-1}). \end{array}\right. }\)

Since \(x_{i+1}-x_{i-1}\le 2/N\), (18) and (19) yield

Taking the smaller order of \(\varepsilon\), we get \(|L^{N,M}_{\varepsilon }(V^{j+1}_{i}-V^{j+1}(x_{i}))|\le {\left\{ \begin{array}{ll} C N^{-1}\varepsilon ^{1/2}, \ \ x_{i}\in (\sigma , 1-\sigma ),\\ C N^{-2}, \ \ otherwise. \end{array}\right. }\)

Now, introduce \(\psi (x_{i}) = CN^{-2}+CN^{-2}\frac{\sigma }{\sqrt{\varepsilon }}{\left\{ \begin{array}{ll} x_{i}/\sigma , \ \ 0\le x_{i}\le \sigma , \\ 1, \ \ \sigma \le x_{i}\le 1-\sigma ,\\ (1-x_{i})/\sigma , \ \ 1-\sigma \le x_{i}\le 1, \end{array}\right. }\) which provide \(\psi (x_{i}) = CN^{-2}+CN^{-2}\frac{\sigma }{\sqrt{\varepsilon }}(1)\), for \(x_{i}\in (\sigma , 1-\sigma )\). Since \(\sigma =2\sqrt{\frac{\varepsilon }{\beta }}\ln N\), we can obtain \(\psi (x_{i}) \le CN^{-2}\ln N\). Consequently, we have \(L^{N,M}_{\varepsilon }\left( \psi (x_{i})\pm (V^{j+1}_{i}-V^{j+1}(x_{i}))\right) \ge 0\) and \(L^{N,M}_{\varepsilon }\psi (x_{i})\ge {\left\{ \begin{array}{ll} C N^{-1}\varepsilon ^{1/2}, \ \ x_{i}\in (\sigma , 1-\sigma ),\\ C N^{-2}, \ \ otherwise. \end{array}\right. }\)

Applying Lemma 9, we obtain \(|V^{j+1}_{i}-V^{j+1}(x_{i})|\le \psi (x_{i})\le CN^{-2}\ln N\). Following similar procedure for \(i=\frac{N}{2}+1, \frac{N}{2}+2, ..., N-1\), we can obtain \(|V^{j+1}_{i}-V^{j+1}(x_{i})|\le \psi (x_{i})\le CN^{-1}\) and combining these outcomes gives

For the singular component, the error is estimated considering the transition parameter \(\sigma =\frac{1}{4}\) and \(\sigma =2\sqrt{\frac{\varepsilon }{\beta }}\ln N\) separately. That is, for the case \(\sigma =\frac{1}{4}\), we have a uniform mesh and \(2\sqrt{\frac{\varepsilon }{\beta }}\ln N \ge \frac{1}{4}\), so that \(\varepsilon ^{-1/2}\le C \ln N\) . By the classical argument we have \(|L^{N,M}_{\varepsilon }(W^{j+1}_{i}-W^{j+1}(x_{i}))|\le C\theta (x_{i}-x_{i-1})^{2} \varepsilon |W(x_{i})|_{4}\). Since \(|W^{j+1}(x_{i})|_{4}\le C\varepsilon ^{-2}\) and \(x_{i}-x_{i-1}\le N^{-1}\), we have \(|L^{N,M}_{\varepsilon }(W^{j+1}_{i}-W^{j+1}(x_{i}))|\le C(N^{-1}\ln N)^{2}\). Consequently, by using the maximum principle, we have

By a similar argument on \((1-\sigma , 1)\), we obtain the same estimate as in (21). For the case \(\sigma \le \frac{1}{4}\), a piece-wise uniform meshes exist with \(\frac{4(1-2\sigma )}{N}\) mesh size in \([\sigma ,1-\sigma ]\) and \(\frac{8\sigma }{N}\) mesh size in each of \([0,\sigma ]\) and \([1-\sigma ,1]\). Thus, to estimate \(|W^{j+1}_{i}-W^{j+1}(x_{i})|\), different arguments can be considered depending on the mesh spacing. For \(x_{i}\) in the sub-intervals \([0,\sigma ]\) and \([1-\sigma ,1]\) the classical argument gives \(|L^{N,M}_{\varepsilon }(W_{i}^{j+1}-W^{j+1}(x_{i}))|\le C\varepsilon \theta (x_{i}-x_{i-1})^{2}|W^{j+1}(x_{i})|_{4}\) . Since \(x_{i}-x_{i-1}=\frac{8\sigma }{N}\) and \(|W^{j+1}(x_{i}|_{4}\le C\varepsilon ^{-2}\), we have \(\vert L^{N,M}_{\varepsilon }(W_{i}^{j+1}-W^{j+1}(x_{i}))|\le C\varepsilon \theta \left( \frac{8\sigma }{N}\right) ^{2}C\varepsilon ^{-2}\le CN^{-2}\sigma ^{2}\varepsilon ^{-1}\). But \(\sigma =2\sqrt{\frac{\varepsilon }{\beta }}\ln N\) and hence \(|L^{N,M}_{\varepsilon }(W_{i}^{j+1}-W^{j+1}(x_{i}))|\le CN^{-2}\sigma ^{2}\varepsilon ^{-1}\le C(N^{-1}\ln N)^{2}\). By the maximum principle, we have \(|(W^{j+1}_{i}-W^{j+1}(x_{i}))|\le C(N^{-1}\ln N)^{2}, \ \ x_{i}\in [0,\sigma ]\cup [1-\sigma , 1]\). On the sub-interval \([\sigma ,1-\sigma ]\), the local truncation error of the singular component is

However, \(|D^{+}D^{-}W^{j+1}(x_{i})|\le \smash {\displaystyle \max _{x\in [x_{i-1},\, x_{i+1}]}}|W''(x_{i},t_{j+1})|\), and as a result, we have

Using the estimate for \(W''(x_{i},t_{j+1})\), we have

which implies that \(|L^{N,M}_{\varepsilon } (W^{j+1}_{i}-W^{j+1}(x_{i}))|\le CN^{-2}\le C(N^{-1}\ln N)^{2}, \ \ \ x_{i}\in (\sigma ,1-\sigma )\). So, we have \(|(W^{j+1}_{i}-W^{j+1}(x_{i}))|\le C(N^{-1}\ln N)^{2}\) on \([\sigma , 1-\sigma ]\). Combining the aforementioned findings for the singular components on (0, 1] with analogous procedures and results on the interval (1, 2), we can obtain

Thus, from the results in (20) and (22), we have

which is the error estimate at the \((j+1)^{th}\) time level. \(\square\)

Theorem 2

Let u(x, t) be the solution of the continuous problem (1) and \(U^{j+1}_{i}\) be the solution of the discrete problem (17). Then, the uniform error estimate given as

Proof

From Lemma 7 and Theorem 1, we have

by which we achieved the uniform error estimate for \(i=0(1)N, j+1=1(1)M\). \(\square\)

4 Numerical experiments and discussions

In this section, to demonstrate the validity and applicability of the obtained numerical scheme, we carry out numerical experiments for the problem under consideration. If the exact solution of a problem is known, we compute the maximum absolute error as the difference between the exact solution and the numerical solution obtained using the developed method. However, if the exact solution is not known, the maximum absolute error is computed using the double mesh principle [35] as \(E^{N,M}_{\varepsilon }=\smash {\displaystyle \max _{0\le i\le N, 0\le j\le M}}|U^{N,M}(x_{i},t_{j})-U^{2N,2M}(x_{i},t_{j})|\), where \(U^{2N,2M}(x_{i},t_{j})\) is a numerical solution obtained by doubling both the spatial and temporal mesh numbers, fixing the transition parameter. And the parameter-uniform absolute error is obtained by \(E^{N,M}=\smash {\max _{\varepsilon }}(E^{N,M}_{\varepsilon })\). We compute the nodal rate of convergence as \(R^{N,M}_{\varepsilon }=\frac{\log (E^{N,M}_{\varepsilon }/E_{\varepsilon }^{2N,2M})}{\log {2}}\) and the uniform convergence rate is obtained by \(R^{N,M}=\frac{\log (E^{N,M}/E^{2N,2M})}{\log {2}}\).

Example 1

Consider problem (1) with \(p(x)=3\), \(q(x)=-1\), \(f(x)=1\), \(u_{0}(x)=0\), \(\gamma (x,t)=0\) and \(\zeta (t)=0\) [22].

Example 2

Consider problem (1) with \(p(x)=5\), \(q(x)=-2\), \(f(x)=2\), \(u_{0}(x)=\sin (\pi x)\), \(\gamma (x,t)=0\) and \(\zeta (t)=0\) [21].

We have treated the two examples applying the numerical scheme developed in this paper using MATLAB R2019a software packages. Since the exact solutions are not known, the double mesh principle is applied and the obtained results are given in tabular and graphical forms. The maximum errors and rate of convergences are given in Table 1 for Example 1 and in Table 2 for Example 2. From these tables, we observe that for a given \(\varepsilon\), increasing both time and space mesh numbers, decreases the absolute point-wise error. And by fixing the mesh numbers and decreasing the perturbation parameter results in stabled point-wise error. This indicates the \(\varepsilon\)-uniform convergence of the proposed numerical schemes. Table 3 shows comparisons of the developed method with other results published in literature.

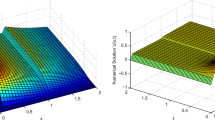

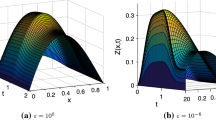

Figures 1 and 3 are plotted for Examples 1 and 2, respectively, at different time levels to show the changes in the boundary and interior layers with respect to the perturbation parameter. Also, to depict the physical behaviors of the solutions, surface plots are given in Figs. 2 and 4 for Examples 1 and 2, respectively. From these figures, we observe that as ε approaches to zero, the boundary and interior layers are resolved. To show the uniform convergence of the method, log-log plots are given in Fig. 5 for both examples.

Simulations for the numerical solution of Example 1, taking \(\theta\)=0.5, \(\beta\)=1 and N=72 and M=72 at four different time levels (line plots)

Simulations for the numerical solution of Example 2, taking \(\theta\)=0.5, \(\beta\)=1.5, N=64 and M=32 at different time levels and perturbation parameters (line plots)

Simulations for the numerical solution of Example 1, taking \(\theta\)=0.5, \(\beta\)=1, N=72 and M=72 for different perturbation parameters (surface plots)

Simulations for the numerical solution of Example 2, taking \(\theta\)=0.5, \(\beta\)=1.5, N=64 and M=32 at different time levels and perturbation parameters (surface plots)

5 Conclusion

In this research work, we considered a singularly perturbed differential equation with large delay in the spatial variable. Due to the influences of the perturbation parameter and the large delay, the solution of the problem changes rapidly in the layers, which is a challenging factor to solve the problem analytically. We treated the problem by constructing a numerical scheme. The scheme is obtained by approximating the time derivative term using \(\theta\)-method on a uniform mesh and the spatial derivative term is approximated using the central difference operator on a nonuniform Shishkin mesh. We established the stability and convergence analysis and obtained that the method is uniformly convergent. The method is demonstrated by solving two model examples. From results of the examples, we observe that the developed numerical scheme is convergent regardless of the perturbation parameter.

References

Humphries T (2016) Delay differential equations. University of Auckland, Bernd Krauskopf, NZMRI, pp 1–18

Glizer V (2000) Asymptotic solution of a boundary-value problem for linear singularly-perturbed functional differential equations arising in optimal control theory. J Optim Theory Appl 106(2):309–335

Stein RB (1967) Some models of neuronal variability. Biophys J 7(1):37. https://doi.org/10.1016/S0006-3495(67)86574-3

Nelson PW, Perelson AS (2002) Mathematical analysis of delay differential equation models of HIV-1 infection. Math Biosci 179(1):73–94

Kiselev AIRKAF Ilya N (2021) A delay differential equation approach to model the COVID-19 pandemic. https://doi.org/10.1101/2021.09.01.21263002

Rao RN, Chakravarthy PP (2014) A fitted Numerov method for singularly perturbed parabolic partial differential equation with a small negative shift arising in control theory. Numer Meth 7(1):23–40

Palli MMK, Ravi Kanth A (2021) Numerical simulation for a class of singularly perturbed convection delay problems. Khayyam J Math 7(1):52–64

Kanth AR, Kumar MM (2017) Numerical treatment for a singularly perturbed convection delayed dominated diffusion equation via tension splines. Int J Pure Appl Math 113(6):110–118

Narasimhan R (2016) Singularly perturbed delay differential equations and numerical methods. Singular perturbation problems. North-Holland Math Stud 172(3):41–62

Kumar D (2018) A collocation method for singularly perturbed differential-difference turning point problems exhibiting boundary/interior layers. J Differ Equ Appl 24(12):1847–1870

Kumar D, Kumari P (2020) A parameter-uniform collocation scheme for singularly perturbed delay problems with integral boundary condition. J Appl Math Comput 63(1):813–828

Cimen E, Amiraliyev GM (2019) Uniform convergence method for a delay differential problem with layer behaviour. Mediterr J Math 16(3):1–15

Cimen E (2020) Uniformly convergent numerical method for a singularly perturbed differential difference equation with mixed type. Bull Belgian Math Soc-Simon Stevin 27(5):755–774

Kumar P, Ravi Kanth A (2020) Computational study for a class of time-dependent singularly perturbed parabolic partial differential equation through tension spline. Comput Appl Math 39(3):1–19

Adilaxmi DB, Reddy YN (2019) An initial value technique using exponentially fitted Non standard finite difference method for singularly perturbed differential-difference equations. Appl Appl Math 14(1):245–269

Sahu Musharary JM S R (2020) A parameter uniform numerical scheme for singularly perturbed differential-difference equations with mixed shifts. J Appl Comput Mech 6(2):344–356

Shakti D, Mohapatra J (2021) Uniform convergence analysis of monotone hybrid scheme for convection-diffusion problems on layer adapted meshes. Math Rep 23(3):325–357

Bansal K, Sharma KK (2016) Parameter uniform numerical scheme for time dependent singularly perturbed convection-diffusion-reaction problems with general shift arguments. Numer Algorithms 75(1):113–145. https://doi.org/10.1007/s11075-016-0199-3

Woldaregay MM, Duressa GF (2019) Parameter uniform numerical method for singularly perturbed parabolic differential difference equations. J Niger Math Soc 38(2):223–245

Ejere AH, Duressa GF, Woldaregay MM, Dinka TG (2022) An exponentially fitted numerical scheme via domain decomposition for solving singularly perturbed differential equations with large negative shift. J Math. https://doi.org/10.1155/2022/7974134

Bansal K, Sharma KK (2018) Parameter-robust numerical scheme for time-dependent singularly perturbed reaction-diffusion problem with large delay. Numer Funct Anal Optim 39(2):127–154. https://doi.org/10.1080/01630563.2016.1277742

Kumar D, Kumari P (2020) Parameter-uniform numerical treatment of singularly perturbed initial-boundary value problems with large delay. Appl Numer Math 153:412–429

Sahu SR, Mohapatra J (2021) Numerical investigation of time delay parabolic differential equation involving two small parameters. Eng Comput. https://doi.org/10.1108/EC-07-2020-0369

Priyadarshana S, Mohapatra J, Govindrao L (2022) An efficient uniformly convergent numerical scheme for singularly perturbed semilinear parabolic problems with large delay in time. J Appl Math Comput 68(4):2617–2639

Govindarao L, Mohapatra J (2019) Numerical analysis and simulation of delay parabolic partial differential equation involving a small parameter. Eng Comput. https://doi.org/10.1108/EC-03-2019-0115

Priyadarshana S, Mohapatra J, Pattanaik S (2022) Parameter uniform optimal order numerical approximations for time-delayed parabolic convection diffusion problems involving two small parameters. Comput Appl Math 41(6):1–32

Manikandan NMJVS M Shivaranjani (2014) A parameter-uniform numerical method for a boundary value problem for a singularly perturbed delay differential equation. In: Advances in applied mathematics, pp 71–88. https://doi.org/10.1007/978-3-319-06923-4-7

Roos HG, Stynes M, Tobiska L (2008) Robust numerical methods for singularly perturbed differential equations: convection-diffusion-reaction and flow problems, vol 24. Springer, New York

Majumdar A, Natesan S (2019) An epsilon-uniform hybrid numerical scheme for a singularly perturbed degenerate parabolic convection-diffusion problem. Int J Comput Math 96(7):1313–1334. https://doi.org/10.1080/00207160.2018.1485896

Kadalbajoo MK, Awasthi A (2011) The midpoint upwind finite difference scheme for time-dependent singularly perturbed convection-diffusion equations on non-uniform mesh. Int J Comput Methods Eng Sci Mech 12(3):150–159

Franklin MVSMJ V Paramasivam (2010) Second order parameter-uniform convergence for a finite difference method for a singularly perturbed linear parabolic system. http://arxiv.org/abs/1008.2470

Woldaregay MM, Duressa GF (2021) Robust numerical method for singularly perturbed parabolic differential equations with negative shifts. Filomat 35(7):2383–2401

Clavero C, Gracia JL (2012) A high order HODIE finite difference scheme for 1D parabolic singularly perturbed reaction-diffusion problems. Appl Math Comput 218(9):5067–5080. https://doi.org/10.1016/j.amc.2011.10.072

Miller JJ, O’riordan E, Shishkin GI (2012) Fitted numerical methods for singular perturbation problems: error estimates in the maximum norm for linear problems in one and two dimensions. World Scientific, Singapore

Doolan EP, Miller JJ, Schilders WH (1980) Uniform numerical methods for problems with initial and boundary layers. Boole Press, Dublin

Acknowledgements

The authors acknowledge the editor and reviewers for their valuable suggestions and comments in improving the original version of this paper. Also, all individuals and institutions, who have contributions in this work are acknowledged by the authors.

Funding

For this research work, Ababi Hailu Ejere obtained a partial financial support from Adama Science and Technology University and sponsorship from Ethiopian Defence University, Engineering College.

Author information

Authors and Affiliations

Contributions

GFD initiated and prepared the plan of this study. AHE developed the numerical scheme and analysis of the results. MMW and TGD revised the procedures, analyses and results of the study. All authors have equal contributions in the paper and agreed on the submitted version.

Corresponding author

Ethics declarations

Conflict of interest

The authors declared that this research work was conducted with out any potential conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ejere, A.H., Duressa, G.F., Woldaregay, M.M. et al. A uniformly convergent numerical scheme for solving singularly perturbed differential equations with large spatial delay. SN Appl. Sci. 4, 324 (2022). https://doi.org/10.1007/s42452-022-05203-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42452-022-05203-9