Abstract

Robot polishing is increasingly being used in the production of high-end glass workpieces such as astronomy mirrors, lithography lenses, laser gyroscopes or high-precision coordinate measuring machines. The quality of optical components such as lenses or mirrors can be described by shape errors and surface roughness. Whilst the trend towards sub-nanometre surface finishes and features progresses, matching both form and finish coherently in complex parts remains a major challenge. With increasing optic sizes, the stability of the polishing process becomes more and more important. If the process behaviour is not known empirically, the optical surface must be measured after each polishing step. One approach is to mount sensors on the polishing head in order to measure process-relevant quantities. On the basis of these data, machine learning algorithms can be applied for surface value prediction. Because installing the sensors modified the polishing head and thereby influenced the process, the first machine learning model could only predict the removal with insufficient accuracy. The aim of this work is to present a polishing head optimised for the sensors, coupled with a machine learning model, in order to predict the material removal and failure of the polishing head during robot polishing. The artificial neural network is developed in the Python programming language using the Keras deep learning library. It starts with a simple network architecture and common training parameters. The model is then optimised step by step using different methods. The data collected in a design of experiments with the sensor-integrated glass polishing head are used to train the machine learning model and to validate the results. The neural network achieves a prediction accuracy of the material removal of 99.22%.

Article highlights

- First machine learning model application for robot polishing of optical glass ceramics
- The polishing process is influenced by a large number of different process parameters. Machine learning can be used to adjust any process parameter and predict the change in material removal with a certain probability. For a trained model, empirical experiments are no longer necessary
- Equipping a polishing head with sensors, which provides the possibility for 100% control


The present work combines two key technologies of the twenty-first century, "Optical Technologies" and "Artificial Intelligence", with the objective of improving the understanding of the polishing process of optical surfaces in order to maximise material removal. The experimental and theoretical investigations carried out within the framework of this work on the mechanisms of action of the process, as well as on the technological interrelationships of the influencing parameters, are intended to provide further fundamentals for the robotic polishing of glass. Special attention is paid to the application of machine learning to the largely empirical process technology of glass polishing. On the one hand, the use of sensors is motivated by business considerations such as plannable maintenance measures, shorter downtimes and cost minimisation, especially through predictive maintenance and condition monitoring. On the other hand, the sensors and actuators can be used to make scientific statements and achieve repeatable results. This includes observing new effects that would otherwise remain hidden due to process divergence and improving recognised weak spots of the process. The use of sensors also provides the basis for readjusting the process parameters in the event of damage or for intervention by the machine operator.

Without exploring the highly nonlinear physical removal mechanism of polishing, the data-driven approach proposed in this study models the relationship between process variables and material removal using a neural network. The enhanced process understanding and knowledge gained about the polishing system can support the increasingly important knowledge-based process design in this regard.

1 Introduction

Polishing is one of the oldest manufacturing processes in the world. About 70,000 years ago, people polished bones to improve the feel of tools [1]. Optical lenses have been manufactured and polished for about 3000 years [2]. Today, polishing is still a very skilled process based mainly on experience and empiricism. Due to its complexity, the mechanism of action of the process is still not fully understood today [3,4,5,6,7,8,9].

Since the 2000s, the level of automation in the optics industry has been steadily increasing. Robots are used as CNC polishing machines in addition to integrated handling. According to Brinkmann [10], in 2000 the degree of automation of the systems was low and the reproducibility of the polishing processes on these machines was not yet given. From about 1990 onwards, CNC-controlled precision machine tools found their way into the production of optical components. Since 1988, surfaces of highest precision have been produced by targeted computer-controlled polishing (CCP), which nowadays corresponds to a shape deviation of 0.15–0.28 nm (depending on the spatial wavelength range between 0.5 and 30 µm) [11].

Robotic polishing is used by the companies Safran (formerly Sagem) [12] and Zeiss Semiconductor Technologies [13]. Commercial production machines are available for smaller optics [14, 15]. The company Coherent (formerly Tinsley) uses a gantry portal approach as a polishing machine [16]. The IRP (Intelligent Robotic Polishers) machines from the company Zeeko have eight degrees of freedom and are used in CCP up to a roughness of 0.2–0.3 Å with a shape deviation of λ/20 [17].

For the fabrication of single precise device surfaces, the operations of core fabrication have to be applied correspondingly often. The qualitative evaluation of the surface is ensured by interferometric measuring or testing procedures. For deterministic manufacturing, profound process understanding, automatic measurement, as well as material-specific and process-relevant parameters are necessary. According to the “Steering Committee Optical Technologies” (original: Lenkungskreis Optische Technologien), process control in polishing is a challenge for the twenty-first century. Due to the large number of process-relevant influencing variables, process control is difficult. The Steering Committee recommends, at least for preferred glasses, the investigation of the parameters, the monitoring of the polishing agent, as well as the integration of sensors and measuring technology for online surface assessment [10]. The American counterpart “Harnessing Light” describes CCP and the production of high-precision optics, for example for EUV technology (extreme ultraviolet radiation), as one of the key technologies of the twenty-first century. Here, roughnesses of 0.1 nm rms and 1 nm peak-to-valley (PV) are achieved [18]. For sustained repeatability, the performance of the manufacturing processes must be increased [19].

The main component of the polishing process consists of a polishing tool that is passed over the glass surface. All material removal takes place in the polishing gap, the area between the polishing tool and the glass surface. The polishing tool usually consists of an elastomer and a polishing film, the viscoelastic polishing agent carrier. Due to the elastic behaviour of the material, the polishing tool clings to the glass surface, even if it is uneven. The polishing gap usually contains a polishing suspension of water and polishing grains.

Deterministic models are suitable for describing individual aspects of the polish but do not manage to describe the polish completely. If individual process parameters diverge or are changed, the validity of the model is no longer given. The use of sensors improves the understanding of the process, but data-driven models do not directly convey a better understanding of the process, as physical models do, for example. The models attempt to describe one or more interactions.

Many parallels can be found between the polishing of optical surfaces and the chemical–mechanical planarisation of wafers, especially in the area of material removal hypotheses. In chemical-mechanical polishing (CMP), also called chemical mechanical planarisation, wafers of different materials (including monocrystalline silicon or silicon carbide) are polished to a thickness accuracy of ± 0.5 µm [20]. By planarising, multi-layer microelectrical circuits can be realised on wafers. Due to the higher economic importance and the larger research community, there are a larger number of publications for chemical–mechanical planarising than for glass polishing. This polishing process differs from the glass polishing process in geometry (exclusively planar workpieces), workpiece size (a workpiece contour), relative speed, the movement system and the number of pieces. CMP of wafers is understood to be similar to the polishing of glass workpieces and is primarily heuristic, i.e. researched via trial and error [21]. As long as the process can be held constant, the removal prediction is accurate. If parameters are changed in the process, no detailed statement about the process can be made [22]. Another reason for the low understanding in CMP is also the low use of in situ sensors [23].

Khalick et al. [24] also used a neural network for robotic polishing of metals. Both material removal and roughness were considered. The learning curve of the network has the appearance of overfitting and the results are for plane samples. The set-up is functional, but very similar to the set-up of the experiments conducted in this work.

Yu et al. [25] used neural networks to make statements about the final roughness of the glass polish with the robot based on the Preston equation. Since an adequate number of experimental data sets was lacking, many data were simulated. The resulting model has a prediction probability of 25.16%, although the results themselves were described as not optimal.

With regard to grinding and polishing with the robot, Diestel et al. [26] use sensors to create an automatic polishing process for injection moulds. Vibration sensors are used in the CMP process in the industrial sector to evaluate the polishing tool and the polishing slurry feed. Pilný and Bissaco [27] monitor the polishing process using acceleration sensors. Ahn et al. use sensors to detect when the polishing tool is worn or when it should be changed to the next smaller grain size. With process parameters similar to those in the present work, a dependence of the final roughness achieved on the vibrations is shown [28]. Segreto and Teti use accelerometers, strain gauges and current measurements together with an artificial neural network to predict the final surface finish achieved in CMP. In wafer planarisation, the polishing tool is dressed in the running process with a separate spindle. If the vibrations at the dressing unit are too high on the complete surface, the conditioning disc must be replaced. If there are individual local abnormal vibrations due to too much friction, a polishing nozzle must be adjusted or replaced [29]. Immersion cameras are used in situ to assess the polishing tool, especially the closure of the pores by material abrasion [30].

Currently, there is no adequate data-driven approach in the optics industry to predict material removal. The aim of this work is to build a sensor-based robot polishing head for glass–ceramic polishing and to predict the material removal based on the acquired sensor data with machine learning (with an artificial neural network). In contrast to deterministic polishing models, the process should also be predicted in this way, despite process fluctuations. Furthermore, 100% control is generated, as every process deviation is recorded.

2 Proceeding

This section deals with the general basics of this work. The individual steps that are necessary for data acquisition are shown. These include the process overview, the measurement technology and the experimental design. In addition, the selection of sensors and actuators is discussed.

In the present work, an existing standard neural network is optimised and applied to removal prediction in polishing. For this purpose, a test plan with a sequence of 17 tests is defined and the data from these tests are used to train the neural network. Within the trials, the PLC samples at approx. 15 Hz. As a result, a total of 434,549 individual measurements with 15 assigned sensor values each are generated in the 17 trials, which are used for training. The network is tuned via the hyperparameters epochs, optimiser, batch size, learning rate, number of neurons, number of layers and dropout. The optimisation takes place sequentially.

2.1 Set-up

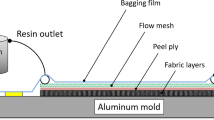

The motion system for this research project is an ABB IRB 4400 industrial robot with an S4C+ controller. A polishing head is attached to the robot, which has a rotation motor for the rotary movement and a linear drive (pneumatic or electric) for the Z-stroke. In order to minimise vibrations, the polishing head is optimised for stiffness and has the lowest possible swing mass. Whilst a pneumatic stroke cylinder readjusts approximately every 16 rotations at a speed of 1000 min⁻¹, an electric direct drive readjusts every 0.27 rotations. The latter is used for the experiments. Highly dynamic linear bearings are used for the normal movement of the linear drive. The polishing tool consists of a workpiece carrier, an elastomer for height compensation and the polishing pad. The polishing tool used is a ball cut-out with a ball diameter of 70 mm and a total width of 50 mm. The polishing wheel is a purchased part from Optotech; the polishing foil is custom cut from the material LP66 with a CO2 laser. The robot cell is enclosed in a protective cage. The polishing tray collects the polishing agent and feeds it back into the polishing agent reservoir, which has an agitator to prevent the polishing agent from settling. A peristaltic pump returns the polishing suspension to the polishing head and supplies the process with new polishing agent. Dirt particles are filtered out of the polishing suspension before the nozzle on the polishing head and before the reservoir. The set-up is shown schematically in Fig. 1.
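As a rough worked conversion of the readjustment intervals quoted above, assuming the spindle speed is held at exactly 1000 min⁻¹ (the symbols $t_\text{rot}$ and $\Delta t$ are introduced here for illustration only):

```latex
\begin{align*}
t_\text{rot} &= \frac{60\,\text{s}}{1000} = 0.06\,\text{s} \\
\Delta t_\text{pneumatic} &\approx 16 \cdot t_\text{rot} = 0.96\,\text{s} \\
\Delta t_\text{electric} &\approx 0.27 \cdot t_\text{rot} \approx 16\,\text{ms}
\end{align*}
```

Under this assumption the electric direct drive corrects the stroke roughly 60 times more often than the pneumatic cylinder.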

Tests were carried out with two newly manufactured polishing heads, which were optimised for sensor usage. With the first polishing head, the primary objective was to attach sensors as a feasibility study. In a second step, an optimised polishing head with sensors was built. In the process, sensors of minor significance were removed, and the dynamics as well as the mechanical and process stability were increased. Unless otherwise stated, all results were generated with the second polishing head, the optimised machine learning polishing head. To support the machine operator, the polishing head has a voice assistant. The two polishing heads are compared in Table 1. The pH value and temperature of the polishing slurry are determined in the polishing fluid tank. The density is measured in the feed to the nozzles on the polishing head, where the temperature is measured once more. On the polishing wheel itself, the motor current, power (voltage and current), speed and motor temperature are measured. The normal force is measured directly above the polishing tool. Vibrations and tilt are measured further above the polishing wheel.

2.2 Metrology

A Fizeau laser interferometer Zeiss DIRECT 100 (short: D100), is used. The Fizeau interferometer has a HeNe laser with a wavelength of 633 nm. A CCD camera (Charge-Coupled Device) with a resolution of 2048 × 2048 pixels serves as the detector. The set-up consists of a collimator with a diameter of 6" (equivalent to Ø152.4 mm) and a lens system. Due to this design and the special measuring algorithms, the quality of the measurements is not directly dependent on the surface quality of the lenses [31, 32].

In interferometry, surface profiles are back-calculated from constructive and destructive interferences of the light. These interferences are usually visible at the measuring machine as an interference pattern, which is used to adjust the measuring object. The interference patterns are based on a reference measurement. Compared to other interferometers, this allows many individual measurements to be averaged within a short time during subsequent measurement. By averaging several individual measurements, random errors (vibrations, air fluctuations and temperature fluctuations) are reduced. The D100 can record up to 32,000 measurements/hour at a measurement frequency of up to 25 Hz. The measurements are displayed as a false colour image [33].

Before and after polishing, the complete workpiece surface of the glass ceramic is measured several times and the measurements are then averaged. For this purpose, the polished area and the surrounding, non-polished surface are measured. The Zernike fringes are subtracted from the averaged measurements. The absolute material removal of the polishing step is calculated from the two relative measured surfaces, so that several polishing tests can be carried out sequentially with one sample.
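The before/after evaluation described above can be sketched as follows. This is only a minimal illustration: the function names, the toy 3 × 3 height maps and the levelling on the mean of the unpolished reference pixels are assumptions, not the procedure of the measurement software.

```python
def removal_map(before, after, reference_mask):
    """Per-pixel removal (before - after), levelled on the unpolished reference area."""
    # Mean height difference on the reference pixels; this captures the
    # arbitrary offset between the two relative measurements.
    ref_diffs = [
        b - a
        for row_b, row_a, row_m in zip(before, after, reference_mask)
        for b, a, is_ref in zip(row_b, row_a, row_m)
        if is_ref
    ]
    offset = sum(ref_diffs) / len(ref_diffs)
    return [
        [(b - a) - offset for b, a in zip(row_b, row_a)]
        for row_b, row_a in zip(before, after)
    ]


def removed_volume(removal, pixel_area):
    """Integrate the removal map: sum of heights times the area of one pixel."""
    return sum(sum(row) for row in removal) * pixel_area


# Toy 3x3 maps (arbitrary height units): the second measurement carries an
# offset of 0.5 and the centre pixel has lost 1.0 of material.
before = [[0.0] * 3 for _ in range(3)]
after = [[0.5, 0.5, 0.5], [0.5, -0.5, 0.5], [0.5, 0.5, 0.5]]
reference_mask = [[True, True, True], [True, False, True], [True, True, True]]

removal = removal_map(before, after, reference_mask)
volume = removed_volume(removal, pixel_area=0.25)
```

Because only the difference relative to the reference border enters the result, the same sample can be reused for a further test without returning it to its initial state.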

If the surface roughness is greater than the wavelength of the laser, two difficulties arise: firstly, the speckle pattern of the laser is visible and masks the macroscopic interferences and secondly, the rough surface scatters the light too much so that a high laser power is required to obtain sufficient light at the detector. Therefore, the initial surface must be pre-polished [34]. The height resolution is in the range of 20 µm.

Roughness measurements are taken with the ZYGO NEWVIEW 8300 white light interferometer (WLI) [35]. With the help of the known wavelength of the light source and the destructive and constructive interferences, the surface can be measured optically. Roughness measurements are not used for validation in the carried out tests. WLI measurements are used to evaluate whether the glass workpiece can be measured on the Zeiss D100 or to evaluate polishing structures on the sample surface. The pre-polished glass samples for the polishing tests have a roughness of less than 9 nm (Sa value).

2.3 Workpiece preparation

As a workpiece, a concave surface is ground and pre-polished from a Zerodur raw block. The concave has an outer diameter of 150 mm, a radius of curvature of 1050 mm with a centre height of approx. 5 mm. The surfaces of the work pieces were pre-polished in order for the material removal to be measured in the laser interferometer D100.

For the preliminary tests, plane samples were used and for the main tests, plane-concave optics were used. The delivered raw blocks have a rough surface due to the saw cut. The plane samples were pre-polished in a first lapping step with the polishing grains ABRALOX E220 (average grain size 80 µm) and then with PLAKOR 9 (average grain size between 8.5 and 11 µm). Abralox is aluminium oxide, including approx. 3% titanium oxide, approx. 1% silicon oxide and approx. 0.15% iron oxide, and has a blocky structure with sharp edges. Plakor is also aluminium oxide with a purity of over 99% and a grain length to width ratio of 1:5 [36]. In a further polishing step, the target surface for the polishing tests is achieved with opalines (cerium oxide with an average grain size of 1 µm).

A plano-concave Zerodur lens with a diameter of 150 mm and a radius of curvature of 535.4 mm and an aperture angle of 15.67° is used as the test optics. The plano-concave shape is produced in several iterative grinding steps using a RÖDERS RXP DS 500 HSC milling machine with grinding oil option. Electroplated diamond grinding pins with grit sizes D76, D126 and D151 are used in succession. Contrary to the commonly used grinding machines, the milling machine has rigid kinematics, which means that the spindle presses with its full processing weight and unyieldingly on the glass surface. With grinding or polishing machines, the tool is usually pressed elastically onto the surface with a defined pressure via the z-axis. When polishing the optics, the high number and the size of the depth damages are therefore visible to the naked eye. These must also be removed in the subsequent polishing steps. The aluminium polishing shells are also produced on the milling machine, then covered with LP 66 polishing pad and the mould is machined in a further milling step. The polishing pad is identical to the one of the robot polishing tool and contains cerium oxide as a filler. It has a density of 0.42 g/cm3 with a Shore hardness A (durometer) of 26. The polishing bowls for the plane specimens are dressed using diamond-studded conditioning plates. Subsequently, the glass workpieces are polished through with one polishing bowl per polishing agent. Due to the relative measurements of the material removal, up to ten tests (plane specimen) or three tests (plane concave) can be carried out on a specimen before the specimens have to be reset to their initial state. After pre-polishing the samples, the test parts have a roughness of less than 9 nm (Sa value).

2.4 Design of experiments

Statistical design of experiments (DoE) is a method for analysing technical systems. With the help of statistical experimental designs, the relationship between several influencing factors (e.g. speed and normal force) and individual target variables (e.g. material removal) is to be determined as precisely as possible with as few individual experiments as possible. In contrast to the conventional approach, in which only one influencing variable is varied in each individual series of experiments, several influencing variables can be changed simultaneously in the statistical design of experiments. The commercial software for statistical experimental design Design-Expert from the company STAT-EASE is used to create the DoE [37]. The software is used in the optimisation of process parameters and serves to check and validate the machine learning model in the following experiments. The use of an experimental design brings structure to the sequence of experiments and the selection of process parameters. In order to reduce the number of trials, a partial factorial trial design is used, e.g. in the case of limited resources or for trial designs with a high number of factors, as there are fewer runs than with a full factorial trial design [38]. The number of factors determines the number of experiments in the Box–Behnken design. The experimental design model is mainly used when extreme input parameters cannot be measured in a factorial design. The design can be rotated around the centre point, the corners can therefore be neglected and extreme parameters can be avoided [39]. The Box–Behnken design is suitable for 3 to 21 factors. Due to the high power of the polishing head and the risk of fire for the polishing foil, the experimental design is adapted during the running process so that it no longer corresponds to a Box–Behnken design. However, the number of parameters and the boundary conditions are identical.
The controllable parameters speed (min⁻¹), normal force (N) and polishing time (min) of the surface to be processed serve as input factors. With three input factors, this results in 17 trials. The material removal (mm³) serves as the target variable. The test parameters are shown in Table 2. Compared to minimising the surface roughness during polishing by known adjustment of the process parameters, maximising the material removal is a greater challenge. For this reason, maximisation of material removal is chosen as the target parameter. In order to minimise systematic errors (e.g. external vibrations, temperature fluctuations) on the individual tests and to avoid correlations associated with this, the tests are carried out in random order [40].
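To illustrate where the 17 runs for three factors come from, the following sketch builds a Box–Behnken design in coded levels (-1, 0, +1) and randomises the run order. The choice of five centre points is an assumption that reproduces the run count of 17; the function name and the seed are hypothetical, and the adapted design actually run in this work is the one in Table 2, not this one.

```python
import itertools
import random


def box_behnken(n_factors, n_center):
    """Coded Box-Behnken design: edge midpoints of the cube plus centre points."""
    runs = []
    # For every pair of factors, run the 2x2 factorial at +/-1 while all
    # remaining factors stay at their centre level 0.
    for i, j in itertools.combinations(range(n_factors), 2):
        for a, b in itertools.product((-1, 1), repeat=2):
            run = [0] * n_factors
            run[i], run[j] = a, b
            runs.append(tuple(run))
    # Replicated centre points (assumed count).
    runs.extend([(0,) * n_factors] * n_center)
    return runs


# Factors in order: speed, normal force, polishing time (coded levels only).
design = box_behnken(3, n_center=5)

# Randomise the run order to decorrelate the trials from slow drifts.
random.Random(0).shuffle(design)
```

No run sits on a corner of the parameter cube (all three factors at an extreme at once), which is the property the text refers to when it says extreme parameter combinations can be avoided.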

Because a ball tool of appropriate size is used, the wear of the polishing foil is negligible. The polishing tool is a ball cut-out with a diameter of 72 mm and a width of 52 mm. A rectangular meander with the size 80 × 80 mm is polished. The concave workpiece has a diameter of 150 mm. With a polishing tool width of 52 mm, a chamfer (circumferential, 1 mm) and a circumferential border as a measuring reference surface, this results in a polishing field size of 80 × 80 mm.

The point spacing is 1 mm in the x-direction and the web spacing is 0.4 mm. The density of the polishing suspension is measured with a hydrometer (areometer) before the test and adjusted to 1.052 g/cm³. If the density of the polishing slurry is too high, the individual polishing grains block each other. If the density is too low, there are too few polishing grains and too little removal takes place. A density value of 1.052 g/cm³ has become established in the literature [41]. The pH value was adjusted to 8.55 by conditioning at the beginning of the experiments. Dissolved substances, which also contribute to material removal, keep the pH value stable through their buffering properties. The pH value must remain at a constant level for a long time. With preconditioning, the pH value is brought to a plateau at which it no longer changes significantly. Such a plateau was achieved at a pH value of 8.55 by adding removed Zerodur.
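The meander described above (80 × 80 mm field, 1 mm point spacing, 0.4 mm web spacing) can be sketched as a list of target points. This is an illustrative sketch only: it assumes the tracks run along x and are stepped in y, works in a flat 2D plane, and ignores the projection onto the concave surface as well as the approach and departure paths.

```python
def meander(width_mm, height_mm, point_spacing_mm, web_spacing_mm):
    """Boustrophedon path: tracks along x, alternating direction, stepped in y."""
    n_x = round(width_mm / point_spacing_mm) + 1   # points per track
    n_y = round(height_mm / web_spacing_mm) + 1    # number of tracks
    path = []
    for j in range(n_y):
        # Even tracks run left to right, odd tracks right to left.
        xs = range(n_x) if j % 2 == 0 else range(n_x - 1, -1, -1)
        y = j * web_spacing_mm
        path.extend((i * point_spacing_mm, y) for i in xs)
    return path


path = meander(80, 80, point_spacing_mm=1.0, web_spacing_mm=0.4)
n_points = len(path)  # 81 points per track on 201 tracks
```

With these spacings the field is covered by 201 tracks of 81 points each.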

The advantage of statistical software over machine learning models is the known algorithms in the background. The software gets the identical and static parameters for all respective tests without process-related fluctuations. The machine learning model reacts to temporary fluctuations in the process and can adapt to them.

2.5 Data preparation

The sensor data are stored by the PLC in a CSV file (comma separated values) and processed offline to be used for the training of the machine learning model. In this case, the term data preparation describes the transformation of the raw data into a data frame. The data are in a tabular structure and have the same data format as the public machine learning datasets of the UCI repository of the University of California, Irvine [42]. The vast majority of machine learning frameworks have interfaces for files from this database. Data preparation is, next to training the machine learning model, the most time-consuming step in achieving intelligent algorithms. FIGURE EIGHT, a company specialising in machine learning, surveys specialists in this field every year, and according to this survey, 60% of the working time is needed to prepare the raw data [43].

The processing of the measurement data of the ablation is carried out with the laboratory's own software ZAPHOD. This software is optimised for dwell time-controlled polishing. In the first step, the ablation data are trimmed, then outliers that lie outside 1.5 times the standard deviation are eliminated. In a further step, the points are calculated at an equidistant distance and the maximum value is set to 0, which allows the volume removal to be calculated. This is needed for the evaluation of the experimental design with the statistical software. By triangulation, the closed area is finally calculated from these points.

Data vectorisation is the conversion of input and target parameters into tensors. The input parameters are already available as floating point number tensors. In the present work, the material removal is the target parameter: by projecting the robot track onto the 3D surface, a material removal can be assigned to each sensor value. The latter is used as the target parameter for machine learning. Approach and departure paths of the polishing head are not considered for the further calculation.
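The outlier step mentioned above can be sketched as follows. The exact rule used by ZAPHOD is not given in the text, so this sketch assumes that a point is an outlier when it lies more than 1.5 population standard deviations from the mean; the function name and the sample values are hypothetical.

```python
import statistics


def drop_outliers(values, k=1.5):
    """Keep only values within k standard deviations of the mean."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [v for v in values if abs(v - mean) <= k * std]


# Toy ablation heights with one obvious spike.
heights = [10, 11, 9, 10, 10, 11, 9, 30]
cleaned = drop_outliers(heights)
```

A band as narrow as 1.5 standard deviations removes more points than the more common 3-sigma rule, so the threshold would need to be chosen with the noise level of the measurement in mind.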

To mitigate the edge effects, the peaks on the ablation data are smoothed using the ROBUST PEAK DETECTION algorithm. This algorithm is also known as Z-SCORE ALGORITHM. Compared to most other peak detection algorithms, this one is initialised with only three parameters and has few constraints [44].
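A minimal sketch of the z-score peak detection idea, with its three parameters `lag`, `threshold` and `influence`, is given below. It follows the commonly circulated form of the algorithm; the parameter values and the toy signal are illustrative assumptions and not the settings used in this work.

```python
import statistics


def zscore_peaks(y, lag, threshold, influence):
    """Flag points deviating more than `threshold` std from a moving mean.

    Returns (signals, filtered): signals holds +1/-1/0 per sample, filtered is
    the series in which flagged points are damped according to `influence`.
    """
    signals = [0] * len(y)
    filtered = list(y[:lag])
    for i in range(lag, len(y)):
        window = filtered[i - lag:i]          # last `lag` filtered samples
        mean = statistics.fmean(window)
        std = statistics.pstdev(window)
        if abs(y[i] - mean) > threshold * std:
            signals[i] = 1 if y[i] > mean else -1
            # A flagged point enters the history only with weight `influence`.
            filtered.append(influence * y[i] + (1 - influence) * filtered[i - 1])
        else:
            filtered.append(y[i])
    return signals, filtered


ablation = [1.0, 1.1, 0.9, 1.0, 1.1, 0.9, 1.0, 5.0, 1.0, 1.1]
signals, smoothed = zscore_peaks(ablation, lag=5, threshold=3.0, influence=0.0)
```

With `influence=0.0` a detected peak is replaced by the previous filtered value, so `smoothed` can serve as the peak-free series while `signals` records where the peaks were.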

The preprocessing process increases the learning success and the prediction accuracy of the neural network and thus its performance. Preprocessing operations include normalising, scaling and standardising the input and the target parameters.

The input parameters should have a certain consistency in order to simplify learning for the neural network. If this is not the case, large gradient updates can occur, which make it difficult for the network to converge. The individual features should have a standard deviation of 1 and a mean value of 0. This means that the values of all input parameters lie in a comparable range. In order to avoid the prediction of a negative removal, the value range of the target parameter, the material removal, is scaled to a range between 0.15 and 0.85 [45].
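The two transformations described above can be sketched as follows: standardisation of each input feature to mean 0 and standard deviation 1, and min-max scaling of the target to the range 0.15 to 0.85. The function names and the sample values are hypothetical; in practice the statistics would be computed on the training data only and reused for validation and test data.

```python
import statistics


def standardise(column):
    """Shift and scale one feature column to mean 0 and standard deviation 1."""
    mean = statistics.fmean(column)
    std = statistics.pstdev(column)
    return [(v - mean) / std for v in column]


def scale_to_range(values, low=0.15, high=0.85):
    """Min-max scale the target into [low, high]."""
    v_min, v_max = min(values), max(values)
    return [low + (v - v_min) * (high - low) / (v_max - v_min) for v in values]


def unscale(scaled, v_min, v_max, low=0.15, high=0.85):
    """Map network outputs back to physical removal values."""
    return [v_min + (s - low) * (v_max - v_min) / (high - low) for s in scaled]


speed = [500.0, 750.0, 1000.0, 750.0, 500.0]   # toy feature values
removal = [0.0, 12.5, 40.0, 20.0]               # toy target values

speed_std = standardise(speed)
removal_scaled = scale_to_range(removal)
removal_back = unscale(removal_scaled, min(removal), max(removal))
```

Keeping the target away from 0 and 1 leaves headroom on both sides, so a prediction slightly below the smallest training value still maps back to a non-negative removal.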

2.6 Evaluation scheme

A standard method for evaluating regression models is the root-mean-square error (RMSE) or the squared value, the mean squared error (MSE). Both values quantify the distance between the vector of all predictions and the vector with the known target values and are described as follows [46]:

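$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2, \qquad \mathrm{RMSE} = \sqrt{\mathrm{MSE}}$$

where $\hat{y}_i$ are the predicted values, $y_i$ are the observed values and $n$ is the number of observations.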

The difference between the prediction and the actual measured value, also called prediction accuracy, is validated via the mean absolute error (short: MAE). It is defined as the average absolute difference between the predicted and the actual value:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$$

The predictive accuracy of a regression model is evaluated in the literature by the R² value, among other things. R² is the percentage of variation explained by the relationship between two variables. The R² value corresponds to the square of the Pearson coefficient. Another evaluation parameter is the R-value: the root of the R² value, which allows negative values. A value of 0 means that the input characteristics are not taken into account by the regression model. A value of 1 would be a perfect model fit. As a measure of quality, R² exclusively represents linear correlations. It is the explained variation divided by the total variation and is described mathematically as follows [47]:

$$R^2 = \frac{\sum_{i=1}^{n}\left(\hat{y}_i - \bar{y}\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$

where $\bar{y}$ is the average of the observed values.
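The evaluation measures of this section can be computed with a few lines of Python. This is a plain sketch with hypothetical function names and toy values. R² is computed here in the residual form 1 − SS_res/SS_tot, which is an assumption; it agrees with the ratio of explained to total variation for a least-squares linear fit.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root-mean-square error."""
    return math.sqrt(mse(y_true, y_pred))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination, residual form (assumed here)."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


observed = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.0, 3.0, 3.5]
scores = {
    "mse": mse(observed, predicted),
    "rmse": rmse(observed, predicted),
    "mae": mae(observed, predicted),
    "r2": r2(observed, predicted),
}
```

MSE penalises large deviations more strongly than MAE, which is why the two are usually reported side by side.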

3 Machine learning model

The search for the optimal hyperparameters epochs, batch size, optimiser and learning rate is realised via a grid search. The remaining hyperparameters are held constant here. For training, a simple baseline network with sensible parameters and few hidden layers is used. For the batch size, contrary to the recommendation from the literature (batch size 32 [46]), values between 64 and 1024 are available. The epochs are staggered between 50 and 400. The optimisers available are adam, RMSprop and SGD. The learning rate is graduated in four logarithmic steps between 0.001 and 1. Batch size and epochs, as well as optimiser and learning rate, are each optimised together in one step. The grid search delivers a batch size of 64 with 50 epochs. The optimiser is adam, with a learning rate of 0.001. These hyperparameters are held constant in the following step and are used unless otherwise mentioned. Batch size, optimiser and learning rate are thus identical to those of the preliminary tests on plane samples [48].
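The grid search described above can be sketched as an exhaustive loop over all combinations. The intermediate grid values (only the end points 64/1024 and 50/400 are given in the text), the two-stage reading of the procedure and the function names are assumptions. `toy_score` is an arbitrary stand-in for "train the network and return the validation MSE" so that the example runs without a deep learning library; it does not reproduce any result of this work.

```python
import itertools


def combinations(grid):
    """All parameter combinations of a grid given as {name: [values]}."""
    names = list(grid)
    return [dict(zip(names, values)) for values in itertools.product(*grid.values())]


def grid_search(grid, score):
    """Return (best_params, best_score); lower scores are better."""
    best = None
    for params in combinations(grid):
        s = score(params)
        if best is None or s < best[1]:
            best = (params, s)
    return best


# Stage 1: batch size and epochs (intermediate values assumed).
stage_1 = {"batch_size": [64, 128, 256, 512, 1024], "epochs": [50, 100, 200, 400]}
# Stage 2: optimiser and learning rate in four logarithmic steps.
stage_2 = {"optimizer": ["adam", "RMSprop", "SGD"],
           "learning_rate": [0.001, 0.01, 0.1, 1.0]}


def toy_score(params):
    # Arbitrary placeholder objective, NOT a training run.
    return abs(params["batch_size"] - 256) + abs(params["epochs"] - 100)


best_params, best_score = grid_search(stage_1, toy_score)
n_stage_1 = len(combinations(stage_1))
n_stage_2 = len(combinations(stage_2))
```

Splitting the search into two stages keeps the number of training runs at 20 + 12 instead of the 240 a joint grid over all four hyperparameters would need.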

The optimisation is performed by Hyperas, which selects and sets hyperparameters for each layer of a network. The created network is then trained and validated using the MSE. The smaller this value is, the better the existing network is. For reliable validation, the entire data set was divided into three parts: the training part (60%), the validation part (20%) and the testing part (20%). The latter is unknown to the neural network and is used for evaluation after training.
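The 60/20/20 division can be sketched as a split of sample indices. The random shuffling, the seed and the function name are assumptions, since the text does not state how the samples were assigned to the three parts.

```python
import random


def split_indices(n_samples, train_pct=60, val_pct=20, seed=0):
    """Shuffle sample indices and cut them into train/validation/test parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = n_samples * train_pct // 100
    n_val = n_samples * val_pct // 100
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]   # remainder, never seen during training
    return train, val, test


# Number of individual measurements reported for the 17 trials.
train_idx, val_idx, test_idx = split_indices(434549)
```

Integer arithmetic is used for the cut points so that every sample lands in exactly one of the three parts.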

A neural network is only performant and efficient at the same time if the width and the depth of the network are dimensioned correctly in proportion. Five networks with one to five hidden layers are optimised separately. Due to the large number of parameter combinations, not all of them can be tested. Therefore, random search approaches are used within the framework of limited iterations.

As described above, activation functions ReLU, Softplus and Sigmoid are used. The number of neurons is individual for each layer: for a shallow network, usually fewer are used than for a deep network. Based on this data, a four-layer network is used, the parameters of which are shown in Table 3.
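To make the role of the activation functions concrete, the following sketch implements ReLU, Softplus and Sigmoid together with the forward pass of a small fully connected network in plain Python. The weights, biases and layer sizes are invented for this example and have no relation to the parameters in Table 3. The naive Softplus shown here would overflow for very large inputs, which deep learning libraries avoid internally.

```python
import math


def relu(x):
    return max(0.0, x)


def softplus(x):
    return math.log1p(math.exp(x))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def identity(x):
    return x


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: activation(weights . inputs + bias) per neuron."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def predict(inputs, layers):
    """Pass the inputs through all layers in sequence."""
    values = inputs
    for weights, biases, activation in layers:
        values = dense_layer(values, weights, biases, activation)
    return values


# Invented toy network: 2 inputs -> 2 hidden neurons (ReLU) -> 1 linear output.
toy_layers = [
    ([[1.0, 0.0], [0.0, -1.0]], [0.0, 0.0], relu),
    ([[2.0, 3.0]], [0.5], identity),
]
print(predict([1.0, 2.0], toy_layers))
```

The single linear output neuron mirrors the regression task, in which one continuous removal value is predicted per sample.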

A reduction of the features (especially of features that correlate strongly with each other) was tested in order to reduce the computing time. The features were also ranked according to their importance. However, the ablation prediction error increases when features are removed, so all features are used to calculate the ablation. This indicates that the individual sensors were well chosen.
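One simple way to identify strongly correlated features is a pairwise Pearson correlation with a threshold, sketched below. The threshold of 0.95, the feature names and the sample values are assumptions for illustration only; this is not necessarily the procedure used to produce the results above.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return covariance / (spread_x * spread_y)


def drop_correlated(features, threshold=0.95):
    """Keep a feature only if it is not strongly correlated with a kept one."""
    kept = []
    for name in features:
        if all(abs(pearson(features[name], features[other])) < threshold
               for other in kept):
            kept.append(name)
    return kept


# Invented sample values; the torque is proportional to the current here.
sensor_features = {
    "motor_current": [1.0, 2.0, 3.0, 4.0],
    "motor_torque": [0.5, 1.0, 1.5, 2.0],
    "fluid_temperature": [20.0, 21.5, 20.5, 22.0],
}
print(drop_correlated(sensor_features))
```

Whether dropping a flagged feature is acceptable still has to be checked against the prediction error, as was done in this work.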

4 Results

In contrast to the creation of the network parameters, which takes several hours or days, the final training of the neural network takes a few minutes.

The learning curve of the trained model is shown in Fig. 2 as a loss function. This curve shows the training success and allows conclusions about the quality and behaviour of the predictions. In this case, the loss function, the difference between predicted and actual removal, is to be minimised. The typical exponentially decreasing training curve is recognisable. The validation curve fluctuates more, without a clear trend over the epochs. The validation data set curve is below that of the training data set, i.e. the model reproduces the validation data set better than the training data set. Another indicator of the good quality of the training is the overlap of the two curves. In the case of underfitting, both curves would reach a plateau without crossing and they would have a high MSE. In the case of overfitting, the errors in the training data would be much lower than in the validation data and the two curves would not overlap [46].

To illustrate the accuracy, all the removal data from the 17 tests are shown one after the other in Fig. 3, where they form one large data set. The 20% validation data also form the basis for the percentage prediction value. Apart from the outlier within the first 200 s, the predicted ablation matches the real one very well. The shape is well mapped, but there are slight deviations in the amount of ablation. The neural network achieves a prediction probability of 99.22%. The mean absolute error (MAE) of 24.7 nm is higher than the MAE of the pretests on plane samples, which had a value of 10 nm.
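The MAE quoted above is the average absolute difference between predicted and measured removal. It is not the same as the largest single deviation. A minimal sketch with invented removal values in nanometres shows the difference between the two quantities.

```python
def mean_absolute_error(actual, predicted):
    """Average absolute deviation between actual and predicted values."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def maximum_absolute_error(actual, predicted):
    """Largest single absolute deviation."""
    return max(abs(a - p) for a, p in zip(actual, predicted))


# Invented removal values in nm, for illustration only.
measured_removal = [100.0, 200.0, 300.0]
predicted_removal = [110.0, 190.0, 330.0]

print(mean_absolute_error(measured_removal, predicted_removal))
print(maximum_absolute_error(measured_removal, predicted_removal))
```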

In addition, the use of the sensors alone made it possible to make statements about process fluctuations, anomalies in the process and the condition of the polishing head.

4.1 Validation with unknown data

The validation data are extracted from the raw training data. The data are unknown to the machine learning model, but the structure and value ranges are known. For the validation of the machine learning model, completely unknown data with deviating parameters is therefore also used. The polishing field size is increased by 56% from the original 80 × 80 mm to 100 × 100 mm. With the use of the plano-concave glass sample, the tilting angle of the polishing head increases by a total of 2.15° in the x and y directions. In addition, previously unknown values are used for the parameters of rotation speed and polishing time. The polishing time for this polishing field is 10 min and the speed of 450 min−1 is 6% faster than the highest speed so far. The individual values are summarised again in Table 4. The trained model was able to reproduce the data with a prediction probability of 60.25% (R-value). As can also be seen in Fig. 4, the maximum deviation of the prediction from the target surface is 385.88 nm (MAE). The neural network predicts too little material removal and can only insufficiently reproduce the fluctuations of the material removal due to the edge effect (roughness on the global shape of the curve).

4.2 Results design of experiments

The analysis of variance (ANOVA) [49] of the statistical software gives an indication of the predictive accuracy of the statistical regression model. If only the input variables of the test plan (rotation speed, normal force and polishing time) are considered, the statistical test plan software cannot create a significant model.

If the input parameters are selected according to the maximum prediction probability, the software selects only the rotation speed. On the one hand, this reflects the high importance of the speed, but also shows that the software cannot completely represent the process variance.

The sensor data of all individual trials, a total of 434,549 rows (training data) with 16 columns (15 sensor signals and the material removal), cannot be processed by the software due to the high number of input rows. Looking at each trial individually, the software can create a significant model with a prediction probability of 36%.

The advantage of the statistical evaluation over the machine learning model is the short calculation time and the ease of use. If the test data of the executed test plan are transferred to the statistical software, a few seconds are needed for evaluation. The polishing process and the edge effects are not taken into account. This results in a certain uncertainty regarding the material removal rate. The larger the area to be polished and the greater the relative removal, the smaller the influence of these anomalies on the removal prediction.

The removal data scatter very strongly and the mathematical model is not able to represent the noise of the data and the systematic errors. As an example of a systematic error, the sensor signal of the tilt sensor can be shown here: By processing a concave surface, the mean value in Y direction should be 0° and not − 0.18° as determined.

5 Discussion

The use of sensors alone allows statements to be made about the process and its stability. There is a constant evolution in the design of the polishing heads, which will also find its way into polishing practice in the future. Further polishing process parameters can be readjusted in the future. In the present work, only the rotation speed of the motor and the normal force are readjusted. Such a readjustment reduces the divergence of the process and can thus provide even better data for the machine learning model.

The presented ML model has an R2 value of 0.9922 (R: 0.9961) in the evaluation. To put this value in context, it is compared with the results of the PHM (Prognostics and Health Management) data challenge for wafers. The scientific PHM teams achieved R2 values between 74.89 and 90.1% [50]. Kong gives an R2 value of 90.14% [51]. The work of Jia, published two years later, gives an R2 value between 0.9817 and 0.9914 (R: 0.9908–0.9957), depending on the network parameters [52]. This puts the prediction accuracy well above the data challenge results and on a par with Jia.
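The R-values given in brackets follow directly from the R2 values by taking the square root, which can be checked with a short calculation. The dictionary labels are chosen for this example only.

```python
import math

# R2 values as quoted in the text.
r2_values = {
    "this work": 0.9922,
    "Jia, lower bound": 0.9817,
    "Jia, upper bound": 0.9914,
}

# R is the (non-negative) square root of R2, rounded to four decimals.
r_values = {label: round(math.sqrt(r2), 4) for label, r2 in r2_values.items()}

for label, r in r_values.items():
    print(f"{label}: R = {r}")
```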

Feature selection methods (including correlation matrices, SelectKBest and Recursive Feature Elimination) were applied in an attempt to reduce the amount of data and the calculation times. For known dependencies, such as motor current and motor torque (the latter is calculated from the motor current) or the dependency of the pH value on the temperature of the polishing fluid, a feature can be skipped. The predictions are nevertheless made with all features, which yields the lowest prediction error.

It can be assumed that the prediction is possible when all parameters are changed. Thus, the model has 15 process parameters that can be varied at will. A human would struggle to make such a process prediction based on the sensor data. Physical models usually do not have the high number of variable parameter values.

With an R2 of 36%, the statistical process prediction has a lower prediction probability than the neural network. The reason for this is the higher number of adjustable parameters in a neural network (a total of 1921 neurons distributed over four layers and an output layer, the latter having only a single neuron) compared to the lower number in a polynomial equation. The statistical model cannot follow the random scatter and cannot represent measurement errors. In contrast to the data-driven machine learning model, the statistical experimental design evaluation has the advantage of providing a mathematical model that represents the physical laws. Thus, despite this low value, the process prediction in the case of diverging process parameters is better than the empirical observation of the polishing process or the use of polishing models that describe only individual process aspects.
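The difference in the number of adjustable parameters can be made tangible with a small count. The sketch below counts the weights and biases of a fully connected network and the coefficients of a full polynomial model. The layer widths and the polynomial degree are hypothetical and chosen only for illustration; the real widths are those of Table 3. The 15 inputs correspond to the 15 sensor signals and the three factors to the input variables of the test plan.

```python
import math


def count_dense_parameters(n_inputs, layer_widths):
    """Weights plus biases of a fully connected feed-forward network."""
    total = 0
    previous_width = n_inputs
    for width in layer_widths:
        total += previous_width * width + width  # weight matrix + bias vector
        previous_width = width
    return total


def count_polynomial_terms(n_factors, degree):
    """Coefficients of a full polynomial in n_factors up to the given degree."""
    return math.comb(n_factors + degree, degree)


# Hypothetical widths: four hidden layers of 32 neurons and one output neuron.
network_parameters = count_dense_parameters(15, [32, 32, 32, 32, 1])

# Assumed full quadratic model in the three test plan factors.
polynomial_parameters = count_polynomial_terms(3, 2)

print(network_parameters, polynomial_parameters)
```

Even this small hypothetical network has several hundred times more adjustable parameters than the quadratic model, which is why it can follow the data more closely.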

The stability of the process and the exact calibration of the system are the challenges and not the application of intelligent data models. In the future, the main task will be in the constructive area of the polishing head manufacturing and/or the measurement technology. In this set-up, the ex-situ measurement of the stock removal is the weak point: For example, the polishing head produces a depth stock removal of 12.5 µm at a normal force of 10 N, a speed of 425 min−1 and a surface machining rate of 3 mm3/s. This produces edges at the edge of the polishing field that are too steep and can no longer be measured with the D100. The optical D100 measuring device can measure up to approx. 24 µm of form removal before the edge flanks of the polishing field become too steep. Time-consuming tactile measurements or variable polishing data sets are an alternative. With such variable polishing data sets, the edge of the polishing field is polished with lower polishing pressure or lower speed. As a result, the opening angles of the resulting flanks become flatter and can still be measured optically. Furthermore, the measurement technique results in the largest error.

Some parameters are not taken into account: The contact condition of the tribological system, consisting of the glass material, the polishing tool and the colloidal polishing suspension, can be described with the help of the viscosity of the polishing liquid [22]. The viscosity is considered constant during polishing and has an influence on the polishing gap height [53], the friction force in the polishing gap and the material removal [54]. Another type of sensor used in polishing wafers is the strain gauge [55]. The advantage of this type of sensor is the comparative ease of implementation, as strain gauges can be placed almost anywhere. Furthermore, they allow better statements about the stability, the mechanical condition of the polishing head and the local position of possible damage to the polishing head than the temperature measurement presented here. However, their attachment to eccentric tools is difficult in terms of design and economically almost impossible.

The inspection of the surface must be done directly in situ on the machine. Due to the more complex design compared to ex-situ measurements, a deviation of less than three per cent is assumed for in situ measurements of the form [56]. This accuracy is not sufficient for final correction processes. However, measuring the material removal via sensors could completely eliminate the need for offline measurement in the first polishing steps. Furthermore, an application of the presented machine learning model to aspheres is reasonable. Due to their complex geometry, these can be measured either only in partial areas or only by computer-generated holograms. The latter have to be adapted for each test geometry, which is economically reasonable only in serial production [57].

If acceleration sensors with three measuring axes in the respective spatial coordinates are used, the position of the polishing tool can be calculated at any time. Using the tilt sensor, this can also be calculated, but only for concave and convex optics. In combination with ablation sensor technology (e.g. confocal sensors) or the use of the machine learning model, a large part of the measurement technology in optics production can be dispensed with. This applies especially to the first polishing steps in iterative polishing.

Machine learning is always useful when one has a rough idea of the problem at hand. Without own empirical experience in polishing, a reasonable selection of sensors or a meaningful evaluation of the machine learning model would not have been possible. Due to the high number of network parameters, machine learning is still referred to as a "black box". It is difficult to understand the calculations.

The different processing times of the polishing field result in a different number of sensor and ablation data. Consequently, bias occurs when training the machine learning model. This could be remedied by adjusting the ablation rate. However, recorded data are not all used in this process. An inline feedback of the ablation by the machine learning model is currently not given. Data preparation per data set is currently too time-consuming. The process can be accelerated with programming close to the hardware or optimising the data preparation. Acceleration through a suitable choice of hardware for computation could also help.

6 Summary and outlook

Today, high-performance optical systems are a key component in numerous fields of application, e.g. lithography lenses or astro mirrors. As central components, high-precision glass lenses and glass–ceramic mirror substrates play a decisive role in determining the performance of these systems. Constant requirements and expansion of the fields of application demand an increase in manufacturing quality with regard to dimensional accuracy. Polishing is a decisive factor in determining the quality within the production chain of glass optics.

Despite thousands of years of human use of polishing, fundamental mechanisms of action are not yet fully understood. The creation of physical models only offers the possibility to look at individual aspects of polishing. Classifying polishing processes according to the principles of action of polishing is equally unhelpful. Depending on the workpiece or material to be polished, the polishing process must be considered as a whole. The choice of polishing medium, polishing pad and kinematics, among other things, must be made individually for the problem. Data-driven systems offer a possibility to represent such complex systems almost completely. A large number of main process parameters are collected and analysed by statistical evaluation, Big Data models or machine learning models.

In the present work, such an intelligent glass polishing system was set up for any contours, not exclusively for plane specimens. The basis is formed by robot polishing heads equipped with sensors. Adding sensors to an existing industrial polishing head is more complex than creating a new and optimised polishing head. Improved components and actuators (including ceramic ball bearings, dynamic rotary and linear motors) are used in the new design. Therefore, this polishing head has lower divergences in the running polishing process and the sensors can be used directly in their purpose. Indirect measurement, for example speed measurement on the gearbox and not on the motor, is not necessary with the new design. The evaluation of the sensor data via standard procedures alone shows the necessity of such a sensor-based design.

An optimised machine learning model is created from a conventional basic model by hyperparameter optimisation. The network parameters of the neural network are again shown in Table 3. The optimised and subsequently trained network has a prediction probability of 99.22% and thus exceeds all previous literature values.

A data-driven model lacks generalisability: it only applies to this or similar robot cells with this polishing head and these input parameters. If the process parameters vary too much, if the wear of the polishing head is too high or if other materials are used, the machine learning model cannot make a sufficient statement about the removal rate. The process divergence of the individual parameters is recorded as a by-product and can be additionally evaluated by machine or manually. In an industrial manufacturing environment, the data-driven model can be used, for example, for one type of polishing head, each attached to a robot from a common robot manufacturer. An application to other glasses should also be investigated.

The manufacturer of the PLC used now offers interfaces for machine learning models that have already been trained. Trained neural networks with their parameters (including number of layers, number of neurons, individual weights of the neurons) can be loaded onto the PLC and used. Currently, the system is not real-time capable, so there is a slight delay of a few seconds. Statements about the material removal would no longer have to be made offline but can also be provided by the PLC in the process [58].

The roughness after the individual polishing steps and damage under the surface, so-called "sub-surface damage" (SSD), are not taken into account in the polishing in the present work. However, when using a polishing wheel, structures are created in the direction of rotation of the polishing wheel. The direction-dependent traces of the polishing wheel are clearly visible. First attempts to apply the "maximum entropy method" [59] took place in the margins of this work, without being part of it. In this method, the polishing head is rotated around its Z-axis, perpendicular to the contact point of the polishing head. Controlled chaos should be introduced into the polishing path to avoid or reduce polishing structures.

Although the central question of a data-based polishing model has been answered, additional questions have arisen during the course of this work that should be pursued in future research. In the future, the optics industry should provide standardised machine learning data to the research community. This would allow researchers to use the data without the effort of building their own data sets, and in return the optics industry would get better algorithms and better results. In CMP, this approach has pushed the technology forward and led to the publication of numerous technical papers. In addition, sensors should be placed closer to the polishing gap to develop a data-based system for the mechanisms of action in the gap. Design improvements to the polishing heads can and will continue to significantly improve the understanding of the process. This will also enable the consideration of further and also new questions in the polishing process.

The machine learning model has not yet been applied to the PHM data. With the statistical software, however, a better result could be achieved through process understanding than the winning teams (e.g. eliminating outliers). A publication on these results is still pending.

There is a high level of interest on the part of industry due to the high growth in the photonics sector and the focus of German companies on precision optics. The shift from an empirical, experience-based to a knowledge-based process design is considered an indispensable step for maintaining the competitiveness of Germany as a production and research location. Polishing is currently not sufficiently researched.

Data availability

Most of the expertise is in process development and therefore data cannot be provided as this would allow conclusions to be drawn about process expertise.

Code availability

The source code is not provided, but can be made available as part of a joint research project.

References

Henshilwood CS, d’Errico F, Marean CW, Milo RG, Yates R (2001) An early bone tool industry from the Middle Stone Age at Blombos Cave, South Africa: implications for the origins of modern human behaviour, symbolism and language. J Hum Evolut 41:631–678

Curtis J, Reade J, Collon D (eds) (1995) Art and empire: treasures from Assyria in the British Museum. Metropolitan Museum of Art, New York

Preston FW (1922) The structure of abraded glass surfaces. Struct Abraded Glass Surf 23(3):141

Cook LM (1990) Chemical processes in glass polishing. J Non-Cryst Solids 120:152–171

Evans CJ, Paul E, Dornfeld D (2003) Material removal mechanisms in lapping and polishing. https://escholarship.org/uc/item/4hw2r7qc

Klocke F, Zunke R (2009) Removal mechanisms in polishing of silicon based advanced ceramics. CIRP Ann 58:491–494

Klocke F, Brecher C, Zunke R, Tuecks R (2011) Corrective polishing of complex ceramics geometries. Precis Eng 35:258–261

Becker E (2011) Chemisch-mechanische Politur von optischen Glaslinsen. Dissertation, Technische Hochschule Aachen. Shaker, Aachen (Berichte aus der Werkstofftechnik)

Lee H, Lee D, Jeong H (2016) Mechanical aspects of the chemical mechanical polishing process: a review. Int J Precis Eng Manuf 17:525–536

Brinkmann U (ed) (2000) Deutsche agenda optische technologien für das 21. Jahrhundert: potenziale, trends und erfordernisse. VDI-Technologiezentrum, Düsseldorf

Dirk HE, Udo D, Eric E (2017) Verfahren zur Herstellung eines Substrats und Substrat. Carl Zeiss SMT GmbH, 73447 Oberkochen, DE. Publication no. DE 10 208 302 A1

Geyl R (2002) Recent developments for astronomy at SAGEM. In: Atad-Ettedgui E, D'Odorico S (eds) Specialized optical developments in astronomy. SPIE (SPIE Proceedings), p 67

Siegfried S, Yaolong C (2002) Method and apparatus for polishing or lapping an aspherical surface of a work piece. Publication no. US6733369

Chen G, Yi K, Yang M, Liu W, Xu X (2014) Factor effect on material removal rate during phosphate laser glass polishing. Mater Manuf Process 29:721–725

Golini D, Kordonski WI, Dumas P, Hogan SJ (1999) Magnetorheological finishing (MRF) in commercial precision optics manufacturing. In: Stahl HP (ed) Optical manufacturing and testing III. SPIE (SPIE Proceedings), pp 80–91

Kiikka C, Nassar T, Wong HA, Kincade J, Hull T, Gallagher B, Chaney D, Brown RJ, McKay AC, Lester M et. al. (2006) An overview of optical fabrication of the JWST mirror segments at Tinsley. In: Mather JC, MacEwen HA, Graauw MW (Eds.), Space telescopes and instrumentation I: optical, infrared, and millimeter: SPIE (SPIE Proceedings), 62650V

Zeeko (2021) Ultra-precision polishing. https://www.zeeko.co.uk/products.html. Accessed 07 Mar 2021

Ghosh G, Sidpara A, Bandyopadhyay PP (2018) Fabrication of optical components by ultraprecision finishing processes pp. 87–119

National Research Council (1999) Harnessing light: optical science and engineering for the 21st century. 2. Print. National Acad Press, Washington

Wolf S (2002) Deep-Submicron process technology. Sunset Beach, Calif.: Lattice Press, (Silicon processing for the VLSI era/Stanley Wolf; Richard N. Tauber ; Vol. 4)

Luo J, Dornfeld DA (2004) Integrated modeling of chemical mechanical planarization for sub-micron IC fabrication: from particle scale to feature, die and wafer scales. Springer, Berlin, Heidelberg

Mullany B, Byrne G (2003) The effect of slurry viscosity on chemical–mechanical polishing of silicon wafers. J Mater Process Technol 132:28–34

Boning DS, Moyne WP, Smith TH, Moyne J, Telfeyan R, Hurwitz A, Shellman S, Tayor J (1996) Run by run control of chemical-mechanical polishing. IEEE Trans Compon Packag Manuf Technol Part C 19:307–314

Khalick MAE, Hong J, Wang D (2017) Polishing of uneven surfaces using industrial robots based on neural network and genetic algorithm. Int J Adv Manuf Technol 93:1463–1471

Yu Y, Kong L, Zhang H, Xu M, Wang LL (2019) An improved material removal model for robot polishing based on neural networks. Infrared Laser Eng 48:317005

Dieste JA, Fernández A, Roba D, Gonzalvo B, Lucas P (2013) Automatic grinding and polishing using spherical robot. Procedia Eng 63:938–946

Pilný L, Bissacco G (2015) Development of on the machine process monitoring and control strategy in Robot Assisted Polishing. CIRP Ann 64:313–316

Ahn JH, Lee MC, Jeong HD, Kim SR, Cho KK (2020) Intelligently automated polishing for high quality surface formation of sculptured die. J Mater Process Technol 130–131:339–344

Sigenic (2020) Sigenic - engineering intelligent solutions: vibration sensor series. https://www.sigenic.com/. Accessed 26 Oct 2020

Sensofar (2020) In-situ metrology for pad surface monitoring in CMP. https://www.sensofar.com/de/cs13_in-situ-metrology-for-pad-surface-monitoring-in-cmp/. Updated 26 Oct 2020

Kuechel MF (1990) New Zeiss interferometer. In: Proc. SPIE 1332, optical testing and metrology III: recent advances in industrial optical inspection 655

Gross H, Dörband B, Müller H (2012) Handbook of optical systems, Volume 5, Metrology of optical components and systems. Wiley-VCH, Weinheim

Kuechel MF (2020) Precise and robust phase measurement algorithms. Springer, New York, pp 371–384

Kuechel MF, Wiedmann W (1990) In-process metrology for large astronomical mirrors. In: Sanger GM, Reid PB, Baker LR (Eds.), Advanced optical manufacturing and testing: SPIE, (SPIE Proceedings), 280

Zygo (2020) Produktbeschreibung NewView 8300. https://www.zygo.com/products/metrology-systems/3d-optical-profilers/newview8300

Pieplow und Brandt (2020) Datenblätter Gesamtlieferprogramm: Abralox E220; Plakor 9

Statease (2020) Design Expert Version 12. https://www.statease.com/software/design-expert/. Accessed 28 Dec 2020

Minitab (2020) Faktorielle und teilfaktorielle Versuchspläne. https://support.minitab.com/de-de/minitab/18/help-and-how-to/modeling-statistics/doe/supporting-topics/factorial-and-screening-designs/factorial-and-fractional-factorial-designs/. Updated 2019. Accessed 25 Dec 2020

Esbensen KH, Swarbrick B, Westad F, Whitcombe P, Andersen M (2018) Multivariate data analysis: an introduction to multivariate analysis, process analytical technology and quality by design, 6th edn. CAMO, Oslo, Magnolia, TX

Box GEP (1990) George’s Column. Qual Eng 2:497–502

Kelm A (2016) Modellierung des Abtragsverhaltens in der Padpolitur. Technische Universität Ilmenau. Dissertation. http://d-nb.info/1126750794/34

UCI (2020) Machine learning repository: center for machine learning and intelligent systems. https://archive.ics.uci.edu/ml/index.php. Accessed 28 Dec 2020

Crowd Flower (2016) Data science - report 2016

Lima BMR, Ramos LCS, Oliveira TEA, Fonseca VP, Petriu EM (2019) Heart rate detection using a multimodal tactile sensor and a z-score based peak detection algorithm. https://proceedings.cmbes.ca/index.php/proceedings/article/view/850/843

Zheng A, Casari A, Lotze T (2019) Merkmalskonstruktion für Machine Learning: Prinzipien und Techniken der Datenaufbereitung, 1st edn

Géron A, Rother K, Demmig T (2020) Praxiseinstieg Machine Learning mit Scikit-Learn, Keras und TensorFlow: Konzepte, Tools und Techniken für intelligente Systeme, 2nd edn (Animals)

Ross A, Willson VL (eds) (2017) Basic and advanced statistical tests. Sense Publishers, Rotterdam

Garcia L (2019) Machine Learning Roboter Polierzelle - Entwicklung eines neuronalen Netzes für Polierprozesse. Master's thesis, Hochschule Aalen, Zentrum für Optische Technologien, Aalen

Kleppmann W (2011) Taschenbuch Versuchsplanung: Produkte und Prozesse optimieren, 7th updated and extended edn [electronic resource]. Hanser, München (Praxisreihe Qualitätswissen)

PHM, Prognostics and health management society (2020) Annual conference of the prognostics and health management society 2016: PHM data challenge. https://www.phmsociety.org/events/conference/phm/16/data-challenge. Accessed 08 Nov 2020

Kong Z, Beyca O, Bukkapatnam ST, Komanduri R (2011) nonlinear sequential bayesian analysis-based decision making for end-point detection of chemical mechanical planarization (CMP) processes. IEEE Trans Semicond Manuf 24:523–532

Jia X, Di Y, Feng J, Yang Q, Dai H, Lee J (2018) Adaptive virtual metrology for semiconductor chemical mechanical planarization process using GMDH-type polynomial neural networks. J Process Control 62:44–54

Lu J, Rogers C, Manno VP, Philipossian A, Anjur S, Moinpour M (2004) Measurements of slurry film thickness and wafer drag during CMP. Wear 151:G241

Komanduri R, Lucca DA, Tani Y (1997) Technological advances in fine abrasive processes. CIRP Ann 46:545–596

Segreto T, Teti R (2019) Machine learning for in-process end-point detection in robot-assisted polishing using multiple sensor monitoring. Int J Adv Manuf Technol 103:4173–4187

Fang SJ, Barda A, Janecko T, Little W, Outley D, Hempel G, Joshi S, Morrison B, Shinn GB, Birang M (1998) Control of dielectric chemical mechanical polishing (CMP) using an interferometry based endpoint sensor. In: Proceedings of the IEEE 1998 International Interconnect Technology Conference (Cat. No.98EX102): IEEE, pp. 76–78

Pruss C, Baer GB, Schindler J, Osten W (2017) Measuring aspheres quickly: tilted wave interferometry. Opt Eng 56:111713

Beckhoff Automation (2021) Maschinelles Lernen für alle Bereiche der Automatisierung. https://www.beckhoff.com/de-de/produkte/automation/twincat-3-machine-learning/. Accessed 04 Mar 2021

Dai YF, Shi F, Peng XQ, Li SY (2009) Restraint of mid-spatial frequency error in magneto-rheological finishing (MRF) process by maximum entropy method. Sci China Ser E Technol Sci 52:3092–3097

Funding

Open Access funding enabled and organized by Projekt DEAL. The work is part of a funded German research project named OpTec 4.0 with the goal of funding young scientists, contract number 13FH003IB6. The authors would like to thank the Federal Ministry of Education and Research for funding this project. The results presented are part of the PhD thesis of Max Schneckenburger.

Author information

Contributions

Conceptualization: MS; Methodology: MS, SH; Software: LG, MS; Writing (original draft preparation): MS; Writing (review and editing): RA; Funding acquisition: RB; Resources: MS; Supervision: RaB.

Ethics declarations

Conflicts of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Ethics approval

Neither humans nor animals were used for the experiments. The publication was prepared in compliance with ethical standards (e.g. no manipulation of data, no plagiarism).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Schneckenburger, M., Höfler, S., Garcia, L. et al. Material removal predictions in the robot glass polishing process using machine learning. SN Appl. Sci. 4, 33 (2022). https://doi.org/10.1007/s42452-021-04916-7
