Abstract

We describe a framework for global shipping container monitoring using machine learning with low-power sensor hubs and infrared catadioptric imaging. A mesh radio satellite tag architecture provides connectivity anywhere in the world, with or without supporting infrastructure. We discuss the design and testing of a low-cost, long-wave infrared catadioptric imaging device combined with a multi-sensor hub as an intelligent edge computing system that, when equipped with physics-based machine learning algorithms, can interpret the scene inside a shipping container and so make efficient use of expensive communications bandwidth. The histogram of oriented gradients and T-channel (HOG+) feature is introduced for human detection in low-resolution infrared catadioptric images, and is shown to be effective for various mirror shapes designed to give wide volume coverage with controlled distortion.

1 Introduction

In the 80 years since a trucker named Malcolm McLean first conceived the modern-day shipping container, shipping has exploded into a $400B-a-year industry [1]. The shipping container has radically transformed supply chains, fundamentally intertwined domestic and international economies across the world, and changed societies in the process. According to a recent survey by the World Shipping Council, whose members operate as much as 90% of global liner ship capacity, the international liner shipping industry transported approximately 130 million containers of cargo with an estimated value of more than $4 trillion [2]. These metal boxes will remain a staple of an industry that relies on their ease of use for transporting all types of cargo.

Despite their versatility, shipping containers are easy to break into and difficult to keep track of as they are transported around the globe. According to the National Cargo Security Council, cargo theft and cargo loss cost the industry an estimated $50B or more annually [3]. Losses occur in myriad ways, from containers being mislaid, mislabeled, or simply failing to arrive at their destination, to premeditated crimes such as breaking into ports to steal goods or pirates attacking crews at gunpoint for their valuable cargo. Over the last several decades, security and visibility in the global supply chain have largely been addressed through improved locking mechanisms and radio tracking devices attached to shipping containers, and it has been estimated that there are now over one million remote tracking systems on containers worldwide [4]. The latest adaptations of this technology include GPS-capable devices that use satellite, cellular, or Wi-Fi connectivity. Containers are often modified after manufacture with better locks or smart technology for improved security and visibility.

These methods have improved the industry, but at a high monetary cost: both for the infrastructure needed to track devices that use short-range radio frequency identification (RFID) and, in some cases, for the bandwidth needed to transmit the data wirelessly. Current RFID tracking methods also suffer from vulnerabilities and shortfalls, especially during disaster relief when wireless networks are destroyed, in emerging markets outside wireless coverage areas, and in providing real-time information to stakeholders.

New technology offers lower-cost, higher-efficiency, internet-connected sensors that can better inform decision-making. Recent developments have improved sensing capability while lowering power consumption. Ubiquitous devices still have bandwidth and power limitations, but on-board processors capable of edge computing have eased the challenge of working in austere environments. Processing data near the sensor dramatically reduces the bandwidth needed between the sensors and any central datacenter. It is therefore now possible to equip a container with a device that can talk to stakeholders throughout its voyage in the transportation network. Better yet, sensors can be placed inside the shipping container without drilling holes for signal wires, by transmitting data through the container wall using ultrasonic waves.

2 GRIDSAT tag architecture

Our goal in this research is to develop a real-time tracking device for containerized goods. The system incorporates the existing GRIDSAT Tag architecture (Fig. 1) to provide location and status of goods as they are transported around the globe.

The GRIDSAT Tag architecture uses interconnected GRID tags configured in a self-healing mesh network that communicates with a GRIDSAT tag. Messages are compiled and transmitted via satellite to stakeholders through a GIS software application package [5]. We use the Iridium satellite constellation for full global coverage [6].
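The GRIDSAT mesh protocol itself is not described here, so the following is only a minimal sketch of the self-healing idea, assuming a fewest-hop (breadth-first) routing rule over a hypothetical neighbor table: when a tag fails, a message from a container simply takes another surviving path to the GRIDSAT gateway. The tag names and links are hypothetical.

```python
from collections import deque

def route_to_gateway(links, source, gateway, failed=frozenset()):
    """Fewest-hop path from `source` to `gateway` by breadth-first search,
    skipping tags listed in `failed`. Returns None if no path survives."""
    if source in failed or gateway in failed:
        return None
    parent = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == gateway:
            path = []
            while node is not None:        # walk parents back to the source
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nbr in links.get(node, ()):
            if nbr not in parent and nbr not in failed:
                parent[nbr] = node
                queue.append(nbr)
    return None

# Hypothetical neighbor table: GRID tags A-E, with "SAT" as the GRIDSAT tag.
links = {
    "A": ["B", "C"], "B": ["A", "D"], "C": ["A", "E"],
    "D": ["B", "SAT"], "E": ["C", "SAT"], "SAT": ["D", "E"],
}

print(route_to_gateway(links, "A", "SAT"))                # ['A', 'B', 'D', 'SAT']
print(route_to_gateway(links, "A", "SAT", failed={"B"}))  # ['A', 'C', 'E', 'SAT']
```

A real mesh would also weigh link quality and battery state; hop count is used here only because it keeps the rerouting behavior easy to see.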

Low-power sensor hubs [7], like those found in wearables and smartphones, fuse the inputs of several types of MEMS sensors, such as accelerometers, magnetometers, and gyroscopes, without engaging the main processor, reducing power consumption by up to 95%. A device incorporating these low-power sensor hubs can be attached to the inside of a container to detect intrusion or changes in conditions and so monitor the status of the goods inside. New compact long-wave infrared (LWIR) imaging systems are also useful for our application because they readily detect the body heat of an intruder. The Seek Thermal Compact LWIR camera is a small, inexpensive device that attaches to a smartphone (Fig. 2).

The left image is a false-color thermography image of a person standing inside the open door of a shipping container. Note that the roof is bright because it is being heated by the sun, as is the background seen through the open door. The middle image shows a similar view, identifying the catadioptric mirror above the door and the Seek Thermal imager attached to the roof. The right image shows a close-up of the data collection apparatus: the Seek Thermal LWIR camera, the catadioptric mirror, and the TI Sensor Tag that communicates with the iPhone 5.

First, we use machine learning techniques to maximize status awareness and minimize data transmission, using data collected by various microelectromechanical systems (MEMS) sensors. Second, we transmit information through the container wall, without drilling holes or feeding wires, using ultrasonic guided waves with an appropriate combination of frequency and amplitude modulation (Fig. 3). This allows a device attached to the inside of a container to communicate with a device on the outside, which is connected to the GRIDSAT Tag architecture for worldwide tracking.

Lamb waves can be transmitted in a pitch-catch setup from a piezoelectric transducer on the inside surface of a container to a transducer on the outside surface. We find that these guided waves can be encoded with information via an appropriate combination of frequency and amplitude modulation [8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24].
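As a rough illustration of combining frequency and amplitude modulation, the sketch below maps each pair of bits onto one Hann-windowed toneburst: the first bit selects one of two carrier frequencies (frequency-shift keying) and the second selects one of two amplitudes (amplitude-shift keying). The sample rate, carriers, amplitudes, and burst length are hypothetical, and the plate channel itself (Lamb-wave dispersion, mode conversion, transducer response) is ignored; this is not the encoding scheme of the cited works.

```python
import numpy as np

FS = 2_000_000           # sample rate in Hz (hypothetical)
F0, F1 = 100e3, 150e3    # carrier for frequency bit 0 / 1 (hypothetical)
A_LO, A_HI = 0.5, 1.0    # amplitude for amplitude bit 0 / 1 (hypothetical)
N = 400                  # samples per symbol, i.e. 200 us at FS

t = np.arange(N) / FS
window = np.hanning(N)   # Hann window gives each toneburst a smooth envelope

def modulate(bits):
    """Map bit pairs (frequency bit, amplitude bit) to windowed tonebursts."""
    bursts = []
    for freq_bit, amp_bit in zip(bits[0::2], bits[1::2]):
        f = F1 if freq_bit else F0
        a = A_HI if amp_bit else A_LO
        bursts.append(a * window * np.sin(2 * np.pi * f * t))
    return np.concatenate(bursts)

def tone_magnitude(segment, f):
    """Magnitude of the segment's correlation with a complex tone at f."""
    return abs(np.sum(segment * np.exp(-2j * np.pi * f * t)))

def demodulate(signal):
    """Recover bit pairs: stronger carrier gives the frequency bit, and the
    correlation magnitude against a threshold gives the amplitude bit."""
    full_scale = A_HI * window.sum() / 2   # expected magnitude at A_HI
    bits = []
    for k in range(len(signal) // N):
        seg = signal[k * N:(k + 1) * N]
        m0, m1 = tone_magnitude(seg, F0), tone_magnitude(seg, F1)
        bits.append(int(m1 > m0))
        bits.append(int(max(m0, m1) > 0.75 * full_scale))
    return bits

message = [0, 1, 1, 0, 1, 1, 0, 0]
noise = 0.05 * np.random.default_rng(0).standard_normal(4 * N)
received = modulate(message) + noise
print(demodulate(received))   # [0, 1, 1, 0, 1, 1, 0, 0]
```

Combining the two keying schemes doubles the bits per burst relative to either alone, at the cost of needing an amplitude threshold that survives the wall's attenuation.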

3 Catadioptric imaging

With a 36-degree diagonal angle of view, the Seek Thermal cannot cover the entire inside of a shipping container, but there are several ways to increase the field of view. The first is refractive optics such as a fisheye lens, but LWIR lens materials can be expensive and infrared fisheye designs often use multiple lenses. The second is reflective optics, which combine a standard imaging system with a convex mirror of polished brass or aluminum. The third is rotating the imaging system, but moving parts are prone to failure, and our goal is to make the device as robust and low-cost as possible. Since we are building a semi-disposable system that operates in a harsh environment, we judge a simple, low-cost shaped reflector to be the right approach.

Chahl and Srinivasan state that the best shape for an omnidirectional mirror is hyperbolic [25] because only the hyperbolic mirror guarantees a linear mapping between the angle of elevation and the radial distance from the center of the image plane. Moreover, the resolution of the captured omnidirectional image increases with growing eccentricity and hence guarantees a uniform resolution for the panoramic image after unwarping.

Chahl and Srinivasan also note that misalignment of the camera with respect to the reflective surface produces distortions in the camera image. Similarly, a lateral displacement (orthogonal to the axis of the surface) results in a distortion in the plane of displacement, offset from the correct angle of reflection. Polynomial surfaces must therefore be imaged with the focus very close to the surface. Unlike with a plane mirror, the exact location of the focus varies with radial angle, so high-resolution images require a camera with a wide depth of field and the ability to focus at close range. This is simply a consequence of the surface being curved and is also true for spherical mirrors and cones. Fully mapping the location of the focus for objects at different ranges and elevation angles requires intensive ray tracing. In practice, however, such a procedure is not required because of the large depth of field of the typical charge-coupled device (CCD) video cameras for which these surfaces are often designed.

Kecskes et al. [26] used a commercial off-the-shelf GoPano reflector, originally designed for visible light, to develop a nighttime surveillance concept that detects moving, human-sized heat sources at ranges between 10 m and 70 m. They replaced the \(\hbox {SiO}_2\) coating on the aluminum surface of the GoPano reflector with a Clausing Beral coating that is highly reflective in the thermal infrared and requires no additional overcoating. Commercial IR coating companies also offer low-temperature application processes for metallic coatings. So, in addition to 3D printing the required geometry and then coating it, as we do here, one can mill a single-surface mold, vacuum thermoform a sheet of plastic, and coat it with an IR-reflective material, resulting in low-cost, custom-shaped front-surface reflectors.

3.1 Catadioptric theory and design

A catadioptric sensor uses a combination of lenses and mirrors configured to capture a wide field of view [27]. Many mirrors today seek an omnidirectional field of view (FOV), which gives a warped, donut-shaped view of the world on the focal plane array. This invariably renders portions of the rectangular focal plane array unusable, reducing pixel-use efficiency and increasing the effective price per pixel of the detector [28]. The very low-cost LWIR imagers we are using have no pixels to waste, so pixel-use efficiency becomes a primary design consideration. More complicated designs often require careful registration between the camera lens and the mirror. The environment for our application can be jarring, and a container’s route over land and sea involves multiple loadings and unloadings, so we seek a design that is robust to these conditions and that samples the scene adequately for decision-making.
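To put a number on the pixel-use argument, the sketch below computes the fraction of a 206 × 156 focal plane array covered by the largest centered annular (donut) image an omnidirectional mirror could form on it. The inner radius, standing in for a central blind spot such as the camera's own reflection, is a hypothetical parameter.

```python
import math

def annulus_pixel_efficiency(width_px, height_px, inner_radius_px=0.0):
    """Fraction of a width x height focal plane array covered by the largest
    centered annular image (outer circle inscribed in the shorter side)."""
    outer = min(width_px, height_px) / 2.0
    used = math.pi * (outer**2 - inner_radius_px**2)
    return used / (width_px * height_px)

print(round(annulus_pixel_efficiency(206, 156), 3))      # 0.595
print(round(annulus_pixel_efficiency(206, 156, 20), 3))  # 0.556
```

Even before any blind spot is subtracted, roughly 40% of the array goes unused, which is why a mirror whose image fills the rectangle is attractive for such a small detector.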

Baker and Nayar [27] developed the complete class of conic section mirrors used in catadioptric sensors that satisfy the single viewpoint (SVP) constraint for omnidirectional viewing. The SVP is rigid by design, so Swaminathan et al. [29] developed the geometry and analysis for non-single viewpoint (non-SVP) catadioptric systems which pose no constraints on mirror shape and relative position to the camera.

Yagi and Yachida [30] developed a tiny omnidirectional image sensor for autonomous robot navigation that adopts a technique used in reflecting telescopes to minimize the influence of blurring. Their design overcomes spherical aberration, astigmatism, and coma by using a two-mirror system: a convex primary mirror captures rays from the environment, and a concave secondary mirror converges them to a single center of projection. This design shares the limitation of the Chahl and Srinivasan family of mirrors: it is not suitable for harsh environments and requires careful calibration during assembly. Hicks [31] used differential methods to develop a conquistador-helmet-shaped mirror that yields a cylindrical projection to the viewer without digital unwarping and gives a more uniform resolution. Similarly, Srinivasan [32] designed equatorial-view and polar-view mirrors that eliminate the computation needed to map circular images into rectangular form for analysis. Hicks [33] also developed a method for designing catadioptric sensors that realize prescribed projections. Manufacturing freeform mirrors of these types can be challenging and therefore expensive.

Existing thermal panoramic imagers with parabolic or hyperbolic reflectors have optical aberrations that are typically reduced by imaging at low numerical apertures. At small apertures any lens operates in a near-pinhole mode, but this way of reducing aberrations comes with a significant loss of light. In panoramic catadioptric systems, the mirror size affects the effective aperture. Larger catadioptric panoramic cameras have lower aberrations and produce better images, at the expense of larger optics overall. Small, low-aberration panoramic optics are a particular design challenge in the thermal infrared, where diffraction at the longer wavelength dictates large apertures, regardless of light throughput, so that the diffraction-limited spot size matches the pixel pitch of the sensor.
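The diffraction argument can be made concrete with the Airy disk diameter, 2.44 λN for wavelength λ and f-number N. The sketch compares visible light with a 10 µm LWIR wavelength against the 12 µm pixel pitch quoted in Sect. 3.1; the f-numbers are illustrative, since the Seek Thermal's true aperture is not known.

```python
PIXEL_PITCH_UM = 12.0    # Seek Thermal pixel pitch quoted in Sect. 3.1

def airy_diameter_um(wavelength_um, f_number):
    """Diffraction-limited spot: Airy disk diameter to the first dark ring,
    2.44 * wavelength * f-number (aberration-free circular aperture)."""
    return 2.44 * wavelength_um * f_number

def spot_in_pixels(wavelength_um, f_number, pitch_um=PIXEL_PITCH_UM):
    """Airy disk diameter expressed in pixel pitches."""
    return airy_diameter_um(wavelength_um, f_number) / pitch_um

for wavelength in (0.55, 10.0):          # visible green vs LWIR, micrometres
    for n in (1.0, 2.0, 8.0):
        print(f"lambda = {wavelength:5.2f} um, f/{n:g}: "
              f"{spot_in_pixels(wavelength, n):5.2f} pixels")
```

At f/8 a visible spot stays under one pixel, whereas a 10 µm spot spreads over more than 16 pixels; even at f/1 the LWIR spot is about two pixels wide, which is why near-pinhole operation is not an option in the thermal band.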

Gutin et al. [34] developed an infrared panoramic imaging sensor for 360-degree automatic detection, location, and tracking of targets. In testing the effect of an SVP versus a non-SVP optical arrangement, they found a noticeable difference at short distances. They also found that a critical distance for the detection of an object exists when the angular size of the optics, as viewed from the object, is equal to or larger than the instantaneous field of view (IFOV), that is, the angle subtended by a single pixel in object space. They further concluded that, in a non-SVP optical system with axial symmetry, only the elevation of the object is affected, so its azimuth can still be determined. In a concept developed by Simon Thibault [35], which he calls the IR panamorph lens, the surveillance problem is treated as a pixel allocation problem, with distortion as a design parameter. This lets us optimize our design by dividing the coverage area into zones and providing the highest-resolution coverage where it is needed: once the coverage area is divided into specific security zones, a relative angular resolution analysis is used to allocate pixels.
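Using the 12 µm pixel pitch and 4.77 mm focal length estimated in Sect. 3.1, the IFOV and the distance at which optics of a given size subtend one IFOV can be estimated with the small-angle approximation. The 10 mm optics size is a hypothetical value, since the true aperture of the Seek Thermal is unknown; this is a back-of-envelope sketch, not Gutin et al.'s analysis.

```python
PIXEL_PITCH_MM = 0.012   # 12 um pitch (Sect. 3.1)
FOCAL_LENGTH_MM = 4.77   # focal length estimated in Sect. 3.1

# Instantaneous field of view: angle subtended by one pixel (small angle).
ifov_rad = PIXEL_PITCH_MM / FOCAL_LENGTH_MM

def critical_distance_m(optics_size_mm, ifov=ifov_rad):
    """Distance at which optics of the given size subtend exactly one IFOV
    as seen from the object; closer objects see the optics as larger."""
    return optics_size_mm / 1000.0 / ifov

print(f"IFOV = {ifov_rad * 1e3:.2f} mrad")
print(f"critical distance, hypothetical 10 mm optics: "
      f"{critical_distance_m(10.0):.2f} m")
```

With these assumptions the IFOV is about 2.5 mrad and the critical distance is a few meters, which is comparable to the interior dimensions of a container; this suggests the short-range SVP versus non-SVP effects Gutin et al. observed may be relevant here.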

When considering mirror shapes, we weighed field of view, spatial resolution, and distortion for various zones of coverage. These considerations interact, which invariably leads to tradeoffs. Common security mirrors are spherical; the half-dome and quarter-dome varieties are often used to provide wide fields of view and to see around corners. However, in a catadioptric sensor paired with a rectangular focal plane array, these mirror shapes do not allocate pixels efficiently. We found that an oblate sphere with a somewhat flattened equatorial zone works well for capturing the inside of a shipping container. Figure 4 shows an example of our experimental mirror shape inside a checkerboard-lined box, illustrating the distortions in three zones. The equatorial zone is rounded at the sides to allow viewing into the corners but is generally flat, giving minimal distortion of the opposite wall. The top and bottom zones are domed and preserve parallel lines, but very small blind areas exist directly above and below the mirror. In practice, catadioptric design proceeds from the detector through the lens (system) to the reflector so that aberrations are reduced or eliminated. We began our proof of concept with an off-the-shelf infrared imaging device with a 206 × 156 pixel focal plane array and estimated its lens parameters [8].

A single effective viewpoint is achieved by positioning the entrance pupil of the lens at one focal point of the reflector, making the other focal point the effective viewpoint. However, if the camera is placed between the mirror and the scene, the camera obscures a portion of the scene. We therefore prefer a non-single viewpoint sensor, with no required projection of planes in the scene, for two reasons. First, we have limited pixels with which to capture events, so we can ill afford to let the camera obscure any portion of the scene. Second, the movement of shipping containers through the supply chain will inevitably jostle the camera’s position relative to the mirror. We therefore design robustness into the system by selecting a mirror geometry that achieves a full field of view of the inside of a shipping container while tolerating slight shifts during movement over time. The mirror’s profile is then generally rectangular, to maximize utilization of the camera’s focal plane array (FPA), with the curvature needed to provide the desired field of view.

Seek Thermal publishes limited data about the technical characteristics of its device, but its support team provided enough information to approximate the design of a catadioptric system. We do not know the true aperture size, and thus do not know the entrance pupil or f-number of the imaging device. Nor do we know the true detector size; we estimate it from the stated pixel pitch of 12 microns [36] as a focal plane array of 2.472 × 1.872 mm (3.10 mm diagonal). From the manufacturer’s stated \(36^\circ\) diagonal angle of view, we then computed a focal length of 4.77 mm. The Seek Thermal lens is made of chalcogenide glass which, assuming BD-2 (composition \(\hbox {Ge}_{28} \hbox {Sb}_{12} \hbox {Se}_{60}\)), has a refractive index of approximately 2.60 at \(10\,\mu\)m and offers low dispersion, broad transmission, and minimal absorption [37]. Using the Camera Calibration Toolbox for MATLAB [38], we measured the tangential and radial distortion of the Seek Thermal. We captured 21 images of a heated 6 in × 6 in flat piece of T316 welded stainless steel mesh, rotating and translating the mesh between images [8]. We took extra care to detect corners manually, framing the region by the outside edges of the wire and including the wire thickness in the square-size computation. Points at the corners of the image were displaced by as much as 45 pixels due to radial distortion. Radial lens distortion is a symmetric distortion caused by imperfections in lens curvature introduced when the lens was ground. By inspection, it is not clear whether the measured radial distortion in the Seek Thermal lens produces barrel or pincushion distortion. The lens may also exhibit lateral chromatic aberration, which arises from radial and wavelength dependencies [39].
Nonetheless, considering noise effects and errors in finding the exact corners of the imperfect steel mesh grid pattern, we conclude that the Seek Thermal lens has no significant non-linear effects, allowing us to proceed with mirror design.
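The detector and lens estimates above can be reproduced with a pinhole model, which gives the 2.472 × 1.872 mm array, 3.10 mm diagonal, and 4.77 mm focal length quoted. The distortion function is the standard Brown-Conrady model of radial and tangential distortion, in the form used by Bouguet's Camera Calibration Toolbox (coefficients k1, k2, k3, p1, p2). The coefficient value below is hypothetical, not the measured Seek Thermal calibration; it only shows how a coefficient translates into corner displacement in pixels.

```python
import math

PIXEL_PITCH_MM = 0.012   # 12 um pixel pitch stated by the manufacturer
COLS, ROWS = 206, 156    # focal plane array size in pixels
DIAG_AOV_DEG = 36.0      # stated diagonal angle of view

width_mm, height_mm = COLS * PIXEL_PITCH_MM, ROWS * PIXEL_PITCH_MM
diag_mm = math.hypot(width_mm, height_mm)
# Pinhole model: half the sensor diagonal subtends half the diagonal AOV.
focal_mm = (diag_mm / 2) / math.tan(math.radians(DIAG_AOV_DEG / 2))

def distort(x, y, k1=0.0, k2=0.0, k3=0.0, p1=0.0, p2=0.0):
    """Radial (k1, k2, k3) plus tangential (p1, p2) distortion of normalized
    image coordinates, in the Brown-Conrady form used by Bouguet's toolbox."""
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    yd = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return xd, yd

def corner_shift_px(k1, k2=0.0, k3=0.0, p1=0.0, p2=0.0):
    """Displacement, in pixels, of the FPA corner for given coefficients."""
    x = (width_mm / 2) / focal_mm          # corner in normalized coordinates
    y = (height_mm / 2) / focal_mm
    xd, yd = distort(x, y, k1, k2, k3, p1, p2)
    return math.hypot(xd - x, yd - y) * focal_mm / PIXEL_PITCH_MM

print(f"FPA {width_mm:.3f} x {height_mm:.3f} mm, diagonal {diag_mm:.2f} mm")
print(f"focal length {focal_mm:.2f} mm")
print(f"corner shift, hypothetical k1 = -0.5: {corner_shift_px(-0.5):.1f} px")
```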

3.2 Mirror design

The parametric equations of a sphere, with parameters s and t and radius r, are modified by scalars a, b, and c that control the scaling of z and flatten the face of the sphere to reduce distortion in the main region of detection. We use the following parameterization of the mirror surface.

where \(0 \le s \le \pi\), \(-\pi /2 \le t \le \pi /2\), and a, b, c, and r are constants such that \(a>b>0\) and \(c>0\), with r the radius.

Determining the exact location of the imaging device’s entrance pupil relative to the reflector is important for two reasons. First, we want to capture the entire reflector in the camera frame. Second, we need the exact location of the entrance pupil to compute fields of view and spatial resolutions. Our method fits a rectangle to the back profile of the mirror and then, holding the lens’ diagonal angle of view fixed, computes the distance of the entrance pupil from the mirror for various pupil heights. The pose (rotation) of the entrance pupil is fixed at the origin of our system, but it can be adjusted through a range of angles as necessary.
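The framing step can be sketched for a simplified geometry, assuming a pinhole camera whose optical axis passes through the center of a fronto-parallel mirror back-profile rectangle; the pupil height and camera tilt that the method varies are ignored here. Holding the 36° diagonal angle of view fixed, the width and height half-angles follow from the 206 × 156 aspect ratio, and the pupil must be far enough away that both rectangle dimensions fit. The mirror dimensions are hypothetical.

```python
import math

def framing_distance(rect_w, rect_h, diag_aov_deg=36.0, aspect=(206, 156)):
    """Smallest pupil-to-rectangle distance at which a centered,
    fronto-parallel rect_w x rect_h rectangle fits entirely in the frame."""
    cols, rows = aspect
    diag = math.hypot(cols, rows)
    tan_half_diag = math.tan(math.radians(diag_aov_deg / 2))
    # Half-angle tangents along the frame's width and height (pinhole model).
    tan_half_w = tan_half_diag * cols / diag
    tan_half_h = tan_half_diag * rows / diag
    return max(rect_w / (2 * tan_half_w), rect_h / (2 * tan_half_h))

# Hypothetical 60 mm x 40 mm mirror back profile.
print(f"{framing_distance(60.0, 40.0):.1f} mm")
```

Whichever dimension is relatively larger sets the distance; a back profile that matches the 206:156 aspect ratio fills the frame in both directions at once.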

The camera’s FOV is not the 36-degree angle of view used to position the camera. Because of the mirror geometry, the FOV must be derived in three dimensions for the non-SVP case [29]. The three-step process begins by computing the caustic [40], which can be thought of as the locus of viewpoints of the rays imaged by the camera [41]. Next, we model the incident and reflected rays using surface normals and find the two extreme intersection points of the reflector and caustic surfaces along the equator. Finally, we determine the camera’s horizontal FOV as the angle between the two rays from the lens’ entrance pupil to these two extreme intersection points.

The model for a lens-based camera placed off-axis with respect to a symmetric reflector parameterized by (s, t). The viewpoint locus (caustic surface) of this imaging system is not rotationally symmetric and must be derived in three dimensions. The incident light ray \(V_i(s,t)\) reflects off the reflector surface at \(S_r(s,t)\) and is imaged after passing through the entrance pupil O along the reflected ray \(V_r(s,t)\). The surface normal \(N_r(s,t)\) is used in the law of reflection, together with the reflector surface, to define the reflected and incident rays.

A pixel element of area \(\delta A\) in the image plane projects through the entrance pupil of the lens onto the reflector as a region of area \(\delta S\). The pupil is located at (0, 0, d) with respect to the origin. The principal ray from \(\delta A\) reflects off the reflector at \(S_R\left( x(t,\theta ), y(t,\theta ), z(t,\theta )\right)\). The corresponding viewpoint on the caustic surface is shown above. The solid angle subtended at this viewpoint is then \(\delta \omega = \delta S/r^2_c\), where \(r_c\) is the distance of the viewpoint from the reflector. Resolution is then defined as the ratio of \(\delta A\) to \(\delta \omega\).

Referring to Fig. 5, the caustic is formed by letting \(S_r (s,t)\) be a point on the three-dimensional reflector, parameterized by (s, t) [29].

Let O denote the position of the entrance pupil of the lens. For any point \(S_r (s,t)\) on the reflector, we define the direction of the ray entering the pupil as:

Given a reflector geometry, we define the incoming light rays \(V_i (s,t)\) using surface unit normals \(N_r (s,t)\), where \(\times\) denotes the cross product [29, 42].
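The ray definitions above can be sketched numerically for any parametric reflector. This is a minimal sketch under assumed conventions: the unit normal is the normalized cross product of central-difference partial derivatives, oriented toward the pupil; V_r points from the mirror point to the pupil O; and the scene direction V_i is V_r mirrored about the normal (law of reflection). A sphere stands in for the mirror, whose parameterization is not reproduced here, and the pupil height d = 3 is hypothetical.

```python
import numpy as np

def sphere(s, t, r=1.0):
    """Stand-in reflector (a sphere of radius r), NOT the paper's mirror."""
    return r * np.array([np.cos(t) * np.cos(s), np.cos(t) * np.sin(s), np.sin(t)])

def unit_normal(surface, s, t, pupil, h=1e-6):
    """Unit normal N_r from the cross product of central-difference partial
    derivatives, flipped if needed so that it faces the pupil."""
    ds = (surface(s + h, t) - surface(s - h, t)) / (2 * h)
    dt = (surface(s, t + h) - surface(s, t - h)) / (2 * h)
    n = np.cross(ds, dt)
    n /= np.linalg.norm(n)
    return n if np.dot(n, pupil - surface(s, t)) > 0 else -n

def rays(surface, s, t, pupil):
    """Return (V_r, V_i): V_r is the unit vector from the mirror point to the
    pupil; V_i is V_r mirrored about N_r, pointing from the mirror to the scene."""
    p = surface(s, t)
    v_r = (pupil - p) / np.linalg.norm(pupil - p)
    n = unit_normal(surface, s, t, pupil)
    v_i = 2 * np.dot(v_r, n) * n - v_r       # law of reflection
    return v_r, v_i

pupil = np.array([0.0, 0.0, 3.0])            # O = (0, 0, d), hypothetical d = 3
v_r, v_i = rays(sphere, 0.7, 0.4, pupil)
```

The two rays make equal angles with the normal and lie in a common plane with it; for the stand-in sphere, the computed normal coincides with the radial direction.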

The caustic is then defined by [9]:

where \(r_c\) denotes the distance from the point of reflection to the caustic along \(V_i (s,t)\). A point on the caustic is a singularity in the space of scene rays, parameterized by \((r_c,s)\): in the limit, traversing infinitesimally along \(V_i (s,t)\) (a change in \(r_c\)) at the caustic is equivalent to traversing from one ray to the next (a change in s) [29]. At this point, the determinant of the Jacobian \(J(S_r (s,t)+r_c\cdot V_i (s,t))\) must vanish [42]. Applying this Jacobian method for non-single viewpoint catadioptric cameras [29, 41] yields a quadratic equation in \(r_c\), whose roots we then find.

The x, y, and z components of the vectors are denoted as \(S_r (s,t)_x, S_r (s,t)_y\), and \(S_r (s,t)_z\), respectively. The roots are substituted into the equation of the caustic \(S_c (s,t)\) yielding its analytic form.
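The Jacobian condition can be illustrated in a two-dimensional cross-section, where it becomes linear rather than quadratic in \(r_c\). With scene rays L(s, r) = S(s) + r V(s), setting det[∂L/∂s, ∂L/∂r] = 0 gives (S′ + r V′) × V = 0, so r_c = −(S′ × V)/(V′ × V). The sketch evaluates this with finite differences for a stand-in circular profile and a distant on-axis pupil, both assumptions; in that limit it should reproduce the classical nephroid caustic of a circle, with the paraxial virtual focus at half the radius, so the example returns approximately (0, 0.5) with r_c ≈ −0.5.

```python
import numpy as np

def caustic_point_2d(profile, s, pupil, h=1e-5):
    """Caustic point for parameter s of a 2-D reflector profile seen from a
    pinhole pupil. Returns (point, r_c); r_c < 0 means a virtual caustic."""
    def cross(a, b):
        return a[0] * b[1] - a[1] * b[0]

    def scene_dir(u):
        p = profile(u)
        d = (p - pupil) / np.linalg.norm(p - pupil)          # pupil -> mirror
        dp = (profile(u + h) - profile(u - h)) / (2 * h)     # tangent
        n = np.array([dp[1], -dp[0]]) / np.linalg.norm(dp)   # unit normal
        return d - 2 * np.dot(d, n) * n                      # reflected ray

    S = profile(s)
    dS = (profile(s + h) - profile(s - h)) / (2 * h)
    V = scene_dir(s)
    dV = (scene_dir(s + h) - scene_dir(s - h)) / (2 * h)
    # det[dL/ds, dL/dr] = cross(S' + r V', V) = 0 is linear in r in 2-D.
    r_c = -cross(dS, V) / cross(dV, V)
    return S + r_c * V, r_c

def circle(u):
    """Stand-in unit-circle profile with its vertex at (0, 1)."""
    return np.array([np.sin(u), np.cos(u)])

far_pupil = np.array([0.0, 1e6])   # very distant on-axis pupil (assumption)
point, r_c = caustic_point_2d(circle, 0.0, far_pupil)
```

For the off-axis three-dimensional case in the text, the same idea applies with a 3 × 3 Jacobian in (s, t, r_c), whose determinant is quadratic in r_c.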

Swaminathan et al. proved that the point on the reflector at which a light ray passing through the entrance pupil of the lens grazes the reflector is also its caustic point [29]. Thus, step two of computing the camera’s field of view is to find the two furthest intersection points along the x-axis between the reflector surface and the caustic (Fig. 6).

The x-axis corresponds to the horizontal direction, or equatorial cross section of the mirror, in our coordinate system. The extreme x-values therefore correspond to the rightmost and leftmost points on the two surfaces; in the rotationally symmetric case this is a two-dimensional computation. An Extended Newton Method is used to compute the intersection points between the two surfaces, the reflective surface \(S_r (s,t)\) and the caustic \(S_c (u,v)\) [43]. Our system consists of three equations \(f_1,f_2,f_3\) in four variables s, t, u, and v, which are parameterized by w, as defined below.

We proceed by re-parameterizing the caustic surface \(S_c\) with parameters u and v, to differentiate the two surfaces, and use differential geometry procedures [43]. Let \(S_r\) and \(S_c\) be as follows:

where the parameters (s, t) of \(S_r\) and (u, v) of \(S_c\) each range over \([0,1]^2\). The problem of intersection is solved by computing the set:

In the non-degenerate case the solution manifold M consists of one or several curves each fulfilling the condition:

where w is the arc-length parameter. Arc-length parameterization is sometimes used to march along the intersection curve in steps of constant distance. We interpret the pair of curves \(k_1 (w)=(s(w),t(w) )^T\in [0,1]^2\) and \(k_2 (w)=(u(w),v(w) )^T\in [0,1]^2\) as the path of intersection on each surface in the corresponding parameter space.

Following a process described by Alsaidi [43], we implement a routine in MATLAB by first setting \(S_r (s,t)= S_c (u,v)\) and solving for the parameters (s, t, u, v). The process begins by obtaining a suitable start point lying within the solution manifold. Starting the Extended Newton Method from an arbitrary initial guess yields sporadic intersection points, sometimes unwanted ones on the back portions of the reflector and caustic. Marching methods, used to find the intersection curves between parametric surfaces, ran for longer than 72 hours in MATLAB on the William & Mary high-performance computing cluster [44] because of the complicated caustic surface function. We overcame this by evaluating the surfaces on a meshgrid over the defined domain and using the computed surface values to find corresponding parameter values with which to initialize the Extended Newton Method.
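A minimal sketch of the meshgrid seeding idea, assuming both surfaces are vectorized functions on \([0,1]^2\) that return arrays of shape (3, n, n): sample both on a coarse grid, keep sample pairs closer than a gap threshold, and return the parameters of the pair with the largest x as a start point for the right-hand extreme intersection. The grid size, gap threshold, and toy surfaces (a plane and a hemisphere) are hypothetical; the returned (s, t, u, v) would then initialize an iterative solver such as the Extended Newton Method.

```python
import numpy as np

def meshgrid_seed(surf_a, surf_b, n=40, max_gap=0.05):
    """Coarse start point (s, t, u, v) for refining a surface intersection.

    Both surfaces are sampled on the same n x n grid over [0, 1]^2; sample
    pairs closer than max_gap are kept, and the pair whose point on surf_a
    has the largest x (the right-hand extreme) is returned."""
    g = np.linspace(0.0, 1.0, n)
    s, t = np.meshgrid(g, g, indexing="ij")
    pa = surf_a(s, t).reshape(3, -1).T     # (n*n, 3) samples of surface A
    pb = surf_b(s, t).reshape(3, -1).T     # (n*n, 3) samples of surface B
    # Squared pairwise distances without building an (n*n, n*n, 3) array.
    sq = (pa**2).sum(1)[:, None] + (pb**2).sum(1)[None, :] - 2 * pa @ pb.T
    ia, ib = np.nonzero(sq < max_gap**2)
    if ia.size == 0:
        raise ValueError("no near-intersections: refine the grid or raise max_gap")
    best = np.argmax(pa[ia, 0])
    params = np.column_stack([s.ravel(), t.ravel()])
    return np.concatenate([params[ia[best]], params[ib[best]]])

# Toy surfaces on [0, 1]^2 (hypothetical): a plane z = 0.5 and a unit hemisphere.
def plane(s, t):
    return np.stack([2 * s - 1, 2 * t - 1, np.full_like(s, 0.5)])

def hemisphere(u, v):
    az, el = 2 * np.pi * u, 0.5 * np.pi * v
    return np.stack([np.cos(el) * np.cos(az), np.cos(el) * np.sin(az), np.sin(el)])

seed = meshgrid_seed(plane, hemisphere)
```

Restricting candidates to near-coincident samples is what keeps the seed away from spurious intersections on the back of either surface, and the coarse grid costs seconds rather than hours.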

Our first approach to finding the intersection between the reflector and caustic surfaces ran into several problems. First, the caustic cannot be plotted directly, and without a visualization of the two surfaces, choosing which root to use for the caustic surface was guesswork. Second, our code produced three roots when solving the Jacobian, so finding the correct root took some trial and error. After trying each root value, we decided to estimate the caustic surface and use a discrete meshgrid to aid the convergence of iterative methods. With good initial approximations of the parameters s, t, u, and v, convergence was fast: about 5 minutes and 25 iterations on average, with a tolerance of \(1\times 10^{-7}\).
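The refinement step can be sketched as a Newton iteration for the underdetermined system \(S_r(s,t) - S_c(u,v) = 0\) (three equations, four unknowns), where each step uses the Moore-Penrose pseudoinverse of the 3 × 4 Jacobian. This is one common form of an extended Newton method, assumed here; the formulation in [43] may differ in detail. The tolerance matches the \(1\times 10^{-7}\) quoted above, while the toy surfaces (a plane and a unit sphere), the start point, and the finite-difference Jacobian are illustrative assumptions.

```python
import numpy as np

def numerical_jacobian(F, x, h=1e-6):
    """Central-difference Jacobian (m x n) of F: R^n -> R^m at x."""
    x = np.asarray(x, dtype=float)
    cols = []
    for j in range(x.size):
        e = np.zeros_like(x)
        e[j] = h
        cols.append((F(x + e) - F(x - e)) / (2 * h))
    return np.column_stack(cols)

def extended_newton(F, x0, tol=1e-7, max_iter=50):
    """Newton iteration for an underdetermined system F(x) = 0, stepping with
    the Moore-Penrose pseudoinverse of the (non-square) Jacobian."""
    x = np.asarray(x0, dtype=float)
    for k in range(max_iter):
        f = F(x)
        if np.linalg.norm(f) < tol:
            return x, k
        x = x - np.linalg.pinv(numerical_jacobian(F, x)) @ f
    raise RuntimeError("extended Newton iteration did not converge")

# Toy stand-ins for S_r(s, t) and S_c(u, v): a plane z = 0.5 and the unit sphere.
def plane(s, t):
    return np.array([s, t, 0.5])

def sphere(u, v):
    return np.array([np.cos(v) * np.cos(u), np.cos(v) * np.sin(u), np.sin(v)])

def F(x):
    s, t, u, v = x
    return plane(s, t) - sphere(u, v)   # f1, f2, f3

x, iters = extended_newton(F, [0.8, 0.4, 0.5, 0.6])
```

The pseudoinverse picks the minimum-norm step, so the iterate moves onto the solution curve roughly perpendicular to it; this is why a good seed matters, because the iteration converges to the nearest part of the intersection, not to a chosen extreme.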

3.3 Horizontal and vertical fields of view

The camera’s horizontal field of view (C-HFOV) is computed as the angle between a vector from the entrance pupil to extreme point #1 (maximum x-value) and a vector from the entrance pupil to extreme point #2 (minimum x-value). This computed C-HFOV is greater than the nominal 28.35-degree horizontal angle of view (computed from the Seek Thermal’s 36-degree diagonal angle of view) used to position the camera; the difference is due to the geometry of the reflector. This process is necessary because using only the incident rays on the reflector’s surface does not account for the locus of viewpoints described above. The catadioptric system’s horizontal field of view (CS-HFOV) is then the angle between the incident rays at the same two extreme points. Visual inspection of the combined plot of the caustic and reflector surfaces confirms that this is the CS-HFOV: no other incident rays with a wider field of view reflect off the mirror and enter the pupil.
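Both angles reduce to the angle between two vectors. The sketch below uses atan2 of the cross- and dot-product magnitudes, which stays accurate for very small or near-180° angles where arccos loses precision. The pupil position, extreme points, and incident-ray directions are hypothetical values for illustration; because only the angle between directions matters, the result does not depend on whether the incident rays are taken as pointing toward or away from the mirror.

```python
import numpy as np

def angle_deg(origin, p1, p2):
    """Angle at `origin` between the rays to p1 and p2, in degrees.
    atan2(|a x b|, a . b) stays accurate for very small or near-180 angles."""
    a = np.asarray(p1, dtype=float) - origin
    b = np.asarray(p2, dtype=float) - origin
    return np.degrees(np.arctan2(np.linalg.norm(np.cross(a, b)), np.dot(a, b)))

# C-HFOV: hypothetical pupil and extreme intersection points (mm).
pupil = np.array([0.0, 0.0, 60.0])
extreme_1 = np.array([25.0, 0.0, 5.0])     # maximum-x point
extreme_2 = np.array([-25.0, 0.0, 5.0])    # minimum-x point
c_hfov = angle_deg(pupil, extreme_1, extreme_2)

# CS-HFOV: pass the two incident-ray directions with the origin at zero.
v_i1 = np.array([0.9, 0.0, -0.3])          # hypothetical incident directions
v_i2 = np.array([-0.9, 0.0, -0.3])
cs_hfov = angle_deg(np.zeros(3), v_i1, v_i2)
```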

We compute the catadioptric system’s vertical field of view (CS-VFOV) as the angle between incident rays on the top and bottom of the mirror surface. These incident rays are found by searching along elliptical contours on the top and the bottom of the reflector surface in 5-degree increments until incident rays that reach the pupil are found. This also helps to trim the surface by removing unnecessary curvature that cannot be viewed from each pupil location. Moreover, this procedure does not change any characteristics of the mirror, but instead defines the CS-VFOV from our model definition.

We derive the 2D spatial resolution for a conventional perspective camera, which has a frontal image plane located at a distance f from the camera pupil (modeled as a pinhole) and whose optical axis is aligned with the axis of symmetry of the mirror. See Fig. 6 for an illustration of this scenario. Following the method by Baker and Nayar [45], we consider an infinitesimal area \(\delta A\) on the image plane. If this infinitesimal pixel images an infinitesimal solid angle \(\delta \omega\) of the world, the resolution of the sensor (as a function of the point on the image plane at the center of the infinitesimal area \(\delta A\)) is \(\delta A/\delta \omega\). If \(\psi _i\) is the angle between the optical axis and the line joining the pinhole to the center of the infinitesimal area \(\delta A\), the solid angle subtended by the infinitesimal area \(\delta A\) at the pinhole is:

Therefore, the resolution of the conventional camera is:

which decreases as \(\psi _i\rightarrow 0\); in other words, resolution on the sensor plane increases continuously with distance from the optical center. Then, the area of the mirror imaged by the infinitesimal area \(\delta A\) is:

where p is the location of the pupil \((x_o,y_o,z_o)\), \(S_r (s,t)\) is the surface value of the mirror, and \(\phi\) is the angle between the normal to the mirror and the line joining the pinhole to the mirror. The distance between p and \(S_r (s,t)\) is denoted d in the diagram. Since reflection at the mirror is specular, the solid angle of the world imaged by the catadioptric camera is:

Hence, the spatial resolution of the catadioptric system is:

From this equation we can examine our system in two ways. First, we want to know how resolution behaves for regions of interest (ROIs) in the scene. Second, we want to know the spot size for a given heat source in the scene. Referring to Fig. 4, we superimpose a grid onto the image of our reflector placed inside the black-and-white checkerboard box. Five real-world planes (three walls, the floor, and the roof) are then mapped to the reflector. We determine each region’s location on the reflector using the superimposed grid and note its distortion moving laterally away from the center of the reflector. The distortion is easily seen in Fig. 4, which depicts a sixth region we call the Head and Shoulders ROI, the most likely location of a human torso in the image. As expected, distortion is small at the center of the reflector and increases toward its edge. The real-world corners closest to the reflector show the most distortion. In general, this image shows that scene objects will be most distorted in the near corners and in the floor area just below the reflector. We keep this in mind for image processing, as it may be possible to use a measurement metric to classify shapes.

For each of the six ROIs, we computed the spatial resolution along lateral lines of the reflector to understand its behavior. This model uses an infinitesimally small scene point and an incident ray from infinity, so the spatial resolution values are extremely small. We then computed spatial resolution curves along three radial slices (top, middle, and bottom) for each ROI. Finally, we used the derived spatial resolution, Eq. (16), to compute the spot size of a given scene area on the focal plane array of the Seek Thermal.
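As a rough illustration of the spot-size step, the sketch below assumes that the spatial resolution from Eq. (16) is expressed as image-plane area per unit solid angle of the world (the usual catadioptric definition), and approximates the solid angle of a small, flat heat source by \(A\cos\theta/D^2\). The function names, the pixel pitch argument, and the example numbers are hypothetical, not values from our system.

```python
import math


def small_source_solid_angle(area_m2: float, distance_m: float, tilt_rad: float = 0.0) -> float:
    """Approximate solid angle (sr) subtended by a small flat source: A*cos(theta)/D^2."""
    return area_m2 * math.cos(tilt_rad) / distance_m ** 2


def spot_size_pixels(resolution_m2_per_sr: float, area_m2: float, distance_m: float,
                     pixel_pitch_m: float, tilt_rad: float = 0.0) -> float:
    """Spot size of a heat source on the focal plane array, in pixels.

    image area = (dA / d omega) * omega; dividing by the area of one square
    pixel converts it to a pixel count. All inputs are hypothetical placeholders.
    """
    omega = small_source_solid_angle(area_m2, distance_m, tilt_rad)
    image_area_m2 = resolution_m2_per_sr * omega
    return image_area_m2 / pixel_pitch_m ** 2


# Example with made-up numbers: a 0.1 m^2 source 5 m away, 12 um pixels.
print(spot_size_pixels(resolution_m2_per_sr=2.5e-7, area_m2=0.1,
                       distance_m=5.0, pixel_pitch_m=1.2e-5))
```

Because the resolution values are extremely small, it is the ratio to the pixel area that makes the result interpretable as a pixel count.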

3.4 Maximizing Seek Thermal pixels

The distribution of the Seek Thermal's pixels onto the reflector surface can be computed by mapping each pixel from reflector to image plane according to calibration techniques. Mapping pixels by transformation and projection has been shown to work well for rotationally symmetric shapes [46]. Our reflector surface does not directly fit these derived models, however, and since our basic need is to evaluate pixel use for various mirror shapes, we employ a new approach. We project the mirror surface onto the y-plane, according to our coordinate system, and compute the area difference between this projection and the smallest fitting rectangle. This simple approach is not quite good enough on its own, however, because it does not let us evaluate the effect of different pupil heights on the viewed area. We improve it by first computing the visible area according to our model and then projecting the visible mirror surface onto the image plane.

The four points that allow us to compute CS-HFOV and CS-VFOV are used to compute the visible area of the mirror for each pupil location. Since the computations of our model account for viewing direction, we use this fact to perform the projection and then find the area difference between the projected mirror surface and the smallest fitting rectangle. We do not assume a rectangle from the projected mirror shape; instead, we draw the rectangle from computed surface values so that we can verify it matches the projection. The simple equations that capture the definition of Area Difference and Pixel Efficiency are then:

where \(A_r\) is the area of the smallest fitting rectangle and \(A_{S_r}\) is the area of the reflector surface as projected onto the y-plane.
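The two metrics can be sketched as follows. The equations are not reproduced in this section, so the sketch assumes that Area Difference is \(A_r - A_{S_r}\) and that Pixel Efficiency is \(100\,A_{S_r}/A_r\) (in percent), a reading that is plausible given the Pixel Efficiency values near 91 reported below but is not the stated definition. It also assumes that the smallest fitting rectangle is axis-aligned and that the visible mirror outline is available as an ordered list of sampled surface points \(S_r(s,t)\). The function names are hypothetical.

```python
import math


def shoelace_area(poly):
    """Area of a simple polygon given as an ordered list of (u, v) vertices."""
    n = len(poly)
    s = 0.0
    for i in range(n):
        u1, v1 = poly[i]
        u2, v2 = poly[(i + 1) % n]
        s += u1 * v2 - u2 * v1
    return abs(s) / 2.0


def project_to_y_plane(points_3d):
    """Project (x, y, z) surface points onto the y-plane by dropping y."""
    return [(x, z) for x, _, z in points_3d]


def area_metrics(outline_3d):
    """Return (Area Difference, Pixel Efficiency %) for an ordered mirror outline.

    Assumes an axis-aligned smallest fitting rectangle and
    Pixel Efficiency = 100 * A_Sr / A_r (hypothetical reading).
    """
    proj = project_to_y_plane(outline_3d)
    a_sr = shoelace_area(proj)
    us = [u for u, _ in proj]
    vs = [v for _, v in proj]
    a_r = (max(us) - min(us)) * (max(vs) - min(vs))
    return a_r - a_sr, 100.0 * a_sr / a_r


# Usage: an elliptical outline (semi-axes 2 and 1) sampled every degree.
outline = [(2 * math.cos(math.radians(k)), 0.3, math.sin(math.radians(k))) for k in range(360)]
diff, eff = area_metrics(outline)
```

For this ellipse the fitting rectangle is 4 × 2, so the efficiency is close to \(100\pi/4 \approx 78.5\) percent.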

3.5 Positioning the catadioptric sensor inside the container

The placement of an infrared imaging system inside a shipping container can be investigated systematically through a geometric analysis of the container space. First, the coverage of the scene for infrared emittance from a heat source should be assessed by computing the viewing angle for various camera placements. This procedure requires setting up a geometric model of the space from which to calculate angles. Because a shipping container is geometrically symmetric, we investigated camera positions at three locations.

The first is the top center of the door, together with the corresponding location at the top center of the back wall. The second is a top corner of the container, and the third is the top middle of one side of the container. Any other sensor location would be obscured by container contents and/or would quickly be damaged during loading and unloading. We did not consider a sensor location at the top center of the container roof, which provides a good vantage point, because containers can be loaded to the roof and this position would lead to sensor damage and/or obscuration. Moreover, a catadioptric sensor in this location would require an omnidirectional mirror, which is not an efficient use of scarce pixels.
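To make the geometric analysis concrete, the sketch below computes the distance, azimuth, and depression angle from each candidate mount to a target point. The container dimensions are assumed approximate internal dimensions of a standard 40-ft dry container, the coordinate frame (x along the length from the door, y across the width, z up from the floor) is our own choice, and the 1 m target height is a hypothetical torso height.

```python
import math

# Assumed approximate internal dimensions of a 40-ft dry container (metres).
LENGTH, WIDTH, HEIGHT = 12.03, 2.35, 2.39

# Candidate mounts; x runs from the door (x = 0) to the back wall, y across, z up.
MOUNTS = {
    "door top-centre": (0.0, WIDTH / 2, HEIGHT),
    "top corner": (0.0, 0.0, HEIGHT),
    "side top-middle": (LENGTH / 2, 0.0, HEIGHT),
}


def view_angles(camera, target):
    """Return (distance m, azimuth deg, depression deg) from camera to target.

    Azimuth is measured in the horizontal plane from the +x axis;
    depression is the angle below horizontal.
    """
    dx, dy, dz = (t - c for t, c in zip(target, camera))
    horizontal = math.hypot(dx, dy)
    distance = math.sqrt(horizontal ** 2 + dz ** 2)
    azimuth = math.degrees(math.atan2(dy, dx))
    depression = math.degrees(math.atan2(-dz, horizontal))
    return distance, azimuth, depression


if __name__ == "__main__":
    # Hypothetical targets: a torso-height (1 m) point in each far corner.
    targets = [(LENGTH, 0.0, 1.0), (LENGTH, WIDTH, 1.0)]
    for name, cam in MOUNTS.items():
        for tgt in targets:
            d, az, dep = view_angles(cam, tgt)
            print(f"{name:16s} -> {tgt}: {d:5.2f} m, az {az:6.1f} deg, down {dep:5.1f} deg")
```

Comparing the spread of azimuths and depressions across targets gives a first indication of the field of view each mount would need.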

3.6 Mirror design results

After a number of initial trials with mirror shapes, we downselected ten mirror shape variations drawn from three different x- and y-scale factors, two different z-scale factors, and two different curvature factors. Using three different pupil heights, we show the results of 30 mirror configurations (Table 1). A summary of results follows:

- The average C-HFOV is 33.23\(^\circ\), with standard deviation 2.02 and variance 3.94
- The average CS-HFOV is 212.13\(^\circ\), with standard deviation 1.24 and variance 1.47
- The average CS-VFOV is 144.28\(^\circ\), with standard deviation 17.45 and variance 294.49
- The average Area Difference is 750.02, with standard deviation 204.49 and variance 40,423.90
- The average Pixel Efficiency is 91.09, with standard deviation 0.46 and variance 0.20
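The reported variances are not simply the squares of the reported standard deviations (for example, \(2.02^2 \approx 4.08\), not 3.94). A quick arithmetic check, sketched below, suggests the figures are consistent with population variances (divisor N = 30) alongside sample standard deviations (divisor N − 1 = 29). This is an inference from the numbers, not something the text states; the function name is hypothetical.

```python
# Reported (standard deviation, variance) pairs for the 30 mirror configurations.
REPORTED = {
    "C-HFOV": (2.02, 3.94),
    "CS-HFOV": (1.24, 1.47),
    "CS-VFOV": (17.45, 294.49),
    "Area Difference": (204.49, 40_423.90),
    "Pixel Efficiency": (0.46, 0.20),
}
N = 30


def population_variance_from_sample_sd(sd: float, n: int = N) -> float:
    """Convert a sample SD (divisor n - 1) to a population variance (divisor n)."""
    return sd ** 2 * (n - 1) / n


for metric, (sd, var) in REPORTED.items():
    naive = sd ** 2
    implied = population_variance_from_sample_sd(sd)
    print(f"{metric:16s} reported {var:10.2f}  sd^2 {naive:10.2f}  sd^2*(n-1)/n {implied:10.2f}")
```

Within the rounding of the reported values, the \((n-1)/n\) conversion matches every row, whereas squaring the standard deviation overshoots each variance by roughly 3 to 6 percent.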

The effects of parameter values on CS-HFOV are as follows (an increase means better):

- Holding the x- and y-scale factors and the z-scale factor constant while increasing the curvature factor resulted in an increase in CS-HFOV.
- Holding the z-scale factor and curvature factor constant while increasing the x- and y-scale factors resulted in an increase in CS-HFOV.
- Holding the x- and y-scale factors and curvature factor constant while increasing the z-scale factor resulted in a decrease in CS-HFOV.
- Pupil height has very little effect on CS-HFOV. This is favorable because it means there is little variation in the equatorial region of our reflector, which in turn suggests lower distortion in the main region of detection.

The effects of parameter values on CS-VFOV are as follows (an increase means better):

- Holding the x- and y-scale factors and the z-scale factor constant while increasing the curvature factor resulted in an increase in CS-VFOV.
- Holding the z-scale factor and curvature factor constant while increasing the x- and y-scale factors resulted in a decrease in CS-VFOV.
- Holding the x- and y-scale factors and curvature factor constant while increasing the z-scale factor resulted in a decrease in CS-VFOV.
- Raising the pupil height resulted in an increase in CS-VFOV.

The effects of parameter values on Area Difference are as follows (an increase means worse):

- Holding the x- and y-scale factors and the z-scale factor constant while increasing the curvature factor resulted in an increase in Area Difference.
- Holding the z-scale factor and curvature factor constant while increasing the x- and y-scale factors resulted in an increase in Area Difference.
- Holding the x- and y-scale factors and curvature factor constant while increasing the z-scale factor resulted in an increase in Area Difference.
- Raising the pupil height resulted in a decrease in Area Difference.

Parameter values have a negligible effect on Pixel Efficiency for all tested mirror shapes. We expected this because the mirror is matched to the pupil location so that the camera's full angle of view is used.

For the Head and Shoulders ROI, we observed the highest spatial resolution in the center, with a steady decrease moving laterally outward until reaching zero resolution at \(s= 2\pi /9\) and \(s=7\pi /9\). It makes sense that resolution is lower in the near corners, as previously discussed. The values at \(s=0\) above, and throughout this section, correspond to the viewable edges of the mirror. We do not use these values further, as they only confirm the fields of view; no values fell outside the range of the computed CS-HFOV or CS-VFOV. The spatial resolution results for the LHS Wall ROI and the RHS Wall ROI both show high resolution at the center of the reflector decreasing toward the edges, as expected. This result means we have higher resolution to detect heat sources in the far corners of the container. Results for the Back Wall ROI are also as expected, with the highest resolution in the center of the ROI decreasing toward the edges. The curves are relatively flat for this region, meaning mostly uniform resolution. The Floor ROI has similar spatial resolution behavior in the two regions closest to the Back Wall ROI, since they also lie in the main equatorial region of the mirror. The concern is the floor area just below the reflector, which has significantly lower resolution than any other ROI. There is nothing unusual about the spatial resolution for the Roof ROI: it is highest in the center and decreases moving outward.

For prototyping a mirror, we selected the design with the lowest Area Difference value and the third highest CS-VFOV. There are only 4 degrees of separation between the highest and lowest CS-HFOV, and all mirror shapes have a horizontal field of view above 209 degrees, so CS-HFOV was not used for selection. Area Difference turned out to be the metric that differentiated the mirror shapes the most. In choosing the mirror with the lowest Area Difference, we also select the mirror with the highest spatial resolution, as observed when comparing spatial resolution plots between mirror shapes. The rationale is that a smaller Area Difference translates directly to a more uniform distribution of camera pixels imaging the reflector. Moreover, the selected mirror is more compact, with a shorter pupil-to-reflector distance, and has the highest pupil height, meaning less obscuration of the scene by the camera. The prototype mirror parameters are: \(r=57.15\) mm, \(a=1.0, b=0.6, c=1.1\) and Pupil = (0, 186.175 mm, 60 mm).

Prototyping was conducted in two ways, both of which can be used for high-volume production [8]. The first is to model the mirror in a CAD program that then controls the milling of a form in either aluminum or wood. We found aluminum preferable because we can both test it directly after polishing and then use it as the block for vacuum thermoforming an acrylic sheet, which can then be coated with an IR-reflective material. The second is simply to 3D print the mirror, fill and polish imperfections, and then coat it (Fig. 7).

4 Embedded machine learning

Detection based on infrared and visible image fusion is widely used in many real-life applications. One highly cited human detection system is Pfinder [51]. The central aspects of its approach are learning a model of the scene from a sequence of video frames that do not contain a person, searching for large deviations from this model by measuring the Mahalanobis distance to identify regions of interest for analysis, and finally identifying head, hand, and foot locations based on 2D contour shapes. We investigated a similar approach, but found that it relies on very specific parameters that make it difficult to generalize to an autonomous application, especially a real-time one. Moreover, contour shape analysis is noisy and generalizes poorly, which we found is exacerbated in low-resolution images.
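A minimal sketch of the Pfinder-style idea of learning the empty scene and flagging large deviations, assuming a single thermal channel. Pfinder itself models color pixels; with one channel the Mahalanobis distance reduces to \(|x-\mu|/\sigma\). The threshold k = 3 and the function names are hypothetical choices for illustration.

```python
import numpy as np


def learn_background(frames):
    """Per-pixel mean and standard deviation from person-free frames."""
    stack = np.stack([np.asarray(f, dtype=np.float64) for f in frames])
    return stack.mean(axis=0), stack.std(axis=0) + 1e-6  # epsilon avoids divide-by-zero


def deviation_mask(frame, mean, std, k=3.0):
    """Flag pixels whose single-channel Mahalanobis distance |x - mu| / sigma exceeds k."""
    dist = np.abs(np.asarray(frame, dtype=np.float64) - mean) / std
    return dist > k


# Usage with synthetic data: 20 empty frames, then a frame with a warm patch.
rng = np.random.default_rng(0)
background = [100 + rng.normal(0, 1, (24, 32)) for _ in range(20)]
mu, sigma = learn_background(background)
test = mu.copy()
test[10:14, 5:9] += 40  # hypothetical warm object
mask = deviation_mask(test, mu, sigma)
```

The flagged pixels would then become the regions of interest passed on for shape analysis.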

With improved computational power in the early 2000s, state-of-the-art human detection methods included the Haar wavelet-based Adaboost cascade [52], histogram of oriented gradient (HOG) features combined with a linear SVM [53], artificial neural networks (ANN) using local receptive fields (LRF) [54], and key point detection methods [55]. These seminal works have withstood testing on extreme datasets and considerable scrutiny, as reflected in the number of citations they have received and the applications implemented over the last couple of decades. They have also been optimized for many applications beyond their original use, which shows their adaptability to various classification tasks.

Tens of thousands of papers on applications of machine learning are published each year. Often that machine learning is performed remotely via cloud computing, but in sophisticated Internet of Things (IoT) applications the images and/or sensor data are intended to be processed locally. The Support Vector Machine (SVM) approach has lower classification complexity than Convolutional Neural Networks (CNNs) [56] because it relies on only a small subset of the training data, called support vectors, to construct the optimal hyperplane decision boundary [57], which can make it more amenable to edge-computing implementations.

There is less research on surveillance using fisheye cameras [58] than on perspective cameras. The relative dearth of omnidirectional images for training CNNs means that deep learning approaches have been used less for omnidirectional human detection [59,60,61], and standard human detectors are not directly applicable to top-view fisheye frames [62]. For these reasons, the authors of [63] use an SVM human detector with an HOG descriptor.

Despite a heavy focus on human detection in computer vision, little research has been conducted on human detection in catadioptric images for security applications, especially images captured at low resolution. Omnidirectional systems are the most commonly used catadioptric systems; they capture a warped version of the 360\(^\circ\) scene onto a donut-shaped image. This warped image is usually transformed into a panoramic image or a sequence of perspective images before classification techniques are applied. As part of the algorithm proposed in [64], a direct approach to human detection uses HOG features and SVM classification. The HOG computation is modified using a Riemannian metric to form an omnidirectional (non-rectangular) sliding window. This method has some interesting aspects that apply to our problem, since, as we will see, human objects in our dataset are skewed near the edges of the reflector. Using training and search windows that conform to the geometry of the reflector may improve detection performance.

Human detection methods can be separated into those that use background subtraction and those that perform direct detection [65]. This is an important consideration in our application. Some background subtraction methods are versatile in that no background model needs to be learned. In contrast, direct detection methods are attractive because features can be extracted immediately and fed to a classifier. Ultimately, a choice between them can be made after analyzing the performance, computational cost, and battery cost of each method in a test environment.

In general, we are cautious about methods that require full scans of the image, multiple passes over the same data, or cookbook/dictionary methods, which require the most long-term memory. The current state-of-the-art methods unfortunately use full scans at multiple scales. The work-around is to find a search window size and overlap that minimize false alarms, maintain a high detection rate, and reduce computation. Although computations can be split across parallel cores by treating pixels and cells of pixels as independent of each other, the potential gain is sometimes minimal. Additionally, it is possible to exploit features with high discriminative power, reducing feature vector complexity through Adaboost methods [66]. These methods combine weak learners to build a stronger classifier. Finally, because many of these methods are already employed in real-time embedded applications, we consider them in this work.
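The cost of a full multi-scale scan can be estimated by counting search windows. The sketch assumes a 64 × 128 window, an 8-pixel stride, and a pyramid scale step of 1.2; the 320 × 240 image size in the usage line is hypothetical and is not a resolution stated for the Seek Thermal. The function name is also hypothetical.

```python
def count_windows(img_w: int, img_h: int, win_w: int = 64, win_h: int = 128,
                  stride: int = 8, scale: float = 1.2) -> int:
    """Count sliding-window positions over an image pyramid.

    Each level is the previous one shrunk by `scale`; scanning stops once the
    image is smaller than the window. A larger stride (less overlap) cuts the count.
    """
    total = 0
    w, h = img_w, img_h
    while w >= win_w and h >= win_h:
        total += ((w - win_w) // stride + 1) * ((h - win_h) // stride + 1)
        w, h = int(w / scale), int(h / scale)
    return total


# Usage: compare strides (i.e. window overlap) on a hypothetical 320 x 240 frame.
for stride in (4, 8, 16):
    print(stride, count_windows(320, 240, stride=stride))
```

Since every window costs one feature extraction and one classifier evaluation, this count is a direct proxy for computation and battery use.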

In the research of Tang et al. [68], the authors found that a fixed reference template outperformed a filter with an adaptive reference template, because the adaptive method was sensitive to background changes.

Montage of daytime images. Note that the thermal signature of the container roof can differ significantly depending on whether it is sunny or cloudy, since temperature determines the gray-scale value in the thermograms. The feature that draws the eye in these images is the fixture holding the Seek Thermal camera, at the top center of each image. The person is the small, bright feature in the lower part of each image.

4.1 Data collection

We collected a dataset of 30 videos inside two shipping containers over a six-month period (Nov 2018 to Apr 2019). We used two forty-foot containers in an open parking lot located at \(37^\circ 16' 37'', 76^\circ 43' 11''\) (Fig. 2). The videos were captured at various times of day and night at different outside ambient temperatures to sample different backgrounds with variability in detected radiance. The persons in the scenes are in various poses, including side profile, back profile, head on, bent over, and squatting. In addition to moving around in the container, the humans are partially occluded by container contents, which include desks, chairs, wardrobes, and tables. Partial occlusion occurs while picking up and moving chairs and climbing on top of container contents. A montage of image samples can be seen in Figs. 8 and 9.

During data collection, the camera is positioned directly above the door (as seen in Fig. 2) in three positions: center, left corner, and right corner. These positions were selected because they offer the most protection from damage during loading and unloading and provide the best vantage point for intruders' most probable point of entry. The dataset consists of 19,485 images (13,032 positive and 6453 negative examples) captured with five different mirrors. Table 1 provides a summary of the data collected with a brass orb reflector (seen in Fig. 4), which includes 34 background sequences and 51 foreground sequences. A sequence is a set of consecutive frames that contain either no person (background) or a person (foreground). Table 2 provides a summary of images captured by four 3D-printed, aluminum-coated reflectors (Fig. 7) of different geometries. We refer to these four aluminum-coated mirrors by size (extra-large = XL, large = L, medium = M, and small = S). Table 3 provides size and geometric information about these four reflectors.

Montages of 36 daytime sample images and 21 nighttime sample images (Figs. 8 and 9) show the variability in backgrounds as well as human poses within the dataset. An initial analysis of these 57 images highlights differences in the shape and intensity of people in the dataset by location in the image. We attribute the variability in the measurements to pose articulation. We defined seven regions in which the human intruder appears in the sample images and refer to these regions collectively as FacePosition. The width, height, and maximum intensity of the intruder's head for each FacePosition are compared by time of day using the MATLAB imtool function.

A comparison of FaceHeight, measured in pixels, with respect to FacePosition for day and night shows an approximate range of 10 to 60 pixels, with tighter groupings within each FacePosition region. That is, FaceHeights captured in a given FacePosition, such as MiddleHigh, are more closely grouped than the full range of measured FaceHeight values. FaceWidth, also measured in pixels, shows an approximate range of 5 to 45 pixels. In general, FaceHeight and FaceWidth vary with FacePosition in a similar fashion, albeit at different scales. MaxIntensity, the highest intensity value for the head object, ranges between 150 and 250. The lower variances of FaceWidth and FaceHeight (see Table 4) suggest they might be helpful features for distinguishing human shapes from non-human shapes during supervised learning. However, the higher variance in intensity values suggests that intensity-based methods should be approached with caution. It is not obvious from this sample of images whether these features can be clustered according to FacePosition; however, it is promising that FaceWidth and FaceHeight are correlated, indicating that distortion is minimal and that the human form can be assumed consistent with what we would expect.

Next, we applied segmentation methods systematically to the dataset. Infrared sensors capture radiation emitted by scene objects, whose detected intensities vary with atmospheric conditions and viewing angle. We therefore want to understand how different segmentation methods perform at identifying regions of interest, both to check whether segmentation is viable as a first step for simple human detection methods and to see whether it could enhance direct methods. Also, in our application, it is not clear whether using a reflector to capture emitted radiation has unanticipated adverse effects. We look at separating foreground from background as a way to facilitate feature extraction in scenarios where no contrast exists. Thus, we try edge detection, threshold segmentation, and frame differencing techniques.

We reduce bias or variance in the procedure by first selecting pre-processing steps that allow subsequent routines to be executed sequentially with consistent results. We experimented with histogram equalization methods as a low-level pre-processing step for each method. However, we found that this step is not necessary for all methods.
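For reference, global histogram equalization of an 8-bit thermogram can be sketched in NumPy as below. This is the textbook CDF-remapping form, not necessarily the exact MATLAB routine we used, and the function name is hypothetical.

```python
import numpy as np


def equalize_hist(img, levels=256):
    """Global histogram equalization of an 8-bit grayscale image via its CDF."""
    img = np.asarray(img, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=levels)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]  # count at the darkest occupied gray level
    denom = cdf[-1] - cdf_min
    if denom == 0:
        return img.copy()  # constant image: nothing to equalize
    # Map each gray level so the output CDF is approximately uniform.
    lut = np.round((cdf - cdf_min) / denom * (levels - 1))
    lut = np.clip(lut, 0, levels - 1).astype(np.uint8)
    return lut[img]
```

The darkest occupied level maps to 0 and the brightest to 255, which stretches the narrow intensity range typical of a uniform container background.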

Initial analysis showed that standard thresholding methods, including global, adaptive mean, adaptive Gaussian, and binary thresholding, do a poor job segmenting the images in this dataset. Another segmentation method uses an active contour model that evolves a curve so that it separates foreground from background based on the means of the two regions [69]. The technique is very robust to initialization and returns good results when the foreground and background means differ. Chan and Vese [69] developed this active contour model based on Mumford-Shah segmentation and the level set method. It does not use classical edge detection to stop the evolving curve on the desired boundary, and it is claimed not to require very smooth boundaries defined by a gradient.
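To make the "means of the two regions" idea concrete: if the curve-length penalty of the Chan-Vese energy is set to zero and both fitting weights are equal, the energy for fixed region means is minimized by assigning each pixel to the region whose mean is closer. Alternating that assignment with re-estimating the means gives the simple two-means iteration sketched below. This is a deliberately simplified stand-in, not the level-set evolution of [69] or the implementation in [70]; the function name is hypothetical.

```python
import numpy as np


def two_region_segment(img, max_iter=50):
    """Piecewise-constant two-region fit (Chan-Vese energy with the length term dropped).

    Alternates between (1) computing the foreground mean c1 and background mean c2
    and (2) reassigning each pixel to the nearer mean. Returns a boolean foreground mask.
    """
    u = np.asarray(img, dtype=np.float64)
    mask = u > u.mean()  # initial partition: brighter-than-average pixels
    for _ in range(max_iter):
        if mask.all() or not mask.any():
            break  # one region is empty, so its mean is undefined
        c1, c2 = u[mask].mean(), u[~mask].mean()
        new_mask = (u - c1) ** 2 < (u - c2) ** 2
        if np.array_equal(new_mask, mask):
            break  # converged: assignment no longer changes
        mask = new_mask
    return mask
```

Without the length term there is no boundary smoothing, which is exactly what the full model adds for noisy, low-resolution thermograms.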

Active contour segmentation [70] does a fairly good job on images in our dataset. In images with enough contrast between foreground and background, the human object is isolated. We note that this method isolated the torso of the human, which is the brightest part. It did a particularly good job on images captured when outside conditions were fair or windy and temperatures were in the low to mid 50s \(^\circ\)F.

We found that Sobel, Prewitt, and Roberts edge detection work well enough to pursue as a possible enhancement after testing on more data. Our results show that sensitivity values between 0.015 and 0.040, using built-in MATLAB functions with both horizontal and vertical edge directions, perform equally well for all reflectors and all ambient conditions. As with thresholding, we run into the implementation challenge of automatically finding appropriate sensitivity values. The difficulty here is the amount of noise in the images and their low resolution. Roberts edge detection left the most noise in the image, but the noise could be cleaned up by morphological operations. It was easy to find a person using a sliding bar to select the sensitivity value, but more processing steps may be required to clean up the jittery human form, as we saw in the results for March 31, 2019 at 1659 hours and March 30, 2019 at 0336 hours. These images were captured with aluminum-coated 3D-printed reflectors. We noticed that human objects captured by these reflectors had a glow to them. While this makes the human object easy to see in the image, it complicated edge detection [8].
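The edge-detection step can be approximated outside MATLAB with a Sobel gradient magnitude, a threshold on the normalized magnitude, and a neighborhood filter that drops isolated edge pixels. This is a rough analogue under our own assumptions: the normalization, the 0.03 default threshold, and the isolated-pixel rule are hypothetical choices, and the thresholds are not numerically interchangeable with MATLAB's sensitivity values.

```python
import numpy as np


def sobel_magnitude(img):
    """Sobel gradient magnitude with edge-replicated borders."""
    u = np.pad(np.asarray(img, dtype=np.float64), 1, mode="edge")
    gx = (u[:-2, 2:] + 2 * u[1:-1, 2:] + u[2:, 2:]
          - u[:-2, :-2] - 2 * u[1:-1, :-2] - u[2:, :-2])
    gy = (u[2:, :-2] + 2 * u[2:, 1:-1] + u[2:, 2:]
          - u[:-2, :-2] - 2 * u[:-2, 1:-1] - u[:-2, 2:])
    return np.hypot(gx, gy)


def remove_isolated(mask, min_neighbors=1):
    """Keep only pixels with at least `min_neighbors` set pixels in their 8-neighborhood."""
    m = mask.astype(np.int32)
    p = np.pad(m, 1)
    h, w = m.shape
    window_sum = sum(p[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3))
    return mask & ((window_sum - m) >= min_neighbors)


def edge_map(img, thresh=0.03):
    """Binary edge map: normalized Sobel magnitude above `thresh`, isolated pixels removed."""
    mag = sobel_magnitude(img)
    peak = mag.max()
    if peak > 0:
        mag = mag / peak
    return remove_isolated(mag > thresh)
```

The fixed `thresh` is exactly the parameter that would need to be chosen automatically in a deployed system.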

4.2 Human detection approach

Our initial approach was to apply two broad methods to detect humans inside a shipping container: first isolate the foreground, then use features of that object to determine whether it is a person. We divide this approach into two problems. Since we are not performing continuous observation, as when watching a street corner or train station, we can process images as short sequences or as single frames. If we exploit the information contained in a two-frame sequence, we can use frame differencing or motion methods to locate the target and then apply feature extraction and binary classification. This assumes that the frames at \(t-1\) (the previous time step) and t (the current frame) do not both contain a static target. If we perform detection on a single frame, we need an adaptive background model on standby to use whenever other sensors detect a potential break-in.

The background inside a shipping container varies by time of day, ambient conditions, container orientation, and other factors. While the change in background is dynamic, it is usually gradual, so background information can be captured at periodic intervals in preparation for detection. We look at both methods despite their obvious drawbacks because they are computationally inexpensive.
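Both options can be sketched in a few lines: a two-frame difference between \(t-1\) and t, and a background model refreshed at periodic intervals with an exponential running average. The learning rate, the difference threshold, and the function names are hypothetical, not tuned parameters from our experiments.

```python
import numpy as np


def frame_difference(prev, curr, thresh=15.0):
    """Motion mask from two consecutive frames (t-1 and t)."""
    diff = np.abs(np.asarray(curr, dtype=np.float64) - np.asarray(prev, dtype=np.float64))
    return diff > thresh


def update_background(background, frame, alpha=0.05):
    """Exponential running average; call periodically while no intruder is present."""
    return (1.0 - alpha) * background + alpha * np.asarray(frame, dtype=np.float64)


def background_subtract(background, frame, thresh=15.0):
    """Single-frame foreground mask against the stored background model."""
    return frame_difference(background, frame, thresh)
```

A target that stays still in both frames produces no difference, which is why the two-frame method relies on the assumption stated above and the single-frame method needs the stored background.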

A more sophisticated approach is to use direct methods that do not require segmentation. For this approach we test the HOG feature with additional T-Channel information, as in the T\(\pi\)HOG feature proposed by Baek et al. [71]. The T-Channel is an aggregated version of the thermal image that captures intensity information within cells. We choose the HOG feature over Haar features because it captures edge information in finer detail. Haar features are limited to edges in four directions: vertical, horizontal, and two 45-degree diagonals [53]. This makes Haar features efficient for tasks such as face recognition, because faces have well-defined structure. The finer resolution of HOG features makes them robust to pose variation, and the algorithm's built-in block normalization step makes them robust to illumination changes. While illumination changes usually do not affect LWIR imaging, ambient conditions do, so block normalization puts feature extraction for images captured under different conditions on a level playing field.

When we started to build a detector, we realized that simple shape features were not discriminative enough to separate human objects from all other objects. Building these features was also time consuming via trial and error, and this method would lead to a model trained only for a specific dataset that would not generalize well to new observations. Furthermore, we verified that the HOG feature does an excellent job of capturing the human shape, even when slightly distorted by our catadioptric sensor. Its two major challenges are detecting people on the extreme edges of the reflector and finding a person under high humidity conditions and against hot backgrounds, as expected [72]. Containers often transit tropical conditions en route to the Panama and Suez Canals.

We found humidity to be an interesting challenge when processing imagery captured after a full day of rain. The background appeared almost washed out, as if IR radiation emitted from scene objects were attenuated to zero or scattered before reaching the camera's detector. This was not the case for the human in the scene. Nonetheless, while the human object can be seen in the image, the hazy separation between target and background gave our detector trouble. The remedy is foreground detection or background subtraction, after which feature extraction and classification can still be performed.

These initial results led us to a refined approach that uses the HOG feature as our only shape feature for human detection. We propose to modify this feature by adding intensity information, as discussed below. To overcome failed detections of human objects on the extreme edges of the reflector, the approach suggested by Cinaroglu and Bastanlar [64], which transforms the training and search windows to match the geometry of the reflector in the catadioptric system, could be worth pursuing. We do not attempt this method, but we found it encouraging that, with more training windows, our approach did find people on the edge of the reflector as long as the head and shoulders were captured. If only the head of the human object was visible, there was not enough information to classify the object as a human. We approach the challenge of detecting a human intruder against a hot background or in humid conditions by using frame differencing or background modeling and then performing segmentation to give an advantage to HOG feature extraction. After feature extraction, we perform classification using a linear support vector machine (SVM), also discussed below.

To describe a human detector using a direct method with the histogram of oriented gradients (HOG) global feature we use three steps:

1. the method of feature extraction from positive and negative images,
2. the training of a linear support vector machine, and
3. the search method on new images.
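The training step can be illustrated with a minimal linear SVM trained by stochastic sub-gradient descent on the regularized hinge loss (the Pegasos scheme). This is a stand-in chosen so the example needs only NumPy; it is not the solver used in [53] or [73], and the regularization weight, epoch count, and function names are hypothetical. A constant feature is appended so the bias is learned as part of the weight vector.

```python
import numpy as np


def _augment(X):
    """Append a constant 1 so the bias is folded into the weight vector."""
    X = np.asarray(X, dtype=np.float64)
    return np.hstack([X, np.ones((X.shape[0], 1))])


def train_linear_svm(X, y, lam=0.01, epochs=20, seed=0):
    """Pegasos-style SGD on lam/2 * ||w||^2 + mean hinge loss; y must be in {-1, +1}."""
    Xa = _augment(X)
    y = np.asarray(y, dtype=np.float64)
    rng = np.random.default_rng(seed)
    w = np.zeros(Xa.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(y)):
            t += 1
            eta = 1.0 / (lam * t)  # decreasing step size
            violates = y[i] * (Xa[i] @ w) < 1.0  # inside the margin or misclassified
            w *= 1.0 - eta * lam  # shrink step from the regularizer
            if violates:
                w += eta * y[i] * Xa[i]  # hinge-loss sub-gradient step
    return w


def svm_score(X, w):
    """Linear decision score; positive means 'person' under our labeling."""
    return _augment(X) @ w
```

In the detector, each row of `X` would be a HOG+ vector from one training window, labeled +1 for positive windows and −1 for negative ones; at search time, windows with a positive score are reported as detections.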

Implementation of this detector follows [53] and [73]. We found that the HOG feature on its own does not perform well for our application because it does not capture pixel intensity information. Thus, our modifications to McCormick's code [73] include appending a T-Channel descriptor to the HOG feature vector, as described in [71]. We call the feature in this detector HOG+; it comprises the standard HOG feature plus the T-Channel feature. This adaptation improves performance by exploiting aggregate information from the pixel intensities within cells. We do not include the pixel position information proposed by Baek et al. [71] because we found intensity information was enough to find people in our dataset. Also, combining the 3780-value HOG feature vector with the 128-value T-Channel feature vector already creates a 3908-value feature vector, which we wanted to keep as lightweight as possible for embedded use.

Feature extraction begins by capturing 66 × 130 pixel training windows from both positive and negative frames. Positive frames contain a person and negative ones do not. The true size of the training windows is 64 × 128 pixels; the extra one-pixel border is required to compute gradients along the edges. Note that the dataset has many sequences of one or more persons in the container. We did not find it necessary to use every pose in a sequence, as the similarity between frames was enough to identify the person. So, after splitting the data into training and test sets, we selected a variety of poses from the training set to capture training windows and then tested the classifier on the images in the test set. Negative training windows were captured from background-only images in the dataset. We expected actual backgrounds from the dataset to enhance performance; as will be seen, there are hard examples that will need further analysis, but using actual backgrounds to form negative training windows seemed to work well. We will also show that the classifier sometimes incorrectly classifies a portion of the background as a person. One would expect the misclassified portion to have a human shape, but this was not always the case. This is why we believe future implementations will require some kind of background subtraction step before feature extraction.

Collecting training windows is a tedious process. We wrote MATLAB code that opens an image and lets the user draw a box over the desired area; a window of the correct dimensions is then automatically cropped and saved for training. It is still a manual process, but much faster than using MATLAB's rectangular crop function, which requires opening each image, approximating pixel coordinates, and then executing the crop function after manual input.
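The cropping step of such a tool can be sketched as follows, in Python rather than MATLAB. Given the center of the user-drawn box, it cuts a 66 × 130 window (the 64 × 128 detection window plus a one-pixel gradient border), shifting it inward if it would fall off the image. The function name and the clamping behavior are our assumptions about how such a tool could work.

```python
import numpy as np


def crop_training_window(img, center_row, center_col, win_h=128, win_w=64, border=1):
    """Crop a (win_h + 2*border) x (win_w + 2*border) window centred on a point.

    The window is shifted inward when necessary so that it stays inside the image.
    """
    H, W = img.shape[:2]
    h, w = win_h + 2 * border, win_w + 2 * border
    if H < h or W < w:
        raise ValueError("image is smaller than the training window")
    top = min(max(center_row - h // 2, 0), H - h)
    left = min(max(center_col - w // 2, 0), W - w)
    return img[top:top + h, left:left + w]
```

Saving the returned array for each box gives training windows that all share the exact dimensions the feature extractor expects.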

The HOG part of our HOG+ feature is the histogram of summed gradient magnitudes over gradient orientations in a cell. It is widely used as an efficient feature for pedestrian and vehicle detection. It outperforms Haar features because Haar features are coarse and lack orientation information. It uses four parameters: number of histogram bins, cell size (in pixels), block size (in cells), and window size (in pixels). Dalal and Triggs suggest that the cell size should be about the size of a person's limb and that the block size should be about two to three times the size of a limb. We use a cell size of 8 × 8 pixels and a block size of 2 × 2 cells for test purposes. Besides these being the most commonly used parameter values, recall from data exploration that the head size can be as small as 5 × 10 pixels in our dataset. A cell size of 8 × 8 pixels will not capture the fine details of the smallest head, but it performs well enough, and smaller cell sizes require more computation.

The HOG feature is a 3780-value vector built from cell histograms: the magnitudes of the 64 gradient vectors in each 8 × 8 cell are accumulated into an n-bin histogram. Gradient vectors are computed from intensity values using a [−1 0 1] mask in both the horizontal and vertical directions for every pixel in the training window. The number of bins n is usually set to 9. Histograms span 0 to 180 degrees using unsigned gradient orientations, so each bin covers 20 degrees. The choice of [0, 180] or [0, 360] degree orientation bins depends on the object class; for human detection, 0 to 180 degrees performs well. Because each gradient votes with its magnitude, the strongest gradients have the biggest impact on the histogram. Each vote is split proportionally between the two closest bins. Forming the histogram thus quantizes the 64 two-component gradient vectors into 9 values. The histogram does not encode where each gradient lies within the cell; it only encodes the distribution of gradients within the cell.
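The gradient and binning steps can be sketched in plain Python as below. This is a minimal illustration of the standard scheme, not the authors' code; it assumes images are lists of pixel rows, bin centres at 10, 30, ..., 170 degrees, and linear interpolation that wraps between the last and first bins because 0 and 180 degrees are the same unsigned orientation.

```python
import math


def gradients(img):
    """Apply the [-1 0 1] mask horizontally and vertically.

    img is a list of pixel rows. An (H + 2) x (W + 2) input yields H x W
    gradient maps, so a 66 x 130 crop gives gradients for a 64 x 128 window.
    """
    h, w = len(img) - 2, len(img[0]) - 2
    gx = [[img[r + 1][c + 2] - img[r + 1][c] for c in range(w)] for r in range(h)]
    gy = [[img[r + 2][c + 1] - img[r][c + 1] for c in range(w)] for r in range(h)]
    return gx, gy


def cell_histogram(gx, gy, n_bins=9):
    """Magnitude-weighted histogram of unsigned (0-180 degree) orientations.

    Each gradient's magnitude is split linearly between the two nearest bin
    centres, wrapping around at the ends of the 0-180 degree range.
    """
    bin_width = 180.0 / n_bins
    hist = [0.0] * n_bins
    for row_x, row_y in zip(gx, gy):
        for dx, dy in zip(row_x, row_y):
            mag = math.hypot(dx, dy)
            angle = math.degrees(math.atan2(dy, dx)) % 180.0   # unsigned orientation
            pos = angle / bin_width - 0.5      # position measured from bin centres
            lower = math.floor(pos)
            frac = pos - lower
            hist[lower % n_bins] += mag * (1.0 - frac)
            hist[(lower + 1) % n_bins] += mag * frac
    return hist
```

A purely vertical gradient of magnitude 2 (90 degrees) lands entirely in the centre bin, while a horizontal one (0 degrees) is split evenly between the first and last bins.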

The next step is to normalize the histograms. Normalization is performed locally to increase robustness to intensity changes between frames and to variation in foreground-background contrast. Histograms are not normalized individually. Instead, they are grouped into blocks and normalized based on all histograms in a block: the histograms of the 2 × 2 cells within a block are concatenated into a 36-component vector, which is divided by its magnitude. We use standard L2 normalization, which, along with L2-Hys (L2 normalization followed by clipping and renormalization) and L1-sqrt, was empirically determined by Dalal and Triggs to perform best.
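A minimal sketch of the block normalization, assuming the common form v / sqrt(||v||^2 + eps^2), where eps is a small constant (an assumed value, not one reported here) that avoids division by zero in flat background regions:

```python
import math


def normalize_block(cell_hists, eps=1e-5):
    """L2-normalize one block: concatenate its cell histograms (2 x 2 cells of
    9 bins gives 36 values) and divide by sqrt(||v||^2 + eps^2)."""
    v = [value for hist in cell_hists for value in hist]
    norm = math.sqrt(sum(x * x for x in v) + eps * eps)
    return [x / norm for x in v]
```

A block whose only non-zero entries are 3 and 4 maps them to roughly 0.6 and 0.8, and an all-zero block stays all zero.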

The T-Channel, originally proposed by Hwang et al. [74], computes the sum of pixel intensities within an 8 × 8 cell. It was suggested as a feature for classifying pedestrians in infrared images, taking into account that pedestrians tend to have higher pixel intensity values than backgrounds. The images in our dataset show this contrast even though they are captured by a catadioptric imaging system, and it is especially good for the images captured using the aluminum-coated mirrors. To summarize, the 64 × 128 pixel training window is divided into 7 blocks horizontally by 15 blocks vertically, for a total of 105 blocks. Each block contains 4 cells with a 9-bin histogram each, for a total of 36 values per block. The HOG descriptor vector is then 7 × 15 × 4 × 9 = 3780 values. The T-Channel feature contributes one intensity sum for each of the 8 × 16 = 128 cells in the window; concatenating these 128 values to the HOG vector forms a 3908-value HOG+ feature vector.
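The T-Channel and the final concatenation can be sketched as follows. The function names are hypothetical, and the sums are left unscaled, following the description above; any scaling of these sums relative to the normalized HOG values is an open design choice that this sketch does not address.

```python
def t_channel(window, cell=8):
    """Sum of pixel intensities in each non-overlapping cell x cell block,
    in row-major order (8 x 16 = 128 sums for a 64 x 128 window)."""
    rows, cols = len(window), len(window[0])
    sums = []
    for r0 in range(0, rows - cell + 1, cell):
        for c0 in range(0, cols - cell + 1, cell):
            sums.append(sum(window[r][c]
                            for r in range(r0, r0 + cell)
                            for c in range(c0, c0 + cell)))
    return sums


def hog_plus(hog_vector, window):
    """Append the T-Channel sums to a HOG descriptor (3780 + 128 = 3908)."""
    return list(hog_vector) + t_channel(window)
```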

The optimization objective of the SVM is to maximize the margin. The margin is defined as the distance between the separating hyperplane (decision boundary) and the training samples closest to this hyperplane, the so-called support vectors. Given the training set

\[ \{(x^{(1)}, y^{(1)}), (x^{(2)}, y^{(2)}), \ldots , (x^{(L)}, y^{(L)})\} \]

with L samples, the SVM is trained to classify input data \(x^{(i)}\) as the positive \((y^{(i)}=1)\) class or the negative \((y^{(i)}=-1)\) class, where

\[ x^{(i)} \in {\mathfrak {R}}^{n} \]

and

\[ y^{(i)} \in \{-1, 1\}. \]

If the input data \(x^{(i)}\) is mapped to a higher dimensional feature space as \(\phi (\cdot )\), then the decision function of the SVM is defined by

\[ f(x) = w^{T}\phi (x) + b, \]

where \(w\) is the weight vector in the feature space and \(b\in {\mathfrak {R}}\) is the bias. The SVM is trained by finding the optimal values of w and b that maximize the margin between the two classes.
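Because detection uses a linear SVM, \(\phi\) can be taken as the identity, and the decision function reduces to a dot product plus bias. The minimal sketch below (hypothetical helper names, plain Python lists) evaluates that score, applies a threshold, and computes the margin \(1/\Vert w\Vert\) that training maximizes under the usual scaling in which the support vectors satisfy |f(x)| = 1.

```python
import math


def decision_function(x, w, b):
    """Linear SVM score f(x) = w^T x + b, i.e. phi taken as the identity."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(x, w, b, threshold=0.0):
    """+1 (person) if the score exceeds the threshold, otherwise -1."""
    return 1 if decision_function(x, w, b) > threshold else -1


def margin(w):
    """Distance from the hyperplane to the support vectors, 1/||w||, under the
    scaling in which support vectors satisfy |f(x)| = 1."""
    return 1.0 / math.sqrt(sum(wi * wi for wi in w))
```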

Transforming the training data onto a higher dimensional feature space via the mapping function \(\phi (\cdot )\) lets us train a linear SVM model to classify the data in this new feature space and then use the same mapping function \(\phi (\cdot )\) to transform new, unseen data and classify it with the same linear model. Since computing the new features explicitly is expensive, especially for high-dimensional data, the kernel trick is used to avoid forming them when solving the quadratic programming task that trains the SVM. In practice, the dot product \(x^{(i)T} x^{(j)}\) is replaced by \(\phi (x^{(i)})^T \phi (x^{(j)} )\), leading to the kernel function

\[ K(x^{(i)}, x^{(j)}) = \phi (x^{(i)})^{T} \phi (x^{(j)}). \]
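To see why the kernel trick saves work, the sketch below uses a degree-2 polynomial kernel on 2-D inputs, an example chosen here for illustration rather than one used in this work (the detector itself is linear). The kernel \((x^{T}z)^{2}\) gives the same value as the dot product of the explicit three-dimensional feature vectors, without ever forming them.

```python
import math


def phi(x):
    """Explicit degree-2 feature map for 2-D input: (x1^2, sqrt(2) x1 x2, x2^2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)


def poly_kernel(x, z):
    """K(x, z) = (x^T z)^2, evaluated without forming phi."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))
```

For x = (1, 2) and z = (3, −1), both `poly_kernel(x, z)` and `dot(phi(x), phi(z))` evaluate to 1 (up to floating-point rounding).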

When the classes are not linearly separable, slack variables \(\xi _i\) are introduced. The motivation is that the linear constraints need to be relaxed so that the optimization can converge in the presence of misclassifications, under an appropriate cost penalty. L2-SVMs use the sum of the squared slack variables \(\xi _i\) in the objective function, forming the following optimization problem:

\[ \min _{w,b,\xi } \; \frac{1}{2}\Vert w\Vert ^{2} + \frac{C}{2}\sum _{i=1}^{L} \xi _i^{2} \quad \text {subject to} \quad y^{(i)}\left( w^{T}\phi (x^{(i)}) + b\right) \ge 1 - \xi _i, \quad i = 1, \ldots , L. \]

We use the variable C to control the penalty for misclassification. Large values of C correspond to large error penalties and small values indicate that we are less strict about misclassification errors. We can then use the parameter C to control the width of the margin and therefore tune the bias-variance trade-off. We found \(C=1.0\) and the L2-SVM loss function work well on our dataset.
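The L2-SVM objective can be evaluated directly once each slack is replaced by its smallest feasible value, the squared-hinge term max(0, 1 − y f(x)). This sketch assumes the C/2 scaling of the penalty (some formulations use C instead) and a linear feature map; it evaluates the objective but does not train the model.

```python
def l2_svm_objective(w, b, X, y, C=1.0):
    """Primal L2-SVM objective 0.5*||w||^2 + (C/2) * sum(xi_i^2), with each
    slack eliminated as xi_i = max(0, 1 - y_i (w^T x_i + b))."""
    regularizer = 0.5 * sum(wi * wi for wi in w)
    penalty = 0.0
    for x_i, y_i in zip(X, y):
        score = sum(wj * xj for wj, xj in zip(w, x_i)) + b
        slack = max(0.0, 1.0 - y_i * score)   # zero for points outside the margin
        penalty += slack * slack
    return regularizer + 0.5 * C * penalty
```

With one weight `w = [1.0]`, `b = 0`, and two positive samples at 2.0 and 0.5, only the second falls inside the margin; the objective is 0.625 for C = 1 and 1.75 for C = 10, showing how a larger C weights margin violations more heavily.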

The rationale behind decision boundaries with large margins is that they tend to have lower generalization error, whereas models with small margins are more prone to overfitting [75]. This high generalization ability has made the SVM popular over conventional methods, especially when the amount of training data is small. The SVM also adapts to various classification problems by changing the kernel function, and because its training problem is convex, it has a globally optimal solution.

Once training windows have been captured from the training images, the HOG+ feature has been extracted from each training window, and an SVM has been trained to distinguish the two classes (person v. no person), the detection phase searches new images at all scales and locations to localize human objects.

First, it is important to note that the HOG+ descriptor window is never resized. Instead, the image is rescaled many times, with each scale 5% smaller than the previous one, until the resulting image is too small to fit a descriptor window. An image scale of 1.00 represents the full image, where we look for persons who appear small in the image, that is, farther from the camera. At smaller scales, we search for people who appear larger in the image, meaning closer to the camera. For each scale, the image is downsampled, then HOG+ descriptors are calculated for every possible detection window within the resized image. The HOG+ descriptors are fed to the linear SVM to determine whether the detection window contains a person. A person is detected when the SVM output score is above a threshold set by the designer. The implementation here does not support variable detection window strides: the window is stepped by one cell (8 pixels) in each direction, so the cell grids of neighboring windows align with no partial overlap.
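The scale-pyramid search can be sketched as a generator over detection windows. The 0.95 scale step, the fixed 64 × 128 window, and the 8-pixel stride follow the text; truncating each resized dimension with `int` and the function name are assumptions, so the total window count need not match the figure reported in the results. Scoring each yielded window with the SVM and comparing the score to the threshold would follow.

```python
def pyramid_windows(img_w, img_h, win_w=64, win_h=128, step=0.95, stride=8):
    """Yield (scale, x, y, w, h) for every detection window, in original-image
    pixels. The image shrinks by 5% per level; the window size never changes."""
    scale = 1.0
    while True:
        w, h = int(img_w * scale), int(img_h * scale)   # assumed truncation
        if w < win_w or h < win_h:
            break
        for y in range(0, h - win_h + 1, stride):
            for x in range(0, w - win_w + 1, stride):
                # divide by scale to map the window back to the full image
                yield (scale, x / scale, y / scale, win_w / scale, win_h / scale)
        scale *= step
```

Windows found at smaller scales map back to larger boxes on the original image, which is why detections of nearby people appear as bigger rectangles.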

4.3 Human detector results

The performance of our human detector on unseen images was analyzed by mirror type, near/far detection of human objects, and day v. night. We trained one SVM classifier with 349 positive and 250 negative training windows (Figs. 10 and 11, respectively). We selected various human poses for training but, by design, did not choose all poses in the dataset. All training windows came from videos collected with the BrassOrb mirror (Fig. 4).

Test results were recorded for the following number of test images by mirror: BrassOrb (43 images), MirrorXL (44 images), MirrorL (32 images), MirrorM (34 images), and MirrorS (43 images). Test images for each mirror include images from all times of day and weather conditions covered by the collected data.

During testing we recorded the threshold and the scale of each detected window, and counted false positives, true positives, and false negatives. The threshold is the value that separates the two classes. Since −1 represents non-persons and 1 represents persons, a threshold of 0 would normally be selected; however, the threshold can be set higher, say to 0.4, to reduce false positives. The actual threshold values for our code range between −25 and 45, because adapting the code to include the T-Channel descriptor changed the range of threshold values. The range of threshold values at which we found people using the adapted code is 4 to 40, which maps to approximately −0.1714 to 0.8571 on the original −1 to 1 scale. Lower thresholds allow more detected windows to be found, and higher thresholds reduce the number of detected windows. We tested several threshold values for every test run and recorded only the one that produced a low number of detected windows while still finding the person. This allowed us to conduct a fair comparison between mirrors.
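The quoted conversion is consistent with a linear rescaling of the raw range [−25, 45] onto [−1, 1]. Under that assumption, a one-line helper (hypothetical name) reproduces both figures:

```python
def to_unit_scale(t, lo=-25.0, hi=45.0):
    """Linearly map a raw threshold in [lo, hi] onto the -1 to 1 scale."""
    return 2.0 * (t - lo) / (hi - lo) - 1.0
```

`to_unit_scale(4)` gives about −0.1714 and `to_unit_scale(40)` about 0.8571.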

The mean, median, variance, and standard deviation of the threshold values give us insight into the detection sensitivity of the different mirrors. As expected from our experiments with mirror shapes and from their distortion as viewed inside a checkerboard mock-up (see Figs. 4 and 7), the smallest mirror, MirrorS, has the highest threshold variance, 74.3186, and the highest standard deviation, 8.6208. Not surprisingly, the BrassOrb shows the smallest variance, 13.6811, and standard deviation, 3.6811. Since the training windows are formed from BrassOrb data, this indicates that further analysis should match training windows to the mirror for best results. The threshold variances and standard deviations for MirrorM, MirrorL, and MirrorXL are all very similar.
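These summary statistics can be computed with Python's standard `statistics` module, as in the sketch below. The threshold list used with it is hypothetical, and the sample (n − 1) variance is an assumption, since the text does not say which estimator was used; `statistics.pvariance` would give the population variance instead.

```python
import statistics


def threshold_summary(thresholds):
    """Summary statistics of the per-image thresholds recorded for one mirror."""
    return {
        "mean": statistics.mean(thresholds),
        "median": statistics.median(thresholds),
        "variance": statistics.variance(thresholds),   # sample (n - 1) variance
        "stdev": statistics.stdev(thresholds),         # square root of the variance
    }
```

For the hypothetical thresholds `[4, 8, 10, 14]`, the mean and median are both 9 and the sample variance is 52/3.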

A detected window is simply a window in which a person is identified. For our 480 × 640 pixel images, we search 24668 windows in about 11 seconds on a 3.2 GHz Intel Core i5. Our code is not optimized to run on parallel cores; it was written to help us understand the process and results, not for real-time implementation. Each detected window is recorded as a row vector containing the coordinates and dimensions needed to plot it on the image, expressed relative to the original image dimensions.

The scale of a detected window is the size of the downsampled image relative to the original image. The number of detected windows per scale is an output of the code. We record only the range of scales, to verify our expectation of where the person should be detected given their distance from the camera. As it turns out, we detect people both near and far from the camera at different scales in the same test run, as can be seen from the different sized windows plotted in the results. We also detect the same person multiple times per scale. There is no conclusive evidence of how mirror shape correlates with the scale of detection; all mirrors seemed to detect people in the expected scale range. We note that in this application we do not expect much variation in a person's size in the image at distances between 5 and 15 feet. At distances of over 15 feet, the person appears small and was detected less than half the time.

Test results for MirrorS were 65.7% true positive, 34.3% false positive, and 20.8% false negative. For MirrorM, results were 69.7% true positive, 30.3% false positive, and 15.6% false negative. For MirrorL, results were 90% true positive, 9.6% false positive, and 18.4% false negative. Results for MirrorXL were 84.2% true positive, 15.8% false positive, and 26.7% false negative. Finally, for BrassOrb there were 38.5% true positive, 61.5% false positive, and 33.3% false negative.

5 Discussion and future work

The primary contribution of this work is the design and proof-of-concept demonstration of an infrared catadioptric imaging device with physics-based machine learning algorithms that can make effective use of inexpensive infrared imagers which have relatively small detector arrays. The specific goal was to interpret the scene inside of a shipping container at the point of detection, i.e. in an edge-computing paradigm, and thus make efficient use of expensive communications bandwidth. The histogram of oriented gradients and T-channel (HOG+) feature was implemented for human detection using infrared catadioptric images, and was shown to be effective for various mirror shapes.

While human detection with infrared cameras is not difficult at all if cost is not a consideration, here we are exploiting very inexpensive LWIR imagers that enable real-world applications such as monitoring shipping containers. The primary design challenge is being able to give coverage of the necessary container volume with an imager tucked up out of the way such that it will survive loading and unloading of the container. Moreover, the catadioptric imaging system described here uses reflective, rather than transmissive, optics for both cost and durability considerations.

Figure 12 shows that it is possible to detect distorted people captured on the edge of the reflector. With fewer than 600 training windows we correctly found people on the edge in both day and evening hours. Our system correctly classified human objects despite other hot objects in the scene. It correctly detected a person outside the door with the catadioptric system positioned in the top corner of the shipping container. From this position, we were able to find the person as soon as they opened the door. It is important to note that this position corresponds to the corner above the left door, as viewed from the front of the container. This is the better of the two top-corner positions because the doors are built such that the left door can only be opened after the right door has been opened.

Figure 12 also shows correct detections of two people near the edge of the reflector, one of them just below the camera, and of a person far from the camera. Although there were no training windows of women or children, the detector correctly found both in the scene purely based on the commonality of human shapes. Finally, this method correctly found two out of three people, as well as an out-of-focus person far from the camera.

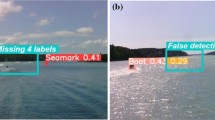

Incorrect results include people on the edge of the reflector, finding only one of three people, classifying a background object with a person-like shape as a person, and 13 false positives on the background in one image. Our first videos included Seek Thermal watermarks in the top right-hand corner of the frame, which were misclassified because of misleading intensity cues; this should not be a factor during field deployment.