Abstract

In this study, we proposed using Bayesian nonparametric quantile mixed-effects models (BNQMs) to estimate the nonlinear structure of quantiles in hierarchical data. Assuming that a nonlinear function representing a phenomenon of interest cannot be specified in advance, a BNQM can estimate the nonlinear function of quantile features using the basis expansion method. Furthermore, BNQMs adjust the smoothness to prevent overfitting by regularization. We also proposed a Bayesian regularization method using Gaussian process priors for the coefficient parameters of the basis functions, and showed that the problem of overfitting can be reduced when the number of basis functions is excessive for the complexity of the nonlinear structure. Although computational cost is often a problem in quantile regression modeling, BNQMs ensure the computational cost is not too high using a fully Bayesian method. Using numerical experiments, we showed that the proposed model can estimate nonlinear structures of quantiles from hierarchical data more accurately than the comparison models in terms of mean squared error. Finally, to determine the cortisol circadian rhythm in infants, we applied a BNQM to longitudinal data of urinary cortisol concentration collected at Kurume University. The result suggested that infants have a bimodal cortisol circadian rhythm before their biological rhythms are established.

Similar content being viewed by others

References

Betancourt, M. (2020). Robust gaussian process modeling. https://betanalpha.github.io/assets/case_studies/gaussian_processes.html. Accessed on 30 Jan 2021

de Boor, C. (2001) A practical guide to splines; rev. ed. Applied Mathematical Sciences, Springer, Berlin. https://cds.cern.ch/record/1428148

Duane, S., Kennedy, A. D., Pendleton, B. J., & Roweth, D. (1987). Hybrid monte carlo. Physics Letters B, 195(2), 216–222.

Fenske, N., Fahrmeir, L., Hothorn, T., Rzehak, P., & Höhle, M. (2013). Boosting structured additive quantile regression for longitudinal childhood obesity data. The International Journal of Biostatistics, 9(1), 1–18.

Galarza, C., Lachos Davila, V., Barbosa Cabral, C., & Castro Cepero, L. (2017). Robust quantile regression using a generalized class of skewed distributions. Statistics, 6(1), 113–130.

Galarza, C. E., Castro, L. M., Louzada, F., & Lachos, V. H. (2020). Quantile regression for nonlinear mixed effects models: A likelihood based perspective. Statistical Papers, 61(3), 1281–1307.

Gelman, A., Vehtari, A., Simpson, D., Margossian, CC., Carpenter, B., Yao, Y., Kennedy, L., Gabry, J., Bürkner, PC., & Modrák, M. (2020) Bayesian workflow. ar**v preprint ar**v:2011.01808

Geraci, M. (2019). Additive quantile regression for clustered data with an application to children’s physical activity. Journal of the Royal Statistical Society: Series C (Applied Statistics), 68(4), 1071–1089.

Geraci, M. (2019). Modelling and estimation of nonlinear quantile regression with clustered data. Computational Statistics & Data Analysis, 136, 30–46.

Geraci, M., & Bottai, M. (2007). Quantile regression for longitudinal data using the asymmetric laplace distribution. Biostatistics, 8(1), 140–154.

Geraci, M., & Bottai, M. (2014). Linear quantile mixed models. Statistics and Computing, 24(3), 461–479.

Hoffman, M. D., & Gelman, A. (2014). The no-u-turn sampler: adaptively setting path lengths in hamiltonian monte carlo. Journal of Machine Learning Research, 15(1), 1593–1623.

Kawano, S., & Konishi, S. (2007). Nonlinear regression modeling via regularized gaussian basis functions. Bulletin of Informatics and Cybernetics, 39, 83.

Kidd, S., Midgley, P., Nicol, M., Smith, J., & McIntosh, N. (2005). Lack of adult-type salivary cortisol circadian rhythm in hospitalized preterm infants. Hormone Research in Pædiatrics, 64(1), 20–27.

Kinoshita, M., Iwata, S., Okamura, H., Saikusa, M., Hara, N., Urata, C., et al. (2016). Paradoxical diurnal cortisol changes in neonates suggesting preservation of foetal adrenal rhythms. Scientific Reports, 6, 35553.

Koenker, R. & Bassett, Jr G. (1978) Regression quantiles. Econometrica: Journal of the Econometric Society, 46(1), 33–50.

Kozumi, H., & Kobayashi, G. (2011). Gibbs sampling methods for bayesian quantile regression. Journal of Statistical Computation and Simulation, 81(11), 1565–1578.

Krieger, D. T., Allen, W., Rizzo, F., & Krieger, H. P. (1971). Characterization of the normal temporal pattern of plasma corticosteroid levels. The Journal of Clinical Endocrinology & Metabolism, 32(2), 266–284.

Laird, N. M., & Ware, J. H. (1982). Random-effects models for longitudinal data. Biometrics, 38(4), 963–974.

Lindstrom, M. J., & Bates, D. M. (1990). Nonlinear mixed effects models for repeated measures data. Biometrics, 46(3), 673–687.

Pinheiro, J. C., & Bates, D. M. (1995). Approximations to the log-likelihood function in the nonlinear mixed-effects model. Journal of Computational and Graphical Statistics, 4(1), 12–35.

Stan Development Team (2020) Stan modeling language users guide and reference manual, version 2.25.0. http://mc-stan.org/

Takeuchi, I., Le, Q. V., Sears, T. D., & Smola, A. J. (2006). Nonparametric quantile estimation. Journal of Machine Learning Research, 7(Jul), 1231–1264.

Waldmann, E., Kneib, T., Yue, Y. R., Lang, S., & Flexeder, C. (2013). Bayesian semiparametric additive quantile regression. Statistical Modelling, 13(3), 223–252.

de Weerth, C., Zijl, R. H., & Buitelaar, J. K. (2003). Development of cortisol circadian rhythm in infancy. Early Human Development, 73(1–2), 39–52.

Weitzman, E. D., Fukushima, D., Nogeire, C., Roffwarg, H., Gallagher, T. F., & Hellman, L. (1971). Twenty-four hour pattern of the episodic secretion of cortisol in normal subjects. The Journal of Clinical Endocrinology & Metabolism, 33(1), 14–22.

Wichitaksorn, N., Choy, S. B., & Gerlach, R. (2014). A generalized class of skew distributions and associated robust quantile regression models. Canadian Journal of Statistics, 42(4), 579–596.

Yang, Y., Wang, H. J., & He, X. (2016). Posterior inference in bayesian quantile regression with asymmetric laplace likelihood. International Statistical Review, 84(3), 327–344.

Yu, K., & Moyeed, R. A. (2001). Bayesian quantile regression. Statistics & Probability Letters, 54(4), 437–447.

Yue, Y. R., & Rue, H. (2011). Bayesian inference for additive mixed quantile regression models. Computational Statistics & Data Analysis, 55(1), 84–96.

Acknowledgements

This research was supported in part by the Japan Society for the Promotion of Science 20K11707 for YA and 20H00102 for OI.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Setting of hyperpriors

Here, we describe the method of setting the prior for \(\alpha \) and \(\rho \), which are parameters of a GP prior, based on Gelman et al. (2020) and Betancourt (2020).

1.1 Prior predictive checks

In this study, when using a GP prior for the coefficient parameter vector \(\varvec{\beta }=(\beta _1, \cdots , \beta _m)^{\top } \) of the basis function \(\varvec{\phi }(t)=(\phi _1(t), \cdots , \phi _m(t))^{\top }\), the following RBF kernel was used as the kernel function:

By assuming the priors of the hyperparameters \(\alpha \) and \(\rho \) \((\alpha , \rho > 0)\) defined in the RBF kernel, the posteriors of \(\alpha \) and \(\rho \) can be estimated by the MCMC method.

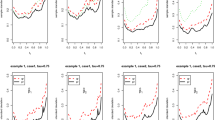

The hyperparameter \(\alpha \) determines the amplitude of the sampled function f(t), and \(\rho \) determines the smoothness of f(t), where \(f(t)=\varvec{\beta }^{\top } \varvec{\phi }(t)\). Note, however, that it is not f(t) but \(\varvec{\beta }\) that is sampled directly from the GP prior. Figure 8 shows the change in amplitude of the function f(t) due to changes in the value of \(\alpha \), and Fig. 9 shows the change in smoothness of the function f(t) due to changes in \(\rho \). For these prior predictive checks, spline-based Gaussian basis functions are used as basis functions, and the number of basis functions is \(m=15\). Each sample \(t_i (i = 1, \cdots , 500)\) generated from the uniform distribution U(0, 1) was used as the input of the basis functions.

The results of Figs. 8 and 9 can be used to select a prior for hyperparameters in a GP, and Gelman et al. (2020) notes that these prior predictive checks constitute a useful method for understanding the effects of priors.

Here, the above setting of the range of input t of \(0 \le t \le 1 \) corresponds to the range of time points in the numerical experiment in Sect. 3 and the analysis of infant cortisol data in Sect. 4. In other words, the prior predictive check of this section is the prior predictive check for the numerical experiment in Sect. 3 and the analysis of infant cortisol data in Sect. 4. (In the analysis of infant cortisol data, normalization processing was performed in advance so that data with a maximum value of 1 and minimum value 0 were obtained.)

1.2 Select of prior for \(\alpha \)

In this study, we used the following prior for \(\alpha \):

where \(N_{+}(0, \sigma _\alpha ^2)\) is a half-normal distribution. In particular, we used \( \sigma _ \alpha = 1 \) in the numerical experiment (Sect. 3) and the analysis of infant cortisol data (Sect. 4). Figure 10 shows the probability density function of \(\alpha \sim N_{+}(0, 1) \).

From Fig. 10, it can be inferred that the prior \(\alpha \sim N _ {+} (0, 1) \) gives the prior information that the value of \( \alpha \) will be approximately in the range less than 2. From the amplitudes of the estimated curves for the case of \(0< \alpha < 2 \) in Fig. 8, we consider that this is a weakly informative assumption that is appropriate for samples of numerical experiments and infant cortisol data (after normalization).

1.3 Select of prior for \(\rho \)

In this study, we used the following prior for \(\rho \), referring to Betancourt (2020):

where \(IG(g_a, g_b)\) is the inverse gamma distribution. In the GP, if the value of \(\rho \) is too small, overfitting occurs, and if the value of \(\rho \) is too large, non-identifiability occurs. Therefore, the inverse gamma distribution is suitable because it can suppress both the upper and lower limits of \(\rho \). In particular, the Monte Carlo simulation in Sect. 3 and the analysis of infant cortisol data in Sect. 4 used \(g_a = 6.28\) and \(g_b = 1.35\), respectively, and the method for setting these values is described in the following, based on Betancourt (2020). The prior predictive check shown in Fig. 9 confirms the smoothness of the function for various values of \( \rho \), and we can use this prior predictive check to determine the lower l and upper u limits for each value of \( \rho \). In this study, \(l=0.1\) and \(u =0.7\) were used. Betancourt (2020) expressed l as the lower limit and u as the upper limit using the lower probability and upper probability as

The parameters simultaneously satisfying these two conditions are defined as \(g_a\) and \(g_b\). By solving this optimization problem of the simultaneous equations, \(g_a \approx 6.28\) and \(g_b \approx 1.35\) are obtained.

HMC and NUTS

Let \(\varvec{\theta }=(\varvec{\beta }^{\top },\varvec{b}^{\top }, \alpha _f, \alpha _r, \rho _f, \rho _r, \sigma ,\varvec{v}^{\top })^{\top }\) be the vector of the unknown parameters in a BNQM (see equation (15)). Using \(\varvec{\theta }\), the posterior can be rewritten as \(p(\varvec{\theta }|\varvec{y})\). It is necessary to estimate the unknown parameter vector \(\varvec{\theta }\) in the posterior inference. Here, we summarize the algorithm of HMC and NUTS when there is a d-dimensional unknown parameter \(\varvec{\theta }=(\theta _1, \cdots , \theta _d)^{\top }\) for any Bayesian model, based on Hoffman and Gelman (2014) and the Stan reference manual (Stan Development Team 2020).

1.1 HMC

Step 1: Initial setting

-

Setting for \(t = 1\). Initialize the parameters \(\varvec{\theta }^{(1)}\) and set \(\epsilon , L, \varvec{\Sigma }\). (Here, \(\epsilon \) and L are the width of one small discrete transition and the number of repetitions in the leapfrog integrator (step 3), respectively, and \(\varvec{\Sigma }\) is the covariance matrix of the multivariate normal distribution used to generate random samples.)

Step 2: Random sample generation

-

Draw \(\varvec{\rho }^{(t)}\) from the following d-dimensional multivariate normal distribution:

$$\begin{aligned} \varvec{\rho }^{(t)} \sim N(\varvec{0}, \varvec{\Sigma }). \end{aligned}$$

Step 3: Leapfrog Integrator

-

Set \(\varvec{\rho }=\varvec{\rho }^{(t)}, \varvec{\theta }=\varvec{\theta }^{(t)}\) and repeat the following updates L times:

$$\begin{aligned} \varvec{\rho }= & {} \varvec{\rho }- \frac{\epsilon }{2} \frac{\partial -\log p(\varvec{\theta }|\varvec{y})}{\partial \varvec{\theta }},\\ \varvec{\theta }= & {} \varvec{\theta }+ \epsilon \varvec{\Sigma }\rho \\ \varvec{\rho }= & {} \varvec{\rho }- \frac{\epsilon }{2} \frac{\partial -\log p(\varvec{\theta }|\varvec{y})}{\partial \varvec{\theta }}. \end{aligned}$$Then, denote the final \(\varvec{\theta }\) and \(\varvec{\rho }t\) by \(\varvec{\theta }^*\) and \(\varvec{\rho }^*\), respectively.

Step 4: Metropolis accept step

-

Accept the candidate \((\varvec{\theta }^{(t+1)}=\varvec{\theta }^{*})\) with the following probability and otherwise maintain the current state \((\varvec{\theta }^{(t+1)}=\varvec{\theta }^{(t)})\):

$$\begin{aligned} \min (1, r), \end{aligned}$$where \(r = \exp \{\log (\varvec{\rho },\varvec{\theta })-\log (\varvec{\rho }^*, \varvec{\theta }^*)\}\).

Step 5: Determine whether to continue HMC

-

If \(t = T\) (where T is the number of HMC iterations.), end sampling; otherwise set \(t = t+1\) and return to step 2.

1.2 NUTS

The HMC algorithm in the previous section has parameters \(\epsilon \), L, and \(\varvec{\Sigma }\), which need to be set and affect the sampling efficiency. The advantage of the HMC algorithm is that the average transition distance can be increased.

For the same L, increasing \(\epsilon \) increases the transition distance in the leapfrog integrator but decreases the acceptance rate. Increasing L and decreasing \(\epsilon \) increases the transition distance and acceptance rate but increases the computational cost. Depending on the value of L, the transition may make a U-turn, resulting in a shorter travel distance. For such situations, Hoffman and Gelman (2014) proposed using the NUTS algorithm, an extension of the HMC algorithm. Their proposed algorithm uses half the squared distance between the current parameter \(\varvec{\theta }\) and the candidate point \(\varvec{\theta }^*\) to determine whether a transition makes a U-turn:

Specifically, the criterion that the first derivative with respect to time t of half the squared distance becomes less than 0 (meaning that half the squared distance does not increase even if the number of updates L is increased) is used:

Thus, the NUTS algorithm can automatically set L. For more details on the algorithm, see Hoffman and Gelman (2014) and the Stan reference manual (Stan Development Team 2020).

Rights and permissions

About this article

Cite this article

Tanabe, Y., Araki, Y., Kinoshita, M. et al. Bayesian nonparametric quantile mixed-effects models via regularization using Gaussian process priors. Jpn J Stat Data Sci 5, 241–267 (2022). https://doi.org/10.1007/s42081-022-00158-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s42081-022-00158-y