Abstract

Dangerous driving behavior is a major contributing factor to road traffic accidents. Identifying and intervening in drivers’ unsafe behaviors is therefore crucial for preventing accidents and ensuring road safety. However, many existing driver-monitoring methods rely on computer vision, which can invade privacy. This paper proposes a radar-based deep learning method for analyzing driver behavior. The method combines an FMCW radar with a TOF radar to identify five driving behaviors: normal driving, head up, head twisting, picking up the phone, and dancing to music. The proposed model, RFDANet, has two parallel, relatively independent forward-propagation branches that take the range-Doppler information from the FMCW radar and the position information from the TOF radar as inputs. After CNN-based feature extraction, a branch-level attention mechanism adjusts the weight of each branch, and an LSTM performs the final behavior recognition. The method is validated on data collected during actual driving. The average recognition accuracy across the five behaviors is 94.5%, which confirms that the proposed method is effective for detecting driver behavior.



Introduction

Driver behavior is a vital factor in driving safety. Road traffic accidents cause approximately 1.35 million deaths each year and are one of the leading causes of death globally [1]. Various abnormal situations can arise on the road [2,3,4], and approximately 74% of these accidents are linked to erratic driving behavior [5]. Understanding driver behavior can therefore help reduce the accident rate significantly.

Extensive research on driver behavior has been conducted in recent decades, focusing on driving habits [6] (categorized as aggressive, normal, and cautious), driver emotions [7], dangerous driving behavior [8,9,10], and related aspects. These studies require capturing information about the driver’s state [11], including head posture [12], eye state [13], hand movements [14], foot movements [15, 16], and physiological signals [17, 18].

Cameras are widely used as detection devices because they are intuitive. Video-based behavior recognition methods take image sequences as input and combine intra-frame information with inter-frame motion information to derive features for representation and classification. In [19], data on the driver’s head posture, hand movements, foot movements, and view ahead were collected to detect distracting driver activity. In [20], cameras monitored the driver’s eyes, head, and mouth to determine whether the driver was fatigued. In [21], ten driving behaviors, including safe driving, drinking, and talking to passengers, were identified effectively by segmenting video and applying deep convolutional neural networks. However, video-based approaches may invade the user’s privacy and may therefore be unsuitable for installation in family cars.

In addition to vision-based feature extraction methods, physiological signals such as electroencephalography (EEG) [22] and electrooculography (EOG) [23] have been employed for real-time driver status monitoring. In [24], six traffic-flow conditions were designed in a simulated car-following experiment, and a two-layer EEG-based driving behavior recognition system was proposed, achieving an average accuracy of 69.5% and a maximum accuracy of 83.5%. In [25], EOG signals and a one-dimensional convolutional neural network were applied to driver behavior recognition, achieving an average accuracy of 80%. Wearable acoustic sensors, heart-rate wristbands, and wearable EMG sensors are also widely used in Driver Activity Recognition (DAR) systems. However, the inconvenience of wearing such devices limits their broader application.

In recent years, millimeter-wave radar has been widely used for human movement recognition, thanks to its robustness to environmental influences (such as sunlight), its reliability [26], its privacy protection [27, 28], and its high accuracy [29, 30]. In [31, 32], linear frequency-modulated continuous-wave (FMCW) radar was used effectively to recognize typical everyday movements such as walking, sitting, and standing up. For driver activity recognition, a study [33] that evaluated FMCW radar for driving behavior recognition obtained the anticipated results. The use of radar for human movement recognition is growing rapidly, and numerous studies are investigating its potential. This paper therefore presents a device that fuses two radar signals to detect driving behavior accurately and conveniently.

As for DAR methods, the continuous development of deep learning (DL) has produced state-of-the-art results in object detection, classification, generation, and segmentation, and DL has been successfully applied to a number of driver monitoring tasks [34,35,36]. However, deep learning networks can still be improved to provide better recognition results. This paper introduces the Radar Fusion Driver Activity Recognition Neural Net (RFDANet) for driver activity recognition, which combines two different types of radar (FMCW and TOF) and focuses on five common driving activities while preserving user privacy. By preprocessing the radar data instead of extracting features manually, RFDANet addresses DAR effectively and achieves strong results with a pre-trained CNN model.

The main contributions of this work are as follows:

(1) A multimodal data fusion method based on non-intrusive radar is proposed for DAR. Fusing the two radars improves recognition accuracy by approximately 10% compared with a single sensor.

(2) A novel deep learning approach to driver behavior identification is proposed that combines multi-level attention fusion with a hybrid CNN-LSTM model. This branch-attention-based multimodal fusion model automatically extracts features and weighs the features of different modalities, improving DAR performance.

(3) Experiments validate the proposed model on the driving behavior of unknown persons. The results show that radar-based detection of driver behavior is highly promising and that the attention-based multimodal fusion model outperforms other DNN models.

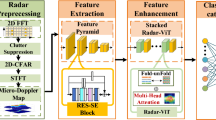

The framework of this paper is illustrated in Fig. 1. The rest of the article is organized as follows. “Measurement setup” introduces the working principles of the FMCW radar and the TOF radar. “Methods” presents the data processing methods and the developed RFDANet. “Experiment” describes the experimental verification of the proposed method. “Analysis of results and discussion” analyzes the results, and “Discussion” addresses open issues. The final section draws conclusions.

Measurement setup

FMCW radar system

FMCW radar systems transmit chirp signals. The frequency of a chirp increases or decreases linearly over time, and a set of chirps forms a frame, which serves as the radar’s processing observation window. System performance depends on chirp parameters such as the frequency slope and the sweep bandwidth.

The FMCW radar signal employed in this paper can be represented by a simplified sawtooth-modulated waveform, as shown in Fig. 2. The period of the chirp transmitted by the antenna is the sum of the idle time and the ramp end time. By default, the chirp period is 160 μs, with an idle time of 100 μs and a ramp end time of 60 μs. The maximum sweep bandwidth of 4 GHz yields a range resolution of \(\Delta R = c/\left( {2B} \right) = 3.75\) cm, where \(c\) is the speed of light. The transmit signal can be mathematically expressed as follows:
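Assuming unit amplitude and a single sawtooth ramp \(0 \le t \le T\), a standard form of the transmitted chirp consistent with the symbols defined below is

```latex
s_{T}(t) = \cos\left( 2\pi f_{c} t + \pi \frac{B}{T} t^{2} \right), \qquad 0 \le t \le T \tag{1}
```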

where \(B\) is the bandwidth of transmitted signal, \(f_{c}\) indicates the carrier frequency, and \(T\) is the sweep time. Assuming an object at a distance of \(R\) from the radar and moving with a radial velocity of \(v\), the reflected signal is shown as follows:
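Treating the echo as a delayed copy of the transmitted chirp, scaled by an attenuation factor \(\alpha\) (an assumption introduced here to account for path loss and target reflectivity), a standard form is

```latex
s_{R}(t) = \alpha \cos\left( 2\pi f_{c} \left( t - \tau \right) + \pi \frac{B}{T} \left( t - \tau \right)^{2} \right) \tag{2}
```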

where \(\tau = 2\left( {R + vt} \right)/c\) is the round-trip delay and \(c\) is the speed of light. In the radar sensor, by mixing the transmitted signal with the received signal, the mixed output signal can be obtained as follows:
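Assuming a unit-amplitude transmitted chirp and an echo attenuation factor \(\alpha\) (both assumptions of this standard model), mixing multiplies the two chirps, and low-pass filtering keeps only the difference-frequency term:

```latex
s_{IF}(t) = \frac{\alpha}{2} \cos\left( 2\pi \frac{B}{T} \tau t + 2\pi f_{c} \tau - \pi \frac{B}{T} \tau^{2} \right) \tag{3}
```

Substituting \(\tau = 2\left( {R + vt} \right)/c\) and neglecting second-order terms gives a beat frequency \(f_{b} \approx 2BR/\left( {cT} \right) + 2f_{c} v/c\): the first term encodes the range and the second the Doppler shift.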

As Eq. (3) shows, the mixed signal is a beat signal. The sampled mixed signal is stored on the computer and subsequently processed to obtain the micro-Doppler signature of the driving behavior.
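The beat-signal model can be checked numerically. The sketch below is a minimal illustration, not the authors' processing chain: it assumes an ideal complex (I/Q) beat signal, uses the 4 GHz bandwidth and 60 μs ramp given in this section and the 77 GHz band stated under “Experiment”, and assumes 256 fast-time samples spread evenly over the ramp (the sample count matches the 128 × 256 matrix in “Methods”, but the ADC rate is hypothetical). Because the samples span the whole ramp, each FFT bin corresponds to one range-resolution cell \(c/\left( {2B} \right)\).

```python
import numpy as np

C = 3e8  # speed of light (m/s)


def simulate_beat(R, v=0.0, B=4e9, T=60e-6, fc=77e9, n_samples=256):
    """Ideal complex beat signal for one chirp, using the phase of Eq. (3).

    Assumes the n_samples ADC samples are spread evenly over the ramp T.
    """
    S = B / T                                   # chirp slope (Hz/s)
    t = np.arange(n_samples) * (T / n_samples)  # fast-time sample instants
    tau = 2 * (R + v * t) / C                   # round-trip delay
    phase = 2 * np.pi * (S * tau * t + fc * tau - 0.5 * S * tau ** 2)
    return np.exp(1j * phase)


def estimate_range(beat, B=4e9):
    """Range from the peak of the fast-time FFT (positive beat frequencies only)."""
    n = len(beat)
    k = int(np.argmax(np.abs(np.fft.fft(beat))[: n // 2]))
    # FFT bin k <-> beat frequency k / T <-> range k * c / (2B)
    return k * C / (2 * B)


range_resolution = C / (2 * 4e9)  # c / (2B), about 3.75 cm
print(range_resolution)
print(estimate_range(simulate_beat(0.6)))
```

A target at 0.6 m falls exactly on FFT bin 16 here; targets between bins are quantized to the nearest 3.75 cm cell unless the spectrum is zero-padded or interpolated.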

TOF radar system

The TOF radar measures distance using homodyne (zero-difference) detection, that is, by measuring the correlation between the reflected light and a reference signal. Specifically, it transmits a near-infrared optical signal modulated by a sinusoid. The signal is reflected by the target surface and received by an infrared detector, and the target distance is determined from the time delay of the received signal relative to the transmitted one. Figure 3 illustrates this principle.

Assume that the transmitted infrared optical signal is \(g\left( t \right) = a\cos\left( {2\pi f_{0} t} \right)\), with amplitude \(a\) and modulation frequency \(f_{0}\). The received optical signal \(s\left( t \right)\) is then:
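Under the usual continuous-wave model (the sign convention of the phase term is an assumption), this is

```latex
s(t) = a_{r} \cos\left( 2\pi f_{0} t - \varphi \right) + b
```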

where \(a_{r}\) is the amplitude of the reflected (received) signal light, \(\varphi\) is the phase delay caused by the distance to the target, and \(b\) is the offset caused by ambient light.

The correlation equation between the transmitted and received light signals is shown as follows:
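Writing the correlation as \(C\left( \tau \right)\) (a symbol introduced here to avoid a clash with the speed of light \(c\)), taking the received signal as \(s\left( t \right) = a_{r}\cos\left( {2\pi f_{0} t - \varphi } \right) + b\), and averaging over an integration time \(T_{0}\), the ambient offset \(b\) averages out against the zero-mean reference \(g\left( t \right)\), which gives

```latex
C(\tau) = \lim_{T_{0} \to \infty} \frac{1}{T_{0}} \int_{-T_{0}/2}^{T_{0}/2} s(t)\, g(t + \tau)\, dt
        = \frac{a\, a_{r}}{2} \cos\left( 2\pi f_{0} \tau + \varphi \right)
```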

where \(\tau\) is the time delay.

To recover the amplitude \(a_{r}\) and phase \(\varphi\) of the reflected light signal, four sequential amplitude images are generally acquired, defined as:
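Assuming the sinusoidal correlation \(C\left( \tau \right) = \left( {a\,a_{r}/2} \right)\cos\left( {2\pi f_{0} \tau + \varphi } \right)\) of the standard continuous-wave model, a common four-phase (four-bucket) scheme samples it at quarter-period delays, from which the phase and amplitude follow in closed form:

```latex
A_{i} = C\left( \frac{i}{4 f_{0}} \right), \quad i = 0, 1, 2, 3, \qquad
\varphi = \operatorname{atan2}\left( A_{3} - A_{1},\; A_{0} - A_{2} \right), \qquad
a_{r} = \frac{\sqrt{\left( A_{3} - A_{1} \right)^{2} + \left( A_{0} - A_{2} \right)^{2}}}{a}
```

Any constant offset present in the measured samples cancels in the differences \(A_{3} - A_{1}\) and \(A_{0} - A_{2}\).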

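The distance \(d\) between the driver and the TOF radar follows from the phase delay as \(d = c\varphi /\left( {4\pi f_{0} } \right)\), where \(c\) is the speed of light; distances are unambiguous only up to \(c/\left( {2f_{0} } \right)\).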
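A minimal numerical check of four-phase demodulation, assuming the noise-free sinusoidal correlation model and a hypothetical modulation frequency \(f_{0} = 20\) MHz (the paper does not state \(f_{0}\) in this section). The optional constant offset stands in for ambient light and cancels in the differences.

```python
import numpy as np

C_LIGHT = 299_792_458.0  # speed of light (m/s)


def four_phase_samples(d, f0=20e6, a=1.0, a_r=0.4, offset=0.0):
    """Correlation samples A_0..A_3 at delays i / (4 f0) for a target at distance d."""
    phi = 4 * np.pi * f0 * d / C_LIGHT            # phase delay of the round trip
    taus = np.arange(4) / (4 * f0)
    return 0.5 * a * a_r * np.cos(2 * np.pi * f0 * taus + phi) + offset


def demodulate(A, f0=20e6, a=1.0):
    """Recover distance, reflected amplitude and phase from four samples."""
    A0, A1, A2, A3 = A
    phi = np.arctan2(A3 - A1, A0 - A2) % (2 * np.pi)
    a_r = np.hypot(A3 - A1, A0 - A2) / a
    d = C_LIGHT * phi / (4 * np.pi * f0)          # d = c * phi / (4 pi f0)
    return d, a_r, phi


d_est, a_r_est, _ = demodulate(four_phase_samples(0.6, offset=0.1))
print(round(d_est, 6), round(a_r_est, 6))
# Phases wrap every 2*pi, i.e. every c / (2 f0) metres (about 7.5 m here).
print(C_LIGHT / (2 * 20e6))
```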

The TOF radar measures distance from the time of flight, whereas the FMCW radar derives distance from a frequency difference. Both capture driving behavior information, but in different domains, namely time and frequency. The TOF radar is effective at measuring static driving behavior information, while the FMCW radar is better at detecting dynamic information. Integrating the two sources therefore allows driving behavior to be identified more precisely and efficiently.

Methods

FMCW radar data processing

The acquired FMCW radar echo signal undergoes I/Q quadrature demodulation, sampling, and multi-cycle combination, yielding a 128 × 256 data matrix per cycle. The rows correspond to 128 chirp cycles of echo data, and the columns to 256 frequency samples per chirp. However, data from actual vehicle driving often contain static clutter and DC drift, which must be removed or suppressed before further processing. In this study, an MTI filter is used to eliminate DC clutter and static clutter from the data.

The MTI filter enhances the detection of driving actions by removing DC clutter, i.e., the zero-velocity component, from the Doppler spectrum. Although motion is the key indicator of driving behavior, the static information between consecutive movements can also help distinguish behaviors. The MTI filter must therefore suppress static clutter while retaining the driver’s body information. To this end, it assigns a weight of 1 or 0.5 to static interference depending on the detection distance: DC clutter representing self-interference is filtered with a weight of 1, whereas static or moving data belonging to the target are filtered with a weight of 0.5.
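The weighting rule can be read as subtracting a weighted estimate of the static (zero-Doppler) component in each range bin. The sketch below is one possible interpretation under stated assumptions, not the authors' filter: the 128 × 256 frame is range-transformed along the 256 fast-time samples, the static component is estimated as the slow-time mean of each range bin, and the self-interference region is taken to be the first n_self_bins range bins, a hypothetical cutoff.

```python
import numpy as np


def range_profiles(frame):
    """Range FFT along fast time; frame is (n_chirps, n_samples), e.g. 128 x 256."""
    return np.fft.fft(frame, axis=1)


def weighted_mti(profiles, n_self_bins=4, w_self=1.0, w_target=0.5):
    """Subtract a weighted static component from each range bin.

    Bins below n_self_bins (hypothetical cutoff) are treated as radar
    self-interference and get weight 1; the remaining bins get weight 0.5,
    so part of the driver's static body return is retained.
    """
    static = profiles.mean(axis=0, keepdims=True)   # zero-Doppler estimate per bin
    weights = np.full(profiles.shape[1], w_target)
    weights[:n_self_bins] = w_self
    return profiles - weights * static


rng = np.random.default_rng(0)
# A purely static scene: every chirp returns the same samples.
frame = np.tile(rng.standard_normal(256) + 1j * rng.standard_normal(256), (128, 1))
filtered = weighted_mti(range_profiles(frame))
print(np.abs(filtered[:, :4]).max())  # self-interference bins are cancelled
```

Subtracting the slow-time mean is the simplest static-clutter canceller; a two-pulse canceller (differencing consecutive chirps) is a common alternative but removes all static body information.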

To obtain more Doppler features related to driving behavior in the time–frequency domain, the MTI-filtered FMCW radar data are transformed with the Short-Time Fourier Transform (STFT). The STFT applies a sliding window before the Fourier transform (computed with the FFT), so each analysis covers only a short time span. Assuming the signal is stationary within this span, the STFT is
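in its standard continuous-time form, with \(s\left( \cdot \right)\) the processed radar signal and \(w\left( \cdot \right)\) the window function:

```latex
\mathrm{STFT}(t, f) = \int_{-\infty}^{\infty} s(u)\, w(u - t)\, e^{-j 2\pi f u}\, du
```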

Here, the processed radar signal is denoted \(s\left( \cdot \right)\) and the window function \(w\left( \cdot \right)\). Although the STFT is useful for characterizing how a signal is distributed in time and frequency, it trades temporal resolution against frequency resolution: a shorter window improves temporal resolution, while a longer window improves frequency resolution. For the five driving behaviors studied here, a window length of 10 is chosen. As illustrated in Fig. 4, the time–frequency spectrogram obtained after MTI and STFT is free of DC and static clutter and reveals a clear Doppler signature.
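A minimal NumPy sketch of the spectrogram step, assuming a complex slow-time signal (for example, one range bin across chirps after MTI), a Hann window of length 10, a hop of one sample, and a slow-time sampling rate of 6250 Hz, i.e. one chirp per 160 μs period. The window type, hop, and sampling rate are assumptions. A two-sided, fftshift-ed spectrum keeps approaching (positive) and receding (negative) Doppler separate.

```python
import numpy as np


def stft(x, win_len=10, hop=1, fs=1.0):
    """Two-sided STFT of a complex signal; returns (freqs, times, spectrum)."""
    x = np.asarray(x)
    w = np.hanning(win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    # Each column is one windowed segment of the signal.
    frames = np.stack([x[i * hop:i * hop + win_len] * w for i in range(n_frames)], axis=1)
    spectrum = np.fft.fftshift(np.fft.fft(frames, axis=0), axes=0)
    freqs = np.fft.fftshift(np.fft.fftfreq(win_len, d=1.0 / fs))
    times = (np.arange(n_frames) * hop + win_len / 2) / fs
    return freqs, times, spectrum


fs = 1 / 160e-6                          # one chirp every 160 us -> 6250 Hz slow-time rate
n = np.arange(200)
x = np.exp(2j * np.pi * 1250 * n / fs)   # synthetic Doppler tone at +1250 Hz
freqs, times, S = stft(x, fs=fs)
spectrogram_db = 20 * np.log10(np.abs(S) + 1e-12)
print(freqs[np.argmax(np.abs(S[:, 0]))])
```

With only 10 samples per window, the Doppler bins are fs/10 = 625 Hz apart, which illustrates the resolution trade-off described above.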

To improve the efficiency of the subsequent classification model and reduce its size, the network takes only one-dimensional signals as input. The spectrograms produced by MTI and STFT are therefore reduced with principal component analysis (PCA), which removes redundant features while preserving the most important dimensions of the feature vectors.

Suppose the spectrogram is a two-dimensional set of feature vectors \(P\). PCA transforms \(P\) into a one-dimensional representation \(Q\).

PCA finds the linear map \(k\) that maximizes the following expression:
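With the unit-norm constraint that PCA conventionally imposes (stated here as an assumption, since some constraint is needed for the maximum to exist) and \(\bar{P}\) denoting the mean feature vector, the objective and the resulting projection are

```latex
k^{*} = \arg\max_{\lVert k \rVert_{2} = 1} \; k^{\mathsf{T}} \operatorname{cov}(P)\, k,
\qquad
Q = {k^{*}}^{\mathsf{T}} \left( P - \bar{P} \right)
```

The maximizer is the eigenvector of \(cov(P)\) with the largest eigenvalue, and that eigenvalue equals the variance of \(Q\).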

where \(cov(P)\) is the covariance matrix of the spectrogram feature vectors.
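A minimal NumPy sketch of this reduction. It assumes that each spectrogram time frame is one observation and each Doppler bin one variable, so projecting onto the leading eigenvector turns a (bins × frames) spectrogram into a one-dimensional sequence over time; this orientation is our assumption, and the random spectrogram only stands in for real data.

```python
import numpy as np


def pca_1d(P):
    """Project a (n_bins, n_frames) spectrogram onto its first principal component."""
    X = P.T                                  # one row per time frame
    Xc = X - X.mean(axis=0)                  # centre each Doppler bin
    cov = np.cov(Xc, rowvar=False)           # (n_bins, n_bins) covariance
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigenvalues in ascending order
    k = eigvecs[:, -1]                       # unit vector maximising k^T cov k
    Q = Xc @ k                               # one value per time frame
    return Q, k, eigvals[-1]


rng = np.random.default_rng(1)
P = rng.random((10, 191))                    # e.g. 10 Doppler bins x 191 frames
Q, k, lam = pca_1d(P)
print(Q.shape, np.var(Q, ddof=1), lam)       # the variance of Q equals the top eigenvalue
```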

TOF radar data processing

The TOF radar data \(x,y,z\) cover several regions, such as the driver and the seat backrest, but only the target region, i.e., the driver, is of interest. This paper therefore uses threshold segmentation to divide the image pixels into regions of different grey levels: because the grey values of target and background pixels differ clearly, setting a suitable threshold \(T\) and keeping the pixels with \(I\left( {x,z} \right) > T\) extracts the target regions. In addition, the captured TOF data may contain many zero points caused by reflected light; these points carry no useful information and are removed to streamline processing. Figure 5 shows the resulting output, with unnecessary data omitted to reduce the computational workload.
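A minimal sketch of the two filtering steps, assuming the TOF frame is given as an (N, 3) array of \(x, y, z\) points with one grey (intensity) value per point. The threshold value is a placeholder that would have to be tuned for the sensor and cabin.

```python
import numpy as np


def segment_driver(points, intensity, threshold):
    """Keep points brighter than the threshold and drop all-zero (invalid) points.

    points: (N, 3) array of x, y, z coordinates; intensity: (N,) grey values.
    """
    points = np.asarray(points, dtype=float)
    intensity = np.asarray(intensity, dtype=float)
    is_target = intensity > threshold            # I(x, z) > T
    is_valid = np.any(points != 0, axis=1)       # discard zero points
    return points[is_target & is_valid]


pts = np.array([[0.1, 0.2, 0.6], [0.0, 0.0, 0.0], [0.3, 0.1, 1.4], [0.2, 0.2, 0.7]])
grey = np.array([180, 200, 40, 150])
print(segment_driver(pts, grey, threshold=100))  # keeps the first and last points
```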

RFDANet (Radar Fusion Driver Activity Recognition Net)

To fully exploit the FMCW and TOF radar information, an independent forward propagation channel is established for each type of information. The channels are then merged through a branching structure, which fuses the FMCW and TOF radar information within the model. To strengthen this integration and make the combination of features from different branches more flexible, an attention mechanism is implemented in each branch that assigns dynamic weights to different features. Unlike classical channel attention [37] and spatial attention [38], branch attention [39] is not restricted to measuring inter-feature relationships within the model; instead, it emphasizes the importance of the features of different branches. The FMCW and TOF radar information are processed in separate branches, fused at the end of the model, and passed to the LSTM classification layer, whose output distinguishes the driving behaviors. Figure 6 depicts the branch fusion strategy for integrating FMCW and TOF radar information.

Each branch contains several basic units, each consisting of a regular convolutional layer followed by a ReLU activation function and a max-pooling layer. To further improve performance, a Batch Normalization (BN) layer is inserted between the convolutional layer and the ReLU function in RFDANet.

Inserting BN layers into RFDANet normalizes the features of each layer to a fixed distribution (zero mean and unit standard deviation). This mitigates the drift of feature distributions over training iterations and thereby helps prevent vanishing gradients during training.

To comprehensively evaluate the importance of different branches during feature fusion, RFDANet extends the attention mechanism to the branch level and integrates the features of all basic units in a branch into global attention weights. Specifically, let \(f_{l}^{m}\) denote the output feature of the convolutional layer in the \(m{\text{th}}\) basic unit of the \(l{\text{th}}\) branch. The local attention weights of the \(m{\text{th}}\) basic unit in the \(l{\text{th}}\) branch, the global attention weights of the \(l{\text{th}}\) branch, the feature map of the \(l{\text{th}}\) branch, and the final feature map after fusing the branches are given by Eqs. (10), (11), (12), and (13), respectively. Figure 7 illustrates the overall architecture of RFDANet.
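One reading consistent with the definitions below and with the use of multiplication to fuse the AWM outputs, assuming each branch has \(M\) basic units and writing \(w_{l}^{m}\) for the local weight and \(W_{l}\) for the branch weight (both symbols introduced here), is

```latex
\begin{aligned}
w_{l}^{m} &= AWM_{l}^{m}\left( f_{l}^{m} \right) && (10) \\
W_{l} &= \prod_{m=1}^{M} w_{l}^{m} && (11) \\
F_{l} &= W_{l} \otimes F_{l}^{final} && (12) \\
F &= Fusion\left( F_{1}, F_{2} \right) && (13)
\end{aligned}
```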

where \(AWM_{l}^{m}\) is the attention weight module of the \(m{\text{th}}\) basic unit in the \(l{\text{th}}\) branch, which outputs the local attention weights; \(F_{l}^{final}\) is the unweighted feature output by the \(l{\text{th}}\) branch; \(\otimes\) denotes feature multiplication; and \(Fusion\) denotes the feature fusion operation.

This paper mainly considers three common ways of fusing FMCW and TOF radar information: addition, multiplication, and concatenation. The AWM outputs are fused by multiplication.

RFDANet employs a multi-level feature fusion strategy to obtain branch-level attention weights that integrate the influence of each layer’s feature maps. In each basic unit, the feature maps produced by the convolutional layer are first converted into local attention weights by the local attention module; the branch attention weights, which represent the importance of the corresponding branch, are then obtained by fusing the multi-level local attention weights. Specifically, in the local attention module, the output feature maps are compressed by global average pooling (GAP) and global max pooling (GMP) along the channel direction. The compressed features are fed into a multilayer perceptron (MLP) with shared weights, which produces two sets of one-dimensional weights, and the two sets are summed to obtain the weights of the corresponding branch. The shared-weight MLP is used mainly to reduce the number of trainable parameters and the computational cost of RFDANet; MLPs with non-shared weights or other network structures may also be considered in practical applications.

Let \(F_{l}^{m}\) be the output feature of the convolutional layer in the \(m{\text{th}}\) basic unit of the \(l{\text{th}}\) branch. The \(m{\text{th}}\) attention weight module \(AWM_{l}^{m}\) of the \(l{\text{th}}\) branch is then computed by the following equation. Figure 8 shows the internal structure of the attention module.
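Following the description above (GAP and GMP, a shared-weight MLP, element-wise addition, and a sigmoid), the module can be written as

```latex
AWM_{l}^{m} = Sigmoid\left( MLP\left( GAP\left( F_{l}^{m} \right) \right) \oplus MLP\left( GMP\left( F_{l}^{m} \right) \right) \right)
```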

where \(\oplus\) denotes element-wise addition and \(Sigmoid\) is the activation function that restricts the branch attention weights to the range (0, 1).
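The branch attention computation can be sketched in NumPy as a forward pass only (no training). The assumptions are ours: random weights, ReLU in the hidden layers of the shared MLP, hidden sizes C/2 and C/4 with a single output neuron as described under “Model parameters and hyperparameter settings”, a scalar local weight per basic unit, the product of local weights as the branch weight, the last unit's map standing in for the unweighted branch output, and flattening plus concatenation as the fusion operation. The feature-map lengths are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def make_shared_mlp(channels):
    """Random weights for an MLP with C/2, C/4 and 1 neurons (hypothetical init)."""
    sizes = [channels, channels // 2, channels // 4, 1]
    return [rng.standard_normal((i, o)) * 0.1 for i, o in zip(sizes[:-1], sizes[1:])]


def mlp(v, weights):
    for W in weights[:-1]:
        v = np.maximum(v @ W, 0.0)          # ReLU hidden layers (assumed)
    return v @ weights[-1]


def awm(feature_map, weights):
    """Local attention weight for one basic unit; feature_map is (length, channels)."""
    gap = feature_map.mean(axis=0)           # global average pooling per channel
    gmp = feature_map.max(axis=0)            # global max pooling per channel
    # The same MLP weights are applied to both pooled vectors (shared MLP).
    return sigmoid(mlp(gap, weights) + mlp(gmp, weights))[0]


def branch_output(unit_maps, final_map):
    """Branch weight = product of local weights; returns it and the weighted map."""
    W_l = 1.0
    for fmap in unit_maps:
        W_l *= awm(fmap, make_shared_mlp(fmap.shape[1]))
    return W_l, W_l * final_map


# Toy feature maps for the three units (32, 16, 32 channels) of each branch.
branches = []
for length in (63, 40):                      # hypothetical lengths for FMCW and TOF
    maps = [rng.random((length, c)) for c in (32, 16, 32)]
    branches.append(branch_output(maps, maps[-1]))
fused = np.concatenate([F.ravel() for _, F in branches])
print([round(float(W), 3) for W, _ in branches], fused.shape)
```

Because each branch weight is a product of sigmoids, it always lies in (0, 1), so a branch can be attenuated but never amplified relative to the other.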

Experiment

This section describes the measurement of driving behavior with radar in real driving scenarios, including the measurement environment, the measurement system, and the parameter settings. Two radar systems based on different principles (FMCW radar and TOF radar) are used to measure driving behavior.

The measurement system is based on Texas Instruments (TI) millimeter-wave radar evaluation boards, shown in Fig. 9. Two evaluation boards were used: the IWR6843BOOST for signal transmission and reception, and the DCA1000EVM for real-time data capture and transfer. The radar waveform parameters and the transmit and receive procedures were configured with the mmWave Studio software. No hardware beyond a mobile power supply and a laptop was needed for the measurements.

The main measurement parameters are listed in Table 1. The measurements were conducted in the 77 GHz band with an effective bandwidth of 4 GHz for signal transmission and reception.

The test vehicle was a Volkswagen Magotan 1.8 TSI with front and rear seats. The driver and a safety supervisor occupied the front seats, and a passenger in the rear seat operated the laptop to record data. During the measurements, the FMCW radar board was fixed with a bracket in front of the driver on the right side, and the TOF radar was mounted in front of and above the driver, where the sun visor is normally located. The software parameters were configured, and the transmit and receive commands were issued, manually on the laptop.

The vehicle was driven under typical driving conditions along the lanes of a campus that was open and unoccupied during the holidays. The route included straight sections, random left and right turns, and U-turns, as shown in Fig. 10.

During the measurements, each volunteer adjusted the seat to the position most comfortable for them. The distance between the radar and the volunteer ranged from 0.4 to 0.8 m, and the height of the volunteer’s head ranged from 0.9 to 1.2 m. Each of the three volunteers performed each behavior 20 times: 10 times in the parked car and 10 times while the car was moving at approximately 20 km/h.

Approximately 30 min of data were collected in total to ensure that the data were sufficiently random. Three volunteers participated in the experiment, performing five types of movements under real driving conditions: normal driving, head up, head twisting, picking up the phone, and dancing to music. These five driving behaviors are described below and illustrated in Fig. 11. All procedures involving human participants conformed to the ethical standards of Zhejiang Sci-Tech University and followed the Declaration of Helsinki.

(1) Normal driving: the driver keeps the upper body upright and makes little or no other movement.

(2) Head up: the head leans forward slowly and then tilts up, with a controlled period of about 4 s, to simulate driving fatigue.

(3) Head twisting: the driver turns the head backward to the left and to the right while keeping the torso still, twice back and forth, with a cycle of about 4 s.

(4) Pick up the phone: the driver holds the phone in the left hand, picks it up, and places it in front of them while driving.

(5) Dance to music: popular songs are played in the car, and the driver dances to the music while driving.

As shown in Fig. 11a, the range-Doppler diagram is typically smooth during normal driving. No periodic waveform is visible, only slight fluctuations, which may be caused by vibrations from the vehicle’s motion; this becomes apparent when the diagram is compared with one recorded inside a vehicle that has not been started.

As shown in Fig. 11b, the range-Doppler trajectory during head up also shows a positive Doppler component. As the distance decreases, the Doppler signal eventually disappears. However, when the driver tilts the head before raising it again, the Doppler and range features of the trajectory increase again after the initial drop.

As shown in Fig. 11c, only the rightward rotation was captured during head twisting, because left and right rotations produce identical Doppler and range signals. In addition to the expected negative Doppler components, some positive Doppler components were observed. This is because, while the neck rotates, one side of the face moves away from the radar and the other side moves closer to it. However, a trajectory point was identifiable in the range-Doppler diagram, which made the positive Doppler components more difficult to account for.

As shown in Fig. 11d, dancing to music produces a clearly periodic waveform in the range-Doppler diagram.

As shown in Fig. 11e, when the driver picks up the phone while driving, the power in the range-Doppler diagram keeps increasing with a steep slope.

Model parameters and hyperparameter settings

RFDANet consists of two branches, each containing three basic units of Conv-BN-ReLU-Pooling. The first and third convolutional layers have 32 kernels, while the second has 16 kernels of size 2 with a stride of 1. The pooling size and stride of the max-pooling layers are both 2, and a BN layer and a ReLU function are placed between each convolutional layer and its max-pooling layer. The features from the last basic unit of the two branches are flattened and concatenated, and the fused feature map is fed into an MLP for classification. The MLP has three fully connected (FC) layers with 256, 128, and 5 neurons, respectively. In addition, the output feature map of each convolutional layer is passed through an auxiliary network that generates local attention weights before the next convolutional layer is applied. This auxiliary network mainly comprises three fully connected layers whose numbers of neurons are one half and one quarter of the number of feature map channels, and one, respectively. The model is implemented with TensorFlow 1.13.1 and Keras 2.2.4 on Windows 10 Professional (64-bit) with an Intel Core i7-6700HQ CPU and 12 GB RAM (2133 MHz).
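To make the layer sizes concrete, the sketch below tracks the sequence length and parameter count through one branch and the MLP head. It assumes kernel size 2 and stride 1 for all three convolutional layers, 'valid' padding, a single-channel input, equal input lengths for both branches, and a hypothetical input length of 128. It counts Keras-style BN parameters (four per channel, two of them trainable) and ignores the attention sub-networks, so it is an estimate rather than the model size reported in Table 4.

```python
def conv1d_params(kernel, c_in, c_out):
    return kernel * c_in * c_out + c_out          # weights + biases


def branch_summary(input_len, filters=(32, 16, 32), kernel=2, pool=2):
    """Output (length, channels) and parameter count of one Conv-BN-ReLU-Pool branch."""
    length, channels, params = input_len, 1, 0
    for c_out in filters:
        params += conv1d_params(kernel, channels, c_out)
        params += 4 * c_out                        # BN: gamma, beta, moving mean, moving var
        length = (length - kernel + 1) // pool     # 'valid' conv with stride 1, then max pool
        channels = c_out
    return length, channels, params


def mlp_params(n_in, sizes=(256, 128, 5)):
    total = 0
    for n_out in sizes:
        total += n_in * n_out + n_out
        n_in = n_out
    return total


length, channels, branch_params = branch_summary(128)
flat = 2 * length * channels                       # two branches, flattened and concatenated
print(length, channels, branch_params, mlp_params(flat))
```

Under these assumptions, the first FC layer dominates the parameter count, so the input length of the branches largely determines the model size.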

To compare information fusion methods further, a model based on data-level information fusion, the 1D-CNN method [40], is also constructed in this section. The 1D-CNN model consists of three Conv-BN-ReLU-Pooling basic units with the same convolutional layer and MLP parameters as RFDANet; the difference is that 1D-CNN has only a single branch and no attention module. Table 2 details each model. Cross-entropy is used as the loss function during training, and the networks are trained with mini-batches of 64 samples. The Adam optimizer is used with the same parameter settings for all models, and dropout with a retention rate of 0.5 is applied between the last two fully connected layers to mitigate overfitting.

Analysis of results and discussion

This section investigates the accuracy of driving behavior recognition under different information fusion schemes. First, the 1D-CNN method is used to compare fusing FMCW radar data with different dimensions (x, y, z) of the TOF radar information, as shown in Fig. 12 (vertical axis: accuracy; horizontal axis: fused data). "radar_x" and "radar_y" denote the fusion of FMCW radar data with the TOF x-axis and y-axis information, respectively. "radar_xy", "radar_xz", and "radar_yz" denote fusion with the TOF x- and y-axis, x- and z-axis, and y- and z-axis data, respectively, and "radar_xyz" denotes fusion with all three TOF axes. Over 10 validation runs, fusing the FMCW radar information with the TOF x- and y-axis data gives accuracy comparable to fusing it with all three TOF axes. Therefore, to simplify computation and improve the classification efficiency of the model, the FMCW radar data fused with the TOF x- and y-axis information are used in the rest of this paper and referred to as "FMCW + TOF data".

Second, RFDANet was evaluated by comparing three recognition schemes, as illustrated in Fig. 13: FMCW radar only, TOF radar only, and the fusion of FMCW and TOF radar, each using RFDANet. Table 3 shows that the fusion scheme achieves the highest recognition accuracy for driving behavior, approximately 10% higher than that obtained with either radar alone.

The box plot shows the distribution of test accuracy for each case. The upper and lower edges of each blue box are the third and first quartiles of all accuracies, \(Q_{3}\) and \(Q_{1}\), so half of the test accuracies lie inside the box. The box height, the interquartile range \(IQR = Q_{3} - Q_{1}\), reflects robustness, and the red line inside the box marks the median \(Q_{2}\). Points greater than \(Q_{3} + 1.5 \times IQR\) or less than \(Q_{1} - 1.5 \times IQR\) are identified as outliers. Figure 13 shows that the results from both the FMCW radar and the TOF radar achieve a higher maximum accuracy and a lower minimum accuracy. Moreover, a smaller gap between the upper and lower whiskers indicates smaller differences between individuals, and a narrower blue box indicates greater robustness.
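The quartile and outlier rules can be computed directly with NumPy. The sketch uses NumPy's default (linear) percentile interpolation; plotting libraries may use a slightly different quartile convention. The accuracy values are made up for illustration and are not results from this study.

```python
import numpy as np


def box_stats(acc):
    """Quartiles, IQR and 1.5*IQR outliers, as used for the box plots."""
    acc = np.asarray(acc, dtype=float)
    q1, q2, q3 = np.percentile(acc, [25, 50, 75])
    iqr = q3 - q1
    lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = acc[(acc < lower) | (acc > upper)]
    return {"Q1": q1, "median": q2, "Q3": q3, "IQR": iqr, "outliers": outliers}


# Illustrative (not measured) accuracies from 10 runs, one of them poor.
stats = box_stats([0.95, 0.94, 0.96, 0.93, 0.95, 0.94, 0.97, 0.95, 0.96, 0.80])
print(stats["median"], stats["IQR"], stats["outliers"])
```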

A randomly selected 70% of the final dataset was used as the training set and the remaining 30% as the test set. The average accuracy over 10 training trials was used as the final evaluation metric. As shown in Fig. 14, the average training accuracy of RFDANet is maintained at around 94.5%; in the figure, Nd denotes normal driving, Lu head up, Th head twisting, Puh picking up the phone and looking at it, and Dm dancing to music. As shown in Fig. 15, RFDANet converges quickly: after about 80 epochs, the loss plateaus and fluctuates only within a small range.
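The evaluation protocol (a random 70/30 split, repeated for 10 trials and averaged) can be sketched as follows. Here train_and_evaluate is a hypothetical placeholder for training RFDANet on one split and returning its test accuracy, and the sample count of 300 (3 volunteers × 5 behaviors × 20 repetitions) is only illustrative, since the final dataset may be segmented differently.

```python
import numpy as np


def split_70_30(n_samples, rng):
    """Random 70/30 train/test split of sample indices."""
    idx = rng.permutation(n_samples)
    n_train = int(round(0.7 * n_samples))
    return idx[:n_train], idx[n_train:]


def mean_accuracy(n_samples, train_and_evaluate, n_trials=10, seed=0):
    """Average test accuracy over independent random splits."""
    rng = np.random.default_rng(seed)
    scores = []
    for _ in range(n_trials):
        train_idx, test_idx = split_70_30(n_samples, rng)
        scores.append(train_and_evaluate(train_idx, test_idx))
    return float(np.mean(scores))


# Placeholder evaluator: returns the test fraction instead of a real accuracy.
print(mean_accuracy(300, lambda tr, te: len(te) / (len(tr) + len(te))))
```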

Table 4 compares the model size and computational cost of RFDANet with those of other methods; RFDANet has the smallest model size. Table 5 benchmarks the average recognition accuracy and speed of RFDANet against AlexNet [41], 1-D CNN, CNN-LSTM, CNN-Channel attention, and CNN-Spatial attention. RFDANet achieves higher recognition accuracy than the other methods without sacrificing recognition speed. These results demonstrate the benefits of RFDANet for driving behavior classification.
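Model size, as compared in Table 4, is driven by the number of trainable parameters. As a rough illustration only (the RFDANet layer sizes are not given in this section, so the shapes below are hypothetical), the standard parameter counts for a 2-D convolution and a single-layer LSTM, and the multiply-accumulate (MAC) count of a convolution, can be computed as follows. Storage assumes 32-bit floating-point weights.

```python
def conv2d_params(c_in, c_out, k_h, k_w, bias=True):
    """Trainable parameters of a 2-D convolution: one k_h x k_w x c_in kernel (+ bias) per output channel."""
    return c_out * (c_in * k_h * k_w + (1 if bias else 0))


def conv2d_macs(c_in, c_out, k_h, k_w, h_out, w_out):
    """Multiply-accumulate operations for one forward pass of a 2-D convolution."""
    return c_out * h_out * w_out * c_in * k_h * k_w


def lstm_params(input_size, hidden_size, bias_vectors=1):
    """Parameters of a single-layer LSTM: 4 gates, each with input, recurrent and bias weights."""
    return 4 * (hidden_size * (input_size + hidden_size) + bias_vectors * hidden_size)


def size_in_megabytes(n_params, bytes_per_param=4):
    """Weight storage in MB (10**6 bytes), assuming float32 by default."""
    return n_params * bytes_per_param / 1e6


# Hypothetical layers, not the RFDANet configuration.
total = conv2d_params(1, 16, 3, 3) + lstm_params(64, 128)
approx_mb = size_in_megabytes(total)
```

PyTorch's `nn.LSTM` keeps two bias vectors (`bias_ih` and `bias_hh`), which corresponds to `bias_vectors=2` here; inference speed, as in Table 5, also depends on MACs and hardware rather than on parameter count alone.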

Discussion

Installation position of the radar system

The mounting positions of the FMCW radar and the TOF radar strongly affect the detection of driving behavior and warrant further experimental study. There are currently two options: mounting the radar on the steering wheel or placing it on the dashboard. In our setup, the FMCW radar is placed on the steering wheel and the TOF radar on the dashboard, so that driving behavior is detected from above in the absence of direct light. However, this arrangement may miss some data, which limits recognition accuracy. As a next step, we are considering placing a radar at the window to supplement the missing data on the side of the driver's movements.

Impact of subject differences

To examine the model's applicability, we tested whether a model trained on the driving behavior of known subjects can classify the driving behavior of unknown subjects. For this purpose, driving behavior data from three additional volunteers were collected separately in a laboratory setting as a test group. Each maneuver was repeated 30 times to obtain reliable results. Figure 16 shows box plots of the test results, which indicate that the model classifies the same driving behaviors performed by unknown subjects with high robustness.
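The cross-subject test depends on keeping the test subjects entirely out of the training data. A minimal sketch, assuming each sample is stored as a `(subject_id, features, label)` tuple (a hypothetical layout), splits by subject and then computes per-behavior accuracy over repeated trials such as the 30 repetitions per maneuver described above.

```python
from collections import defaultdict


def subject_holdout_split(samples, test_subjects):
    """Split (subject_id, features, label) records so that test subjects never appear in training."""
    test_subjects = set(test_subjects)
    train = [s for s in samples if s[0] not in test_subjects]
    test = [s for s in samples if s[0] in test_subjects]
    return train, test


def per_behavior_accuracy(predictions):
    """Accuracy per true label from (true_label, predicted_label) pairs."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for true_label, predicted in predictions:
        total[true_label] += 1
        correct[true_label] += int(true_label == predicted)
    return {label: correct[label] / total[label] for label in total}
```

Splitting by subject rather than by sample prevents the same person's recordings from leaking into both sets, which would otherwise overstate cross-subject accuracy.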

Conclusion

This paper addresses the challenge of detecting and measuring driving behaviors in real-world situations. The proposed solution, RFDANet, uses FMCW radar and TOF radar systems to identify five prevalent driving behaviors. RFDANet has two feature branches that process FMCW radar information and TOF radar information, respectively. It also extends the attention mechanism to the branch level to adjust the importance of features in different branches, which enables autonomous fusion of FMCW and TOF radar information and thereby enhances the flexibility and accuracy of driving behavior detection. It can also integrate FMCW and TOF radar information more flexibly than general external information fusion methods. The experiments validate the advantages of the method, and the model performed well in both real-world driving scenarios and laboratory-simulated driving environments. RFDANet can improve driving behavior recognition accuracy while maintaining fast detection. Because of limited data, the method still has limitations in scenarios involving in-vehicle factors and varied road conditions. To improve the model's accuracy and robustness, future research will focus on extending the driving behavior dataset to include more diverse and complex traffic situations.

References

World Health Organization (2018) Global status report on road safety 2018. https://www.who.int/publications/i/item/9789241565684

Wu P, Liu J, Shen F (2019) A deep one-class neural network for anomalous event detection in complex scenes. IEEE Trans Neural Netw Learn Syst 31:2609–2622

Liu T, Lam K, Kong J (2023) Distilling privileged knowledge for anomalous event detection from weakly labeled videos. IEEE Trans Neural Netw Learn Syst, pp 1–15. https://doi.org/10.1109/TNNLS.2023.3263966

Köpüklü O, Zheng J, Xu H, Rigoll G (2021) Driver anomaly detection: a dataset and contrastive learning approach. In: 2021 IEEE Winter Conference on Applications of Computer Vision (WACV). IEEE, pp 91–100

Singh S (2018) Critical reasons for crashes investigated in the National Motor Vehicle Crash Causation Survey. National Highway Traffic Safety Administration

Su Z, Woodman R, Smyth J, Elliott M (2023) The relationship between aggressive driving and driver performance: a systematic review with meta-analysis. Accid Anal Prev 183(106972):1–13

Lu J (2022) Symbolic aggregate approximation based data fusion model for dangerous driving behavior detection. Inf Sci 609:626–642

Huang T, Fu R (2022) Driver distraction detection based on the true driver’s focus of attention. IEEE Trans Intell Transp Syst 23:19374–19386

Du G, Li T, Liu P, Li D (2021) Vision-based fatigue driving recognition method integrating heart rate and facial features. IEEE Trans Intell Transp Syst 22:3089–3100

Tian Y, Cao J (2021) Fatigue driving detection based on electrooculography: a review. EURASIP J Image Video Process 33:1–17

Singh H, Kathuria A (2021) Analyzing driver behavior under naturalistic driving conditions: a review. Accid Anal Prev 150(105908):1–21

Ansari S, Naghdy F, Du H, Pahnwar Y (2022) Driver mental fatigue detection based on head posture using new modified reLU-BiLSTM deep neural network. IEEE Trans Intell Transp Syst 23(8):10957–10969

Shen N, Zhu Y, Oroni C, Wang L (2021) Analysis of drivers’ eye movements with different experience and genders in straight-line driving. In: 2021 IEEE Intl Conf on Dependable, Autonomic and Secure Computing, Intl Conf on Pervasive Intelligence and Computing, Intl Conf on Cloud and Big Data Computing, Intl Conf on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech). IEEE, pp 907–911

Liu W, Li H, Zhang H (2022) Dangerous driving behavior recognition based on hand trajectory. Sustainability 14(12355):1–16

Nakagawa C, Yamada S, Hirata D, Shintani A (2022) Differences in driver behavior between manual and automatic turning of an inverted pendulum vehicle. Sensors 22(9931):1–21

Kajiwara Y, Murata E (2022) Effect of behavioral precaution on braking operation of elderly drivers under cognitive workloads. Sensors 22(5741):1–16

Tabatabaie M, He S, Yang X (2022) Driver Maneuver identification with multi-representation learning and meta model update designs. Proc ACM Interact Mob Wear Ubiquit Technol 6:1–23

Lu K, Dahlman A, Karlsson J, Candefjord S (2022) Detecting driver fatigue using heart rate variability: a systematic review. Accid Anal Prev 178(106830):1–10

Ezzouhri A, Charouh Z, Ghogho M, Guennoun Z (2021) Robust deep learning-based driver distraction detection and classification. IEEE Access 9:168080–168092

Ohn-Bar E, Tawari A, Martin S, Trivedi M (2015) On surveillance for safety critical events: in-vehicle video networks for predictive driver assistance systems. Comput Vis Image Underst 134:130–140

Lashkov I, Kashevnik A, Shilov N, Parfenov V, Shabaev A (2019) Driver dangerous state detection based on OpenCV & Dlib libraries using mobile video processing. In: 2019 IEEE International Conference on Computational Science and Engineering (CSE) and IEEE International Conference on Embedded and Ubiquitous Computing (EUC). IEEE, pp 74–79

Yang L, Ma R, Zhang H, Guan W, Jiang S (2017) Driving behavior recognition using EEG data from a simulated car-following experiment. Accid Anal Prev 116:30–40

Doniec R, Konior J, Sieciński S, Piet A, Irshad M, Piaseczna N, Hasan M, Li F, Nisar M, Grzegorzek M (2023) Sensor-based classification of primary and secondary car driver activities using convolutional neural networks. Sensors 23(5551):1–19

Shahbakhti M, Beiramvand M, Rejer I, Augustyniak P, Broniec-Wójcik A, Wierzchon M, Marozas V (2022) Simultaneous eye blink characterization and elimination from low-channel prefrontal EEG signals enhances driver drowsiness detection. IEEE J Biomed Health Inform 26(3):1001–1012

Zhang G, Etemad A (2021) Capsule attention for multimodal EEG-EOG representation learning with application to driver vigilance estimation. IEEE Trans Neural Syst Rehabil Eng 29:1138–1149

Gurbuz S, Amin M (2019) Radar-based human-motion recognition with deep learning: promising applications for indoor monitoring. IEEE Signal Process Mag 36:16–28

Amin M (2017) Radar for indoor monitoring: detection, classification, assessment. CRC Press, Boca Raton

Rai P, Idsøe H, Yakkati R, Kumar A, Khan M, Yalavarthy P, Cenkeramaddi L (2021) Localization and activity classification of unmanned aerial vehicle using mmWave FMCW radars. IEEE Sens J 21(14):16043–16053

Nguyen H, Lee S, Nguyen T, Kim Y (2022) One shot learning based drivers head movement identification using a millimetre wave radar sensor. IET Radar Sonar Navig 16:825–836

Chae R, Wang A, Li C (2019) FMCW radar driver head motion monitoring based on Doppler spectrogram and range-Doppler evolution. In: 2019 IEEE Topical Conference on Wireless Sensors and Sensor Networks (WiSNet). IEEE, pp 1–4

Varela V, Rodrigues D, Zeng L, Li C (2023) Multitarget physical activities monitoring and classification using a V-band FMCW radar. IEEE Trans Instrum Meas 72:1–10

Abdu F, Zhang Y, Deng Z (2022) Activity classification based on feature fusion of FMCW radar human motion micro-Doppler signatures. IEEE Sens J 22(9):8648–8662

Bresnahan D, Li Y (2021) Classification of driver head motions using a mm-wave FMCW radar and deep convolutional neural network. IEEE Access 9:100472–100479

Shahverdy M, Fathy M, Berangi R, Sabokrou M (2020) Driver behavior detection and classification using deep convolutional neural networks. Expert Syst Appl 149(113240):1–12

Sánchez S, Pozo R, Gómez L (2022) Driver identification and verification from smartphone accelerometers using deep neural networks. IEEE Trans Intell Transp Syst 23(1):97–109

Jeham I, Alouani I, Khalifa A, Mahjoub M (2023) Deep learning-based hard spatial attention for driver in-vehicle action monitoring. Expert Syst Appl 219(119629):1–11

Li R, Wang X, Wang J, Song Y, Lei L (2022) SAR target recognition based on efficient fully convolutional attention block CNN. IEEE Geosci Remote Sens Lett 19:1–5

Xu Y, Zhang Y, Yu C, Ji C, Yue T, Li H (2022) Residual spatial attention kernel generation network for hyperspectral image classification with small sample size. IEEE Trans Geosci Remote Sens 60:1–14

Liu T, Lam K, Zhao R, Kong J (2022) Enhanced attention tracking with multi-branch network for egocentric activity recognition. IEEE Trans Circuits Syst Video Technol 32(6):1587–3602

Shahverdy M, Fathy M, Berangi R, Sabokrou M (2021) Driver behaviour detection using 1D convolutional neural networks. Electron Lett 57(3):119–122

Xing Y, Lv C, Wang H, Cao D, Velenis E, Wang F (2019) Driver activity recognition for intelligent vehicles: a deep learning approach. IEEE Trans Veh Technol 68(6):5379–5390

Acknowledgements

This study is supported by the Key Research and Development Projects of Zhejiang Province (2023C01062).

Funding

This study was supported by the Key Research and Development Projects of Zhejiang Province (2023C01062).

Author information

Contributions

Concept and design: MG and KC; data collection and analysis: KC; drafting of the article: KC; critical revision of the article for important intellectual content: MG; data collection: KC and ZC; study supervision: MG. All the authors approved the final article.

Ethics declarations

Conflict of interest

The corresponding authors declare on behalf of all authors that there is no conflict of interest. We declare that we have no commercial or associative interest that represents a conflict of interest in connection with the submitted work.

Ethical approval

The experiments were conducted under the guidance of Zhejiang Sci-Tech University.

Consent to participate

All procedures involving human participants conformed to the ethical standards of Zhejiang Sci-Tech University and followed the Declaration of Helsinki.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gu, M., Chen, K. & Chen, Z. RFDANet: an FMCW and TOF radar fusion approach for driver activity recognition using multi-level attention based CNN and LSTM network. Complex Intell. Syst. 10, 1517–1530 (2024). https://doi.org/10.1007/s40747-023-01236-8

DOI: https://doi.org/10.1007/s40747-023-01236-8