Abstract

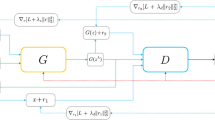

The generative adversarial network (GAN) is a well-known model for learning high-dimensional distributions, but the mechanism behind its generalization ability is not understood. In particular, GANs are vulnerable to the memorization phenomenon, that is, the eventual convergence to the empirical distribution. We consider a simplified GAN model with the generator replaced by a density and analyze how the discriminator contributes to generalization. We show that with early stopping, the generalization error measured by the Wasserstein metric escapes from the curse of dimensionality, even though memorization is inevitable in the long term. In addition, we present a hardness-of-learning result for WGAN.

Notes

Technically, Fig. 1 curve \(\textcircled {1}\) concerns the \(W_2\) loss, but it is reasonable to believe that training on \(W_1\) or any \(W_p\) loss cannot escape from the curse of dimensionality either.

Ethics declarations

Conflict of Interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

About this article

Cite this article

Yang, H., E, W. Generalization error of GAN from the discriminator’s perspective. Res Math Sci 9, 8 (2022). https://doi.org/10.1007/s40687-021-00306-y

DOI: https://doi.org/10.1007/s40687-021-00306-y