Abstract

We consider a society in which individuals are divided into different groups. All groups play the same normal form game, and each group is in the basin of attraction of a particular strict Nash equilibrium of the game. Group selection refers to the evolution of the mass of each group under the replicator dynamic. We provide microfoundations for the replicator dynamic using revision protocols, wherein agents migrate between groups based on the groups' average payoffs. Individual selection of strategies within each group happens in one of two ways: either all agents instantaneously coordinate on a strict Nash equilibrium, or all agents revise their strategies under the logit dynamic. In either case, the group playing the Pareto efficient Nash equilibrium gets selected. Individual selection under the logit dynamic may slow down this process and introduce non-monotonicity. We then apply the model to the stag hunt game.

Availability of Data and Materials

This is an entirely theoretical paper. No data were used. Hence, this declaration is not applicable.

Notes

The average payoff of the entire society is the average of the group average payoffs, weighted by the group masses (which sum to 1).
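The identity in this footnote can be checked numerically. The sketch below makes some assumptions. Group states \(x^i\) are vectors of strategy masses. Each group's payoff vector is taken as given: these are hypothetical numbers standing in for the payoffs in (6). The society average, computed by summing payoffs over all agents, equals the group averages weighted by the group masses.

```python
import numpy as np

def group_average(x_i, F_i):
    """Average payoff in group i: total payoff earned in the group divided by its mass."""
    return float(x_i @ F_i) / float(x_i.sum())

def society_average(x, F):
    """Society-wide average payoff as the mass-weighted mean of the group averages."""
    masses = np.array([x_i.sum() for x_i in x])
    group_avgs = np.array([group_average(x_i, F_i) for x_i, F_i in zip(x, F)])
    return float(masses @ group_avgs)  # masses sum to 1, so no further normalisation

# Hypothetical example: x[i][s] is the mass of group i playing strategy s,
# F[i][s] is the (given) payoff of strategy s in group i.
x = [np.array([0.3, 0.1]), np.array([0.2, 0.4])]
F = [np.array([2.0, 0.0]), np.array([1.0, 1.0])]

direct = sum(float(x_i @ F_i) for x_i, F_i in zip(x, F))  # payoff summed over all agents
print(society_average(x, F), direct)  # both approximately 1.2
```

Here the group averages are 1.5 and 1.0. A plain, unweighted mean of them would give 1.25, so the weighting by group mass matters.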

Sandholm [25] presents an extensive analysis of this dynamic and of the more general class of perturbed best response dynamics to which it belongs.

The standard basis vector \(e_s\in {\textbf{R}}^n\) is the vector with 1 in the s-th place and 0 otherwise.

This is the typical way to describe population games, i.e., games with a large number of agents (Section 2.1, Sandholm [25]). Intuitively, we can think of each agent as a point in the interval [0, 1]. Thus, the total mass of 1 is the length of the interval and each agent, being a point, is of size zero.

As is standard in evolutionary game theory, we interpret (6) as the current payoff, i.e., the payoff based on the current state and mass of the group. It is liable to change when the state or mass changes under evolutionary dynamics.

Here, \(\sum _{k\in S}m^k\pi _k(m)\) is the average payoff of the strategies.

Suppose the times between successive revision opportunities are exponentially distributed with rate R. Then the number of revision opportunities that arrive in the time interval \([\tau _1,\tau _2]\) follows a Poisson distribution with mean \(R(\tau _2-\tau _1)\). Hence, the expected number of revision opportunities in the time interval \([0,d\tau ]\) is \(Rd\tau \). A stochastic process with these properties is called a Poisson process. See Section 10.C.2 in Sandholm [25] for further details.
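The following sketch simulates revision opportunities with exponential inter-arrival times. It then checks that the count in \([\tau _1,\tau _2]\) has mean and variance close to \(R(\tau _2-\tau _1)\), as a Poisson count should. The rate R = 2, the interval [1, 4], the seed, and the number of samples are arbitrary illustrative choices.

```python
import numpy as np

def count_revisions(rate, t1, t2, rng):
    """Count arrivals in [t1, t2] of a process started at 0 with Exp(rate) inter-arrival times."""
    t, count = 0.0, 0
    while True:
        t += rng.exponential(1.0 / rate)  # NumPy parametrises by scale = 1 / rate
        if t > t2:
            return count
        if t >= t1:
            count += 1

rng = np.random.default_rng(seed=0)
R, t1, t2 = 2.0, 1.0, 4.0
counts = np.array([count_revisions(R, t1, t2, rng) for _ in range(20_000)])
print(counts.mean(), counts.var())  # both should be close to R * (t2 - t1) = 6
```

Because the exponential distribution is memoryless, the arrivals before \(\tau _1\) do not affect the distribution of the count inside the interval.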

The best response to \(\frac{m^ie_i}{m^i}=e_i\) is i. Hence, the initial state being \(m^ie_i\) is consistent with our assumption that the best response for every agent in the group to \(\frac{x^i(0)}{m^i}\) is strategy i.

The off-diagonal payoffs will, however, matter for the size of the sets \(B_i\) as defined in (4).

A more general reason behind such convergence is that the logit dynamic converges in potential games (Sandholm [24], Hofbauer and Sandholm [16]). Pure coordination games are the prototypical examples of potential games. We can relax the assumption that (1) is a coordination game only if (1) is a two-strategy game. Every two-strategy population game is a potential game. So in that case the logit dynamic must converge to an approximation of a strict Nash equilibrium even if (1) is not a coordination game. Section 6 presents such an example.
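To illustrate the two-strategy case, here is a sketch of the logit dynamic \(\dot{x}=L^{\eta }(F(x))-x\), where \(L^{\eta }_s(\pi )=e^{\pi _s/\eta }/\sum _k e^{\pi _k/\eta }\). It is integrated by Euler steps on strategy shares, that is, the group state divided by the group mass. Several choices here are hypothetical and are not the paper's parameters: the payoff matrix (a stag-hunt-like game that is not a pure coordination game), the noise level \(\eta =0.1\), and the step size. The trajectory starts on one side or the other of the mixed equilibrium, which is a share of 0.75 on strategy 1 here. In each case it settles near the corresponding strict equilibrium.

```python
import numpy as np

def logit_choice(payoffs, eta):
    """Logit choice probabilities exp(pi_s / eta) / sum_k exp(pi_k / eta)."""
    z = payoffs / eta
    z -= z.max()  # subtract the max to keep the exponentials numerically stable
    w = np.exp(z)
    return w / w.sum()

def logit_dynamic(v, x0, eta=0.1, dt=0.01, steps=5000):
    """Euler integration of dx/dt = L(F(x)) - x with F(x) = v @ x (random matching)."""
    x = np.array(x0, dtype=float)
    for _ in range(steps):
        x += dt * (logit_choice(v @ x, eta) - x)  # keeps x on the simplex for dt < 1
    return x

# v[s, i] is the payoff of strategy s against an opponent playing i (hypothetical numbers).
v = np.array([[4.0, 0.0], [3.0, 3.0]])
print(logit_dynamic(v, [0.9, 0.1]))  # close to e_1
print(logit_dynamic(v, [0.6, 0.4]))  # close to e_2
```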

One reason is that we seek to track the entire trajectory \(m^i(\tau )\) in order to check whether \(m^1(\tau )\) increases monotonically, i.e., whether selection of group 1 is monotonic.

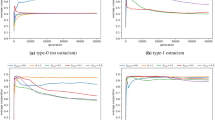

To clarify interpretation, we note that of the total mass of 0.5 in each group in (19), the mass of agents playing strategy 1 is 0.1717 in group 1 and 0.005 in group 2.

Thus, by \(\tau =5\), \(m^1(\tau )\) is nearly equal to 1 in the right panel, which is not the case in the left panel.

In fact, the same pattern of monotonic convergence of \(m^1(\tau )\) is also observed in Fig. 1.

This happens because (17) is a two-strategy game with two strict equilibria.

With instantaneous coordination, the average payoff of group 1 would immediately be 2 and that of group 2 would be 1.

In a two-strategy game with two strict Nash equilibria, this would be true even if the game is not a coordination game. See also footnote 13 in this connection.

The stag hunt game is clearly not a coordination game. But recall from footnote 13 that we can apply multilevel selection to two-strategy symmetric games even if they are not coordination games.

With two strategies, the state space is one-dimensional. Hence, under standard evolutionary dynamics like the replicator dynamic or the logit dynamic, we can write the basin of attraction of \(e_1\) as \((y_1,1]\) and that of \(e_2\) as \([0,y_1)\). For the logit dynamic, these basins of attraction hold only approximately. Thus, the basin of attraction of \(e_1\) is larger if \(1-y_1>y_1\), i.e., if \(y_1<\frac{1}{2}\). This is the condition for \(e_1\) to be risk dominant.
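In a symmetric two-strategy game, the threshold \(y_1\) solves the indifference condition \(y_1v_{11}+(1-y_1)v_{12}=y_1v_{21}+(1-y_1)v_{22}\). Here, as in the appendix, \(v_{si}\) is the payoff of strategy s against an opponent playing i. A minimal sketch computing \(y_1\) and the risk-dominance test \(y_1<\frac{1}{2}\) follows. The payoff numbers in the usage lines are hypothetical.

```python
def mixed_threshold(v11, v12, v21, v22):
    """Share y_1 of strategy 1 at which both strategies earn the same payoff.

    v_si is the payoff of strategy s against an opponent playing i.
    Assumes e_1 and e_2 are strict equilibria: v11 > v21 and v22 > v12.
    """
    return (v22 - v12) / ((v11 - v21) + (v22 - v12))

def e1_risk_dominant(v11, v12, v21, v22):
    """e_1 is risk dominant when its basin (y_1, 1] is the larger one."""
    return mixed_threshold(v11, v12, v21, v22) < 0.5

print(mixed_threshold(4, 0, 3, 3), e1_risk_dominant(4, 0, 3, 3))  # 0.75 False
print(mixed_threshold(2, 0, 0, 1), e1_risk_dominant(2, 0, 0, 1))  # 0.333... True
```

With the stag-hunt-like payoffs (4, 0, 3, 3), \(e_1\) is Pareto efficient (4 > 3) but not risk dominant. Its basin is (0.75, 1], which is smaller than that of \(e_2\).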

But it is still possible for the equilibrium that is not risk dominant to be selected. It is just that its basin of attraction is smaller.

Thus, in (23), if c is close to V, then the hare payoff is close to zero.

In a two-strategy game, \(m^2=1-m^1\).

References

Bandhu S, Lahkar R (2023) Survival of altruistic preferences in a large population public goods game. Econ Lett 226:111113

Bergstrom TC (2002) Evolution of social behavior: individual and group selection. J Econ Perspect 16:67–88

Bester H, Güth W (1998) Is altruism evolutionarily stable? J Econ Behav Organ 34:193–209

Björnerstedt J, Weibull JW (1996) Nash equilibrium and evolution by imitation. In: Arrow KJ, Colombatto E, Perlman M, Schmidt C (eds) The rational foundations of economic behaviour. St. Martin’s Press, New York, pp 155–181

Boyd R, Richerson PJ (1985) Culture and the evolutionary process. University of Chicago Press, Chicago

Boyd R, Richerson PJ (1990) Group selection among alternative evolutionarily stable strategies. J Theor Biol 145:331–342

Darwin C (1871) The descent of man and selection in relation to sex. John Murray, London

Dawkins R (1989) The selfish gene. Oxford University Press, Oxford

Ellison G (1993) Learning, local interaction, and coordination. Econometrica 61:1047–1071

Fudenberg D, Levine D (1998) The theory of learning in games. MIT Press, Cambridge

Güth W (1995) An evolutionary approach to explaining cooperative behavior by reciprocal incentives. Int J Game Theory 24:323–344

Güth W, Yaari M (1992) Explaining reciprocal behavior in simple strategic games: an evolutionary approach. In: Witt U (ed) Explaining process and change: approaches to evolutionary economics. University of Michigan Press, Ann Arbor, pp 23–34

Harsanyi JC, Selten R (1988) A general theory of equilibrium selection in games. MIT Press, Cambridge

Heifetz A, Shannon C, Spiegel Y (2007) The dynamic evolution of preferences. Econ Theor 32:251–286

Hofbauer J (1995) Imitation dynamics for games. Unpublished manuscript, University of Vienna

Hofbauer J, Sandholm WH (2007) Evolution in games with randomly disturbed payoffs. J Econ Theory 132:47–69

Kandori M, Mailath GJ, Rob R (1993) Learning, mutation, and long run equilibria in games. Econometrica 61:29–56

Lahkar R (2019) Elimination of non-individualistic preferences in large population aggregative games. J Math Econ 84:150–165

Lahkar R, Sandholm WH (2008) The projection dynamic and the geometry of population games. Games Econ Behav 64:565–590

Maynard Smith J (1964) Group selection and kin selection. Nature 201:1145–1147

Robson AJ (2008) Group selection. In: Durlauf SN, Blume LE (eds) New Palgrave dictionary of economics. Palgrave Macmillan, New York

Robson AJ, Samuelson L (2011) The evolutionary foundations of preferences. In: Benhabib J, Bisin A, Jackson MO (eds) Handbook of social economics, vol 1, pp 221–310

Samuelson L, Zhang J (1992) Evolutionary stability in asymmetric games. J Econ Theory 57:363–391

Sandholm WH (2001) Potential games with continuous player sets. J Econ Theory 97:81–108

Sandholm WH (2010) Population games and evolutionary dynamics. MIT Press, Cambridge

Schlag KH (1998) Why imitate, and if so, how? A boundedly rational approach to multi-armed bandits. J Econ Theory 78:130–156

Taylor PD, Jonker L (1978) Evolutionarily stable strategies and game dynamics. Math Biosci 40:145–156

Williams GC (1960) Adaptation and natural selection. Princeton University Press, Princeton

Wilson DS, Sober E (1994) Reintroducing group selection to the human behavioral sciences. Behav Brain Sci 17:585–654

Wilson DS, Wilson EO (2008) Evolution “for the good of the group”. Am Sci 96:380–389

Wynne-Edwards VC (1962) Animal dispersion in relation to social behavior. Oliver and Boyd, Edinburgh

Wynne-Edwards VC (1986) Evolution through group selection. Blackwell Scientific Publications, Oxford

Young HP (1993) The evolution of conventions. Econometrica 61:57–84

Funding

No funding was received for this paper. Hence, this declaration is not applicable.

Author information

Contributions

This is a single authored paper. Hence, this declaration is not applicable.

Ethics declarations

Conflict of interest

The author does not have any personal or financial interest in the findings of this paper.

Ethical Approval

This paper does not contain any studies involving humans or animals. Hence, this declaration is not applicable.

Additional information

The author thanks an associate editor and two anonymous reviewers for various comments and suggestions.

Appendix

Proof of Proposition 3.1

We provide the proof only for the revision protocol (11). Applying (11) to (10), we obtain

where \({\bar{F}}(x,m)\) is the average social payoff (8). But (27) is the replicator dynamic (9). \(\square \)
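Protocol (11) is not restated in this appendix. As a hedged illustration, the sketch below instead assumes a pairwise proportional imitation protocol in the spirit of Schlag (1998): an agent in group i samples an agent in group j with probability \(m^j\) and migrates at rate \([{\bar{F}}^j-{\bar{F}}^i]_+\). Plugging this protocol into the inflow-minus-outflow mean dynamic yields \(m^i({\bar{F}}^i-{\bar{F}})\), which is the replicator dynamic. For the check, the group average payoffs are treated as fixed, hypothetical numbers.

```python
import numpy as np

def switch_rates(m, pi):
    """Hypothetical protocol: rho[i, j] = m^j * max(pi_j - pi_i, 0)."""
    gain = pi[None, :] - pi[:, None]          # gain[i, j] = pi_j - pi_i
    return m[None, :] * np.maximum(gain, 0.0)

def mean_dynamic(m, rho):
    """Mass flowing into each group minus mass flowing out of it."""
    inflow = m @ rho                          # sum_j m^j rho[j, i]
    outflow = m * rho.sum(axis=1)             # m^i sum_j rho[i, j]
    return inflow - outflow

def replicator(m, pi):
    """m^i (pi_i - pibar), with pibar the mass-weighted average payoff."""
    return m * (pi - m @ pi)

# Since max(a, 0) - max(-a, 0) = a, the net flow into group i is
# m^i * sum_j m^j (pi_i - pi_j) = m^i (pi_i - pibar) when the masses sum to 1.
m = np.array([0.5, 0.3, 0.2])     # hypothetical group masses
pi = np.array([2.0, 1.5, 1.0])    # hypothetical group average payoffs
print(mean_dynamic(m, switch_rates(m, pi)))  # approximately [0.175, -0.045, -0.13]
print(replicator(m, pi))                     # the same vector
```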

Proof of Lemma 4.1

By our assumptions about the game (1), \(e_i\) is a strict Nash equilibrium for every \(i\in \{1,2,\ldots ,n\}\). Instantaneous coordination implies that all agents who are in group i at time \(\tau =0\) play \(i\in S\), resulting in the Nash equilibrium \(e_i\). In subsequent rounds of random matching, they continue playing the best response i. The revision protocols in Sect. 3 imply that at most one migrant enters or leaves group i at any given time \(\tau \). When a single agent leaves the group, this does not affect the best response of the agents who remain. Hence, those agents continue to play \(i\in S\). When a single agent arrives, that agent immediately best responds to the current strategy distribution \(e_i\) in the group. The unique best response to \(m^i(\tau )e_i\) is \(i\in S\). Hence, at any given \(\tau \), \(x^i(\tau )=m^i(\tau )e_i\). \(\square \)

Proof of Proposition 4.2

At any time \(\tau \ge 0\), the state of group i is \(m^i(\tau )e_i\). We denote the vector of group masses as \(m(\tau )=(m^1(\tau ),m^2(\tau ),\ldots ,m^n(\tau ))\) and the vector of group states as \(m(\tau )e=(m^1(\tau )e_1,m^2(\tau )e_2,\ldots ,m^n(\tau )e_n)\).

From (6), we now obtain \(F_i^i(m^i(\tau )e_i,m^i(\tau ))=v_{ii}\) and \(F_s^i(m^i(\tau )e_i,m^i(\tau ))=v_{si}\) for all other strategies \(s\ne i\). Therefore, (7) implies \({\bar{F}}^i(m^i(\tau )e_i,m^i(\tau ))=v_{ii}\) and (8) implies \({\bar{F}}(m(\tau )e,m(\tau ))={\bar{v}}(\tau )\) as defined following (14). Hence, the replicator dynamic (9) takes the form

\[\dot{m}^i(\tau )=m^i(\tau )\big (v_{ii}-{\bar{v}}(\tau )\big ),\qquad i\in \{1,2,\ldots ,n\},\]

which is (14). \(\square \)

Proof of Proposition 4.3

By Proposition 4.2, \(m^i(\tau )\) evolves according to the replicator dynamic (14). We can interpret group i as “strategy i” in (14). The payoff of strategy i in (14) is the constant \(v_{ii}\). But \(v_{11}>v_{22}>\cdots >v_{nn}\). Hence, strategy 1 is strictly dominant, and every strategy \(j\ne 1\) is strictly dominated. Under the replicator dynamic, the mass of a strictly dominated strategy goes to zero along every interior trajectory (Theorem 1, Samuelson and Zhang [23]). Therefore, \(m^j(\tau )\rightarrow 0\) for all \(j\ne 1\) and \(m^1(\tau )\rightarrow 1\). \(\square \)
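The sketch below illustrates this argument under hypothetical constant payoffs \(v_{11}=3>v_{22}=2>v_{33}=1\). It uses Euler integration of (14) with \({\bar{v}}=\sum _j m^jv_{jj}\), the mass-weighted average payoff. The result is compared with the exact solution \(m^i(\tau )=m^i(0)e^{v_{ii}\tau }/\sum _j m^j(0)e^{v_{jj}\tau }\), which can be verified by differentiation. The mass of group 1 rises monotonically toward 1, even though group 1 starts as the smallest group.

```python
import numpy as np

def group_replicator_euler(v_diag, m0, dt=1e-3, T=10.0):
    """Euler path of dm^i/dtau = m^i (v_ii - vbar), with vbar = sum_j m^j v_jj."""
    m = np.array(m0, dtype=float)
    path = [m.copy()]
    for _ in range(int(round(T / dt))):
        vbar = m @ v_diag
        m = m + dt * m * (v_diag - vbar)  # total mass stays 1 since sum_i m^i (v_ii - vbar) = 0
        path.append(m.copy())
    return np.array(path)

def group_replicator_exact(v_diag, m0, tau):
    """Closed form m^i(tau) = m^i(0) e^{v_ii tau} / sum_j m^j(0) e^{v_jj tau}."""
    w = np.asarray(m0, dtype=float) * np.exp(v_diag * tau)
    return w / w.sum()

v_diag = np.array([3.0, 2.0, 1.0])   # hypothetical v_11 > v_22 > v_33
m0 = [0.1, 0.3, 0.6]                 # hypothetical initial group masses
path = group_replicator_euler(v_diag, m0)
print(path[-1])                                  # mass concentrated on group 1
print(group_replicator_exact(v_diag, m0, 10.0))
```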

Proof of Proposition 6.1

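As \(v_{11}=V\) and \(v_{22}=V-c\) in (23), and \(m^2=1-m^1\) in a two-strategy game, the average payoff in (14) is

\[{\bar{v}}(\tau )=m^1(\tau )V+\big (1-m^1(\tau )\big )(V-c)=V-c\big (1-m^1(\tau )\big ). \tag{28}\]

Using (28), we can write the rate of change in the mass of the stag group under the replicator dynamic (14) as

\[\dot{m}^1(\tau )=m^1(\tau )\big (V-{\bar{v}}(\tau )\big )=c\,m^1(\tau )\big (1-m^1(\tau )\big ).\]

\(\square \)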
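Consider the two-group replicator dynamic (14) with \(v_{11}=V\), \(v_{22}=V-c\), and \(m^2=1-m^1\). It reduces to the logistic equation \(\dot{m}^1=c\,m^1(1-m^1)\), so only the payoff gap c matters, not V itself. The sketch below compares the logistic closed form \(m^1(\tau )=m^1(0)/\big (m^1(0)+(1-m^1(0))e^{-c\tau }\big )\) with an Euler integration. The example values \(V=2\), \(c=1\), and equal initial masses of 0.5 are illustrative.

```python
import math

def stag_mass_exact(m0, c, tau):
    """Closed-form solution of dm/dtau = c m (1 - m) with m(0) = m0."""
    return m0 / (m0 + (1.0 - m0) * math.exp(-c * tau))

def stag_mass_euler(m0, c, tau, steps=100_000):
    """Euler integration of the same logistic equation, as a cross-check."""
    m, dt = m0, tau / steps
    for _ in range(steps):
        m += dt * c * m * (1.0 - m)
    return m

# V = 2 and V - c = 1, i.e. c = 1, with equal initial group masses.
print(stag_mass_exact(0.5, 1.0, 5.0))  # about 0.9933
print(stag_mass_euler(0.5, 1.0, 5.0))
```

For any c > 0 and \(0<m^1(0)<1\), the right-hand side is positive, so the mass of the stag group increases monotonically toward 1 under instantaneous coordination.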

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lahkar, R. Group Selection Under the Replicator Dynamic. Dyn Games Appl (2024). https://doi.org/10.1007/s13235-024-00556-9

DOI: https://doi.org/10.1007/s13235-024-00556-9