Abstract

We consider goodness-of-fit methods for multivariate symmetric and asymmetric stable Paretian random vectors in arbitrary dimension. The methods are based on the empirical characteristic function and are implemented both in the i.i.d. context as well as for innovations in GARCH models. Asymptotic properties of the proposed procedures are discussed, while the finite-sample properties are illustrated by means of an extensive Monte Carlo study. The procedures are also applied to real data from the financial markets.


1 Introduction

Stable-Paretian (SP) distributions are extremely important from the theoretical point of view as they are closed under convolution, and also they are the only possible limit laws for normalized sums of i.i.d. random variables. In fact, this last feature makes SP laws particularly appealing for financial applications since stock returns and other financial assets often come in the form of sums of a large number of independent terms. Moreover, the empirical density of such assets is leptokurtic and in many cases skewed, thus making the family of SP laws particularly suited for related applications; see for instance the celebrated stability hypothesis that goes back at least to Mandelbrot (1963) and Fama (1965).

The above findings prompted further research on the stochastic properties and inference of SP laws and on their application potential. The reader is referred to Samorodnitsky and Taqqu (1994), Adler et al. (1998), Uchaikin and Zolotarev (1998), Rachev and Mittnik (2000) and Nolan (2020) for an overview on the stochastic theory, statistical inference and applications of SP laws.

In the aforementioned works, statistical inference and applications are mostly restricted to univariate SP laws. The respective topics for SP random vectors are much less explored. In this connection, certain distributional aspects of SP random vectors are discussed in Press (1972b), while Press (1972a) defines moment-type estimators of the parameters of a multivariate SP distribution utilizing the characteristic function (CF). Maximum-likelihood-based estimation is discussed by Ogata (2013) and Nolan (2013), and Bayesian methods by Tsionas (2016); Lombardi and Veredas (2009) use indirect estimation methods, whereas Koutrouvelis (1980), Nolan (2013) and Sathe and Upadhye (2020) consider CF-regression methods. As far as testing is concerned, the only available formal method seems to be the CF-based goodness-of-fit test of Meintanis et al. (2015).

In this article we propose CF-based goodness-of-fit procedures for SP random vectors in the elliptically symmetric case. Moreover, we also consider the asymmetric case, for which, to the best of our knowledge, goodness-of-fit tests have not been considered before. The remainder of the article unfolds as follows. In Sect. 2 we introduce a general goodness-of-fit test for elliptically symmetric SP laws. In Sect. 3 we particularize this test in terms of computation. Section 4 addresses the problem of estimation of the SP parameters and the study of asymptotics of the proposed test, while in Sect. 5 we extend the test to a multivariate GARCH model with SP innovations. The results of an extensive simulation study illustrating the finite-sample properties of the method are presented in Sect. 6. In Sect. 7 the case of asymmetric SP laws is considered, with known as well as unknown characteristic exponent, by means of a different testing procedure that avoids integration over a complicated CF and computation of the corresponding density. The impact of high dimension on the test statistic is also discussed in this section. Applications are given in Sect. 8, and we conclude in Sect. 9 with a discussion. Some technical material is deferred to an online supplement along with additional Monte Carlo results.

2 Goodness-of-fit tests

Let \({\varvec{X}}\) be a random vector in general dimension \(p\ge 1\), with CF \(\varphi ({\varvec{t}})=\mathbb E(e^{{{i}} {\varvec{t}}^\top {\varvec{X}}}), \ {\varvec{t}} \in \mathbb R^p, \ {{i}}=\sqrt{-1}\). Here we consider goodness-of-fit tests for the elliptically symmetric SP law. In this connection we note that SP random vectors are parameterized by the triplet \((\alpha ,{{\varvec{\delta }}}, {\textbf{Q}})\), where \(\alpha \in (0,2]\) denotes the characteristic exponent, and \({{\varvec{\delta }}} \in \mathbb R^p\) and \({\textbf{Q}} \in {\mathcal {M}}^p\), are location vector and dispersion matrix, respectively, with \({\mathcal {M}}^p\) being the set of all symmetric positive definite \((p\times p)\) matrices. On the basis of i.i.d. copies \(({\varvec{X}}_j, \ j=1,\ldots ,n)\) of \({\varvec{X}}\) we wish to test the null hypothesis

$$\begin{aligned} {\mathcal {H}}_0: {\varvec{X}}\sim {\mathcal {S}}_\alpha ({\varvec{\delta }},{\textbf{Q}}), \ \text{for some } {\varvec{\delta }} \in \mathbb R^p \text{ and } {\textbf{Q}} \in {\mathcal {M}}^p, \end{aligned}\tag{1}$$

where we write \({\varvec{X}}\sim {\mathcal {S}}_\alpha ({\varvec{\delta }},{\varvec{Q}})\) to denote that \({\varvec{X}}\) follows a SP law with the indicated parameters. For subsequent use we mention that if \({\varvec{X}} \sim {\mathcal {S}}_\alpha ({\varvec{\delta }},{\varvec{Q}})\), then it admits the stochastic representation

$$\begin{aligned} {\varvec{X}}=A^{1/2}{\varvec{N}}+{\varvec{\delta }}, \end{aligned}\tag{2}$$

where A is a totally skewed to the right SP random variable with characteristic exponent \(\alpha /2\) (\(\alpha <2\)) and \({\varvec{N}}\) is a zero-mean Gaussian vector with covariance matrix \({\varvec{Q}}\), independent of A; see Samorodnitsky and Taqqu (1994, §2.5).

Our test will make use of the fact that if \({\varvec{X}} \sim {\mathcal {S}}_\alpha ({\varvec{\delta }},{\varvec{Q}})\), then the CF of \({\varvec{X}}\) is given by \(\varphi _\alpha ({{\varvec{t}}};{{\varvec{\delta }}},{{\textbf {Q}}})=e^{{{i}} {{\varvec{t}}}^\top {{\varvec{\delta }}}-({{\varvec{t}}}^\top {{\textbf {Q}}} {{\varvec{t}}})^{\alpha /2}} \). The cases \(\alpha =2\) and \(\alpha =1\), respectively, correspond to the most prominent members of the SP family, i.e. the Gaussian and Cauchy distributions, while \(\phi _{\alpha }({\varvec{t}})=\exp ({-\Vert {\varvec{t}}\Vert ^{\alpha }})\), will denote the CF of a spherical SP law, i.e. an SP law with location \({\varvec{\delta }}={\varvec{0}}\) and dispersion matrix \(\textbf{Q}\) set equal to the identity matrix.
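
To make the CF and the stochastic representation above concrete, the following is a minimal sketch, not the authors' implementation. The function names are hypothetical. The positive stable variate is drawn with Kanter's representation, and the scaling \(A=2S\) is an assumption chosen here so that the simulated vector has exactly the CF \(\varphi _\alpha ({\varvec{t}};{\varvec{\delta }},\textbf{Q})\) when \({\varvec{N}}\) has covariance \(\textbf{Q}=\textbf{L}\textbf{L}^\top \); the source does not state the scale of A.

```python
import cmath
import math
import random


def sp_cf(t, delta, Q, alpha):
    """CF exp(i t'delta - (t'Q t)^(alpha/2)) of the elliptically symmetric SP law."""
    p = len(t)
    quad = sum(t[a] * Q[a][b] * t[b] for a in range(p) for b in range(p))
    loc = sum(t[a] * delta[a] for a in range(p))
    return cmath.exp(1j * loc - quad ** (alpha / 2.0))


def positive_stable(a, rng):
    """Kanter's representation: S > 0 with E exp(-s S) = exp(-s^a), 0 < a < 1."""
    u = rng.uniform(0.0, math.pi)
    e = rng.expovariate(1.0)
    return (math.sin(a * u) / math.sin(u) ** (1.0 / a)) * (
        math.sin((1.0 - a) * u) / e
    ) ** ((1.0 - a) / a)


def simulate_sp(n, delta, L, alpha, rng):
    """Draw n vectors delta + sqrt(A) * L z, with L lower triangular and z standard normal.

    Assumption: A = 2 S, S from positive_stable(alpha / 2), so that the CF of a
    draw equals sp_cf(t, delta, L L', alpha). Requires alpha < 2.
    """
    p = len(delta)
    out = []
    for _ in range(n):
        root_a = math.sqrt(2.0 * positive_stable(alpha / 2.0, rng))
        z = [rng.gauss(0.0, 1.0) for _ in range(p)]
        out.append([
            delta[a] + root_a * sum(L[a][b] * z[b] for b in range(a + 1))
            for a in range(p)
        ])
    return out
```

Conditionally on A the vector is Gaussian, so its CF is \(\mathbb E\exp (-A\,{\varvec{t}}^\top \textbf{Q}{\varvec{t}}/2)\), and the Laplace transform of \(A=2S\) turns this into \(\exp (-({\varvec{t}}^\top \textbf{Q}{\varvec{t}})^{\alpha /2})\).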

As it is already implicit in (1) the parameters \(({\varvec{\delta }},\textbf{Q})\) are considered unknown, and hence they will be replaced by estimators \((\widehat{{\varvec{\delta }}}_n,\widehat{{\textbf { Q}}}_n)\) and the test procedure will be applied on the standardized data

$$\begin{aligned} {\varvec{Y}}_j=\widehat{{\textbf{Q}}}_n^{-1/2}({\varvec{X}}_j-\widehat{{\varvec{\delta }}}_n), \ j=1,\ldots ,n. \end{aligned}\tag{3}$$

Specifically for a non-negative weight function \(w(\cdot )\) we propose the test criterion

$$\begin{aligned} T_{n,w}=n\int _{\mathbb R^p} |\phi _n({\varvec{t}})-\phi _{\alpha _0}({\varvec{t}})|^2 \, w({\varvec{t}}) \mathrm{{d}}{\varvec{t}}, \end{aligned}\tag{4}$$

where \(\phi _n({\varvec{t}})=n^{-1} \sum _{j=1}^n \exp ({{{i}} {\varvec{t}} ^\top {\varvec{Y}}_j})\) is the empirical CF computed from \(({\varvec{Y}}_j, \ j=1,\ldots ,n)\). Here the characteristic exponent \(\alpha \) is considered fixed at value \(\alpha =\alpha _0\), while the case of unknown \(\alpha \) will be considered in Sect. 7.
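
The empirical CF is just a sample average of complex exponentials. A minimal sketch follows; the function name is hypothetical and the sample is assumed to be stored as a list of equal-length lists.

```python
import cmath


def empirical_cf(t, sample):
    """Return n^{-1} * sum_j exp(i t'Y_j) for a sample of p-dimensional points."""
    n = len(sample)
    total = 0j
    for y in sample:
        inner = sum(tk * yk for tk, yk in zip(t, y))  # t'Y_j
        total += cmath.exp(1j * inner)
    return total / n
```

For a sample that is symmetric about the origin the imaginary part cancels, which reflects the fact that the spherical SP CF \(\phi _{\alpha }\) is real-valued.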

3 Computational aspects

It is already transparent that the test statistic \(T_{n,w}\) depends on the weight function \(w(\cdot )\), the choice of which we consider in this section. Specifically, from (4) we have by simple algebra

where

3.1 Using the CF of the Kotz-type distribution

We now discuss the computation of the test statistic figuring in (5). In doing so we will make use of an appropriate weight function \(w(\cdot )\) that facilitates explicit representations of the integrals in (6). Specifically we choose \(w({\varvec{t}})=(\Vert {\varvec{t}} \Vert ^2)^{\nu }e^{-(\Vert {\varvec{t}}\Vert ^2)^{\alpha _0/2}}\) as weight function, which for \((r,s)=(1,\alpha _0/2)\) is proportional to the density of the spherical Kotz-type distribution \(c(\Vert {\varvec{x}}\Vert ^2)^{\nu } e^{-r (\Vert {\varvec{x}}\Vert ^2)^s}\), with c a normalizing constant; see Nadarajah (2003). With this weight function the integrals in (6) may be derived as special cases of the integral

with the cases of interest being \(0<s(=\alpha _0/2)\le 1\). In turn this integral can be computed by making use of the CF of the Kotz-type distribution in a form of an absolutely convergent series of the type (see Streit, 1991; Iyengar and Tong, 1989; Kotz and Ostrovskii, 1994) \( I_{\nu ,r}({\varvec{x}};s)=\sum _{k=0}^\infty \psi _k(\Vert {\varvec{x}}\Vert ^2), \) which for selected values of s reduces to a finite sum. For more details we refer to Section S1 of the online supplement.

Given \(I_{\nu ,r}(\cdot ;\cdot )\), the test statistic figuring in (5) may be written as

3.2 Using the inversion theorem

In this section we will use the inversion theorem for CFs in order to compute the integrals defined by (6). Specifically for an absolutely integrable CF \(\varphi (t)\), the inversion theorem renders the density \(f(\cdot )\) corresponding to \(\varphi (\cdot )\) as

$$\begin{aligned} f({\varvec{x}})=\frac{1}{(2\pi )^p}\int _{\mathbb R^p} e^{-{{i}} {\varvec{t}}^\top {\varvec{x}}} \varphi ({\varvec{t}}) \mathrm{{d}}{\varvec{t}}. \end{aligned}\tag{9}$$

In this connection, we start from the expression of the test statistic in (5), and adopt the weight function \(w({\varvec{t}})=e^{-r \Vert {\varvec{t}}\Vert ^{\alpha _0}}\). This choice amounts to taking \(\nu =0\) in the Kotz-type density, which is the same as if we incorporate the CF \(\phi _{\alpha _0}(\cdot )\) of the SP law under test in the weight function.
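
The inversion theorem used in this section can be checked numerically in one dimension. The sketch below is an illustration only: it assumes a real, even CF, so that the inversion integral reduces to \(\pi ^{-1}\int _0^\infty \cos (tx)\varphi (t)\mathrm{{d}}t\), and it uses a plain trapezoidal rule with a hypothetical truncation point `t_max`.

```python
import math


def density_by_inversion(x, cf, t_max=40.0, steps=40000):
    """Approximate f(x) = (1/pi) * int_0^inf cos(t x) cf(t) dt by the trapezoidal rule.

    Valid for a real, even, absolutely integrable CF in dimension p = 1.
    The integral is truncated at t_max, which must be large enough for cf to be negligible.
    """
    h = t_max / steps
    total = 0.5 * (cf(0.0) + math.cos(t_max * x) * cf(t_max))
    for k in range(1, steps):
        t = k * h
        total += math.cos(t * x) * cf(t)
    return total * h / math.pi
```

With `cf = lambda t: math.exp(-abs(t))`, the case \(\alpha =1\), the routine recovers the standard Cauchy density \(1/\{\pi (1+x^2)\}\).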

With this weight function, the statistic figuring in (5), say \(T_{n,r}\), becomes

where by making use of the inversion theorem in (9),

with \(f_\alpha (\cdot )\) being the density of the spherical SP law with CF \(\phi _{\alpha }(\cdot )\).
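
For general \(\alpha _0\) the statistic requires the spherical SP density, but in the Gaussian case \(\alpha _0=2\) every integral is available in closed form, because \(\int _{\mathbb R^p}\cos ({\varvec{t}}^\top {\varvec{x}})e^{-c\Vert {\varvec{t}}\Vert ^2}\mathrm{{d}}{\varvec{t}}=(\pi /c)^{p/2}e^{-\Vert {\varvec{x}}\Vert ^2/(4c)}\). The sketch below covers only this special case, assuming the statistic is \(n\int |\phi _n-\phi _{2}|^2 e^{-r\Vert {\varvec{t}}\Vert ^2}\mathrm{{d}}{\varvec{t}}\) without further normalization. The function name is hypothetical and the data are assumed to be already standardized.

```python
import math


def t_stat_gaussian_case(sample, r):
    """n * int |phi_n(t) - exp(-||t||^2)|^2 exp(-r ||t||^2) dt, in closed form.

    Expanding the squared modulus gives a double sum over pairs, a single sum,
    and a constant, each of which is a Gaussian integral.
    """
    n = len(sample)
    p = len(sample[0])

    def sq_norm(v):
        return sum(c * c for c in v)

    c_pair = (math.pi / r) ** (p / 2.0)            # weight alone
    c_single = (math.pi / (1.0 + r)) ** (p / 2.0)  # weight times CF
    c_const = (math.pi / (2.0 + r)) ** (p / 2.0)   # weight times CF squared

    pair = sum(
        math.exp(-sq_norm([a - b for a, b in zip(yj, yk)]) / (4.0 * r))
        for yj in sample
        for yk in sample
    )
    single = sum(math.exp(-sq_norm(y) / (4.0 * (1.0 + r))) for y in sample)
    return c_pair * pair / n - 2.0 * c_single * single + n * c_const
```

The cost is quadratic in n because of the double sum, and it does not depend on the value of the tuning parameter r.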

4 On estimation of parameters and limit properties of the test

4.1 Estimation of parameters

The parameters \({\varvec{\delta }}\) and \({\textbf {Q}}\) in (1) are assumed unknown and need to be estimated. Seeing that reliable procedures for calculation of stable densities are available, we use maximum likelihood estimation. To avoid searching over the space of positive definite matrices, we use the estimators \(\widehat{{\varvec{\delta }}}_n\) and \(\widehat{\textbf{Q}}_n=\widehat{{\varvec{L}}}_n \widehat{{\varvec{L}}}_n^\top \) given by

where \(\mathbb {L}^p\) denotes the space of lower triangular \(p\times p\) matrices, and, as before, \(f_{\alpha }(\cdot )\) denotes the density of a p-dimensional stable distribution with CF \(\phi _{\alpha _0}(\cdot )\). Initial values for the optimization procedure are obtained using projection estimators of \({\varvec{\delta }}\) and \(\textbf{Q}\) as outlined in Nolan (2013, Section 2.3).
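
The reparameterization through a lower triangular factor can be sketched as follows. This is an illustration of the idea only: the packing order of the parameter vector `theta` is an assumption, and the likelihood itself (which needs the stable density) is not shown.

```python
def unpack_parameters(theta, p):
    """Map an unconstrained vector to (delta, L, Q) with Q = L L'.

    Assumed layout: the first p entries are the location vector, the remaining
    p(p+1)/2 entries fill the lower triangle of L row by row.
    """
    delta = list(theta[:p])
    L = [[0.0] * p for _ in range(p)]
    idx = p
    for a in range(p):
        for b in range(a + 1):
            L[a][b] = theta[idx]
            idx += 1
    # Q = L L' is symmetric by construction and positive definite
    # whenever all diagonal entries of L are nonzero.
    Q = [
        [sum(L[a][c] * L[b][c] for c in range(p)) for b in range(p)]
        for a in range(p)
    ]
    return delta, L, Q
```

An optimizer can then search over `theta` freely, since every candidate yields an admissible dispersion matrix up to the nonzero-diagonal condition.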

4.2 Limit null distribution and consistency

We present here the main elements involved in the limit behavior of the test statistic \(T_{n,w}\). In this connection and despite the fact that, as already emphasized, it is computationally convenient to use a weight function that is proportional to the density of a spherical Kotz-type distribution, our limit results apply under a general weight function satisfying certain assumptions and, under given regularity conditions, with arbitrary estimators of the distributional parameters.

Specifically, we assume that the weight function satisfies \(w({\varvec{t}})>0\) (apart from a set of measure zero), \(w({\varvec{t}})=w(-{\varvec{t}})\) and \(\int _{\mathbb R^p} w({\varvec{t}}) \mathrm{{d}}{\varvec{t}}<\infty \).

We also suppose that the estimators figuring in the standardization defined by (3) admit the Bahadur-type asymptotic representations

and

where \(({\varvec{\delta }}_0,\textbf{Q}_0)\) denote true values, with \({\varvec{X}}_1\sim {\mathcal {S}}_{\alpha _0}({\varvec{\delta }}_0,\textbf{Q}_0)\) and \(\left( {{\varvec{\ell }}}_\mathbf{\delta }({{\varvec{X}}}_1),\mathbf{{\ell }}_\textbf{Q}({{\varvec{X}}}_1)\right) \) satisfying \(\mathbb E\left( {\varvec{\ell }}_{{\varvec{\delta }}},{\varvec{\ell }}_\textbf{Q}\right) =(\textbf{0},\textbf{0})\) and \(\mathbb E\left\| {\varvec{\ell }}_{{\varvec{\delta }}}+{\varvec{\ell }}_\textbf{Q}\right\| ^2<\infty \).

Then we may write from (4)

where \(Z_0(\cdot )\) is a zero-mean Gaussian process with covariance kernel, say, \(K({\varvec{s}},{\varvec{t}})\). In turn the law of \(T_w\) is that of \(\sum _{j=1}^\infty \lambda _j {\mathcal {N}}^2_j\), where \(({\mathcal {N}}_j, \ j\ge 1)\) are i.i.d. standard normal random variables. The covariance kernel \(K(\cdot ,\cdot )\) of the limit process \(Z_0(\cdot )\) enters the distribution of \(T_w\) via the eigenvalues \(\lambda _1\le \lambda _2\le \cdots \) and corresponding eigenfunctions \(f_1,f_2,\ldots \), through the integral equation

In this connection the maximum likelihood estimators defined by (12) satisfy certain equivariance/invariance properties (refer to Section S2 of the online supplement), and as a consequence the resulting test statistic is affine invariant. In this case we may set \(({\varvec{\delta }}_0,\textbf{Q}_0)\) equal to the zero vector and identity matrix, respectively, thus rendering the limit null distribution free of true parameter values; see Ebner and Henze (2020) and Meintanis et al. (2015).

Moreover the standing assumptions imply the strong consistency of the new test under fixed alternatives.

Proposition 4.1

Suppose that under the given law of \({\varvec{X}}\) the estimators of the parameters \({\varvec{\delta }}\) and \(\textbf{Q}\) satisfy, \((\widehat{{\varvec{\delta }}}_n,\widehat{\textbf{Q}}_n) \rightarrow ({\varvec{\delta }}_X, \mathbf{{Q}}_{{\varvec{X}}}) \in \mathbb R^p\times {\mathcal {M}}^p\), a.s. as \(n\rightarrow \infty \). Then we have

a.s. as \(n\rightarrow \infty \).

Proof

Recall from (4) that

Now the strong uniform consistency of the empirical CF in bounded intervals (see Csörgő, 1981) entails

a.s. as \(n\rightarrow \infty \). Consequently, since \(|\phi _n({\varvec{t}})-\phi _{\alpha _0}({\varvec{t}})|^2\le 4\), an application of Lebesgue’s theorem of dominated convergence on (14) yields (13). \(\square \)

Since \(w>0\), \({\mathcal {T}}_{w}\) is positive unless

identically in \({\varvec{t}}\), which is equivalent to \(\varphi ({\varvec{t}})=\varphi _{\alpha _0}({{\varvec{t}}};{{\varvec{\delta }}}_{{\varvec{X}}},{{\textbf {Q}}}_{{\varvec{X}}})\), \({\varvec{t}} \in \mathbb R^p\), i.e. \({\mathcal {T}}_{w}>0\) unless the CF of \({\varvec{X}}\) coincides with the CF of a SP law with \(\alpha =\alpha _0\) and \(({{\varvec{\delta }}}, \mathbf{{Q}})=({{\varvec{\delta }}}_{{\varvec{X}}},\mathbf{{Q}}_{{\varvec{X}}})\), and thus by the uniqueness of CFs, the test which rejects the null hypothesis \({\mathcal {H}}_0\) in (1) for large values of \(T_{n,w}\) is consistent against each fixed alternative distribution.

The above limit results have been developed in a series of papers, both in the current setting as well as in related settings, and with varying conditions on the weight function and the family of distributions under test; see for instance Henze and Wagner (1997), Gupta et al. (2004), Meintanis et al. (2015), Hadjicosta and Richards (2020a), and Ebner and Henze (2020). In this regard, the solution of the above integral equation, and thus the approximation of the limit null distribution of \(T_{n,w}\), is extremely complicated, and in fact constitutes a research problem in itself. This line of research has been followed by a few authors; we refer to Matsui and Takemura (2008), Hadjicosta and Richards (2020b), Meintanis et al. (2023) and Ebner and Henze (2023). In these works, the infinite sum distribution of \(T_w\) is approximated by a corresponding finite sum employing numerically computed eigenvalues, and then large-sample critical points for \(T_{n,w}\) are found by Monte Carlo. It should be noted that such approximations are specific to several features of the problem, namely the distribution under test, the type of estimators of the distributional parameters, and the weight function employed, and thus have to be performed on a case-by-case basis. A different, more heuristic approach is moment-matching between the first few moments of \(T_w\) (computed numerically) and a known distribution, like the gamma distribution or one from a Pearson family of distributions; see Henze (1990, 1997) and Pfister et al. (2018), while yet another, Satterthwaite-type, approximation is studied by Lindsay et al. (2008).
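
The finite-sum approximation described above can be sketched as follows, assuming that a truncated list of eigenvalues has already been computed numerically. The eigenvalues passed in are hypothetical inputs; obtaining them is the hard part and is not addressed here.

```python
import math
import random


def weighted_chisq_quantile(eigenvalues, level, draws, rng):
    """Monte Carlo quantile of sum_j lambda_j * N_j^2 for a finite list of eigenvalues.

    Each draw uses fresh i.i.d. standard normal variables N_j; the returned
    value is the empirical `level`-quantile over `draws` simulated sums.
    """
    sims = sorted(
        sum(lam * rng.gauss(0.0, 1.0) ** 2 for lam in eigenvalues)
        for _ in range(draws)
    )
    idx = min(draws - 1, max(0, math.ceil(level * draws) - 1))
    return sims[idx]
```

With the single eigenvalue 1 the sum is a chi-square variable with one degree of freedom, which gives a simple sanity check of the routine.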

The validity and usefulness of the above approximation methods notwithstanding, we hereby favor Monte Carlo simulation and bootstrap resampling for the computation of critical points and for test implementation, not only because these are large-sample approximations performed mostly in the univariate setting and thus inappropriate for small sample size n and/or dimension \(p>1\), but more importantly because, in the case of GARCH-type observations considered in the next section, the finite-sample counterpart of this distribution may even involve true parameter values; see for instance Henze et al. (2019).

5 The case of the stable-GARCH model

Assume that observations \(({\varvec{X}}_{j}, \ j=1,\ldots ,n)\) arise from a multivariate GARCH model defined by

$$\begin{aligned} {\varvec{X}}_j=\textbf{Q}_j^{1/2} {\varvec{\varepsilon }}_j, \ j=1,\ldots ,n, \end{aligned}\tag{15}$$

where \(({\varvec{\varepsilon }}_{j}, j=1,\dots ,n)\) is a sequence of i.i.d. p-dimensional random variables with mean zero and identity dispersion matrix, and \(\textbf{Q}_{j}:=\textbf{Q}({\varvec{X}}_j\vert \mathbb I_{j-1})\), with \(\mathbb I_{j}\) denoting the information available at time j, is a symmetric positive definite matrix of dimension \((p\times p)\). We wish to test the null hypothesis stated in (1) for the innovations \({\varvec{\varepsilon }}_j\) figuring in model (15). Note that in view of (2), \(\textbf{Q}_j\) may be interpreted as the conditional covariance matrix of the corresponding latent Gaussian vector \({\varvec{N}}\).

As GARCH model we adopt the constant conditional correlation (CCC) specification (see, for instance, Silvennoinen and Teräsvirta, 2009)

where \({\varvec{X}}^{(2)}_{j}=(X^2_{1,j},\ldots ,X^2_{p,j})^\top \), \({\textbf{D}}_j=\mathrm{{diag}}(q_{1,j},\ldots , q_{p,j})\), \({\varvec{\mu }}\) is a \((p\times 1)\) vector of positive elements, \(\textbf{R}\) is a correlation matrix, and \({\textbf{A}}_k\) and \({\textbf{B}}_k\) are \((p\times p)\) matrices with nonnegative elements. Note that the vector \({\varvec{\vartheta }}\), which incorporates all parameters figuring in (16), is of dimension \(p+p^2(\kappa _x+\kappa _q)+(p(p-1)/2)\).
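
The parameter count grows quickly with the dimension. A small helper, with a hypothetical name, makes the arithmetic explicit: p intercepts, \(p^2\) entries for each of the \(\kappa _x+\kappa _q\) coefficient matrices, and \(p(p-1)/2\) free correlations.

```python
def ccc_garch_param_count(p, kappa_x, kappa_q):
    """Dimension of the CCC-GARCH parameter vector: p + p^2 (kappa_x + kappa_q) + p (p - 1) / 2."""
    intercepts = p
    coefficient_entries = p * p * (kappa_x + kappa_q)
    correlations = p * (p - 1) // 2
    return intercepts + coefficient_entries + correlations
```

For a CCC-GARCH(1, 1) specification this gives 42 parameters when \(p=4\) and 93 parameters when \(p=6\).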

Now let \({\varvec{\Upsilon }}_{j,\kappa _x,\kappa _q}=\left( {\varvec{X}}^\top _{j-1},\ldots ,{\varvec{X}}^\top _{j-\kappa _x},{\varvec{q}}^\top _{j-1},\ldots , {\varvec{q}}^\top _{j-\kappa _q}\right) ^\top \) be a vector of dimension \(p\times (\kappa _x+\kappa _q)\) associated with the model defined by (15) and (16). Since the innovations are unobserved, any test should be applied on the corresponding residuals,

where \({\varvec{\widehat{\vartheta }}}_n\) is a consistent estimator of \({\varvec{ \vartheta }}\) that uses \({\varvec{\Upsilon }}_{j,\kappa _x,\kappa _q}\) as input data. We also employ initial values in order to start the estimation process. As an estimator of \({{\varvec{\vartheta }}}\) we use the equation-by-equation (EbE) estimators proposed by Francq and Zakoïan (2016). The reader is referred to Section S3 of the online supplement for more details on the EbE estimator.

6 Numerical study

We now turn to a simulation study to demonstrate the performance of the new tests under various alternatives, specifically:

(i) elliptically symmetric SP distributions, denoted by \(S_{\alpha }\);
(ii) Student t-distributions with \(\nu \) degrees of freedom, denoted by \(t_\nu \);
(iii) skew normal distributions with skewness parameter \(\nu \), denoted by \({\text {SN}}_\nu \);
(iv) skew Cauchy distributions with skewness parameter \(\nu \), denoted by \({\text {SC}}_\nu \).

The cases \(p=4,6\) are considered for the dimension of the distribution. Throughout, we choose \({{\varvec{\delta }}}={{\varvec{0}}}\) and \({\textbf{Q}}={\textbf{I}}_p\), where \({\textbf{I}}_p\) denotes the \(p\times p\) identity matrix. Recall in this connection that our method requires the computation of the density of a spherical SP law. Details on this computation are included in Section S4 of the online supplement.

6.1 Monte Carlo results for i.i.d. data

We focus on our test criterion in (10) that employs \(\phi _{\alpha _0}(\cdot )\) in the weight function, say \(T_r\) for simplicity, and we consider several values of the tuning parameter \(r>0\). Corresponding results for the test in (8) that employs the Kotz-type weight function are included in Tables S8 and S9 of the online supplement.

For comparison, we include results obtained when using the test of Meintanis et al. (2015) using the Gaussian weight function \(\exp (-\Vert {\varvec{t}}\Vert ^2)\). The test, denoted by \(M_a\) in the tables, depends on a tuning parameter denoted by a for which we consider the choices \(a=4,6,10\) and \(a=15\). As pointed out by the authors, the number of operations required to compute their test statistic is in the order of \(n^{2a}\) (for integer-valued a) and becomes time-consuming for larger values of n and a. We therefore use the Monte Carlo approach suggested by the authors to approximate the value of the test statistic (using 1 000 replications for each approximation); see p. 180 of Meintanis et al. (2015). When testing for multivariate normality (i.e. \(\mathcal {H}_0\) with \(\alpha =2\)), we consider the test of Henze et al. (2019), denoted by HJM in the tables, with weight function \(\exp (-1.5\Vert {\varvec{t}}\Vert ^2)\), which yielded good results in the original paper.

The rejection percentages of the tests are shown in Tables 1, 2, 3, and 4. All simulation results are based on 1000 independent Monte Carlo iterations, and a significance level of 10% is used throughout.

We first consider the case where we test \(\mathcal H_0\) with \(\alpha =2\), i.e. when testing for departures from multivariate normality. Table 1 shows that, when heavier-tailed symmetric Paretian alternatives are considered, the newly proposed tests based on \(T_r\) are more powerful than the existing tests \(M_a\) of Meintanis et al. (2015), and have power slightly lower than but comparable to the test HJM of Henze et al. (2019). In light of the above-mentioned computational complexity of the existing tests, this gain in power makes the new tests attractive for implementation in practice. Moreover, the favorable power is visible for all the considered choices of the tuning parameter r, the choice of which, as opposed to a in \(M_a\), has no significant impact on computational complexity. Finally, as expected, the power of the new tests increases with the sample size. Similar conclusions can also be drawn in the case of elliptically symmetric t alternatives (see Table S2 of the online supplement).

Considering skew normal alternatives, even more favorable behavior can be observed in the results shown in Table 2. Notice that the tests \(M_a\) and HJM seem to have very low power against skew normal alternatives, which is not the case with the newly proposed tests.

We now shift our attention to the case of testing \(\mathcal H_0\) with \(\alpha =1.8\). Table 3 shows that, compared to the existing tests, the new tests are quite powerful against heavier-tailed alternatives, i.e. alternatives with stability index less than 1.8. Despite the evident dependence of the performance of the new tests on the tuning parameter r, we note that the new tests are competitive with the existing tests in most cases, and significantly outperform them for most choices of r. For lighter-tailed alternative distributions, the new tests exhibit some under-rejection for certain choices of r. Nevertheless, the problem seems to disappear as the sample size is increased.

Another advantageous feature of tests based on \(T_r\) is that the high power is also visible in the higher-dimensional setting where \(p=6\). In fact, in this case the power of the tests seems to increase as the dimension is increased from \(p=4\) to \(p=6\). In contrast, the tests \(M_a\) exhibit a clear decrease in power as the dimension p is increased.

We finally consider testing \(\mathcal H_0\) with \(\alpha =1\), i.e. when testing for departures from multivariate Cauchy. Overall, in agreement with previous observations, Table 4 shows that the new tests seem to be competitive in terms of power, with the existing tests having some advantage when the true data-generating distribution has a stability index greater than 1. However, this advantage in power disappears when considering the higher-dimensional case \(p=6\). Similar behavior can be seen in the case of t and skew Cauchy alternatives (see Tables S6 and S7 in the supplement).

6.2 Monte Carlo results for GARCH data

We consider a CCC-GARCH(1, 1) model as defined in (16) with \(\kappa _x=\kappa _q=1\). As parameters, we take \({\textbf{A}}_1=0.2{\textbf{I}}_p\), \({\textbf{B}}_1=0.3{\textbf{I}}_p\), and correlation matrix \(\textbf{R}\) with all off-diagonal entries set to 0.5.

To test whether the innovations \({{\varvec{\varepsilon }}}_j\) were generated by an elliptically symmetric SP law, we use the statistic in (10) applied to the residuals \(\widetilde{{\varvec{\varepsilon }}}_j\) defined in (17). Denote the value of the test statistic by \(T_{r}:=T_{r}(\widetilde{{\varvec{\varepsilon }}}_1,\ldots ,\widetilde{{\varvec{\varepsilon }}}_n)\).

We use the following bootstrap scheme to determine critical values:

1. Independently generate innovations \({{\varvec{\varepsilon }}}_1^*,\ldots ,{{\varvec{\varepsilon }}}_n^*\) from the SP law \(S_{\alpha _0}({{\varvec{0}}},{\textbf{I}}_p)\).
2. Construct a bootstrap sample \({{\varvec{X}}}_1^*,\ldots ,{{\varvec{X}}}_n^*\) using the recursive relation \({{\varvec{X}}}_j^*=\widetilde{\textbf{ Q}}_j^{1/2}{{\varvec{\varepsilon }}}_j^*\), \(j=1,\ldots ,n\), with \(\widetilde{\textbf{ Q}}_j\) as defined in (17).
3. Based on the sample \({{\varvec{X}}}_1^*,\ldots ,{{\varvec{X}}}_n^*\), fit the CCC-GARCH model in (16) to obtain estimates \(\widetilde{\textbf{Q}}_j^*\), \(j=1,\ldots ,n\), and recover the bootstrap residuals \(\widetilde{{\varvec{\varepsilon }}}_j^*=(\widetilde{\textbf{Q}}_j^{*})^{-1/2}{{\varvec{X}}}_j^*\), \(j=1,\ldots ,n\).
4. A bootstrap version of the statistic is given by \(T_{r}^{*}=T_{r}(\widetilde{{\varvec{\varepsilon }}}_1^*,\ldots ,\widetilde{{\varvec{\varepsilon }}}_n^*)\).

Steps 1–4 are repeated many times, say B, to obtain realizations \(T_{r}^{*}(1),\ldots ,T_{r}^{*}(B)\) of the bootstrap statistic. The null hypothesis is rejected at significance level \(\xi \) whenever \(T_{r}\) exceeds the (\(1-\xi \))-level empirical quantile of the bootstrap realizations \(\{T_{r}^{*}(b)\}_{b=1}^B\). In the Monte Carlo simulations that follow, instead of drawing B bootstrap samples, we employ the warp speed method of Giacomini et al. (2013) which involves drawing only one bootstrap sample for each Monte Carlo iteration.
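
The decision rule based on the bootstrap realizations can be sketched as follows. The function names are hypothetical, and the empirical quantile is taken here as the order statistic of rank \(\lceil (1-\xi )B\rceil \), which is one common convention among several.

```python
import math


def bootstrap_critical_value(boot_stats, xi):
    """Empirical (1 - xi)-level quantile of the bootstrap statistics."""
    ordered = sorted(boot_stats)
    b = len(ordered)
    # Rank of the order statistic; rounding guards against floating-point noise.
    rank = math.ceil(round((1.0 - xi) * b, 10))
    rank = min(max(rank, 1), b)
    return ordered[rank - 1]


def reject_null(t_obs, boot_stats, xi):
    """Reject when the observed statistic exceeds the bootstrap critical value."""
    return t_obs > bootstrap_critical_value(boot_stats, xi)
```

In a warp-speed Monte Carlo study the list `boot_stats` would instead collect one bootstrap statistic per Monte Carlo iteration, and the same quantile would be compared against all observed statistics.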

Table 5 (power against symmetric SP laws) exhibits favorable power properties of the newly proposed test, similar to those observed in the i.i.d. case. Notice that the tests all have empirical size close to the nominal level of 10% when using critical values obtained by means of the bootstrap scheme given above. Also see Tables S13 and S14 of the online supplement.

Table S12 (power against skew normal alternatives) shows that the new tests are especially competitive in terms of power when the true innovation distribution is not elliptically symmetric. Finally we mention that although the tests of Meintanis et al. (2015) are competitive when the innovations have an elliptically symmetric Student t-distribution (see Table S11), the slight advantage in power disappears rapidly as the dimension p increases.

7 Testing asymmetric SP laws

We now shift our focus to the more general case of testing whether multivariate observations from \({\varvec{X}}\) originate from a SP law, which need not necessarily be elliptically symmetric. In this connection note that the general multivariate SP law depends on a location vector \({\varvec{\delta }}\in \mathbb {R}^p\) and a spectral measure \(\Gamma (\cdot )\) on the unit sphere \(S^p\). Accordingly we wish to test the null hypothesis

where we write \({\varvec{X}} \sim \widetilde{\mathcal {S}}_\alpha ({\varvec{\delta }},\Gamma )\), when \({\varvec{X}}\) follows a skew SP law with the indicated parameters. We will be considering the case of \(\widetilde{\mathcal H}_{0}\) for fixed \(\alpha =\alpha _0\) as well as the case of testing the null hypothesis \(\widetilde{\mathcal {H}}_0\) with an unspecified \(\alpha \).

In this connection note that if \({\varvec{X}} \sim \widetilde{\mathcal {S}}_\alpha ({\varvec{\delta }}, \Gamma )\), then the CF of \({\varvec{X}}\) is given by

with

See, e.g., Nolan et al. (2001).

In testing the null hypothesis \(\widetilde{\mathcal H}_{0}\), we consider an entirely different idea for the test statistic first put forward by Chen et al. (2022). Specifically, we consider a test statistic formulated as a two-sample test between the original data \(\mathcal {X}_n=({\varvec{X}}_j, \ j=1,\ldots ,n)\) and artificial data \(\mathcal {X}_{0n}=({\varvec{X}}_{0j}, \ j=1,\ldots ,n)\) generated under the null hypothesis \(\widetilde{\mathcal {H}}_0\). More precisely, we propose the test criterion

where \(\varphi _n({\varvec{t}})=n^{-1} \sum _{j=1}^n e^{{{i}} {\varvec{t}} ^\top {\varvec{X}}_j}\) is the empirical CF of the data at hand, while \(\varphi _{0n}({\varvec{t}})=n^{-1} \sum _{j=1}^n e^{{{i}} {\varvec{t}} ^\top {\varvec{X}}_{0j}}\) is the empirical CF computed from the artificial data set \(\mathcal {X}_{0n}\) generated under the null hypothesis \(\widetilde{\mathcal H}_{0}\) with \(\Gamma \) and \({\varvec{\delta }}\) estimated from the original observations \(\mathcal {X}_{n}\).

By straightforward computations, we obtain

where \(I_w({\varvec{x}})=\int _{\mathbb R^p} \cos ({\varvec{t}}^\top {\varvec{x}})w({\varvec{t}})\mathrm{{d}}{\varvec{t}}\), as defined in (6). Clearly then the numerical approaches of Sect. 3 are no longer required and the simplicity of this test lies in the fact that in (20) only the computation of \(I_w(\cdot )\) is needed. Specifically the need for tailor-made weight functions such as those employed in Sect. 3 is circumvented. Furthermore we no longer need to compute the density of the underlying SP law as in (11). In this connection, suppose that the weight function \(w({\varvec{x}})\) figuring in \(I_w({\varvec{x}})\) above is chosen as the density of any spherical distribution in \(\mathbb R^p\). Then the integral \(I_w({\varvec{x}})\) gives the CF corresponding to this spherical distribution at the point \({\varvec{x}}\). Furthermore it is well known that this CF may be written as \(\Psi (\Vert {\varvec{x}} \Vert ^2)\), where \(\Psi (\cdot )\) is called the “kernel” of the specific family of spherical distributions. Thus the test statistic figuring in (20) may be written as

where the kernel \(\Psi (\cdot )\) can be chosen by the practitioner so that the resulting expression in (21) is as simple as possible. In this connection, as already clear from the preceding paragraphs, a simple kernel is the kernel of the spherical SP family of distributions with \(\Psi (\xi )=e^{-r \xi ^{\alpha /2}}, \ r>0, \ \alpha \in (0,2]\).
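
With the spherical SP kernel the two-sample criterion reduces to sums of kernel evaluations at squared distances. The following is a minimal sketch under stated assumptions: the function names are hypothetical, both samples have the same size n, and the overall factor \(1/n\) corresponds to a criterion of the form \(n\int |\varphi _n-\varphi _{0n}|^2 w\), which may differ from the source's normalization by a constant.

```python
import math


def sp_kernel(xi, r, alpha):
    """Kernel Psi(xi) = exp(-r * xi^(alpha/2)) of the spherical SP family."""
    return math.exp(-r * xi ** (alpha / 2.0))


def two_sample_statistic(x, x0, r, alpha):
    """(1/n) * sum_{j,k} [Psi(|Xj-Xk|^2) + Psi(|X0j-X0k|^2) - 2 Psi(|Xj-X0k|^2)]."""
    n = len(x)
    if len(x0) != n:
        raise ValueError("both samples must have the same size")

    def sq_dist(u, v):
        return sum((a - b) ** 2 for a, b in zip(u, v))

    total = 0.0
    for j in range(n):
        for k in range(n):
            total += sp_kernel(sq_dist(x[j], x[k]), r, alpha)
            total += sp_kernel(sq_dist(x0[j], x0[k]), r, alpha)
            total -= 2.0 * sp_kernel(sq_dist(x[j], x0[k]), r, alpha)
    return total / n
```

The statistic vanishes when the two samples coincide and is nonnegative in general, since it equals a weighted squared distance between two empirical CFs.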

Implementation of the test however relies on estimation of the spectral measure \(\Gamma (\cdot )\) appearing in (18). Motivated by a result of Byczkowski et al. (1993, Theorem 1), we assume that \(\Gamma (\cdot )\) can be approximated by the discrete spectral measure \( \widetilde{\Gamma }(\cdot ) = \sum _{k=1}^K \gamma _k \mathrm{{I}}_{{\varvec{s}}_k}(\cdot ), \) with weights \(\gamma _k\) corresponding to mass points \({\varvec{s}}_k\in S^p\), \(k=1,\ldots ,K\), and \(\mathrm{{I}}_{{\varvec{s}}_k}(\cdot )\) being the indicator function. So in order to apply the test, we use the stochastic representation of \({\varvec{X}}_0\sim \widetilde{\mathcal {S}}_{\alpha _0}({\varvec{\delta }},\widetilde{\Gamma })\) as

where \((A_k, \ k=1,\ldots ,K)\) are i.i.d. (univariate) SP variates following a totally skewed to the right SP law with \(\alpha =\alpha _0\). In turn this representation is used in order to generate observations \(({\varvec{X}}_{0j}, \ j=1,\ldots ,n)\) under the null hypothesis \(\widetilde{\mathcal {H}}_0\) with \({\varvec{\delta }}\) and \((\gamma _k, \ k=1,\ldots ,K)\) replaced by appropriate estimates \(\widehat{{\varvec{\delta }}}\) and \((\widehat{\gamma }_k, \ k=1,\ldots ,K)\), respectively. The estimates \(\widehat{{\varvec{\delta }}}\) and \((\widehat{\gamma }_k, \ k=1,\ldots ,K)\) are obtained as shown in Nolan et al. (2001) and outlined in Section S5 of the online supplement.
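
Generation of artificial data from a discrete spectral measure can be sketched as follows. Two assumptions are made, neither of which is stated explicitly in the text above: the representation is taken to be \({\varvec{X}}_0={\varvec{\delta }}+\sum _{k=1}^K \gamma _k^{1/\alpha _0}A_k{\varvec{s}}_k\) for \(\alpha _0\ne 1\), and the totally skewed variates follow \(S_{\alpha _0}(1,1,0)\) in the parameterization of Samorodnitsky and Taqqu (1994), drawn by the Chambers-Mallows-Stuck method. All function names are hypothetical.

```python
import cmath
import math
import random


def totally_skewed_stable(alpha, rng):
    """Chambers-Mallows-Stuck draw from S_alpha(1, 1, 0), alpha != 1."""
    v = rng.uniform(-math.pi / 2.0, math.pi / 2.0)
    w = rng.expovariate(1.0)
    tan_term = math.tan(math.pi * alpha / 2.0)
    b = math.atan(tan_term) / alpha
    s = (1.0 + tan_term ** 2) ** (1.0 / (2.0 * alpha))
    return (
        s
        * math.sin(alpha * (v + b))
        / math.cos(v) ** (1.0 / alpha)
        * (math.cos(v - alpha * (v + b)) / w) ** ((1.0 - alpha) / alpha)
    )


def simulate_discrete_sp(n, alpha, delta, gammas, points, rng):
    """Draw n vectors delta + sum_k gamma_k^(1/alpha) * A_k * s_k (assumed form)."""
    sample = []
    for _ in range(n):
        x = list(delta)
        for g, s in zip(gammas, points):
            a = g ** (1.0 / alpha) * totally_skewed_stable(alpha, rng)
            for i in range(len(x)):
                x[i] += a * s[i]
        sample.append(x)
    return sample


def discrete_sp_cf(t, alpha, delta, gammas, points):
    """CF implied by the assumed representation, used here only as a check."""
    tan_term = math.tan(math.pi * alpha / 2.0)
    exponent = 1j * sum(a * b for a, b in zip(t, delta))
    for g, s in zip(gammas, points):
        u = sum(a * b for a, b in zip(t, s))
        if u != 0.0:
            sign = 1.0 if u > 0.0 else -1.0
            exponent -= g * abs(u) ** alpha * (1.0 - 1j * sign * tan_term)
    return cmath.exp(exponent)
```

Comparing the empirical CF of a large simulated sample with `discrete_sp_cf` is a convenient way to validate the generator before it is used to produce the artificial data \(\mathcal {X}_{0n}\).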

7.1 Monte Carlo results

We now turn to a simulation study to demonstrate the performance of the test based on (20), say \(\widetilde{T}_{\Psi }\) for simplicity, in the bivariate case. We specifically consider the following alternative distributions:

(i) the asymmetric SP law \(\widetilde{\mathcal {S}}_\alpha ({\varvec{\delta }},\Gamma )\) with CF defined in (18), where we take \({\varvec{\delta }}={\varvec{0}}\) and \(\Gamma (\cdot )=\sum _{k=1}^L \gamma _k \mathrm{{I}}_{{\varvec{s}}_k}(\cdot )\), with \(L=5\), \((\gamma _1,\ldots ,\gamma _L) = (0.1,0.3,0.1,0.4,0.1)\) and

$$\begin{aligned} {\varvec{s}}_k = \left[ \cos \left( \frac{2\pi (k-1)}{L}\right) , \sin \left( \frac{2\pi (k-1)}{L}\right) \right] ^\top , \qquad k=1,\ldots ,L; \end{aligned}$$(23)

(ii) spherically symmetric SP distributions, denoted by \(S_{\alpha }\).
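
The discrete spectral measure of alternative (i) can be tabulated directly from (23). The short Python sketch below does so for \(L=5\); the variable and function names are illustrative, and the antipode check is merely one convenient way of making the asymmetry of \(\Gamma \) visible (a symmetric spectral measure would place equal mass at \({\varvec{s}}\) and \(-{\varvec{s}}\)).

```python
import math

L = 5
gammas = [0.1, 0.3, 0.1, 0.4, 0.1]  # weights gamma_1, ..., gamma_L

# Mass points s_k of (23), equally spaced on the unit circle.
points = [
    (math.cos(2.0 * math.pi * (k - 1) / L), math.sin(2.0 * math.pi * (k - 1) / L))
    for k in range(1, L + 1)
]

def mass_at(s, tol=1e-9):
    """Total weight that the discrete measure places at the point s."""
    return sum(
        g for g, q in zip(gammas, points)
        if abs(q[0] - s[0]) < tol and abs(q[1] - s[1]) < tol
    )

# Mass found at the antipode -s_k of each mass point s_k.
antipodal_mass = [mass_at((-s[0], -s[1])) for s in points]

for k, (g, s) in enumerate(zip(gammas, points), start=1):
    print(f"k={k}  gamma={g:.1f}  s=({s[0]: .4f}, {s[1]: .4f})")
print("mass at antipodes:", antipodal_mass)
```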

The statistic \(\widetilde{T}_{\Psi }(\mathcal {X}_{n};\mathcal {X}_{0n})\) in (21) is subject to randomness introduced by the artificial data \(\mathcal {X}_{0n}\). To address this randomness in practical implementation, we follow Chen et al. (2022) and base our test on the statistic

where, for each \(r=1,\ldots ,m\), the set \(\mathcal {X}_{0n}^r\) is a random sample of observations generated from the SP law \(\widetilde{\mathcal {S}}_{\alpha _0}(\widehat{{\varvec{\delta }}},\widehat{\Gamma })\), i.e. a random sample satisfying \(\widetilde{\mathcal {H}}_0\).

Critical values of the test can be obtained using the following parametric bootstrap scheme:

1. Generate a bootstrap sample \(\mathcal {X}_{n}^*\) from the SP law \(\widetilde{\mathcal {S}}_{\alpha _0}(\widehat{{\varvec{\delta }}},\widehat{\Gamma })\).

2. Calculate bootstrap estimates \(\widehat{{\varvec{\delta }}}^*\) and \(\widehat{\Gamma }^*\) from \(\mathcal {X}_{n}^*\) and generate m random samples \(\mathcal {X}_{0,n}^{*r}\), \(r=1,\ldots ,m\), from the SP law \(\widetilde{\mathcal {S}}_{\alpha _0}(\widehat{{\varvec{\delta }}}^*,\widehat{\Gamma }^*)\).

3. A bootstrap version of the statistic is \( \widetilde{T}_{\Psi }^{*(m)} = m^{-1}\sum _{r=1}^m \widetilde{T}_{\Psi }(\mathcal {X}_{n}^*;\mathcal {X}_{0,n}^{*r}). \)

Steps 1–3 are repeated many times, say B, to obtain realizations \(\widetilde{T}_{\Psi }^{*(m)}(1),\ldots ,\widetilde{T}_{\Psi }^{*(m)}(B)\) of the bootstrap statistic. The null hypothesis is rejected at significance level \(\xi \) whenever the statistic \(\widetilde{T}_{\Psi }^{(m)}\) in (24) exceeds the (\(1-\xi \))-level empirical quantile of the sequence of bootstrap realizations \(\{\widetilde{T}_{\Psi }^{*(m)}(b)\}_{b=1}^B\).
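
The logic of Steps 1–3 and of the resulting decision rule can be sketched in a few lines of Python. To keep the example self-contained, the SP law is replaced by a toy univariate null family \(N(\theta ,1)\) with \(\theta \) estimated by the sample mean, and the criterion by a Gaussian-kernel two-sample statistic; both are stand-ins chosen for illustration. All function names, the quantile convention, and the small values of m and B are assumptions, not the authors' implementation.

```python
import math
import random

def gauss_kernel_stat(xs, ys):
    """Toy two-sample criterion with kernel exp(-d^2); a stand-in for (21)."""
    def mean_k(a, b):
        return sum(math.exp(-(u - v) ** 2) for u in a for v in b) / (len(a) * len(b))
    return mean_k(xs, xs) + mean_k(ys, ys) - 2.0 * mean_k(xs, ys)

def averaged_stat(data, theta, sample, statistic, m, rng):
    """Average the criterion over m artificial samples from the fitted null, as in (24)."""
    n = len(data)
    return sum(statistic(data, sample(theta, n, rng)) for _ in range(m)) / m

def bootstrap_test(data, estimate, sample, statistic, m=2, B=50, level=0.10, seed=0):
    rng = random.Random(seed)
    n = len(data)
    theta_hat = estimate(data)
    t_obs = averaged_stat(data, theta_hat, sample, statistic, m, rng)
    t_boot = []
    for _ in range(B):
        x_star = sample(theta_hat, n, rng)   # Step 1: bootstrap sample from fitted null
        theta_star = estimate(x_star)        # Step 2: re-estimate from the bootstrap sample
        # Step 3: bootstrap statistic, averaged over m fresh artificial samples
        t_boot.append(averaged_stat(x_star, theta_star, sample, statistic, m, rng))
    t_boot.sort()
    # Empirical (1 - level) quantile of the B bootstrap realizations.
    idx = min(B - 1, max(0, math.ceil((1.0 - level) * B) - 1))
    crit = t_boot[idx]
    return t_obs, crit, t_obs > crit, t_boot

# Toy null family (assumption): N(theta, 1), theta estimated by the sample mean.
def estimate_mean(xs):
    return sum(xs) / len(xs)

def sample_normal(theta, n, rng):
    return [rng.gauss(theta, 1.0) for _ in range(n)]

data = sample_normal(0.5, 30, random.Random(123))
t_obs, crit, reject, t_boot = bootstrap_test(
    data, estimate_mean, sample_normal, gauss_kernel_stat
)
print(f"statistic={t_obs:.4f}  critical value={crit:.4f}  reject={reject}")
```

Passing the estimator, sampler and criterion as arguments mirrors the structure of the scheme: replacing the three toy functions by an SP sampler, the projection estimates and the statistic in (21) would leave the bootstrap loop unchanged.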

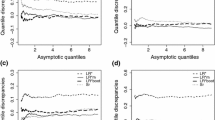

Table 6 shows the empirical rejection percentages of tests based on \(\widetilde{T}_{\Psi }\) with \(\Psi (\xi )=e^{-r \xi ^{\alpha _0/2}}\), where \(\alpha _0\) denotes the hypothesized stability index in \(\widetilde{\mathcal {H}}_0\). We write \(\widetilde{T}_r\), \(r>0\), for this test. In the case of unknown \(\alpha \) in Table 7 we use the same weight function with \(\alpha _0=2\).

The left-hand side of Table 6 shows the rejection percentages when observations are sampled from an asymmetric SP distribution, whereas the right-hand side shows the results when observations are sampled from a symmetric SP distribution. In all cases, the proposed procedure seems to respect the nominal size of the test, although it seems to be somewhat conservative especially for smaller sample sizes. Nevertheless, the tests have good power against alternatives, which increases with the extent of violation of the null hypothesis. Corresponding results when testing \(\widetilde{\mathcal {H}}_0\) with \(\alpha =1.8\) are given in Table S16.

7.1.1 The case of unknown \(\alpha \)

In practice, the true value of the stability index \(\alpha \) will typically be unknown and will need to be estimated from the data. Suppose the hypothesis of interest is

To test this hypothesis, we again use the test based on the statistic in (21), but now generate the artificial data \({\mathcal {X}}_{0n}\) from the SP law \({\mathcal {S}}_{\widehat{\alpha }}(\widehat{{\varvec{\delta }}},\widehat{\Gamma })\), where \(\widehat{\alpha }\), \(\widehat{{\varvec{\delta }}}\) and \(\widehat{\Gamma }\) are projection estimates obtained as outlined in Section S5 of the supplement. Note that data can be generated from \({\mathcal {S}}_{\widehat{\alpha }}(\widehat{{\varvec{\delta }}},\widehat{\Gamma })\) using the stochastic representation in (22) with \(\alpha _0\) replaced by \(\widehat{\alpha }\). The bootstrap procedure for obtaining critical values follows similarly to the procedure described in Sect. 7.1.

The empirical rejection percentages (for the bivariate case, \(p=2\)) are shown in Table 7 for several distributions. Note that when observations are sampled from an \(\mathcal {S}_{1.8}\) distribution (symmetric SP law with stability index 1.8), the rejection percentages are close to the nominal level, indicating that the test has reasonable empirical size.

As alternatives, we consider the skew normal (\({\text {SN}}_{\nu }\)), symmetric Laplace and generalized Gaussian (\({\text {GG}}_{\nu })\) distributions. An observation X from the \({\text {GG}}_{\nu }\) distribution (refer to Cadirci et al. 2022) is generated according to \(X=UV^{1/\nu }\), where U is uniform on \(S^{p-1}\) and \(V\sim \text {Gamma}(p/\nu ,2)\). Table 7 shows that the proposed test procedure has power against non-SP alternatives, and noting the increase in power associated with increasing sample size, the results suggest that the procedure is consistent against non-SP alternatives.
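
The generation scheme \(X=UV^{1/\nu }\) for the \({\text {GG}}_{\nu }\) alternative can be sketched as follows. The sketch assumes that the second argument of \(\text {Gamma}(p/\nu ,2)\) is a scale parameter; under that reading \(\nu =2\) yields the standard p-variate normal law, which the example uses as a sanity check. The function names `runif_sphere` and `rgg` are hypothetical.

```python
import math
import random

def runif_sphere(p, rng):
    """Uniform draw on the unit sphere in R^p by normalizing a Gaussian vector."""
    z = [rng.gauss(0.0, 1.0) for _ in range(p)]
    norm = math.sqrt(sum(v * v for v in z))
    return [v / norm for v in z]

def rgg(p, nu, rng):
    """One draw X = U * V**(1/nu), with V ~ Gamma(shape=p/nu, scale=2) (assumed)."""
    u = runif_sphere(p, rng)
    v = rng.gammavariate(p / nu, 2.0)  # random.gammavariate takes (shape, scale)
    radius = v ** (1.0 / nu)
    return [radius * ui for ui in u]

# Sanity check for nu = 2: V is chi-squared with p degrees of freedom,
# so the mean of ||X||^2 should be close to p.
rng = random.Random(2024)
p, nu, n = 2, 2.0, 5000
draws = [rgg(p, nu, rng) for _ in range(n)]
mean_sq_norm = sum(sum(c * c for c in x) for x in draws) / n
print(f"mean squared norm: {mean_sq_norm:.3f} (p = {p})")
```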

7.2 The high-dimensional case

It should be clear that so far, and despite the fact that the new test applies to any dimension p, the underlying setting is not that of high dimension (\(p>n\)). In this connection we point out that the extension of goodness-of-fit methods specifically tailored for the classical “small p–large n” regime to high or infinite dimension is not straightforward, and is therefore beyond the scope of the present article. This is not restricted to our setting alone but applies more generally, and the collections of Goia and Vieu (2016) and Kokoszka et al. (2017) reflect the need for statistical methods specifically tailored for non-classical settings. Restricting attention to our context, such methods in the latter settings have so far been mostly confined to testing for normality, and the interested reader is referred to Bugni et al. (2009), Nieto-Reyes et al. (2014), Bárcenas et al. (2017), Kellner and Celisse (2019), Yamada and Himeno (2019), Jiang et al. (2019), Górecki et al. (2020), Henze and Jiménez-Gamero (2021), Chen and Xia (2023) and Chen and Genton (2023). If however the setting is that of regression, with or without conditional heteroskedasticity, the number of parameters rapidly increases with the dimension p. This is one extra reason that corresponding specification methods in high/infinite dimension need to be treated separately, and in this connection the methods of Cuesta-Albertos et al. (2019) and Rice et al. (2020) appear to be among the few available for testing the (auto)regression function.

For an illustration of the special circumstances that are brought forward as the dimension grows, consider the test statistic in (10) for \(\alpha _0=2\) and without standardization, i.e. replace \({\varvec{Y}}_j\) by \({\varvec{X}}_j\sim {\mathcal S}_2({\varvec{0}}, {\textbf{ I}}_p)\), \(j=1,\ldots ,n\). Then from (11), and by using the density of the p-variate normal distribution with mean zero and covariance matrix \(2{\textbf{I}}_p\), we obtain

and consequently

Now the quantity \(\Vert {\varvec{X}}_j\Vert ^2=\sum _{k=1}^pX_{jk}^2\) behaves like \(2p+\mathrm{{O}}_{\mathbb P}(\sqrt{p})=2p(1+\mathrm{{O}}_{\mathbb P}(1/\sqrt{p}))\), and thus our test statistic contains sums of terms, each of which is of the order \(e^{-2p}\), as \(p\rightarrow \infty \). (For simplicity we suppress the terms 4r and \(4(r+1)\), which occur as denominators in these sums, as they are irrelevant to our argument.)

In order to get a feeling of this result, write \(\Vert {\varvec{X}}_j\Vert ^2=2\sum _{k=1}^p(X_{jk}/\sqrt{2})^2=:2 S_p\), where, due to Gaussianity and independence, \(S_p\) is distributed as chi-squared with p degrees of freedom. Thus the expectation of the quantity \(e^{-\Vert {\varvec{X}}_j\Vert ^2}\) figuring in \(T_{n,r}\) coincides with the value of the CF of this chi-squared distribution computed at the point \(t=2{{i}}\). To proceed further, notice that the CF of \(S_p/p\) at a point t is given by the CF corresponding to \(S_p\) computed at t/p, and recall that the CF of the chi-squared distribution with p degrees of freedom is given by \(\varphi _{S_p}(t)=(1-2{{i}}t)^{-p/2}\). Hence, in obvious notation,

$$\begin{aligned} \varphi _{S_p/p}(t)=\varphi _{S_p}(t/p)=\left( 1-\frac{2{{i}}t}{p}\right) ^{-p/2}\longrightarrow e^{{{i}}t}, \quad \text {as } p\rightarrow \infty , \end{aligned}$$

and reverting back to the CF of \(S_p\) we get \(\varphi _{S_p}(t)\approx e^{{{i}}pt}\), and thus \(\varphi _{S_p}(2{{i}})\approx e^{-2p}\), as \(p\rightarrow \infty \). A similar reasoning applies to \(\Vert {\varvec{X}}_j-{\varvec{X}}_k\Vert ^2\), implying that the expectation of \(e^{-\Vert {\varvec{X}}_j-{\varvec{X}}_k\Vert ^2}\) (also occurring in \(T_{n,r}\)) is approximated by \(e^{-4p}\), as \(p\rightarrow \infty \).

Hence, by using these approximations, we obtain

from which it follows that

as \(p\rightarrow \infty \). Consequently our test statistic degenerates in high dimension, a fact that calls for proper high-dimensional modifications of the test criterion, which is definitely a worthwhile subject for future research.
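
The speed at which the individual terms vanish can be checked numerically. For \({\varvec{X}}\sim {\mathcal S}_2({\varvec{0}}, {\textbf{ I}}_p)\), i.e. a normal vector with covariance matrix \(2{\textbf{I}}_p\), evaluating the chi-squared CF \((1-2{{i}}t)^{-p/2}\) exactly at \(t=2{{i}}\) gives \(\mathbb E\, e^{-\Vert {\varvec{X}}\Vert ^2}=5^{-p/2}\). The rate of this exact moment differs from that of the heuristic order \(e^{-2p}\), yet it also decays geometrically in p, so the qualitative conclusion is the same. The following Python sketch, with hypothetical function names and an arbitrary Monte Carlo sample size, compares the exact value with a simulation and tabulates both quantities.

```python
import math
import random

def exact_moment(p):
    """E exp(-||X||^2) for X ~ N(0, 2 I_p): chi-squared CF (1 - 2it)^(-p/2) at t = 2i."""
    return 5.0 ** (-p / 2.0)

def mc_moment(p, n, rng):
    """Monte Carlo estimate of E exp(-||X||^2) from n simulated vectors."""
    sd = math.sqrt(2.0)  # each coordinate has variance 2
    total = 0.0
    for _ in range(n):
        sq_norm = sum(rng.gauss(0.0, sd) ** 2 for _ in range(p))
        total += math.exp(-sq_norm)
    return total / n

rng = random.Random(1)
mc_p2 = mc_moment(2, 20000, rng)
print(f"p=2: Monte Carlo {mc_p2:.4f} vs exact {exact_moment(2):.4f}")

for p in (2, 6, 20, 50, 100):
    print(f"p={p:3d}  exact={exact_moment(p):.3e}  heuristic e^(-2p)={math.exp(-2.0 * p):.3e}")
```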

Nevertheless, we have obtained some initial Monte Carlo results that show a reasonable performance for the test criterion in cases where the dimension is much higher than the maximum \(p=6\) considered so far. These results may be found in Tables S18 and S19 of the Supplement.

8 Application to financial data

We consider daily log returns from 4 January 2010 to 30 June 2017 of stocks of two mining companies listed on the London Stock Exchange: Anglo American (AAL) and Rio Tinto (RIO). The complete data set (available from Yahoo! Finance) consists of 1,891 log returns. We model the log returns using the CCC-GARCH(1, 1) model (with intercept) given by

where \({\textbf{Q}}_j\) is as in (16) with \(\kappa _x=\kappa _q=1\) and \({\textbf{B}}_1\) assumed diagonal.

We are interested in determining whether the innovations \({{\varvec{\varepsilon }}}_j\) have a bivariate stable distribution. Since the innovations are unobserved, we apply our test to the residuals \(\widehat{{\varvec{\varepsilon }}}_j=\widehat{\textbf{Q}}^{-1/2}_j({{\varvec{Y}}}_j - \widehat{{\varvec{\omega }}})\), where the estimates \(\widehat{\textbf{Q}}_j\) and \(\widehat{{\varvec{\omega }}}\) are obtained using EbE estimation as discussed earlier. To obtain critical values of the tests, we apply the bootstrap algorithm of Sect. 6.2 with \(B=1,000\).

Table 8 shows that, when a stability index of \(\alpha \in \{1.75,1.8\}\) is assumed, the tests based on \(T_{r}\) do not reject the null hypothesis that the CCC-GARCH(1, 1) innovations have a \({\mathcal {S}}_\alpha \) distribution (at a 10% level of significance). On the other hand, the null hypothesis of stable innovations is rejected when a stability index of \(\alpha \in \{1.7,1.85,1.9,2\}\) is assumed.

The correct choice of the innovation distribution has important implications for value-at-risk (VaR) forecasts. For the considered stable distributions, we fitted the model in (25) to the first 1,000 observations and calculated one-step-ahead 5% and 1% portfolio VaR forecasts for long and short positions for the remaining time period (i.e. 891 forecasts for each position). The portfolio is assumed to consist of 50% AAL shares and 50% RIO shares.

Table 8 shows the empirical coverage rates of the forecasted VaR bounds, that is, the proportion of times that the value of the portfolio exceeded the bounds. For the cases where our test supports the null hypothesis, i.e. when \(\alpha \in \{1.75,1.8\}\), the empirical coverage rates of the VaR bounds are quite close to the nominal rates. In addition, the p-values of Christoffersen’s (1998) \({\text {LR}}_{cc}\) test (given in brackets in Table 8) indicate that, if the stability index of the innovation distribution is chosen either too high or too low, the true conditional coverage rates of the forecasted VaR bounds are significantly different from the nominal rates.
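
For readers wishing to reproduce this type of backtest, the following Python sketch computes an empirical coverage rate and the conditional coverage statistic of Christoffersen (1998) from a 0/1 sequence of VaR violations. It follows the textbook form \({\text {LR}}_{cc}={\text {LR}}_{uc}+{\text {LR}}_{ind}\), with both parts computed from the first-order transition counts and a chi-squared reference distribution with two degrees of freedom; the function names and the artificial violation sequences are hypothetical, and the implementation used for Table 8 may differ in detail.

```python
import math

def _xlogy(x, y):
    """x * log(y) with the convention 0 * log(0) = 0."""
    return 0.0 if x == 0 else x * math.log(y)

def coverage_rate(hits):
    """Proportion of periods in which the VaR bound was violated."""
    return sum(hits) / len(hits)

def lr_cc(hits, p):
    """Conditional coverage LR statistic and its chi-squared(2) p-value.

    hits: list of 0/1 violation indicators; p: nominal violation rate.
    """
    # n[i][j] counts transitions from state i to state j.
    n = [[0, 0], [0, 0]]
    for prev, cur in zip(hits[:-1], hits[1:]):
        n[prev][cur] += 1
    n0 = n[0][0] + n[1][0]  # transitions ending in a non-violation
    n1 = n[0][1] + n[1][1]  # transitions ending in a violation
    pi = n1 / (n0 + n1)
    from0 = n[0][0] + n[0][1]
    from1 = n[1][0] + n[1][1]
    pi01 = n[0][1] / from0 if from0 else 0.0
    pi11 = n[1][1] / from1 if from1 else 0.0
    ll_null = _xlogy(n0, 1.0 - p) + _xlogy(n1, p)
    ll_iid = _xlogy(n0, 1.0 - pi) + _xlogy(n1, pi)
    ll_markov = (_xlogy(n[0][0], 1.0 - pi01) + _xlogy(n[0][1], pi01)
                 + _xlogy(n[1][0], 1.0 - pi11) + _xlogy(n[1][1], pi11))
    lr_uc = -2.0 * (ll_null - ll_iid)     # unconditional coverage part
    lr_ind = -2.0 * (ll_iid - ll_markov)  # independence part
    stat = lr_uc + lr_ind
    # Survival function of the chi-squared law with 2 degrees of freedom.
    return stat, math.exp(-stat / 2.0)

# Artificial sequences: evenly spread violations vs a long cluster of violations.
spread = ([0] * 19 + [1]) * 5
clustered = [1] * 50 + [0] * 50
stat_spread, pval_spread = lr_cc(spread, 0.05)
stat_clustered, pval_clustered = lr_cc(clustered, 0.05)
print(coverage_rate(spread), stat_spread, pval_spread)
print(coverage_rate(clustered), stat_clustered, pval_clustered)
```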

9 Conclusion

We have studied goodness-of-fit tests with data involving multivariate SP laws. Our tests include the case of i.i.d. observations as well as the one with observations from GARCH models, and cover both elliptical and skewed distributions. Moreover they refer to hypotheses whereby some parameters are assumed known, as well as to the full composite hypothesis with all parameters estimated from the data at hand. The new procedures are shown to perform well in finite samples and to be competitive against other methods, whenever such methods are available. An application illustrates the usefulness of the new procedure for modeling stock returns and explores the subsequent forecasting implications.

References

Adler R, Feldman R, Taqqu M (1998) A practical guide to heavy tails: statistical techniques and applications. Birkhäuser, Boston

Bárcenas R, Ortega J, Quiroz AJ (2017) Quadratic forms of the empirical processes for the two-sample problem for functional data. TEST 26:503–526

Bugni FA, Hall P, Horowitz JL, Neumann GR (2009) Goodness-of-fit tests for functional data. Econom J 12:S1–S18

Byczkowski T, Nolan JP, Rajput B (1993) Approximation of multidimensional stable densities. J Multivar Anal 46(1):13–31

Cadirci MS, Evans D, Leonenko N, Makogin V (2022) Entropy-based test for generalised Gaussian distributions. Comput Stat Data Anal 173:107502

Chen F, Jiménez-Gamero MD, Meintanis S, Zhu L (2022) A general Monte Carlo method for multivariate goodness-of-fit testing applied to elliptical families. Comput Stat Data Anal 175:107548

Chen W, Genton MG (2023) Are you all normal? It depends! Int Stat Rev 91(1):114–139

Chen H, Xia Y (2023) A normality test for high-dimensional data based on the nearest neighbor approach. J Am Stat Assoc 118(541):719–731

Christoffersen PF (1998) Evaluating interval forecasts. Int Econ Rev 39(4):841–862

Csörgő S (1981) Multivariate empirical characteristic functions. Z für Wahrscheinlichkeitstheorie und Verwandte Gebiete 55(2):203–229

Cuesta-Albertos JA, García-Portugués E, Febrero-Bande M, González-Manteiga W (2019) Goodness-of-fit tests for the functional linear model based on randomly projected empirical processes. Ann Stat 47(1):439–467

Ebner B, Henze N (2020) Tests for multivariate normality-a critical review with emphasis on weighted L2-statistics. TEST 29:845–892

Ebner B, Henze N (2023) On the eigenvalues associated with the limit null distribution of the Epps–Pulley test of normality. Stat Pap 64(3):739–752

Fama EF (1965) The behavior of stock-market prices. J Bus 38(1):34–105

Francq C, Zakoïan J-M (2016) Estimating multivariate volatility models equation by equation. J R Stat Soc Ser B 78:613–635

Giacomini R, Politis DN, White H (2013) A warp-speed method for conducting Monte Carlo experiments involving bootstrap estimators. Econom Theory 29(3):567–589

Goia A, Vieu P (2016) An introduction to recent advances in high/infinite dimensional statistics, vol 146. Elsevier

Górecki T, Horváth L, Kokoszka P (2020) Tests of normality of functional data. Int Stat Rev 88(3):677–697

Gupta AK, Henze N, Klar B (2004) Testing for affine equivalence of elliptically symmetric distributions. J Multivar Anal 88:222–242

Hadjicosta E, Richards D (2020) Integral transform methods in goodness-of-fit testing, II: the Wishart distributions. Ann Inst Stat Math 72:1317–1370

Hadjicosta E, Richards D (2020) Integral transform methods in goodness-of-fit testing, I: the gamma distributions. Metrika 83:733–777

Henze N (1990) An approximation to the limit distribution of the Epps–Pulley test statistic for normality. Metrika 37:7–18

Henze N (1997) Extreme smoothing and testing for multivariate normality. Stat Probab Lett 35(3):203–213

Henze N, Jiménez-Gamero MD (2021) A test for Gaussianity in Hilbert spaces via the empirical characteristic functional. Scand J Stat 48(2):406–428

Henze N, Wagner T (1997) A new approach for the BHEP test for multivariate normality. J Multivar Anal 62:1–23

Henze N, Jiménez-Gamero MD, Meintanis SG (2019) Characterizations of multinormality and corresponding tests of fit, including for GARCH models. Econom Theory 35:510–546

Iyengar S, Tong YL (1989) Convexity properties of elliptically contoured distributions with applications. Sankhyā A 51:13–29

Jiang Q, Hušková M, Meintanis SG, Zhu L (2019) Asymptotics, finite-sample comparisons and applications for two-sample tests with functional data. J Multivar Anal 170:202–220

Kellner J, Celisse A (2019) A one-sample test for normality with kernel methods. Bernoulli 25:1816

Kokoszka P, Oja H, Park B, Sangalli L (2017) Special issue on functional data analysis. Econom Stat 1(C):99–100

Kotz S, Ostrovskii I (1994) Characteristic functions of a class of elliptic distributions. J Multivar Anal 49(1):164–178

Koutrouvelis IA (1980) Regression-type estimation of the parameters of stable laws. J Am Stat Assoc 75(372):918–928

Lindsay BG, Markatou M, Ray S, Yang K, Chen S-C (2008) Quadratic distances on probabilities: a unified foundation. Ann Stat 36(2):983–1006

Lombardi MJ, Veredas D (2009) Indirect estimation of elliptical stable distributions. Comput Stat Data Anal 53(6):2309–2324

Mandelbrot B (1963) The variation of certain speculative prices. J Bus 36:394–419

Matsui M, Takemura A (2008) Goodness-of-fit tests for symmetric stable distributions-empirical characteristic function approach. TEST 17:546–566

Meintanis SG, Ngatchou-Wandji J, Taufer E (2015) Goodness-of-fit tests for multivariate stable distributions based on the empirical characteristic function. J Multivar Anal 140:171–192

Meintanis SG, Milošević B, Obradović M (2023) Bahadur efficiency for certain goodness-of-fit tests based on the empirical characteristic function. Metrika 86(7):723–751

Nadarajah S (2003) The Kotz-type distribution with applications. Stat J Theor Appl Stat 37(4):341–358

Nieto-Reyes A, Cuesta-Albertos JA, Gamboa F (2014) A random projection based test of Gaussianity for stationary processes. Comput Stat Data Anal 75:124–141

Nolan JP (2013) Multivariate elliptically contoured stable distributions: theory and estimation. Comput Stat 28(5):2067–2089

Nolan JP (2020) Univariate stable distributions. Springer, New York

Nolan JP, Panorska AK, McCulloch JH (2001) Estimation of stable spectral measures. Math Comput Model 34(9–11):1113–1122

Ogata H (2013) Estimation for multivariate stable distributions with generalized empirical likelihood. J Econom 172(2):248–254

Pfister N, Bühlmann P, Schölkopf B, Peters J (2018) Kernel-based tests for joint independence. J R Stat Soc Ser B 80(1):5–31

Press SJ (1972a) Estimation in univariate and multivariate stable distributions. J Am Stat Assoc 67(340):842–846

Press SJ (1972b) Multivariate stable distributions. J Multivar Anal 2(4):444–462

Rachev ST, Mittnik S (2000) Stable Paretian models in finance, vol 7. Wiley, New York

Rice G, Wirjanto T, Zhao Y (2020) Tests for conditional heteroscedasticity of functional data. J Time Ser Anal 41(6):733–758

Samorodnitsky G, Taqqu MS (1994) Stable non-Gaussian random processes: stochastic models with infinite variance. Chapman and Hall, New York

Sathe AM, Upadhye NS (2020) Estimation of the parameters of multivariate stable distributions. Commun Stat Simul Comput 1–18

Silvennoinen A, Teräsvirta T (2009) Multivariate GARCH models. Handbook of financial time series. Springer, New York, pp 201–229

Streit F (1991) On the characteristic functions of the Kotz type distributions. C R Math Rep Acad Sci Can 13(4):121–124

Tsionas MG (2016) Bayesian analysis of multivariate stable distributions using one-dimensional projections. J Multivar Anal 143:185–193

Uchaikin VV, Zolotarev VM (1998) Chance and stability: stable distributions and their applications. VSP, Amsterdam

Yamada T, Himeno T (2019) Estimation of multivariate 3rd moment for high-dimensional data and its application for testing multivariate normality. Comput Stat 34(2):911–941

Acknowledgements

The authors wish to sincerely thank Christian Francq (University of Lille, CREST) for providing the code for EbE estimation.

Funding

Open access funding provided by North-West University.

Ethics declarations

Conflict of interest

No funds, grants, or other support was received for conducting this study. The authors have no relevant financial or non-financial interests to disclose. The authors have no conflicts of interest to declare.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Meintanis, S.G., Nolan, J.P. & Pretorius, C. Specification procedures for multivariate stable-Paretian laws for independent and for conditionally heteroskedastic data. TEST 33, 517–539 (2024). https://doi.org/10.1007/s11749-023-00909-3
