Abstract

Background

Guidelines recommend Advance Care Planning (ACP) for seriously ill older adults to increase the patient-centeredness of end-of-life care. Few interventions target the inpatient setting.

Objective

To test the effect of a novel physician-directed intervention on ACP conversations in the inpatient setting.

Design

Stepped wedge cluster-randomized design with five 1-month steps (October 2020–February 2021), and 3-month extensions at each end.

Setting

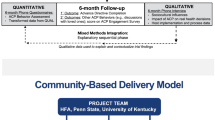

A total of 35/125 hospitals staffed by a nationwide physician practice with an existing quality improvement initiative to increase ACP (enhanced usual care).

Participants

Physicians employed for ≥ 6 months at these hospitals; patients aged ≥ 65 years they treated between July 2020–May 2021.

Intervention

At least 2 h of exposure to a theory-based video game designed to increase autonomous motivation for ACP, in addition to enhanced usual care.

Main measure

ACP billing (data abstractors blinded to intervention status).

Results

A total of 163/319 (52%) invited, eligible hospitalists consented to participate, 161 (98%) responded, and 132 (81%) completed all tasks. Physicians’ mean age was 40 (SD 7); most were male (76%), Asian (52%), and reported playing the game for ≥ 2 h (81%). These physicians treated 44,235 eligible patients over the entire study period. Most patients (57%) were ≥ 75; 15% had COVID. ACP billing decreased between the pre- and post-intervention periods (26% v. 21%). After adjustment, the homogeneous effect of the game on ACP billing was non-significant (OR 0.96; 95% CI 0.88–1.06; p = 0.42). There was effect modification by step (p < 0.001), with the game associated with increased billing in steps 1–3 (OR 1.03 [step 1]; OR 1.15 [step 2]; OR 1.13 [step 3]) and decreased billing in steps 4–5 (OR 0.66 [step 4]; OR 0.95 [step 5]).

Conclusions

When added to enhanced usual care, a novel video game intervention had no clear effect on ACP billing, but variation across steps of the trial raised concerns about confounding from secular trends (i.e., the COVID-19 pandemic).

Trial Registration

ClinicalTrials.gov; NCT04557930, 9/21/2020.



Gaps persist in the patient-centeredness of care provided to patients with serious illness1. Clinical practice guidelines encourage Advance Care Planning (ACP), the iterative process in which patients complete advance directives, articulate their preferences for future health care, and/or prepare their proxies, to address these gaps2,3,4. Acute hospitalizations, which make these considerations salient, may be a high-yield opportunity to engage patients in ACP.

The most successful interventions for increasing patients' ACP completion rates use third-party facilitators (e.g., research staff) to shepherd patients through their conversations with clinicians, but they have limited scalability5. Existing scalable interventions designed to increase clinicians' ACP conversations with their patients (e.g., audit-and-feedback, financial incentives) have produced only moderate improvements in performance6,7. None target autonomous motivation (defined as the psychological need to perform tasks that generate innate satisfaction or that align with deeply held values), which we identified as one (but not the only) predictor of sustained behavior change8. We built a theory-based digital behavior change intervention, a customized video game played on iPads, to increase clinicians' willingness to engage in ACP conversations9.

The objective of this study was to test whether the game increased hospitalists’ willingness to have ACP conversations (measured by attitudes) and frequency of conversations (measured by ACP billing) in a stepped-wedge cluster-randomized clinical trial at hospitals staffed by a hospitalist group that already offered ACP education, financial incentives, and audit-and-feedback. We chose the trial design to avoid the misclassification of patients and the contamination of control physicians introduced by physician-level randomization, to minimize the risk of imbalance introduced by a parallel-cluster design because of the high intra-class correlation for ACP billing at the hospital-level, and to maximize statistical efficiency.

METHODS

Overview

We conducted a stepped-wedge cluster-randomized trial in the USA between July 2020 and May 2021, with 35 hospitals, five 1-month steps, and 3-month pre- and post-intervention extensions (see Appendix), and compared physicians' ACP billing before and after dissemination of a digital behavior change intervention. We have previously published the trial protocol and details of the development of the intervention9,10.
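The rollout described above can be pictured as an exposure matrix with one row per step and one column per calendar month. The Python sketch below builds that matrix under a simplifying assumption of ours, not the protocol's: a hospital counts as exposed from the first day of its step month. The names `exposure_matrix`, `MONTHS`, `PRE_EXTENSION`, and `N_STEPS` are illustrative.

```python
from datetime import date

# Monthly calendar periods, July 2020 through May 2021 (11 months).
MONTHS = [date(2020, m, 1) for m in range(7, 13)] + [date(2021, m, 1) for m in range(1, 6)]
PRE_EXTENSION = 3  # July-September 2020: no hospital exposed yet
N_STEPS = 5        # October 2020-February 2021: one new step per month


def exposure_matrix(n_steps: int = N_STEPS, pre: int = PRE_EXTENSION,
                    n_periods: int = len(MONTHS)) -> list[list[int]]:
    """X[s][t] = 1 if hospitals randomized to step s + 1 are exposed in period t.

    Assumption: exposure starts on the first day of the step month
    (the 2-week gameplay window is ignored).
    """
    return [[1 if t >= pre + s else 0 for t in range(n_periods)]
            for s in range(n_steps)]


if __name__ == "__main__":
    print("step " + " ".join(d.strftime("%b") for d in MONTHS))
    for step, row in enumerate(exposure_matrix(), start=1):
        print(f"{step:>4} " + " ".join(f"{x:>3}" for x in row))
```

Each row has one fewer exposed month than the row above it, which is the staircase that gives the design its name; the first three all-zero columns and the last three all-one columns are the pre- and post-intervention extensions.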

Trial Oversight

Dartmouth’s Committee for the Protection of Human Subjects approved the study. The National Institute on Aging funded the trial and convened an independent data and safety monitoring board. The authors vouch for the accuracy and rigor of the analysis.

Hospital Sites, Physicians, and Patients

We partnered with a national physician practice that provides hospitalist services to 220 acute-care hospitals in the USA and was interested in supporting efforts to increase appropriate ACP billing. We selected 125 hospitals that participated in a value-based delivery model of care, had > 0% risk-adjusted ACP billing in Quarter 1 2020, and had been staffed by the organization for ≥ 2 quarters. We sampled 40 of these for the trial based on their balance on blocking variables (e.g., risk-adjusted ACP rates). Using a schema developed in R (R Core Team, Vienna, Austria), one team member (AJO) stratified the hospitals into eight blocks and randomized the hospitals within each block to the step in which they would be invited to receive the intervention. At the start of each step (to accommodate the oscillating stresses from COVID-19), we sequentially contacted the chief of the hospitalist service at each hospital. With the chief's consent, we e-mailed invitations to the hospitalist team and screened responders for eligibility; once we reached the target enrollment for the step, we stopped recruitment. Physicians were eligible if they had been employed by the practice for ≥ 2 quarters, had staffed an eligible hospital for ≥ 1 quarter, affirmed using ACP billing codes during the eligibility screen, provided informed consent, and provided contact information that matched employer records. We excluded non-physician providers because of variation in billing practices. We obtained discharge abstracts for patients over the age of 65 treated by a trial physician at a trial hospital during the study period. Potential trial physicians remained unaware of the trial until we approached them for consent. The two trial analysts remained blinded to each hospital’s intervention status until the completion of data abstraction. We provide additional details in the Appendix.
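The blocked randomization can be sketched as follows, assuming eight blocks of five hospitals formed by sorting on a single blocking variable, so that each block contributes exactly one hospital to each of the five steps (40 / 8 = 5). The actual schema was written in R and balanced several blocking variables; the function name `assign_steps`, the seed, and the hospital data below are hypothetical.

```python
import random


def assign_steps(hospitals: dict[str, float], n_steps: int = 5,
                 seed: int = 2020) -> dict[str, int]:
    """Stratify hospitals into blocks of size n_steps and randomize steps within blocks.

    `hospitals` maps a hypothetical hospital ID to one blocking variable,
    e.g. its risk-adjusted ACP billing rate.
    """
    if len(hospitals) % n_steps:
        raise ValueError("number of hospitals must be a multiple of n_steps")
    rng = random.Random(seed)  # fixed seed so the allocation is reproducible
    ordered = sorted(hospitals, key=hospitals.get)  # similar hospitals become adjacent
    assignment: dict[str, int] = {}
    for start in range(0, len(ordered), n_steps):
        block = ordered[start:start + n_steps]
        steps = list(range(1, n_steps + 1))
        rng.shuffle(steps)  # each block contributes one hospital to every step
        assignment.update(zip(block, steps))
    return assignment


if __name__ == "__main__":
    demo_rng = random.Random(1)
    # 40 hypothetical hospitals with made-up ACP billing rates.
    demo = {f"H{i:02d}": demo_rng.uniform(0.05, 0.40) for i in range(40)}
    alloc = assign_steps(demo)
    for step in range(1, 6):
        print(step, sorted(h for h, s in alloc.items() if s == step))
```

Randomizing within blocks keeps the blocking variable balanced across steps by construction, which matters in a stepped-wedge trial because each step is compared with hospitals that cross over at other times.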

Study Protocol

Physicians completed a questionnaire at enrollment, reporting their demographics, professional characteristics, and attitudes toward ACP. We then mailed them a new iPad with the game pre-loaded, which they kept at the conclusion of the trial (approximate value: $300). The iPad served both as an honorarium that participants would value and as a way to standardize access to the intervention11. We asked participants to spend ≥ 2 h playing the game within 2 weeks of receiving the iPad and then to complete a post-intervention questionnaire. We issued four reminders.

Intervention: Hopewell Hospitalist

As part of a theory-based development process modeled on best-practice principles adopted from Intervention Mapping and the Behavior Change Wheel, we reviewed the literature, performed a Delphi panel study to generate expert consensus guidelines for ACP conversations in the hospital, and conducted semi-structured interviews with a sample of hospital leaders responsible for leading ACP quality improvement efforts at the physician practice with which we partnered1,12,13,14,15,16,17,18,19,20,21,22. Specifically, we defined the criteria that providers should use when deciding whether to have ACP conversations with hospitalized older adults, and attempted to isolate barriers to and facilitators of the behavior. Results from the Delphi panel reframed the behavioral problem from one of recognition (i.e., training providers to detect patients who needed ACP conversations) to one of willingness (i.e., persuading providers to make ACP conversations a priority for all patients over 65)18. The literature review and the interviews with end-users identified three barriers to willingness to have ACP conversations in the hospital: skills, attitudes, and logistical impediments13,14,17,19,20,21,22. We could not find any existing interventions that effectively modified providers’ attitudes, and therefore focused our efforts in this area, using self-determination theory as our basis for behavior change5,6,7,23. A multi-disciplinary team created a theory-based video game8,9,24,25 in collaboration with Schell Games (Pittsburgh, PA), with the behavioral objective of increasing hospitalists’ willingness to have inpatient ACP conversations with all patients over the age of 65. We include additional details in Box 1.

Box 1 Description of Hopewell Hospitalist.

Objective: To increase the frequency of ACP conversations during acute illness by targeting hospitalists’ willingness to raise the topic with all patients over the age of 65.

Duration: Up to 3 h of gameplay.

Theory of behavior: Self-determination theory.

Framework of design: Narrative engagement theory.

Didactic principles: ACP conversations (a) help to ensure goal-concordant treatment decisions if or when medical decompensation occurs; (b) represent an opportunity to discuss hospice eligibility; (c) allow older adults to think generally about “life completion” tasks; and (d) reduce emotional distress and decisional conflict experienced by surrogates and patients. Race should not influence physician decisions to engage in ACP conversations because individual goals and values, not race, affect patient preferences for end-of-life treatment.

Game concept: The player takes on the role of Andy Jordan, a young emergency medicine physician, who moves home after his grandfather’s disappearance and accepts a job at a local community hospital covering night shifts.

Game content

Medical: Players interview patients who present to Hopewell Hospital and have the option of investigating further, having an ACP conversation with the patient or surrogate, or entering a code status order. The patients include:

• 5 “teaching” cases of older patients with serious illness (e.g., heart failure), adapted from clinical practice. If players engage in ACP conversations, they later receive updates on the positive outcomes experienced by these patients; if they do not, these patients return with complications of their initial complaint. Players also receive feedback from in-game characters (e.g., their supervisor, consultants, family members) about the impact that timely advance care plans can have on the trajectories of patients’ care.

• 5 “non-teaching” cases of patients with diagnostically challenging problems, adapted from the clinical case records of the Massachusetts General Hospital as presented in the New England Journal of Medicine. These patients are designed to facilitate player engagement in the clinical task.

• 2 “non-teaching” cases of patients with life-threatening illnesses, adapted from clinical practice. These patients serve as a management challenge to facilitate player engagement in the clinical task.

Non-medical: Robert Jordan, Andy’s estranged grandfather, has disappeared. The prologue hints that his disappearance may or may not have occurred voluntarily. The player must solve the mystery by uncovering clues revealed through conversation with in-game characters and by exploring the environment. The non-medical content is designed to increase player engagement with the game by increasing realism, interest, and identification.

Game mechanics

1. Connect the dots: clues (medical and non-medical) appear on a notepad on the screen. The player can draw connections between clues to uncover information and to unlock additional dialogue options.

2. Tap to act: the player can tap on the screen to move through the world and interact with other characters. This mechanic also allows the player to perform key patient-care actions, including procedures like lumbar punctures and intubations.

3. Points: players receive points for uncovering non-medical clues, which unlock in-game lore. Specifically, they can access letters written by Andy and his grandfather, which provide additional insight into their characters and motivations.

Comparator: Enhanced Usual Care

The physician practice had already implemented best-practice quality improvement efforts to improve ACP practices for patients ≥ 65 years among its providers, which resulted in an increase in billing from 0% in 2016 to 21% in Quarter 1 2020. The program, which targeted knowledge and extrinsic motivation, included web-based didactic education on ACP guidelines and on coding rules for billing, reminders in the electronic medical record, audit-and-feedback to reinforce organizational norms, and a financial incentive of $20 for each billed ACP conversation. It remained in place throughout the trial period.

Data Sources and Management

Hospital, Physician, and Patient Characteristics

In January 2020, the physician practice provided data on their hospitals (i.e., ACP billing proportions, numbers of hospitalists, organizational characteristics). At the time of enrollment, participating physicians provided information on their personal characteristics. In December 2021, the practice provided (a) discharge abstracts for all patients treated by its hospitalists during the study period, with demographics, insurance status, dates of stay, discharge diagnoses, disposition status, and readmission (7- and 30-day); (b) physician claims filed during the hospitalization; (c) physician responses to the Surprise Question (“would you be surprised if the patient died in the next year?”), a prompt triggered for all inpatients over the age of 65 and linked to a reminder to engage in ACP.

Fidelity of Intervention Delivery and Receipt

To assess fidelity of delivery (adherence), we relied exclusively on self-reported data (see Appendix for more details). We measured the fidelity of intervention receipt (i.e., participants’ comprehension and use of novel cognitive skills) by capturing physicians’ attitudes toward ACP before and after playing the game, using items adapted from a published study26. Additionally, after playing the game, we asked physicians to review a set of five vignettes with varying inpatient and 1-year mortality and to describe how they would prioritize an ACP conversation18. Within-subject improvements in positive attitudes toward ACP, or evidence that physicians prioritized ACP in their responses to the vignettes, would suggest that they had received the learning principles in the game.

Outcome Assessment

We used ACP billing (i.e., the presence of ACP charges [billing codes 99497 and 99498]) as a proxy measure for the outcome of interest (ACP conversations) because it made the work feasible27. In pilot work (details provided in the Appendix; manuscript in preparation), we found the measure highly specific (100%) but insensitive (41%). To increase the reliability of the outcome assessment, two independent, blinded analysts abstracted the patient data provided by the physician practice, with complete agreement, and then one analyst (MM) performed the analyses.
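Because the pilot compared billing against chart review, sensitivity and specificity come from a 2x2 table, and together they determine how a true conversation rate p translates into an observed billing rate: billed = Se·p + (1 − Sp)(1 − p). The sketch below shows both calculations and the standard Rogan–Gladen inversion. The class and function names and the example counts are hypothetical, and the inversion assumes non-differential misclassification, an assumption not established here.

```python
from dataclasses import dataclass


@dataclass
class Validation:
    """2x2 counts comparing ACP billing (test) with chart review (reference)."""
    billed_and_documented: int      # true positives
    billed_not_documented: int      # false positives
    not_billed_but_documented: int  # false negatives
    neither: int                    # true negatives

    @property
    def sensitivity(self) -> float:
        tp, fn = self.billed_and_documented, self.not_billed_but_documented
        return tp / (tp + fn)

    @property
    def specificity(self) -> float:
        tn, fp = self.neither, self.billed_not_documented
        return tn / (tn + fp)


def expected_billing(true_rate: float, sens: float, spec: float) -> float:
    """Proportion of patients with an ACP bill implied by a true conversation rate."""
    return sens * true_rate + (1 - spec) * (1 - true_rate)


def implied_true_rate(billed: float, sens: float, spec: float) -> float:
    """Rogan-Gladen correction: invert expected_billing for the true rate."""
    return (billed + spec - 1) / (sens + spec - 1)


if __name__ == "__main__":
    v = Validation(41, 0, 59, 100)  # made-up counts reproducing Se 0.41, Sp 1.0
    print(v.sensitivity, v.specificity)
    print(expected_billing(0.5, v.sensitivity, v.specificity))
```

With the pilot's figures (sensitivity 0.41, specificity 1.0), `expected_billing(0.5, 0.41, 1.0)` returns 0.205: under these assumptions, even if half of patients had a conversation, only about one in five would carry an ACP bill.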

Sample Size and Protocol Deviations

Power Calculation

To calculate the sample size for the trial, we defined a clinically meaningful effect as a 3% absolute increase in ACP billing across quarters (twice the 1.5% per-quarter increase that the physician practice had achieved between 2018 and 2019 with enhanced usual care). We then estimated the design effect (a measure of the inefficiency of the design compared with a completely randomized one) and performed conventional power calculations in R28. Based on the practice’s ACP billing in Quarter 1 2020, a hospital intra-class correlation coefficient of 0.01–0.10, 160 evaluable patients per physician-quarter, 25 to 30 physicians per hospital, and 4 to 8 hospitals per step, we anticipated being able to detect an absolute difference in ACP billing before and after distribution of the game of 1% with 80% power and of 3.5% with greater than 99% power, using a two-sided test at the 0.05 level.
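The role of the design effect can be illustrated with textbook formulas. The sketch below computes the per-arm sample size for an individually randomized comparison of two proportions and the variance inflation 1 + (m − 1)ρ of a parallel cluster design. It is not the trial’s stepped-wedge calculation, which uses a different, design-specific formula and was performed in R; the function names are ours, and treating 160 patients as one cluster is purely illustrative.

```python
import math
from statistics import NormalDist


def n_per_arm(p0: float, p1: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Patients per arm for an individually randomized two-proportion comparison."""
    z = NormalDist()
    z_a = z.inv_cdf(1 - alpha / 2)  # two-sided test
    z_b = z.inv_cdf(power)
    variance = p0 * (1 - p0) + p1 * (1 - p1)
    return math.ceil((z_a + z_b) ** 2 * variance / (p1 - p0) ** 2)


def parallel_cluster_design_effect(m: float, icc: float) -> float:
    """Variance inflation for a parallel cluster design with m patients per cluster."""
    return 1 + (m - 1) * icc


if __name__ == "__main__":
    n = n_per_arm(0.21, 0.24)  # 21% baseline plus a 3-point increase
    for icc in (0.01, 0.10):
        de = parallel_cluster_design_effect(160, icc)
        print(f"ICC {icc:.2f}: design effect {de:.2f}, inflated n per arm {math.ceil(n * de)}")
```

For a rise from 21% to 24%, `n_per_arm(0.21, 0.24)` gives roughly 3,000 patients per arm. With 160 patients per cluster, the parallel-cluster design effect ranges from 2.59 (ρ = 0.01) to 16.9 (ρ = 0.10), consistent with the authors’ concern that a high hospital-level ICC makes a parallel-cluster design inefficient.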

Protocol Deviations

We followed a pre-defined protocol with four deviations10. First, we mailed iPads directly to participants’ homes instead of to a practice-employed nurse liaison. Second, we could not use game-based analytics to assess adherence (see Appendix) and relied on self-report. Third, after step 3, we increased the cap on physician enrollment from 30 to 40 per step because of physician interest in the trial. Fourth, we planned direct observation of ACP behaviors at a subset of sites, but canceled this analysis because of restrictions imposed by COVID-19.

Analyses

We summarized hospital, physician, and patient characteristics using means (standard deviations) and proportions, and compared their distributions using chi-square and F tests. We calculated a cooperation rate for the trial as the proportion of invited, eligible physicians at randomized hospitals who agreed to participate, a response rate as the proportion who agreed to participate and performed any study tasks, and a completion rate as the proportion who completed all the study tasks.

Fidelity of Intervention Delivery and Receipt

We summarized the length of time that physicians spent playing the game. We compared responses to items on the survey of physician attitudes to ACP before and after the intervention using McNemar tests, and summarized responses to the vignettes.
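McNemar’s test uses only the discordant pairs, that is, physicians whose response to an attitude item changed between the pre- and post-game surveys. A minimal sketch with both the exact binomial and the continuity-corrected chi-square variants follows; the text does not say which variant was used, and the function name and counts are invented for illustration.

```python
import math


def mcnemar(b: int, c: int, exact: bool = True) -> float:
    """Two-sided McNemar p-value from discordant pair counts.

    b: physicians who endorsed an item before but not after the game
    c: physicians who endorsed it after but not before
    Concordant pairs do not enter the test.
    """
    n = b + c
    if n == 0:
        return 1.0
    if exact:
        # Under H0, b ~ Binomial(n, 0.5); double the smaller tail, capped at 1.
        tail = sum(math.comb(n, k) for k in range(min(b, c) + 1)) / 2 ** n
        return min(1.0, 2 * tail)
    # Chi-square approximation (1 df) with continuity correction.
    stat = max(abs(b - c) - 1, 0) ** 2 / n
    return math.erfc(math.sqrt(stat / 2))  # upper tail of chi-square with 1 df


if __name__ == "__main__":
    print(mcnemar(2, 12), mcnemar(2, 12, exact=False))
```

For example, if 2 physicians stopped endorsing an item and 12 started, `mcnemar(2, 12)` is about 0.013; with equal discordant counts the p-value is 1, which is what “attitudes did not change” looks like in this test.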

Statistical Models and Analyses to Estimate Relationship of Intervention to Outcomes

In our primary analysis, we applied the intention-to-treat principle, estimating the effect of treatment assignment (not delivery) among all inpatients over the age of 65 who received care from at least one enrolled hospitalist. In a descriptive analysis of the outcome, we summarized the proportion of patients with an ACP bill in the pre- and post-intervention periods.

To estimate the effect of the video game, we used a traditional statistical model for the analysis of stepped-wedge designs, assuming a homogeneous intervention effect across steps, with the presence of an ACP bill as the dependent variable. Specifically, we estimated a mixed-effects, patient-level logistic regression model with indicator variables for trial step and calendar time, patient and hospital covariates hypothesized to influence the likelihood of an ACP conversation (e.g., serious illness), and random effects for hospitals to account for clustering of patient observations within each hospital29,30. We specify the full model in the Appendix.
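One common way to write a model of this kind, following the usual formulation for stepped-wedge analyses, is shown below. The notation is ours, and the exact specification (including which covariates enter the covariate vector) is given in the Appendix, so this is an assumed general form rather than the authors’ model. Here $Y_{ijt}$ indicates an ACP bill for patient $j$ in hospital $i$ during calendar period $t$; $\beta_t$ are calendar-time effects; $\delta_{s(i)}$ is the effect of hospital $i$’s randomized step $s(i)$; $X_{it}=1$ once hospital $i$ has crossed over to the intervention by period $t$; $\mathbf{Z}_{ijt}$ holds patient and hospital covariates; and $\alpha_i$ is a hospital random intercept.

$$
\operatorname{logit}\Pr(Y_{ijt}=1) = \mu + \beta_t + \delta_{s(i)} + \theta X_{it} + \boldsymbol{\gamma}^{\top}\mathbf{Z}_{ijt} + \alpha_i,
\qquad \alpha_i \sim \mathcal{N}(0,\tau^2)
$$

Under this form, $e^{\theta}$ is the single, pooled odds ratio for the intervention, conditional on hospital.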

We conducted planned secondary analyses to evaluate heterogeneity of the effect among steps (including a step-by-intervention interaction) and the dose–response effect. In post hoc analyses, we tested the durability of the treatment effect (a time-by-treatment interaction); excluded hospitals that ended their contract with the practice within 3 months of the conclusion of the trial (to mitigate incomplete data); restricted patients to those treated by physicians at their original site of enrollment (to mitigate physician cross-over among steps); and focused on the sickest patients (including a mortality-by-intervention interaction).
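The planned step-by-intervention interaction can be expressed by replacing a single intervention effect with step-specific effects $\theta_s$. In our own notation (an assumed form, not the Appendix specification), with $Y_{ijt}$, $\beta_t$, $\delta_{s(i)}$, $X_{it}$, $\mathbf{Z}_{ijt}$, and $\alpha_i$ defined as for the pooled model:

$$
\operatorname{logit}\Pr(Y_{ijt}=1) = \mu + \beta_t + \delta_{s(i)} + \sum_{s=1}^{5} \theta_s\,\mathbb{1}[s(i)=s]\,X_{it} + \boldsymbol{\gamma}^{\top}\mathbf{Z}_{ijt} + \alpha_i
$$

Heterogeneity then corresponds to testing $H_0:\ \theta_1=\theta_2=\theta_3=\theta_4=\theta_5$, for example with a likelihood-ratio or Wald test on 4 degrees of freedom, and each $e^{\theta_s}$ is a step-specific odds ratio.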

RESULTS

Hospital, Physician, and Patient Characteristics

We enrolled 35 hospitals and 163 eligible, invited hospitalists (cooperation 52%), and identified 44,235 patients treated by these physicians across the trial period of July 2020–May 2021 (see Fig. 1). Hospitals were mostly large (51%), urban (89%), and Southern (66%). Physicians were mostly male (66%), Asian (52%), and experienced (mean 6.6 years [SD 5] in practice). At baseline, they reported using ACP billing codes often (45%) or always (33%) after having an ACP conversation. Most of their patients were over 75 (57%); 35% had at least one serious illness, and 15% had COVID-19. We include a description of hospital, physician, and patient characteristics in Table 1 and a comparison of these characteristics in the Appendix.

Fidelity of Intervention Delivery and Receipt

Among physicians who enrolled in the trial, 161 (response 99%) completed the post-enrollment survey and 132 reported playing the video game for ≥ 2 h (completion 81%). No physician reported any harms from the intervention. Physicians had positive attitudes toward ACP before the trial, describing confidence in their ability to discuss ACP with patients and belief that ACP improved end-of-life care. These attitudes did not change after exposure to the video game. After playing the game, most participants (58%) reported prioritizing ACP conversations for all patients over the age of 65, even among those with the lowest risk of inpatient and 1-year mortality. We include more details in the Appendix.

Fidelity of Intervention Enactment

The intervention rollout coincided with the winter 2020–2021 wave of the pandemic, with steps 1–2 occurring as it rose, step 3 occurring at its peak, and steps 4–5 occurring as it ebbed (see Fig. 2). ACP billing declined over the study from 26 to 21% (see Table 1). After adjustment for time, patient, and hospital characteristics, when the effect of the intervention was pooled across the steps, exposure to the video game had no significant average effect on ACP billing (odds ratio [OR] 0.96; 95% confidence interval [CI] 0.88–1.06), as shown in Table 2. However, we found a significant interaction between the step of the trial and the effect of the intervention (p < 0.001), with odds ratios above 1 in steps 1–3 (OR 1.03, 95% CI 0.89–1.19 [step 1]; OR 1.15, 95% CI 0.98–1.36 [step 2]; OR 1.13, 95% CI 0.97–1.33 [step 3]) and below 1 in steps 4–5 (OR 0.66, 95% CI 0.57–0.76 [step 4]; OR 0.96, 95% CI 0.89–1.19 [step 5]). We present these analyses in Table 3, along with the time-by-treatment interaction and dose–response analyses, which confirm the heterogeneity of the intervention’s effect across steps. Additional post hoc sensitivity analyses are presented in the Appendix. Only one analysis changed the main effect of the intervention from null to positive: comparing decedents with survivors. This result should be interpreted with caution, not only because it comes from a post hoc analysis but also because it is conditioned on an outcome (death) after “treatment” (ACP).
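Odds ratios are hard to read as absolute changes in billing. The helper below converts an OR into the billing proportion it implies at a chosen baseline. Using 21% (the practice’s Quarter 1 2020 figure) as the baseline is our illustrative assumption, and because mixed-model ORs are conditional on hospital, these are rough hospital-level translations rather than population-averaged effects reported by the study; `or_to_probability` is a hypothetical name.

```python
def or_to_probability(baseline: float, odds_ratio: float) -> float:
    """Outcome probability implied by applying an odds ratio to a baseline probability."""
    odds = baseline / (1 - baseline) * odds_ratio  # baseline odds scaled by the OR
    return odds / (1 + odds)                       # convert odds back to a probability


if __name__ == "__main__":
    # Point estimates quoted in the Results (pooled, step 2, step 4).
    for label, ratio in [("pooled", 0.96), ("step 2", 1.15), ("step 4", 0.66)]:
        print(f"{label}: OR {ratio:.2f} -> {or_to_probability(0.21, ratio):.1%}")
```

At a 21% baseline, OR 0.96 corresponds to about 20.3%, OR 1.15 to about 23.4%, and OR 0.66 to about 14.9%, which puts the step 4 estimate in the context of the 3-percentage-point effect the trial was designed to detect.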

DISCUSSION

In this trial of a video game designed to increase physician willingness to have ACP conversations with hospitalized adults, adherence to the experimental protocol was high, and physicians reported strongly positive attitudes toward ACP conversations. Nonetheless, ACP billing practices did not improve between the pre- and post-intervention periods. In planned secondary analyses, we found an interaction between the step of the trial and the effect of the game.

There is increasing controversy in the literature over the ability of ACP initiatives to improve end-of-life care in the USA31. Systematic reviews differ in their conclusions about the effect of advance care planning on patient outcomes, and a recent opinion piece suggests that “in the moment” decision support (i.e., goals-of-care conversations) is more useful for aligning care with treatment preferences.5,19,20,21 We speculated that ACP conversations conducted during acute care hospitalizations would resemble “in the moment” decision support more closely than those in the outpatient setting, because they probably inform more proximate (hospital) decisions. Ultimately, our trial cannot inform this controversy because it had a null result.

Several factors could have contributed to this null effect. First, when designing the trial in 2019, we selected a stepped-wedge rather than a parallel-cluster design to reduce the impact of site-level intraclass correlation on trial power32. Stepped-wedge trials assume that clusters experience secular trends equivalently, particularly when randomization balances the distribution of cluster characteristics across steps. However, the winter 2020–2021 wave of COVID-19 began to rise shortly after we started enrollment. The stress exerted by the pandemic potentially had a differential impact across the steps, changing the contextual conditions moderating the intervention in unexpected ways and potentially biasing results toward the null33. Anecdotally, for example, we learned that hospitals in step 4, where a reduced rate of ACP billing was observed, experienced greater organizational turmoil (e.g., losing the clinical nurse provider who spearheaded their ACP initiative; changing their physician leader) than hospitals in the other steps of the trial. We cannot, however, exclude the possibility that these ecological observations are unrelated to the observed effects.

Second, we used ACP billing to assess the effect of the intervention34. In a chart review, billing data captured physician behavior with good specificity but limited sensitivity, under-estimating the number of conversations that occurred in practice. We selected ACP billing pragmatically, but cannot exclude the possibility that a trial with a more sensitive outcome measure (e.g., direct observation) may have had a different result.

Third, we selected our behavioral target to maximize the potential for scalability and to address a gap in the physician practice’s existing quality improvement program, but in doing so may have limited the incremental efficacy of the intervention.17,18,35 Other barriers (e.g., competing patient care obligations) may have a greater impact on behavior, particularly during a pandemic13,14,15. The intervention may also have encountered a ceiling effect, as most physicians expressed generally positive attitudes toward ACP at the start of the trial and many reported always using the billing codes. Finally, we designed the experiment to detect a clinically meaningful effect size of 3%, based on a review of the literature, but may have overestimated the potential potency of the intervention.

The observation that ACP billing did increase among the sickest cohort of patients (those who died) suggests potential future directions for this program of research. For example, interventions may need to improve physician recognition of patients with serious illness in addition to increasing their willingness to have these conversations. Other potential opportunities include combining the game with interventions that target other determinants of behavior (e.g., communication skill), testing its effect on physicians with less positive attitudes to ACP, and using more sensitive measures of behavior (e.g., natural language processing of charts). Finally, future trials (ideally conducted outside of a pandemic) should use best-practice implementation science methods to evaluate the effectiveness of the intervention more fully.

We note several weaknesses of the trial. First, the organization with which we partnered had an explicit commitment to increasing ACP within hospitals, so the effect the video game would have outside this context is unclear. Second, we did not supplement data collection with a systematic evaluation of ACP behaviors at each hospital, which may have provided insight into the contextual conditions that moderated the game’s effect. We planned this analysis, but canceled data collection because the pandemic made site visits infeasible. Third, we powered the trial to include all hospitalized patients, not just those with serious illness, who might have experienced the greatest impact of the intervention. Fourth, we limited the pre-intervention assessment of attitudes to ACP to minimize respondent burden, but in doing so impeded our ability to make inferences about the mechanism of the null trial result.

In summary, exposure to a customized, theory-based video game did not consistently affect the ACP billing practices of physicians when treating hospitalized older adults. However, changes in contextual conditions at hospitals because of the COVID-19 pandemic made isolating a pure and coherent effect of the intervention challenging and sensitive to model specifications. Next steps should include testing the effect of the intervention on a cohort of patients with more serious illness.

Data Availability

The de-identified dataset will be made available upon written request to the senior author.

References

Tulsky JA. Improving quality of care for serious illness: findings and recommendations of the Institute of Medicine report on dying in America. JAMA Intern Med, 2015. 175(5): p. 840-1.

Rosenberg AR, et al. Now, more than ever, is the time for early and frequent advance care planning. J Clin Oncol, 2020. 38(26): p. 2956-2959.

Schwerzmann M, et al. Recommendations for advance care planning in adults with congenital heart disease: a position paper from the ESC Working Group of Adult Congenital Heart Disease, the Association of Cardiovascular Nursing and Allied Professions (ACNAP), the European Association for Palliative Care (EAPC), and the International Society for Adult Congenital Heart Disease (ISACHD). Eur Heart J, 2020. 41(43): p. 4200-4210.

Qaseem A, et al. Evidence-based interventions to improve the palliative care of pain, dyspnea, and depression at the end of life: a clinical practice guideline from the American College of Physicians. Ann Intern Med, 2008. 148(2): p. 141-6.

Schichtel M, et al. Clinician-targeted interventions to improve advance care planning in heart failure: a systematic review and meta-analysis. Heart, 2019. 105(17): p. 1316-1324.

Houben CHM, et al. Efficacy of advance care planning: a systematic review and meta-analysis. J Am Med Dir Assoc, 2014. 15(7): p. 477-489.

Bryant J, et al. Effectiveness of interventions to increase participation in advance care planning for people with a diagnosis of dementia: A systematic review. Palliat Med, 2019. 33(3): p. 262-273.

Ryan RM, Deci EL. Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. Am Psychol, 2000. 55(1): p. 68-78.

Mohan D, et al. Development of a theory-based video-game intervention to increase advance care planning conversations by healthcare providers. Implement Sci Commun, 2021. 2(1): p. 117.

Mohan D, et al. Videogame intervention to increase advance care planning conversations by hospitalists with older adults: study protocol for a stepped-wedge clinical trial. BMJ Open, 2021. 11(3): p. e045084.

Mohan D, et al. Using incentives to recruit physicians into behavioral trials: lessons learned from four studies. BMC Res Notes, 2017. 10(1): p. 776.

Anderson WG, Berlinger N, Ragland J, et al. Hospital-based prognosis and goals of care discussions with seriously ill patients: a pathway to integrate a key primary palliative care process into the workflow of hospitalist physicians and their teams, in Society of Hospital Medicine and The Hastings Center. 2017.

Howard M, et al. Barriers to and enablers of advance care planning with patients in primary care: Survey of health care providers. Can Fam Physician, 2018. 64(4): p. e190-e198.

Lund S, Richardson A, May C. Barriers to advance care planning at the end of life: an explanatory systematic review of implementation studies. PLoS One, 2015. 10(2): p. e0116629.

Heyland DK, et al. Failure to engage hospitalized elderly patients and their families in advance care planning. JAMA Intern Med, 2013. 173(9): p. 778-87.

Centers for Medicare and Medicaid Services (2019). Quality measures fact sheet: Advance Care Plan (ACP) [NQF#0326]. Accessed 30 May 2023; Available from: https://innovation.cms.gov/files/fact-sheet/bpciadvanced-fs-nqf0326.pdf.

Sacks OA, Knutzen KE, Rudolph MA, et al. Advance care planning and professional satisfaction from "doing the right thing": Interviews with hospitalist chiefs. J Pain Symptom Manage, 2020. 60(4): p. e31-e34.

Mohan D, et al. A new standard for advance care planning (ACP) conversations in the hospital: results from a Delphi panel. J Gen Intern Med, 2021. 36(1): p. 69-76.

Vanderhaeghen B, et al. Toward hospital implementation of advance care planning: should hospital professionals be involved. Qual Health Res, 2018. 28(3): p. 456-465.

Fulmer T, et al. Physicians' views on advance care planning and end-of-life care conversations. J Am Geriatr Soc, 2018. 66(6): p. 1201-1205.

Mullick A, Martin J, Sallnow L. An introduction to advance care planning in practice. BMJ, 2013. 347: p. f6064.

Fanta L, Tyler J. Physician perceptions of barriers to advance care planning. S D Med, 2017. 70(7): p. 303-309.

Deci EL, Ryan RM. Self-determination theory: A macrotheory of human motivation, development, and health. Canadian Psychology / Psychologie canadienne, 2008. 49(3): p. 182-185.

Michie S, et al. The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: building an international consensus for the reporting of behavior change interventions. Ann Behav Med, 2013. 46(1): p. 81-95.

Miller-Day M, Hecht ML. Narrative means to preventative ends: a narrative engagement framework for designing prevention interventions. Health Commun, 2013. 28(7): p. 657-70.

Zhou Q, et al. Factors associated with nontransfer in trauma patients meeting American College of Surgeons' criteria for transfer at nontertiary centers. JAMA Surg, 2017. 152(4): p. 369-376.

Barnato AE, et al. Use of advance care planning billing codes for hospitalized older adults at high risk of dying: a national observational study. J Hosp Med, 2019. 14(4): p. 229-231.

Arnup SJ, et al. Understanding the cluster randomised crossover design: a graphical illustration of the components of variation and a sample size tutorial. Trials, 2017. 18(1): p. 381.

Kelley AS, et al. Identifying older adults with serious illness: a critical step toward improving the value of health care. Health Serv Res, 2017. 52(1): p. 113-131.

Kelley AS, et al. The serious illness population: ascertainment via electronic health record or claims data. J Pain Symptom Manage, 2021. 62(3): p. e148-e155.

Morrison RS, Meier DE, Arnold RM. What's wrong with advance care planning? JAMA, 2021. 326(16): p. 1575-1576.

Hemming K, et al. The stepped wedge cluster randomised trial: rationale, design, analysis, and reporting. BMJ, 2015. 350: p. h391.

Hemming K, Taljaard M. Reflection on modern methods: when is a stepped-wedge cluster randomized trial a good study design choice. Int J Epidemiol, 2020. 49(3): p. 1043-1052.

Jones CA, et al. Top 10 tips for using advance care planning codes in palliative medicine and beyond. J Palliat Med, 2016. 19(12): p. 1249-1253.

Mohan D, et al. Serious games may improve physician heuristics in trauma triage. Proc Natl Acad Sci U S A, 2018. 115(37): p. 9204-9209.

Acknowledgements

The authors thank Dartmouth Presidential Scholar undergraduate research assistants Allison Zheng, Deborah Feifer, and Olivia Brody-Bizar for their help recruiting and enrolling trial participants; Devang Agarwal for data abstraction; and Derek C. Angus, MD, of the University of Pittsburgh for his advice on the design of the trial.

Funding

This work was supported by the National Institute on Aging (P01AG019783, Barnato PI) and the Patrick and Catherine Weldon Donaghue Medical Research Foundation (Barnato PI). Hopewell Hospitalist is an iteration of a game funded by the National Library of Medicine (DP2 LM012339, Mohan PI).

Author information

Contributions

Study concept and design or acquisition and analysis of data: DM, AJO, JC, MM, MM, MR, JAE, AEB.

Drafting of the manuscript: DM.

Critical revision of the manuscript for important intellectual content: AJO, JC, MM, MM, MR, JAE, AEB.

All authors read and approved the final manuscript.

Ethics declarations

Ethics Approval and Consent to Participate

The Dartmouth Committee for the Protection of Human Subjects approved this study (STUDY00031980). Because we recruited physicians through emailed letters soliciting participation, we obtained a waiver of written consent. The study team explained the study protocol to all physicians who agreed to participate in the trial and obtained their electronic consent.

Consent for Publication

Not applicable.

Competing interests

The authors have no financial conflicts to disclose.

Disclaimer

The funding agencies reviewed the study but played no role in its design, analysis, or interpretation.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Mohan, D., O’Malley, A.J., Chelen, J. et al. Using a Video Game Intervention to Increase Hospitalists’ Advance Care Planning Conversations with Older Adults: a Stepped Wedge Randomized Clinical Trial. J GEN INTERN MED 38, 3224–3234 (2023). https://doi.org/10.1007/s11606-023-08297-y

DOI: https://doi.org/10.1007/s11606-023-08297-y