Abstract

Monitoring systems have become increasingly prevalent in order to increase the safety of elderly people who live alone. These systems are designed to raise alerts when adverse events are detected, which in turn enables family and carers to take action in a timely manner. However, monitoring systems typically suffer from two problems: they may generate false alerts or miss true adverse events.

This motivates the two user studies presented in this paper: (1) in the first study, we investigate the effect of the performance of different monitoring systems, in terms of accuracy and error type, on users’ trust in these systems and behaviour; and (2) in the second study, we examine the effect of recommendations made by an advisor agent on users’ behaviour.

Our user studies take the form of a web-based game set in a retirement village, where elderly residents live in smart homes equipped with monitoring systems. Players, who “work” in the village, perform a primary task whereby they must ensure the welfare of the residents by attending to adverse events in a timely manner, and a secondary routine task that demands their attention. These conditions are typical of a retirement setting, where workers perform various duties in addition to keeping an eye on a monitoring system.

Our main findings pertain to: (1) the identification of user types that shed light on users’ trust in automation and aspects of their behaviour; (2) the effect of monitoring-system accuracy and error type on users’ trust and behaviour; (3) the effect of the recommendations made by an advisor agent on users’ behaviour; and (4) the identification of influential factors in models that predict users’ trust and behaviour. The studies that yield these findings are enabled by two methodological contributions: (5) the game itself, which supports experimentation with various factors, and a version of the game augmented with an advisor agent; and (6) techniques for calibrating the parameters of the game and determining the recommendations of the advisor agent.

1 Introduction

Monitoring systems have become increasingly prevalent in order to increase the safety of elderly people who live alone. These systems are designed to raise alerts when adverse events are detected, which in turn enables family and carers to take action in a timely manner. However, monitoring systems typically suffer from two problems: they may generate false alerts or miss true adverse events.

This motivates our first research question, focusing on a care-taking scenario: (RQ1) What is the effect of monitoring-system accuracy and error type on users’ trust in automation and behaviour? This question is addressed in our first user study, called the monitoring-system study. This study was conducted in a web-based game set in a retirement village, where elderly residents live in smart homes equipped with monitoring systems. Players, who “work” in the village, perform a primary task whereby they must ensure the welfare of the residents by attending to adverse events in a timely manner, and a secondary routine task that demands their attention (Footnote 1). These conditions are typical of settings where workers perform various duties in addition to keeping an eye on a monitoring system.

In our study, users interacted with different monitoring systems that committed two types of common errors: False Alerts (FAs) and Misses of true events. We investigated the influence of these errors on users’ trust in a monitoring system, compliance and reliance. Compliance refers to an operator responding in accordance with an alarm signal (in our case, an alert raised by the monitoring system), and reliance refers to an operator taking no action when no alarm is raised (in our case, assuming that the monitoring system has not missed any adverse events) [45].
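The two behavioural measures can be made concrete with a minimal Python sketch. It assumes a hypothetical per-card log (the `Step` record and its field names are illustrative, not taken from the game), and it operationalises compliance as the fraction of alerts attended and reliance as the fraction of alert-free cards on which the user did not check on the residents. The measures actually used in the studies may be defined differently.

```python
from dataclasses import dataclass

@dataclass
class Step:
    """One card interval in a hypothetical interaction log."""
    alert: bool     # the monitoring system raised an alert
    attended: bool  # the user attended to that alert
    checked: bool   # the user checked on the residents unprompted

def compliance(log):
    """Fraction of alerts that the user attended to."""
    alerts = [s for s in log if s.alert]
    return sum(s.attended for s in alerts) / len(alerts) if alerts else float("nan")

def reliance(log):
    """Fraction of alert-free intervals in which the user took no action."""
    quiet = [s for s in log if not s.alert]
    return sum(not s.checked for s in quiet) / len(quiet) if quiet else float("nan")

log = [
    Step(alert=True, attended=True, checked=False),
    Step(alert=True, attended=False, checked=False),
    Step(alert=False, attended=False, checked=False),
    Step(alert=False, attended=False, checked=False),
    Step(alert=False, attended=False, checked=False),
    Step(alert=False, attended=False, checked=True),
]
print(compliance(log), reliance(log))
```

Under this reading, a high-compliance / high-reliance user scores close to 1 on both measures, while a low-compliance / low-reliance user scores close to 0 on both.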

Even though participants reacted to FAs and Misses in this study, most users did not make large changes to their general behaviour patterns throughout the study. This led us to posit our second research question: (RQ2) If we had a good advisor agent that makes recommendations about how users should interact with a monitoring system, what is the effect of its recommendations on users’ behaviour? This question is addressed in our second study, called the advisor-agent study. Here, we added to the game a “very good” agent that makes rational recommendations about how to act in light of a monitoring system’s alerts or lack thereof, and is endowed with features that are deemed influential according to the literature (Section 2.2); and we determined the training effect of this agent, i.e., do users change their compliance and reliance on the monitoring system as a result of their exposure to the agent? The idea is that if such an agent can affect user behaviour, then it is worth performing further studies that examine the impact of individual agent features. However, such an investigation is outside the scope of this research.

Our observations of users’ behaviour in both studies prompted our third research question: (RQ3) Can we identify factors that predict users’ trust and behaviour? One of the factors we considered in order to answer this question was user type. Specifically, we asked: Can we identify user types that shed light on behaviours of interest and have predictive value? To answer this question, we automatically grouped participants according to basic observable behaviours. We then checked whether the answers to research questions RQ1 and RQ2 differ for different groups, e.g., do Misses affect the behaviour of one group more than that of another?

Table 1 summarizes the research questions, the studies that address them, the platforms that support these studies, and the main results. We now describe the main findings obtained from our studies, followed by the methodological contributions pertaining to the design of the game and the advisor agent.

1.1 Main findings

Our main findings pertain to: (1) the identification of user types that shed light on users’ trust in automation and aspects of their behaviour; (2) the effect of monitoring-system accuracy and error type on users’ trust, compliance and reliance on the system; (3) the effect of the recommendations made by a good advisor agent on users’ compliance and reliance on the monitoring system, and learning outcomes; and (4) the identification of influential factors in models that predict users’ trust and aspects of their behaviour.

User types obtained from basic behaviours. We automatically identified different types of users according to their behaviour in various stages of the game. For instance, high-compliance / high-reliance users attend to a large proportion of alerts, and tend not to act in the absence of alerts; while low-compliance / low-reliance users ignore many alerts, but act often in the absence of alerts. These user types shed further light on the answers to our research questions, as described below.

Effect of monitoring-system accuracy and error type on user trust in automation and behaviour. Intuitively, one would expect high FAs to reduce trust and compliance, and high Misses to reduce trust and reliance. In addition, one would expect trust to be positively correlated with system performance, and with compliance and reliance (the more we trust a system, the more we comply with its advice and rely on it, i.e., the less we check on it).

As shown in Section 2.1, these intuitions were not always validated experimentally. Hoff and Bashir [27] address this point in their comprehensive survey paper by noting that “Overall, the specific impact that false alarms and misses have on trust likely depends on the negative consequences associated with each error type in a specific context”. Thus, it is worthwhile to conduct studies about trust and behaviour in different contexts — our elderly care-taking scenario, where the consequences of users’ actions affect elderly patients, has not been investigated to date. In addition, our study differs from most previous studies in that it considers FAs and Misses together, since both occur in real monitoring systems.

The results of our study agree with the first set of intuitions: (1) system performance (FAs and Misses) affects trust (FAs have a slightly stronger effect), (2) FAs affect compliance, and (3) Misses affect reliance. However, when we considered separately the types of users we identified, we found that they are affected differently by FAs and Misses. For instance, users of one type reacted to changes in FAs, but were largely unaffected by changes in Misses.

Interestingly, the second set of intuitions did not hold: we found no correlation between trust and reliance, and we found a very weak correlation between trust and compliance.

Effect of the recommendations made by a good advisor agent on users’ behaviour. This part of our work falls under the umbrella of choice architectures [66], in the sense that we compare two ways in which people interact with a monitoring system: (1) users make their own decisions about how to react to alerts raised by a monitoring system or lack thereof; and (2) an advisor agent makes suggestions to steer users towards making better decisions [46]. The results of our study indicate that the agent-based architecture leads to improved decision-making outcomes (in the direction encouraged by the agent), both while users work with the agent and when they work independently later on, which shows that the agent has a training effect. In addition, the agent’s advice attenuated the differences between different types of users, yielding larger improvements for those who did not perform the monitoring task well initially.

Influential factors in models that predict users’ trust in automation and aspects of their behaviour. We automatically derived models that make the following predictions: trust in the monitoring system, compliance and reliance; and trust in the advisor agent, conformity with its advice and behaviour improvement (when users no longer had the agent; Footnote 2). We found that the current value of a feature is predictive of its own value in the short term, e.g., previous trust and reliance predict future trust and reliance respectively – a phenomenon that is related to trust inertia [39]; and that user types identified in early stages of a game have predictive value for compliance, reliance and training outcomes.

1.2 Methodological contributions – game and agent

Our experiments are supported by a web-based game and an advisor agent grafted onto this game.

The game. Participants in our experiment “work” in a retirement village whose elderly residents live in smart homes. Each home is equipped with a monitoring system that raises alerts when it detects an adverse event, such as a fall, or a potential hazard, such as a tap left running for a long time. The participants’ “job” consists of performing a routine “administration task”, and ensuring the welfare of the residents, which can be done by attending to the monitoring system’s alerts or checking on the residents from time to time.

Our game supports the systematic investigation of the influence of the following factors, and combinations thereof, on user trust and behaviour: system performance (accuracy and error type), situation (risk, cognitive load and consequences), communication style (etiquette and speech versus text), transparency (explanations) and feedback (apologies and communication of capabilities). In our monitoring-system study, we investigate the influence of system performance on users’ trust and behaviour.

Our game design methodology offers a principled approach for setting rewards and penalties in order to emphasize factors of interest (Section 3.3). This is in contrast to [11], who provided no details about their reward structure, or [30, 37], who had an ad hoc structure.

The advisor agent. We offer an algorithm for generating advice, and propose guidelines for justifying recommendations and providing feedback to users. Our advisor agent supports the investigation of the influence of different aspects of such an agent (viz competence, appearance, communication style, transparency and feedback) on users’ behaviour. However, as mentioned above, the focus of our study is to investigate the training effect of an agent endowed with influential features.

1.3 Roadmap to the paper

This paper is organized as follows. In Section 2, we discuss related research, focusing on the effect of system performance and features of assistive agents on users’ trust and behaviour. In Section 3, we describe the experiment, and present our game, including our approach for calibrating the parameters of the game. Section 4 describes the advisor agent, and outlines the generation of its policy for interacting with the monitoring system. Our experimental results appear in Section 5, followed by our predictive models in Section 6, and concluding remarks in Section 7.

2 Related Research

When investigating aspects of human behaviour, there is a long-standing tradition of using games and scenarios that rely on a narrative in order to engage participants. The idea is that such tools will elicit more realistic responses than questionnaires — this idea was refined in [59] by associating user types with particular game designs, and in [47] by associating strategies employed in persuasive gamified systems with domains and personality traits.

Trust between people has been studied by means of economic games such as the trust game [6] and the ultimatum game [25]. Games have also been used to investigate the influence of different aspects of automated devices on subjective trust-related attributes (e.g., self-reported trust and perception of reliability) and user behaviour (notably compliance and reliance). Some of the aspects that have been studied are: system dependability [39, 73] and error type [11], task criticality [52], anthropomorphism [29, 61, 70] or addressing the outcomes of actions (e.g., errors made) [5, 16, 19, 29, 32]. The cited studies showed that feedback increases trust in automation. However, the results regarding trust calibration are conflicting: according to [32, 61, 70], feedback leads to appropriate trust calibration, while the opposite was found in [16, 19]. Focusing on addressing outcomes, de Visser et al. [15] offered strategies to repair breaches in trust, such as apologizing (conveying regret and taking responsibility) [33], empathizing [8], explaining errors [19], and using an anthropomorphic channel [14, 50].

Level of control is the extent to which a user determines how a task is performed — it ranges from no control (full automation) to complete control (low-level automation) [62]. Verberne et al. [68] found that a mixed level of control between user and system was more trustworthy than no user control. In contrast, the experiments described in [55] yielded the lowest trust ratings for a medium level of automation, with a low automation level receiving the highest trust ratings, and a high automation level receiving intermediate ratings.

Summary. In short, increased anthropomorphism has been shown to increase users’ trust in automation, as well as its influence on users’ behaviour. In addition, trust is increased by ease of use of the automation, utilization of an older avatar and polite interaction, transparency and provision of feedback. However, the results regarding level of control are conflicting.

3 The Experiment and the Game

Our experiments most resemble Johnson’s [30], in that they consider FAs and Misses jointly, require users to take initiative in the absence of a stimulus, and comprise two tasks, one of which is interrupted by alerts (but in our case, this task is repetitive, rather than continuous). Section 3.1 provides an overview of our experiments, Section 3.2 presents the game, and Section 3.3 describes how the parameters of the game are determined.

3.1 Experiment overview

Our game was employed in two studies: monitoring system and advisor agent. In the first study, we investigated the effect of system accuracy and error type on user trust and behaviour, and in the second study, we investigated the training effect of a good advisor agent. Clearly, we had to select design features for the monitoring system and the advisor agent. For the monitoring system, we followed current industry standards whereby a disembodied system sends written alerts to carers (e.g., www.sofihub.com). For the advisor agent, we selected features that distinguish it from the monitoring system, and which, according to the findings reported in Section 2.2, increase trust.

At the beginning of our studies, participants filled in a demographic and technology-experience questionnaire, and a questionnaire about their propensity to trust devices, adapted from [43, 44] (Table 3). Participants were then shown the complete version of the abridged narrative in Figure 1 and a training video, and proceeded to the game part.

Monitoring-system study. We considered six types of monitoring systems, where each system has a particular combination of % of FAs, {HighFA, LowFA}, and % of Misses, {HighMiss, LowMiss, NoMiss} (Table 4). The study was divided into two within-subject experiments, which were conducted as follows: Sona1, which featured four systems representing combinations of {HighFA, LowFA} \(\times \) {LowMiss, NoMiss}; and Sona2, where the combinations were {HighFA, LowFA} \(\times \) {HighMiss, LowMiss}. In each experiment, a participant played one or more trials and four games, each with a different type of monitoring system. A game comprises two stages, and a report of the performance of the monitoring system and the user is produced after each stage (Figure 3). Users entered their level of trust in a monitoring system (on a 1-5 Likert scale) after seeing the performance report.
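The factorial structure of the two experiments can be enumerated directly. The short Python sketch below builds the system types from the level names given above; the `systems` helper and the `FA/Miss` label format are illustrative choices. It shows that the two experiments share the LowMiss systems, which is why eight experiment-level conditions correspond to six distinct monitoring systems.

```python
from itertools import product

FA_LEVELS = ("HighFA", "LowFA")
SONA1_MISS_LEVELS = ("LowMiss", "NoMiss")
SONA2_MISS_LEVELS = ("HighMiss", "LowMiss")

def systems(fa_levels, miss_levels):
    """All FA x Miss combinations, labelled as 'FA/Miss'."""
    return [f"{fa}/{miss}" for fa, miss in product(fa_levels, miss_levels)]

sona1 = systems(FA_LEVELS, SONA1_MISS_LEVELS)
sona2 = systems(FA_LEVELS, SONA2_MISS_LEVELS)
all_systems = sorted(set(sona1) | set(sona2))
shared = sorted(set(sona1) & set(sona2))

print("Sona1:", sona1)
print("Sona2:", sona2)
print("Distinct systems:", len(all_systems))
print("Shared by both experiments:", shared)
```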

In both experiments, we compensated for the effect of presentation order of different types of monitoring systems, but we found no order effect.

Upon completion of the game, participants were asked for their opinion about different aspects of the experiment, e.g., engagement, stress and difficulty of the experiment, importance of the administration and monitoring tasks, and reliability of the monitoring system.

Advisor-agent study. We compared two choice architectures: Agent and NoAgent – with and without an advisor agent respectively. The study was a mixed between-subjects and within-subject experiment, where we assigned one group of users to each choice architecture. In the between-subjects part, we compared the behaviours of the two cohorts, and in the within-subject part, we examined the behaviour of each cohort separately. Both arms of the experiment comprised a trial and two games, each with two stages. The experiment was conducted as follows: the NoAgent users decided by themselves how to interact with the monitoring system in both games; while the Agent users were shown by the advisor agent how to make decisions about their actions in the first game, and then played the second game by themselves. Prior to interacting with the advisor agent, users in the Agent group were given an introduction to the agent and its capabilities (Figure 6). After each stage of the game, a performance report is produced, which, for the Agent group, includes the agent’s performance. After seeing the report, all users entered their trust in the monitoring system, and users in the Agent group also entered their trust in the agent.

As noted in Section 4.3, the notional accuracy of our advisor agent is 80%, which is considered high by Parasuraman and Miller [52]. For this study, we chose a HighFA/HighMiss monitoring system, where 67% of the alerts are true and 67% of the adverse events are detected by the system. This configuration, which is deemed trustable according to [50], was selected in order to clearly differentiate between the advisor agent and the monitoring system.

Upon completion of the game, participants in the NoAgent cohort were asked the same questions as those posed in the monitoring-system study. However, for the Agent group, we replaced most of the post-game questions with questions about the advisor agent, including a question about users’ preferences regarding whether and how to interact with the agent. Owing to the length of this paper, we report only on this last question.

3.2 The game

As stated above, our game comprises two tasks: a primary maintenance task of taking care of the elderly residents, and a secondary routine administration task, which keeps players occupied (a version of the monitoring-system study and the advisor-agent study can be accessed at MONITOR and ADVISOR respectively). The routine task is a one-back memory card game (a special case of the n-back task [35]), where participants are shown a sequence of stimuli – in our case, playing cards – and they must decide whether the current stimulus is the same as the previous one. Clearly, the card-playing task differs from the routine tasks performed in a real care-taking scenario. However, the presence of a secondary task that demands users’ attention enhances the ecological validity of our scenario. This type of simplification is often used in psychological experiments [7, 36, 54], and was employed in [10, 17] to manipulate cognitive load.
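The one-back decision rule can be sketched in a few lines of Python. This is an illustration only: the card labels, the convention that the first card has no target, and the use of `None` for a skipped card are assumptions made for the example, not details of the game's implementation.

```python
def one_back_targets(cards):
    """For each card after the first, True if it matches the previous card."""
    return [cur == prev for prev, cur in zip(cards, cards[1:])]

def score_responses(cards, responses):
    """Tally responses to cards 2..n; a response of None counts as a skipped card."""
    tally = {"correct": 0, "wrong": 0, "skipped": 0}
    for target, response in zip(one_back_targets(cards), responses):
        if response is None:
            tally["skipped"] += 1
        elif response == target:
            tally["correct"] += 1
        else:
            tally["wrong"] += 1
    return tally

cards = ["7H", "7H", "KD", "2C", "2C"]
responses = [True, None, True, True]  # "same as previous?" answers for cards 2 to 5
print(score_responses(cards, responses))
```

The three tallies correspond to the outcomes that the game rewards or penalizes in the administration task: correct answers, wrong answers and skipped cards.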

Figure 1 shows an abridged version of the narrative about the scenario and the game, and Figure 2 illustrates the game interface. The left-hand panel shows the card game (Figure 2(a)); the remaining game time and current income appear at the top of the screen. The right-hand panel is used for the trust-relevant task, viz. interacting with the monitoring system. This panel allows users to check on the residents, which yields an accurate eyewitness report that displays events that were missed by the system or true alerts that were ignored by the user (Figure 2(b)). Figure 2(c) shows a true low-risk alert generated by the system, and the feedback after the user clicks the attend button. Figure 2(d) depicts a high-risk alert that turns out to be false, and the feedback generated for attending to it. The outcome of decisions made in the card game (correct or wrong) and the feedback for attending to alerts are reinforced with auditory signals.

To give players timely general feedback, a performance report is issued half-way through a game with a monitoring system, and at the end of the game (Figure 3). In the left-hand panel of the report, participants are given feedback about their own performance, and in the right-hand panel, they are informed about the monitoring system’s performance and the events that remained unattended. In the bottom-right panel of the report, participants are asked to enter their level of trust in the monitoring system on a 1-5 Likert scale.

3.3 Determining the Parameters of the Game

The operating parameters of the game must satisfy the following conditions: (1) the length of the game should enable users to learn how to act and to form an opinion about the monitoring system, without becoming bored; (2) the frequency of the cards must be suitable for maintaining users’ interest, but not cause stress; (3) the number and distribution of alerts must be such that users can still play the card game; and (4) the rewards and penalties must be such that “good citizens” that attend promptly to most events, and play the card game reasonably well, end up with earnings above their initial salary.

Next, we discuss the first three factors, followed by the calibration of rewards and penalties.

3.3.1 Game configuration: length, card frequency and alert frequency

A game with a monitoring system lasts 520 seconds, and is divided into two stages (the game clock stops when users perform monitoring activities). A card appears every 4 seconds (65 cards per stage) — 96% of the users deemed this pace to be not stressful or a little stressful, according to our post-experiment questionnaire.

A monitoring system may generate a true alert (TA) for an event or miss an event (Miss), or it may generate a false alert (FA) in the absence of an event. Thus, the number and distribution of alerts depends on the number and distribution of adverse events and the performance profile of a monitoring system. To achieve a playable game with monitoring systems that are trustable and reliable according to [50, 52], we allocated 14 adverse events to each stage (i.e., the probability of an adverse event during a card is \(\hbox {Pr}(\textit{Event})=\frac{14}{65}\)).
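The game configuration figures follow from simple arithmetic, reproduced in the Python sketch below. The constants are taken from the text; the variable names are illustrative.

```python
from fractions import Fraction

GAME_SECONDS = 520      # length of a game with a monitoring system
STAGES = 2              # stages per game
SECONDS_PER_CARD = 4    # a card appears every 4 seconds
EVENTS_PER_STAGE = 14   # adverse events allocated to each stage

stage_seconds = GAME_SECONDS // STAGES
cards_per_stage = stage_seconds // SECONDS_PER_CARD
p_event = Fraction(EVENTS_PER_STAGE, cards_per_stage)

print(f"{stage_seconds} s per stage, {cards_per_stage} cards per stage")
print(f"Pr(Event) = {p_event} (about {float(p_event):.3f} per card)")
```

In other words, an adverse event occurs on roughly one card in five, which leaves most cards free for the administration task.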

Table 4 illustrates the profiles of the monitoring systems in our study. These profiles reflect two proportions of FAs (High and Low FAs) and three proportions of Misses (High, Low and No Misses). The probabilities associated with these rubrics were chosen to reflect system reliabilities in the range specified in [50, 52] — the fluctuations of probabilities of the same type (High or Low) are due to some stages in a game having an extra event.
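To see how a performance profile translates into alert counts, the Python sketch below derives the expected number of TAs, Misses and FAs per stage from two proportions: the fraction of adverse events detected and the fraction of alerts that are true. The example uses the HighFA/HighMiss figures quoted in Section 3.1 (67% for both) together with 14 events per stage. The function name and the use of fractional expected counts are assumptions; the integer counts in Table 4 may differ.

```python
def expected_counts(n_events, detection_rate, true_alert_rate):
    """Expected TAs, Misses and FAs per stage.

    detection_rate:  fraction of adverse events detected by the system
    true_alert_rate: fraction of raised alerts that correspond to a real event
    """
    true_alerts = n_events * detection_rate
    misses = n_events - true_alerts
    # If TA / (TA + FA) = true_alert_rate, then FA = TA * (1 - rate) / rate.
    false_alerts = true_alerts * (1 - true_alert_rate) / true_alert_rate
    return {"TA": true_alerts, "Miss": misses, "FA": false_alerts}

counts = expected_counts(n_events=14, detection_rate=0.67, true_alert_rate=0.67)
for name, value in counts.items():
    print(f"{name}: {value:.2f}")
```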

3.3.2 Rewards and penalties

The game is monetized to reflect the notion that the main task, for which users earn a salary, is to take care of the residents. Hence, they do not receive additional rewards for performing this monitoring task correctly. However, players incur monitoring penalties when they respond to FAs or check on residents, which use company resources, or when they delay attendance to adverse events, which is bad for the residents. In addition, players earn “bonuses” for correct answers in the card game (secondary task), but they are penalized for giving wrong answers or skipping cards.

We first outline our formulation for calculating rewards and penalties (details appear in Appendix A), followed by the calibration of the parameters of the game.

Game formulation – rewards and penalties. A user’s expected net income combines expected earnings from the card game, which are positive, and expected losses from the monitoring task, which are negative. The expected losses from the monitoring task include losses from checking on residents, delayed responses to TAs and Misses, and attendance to FAs.

Calibrating rewards and penalties. The reward structure of the game comprises (1) the monitoring-task penalties $Check, $PenaltyFA and $DelaySlope, which respectively specify losses from checks on residents, attendance to FAs and attendance delays; and (2) the administration task reward $Correct for a correct answer in the card game, and penalties $Skip for a skipped card and $Wrong for an incorrect answer — we set $Skip to −$1 and $Wrong to −$Correct.

Our objective is to calibrate these parameters in order to convey the idea that the monitoring task is more important than the administration task, and to ensure that the expected absolute and relative income of different types of users is commensurate with their behaviour and performance. To this effect, we first defined several hypothetical user types in terms of card-playing and monitoring parameters. Table 5 shows four of these user types, e.g., the Ordinary player / Best carer (second row) skips 10% of the cards, gives a correct answer for 80% of the remaining cards, checks on the residents four times per stage on average, and attends immediately to all alerts.

To calibrate the parameters of the game, we compute the expected earnings of these user types for monitoring systems with different performance profiles. Specifically, we iterate over the following steps: (1) determine the value of $Correct for particular values of $Check, $PenaltyFA and $DelaySlope; and (2) adjust the values of these last three parameters if necessary. These steps, which are performed manually, are repeated until no more adjustments are deemed necessary (Footnote 3). Figure 4 displays the expected net income of the user types in Table 5 as a function of $Correct for the LowFA/NoMiss monitoring system (Figure 4(a)) and the HighFA/LowMiss system (Figure 4(b)). As seen in Figure 4, the expected net income of the user types who take good care of the residents and engage in the game (with varying levels of competence) is positive for \(\$\textit{Correct}\ge \$4\), which is the value we selected. In contrast, user types who do not take good care of the residents will make money for \(\$\textit{Correct}\ge \$6\) and \(\$\textit{Correct}\ge \$8\) for the LowFA/NoMiss system and the HighFA/LowMiss system respectively, if they play the card game very well.
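The calibration loop can be illustrated with a simplified Python sketch of the expected net income per stage. It is not the formulation in Appendix A. The card-game part uses the values stated in the text ($Skip = −$1, $Wrong = −$Correct, 65 cards per stage) and the Ordinary player / Best carer description from Table 5. The monitoring costs (`check_cost`, `fa_penalty`, `delay_slope`), the number of FAs attended, and the linear delay penalty are placeholder assumptions chosen only to make the example run; they are not the calibrated values of the game.

```python
CARDS_PER_STAGE = 65  # Section 3.3.1
SKIP_REWARD = -1.0    # $Skip, as stated in the text

def card_income(correct_reward, skip_rate, accuracy, n_cards=CARDS_PER_STAGE):
    """Expected card-game earnings per stage, with $Wrong = -$Correct."""
    answered = n_cards * (1 - skip_rate)
    skipped = n_cards * skip_rate
    # Each answered card earns +$Correct with prob. accuracy, -$Correct otherwise.
    return answered * (2 * accuracy - 1) * correct_reward + skipped * SKIP_REWARD

def monitoring_loss(n_checks, n_fa_attended, delay_cards,
                    check_cost, fa_penalty, delay_slope):
    """Expected monitoring losses per stage (negative); linear delay is an assumption."""
    return -(n_checks * check_cost
             + n_fa_attended * fa_penalty
             + delay_cards * delay_slope)

def net_income(correct_reward, player, carer, costs):
    return card_income(correct_reward, **player) + monitoring_loss(**carer, **costs)

def break_even_correct(player, carer, costs, candidates=range(1, 11)):
    """Smallest whole-dollar $Correct with non-negative expected net income, or None."""
    for value in candidates:
        if net_income(value, player, carer, costs) >= 0:
            return value
    return None

# Ordinary player / Best carer (Table 5): skips 10% of cards, 80% correct,
# four checks per stage, attends immediately (no delay).
player = dict(skip_rate=0.10, accuracy=0.80)
carer = dict(n_checks=4, n_fa_attended=5, delay_cards=0)          # n_fa_attended is hypothetical
costs = dict(check_cost=5.0, fa_penalty=10.0, delay_slope=5.0)    # placeholder values

print(net_income(3, player, carer, costs))
print(break_even_correct(player, carer, costs))
```

Repeating this computation for each hypothetical user type and each performance profile, and adjusting the monitoring costs between passes, mirrors the manual two-step iteration described above.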

4 The Advisor Agent

Our advisor agent offers suggestions about how to act in light of a monitoring system’s alerts or lack thereof. As mentioned in Section 1, we wanted our agent to have the best chance to influence users’ behaviour. Therefore, we endowed it with design and performance features that promote trust and compliance, according to the findings reported in Section 2.2. Section 4.1 describes the agent’s design features, focusing on transparency and feedback, Section 4.2 outlines our calculation of the agent’s policy, and Section 4.3 describes the agent’s performance.

4.1 Agent design features

We first describe briefly our agent’s appearance/anthropomorphism, ease of use and communication style — these attributes were directly sourced from the literature; and then discuss in more detail the agent’s transparency and feedback.

4.1.1 Appearance/anthropomorphism, ease of use and communication style

- Appearance/anthropomorphism – In line with [13, 23, 27, 50], our agent has a human appearance and oral communication: speech is considered an anthropomorphic channel [14], and we wanted to distinguish between agent communications and monitoring-system notifications, which are textual. The only textual instruction accompanying the agent is written advice about how to interact with the agent. Figure 5 shows how our agent is incorporated into the game: its image and the written advice are displayed at the top of the interface.

- Ease of use – Our agent gives spoken advice when an alert is raised by a monitoring system and when a periodic check is required. In addition, the agent or the monitoring system provides feedback after a user’s actions.

- Communication style – Based on the results in [38, 51], we chose a middle-aged male appearance (Footnote 4). To address the findings in [15] regarding variation in expression, we pre-recorded three spoken messages, with different levels of verboseness, for each type of justification and feedback given by the agent (Sections 4.1.2 and 4.1.3 respectively), and randomly select one of them (the components of these messages were generated manually on the basis of the concepts described in Sections 4.1.2 and 4.1.3, but they can be easily generated using programmable templates). Finally, our agent is proactive, i.e., it offers advice without being asked, owing to the training efficacy of this mode of interaction [34].

4.1.2 Transparency

In line with the findings in [19, 48, 71], the agent justifies its recommendations for specific actions — these explanations must be brief, since the game is fast paced, and they are given orally.

An advice message has the following components: (1) recommended action – obtained from the agent’s policy (Section 4.2), (2) alert risk (high or low) – optional; and (3) rationale for the action (Table 6). Our agent may recommend to Check on the residents in the absence of an alert, and it may recommend one of three actions when an alert has been raised: Attend, Check or Check\(+1\); Check\(+1\) differs from Check in that the user is advised to ignore the alert for one card, and then check on the residents in the next card (Footnote 5). Check or Check\(+1\) may be suggested for an alert, instead of Attend, in order to catch previous Misses or avoid attending to an FA. These ideas, together with information about the performance profile of the monitoring system and the reward structure of the game, make up our manually-derived rationales for the actions recommended by the agent (Footnote 6).

Our agent’s rationales are backward looking (Section 2.2), as future rewards, which are used in forward-looking explanations, are not informative in our case. Specifically, our rationales mention the following “teachable” applicability conditions: (a) recency of the last check on the residents, (b) accuracy (error rate) of the monitoring system, (c) risk of the event flagged in an alert – optional, and (d) need for a periodic check.

Table 6 displays the components of advice messages and sample messages of different levels of verboseness. For instance, the row marked with an “\(*\)” illustrates the message generated for a Check\(+1\) advice given for a low risk alert, where the rationale is that checking can be delayed because the alert is low risk.
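The message structure described above lends itself to template-based generation. The following Python sketch is illustrative only: the template wording, the `RATIONALES` entries and the function name `build_advice` are hypothetical and are not the messages used in the study. Only the three components (recommended action, optional alert risk, rationale) and the random choice among three levels of verboseness come from the text.

```python
import random

# Hypothetical templates, one per verboseness level (the study used pre-recorded messages).
TEMPLATES = {
    "short": "{action}.",
    "medium": "{action}: {rationale}.",
    "long": "My advice is {action}{risk_clause}, because {rationale}.",
}

# Hypothetical rationale wording, keyed by the applicability conditions listed in the text.
RATIONALES = {
    "recent_check": "you checked on the residents recently",
    "low_accuracy": "the monitoring system makes many errors",
    "low_risk": "the alert is low risk",
    "periodic": "it is time for a periodic check",
}


def build_advice(action, rationale_key, risk=None, rng=random):
    """Assemble one advice message from its components.

    action: "Attend", "Check" or "Check+1".
    risk: "high", "low" or None (the alert-risk component is optional).
    """
    level = rng.choice(sorted(TEMPLATES))  # pick a verboseness level at random
    risk_clause = f" for this {risk}-risk alert" if risk else ""
    # str.format ignores keyword arguments that a template does not use.
    return TEMPLATES[level].format(
        action=action,
        risk_clause=risk_clause,
        rationale=RATIONALES[rationale_key],
    )


print(build_advice("Check+1", "low_risk", risk="low"))
```

Keeping the rationale wording separate from the templates means that a new applicability condition only requires a new dictionary entry.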

Table 6: Components of advice messages (recommended action, alert risk, rationale), and sample spoken advice message.

Table 7: Factors that determine the agent’s feedback (agent’s advice, whether the advice was followed, alert status and eyewitness report), feedback components (including an attend reminder), and sample spoken feedback message.

4.1.3 Feedback

Following [5, 16, 19, 29, 32], we present information about the agent’s capabilities when the agent is introduced (Figure 6), provide summary feedback after each stage of the game with the agent (like that in Figure 3, with additional agent-related information), and give immediate outcome-related feedback for users’ actions. This feedback has the following components when the action is Check or Check\(+1\) (if a user’s action is Attend, the game gives automatic feedback, as shown in Figures 2(c) and 2(d)):

-

Apology or acknowledgment – As noted in [15, 33], apologies are instrumental in repairing trust breaches due to automation failure, which happens when the agent’s advice is incorrect, e.g., to Attend to an alert that turns out to be false. An acknowledgment is generated when both the agent’s advice and an alternative action are correct.

-

Teachable component – This component notes positive aspects of an action recommended by the agent or taken by a user, e.g., an FA or Misses were revealed by checking on the residents.

-

Attend advice – This advice is given to make sure users attend to adverse events seen in an eyewitness report.

Table 7 displays information about the agent’s immediate feedback for an action performed by a user after receiving the agent’s advice (Columns 1-4), and sample messages of different levels of verboseness (Column 5). Focusing on users’ actions Check or Check\(+1\), the components of the feedback appear in Column 4. The factors that determine these components are: the agent’s advice (gray rows spanning Table 7), whether the advice was followed (Column 1 marks the cases where it was not), whether the alert was True or False (Column 2), and whether the eyewitness report is empty (\(\emptyset \)) or contains Misses (Column 3). For example, the row marked with an “\(*\)” depicts a situation where the agent’s advice was Attend, but the user checked on the residents instead. This Check showed that the alert was True (i.e., the advice was correct), but also revealed previous Misses (i.e., the user’s action, which differed from the advice, was also correct). In this case, the agent acknowledges the accuracy of its advice, and reinforces the user’s correct action, which caught missed events.


4.1.4 Summary of design features

In short, our agent is a middle-aged male that proactively offers spoken, explained advice and feedback. Potential trust miscalibration due to increased anthropomorphism, male gender and feedback is prevented by our agent’s high expected accuracy (Section 4.3).

4.2 Calculating the agent’s policy

The problem of deriving a policy for the agent resembles a Partially Observable (high-order) Markov Decision Process (POMDP), in the sense that it has a probability distribution over all the possible states, and it combines the immediate reward for an action with future rewards [57] (we minimize expected costs instead of maximizing expected rewards). However, we have taken advantage of the features of our game to find a tailored solution: (1) periodic checks on residents are important in a care-taking scenario; and (2) when an alert is raised, an Attend does not affect the belief state about Misses, and a Check leaves no uncertainty about Misses or the status of the alert.

Thus, our agent’s policy has two main parts: (1) a sub-policy for periodically checking on the residents, i.e., the optimal interval that should elapse between successive checks (Section 4.2.1); and (2) a sub-policy for handling alerts, i.e., which action to perform when an alert is raised (Section 4.2.2).

The derivation of the policy takes into account the probability of adverse events and the performance profile of the monitoring system – probabilities of FAs and Misses (Section 3.3.1); and the reward structure of the game – cost of checking on residents ($Check), penalty for attending to FAs ($PenaltyFA) and penalty for attendance delay ($DelaySlope) (Section 3.3.2).

4.2.1 Sub-policy for performing periodic checks

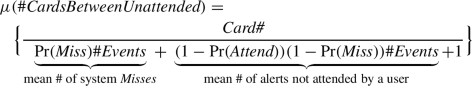

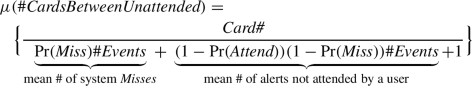

The optimal checking interval \(N_{{\varvec{opt}}}\) is that which yields the minimal total expected cost for a particular configuration of our game, as follows (details appear in Appendix B.1):

\[N_{{\varvec{opt}}} = \mathop{\textrm{argmin}}_{i+1} \; E(\textit{CumCheckCost}(i+1))\]

where the minimum is taken over candidate checking intervals within one stage of our game (65 cards), and \(E(\textit{CumCheckCost}(i+1))\) is the expected cumulative cost of checking every \(i+1\) cards throughout a stage of a game. This cost is determined by the cost of checking on the residents ($Check) plus the expected penalty for delayed event attendance over i cards, which in turn depends on the probability that an adverse event was missed by the monitoring system (\(\hbox {Pr}(\textit{Event}) \times \Pr (\textit{Miss}|\textit{Event})\)), the penalty for each card elapsed while an event remains unattended ($DelaySlope), and the elapsed number of cards for each event that could happen over i cards.

Thus, in the absence of an alert, the agent will remind a player to check on the residents once the optimal checking interval has elapsed. For our game configuration, \(N_{{\varvec{opt}}} = 7\), i.e., if a user has not checked on the residents for the last 6 cards, the agent will remind them to do so in the 7th card.
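A minimal Python sketch of this minimisation follows. The cost model is a simplification assumed for illustration (the actual derivation is in Appendix B.1), and the parameter values in the usage lines are hypothetical, not the configuration of the game. The sketch assumes that checks are evenly spaced over a stage, and that an event missed k cards before the next check incurs a penalty of `delay_slope * k`.

```python
def expected_cum_check_cost(interval, n_cards, p_event, p_miss_given_event,
                            check_cost, delay_slope):
    """Expected cost of checking every `interval` cards over one stage (assumed model)."""
    p_missed = p_event * p_miss_given_event   # Pr(Event) x Pr(Miss | Event)
    gap = interval - 1                        # cards between two checks (i in the text)
    # An event missed k cards before the next check waits k cards, for k = 1..gap.
    delay_penalty = p_missed * delay_slope * gap * (gap + 1) / 2
    n_checks = n_cards / interval             # average number of checks per stage
    return n_checks * (check_cost + delay_penalty)


def optimal_interval(n_cards, **params):
    """Return the interval i+1 in 1..n_cards with the smallest expected cost."""
    return min(range(1, n_cards + 1),
               key=lambda n: expected_cum_check_cost(n, n_cards, **params))


# Hypothetical parameter values, for illustration only.
params = dict(p_event=0.2, p_miss_given_event=0.5, check_cost=2.5, delay_slope=1.0)
n_opt = optimal_interval(65, **params)
print(n_opt)
```

The trade-off is visible in the two terms: longer intervals reduce the number of checks paid for, but the delay penalty grows quadratically with the gap between checks.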

4.2.2 Sub-policy for handling alerts

Figure 7 illustrates a segment of the game timeline (in number of cards) comprising a previous Check at card number \(t_{c}\), an alert at card number \(t_a\), and future periodic checks. If the alert is attended, these checks are expected to happen at the cards dictated by the periodic-check policy, counted from \(t_c\). However, if a Check or Check\(+1\) is performed, the periodic checks shift, and the calculation of expected penalties for Misses is adjusted accordingly. Thus, the expected cumulative cost of a specific Action considered for an alert at card number \(t_a\) is calculated by Equation 4, which comprises four terms (details appear in Appendix B.2).

In terms of POMDPs, the first two terms comprise the immediate reward for an action (shaded pink in Figure 7), and the last two terms represent its future reward. For example, when the action considered for card \(t_a\) is Attend, the immediate expected cost is derived from the probability of the alert being false and the penalty associated with attending to an FA (first term), plus the expected cost at card \(t_a + 1\) (second term), which is obtained from the probability of having another alert at \(t_a + 1\), the probability that this alert is false, and the penalty for attending to an FA or ignoring a TA. The future expected cost for an Attend comprises the expected cost of the sub-policy for periodic checks from \(t_c\) onwards (fourth term) — the third term is 0 for an Attend. The future expected cost due to alerts beyond card \(t_a + 1\) is the same for all the actions, and can therefore be omitted from the calculation.

Computing the alert-handling policy. Algorithm 1 uses Equation 4 to calculate the expected cumulative cost of each action for each card in a stage of the game, assuming that the most recent Check could have been done at any card since the beginning of the game (even though we have a periodic-check sub-policy, users may ignore the agent’s advice, and fail to check on the residents). The algorithm then selects the action with the minimal cost. The chosen actions are stored in a triangular matrix \({{\varvec{ACTION}}_{{\varvec{opt}}}}\), which embodies the alert-handling policy.
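The structure of this computation can be sketched in Python as follows. The cost function is passed in as a parameter because the cost equation is not reproduced in this sketch; `toy_cost` is a hypothetical stand-in with made-up values. The indexing convention (a pair of card numbers for the last check and the alert, with card 0 meaning that no check has been made yet) is also an assumption.

```python
ACTIONS = ("Attend", "Check", "Check+1")


def build_action_matrix(n_cards, expected_cost, actions=ACTIONS):
    """Pick the cheapest action for every (last check, alert) pair of cards.

    expected_cost(action, t_c, t_a) stands in for the expected cumulative cost.
    Only alerts raised after the last check (t_a > t_c) are meaningful, so the
    result is triangular; it is stored as a dict keyed by (t_c, t_a).
    """
    policy = {}
    for t_a in range(1, n_cards + 1):      # card at which the alert is raised
        for t_c in range(0, t_a):          # card of the most recent check (0 = none yet)
            policy[(t_c, t_a)] = min(
                actions, key=lambda a: expected_cost(a, t_c, t_a))
    return policy


def toy_cost(action, t_c, t_a):
    """Hypothetical costs: attending is cheap right after a check, checking otherwise."""
    elapsed = t_a - t_c
    return {"Attend": 1.0 + 0.6 * elapsed,
            "Check": 2.0,
            "Check+1": 2.2}[action]


policy = build_action_matrix(65, toy_cost)
print(policy[(4, 5)], policy[(0, 10)])
```

Because the policy is computed offline for every pair of cards, the agent only needs a lookup when an alert is raised during play.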

4.3 Agent performance

As seen in Table 2, most studies found a correlation between system performance and user trust and behaviour. Thus, in order to engender trust, we need a highly reliable advisor agent. The reliability of the agent’s advice depends on its policy, which in turn depends on the performance profile of the monitoring system. At the limit, if the monitoring system is 100% accurate, our agent will recommend attending to all the alerts and no periodic checks, and will be 100% reliable as well. For the HighFA/HighMiss system employed in our advisor-agent study, the interval for periodic checks is \(N_{{\varvec{opt}}}\) (=7) cards (so Misses may be attended with some delay), and the \({{\varvec{ACTION}}_{{\varvec{opt}}}}\) matrix usually recommends Check or Check\(+1\), rather than Attend; Attend may be suggested when an alert appears shortly after a check has been performed.

When the agent’s recommendations are followed, its notional accuracy (proportion of instances where the actions it recommends yield good outcomes) is 80%, which is defined as highly reliable in [52], compared to the 67% accuracy of the monitoring system. To further validate the agent’s policy, we compared its monetary reward for each stage of a game with that of all the players who had not been exposed to the agent — the agent’s reward was the highest.

5 Experimental Results

We first present demographic information, relevant experience and trust propensity for the participants in the monitoring-system study and the advisor-agent study (Table 8). Our findings are discussed next, followed by a characterization of our participants on the basis of their behaviour.

5.1 Participant demographics, experience and trust propensity

Participants in the monitoring-system study were recruited from the SONA platform (www.sona-systems.com); as seen in Table 8, most of these participants were under 30 years of age. For the advisor-agent study, we decided to recruit an older cohort, who would be more likely to have ageing parents than the monitoring-system study participants. The advisor-agent study participants were recruited from CloudResearch (www.cloudresearch.com; Footnote 7).

Ideally, an experiment should have participants who are personally engaged with the domain. However, it is extremely difficult to recruit participants who work in the aged-care sector. Hence, we recruited crowd workers – an accepted practice for user studies conducted in areas such as User Modeling, Language Technology and Trust. In our case, the problem of out-of-domain participants is mitigated by the following: (1) the narrative immersion we provided; (2) monitoring systems, such as that described in our study, have become increasingly prevalent (as indicated in Table 8, 59% of the participants in the monitoring-system study and 85% of the participants in the advisor-agent study reported medium-high experience with such systems); and (3) many people in the general population have ageing relatives.

As seen in Table 8, the participants in the monitoring-system study differ from the participants in the advisor-agent study in most demographic aspects. However, these differences do not affect the validity of our research for the following reasons: (1) the two studies address different research questions, and (2) the behaviour of the participants in the two studies was not affected by their demographic differences. This was ascertained by performing Wilcoxon rank-sum tests for the activities relevant to the monitoring task (% alerts attended and # checks on residents) to compare the behaviour of participants from the two studies in their initial exposure to the game, i.e., the (first) trial of the monitoring-system study and the first stage of the trial of the advisor-agent study (# checks was pro-rated to account for differences in trial length). The tests did not reject the null hypothesis that both groups exhibit the same behaviour (i.e., no statistically significant differences were found between the behaviours of the two groups).

5.2 Monitoring-system study

This section describes our findings regarding the relationship between system performance, trust and user behaviour, focusing on compliance (% alerts attended) and reliance (# checks on residents, where more checks indicate lower reliance).

Influence of error type on self-reported trust, compliance and reliance. The calculations for trust involved both stages of a game with a monitoring system, while the calculations for compliance and reliance involved only the second stage of a game. This is because users enter their level of trust in the system only after they have played a stage of a game and seen the report (Figure 3). In contrast, users do not have explicit performance information while they are playing the first stage of a game with a monitoring system, and their behaviour may be influenced by the previous system they saw.

As mentioned in Section 1, one would expect high FAs to reduce trust and compliance, and high Misses to reduce trust and reliance. Our results match these intuitions: more errors decrease trust, more FAs decrease compliance, and more Misses decrease reliance (statistically significant with \(\textit{p}\hbox{-}\textit{value}\ll 0.01\) adjusted with Holm-Bonferroni correction for multiple comparisons [28]; the means and standard deviations of trust, compliance and reliance, and p-values prior to Holm-Bonferroni correction appear in Table 14 in Appendix C). In addition, FAs do not affect reliance and Misses do not affect compliance, which is consistent with the findings reported in [11, 64].
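For readers unfamiliar with the Holm-Bonferroni procedure [28], the following Python sketch shows one common way of computing adjusted p-values. The input p-values are made up for illustration and are not results from this study.

```python
def holm_bonferroni(p_values):
    """Return Holm-Bonferroni adjusted p-values, in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])   # indices by ascending p-value
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p-value (k = rank + 1) is multiplied by (m - k + 1).
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)          # keep adjusted values monotone
        adjusted[idx] = running_max
    return adjusted


raw = [0.001, 0.04, 0.03, 0.2]   # hypothetical raw p-values
adjusted = holm_bonferroni(raw)
print(adjusted)
```

A comparison is then declared significant when its adjusted p-value falls below the chosen threshold, e.g., 0.01.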

To determine which independent variable has a stronger effect on trust, we used three measures: ANOVA coefficients for standardized independent variables, change in \(R^2\) and Spearman correlation. All three measures agreed that FAs have a slightly stronger influence than Misses on trust, which lends support to the findings in [24, 30].

Relationship between self-reported trust and behaviour. In line with our statistical significance tests, we used the second stage of each game to determine the relation between user behaviour and self-reported trust. Intuitively, one would expect trust in a system to be positively correlated with both compliance and reliance. However, similarly to [11, 58], we found no correlation between trust and reliance, and only a very weak correlation between trust and compliance.

5.3 Advisor-agent study

This section reports our findings about the views of participants in the Agent group about interacting with the agent, the relationship between trust in the agent and conformity with its advice, and the agent’s training effect.

Views of participants in the Agent group about interacting with the advisor agent. Upon completion of the experiment, we asked participants “Which of these would more closely reflect your views about having the agent (Daniel) to help you use the monitoring system?”. The possible answers were “I prefer to always have the agent / I prefer to always NOT have the agent / I prefer to learn from the agent and work without the agent / Other [with an option to type free text]”. Of the participants, 29% preferred to always have the agent, and 55% preferred to learn from the agent and then work without the agent. That is, 84% of the users saw value in the agent.

Relationship between self-reported trust and behaviour. We calculated the correlation between trust in the agent and conformity with its advice for each stage of Game 1; we deemed that the agent’s advice was followed if a user acted on it within six seconds (Footnote 8). We found no correlation between trust in the agent and conformity with its advice.
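The conformity measure can be sketched in Python as follows. The log format, the function name `conformity` and the sample entries are hypothetical; only the six-second window comes from the text.

```python
CONFORMITY_WINDOW_S = 6.0   # advice counts as followed if acted on within six seconds


def conformity(advice_log, window_s=CONFORMITY_WINDOW_S):
    """Proportion of advice items that the user followed within the time window.

    Each log entry is an (advised_action, user_action, seconds_to_act) tuple;
    user_action and seconds_to_act are None when the user took no action.
    """
    if not advice_log:
        return 0.0
    followed = sum(
        1 for advised, taken, delay in advice_log
        if taken == advised and delay is not None and delay <= window_s
    )
    return followed / len(advice_log)


# Hypothetical log: followed in time, different action, followed too late, no action.
log = [("Check", "Check", 2.1), ("Attend", "Check", 1.0),
       ("Check", "Check", 7.5), ("Attend", None, None)]
print(conformity(log))
```

Computing this proportion per stage gives the values that are then correlated with the self-reported trust scores.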

Training effect of the agent’s advice. As indicated in Section 4.3, in principle, the agent’s advice yields good outcomes 80% of the time, if its recommendations are followed. However, this is not always the case. In practice, the agent’s accuracy for different users varies according to their behaviour. We hoped that users, despite sometimes receiving inaccurate advice, would learn behavioural principles from the agent’s recommendations and their associated justifications (Section 4.1.2).

In order to determine the agent’s training effect, we first define what constitutes good behaviour. In Section 4.3, we noted that to obtain good performance when interacting with the monitoring system employed in this study, participants should perform relatively frequent checks on residents (low reliance) and should attend infrequently to alerts (low compliance). Thus, we define a “better behaviour” as an increase in # checks on residents and a reduction in % alerts attended. This definition is used to test the following hypotheses regarding the agent’s influence on users’ behaviour:

Hypothesis 1

(1) The agent has an immediate influence on users’ behaviour, i.e., users’ behaviour while being advised by the agent is better than their previous behaviour; and (2) this difference is more pronounced than the difference obtained by learning from experience.

Hypothesis 2

(1) The agent has a training effect, i.e., users’ behaviour after interacting with the agent is better than their pre-agent behaviour; and (2) this difference is more pronounced than the difference obtained by learning from experience.

To test Hypothesis 1, we compared the Game 1 behaviour of the Agent cohort (averaged over two stages) with their behaviour in the second stage of the trial, denoted Trial-Stage2, and the Game 1 behaviour of the NoAgent cohort with their Trial-Stage2 behaviour (the # checks in Trial-Stage2 was pro-rated to make them comparable to the # checks in the longer stages of the games). To test Hypothesis 2, we compared the behaviour of the two cohorts in Game 2 (averaged over two stages) with their (pro-rated) Trial-Stage2 behaviour. A Wilcoxon rank-sum test on # checks and % alerts attended in Trial-Stage2 did not reject the null hypothesis that the two cohorts exhibit the same behaviour for this stage.

Our results confirm both of the hypotheses postulated above (Wilcoxon signed-rank test, \(\textit{p}\hbox{-}\textit{value}< 0.01\) adjusted with Holm-Bonferroni correction for multiple comparisons [28]; the means and standard deviations of compliance and reliance in Trial-Stage2, Game 1 and Game 2 for the Agent and NoAgent cohorts, and the p-values of the comparisons prior to Holm-Bonferroni correction appear in Table 15 in Appendix C):

-

Hypothesis 1 (1) The # checks for the Agent group in Game 1 was significantly higher than the (pro-rated) # checks in Trial-Stage2, and the % alerts attended in Game 1 was significantly lower than in Trial-Stage2. Both behaviours are consistent with the agent’s advice, and are better in Game 1. (2) There was no statistically significant difference between the # checks or % alerts attended by the NoAgent group in Game 1 and the corresponding behaviour in Trial-Stage2 (Footnote 9).

-

Hypothesis 2 (1) The # checks for the Agent group in Game 2 was significantly higher than the (pro-rated) # checks in Trial-Stage2, and the % alerts attended in Game 2 was significantly lower than in Trial-Stage2. Further, in Game 2, the participants in the Agent group maintained the behaviours they learned from the agent in Game 1 (there was no statistically significant difference between Game 2 and Game 1). (2) There was no statistically significant difference between the # checks or % alerts attended in Game 2 by the NoAgent group and the corresponding behaviour in Trial-Stage2. In addition, there was no statistically significant difference between Game 2 and Game 1 (Footnote 9).

5.4 Characterizing user types

The behaviours that are directly observable in both studies are the card-playing behaviours (% cards correct and % cards skipped), and the monitoring behaviours (% alerts attended and # checks on residents).

In order to determine whether these behaviours represent different types of users, we used them as input features to a clustering algorithm – K-means. We then checked whether these user types are useful for inferring behaviour patterns pertaining to our research questions.

5.4.1 Monitoring-system study

Behaviour throughout the experiment. Initially, we clustered the participants in our study in order to validate our hypothetical user types (Table 5) against user types derived from real behaviours. Specifically, we used as clustering features the average of each of the four basic behaviours throughout an experiment. The best configuration (Silhouette score = 0.64) comprises the three clusters in Table 9. The users in all the clusters had a similar card-playing behaviour; in fact, we obtained the same clusters without the card-playing features, which indicates that the users differed mainly in their monitoring behaviour. The users in the HighReliance-HighCompliance cluster (low # checks and high % alerts attended) fit the Ordinary Carer type, and those in the MediumReliance-HighCompliance cluster resemble the Best Carer type. The LowReliance-MediumCompliance cluster (high # checks and medium % alerts attended) is noteworthy because the users in this cluster traded alert attendance for checks on residents — a behaviour we did not anticipate.
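The Silhouette score used to compare cluster configurations can be computed as in the following Python sketch. It scores a given assignment of users to clusters and does not perform the K-means clustering itself. The feature values are hypothetical, and Euclidean distance is an assumption.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (Euclidean distance).

    Assumes at least two clusters; points in singleton clusters score 0.
    """
    clusters = {}
    for idx, label in enumerate(labels):
        clusters.setdefault(label, []).append(idx)

    def mean_dist(i, members):
        others = [j for j in members if j != i]
        return sum(math.dist(points[i], points[j]) for j in others) / len(others)

    total = 0.0
    for i, label in enumerate(labels):
        if len(clusters[label]) == 1:
            continue                                   # singleton: coefficient is 0
        a = mean_dist(i, clusters[label])              # cohesion within own cluster
        b = min(mean_dist(i, members)                  # distance to nearest other cluster
                for other, members in clusters.items() if other != label)
        if max(a, b) > 0:
            total += (b - a) / max(a, b)
    return total / len(points)


# Hypothetical users described by two monitoring features.
users = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
print(silhouette_score(users, [0, 0, 1, 1]))
```

Scores close to 1 indicate compact, well-separated clusters, whereas scores near or below 0 indicate overlapping or misassigned clusters.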

Behaviour in the initial stages of the experiment. The user types identified for the entire experiment led us to ask whether user types derived from behaviour in the initial stages of the experiment shed light on users’ trust, compliance and reliance on the monitoring system. This question is addressed below. In Section 6.1, we determine whether these user types have predictive value with respect to trust and behaviour.

To answer the first question, we clustered users according to their monitoring behaviour in the first game of the experiment (averaged over two stages; Footnote 10). Table 10 shows the best configuration (Silhouette score = 0.68). Overall, the user types based on their initial monitoring behaviour resemble the types obtained from users’ behaviour in the entire experiment (Table 9), but there was some migration between clusters throughout the experiment.

The following results were obtained for the three user types in Table 10 (the means, standard deviations and p-values prior to Holm-Bonferroni correction, and Spearman correlations between trust and compliance and between trust and reliance, appear in Table 16 in Appendix C):

-

HighReliance-HighCompliance – The users in this group did not significantly change their trust and reliance on the monitoring system due to changes in the number of Misses. However, they significantly increased their trust in the monitoring system when the proportion of FAs was reduced, and also increased their compliance (trend after Holm-Bonferroni correction). This indicates that users of this type are reactive, and do not engage in the proactive reasoning required to take Misses into account. In terms of correlations, we only found a very weak correlation between trust and compliance for these users.

-

MediumReliance-HighCompliance – The users in this cohort are the most susceptible to changes in FAs and Misses. Their trust in the monitoring system and their reliance on it increased significantly as the number of Misses decreased. They also significantly increased their trust in the monitoring system when the proportion of FAs was reduced, and also increased their compliance (trend after Holm-Bonferroni correction). However, we found no correlation between trust and compliance or reliance for these users.

-

LowReliance-MediumCompliance – The participants in this cluster did not change their behaviour significantly as a result of changes in FAs, but they significantly increased their trust in the monitoring system when the proportion of FAs was reduced. They also increased their trust and reliance on the monitoring system when the proportion of Misses decreased (trend after Holm-Bonferroni correction). In terms of correlations, we found a moderate correlation between trust and compliance and between trust and reliance for these users.

5.4.2 Advisor-agent study

Our experience with the monitoring-system study led us to identify user types in the advisor-agent study. We clustered all the participants according to their behaviour in Trial-Stage2, and clustered separately the participants in the Agent and the NoAgent cohorts according to their Game 1 behaviour (averaged over two stages). Here too, only the monitoring-task features affected the clusters found.

Table 11 shows the monitoring characteristics of the users in the discovered clusters, and the Silhouette scores of these clusters. The clusters found in Trial-Stage2 for both cohorts and in Game 1 for the NoAgent cohort resemble the HighReliance and LowReliance clusters found in the first game of the monitoring-system study (Table 10). In contrast, the Game 1 clusters found for the Agent cohort reflect the influence of the advisor agent, with most of the participants exhibiting a medium-low compliance and a low to very-low reliance on the monitoring system.

Similarly to the monitoring-system study, below we discuss whether the user types obtained from Trial-Stage2 shed light on users’ trust in the agent, conformity with the agent’s advice, and compliance and reliance on the monitoring system. In Section 6.2, we determine whether the user types obtained from Trial-Stage2 and Game 1 have predictive value with respect to trust and behaviour.

The following results were obtained for the two user types identified in Trial-Stage2 for the participants in the Agent cohort (the means, standard deviations and p-values prior to Holm-Bonferroni correction appear in Table 17 in Appendix C).

-

Trust in the advisor agent and conformity with its advice – There is no statistically significant difference between the two clusters in terms of trust in the agent and conformity with its advice. In addition, for both clusters, we found no correlation between trust and conformity.

-

Change in compliance and reliance on the monitoring system due to the advisor agent – Both types of users significantly decreased their compliance with the monitoring system and their reliance on it (i.e., they increased the # checks on residents) in Game 1 relative to Trial-Stage2 (\(\textit{p}\hbox{-}\textit{value}\ll 0.01\)), which is consistent with our results for Hypothesis 1(1). This indicates that both types of users are susceptible to the agent’s advice. Indeed, the decrease in compliance was similar for both groups (and there was no statistically significant difference between their compliance), but the HighReliance group increased their # checks on residents more than the LowReliance group. Still, the # checks on residents of the latter group remained significantly higher than that of the former (\(\textit{p}\hbox{-}\textit{value}< 0.05\)).

6 Predicting Trust and Behaviour

The prediction of trust and behaviour is essential to anticipate the adoption of devices, and to assist people in their usage. In Section 2, we highlighted two pieces of research that predict trust-related aspects: Lee and Moray [39] built time-series models of the influence of system performance on trust, but they did not consider behaviour; and Xu and Dudek [72] employed Dynamic Bayesian Networks to predict trust ratings and user interventions (to override the decisions of automated agents) from system performance and previous trust ratings.

We consider predictive models for the following aspects in our two studies:

-

Monitoring system – a user’s trust score in a monitoring system, compliance (% alerts attended) and reliance (# checks on residents).

-

Advisor agent – a user’s trust score in the advisor agent, conformity (% advice followed) and the user’s learning outcomes.

For both studies, we used three types of input features: System – characteristics of the system and the game; User – demographic information, experience and trust propensity; and User game experience – trust and behaviour in previous stages of the game (details about the input features are provided in Appendix D.1 and D.2 for the monitoring-system study and the advisor-agent study respectively).

We considered several predictive models, viz., random forests, naïve Bayes, support vector machines and linear/logistic regression (we do not have enough data for Deep Learning models), and performed 10-fold cross validation. Random forests outperformed the other models in most cases, and had a stable performance overall (Tables 18 and 19 in Appendix D compare the performance of these models for the monitoring-system study and the advisor-agent study respectively). However, the main significance of our results pertains to the features that have predictive value.

In the following sections, we report the predictive performance of random forests, and describe the most influential features.
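The evaluation protocol described above (k-fold cross-validation scored with RMSE) can be illustrated with a minimal, standard-library sketch. It is not the code used in the studies: the function names, the synthetic data and the mean-predicting stand-in model are all assumptions introduced for illustration, and any learner exposing a fit/predict pair (such as a random forest) could be substituted for the stand-in.

```python
import math
import random
from statistics import mean


def k_fold_indices(n_samples, k=10, seed=0):
    """Shuffle sample indices and split them into k nearly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def rmse(y_true, y_pred):
    """Root mean square error between two equal-length sequences."""
    return math.sqrt(mean((t - p) ** 2 for t, p in zip(y_true, y_pred)))


def cross_validated_rmse(X, y, fit, predict, k=10, seed=0):
    """Average held-out RMSE over k folds for any fit/predict pair."""
    fold_errors = []
    for test_idx in k_fold_indices(len(y), k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(y)) if i not in held_out]
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        preds = predict(model, [X[i] for i in test_idx])
        fold_errors.append(rmse([y[i] for i in test_idx], preds))
    return mean(fold_errors)


# Hypothetical stand-in model: always predict the training-set mean.
def fit_mean(X_train, y_train):
    return mean(y_train)


def predict_mean(model, X_test):
    return [model for _ in X_test]


# Synthetic trust scores on a 1-5 scale (illustrative only, not study data).
rng = random.Random(1)
X = [[rng.random()] for _ in range(50)]
y = [rng.randint(1, 5) for _ in range(50)]
cv_error = cross_validated_rmse(X, y, fit_mean, predict_mean, k=10)
```

Each sample is held out exactly once, so the averaged error reflects performance on data the model did not see during fitting. The sketch assumes at least k samples, so that no fold is empty.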

6.1 Monitoring-system study

We predict a user’s trust in a monitoring system, compliance and reliance for each of the last five stages played by a user (the first stage of the second game contains data used to make predictions for the following stage, and as mentioned in Section 5.4.1, the first game (two stages) was used to cluster the users). Predictive performance was measured with Root Mean Square Error (RMSE), as the target variables are numerical (trust scores are ordinal).

Table 12 shows the RMSEs of the predictions for trust, compliance and reliance obtained with random forests. These errors are between 7% and 15% of the available range, which may be sufficiently informative for practical purposes. The most influential features for predicting trust, compliance and reliance are:

- Trust score [1-5]: the System feature # FAs in the current stage; the User features Ethnicity (which is consistent with the findings reported in [4]) and Average trust propensity; and the User game experience feature Trust in the monitoring system in the preceding stage.

- Compliance (% alerts attended) [0-1]: the User feature Ethnicity; and the User game experience features % alerts attended, # checks and % checks attended in the preceding stage, and User Type based on the first game.

- Reliance (# checks on residents) [0-20]: the User game experience features % alerts attended, # checks and % checks attended in the preceding stage, and User Type based on the first game.

Noteworthy aspects of these features are: (1) a feature value in the preceding stage is predictive of its value in the next stage; (2) user type based on the first game is influential for predicting compliance and reliance (using the individual features that gave rise to the clusters reduces performance); and (3) the only demographic feature that has a high predictive power (for trust and compliance) is ethnicity.
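Reading an RMSE as a percentage of the available range can be made concrete with a short sketch. The target ranges are those stated in the list above; the RMSE values are hypothetical placeholders (not the values in Table 12), and interpreting "available range" as the maximum minus the minimum is an assumption.

```python
def rmse_as_fraction_of_range(rmse_value, lower, upper):
    """Express an RMSE as a fraction of the target variable's range."""
    if upper <= lower:
        raise ValueError("upper must exceed lower")
    return rmse_value / (upper - lower)


# Ranges stated in the text: trust [1-5], compliance [0-1], reliance [0-20].
TARGET_RANGES = {"trust": (1, 5), "compliance": (0, 1), "reliance": (0, 20)}

# Hypothetical RMSE values chosen for illustration only.
hypothetical_rmse = {"trust": 0.4, "compliance": 0.1, "reliance": 2.0}

normalised = {
    name: rmse_as_fraction_of_range(hypothetical_rmse[name], *TARGET_RANGES[name])
    for name in TARGET_RANGES
}
```

Normalising in this way puts targets measured on different scales (a 1-5 score, a proportion and a count) on a common footing, which is what allows a single statement about the error across all three.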

6.2 Advisor-agent study

We predict a user’s trust in the agent and conformity with its advice (% advice followed) for Game 1, and learning outcomes (whether the user has learned a better checking and alert-attendance policy from the agent or from experience) for Game 2. As in the monitoring-system study, predictive performance for trust and conformity was measured using RMSE, but we used accuracy (percentage correct) for learning outcomes, as they are binary – the classes are {Yes, No}.

In order to predict learning outcomes, we must provide an operational definition of learning. To this effect, we harness Hypothesis 2, which posits that there is a training effect if a user’s behaviour in Game 2 is better than their initial behaviour, i.e., they increased the # checks and reduced the % alerts attended (Section 5.3). Specifically, we define learning as follows:

- A user U has learned to check on residents if the # checks performed by U in each stage of Game 2 is higher than their (pro-rated) # checks in Trial-Stage2, and the average # checks performed by U in Game 2 is higher than their (pro-rated) # checks in Trial-Stage2 by at least half a standard deviation of the # checks performed by all the users in Game 2.

- A user U has learned to attend to alerts if the % alerts attended by U in each stage of Game 2 is lower than their % alerts attended in Trial-Stage2, and the average % alerts attended by U in Game 2 is lower than their % alerts attended in Trial-Stage2 by at least half a standard deviation of the % alerts attended by all the users in Game 2.

Although the requirement of half a standard deviation is somewhat arbitrary, it reflects a substantial difference between users’ pre-agent and post-agent behaviour, which is indicative of learning (Footnote 11).
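The two-part learning criterion defined above can be sketched as a single predicate. This is an illustrative reading rather than the study's implementation: the function and parameter names are hypothetical, the population standard deviation is assumed (the text does not say whether a sample or population estimate was used), and pro-rating the Trial-Stage2 value is left to the caller.

```python
from statistics import mean, pstdev


def has_learned(baseline, game2_stages, all_users_game2, higher_is_better):
    """Apply the two-part learning criterion to one user.

    baseline         -- the user's (pro-rated) Trial-Stage2 value
    game2_stages     -- the user's value in each stage of Game 2
    all_users_game2  -- Game 2 values of all users (for the standard deviation)
    higher_is_better -- True for # checks, False for % alerts attended
    """
    sign = 1 if higher_is_better else -1
    # Assumption: population standard deviation over the supplied values.
    margin = 0.5 * pstdev(all_users_game2)
    # Part 1: every Game 2 stage improves on the baseline.
    every_stage_better = all(sign * (v - baseline) > 0 for v in game2_stages)
    # Part 2: the average improvement is at least half a standard deviation.
    average_gain = sign * (mean(game2_stages) - baseline)
    return every_stage_better and average_gain >= margin


# Hypothetical values for illustration only (not study data).
learned_checks = has_learned(4, [6, 7], [2, 4, 6, 8, 10], higher_is_better=True)
learned_alerts = has_learned(0.9, [0.6, 0.7], [0.5, 0.7, 0.9], higher_is_better=False)
```

Requiring an improvement in every stage guards against a single outlying stage driving the average, while the half-standard-deviation margin filters out improvements that are small relative to the variation across users.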

Table 13 shows the RMSEs of the predictions of trust and conformity and the accuracy of the predictions of learning outcomes obtained with random forests. The RMSE for trust is similar to that obtained for the monitoring-system study, and the accuracy of the learning-outcomes predictions is creditable, but the RMSE for conformity is 23% of the range, which leaves room for improvement. Nonetheless, the features with predictive power are noteworthy. The following features alone yield the best performance for predicting trust, conformity with the agent’s advice and learning outcomes:

- Trust score for the agent (Game 1, Stage 2) [1-5]: User game experience feature Trust in the agent in the preceding stage of Game 1.

- Conformity with the agent’s advice (Game 1, Stage 2) [0-1]: User game experience features # checks and conformity with the agent’s advice in the preceding stage of Game 1.

- Learning checks and alert attendances (Game 2) {Yes, No}:

  For the Agent cohort, the majority class was Yes for learning checks (56.66%) and learning alert attendances (53.33%). The best predictors for learning to check are: Agent feature Accuracy in Game 1, and User game experience features # checks in Game 1 and User Type according to Trial-Stage2. The best predictors for learning to attend are: Agent feature Accuracy in Game 1, and User game experience feature User Type according to Game 1.

  For the NoAgent cohort, the majority class was No for learning checks (68.97%) and learning alert attendances (89.66%). The best predictors for learning to check are: User game experience features # checks in Game 1 and User Type according to Trial-Stage2. However, the majority class is the best predictor for learning to attend.
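The role of the majority class as a reference point for the learning-outcome classifiers can be illustrated with a minimal sketch. The labels below are hypothetical and the function names are assumptions; the point is only that a classifier adds value when its accuracy exceeds the share of the most frequent class.

```python
from collections import Counter


def majority_class_baseline(labels):
    """Return the most frequent label and the accuracy of always predicting it."""
    counts = Counter(labels)
    label, count = counts.most_common(1)[0]
    return label, count / len(labels)


def beats_baseline(model_accuracy, labels):
    """True if a model's accuracy exceeds the majority-class accuracy."""
    return model_accuracy > majority_class_baseline(labels)[1]


# Hypothetical, heavily imbalanced outcome labels (not study data).
hypothetical_labels = ["No"] * 9 + ["Yes"] * 1
majority_label, baseline_accuracy = majority_class_baseline(hypothetical_labels)
model_helps = beats_baseline(0.85, hypothetical_labels)
```

When one class dominates, as in this hypothetical example, always predicting that class is already highly accurate, so a learned model can fail to improve on it even when its own accuracy looks high in isolation.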

Similarly to the monitoring-system study, (1) a feature value in a preceding stage is predictive of its value in the next stage; and (2) user type according to Trial-Stage2 or Game 1 is an influential behaviour predictor (here too, using the individual features that gave rise to the clusters reduces performance). In addition, (3) the agent’s Game 1 accuracy for a user is an influential predictor of the user’s learning outcomes for checks on residents and alert attendance (recall that the agent’s accuracy varies according to users’ behaviour).

7 Conclusion

In this paper, we have (1) identified user types that shed light on users’ trust in automation and aspects of their behaviour; (2) ascertained the effect of monitoring-system accuracy and two common error types (false alerts and missed events) on users’ trust, compliance and reliance on the system; (3) determined the effect of the recommendations made by a good advisor agent on users’ compliance and reliance on the monitoring system, and learning outcomes; and (4) identified influential factors for predicting users’ trust and aspects of their behaviour.

User types obtained from basic behaviours. We characterized participants based on two aspects of the behaviour they exhibited early in an experiment: % alerts attended and # checks on residents. What is noteworthy is that the user types obtained from this basic information have predictive value, and shed light on other behaviours. Specifically, users of different types react differently to changes in FAs and Misses. This indicates that user characterizations obtained from a few basic items of information may be of value, an insight that extends beyond reactions to FAs and Misses.

Relationship between system performance, trust and behaviour. Our results are similar to those in [11, 64], i.e., FAs and Misses influence trust, FAs affect compliance and Misses affect reliance. Like [11, 58] and unlike [3, 30, 64, 72, 73], we found no correlation between users’ self-reported trust in the monitoring system and reliance. In addition, similarly to [11, 64], we found only a very weak correlation between trust in the monitoring system and compliance, and found no correlation between trust in the advisor agent and conformity with its advice.

As mentioned above, the effects of FAs and Misses differ for different types of users identified in the monitoring-system study (Section 5.4): one group was highly reactive (to alerts), one group was highly deliberative (performing many checks on the residents), and a third group sat between these extremes. This finding extends Hoff and Bashir’s hypothesis regarding factors that influence the relationship between system performance and users’ trust and behaviour [27] (Section 2), and as above, is applicable beyond responses to FAs and Misses.

Effect of the recommendations made by an advisor agent on users’ behaviour. The results of our study indicate that the agent-based architecture leads to improved decision-making outcomes, both while users work with the agent and when they work independently later on, thus showing that the agent has a training effect. In addition, even though our agent’s advice was not tailored to particular types of users, it led to larger changes in behaviour for the reactive users than for the deliberative users, attenuating the difference between these user types. This finding is in line with the results of Buçinca et al. [9], whereby users who conform excessively with an AI’s recommendations benefit from cognitive forcing – interventions that disrupt heuristic reasoning, and encourage users to engage in analytical thinking.

Predicting trust and behaviour. Our predictive models generate usable predictions of trust scores, compliance and reliance on the monitoring system, conformity with the advisor agent’s advice and learning outcomes. They also complement our findings about features that influence users’ trust and aspects of behaviour. Specifically, in both user studies, (1) a feature value is predictive of its value in the short term, which agrees with the findings in [49]; and (2) user type based on a user’s early behaviour is influential for predicting compliance and reliance on the monitoring system, conformity with the agent’s advice and learning outcomes. A possible explanation for the better predictive performance when clusters (i.e., user types) were used as features (instead of using the features from which the clusters were derived) is that the individual features may lead to over-fitting, while the clusters reflect meaningful abstractions. Finally, the results of the advisor-agent study also indicate that (3) the agent’s accuracy for individual users is a predictor of their learning outcomes. Although this finding is not surprising, it suggests that it is worth taking into account users’ personal experience with a system, which may differ from general system accuracy.

Methodological contributions. The studies that yield these findings are enabled by two methodological contributions: the game itself, which supports experimentation with various factors, and a version of the game augmented with an advisor agent; and techniques for calibrating the parameters of the game and determining the recommendations of the advisor agent.

Limitations and future work. Our studies have the following limitations: (1) our routine administration task is not related to the care-taking scenario; and (2) our participants were crowd workers recruited from the SONA and CloudResearch platforms, and were not aged-care workers. Regarding the first limitation, we posit that the presence of a task that occupies participants’ attention enhances the ecological validity of our user studies, as it represents a common situation where people are responsible for patients’ welfare, while engaged in activities that demand their attention. Regarding the second limitation, the recruitment of crowd workers, although not ideal, has become an accepted standard in studies conducted in User Modeling and related areas. Factors that mitigate this limitation are: our narrative immersion, the observation that most of our participants had experience with smart homes or monitoring devices, and the fact that having ageing relatives is a common experience.

As mentioned in Section 1, Hoff and Bashir [27] noted that “Overall, the specific impact that false alarms and misses have on trust likely depends on the negative consequences associated with each error type in a specific context”. Our monitoring-system study is set in a care-taking scenario, where the consequences pertain to patients, a setting that has not been investigated to date. Since our focus was on the influence of error type on trust and behaviour, the consequences remained constant across different configurations of FAs and Misses. Going forward, it would be worthwhile to conduct studies that vary the consequences of users’ actions or lack thereof.

In terms of our design methodology, the process for setting the parameters of the game is manual (Section 3.3.2). An interesting avenue of investigation involves using an optimization algorithm to calibrate the game parameters together, e.g., to maximize the income gap between different types of users, while ensuring an appropriate level of income.