Abstract

The number of research articles published on COVID-19 has increased dramatically since the outbreak of the pandemic in November 2019. This rapid rate of publication leads to information overload, and it has become increasingly urgent for researchers and medical associations to stay up to date on the latest COVID-19 studies. To address information overload in the COVID-19 scientific literature, this study presents CovSumm, a novel unsupervised graph-based hybrid approach for single-document summarization, evaluated on the CORD-19 dataset. We tested the proposed methodology on the scientific papers in the database dated from January 1, 2021 to December 31, 2021, consisting of 840 documents in total. The proposed text summarization is a hybrid of two distinct extractive approaches: (1) GenCompareSum (a transformer-based approach) and (2) TextRank (a graph-based approach). The sum of the scores generated by both methods is used to rank the sentences for generating the summary. On CORD-19, the Recall-Oriented Understudy for Gisting Evaluation (ROUGE) metric is used to compare the performance of the CovSumm model with various state-of-the-art techniques. The proposed method achieved the highest scores of ROUGE-1: 40.14%, ROUGE-2: 13.25%, and ROUGE-L: 36.32%. The proposed hybrid approach shows improved performance on the CORD-19 dataset compared with existing unsupervised text summarization methods.


1 Introduction

Globally, the SARS-CoV-2 virus has had a destructive effect on communities since the onset of the COVID-19 pandemic in November 2019 [1]. Owing to the rapid growth in the number of research articles, medical communities and researchers are under increased pressure to remain current with the literature [3]. Text summarization aims to condense an article or document into a short form that retains its relevant facts, so that crucial information can be acquired in a short time. It is used in various application domains, such as generating news summaries, email summaries, financial reports, research article summaries, and medical reports to track a patient’s treatment. Condensing vital knowledge into a summary is beneficial since the internet abounds with both relevant and irrelevant information on any topic. It is challenging, as well as time-consuming, for humans to summarize such a large amount of data. This has given rise to the demand for powerful and sophisticated summarizers.

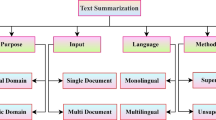

Generally, there are two categories of text summarization approaches: extractive and abstractive [4]. Extractive summarization techniques generate a summary using the most relevant sentences from the given document, whereas abstractive summarization techniques construct new phrases from the source document. The summary generated by abstractive summarization models is more similar to a summary written by humans. Though summaries from abstractive models tend to be more meaningful and grammatically correct, they may not be factually accurate, as shown by several researchers [5, 6]. That makes abstractive summarization inadequate for domains where factual consistency is crucial, such as medical research articles [5]. In addition, Zhong et al. demonstrated that extractive approaches outperformed their abstractive equivalents in human assessment [7]. A third category, hybrid summarization, combines the concepts of extractive and abstractive models. See et al. [8] acknowledge the benefit of hybrid summarization approaches and employ a pointer-generator technique in which the model is primarily abstractive but detects and copies significant facts straight from the source material to eliminate factual inaccuracy. Transformer-based methods are employed to create salient features, and an extractive summary is then constructed to ensure factual consistency.

The pre-trained transformer architecture has evolved over the past few years. The T5 language model [9] has shown significant performance improvements over the baseline transformer model and is used for generative language tasks such as abstractive summarization [10] and query generation. Furthermore, pre-trained Bidirectional Encoder Representations from Transformers (BERT) language models have exhibited positive results on various NLP challenges, including text summarization. Transformers have become increasingly proficient at capturing semantic knowledge, but they pose new challenges. Their processing capacity is restricted by the number of tokens they can handle at once. Furthermore, fine-tuning the transformer’s attention mechanisms comes at a high computational cost. As a result of these constraints, recent text summarization methods often analyze a truncated version of documents [11, 12]. An unsupervised technique was chosen here because it does not require manually labeled datasets for training. By utilizing graph-based knowledge and leveraging correlations between sentences, the most appropriate sentences are extracted to construct the summary.

The notable advantage of the proposed technique over established methods is its novel combination of a graph-based technique and a pre-trained model for unsupervised automatic text summarization. This hybrid approach is referred to as CovSumm. Using language models that are pre-trained on large corpora allows for a superior interpretation of the textual content. These language models have undergone rigorous training on huge amounts of data, enabling them to proficiently capture the semantics of natural language. The integration of graph-based methods in the proposed framework also allows for a more exhaustive investigation of the relationships between sentences. This, in turn, facilitates the recognition of the most critical knowledge to be incorporated into the summary. Overall, the proposed approach presents a new and effective solution for addressing the challenges posed by information overload and data redundancy in the COVID-19 scientific literature, providing a comprehensive summary that enables researchers to stay informed on the latest studies and advancements in the field.

This study is characterized by several contributions, which comprise:

1. An innovative hybrid approach for unsupervised automatic text summarization is proposed that extracts salient sentences in Covid-19-related scientific literature for generating the summary.

2. The hybrid approach is an integration of graph-based techniques and pre-trained language models that achieves a text summarization without requiring large quantities of manually labeled data.

3. Based on the evaluation outcomes, the hybrid approach presented in this study surpasses current unsupervised techniques for automatic text summarization that are considered state of the art.

This article comprises several sections, namely: Sect. 2, which provides an outline of previous research in the field; Sect. 3, which describes the proposed approach; Sect. 4, which presents the experimental results; and Sect. 5, which concludes the paper with a discussion of future directions.

2 Related work

This section offers an overview of the existing literature on extractive text summarization. Document summarization has been widely investigated over the last few years. To summarize a document, extractive summarization algorithms select a set of sentences from the source text. Sentences are extracted from the original corpus, scored, and arranged in the order in which they appeared in the original document to generate a meaningful summary. These methods require the sentences to be converted into feature vectors in order to estimate their similarity. Term frequency-inverse document frequency (TF-IDF) [13], skip-thought vectors [14], Bidirectional Encoder Representations from Transformers (BERT) [15], Global Vectors for word representation (GloVe), and Word2Vec embeddings [16] are widely used techniques for encoding sentences into sequences of vectors.

In 1958, Luhn [17] first presented a concept for text summarization that involves scoring sentences based on the frequency of the significant words they contain. This idea was subsequently refined by Edmundson and Wyllys in 1961 [18]. Mihalcea and Tarau [19] presented the TextRank algorithm, a graph-based system based on Google’s PageRank algorithm. The LexRank algorithm, presented by Erkan and Radev [20], is a graph-based revised interpretation of TextRank. Bishop et al. [21] considered two baselines: the LEAD method, which takes the first N sentences as the summary, and the RANDOM model, which selects the summary sentences at random. Using singular value decomposition (SVD), latent semantic analysis (LSA) retrieves semantically rich phrases and generates a summary [22]. Nenkova and Vanderwende [23] proposed a system known as SumBasic that generates generic summaries from multiple documents.

A wide range of NLP applications show enhanced performance when using pre-trained models [24, 38]. In the medical domain, the authors of [26] presented an unsupervised extractive summarization technique that uses hierarchical clustering to group the contextual BERT embeddings of sentences; the most appropriate sentences are then extracted from each group to generate the summary. Furthermore, [27] presented an unsupervised extractive technique based on the GPT-2 transformer model. The authors used pointwise mutual information for sentence encoding to determine whether sentences and documents are semantically similar. On a medical journal dataset, the presented technique outperformed previous benchmarks. In a recent work [28], Ju et al. introduced an unsupervised extractive method for scientific documents using the pre-trained SciBERT model; experiments were performed on the arXiv, PubMed, and COVID-19 datasets. CAiRE-COVID was proposed in [29] for mining scientific literature in response to an input query; it is composed of a question-answering system with multi-document summarization. Medical researchers assessed the performance of the model using the Kaggle CORD-19 dataset and verified its efficacy. To enhance language comprehension through unsupervised pre-training followed by fine-tuning, a method known as Generative Pre-Training (GPT) was introduced in [24]. BERT, a method for language representation, was proposed in [15]. A hybrid approach was introduced in the work of Bishop et al. [21], who presented an innovative method called GenCompareSum. Evaluated on scientific datasets, this method outperforms both unsupervised and supervised models.

There are many more examples of unsupervised extractive summarization in the literature. The Learning Free Integer Programming Summarizer (LFIP-SUM) is described in [30]. This technique formulates an integer programming problem using pre-trained sentence embedding vectors. Additionally, it employs principal component analysis to identify the optimal number of sentences to extract and to evaluate their significance. What sets LFIP-SUM apart from conventional models is that it does not require labeled training data. The study concludes that this approach offers significant advantages over existing methods and has potential applications in various domains. Belwal et al. [31] presented a graph-based method for extractive text summarization that assigns weights to graph edges for ranking sentences. The weights assigned to the edges depend on the correlation between the sentences, which is determined through a vector space model and topic modeling. The technique also employs topic vector generation to obtain the topic of interest in a given document, and it incorporates a semantic similarity measure to determine sentence relevance, resulting in two approaches for creating the topic vector: combined and individual. The method’s primary contribution is a general mechanism that reduces the input document’s dimension to the topic vector, enabling the comparison of sentences with the vector and achieving impressive ROUGE scores. In [32], the authors introduced an unsupervised method for extractive summarization that combines K-Medoids clustering and Latent Dirichlet Allocation (LDA) topic modeling to minimize topic bias. The findings of this study demonstrate that this approach, with a stronger emphasis on subtopics, outperforms conventional topic modeling and deep learning approaches in unsupervised extractive summarization.
The graph-based summarization technique proposed in the study in [33] takes into account both the resemblance among individual statements and their relation to the entire document. This approach employs topic modeling to determine the pertinence of specific edges to the topics discussed in the text, as well as a semantic measure to evaluate the similarity between nodes. The method EdgeSumm [34] employs four distinct methods to form a summary of a document. In the first method, a unique graph-based model is constructed to represent the document. The subsequent two techniques are responsible for identifying pertinent sentences from the text graph. Finally, when the model-generated summary surpasses the necessary word limit, a fourth technique is employed to get the crucial sentences for the summary. EdgeSumm fuses extractive techniques to leverage their strengths and mitigate their limitations. A distance-augmented sentence graph is used in [35] to model sentences with greater granularity, resulting in the improved characterization of document structures. Additionally, the model is adapted to the multi-document setting by linking the sentence graphs of input documents using proximity-based cross-document edges. Ranksum [36] is an unsupervised extractive text summarization methodology for single documents. The technique employs four features, namely, the topic information, semantic content, significant keywords, and position of each sentence, to generate rankings indicating their degree of saliency. The rankings are then weighted and fused to produce a final score for each sentence. Ranksum employs probabilistic topic models, Siamese networks, and a graph-based method to derive the rankings and eliminate redundant sentences.

3 Proposed methodology

The proposed method, CovSumm, is an unsupervised hybrid extractive approach for COVID-19 document summarization that fuses the sentence scores of GenCompareSum [21], which relies on a generative transformer-based model (T5) and BERT for sentence scoring, with those of TextRank [19], a graph-based model that uses the cosine similarity measure. These are two distinct and complementary lines of research for unsupervised extractive text summarization. The process flow is depicted in Fig. 1. The proposed framework consists of two branches: the left branch is GenCompareSum and the right branch is TextRank. The two methods generate sentence scores using different methodologies; these scores are ultimately fused in the proposed hybrid framework to generate the final summary. The detailed steps are explained in the subsections below.

3.1 Data-preprocessing and heuristic sentence extraction for dataset generation

The CORD-19 dataset containing COVID-19 scientific literature [25, 37] was used for evaluation purposes. The documents published between January 1, 2021 and December 31, 2021 were extracted to construct the summarization corpus. For this dataset, the original abstract of each paper is used as the gold summary and the body of the paper is used as the input document. To evaluate the proposed model’s performance, the summary produced by the model is compared with the gold summary. After retrieving the research articles within the specified time frame, the documents with empty abstract fields were removed. Furthermore, non-English documents and duplicates (identified by paper title) were removed. After these pre-processing steps, 840 scientific articles remained. The input length of the BERT model is restricted to 512 words, a constraint shared by transformer models of this kind. CORD-19 documents average 6970 words in length, which exceeds the input length limit of existing pre-trained language models. Working with long sequences requires high computational power. Since the majority of previous studies evaluating transformer-based methods use truncated documents [7, 10, 11, 12, 21, 37], a corpus was also constructed for evaluating the proposed method by truncating long articles to 512 words. On the assumption that most of the salient knowledge of a research article is documented at its start, we successively add sentences, starting from the top of the article, to form one paragraph until it is 512 words long [1].
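The truncation heuristic described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `truncate_to_word_budget`, the regex-based sentence splitter, and the choice to stop before the first sentence that would exceed the budget (rather than cutting a sentence in half) are all assumptions.

```python
import re


def truncate_to_word_budget(text: str, max_words: int = 512) -> str:
    """Keep leading sentences while the running word count stays within max_words."""
    # Naive sentence splitter (assumption): break after '.', '!' or '?' plus whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    kept, n_words = [], 0
    for sentence in sentences:
        length = len(sentence.split())
        if n_words + length > max_words:
            break  # the next sentence would exceed the budget
        kept.append(sentence)
        n_words += length
    return " ".join(kept)


if __name__ == "__main__":
    sample = "One two three. Four five. Six seven eight nine."
    print(truncate_to_word_budget(sample, max_words=5))
```

A production pipeline would likely use a proper sentence tokenizer, since scientific text contains abbreviations and citations that defeat a punctuation-based split.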

3.2 Sentence scores generation using the pre-trained T5 model

This subsection describes sentence score generation using the pre-trained T5 model of GenCompareSum. The input to the model is a corpus C consisting of k documents, \(C=\left\{D_{1},D_{2},D_{3},\dots,D_{k}\right\}\). Each document consists of n sentences, \(D=\left\{q_{1},q_{2},q_{3},\dots,q_{n}\right\}\), and the output of the system is \(D'=\left\{q'_{1},q'_{2},q'_{3},\dots,q'_{m}\right\}\), where m < n. First, each document is split into sentences, which are then grouped into sections. The T5 transformer model receives these sections as its input and generates a fixed number of text fragments for each section. The output of the T5 text generation model is a set of text fragments \(F=\left\{f_{1},f_{2},f_{3},\dots,f_{l}\right\}\) for each section. The fragments from all sections are combined, and N-gram blocking is employed to remove redundancy. Weights \(W=\left\{w_{1},w_{2},w_{3},\dots,w_{p}\right\}\) are obtained for the top p text fragments. The correlation between each sentence of the source document and each fragment chosen in the previous step is then calculated using BERTScore.
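The N-gram blocking step can be illustrated with a short sketch. It shows one common form of the technique, in which a fragment is dropped when it shares any n-gram with a fragment that was already kept; the exact variant used in GenCompareSum may differ, and the function names and whitespace tokenization are assumptions made for illustration.

```python
def ngrams(tokens, n):
    """Return the set of n-grams (as tuples) contained in a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def ngram_block(fragments, n=3):
    """Keep fragments in order, skipping any that repeat an already-seen n-gram."""
    kept, seen = [], set()
    for fragment in fragments:
        grams = ngrams(fragment.lower().split(), n)
        if grams & seen:
            continue  # overlaps with an earlier fragment, treat as redundant
        kept.append(fragment)
        seen |= grams
    return kept


if __name__ == "__main__":
    candidates = [
        "covid vaccines reduce severe illness",
        "vaccines reduce severe outcomes",
        "masks limit transmission",
    ]
    print(ngram_block(candidates, n=3))
```

In this toy input the second fragment repeats the trigram "vaccines reduce severe" and is therefore blocked, while the other two are kept.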

A similarity matrix is derived based on the scores calculated above. The similarity scores are multiplied by the weights generated for each fragment and summed up over the text fragments to get the final sentence scores using the T5 model. Final scores from the T5 model are summarized as \(S=\left\{{s}_{1},{s}_{2},{s}_{3},\dots ,{s}_{n}\right\}.\)

The equation to calculate the final scores for every sentence i is as stated below.
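Following the description above (similarity scores multiplied by the fragment weights and summed over the top p fragments), Eq. (1) can be reconstructed as shown here. The operator name \(\mathrm{BERTScore}(q_{i},f_{j})\), standing for the similarity between sentence \(q_{i}\) and fragment \(f_{j}\), is notation assumed for this sketch.

\[
s_{i}=\sum_{j=1}^{p} w_{j}\,\mathrm{BERTScore}\left(q_{i},f_{j}\right),\qquad i=1,\dots,n \tag{1}
\]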

3.3 Sentence scores generation using the graph-based model

In this section, the TextRank method of text summarization is described. A total of k documents constitute the corpus C, such that \(C=\left\{D_{1},D_{2},D_{3},\dots,D_{k}\right\}\), which is the input to the model. Each document contains n sentences, \(D=\left\{q_{1},q_{2},q_{3},\dots,q_{n}\right\}\). In the data preprocessing step, sentence tokenization and word tokenization are performed. Subsequently, punctuation and stopwords are removed, and the text is converted to lowercase. Pre-trained GloVe embeddings are used to obtain the sentence vectors \(Vec=\left\{vec_{1},vec_{2},vec_{3},\dots,vec_{n}\right\}\) for each document. The similarity between sentences is computed using the cosine similarity formula, stated as follows.
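For two sentence embedding vectors A and B, the standard cosine similarity, which is presumably what Eq. (2) states, reads as follows. The operator name \(\mathrm{CosSim}\) and the dimension symbol r are introduced here for illustration.

\[
\mathrm{CosSim}(A,B)=\frac{A\cdot B}{\lVert A\rVert\,\lVert B\rVert}=\frac{\sum_{t=1}^{r}A_{t}B_{t}}{\sqrt{\sum_{t=1}^{r}A_{t}^{2}}\;\sqrt{\sum_{t=1}^{r}B_{t}^{2}}} \tag{2}
\]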

Using the embedding vectors denoted by A and B, we can calculate the correlation between two sentences. The degree of correlation is measured on a scale from 0 to 1, with a value of 0 indicating minimal similarity and a value of 1 indicating maximal similarity. To calculate the scores, a graph is constructed from the cosine similarity matrix. The graph \(G\left(V,E\right)\) comprises vertices V and edges E. The vertices correspond to the sentences of a document, whereas the edges signify the degree of similarity between those sentences. Generating the graph thus involves representing sentences as nodes and calculating the weights of the edges connecting the nodes using Eq. (2).

The score of a node can be computed using the given formula:
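Assuming Eq. (3) follows the weighted formulation of the original TextRank paper [19], the score \(WS(V_{i})\) of node \(V_{i}\) can be written as shown here, where \(w_{ji}\) is the weight of the edge from \(V_{j}\) to \(V_{i}\) and \(d\) is the damping factor. The symbols \(WS\) and \(w_{ji}\) follow TextRank and are not defined elsewhere in this text.

\[
WS\left(V_{i}\right)=\left(1-d\right)+d\sum_{V_{j}\in \mathrm{In}\left(V_{i}\right)}\frac{w_{ji}}{\sum_{V_{k}\in \mathrm{Out}\left(V_{j}\right)}w_{jk}}\,WS\left(V_{j}\right) \tag{3}
\]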

For a directed graph, the set of nodes that point to a specific node \({V}_{j}\) is denoted \(\mathrm{In}\left({V}_{j}\right)\), whereas the set of nodes that \({V}_{j}\) points to is denoted \(\mathrm{Out}\left({V}_{j}\right)\). Here, a damping factor \(d\) is used to control the chances of randomly hopping from one node to another node. Its value is set between 0 and 1 and determines the weight given to the random walk component in the overall score calculation. By adjusting the damping factor, one can fine-tune the relative importance of local and global graph structure in the ranking process. The NetworkX library is used to generate the graphs, and its PageRank function is used to generate the sentence scores; the default value of the damping factor is 0.85. Every node is assigned a score that reflects its importance within the network. This score is used to rank the nodes in order of importance, with the most highly ranked nodes typically being those that are most central or influential in the network. The sentence scores are denoted by \(S'=\left\{s'_{1},s'_{2},s'_{3},\dots,s'_{n}\right\}.\)
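The graph-based scoring can be sketched without external dependencies. The source uses NetworkX's PageRank function; the code below instead implements a plain power iteration so that it runs on its own, and it is only an approximation of that pipeline. The function names are hypothetical, the toy 2-dimensional vectors stand in for GloVe sentence embeddings, and the scores are normalized to sum to 1, which rescales the TextRank scores without changing the ranking when no sentence is isolated.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def similarity_matrix(vectors):
    """Pairwise cosine similarities with a zero diagonal (no self-loops)."""
    n = len(vectors)
    return [[0.0 if i == j else cosine_similarity(vectors[i], vectors[j])
             for j in range(n)] for i in range(n)]


def textrank_scores(weights, d=0.85, tol=1e-8, max_iter=200):
    """Weighted PageRank by power iteration on a non-negative weight matrix."""
    n = len(weights)
    if n == 0:
        return []
    out_sum = [sum(row) for row in weights]
    scores = [1.0 / n] * n
    for _ in range(max_iter):
        # Score mass of nodes without outgoing weight is spread uniformly.
        dangling = sum(scores[j] for j in range(n) if out_sum[j] == 0)
        new_scores = []
        for i in range(n):
            rank = sum(weights[j][i] / out_sum[j] * scores[j]
                       for j in range(n) if out_sum[j] > 0)
            new_scores.append((1 - d) / n + d * (rank + dangling / n))
        delta = sum(abs(a - b) for a, b in zip(new_scores, scores))
        scores = new_scores
        if delta < tol:
            break
    return scores


if __name__ == "__main__":
    toy_vectors = [[1.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
    print(textrank_scores(similarity_matrix(toy_vectors)))
```

In the toy example the middle vector is similar to both others, so its sentence receives the highest score. With NetworkX installed, calling `networkx.pagerank` with `alpha=0.85` on a weighted graph built from the same matrix should give comparable values.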

Although the algorithm was initially intended for use with directed graphs, it can also be applied to undirected graphs. In that case, each edge is treated as bidirectional, so every vertex has the same number of incoming and outgoing edges.

3.4 Extracting relevant sentences based on scores for the final summary

The proposed method produces two sets of sentence scores, one from the T5 model, denoted \(S=\left\{s_{1},s_{2},s_{3},\dots,s_{n}\right\}\), and another from the graph-based model, denoted \(S'=\left\{s'_{1},s'_{2},s'_{3},\dots,s'_{n}\right\}.\) The hybrid methodology proposed in this study combines these two scores to generate the final sentence scores. To combine them, the following formulation is used to integrate the sentence scores given by Eqs. (1) and (3).

Here \(\beta \) (Beta) is the influence factor, and its value ranges from 0 to 1. This study shows it is possible to shift more weight to the model that performs better. After obtaining the final scores from the proposed method, the top N sentence excerpts are chosen for the final summary.
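The fusion and selection steps can be sketched as follows, under an explicitly stated assumption: the fusion formula itself is not reproduced in this text, so the convex combination \(\beta s_{i}+(1-\beta)s'_{i}\) used below, with \(\beta\) weighting the T5-based score, is only one plausible reading, and the roles of \(\beta\) and \(1-\beta\) may be reversed in the original. The function names are hypothetical, and no rescaling of the two score sets is applied because the source does not describe one. The defaults \(\beta = 0.3\) and N = 7 are the best-performing settings reported in Sect. 4.5.

```python
def fuse_scores(t5_scores, graph_scores, beta=0.3):
    """Combine two per-sentence score lists as beta * s + (1 - beta) * s' (assumed form)."""
    if len(t5_scores) != len(graph_scores):
        raise ValueError("both score lists must cover the same sentences")
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta must lie in [0, 1]")
    return [beta * s + (1 - beta) * g for s, g in zip(t5_scores, graph_scores)]


def select_summary(sentences, scores, top_n=7):
    """Pick the top_n highest-scoring sentences and return them in document order."""
    ranked = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    chosen = sorted(ranked[:top_n])  # restore original sentence order
    return [sentences[i] for i in chosen]


if __name__ == "__main__":
    sents = ["a", "b", "c", "d"]
    fused = fuse_scores([0.9, 0.1, 0.5, 0.3], [0.2, 0.8, 0.4, 0.6], beta=0.3)
    print(select_summary(sents, fused, top_n=2))
```

Returning the selected sentences in document order mirrors the rearrangement step described in Sect. 2 for extractive methods in general.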

4 Experimental result and analysis

4.1 Dataset used

The updated CORD-19 corpus [37] was downloaded from Kaggle on March 31, 2022. The White House, in partnership with various research associations, assembled the CORD-19 corpus of scholarly articles about COVID-19. This database contains scientific papers from PubMed Central, PubMed, the WHO database, and pre-print servers such as bioRxiv, arXiv, and medRxiv. High computational power is required to work with a large number of documents. Although unsupervised methods require no training, we therefore tested the proposed methodology on a subset of the database: the scientific papers dated from January 1, 2021 to December 31, 2021, consisting of 840 documents in total.

4.2 State-of-the-art models for extractive text summarization

Text summarization is the mechanism of producing a brief overview of a document by extracting and linking the salient phrases within it. This task has garnered significant attention from the Natural Language Processing (NLP) community, and several unsupervised approaches have been developed to address it. These techniques utilize various heuristics, such as term or phrase frequency, sentence similarity, and sentence placement, to determine the most salient sentences. Recently, neural network-based approaches have also emerged, which typically train a model to directly predict the most influential sentences in an article. The proposed methodology is compared with the following powerful unsupervised summarization techniques. In Table 1, state-of-the-art techniques for unsupervised extractive-based text summarization are displayed.

4.3 Evaluation metric

The ROUGE metric was presented in [39]. Machine translation and summarization are generally evaluated using this set of metrics. The metric measures the overlap between the summary generated by the proposed methodology and the gold summary. ROUGE-1 counts the unigrams that match between the method output and the reference output. ROUGE-2 is analogous to ROUGE-1, but it counts bigrams. ROUGE-L is based on the longest common subsequence (LCS) between a system summary and the ground-truth summary. Based on F1 scores computed using Pyrouge version 1.5.5, the performance of the proposed method and the state-of-the-art approaches was analyzed and compared in terms of the ROUGE-1, ROUGE-2, and ROUGE-L metrics.

The following formulae are used to calculate recall, precision, and F1 score for ROUGE-1 and ROUGE-2:

Recall score: The ratio of total counts of co-occurring n-grams encountered in both the system-generated summary and gold summary to total counts of n-grams in the reference summary.

Precision score: The ratio of total counts of co-occurring n-grams encountered in both the model-generated summary and gold summary to total counts of n-grams in the model-generated summary.

F1 score: The F1 score can therefore be estimated as follows using the recall and precision values.
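Written out under the definitions above, the ROUGE-N recall, precision, and F1 score take the usual form shown here. The notation is assumed for this sketch: \(M_{n}\) is the clipped number of co-occurring n-grams, and \(\mathrm{Count}_{\mathrm{sys}}(g)\) and \(\mathrm{Count}_{\mathrm{gold}}(g)\) are the number of times n-gram \(g\) occurs in the system-generated and gold summaries, respectively. The equation numbers (5) and (6) are inferred from the reference to Eq. (7) in the text.

\[
M_{n}=\sum_{g}\min\left(\mathrm{Count}_{\mathrm{sys}}(g),\,\mathrm{Count}_{\mathrm{gold}}(g)\right)
\]

\[
\mathrm{Recall}=\frac{M_{n}}{\sum_{g}\mathrm{Count}_{\mathrm{gold}}(g)} \tag{5}
\]

\[
\mathrm{Precision}=\frac{M_{n}}{\sum_{g}\mathrm{Count}_{\mathrm{sys}}(g)} \tag{6}
\]

\[
F1=\frac{2\cdot\mathrm{Precision}\cdot\mathrm{Recall}}{\mathrm{Precision}+\mathrm{Recall}} \tag{7}
\]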

The following formulae are used to calculate recall, precision, and F1 score for ROUGE-L.

Recall score: The length of the LCS between the system-generated summary and the gold summary, divided by the total number of words in the gold summary.

Precision score: The length of the LCS between the system-generated summary and the gold summary, divided by the total number of words in the system-generated summary.

F1 Score: To estimate the F1 score for ROUGE-L, one can use the equation that is shown in Eq. (7).
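The recall, precision, and F1 definitions for ROUGE-N and ROUGE-L can be sketched in plain Python. This is a simplified illustration rather than the Pyrouge implementation used in the study: it tokenizes on whitespace, applies no stemming or stopword handling, and computes ROUGE-L over the whole summary as a single token sequence, so its numbers will not match the official toolkit exactly. All function names are hypothetical.

```python
from collections import Counter


def _recall_precision_f1(match, gold_total, sys_total):
    """Turn a match count and the two totals into (recall, precision, F1)."""
    recall = match / gold_total if gold_total else 0.0
    precision = match / sys_total if sys_total else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return recall, precision, f1


def rouge_n(system, gold, n=1):
    """ROUGE-N from clipped n-gram overlap between system and gold summaries."""
    def grams(text):
        toks = text.lower().split()
        return Counter(tuple(toks[i:i + n]) for i in range(len(toks) - n + 1))
    sys_counts, gold_counts = grams(system), grams(gold)
    match = sum((sys_counts & gold_counts).values())  # min count per n-gram
    return _recall_precision_f1(match, sum(gold_counts.values()),
                                sum(sys_counts.values()))


def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, 1):
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


def rouge_l(system, gold):
    """ROUGE-L from the LCS length relative to each summary's word count."""
    sys_toks, gold_toks = system.lower().split(), gold.lower().split()
    return _recall_precision_f1(lcs_length(sys_toks, gold_toks),
                                len(gold_toks), len(sys_toks))


if __name__ == "__main__":
    system_summary = "the cat sat on the mat"
    gold_summary = "the cat lay on the mat"
    print(rouge_n(system_summary, gold_summary, n=1))
    print(rouge_n(system_summary, gold_summary, n=2))
    print(rouge_l(system_summary, gold_summary))
```

For the toy pair above, five of six unigrams and three of five bigrams match, and the LCS ("the cat on the mat") has length five.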

4.4 Parameter settings

The models were executed on Google Colab Pro with NVIDIA Tesla T4 GPUs and high RAM. To find the best parameter settings for the proposed model, a detailed performance analysis was conducted with different parameter settings. The optimal parameter settings for the model are listed in Table 2. The stride is used to form the chunks or sections that serve as input to the T5 transformer; its value was varied in the range of 2 to 10. The temperature value was varied within the range of 0.2 to 1. The number of salient texts is the parameter that selects the top-weighted fragments; values from 5 to 12 were tested. N-gram blocking, which is used to reduce redundancy, was tested for the values 3 and 4. BERTScore is employed to compute the degree of similarity between the input text and the fragments produced by the T5 transformer; it was tested with the base models bert-base-uncased and allenai/scibert_scivocab_cased. The number-of-sentences parameter determines how many top sentences are included in the final summary; its value was tested within 4 to 10. The β value used to calculate the scores was tested over the range 0 to 1.

4.5 Discussion of results

Unsupervised extractive summarization models are compared and analyzed on the truncated version of the CORD-19 corpus using the ROUGE metric. According to Table 3 and Fig. 2, the proposed hybrid methodology surpassed the listed techniques, with F1 scores of 40.14%, 13.25%, and 36.32% for ROUGE-1, ROUGE-2, and ROUGE-L, respectively. Among the three metrics, ROUGE-1 yields the highest F1 score, 40.14%. The lowest-performing method is RANDOM, with F1 scores of ROUGE-1: 33.26% and ROUGE-2: 8.17%. SumBasic, with a score of 27.63%, performs worst on ROUGE-L. In conclusion, the proposed CovSumm model performs well on all metrics, namely ROUGE-1, ROUGE-2, and ROUGE-L.

The efficacy of the proposed approach is analyzed with a distinct number of sentences, and the outcomes are illustrated in Table 4 and Fig. 3.

Fig. 3 The graph depicts how the total count of sentences influences the efficacy of the proposed technique when evaluating the CORD-19 corpus using the ROUGE metric: (a) the number of sentences vs ROUGE-1 score; (b) the number of sentences vs ROUGE-2 score; (c) the number of sentences vs ROUGE-L score; (d) the number of sentences vs ROUGE-1, ROUGE-2, and ROUGE-L score

Table 4 illustrates how the number of sentences used to produce the final summary affects the performance of the proposed hybrid model. The experiment was performed with values ranging from 4 to 10. According to the analysis, the model performance increases with the number of sentences up to 7 and then starts to decline as more sentences are added. When 7 sentences are selected for the final summary, ROUGE-1, ROUGE-2, and ROUGE-L reach their highest F1 values of 40.14%, 13.25%, and 36.32%, respectively. With 4 sentences, ROUGE-1, ROUGE-2, and ROUGE-L score the lowest, with F1 scores of 35.78%, 11.87%, and 32.10%, respectively. Fig. 3 confirms that the proposed hybrid model performs best on all three F1 scores when seven sentences are selected for the summary.

Additionally, the performance of the proposed model for different values of \(\beta \) is presented in Table 5 and Fig. 4. Table 5 compares the efficacy of the proposed technique across values of the parameter \(\beta \), which was varied from 0 to 1. The model performance increases with \(\beta \) up to 0.3 and gradually declines thereafter. The highest F1 scores for ROUGE-1, ROUGE-2, and ROUGE-L were achieved at \(\beta \) = 0.3. The lowest F1 scores for ROUGE-1, ROUGE-2, and ROUGE-L, namely 39.92%, 13.05%, and 36.06%, respectively, were obtained at \(\beta \) = 0.6. From Fig. 4, it can be inferred that the proposed methodology performs best on all three F1 scores when \(\beta \) is set to 0.3.

Fig. 4 The graph illustrates how the \(\beta \) values influence the efficacy of the proposed methodology when evaluating the CORD-19 corpus using the ROUGE metric: (a) \(\beta \) values vs ROUGE-1 score; (b) \(\beta \) values vs ROUGE-2 score; (c) \(\beta \) values vs ROUGE-L score; (d) \(\beta \) values vs ROUGE-1, ROUGE-2, and ROUGE-L score

Figure 5 illustrates a sample of the original text utilized as input for the CovSumm model. The reference summary used to calculate the ROUGE scores is the gold summary, while the summary produced by the proposed model is the generated summary.

5 Conclusion

In this study, the CovSumm model was proposed for generating summaries of scientific papers related to COVID-19. CovSumm is an unsupervised hybrid approach that fuses the recently introduced GenCompareSum method, which involves the pre-trained T5 model for language generation, with the TextRank graph-based algorithm to produce summaries of scientific research articles. The major advantage of CovSumm is that it combines the strengths of two distinct and complementary lines of research for unsupervised extractive text summarization: the T5 language model and a graph-based algorithm. This combination allows for a more comprehensive and non-redundant summary of COVID-19-relevant scientific publications, as demonstrated by higher ROUGE scores compared with the state-of-the-art methodologies. Another benefit of the proposed approach is that it can effectively handle the problem of information overload that is prevalent in the massive volume of COVID-19-relevant scientific studies. This would help to keep researchers and medical associations informed of the latest COVID-19-related information. The experimental results and analysis show that the proposed CovSumm model outperforms various unsupervised summarization methods on the CORD-19 corpus. The proposed CovSumm model is expected to support and give direction to researchers in further studies on effective summarization of scientific literature. The use of domain-specific pre-trained transformer models [42] may help to boost the performance scores. Further, this model can be tried with several transformer-based language models such as PEGASUS, GPT-2, and BART for language generation tasks, as well as with different graph-based approaches, and can be evaluated on various other biomedical datasets.

Data availability

The corresponding author can make available the data underlying the findings of this research upon a reasonable request.

Code availability

Upon a reasonable request, the corresponding author can provide the code supporting the conclusions of this study.

References

Cai X, Liu S, Yang L, Lu Y, Zhao J, Shen D, Liu T (2022) COVIDSum: a linguistically enriched SciBERT-based summarization model for COVID-19 scientific papers. J Biomed Inform 127:103999

Xie Q, Bishop JA, Tiwari P, Ananiadou S (2022) Pre-trained language models with domain knowledge for biomedical extractive summarization. Knowl-Based Syst 252:109460

Tang T, Yuan T, Tang X, Chen D (2020) Incorporating external knowledge into unsupervised graph model for document summarization. Electronics 9(9):1520

Zhao J, Liu M, Gao L, Jin Y, Du L, Zhao H, Haffari G (2020) SummPip: unsupervised multi-document summarization with sentence graph compression. In: Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, pp 1949–1952

Wallace BC, Saha S, Soboczenski F, Marshall IJ (2021) Generating (factual?) narrative summaries of RCTs: experiments with neural multi-document summarization. AMIA Summits Transl Sci Proc 2021:605

Huang D, Cui L, Yang S, Bao G, Wang K, Xie J, Zhang Y (2020) What have we achieved on text summarization? In: Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP). Association for Computational Linguistics, pp 446–469

Zhong M, Liu P, Chen Y, Wang D, Qiu X, Huang X (2020) Extractive summarization as text matching. In: Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pp 6197–6208. https://doi.org/10.18653/v1/2020.acl-main.552

See A, Liu PJ, Manning CD (2017) Get to the point: summarization with pointer-generator networks. In: Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, pp 1073–1083. Vancouver, Canada

Raffel C, Shazeer N, Roberts A, Lee K, Narang S, Matena M, Liu PJ (2020) Exploring the limits of transfer learning with a unified text-to-text transformer. J Mach Learn Res 21(1):5485–5551

Cachola I, Lo K, Cohan A, Weld DS (2020) TLDR: extreme summarization of scientific documents. In: Findings of the association for computational linguistics: EMNLP 2020. Association for Computational Linguistics, pp 4766–4777

Liu Y, Lapata M (2019) Text summarization with pretrained encoders. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing. Hong Kong, pp 3728–3738

Dou Z-Y, Liu P, Hayashi H, Jiang Z, Neubig G (2021) GSum: a general framework for guided neural abstractive summarization. In: Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Association for Computational Linguistics, pp 4830–4842. https://doi.org/10.18653/v1/2021.naacl-main.384

Ramos J (2003) Using tf-idf to determine word relevance in document queries. Proc First Instr Conf Mach Learn 242(1):29–48

Kiros R, Zhu Y, Salakhutdinov R, Zemel RS, Torralba A, Urtasun R, Fidler S (2015) Skip-thought vectors. In: Proceedings of the 28th International Conference on Neural Information Processing Systems, vol 2, pp 3294–3302

Devlin J, Chang M-W, Lee K, Toutanova K (2019) BERT: pre-training of deep bidirectional transformers for language understanding. In: Proceedings of NAACL-HLT, vol 1, pp 4171–4186

Mutlu B, Sezer EA, Akcayol MA (2020) Candidate sentence selection for extractive text summarization. Inf Process Manag 57(6):102359

Luhn HP (1958) The automatic creation of literature abstracts. IBM J Res Dev 2(2):159–165

Edmundson HP, Wyllys RE (1961) Automatic abstracting and indexing—survey and recommendations. Commun ACM 4(5):226–234

Mihalcea R, Tarau P (2004) Textrank: bringing order into text. In: Proceedings of the 2004 Conference on Empirical Methods in Natural Language Processing, pp 404–411

Erkan G, Radev DR (2004) Lexrank: graph-based lexical centrality as salience in text summarization. J Artif Intell Res 22:457–479

Bishop J, Xie Q, Ananiadou S (2022) GenCompareSum: a hybrid unsupervised summarization method using salience. In: Proceedings of the 21st workshop on biomedical language processing, pp 220–240

Ozsoy MG, Alpaslan FN, Cicekli I (2011) Text summarization using latent semantic analysis. J Inf Sci 37(4):405–417

Nenkova A, Vanderwende L (2005) The impact of frequency on summarization. Microsoft Research, Redmond, Washington, Tech Rep MSR-TR-2005-101

Radford A, Narasimhan K, Salimans T, Sutskever I (2018) Improving language understanding by generative pre-training. Technical Report. OpenAI

Wang LL, Lo K, Chandrasekhar Y, Reas R, Yang J, Burdick D, Eide D, Funk K, Katsis Y, Kinney RM, Li Y, Liu Z, Merrill W, Mooney P, Murdick DA, Rishi D, Sheehan J, Shen Z, Stilson B, et al. (2020) CORD-19: the COVID-19 open research dataset. In: Proceedings of the 1st workshop on NLP for COVID-19 at ACL 2020. Association for Computational Linguistics

Moradi M, Dorffner G, Samwald M (2020) Deep contextualized embeddings for quantifying the informative content in biomedical text summarization. Comput Methods Prog Biomed 184:105117

Padmakumar V, He H (2021) Unsupervised extractive summarization using pointwise mutual information. In: Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume. Association for Computational Linguistics, pp 2505–2512

Ju J, Liu M, Koh HY, Jin Y, Du L, Pan S (2021) Leveraging information bottleneck for scientific document summarization. In: Findings of the association for computational linguistics: EMNLP 2021, Punta Cana, Dominican Republic. Association for Computational Linguistics, pp 4091–4098

Su D, Xu Y, Yu T, Siddique FB, Barezi E, Fung P (2020) CAiRE-COVID: a question answering and query-focused multi-document summarization system for COVID-19 scholarly information management. In: Proceedings of the 1st workshop on NLP for COVID-19 (part 2) at EMNLP. Association for Computational Linguistics

Jang M, Kang P (2021) Learning-free unsupervised extractive summarization model. IEEE Access 9:14358–14368

Belwal RC, Rai S, Gupta A (2021) Text summarization using topic-based vector space model and semantic measure. Inf Process Manag 58(3):102536

Srivastava R, Singh P, Rana KPS, Kumar V (2022) A topic modeled unsupervised approach to single document extractive text summarization. Knowl-Based Syst 246:108636

Belwal RC, Rai S, Gupta A (2021) A new graph-based extractive text summarization using keywords or topic modeling. J Ambient Intell Humaniz Comput 12(10):8975–8990

El-Kassas WS, Salama CR, Rafea AA, Mohamed HK (2020) EdgeSumm: graph-based framework for automatic text summarization. Inf Process Manag 57(6):102264

Liu J, Hughes DJ, Yang Y (2021) Unsupervised extractive text summarization with distance-augmented sentence graphs. In: Proceedings of the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp 2313–2317

Joshi A, Fidalgo E, Alegre E, Alaiz-Rodriguez R (2022) RankSum—an unsupervised extractive text summarization based on rank fusion. Expert Syst Appl 200:116846

COVID-19 Open Research Dataset Challenge (CORD-19), https://www.kaggle.com/datasets/allen-institute-for-ai/CORD-19-research-challenge. Accessed 07 Aug 2022

Xu S, Zhang X, Wu Y, Wei F, Zhou M (2020) Unsupervised extractive summarization by pre-training hierarchical transformers. In: Findings of the association for computational linguistics: EMNLP 2020. Association for Computational Linguistics, pp 1784–1795

Lin CY (2004) Rouge: a package for automatic evaluation of summaries. In: Text summarization branches out, pp 74–81

Haghighi A, Vanderwende L (2009) Exploring content models for multi-document summarization. In: Proceedings of Human Language Technologies: the 2009 Annual Conference of the North American Chapter of the Association for Computational Linguistics, pp 362–370

Ishikawa K (2001) A hybrid text summarization method based on the TF method and the lead method. In: Proceedings of the second NTCIR workshop meeting on evaluation of Chinese & Japanese text retrieval and text summarization, pp 325–330

Bansal A, Choudhry A, Sharma A, Susan S (2023) Adaptation of domain-specific transformer models with text oversampling for sentiment analysis of social media posts on COVID-19 vaccine. Comput Sci 24(2). https://doi.org/10.7494/csci.2023.24.2.4761

Acknowledgements

Not applicable.

Funding

The authors did not receive any external funding or support for the research presented in their submission.

Author information

Contributions

AK contributed to conceptualization, methodology, software, data curation, writing—original draft, visualization, and investigation. SS contributed to conceptualization, methodology, investigation, supervision, and writing—review and editing.

Ethics declarations

Conflict of interest

The authors state that they do not have any known financial interests or personal relationships that may have influenced the findings reported in this paper.

Consent for publication

The manuscript has been approved by all authors and they have given their full consent for its publication.

Ethical approval and consent to participate

The article does not contain any studies involving human participants or animals.

Human and animal ethics

Not applicable.

About this article

Cite this article

Karotia, A., Susan, S. CovSumm: an unsupervised transformer-cum-graph-based hybrid document summarization model for CORD-19. J Supercomput 79, 16328–16350 (2023). https://doi.org/10.1007/s11227-023-05291-3
