For better or for worse, affect and cognition are inseparable and perhaps inescapable. Where, when, and for whom this inexorable linking of feeling and thinking will facilitate good decision making, and when it will lead to systematic departures from a rational course of action, are important questions currently unanswered.

-Milton Lodge and Charles Taber (2000)

Abstract

While affective polarization has been shown to have serious social consequences, there is little evidence regarding its effects on political attitudes and behavior such as policy preferences, voting, or political information accrual. This paper provides evidence that affective polarization impacts misinformation belief, arguing that citizens with higher levels of affective polarization are more likely to believe in-party-congruent misinformation and less likely to believe out-party-congruent misinformation. The argument is supported by data from the ANES 2020 Social Media Study and the ANES 2020 Time Series Study, which speaks to the generalizability of the relationship. Additionally, a survey experiment provides evidence that the relationship is causal. The results hold among Democrats and Republicans and are independent of the effects of partisan strength and ideological extremity. Furthermore, the relationship between affective polarization and misinformation belief is exacerbated by political sophistication rather than tempered by it, implying that education will not solve the issue. The results speak to the need for work on reducing affective polarization.

Introduction

There has been much focus on affective polarization in the political science literature in recent years, with good cause. Scholars have found that affective polarization has negative social effects, including decreasing citizens’ monetary generosity towards members of the out-party (Panagopoulos et al., 2020), decreasing cross-partisan dialogue (Chen & Rohla, 2018), decreasing how attractive citizens find members of the out-party (Nicholson et al., 2016), and decreasing social cohesion as citizens avoid social interaction with members of the out-party (Iyengar et al., 2012). These social consequences are serious and necessitate inquiries into ways to reduce affective polarization (Levendusky, 2018; Wojcieszak & Warner, 2020; Badrinathan, 2020).

That said, affective polarization’s political effects remain mostly hypothetical. Research on affective polarization frequently comments on its potentially corrosive consequences for the political system, yet there is little evidence of its negative effects in the realm of political attitudes and behavior. Affective polarization may or may not change the way in which citizens choose for whom to vote. It may or may not have effects on policy preferences. It may or may not change the ways in which citizens process political information. If it does, then recent work on how to reduce affective polarization is particularly warranted and vital, as the functioning of our democracy could depend on its reduction.

In an exciting recent development, the affective polarization literature has started to provide this evidence. Druckman et al. (2021) found an effect of party animus on policy stances. As party animus increased, Republicans became less troubled by COVID-19 and reduced their support for policies aiming to respond to the virus, while Democrats became more likely to be concerned and to support such policies. Wagner (2021) found that turnout likelihood increased with affective polarization. And yet these findings are debated, with other scholars finding that the effects of affective polarization are primarily in the interpersonal domain; in terms of support for democratic norms, willingness to engage in electoral accountability, adoption of in-party policy attitudes, support of legislative bipartisanship, and perceptions of objective conditions including unemployment rates and deaths from COVID-19, this work has found no difference among citizens by their levels of affective polarization (Broockman et al. 2020).

Not yet examined is the relationship between citizens’ levels of affective polarization and their belief in misinformation about political facts. Work has analyzed the connection between partisan strength and misperceptions (Flynn et al., 2017; Gaines et al., 2007; Jerit & Barabas, 2012; Nyhan & Reifler, 2010), but it has not yet been shown whether affective polarization has an effect above and beyond one’s degree of partisan affiliation. On the one hand, Broockman et al. (2020) found that affective polarization has no effect on citizens’ likelihood of adopting in-party policy positions. Similar to misinformation belief, this concerns the extent to which one’s opinions are compatible with in-party-congruent information. This suggests that affective polarization may not be associated with misinformation belief. On the other hand, affective polarization should affect motivated reasoning and thus be associated with an increase in one’s likelihood of believing in-party-affirming misinformation and a decrease in the likelihood of believing out-party-affirming misinformation. So perhaps affective polarization’s effects extend to misinformation belief.

This paper begins by uncovering correlations between affective polarization and misperceptions using observational data from two probability samples. This first step of establishing a generalizable statistical association between affective polarization and misinformation belief is important. The results show that affective polarization and misinformation belief are strongly correlated. For in-party-congruent misinformation, the relationship is positive; for out-party-congruent misinformation, it is negative. Furthermore, the relationship between the two is exacerbated, not tempered, by political sophistication, meaning that increased education will not prevent more affectively polarized people from believing misinformation.

Next, the paper presents results from a survey experiment showing that the relationship between affective polarization and misinformation is causal. Respondents who received a treatment in which they were given zero dollars from members of the out-party in an ultimatum-type game reported greater affective polarization than respondents in the control group, and through this mediator, the treatment indirectly affected misinformation belief. It did so in the predicted directions, increasing belief in in-party-congruent misinformation and decreasing belief in out-party-congruent misinformation.Footnote 1

These results mean that the recent increase in affective polarization is harmful not only to our society socially but also to our representative democracy politically. Affectively polarized citizens’ increased likelihood of believing in-party-congruent misinformation means that their issue and candidate opinions are more likely to be dependent on false information (Kuklinski et al., 2000). And, because affectively polarized citizens are more likely to vote (Wagner, 2021), this means that the collective preferences reflected in our elections may be different than they would be had voters been informed by true facts. Thus, affective polarization has serious political consequences and research into its reduction is vitally important to our democracy.

A Theory of Affective Polarization and Misinformation Belief

Affective polarization is the tendency of partisans to view the in-party positively and the out-party negatively in emotional terms or, in other words, to associate the concept of partisanship with affect. If one is affectively polarized, then when partisanship is activated, affect associated with the parties that is stored in long-term memory is also activated and “the mere act of identifying with a political party is sufficient to trigger negative evaluations of the opposition” as well as positive evaluations of the in-party (Iyengar et al., 2012). Affective polarization is thus a “natural off-shoot” of partisan identity (Iyengar et al., 2019); it is related to but distinct from partisan strength. Unlike partisan identification, affective polarization necessarily involves an association between emotions and one’s political party. One may be a strong Democrat or Republican and not vilify the out-party. One may also be a weak or leaning Democrat and dislike the out-party strongly. Indeed, Appendix Section C shows that the two concepts have low correlations and do not predict each other well in terms of proportions of variance explained.

This necessary involvement of emotion in affectively polarized citizens’ reactions to information about the parties mirrors the discussion of Lodge and Taber (2013) about affect underlying the reactions of motivated reasoners. Affect is the main impetus driving evaluation in both processes. One of the underlying postulates of motivated reasoning is “hot cognition.” Hot cognition refers to a process by which political concepts—people, groups, events, or issues—become positively or negatively affectively charged. This affective charge enters long-term memory alongside the concept, and thus whenever one is exposed to the concept and it is activated in memory, the affect is also activated. As a result, affect “. . . anchors all judgments and is always a central and oftentimes the single most dominant piece of information guiding judgment and choice. . . . This the case, we expect that most citizens most of the time will be biased reasoners who find it near impossible to evaluate new, attitude-relevant information in an evenhanded way” (Lodge & Taber, 2013).

This has implications for citizens’ decision making processes. Motivated reasoners are more likely to search for information that favors their preconceptions, to find reinforcing information more convincing than countervailing information (confirmation bias), and to devise counter-arguments against contradictory information (disconfirmation bias) (Taber & Lodge, 2006; Redlawsk, 2002). When encountering information in the political realm, motivated reasoners have the goal of reinforcing their prior partisan beliefs rather than arriving at an accurate conclusion (Schaffner & Roche, 2016; Bisgaard, 2019; Bolsen et al., 2014; Leeper & Slothuus, 2014; Jerit & Barabas, 2012).

Through motivated reasoning, confirmation and disconfirmation bias are active when people are confronted with misinformation (Vegetti & Mancosu, 2020; Schaffner & Roche, 2016; Peterson & Iyengar, 2021; Ecker & Ang, 2019; Flynn et al., 2017). When considering in-party-congruent misinformation, confirmation bias takes hold, and when considering out-party-congruent misinformation, disconfirmation bias occurs. People are more likely to be influenced by false information that is congruent with their parties’ beliefs even after being informed that it is not true (Thorson, 2016). This results in an increased likelihood of people holding partisan-congruent misbeliefs and a decreased likelihood of people holding partisan-incongruent misbeliefs (Peterson & Iyengar, 2021; Vegetti & Mancosu, 2020).

Perhaps these processes of confirmation and disconfirmation bias work similarly for affectively polarized individuals as they do for motivated reasoners. Affectively polarized citizens may reason about party information in a directional and biased manner. As their level of affective polarization increases, they may be more likely to accept misinformation that is congruent with the in-party and less likely to accept misinformation that is congruent with the out-party.

This paper examines four hypotheses:

H1

An increase in affective polarization is associated with an increase in acceptance of misinformation that is in-party-congruent.

While the association between affective polarization and acceptance of in-party-congruent misinformation should be positive, the association between affective polarization and acceptance of out-party-congruent misinformation should be negative. Affective polarization may lead to increased analytical reasoning when confronted with out-party-congruent information, which should cause people to be more likely to counter-argue and detect falsehoods in the information.

H2

An increase in affective polarization is associated with a decrease in acceptance of out-party-congruent misinformation.

Recent literature has largely focused on misinformation belief among Republicans (Swire et al., 2017; Schaffner & Luks, 2018). However, motivated reasoning is a common occurrence among both Republicans and Democrats. Notably, Schaffner and Roche (2016) found that motivated reasoning was associated with greater acceptance of misinformation among members of both parties. The third hypothesis is in line with this finding.

H3

As affective polarization rises, acceptance of in-party-congruent misinformation will increase and acceptance of out-party-congruent misinformation will decrease among both Democrats and Republicans.

Finally, this paper will consider political sophistication as a moderator of the relationship between affective polarization and misinformation belief. On the one hand, political sophistication has been shown to decrease belief in misinformation as sophisticates are more able to tell real from fake news (Vegetti & Mancosu, 2020). On the other hand, Zaller (1992) argued that receptivity to information that favors one’s prior beliefs should increase as political awareness increases. Alongside Taber and Lodge’s (2006) finding that more informed citizens are more likely to engage in motivated reasoning, this implies that the more sophisticated will have a greater belief in in-party-congruent misinformation.

H4

Political sophistication will increase the magnitude of the associations between affective polarization and in-party-congruent/out-party-congruent misinformation belief.

Study 1: Observational Data

Data and Measures

The observational data are drawn from the ANES 2020 Social Media Study and the ANES 2020 Time Series Study. Missing values were replaced using a random forest model (Doove et al., 2014).Footnote 2 All variables had less than 3% of responses missing, with the one exception of ideology in the Time Series Study. In that data set, 13.66% of responses were “I haven’t thought much about this” and were coded as missing and imputed. After removing respondents who were pure independents (Social Media Study: \(N = 646\); Time Series Study: \(N = 814\); the exclusion is explained below), the data sets comprise 5104 and 6352 observations, respectively. Responses were weighted by the weights provided by the ANES for all statistical calculations.

Measuring In-Party-Congruent and Out-Party-Congruent Misinformation Beliefs

In many previous studies of misinformation, misinformation belief was measured on a single issue or topic. Kuklinski et al. (2000) tested misinformation belief about welfare, Schaffner and Roche (2016) used the unemployment rate, Berinsky (2017) used health care reform, and Hochschild and Einstein (2015) examined climate change. All of these studies implicitly assume that the attitudes exhibited on the single issue are externally valid across many issues.

The datasets used here similarly include one issue regarding Democrat-congruent misinformation, allowing the external validity of this study to be consistent with previous studies. But the datasets are unique and particularly well-suited for an investigation of general misinformation belief because they include measures of belief on several topics of Republican-favoring misinformation. They thus allow a greater degree of external validity than is typical for the misinformation literature with regard to Republican-congruent misinformation.

Belief in misinformation was measured by asking respondents which of two statements they thought was more likely to be true. In the Social Media Study, respondents were asked about Russian interference in the 2016 election, illegal voting in the 2016 election, whether the Affordable Care Act (ACA) increased or decreased the number of uninsured Americans, and whether Donald Trump or Barack Obama had a greater number of unauthorized immigrants deported during their first three years in office (see Appendix Section A for question wording). For example, the answer options for the question about Russian interference were: “Russia tried to interfere in the 2016 presidential election” or “Russia did not try to interfere in the 2016 presidential election.”

The questions asked in the Time Series Study were two from above (those about Russian interference in the 2016 election and the comparative number of immigrants deported during Donald Trump and Barack Obama’s administrations) along with three questions not included in the Social Media Study: whether scientific evidence indicates that vaccines cause autism, whether global temperatures have risen on average over the past century, and whether hydroxychloroquine is a safe and effective treatment for COVID-19 (see Table 1).Footnote 3

Following each of these questions, respondents were asked how confident they felt about their belief, with five answer options ranging from “not at all” to “completely.” The answers were coded on a scale from 0 to 1, with 0 representing a complete belief in the correct answer and 1 representing a complete belief in an incorrect answer. Appendix Section B shows the distribution of responses. There do not appear to be ceiling effects.
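The coding just described can be sketched as follows. This is a minimal illustration rather than the author's code. It assumes that the two answer directions and the five confidence levels map onto ten evenly spaced points between 0 and 1. The function name `belief_score`, the two middle confidence labels ("moderately" and "very"), and the ordering of the labels are hypothetical; the text names only "not at all," "a little," and "completely."

```python
# Hypothetical confidence labels, ordered from least to most confident.
# Only "not at all", "a little", and "completely" appear in the text.
CONFIDENCE_LEVELS = ["not at all", "a little", "moderately", "very", "completely"]


def belief_score(chose_misinformation: bool, confidence: str) -> float:
    """Combine answer direction and confidence into one 0-1 score.

    0 = complete belief in the correct statement,
    1 = complete belief in the incorrect statement.
    Assumes ten evenly spaced positions (an assumption, not the paper's spec).
    """
    rank = CONFIDENCE_LEVELS.index(confidence)  # 0 (lowest) .. 4 (highest)
    if chose_misinformation:
        position = 5 + rank  # positions 5..9 on the misinformation side
    else:
        position = 4 - rank  # positions 4..0 on the correct side
    return position / 9


if __name__ == "__main__":
    print(belief_score(False, "completely"))  # complete belief in the truth
    print(belief_score(True, "completely"))   # complete belief in the falsehood
```

Under this assumed mapping, a respondent who picks the correct statement with "a little" confidence scores one third, and any score above 0.5 indicates that the respondent chose the incorrect statement.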

In the Social Media Study, three of the misinformation questions were theoretically more likely to be believed by Republicans than Democrats because they were consistent with Republican interests or policy positions. Republicans were less likely to think that Russia interfered in the 2016 election, more likely to think that millions of people voted illegally in the 2016 election,Footnote 4 and more likely to think that the ACA increased the number of uninsured Americans. In the Time Series study, four of the questions were more likely to be believed by Republicans. These include questions about Russian interference in the 2016 election, vaccines,Footnote 5 global warming, and COVID-19.

One misinformation question in each survey was more likely to be believed by Democrats. Democrats should have been more likely to answer that the number of illegal immigrants deported in Donald Trump’s first 3 years in office was greater than the number deported in Barack Obama’s first 3 years. Barack Obama deporting a greater number of illegal immigrants was at odds with the Democratic Party’s current progressive stance on immigration.

The variable for in-party-congruent misinformation belief is Republicans’ belief in the Republican-congruent misinformation and Democrats’ belief in the Democrat-congruent misinformation. Republicans’ in-party-congruent misinformation belief is a latent variable created from the three or four (depending on the dataset) issues’ misinformation questions (the direction of belief being combined with the confidence in belief) using a principal components analysis (PCA).Footnote 6 The variable for Democrats’ belief in in-party-congruent misinformation is Democrats’ answer to the one item that was congruent with Democrats’ beliefs.

The out-party misinformation variable is one’s belief in misinformation that is congruent with the other party. In this case, a latent variable was created using a PCA for Democrats’ beliefs on the three or four Republican-congruent items. And Republicans’ out-party misinformation beliefs were simply respondents’ answers on the Democrat-congruent item. This means that pure independents were excluded from the analysis. To be affectively polarized, one must belong to a party.
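The latent-variable construction described above can be illustrated with a small sketch that extracts the first principal component of several 0–1 belief items. This is not the author's implementation: it uses plain power iteration on the sample covariance matrix instead of a statistics package, it ignores survey weights, the sign convention (loadings oriented to sum to a positive number) is an assumption made so that higher scores mean more misinformation belief, and the item values are made up.

```python
import math


def first_principal_component(rows):
    """Return (loadings, scores) for the first principal component.

    rows: list of respondents, each a list of item scores on a 0-1 scale.
    Uses power iteration on the sample covariance matrix.
    """
    n, k = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(k)]
    centered = [[r[j] - means[j] for j in range(k)] for r in rows]
    cov = [
        [sum(c[a] * c[b] for c in centered) / (n - 1) for b in range(k)]
        for a in range(k)
    ]

    # Start from a uniform unit vector and repeatedly multiply by cov.
    v = [1.0 / math.sqrt(k)] * k
    for _ in range(500):
        w = [sum(cov[a][b] * v[b] for b in range(k)) for a in range(k)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            break  # no variance in the data
        v = [x / norm for x in w]

    # Assumed convention: orient the component so loadings sum to > 0.
    if sum(v) < 0:
        v = [-x for x in v]

    scores = [sum(c[j] * v[j] for j in range(k)) for c in centered]
    return v, scores


if __name__ == "__main__":
    # Made-up responses to three Republican-congruent items.
    items = [[0.1, 0.2, 0.1], [0.5, 0.4, 0.6], [0.9, 1.0, 0.8]]
    loadings, scores = first_principal_component(items)
    print(loadings)
    print(scores)
```

The component scores are centered on zero, so they would still need to be rescaled to the 0–1 range before being compared with the single-item measures.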

Misinformation vs. Partisan Cheerleading

There is an alternative way to interpret answers to misinformation questions. Partisan cheerleading occurs when survey respondents know the correct answer to a political knowledge question but consciously report an incorrect answer that is supportive of their party (for a review, see Bullock & Lenz, 2019). By doing so, respondents give the impression of believing the misinformation even though they privately do not. As such, it is difficult to measure cheerleading versus honest belief using typical survey methods.

But evidence indicates that the effect of partisan cheerleading on measurements of political misinformation is minimal. Scholars have utilized financial incentives to impel respondents to answer according to their true beliefs. Unless survey respondents’ responsiveness to the incentives is minimal—which could only be the case if the incentives are not large enough or if the respondents do not value money—the incentives motivate them to respond with the answer that they believe to be correct. Such studies have found that the misinformation beliefs reported in surveys are genuine (Peterson & Iyengar, 2021; Berinsky, 2018). These studies provide some confidence that misinformation belief is generated by motivated reasoning rather than partisan cheerleading. However, the results should be interpreted with this caveat in mind.

Descriptive Statistics for Misinformation Belief

The proportion of subjects believing misinformation differed between the items. At least half of both Democrats (60%) and Republicans (50%) believed that Donald Trump deported more illegal immigrants than Barack Obama during their respective first 3 years in office. On the other hand, only 18% of Democrats and 24% of Republicans believed that child vaccines cause autism. Nevertheless, more than half of a party does not need to believe misinformation for it to be politically consequential. For example, enough Democrats and Republicans believe that child vaccines cause autism to potentially cause dangerous outbreaks of diseases, despite the fact that nowhere near 50% of them believe the misinformation.

All questions were answered incorrectly more often and with greater confidence by the expected party. In the Social Media Study, the differences in the distributions of answers by political party were significant for all questions [Question 1: \(X^{2}(9,\, N=5104)=1507.34,\, p<0.001\); Question 2: \(X^{2}(9,\, N=5104)=708.21,\, p<0.001\); Question 3: \(X^{2}(9,\, N=5104)=1388.86,\, p<0.001\); Question 4: \(X^{2}(9,\, N=5104)=142.97,\, p<0.001\); see Table 1]. These differences were in the expected directions, with Republicans having greater belief in the Republican-congruent misinformation and Democrats having greater belief in the Democrat-congruent misinformation. The magnitude of these differences is substantial; the average difference was 0.23, which represents a movement of approximately one category on the Likert scale of belief.

The differences by party were also significant for all questions in the Time Series Study [Question 3: \(X^{2}(9,\, N=6352)=1705.22,\, p<0.001\); Question 4: \(X^{2}(9,\, N=6352)=258.34,\, p<0.001\); Question 5: \(X^{2}(9,\, N=6352)=982.01,\, p<0.001\); Question 6: \(X^{2}(9,\, N=6352)=1503.99,\, p<0.001\); Question 7: \(X^{2}(9,\, N=6352)=241.66,\, p<0.001\)]. These differences were in the expected directions, with Republicans having greater belief in the misinformation on four of the questions (Question 3: Republican \({M}=0.52,\) Democrat \({M}=0.20;\) Question 5: Republican \({M}=0.24,\) Democrat \({M}=0.18;\) Question 6: Republican \({M}=0.30,\) Democrat \({M}=0.13;\) Question 7: Republican \({M}=0.48,\) Democrat \({M}=0.20)\) and Democrats having greater belief in the misinformation on the remaining question (Question 4: Republican \({M}=0.46,\) Democrat \({M}=0.58).\)
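For readers unfamiliar with the test statistics reported above, the following sketch computes a Pearson chi-square statistic and its degrees of freedom for a table of response categories by party. It is an illustration only: the counts are made up, the survey weights used in the paper are ignored, and the function name `chi_square` is hypothetical. The degrees of freedom equal (rows − 1) × (columns − 1), so a table with ten response categories and two parties would give the 9 degrees of freedom reported in the text; that ten-category layout is an inference, not something the text states.

```python
def chi_square(table):
    """Pearson chi-square test of independence for a contingency table.

    table: list of rows, each a list of observed counts
           (e.g. rows = response categories, columns = parties).
    Returns (statistic, degrees_of_freedom).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if response and party were independent.
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed - expected) ** 2 / expected

    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


if __name__ == "__main__":
    # Made-up 2 x 2 example: rows are answers, columns are parties.
    print(chi_square([[10, 20], [20, 10]]))
```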

Respondents believed in-party-congruent misinformation significantly more than out-party-congruent misinformation. In-party-congruent misinformation belief (Social Media Study: \({M}=0.54;\) Time Series Study: \({M}=0.49\)) was significantly higher than out-party congruent misinformation belief (Social Media Study: \({M}=0.34;\) Time Series Study: \({M}=0.31;\) Social Media Study: \(t=34.52,\) \(p < 0.001;\) Time Series Study: \({t}=36.34,\) \({p} < 0.001).\)

Measuring Affective Polarization

Affective polarization has been measured in a number of ways in the literature (Druckman & Levendusky, 2019), but thermometer scores for the parties are among the most often-used measures (Iyengar et al., 2012, 2019) and were used here.Footnote 7 Respondents’ ratings of the Republican and Democratic parties were taken on feeling thermometer scales that range from 0 (coldest) to 100 (warmest). Respondents’ scores for the out-party were subtracted from the scores of the in-party. Respondents’ feelings towards their in-party (Social Media Study: \({M}=0.74;\) Time Series Study: \({M}=0.71)\) were significantly warmer than those towards the out-party (Social Media Study: \({M}=0.20;\) Time Series Study: \({M}=0.19;\) Social Media Study: \({t}=129.33,\) \({p} < 0.001;\) Time Series Study: \({t}=139.03,\) \({p} < 0.001)\) (mean differences assessed using a two-tailed paired comparison t-test).
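The thermometer-difference measure and the paired comparison can be sketched together, because the affective polarization score is exactly the per-respondent difference that a paired t-test evaluates. This is a minimal, unweighted illustration with made-up ratings; the function names are hypothetical and the paper's analyses additionally apply the ANES survey weights.

```python
import math


def affective_polarization(in_party, out_party):
    """Per-respondent difference: in-party minus out-party thermometer rating."""
    return [i - o for i, o in zip(in_party, out_party)]


def paired_t(differences):
    """Paired-comparison t statistic: mean difference over its standard error."""
    n = len(differences)
    mean = sum(differences) / n
    variance = sum((d - mean) ** 2 for d in differences) / (n - 1)
    return mean / math.sqrt(variance / n)


if __name__ == "__main__":
    # Made-up thermometer ratings on the 0-100 scale.
    in_party = [80, 70, 90, 60]
    out_party = [20, 30, 10, 20]
    scores = affective_polarization(in_party, out_party)
    print(scores)            # raw polarization scores, possible range -100..100
    print(paired_t(scores))  # t statistic with n - 1 degrees of freedom
```

A positive t statistic indicates that in-party ratings are warmer than out-party ratings on average.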

The combination of in- and out-party thermometer scores is a common measure of affective polarization, as it controls for different baseline levels of warmth towards both parties among respondents. That said, Iyengar et al. (2012) found that out-party animus was the main driver of affective polarization. Thus, in Appendix Section D, the main model is shown with in-party and out-party attitudes considered separately. The analysis shows that both variables were important predictors of misinformation belief.Footnote 8

Other Measures

In addition to these variables of primary interest, the models included a control variable for respondents’ strength of party identification, coded to be on a 0 (leaning Democrat or Republican) to 1 (strong Democrat or Republican) scale. Scholars have found that those with stronger party identification engage in motivated reasoning to a greater extent (Lavine et al., 2012; Bolsen et al., 2014; Kuklinski et al., 2000). Affective polarization has likewise been shown to be positively correlated with partisan strength (Robison & Moskowitz, 2019). (That said, see Appendix Section A.1 for histograms of affective polarization among partisans and among partisan leaners. They show that affective polarization was widely distributed among both sets of people.) Including partisan strength as a predictor controlled for these alternative explanations.Footnote 9

It is also necessary to control for ideological extremity, which has been associated with affective polarization (Mason, 2018). Prior work has found that ideological extremity increases misinformation belief when the misinformation favors one’s prior stances—about COVID-19 (Calvillo et al., 2020) and immigration (Hopkins et al., 2019). Ideological extremity was coded to be on a 0 (moderate) to 1 (very liberal or conservative) scale.

Last, variables for political attention, political knowledge, education, gender, age, race, income, and region (Social Media Study only)/urban or rural (Time Series Study only) were included. Political attention, political knowledge, education, income, and age were coded as ordinal variables. Gender and region (Midwest, West, South, and Northeast)/urban or rural were entered as dummy variables. See Appendix Section A for the wording of the questions that were used to measure these variables. All variables in the models were normalized using min–max normalization to be on 0–1 scales.
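Min–max normalization, as referred to above, rescales a variable so that its smallest observed value becomes 0 and its largest becomes 1. A minimal sketch follows; the function name `min_max` and the handling of a constant variable (returning zeros) are choices made for this illustration, not details taken from the paper.

```python
def min_max(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lowest, highest = min(values), max(values)
    if highest == lowest:
        # A constant variable carries no information; map it to 0 by convention.
        return [0.0 for _ in values]
    return [(v - lowest) / (highest - lowest) for v in values]


if __name__ == "__main__":
    # Example: thermometer differences spanning the full -100..100 range.
    print(min_max([-100, 0, 100]))
```

Because every predictor then shares the same 0–1 range, each regression coefficient can be read as the predicted change in the outcome when that predictor moves from its observed minimum to its observed maximum.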

Results

H1 and H2 Results

OLS regressions were run to test H1 and H2.Footnote 10 H1 is supported by the data; belief in in-party-congruent misinformation increased as affective polarization increased (Social Media Study: \(\beta = 0.31\), \(p < 0.001\); Time Series Study: \(\beta =0.27\), \(p<0.001\); see Table 2). Marginal effects can help to put these coefficients in perspective. In the Social Media Study, the predicted belief in in-party-congruent misinformation of someone with the least affective polarization was 0.31, while that of someone with the highest degree of affective polarization was 0.61, holding all other predictors at their means. This represents a movement of the dependent variable from believing the true statement with “a little” confidence to believing the piece of misinformation with “a little” confidence.

In the Time Series Study, the results represent a similar movement in terms of the magnitude of the effects. Holding all other predictors at their means, those with the least affective polarization were predicted to believe in-party-congruent misinformation at a level of 0.28, meaning that the belief in the true statement was held with “a little” confidence. Those with the most affective polarization had a predicted belief in misinformation of 0.55. Thus, a one-unit movement in affective polarization yielded a switch from believing the true information to believing the misinformation.
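The link between the coefficients and the predicted values reported above can be checked with simple arithmetic. Because affective polarization is scaled from 0 to 1 and enters the OLS model linearly, moving it from its minimum to its maximum while holding the other predictors fixed changes the prediction by exactly the coefficient. The sketch below uses only numbers reported in the text; the function name `predicted_belief` is hypothetical, and small discrepancies are expected because the published figures are rounded.

```python
def predicted_belief(baseline, beta, affective_polarization):
    """Linear prediction with all other predictors held at their means.

    baseline: predicted belief when affective polarization is 0
    beta: OLS coefficient on affective polarization (0-1 scale)
    """
    return baseline + beta * affective_polarization


if __name__ == "__main__":
    # Time Series Study: baseline 0.28, coefficient 0.27 (values from the text).
    print(predicted_belief(0.28, 0.27, 1.0))  # compare with the reported 0.55
    # Social Media Study: baseline 0.31, coefficient 0.31 (values from the text).
    print(predicted_belief(0.31, 0.31, 1.0))  # compare with the reported 0.61
```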

The data also support H2. Affective polarization was not positively correlated with all misinformation; it had a negative relationship with out-party misinformation beliefs. As affective polarization increased, belief in out-party-congruent misinformation decreased (Social Media Study: \(\beta = -0.16\), \(p < 0.001\); Time Series Study: \(\beta = -0.14\), \(p < 0.001\); see Table 2). The magnitude of the effects was quite large. In the Social Media Study, the predicted level of belief in out-party misinformation was 0.45 when affective polarization was at its minimum and only 0.31 when it was at its maximum. This represents a movement from believing the misinformation to believing the true information with “a little” confidence.

Note that the correlations of affective polarization and in-party-congruent/out-party-congruent misinformation belief held when partisan strength and ideological extremity were controlled for. Additionally, when affective polarization was dropped from the model, the coefficients on partisan strength barely increased (see Appendix Section G). This indicates that the relationship between affective polarization and misinformation belief was independent of partisan strength.

Covariates

As partisan strength increased, respondents were more likely to believe misinformation of any type, whether it favored their party (Time Series Study: \(\beta = 0.05\), \(p< 0.01\); Social Media Study: \(\beta = 0.01\), \(p = 0.49\)) or not (Social Media Study: \(\beta = 0.04\), \(p< 0.01\); Time Series Study: \(\beta = 0.02\), \(p< 0.01\)). Higher levels of political attention, political knowledge, and education significantly reduced the level of belief in in-party-congruent misinformation in the Social Media Study (political attention: \(\beta = -0.06\), \(p< 0.001\); political knowledge: \(\beta = -0.07\), \(p< 0.001\); education: \(\beta = -0.07\), \(p < 0.001\)). In the Time Series Study, only political knowledge was significant (\(\beta = -0.10\), \(p < 0.001\)). In the Social Media Study, the predicted belief in in-party-congruent misinformation was 0.74 when affective polarization was at its maximum, and political attention, political knowledge, and education were at their minimum. On the other hand, when affective polarization was at its maximum and political attention, political knowledge, and education were also at their maximum, the predicted in-party-congruent misinformation belief was 0.17, a substantial reduction in predicted misinformation belief. That said, see below in the “Political Sophistication as a Moderator” section for additional analyses of the role of political sophistication in the relationship between affective polarization and misinformation belief. While it is true that increasing political knowledge was generally associated with reduced beliefs in in-party-congruent misinformation, this did not hold for respondents who were highly affectively polarized.

All three of these variables had a negative relationship with out-party misinformation (political attention: Social Media Study: \(\beta =-0.25\), \(p<0.01\); Time Series Study: \(\beta =-0.19\), \(p<0.01\); political knowledge: Social Media Study: \(\beta = -0.20\), \(p<0.01\); Time Series Study: \(\beta = -0.16\), \(p<0.01\); education: Social Media Study: \(\beta = -0.15\), \(p<0.01\); Time Series Study: \(\beta = -0.13\), \(p<0.01\)). The magnitude of the effect on out-party misinformation belief was larger than that on in-party belief. This reflects citizens being more likely at baseline to believe in-party-congruent misinformation than out-party misinformation.

African Americans were more likely to believe in-party-congruent misinformation (Social Media Study: \(\beta =0.12\), \(p<0.01\); Time Series Study: \(\beta =0.11\), \(p<0.01\)) and less likely to believe out-party-congruent misinformation (Social Media Study: \(\beta =-0.11\), \(p<0.05\); Time Series Study: \(\beta =-0.06\), \(p<0.05\)) than whites were. One possible (post hoc) explanation is that due to the confluence of Blacks’ racial and political identity (Huddy & Bankert, 2017; Mason, 2018), African Americans have a sense of party loyalty that leads to acceptance of in-party-congruent misinformation and rejection of out-party misinformation. People may be unable to sever political judgements from racial judgments, and thus non-political racial interactions may lead to affective polarization (Westwood & Peterson, 2020). That said, more work is needed to validate this association.

Misinformation Belief Among Democrats and Republicans

The relationships between affective polarization and misinformation belief held among respondents of each party, considered separately. Both Democrats (Social Media Study: \(\beta = 0.16\), \(p < 0.001\); Time Series Study: \(\beta = 0.26\), \(p < 0.001\)) and Republicans (Social Media Study: \(\beta = 0.44\), \(p < 0.001\); Time Series Study: \(\beta = 0.28\), \(p < 0.001\); see Table 3) showed a positive, significant correlation of affective polarization and in-party-congruent misinformation belief. H2 held for Democrats. A significant, negative relationship existed between affective polarization and acceptance of out-party-congruent misinformation (Social Media Study: \(\beta = -0.23\), \(p < 0.001\); Time Series Study: \(\beta = -0.20\), \(p < 0.001\)). For Republicans, this coefficient was negative, though not significant, in the Social Media Study and negative and marginally significant in the Time Series Study (Social Media Study: \(\beta = -0.06\), \(p = 0.26\); Time Series Study: \(\beta = -0.09\), \(p < 0.10\); see Table 4). Thus, the relationship between affective polarization and out-party-congruent misinformation was less definitive for Republicans than it was for Democrats.

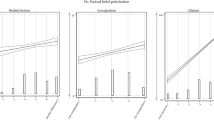

Partisan strength had a different relationship with in- and out-party-congruent misinformation for Democrats and Republicans, and the association was much stronger for Democrats. The relationship between partisan strength and both in- and out-party-congruent misinformation was positive and significant for Democrats [Social Media Study (in-party): \(\beta = 0.04\), \(p < 0.01\); Social Media Study (out-party): \(\beta = 0.02\), \(p < 0.10\); Time Series Study (in-party): \(\beta = 0.08\), \(p < 0.01\); Time Series Study (out-party): \(\beta = 0.03\), \(p < 0.01\); see Table 3; Fig. 1], indicating that the stronger Democrats’ party ties were, the more likely they were to believe both in-party- and out-party-favoring misinformation. For Republicans, the relationship was only marginally significant for out-party-congruent misinformation in the Social Media Study (\(\beta = 0.07\), \(p < 0.01\)).Footnote 11

The relationship between political attention, political knowledge, and education and misinformation belief held for both Democrats and Republicans. For all three variables, the relationship was negative; as political attention, political knowledge, and education increased, both Democrats and Republicans became less likely to accept either type of misinformation.

Political Sophistication as a Moderator

In the regressions presented thus far, political knowledge and education consistently reduced the extent of belief in both in-party-congruent misinformation and out-party-congruent misinformation. But this may be misleading; the average effect may hide an exacerbation of the association between partisan affect and misinformation belief as political sophistication increases. Table 5 presents a regression run with an interaction between affective polarization and political sophistication. Political sophistication was measured by the average of respondents’ political knowledge and education scores.

Political sophistication exaggerated belief in in-party-congruent misinformation and disbelief in out-party misinformation. As the interaction between affective polarization and political sophistication increased, in-party-congruent misinformation belief increased (Social Media Study: \(\beta = 0.33\), \(p < 0.05\); Time Series Study: \(\beta = 0.37\), \(p < 0.01\)). The joint effect of affective polarization and political sophistication did away with the otherwise negative effect of political sophistication almost entirely.Footnote 12 Comparing someone with low and high political sophistication among those with maximum affective polarization, the predicted probability of believing in-party-congruent misinformation was 0.64 (Social Media Study)/0.56 (Time Series Study) and 0.57 (Social Media Study)/0.55 (Time Series Study), respectively, a difference of only \(-0.07\) (Social Media Study)/\(-0.01\) (Time Series Study). While political sophistication on its own reduced belief in in-party-congruent misinformation, its effect was positive when interacted with affective polarization, almost entirely eliminating its otherwise favorable effect.
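The interaction can be read off from the four predicted values reported for in-party-congruent misinformation. The sketch below assumes a linear model on 0–1 scaled variables with all other covariates held fixed, so that the difference-in-differences of the four corner predictions equals the interaction coefficient. The no-polarization values are taken from Footnote 12; the function and variable names are illustrative and not drawn from the replication code.

```python
# Predicted in-party-congruent misinformation belief at the four corners of
# (affective polarization, political sophistication), as reported in the text
# and in Footnote 12. Keys are (polarization, sophistication), each 0 or 1.
PREDICTED = {
    "Social Media Study": {(0, 0): 0.46, (0, 1): 0.06, (1, 0): 0.64, (1, 1): 0.57},
    "Time Series Study": {(0, 0): 0.48, (0, 1): 0.10, (1, 0): 0.56, (1, 1): 0.55},
}


def sophistication_effect(corners, polarization):
    """Change in predicted belief as sophistication moves from 0 to 1."""
    return corners[(polarization, 1)] - corners[(polarization, 0)]


def implied_interaction(corners):
    """Difference-in-differences of the corner predictions.

    Assumption: in a linear model with a single product term, this equals
    the coefficient on polarization x sophistication.
    """
    return sophistication_effect(corners, 1) - sophistication_effect(corners, 0)


for study, corners in PREDICTED.items():
    print(
        study,
        round(sophistication_effect(corners, 0), 2),  # effect with no polarization
        round(sophistication_effect(corners, 1), 2),  # effect at maximum polarization
        round(implied_interaction(corners), 2),       # implied interaction term
    )
```

Under these assumptions the implied interaction terms are 0.33 (Social Media Study) and 0.37 (Time Series Study), which agree with the interaction coefficients reported above.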

The same pattern emerges for out-party-congruent misinformation. Political sophistication exacerbated the association of affective polarization and misinformation belief. For respondents viewing out-party-congruent misinformation, political sophistication interacted negatively with affective polarization (Social Media Study: \(\beta = -0.63\), \(p < 0.01\); Time Series Study: \(\beta = -0.35\), \(p < 0.01\)). Comparing someone with low and high political sophistication among those with maximum affective polarization, the predicted probability of believing out-party-congruent misinformation was 0.51 (Social Media Study)/0.47 (Time Series Study) and 0.01 (Social Media Study)/0.10 (Time Series Study), respectively, a difference of \(-0.50\) (Social Media Study)/\(-0.37\) (Time Series Study). In other words, among those with high affective polarization, political sophistication took them from believing the misinformation (with low confidence) to believing the true information very confidently.

These results can be explained by two factors. One is confirmation and disconfirmation bias, which the motivated reasoning literature has documented (e.g., Peterson & Iyengar, 2021) and which, as explained above, the politically sophisticated are theoretically more likely to engage in. The other is the ability of more sophisticated citizens to argue persuasively for ideas that support their preconceptions and against ideas that oppose their preconceptions (Zaller, 1992). When faced with an in-party-congruent piece of misinformation, affectively polarized political sophisticates may think of true facts that support the general thrust of the story and thus believe it more than a less politically aware individual does. On the other hand, when faced with an out-party-congruent piece of misinformation, affectively polarized political sophisticates may think of true facts that are in opposition to the story and thus believe it less than a less politically aware individual does.

The substantive importance of this finding lies in the fact that the association of affective polarization with misinformation belief does not depend on a set of the electorate that is less politically sophisticated and more prone to believing misinformation. Instead, the typically preventative effects of political sophistication on misinformation belief are close to entirely inapplicable to the highly affectively polarized when it comes to in-party-congruent misinformation belief.

Study 2: Experimental Evidence

The observational data have only demonstrated a correlation between affective polarization and belief/disbelief in in/out-party-congruent misinformation, not a causal effect. It is possible that the relationship is causal in the direction specified in the theory. But the causal arrow could instead go the other way; believing in-party-congruent misinformation and disbelieving out-party-congruent misinformation could lead to increased levels of affective polarization. Testing this experimentally can establish whether or not a causal relationship exists in the theorized direction.

Experimental Design

The experiment used a modified trust game designed by Westwood and Peterson (2020) and additionally used by Broockman et al. (2020). There were two players. Player 1 was given $10 and instructed to give some amount of that money, from $0 to $10, to Player 2. The amount given was tripled. Player 2 received this amount and was instructed to give as much of it as he or she wanted back to Player 1. Then the game ended.

Participants played three rounds of this game. They always played the role of Player 2, whereas Player 1 was actually a computer opponent who offered a predetermined amount of money. However, respondents did not know this and were given four pieces of information about Player 1: age, gender, income level, and political partisanship. The values on these attributes were randomized according to a uniform distribution.Footnote 13 Respondents were informed that their values on these attributes were also given to Player 1. After making their choices, players indicated the factors involved in their decision making process from five options: the other player’s age, gender, income, partisanship, and/or something else. Before Player 2 made his or her choice, he or she received a report of the factors involved in Player 1’s choice. When the game was over, participants saw a summary of the games’ outcomes and were told the amount that they had won.Footnote 14

Participants were randomized into two treatment conditions. The ‘positive experience’ group received pre-determined allocations of $5, $7, and $8 (tripled to $15, $21, and $24) from Player 1. Those in the ‘negative experience’ group received pre-determined allocations of $0 in all three rounds, and in these rounds Player 1’s partisanship was the opposite of Player 2’s partisanship. Additionally, respondents in the negative experience condition were told that the reason Player 1 chose to give them $0 was because of their partisanship.
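The mechanics of a round and the two treatment schedules can be sketched as follows. This is an illustrative sketch rather than the survey's actual implementation: the names are hypothetical, and it assumes, as in a standard trust game, that Player 1 keeps whatever part of the $10 is not given away.

```python
MULTIPLIER = 3   # the amount Player 1 gives is tripled
ENDOWMENT = 10   # Player 1 starts each round with $10

# Pre-determined Player 1 allocations per round, by treatment condition.
ALLOCATIONS = {
    "positive": [5, 7, 8],
    "negative": [0, 0, 0],
}


def play_round(given, returned):
    """Return (player1_payoff, player2_payoff) for one round.

    `given` is what Player 1 sends (0 to 10 dollars); `returned` is what
    Player 2 sends back out of the tripled amount.
    Assumption: Player 1 keeps the part of the endowment not given away.
    """
    if not 0 <= given <= ENDOWMENT:
        raise ValueError("Player 1 can give between $0 and $10")
    received = MULTIPLIER * given
    if not 0 <= returned <= received:
        raise ValueError("Player 2 can return at most what was received")
    return ENDOWMENT - given + returned, received - returned


# Amount each participant (always Player 2) receives per round.
received_by_condition = {
    condition: [MULTIPLIER * amount for amount in amounts]
    for condition, amounts in ALLOCATIONS.items()
}
print(received_by_condition)
```

For the positive experience condition this reproduces the tripled amounts of $15, $21, and $24 described above.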

To ensure that all participants understood the game, they saw three example rounds and answered two comprehension questions about the game before beginning. Respondents who gave incorrect responses to the comprehension questions were asked the question again until they answered correctly.

After playing the game, participants’ affective polarization was measured with feeling thermometer questions about “people who are Democrats/Republicans” (‘mass public affective polarization’) and about “Democrat/Republican politicians and elected officials” (‘politician affective polarization’). Social distance from the out-party, an alternative measure of affective polarization, was also measured.Footnote 15 Feeling thermometer questions were coded the same way as they were for the ANES data.

Next, participants were shown four pieces of misinformation in the form of news stories. The misinformation was relevant to current political events. Two of the pieces of misinformation were Democrat-congruent: Trump asking Russia to interfere in the 2024 election (referred to as Question 1) and it being discovered as a result of the raid on Mar-A-Lago that Trump attempted to blackmail Emmanuel Macron (termed Question 2). The other two pieces of misinformation were Republican-congruent: Obama having ordered FBI agents not to investigate Hunter Biden ahead of the 2020 election (Question 3) and the raid on Mar-A-Lago being an unlawful result of Joe Biden pressuring the FBI (Question 4). (Appendix Section K contains the stories.) These stories were followed by the same misinformation questions asked in the ANES: first, a question asking respondents which of two statements they considered most likely to be true, and then a question with five answer options about their confidence in that statement.

Misinformation measures were coded in the same way as for the ANES data. In-party-congruent misinformation was coded as the means of answers to the party-congruent misinformation questions. Out-party-congruent misinformation was coded as the means of answers to the party-incongruent misinformation questions. Independents who identified themselves as leaning towards one of the parties were counted as party members. All measures were normalized to be on 0–1 scales.
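The coding rule above can be sketched as follows. The sketch takes each item's belief score as already scaled to 0–1 (how the choice and confidence questions are combined into that score is not shown here), and the function name and the example respondent are hypothetical.

```python
from statistics import mean

# Which party each experimental misinformation item is congruent with.
ITEM_PARTY = {"Q1": "Democrat", "Q2": "Democrat", "Q3": "Republican", "Q4": "Republican"}


def congruent_scores(party, item_scores):
    """Return (in_party, out_party) misinformation belief for one respondent.

    `party` is "Democrat" or "Republican", with leaners already counted as
    party members; `item_scores` maps item name to a belief score on 0-1.
    """
    in_party = [s for q, s in item_scores.items() if ITEM_PARTY[q] == party]
    out_party = [s for q, s in item_scores.items() if ITEM_PARTY[q] != party]
    return mean(in_party), mean(out_party)


# Hypothetical respondent, for illustration only.
example = {"Q1": 0.75, "Q2": 0.5, "Q3": 0.25, "Q4": 0.0}
print(congruent_scores("Democrat", example))    # (0.625, 0.125)
print(congruent_scores("Republican", example))  # (0.125, 0.625)
```

The same four answers therefore yield different in-party and out-party scores depending on the respondent's party, which is what allows Democrats and Republicans to be pooled in a single model.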

Data were drawn from Amazon’s Mechanical Turk (MTurk) using CloudResearch’s MTurk Toolkit service, which vets MTurk users based on their previous performance. CloudResearch’s samples are of better quality than MTurk’s base samples and additionally are more attentive than Prolific samples and of equal overall quality to Dynata samples (Litman et al., 2017; Peer et al., 2021). A total of 1003 participants took the survey between August 31 and September 1, 2022. Respondents who answered one of the written response questions with nonsense (e.g., “asdf”) (\(N=9\)), who did not answer all of the questions applicable to the models (\(N=2\)), who were not U.S. citizens (\(N=5\)), and who elected not to have their data included in the analyses given that the survey involved deception (\(N=2\)) were excluded. Additionally, as in the ANES analyses, pure independents were excluded (\(N=116\), or 11.69% of the sample). This left the sample with 869 respondents. For all analyses that involve affective polarization, respondents who rated the out-party more highly than the in-party were not included (mass public affective polarization: \(N=30\); elite affective polarization: \(N=22\)).Footnote 16
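The sample accounting can be checked with a few lines of arithmetic. The sketch assumes that the exclusion categories do not overlap; the dictionary labels are shorthand introduced here for illustration.

```python
RECRUITED = 1003

# Exclusions reported in the text.
# Assumption: no respondent falls into more than one category.
EXCLUSIONS = {
    "nonsense written responses": 9,
    "incomplete on model variables": 2,
    "not U.S. citizens": 5,
    "opted out of data use (deception)": 2,
    "pure independents": 116,
}

analytic_sample = RECRUITED - sum(EXCLUSIONS.values())
print(analytic_sample)  # 869
```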

Descriptive Statistics

The misinformation questions worked as expected. The differences in belief among Democrats and Republicans were significant for each misinformation question (see Table 6). Belief was higher among Democrats for the expected misinformation (a difference of 0.34 for Question 1 and of 0.31 for Question 2) and higher among Republicans for the expected misinformation (a difference of 0.24 for Question 3 and of 0.43 for Question 4).

The proportion of respondents who thought that the pieces of misinformation were most likely true was dismayingly high (Question 1: 37.63%; Question 2: 38.32%; Question 3: 22.21%; Question 4: 24.97%). Among these respondents, the average confidence in their belief was also quite high (Question 1: \(M=2.73\), or “moderately confident”; Question 2: \(M=3.01\), or “moderately confident”; Question 3: \(M=2.90\), or “moderately confident”; Question 4: \(M=2.41\), or “a little confident”).

Structural Equation Modeling

Many experiments manipulate the independent variable of interest directly. For example, papers testing the effect of a candidate’s sex on candidate evaluations provide the control group with the candidate as a male and the treatment group with the candidate as a female. Because this manipulation of sex is direct, such papers can analyze a simple difference in means between the treated and untreated groups (i.e., the average treatment effect or ATE). But in the experiment used here, affective polarization was not directly manipulated; the treatment (the trust game) was used to manipulate affective polarization indirectly. As such, a different analytical strategy is called for.

When the variable of interest is indirectly manipulated in an experiment, a structural equation model (SEM) or mediation analysis is the appropriate analysis (Pearl, 2022; Bullock & Ha, 2011; Glynn, 2021; Imai et al., 2011). The reason for a mediation approach is straightforward given the theoretical argument. In theory, the trust game treatment has three distinct effects on the outcome variable of misinformation belief. The theoretically relevant average causal mediation effect (ACME) captures how changing levels of affective polarization due to the treatment affect misinformation belief. The treatment also has two other effects that are not of theoretical interest. One of these is the average direct effect (ADE) on misinformation belief. The ADE captures direct effects of the trust game on misinformation. The other is the indirect effect of the treatment on misinformation belief through mediators other than affective polarization. The trust game is a heavy-handed treatment that could have affected a number of variables,Footnote 17 and these variables may in turn have affected answers to misinformation belief questions. (When other mediators are not accounted for in the modeling, these indirect effects are captured by the ADE.)

A simple ATE would conflate these effects, indicating whether the trust game changed levels of misinformation belief but not specifying the effects that affective polarization was responsible for. A mediation approach effectively separates these alternative mediators’ effects, as well as direct effects, from the effect of the trust game mediated by affective polarization. It correctly identifies the ACME and allows us to evaluate my theoretical argument, which solely concerns the ACME (see Fig. 2). This improves the robustness, specificity, and appropriateness of the test over that offered by a simple comparison of means.

Fig. 2 The ACME is the expected causal relationship. While the ADE is, in theory, the direct effect of the treatment on the outcome, in practice its measurement also incorporates indirect effects through potential additional mediators that are unacknowledged. When potential additional mediators are not accounted for, the ADE is c combined with the product de. The ATE is c combined with the products ab and de. Note that the figure is based on the simplifying case in which there is one additional mediator, but there could be zero or more than one.
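In the notation of Fig. 2, and assuming linear, additive paths (a simplification adopted here for illustration), the decomposition can be written out explicitly. Here a is the path from the treatment to affective polarization, b the path from affective polarization to misinformation belief, and c the direct path from treatment to outcome; d and e are taken, as an assumption about the labeling, to be the paths into and out of a single unmodeled mediator.

\[
\begin{aligned}
\text{ACME} &= ab,\\
\text{ADE}_{\text{measured}} &= c + de,\\
\text{ATE} &= \text{ACME} + \text{ADE}_{\text{measured}} = ab + c + de.
\end{aligned}
\]

With no additional mediator, \(de = 0\) and the measured ADE reduces to c. With several additional mediators, the single product de would be replaced by a sum of such products.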

Note that a prior use of the same experimental treatment did not take this analytical approach. Broockman et al. (2020) analyzed the ATE. In order for this approach to be appropriate, one must believe that the trust game manipulated the outcome only through affective polarization (rather than through other mediators) and that there was no direct effect from the treatment to the outcome.Footnote 18 Using a mediation approach allows for the possibility that the trust game manipulates other mediators as well as affective polarization and/or has a separate, direct effect on the outcome variable.

I use SEM. While primarily used in modeling observational data, SEM is appropriate for analyzing experimental data (Bagozzi & Yi, 1989; Bagozzi et al., 1991; Kline, 2015) and, indeed, is perhaps better than GLM-based approaches such as ANOVA (Breitsohl, 2019). Running the Imai et al. mediation model with the mediation package in R yields substantively the same effects. See the Appendix Section O for those results. The model is run with mass public affective polarization. For the results with two other affective polarization measures, which are substantively similar, see the Appendix Section N.

Large changes in affective polarization were produced by the treatment, consistent with the findings of Westwood and Peterson (2020) and Broockman et al. (2020). The direct effect of the treatment on affective polarization (a in Fig. 2) was positive and significant (\(\beta =0.15, p<0.001\); see Table 7).

The direct effect of the treatment on in-party-congruent misinformation belief (c and de in Fig. 2) was negative (\(\beta = -0.03, p=0.08\)). This indicates that there was an effect—direct and/or indirect—of the treatment on the outcome through some other means than affective polarization. See the Appendix Section M and Section O for further discussion. The direct effect of affective polarization on in-party-congruent misinformation belief (b in Fig. 2) was positive, as expected (\(\beta = 0.24, p<0.001\)). As affective polarization went from its lowest to its highest value, people became 24% more likely to believe in-party-congruent misinformation.

The direct effect of the treatment on out-party-congruent misinformation (c and de in Fig. 2) was positive (\(\beta = 0.03, p = 0.06\)). The direct effect of affective polarization on out-party-congruent misinformation belief (b in Fig. 2) was negative (\(\beta = -0.24, p<0.001\)). This is consistent with expectations; as affective polarization increased, belief in out-party-congruent misinformation decreased.

Most importantly, the indirect effect of the treatment mediated by affective polarization on in-party-congruent misinformation belief (the ACME) was positive (\(\beta = 0.03, p<0.001\)). Respondents in the negative treatment condition were three percent more likely to believe in-party-congruent misinformation than respondents in the positive treatment condition. The hypotheses also hold when predicting out-party-congruent misinformation. The indirect effect of the treatment mediated by affective polarization on out-party-congruent misinformation belief was negative (\(\beta = -0.03, p<0.001\)). Respondents in the negative treatment condition were three percent less likely to believe out-party-congruent misinformation than respondents in the positive treatment condition, and this effect was mediated by respondents’ level of affective polarization.
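The relationship among the reported paths can be illustrated with a product-of-coefficients calculation. This is a sketch under simplifying assumptions (linear paths, the rounded coefficients reported in the text) and is not the estimation procedure itself; the names are illustrative.

```python
# Rounded path coefficients reported in the text (mass public affective polarization).
A_TREATMENT_TO_AP = 0.15                                  # path a
B_AP_TO_BELIEF = {"in_party": 0.24, "out_party": -0.24}   # path b
DIRECT_EFFECT = {"in_party": -0.03, "out_party": 0.03}    # measured ADE (c and de)


def mediated_effect(a, b):
    """Product-of-coefficients approximation of the mediated (indirect) effect."""
    return a * b


for outcome in ("in_party", "out_party"):
    acme = mediated_effect(A_TREATMENT_TO_AP, B_AP_TO_BELIEF[outcome])
    # Assumption: the total effect is the sum of mediated and direct effects.
    total = acme + DIRECT_EFFECT[outcome]
    print(outcome, round(acme, 3), round(total, 3))
```

Because the inputs are rounded to two decimals, the product (about 0.036 in absolute value) only approximates the reported ACME of 0.03. The sketch also shows why a simple comparison of means would be uninformative here: the mediated and direct effects have opposite signs and nearly cancel.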

Discussion

This paper tests and confirms four hypotheses across three data sets: the ANES Social Media Study, the ANES Time Series Study, and a survey experiment. The data show that an increase in affective polarization caused an increase in acceptance of in-party-congruent misinformation. But the opposite was true of out-party-congruent misinformation: an increase in affective polarization caused a decrease in acceptance of out-party-congruent misinformation. These associations held for both Democrats and Republicans and were independent of the effects of partisan strength and ideological extremity. The effect of political sophistication on the relationship between affective polarization and misinformation belief was to exacerbate the belief in in-party-congruent misinformation and further reduce the belief in out-party-congruent misinformation. The use of observational data demonstrates the generalizability of the results, and the experimental data demonstrate the causal validity of these claims.

These results affirm that affective polarization has implications for political attitudes and behavior as well as the social consequences that have been well documented (Panagopoulos et al., 2020; Chen & Rohla, 2018; Nicholson et al., 2016; Huber & Malhotra, 2017; Iyengar et al., 2012). They join and expand upon the recent findings of Druckman et al. (2021) and Wagner (2021): in addition to policy stances and turnout likelihood, affective polarization also has a relationship with levels of misinformation belief.

The findings suggest that the increase in affective polarization over the past few years is matched by polarization in terms of partisans’ beliefs. This in turn has both social and political implications. Regarding the social implications, it is difficult to engage in political conversation with someone whose fundamental ideas about what is true differ. If Democrats and Republicans believe the misinformation congruent with their own party and disbelieve the misinformation congruent with the other party, then they will be working from different sets of “facts.” We should expect more rancor in discussions between affectively polarized partisans.

More important are the political implications. Affectively polarized people are more likely to turn out to vote than those less affectively polarized (Wagner, 2021). Since affectively polarized people are more likely to believe in-party-congruent misinformation, their issue and candidate opinions are also more likely to be dependent on false information. Kuklinski et al. (2000) showed that misinformed voters do indeed use the misinformation in forming their political opinions, potentially causing different collective preferences than would exist had they been well informed. Together, these findings imply that those most likely to participate in our democratic process are more likely to have preferences that have been developed through the use of misinformation. Thus, the increase in affective polarization portends trouble for our representative democracy.

There are many avenues for future research on affective polarization and misinformation. First, though this paper has shown that affective polarization causes misinformation belief, it is also possible that misinformation belief increases affective polarization. Believing negative false information about the out-party may increase one’s feelings of anger towards the out-party. The two concepts may be mutually reinforcing. This possibility should be examined by future papers. Second, there is an alternative explanation to motivated reasoning for misinformation belief: cheerleading. It is not possible in this study to tell if respondents answered the misinformation questions incorrectly on purpose, cheerleading for their parties rather than giving honest answers. Studies have confirmed that respondents answer misinformation questions truthfully (Peterson & Iyengar, 2021; Berinsky, 2018), and yet this has not been tested in the context of these surveys. A study that did so would offer even more compelling evidence that the specific misinformation questions were answered genuinely.

Work should also focus on validating the mechanisms of the theory presented in this paper. Confirming that motivated reasoning is the mechanism connecting affective polarization and misinformation belief would be useful. Taber and Lodge (2006) suggested that motivated reasoners are more likely to think intuitively when receiving information congruent with their prior beliefs and more likely to think analytically when receiving incongruent information. If this is the case regarding the information processing of affectively polarized citizens, this could suggest solutions besides reducing affective polarization. For example, if using a quick or intuitive processing system when receiving in-party-congruent information is the mechanism that causes acceptance of this misinformation, then focusing attention on accuracy could mitigate belief. Indeed, Pennycook et al. (2021) found that shifting people’s attention towards accuracy increased the quality of news that they subsequently shared. It would be useful to know if this shift in focus works for affectively polarized citizens.

Milton Lodge and Charles Taber highlighted an important goal of social science research: finding when affect has negative implications for cognition (Groenendyk & Krupnikov, 2021). What should be added to this goal is the discernment of which of these negative implications have the greatest impact on society. The relationship between affective polarization and misinformation belief shows that affective polarization is concerning not only for the well-being of our society socially but also politically and should renew efforts to identify mechanisms by which it can be reduced.

Data Availability

Replication materials are available for this study at https://doi.org/10.7910/DVN/HEODWP.

Notes

Replication data and code are available for this study at https://doi.org/10.7910/DVN/HEODWP.

In random forest, regression trees are constructed by bootstrapping the complete cases. An imputed value is generated via a prediction rule that is given by the overall average of the trees. The random forest was run with 25 estimators and a depth of 50 in Python 3.6.

Additionally, the Time Series Study asked respondents about whether Coronavirus was intentionally developed in a laboratory. This question was not included in the formation of the misinformation variable given that this may not actually be misinformation.

While Trump won the 2016 election, he nonetheless said that millions of people voted illegally for Hillary Clinton and prevented him from winning the popular vote (Farley, 2016).

There was only a six percentage point difference in belief found between Republicans and Democrats (see Table 1). Additionally, a minority of Democrats/liberals are strongly anti-vax. Thus, it is debatable whether this topic truly constitutes a Republican-congruent piece of misinformation. However, Republicans and conservatives have been more likely to believe that vaccines cause autism than independents or Democrats since 2015 (Lupton & Hare, 2015). And by 2020, these formerly fringe views had gained traction among mainstream Republican audiences due to COVID-19 (Motta, 2021). Additionally, the Appendix Section E shows that the associations between affective polarization and misinformation belief are consistent across all Republican-favoring topics of misinformation, including vaccines.

The eigenvalue on the first component is 1.8 for the Social Media Study and 2.08 for the Time Series Study. All other components have eigenvalues of substantially less than one (Social Media Study: 0.72 and 0.52; Time Series Study: 0.82, 0.59, and 0.52). Scree plots in Appendix Section I show graphically that there is one primary latent variable underlying answers to the questions for both sets of data; that section also provides the eigenvectors. See the Appendix Section F for results (which are indistinguishable from those presented in the main document) with the variable composed of the average of the items rather than the latent variable. Additionally, the Appendix Section E shows results with each individual Republican-congruent piece of misinformation by itself, rather than using the latent variable.
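As a rough check on the one-factor reading, the reported eigenvalues can be summarized as below. The note itself relies on the eigenvalues and scree plots; the eigenvalue-greater-than-one cutoff (the Kaiser rule) and the variance-share calculation are common heuristics brought in here for illustration, and the shares are computed from the rounded values given above.

```python
# First-component and remaining eigenvalues reported in this note.
EIGENVALUES = {
    "Social Media Study": [1.8, 0.72, 0.52],
    "Time Series Study": [2.08, 0.82, 0.59, 0.52],
}


def retained_components(eigenvalues, threshold=1.0):
    """Count components whose eigenvalue exceeds the threshold (Kaiser rule)."""
    return sum(1 for value in eigenvalues if value > threshold)


def first_component_share(eigenvalues):
    """Share of the summed eigenvalues carried by the largest one."""
    return max(eigenvalues) / sum(eigenvalues)


for study, values in EIGENVALUES.items():
    print(study, retained_components(values), round(first_component_share(values), 2))
```

On these figures a single component is retained in each dataset, carrying roughly 0.59 (Social Media Study) and 0.52 (Time Series Study) of the summed eigenvalues.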

A second frequently-utilized measure of affective polarization uses respondents’ political stereotypes of members of the in- and out-party. In the Social Media Study, respondents were asked to indicate how smart and how open-minded they considered supporters of Democrats and Republicans to be. See Appendix Section H for results run with this version of the affective polarization variable.

Additionally, the analyses show that it is not the case that in-party attitudes were responsible for in-party-congruent misinformation beliefs and out-party animus was responsible for out-party-congruent misinformation beliefs. Instead, out-party animus had important implications for in-party-congruent misinformation beliefs.

Additionally, the model was run without the inclusion of this variable in the Appendix Section G to see if the coefficient or significance on party strength changed when affective polarization was no longer in the model. It did not. If it had done so, this would have indicated that the correlation between the two variables was large enough to cause multicollinearity issues.

For further examination of which independent variables in the model are most closely associated with in-party misinformation belief, see the Appendix Section J for LASSO analyses.

Note that this difference in effect strength cannot be explained by there not being a sufficient number of highly affectively polarized Republicans in the samples. The proportion of highly affectively polarized Republicans (Social Media Study: 39%; Time Series Study: 45%) was at least as large as the proportion of highly affectively polarized Democrats (Social Media Study: 37%; Time Series Study: 38%).

The predicted belief of someone with no affective polarization and no political sophistication was 0.46 (Social Media Study)/0.48 (Time Series Study); that of someone with no affective polarization and maximum level of political sophistication was 0.06 (Social Media Study)/0.10 (Time Series Study), a large negative effect of political sophistication of \(-0.40\) (Social Media Study)/\(-0.38\) (Time Series Study).

See the Appendix Section K for attribute values.

Participants were informed before beginning the game that they would receive a bonus equal to 0.05 times the amount they won in the game.

See the Appendix Section K for question wording.

If the analyses are instead run including these people, the results are substantively the same, with only small changes in the magnitude of the effects.

For example, it almost certainly affected feelings of anger and disappointment. It likely affected effort on the remaining survey questions, with satisficing becoming more prevalent among respondents in the treatment condition who received no money. It affected the time that respondents took to complete the survey.

This is not the case in my data. The ADE of the treatment on the outcome variable is significant (\(p<.10\)) and in the opposite direction from the ACME.
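
For reference, the ACME and the ADE are linked by the standard decomposition in the causal mediation framework of Imai et al. (2011). The notation below is generic and assumed for illustration; it is not taken from the models in this paper. With treatment status \(t \in \{0,1\}\), the average total effect \(\bar{\tau}\) splits into the ACME \(\bar{\delta}(t)\) and the ADE \(\bar{\zeta}(1-t)\):

\[
\bar{\tau} = \bar{\delta}(t) + \bar{\zeta}(1-t), \qquad t \in \{0, 1\}.
\]

When the ACME and the ADE have opposite signs, they partly offset each other, so the total effect can be smaller in magnitude than either component (cf. MacKinnon et al., 2000).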

References

Badrinathan, S. (2020). Educative interventions to combat misinformation: Evidence from a field experiment in India. American Political Science Review, 115(4), 1–17.

Bagozzi, R. P., & Yi, Y. (1989). On the use of structural equation models in experimental designs. Journal of Marketing Research, 26(3), 271–284.

Bagozzi, R. P., Yi, Y., & Singh, S. (1991). On the use of structural equation models in experimental designs: Two extensions. International Journal of Research in Marketing, 8(2), 125–140.

Baron, R. M., & Kenny, D. A. (1986). The moderator–mediator variable distinction in social psychological research: Conceptual, strategic, and statistical considerations. Journal of Personality and Social Psychology, 51(6), 1173.

Berinsky, A. J. (2017). Rumors and health care reform: Experiments in political misinformation. British Journal of Political Science, 47(2), 241–262.

Berinsky, A. J. (2018). Telling the truth about believing the lies? Evidence for the limited prevalence of expressive survey responding. The Journal of Politics, 80(1), 211–224.

Bisgaard, M. (2019). How getting the facts right can fuel partisan-motivated reasoning. American Journal of Political Science, 63(4), 824–839.

Bolsen, T., Druckman, J. N., & Cook, F. L. (2014). The influence of partisan motivated reasoning on public opinion. Political Behavior, 36(2), 235–262.

Breitsohl, H. (2019). Beyond ANOVA: An introduction to structural equation models for experimental designs. Organizational Research Methods, 22(3), 649–677.

Broockman, D. E., Kalla, J. L., & Westwood, S. J. (2020). Does affective polarization undermine democratic norms or accountability? Maybe not. American Journal of Political Science. https://doi.org/10.1111/ajps.12719

Bullock, J. G., & Ha, S. E. (2011). Mediation analysis is harder than it looks. In Cambridge handbook of experimental political science (Vol. 508, p. 521). Cambridge University Press.

Bullock, J. G., & Lenz, G. (2019). Partisan bias in surveys. Annual Review of Political Science, 22, 325–342.

Calvillo, D. P., Ross, B. J., Garcia, R. J., Smelter, T. J., & Rutchick, A. M. (2020). Political ideology predicts perceptions of the threat of COVID-19 (and susceptibility to fake news about it). Social Psychological and Personality Science, 11(8), 1119–1128.

Chen, M. K., & Rohla, R. (2018). The effect of partisanship and political advertising on close family ties. Science, 360(6392), 1020–1024.

Collins, L. M., Graham, J. J., & Flaherty, B. P. (1998). An alternative framework for defining mediation. Multivariate Behavioral Research, 33(2), 295–312.

Davis, J. A., & Weber, R. P. (1985). The logic of causal order (Vol. 55). SAGE.

Doove, L. L., Van Buuren, S., & Dusseldorp, E. (2014). Recursive partitioning for missing data imputation in the presence of interaction effects. Computational Statistics and Data Analysis, 72, 92–104.

Druckman, J. N., Klar, S., Krupnikov, Y., Levendusky, M., & Ryan, J. B. (2021). Affective polarization, local contexts and public opinion in America. Nature Human Behaviour, 5(1), 28–38.

Druckman, J. N., & Levendusky, M. S. (2019). What do we measure when we measure affective polarization? Public Opinion Quarterly, 83(1), 114–122.

Ecker, U. K., & Ang, L. C. (2019). Political attitudes and the processing of misinformation corrections. Political Psychology, 40(2), 241–260.

Farley, R. (2016, October). Trump’s bogus voter fraud claims.

Flynn, D., Nyhan, B., & Reifler, J. (2017). The nature and origins of misperceptions: Understanding false and unsupported beliefs about politics. Political Psychology, 38, 127–150.

Gaines, B. J., Kuklinski, J. H., Quirk, P. J., Peyton, B., & Verkuilen, J. (2007). Same facts, different interpretations: Partisan motivation and opinion on Iraq. The Journal of Politics, 69(4), 957–974.

Gilbert, D. T., Fiske, S. T., & Lindzey, G. (1998). The handbook of social psychology (Vol. 1). Oxford University Press.

Glynn, A. N. (2021). Advances in experimental mediation analysis. In Advances in experimental political science (pp. 257–270). Cambridge University Press.

Groenendyk, E., & Krupnikov, Y. (2021). What motivates reasoning? A theory of goal-dependent political evaluation. American Journal of Political Science, 65(1), 180–196.

Hochschild, J., & Einstein, K. L. (2015). ‘It isn’t what we don’t know that gives us trouble, it’s what we know that ain’t so’: Misinformation and democratic politics. British Journal of Political Science, 45(3), 467–475.

Hopkins, D. J., Sides, J., & Citrin, J. (2019). The muted consequences of correct information about immigration. The Journal of Politics, 81(1), 315–320.

Huber, G. A., & Malhotra, N. (2017). Political homophily in social relationships: Evidence from online dating behavior. The Journal of Politics, 79(1), 269–283.

Huddy, L., & Bankert, A. (2017). Political partisanship as a social identity. In Oxford research encyclopedia of politics. Oxford University Press.

Imai, K., Keele, L., Tingley, D., & Yamamoto, T. (2011). Unpacking the black box of causality: Learning about causal mechanisms from experimental and observational studies. American Political Science Review, 105(4), 765–789.

Iyengar, S., Lelkes, Y., Levendusky, M., Malhotra, N., & Westwood, S. J. (2019). The origins and consequences of affective polarization in the United States. Annual Review of Political Science, 22, 129–146.

Iyengar, S., Sood, G., & Lelkes, Y. (2012). Affect, not ideology: A social identity perspective on polarization. Public Opinion Quarterly, 76(3), 405–431.

Jerit, J., & Barabas, J. (2012). Partisan perceptual bias and the information environment. The Journal of Politics, 74(3), 672–684.

Judd, C. M., & Kenny, D. A. (2010). Data analysis in social psychology: Recent and recurring issues. In Handbook of social psychology. Wiley.

Kline, R. B. (2015). Principles and practice of structural equation modeling. Guilford Publications.

Kuklinski, J. H., Quirk, P. J., Jerit, J., Schwieder, D., & Rich, R. F. (2000). Misinformation and the currency of democratic citizenship. The Journal of Politics, 62(3), 790–816.

Lavine, H. G., Johnston, C. D., & Steenbergen, M. R. (2012). The ambivalent partisan: How critical loyalty promotes democracy. Oxford University Press.

Leeper, T. J., & Slothuus, R. (2014). Political parties, motivated reasoning, and public opinion formation. Political Psychology, 35, 129–156.

Levendusky, M. S. (2018). Americans, not partisans: Can priming American national identity reduce affective polarization? The Journal of Politics, 80(1), 59–70.

Litman, L., Robinson, J., & Abberbock, T. (2017). TurkPrime.com: A versatile crowdsourcing data acquisition platform for the behavioral sciences. Behavior Research Methods, 49(2), 433–442.

Lodge, M., & Taber, C. (2000). Three steps toward a theory of motivated political reasoning. In Elements of reason: Cognition, choice, and the bounds of rationality (pp. 183–213). Cambridge University Press.

Lodge, M., & Taber, C. S. (2013). The rationalizing voter. Cambridge University Press.

Lupton, R., & Hare, C. (2015). Conservatives are more likely to believe that vaccines cause autism. Washington Post.

MacKinnon, D. P., Krull, J. L., & Lockwood, C. M. (2000). Equivalence of the mediation, confounding and suppression effect. Prevention Science, 1(4), 173–181.

MacKinnon, D. P., Lockwood, C. M., Hoffman, J. M., West, S. G., & Sheets, V. (2002). A comparison of methods to test mediation and other intervening variable effects. Psychological Methods, 7(1), 83.

Mason, L. (2018). Ideologues without issues: The polarizing consequences of ideological identities. Public Opinion Quarterly, 82(S1), 866–887.

McFatter, R. M. (1979). The use of structural equation models in interpreting regression equations including suppressor and enhancer variables. Applied Psychological Measurement, 3(1), 123–135.

Memon, M. A., Cheah, J.-H., Ramayah, T., Ting, H., & Chuah, F. (2018). Mediation analysis issues and recommendations. Journal of Applied Structural Equation Modeling, 2(1), i–ix.

Motta, M. (2021). Republicans, not Democrats, are more likely to endorse anti-vaccine misinformation. American Politics Research. https://doi.org/10.1177/1532673X211022639

Nicholson, S. P., Coe, C. M., Emory, J., & Song, A. V. (2016). The politics of beauty: The effects of partisan bias on physical attractiveness. Political Behavior, 38(4), 883–898.

Nyhan, B., & Reifler, J. (2010). When corrections fail: The persistence of political misperceptions. Political Behavior, 32(2), 303–330.

Panagopoulos, C., Green, D. P., Krasno, J., Schwam-Baird, M., & Endres, K. (2020). Partisan consumerism: Experimental tests of consumer reactions to corporate political activity. The Journal of Politics, 82(3), 996–1007.

Pearl, J. (2022). Direct and indirect effects. In Probabilistic and causal inference: The works of Judea Pearl (pp. 373–392). ACM.

Peer, E., Rothschild, D., Gordon, A., Evernden, Z., & Damer, E. (2021). Data quality of platforms and panels for online behavioral research. Behavior Research Methods, 54, 1–20.

Pennycook, G., Epstein, Z., Mosleh, M., Arechar, A. A., Eckles, D., & Rand, D. G. (2021). Shifting attention to accuracy can reduce misinformation online. Nature, 592(7855), 590–595.

Peterson, E., & Iyengar, S. (2021). Partisan gaps in political information and information-seeking behavior: Motivated reasoning or cheerleading? American Journal of Political Science, 65(1), 133–147.

Redlawsk, D. P. (2002). Hot cognition or cool consideration? Testing the effects of motivated reasoning on political decision making. The Journal of Politics, 64(4), 1021–1044.

Robison, J., & Moskowitz, R. L. (2019). The group basis of partisan affective polarization. The Journal of Politics, 81(3), 1075–1079.

Schaffner, B. F., & Luks, S. (2018). Misinformation or expressive responding? What an inauguration crowd can tell us about the source of political misinformation in surveys. Public Opinion Quarterly, 82(1), 135–147.

Schaffner, B. F., & Roche, C. (2016). Misinformation and motivated reasoning: Responses to economic news in a politicized environment. Public Opinion Quarterly, 81(1), 86–110.

Shrout, P. E., & Bolger, N. (2002). Mediation in experimental and nonexperimental studies: New procedures and recommendations. Psychological Methods, 7(4), 422.

Swire, B., Berinsky, A. J., Lewandowsky, S., & Ecker, U. K. (2017). Processing political misinformation: Comprehending the Trump phenomenon. Royal Society Open Science, 4(3), 160802.

Taber, C. S., & Lodge, M. (2006). Motivated skepticism in the evaluation of political beliefs. American Journal of Political Science, 50(3), 755–769.

Thorson, E. (2016). Belief echoes: The persistent effects of corrected misinformation. Political Communication, 33(3), 460–480.

Tibshirani, R. (1996). Regression shrinkage and selection via the LASSO. Journal of the Royal Statistical Society: Series B (Methodological), 58(1), 267–288.

Tingley, D., Yamamoto, T., Hirose, K., Keele, L., & Imai, K. (2014). Mediation: R package for causal mediation analysis. Journal of Statistical Software, 59(5).

Vegetti, F., & Mancosu, M. (2020). The impact of political sophistication and motivated reasoning on misinformation. Political Communication, 37(5), 678–695.

Wagner, M. (2021). Affective polarization in multiparty systems. Electoral Studies, 69, 102199.

Westwood, S. J., & Peterson, E. (2020). The inseparability of race and partisanship in the United States. Political Behavior, 44, 1–23.

Wojcieszak, M., & Warner, B. R. (2020). Can interparty contact reduce affective polarization? A systematic test of different forms of intergroup contact. Political Communication, 37(37), 1–23.

Zaller, J. R. (1992). The nature and origins of mass opinion. Cambridge University Press.

Zhao, X., Lynch, J. G., Jr., & Chen, Q. (2010). Reconsidering Baron and Kenny: Myths and truths about mediation analysis. Journal of Consumer Research, 37(2), 197–206.

Acknowledgements

The author thanks the participants in the University of Houston American Behavior Working Group and three anonymous reviewers for useful comments.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

A ANES Measures

Misinformation

Social Media Study:

Republican-favoring misinformation, with the misinformed response bolded:

1. Which of these do you think is most likely to be true?

[1] Russia tried to interfere in the 2016 presidential election

[2] Russia did not try to interfere in the 2016 presidential election

[Display on the same page as previous item after response is entered]

How confident are you about that?

[1] Not at all

[2] A little

[3] Moderately

[4] Very

[5] Completely

2. Which of these do you think is most likely to be true?

[1] Millions of people voted illegally in the 2016 election

[2] Very few people voted illegally in the 2016 election

[Confidence asked in the same way as for the first question]

3. Which of these two statements do you think is most likely to be true about the Affordable Care Act of 2010 [ACA], also known as Obamacare?

[1] The ACA increased the number of people with health insurance

[2] The ACA did not increase the number of people with health insurance

[Confidence asked in the same way as for the first question]

Democrat-favoring misinformation:

4. Whose administration deported more unauthorized immigrants during the first three years?

[1] Donald Trump’s administration