Abstract

Early diagnosis and treatment of melanoma are very important because of its dangerous nature and rapid spread. When melanoma is diagnosed correctly and early, the recovery rate of patients increases significantly. Physical methods are not sufficient for diagnosis and classification. The aim of this study is to use a hybrid method that combines different deep learning methods in the classification of melanoma and to investigate the effect of the optimizer methods used in deep learning on classification performance. In the study, melanoma detection was carried out on skin lesion images through a simulation created with the deep learning architectures DenseNet, InceptionV3, ResNet50, InceptionResNetV2 and MobileNet and seven optimizers: SGD, Adam, RmsProp, AdaDelta, AdaGrad, Adamax and Nadam. The results of the study show that SGD performs better and more stably than the other optimizers in terms of convergence rate, training speed and classification performance. In addition, the momentum parameter added to the structure of the SGD optimizer reduces the oscillation and training time compared to other functions. Among the combined methods, the best melanoma detection was achieved using the DenseNet model and the SGD optimizer, with a test accuracy of 0.949, a test sensitivity of 0.9403, and a test F score of 0.9492.

1 Introduction

Melanoma, a highly aggressive form of skin cancer originating from pigment-producing melanocyte cells, often spreads rapidly and ranks among the most common cancer types [1]. Main types include superficial spreading melanoma, nodular melanoma, lentigo maligna melanoma, and acral lentiginous melanoma. While UV radiation exposure is a primary cause, genetics, skin color, the presence of numerous moles, and weakened immunity also contribute. Early detection and treatment are crucial due to its hazardous nature, significantly boosting patient recovery rates. Classification of melanoma, based on parameters like asymmetry, irregular borders, color and shape changes, and size (typically larger than 6 mm), is vital for diagnosis, prognosis, and treatment planning [2]. Dermatologists rely on visual examination of skin lesions for the diagnosis and classification of melanoma. Nonetheless, factors such as person-to-person variation in observation techniques and the observer's momentary state can affect the accuracy of diagnosis and classification. In this regard, the use of machine learning methods has been promising. In particular, the deep learning approach has been observed to provide significant benefits in the diagnosis and classification of melanoma [3].

It has been observed that visual examination by dermatologists is not sufficient because of the variety of lesion types and of the examination techniques used. Considering the importance of early diagnosis and classification for the healing of patients, the use of machine learning, especially deep learning methods, is an active area of research. There are many studies on the use of deep learning methods for the early diagnosis and classification of melanoma in particular. Azeem et al. [15] proposed the SkinLesNet algorithm. SkinLesNet contains several convolutional layers, a max-pooling layer and some feature extraction operations. They used different skin image datasets: PAD-UFES-20, HAM10000, and ISIC2017. The proposed method was compared with VGG16 and ResNet50, and SkinLesNet achieved higher accuracy than the other methods. All these studies are given in Table 1.

1.1 Motivation

Early diagnosis and classification of melanoma, one of the most common types of cancer, is vital for patients. Physical methods used by dermatologists for diagnosis and classification are not sufficient. In the literature, it has been shown that machine learning, especially deep learning methods, have been successfully used for melanoma diagnosis and classification. However, the differences in the optimizer methods used in these studies and their contribution to success have not been evaluated. In this study, our motivation is to use a hybrid method that combines different deep learning methods for the classification of melanoma, a dangerous type of skin cancer, and to investigate the contribution of optimizer methods used in deep learning methods to classification success.

1.2 Novelties and contributions

This study examines the use of deep learning methods in an important medical application area such as melanoma detection and the role of optimizer methods to improve its performance. Melanoma is one of the deadliest types of skin cancers and early detection and accurate classification are critical for the treatment and management of the disease. In the literature, various machine learning and deep learning approaches have been proposed for melanoma classification. However, the selection of an appropriate optimizer method to ensure high performance of deep learning models is important and studies on this topic are limited. In this study, the impact of optimizer methods on the performance of deep learning methods is investigated for the first time. Different optimizer methods are used and their effects on classification accuracy are evaluated.

The most important contributions of this study are as follows:

1. Investigating the effects of transfer learning architectures: Forty different experimental runs were performed with five different transfer learning architectures and eight different optimizer functions in melanoma classification and the results were compared and interpreted.

2. The first study of the impact of optimizer methods on the success of deep learning methods: This study is one of the first to focus on the success of optimizer methods to optimize the training of deep learning models in melanoma classification. Different optimizer methods such as SGD, Adam, RMSprop are extensively tested on the performance of the deep learning model. The results obtained help us understand the impact of different optimizer methods on the performance of the deep learning model.

As a result, this study is an important step towards optimizing the performance of deep learning methods in the vital healthcare field of melanoma detection. By enabling the successful use of deep learning models in melanoma classification, it can make a great contribution to early detection and treatment processes. Moreover, the results of the study can be applicable to the optimization of deep learning models in general and to other medical imaging problems. Therefore, this research in the field of melanoma classification can open new doors in an important intersection between medicine and artificial intelligence and can be an important reference source for future studies.

The rest of the paper is organized as follows. Section 2, Materials and Methods, gives information about the dataset, dimension reduction, and classification methods used in this study. Section 3, Experimental Evaluations, presents the results of all analyses. Section 4 discusses the findings, and Section 5 gives the conclusions and summarizes the whole work.

2 Materials and Methods

In this study, the Melanoma Skin Cancer Dataset [16] was used to classify benign/malignant status for melanoma skin disease. The data set consists of 10,605 RGB images in 300 × 300 size, 5500 benign and 5105 malignant. In the study, classification processes are carried out on this data set by using different deep learning architectures and optimizer methods.

2.1 Transfer Learning

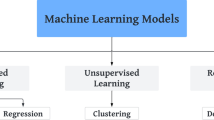

Deep learning is a field that improves the learning and analysis capabilities of computer systems by focusing on complex tasks. This approach usually involves multilayer artificial neural networks trained on large data sets. Deep learning is particularly effective in processing complex visual and signal data, improving automatic learning capabilities by extracting learned features hierarchically [17, 18].

Transfer Learning is a machine learning approach that is often used in deep learning techniques. This approach involves adapting the features of a pre-trained model to another task to solve it. This way, a higher success rate can be achieved on a smaller data set and the training time of the model can be reduced [19, 20].

Transfer Learning is often used, especially in image processing. For example, many image classification tasks can be initiated from a pre-trained model trained on a large data set such as ImageNet. This model can then be used for a more customized classification task by adapting it to a new data set [21].

MobileNet, a convolutional neural network model developed by Google, features fast model convergence, low memory consumption and low computational overhead [22]. In addition, it uses fewer parameters compared to other models. Thanks to these features, it is very useful in real-time classification operations on devices with low computing power. Although it is designed for 224 × 224 inputs, it can also be used for any input size larger than 32 × 32 [23]. The MobileNet architectural structure is shared in Fig. 1.

DenseNet, proposed by Huang et al., provides cross-layer information flow in deep networks. This model won the best paper award at CVPR 2017. In DenseNet, each layer receives additional inputs from all previous layers and passes its own feature map to all subsequent layers [24]. The DenseNet architecture is shown in general terms in Fig. 2. Compared to other deep learning architectures, it has advantages such as having fewer parameters, reusing features and mitigating the vanishing gradient problem. It also has disadvantages, such as long training times due to layer density and excessive memory usage due to the merging of feature maps [25, 26].
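The dense connectivity described above can be made concrete by counting channels. If every layer contributes a fixed number of new feature maps (the growth rate in the DenseNet paper), then each layer sees the block input plus the outputs of all earlier layers. The following is a toy calculation in Python; the channel count, growth rate and depth are illustrative assumptions, not the configuration used in this study.

```python
def dense_block_input_channels(block_input_channels, growth_rate, num_layers):
    """Return the number of input channels seen by each layer of a dense block.

    Layer i (0-based) receives the block input concatenated with the
    feature maps produced by the i layers that come before it.
    """
    return [block_input_channels + i * growth_rate for i in range(num_layers)]


# Illustrative values only (assumed, not taken from the study).
channels = dense_block_input_channels(block_input_channels=64,
                                      growth_rate=32,
                                      num_layers=6)
print(channels)  # [64, 96, 128, 160, 192, 224]
```

The steadily growing input width is the source of both the feature reuse and the memory cost mentioned above.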

InceptionV3 is a deep neural network architecture released by Google. It belongs to the Inception family, whose first version (GoogLeNet) won the ILSVRC (ImageNet Large Scale Visual Recognition Challenge) in 2014. Its basic principle is to reduce the number of connections without reducing network efficiency [27]. Thus, it offers a solution to the overfitting problem in traditional networks with densely interconnected layers. It also reduces the computational cost thanks to the sparse connection. The InceptionV3 architecture, which offers more efficient operation with fewer parameters, is a state-of-the-art architecture used in image classification studies [28]. The architectural model is presented in Fig. 3.

ResNet50 (Residual Neural Network), which has the advantage of a deeper structure compared to other deep learning architectures, also offers a solution to the vanishing gradient problem experienced in previous architectures [22]. By adding shortcut connections between layers, it prevents the degradation that may occur as the network becomes deeper and more complex. In addition, bottleneck blocks are used for faster training [29]. The ResNet50 architectural structure is shared in Fig. 4.
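The shortcut idea can be sketched in a few lines: a residual block outputs F(x) + x, so the input always has a direct path to the output. The example below is a plain-Python illustration on a list of numbers; `residual_block` and `zero_transform` are hypothetical names, and a real block would use convolutions rather than an arbitrary function.

```python
def residual_block(x, transform):
    """Apply y = F(x) + x, where F is the transformation learned by the block."""
    fx = transform(x)
    return [f + xi for f, xi in zip(fx, x)]


def zero_transform(x):
    """Stand-in for a block whose learned transformation contributes nothing."""
    return [0.0 for _ in x]


# Even when F contributes nothing, the shortcut passes the input through unchanged.
print(residual_block([1.0, -2.0, 3.0], zero_transform))  # [1.0, -2.0, 3.0]
```

Because the identity mapping is available for free, adding such blocks should not make a deeper network worse than a shallower one, which is the degradation argument made above.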

The InceptionResNetV2 deep learning architecture was proposed after examining the positive effects of introducing residual connections on the performance of the Inception architecture. A more advanced, 164-layer deep neural network model was created by combining two state-of-the-art models, Inception and Residual networks (shown in Fig. 5). The residual connections allow the model to be deeper while avoiding the vanishing gradient problem and keeping the learning process fast. The Inception architecture, on the other hand, provides advantages such as lower computational cost, better performance and prevention of information loss by using the ReLU activation function [30]. The InceptionResNetV2 architecture is trained on over one million images from the ImageNet dataset and can therefore classify images into 1000 object categories [31].

2.2 Optimizer Methods

Optimizer methods are used to minimize the error, that is, the difference between the output value produced by the network and the actual value. In deep learning, optimizer methods search for a minimum of the error function so that the learning process is carried out more reliably. One of the most important factors in the use of optimizer methods is the learning rate. However, setting an appropriate learning rate for each algorithm is difficult. To overcome this difficulty, different variants of gradient methods have been developed [32].

SGD (Stochastic Gradient Descent) is an optimizer method that works iteratively to find the optimal parameter value in deep learning algorithms. It frequently updates the parameter values to reduce the error value. It runs slowly as a new predictive value is calculated for the training data at each iteration. Due to this feature, it is not suitable for use in large data sets. Its main advantage is that it is efficient and an easy-to-apply method [33].
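As a rough illustration of the iterative update described above, the sketch below fits a single slope parameter with plain SGD, updating the parameter after every individual sample. The toy data, the learning rate and the function name `sgd_fit_slope` are assumptions made for this example only.

```python
import random


def sgd_fit_slope(data, learning_rate=0.05, epochs=50, seed=0):
    """Fit y = w * x by stochastic gradient descent on the squared error."""
    rng = random.Random(seed)
    samples = list(data)
    w = 0.0
    for _ in range(epochs):
        rng.shuffle(samples)              # visit the samples in random order
        for x, y in samples:
            grad = 2.0 * (w * x - y) * x  # derivative of (w*x - y)^2 w.r.t. w
            w -= learning_rate * grad     # one update per sample
    return w


# Toy data generated from y = 2x (illustrative only).
toy_data = [(x, 2.0 * x) for x in (0.5, 1.0, 1.5, 2.0)]
fitted_w = sgd_fit_slope(toy_data)
print(fitted_w)  # approaches 2.0
```

The learning rate stays fixed for the whole run, which is the property the adaptive methods in the following paragraphs try to relax.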

A fixed learning rate is used in the SGD optimizer method. The AdaGrad optimizer method aims to overcome this limitation by using a different learning rate at each step. It calculates the learning rate based on the sum of the squares of the past gradients [34].
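A minimal sketch of this rule for a single parameter is given below: the step is divided by the square root of the accumulated squared gradients, so the effective learning rate shrinks as training proceeds. The quadratic test function and all hyperparameter values are assumptions chosen for illustration.

```python
import math


def adagrad_minimize(grad_fn, w0, learning_rate=0.5, steps=200, eps=1e-8):
    """Minimize a one-dimensional function with the AdaGrad update rule."""
    w = w0
    grad_sq_sum = 0.0
    for _ in range(steps):
        g = grad_fn(w)
        grad_sq_sum += g * g  # running sum of squared gradients
        w -= learning_rate * g / (math.sqrt(grad_sq_sum) + eps)
    return w


# Toy objective f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w_adagrad = adagrad_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_adagrad)  # close to 3.0
```

Since the sum only ever grows, the effective step can become very small over long runs, which motivates RMSProp and AdaDelta.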

RMSProp (Root Mean Squared Propagation) has been proposed as a solution to the constant learning rate problem. It differs from AdaGrad in how the gradients are accumulated. Instead of summing all past gradients, it keeps an exponentially weighted average of the squared gradients, so that older gradient information is gradually discarded. It is a gradient-based optimizer method whose learning rate changes over time and in which a separate value is applied for each parameter [35, 36].
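The difference from AdaGrad can be seen in a short sketch: the running sum is replaced by an exponentially weighted average controlled by a decay factor. The toy objective, the decay value of 0.9 and the other settings are assumptions for illustration.

```python
import math


def rmsprop_minimize(grad_fn, w0, learning_rate=0.01, decay=0.9,
                     steps=1000, eps=1e-8):
    """Minimize a one-dimensional function with the RMSProp update rule."""
    w = w0
    avg_sq_grad = 0.0
    for _ in range(steps):
        g = grad_fn(w)
        # Exponentially weighted average: old gradients fade out over time.
        avg_sq_grad = decay * avg_sq_grad + (1.0 - decay) * g * g
        w -= learning_rate * g / (math.sqrt(avg_sq_grad) + eps)
    return w


# Toy objective f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w_rmsprop = rmsprop_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_rmsprop)  # ends in a small neighbourhood of 3.0
```

With a constant learning rate the iterate typically hovers near the minimum rather than settling exactly on it, so the example only checks that it ends up close.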

AdaDelta is an optimizer method proposed to overcome AdaGrad's learning rate problem: over many iterations, the learning rate becomes too small and causes slow convergence. With this method, there is no obligation to choose a learning rate. Instead of the learning rate, an exponentially decaying average of the squares of the delta value, which indicates the difference between the current weights and the updated weights, is used. Taking the exponentially decaying average eliminates the slowness problem [34, 37].
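The mechanics can be sketched as follows, keeping one decaying average for the squared gradients and one for the squared deltas; no learning rate appears in the update. The decay factor, epsilon and toy objective are assumed values, and only a handful of steps are run to show how each update is formed.

```python
import math


def adadelta_minimize(grad_fn, w0, rho=0.95, steps=10, eps=1e-6):
    """Run a few AdaDelta updates on a one-dimensional function."""
    w = w0
    avg_sq_grad = 0.0   # decaying average of squared gradients
    avg_sq_delta = 0.0  # decaying average of squared parameter changes
    for _ in range(steps):
        g = grad_fn(w)
        avg_sq_grad = rho * avg_sq_grad + (1.0 - rho) * g * g
        # The ratio of the two root-mean-square terms replaces the learning rate.
        delta = -math.sqrt(avg_sq_delta + eps) / math.sqrt(avg_sq_grad + eps) * g
        avg_sq_delta = rho * avg_sq_delta + (1.0 - rho) * delta * delta
        w += delta
    return w


# Toy objective f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w_adadelta = adadelta_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_adadelta)  # has moved from 0.0 towards 3.0
```

The first steps are small because the delta average starts at zero and is seeded only by epsilon; the step size then adapts as both averages build up.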

Adam (Adaptive Moment Estimation) is designed mainly for deep learning studies. It aims to obtain the most appropriate value by adding momentum to the RMSProp method. It keeps an exponentially decaying average of past gradients and adjusts adaptive learning rates for each parameter. Its most important feature is that it finds individual adaptive learning rates for the various parameters [38,39,40].
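A compact sketch of the Adam update for one parameter is shown below. It combines a decaying average of the gradients (the momentum part) with a decaying average of the squared gradients (the RMSProp part), each corrected for its zero initialization. The beta values are the commonly quoted defaults; the learning rate, step count and toy objective are assumptions for illustration.

```python
import math


def adam_minimize(grad_fn, w0, learning_rate=0.1, beta1=0.9, beta2=0.999,
                  steps=500, eps=1e-8):
    """Minimize a one-dimensional function with the Adam update rule."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(w)
        m = beta1 * m + (1.0 - beta1) * g      # first moment (momentum)
        v = beta2 * v + (1.0 - beta2) * g * g  # second moment (scaling)
        m_hat = m / (1.0 - beta1 ** t)         # bias correction
        v_hat = v / (1.0 - beta2 ** t)
        w -= learning_rate * m_hat / (math.sqrt(v_hat) + eps)
    return w


# Toy objective f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w_adam = adam_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_adam)  # close to 3.0
```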

Nadam (Nesterov-accelerated Adaptive Moment Estimation) is formed by combining Adam and Nesterov Momentum. It is based on exponential moving averages of the gradients. Thus, it accelerates the learning process during model training. Nadam converges faster than Adam. It is often preferred because it is simple to apply and efficient to calculate [6.

3 Experimental Evaluations

Within the scope of the study, transfer learning models were trained. Model training was done in a cloud environment using Google Colab.

Optimizer algorithms are used to minimize errors in training. Experimental analyses were conducted to examine the effect of optimizers on deep learning models. Melanoma detection was performed on skin lesion images through simulation. The simulation was created with seven different optimizer functions and the deep learning architectures DenseNet, InceptionV3, ResNet50, InceptionResNetV2 and MobileNet. In the experimental studies, the SGD, Adam, RmsProp, AdaDelta, AdaGrad, Adamax and Nadam optimizers were used. For the performance evaluation of the deep learning models, the data set was divided into 80% training (8484 images) and 20% testing (2121 images). Tables 3–7 show the results of the DenseNet, InceptionV3, ResNet50, InceptionResNetV2 and MobileNet models for detecting melanoma. In the experiments, the ReLU activation function and the cross-entropy error function were used.
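The split sizes quoted above follow directly from the dataset size, and the reported criteria can be derived from a binary confusion matrix. The sketch below checks the arithmetic and shows one common way to compute accuracy, sensitivity and F-score. The confusion-matrix counts are made up for illustration and are not results from this study; the function names are hypothetical.

```python
def split_counts(total, train_fraction):
    """Return (train, test) image counts for a given training fraction."""
    train = round(total * train_fraction)
    return train, total - train


def binary_metrics(tp, fp, tn, fn):
    """Compute accuracy, sensitivity (recall) and F-score from confusion counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    sensitivity = tp / (tp + fn)
    precision = tp / (tp + fp)
    f_score = 2.0 * precision * sensitivity / (precision + sensitivity)
    return {"accuracy": accuracy, "sensitivity": sensitivity, "f_score": f_score}


print(split_counts(10605, 0.8))  # (8484, 2121)

# Hypothetical counts, used only to exercise the formulas.
example_metrics = binary_metrics(tp=90, fp=10, tn=85, fn=15)
print(example_metrics)
```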

Table 3 reports the loss, accuracy, F-score and sensitivity values. These values were obtained from a simulation with 50 epochs. The gain in accuracy was investigated by comparing the Adam function, which is used extensively with deep learning architectures, with the other optimizer functions. In the experimental analyses performed on the DenseNet architecture, the SGD optimizer gives more successful results than the other optimizers.

Figure 7 displays the average validation loss values and their standard deviations for the different optimizers applied to the Melanoma dataset with the DenseNet model. The distance between the extreme values in the box plot is very small. This indicates that the performance of the different optimizers on the Melanoma dataset for the DenseNet model is relatively consistent, with minimal variation between the best and worst results. The boxes of the SGD and AdaGrad optimizers are shorter than the other boxes. A shorter box suggests that the results obtained with these optimizers have less variability and are more concentrated around the median value. The whiskers are close to the box for SGD and AdaGrad. This means that the results for these optimizers do not spread far beyond the box, indicating stability in the results. The results of the simulation process created with the InceptionV3 model and seven different optimizer functions are presented in Table 4.
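The box-plot reading used for Figure 7 (box length as spread, whiskers as the range of non-outlying values) can be made concrete with a small calculation. The sketch below uses Python's `statistics.quantiles` and the conventional 1.5 × IQR whisker rule, which is an assumption about how the figures were drawn; the two loss lists are invented values, not data from this study.

```python
import statistics


def box_plot_summary(values):
    """Return the median, quartiles, box length (IQR) and whisker ends."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = [v for v in values if low_fence <= v <= high_fence]
    return {"median": median, "q1": q1, "q3": q3, "iqr": iqr,
            "whisker_low": min(inside), "whisker_high": max(inside)}


# Invented validation-loss values for two hypothetical optimizers.
stable = box_plot_summary([0.20, 0.21, 0.22, 0.23, 0.24])
noisy = box_plot_summary([0.10, 0.25, 0.40, 0.55, 0.90])
print(stable["iqr"], noisy["iqr"])
```

A short box with nearby whiskers, as in the first list, corresponds to the stable behaviour attributed to SGD and AdaGrad above.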

In Table 4, the results of the performance criteria obtained in the classification of melanoma disease with the InceptionV3 model are given. When the results are examined, it is seen that the SGD optimizer is more effective than the other optimizer functions on the InceptionV3 architecture. On this architecture, Adamax comes closest to the results obtained with the SGD optimizer.

Figure 8 displays the average validation loss values and their standard deviations for the different optimizers applied to the Melanoma dataset with the InceptionV3 model. The distance between the extreme values in the box plot is very small. This indicates that the performance of the different optimizers on the Melanoma dataset for the InceptionV3 model is relatively consistent, with minimal variation between the best and worst results. The box of the SGD optimizer is shorter than the other boxes. A shorter box suggests that the results obtained with this optimizer have less variability and are more concentrated around the median value. The whiskers are close to the box for SGD and AdaDelta. This means that the results for these optimizers do not spread far beyond the box, indicating stability in the results. The median value for SGD is in the middle of the box. This indicates that the median performance with this optimizer is relatively central and not skewed towards extreme values. The results for RmsProp are less stable compared to the other optimizers. This is evident from the larger distance between the lower whisker and the box, as well as the median value being far from the middle. These characteristics suggest that RmsProp shows more variability and inconsistency in its performance on the InceptionV3 model. The results of the simulation process created with the ResNet50 model and seven different optimizer functions are presented in Table 5.

In Table 5, the results of the performance criteria obtained in the classification of melanoma disease with the ResNet50 model are given. When the results are examined, it is seen that the SGD optimizer is more effective than the other optimizer functions on the ResNet50 architecture. The AdaGrad optimizer comes closest to the results obtained with the SGD optimizer. The AdaGrad optimizer function is inspired by the SGD optimizer, and the closeness of the results is attributed to their similar structures.

Figure 9 displays the average validation loss values and their standard deviations for the different optimizers applied to the Melanoma dataset with the ResNet50 model. The distance between the extreme values in the box plot is very small. This indicates that the performance of the different optimizers on the Melanoma dataset for the ResNet50 model is relatively consistent, with minimal variation between the best and worst results. The box of the SGD optimizer is shorter than the other boxes. The median value for SGD is in the middle of the box. This indicates that the median performance with this optimizer is relatively central and not skewed towards extreme values. The results of the simulation process created with the InceptionResNetV2 model and seven different optimizer functions are presented in Table 6.

In Table 6, the results of the performance criteria obtained in the classification of melanoma disease with the InceptionResNetV2 model are given. When the results are examined, it is seen that the SGD optimizer is more effective than other optimizer functions on the InceptionResNetV2 architecture. In addition, 0.9434 accuracy, 0.9479 f-score and 0.3457 loss values were obtained with SGD.

Figure 10 displays the average validation loss values and their standard deviations for the different optimizers applied to the Melanoma dataset with the InceptionResNetV2 model. The distance between the extreme values in the box plot is very small. This indicates that the performance of the different optimizers on the Melanoma dataset for the InceptionResNetV2 model is relatively consistent, with minimal variation between the best and worst results. The box of the SGD optimizer is shorter than the other boxes. The results for RmsProp are less stable compared to the other optimizers. This is evident from the larger distance between the lower whisker and the box, as well as the median value being far from the middle. These characteristics suggest that RmsProp, Adam, and Nadam show more variability and inconsistency in their performance on the InceptionResNetV2 model. The results of the simulation process created with the MobileNet model and seven different optimizer functions are presented in Table 7.

In Table 7, the results of the performance criteria obtained in the classification of melanoma disease with the MobileNet model are given. When the results are examined, the AdaGrad optimizer is more effective than the other optimizer functions on the MobileNet architecture. In addition, an accuracy of 0.9323, an F-score of 0.9351 and a loss of 0.2089 were obtained with AdaGrad. The AdaGrad optimizer function was developed with inspiration from the SGD optimizer.

Figure 11 displays the average validation loss values and their standard deviations for the different optimizers applied to the Melanoma dataset with the MobileNet model. The distance between the extreme values in the box plot is very small. This indicates that the performance of the different optimizers on the Melanoma dataset for the MobileNet model is relatively consistent, with minimal variation between the best and worst results. The box of the AdaGrad optimizer is shorter than the other boxes. The results for RmsProp are less stable compared to the other optimizers. This is evident from the larger distance between the lower whisker and the box, as well as the median value being far from the middle. The results of the simulation process created with all the models are presented in Table 8.

In Table 8, the effects of five different transfer learning methods (DenseNet, InceptionV3, ResNet50, InceptionResNetV2 and MobileNet) and seven different optimizer functions (SGD, Adam, RmsProp, AdaDelta, AdaGrad, Adamax and Nadam) on the detection of melanoma disease are examined. It is observed that the best melanoma detection is performed with the DenseNet model and the SGD optimizer. A momentum parameter is added to the structure of the SGD optimizer in order to reduce oscillation and training time compared to other functions. As a result, the SGD optimizer gives higher performance than the other optimizer functions in all deep learning architectures except MobileNet. Table 8 also shows that DenseNet offers the highest performance with 0.9490 accuracy, 0.9492 F-score and 0.1809 loss.

4 Discussion

In deep learning architectures, the learning process is basically expressed as an optimization problem. Optimizer methods are commonly used to find the optimum value in the solution of nonlinear problems. In deep learning applications, optimizer functions such as stochastic gradient descent (SGD), AdaGrad, AdaDelta, Adam, and Adamax are commonly used. There are differences between these functions in terms of performance and speed. When the momentum value is used in the SGD optimizer, it is observed that SGD performs better than the adaptive methods. Performance comparisons of the optimizer functions were carried out with five different models: DenseNet, InceptionV3, ResNet50, InceptionResNetV2 and MobileNet. These models were used to classify the disease in melanoma images with the image processing method. In light of the results obtained, the DenseNet model with the SGD optimizer is more successful than the other methods, with an accuracy of 0.9490. The results of the study were compared with the studies published in the literature in the last two years, as shown in Table 9.

When the studies conducted with different datasets for the same purpose in the literature are examined, it is seen that the results of our study are more accurate than those of most other studies. When the two studies with higher accuracy are examined, it is thought that the number of images in their datasets is very small compared to our study and that the datasets in those studies are not as diverse as desired. In this context, the results obtained reveal that the choice of optimizer function affects the classification performance of transfer learning architectures, which was our main motivation in this study.

Optimizer methods are used to prevent deep learning architectures from oscillating too much during training and to minimize errors. Optimizer processes are structures that can be operated step by step in deep learning architectures. The back-propagation algorithm is used to update parameters in deep learning architectures. In back-propagation, the derivative of the error with respect to each weight is computed, multiplied by the learning rate, and used step by step to calculate the new weights of the architecture. In addition to the learning rate, a momentum value is added to the optimizer functions to reduce the oscillation and perform a more consistent optimization process faster [46]. Thanks to the added momentum parameter, instead of taking the newly produced value as it is, the new value is calculated by combining it with the previous value weighted by the beta coefficient. Thus, noise and oscillations in the training curves are reduced with the SGD optimizer and a faster method is obtained [47,48,49,50].
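The momentum mechanism described above can be sketched as an exponentially weighted blend of the previous update direction and the new gradient. The form below, with the new gradient weighted by (1 - beta), is one common convention and is an assumption here; some libraries instead accumulate the raw gradient. The toy objective and hyperparameters are illustrative.

```python
def sgd_momentum_minimize(grad_fn, w0, learning_rate=0.1, beta=0.9, steps=500):
    """Minimize a one-dimensional function with SGD plus momentum."""
    w = w0
    velocity = 0.0
    for _ in range(steps):
        g = grad_fn(w)
        # Blend the previous direction (weight beta) with the new gradient.
        velocity = beta * velocity + (1.0 - beta) * g
        w -= learning_rate * velocity
    return w


# Toy objective f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w_momentum = sgd_momentum_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_momentum)  # close to 3.0
```

Because each update reuses most of the previous direction, isolated noisy gradients have less influence on the trajectory, which is the smoothing effect referred to above.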

In this study, SGD (Stochastic Gradient Descent) stands out against other optimizers such as AdaGrad, AdaDelta, Adam and Adamax due to its simplicity, speed, generalisability and compatibility with large data sets. Its ability to perform the gradient update on a single randomly selected sample at each iteration, its tendency to reduce overfitting, and its suitability for situations where the entire data set cannot be processed simultaneously due to memory limitations make SGD attractive. Its easy integration with momentum techniques provides a robust alternative in situations where optimizers with adaptive learning rates may be more prone to overfitting. Furthermore, its direct control over hyperparameters such as the learning rate allows researchers to optimize model performance. These features make SGD a preferred optimizer method in deep learning projects.

5 Conclusions

Early diagnosis and classification of melanoma, one of the most common types of cancer, is vital for patients. The physical methods used by dermatologists for diagnosis and classification are not sufficient. In the literature, machine learning and deep learning methods are frequently reported to play an effective role in the diagnosis and classification of the disease. In deep learning architectures, the learning process is basically expressed as an optimization problem. The crucial goal of machine learning in a particular set of scenarios is to create a model that performs effectively and offers accurate predictions; however, optimization strategies are required to do that. Melanoma detection was performed on skin lesion images through a simulation created with seven different optimizer functions, which were used with the deep learning architectures DenseNet, InceptionV3, ResNet50, InceptionResNetV2 and MobileNet. We analyzed the most commonly used optimizers: SGD, Adam, RmsProp, AdaDelta, AdaGrad, Adamax and Nadam. The results of the study show that SGD performs better and more consistently in terms of convergence rate, training speed and performance compared to the other optimization strategies. SGD gives the highest accuracy, 94.90%, when used with the DenseNet algorithm. A limitation of the study is that the experiments were applied only to the melanoma dataset and that only a limited set of deep learning models was used. In future work, new optimizer functions that increase the accuracy and time performance of deep learning architectures will be proposed and tested on different data sets. In addition, the effect of optimizer functions on Vision Transformer architectures will be examined.

Data Availability

The dataset used in the study can be obtained by contacting the corresponding authors of Codella et al. [16] or accessed at https://www.kaggle.com/datasets/kmader/skin-cancer-mnist-ham10000.

References

Poornimaa JJ, Anitha J, Henry AP, Hemanth DJ (2023) Melanoma Classification Using Machine Learning Techniques: Design Studies and Intelligence Engineering. Front Artif Intell Appl 365:178–185

Adepu AK, Sahayam S, Jayaraman U, Arramraju R (2023) Melanoma Classification from Dermatoscopy İmages Using Knowledge Distillation for Highly İmbalanced Data. Comput Biol Med 154(2023):106571

Tembhurne JV, Hebbar N, Patil HY, Diwan T (2013) Skin Cancer Detection Using Ensemble of Machine Learning and Deep Learning Techniques: Multimed Tools Applications. https://doi.org/10.1007/s11042-023-14697-3

**e F, Fan H, Li Y, Jiang Z, Meng R, Bovik A, (2017) Melanoma Classification on Dermoscopy Images Using a Neural Network Ensemble Model: Transactions on Medical Imaging, 36, 3, 849–858, IEEE

Brinker TJ, Hekler A, Enk AH, Berking C, Haferkamp S, Hauschild A, Weichenthal M, Klode J, Schadendorf D, Holland-Letz T, Kalle CV, Fröhling S, Schilling B, Utikal JS (2019) Deep Neural Networks Are Superior to Dermatologists İn Melanoma İmage Classification. Eur J Cancer 119:11–17

Perez F, Avila S, Valle E, (2019) Solo or Ensemble? Choosing a CNN Architecture for Melanoma Classification: CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), IEEE

Naeem, A., Farooq, M.S., Khelifi, A., & Abid, A., (2020). "Malignant Melanoma Classification Using Deep Learning: Datasets, Performance Measurements, Challenges and Opportunities: IEEE Access, 8, (pp. 110575–110597), IEEE

Zhang Y, Wang C, (2021) SIIM-ISIC Melanoma Classification with DenseNet: 2021 2nd International Conference on Big Data, Artificial Intelligence and Internet of Things Engineering (ICBAIE), (pp. 14–17), IEEE

Ramadan R, Aly S (2022) CU-net: a new improved multi-input color U-net model for skin lesion semantic segmentation. IEEE Access 10:15539–15564

Alenezi F, Armghan A, Polat K (2023) A Multi-Stage Melanoma Recognition Framework with Deep Residual Neural Network and Hyperparameter Optimization-Based Decision Support in Dermoscopy Images. Expert Systems with Applications 215

Keerthana, D., Venugopal, V., Nath, M.K., & Mishra, M., (2023). Hybrid Convolutional Neural Networks with SVM Classifier for Classification of Skin Cancer. Biomed Eng Adv, 5

Alenezi F, Armghan A, Polat K (2023) A Novel Multi-Task Learning Network Based on Melanoma Segmentation and Classification with Skin Lesion Images. Diagnostics (Basel) 13(2):262

Bandy AD, Spyridis Y, Villarini B, Argyriou V (2023) Intraclass Clustering-Based CNN Approach for Detection of Malignant Melanoma. Sensors 23(2):926

Abbas Q, Gul A (2023) Detection and Classification of Malignant Melanoma Using Deep Features of NASNet. SN Computer Science 4:21

Azeem M, Kiani K, Mansouri T, Topping N (2023) SkinLesNet: Classification of Skin Lesions and Detection of Melanoma Cancer Using a Novel Multi-Layer Deep Convolutional Neural Network. Cancers 2024(16):108. https://doi.org/10.3390/cancers16010108

Codella, N.C., Gutman, D., Celebi, M.E., Helba, B., Marchetti, M.A., Dusza, S.W., Kalloo, A., Liopyris, K., Mishra, N., Kittler, H. & Halpern, A., (2018). Skin lesion analysis toward melanoma detection: A challenge at the 2017 international symposium on biomedical imaging (isbi), hosted by the international skin imaging collaboration (isic). In 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018) (pp. 168–172). IEEE

Rajagopal, A., Joshi, G. P., Ramachandran, A., Subhalakshmi, R. T., Khari, M., Jha, S., ... & You, J. (2020). A deep learning model based on multi-objective particle swarm optimization for scene classification in unmanned aerial vehicles. IEEE Access, 8, 135383–135393

Srivastava S, Khari M, Crespo R G, Chaudhary G, Arora P (Eds.) (2021) Concepts and real-time applications of deep learning

Yu X, Wang J, Hong QQ, Teku R, Wang SH, Zhang YD (2022) Transfer learning for medical images analyses: A survey. Neurocomputing 489:230–254

Atasever S, Azginoglu N, Terzi DS, Terzi R (2023) A comprehensive survey of deep learning research on medical image analysis with focus on transfer learning. Clin Imaging 94:18–41

Tang H, Cen X, (2021) A Survey of Transfer Learning Applied in Medical Image Recognition, 2021 IEEE International Conference on Advances in Electrical Engineering and Computer Applications (AEECA), Dalian, China. 94–97, https://doi.org/10.1109/AEECA52519.2021.9574368

Ou L, & Zhu K, (2022) Identification Algorithm of Diseased Leaves based on MobileNet Model: 2022 4th International Conference on Communications, Information System and Computer Engineering (CISCE), Shenzhen, China, (pp. 318–321)

Penmetsa AV, Sarma TH (2022) Crop Type and Stress Detection using Transfer Learning with MobileNet: 2022 International Conference on Emerging Techniques in Computational Intelligence (ICETCI), Hyderabad, India, (pp. 1–4)

Zhang K, Guo Y, Wang X, Yuan J, Ding Q (2019) Multiple Feature Reweight DenseNet for Image Classification. IEEE Access 7:9872–9880

Nugroho A, Suhartanto H (2020) Hyper-Parameter Tuning based on Random Search for DenseNet Optimization: 2020 7th International Conference on Information Technology, Computer, and Electrical Engineering (ICITACEE), (pp. 96–99)

Yousef R, Gupta G, Yousef N, Khari M (2022) A holistic overview of deep learning approach in medical imaging. Multimedia Syst 28(3):881–914

Raihan M, Suryanegara M, (2021) Classification of COVID-19 Patients Using Deep Learning Architecture of InceptionV3 and ResNet50: 2021 4th International Conference of Computer and Informatics Engineering (IC2IE), (pp. 46–50)

Abhange N, Ga S, Paygude S, (2021) COVID-19 Detection Using Convolutional Neural Networks and InceptionV3: 2021 2nd Global Conference for Advancement in Technology (GCAT), (pp. 1–5)

Narin A, Kaya C, Pamuk Z (2021) Automatic detection of coronavirus disease (COVID-19) using X-ray images and deep convolutional neural networks. Pattern Anal Applic 24:1207–1220

Jethwa N, Gabajiwala H, Mishra A, Joshi P, Natu P, (2021) Comparative Analysis between InceptionResnetV2 and InceptionV3 for Attention based Image Captioning: 2021 2nd Global Conference for Advancement in Technology (GCAT), (pp. 1–6)

Guefrechi S, Jabra MB, Hamam H (2022) Deepfake video detection using InceptionResnetV2: 2022 6th International Conference on Advanced Technologies for Signal and Image Processing (ATSIP), (pp. 1–6)

Ser G, Bati CT (2019) Derin Sinir Ağları ile En İyi Modelin Belirlenmesi: Mantar Verileri Üzerine Keras Uygulaması. Yuzuncu Yıl University Journal of Agricultural Sciences 29(3):406–417

Özdemir, M.F., Arı, A., & Hanbay, D., (2021). Çoklu Nesne Takibi FairMOT Algoritması İçin Optimizasyon Algoritmalarının Karşılaştırılması: Computer Science, 5th International Artificial Intelligence and Data Processing symposium, 147–153

Seyyarer E, Karci A, Ateş A (2022) Stokastik ve deterministik hareketlerin optimizasyon süreçlerindeki etkileri. Gazi Üniversitesi Mühendislik Mimarlık Fakültesi Dergisi 37(2):949–966

Zaheer R, Shaziya H, (2019) A Study of the Optimization Algorithms in Deep Learning: Third International Conference on Inventive Systems and Control (ICISC), (pp. 536–539)

Hadipour-Rokni R, Asli-Ardeh EA, Jahanbakhshi A, paeen-Afrakoti IE, Sabzi S (2023) Intelligent Detection of Citrus Fruit Pests Using Machine Vision System and Convolutional Neural Network Through Transfer Learning Technique: Computers in Biology and Medicine, 155

Kale RS, Shitole S (2022) Deep learning optimizer performance analysis for pomegranate fruit quality gradation: Bombay Section Signature Conference (IBSSC), (pp. 1–5), IEEE

Taqi AM, Awad A, Al-Azzo F, Milanova M, (2018) The Impact of Multi-Optimizers and Data Augmentation on TensorFlow Convolutional Neural Network Performance: Conference on Multimedia Information Processing and Retrieval (MIPR), (pp. 140–145), IEEE

Şen SY, Ozkurt N (2020) Convolutional Neural Network Hyperparameter Tuning with Adam Optimizer for ECG Classification: Innovations in Intelligent Systems and Applications Conference (ASYU), (pp. 1–6)

Karadağ B, Arı A, Karadağ M (2021) Derin Öğrenme Modellerinin Sinirsel Stil Aktarımı Performanslarının Karşılaştırılması. Politeknik Dergisi 24(4):1611–1622

Xiao B, Liu Y, Xiao B (2019) Accurate State-of-Charge Estimation Approach for Lithium-Ion Batteries by Gated Recurrent Unit with Ensemble Optimizer. IEEE Access 7:54192–54202

Sun J, Li P, Wu X, (2022) Handwritten Ancient Chinese Character Recognition Algorithm Based on Improved Inception-ResNet and Attention Mechanism: 2nd International Conference on Software Engineering and Artificial Intelligence (SEAI), (pp. 31–35), IEEE

Panigrahy S, Karmakar S, Sahoo R, (2021) Condition Assessment of High Voltage Insulator using Convolutional Neural Network: International Conference on Electronics, Computing and Communication Technologies (CONECCT), (pp. 1–6), IEEE

Ariff NAM, Ismail AR (2023) Study of Adam and Adamax Optimizers on AlexNet Architecture for Voice Biometric Authentication System: 17th International Conference on Ubiquitous Information Management and Communication (IMCOM), (pp. 1–4)

Landro N, Gallo I, La Grassa R (2020) Mixing ADAM and SGD: A combined optimization method. arXiv preprint arXiv:2011.08042

Wilson AC, Roelofs R, Stern M, Srebro N, Recht B (2017) The marginal value of adaptive gradient methods in machine learning. Adv Neural Inf Process Syst, 30

Mengüç, K., Aydin, N., & Ulu, M. (2023). Optimisation of COVID-19 vaccination process using GIS, machine learning, and the multi-layered transportation model. Int J Prod Res, 1–14

Huang S, Arpaci I, Al-Emran M, Kılıçarslan S, Al-Sharafi MA (2023) A comparative analysis of classical machine learning and deep learning techniques for predicting lung cancer survivability. Multimed Tools Appl 82(22):34183–34198

Bülbül MA (2024) Optimization of artificial neural network structure and hyperparameters in hybrid model by genetic algorithm: iOS–android application for breast cancer diagnosis/prediction. J Supercomput 80(4):4533–4553

Diker A, Elen A, Közkurt C, Kılıçarslan S, Dönmez E, Arslan K, Kuran EC (2024) An effective feature extraction method for olive peacock eye leaf disease classification. Eur Food Res Technol 250(1):287–299

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kılıçarslan, S., Aydın, H.A., Adem, K. et al. Impact of optimizers functions on detection of Melanoma using transfer learning architectures. Multimed Tools Appl (2024). https://doi.org/10.1007/s11042-024-19561-6

DOI: https://doi.org/10.1007/s11042-024-19561-6