Abstract

The selection of well-conditioned sub-matrices is a critical concern in problems across multiple disciplines, particularly those demanding robust numerical stability. This research introduces an innovative, AI-assisted approach to sub-matrix selection, aimed at enhancing the form-finding of reticulated shell structures under the xy-constrained Force Density Method (also known as Thrust Network Analysis), using independent edge sets. The goal is to select a well-conditioned sub-matrix within a larger matrix with an inherent graph interpretation where each column represents an edge in the corresponding graph. The selection of ill-conditioned edges poses a significant challenge because it can render large segments of the parameter space numerically unstable, leading to numerical sensitivities that may impede design exploration and optimisation. By improving the selection of edges, the research assists in computing a pseudo-inverse for a critical sub-problem in structural form-finding, thereby enhancing numerical stability. Central to the selection strategy is a novel combination of deep reinforcement learning based on Deep Q-Networks and geometric deep learning based on CW Network. The proposed framework, which generalises across a trans-topological design space encompassing patterns of varying sizes and connectivity, offers a robust strategy that effectively identifies better-conditioned independent edges leading to improved optimisation routines with the potential to be extended for sub-matrix selection problems with graph interpretations in other domains.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

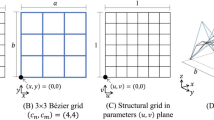

Research on shell form-finding has grown recently [1,2,3,4,5] driven by the need and opportunities to design free-form or efficient structural systems. Structural form-finding is a process used in architecture and engineering to determine a structure's optimal shape and form under an envelope of applied loads [6]. This work focuses on the form-finding of reticulated equilibrium shell structures (RESS). Figure 1 shows that they are discrete networks form-found from structural patterns—a process whereby the patterns’ nodes are equilibrated into 3-D space, resulting in RESS designs that achieve equilibrium against applied loads strictly through axial structural action.

Thrust Network Analysis is a variant of the Force Density Method that performs form-finding based on constrained vertical projection, map** patterns \({\varvec{\Gamma}}\) into designs with equilibrated force distributions \({{\varvec{\Gamma}}}^{\star }\) and surface geometries \(\mathcal{S}\), resulting in equilibrated networks \(\mathbf{G}\)

An overview of form-finding algorithms for reticulated structures and their computational performance is provided in [7]; they include Geometric Stiffness Methods, Stiffness Matrix Methods, and Dynamic Equilibrium Methods. This work supports the first family of methods, which are material-independent and usually rely on the Force Density Method (FDM) [8]. FDM enables the linearisation of the equilibrium equations of reticulated shell structures by introducing the force density ratio as the quotient among the force in a member and its length.

Many applications of FDM exist for structural form-finding, including conceptual design [9,10,11,12,13], masonry assessment [14,15,16,17] and graphic statics [18,19,20]. These applications are often combined with optimisation procedures: common objectives include finding equilibrium solutions that ‘best-fit’ a target geometry [20], minimise material or load path [13] and minimise energy [21,22,23]. More recently, researchers developed interactive form-finding tools to offer designers real-time feedback between edits on a system’s forces and its equilibrium geometry [24,25,26], combining the (constrained) form-finding algorithms with data-driven approaches [27,28,29], and develo** filters to modify global properties instead of local forces [30, 31].

This work enhances a particular constrained adaptation of the FDM based on fixed vertical projection called Thrust Network Analysis (TNA), which has found extensive applications in multiple fields. These include masonry analysis [15, 32], constrained best-fit [14], and planar structural analysis [33]. Constraining the planar projection of networks is useful in many aspects to ease fabrication and aesthetics, or to model specific features of existing buildings.

Irrespective of the form-finding algorithms used, most frameworks for designing reticulated shell structures are paired with optimisation to enable the solving of inverse form-finding problems. In inverse problems, designers are interested in achieving particular performance goals (e.g., structural geometry, length equalisation, material minimisation) by modifying the forces in a system, requiring the inverse of the problem’s equilibrium matrices. For this operation, ill-conditioned matrices cause numerical instability, leading to numerical sensitivities that may significantly impede design exploration and optimisation.

In the case of TNA, the geometric constraints reflect reduced degrees of freedom (DOFs) in the systems. One way to represent this reduction in DOF is by selecting a subset of edges in a pattern \({\varvec{\Gamma}}\), also called independent edges or \({E}_{{\text{I}}}\), whose forces can uniquely characterise the entire equilibrium design space for the given pattern. Selecting \({E}_{{\text{I}}}\) corresponds to selecting a subset of linearly dependent columns from the problem’s equilibrium matrix \(\mathbf{E}\) such that the remaining linearly independent matrix can be inverted for form-finding. Mathematically, the remaining matrix should be well-conditioned and full rank so that different analyses can be performed on the problem.

Removing linearly dependent columns in a matrix and ensuring that the remaining portion of the matrix is well-conditioned, however, is a hard sequential and combinatorial problem. The prevalent body of work in column subset selection for large matrices focuses on finding a representative subset of columns from the original matrix for low-rank approximation or least-squares approximation problems. Extracting relevant sub-matrices can improve model performance, achieve dimensionality reduction, and therefore reduce computational complexity and enable more efficient and targeted analysis [34]. Substantial research has investigated randomised methods. For example, the Nystrom method randomly selects a subset of columns or rows, ho** that the chosen subset captures the structure of the original data [35]. See [34] for an extensive investigation of algorithms for similar tasks. These algorithms are highly effective; however, their formulation depends on properties specific to approximation problems. Goreinov et al. [36] provides the only research considering the selection of well-conditioned non-singular sub-matrix as key criteria. However, it requires matrix determinants to be computed and can therefore support only square matrices. Selection strategies for general matrices remain an open question.

This paper revisits this problem by proposing a novel combination of deep reinforcement learning (DRL) based on Deep Q-Networks (DQN) [37] and geometric deep learning (GDL) based on CW Network to automate or direct selection of a pattern’s DOFs, leading to more well-conditioned form-finding. As mentioned, form-finding with constrained vertical projection using TNA is considered. Improving the selection of \({E}_{{\text{I}}}\) assists in the computation of pseudo-inverses for a critical sub-problem of TNA, thereby enhancing its numerical stability. The proposed framework generalises across a trans-topological design space encompassing patterns of varying sizes and connectivity, offering a robust strategy with potential implications for sub-matrix selection problems with graph interpretations in other domains.

Overall, this paper is organised to deepen knowledge of the complexities of the independent edge selection problem and to provide a DRL-assisted remedy. Accordingly, Sect. 2 mathematically reviews fundamental expressions of FDM and TNA using independent edge sets. Next, Sect. 3 presents current approaches for selecting independent edges, describing the characteristics of the edge selection problem and relating them to structural theory and geometric intuition. Building on these concepts, Sect. 4 investigates the effects of ill-conditioning in edge selection to design exploration and optimisation algorithms. Moving towards implementation, Sect. 5 defines a trans-topological design generator to generate training data, Sect. 6 articulates the DRL framework developed in this work to solve the selection problem, and Sect. 7 summarises the trained model’s independent edge selections. Lastly, Sects. 8 and 9 respectively offer a brief discussion and summarise the findings and contributions of this work.

2 Mathematical formulation of force density method

2.1 Equilibrium of reticulate structures using force densities

An equilibrium design or network is defined as \(\mathbf{G}:= \{V, E,F\}\), with \(V, E\) and \(F\) denoting respectively the set of \(\left|V\right|\) vertices, \(\left|E\right|\) edges, and \(\left|F\right|\) faces. The vertex set \(V\) in turn is a combination of \(\left|{V}_{{\text{N}}}\right|\) internal, unsupported or free vertices, and \(\left|{V}_{{\text{F}}}\right|\) support or fixed vertices.

The central quantity of FDM is the edge-wise force density parameter \({q}_{e}={f}_{e}/{l}_{e}\), calculated as the ratio between the force \({f}_{e}\) in an edge \(e\) of the network and its length \({l}_{e}\) cast in the vector \({\mathbf{q}}^{\mathbf{e}} [\left|E\right|\times 1]\). The nodal positions of \(\mathbf{G}\) are cast in the vectors \({\mathbf{x}}^{\mathbf{v}},{\mathbf{y}}^{\mathbf{v}},{\mathbf{z}}^{\mathbf{v}}[|V|\times 1]\) and the applied nodes in each direction are collected in \({\mathbf{p}}_{\mathbf{x}}^{\mathbf{v}},{\mathbf{p}}_{\mathbf{y}}^{\mathbf{v}},{\mathbf{p}}_{{\text{z}}}^{\mathbf{v}} [|V|\times 1]\). Assuming the sparse connectivity matrix \(\mathbf{C} [\left|E\right|\times \left|V\right|]\) after [8], and map** the topology of the network, the \(3{\cdot V}_{{\text{N}}}\) equilibrium equations can be written as follows:

where \(\mathbf{U}={\text{diag}}\left(\mathbf{C}{\mathbf{x}}^{\mathbf{v}}\right),\mathbf{V}={\text{diag}}\left(\mathbf{C}{\mathbf{y}}^{\mathbf{v}}\right),\mathbf{W}={\text{diag}}\left(\mathbf{C}{\mathbf{z}}^{\mathbf{v}}\right).\) All three matrices have sizes of \([\left|E\right| \times \left|E\right|]\), and the subscripts ‘N’ and ‘F’ represent the slicing of vectors and matrices with regards to the free nodes and fixed nodes, respectively.

These equations are rewritten to determine the internal coordinates in the equilibrated network \({\mathbf{x}}_{\mathbf{N}}^{\mathbf{v}},{\mathbf{y}}_{\mathbf{N}}^{\mathbf{v}},{\mathbf{z}}_{\mathbf{N}}^{\mathbf{v}}\boldsymbol{ }[\left|{V}_{{\text{N}}}\right|\times 1]\) in relation to the edge force densities \({\mathbf{q}}^{\mathbf{e}}\), and the position of the support vertices \({\mathbf{x}}_{\mathbf{F}}^{\mathbf{v}},{\mathbf{y}}_{\mathbf{F}}^{\mathbf{v}},{\mathbf{z}}_{\mathbf{F}}^{\mathbf{v}} [\left|{V}_{{\text{F}}}\right|\times 1]\), as follows:

where \({\mathbf{D}}_{\mathbf{N}}={\mathbf{C}}_{\mathbf{N}}^{\mathbf{T}}\mathbf{Q}{\mathbf{C}}_{\mathbf{N}} \left[\left|{V}_{{\text{N}}}\right| \times \left|{V}_{{\text{N}}}\right|\right],{\mathbf{D}}_{\mathbf{F}}={\mathbf{C}}_{\mathbf{N}}^{\mathbf{T}}\mathbf{Q}{\mathbf{C}}_{\mathbf{F}} \left[\left|{V}_{{\text{N}}}\right| \times \left|{V}_{{\text{F}}}\right|\right],\) and \(\mathbf{Q}={\text{diag}}\left({\mathbf{q}}^{\mathbf{e}}\right) \left[\left|E\right| \times \left|E\right|\right].\)

With this formulation, general form-finding problems can be tackled, relating the geometry of the structure (i.e. nodal coordinates) to its distribution of edge force densities \({\mathbf{q}}^{\mathbf{e}}\).

2.2 Networks with fixed horizontal projection

In this work, networks with fixed vertical projections based on the principles of TNA are considered. The projection corresponds to a planar, connected graph called the form diagram \(({\varvec{\Gamma}})\). As a consequence, the horizontal equilibrium equations (Eqs. (1) and (2)) are rearranged, introducing the horizontal equilibrium matrix \(\mathbf{E}=[{\mathbf{C}}_{\mathbf{N}}^{\mathbf{T}}\mathbf{U};{\mathbf{C}}_{\mathbf{N}}^{\mathbf{T}}\mathbf{V}]\) with size \([2\left|{V}_{{\text{N}}}\right| \times \left|E\right|]\) and the applied horizontal internal forces \({\mathbf{p}}_{\mathbf{H}}=\left[{\mathbf{p}}_{\mathbf{x},\mathbf{N}}^{\mathbf{v}};{\mathbf{p}}_{\mathbf{y},\mathbf{N}}^{\mathbf{v}}\right]\) with size \([2\left|{V}_{{\text{N}}}\right|\times 1]\), where \(\left[\mathbf{A};\mathbf{B}\right]\) indicates the row-wise (vertical) concatenation of matrices A and B. These terms allow the admissible edge force densities \({\mathbf{q}}^{\mathbf{e}}\) preserving fixed vertical projection to be expressed as a linear constraint, belonging to the null-space of the matrix E:

This system of equations is generally under-determined. By exploiting the structure of the null space of \(\mathbf{E}\), the force densities are re-parametrised and written as a function of k independent force densities \({\mathbf{q}}_{\mathbf{I}}^{\mathbf{e}}\) as follows:

where \({\mathbf{I}}_{|E_{\text{I}}|}\) is the identity matrix of size [\(\left|E_{\text{I}}\right|\times\left|E_{\text{I}}\right|]\), \({\mathbf{E}}_{\mathbf{D}}\) and \({\mathbf{E}}_{\mathbf{I}}\) are slices of related to the dependent and independent edges, respectively, and \({\mathbf{E}}_{\mathbf{D}}^{\dagger}\) is the generalised inverse (also known as Moore–Penrose pseudo-inverse) of \({\mathbf{E}}_{\mathbf{D}}\).

Through this formulation, the infinite geometry space of spatial networks for a given form \({\varvec{\Gamma}}\) can be explored. The elevation of the free nodes in the network \({\mathbf{z}}_{\mathbf{N}}^{\mathbf{v}}\) is then described by Eq. (6), solely as a function of \({\mathbf{q}}_{\mathbf{I}}^{\mathbf{e}}\) and the support heights \({\mathbf{z}}_{\mathbf{N}}^{\mathbf{v}}\).

2.3 Independent edges as the null-space of the equilibrium matrix

The transformation adopted in Eq. (8) allows for exploring the network equilibrium while kee** the form diagram projection \({\varvec{\Gamma}}\) unchanged. Crucially, it also introduces and requires a variable reduction step, namely the selection of independent edges \({E}_{{\text{I}}}\) enabling the slicing of \(\mathbf{E}\) into \({\mathbf{E}}_{\mathbf{D}}\) and \({\mathbf{E}}_{\mathbf{I}}\). The number of independent force density parameters \(k\) that can be chosen freely in Eq. (8) corresponds to the rank deficiency of the matrix E, or in other words, the dimension of the null-space of matrix E. It is computed for the networks as:

Given the matrix construction adopted in this work, each column of E relates to one specific edge in \({\varvec{\Gamma}}\) according to the ordering assumed when \({\varvec{\Gamma}}\) was generated. Therefore, the selected group of k independent edges relates to the independent force densities \({\mathbf{q}}_{\mathbf{I}}^{\mathbf{e}}\), or in other words, to the k columns of the null-space of E, as follows:

Hence, selecting independent edges \({E}_{{\text{I}}}\) correspond to selecting the largest set of \(k\) columns belonging to the null-space of the equilibrium matrix \(\mathbf{E}\). This necessarily follows that selecting \({E}_{{\text{I}}}\) enables \({\mathbf{E}}_{\mathbf{D}}\) to be non-singular, invertible and equal in rank as \(\mathbf{E}\), thereby defining the criteria required for valid selection of \({E}_{{\text{I}}}\). There are multiple ways in which the null-space of a matrix can be determined, and the selection of columns in the null-space is not unique. Consequently, multiple independent edge set selections are possible for a given topology, as discussed in [38] and further explored in Sect. 3.

3 On the selection of independent edges for structural topologies

Existing strategies for selecting independent edges are articulated in this section. The structural meaning of the sets of independent edges is subsequently discussed and presented in various networks. Together, these insights elucidate the characteristics of the edge selection problem that renders it difficult to solve. Of particular interest are the two edge selection criteria of validity and optimality.

3.1 An algorithm to select independent edges

3.1.1 Heuristics-based approaches for select pattern types

Selection heuristics are available for several canonical topologies. Figure 2 highlights a valid set of independent edges for these network topologies, which include (a) an orthogonal and grid-based pattern, (b) a circular or radial pattern, (c) a cross-grid diagram, and (d) a three-sided diagram. Their structural meaning, as shown for each topology, is discussed below:

-

(a)

In the continuously supported orthogonal grid (Fig. 2a), there exists one independent edge per group of continuous edges. The following subsections provide a geometrical interpretation of this heuristic.

-

(b)

The circular radial topology is composed of circular closed hoops and meridional segments converging to the centre; there are 3 and twelve of each, respectively, in the example depicted in Fig. 2b. One independent per hoop is observed, and one independent for all but two meridians converging to the centre (i.e., 10 independents for 12 meridians). One independent per hoop is necessary to control the axial force in the hoop, while one independent per meridian is necessary to distribute the loads to the supports. However, not all meridian forces can be freely chosen as they converge to a single point where equilibrium must be ensured. In fact, at the centre, \(n=12\) segments converge to the singular point, and \((n-2)\) DOFs are observed.

-

(c)

For the corner-supported cross-grid diagram of Fig. 2c, one independent exists at each of the four boundaries, behaving independently of the rest of the structure as they connect directly to two support points. Two independents exist among the four diagonal segments connecting the supports to the singular point at the pattern’s centre, verifying the \((n-2)\) rule stated above. Additionally, one independent edge exists per continuous closed strip, analogous to the closed hoops observed in Fig. 2b.

-

(d)

Finally, for the three-sided diagram in Fig. 2d, one independent edge exists at each boundary of the pattern, where continuous polylines connect two supports. There is also one independent edge for the three continuous segments converging to the pattern’s central singularity, following the \((n-2)\) rule.

3.1.2 General rank-based approach

For non-standard patterns encountered in real-life structural design, a number of linear algebra techniques can be applied to select the null-space of matrices, including Gauss-Jordan Elimination [38] and sequential matrix decompositions [32]. The latter is used in this work by minimising rounding errors. The method finds the independent edges—or columns from the null-space— through a sequential Singular Value Decomposition (SVD) approach. In this sequential method, the matrix \(\mathbf{E}\) is reconstructed column-by-column. Each time a column is added, the matrix rank is checked through SVD. After this addition, if the rank of the matrix does not increase, the column can be inferred as belonging to the null-space of \(\mathbf{E}\), and its corresponding edge can be labelled as independent. This work similarly uses this technique to evaluate the validity of independent edge choices (see Sect. 6.3.1).

3.2 Characteristics of the independent edge selection problem

3.2.1 Validity in independent edge set selection understood geometrically

The validity of independent edge selection in a grid such as the one described in Fig. 2a can be interpreted intuitively via geometric representation of a design’s internal force distribution. Figure 3a depicts a simple orthogonal \(3\times 3\) grid-based form diagram \({\varvec{\Gamma}}\) and presents two (attempted) selections of independent edges around the highlighted vertex \({v}_{8}\). Each selection involves two independent edges and their magnitudes are independently adjusted. The implications of the imposed changes are shown to the right in each case as a force polygon \({{\varvec{\Gamma}}}_{{f}^{\mathbf{*}}={v}_{8}}^{\mathbf{*}}\). Each edge \({e}^{\star }\) of \({{\varvec{\Gamma}}}_{{f}^{\mathbf{*}}={{\varvec{v}}}_{8}}^{\mathbf{*}}\) corresponds to the force vector of an edge \(e\) in \({\varvec{\Gamma}}\) around \({v}_{8}\): the length of \({e}^{\star }\) denotes force magnitude and the orientation of both \(e\) and \({e}^{\star }\) must be aligned for equilibrium to be satisfied.

Interpretation of independent edge (subset) validity around vertex \({v}_{8}\) by illustrating the force polygon \({{\varvec{\Gamma}}}_{{{f}^{\star }=v}_{8}}^{\star }\) created by its edge force vectors. The figure shows (a) an invalid selection of (\({e}_{6},{e}_{11}\)) whereby independently assigned force values would break the parallelism between force vectors and their pattern edge counterparts; and (b) a valid selection (\({e}_{9},{e}_{11}\)) that maintains the parallelism between the force vectors in \({{\varvec{\Gamma}}}_{{{f}^{\star }=v}_{8}}^{\star }\) and their associated pattern edges for any independently assigned force values

For this diagram, the edges \({e}_{8}\) and \({e}_{9}\) are co-linear and cannot be both selected as independent edge, since assigning their force values independently will break the equilibrium along the y-direction (Fig. 3IIa). Alternatively, it is possible to select \({e}_{9}\) and \({e}_{11}\); their force magnitude can be adjusted freely and be balanced directly by changes to the unselected edges \({e}_{6}\) and \({e}_{8}\). This in turn explains the heuristics developed in Fig. 2a, whereby a single edge is selected from each group of co-linear continuous edges as they are independent of one another.

3.2.2 Sequential and combinatorial complexity

It follows from the discussion in Fig. 3 that independent edge selection is both combinatorically and sequentially complex. Each new edge selection directly prescribes the space of valid edges that may be selected in the subsequent step. For example, while it is possible to select one edge from \({e}_{8}\), \({e}_{9}\) or \({e}_{10}\), selecting any of the three edges will remove the edges \({e}_{9}\) and \({e}_{10}\), \({e}_{8}\) and \({e}_{10}\), and \({e}_{8}\) and \({e}_{9}\) from the selection space, respectively, in all subsequent steps.

Figure 4 explains these concepts for a 2-D planar four-bar structure subjected to horizontal forces, showing all possible independent edge selection sequences. Since the central node is surrounded by two sets of perpendicular edge sequences, this system possesses two independent lines of force. A valid edge set must therefore consist of only one edge along the x-direction and another along the y-direction. Consequently, once an edge aligned to the x-direction is selected, the second edge must necessarily be an edge aligned to the y-direction, and vice versa—underscoring the problem’s sequential and combinatorial complexity. Attempting to select two edges along the same direction will create two linearly independent columns in \({\mathbf{E}}_{\mathbf{D}}\), rendering it singular.

The total valid edge set for a given pattern cannot be determined analytically. Nonetheless, the size of the valid edge set corresponding to any problem can be inferred from the pattern’s closest fitting structured grid-based pattern. For example, a boundary-supported \(N\times N\) grid-based pattern possesses a total of \(|E|\)= \(2\cdot N\left(N-1\right)\) of edges, an independent edge set size of \(\left|{E}_{{\text{I}}}\right|=2\cdot \left(N-1\right)\), and the total valid edge set selection is \({N}^{2\cdot (N-1)}\). The problem therefore has an exponential complexity of \(O\left({N}^{2N}\right)\). Consider the example in Fig. 4 with \(N=3\), \(|E|=12\), \({\text{rank}}(\mathbf{E})=8\) and, therefore, \(\left|{E}_{{\text{I}}}\right|=4\), the size of valid edge sets in this case is \({3}^{4}=81.\) If \(N=10\), the number of valid selections is \({10}^{18}\). Thus, the total number of valid edge sets becomes intractable to manage even at moderate pattern sizes, reinforcing the need for selection support.

3.2.3 Optimality in valid independent edge selection based on sensitivity

While edge selection is straight-forward in orthogonal configurations like Fig. 4, complications arise when spatial irregularity is introduced. Figure 5 presents three 12-bar designs with the same connectivity as Fig. 3; each design differs only in the magnitude by which \({v}_{8}\) is translated towards the centre of each design’s domain.

Geometric interpretation on edge equilibrium force sensitivities: this figure illustrates the sensitivity of changes in forces of independent edges \({E}_{{\text{I}},i}\) to those of the dependent edges \({E}_{{\text{D}},i}\) for three local independent edges (subset) selections around vertex \({v}_{8}\), or (A–C). Row (I) shows the three selections of \({E}_{{\text{I}},i}\); and row (II) draws the closed equilibrated force polygon around \({v}_{8}\), or \({{\varvec{\Gamma}}}_{{{f}^{\star }=v}_{8}}^{\star }\), for each independent edge subset. Each example fixes the force of one independent edge (marked ⚓) and imposes a + 10% change on the other (marked Δ), intuitively depicting the required changes to \({E}_{{\text{D}},i}\) to restore equilibrium through the change in the area of \({{\varvec{\Gamma}}}_{{{f}^{\star }=v}_{8}}^{\star }\)

The principal effect of the geometric change is the loss of orthogonality of the diagram depicted initially in Fig. 4, and therefore the co-linearity of \({e}_{6}\) and \({e}_{11}\), and of \({e}_{8}\) and \({e}_{9}\). This in turn enables both pairs of edges to be selected as independent edges with independently assigned values. Figure 5II represents the edge forces around \({v}_{8}\). It shows that the forces of \({e}_{6}\) and \({e}_{8}\) are no longer exclusively balanced by \({e}_{11}\) and \({e}_{9}\), respectively, and vice versa. This contrasts with the orthogonal configuration depicted in Fig. 4.

While the edge selection space has expanded, different edge selections correspond to different force sensitivities. To illustrate, consider that the edge orientations are highly aligned for \({e}_{6}\) and \({e}_{11}\) and also for \({e}_{8}\) and \({e}_{9}\). This implies that within each set, independently assigned changes to any one of its edges would most effectively, or proportionally, be equilibrated by changes to the remaining unselected edge within the same set. Consequently, choosing both edges from either \({e}_{6}\) and \({e}_{11}\), or \({e}_{8}\) and \({e}_{9}\) as independent edges would lead to ill-conditioned behaviour. This is visualised in Fig. 5, which presents three subsets of independent edges \({E}_{{\text{I}},i}\). All subsets include \({e}_{6}\) as an independent edge and impose on it a force value increase of 10%. In parallel, the three examples require the force value of a second independent edge, namely (A) \({e}_{11}\), (B) \({e}_{8}\), and (C) \({e}_{9}\) to stay fixed. It can be observed in Fig. 5A that independent changes assigned to both \({e}_{6}\) and \({e}_{11}\) require disproportionally larger changes from \({e}_{8}\) and \({e}_{9}\). Thus, certain selections exaggerate numerical sensitivity or ill-conditioning. This captures the primary edge selection criteria of optimality that provides the central motivation of this work.

The degree of optimality associated with any edge set \({E}_{{\text{I}}}\) is ultimately determined by the entire Euclidian configuration of a pattern \({\varvec{\Gamma}}\) and a clear distinction between validity and optimality cannot always be made. For example, Fig. 6 depicts intermediate patterns bridging the orthogonal and deformed configurations of respectively Figs. 3 and 5. It repeats the previous exercise of Fig. 5A, imposing force change and fixities on respectively \({e}_{6}\) and \({e}_{11}\). As the patterns from Fig. 6a–d become increasingly orthogonal, the magnitudes of the required changes on the unselected edges \({e}_{8}\) and \({e}_{9}\) also increase disproportionally. As such, the invalidity of \({e}_{6}\) and \({e}_{11}\) in Fig. 3 may be viewed as an infinitely sensitive selection. The subsequent subsections explain the challenges posed by highly sensitive, or ill-conditioned, independent edge choices to numerical form-finding tasks.

Geometric interpretation on edge equilibrium force sensitivities: this figure illustrates the sensitivity of changes in forces of independent edges \({E}_{{\text{I}},i}:=\{{e}_{6}, {e}_{11}\}\) to those of the dependent edges \({E}_{{\text{D}},i}:=\{{e}_{8}, {e}_{9}\}\) for I patterns {\({{\varvec{\Gamma}}}_{i}:1<i<4\)} embedding varying internal spatial deformation around vertex \({v}_{8}\). As the patterns become more orthogonal from a to d, row II illustrates that the required changes to the force polygon of vertex \({v}_{8}\), or \({{\varvec{\Gamma}}}_{{{f}^{\star }=v}_{8}}^{\star }\), also increases

4 Numerical consequences of independent edge selection

This section builds on the theory and properties of the independent edge selection problem developed in the preceding section and discusses the consequences of edge set selection to numerical design space navigation and optimisation algorithms.

4.1 Relation with conditioning & implication to design space

As presented in Sect. 3.2.3, changing the geometry of the network impacts the validity and optimality of independent edge choices. For designs with larger and deeper underlying graphs, independent edge choices and both force and geometry sensitivities interact in unpredictable ways. Different sets \({E}_{{\text{I}}}\) can result in global design spaces with vastly different characteristics that may either facilitate or impede design navigation.

The implication of edge set selection to the characteristics of the design space is studied in Fig. 7 in relation to matrix conditioning. In numerical linear algebra, the conditioning number of a matrix quantifies how sensitive the matrix is to perturbations. Given a matrix X and its ordered singular values \({\sigma }_{1},{\sigma }_{2},\dots ,{\sigma }_{n}\), which are ordered such that \({\sigma }_{1}\ge {\sigma }_{2}\ge \dots \ge {\sigma }_{n}>\upepsilon \), the conditioning number of X is written as:

where \({\sigma }_{1}\) and \({\sigma }_{n}\) correspond to the largest and smallest non-zero singular values of \(\mathbf{X}\), according to some numerical tolerance \(\upepsilon \). The conditioning value provides a ratio of how much the output can be stretched relative to the input in the worst-case scenario, with a low conditioning number being good and a high conditioning number indicating potential numerical sensitivity to small changes in the inputs.

Diagram relating the 0 selection of independent edge set \({E}_{{\text{I}},i}\) to I matrix conditioning and II the quality of the design space for the III 4-DOF reticulated shell structure \(\mathbf{G}\) depicted. Results are evaluated based on the criteria of (i) numerical convergence; (ii) constraint satisfaction of the compression-only requirement; and (iii) numerical sensitivity

Figure 7 visualises a 4-DOF design space associated with an input design with structured quadrilateral pattern. The initial design covers a bounding box of 3 × 3 on the xy-plane, measuring with a unit height of \(1.0\) with edge force density range of \(-1.4\le \) \({q}_{e}\le -0.4\), providing a practical span-to-height ratio of 3:1. Four possible edge sets are displayed under column II; they correspond to selection with condition value \(\upkappa \left({\mathbf{E}}_{\mathbf{D},i}\right)\) at the 100th, 50th, 25th and 1st percentile among 10,000 randomly generated sets. For each independent edge set (see 1–4 in Fig. 7), three criteria were investigated, map** the 4-DOF design space in six pairwise 2-DOF subspaces as one of three rows of plot appearing in each set. Each plot is centred on the input design’s initial \({\mathbf{q}}_{\mathbf{I}}^{\mathbf{e}(0)}\) (depicted as + within each plot).

The first criterion considered was numerical convergence (see Fig. 7IIi). It is expressed as:

where the nominator and denominator terms denote respectively total surface area and total vertex applied loading. The expression places an upper bound on the vertex applied loads, which depend also on vertex areas, according to the initial vertex areas scaled by a multiplier \({\alpha }_{{\text{max}}}\). This technique promoted greater numerical stability during form-finding using iterative FDM. Thus, the expression measures the discrepancy induced by numerically unstable force densities on the geometry of a RESS that impedes the convergence of its geometry-dependent loading.

Next, constraint satisfaction of compression-only structural action (see Fig. 7.IIii) is computed as the average magnitude of positive (denoting tension) force densities relative to the entire system’s average absolute edge force density. This is expressed below:

Finally, geometric sensitivity (see Fig. 7.IIiii) measures the maximum vertex distances between the initial and varied design, denoted respectively as \({\mathbf{z}}^{\mathbf{v}(0)}\) and \({\mathbf{z}}^{\mathbf{v}}\), as follows:

where normalisation relative to the height range of the initial design is applied.

The value and colour ranges are calibrated in all cases to show invalid or problematic regions of the design space as a gradient from red to black. These studies show that the regions containing feasible RESS designs are evidently more narrow and non-convex in design spaces created by \({\mathbf{q}}_{\mathbf{I}}^{\mathbf{e}}\) that are associated with edge sets \({E}_{{\text{I}}}\) corresponding to high \(\kappa \left({\mathbf{E}}_{\mathbf{D}}\right)\), making the navigation of their design spaces intractable and difficult. For example, Fig. 7—Row 1 presents the edge set \({E}_{{\text{I}}}\) with the highest \(\kappa \left({\mathbf{E}}_{\mathbf{D}}\right)\): here, the input design at the centre of each plot is seemingly trapped with almost all neighbouring designs \({\mathbf{q}}_{\mathbf{I}}^{\mathbf{e}}\) either failing to converge, violating the compression-only requirement (Fig. 7—Row 1.ii), or producing large geometrical changes. By contrast, the design space associated with the \({E}_{{\text{I}}}\) with the lowest \(\kappa \left({\mathbf{E}}_{\mathbf{D}}\right)\) is smooth and entirely explorable within the plotted value range (see Fig. 7—Row 4), illustrating the importance of well-conditioned selection of \({E}_{{\text{I}}}\).

4.2 Relating matrix conditioning to the efficacy of numerical algorithms

This subsection describes the complications created by ill-conditioned parameter space for design exploration and optimisation tasks.

4.2.1 Design space exploration

Unpredictable design changes frustrate the ability of designers to navigate and understand the design space via manual parameter tuning. Figure 8 presents attempts to generate variant designs by applying a small stochastic perturbation to the independent edge force density values of an input design with initial edge force densities \({\mathbf{q}}_{\mathbf{I}}^{\mathbf{e}\left(0\right)}\). The perturbation to individual independent edge \(\Delta {\mathbf{q}}_{\mathbf{I}}^{\mathbf{e}}\) is generated via uniform sampling following the expression below:

where \({\mathcal{U}}_{\left|d\right|}({\mathbf{x}}_{{\text{LB}}},{\mathbf{x}}_{{\text{UB}}})\) defines a vector in \({\mathbb{R}}^{|d|}\) sampled from a uniform distribution with interval \(\left[{\mathbf{x}}_{{\text{LB}}},{\mathbf{x}}_{{\text{UB}}}\right]\), \({\mathbf{q}}_{\mathbf{I}}^{\mathbf{e}\left(0\right)}\) refers to an input design’s initial independent edges’ force densities, \(\alpha \) the perturbation magnitude factor, \({\overline{q} }^{{\text{e}}}\) the initial design’s average absolute edge force density or \({\sum }_{i=1}^{|E|}\left|{q}_{i}^{{\text{e}}}\right|/\left|E\right|\), and \({-{\varvec{\upepsilon}}}_{\left|{E}_{{\text{I}}}\right|}\) the maximum (negative) allowable force density value to ensure all independent edges are in compression. Even with a small \(\alpha \) set at 0.1, the final equilibrium geometry can vary wildly. Significantly, more than half of all designs fail to obey the compression-only criteria. Furthermore, practically untenable design characteristics, such as high edge length or local curvatures, are notable in many generated designs.

Furthermore, because the narrow valleys prescribing the feasible design space may not possess orientations that are aligned to any variable axes (recall Fig. 7), designers navigating such design space can easily overshoot into unstable or infeasible regions, producing non-usable results even under small adjustments.

4.2.2 Gradient-based optimisation

Ill-conditioning in parameter spaces often leads to uneven landscapes, marked by elongated valleys in certain dimensions and variable sensitivities across others. Such conditions pose challenges for gradient-based optimisation methods. These irregularities can trigger oscillations, hindering the process from converging efficiently and predictably. Furthermore, these conditions complicate the determination of appropriate step sizes.

Figure 9 demonstrates the impact of matrix conditioning on optimisation according to the global structural load-path, which is computed as \({\sum }_{e}^{\left|E\right|}\left|{q}_{e}\right|\cdot {l}_{e}^{2}\). The analysis, which was conducted using the non-linear optimisation algorithm SLSQP implemented within SciPy [39], revealed that edge sets with a higher condition number \(\kappa \left({\mathbf{E}}_{\mathbf{D},i}\right)\) typically demanded more extensive optimisation runs. Visible issues include the optimiser’s need for extended initialisation before initial improvements were observed and, in some cases, entrapments at local minimums. Notably, runs associated with \({E}_{{\text{I}},i}\) with highly ill-conditioned \(\kappa \left({\mathbf{E}}_{\mathbf{D},i}\right)\) occasionally failed to converge to the global optimum.

Matrix conditioning associated with the selection of independent edges substantially influences optimisation efficacy. Each of the 5 × 4 plots shows optimisation runs using 20 randomly sampled valid independent edge sets \({E}_{{\text{I}},i}\) from one of 20 initial force densities \({\mathbf{q}}_{j}^{{\text{e}}\left(0\right)}\). In each plot, the x-axis indicates the number of functional evaluations in logscale. The y-axis denotes the normalised optimisation objective value based on load-path, ranging from 0 (global optimum) to 1 (initial value). Line colours indicate the percentile ranking of an edge set \({E}_{{\text{I}},i}\) in terms of their conditioning value \(\upkappa \left({\mathbf{E}}_{\mathbf{D},i}\right)\) relative to the condition values of all 20 edge sets tested

4.2.3 Stochastic optimisation

Ill-conditioning also significantly reduces the usability of stochastic optimisation methods. To illustrate, consider the formulation of two biologically inspired mechanisms, namely cross-over and mutation that Genetic Algorithm (GA) rely on to produce variant designs. These mechanisms generate offspring designs by respectively blending parent design options, and by investigating parameter values neighbouring those of current designs. Other stochastic techniques like simulated annealing similarly feature a candidate generation stage based on probabilistic sampling.

For continuous real-valued problems, given \({N}_{{\text{P}}}\) parent designs \(\{{\mathbf{x}}_{{\text{P}},j}:1\le j\le {N}_{{\text{P}}}\}\), the i-th element of \({\mathbf{x}}_{{\text{C}}}\), a design generated by cross-over of the parent variables, is expressed as follows:

where \({w}_{j}\) is a parent-specific weighting sampled from \(\mathcal{U}\left(0, 1\right)\), and the denominator term ensures that \({\mathbf{x}}_{\mathbf{C}}\) is a convex combination of parents \({\mathbf{x}}_{{\text{P}},j}\) and thus always within the acceptable value ranges. Next, the i-th element of \({\mathbf{x}}_{\mathbf{C}\mathbf{^{\prime}}}\), a design vector mutated from \({\mathbf{x}}_{\mathbf{C}}\), is computed as:

where \({x}_{\mathrm{C{\prime}}, i}\) and \({x}_{{\text{C}},i}\) are the i-th elements of respectively \({\mathbf{x}}_{\mathbf{C}\mathbf{^{\prime}}}\) and \({\mathbf{x}}_{\mathbf{C}}\), and \(\mathcal{N}\left(0,\sigma \right)\) a normal distribution defined with mean \(\mu =0\) and some variance \(\sigma \)—setting the mutation magnitude or rate—and \({x}_{i,\mathrm{ LB}}\) and \({x}_{i,\mathrm{ UB}}\) respectively prescribe the minimum and maximum allowable value. The mechanism is therefore similar to the stochastic perturbation expressed in (15).

Both mechanisms are problematic in ill-conditioned design spaces. Because ill-conditioning can introduce non-convexity and other irregularities (recall Fig. 7), convex combinations of feasible design options are not guaranteed to be feasible. Revisiting the design in Fig. 7.III, Fig. 10 illustrates the tendencies of designs generated via cross-over of \({\mathbf{q}}_{\mathbf{I}}^{\mathbf{e}}\) to violate the three criteria studied in Fig. 7 when using the moderately ill-conditioned edge set \({E}_{{\text{I}},2}\). It is observed that at least 25% of designs generated via cross-over were inadmissible regardless of the internal diversity and number of parents involved. Note that these are not simply poorly performing designs expected and integral to the functioning of stochastic optimisation processes, but numerically non-convergent designs with neither physical validity nor interpretation.

Visualising the magnitude of inadmissible designs generated by the weighted sum formulation of cross-over under the three aforementioned criteria (i-iii), incorporating different numbers of parents (a–c) drawn from a design collection embedding various degrees of internal diversity (1–5). Each plot superimposes the spread of the generated designs’ infeasibility (in box-plots, left y-axis) and the percentage of inadmissible designs (in bar graphs, right y-axis)

5 Pattern design & data space

This work supports the form-finding of quadrilateral-dominant patterns, which may embed varying ranges of irregularity. Quadrilateral patterns are preferred in structural designs because they offer well-known conceptual, numerical and fabrication benefits [40]. Furthermore, the design and development of reticulated shell structural designs often require connectivity-based customisation to be made to achieve additional performance gains. Based on these considerations, this section identifies the pattern design scope where enhanced support for independent edge selection through ML is most beneficial, concluding with the pattern design generation pipeline providing the training data.

5.1 Pattern design space

The difficulty involved in selecting an independent edge conducive to well-conditioned \({\mathbf{E}}_{\mathbf{D}}\) selection depends significantly on the pattern design characteristics. Pattern designs can be described in terms of spatial qualities like orthogonality, connectivity characteristics such as singularities and face valencies, as well as qualities related to both spatial configuration and connectivity, such as uniformity, regularity and symmetries.

Applying some generalisation, quadrilateral pattern designs may be grouped into two broad categories with divergent characteristics. On the one hand, are structured or semi-structured quadrilateral-dominant pattern embedding, optionally, spatial orthogonality and symmetries. Figure 2 shows established heuristics referring to the underlying quadrilateral layout of these patterns to aid the selection of well-conditioned independent edges for these patterns. The dominant selection criterion is validity, \({\text{rank}}\left({\mathbf{E}}_{\mathbf{D}}\right)={\text{rank}}\left(\mathbf{E}\right)\), and there is a limited degree of variation in \(\kappa \left({\bf{E}}_{\left[:,{E}_{{\text{D}}}\right]}\right)\) among valid combinations of \({E}_{{\text{I}}}\).

Edge selection is challenging, however, when spatial irregularities are introduced to patterns. As explained through the examples of Figs. 4 and 5, spatial deformations remove orthogonality and increase the entanglement of edge structural action. They expand the edge selection space relative to the pattern with orthogonal configuration to include valid, but potentially ill-conditioned edge choices (see pattern modifications denoted \({\varvec{\Delta}}{{\varvec{\Gamma}}}_{\mathbf{x}\mathbf{y}}\left(\cdot \right)\) in Fig. 11).

Increasing the spatial and connectivity irregularity of quadrilateral patterns raises the difficulty of the well-conditioned independent edge selection problem: the figure illustrates patterns generated via the application of operators \({\varvec{\Delta}}{{\varvec{\Gamma}}}_{\mathbf{x}\mathbf{y}}\left(\cdot \right)\) and \({\varvec{\Delta}}{{\varvec{\Gamma}}}_{\mathbf{C}}\left(\cdot \right)\), which introduces respectively spatial and connectivity irregularity, on the input pattern at the figure’s top-left corner

Irregular connectivity (\({\varvec{\Delta}}{{\varvec{\Gamma}}}_{{\text{C}}}\left(\cdot \right)\) in Fig. 11) exacerbates both complexity and difficulty in edge selection because triangulation raises the rank deficiency of the matrix \({\mathbf{E}}_{\mathbf{D}}\). Consider the underlying quadrilateral pattern of Table 1A with diagonals removed. It has 7 independent edges whereas its triangulated version has 13. This raises the rank-deficiency ratio, or \(|{E}_{{\text{I}}}|/\left|E\right|\), from \(7/12=22.5\%\) to \(13/34=30.2\%\). Consequently, the selection of diagonal edges is needed, increasing the problem’s combinatorial complexity.

Selection is most difficult in face of combined spatial and connectivity distortions. In these cases, the quadrilateral structure of a pattern is no longer detectable, rendering selection principles based on quadrilateral structures inapplicable (see \({\varvec{\Delta}}{{\varvec{\Gamma}}}_{\mathbf{C}}\left(\cdot \right)+{\varvec{\Delta}}{{\varvec{\Gamma}}}_{\mathbf{x}\mathbf{y}}\left(\cdot \right)\) in Fig. 11). Indeed, selecting edges that are closely aligned in their orientation no longer necessarily causes high conditioning. Nonetheless, edge selection can substantially impact matrix conditioning: Table 1 shows 3 designs with 3 different edge choices differing by an order of magnitude of 3 despite the lack of any apparent selection logic.

5.2 Pattern design data space and generation pipeline

A two-stage computational pipeline was developed to create patterns embedding a range of spatial and connectivity irregularity. The first stage, as Fig. 12.1 shows, creates a set of base patterns: randomly generated boundaries and singularity features are mapped using the singularity-based pattern generator compas_singular [41] into patterns that are (a) structured or (b) semi-structured, which are patterns embedding vertex singularities that are either fully or pre-dominantly quadrilateral.

Example procedural modifications applied on 1 an initial pattern design to create variant irregular patterns. The figure illustrates the effects of connectivity modification operators \({\varvec{\Delta}}{{\varvec{\Gamma}}}_{\mathbf{C}}\left(\cdot \right)\) like 2 triangular face subdivision and 3 line-mesh intersections on randomly sampled faces and vertices, respectively. Lastly, it shows the effects of 4 Laplacian mesh smoothing, a spatial deformation operator

The second stage is illustrated in Fig. 12.2-3; it involves creating pattern irregularities. First, connectivity irregularity is introduced to a subset of the patterns via random and recursive applications of two mesh connectivity shape grammar operators \({\varvec{\Delta}}{{\varvec{\Gamma}}}_{\mathbf{C}}\left(\cdot \right)\). The two operators, which include triangular face subdivision and line-mesh intersection, allow the production of the mixed and unstructured patterns incorporated in this work. Finally, Laplacian smoothing was used as a spatial deformation operator \({\varvec{\Delta}}{{\varvec{\Gamma}}}_{\mathbf{x}\mathbf{y}}\left(\cdot \right)\) and applied to all patterns at varying intensities (see Fig. 12d).

A total of 1000 pattern designs were included in the training process. Only base patterns with \(\left|V\right|\le 200\) were used to create the training dataset because each episodic step can involve multiple matrix rank computations to assess the validity of predicted edge selections, which can be computationally intensive for large designs with large matrix \(\mathbf{E}\).

6 Data-driven trans-topology method to select independent edges

This section describes the critical components composing the framework based on Deep Reinforcement Learning (DRL) that provide the modelling backbone for this work. DRL offers a natural choice over other ML methods for learning sequential planning problems, as it is formulated to optimise for long-term objectives through its consideration of future accumulated rewards. This lends its suitability for learning the well-conditioned independent edge selection problem, which is also a sequential problem—one involving the incremental selection of edge.

6.1 Framework based on Deep Q-learning

More concretely, DRL combines two powerful ML methods called Reinforcement Learning (RL) and Artificial Neural Network (ANN). In RL, an agent optimises its behaviour during the course of a training process via interaction with an environment. The DRL training process is structured into episodes [42], and a series of steps or iterations within each episode.

Interpreted within this work, each episode \(0\le {e}_{{\text{RL}}}<{N}_{{\text{E}}}\) corresponded to the solving of the independent edge set for a different pattern. At each time-step \(t\), the agent observed the current state \({\mathbf{S}}^{\left(t\right)}\) and then took an action \({a}^{\left(t\right)}\) (i.e. the selection of a new edge \({e}^{(t)}\)). The environment implemented the action \({a}^{\left(t\right)}\), leading to a transformed state \({\mathbf{S}}^{\left(t+1\right)}\) that was evaluated and translated into a reward \({r}^{\left(t\right)}\) to give as feedback to the agent. Each step created a transition tuple \(\tau :=\) (\({\mathbf{S}}^{\left(t\right)}\), \({a}^{\left(t\right)},{r}^{\left(t\right)},{ \mathbf{S}}^{\left(t+1\right)}\)) that provided the building blocks of experiences in RL [37].

Ultimately, the agent's goal in RL is to learn an optimal policy \({\pi }^{*}\), as in:

where \({\mathbb{E}}\) denotes the expectation operator, \(R\left(\cdot \right)\) is a reward function, and \(0\le {\gamma }_{{\text{RL}}}\le 1\) is a discount factor raised to the power of the time step t indicating the importance of future rewards compared to immediate rewards [42]. The goal of the agent in RL is therefore to maximise the expected value of the sum of discounted rewards over a sequence of time steps t when following policy π.

Specifically, this work relied on the DRL modelling framework called Deep Q-Learning [43] (DQN), owing to its straightforward implementation and well-known versatility for handling complex discrete sequential decision processes across multiple fields [44]. These include applications like gaming [37], autonomous vehicles [45, 46], robotics [47, 48], finance [49, 50], and healthcare [51] and structural design [27, 52].

DQN is governed by the Bellman equation; the quality or, simply, Q-value associated with any state:

The objective in DQN is to learn \(Q\left(\cdot \right)\), which is formulated as the map** of \(S\times \mathcal{A}\to \mathcal{Q}\). Each entry of \({\mathcal{Q}}^{(t)}\) corresponds to a quality value on an action \({a}_{i}^{\left(t\right)}\) from the set of actions \({\mathcal{A}}^{(t)}\) that can be implemented on state \({\mathbf{S}}^{\left(t\right)}\) at time \(t\)—providing information enabling the selection of the optimal action \({a}^{(t)}\). The subsequent subsections detail the exact definition of state \({\mathbf{S}}^{\left(t\right)}\), the formulation of the reward function \(R\left(\cdot \right)\) for computing reward \({r}^{\left(t\right)}\), and the custom GDL-enhanced ANN-based architecture at the heart of the agent’s decision-making.

6.2 Model agent & action space

In this work, the agent’s map** is defined as:

One sees the model’s outputs are \({\widehat{\mathcal{Q}}}^{\rm{e}(t)}\left[\left|E\right|\right]\) and \({\widehat{\mathcal{Q}}}_{\Gamma }^{(t)}\), which together define the total action space of the problem. They contained the Q-values corresponding to respectively edge-based and global actions, which are described below:

-

Select Edge: The \(\mathcal{Q}\)-values corresponding to the selection of all edges were contained by \({\widehat{\mathcal{Q}}}^{\rm{e}(t)}\), with each e-th entry, or \({\widehat{\mathcal{Q}}}_{\left[e,1\right]}^{{\text{e}}\left(t\right)}\) referring to an edge e. At each step, masking was applied so that only the values from the remaining unpicked edges \({\widehat{E}}_{{\text{D}}}^{(t)}=E\setminus {\widehat{E}}_{{\text{I}}}^{\left(t\right)}\) were considered.

-

Stop: After \(\left|E\right|-{\text{rank}}\left(\mathbf{E}\right)\) valid edges had been picked (see Eq. (9)), the stop action was automatically executed. It existed for training and accounting purposes to provide a clean separation on the attribution of final and intermediate rewards, which are defined in Sect. 6.3.2.

As is common practice in DRL, an action, or edge was selected either via random exploration, or exploitation, which leveraged the agent’s current learning. At each step during an episode, whether an agent explored or exploited was determined by comparing a sampled value \({{\epsilon }}_{{\text{xpl}}}^{(t)}\) from \(\mathcal{U}\left(\mathrm{0,1}\right)\) with a \({\epsilon }_{{\text{xpl}}}^{({e}_{\rm{RL}})}\) that was updated at each episode \({e}_{\rm{RL}}\), annealing as the training process advances with increasing number of episodes. Exploitation occurred if \({{\epsilon }}_{{\text{xpl}}}^{(e_{\rm{RL}})}\le {\epsilon }_{{\text{xpl}}}^{\left(t\right)}.\) Specifically, this work makes use of the Boltzmann exploration [53] for exploitation, whereby the selection probability of any given edge e is formulated as:

where \({\tau }_{{\text{xpl}}}^{({e}_{{\text{RL}}})}\) is a temperature parameter annealed with each increasing episode \({e}_{{\text{RL}}}\), with higher values corresponding to greater deviation from the agent’s predicted optimal course. Thus, the training explicitly exposed the agent to a varied set of experiences beyond its current optimal estimate at any given time step.

6.2.1 State representation

The state \({\mathbf{S}}^{(t)}\) describes the current progress in solving the edge selection problem. It was defined in this work as follows:

where \({\widehat{E}}_{{\text{I}}}^{(t)}\) refers to the accumulated set of agent-selected independent edges at time \(t\). Using GDL, the pattern \({\varvec{\Gamma}}\) was understood by the model agent through hand-crafted design attributes \({\mathbf{X}}^{\mathbf{v}}\left[\left|V\right|\times {D}_{V}\right]\), \({\mathbf{X}}^{\mathbf{e}} \left[\left|E\right|\times {D}_{E}\right]\), \({\mathbf{X}}^{\mathbf{f}} \left[\left|V\right|\times {D}_{F}\right]\) computed for and assigned locally to respectively vertices, edges, and faces. They were provided as input to the model and transformed throughout the model’s architecture by geometric message passing.

To enhance the model agent’s capacity to learn the edge selection problem, a comprehensive set of vertex, edge, and face design attributes (respectively \({\mathbf{X}}^{\mathbf{v}}, {\mathbf{X}}^{\mathbf{e}}, {\mathbf{X}}^{\mathbf{f}}\)) were included as inputs at all steps. As Table 2 shows, they variously captured information about the position, sizes, both support and boundary conditions, and both local and global connectivity information.

6.2.2 Geometric deep learning

A suitable agent \({\mathcal{Q}}_{{\varvec{\Theta}}}\) was built based on the DQN-based model architecture the author articulated in [27, 54, 55], which was structured into the three sub-model components as follows:

1. Encoding: This stage used GDL to analyse the patterns under study through the set of input features \({\mathbf{X}}^{\mathbf{v}}, {\mathbf{X}}^{\mathbf{e}}, {\mathbf{X}}^{\mathbf{f}}\), interpreting the mesh-based patterns as 2-dimensional CW Complexes and conducting geometric message passing along their adjacencies. The approach, which was based on the author’s model architecture CWMeshNet articulated in [55], can flexibly incorporate data features individually assigned to vertices, edges, and faces as model data. For this work, the outputs of CWMeshNet, which provided hidden (intermediate) features for downstream map**s, are denoted here as \({\mathbf{H}}^{\mathbf{v}} \left[\left|V\right|\times {D}_{M}\right]\), \({\mathbf{H}}^{\mathbf{e}} \left[\left|E\right|\times {D}_{M}\right]\), and \({\mathbf{H}}^{\mathbf{f}} \left[\left|V\right|\times {D}_{M}\right]\), where \({D}_{M}\) corresponds to a model dimension hyper-parameter.

2. Summarisation: The output hidden features \({\mathbf{H}}^{\mathbf{v}}\), \({\mathbf{H}}^{\mathbf{e}}\) and \({\mathbf{H}}^{\mathbf{f}}\) were then transformed as follows:

where \({{\varvec{\Theta}}}_{{\text{Gated}},\mathrm{ V}}\), \({{\varvec{\Theta}}}_{{\text{Gated}},\mathrm{ E}}\), and \({{\varvec{\Theta}}}_{{\text{Gated}},\mathrm{ F}}\) are learnable attention-based reduction gate functions based on [56]. They remapped \({\mathbf{H}}^{\mathbf{v}}\), \({\mathbf{H}}^{\mathbf{e}}\) and \({\mathbf{H}}^{\mathbf{f}}\) into three constant-length vectors \({\mathbf{h}}_{\mathbf{v}}\), \({\mathbf{h}}_{\mathbf{e}}\), and \({\mathbf{h}}_{\mathbf{f}}\) in size \(\left[1\times {D}_{M}\right]\), providing a summary for each class of element. These vectors were then concatenated and remapped to produce a single summary \({\mathbf{h}}_{{\varvec{\Gamma}}}\) \(\left[1\times {D}_{M}\right]\) for the entire pattern:

Next, the features from both \({\mathbf{X}}^{\mathbf{e}}\) and \({\mathbf{H}}^{\mathbf{e}}\) corresponding to the selected edges \({\widehat{E}}_{{\text{I}}}^{(t)}\) were similarly aggregated and transformed, resulting in \({\mathbf{h}}_{{\mathbf{e}}_{\mathbf{I}}}\left[1\times {D}_{M}\right]\). This is expressed as follows:

3. Valuation: At the last phase, \({\mathbf{h}}_{\mathbf{e}}\), \({\mathbf{h}}_{{\varvec{\Gamma}}}\) and \({\mathbf{h}}_{{{{E}}}_{{\text{I}}}}\) were tiled to tensors of size \(\left[\left|E\right|\times {D}_{M}\right]\) and concatenated and remapped through several layers of multi-layer perceptron to produce \({\widehat{\mathcal{Q}}}^{\mathbf{e}}\) \(\left[\left|E\right|\right]\), which stored the \(\mathcal{Q}\)-value for the edge-based actions. Similarly, \({\mathbf{h}}_{{\varvec{\Gamma}}}\), \({\mathbf{h}}_{\mathbf{e}}\) and \({\mathbf{h}}_{{{{E}}}_{{\text{I}}}}\) were concatenated, and remapped to produce \({\widehat{\mathcal{Q}}}_{\Gamma }\). The two functions are expressed as follows:

6.3 Environment

6.3.1 Evaluation

Any new edge selection \({\widehat{e}}^{(t)}\) at the current time step \(t\) was immediately checked for its validity before its inclusion in the accumulated edge set \({\widehat{E}}_{{\text{I}}}^{\left(t+1\right)}\) for the next time step \(t+1\). An edge choice was deemed valid if a sub-matrix of equivalent rank as E could still be assembled from the sub-matrix of E with all independent edges including the current edge choice \({\widehat{e}}^{(t)}\) removed, or \({\mathbf{E}}_{[{:\widehat{E}}_{{\text{D}}}^{\left(t+1\right)}]}\), where, \({\widehat{E}}_{{\text{D}}}^{\left(t+1\right)}:=E\setminus \left({\widehat{E}}_{{\text{I}}}^{\left(t\right)}\cup \left\{{\widehat{e}}^{(t)}\right\}\right)\) and \({\widehat{E}}_{{\text{I}}}^{\left(t\right)}\) is the current independent edge set inherited from the previous time-step. This work used the iterative procedure defined in [32] to identify the sub-matrix of \({\mathbf{E}}_{[{:\widehat{E}}_{{\text{D}}}^{\left(t+1\right)}]}\) with equivalent rank of \(\mathbf{E}\).

Should an edge choice \({\widehat{e}}^{(t)}\) be found to be invalid, the agent was asked to repeat the selection of the current edge, while the incorrect \({\widehat{e}}^{(t)}\) would be removed and no longer be considered. Consequently, experiences are only generated via valid edge choices, which was ensured by checking the rank of the matrix generated at each step.

6.3.2 Reward

This work directly formulated the rewards for the RL model based on the problem’s optimisation objective, to minimise the matrix conditioning number of the dependent equilibrium matrix.

Specifically, a three-prong reward formulation was developed, consisting of two rewards offered at the final transition of each episode corresponding to the stop action, noted \({r}_{\upkappa }^{(t)}\) and \({r}_{\%}^{(t)}\) defined in respectively (30) and (31), and an intermediate reward \({r}_{\phi }^{(t)}\) offered with each new edge selection using potential-based reward sha**. The three rewards were summed as follows:

where \({\alpha }_{\phi }\) is 1 for \(0<t\le \left|E\right|-{\text{rank}}\left(\mathbf{E}\right)\) and 0 otherwise, providing a clean separation of rewards for transitions corresponding to either edge selection or the final stop** action.

6.3.2.1 Remap** value

Considering that the value range of \(\kappa \left({\widehat{\mathbf{E}}}_{\mathbf{D}}^{\left(t\right)}\right)\) is highly variable, encompassing in some cases values that may be up to five orders of magnitude apart, using the matrix condition number would pose potential numerical challenges for the training process. In response, a global rescaling of the condition number by taking its \(p\)-th root was adopted as follows:

where \({p}_{\kappa }\) is a hyper-parameter controlling the degree of flattening of the conditioning value and the negative signage enabled the recasting of the matrix conditioning minimisation problem as a reward maximisation problem for DRL. The expression presented above was determined experimentally. Other remap** functions have also been investigated. For example, the author observed that the model's learning efficacy was significantly reduced when the condition number was directly employed as a reward, or when log scaling was applied instead of the one proposed above.

6.3.2.2 Final reward

The primary reward \({r}_{\kappa }^{(t)}\) offered at the completion of the selection of an independent edge was simply computed as the scaled conditioning number at time-step \(t\) as follows:

6.3.2.3 Percentile reward

Additionally, the agent received a percentile ranking-based reward \({r}_{\%}\) when it identified an edge set \({E}_{{\text{I}}}\) with a corresponding \(\kappa \left({\widehat{\mathbf{E}}}_{\mathbf{D}}^{(t)}\right)\) that was equal to or below a pre-defined percentile threshold \({p}_{\%}\). This is expressed as:

where \(\kappa :=\{{\kappa }_{1},\dots ,{\kappa }_{i},\dots ,{\kappa }_{{N}_{{E}_{{\text{I}}}} }\}\), the set of pre-computed conditioning values computed from \({N}_{{E}_{{\text{I}}}}\) randomly sampled edge sets \({E}_{{\text{I}},i}\) with \({\kappa }_{i}=\kappa \left({\mathbf{E}}_{{[:,E}_{{\text{I}}, i}]}\right)\), and \({{\text{Q}}}_{\%}\left(\bullet \right)\) a function returning the value corresponding to the \({p}_{\%}\)-th percentile of \(\kappa \).

6.3.2.4 Improvement reward

To accelerate the learning process, the agent also received a reward \({r}_{\phi }^{(t)}\) to provide intermediate feedback on its performance before it completed an edge set selection. This work employed a potential-based reward sha** [57], which offers theoretical guarantees of convergence to the optimal policy. Specifically, \({r}_{\phi }\) is defined as the difference in potential between any given pair of consecutive states \({\mathbf{S}}^{(t)}\) and \({\mathbf{S}}^{(t+1)}\), computed using the function \(\Phi \left(\bullet \right)\) as in:

For simplicity and consistency, the potential function \(\Phi \left(\bullet \right)\) was set directly to \(f\left({\mathbf{S}}^{\left(t\right)}\right)\) in refers to Eq. (29).

6.4 Model optimisation

This work modelled its framework according to Double DQN (DDQN), maintaining two copies of the network: a policy network \({\mathcal{Q}}_{{\varvec{\Theta}}}\) and a target network \({\mathcal{Q}}_{{\varvec{\Theta}}}^{\ddagger }\). The optimisation relied on the principles of bootstrap**, whereby the agents’ estimations of Q-values were iteratively updated with their own estimates. Specifically, at the end of each episode, the transitions stored in the replay buffer were sampled and the network \({\mathcal{Q}}_{{\varvec{\Theta}}}\) was used to estimate \({\widehat{\mathcal{Q}}}_{a}^{(t)}\), the \(\mathcal{Q}\)-value corresponding to action \({a}^{\left(t\right)}\). For actions representing edge selections, \({\widehat{\mathcal{Q}}}_{a}^{(t)}\) was set to \({\widehat{\mathcal{Q}}}_{[e,1]}^{e}\), where \(e\) is the edge indicated by \({a}^{\left(t\right)}\); it was set to \({\widehat{\mathcal{Q}}}_{\Gamma }\) otherwise for the ‘stop’ action (recall Sect. 6.2). Next, \({\widehat{\mathcal{Q}}}_{a}^{(t)}\) was compared against the target value \({\widehat{\mathcal{Q}}}_{a}^{\ddagger (t)}\), which was computed as:

where the technique in DDQN (in contrast to DQN) of using \({\mathcal{Q}}_{{\varvec{\Theta}}}\) to choose the action \({a}^{(t+1)}\) in the one-step look ahead is evident. Next, the mean squared error between \({\widehat{\mathcal{Q}}}_{a}^{\ddagger (t)}\) and \({\widehat{\mathcal{Q}}}_{a}^{(t)}\) provided the temporal difference (TD) error used to update \({\mathcal{Q}}_{{\varvec{\Theta}}}\):

Model weights for the network \({\mathcal{Q}}_{{\varvec{\Theta}}}^{\ddagger }\) were periodically duplicated from \({\mathcal{Q}}_{{\varvec{\Theta}}}\). Additionally, this work applied Prioritized Sequence Experience Replay [58], sampling the transition according to the TD error above and hel** the model to prioritise the learning from experiences where it had the greatest difficulty in anticipating.

7 Results

7.1 Basic training statistics & configuration

Results presented in this sub-chapter are produced using models trained with \({N}_{{\text{E}}}=15000\), and \({\gamma }_{{\text{RL}}}=0.98\). Regarding the definition of rewards, \({p}_{\kappa }=4{, \lambda }_{\kappa }=5\), \({\lambda }_{\Phi }=5\), \({p}_{\%}\) = 90, and \({\lambda }_{\%}=5\).

In general, the agent achieved considerably better performance than the baseline by random sampling. To compare the agent’s performance across multiple starting pattern designs, the conditioning numbers of matrices \({\widehat{\mathbf{E}}}_{\mathbf{D}}\) corresponding to the agent’s selected independent edge set \({\widehat{E}}_{{\text{I}}}\) can be normalised according to that of their equilibrium matrix, as in \(\kappa ({\widehat{\mathbf{E}}}_{\mathbf{D}})/\kappa (\mathbf{E})\). This measure is derived from the insight shown below in Fig. 13 that \(\kappa (\mathbf{E})\) tends to set the lower limit of \(\kappa ({\mathbf{E}}_{\mathbf{D}})\). Using this metric, the trained agent’s error was 3.95, based on its predictions on pattern designs from the test dataset corresponding to its best episode averaged from three training runs. This is approximately 515 times lower than that of the random baseline, which was 2034.4.

Figure 14 visualises the training progression according to measures relevant to DRL and this task. The noticeable fluctuations in the loss profile at every 2000 episodes was an expected phenomenon corresponding to the target model update period discussed in Sect. 6.4. Additionally, the agent’s performance exhibited cyclical behaviour combined with a certain degree of noise. This points to the need for further investigations in future research to mitigate the large swing in performance during training. However, it is worth noting that cyclical behaviour is also a natural part of the dynamics of exploration and exploitation intrinsic to DQN and is not necessarily a cause for concern. Indeed, a strong trend of consistent improvement is discernible, especially in Fig. 14b when smoothing is applied to the training progression, highlighting the model's underlying promise.

Progression of various measures relevant to the training of DQN from 3 runs and their smoothed averages, measuring the agent’s performance on a dataset reserved strictly for evaluation. From top to bottom, the figure shows the a Total reward; the b Matrix conditioning ratio corresponding to selected independent edge sets; the c Average exploration strategy, showing the gradual transition from exploration to exploitation; and the d Model loss based on the temporal-difference error at each training episode

In general, data size was observed to contribute to model performance. On the other hand, the relationship between diversity and performance is more nuanced. It was observed that the introduction of highly unstructured and irregular patterns (recall triangulation methods introduced in Sect. 5) led to the overall improved capacity of the converged model to handle a wider range of patterns. However, it also complicated and slowed the agent’s training, in comparison to earlier experiments when only structured and quadrilateral patterns with spatial non-uniformity were presented.

Furthermore, certain structured pattern types, such as the radial and cross-grid, were more prone to numerical instability and ill-conditioning, causing the condition value to explode, and were therefore removed from the training process.

7.2 On training design set

The remainder of this paper examines the agent’s prediction across various categories of pattern designs. For each pattern studied, a comparison is made with \({N}_{{\text{s}}}=\) 200 independent edge set obtained by random sampling. The sample size was determined through trial-and-error, utilising quadrilateral patterns with known selection heuristics (see Sect. 5.1). The process ensured that the set of matrix conditioning values associated with the randomly sampled collection, denoted as \(\kappa :=\left\{{\kappa }_{1},\dots ,{\kappa }_{{N}_{{\text{S}}}}\right\},\) consistently had a lower bound of value \({\kappa }_{{\text{min}}}\), which was within 10% of the conditioning value associated with edges selected via heuristics.

7.2.1 Quadrilateral designs

The agent’s prediction on structured quadrilateral patterns with varying boundary conditions is shown in Fig. 15. Because there are simple heuristics in place for solving these patterns, the model’s performance can be assessed readily, providing an intuitive check on its performance.

Overall, the model was successful in learning the heuristics defined in Sect. 5.1 selecting one member per each continuous sequence of edges. Additionally, in Fig. 15A and C where \(\left|{E}_{{\text{I}}}\right|\) is less than the number of continuous line sequences running across on either directionality of the quadrilateral pattern, the agent was able to pick edges from sequences that are associated to lower corresponding \(\kappa \left({\mathbf{E}}_{\mathbf{D}}\right)\): for instance, the agent selected edges within sequences linking supports to supports in Fig. 15C as opposed to those linking supports to the unsupported edge sequence.

The agent’s efficacy is further corroborated by the conditioning numbers associated with its selections, which were generally close to the reference minimums obtained from the random baseline collections generated for the respective patterns. In all patterns, the agent’s selections had conditioning values that were well below the patterns’ random baseline mean and median conditioning values.

7.2.2 On pattern types without structure & regularity

The practical usefulness of the model ultimately depends on patterns without known heuristics, such as those embedding irregularity both in terms of spatial configuration and connectivity, and mixed quadrilateral and triangular elements. Table 3 shows that the agent was able to offer convincing predictions in these scenarios as well.

In all patterns tested, the agent was able to achieve better performances than both the mean and median random baseline values, achieving corresponding \(\upkappa \left({\widehat{\mathbf{E}}}_{\mathbf{D}}\right)\) that were within 5 times the amount of the lowest value found through the patterns’ random baseline collections. Encouragingly there were even instances where the agent offered more well-conditioned selections than the cases found through sampling, offering some suggestions on the agent’s usefulness.

7.2.3 On out-of-distribution structured pattern

The trained agent was used to select independent edges in out-of-distribution pattern designs that were omitted from the training process, such as the cross-grid and radial patterns presented in Fig. 2. These patterns have corresponding \(\mathbf{E}\) that are nearly singular with enormous value ranges and were as such omitted from the training process. The model’s performance on these patterns can therefore offer some indication of its robustness.

Table 4 shows the agent’s edge selections on cross-grids with different densities. Comparing the rows corresponding to \({\widehat{E}}_{{\text{I}}}\) and \({E}_{{\text{I}}, {\text{heuristic}}}\) in Table 4 shows that the conditioning associated with the agent’s predictions is similar to the performance achievable by the heuristic selection method described in Sect. 2. Similarly, Table 5 illustrates the predictions on the radial pattern at various densities. Even though the model did not incorporate these patterns into the training, it was able to offer edge sets with associated conditioning values that were near the lower limit of the patterns’ random baseline conditioning values, and certainly below the collections’ median values.

7.2.4 Extended investigations

7.2.4.1 Interpreting the agent’s sequential decision-making

The model can potentially offer pedagogical value to designers in the selection of independent edges. Figure 16 visualises the Q-values output by the agent at each time-step during sequential edge selection on 5 designs.

Depicted in Fig. 16.1-2 are predictions on topologically structured and spatially non-uniform quadrilateral patterns with intuitive solutions (recall Fig. 2 and Sect. 5.1). Each new edge selection caused the similarly oriented and face-sharing edge on a neighbouring edge sequence to lighten up immediately in the subsequent time-step. By contrast, the aligned edge immediately in front or behind the selected edge on the same edge sequence darkened substantially. These predictions illustrate that the agent’s sequential decision-making aligned with geometric intuition. It was previously discussed in Sect. 3.2.3 that the selection of similarly aligned edges would produce ill-conditioned behaviour, referring to the example of Fig. 6. Conversely, the selection of two edges on opposite sides of a face in a quadrilateral pattern offered some degree of geometric decoupling, so that they can be adjusted independently from one another with limited influence on the remaining edges, thereby producing well-conditioned behaviour.

The agent’s understanding, however, was not consistent throughout the pattern’s domain. The effects of the current edge selection at subsequent steps to edges further away along the same edge sequence were visibly weaker, with the colours of these edges darker, but also lighter than the edge immediately connected to the selected edges. Additionally, the Q-values predicted by the agent in Fig. 16.B at \(t=2\) corresponded to 3 invalid edges along the unsupported boundary edge sequence. These inconsistencies are likely due to the design of the environment, which only considered optimality, deferring the validity check to the rank-based method outlined in Sect. 6.3.1. Furthermore, the masking of actions, an established approach in DQN, inadvertently allowed the attribution of high Q-values to previously selected edges, which would neither be intuitive nor valid in practice since it would not be possible to select the same edge twice. In spite of these peculiarities, the agent nonetheless offered substantial functional utility and efficacy with regard to edge selection on topologically and spatially irregular patterns, finding edge sets with associated values \(k\left({\mathbf{E}}_{\mathbf{D}}\right)\) that were between one to two orders of magnitude lower than the patterns’ corresponding median random baseline values.

7.2.4.2 Multiplicity & opportunity for intervention

Since the agent was trained with the Boltzmann exploration, it also learnt to accommodate edge selections that deviated from optimality. This is evident in Fig. 17, which illustrates 3 repeated inferences on 4 pattern designs with edge selection probabilistically sampled according to Q-value. It can be observed that the agent can produce edge selections corresponding to matrix condition values that are generally 10 to 20 times better than the median random baseline values. This opens the possibility for users or designers to incorporate their selection preferences, useful in scenarios when exact control over specific edges is desired.

8 Closing remarks

8.1 Limitations & outlooks

At present, the significant limitation of this work is that it relies on a computationally exhaustive validity check based on successive matrix rank computation (recall Sect. 6.3.1). The step-wise response time is usable (with step time below 100 ms) for the systems investigated in this work but becomes substantial with larger patterns, alluding to the cubic time complexity of the rank operation, which is \(O\left({N}^{3}\right)\). The requirement for rank computation weakens the opportunity for the acquired learning to seamlessly support new pattern design for significantly larger patterns.