Abstract

Context

Mapping the distribution of species, especially endemic and endangered ones such as certain tree species, is a vital step in the effective planning and execution of conservation programs and monitoring efforts. It also contributes directly to forest conservation by highlighting the importance of species diversity.

Objectives

Our objective was to assess the detection accuracy for a specific tree species using different remote sensing sources and approaches.

Methods

Initially, individual trees were identified and classified using a canopy height model derived from UAV data. Next, we carried out the classification of satellite data within the Google Earth Engine. Lastly, we scaled the UAV-RGB dataset to match the spatial resolution of Sentinel-2, which was then employed to train random forest models using the multispectral data from Sentinel-2.

Results

For the UAV data, we achieved overall accuracies of 56% for automatically delineated tree crowns and 83% for manually delineated ones. Regarding the second approach using Sentinel-2 data, the classification in the Noor forest yielded an overall accuracy of 74% and a Kappa coefficient of 0.57, while in the Safrabasteh forest, the accuracy was 80% with a Kappa of 0.61. In the third approach, our findings indicate an improvement compared to the second approach, with the overall accuracy and Kappa coefficient of the classification rising to 82% and 0.68, respectively.

Conclusions

Depending on the purpose and the available facilities, both satellite and UAV data can be used successfully to identify a specific tree species.

Introduction

Providing adequate maps is an essential step in planning and designing protection and conservation management strategies for endangered tree species. Traditionally, field inventory has been carried out to detect and characterize the spatial distribution, population size, and structural attributes of endangered species. Field efforts are costly, and to date, only relatively few plant species have undergone a range of activities to give the required data for conservation (Rominger and Meyer 2019).

In contrast to fieldwork, remote sensing provides data for monitoring and mapping at lower cost and with faster and easier repeatability. It can also cover larger, more distant, and harder-to-reach areas. To collect images of the earth, a variety of remote sensing instruments have been designed, including unmanned aerial vehicles (UAV), satellite mapping instruments, simple optical imaging tools, and sophisticated laser and radar systems (Asner et al. 2018). Numerous approaches for accessing and analyzing remote sensing data have been developed that help ecologists address significant conservation issues. Remote sensing technology therefore offers a valuable opportunity for biodiversity conservation (Gao et al. 2019).

Satellite and drone-based ecological research aims to link ecology and remote sensing to make it easier to study ecosystem function and to measure the geographic distribution of vegetation types at the plot, landscape, local, and global scale (Muraoka and Koizumi 2009). Numerous sensor types at different spatial resolutions, ranging from medium (Landsat, Sentinel, and Hyperion) to high (RapidEye and SPOT) and very high (WorldView and IKONOS), provide important data for nature conservation, for example, for tree species identification (Fassnacht et al. 2016). Among the available satellites, Sentinel-2A and Sentinel-2B of the Copernicus Sentinel-2 constellation each carry a multispectral sensor that captures 13 bands with varied bandwidths and wavelengths ranging from 442 to 2202 nm. The data are freely available at a high temporal resolution of 5 days and a spatial resolution of 10, 20, and 60 m. In addition, the free cloud-based Google Earth Engine platform (GEE: http://earthengine.google.org) provides a user-friendly way to access and analyze remotely sensed data on a worldwide scale, based on the JavaScript language and Google’s cloud (Pazúr et al. 2021; Zhang et al. 2020). Multiple satellite datasets and products are available through the GEE data catalog. GEE provides a variety of image collection, processing, analysis, classification, and export options. It combines a large-scale computational capability designed for parallel processing of geospatial data with a public data catalog that includes all Sentinel images (Lee et al. 2016). Praticò et al. (2021) tested Sentinel-2 time-series classification in GEE to classify natural land cover in a mountainous national park using a range of algorithms. The best results (overall accuracy of 0.88 and multi-class F-score of 0.88) were achieved with a random forest algorithm and a summer image composite. The efficiency of using Sentinel-2 images for forest area classification in GEE has been demonstrated in a range of studies (Çağlayan et al. 2020; Fang et al. 2020; Tassi and Vizzari 2020; Praticò et al. 2021).

Using satellite data to map endangered species is thus of great interest. Yet collecting field reference data in connection with satellite remote sensing remains essential. In situ data are prone to a range of issues, such as limited sample sizes due to laborious and costly fieldwork, GPS uncertainty resulting from dense canopy cover, and discrete point or plot observations that do not exactly match the continuous representation of earth observation data (Kattenborn et al. 2019). A practical alternative is to collect reference data from UAV imagery of high spatial resolution. In place of field data, Kattenborn et al. (2019) and Daryaei et al. (2020) used UAV images as reference data to map woody invasive plants and to detect riparian forests from satellite imagery.

Eco-drones or conservation drones present a low-cost alternative to environmental planners working in various ecosystem types (Ivosevic et al. 2015). RGB (i.e., Red, Green, and Blue) cameras mounted on UAV platforms are affordable instruments for forest conservation and monitoring projects because of their high spatial and temporal resolution. Due to their operational flexibility, light UAVs have gained much attention for forest management, inventory, conservation, and health monitoring (Guerra-Hernández et al. 2017; Rees et al. 2018).

To the best of our knowledge, studies focusing on mapping endangered species using remote sensing technology are still scarce. Leduc et al. (2018) explored the mapping of wild leek, an endangered species, with a low-flying UAV in Gatineau Park, Quebec. They used orthomosaics derived from UAV imagery to distinguish wild leek from other plants on the forest floor, achieving F-scores of 0.69 and 0.76 for two different areas. Rominger and Meyer (2019) used UAV images to survey Arctomecon humilis, an endangered species endemic to the Mojave Desert, USA. Some studies have used methods such as fishnet grids to detect endangered species (Rominger and Meyer 2019), while others have used individual tree detection (ITD) algorithms. In this context, Baena et al. (2017) used a multiresolution segmentation algorithm in the Lambayeque region in Northern Peru to delineate and detect individual trees, including Algarrobo (Prosopis pallida), Overo (Cordia lutea), and Sapote (Capparis scabrida). Otero et al. (2018) carried out a study in a mangrove forest reserve in Malaysia to detect individual mangrove trees using local maxima algorithms on the canopy height model. However, all of these studies were either carried out for forest floor assessment or in pure stands, or were based solely on UAV data to detect endangered species.

Caspian poplar (Populus caspica Bornm), a unique and threatened species, faces a decline due to its low regeneration rate (Fallah et al. 2011). Its habitats have increasingly been compromised by recent developmental activities and land use changes (Yousefzadeh et al. 2018). This species is native to the Hyrcanian forest, a sanctuary for numerous Arcto-Tertiary relict plants. Remarkably, over 300,000 hectares of these forests are recognized as part of UNESCO’s World Heritage (UNESCO 2019). Worldwide, many species are under increasing threat. To combat and slow down this trend of degradation and potential extinction, there is a pressing need for precise and efficient monitoring techniques (Jimenez Lopez and Mulero-Pazmany 2019; Woellner and Wagner 2019). In response, we have developed a workflow to identify individual trees, specifically distinguishing Caspian poplar, within a diverse, multilayered forest using UAV data. Additionally, we examine two different methods for mapping Caspian poplar on a broader scale. The effectiveness of using Sentinel-2 data in mapping Caspian poplar trees is also evaluated. Finally, we investigate how the accuracy of classifying satellite data changes when a UAV-derived map of Caspian poplar is used as training data.

The aims of this study are: (i) to evaluate the suitability of the SfM technique for generating point clouds over Hyrcanian broadleaf forest stands using UAV-RGB imagery, (ii) to compare the performance of manual and automatic crown delineation on the UAV-RGB imagery, (iii) to investigate the potential of the GEE environment for the classification and identification of specific trees, and (iv) to evaluate UAV data as training data for mapping the endangered Caspian poplar using Sentinel-2 imagery in GEE.

Materials and methods

Three approaches were assessed for Caspian poplar identification based on UAV-RGB data, Sentinel-2 data, and coupling UAV and Sentinel-2 data. An overview of the workflow is given in Fig. 1.

Study area

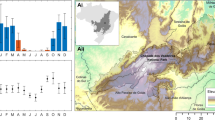

The research locations lie in the Noor old-growth forest (Mazandaran province) and the Safrabasteh forest (Gilan province) in the north of Iran, both of which are coastal plain forests (Fig. 2). The Noor forest covers 3682 ha, approximately 400 ha of which is a promenade, while the remainder is managed as forest reserve. The area is located at an altitude of −20 to +50 m above sea level (datum WGS84), centered at an eastern longitude of \(52^{\circ}02'29.14''\) and a northern latitude of \(36^{\circ}34'43.96''\). Slopes range from 0 to 5%. The climate is humid according to De Martonne’s climate classification (Hadiani 2015), annual rainfall amounts to 997 mm, and the average temperature is 16.4 °C. The forested area consists of different tree species, with common hornbeam (Carpinus betulus), Caspian poplar (Populus caspica), alder (Alnus glutinosa), Caucasian zelkova (Zelkova carpinifolia), Chestnut-leaved oak (Quercus castaneifolia), Common elm (Ulmus minor), Caspian locust (Gleditschia caspica), Persian maple (Acer velutinum), and Tilia (Tilia begonifolia) being the main species (Table 1). The Safrabasteh forest is considered a plain forest and lies below sea level at an altitude of −20 m. It is mainly composed of alder, Caspian poplar, and Caucasian wingnut (Pterocarya fraxinifolia). The average annual rainfall and temperature for this forest are 1228.5 mm and 16.1 °C, respectively.

Location of the study area. a Iran, b Hyrcanian forest in the north of Iran (Bing satellite image as background), c Safrabasteh forest (RGB image of Sentinel-2 was downloaded from https://scihub.copernicus.eu), d Noor plain forest (RGB image of Sentinel-2)

Satellite and UAV data collection and processing

Copernicus Sentinel-2 Level-2A data from the Google Earth Engine catalog (https://earthengine.google.com) covering the Noor and Safrabasteh forests were used for a summer period (all images of the target season in 2020, from 2020-06-01 to 2020-09-30). Following statistics calculation in GEE, the median of the images was used for the subsequent classification steps (the median was calculated over 3 months). In addition, the maskS2clouds function was applied to detect cloud-covered pixels, which were then replaced with non-cloud observations. The original spectral bands with a spatial resolution of 10 and 20 m (i.e., Blue, Green, Red, Red Edge 1, Red Edge 2, Red Edge 3, NIR, and SWIR1 with central wavelengths (µm) of 0.490, 0.560, 0.665, 0.705, 0.740, 0.783, 0.842, and 1.610, respectively), as well as common vegetation indices (i.e., Normalized Difference Vegetation Index (NDVI), Enhanced Vegetation Index (EVI), Normalized Difference Water Index (NDWI), and Soil Adjusted Vegetation Index (SAVI)), were used to derive the spectral characteristics.
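The compositing and index steps described above can be illustrated with a minimal, standard-library sketch. It is not the GEE workflow used in the study: the function names, the sample reflectances, and the soil factor of 0.5 are hypothetical, and the NDWI variant is assumed to be the green/NIR form because the text does not state which definition was applied.

```python
from statistics import median


def median_composite(series, cloud_flags):
    """Per-pixel median over time, skipping cloud-flagged observations.

    series      -- list of dates, each a list of reflectances (one per pixel)
    cloud_flags -- same shape, True where an observation is cloudy
    Pixels without any clear observation are returned as None.
    """
    composite = []
    for i in range(len(series[0])):
        clear = [obs[i] for obs, flags in zip(series, cloud_flags) if not flags[i]]
        composite.append(median(clear) if clear else None)
    return composite


def vegetation_indices(blue, green, red, nir, soil_factor=0.5):
    """NDVI, EVI, NDWI and SAVI for one pixel (reflectance scaled to 0-1).

    Assumes non-zero denominators; soil_factor=0.5 is an assumed value.
    """
    ndvi = (nir - red) / (nir + red)
    evi = 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)
    ndwi = (green - nir) / (green + nir)  # assumed green/NIR form of NDWI
    savi = (1.0 + soil_factor) * (nir - red) / (nir + red + soil_factor)
    return {"NDVI": ndvi, "EVI": evi, "NDWI": ndwi, "SAVI": savi}


# Hypothetical red-band series: three dates, two pixels; pixel 0 is cloudy on date 2.
red_series = [[0.04, 0.05], [0.30, 0.06], [0.06, 0.07]]
flags = [[False, False], [True, False], [False, False]]
composite = median_composite(red_series, flags)

# Hypothetical composite reflectances of a single vegetated pixel.
indices = vegetation_indices(blue=0.03, green=0.06, red=0.05, nir=0.45)
```

Taking the median after masking means that a single bright cloudy observation (0.30 in the example) does not shift the composite value.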

UAV images were collected in five different forest stands (see Table 2) in September 2020 during the leaf-on season. UAV imaging was only carried out in the Noor plain forest. These stands were selected based on visual detection and appropriate distribution, as well as road accessibility (Kuzmin et al. 2021). The UAV images were acquired using a Phantom 4 PRO multirotor platform, which carries a 20-megapixel camera with an 84-degree field of view and a 1/2.3 CMOS sensor that collects red, green, and blue (RGB) data.

The flight height was 100–200 m above ground. The forward lap and side lap of adjacent images were set to 80% and 70%, respectively, resulting in a ground sampling distance (GSD) of 2.5–4.2 cm. Images were taken in JPG format with the camera set to automatic mode (ISO:100, and mechanical shutter speed: 8 − 1/2000) at noon under a clear sky and calm wind conditions. An east-west flight direction was chosen to reduce wind and shadow impacts on images. Original spectral bands (i.e., Red, Green, Blue), as well as spectral indices (Green normalized by Blue, and Red normalized by Blue), were derived from the RGB orthomosaic. The following formulas were applied:

Field measurements

In June 2019, a field survey was conducted in the five respective forest stands to record tree species. The trees’ geographic positions were recorded using a real-time kinematic (RTK) GPS receiver (Gintec G10) with a high horizontal accuracy (± 4.5 cm). Where the GPS signal was too weak to measure tree positions correctly, an azimuth–distance technique was used with a Bosch DLE 50 Professional laser distance meter and a Suunto KB-14/360R hand bearing compass. All trees with crowns identifiable on the orthomosaic were considered for UAV data validation.

Mapping of Caspian Poplar on a small scale based on RGB-UAV imagery

Image processing

The UAV images were processed using Agisoft Metashape Professional v1.6.3 based on the structure from motion (SfM) technique to generate the orthomosaic. Based on overlapping 2D images, the SfM algorithm creates 3D point clouds. It uses key points in each image to match the same points in another set of images over the same area (Otero et al. 2018). The process of creating 3D point cloud data to establish the forest structure is a common step for tree detection and delineation (Fujimoto et al. 2019). A Canopy Height Model (CHM), which contains the necessary information about vegetation height above the terrain surface (Panagiotidis et al. 2016), is the primary indicator of forest structure and hence a necessity for estimating other forest structure attributes (Nevalainen et al. 2017).

To generate the CHM, the Digital Terrain Model (DTM) is subtracted from the Digital Surface Model (DSM). Both models were constructed from a dense point cloud dataset (Birdal et al. 2017; Mohan et al. 2017; Peña et al. 2018). The dense point cloud was automatically classified into terrain points and other (vegetation) points based on their Z values, and the ground points were then used to construct the DTM (Brovkina et al. 2018; Shin et al. 2018). Finally, the CHM was obtained by subtracting the DTM from the DSM (Tanhuanpaa et al. 2016; Birdal et al. 2017; Mohan et al. 2017; Fankhauser et al. 2018; Goldbergs et al. 2018; Peña et al. 2018; Surovy et al. 2018).
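As a minimal illustration of the subtraction step, the sketch below computes a CHM from two small grids. It assumes that DSM and DTM are already co-registered rasters of equal size stored as nested lists, that missing cells are marked with None, and that slightly negative heights are clamped to zero; none of these choices is taken from the study, and the elevation values are hypothetical.

```python
def canopy_height_model(dsm, dtm):
    """Cell-by-cell DSM minus DTM; cells missing in either grid stay None."""
    if len(dsm) != len(dtm) or any(len(a) != len(b) for a, b in zip(dsm, dtm)):
        raise ValueError("DSM and DTM must share the same grid")
    chm = []
    for surface_row, terrain_row in zip(dsm, dtm):
        row = []
        for surface, terrain in zip(surface_row, terrain_row):
            if surface is None or terrain is None:
                row.append(None)
            else:
                # Assumption: small negative differences are noise and set to 0.
                row.append(max(surface - terrain, 0.0))
        chm.append(row)
    return chm


# Hypothetical elevations in metres.
dsm = [[12.0, 30.5], [8.0, 7.9]]
dtm = [[10.0, 10.5], [8.0, 8.0]]
chm = canopy_height_model(dsm, dtm)
```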

The Noor forest is dense, and in some stands there were not enough features that could be identified as tie points. Therefore, a number of images could not be aligned automatically. This was the case for four stands (i.e., stands 1–4 in Table 2); for these stands, only the image mosaic (without the point cloud data) could be used as training data for the Sentinel-2 imagery. Stand 5 contained both forest cover and built-up features, which allowed the point cloud to be generated for this stand. Automatic individual tree detection was therefore performed only for stand 5 to detect the treetops and to delineate their crowns. In this study, the evaluation of tree detection accuracy was performed independently of the trees’ age and height.

Individual tree detection of Caspian Poplar

The local maxima (LM) algorithm (ForestTools package in R software) was used on the UAV-derived CHM (see Miraki et al. 2021 for details). An accuracy assessment was carried out to establish the reliability of the tree detection, i.e., the number and location of trees (point accuracy). A crucial stage in validating the ITD results is checking the agreement between the detected trees and the field-surveyed trees. The following equations were used to evaluate individual tree detection (Goldbergs et al. 2018; Yin and Wang 2016):

\[ r = \frac{TP}{TP + FN} \]

\[ p = \frac{TP}{TP + FP} \]

\[ F\text{-score} = \frac{2 \cdot r \cdot p}{r + p} \]

where r is the tree detection rate (recall), p is the precision of the detected trees, the F-score is the overall accuracy, TP (true positive) is the number of correctly detected trees, FN (false negative) is the number of trees that were not detected (omission error), and FP (false positive) is the number of falsely detected trees (commission error).
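To make the validation step concrete, the following sketch matches detected treetops to surveyed tree positions and derives recall, precision, and F-score from the resulting counts. The matching rule (greedy nearest-neighbour pairing within a distance tolerance), the 2 m tolerance, and all coordinates are assumptions for illustration; the text does not state how detected and surveyed trees were paired.

```python
from math import hypot


def match_trees(detected, reference, max_distance=2.0):
    """Greedy one-to-one matching of detected treetops to surveyed trees.

    Returns (TP, FP, FN). max_distance is a hypothetical tolerance in metres.
    """
    # All candidate pairs within the tolerance, closest pairs first.
    pairs = sorted(
        (hypot(dx - rx, dy - ry), i, j)
        for i, (dx, dy) in enumerate(detected)
        for j, (rx, ry) in enumerate(reference)
        if hypot(dx - rx, dy - ry) <= max_distance
    )
    used_detected, used_reference = set(), set()
    for _, i, j in pairs:
        if i not in used_detected and j not in used_reference:
            used_detected.add(i)
            used_reference.add(j)
    tp = len(used_detected)
    return tp, len(detected) - tp, len(reference) - tp


def detection_scores(tp, fp, fn):
    """Recall, precision and F-score from the matching counts."""
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    total = recall + precision
    f_score = 2 * recall * precision / total if total else 0.0
    return recall, precision, f_score


# Hypothetical positions in metres (local coordinates).
reference = [(0, 0), (10, 0), (20, 0), (30, 0)]
detected = [(0.5, 0.2), (10.4, -0.3), (15, 5), (19.0, 0.5), (40, 40)]
tp, fp, fn = match_trees(detected, reference)
recall, precision, f_score = detection_scores(tp, fp, fn)
```

In this toy example three of four surveyed trees are found and two detections have no counterpart, so omission and commission errors both lower the F-score.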

The training data for Caspian poplar classification were taken from the RGB orthomosaic by selecting pixels inside crowns delineated by the watershed algorithm. Statistical variables (i.e., mean, range, variety, standard deviation, sum, median, majority, and minority) were calculated from the original spectral bands and the spectral indices (NGB, NRB) of the RGB orthomosaic. Finally, a Random Forest (RF) algorithm was applied for classification (randomForest package in R version 3.6.0). RF is an ensemble learning method that uses randomly selected subsets of training samples and variables to generate numerous decision trees. Because of its classification performance and precision, the RF classifier has become prominent in the field of remote sensing (Zhang et al. 2019). To run the RF classifier, we relied on default values (i.e., number of trees (ntree) set to 500), since previous studies confirmed good performance with default settings (Daryaei et al. 2020; Immitzer et al. 2012, 2016). The tree species in our study area were grouped into two classes, with one class containing Populus caspica (Caspian poplar) and the other class containing Parrotia persica (Persian ironwood), Ulmus minor (Common elm), and Quercus castaneifolia (Chestnut-leaved oak). The tree identification accuracy was assessed using 10-fold cross-validation by computing the producer’s, user’s, and overall accuracy (OA), as well as the Kappa coefficient. In addition, crowns were delineated manually so that the results of automatic and manual crown delineation could be compared. To do so, individual treetops were marked manually and buffered by one meter, and descriptive statistics were extracted for each tree from the orthomosaics. Following the statistics calculation, the RF classification was performed. Finally, to determine species misclassification, as well as the accuracy of Caspian poplar identification against all other species, classification was also performed for all species individually.
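The per-crown statistical variables listed above can be sketched as follows for the pixel values of one band inside one crown. This is an illustrative stand-in rather than the tool used in the study: the function name and pixel values are hypothetical, "variety" is assumed to mean the number of distinct values, and the population standard deviation is an assumed choice.

```python
from collections import Counter
from statistics import mean, median, pstdev


def crown_statistics(values):
    """Summary statistics of one band's pixel values within one crown.

    Ties for majority/minority are resolved by order of appearance.
    """
    counts = Counter(values)
    ordered = counts.most_common()  # most frequent value first
    return {
        "mean": mean(values),
        "range": max(values) - min(values),
        "variety": len(counts),      # number of distinct values (assumed meaning)
        "std": pstdev(values),       # population standard deviation (assumption)
        "sum": sum(values),
        "median": median(values),
        "majority": ordered[0][0],   # most frequent value
        "minority": ordered[-1][0],  # least frequent value
    }


# Hypothetical red-band digital numbers inside one delineated crown.
stats = crown_statistics([120, 120, 120, 130, 130, 140])
```

Each crown then contributes one feature vector (eight statistics per band or index) to the classifier, regardless of how many pixels it contains.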

Mapping of Caspian Poplar at large scale based on Sentinel-2 imagery in GEE

Large-scale tree species classification was performed using a Random Forest (RF) classifier. In the Noor forest, we collected a total of 278 sample areas over Caspian poplar (84), other trees (177), and built-up areas (17) from Sentinel-2 images in the GEE environment. Classification accuracy was assessed based on independent validation sample areas, i.e., 78 sample areas for Caspian poplar, 100 sample areas for other trees, and 25 sample areas for the built-up class. In the Safrabasteh forest, a total of 111 sample areas for Caspian poplar (31), other trees (69), and non-tree areas (11) were collected. To assess the classification accuracy, 25 sample areas of Caspian poplar, 46 sample areas of other trees, and 13 sample areas of the non-tree class were collected.

Mapping of Caspian poplar at large scale based on UAV data as training data for Sentinel-2 imagery in GEE

The UAV orthophotos of all forest stands were segmented in the eCognition Developer software using a multi-resolution image segmentation technique (Blaschke 2010). The scale, shape, and compactness parameters of the segmentation were determined by trial and error and by visual interpretation of the results. The segments were labeled as one of three classes, i.e., Caspian poplar, other trees, and built-up areas. The segmentation and its labeling were then assessed visually, with particular attention to the segment boundaries between Caspian poplar and other trees, and any obvious misclassifications were corrected manually. The UAV segmentation results were then upscaled to the 10 m spatial resolution of Sentinel-2 (Kattenborn et al. 2019; Daryaei et al. 2020). When upscaling the high-resolution UAV data to the Sentinel-2 pixel size, the class label with the highest abundance was selected as the final label of the upscaled pixel using the resampling technique (for details, see Daryaei et al. 2020). The final map was used as training data for the Sentinel-2 image classifications in GEE. The training data generated by upscaling UAV data for Sentinel-2 image classification are given in Table 2 for the Noor forest. Classification accuracy was assessed based on independent validation sample areas (i.e., 78 sample areas of Caspian poplar, 100 sample areas of other trees, and 25 built-up sample areas). Some patches of Caspian poplar trees in Copernicus Sentinel-2 imagery and UAV orthophotos are shown in Fig. 3.
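The majority-label upscaling can be sketched on a toy grid. The sketch assumes that the fine label raster is aligned with the coarse pixel grid and that each coarse pixel covers an integer block of fine pixels; the block size of 2 and the labels are hypothetical (the real ratio between UAV and Sentinel-2 pixel sizes is far larger).

```python
from collections import Counter


def upscale_majority(labels, factor):
    """Aggregate a fine label grid into coarse cells of factor x factor pixels.

    Each coarse cell receives its most abundant label; ties are resolved
    by order of appearance within the block.
    """
    rows, cols = len(labels), len(labels[0])
    if rows % factor or cols % factor:
        raise ValueError("grid size must be a multiple of factor")
    coarse = []
    for r0 in range(0, rows, factor):
        coarse_row = []
        for c0 in range(0, cols, factor):
            block = [
                labels[r][c]
                for r in range(r0, r0 + factor)
                for c in range(c0, c0 + factor)
            ]
            coarse_row.append(Counter(block).most_common(1)[0][0])
        coarse.append(coarse_row)
    return coarse


# Hypothetical 4 x 4 label grid upscaled to 2 x 2.
fine = [
    ["poplar", "poplar", "other", "other"],
    ["poplar", "other", "other", "built"],
    ["other", "other", "built", "built"],
    ["other", "poplar", "built", "other"],
]
coarse = upscale_majority(fine, 2)
```

A single poplar pixel inside a block dominated by other trees (lower left block) is lost in the upscaled map, which is one way minority classes can become underrepresented in the training data.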

Results

Caspian Poplar detection on a small scale based on RGB-UAV imagery

The highest overall accuracy of the UAV orthophoto segmentation (F-score = 0.69) was obtained for a Gaussian-filtered CHM with sigma 1 and a pixel size of 50 cm. Recall and precision were 0.77 and 0.6, respectively (Fig. 4). The classification accuracies for the two classes (i.e., Caspian poplar, and other trees) based on automatic and manual tree crown delineation are presented in Table 3.

The accuracy metrics of the classification for all four classes (i.e., Caspian poplar, Persian ironwood, Common elm, and Chestnut-leaved oak) based on automatic and manual tree crown delineation are shown in Table 4. Tree species classification using the delineated crown segments on the CHM showed moderate results (OA = 56%, Kappa = 0.39, with F-score = 69%). Common elm was detected with the highest producer’s (67%) and user’s accuracy (70%), followed by Caspian poplar (i.e., producer’s accuracy of 58% and user’s accuracy of 53%). The Persian ironwood showed the lowest producer’s (38%) and user’s (36%) accuracy. The highest misclassification for Caspian poplar was Chestnut-leaved oak, with six Caspian poplar trees being classified as Chestnut-leaved oak. However, when the tree crowns were determined manually, Caspian poplar resulted in the highest accuracy among all species (97% producer’s and 94% user’s accuracy). Namely, out of 33 Caspian poplar trees, only one tree was identified as Chestnut-leaved oak. In this approach, too, the Persian ironwood identification showed the lowest accuracy. Overall, tree classification using the manually delineated crowns showed good results (OA = 83%, Kappa = 0.74).

Mapping of Caspian Poplar trees at large scale based on Sentinel-2 imagery in GEE

The accuracy of the RF model for a 3-class pixel-based classification (Caspian poplar, other trees, and a built-up class in the Noor forest or a non-tree class in the Safrabasteh forest) in the GEE environment is presented in Table 5. For the Sentinel-2 dataset, the classification reached an OA and Kappa of 74% and 0.57 in the Noor forest, and 80% and 0.61 in the Safrabasteh forest, respectively. For the Noor forest, all sample areas of the built-up class were correctly classified, so the user’s and producer’s accuracy was 100%. From the 78 test sample areas for Caspian poplar, 41 sample areas were correctly identified and 37 sample areas were identified as belonging to the “other trees” class. There was misclassification for the “other trees” class, too. Specifically, from 100 test sample areas, 86 sample areas were correctly identified, but 14 sample areas were mistaken for Caspian poplar. Caspian poplar was detected with a better producer’s accuracy (69%) in the Safrabasteh forest than in the Noor forest. The final classification maps for Caspian poplar detection for both forests are shown in Fig. 5.
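The accuracy measures can be recomputed from the counts given in the text for the Noor forest. The sketch below is a generic confusion-matrix calculation, not the code used in the study; the matrix layout (rows are reference classes, columns are predicted classes) and the function name are assumptions, and the zero entries follow from the statement that all built-up sample areas were classified correctly with 100% user’s accuracy.

```python
def accuracy_metrics(matrix):
    """Overall accuracy, Kappa, producer's and user's accuracies.

    matrix[i][j] is the number of reference samples of class i that were
    predicted as class j. Assumes every class has at least one reference
    and one predicted sample.
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    overall = sum(matrix[i][i] for i in range(n)) / total
    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (overall - expected) / (1 - expected)
    producers = [matrix[i][i] / row_totals[i] for i in range(n)]
    users = [matrix[j][j] / col_totals[j] for j in range(n)]
    return overall, kappa, producers, users


# Noor forest test samples as described in the text.
noor = [
    [41, 37, 0],  # Caspian poplar (78 reference areas)
    [14, 86, 0],  # other trees (100 reference areas)
    [0, 0, 25],   # built-up (25 reference areas)
]
overall, kappa, producers, users = accuracy_metrics(noor)
```

With these counts the calculation gives an overall accuracy of about 0.75, a Kappa of about 0.57, and a producer’s accuracy of about 0.53 for Caspian poplar, in line with the reported values up to rounding.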

Mapping of Caspian poplar trees at large scale based on UAV data as training data for Sentinel-2 imagery in GEE

In this approach, following the conversion of the UAV orthophoto imagery into training data for classifying Sentinel-2 images, a total of 1645 training areas including 396 training areas of Caspian poplar, 1153 training areas of other trees, and 96 built-up training areas were considered. As already mentioned in Sect. 3.2, there is a noticeable misclassification for the Caspian poplar class. Table 6 shows that for the Caspian poplar tree class with 78 test sample areas, 43 sample areas were correctly identified, and 35 sample areas were classified as other trees. From the 100 test sample areas for other trees, 99 sample areas were correctly identified and one sample area was identified as Caspian poplar class. All areas of the built-up class were correctly classified. Table 6 displays that the RF classification overall accuracy using UAV imagery as training data only slightly differs from the previous approach (OA = 82%, Kappa = 0.68). The final classification map is shown in Fig. 6.

a Noor forest (RGB image of Sentinel-2 was obtained from https://scihub.copernicus.eu) with this study’s five UAV tree stands (yellow squares), b Image of tree stands in the UAV data from Phantom 4 PRO, c Result of semantic segmentation of the UAV images of the five tree stands, d Classification map for Caspian poplar mapping from Sentinel-2 imagery in GEE using UAV imagery as training data

Discussion

In this study, we assessed the performance of UAV and satellite image classification for detecting and discriminating a specific tree (Caspian poplar) from other tree species in the mixed broadleaf forest. To do so, we classified multispectral Sentinel-2 images in the GEE environment. Also, point clouds and orthomosaics acquired by a UAV carrying an RGB camera were used for the Caspian poplar identification.

Detection of Caspian Poplar trees on a small scale based on RGB-UAV imagery

The accuracy of automatic ITD for species identification depends on the accuracies of tree crown delineation and species classification in the study area (Deng et al. 2016). In this research, nearly 69% of the trees in our reference tree stands were identified, and an overall classification accuracy of 56% was obtained with the RF classification. This accuracy is the combined result of local maxima ITD and species discrimination. The local maxima approach identifies individual canopy peaks using a suitable search window size. For small search window sizes, a higher number of incorrect peaks will be detected (false positives; errors of commission); in contrast, large window sizes cause a larger number of correct peaks to be missed (false negatives; errors of omission) (Popescu et al. 2003; Goldbergs et al. 2018). Consequently, we used a variable window size algorithm in which the window size varies according to the height of its central cell.
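The idea of a height-dependent search window can be sketched in a few lines. This is a simplified illustration, not the ForestTools implementation used in the study: it uses a square window instead of a circular one, expresses the window radius in cells, and the linear window function, the 2 m minimum height, and the CHM values are hypothetical.

```python
def detect_treetops(chm, window_radius, min_height=2.0):
    """Return (row, col, height) for cells that are strict local maxima.

    window_radius -- function mapping a cell height to a search radius in
                     cells (hypothetical; the window grows with height)
    A cell is a treetop only if it is higher than every other cell in its
    window, so flat plateaus produce no detection.
    """
    rows, cols = len(chm), len(chm[0])
    tops = []
    for r in range(rows):
        for c in range(cols):
            height = chm[r][c]
            if height < min_height:
                continue
            radius = max(1, int(round(window_radius(height))))
            neighbours = [
                chm[rr][cc]
                for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                for cc in range(max(0, c - radius), min(cols, c + radius + 1))
                if (rr, cc) != (r, c)
            ]
            if all(height > value for value in neighbours):
                tops.append((r, c, height))
    return tops


# Hypothetical CHM in metres with one small and one tall tree.
chm = [
    [3, 4, 5, 4, 3],
    [4, 12, 6, 5, 4],
    [5, 6, 7, 9, 5],
    [4, 5, 9, 25, 8],
    [3, 4, 5, 8, 6],
]
tops = detect_treetops(chm, window_radius=lambda h: 0.06 * h + 0.5)
```

The 12 m tree is tested against a 3 x 3 window and the 25 m tree against a 5 x 5 window, so side peaks within the larger crown (the 9 m cells) are suppressed while the small tree is still detected.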

The UAV imagery pixel size also affects the final accuracy of ITD. Very high and very low spatial resolutions decrease tree detection accuracy (Goldbergs et al. 2018), and an optimal pixel size has to be identified for different stands. Further, growing segments from the detected peaks to delineate the crowns tends to produce excess segments, primarily because several peaks occur within individual tree crowns (Miraki et al. 2021). CHM smoothing lowers the number of produced segments (Tanhuanpaa et al. 2016). In our study, the overall accuracy increased when stronger smoothing was applied, implying that suppression of within-tree peaks positively affects detection accuracy. The ITD overall accuracy in our study was lower than in other studies (Mohan et al. 2017; Fujimoto et al. 2019; Harikumar et al. 2019). An important reason lies in the differences between the studied stands. For instance, for conifer species in a rather sparse single-story forest, nearly all the trees could be detected, whereas in a dense forest, the detection rate was found to be considerably lower (Tanhuanpaa et al. 2016).

In contrast to automatic crown delineation, manual crown delineation on the orthomosaic resulted in a higher overall accuracy of tree species classification. When the tree canopies were manually delineated, the overall accuracy and Kappa reached 83% and 0.72, respectively. This accuracy is a promising result for detecting Caspian poplar trees, hinting at differences in the spectral signature of this species compared to the other three species (Parrotia persica, Ulmus minor, and Quercus castaneifolia). Classifying all four species simultaneously showed that Caspian poplar can be discriminated with the highest producer’s and user’s accuracy (producer’s accuracy of 97% and user’s accuracy of 94%). Generally, the effectiveness of species detection depends on a significant spectral difference between target species (Kuzmin et al. 2017; Sadeghi and Sohrabi 2018; Rominger and Meyer 2019). So far, only a few studies have been carried out to identify or detect endangered or endemic species from drone imagery (Rominger and Meyer 2019; Sun et al. 2018; Van Auken and Taylor 2017). Their results mostly depended on the spectral differences among a target species and the rest of the plants.

This approach aimed to investigate whether high-resolution RGB imagery can be used to detect individual trees and identify a specific species, and thus to classify Caspian poplar as an endangered tree. Overall, the performance was notable given that we only used cost-effective UAV-based RGB images and publicly available software packages. In contrast, the majority of previous studies have used expensive devices such as multi- or hyperspectral sensors to improve performance (Onishi and Ise 2018). Our results suggest that the photogrammetric products obtained from UAV-RGB imagery make it possible to classify specific tree species over the studied sites. This is beneficial for management purposes, as it allows protective activities to be prioritized more effectively with the limited resources available. It is important to note, however, that the results are not necessarily generalizable to other sites.

Mapping of Caspian Poplar trees at large scale based on Sentinel-2 imagery in GEE

The detection of Caspian poplar trees using Sentinel-2 imagery was carried out with acceptable accuracy. The RF model in the GEE environment for the Caspian poplar, other trees, and built-up classes resulted in an OA of 74% and a Kappa of 0.57. The producer’s accuracies were 53% and 86% for the Caspian poplar class and other trees, respectively. Misclassifications between these two classes were high, which can be explained by the similarity of the spectral signatures of Caspian poplar and other trees, except for the reflectance magnitudes between 800 and 1000 nm, as can be seen in Fig. 7. In addition, visual examination revealed that increased misclassifications occurred between Caspian poplar and hornbeam trees. This study does not take into account the phenology of the vegetation, which could help to better discriminate tree species; the use of a longer time series would therefore be worth exploring. The higher accuracy of Caspian poplar detection (producer’s accuracy of 69%) in the Safrabasteh forest is assumed to be linked to the lower species diversity in this forest. Besides, the most abundant companion species there were alder trees, which have a very different spectral signature compared to Caspian poplar.

One reason for not achieving higher classification accuracies could be the difficulty of identifying spectrally pure training pixels, which strongly affects classification accuracy (Poblete-Echeverría et al. 2017). Using spectrally pure pixels (i.e., pixels representing a single class only) for training leads to higher accuracy in pixel-based classification. However, finding pixels made up entirely of a single tree species is difficult (Fassnacht et al. 2016). Owing to the varied and intrinsically mixed nature of broadleaf forests, it was not always possible to collect pure sample locations consisting only of Caspian poplar trees for our investigation. To cope with this, we selected training pixels from patches dominated by a single species (> 60% cover of a single species type) (Çağlayan et al. 2020).
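The > 60% cover rule can be expressed as a simple filter on per-pixel cover fractions. The sketch below is only an illustration of that rule under stated assumptions: the cover fractions, the species list, and the helper `select_training_pixels` are hypothetical, and the study does not describe how cover was quantified in practice.

```python
import numpy as np

# Hypothetical cover fractions: one row per candidate pixel,
# one column per species (values are illustrative only)
species = ["caspian_poplar", "hornbeam", "alder"]
cover = np.array([
    [0.85, 0.10, 0.05],
    [0.55, 0.40, 0.05],
    [0.20, 0.70, 0.10],
    [0.34, 0.33, 0.33],
])


def select_training_pixels(cover, min_cover=0.60):
    """Keep pixels whose dominant species exceeds min_cover.

    Returns a boolean mask of retained pixels and the index of the
    dominant species for every pixel.
    """
    cover = np.asarray(cover, dtype=float)
    dominant = cover.argmax(axis=1)
    dominant_cover = cover.max(axis=1)
    keep = dominant_cover > min_cover
    return keep, dominant


keep, dominant = select_training_pixels(cover)
training_labels = [species[i] for i in dominant[keep]]
print(keep, training_labels)
```

Raising `min_cover` yields purer training pixels but fewer of them, which is the trade-off faced in mixed broadleaf stands.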

Another factor affecting classification accuracy could be the spatial resolution of the satellite data (10 m), which may not be sufficient to discriminate certain tree species. Although higher spatial resolution can help to produce more accurate classification results at the species level, it requires significant processing power and large storage capacity. Mapping endangered species with high accuracy often proves time-consuming, and timeliness is therefore a critical factor in monitoring projects. To this end, GEE provides a cloud computing platform for Earth observation data analysis.

Mapping of Caspian poplar trees on a large scale based on UAV data as training data for Sentinel-2 imagery in GEE

As the spatial resolution of the UAV imagery is very high, it can easily and rapidly be labeled by visual interpretation to obtain a large and accurate reference data set for training the Sentinel-2 classification model. The use of UAV-based RGB data as a reference for training Sentinel-2-based classification models increased the overall accuracy from 74% to 82%. Upscaling the UAV datasets to the Sentinel-2 spatial resolution provided a larger number of Sentinel-2 pixels to train the classification model than the originally collected training areas. In our study, the increase in OA and Kappa was moderate when using UAV-based training data (around 8 percentage points in the case of OA). A reason for this could be the imbalance of training data among classes. Here, five forest stands in different forest types were selected for UAV data acquisition based on road accessibility and proximity to UAV landing spots (see Table 2). Stand 2 contained only tree species of the “other trees” class, and Caspian poplar trees were very few in stands 3, 4, and 5 compared to other trees. The low producer’s accuracy of Caspian poplar trees (0.55) is assumed to be a consequence thereof, as imbalance in training data reduces accuracy (Nevalainen et al. 2017), and Caspian poplar trees were frequently classified as belonging to the “other trees” class.
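One way to picture the upscaling step is as a block aggregation of a fine-resolution label raster onto a coarser grid. The sketch below assumes a majority rule per coarse cell; this rule, the function `upscale_labels`, and the tiny 4 × 4 raster are assumptions made for illustration, since the aggregation procedure is not detailed here. In practice, one 10 m Sentinel-2 pixel would cover many more UAV pixels than the 2 × 2 blocks used in this toy example.

```python
import numpy as np


def upscale_labels(labels, block, n_classes):
    """Aggregate a fine label raster into cells of block x block pixels.

    Returns, for every coarse cell, the majority class and the
    fraction of fine pixels belonging to that class.
    """
    labels = np.asarray(labels)
    rows, cols = labels.shape
    if rows % block or cols % block:
        raise ValueError("raster shape must be a multiple of the block size")

    # Axes: (coarse row, row within block, coarse column, column within block)
    tiles = labels.reshape(rows // block, block, cols // block, block)
    # Count the fine pixels of each class inside every coarse cell
    counts = np.stack(
        [(tiles == c).sum(axis=(1, 3)) for c in range(n_classes)], axis=-1
    )
    majority = counts.argmax(axis=-1)
    fraction = counts.max(axis=-1) / (block * block)
    return majority, fraction


# Hypothetical UAV label raster: 0 = Caspian poplar, 1 = other trees, 2 = built-up
fine_labels = np.array([
    [0, 0, 1, 1],
    [0, 1, 1, 1],
    [1, 1, 2, 2],
    [1, 1, 2, 0],
])

majority, fraction = upscale_labels(fine_labels, block=2, n_classes=3)
# Number of coarse training cells per class, to check class balance
class_counts = np.bincount(majority.ravel(), minlength=3)
print(majority, fraction, class_counts)
```

The per-class counts at the end give a quick check on training data balance, and the `fraction` output could be thresholded to discard heavily mixed cells.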

Overall, our results are in line with previous studies combining satellite and UAV data to take advantage of very high-resolution UAV data at a local scale and achieve results at larger scales. Daryaei et al. (2020), Dash et al. (2018), and Kattenborn et al. (2019) emphasize the usefulness of using UAV-based data in combination with satellite data in terms of time and cost performance, as well as improving classification accuracy. Our study is the first to investigate the ability of a lightweight and inexpensive UAV carrying a simple RGB camera to improve Sentinel-2 classification results in detecting endangered tree species in a dense broadleaved forest.

Conclusion

In this study, we framed the existing challenges in using UAV and satellite data for tree species detection and assessed in detail how to address them with recent innovations in optical sensor technologies and image analysis algorithms. Our results showed that Copernicus Sentinel-2 satellite imagery, with UAV imagery as training data, can detect Caspian poplar trees with acceptable accuracy (OA = 82%) at a large scale. GEE, a cloud-based computational platform for geographical analysis of global-scale Earth observation data, has been widely employed; it makes customized Sentinel-based classification more accessible, provides an enormous pool of satellite imagery, and offers easy access to advanced algorithms. At the stand scale, the use of UAV images allowed the identification of target trees with very high accuracy when crowns were delineated manually: a producer’s accuracy of 97% was achieved for the identification of Caspian poplar trees, whereas automatic crown delineation yielded 58%. The results of our study highlight the potential of UAV-based photogrammetric point clouds for (i) individual tree monitoring and (ii) fine-scale habitat assessment using high-resolution data to help identify endangered or locally extinct tree species. Overall, depending on the purpose and the available facilities, satellite and UAV data can be successfully used to identify a specific tree species. It is noteworthy that differences in the spectral signature of Caspian poplar (e.g., in the NIR region) distinguish this species from others and are thus an important factor in separating it.

Data availability

The data used in this study are available for researchers upon request to the corresponding author for reasonable use in research.

References

Asner G, Martin R, Keith L, Heller W, Hughes M, Vaughn N et al (2018) A spectral mapping signature for the Rapid Ohia Death (ROD) pathogen in Hawaiian forests. Remote Sens 10(3):404.

Baena S, Moat J, Whaley O, Boyd DS (2017) Identifying species from the air: UAVs and the very high resolution challenge for plant conservation. PLoS ONE 12(11):e0188714.

Birdal AC, Avdan U, Türk T (2017) Estimating tree heights with images from an unmanned aerial vehicle. Geomatics Nat Hazards Risk 8(2):1144–1156.

Blaschke T (2010) Object based image analysis for remote sensing. ISPRS J Photogrammetry Remote Sens 65(1):2–16.

Brovkina O, Cienciala E, Surový P, Janata P, Group F (2018) Geo-spatial Information Science Unmanned aerial vehicles (UAV) for assessment of qualitative classification of Norway spruce in temperate forest stands. Geo-spatial Inform Sci 5020:1–9.

Çağlayan SD, Leloglu UM, Ginzler C, Psomas A, Zeydanlı US, Bilgin CC, Waser LT (2020) Species level classification of Mediterranean sparse forests-maquis formations using Sentinel-2 imagery. Geocarto Int. 37(6):1587–1606

Daryaei A, Sohrabi H, Atzberger C, Immitzer M (2020) Fine-scale detection of vegetation in semi-arid mountainous areas with focus on riparian landscapes using Sentinel-2 and UAV data. Comput Electron Agric 177:105686.

Dash JP, Pearse GD, Watt MS (2018) UAV multispectral imagery can complement satellite data for monitoring forest health. Remote Sens 10(8):1216.

Deng S, Katoh M, Yu X, Hyyppä J, Gao T (2016) Comparison of tree species classifications at the individual tree level by combining ALS data and RGB images using different algorithms. Remote Sens 8(12):1034

Fallah H, Tabari M, Azadfar D, Jalali SGA (2011) Distribution and Ecological Features Endangered Species Populus Caspica Bornm in the Hyrcanian Forest. Natural Ecosystems of Iran, 2, 41–53. https://www.sid.ir/en/journal/ViewPaper.aspx?ID=259824. Accessed 19 January 2020

Fang P, Zhang X, Wei P, Wang Y, Zhang H, Liu F, Zhao J (2020) The classification performance and mechanism of machine learning algorithms in winter wheat mapping using Sentinel-2 10 m resolution imagery. Appl Sci 10(15):5075.

Fankhauser KE, Strigul NS, Gatziolis D (2018) Augmentation of Traditional Forest Inventory and Airborne Laser scanning with unmanned Aerial systems and Photogrammetry for Forest Monitoring. Remote Sens 10(10):1–17.

Fassnacht FE, Latifi H, Stereńczak K, Modzelewska A, Lefsky M, Waser LT et al (2016) Review of studies on tree species classification from remotely sensed data. Remote Sens Environ 186:64–87.

Fujimoto A, Haga C, Matsui T, Machimura T, Hayashi K, Sugita S, Takagi H (2019) An end to end process development for UAV-SfM Based Forest Monitoring: Individual Tree Detection, species classification and Carbon Dynamics Simulation. Forests 10(8):680.

Gao P, Cheng C, Song C (2019) Satellite remote sensing for biodiversity conservation: exemplary practices and lessons learned. Landscape Ecol 34(8):2045–2047.

Goldbergs G, Maier S, Levick S, Edwards A (2018) Efficiency of individual tree detection approaches based on light-weight and low-cost UAS imagery in Australian savannas. Remote Sens 10(2):161.

Guerra-Hernández J, González-Ferreiro E, Monleón VJ, Faias SP, Tomé M, Díaz-Varela RA et al (2017) Use of Multi-temporal UAV-Derived Imagery for estimating individual Tree Growth in Pinus pinea stands. Forests 8(8):300.

Hadiani MO (2015) Uncertainty of Climate Change and Synoptic parameters and modeling the trends. Environ Resour Res 3(2):179–190

Harikumar A, Bovolo F, Bruzzone L (2019) A local projection-based Approach to Individual Tree Detection and 3-D Crown Delineation in Multistoried Coniferous forests using high-density Airborne LiDAR Data. IEEE Trans Geosci Remote Sens 57(2):1168–1182.

Immitzer M, Atzberger C, Koukal T (2012) Tree Species Classification with Random Forest Using Very High Spatial Resolution 8-Band WorldView-2 Satellite Data. Remote Sensing. 4(9):2661–2693

Immitzer M, Vuolo F, Atzberger C (2016) First Experience with Sentinel-2 Data for Crop and Tree Species Classifications in Central Europe. Remote Sensing 8(3):166

Ivosevic B, Han YG, Cho Y, Kwon O (2015) The use of conservation drones in ecology and wildlife research. J Ecol Environ 38(1):113–118.

Jimenez Lopez J, Mulero-Pazmany M (2019) Drones for conservation in protected areas: Present and Future. Drones 3(1):10.

Kattenborn T, Lopatin J, Förster M, Braun AC, Fassnacht FE (2019) UAV data as alternative to field sampling to map woody invasive species based on combined Sentinel-1 and Sentinel-2 data. Remote Sens Environ 227(February):61–73.

Kuzmin A, Korhonen L, Manninen T, Maltamo M (2017) Automatic segment-level Tree species Recognition using high Resolution Aerial Winter Imagery. Eur J Remote Sens 7254:238–259.

Kuzmin A, Korhonen L, Kivinen S, Hurskainen P, Korpelainen P, Tanhuanpää T et al (2021) Detection of European Aspen (Populus tremula L.) based on an unmanned Aerial Vehicle Approach in Boreal forests. Remote Sens 13(9):1723.

Kwok R (2018) Ecology’s remote-sensing revolution. Nature 556(7699):137–138

Leduc M-B, Knudby AJ (2018) Mapping wild leek through the forest canopy using a UAV. Remote Sens 10(2):70.

Lee JSH, Wich S, Widayati A, Koh LP (2016) Detecting industrial oil palm plantations on landsat images with Google Earth Engine. Remote Sens Applications: Soc Environ 4:219–224.

Miraki M, Sohrabi H, Fatehi P, Kneubuehler M (2021) Individual tree crown delineation from high-resolution UAV images in broadleaf forest. Ecol Inf 61:101207.

Mohan M, Silva CA, Klauberg C, Jat P, Catts G, Cardil A et al (2017) Individual tree detection from unmanned aerial vehicle (UAV) Derived Canopy Height Model in an Open Canopy mixed Conifer Forest. Forests 8(9):1–17.

Muraoka H, Koizumi H (2009) Satellite Ecology (SATECO)—linking ecology, remote sensing and micrometeorology, from plot to regional scale, for the study of ecosystem structure and function. J Plant Res 122(1):3–20.

Nevalainen O, Honkavaara E, Tuominen S, Viljanen N, Hakala T, Yu X et al (2017) Individual Tree Detection and classification with UAV-Based Photogrammetric Point clouds and Hyperspectral Imaging. Remote Sens 9(3):185.

Onishi M, Ise T (2018) Automatic classification of trees using a UAV onboard camera and deep learning. In CoRR (p. abs/1804.10390 ). http://arxiv.org/abs/1804.10390. Accessed 31 August 2019

Otero V, Van De Kerchove R, Satyanarayana B, Martínez-Espinosa C, Fisol MA, Bin, Ibrahim MR, Bin et al (2018) Managing mangrove forests from the sky: forest inventory using field data and unmanned aerial vehicle (UAV) imagery in the Matang Mangrove Forest Reserve, peninsular Malaysia. For Ecol Manag 411:35–45.

Panagiotidis D, Abdollahnejad A, Surový P, Chiteculo V (2016) Determining tree height and crown diameter from high-resolution UAV imagery. Int J Remote Sens 38(8–10):2392–2410.

Pazúr R, Price B, Atkinson PM (2021) Fine temporal resolution satellite sensors with global coverage: an opportunity for landscape ecologists. Landscape Ecol 36(8):2199–2213.

Peña J, de Castro I, Torres-Sánchez A, Andújar J, Martín DS, Dorado C, J., et al (2018) Estimating tree height and biomass of a poplar plantation with image-based UAV technology. AIMS Agric Food 3(3):313–323.

Poblete-Echeverría C, Olmedo G, Ingram B, Bardeen M (2017) Detection and segmentation of Vine Canopy in Ultra-high spatial resolution RGB Imagery obtained from unmanned aerial vehicle (UAV): a Case Study in a commercial vineyard. Remote Sens 9(3):268.

Popescu SC, Wynne RH, Nelson RF (2003) Estimating plot-level tree heights with lidar: local filtering with a canopy-height based variable window size. Comput Electron Agric 37(1–3):71–95.

Praticò S, Solano F, Fazio S, Di, Modica G (2021) Machine Learning Classification of Mediterranean Forest Habitats in Google Earth Engine based on Seasonal Sentinel-2 Time-Series and Input Image Composition Optimisation. Remote Sens 13(4):586.

Rees A, Avens L, Ballorain K, Bevan E, Broderick A, Carthy R et al (2018) The potential of unmanned aerial systems for sea turtle research and conservation: a review and future directions. Endanger Species Res 35:81–100.

Rominger K, Meyer S (2019) Application of UAV-Based Methodology for Census of an Endangered Plant Species in a Fragile Habitat. Remote Sens 11(6):719.

Sadeghi S, Sohrabi H (2018) Tree species discrimination using RGB vegetation indices derived from UAV images. In UAV Small Unmanned Aerial system for environmental research-6th Edition (p. 5)

Shin P, Sankey T, Moore M, Thode A (2018) Evaluating unmanned aerial vehicle images for estimating Forest Canopy fuels in a Ponderosa Pine stand. Remote Sens 10(8):1266.

Sun Y, Yi S, Hou F (2018) Unmanned aerial vehicle methods makes species composition monitoring easier in grasslands. Ecol Ind 95:825–830.

Surovy P, Almeida Ribeiro N, Panagiotidis D (2018) Estimation of positions and heights from UAV-sensed imagery in tree plantations in agrosilvopastoral systems. Int J Remote Sens 39(14):4786–4800.

Tanhuanpaa T, Saarinen N, Kankare V, Nurminen K, Vastaranta M, Honkavaara E et al (2016) Evaluating the performance of high-Altitude Aerial Image-based Digital Surface models in detecting individual Tree crowns in mature boreal forests. Forests 7(12):143.

Tassi A, Vizzari M (2020) Object-oriented LULC classification in Google Earth Engine combining SNIC, GLCM, and machine learning algorithms. Remote Sens 12(22):3776.

UNESCO (2019) World Heritage List, Hyrcanian forests. Description is available under license CC-BY-SA IGO 3.0. https://whc.unesco.org/en/list/1584/

Van Auken OW, Taylor DL (2017) Using a drone (UAV) to determine the Acer grandidentatum (bigtooth maple) density in a Relic, isolated community. Phytologia 99(3):208–220

Woellner R, Wagner TC (2019) Saving species, time and money: application of unmanned aerial vehicles (UAVs) for monitoring of an endangered alpine river specialist in a small nature reserve. Biol Conserv 233:162–175.

Yin D, Wang L (2016) How to assess the accuracy of the individual tree-based forest inventory derived from remotely sensed data: a review. Int J Remote Sens 37(19):4521–4553.

Yousefzadeh H, Hosseinzadeh A, Effat C, Badbar M, Kozlowski G (2018) Phylogenetic relationship and genetic differentiation of Populus Caspica and Populus alba using cpDNA and ITS noncoding sequences. J Forestry Res. https://doi.org/10.1007/s11676-018-0785-4

Zhang M, Gong P, Qi S, Liu C, ** bamboo with regional phenological characteristics derived from dense landsat time series using Google Earth Engine. Int J Remote Sens 40(24):9541–9555.

Zhang X, Long T, He G, Guo Y, Yin R, Zhang Z et al (2020) Rapid generation of global forest cover map using Landsat based on the forest ecological zones. J Appl Remote Sens 14(2):022211.

Author information

Contributions

MM and HS wrote the main manuscript text and PF and MK read and edited the manuscript. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Miraki, M., Sohrabi, H., Fatehi, P. et al. Coupling UAV and satellite data for tree species identification to map the distribution of Caspian poplar. Landsc Ecol 39, 30 (2024). https://doi.org/10.1007/s10980-024-01846-8

DOI: https://doi.org/10.1007/s10980-024-01846-8