Abstract

Background

Chronotropic incompetence (CI) is common among elderly cardiac resynchronization therapy pacemaker (CRT-P) patients on optimal medical therapy. This study aimed to evaluate the impact of optimized rate-adaptive pacing utilizing the minute ventilation (MV) sensor on exercise tolerance.

Methods

In a prospective, multicenter study, older patients (median age 76 years) with a guideline-based indication for CRT were evaluated following CRT-P implantation. Unless CI requiring rate-responsive pacing had already been documented, the device was programmed to DDD at pre-discharge. At 1 month, a 6-min walk test (6MWT) was conducted. If the maximum heart rate was < 100 bpm or < 80% of the age-predicted maximum, the response was considered CI. Patients with CI were programmed to DDDR. At 3 months post-implant, the 6MWT was repeated in the respective programming mode. In addition, heart rate score (HRSc, defined as the percentage of all sensed and paced atrial events in the single tallest 10 bpm histogram bin) was assessed at 1 and 3 months.

Results

CI was identified in 46/61 (75%) of patients without prior indication at enrollment. MV sensor–based DDDR mode increased heart rate in CI patients similarly to non-CI patients with intrinsically driven heart rates during 6MWT. Walking distance increased substantially with DDDR (349 ± 132 m vs. 376 ± 128 m at 1 and 3 months, respectively, p < 0.05). Furthermore, DDDR reduced HRSc by 14% (absolute reduction, p < 0.001) in those with more severe CI, i.e., HRSc ≥ 70%.

Conclusion

Exercise tolerance in older CRT-P patients can be further improved by the utilization of an MV sensor.

1 Introduction

The prevalence of advanced heart failure (HF) is progressively increasing worldwide. It is estimated that the prevalence of HF will increase by 46% between 2010 and 2030, probably related to longer life expectancy [1]. Cardiac resynchronization therapy (CRT) is a valid therapeutic option for patients with systolic HF, long QRS duration (QRSD), and optimal medical therapy [2]. CRT in elderly patients has also become increasingly common in clinical practice. Even though geographical differences may exist, CRT-P tends to be more commonly used in elderly patients than CRT-D. In a nationwide HF registry, 72.6% of CRT-P recipients were ≥ 70 years of age, whereas only 43.2% of CRT-D patients were in this age group [3]. Older patients with more comorbidity, cognitive dysfunction, or frailty are underrepresented in clinical trials that assess the effectiveness of CRT. Thus, the elderly population is not well represented in the guidelines [4]. However, patients older than 75 years have similar benefits from CRT as patients younger than 75 years, with equivalent CRT response rates [5, 6].

With increasingly optimized pharmacological treatment of HF, especially the use of beta-blockers, more patients are affected by chronotropic incompetence (CI). Furthermore, in patients with HF the autonomic nervous system is chronically shifted toward sympathetic activation, which has been shown to reduce β-adrenergic responsiveness, resulting in a reduced heart rate (HR) response during exercise in spite of the typically elevated resting HR [7]. CI is generally defined as the inability to increase HR adequately during exercise to match cardiac output to metabolic demands. CI in HF is associated with reduced functional capacity [8] and poor survival [9]. In a healthy heart, HR, stroke volume, and cardiac output increase during exercise, whereas in a failing heart, contractility reserve is lost, thus rendering increases in cardiac output primarily dependent on the increase in HR. Consequently, insufficient increases in HR because of CI may be considered a major limiting factor in the exercise capacity of patients with HF [10].

Rate-adaptive pacing as a pacemaker feature has been used in clinical routine for patients with CI to restore physiological HR response to daily physical activities. Assuming a causal link between CI and the limitation in exercise capacity in patients with HF, the reversal of CI by rate-adaptive pacing should increase exercise capacity. Thus, CI serves as a possible therapeutic target using implantable cardiac device technology in patients with HF. Despite the potential importance of CI in HF, the issue has drawn limited attention and is often unrecognized in clinical practice. Rate-adaptive pacemakers control HR using a single activity sensor or a combination of sensors. Studies investigating the effects of different types of rate-adaptive pacing modes in different stages of CI have shown variable results; thus, further studies are needed. Measuring respiratory minute ventilation (MV) offers a physiological approach to assessing metabolic activity, with high specificity, good proportionality to metabolic needs, and high sensor reliability, but with a moderate speed of response [10]. To our knowledge, no previous data are available on the use of the MV sensor alone in HF patients with CRT.

A substantial part of the HF population with reduced left ventricular ejection fraction (LVEF) is currently implanted with a cardiac implantable electronic device, which offers a unique opportunity to study HR dynamics. An option for identifying CI during in-clinic or remote follow-up is the use of a HR Score (HRSc), which allows using a common heart rate histogram as a marker of chronotropic performance [11]. The HRSc is defined as the percentage of all sensed and paced atrial events in the single tallest 10 bpm histogram bin (Fig. 1). For example, when all events occur in the 60 to 70 bpm bin, the HRSc is 100%. When events are distributed over a wider range with rates < 60 bpm and > 70 bpm, the HRSc becomes lower. Using a cutoff value of 70%, it has been demonstrated that a HRSc ≥ 70% independently predicts 5-year mortality in a large population of CRT-D patients [12]. The HRSc is one of several variables known to correlate with survival. Other factors include, for example, the indication at enrollment (LBBB preferable for CRT) [13], the percentage of biventricular pacing [14], and adherence to guideline-directed medications [15].
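The HRSc definition above can be illustrated with a minimal sketch. The function name `heart_rate_score` and the dictionary layout (lower edge of each 10 bpm bin mapped to an atrial event count) are assumptions made for illustration, and the histogram values are hypothetical rather than study data; actual device histograms may be exported in a different form.

```python
def heart_rate_score(bin_counts):
    """Return the HRSc (%) for a histogram given as {bin_start_bpm: event_count}.

    Each key is the lower edge of a 10 bpm bin; each value is the number of
    sensed and paced atrial events in that bin (hypothetical layout).
    """
    total = sum(bin_counts.values())
    if total == 0:
        raise ValueError("histogram contains no atrial events")
    # HRSc = share of all atrial events that fall in the single tallest bin.
    return 100.0 * max(bin_counts.values()) / total


# Hypothetical histograms, not study data.
narrow = {60: 5000}                               # all events in the 60-70 bpm bin
wide = {50: 1000, 60: 4000, 70: 3000, 80: 2000}   # events spread over four bins

print(heart_rate_score(narrow))  # 100.0
print(heart_rate_score(wide))    # 40.0
```

With the 70% cutoff described above, the first hypothetical histogram would fall in the HRSc ≥ 70% group and the second in the HRSc < 70% group.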

In CRT patients with CI and a HRSc ≥ 70%, reprogramming the device from dual chamber (DDD) mode to dual chamber rate-adaptive (DDDR) mode (i.e., rate-adaptive pacing ON) improved (i.e., lowered) the HRSc and was associated with improved survival. Rate-adaptive pacing has thus shown favorable effects on both exercise capacity and survival in a well-selected subset of HF patients with CI, although the retrospective nature of the study limits the interpretation of the results [12]. Advances in device technology by incorporating additional physiological activity sensors, like the MV sensor, and the detection of CI using a device histogram-based score, such as the HRSc, might improve future treatment of CI in the HF population.

Finally, two indicators have been associated with better CRT response in clinical trials: prolonged interventricular delay, as measured by the difference in activation time (V-V timing) between the right ventricular (RV) sensing electrode and left ventricular (LV) sensing electrode [16,17,18], and shortening of the QRS duration (∆QRSD) following CRT system implantation [19, 20]. The main objective of our study was to assess the impact of optimized rate-adaptive pacing on exercise tolerance with CRT-P devices in an elderly population using the MV sensor alone in a prospective clinical trial setting. The secondary objective was to determine if DDDR mode with the use of an MV sensor (DDDR-MV) improves HRSc in elderly CRT-P patients. We also analyzed the correlations between exercise tolerance, HRSc, V-V timing, and ∆QRSD.

2 Methods

2.1 Study design

The Rally CRT-P study (NCT02488239) was a prospective, multicenter trial of patients with a well-established CRT-P indication, following the ESC guidelines. Patients with symptomatic HF (NYHA class II or III) and a successfully implanted VISIONIST CRT-P device (de novo or upgrade) were assessed.

The main inclusion criteria were as follows:

- Planned to be implanted or replaced with a VISIONIST Ingenio 2 CRT-P device
- Planned to be implanted with a 3-lead CRT-P system
- Planned to be connected to the remote data collection through the LATITUDE® system
- Able to do a 6-min walk test (6MWT)
- A maximum sensor rate of 80% of the age-predicted maximal heart rate (APMHR) should be clinically acceptable

The device system was equipped with a rate-response sensor to increase HR based on MV. The programming allowed the device to measure the electrical delay between RV and LV sensing electrodes. Data was collected on demographics, resting and maximum HR, percentage of atrial pacing, sensing delay between RV and LV electrodes (V-V delay), device programming, walking distance, and adverse events. All data collection requirements including device-related measurements are shown in Supplementary Table 1. Patients with a known need for rate-response pacing based on medical history were maintained with rate-response ON at pre-discharge. At 1 month, patients were assessed by 6MWT, including those with and without known CI, with CI defined as HR trend < 100 bpm or 6MWT peak HR < 80% APMHR [21,22,23,24,25]. Patients identified with CI underwent a programming change from DDD to DDDR; patients not meeting the criteria for CI remained DDD. At 3 months post-implant, an additional 6MWT was performed.

Sensor optimization was individually done per patient at 1-month follow-up and followed the protocol below:

Prior to the initial device interrogation, subjects did a 6-min brisk walk, in a non-rate-adaptive pacing mode.

The interrogation of subjects’ devices occurred following the completion of the 6MWT. Patients were classified as CI subjects based on rate trend diagnostics for the previous 24 h (including the 6MWT). If the maximum heart rate was < 100 bpm or < 80% of the age-predicted heart rate ([220 − age] × 80%), the MV sensor was programmed ON (pacing mode DDDR if the patient was in sinus rhythm). The accelerometer was turned off during the course of the study until the 12th month of LATITUDE close-out follow-up. In case the accelerometer needed to be turned on for clinical reasons, an event and corrective action had to be documented in the study database. Rate-adaptive pacing during this study should be triggered by the MV sensor only.
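The classification rule in this protocol step can be sketched as follows. This is only an illustration of the stated criteria (maximum HR < 100 bpm or < 80% of 220 − age); the function names are hypothetical and the example values are not study data.

```python
def age_predicted_max_hr(age_years):
    """Age-predicted maximal heart rate (APMHR) as used in the protocol: 220 - age."""
    return 220 - age_years


def has_chronotropic_incompetence(max_hr_bpm, age_years):
    """Apply the study criteria: max HR < 100 bpm or < 80% of APMHR."""
    threshold = 0.8 * age_predicted_max_hr(age_years)
    return max_hr_bpm < 100 or max_hr_bpm < threshold


# Hypothetical 76-year-old patient: APMHR = 144 bpm, 80% threshold = 115.2 bpm.
print(has_chronotropic_incompetence(105, 76))  # True  (105 < 115.2)
print(has_chronotropic_incompetence(120, 76))  # False (120 >= 100 and >= 115.2)
```

Note that with APMHR = 220 − age, the 80% threshold exceeds 100 bpm for any patient younger than 95 years, so in this age range the 80% criterion is the stricter of the two.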

Optimization guidance is as follows:

- The maximum sensor rate should be programmed to APMHR × 80%.
- The maximum sensor rate should not be programmed below 110 bpm.
- The MV “Response Factor” should be programmed based on the result of the sensor modulation after 6MWT, starting at a nominal value of 8.
- The HR achieved under sensor response modulation (especially in the second part of the 6MWT) should reach at least 70% of APMHR so that an appropriate HR can be achieved during future exercise.
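The rate-related parts of the optimization guidance can be expressed as a short sketch. The function names are hypothetical, and rounding to the nearest whole bpm is an assumption, since the programmable step size of the device is not stated here.

```python
def max_sensor_rate_bpm(age_years, floor_bpm=110):
    """Maximum sensor rate: 80% of APMHR (220 - age), but never below 110 bpm.

    Rounding to a whole bpm is an assumption for illustration only.
    """
    target = 0.8 * (220 - age_years)
    return max(floor_bpm, round(target))


def reaches_minimum_target(sensor_hr_bpm, age_years):
    """Check that the sensor-driven HR reaches at least 70% of APMHR."""
    return sensor_hr_bpm >= 0.7 * (220 - age_years)


# Hypothetical ages, not study data.
print(max_sensor_rate_bpm(76))          # 115 (80% of 144 = 115.2)
print(max_sensor_rate_bpm(90))          # 110 (80% of 130 = 104, raised to the floor)
print(reaches_minimum_target(105, 76))  # True (70% of 144 = 100.8)
```

The Response Factor adjustment is not modeled because it depends on the observed sensor modulation during the 6MWT.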

2.2 Heart rate score analysis

HRSc was measured for patients who were programmed to DDD between pre-discharge and 1-month follow-up and were found to have CI at follow-up (Fig. 2). From 1 to 3 months of follow-up, those patients were programmed to DDDR and remained mainly in sinus rhythm. At 3 months post-implant, HRSc was collected from the device, and the programming impact on HRSc between 1 and 3 months of follow-up was determined. Patients were dichotomized at a HRSc of 70%, and the results of the 6MWT were compared between patients with HRSc ≥ 70% and those with HRSc < 70%.

2.3 Implantation and measurements

Devices and leads used in the study were fully commercially available, and all patients were planned to receive a CRT-P implant as part of their standard of care. The assignment of the specific Ingenio 2 CRT-P VISIONIST device was the physician’s choice as well as the consideration to use leads currently in place from previous devices and/or to use planned new leads (e.g., ACUITY X4 and/or other LV leads). Given the well-established clinical field experience with this pacing platform, no additional risks were expected when compared to implantation and follow-up procedures associated with any commercially available CRT-P device. The difference between biventricular paced and preimplantation QRS width was calculated. Electrocardiogram (ECG) measurements were made using any lead to obtain the largest value. The QRSD was defined as the interval between the earliest onset of the QRS waveform in any ECG lead and the latest offset in any lead. In the case of paced beats, pacing spikes were not considered the onset of the QRS complex. At 1 and 3 months, 6MWT was assessed in patients with and without known CI.
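The QRS measurements described above can be sketched as follows. The function names and the lead timings are hypothetical, and the sign convention (a positive ∆QRSD meaning shortening after CRT) is an assumption chosen to match the abbreviation "shortening of the QRS duration".

```python
def qrs_duration_ms(onsets_ms, offsets_ms):
    """QRSD: latest QRS offset in any lead minus earliest QRS onset in any lead."""
    return max(offsets_ms) - min(onsets_ms)


def delta_qrsd_ms(pre_implant_qrsd_ms, paced_qrsd_ms):
    """Difference between preimplantation and biventricular paced QRSD.

    Assumed sign convention: positive values indicate QRS shortening.
    """
    return pre_implant_qrsd_ms - paced_qrsd_ms


# Hypothetical per-lead onset/offset times (ms from a common reference).
onsets = [12, 10, 15]
offsets = [170, 175, 168]

pre = qrs_duration_ms(onsets, offsets)
print(pre)                      # 165
print(delta_qrsd_ms(pre, 140))  # 25
```

For paced beats, the onset times passed in should exclude the pacing spike, as specified in the text.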

2.4 Statistical analyses

Continuous variables are expressed as means ± standard deviation. Between-group comparisons were made by Mann–Whitney’s U-test for continuous variables and by Fisher’s exact test for contingency. Correlations were measured with Spearman’s rank correlation coefficient. A p-value < 0.05 was considered statistically significant.
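As an illustration of the correlation measure named above, the following is a minimal pure-Python sketch of Spearman's rank correlation with average ranks for ties. It is not the software used in the study, the helper names are hypothetical, and the example values are made up.

```python
def average_ranks(values):
    """Return 1-based ranks, assigning tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole group of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks of x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / (var_x * var_y) ** 0.5


# Hypothetical V-V timing (ms) and change in walking distance (m).
vv_ms = [40, 60, 80, 100]
delta_distance_m = [5, 20, 30, 55]
print(spearman_rho(vv_ms, delta_distance_m))  # 1.0 for a perfectly monotone relation
```

In practice, an established statistics package would normally be used for both the correlation and the accompanying p-value.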

3 Results

3.1 Patient characteristics and disposition

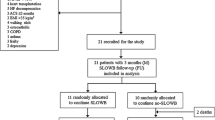

Of 64 enrolled subjects, 61 were implanted with a CRT-P. Fifty-seven devices were programmed DDD, three devices were programmed DDDR, and one device was set to VVIR at implant. The disposition of patients throughout the study is shown in Fig. 3. Seventeen patients had a prior pacemaker implant and received an upgrade to CRT-P therapy. One patient received a CRT-P replacement device. Sixty patients received a quadripolar lead. The demographics of the study patients are shown in Table 1. The median age of the study population was 76 years with a mean LVEF of 41.1 ± 11.6% and an NYHA class score of 2.6 ± 0.64. There were no differences in sex, height, weight, or BMI. Out of 61 enrolled patients successfully implanted, 56 presented at 1-month follow-up and were tested for CI with a 6MWT. Forty-six were determined to have CI and were consequently reprogrammed to DDDR mode (using the MV sensor only). Eleven patients were classified as non-CI and programmed to DDD after 6MWT. A subset of six non-CI patients remained in DDD pacing mode and had LATITUDE data available; three patients had been programmed DDDR at pre-discharge, and one patient was in persistent atrial fibrillation (AF) and programmed to VVIR. Fifty-four patients completed follow-up at 3 months, including the 46 patients who needed additional DDDR programming at 1 month.

3.2 HRSc analysis

In total, 46 subjects with DDDR programming and CI were identified as candidates for later remote analysis of HRSc.

HRSc and 6MWT were successfully analyzed in 35/61 patients (57%) (Fig. 3), following the exclusion of 5 patients mainly in AF for whom HRSc could not be calculated, 4 patients who did not complete the 6MWT, and 2 patients with no LATITUDE data available. Patients with CI and the need for sensor-supported adjustment of pacing frequency were only identified due to additional testing at 1-month follow-up.

The impact of sensor-supported programming on HRSc and walking distance in this cohort was studied based on the comparison of 1-month and 3-month data. More severe CI with HRSc ≥ 70% was present in 14/61 (23%) patients, and 21/61 were CI with HRSc < 70% (Table 2). At 1-month follow-up, 35 patients had MV sensor turned ON, 11 patients remained in non-rate-adaptive pacing mode (DDD), and 15 patients were excluded (no complete dataset due to early study withdrawal or missed follow-up visits or patients with other programming) (Fig. 3).

Table 3 shows clinical history data at baseline (prior device implant) for patients programmed to DDD and DDDR after 6MWT at 1-month follow-up determining the need for sensor programming according to protocol guidance.

No MV sensor–related adverse events were reported. With DDDR-MV programming, there was a substantial increase in percent atrial pacing, maximum HR, and walking distance. Atrial pacing demonstrated a comparable increase in maximum HR among CI patients when compared to non-CI patients with intrinsic atrial responses (Table 4). In CI patients, DDDR-MV resulted in a 6% absolute reduction in HRSc (p = 0.02). In patients with documented CI during 6MWT and a HRSc ≥ 70% (based on device diagnostics), this reduction was even more pronounced at 14% (p < 0.001). HRSc was not reduced in non-CI patients (p = 0.29) or if HRSc was < 70% (p = 0.68). Walking distance increased, but not substantially, with DDDR-MV irrespective of the HRSc (Table 5).

3.3 Interventricular delay

The median of the measured V-V sensing delay at implantation varied for the quadripolar LV lead depending on the used sensing electrode. When sensing occurred between RV and LV-E1 (distal LV electrode), the median was 75.7 ms (range 3–140 ms), and for sensing configuration RV–LV-E4 (most proximal LV electrode), the median was 95 ms (range 10–150 ms) (n = 34). Although the number of V-V delay data sets with reprogramming to MV ON at 1-month follow-up was small (n = 24), an increase in walking distance correlated with longer V-V timing. Within used cut-offs of 60 or 80 ms in V-V timing, a significant difference was seen between 1 and 3 months (60 ms, p = 0.034; 80 ms, p = 0.039).

3.4 QRS duration

Data for ∆QRSD (n = 34) showed a shortening of the QRS duration after CRT implantation in 20/22 patients with left bundle branch block (LBBB) and in 6/12 patients with non-LBBB, signaling potentially beneficial CRT-P therapy. It could also be seen in patients with CI and with a wide (> 162 ms, median 182 ms) or narrower (≤ 162 ms, median 130 ms) QRS complex at baseline that both experienced improved mean walking distance between 1 and 3 months (wide 367.7 to 428.6 m, p = 0.011; narrower 359.0 to 433.6 m, p = 0.031) (Table 6).

4 Discussion

CI, defined as a maximum HR < 100 bpm or < 80% of APMHR after 6MWT, was effective at identifying patients with a background of CI. Our main finding is that older HF patients with CI benefit from the use of a physiological activity MV sensor in CRT-P devices. The study showed beneficial clinical effects of using the MV sensor in CRT-P patients who could not reach a HR of 100 bpm or 80% of APMHR during 6MWT at 1-month follow-up. Better exercise tolerance was seen when the MV sensor was programmed ON in patients with CI between 1- and 3-month follow-ups. There was a substantial increase in the percentage of atrial pacing, maximum HR, and walking distance. Atrial pacing increased CI patients’ maximum HR in a similar way compared to non-CI patients with intrinsically driven atrial response. Our findings suggest that a systematic screening for CI may play a role in improving the clinical outcomes of older CRT-P patients and should be considered in clinical routine.

Increasing HR itself does not necessarily lead to higher exercise capacity. On the contrary, increasing HR with atrial pacing in patients with CI and HF with preserved ejection fraction (HFpEF) did not increase exercise tolerance [26]. This likely illustrates the difference between systolic and diastolic LV dysfunction: increased HR shortens LV filling time, and adequate filling time is essential in diastolic dysfunction. Furthermore, the PEGASUS trial did not demonstrate a difference in clinical outcomes between the DDD-70, DDD-40, and DDDR-40 programming groups during CRT [27]. In that study, however, the amount of atrial pacing was almost identical in the DDD and DDDR groups, indicating conservative programming of the sensor. In the CRT landmark studies, sensor-driven atrial pacing was not used [28,29,30]. In addition, in two of the studies, atrial pacing was avoided by either using VDD mode [28] or setting a lower rate of 40 bpm in DDD mode [30]. However, it is important to note that the patients in these early studies were much younger (median or mean 65–67 years) and thus less prone to CI than the patients in our study. There is concern that high amounts of atrial pacing predispose patients to AF. However, the recent randomized DANPACE II trial did not find any difference in the incidence of AF in sick sinus syndrome patients receiving either DDD-40 (atrial pacing 1%) or DDDR-60 (atrial pacing 49%) [31]. In addition, DDD-40 was associated with a higher incidence of syncope or presyncope. These results encourage the use of rate-response pacing when clinically indicated.

In the present study, cardiac output is impacted by the combined contributions of the sensor increasing HR and the effects of biventricular pacing through the action of the CRT-P system. Additional capacity for higher cardiac output is realized through synchronization of the ventricular contractions and increased HR driven by MV during physical activity. Taken together, this may explain why these patients achieve additional walking distance and why CI patients with both short and longer QRS durations benefit. The quickly gained capacity observed between 1 and 3 months suggests that there is probably a short-term cardiac output reserve that can be utilized by increased HR, which would support immediate rehabilitation possibilities and increased physical exercise shortly after CRT-P implantation. Increased capacity at 6 months post-implant and beyond is likely due to a different mechanism, possibly additional remodeling.

The non-CI subgroup received significantly fewer beta-blockers and ACE inhibitors prior to implantation of the device (Table 3). Medication may have had an impact on HR response and on the CI classification based on the criteria outlined in the protocol. Additionally, patients programmed to DDD did not show increased walking distance despite a numerical rise in HR. We conclude that less medication may help to avoid a reduced HR response during exercise, whereas medication in combination with CRT and sensor support was associated with increased exercise capacity. Interestingly, a recent study involving patients with HFpEF and CI suggested that patients have improved functional capacity with the withdrawal of beta-blockers, especially in cases of low left ventricular end-systolic volume [32]. However, in HF with reduced LVEF, beta-blockers play a pivotal role as one of the cornerstones of treatment. Our current results also underscore the importance of optimizing both medication and device therapy in managing HF effectively.

Previous work has shown a correlation between CRT response and prolonged interventricular delays (longer V-V timing). Patients with a V-V timing of ≥ 80 ms had significantly greater improvements in 6MWT distance than patients with a V-V timing of < 80 ms, although patients with short V-V timing had longer walking distances overall. Longer V-V timings are usually associated with more advanced heart disease; thus, a significant improvement for these sicker patients is encouraging. Notably, all the patients in the present study were elderly, meaning even small beneficial changes in their walking distances are clinically significant.

HRSc as a diagnostic device marker for CI was reduced (improved) in patients with DDDR-MV programming (p = 0.023). The largest impact was on the 14 patients with poor HRSc (≥ 70%) (p < 0.001). For patients with HRSc < 70% (less CI), the HRSc remained similar at 3 months (p = 0.68). 6MWT distance as an exercise marker improved at 3 months for all 35 patients in the HRSc analysis (p = 0.006). Patients with HRSc < 70% demonstrated a statistically significant improvement (p = 0.031). However, patients with HRSc ≥ 70% at 1-month follow-up showed less improvement, and the difference did not reach statistical significance at 3 months (p = 0.095). Poor HRSc may be associated with factors that limit exercise capacity, and reduction in HRSc may be associated with increases in activity at lower levels of exertion. In addition to 6MWT, HRSc is another useful tool for identifying CI, and it can be easily obtained from remote follow-up data.

The rising life expectancy is also increasing the need for research on therapy efficacy in elderly HF patients. More research on identifying and treating CI should be conducted in this growing population. Treatment of CI by MV sensor programming and optimization appears promising, but a randomized study would be necessary to document the potential impact on increased exercise capacity. Furthermore, particularly in this specific group of patients, a larger study evaluating the impact of treating CI on the quality of life would be valuable.

Conduction system pacing (CSP) is rapidly challenging biventricular pacing as the gold standard for resynchronization therapy [33,34,35]. A large, randomized study comparing CSP and biventricular pacing has recently started (NCT05650658). It remains to be seen whether CSP will offer a viable alternative to traditional CRT in older patients, who perhaps have more advanced disease in the conduction system and more fibrosis in the myocardium.

5 Limitations

Our study does have noteworthy limitations. Firstly, due to the study design, the sample size included in the final analysis was relatively modest. Moreover, it is important to highlight that only the contribution of the MV sensor was evaluated in the study. Therefore, caution should be exercised when generalizing our findings to encompass the use of other types of sensors available in CRT devices. Despite these limitations, our study offers valuable data on the utilization of rate-response pacing as a part of CRT optimization among older patients who are frequently underrepresented in clinical studies.

6 Conclusions

Older CRT-P patients under optimal medical therapy have an underestimated need for sensor-driven HR. Patients with MV-driven rate response and a high percentage of atrial pacing could increase their maximum HR in a similar way to patients with intrinsically driven atrial response. The study showed beneficial clinical effects of using the MV sensor alone in CRT-P patients who could not reach 80% APMHR during 6MWT. In conclusion, our study demonstrates that old and very old CRT-P patients may benefit from the use of an MV sensor.

Data availability

The data that support the findings of this study are available from Boston Scientific upon reasonable request. Limitation: The obtained patient consent doesn’t allow the secondary use of study data outside Rally CRT-P study protocol, including combination with other data sets.

Abbreviations

- 6MWT: 6-min walk test
- AF: Atrial fibrillation
- APMHR: Age-predicted maximal heart rate
- CI: Chronotropic incompetence
- CSP: Conduction system pacing
- CRT: Cardiac resynchronization therapy
- CRT-P: Cardiac resynchronization therapy pacemaker
- ECG: Electrocardiogram
- HF: Heart failure
- HFpEF: Heart failure with preserved ejection fraction
- HR: Heart rate
- HRSc: Heart rate score
- LBBB: Left bundle branch block
- LV: Left ventricle
- LVEF: Left ventricular ejection fraction
- MV: Minute ventilation
- NYHA: New York Heart Association
- RV: Right ventricle
- QRSD: QRS duration
- ∆QRSD: Shortening of the QRS duration

References

Groenewegen A, Rutten FH, Mosterd A, Hoes AW. Epidemiology of heart failure. Eur J Heart Fail. 2020;22(8):1342–56.

Glikson M, Nielsen JC, Kronborg MB, Michowitz Y, Auricchio A, Barbash IM, et al. 2021 ESC Guidelines on cardiac pacing and cardiac resynchronization therapy. Eur Heart J. 2021;42(35):3427–520.

Schrage B, Lund LH, Melin M, Benson L, Uijl A, Dahlstrom U, et al. Cardiac resynchronization therapy with or without defibrillator in patients with heart failure. Europace. 2022;24(1):48–57.

Bibas L, Levi M, Touchette J, Mardigyan V, Bernier M, Essebag V, et al. Implications of frailty in elderly patients with electrophysiological conditions. JACC Clin Electrophysiol. 2016;2(3):288–94.

Behon A, Merkel ED, Schwertner WR, Kuthi LK, Veres B, Masszi R, et al. Long-term outcome of cardiac resynchronization therapy patients in the elderly. Geroscience. 2023;45(4):2289–301.

Montenegro Camanho LE, Benchimol Saad E, Slater C, Oliveira Inacio Junior LA, Vignoli G, Carvalho Dias L, et al. Clinical outcomes and mortality in old and very old patients undergoing cardiac resynchronization therapy. PLoS One. 2019;14(12):e0225612.

Colucci WS, Ribeiro JP, Rocco MB, Quigg RJ, Creager MA, Marsh JD, et al. Impaired chronotropic response to exercise in patients with congestive heart failure. Role of postsynaptic beta-adrenergic desensitization. Circulation. 1989;80(2):314–23.

Witte KK, Cleland JG, Clark AL. Chronic heart failure, chronotropic incompetence, and the effects of beta blockade. Heart. 2006;92(4):481–6.

Magri D, Corra U, Di Lenarda A, Cattadori G, Maruotti A, Iorio A, et al. Cardiovascular mortality and chronotropic incompetence in systolic heart failure: the importance of a reappraisal of current cut-off criteria. Eur J Heart Fail. 2014;16(2):201–9.

Katritsis D, Shakespeare CF, Camm AJ. New and combined sensors for adaptive-rate pacing. Clin Cardiol. 1993;16(3):240–8.

Wilkoff BL, Richards M, Sharma A, Wold N, Jones P, Perschbacher D, et al. A device histogram-based simple predictor of mortality risk in ICD and CRT-D patients: the heart rate score. Pacing Clin Electrophysiol. 2017;40(4):333–43.

Olshansky B, Richards M, Sharma A, Wold N, Jones P, Perschbacher D, et al. Survival after rate-responsive programming in patients with cardiac resynchronization therapy-defibrillator implants is associated with a novel parameter: the heart rate score. Circ Arrhythm Electrophysiol. 2016;9(8):e003806.

Zareba W, Klein H, Cygankiewicz I, Hall WJ, McNitt S, Brown M, et al. Effectiveness of cardiac resynchronization therapy by QRS morphology in the multicenter automatic defibrillator implantation trial-cardiac resynchronization therapy (MADIT-CRT). Circulation. 2011;123(10):1061–72.

Hayes DL, Boehmer JP, Day JD, Gilliam FR 3rd, Heidenreich PA, Seth M, et al. Cardiac resynchronization therapy and the relationship of percent biventricular pacing to symptoms and survival. Heart Rhythm. 2011;8(9):1469–75.

Ruppar TM, Cooper PS, Mehr DR, Delgado JM, Dunbar-Jacob JM. Medication adherence interventions improve heart failure mortality and readmission rates: systematic review and meta-analysis of controlled trials. J Am Heart Assoc. 2016;5(6):e002606.

Gold MR, Birgersdotter-Green U, Singh JP, Ellenbogen KA, Yu Y, Meyer TE, et al. The relationship between ventricular electrical delay and left ventricular remodelling with cardiac resynchronization therapy. Eur Heart J. 2011;32(20):2516–24.

Ansalone G, Giannantoni P, Ricci R, Trambaiolo P, Fedele F, Santini M. Doppler myocardial imaging to evaluate the effectiveness of pacing sites in patients receiving biventricular pacing. J Am Coll Cardiol. 2002;39(3):489–99.

Becker M, Kramann R, Franke A, Breithardt OA, Heussen N, Knackstedt C, et al. Impact of left ventricular lead position in cardiac resynchronization therapy on left ventricular remodelling. A circumferential strain analysis based on 2D echocardiography. Eur Heart J. 2007;28(10):1211–20.

Hsing JM, Selzman KA, Leclercq C, Pires LA, McLaughlin MG, McRae SE, et al. Paced left ventricular QRS width and ECG parameters predict outcomes after cardiac resynchronization therapy: PROSPECT-ECG substudy. Circ Arrhythm Electrophysiol. 2011;4(6):851–7.

De Pooter J, El Haddad M, Timmers L, Van Heuverswyn F, Jordaens L, Duytschaever M, et al. Different methods to measure QRS duration in CRT patients: impact on the predictive value of QRS duration parameters. Ann Noninvasive Electrocardiol. 2016;21(3):305–15.

Scherr J, Wolfarth B, Christle JW, Pressler A, Wagenpfeil S, Halle M. Associations between Borg’s rating of perceived exertion and physiological measures of exercise intensity. Eur J Appl Physiol. 2013;113(1):147–55.

Newman AB, Haggerty CL, Kritchevsky SB, Nevitt MC, Simonsick EM, Health ABCCRG. Walking performance and cardiovascular response: associations with age and morbidity–the health, aging and body composition study. J Gerontol A Biol Sci Med Sci. 2003;58(8):715–20.

Mond HG, Kertes PJ. Rate-responsive cardiac pacing. In: Ellenbogen KA, Kay GN, Wilkoff BL, editors. Clinical cardiac pacing. Philadelphia: Saunders; 1995. p. 224.

Kay GN, Bubien RS, Epstein AE, Plumb VJ. Rate-modulated cardiac pacing based on transthoracic impedance measurements of minute ventilation: correlation with exercise gas exchange. J Am Coll Cardiol. 1989;14(5):1283–9.

Tanaka H, Monahan KD, Seals DR. Age-predicted maximal heart rate revisited. J Am Coll Cardiol. 2001;37(1):153–6.

Reddy YNV, Koepp KE, Carter R, Win S, Jain CC, Olson TP, et al. Rate-adaptive atrial pacing for heart failure with preserved ejection fraction: the RAPID-HF randomized clinical trial. JAMA. 2023;329(10):801–9.

Martin DO, Day JD, Lai PY, Murphy AL, Nayak HM, Villareal RP, et al. Atrial support pacing in heart failure: results from the multicenter PEGASUS CRT trial. J Cardiovasc Electrophysiol. 2012;23(12):1317–25.

Bristow MR, Saxon LA, Boehmer J, Krueger S, Kass DA, De Marco T, et al. Cardiac-resynchronization therapy with or without an implantable defibrillator in advanced chronic heart failure. N Engl J Med. 2004;350(21):2140–50.

Cleland JG, Daubert JC, Erdmann E, Freemantle N, Gras D, Kappenberger L, et al. The effect of cardiac resynchronization on morbidity and mortality in heart failure. N Engl J Med. 2005;352(15):1539–49.

Moss AJ, Hall WJ, Cannom DS, Klein H, Brown MW, Daubert JP, et al. Cardiac-resynchronization therapy for the prevention of heart-failure events. N Engl J Med. 2009;361(14):1329–38.

Kronborg MB, Frausing M, Malczynski J, Riahi S, Haarbo J, Holm KF, et al. Atrial pacing minimization in sinus node dysfunction and risk of incident atrial fibrillation: a randomized trial. Eur Heart J. 2023;44(40):4246–55.

Palau P, de la Espriella R, Seller J, Santas E, Dominguez E, Bodi V, et al. Beta-blocker withdrawal and functional capacity improvement in patients with heart failure with preserved ejection fraction. JAMA Cardiol. 2024;9(4):392–6.

Vinther M, Risum N, Svendsen JH, Mogelvang R, Philbert BT. A randomized trial of His pacing versus biventricular pacing in symptomatic HF patients with left bundle branch block (His-alternative). JACC Clin Electrophysiol. 2021;7(11):1422–32.

Vijayaraman P, Sharma PS, Cano O, Ponnusamy SS, Herweg B, Zanon F, et al. Comparison of left bundle branch area pacing and biventricular pacing in candidates for resynchronization therapy. J Am Coll Cardiol. 2023;82(3):228–41.

Diaz JC, Sauer WH, Duque M, Koplan BA, Braunstein ED, Marin JE, et al. Left bundle branch area pacing versus biventricular pacing as initial strategy for cardiac resynchronization. JACC Clin Electrophysiol. 2023;9(8 Pt 2):1568–81.

Acknowledgements

The authors would like to sincerely thank the Rally CRT-P enrolling sites, physicians, and study coordinators. Special thanks to Samantha Dunmire (Boston Scientific, Scientific Communications) for assistance in editing this manuscript.

Funding

Open Access funding provided by University of Helsinki (including Helsinki University Central Hospital). This research was supported and funded by Boston Scientific Corp.

Author information

Contributions

JK interpreted the data and drafted and revised the manuscript. SL analyzed the data and revised the manuscript. CL and SV designed the study, performed device implantations, and revised the manuscript. CB implemented statistical analysis, managed the project, and revised the manuscript. SO performed a biostatistical review and data analysis and revised the manuscript. TK analyzed, interpreted, and summarized the data and revised the manuscript. SP designed the study, performed device implantations, interpreted the data, and drafted and revised the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval

This study was conducted in accordance with ISO 14155, the relevant parts of the International Conference on Harmonization Guidelines for Good Clinical Practice, the ethical principles of the Declaration of Helsinki, and pertinent individual country laws and regulations. The protocol was approved by the responsible ethics committee for all participating centers and required written informed consent from all enrolled patients.

Conflict of interest

Jarkko Karvonen has worked as a consultant for Abbott, Biotronik, Boston Scientific, and Medtronic. Sami Pakarinen has worked as a consultant for Abbott.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Karvonen, J., Lehto, S., Lenz, C. et al. Minute ventilation sensor–driven rate response as a part of cardiac resynchronization therapy optimization in older patients. J Interv Card Electrophysiol (2024). https://doi.org/10.1007/s10840-024-01848-1

DOI: https://doi.org/10.1007/s10840-024-01848-1