Abstract

Providing users with relevant search results has been the primary focus of information retrieval research. However, focusing on relevance alone can lead to undesirable side effects. For example, small differences between the relevance scores of documents that are ranked by relevance alone can result in large differences in the exposure that the authors of relevant documents receive, i.e., the likelihood that the documents will be seen by searchers. Therefore, there is growing interest in developing fair ranking techniques that mitigate such biases, for example by ensuring that search results are not dominated by certain information sources. In this work, we argue that generating fair rankings can be cast as a search results diversification problem across a number of assumed fairness groups, where groups can represent the demographics or other characteristics of information sources. In the context of academic search, as in the TREC Fair Ranking Track, which aims to be fair to unknown groups of authors, we evaluate three well-known search results diversification approaches from the literature for generating rankings that are fair to multiple assumed fairness groups, e.g. early-career researchers vs. highly experienced authors. Our experiments on the 2019 and 2020 TREC datasets show that explicit search results diversification is a viable approach for generating effective rankings that are fair to information sources. In particular, we show that building on xQuAD diversification as a fairness component can result in a significant (\(p<0.05\)) increase (up to 50% in our experiments) in the fairness of exposure that authors from unknown protected groups receive.



1 Introduction

Traditionally, the objective of an information retrieval (IR) system has been to maximise the fraction of results presented to the user that are relevant to the user’s query, and to place results that address the user’s information need as close to the top of the ranking as possible. However, many studies have shown that focusing on relevance alone as a measure of search success can lead to undesirable side effects that can have negative societal impacts (Baeza-Yates 2018; Epstein et al. 2017; Kay et al. 2015; Mehrotra et al. 2018). Interventions in computational decision-support systems, such as IR systems, cannot by themselves solve such societal issues (Abebe et al. 2020). Nevertheless, developing systematic interventions can support broader attempts at understanding and addressing social problems. Therefore, in recent years, there has been an increased interest in the societal implications of how IR systems select or present documents to users, and in the potential for IR systems to systematically discriminate against particular groups of people (Pleiss et al. 2017).

IR systems and machine-learned models can encode and perpetuate any biases that exist in the collections that they use (Zehlike et al. 2017; Bender et al. 2021). Moreover, search engines that are used to find, for example, jobs or news can have a significant negative impact on information sources that produce relevant content but are often unfairly under-represented in the search results. Therefore, it is imperative that the IR community focuses on minimising the potentially negative human, social, and economic impact of such biases in search systems (Culpepper et al. 2018), particularly for disadvantaged or protected groups of society (Pedreschi et al. 2008). One approach to mitigating such biases that is receiving increasing attention in the IR community is to develop fair ranking strategies that try to ensure that certain users or information sources are not discriminated against (Culpepper et al. 2018; Ekstrand et al. 2019; Olteanu et al. 2019b). The increasing importance of this topic is exemplified by the Text REtrieval Conference (TREC) Fair Ranking Track (Biega et al. 2020).

When defining fairness for ranking strategies, we agree with Singh and Joachims (2018) that there is no single definitive definition, and that a judgement of fairness is context specific. In this work, we take the view that for a search engine’s ranking to be considered fair, relevant information sources should receive fair exposure to the search engine’s users. Following Singh and Joachims (2018), we take fair exposure to mean that the exposure a document receives should be proportional to the relevance of the document with respect to a user’s query. When an IR system presents documents ranked in decreasing order of their estimated relevance to the user’s query, documents that are placed lower in the ranking receive less exposure than higher-ranked documents. The exposure that a document at rank position j (\(Pos_j\)) receives, and hence its drop-off with rank, can be estimated as \(\mathrm {Exposure}(Pos_j) = \frac{1}{\log (1+Pos_j)}\). This is the position-bias user model that is commonly used in the Discounted Cumulative Gain (DCG) (Järvelin and Kekäläinen 2002) measure. Moreover, the amount of exposure that a document receives accumulates over repeated instances of a query (we refer to this as a sequence of rankings).
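
As an illustration of the position-bias model and of exposure accumulating over a sequence of rankings, the sketch below sums each document's exposure over repeated rankings of the same query. It assumes a base-2 logarithm, as is usual for DCG; the document identifiers are hypothetical. A static ranking concentrates exposure on the top document, whereas alternating the top two positions equalises their cumulative exposure.

```python
import math
from collections import defaultdict


def exposure(pos):
    """Exposure(Pos_j) = 1 / log2(1 + Pos_j) for a 1-indexed rank position."""
    return 1.0 / math.log2(1 + pos)


def cumulative_exposure(rankings):
    """Accumulate each document's exposure over a sequence of rankings for one query."""
    totals = defaultdict(float)
    for ranking in rankings:
        for pos, doc in enumerate(ranking, start=1):
            totals[doc] += exposure(pos)
    return dict(totals)


# The same static ranking shown four times vs. a ranking that swaps the top two documents.
static = cumulative_exposure([["d1", "d2", "d3"]] * 4)
alternating = cumulative_exposure([["d1", "d2", "d3"], ["d2", "d1", "d3"]] * 2)
print(round(static["d1"], 3), round(static["d2"], 3))            # 4.0 2.524
print(round(alternating["d1"], 3), round(alternating["d2"], 3))  # 3.262 3.262
```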

Therefore, in this work, we adopt the Disparate Treatment and Disparate Impact fairness constraints of Singh and Joachims (2018) as our definitions of fairness. Disparate Treatment requires the exposure of two fairness groups, \(G_0\) and \(G_1\) (e.g., authors from a protected societal group), to be proportional to the average relevance of each group’s documents, and is defined as:

\[\frac{\mathrm {Exposure}(G_0 \mid {\mathbf {P}})}{\mathrm {U}(G_0 \mid q)} = \frac{\mathrm {Exposure}(G_1 \mid {\mathbf {P}})}{\mathrm {U}(G_1 \mid q)}\]

where \({\mathbf {P}}\) is a doubly stochastic matrix where the cell \({\mathbf {P}}_{i,j}\) is the probability that a ranking r places document \(d_i\) at rank j, and the average utility (relevance) of a group, \(\mathrm {U}(G_k \mid q)\) is calculated as \(\mathrm {U}\left( G_{k} \mid q\right) =\frac{1}{ \mid G_{k} \mid } \sum _{d_{i} \in G_{k}} {\mathbf {u}}_{i}\), where \({\mathbf {u}}\) is the individual utility scores of each of the documents in the group \(G_{k}\).
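
To make the constraint concrete, the following sketch computes both sides of the Disparate Treatment constraint for a toy example and reports their ratio, so that 1.0 means the constraint holds. It assumes the group exposure definition of Singh and Joachims (2018), \(\mathrm {Exposure}(G_k \mid {\mathbf {P}}) = \frac{1}{ \mid G_k \mid } \sum _{d_i \in G_k} \sum _j {\mathbf {P}}_{i,j} {\mathbf {v}}_j\), with attention \({\mathbf {v}}_j = 1/\log _2(1+j)\) (the base is our assumption). The utilities, group memberships and matrices are hypothetical.

```python
import math


def group_exposure(P, v, group):
    """Exposure(G|P): mean over documents d_i in G of sum_j P[i][j] * v[j]."""
    return sum(sum(p * vj for p, vj in zip(P[i], v)) for i in group) / len(group)


def group_utility(u, group):
    """U(G|q): mean utility u_i of the documents in G."""
    return sum(u[i] for i in group) / len(group)


def disparate_treatment_ratio(P, u, v, g0, g1):
    """Ratio of the two sides of the Disparate Treatment constraint (1.0 = satisfied)."""
    side0 = group_exposure(P, v, g0) / group_utility(u, g0)
    side1 = group_exposure(P, v, g1) / group_utility(u, g1)
    return side0 / side1


n = 4
v = [1.0 / math.log2(1 + j) for j in range(1, n + 1)]  # attention at ranks 1..n
u = [1.0, 1.0, 1.0, 1.0]                                # equally relevant documents
g0, g1 = [0, 1], [2, 3]                                 # hypothetical group membership
static = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # d_i always at rank i+1
uniform = [[1.0 / n] * n for _ in range(n)]             # every placement equally likely
print(disparate_treatment_ratio(static, u, v, g0, g1) > 1)          # True: G0 is over-exposed
print(round(disparate_treatment_ratio(uniform, u, v, g0, g1), 6))  # 1.0
```

Because all four documents are equally relevant, the static ranking gives \(G_0\) more exposure than its relevance warrants, while the uniform (randomised) ranking policy satisfies the constraint.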

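The Disparate Impact constraint builds on Disparate Treatment with the additional requirement that the clickthrough rates of the groups, as determined by their exposure and relevance, are proportional to their average utility. Disparate Impact is defined as:

\[\frac{\mathrm {CTR}(G_0 \mid {\mathbf {P}})}{\mathrm {U}(G_0 \mid q)} = \frac{\mathrm {CTR}(G_1 \mid {\mathbf {P}})}{\mathrm {U}(G_1 \mid q)}\]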

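where the average clickthrough rate of a group, \(G_k\), \(\mathrm {CTR}\left( G_{k} \mid {\mathbf {P}}\right) \), is defined as:

\[\mathrm {CTR}\left( G_{k} \mid {\mathbf {P}}\right) = \frac{1}{ \mid G_{k} \mid } \sum _{d_{i} \in G_{k}} \sum _{j=1}^{N} {\mathbf {P}}_{i,j}\, {\mathbf {u}}_{i}\, {\mathbf {v}}_{j}\]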

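for N documents with utility, \({\mathbf {u}}\), and attention (i.e., exposure drop-off), \({\mathbf {v}}\). The probability of a document being clicked is calculated using the click model of Richardson et al. (2007) as follows:

\[\mathrm {P}(\text {click on } d_i \text { at rank } j) = \mathrm {P}(\text {examine rank } j) \cdot \mathrm {P}(d_i \text { is relevant}) = {\mathbf {v}}_{j}\, {\mathbf {u}}_{i}\]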

In our experiments, we view the Disparate Treatment and the Disparate Impact constraints as the target exposures for each of the fairness groups, \(G_0\) and \(G_1\), under two different fairness constraints, and measure how much a sequence of rankings violates each of the constraints.
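
A similar toy sketch for Disparate Impact computes each group's average clickthrough rate from \({\mathbf {P}}\), \({\mathbf {u}}\) and \({\mathbf {v}}\) and reports the ratio of the two sides of the constraint (1.0 means no violation). The attention model (base-2 logarithm), the relevance probabilities and the group memberships are hypothetical.

```python
import math


def group_ctr(P, u, v, group):
    """CTR(G|P): mean over d_i in G of sum_j P[i][j] * u[i] * v[j]."""
    return sum(u[i] * sum(p * vj for p, vj in zip(P[i], v)) for i in group) / len(group)


def group_utility(u, group):
    """U(G|q): mean utility u_i of the documents in G."""
    return sum(u[i] for i in group) / len(group)


def disparate_impact_ratio(P, u, v, g0, g1):
    """Ratio of the two sides of the Disparate Impact constraint (1.0 = satisfied)."""
    side0 = group_ctr(P, u, v, g0) / group_utility(u, g0)
    side1 = group_ctr(P, u, v, g1) / group_utility(u, g1)
    return side0 / side1


n = 4
v = [1.0 / math.log2(1 + j) for j in range(1, n + 1)]  # attention at ranks 1..n
u = [0.9, 0.8, 0.6, 0.5]                                # hypothetical relevance probabilities
g0, g1 = [0, 1], [2, 3]                                 # hypothetical group membership
static = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
uniform = [[1.0 / n] * n for _ in range(n)]
print(disparate_impact_ratio(static, u, v, g0, g1) > 1)          # True
print(round(disparate_impact_ratio(uniform, u, v, g0, g1), 6))  # 1.0
```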

Many approaches in recent years have tried to ensure that items (e.g., documents) that represent particular societal groups (e.g., by gender or ethnicity) receive a fair exposure within a single ranking. However, queries are often searched repeatedly (either by the same user over a period of time or by multiple users), and if the same static ranking is produced for each instance of the query then inequalities of exposure can emerge over time. For example, consider a fairness policy that ensures that, in a single ranking, 50% of relevant documents are by authors from a protected societal group. If, for a particular query, the highest-ranked 5% of the documents are not by authors from the protected group, then although the single ranking could be considered fair, over time the documents in the top-ranked positions would cumulatively receive more exposure than the relevant documents produced by authors from the protected group. However, if the search engine introduces a fair ranking policy, there is also the potential to compensate in later rankings for any under-exposure of documents in previous rankings (Biega et al. 2018). This scenario, where authors receive exposure to users over repeated queries, is addressed in the context of academic search by the TREC Fair Ranking Track (Biega et al. 2020).

The remainder of this paper is structured as follows. In Sect. 2, we discuss prior work on fairness in information access systems. We introduce the fair ranking task in Sect. 3 before defining our proposed assumed fairness groups in Sect. 4. We present how we propose to cast fair ranking as a search result diversification task in Sect. 5, before presenting our experimental setup in Sect. 6 and our results in Sect. 7. Concluding remarks follow in Sect. 8.

2 Related work

In this section, we firstly discuss work related to fairness in classification systems and search engines, before presenting prior work on search results diversification.

Fairness Most of the previous work on measuring or enforcing fairness in information access systems has focused on fairness in machine learning classifiers. Such classifiers might be deployed in decision-making tasks, such as loan or parole applications (Chouldechova 2017; Hardt et al. 2016; Kleinberg et al. 2013; Kamiran and Calders 2009; Zemel et al. 2013), or may inherit biases from external resources, such as word embeddings (Bolukbasi et al. 2016). The majority of the literature on fair classification focuses on enforcing fairness constraints on the classifier’s predictions (e.g., Dwork et al. 2012; Calders and Verwer 2010; Pleiss et al. 2017; Woodworth et al. 2017; Celis et al. 2018; Singh and Joachims 2018). Unlike constraint-based approaches, which rely on the protected groups being known a priori, in this work we propose to cast the fair ranking task, where the protected groups are unknown a priori, as a search results diversification task. Indeed, Gao and Shah (2020) recently showed that diversity and relevance are highly correlated with statistical parity fairness.

Identifying the most appropriate method of evaluating fairness in systems that output ranked results is a developing area of research (Diaz et al. 2020). Early work on developing fair ranking metrics focused on directly applying fairness approaches from classification, such as statistical parity (Yang and Stoyanovich 2017) and group fairness (Sapiezynski et al. 2019). Biega et al. (2018) proposed a fairness evaluation metric akin to evaluating individual fairness (Dwork et al. 2012). Their approach evaluated position bias and was modelled on the premises that (1) the attention of searchers should be distributed fairly and (2) information producers should receive attention from users in proportion to their relevance to a given search task. To account for the fact that no single ranking can achieve individual fairness, the authors introduced amortized fairness, where attention is accumulated over a series of rankings. In this work, we are interested in evaluating the fairness of the exposure that authors from protected groups are likely to receive in a ranking. Singh and Joachims (2018) introduced the Disparate Treatment Ratio (DTR) and Disparate Impact Ratio (DIR) metrics to evaluate such a scenario; we therefore use these metrics to evaluate our proposed approaches. We present full details of DTR and DIR in Sect. 6.

Search Results Diversification Queries submitted to a Web search engine are often short and ambiguous (Spärck-Jones et al. 2007). Therefore, it is often desirable to diversify the search results to include relevant documents for multiple senses, or aspects, of the query. There are two main families of search result diversification approaches, namely implicit and explicit diversification.

Implicit diversification approaches, for example (Carbonell and Goldstein 1998; Chen and Karger 2006; Wang and Zhu 2009; Radlinski et al. 2008), assume that documents that are similar in content will cover the same aspects of a query. Such approaches increase the coverage of aspects in a ranking by demoting documents that are similar to higher-ranked documents to lower rank positions. Maximal Marginal Relevance (MMR) (Carbonell and Goldstein 1998) was one of the first implicit diversification approaches. MMR aims to increase the amount of novel information in a ranked list by selecting documents that are dissimilar to the documents that have already been selected for the results list. In this work, we evaluate MMR as a fair ranking strategy based on implicit diversification. However, unlike when MMR is deployed for search result diversification, e.g. as in (Carbonell and Goldstein 1998), we instead evaluate the effectiveness of selecting documents from information sources that have dissimilar characteristics. We provide details of how we build on MMR in Sect. 5.1.

Explicit diversification approaches, e.g. (Santos et al. 2010; Radlinski and Dumais 2006; Agrawal et al. 2009; Dang and Croft 2012), directly model the query aspects, aiming to maximise the coverage of aspects that are represented in the search results. For example, eXplicit Query Aspect Diversification (xQuAD) (Santos et al. 2010) uses query reformulations to represent possible information needs of an ambiguous query and iteratively generates a ranking by selecting documents that maximise the novelty and diversity of the search results. Dang and Croft (2012) proposed an explicit approach, called PM-2, that is based on proportional representation. The intuition of PM-2 is that, for each aspect of an ambiguous query, the number of documents relating to the aspect that are included in the search results should be proportional to the number of documents relating to the aspect in a larger ranked list of documents that the search results are sampled from. For example, for the query java, if 10% of the documents in the list of ranked documents that the search results are sampled from are about java the island, then 10% of the search results should also be about java the island. In this work, we build on the xQuAD (Santos et al. 2010) and PM-2 (Dang and Croft 2012) explicit diversification approaches to generate fair rankings. However, unlike Santos et al. (2010) and Dang and Croft (2012), we explicitly diversify over the characteristics of assumed groups that we wish to be fair to. We provide details of how we leverage xQuAD and PM-2 in Sect. 5.2.

Castillo (2018) argued that search results diversification differs from fair ranking in that the former focuses on utility for the searcher while the latter focuses on the utility of sources of relevant information. We agree that intuitively the tasks are different. However, in this work, we postulate that fair rankings that also provide utility for the search engine users can be generated by diversifying over a set of assumed groups that we aim to be fair to, to maximise the representation of such groups in the search results.

3 Fair ranking task

In this section, we introduce the fair ranking task and provide a formal definition of the problem, as it is set out by the TREC Fair Ranking Track (Biega et al. 2020).

To generate fair rankings with respect to an assumed fairness group, for each paper that is to be ranked, we need a list of scores that represent the extent to which a specific instance of a document attribute (i.e., an author, a publication venue or a topic) represents a characteristic that we wish to be fair to (i.e., experience in the case of authors, popularity/exposure in the case of publication venues, or aboutness in the case of topics). A high (or low) score is not intended as a measure of how good (or bad) a paper is, but as an indicator of how representative the paper is of a particular characteristic of an assumed fairness group. For example, when considering topics, each score represents the probability that a document is about a particular topic. Each of the approaches that we present in this section outputs a list of such scores that can be used directly as input to each of the diversification approaches that we present later in Sect. 5.

In the remainder of this section, we provide details of, and define, our three proposed approaches for generating assumed fairness groupings for evaluating the effectiveness of search results diversification for generating fair rankings in the context of academic search.

4.1 Author experience

The first assumed fairness group that we propose aims to provide a fair exposure to authors who are at different stages of their careers, e.g. early career researchers vs. highly experienced researchers. Intuitively, this approach aims to reduce the preponderance of individual authors at the top of the ranking for a given query. Hence, prolific or highly experienced authors should not overwhelm other authors in the ranking, and relevant work that is produced by early career researchers should receive a fair exposure to the users.

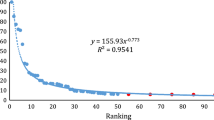

For this fairness group, we need a score that represents the amount of experience that an author has. Many signals could serve as proxies for an author’s experience, for example, the number and/or dates of the author’s publications, or the dates and/or trends of their citations. In this work, we estimate an author’s experience as the total number of citations that the author has in the collection:

\[\mathrm {Experience}(a) = \sum _{d \in D_a} \mathrm {Citations}(d)\]

where d is a document authored by a in the set of all documents, \(D_a\), that a is an author of and \(\mathrm {Citations}(d)\) is the number of documents that cite d. A given document, d, is then represented as a list of author experience scores, one for each of the authors of d. This document representation can then be used as input to the search results diversification approaches that we evaluate. Approaches that use our Author Experience assumed fairness group for diversification are denoted with the subscript A in Sect. 7.
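
As an illustration, the following sketch computes \(\mathrm {Experience}(a)\) from a toy citation graph and builds the per-author document representation described above. The input layout (a mapping from each document to its authors, and a list of (citing, cited) pairs) and all document and author names are hypothetical.

```python
from collections import Counter, defaultdict


def author_experience(doc_authors, citation_pairs):
    """Experience(a): total citations received by the documents D_a that author a wrote."""
    citations = Counter(cited for _citing, cited in citation_pairs)  # Citations(d)
    experience = defaultdict(int)
    for doc, authors in doc_authors.items():
        for author in authors:
            experience[author] += citations[doc]  # Counter returns 0 for uncited documents
    return dict(experience)


def experience_representation(doc, doc_authors, experience):
    """Represent a document as one Experience score per author, in author order."""
    return [experience[a] for a in doc_authors[doc]]


# Hypothetical toy collection.
doc_authors = {"p1": ["alice", "bob"], "p2": ["alice"], "p3": ["carol"]}
citation_pairs = [("p2", "p1"), ("p3", "p1"), ("p3", "p2")]
experience = author_experience(doc_authors, citation_pairs)
print(experience)                                                # {'alice': 3, 'bob': 2, 'carol': 0}
print(experience_representation("p1", doc_authors, experience))  # [3, 2]
```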

In this approach, the group characteristic that we are aiming to be fair to is the authors’ experience and the sub-groups of this assumed fairness group, \(g_i \in G_A\), are early career researchers and highly experienced / professorial researchers. Authors with a high \(\mathrm {Experience}(a)\) score are likely to be more senior researchers that have accumulated more citations over time.

4.2 Journal exposure

The second assumed fairness group that we propose aims to provide a fair exposure to papers from different publication venues in the corpus. Intuitively, this approach aims to surface relevant search results from publication venues that may usually be underrepresented by search systems. For example, within the IR research field, the search results for a particular query may be unfairly dominated by papers from conference proceedings, e.g. SIGIR, and journals that output a lot of material, while other sources, e.g. smaller journals or TREC notebooks, may be underrepresented with respect to their relevance or utility to the user. Moreover, papers that are published in more well-known venues, such as the ACM Digital Library, are likely to benefit unfairly from rich-get-richer dynamics compared to lesser-known venues. Therefore, our Journal Exposure strategy aims to provide a fair exposure to papers from venues that are underrepresented in the search results.

In this approach, the group characteristic that we are aiming to be fair to is the paper’s exposure through publication venues. For a given document, d, and the publication venues (e.g. journals), V, that d is published in, the journal coverage score, \(\mathrm {Coverage}(v)\), for a venue, \(v \in V\), is the total number of documents in the collection that are published in v, i.e., the coverage of the publication venue. For our proposed Journal Exposure assumed fairness group, d is then represented as a list of \(\mathrm {Coverage}(v)\) scores, one for each of the venues that d is published in, and the list of scores is input into the diversification approaches that we evaluate. The sub-groups of this assumed fairness group, \(g_i \in G_A\), that we aim to be fair to are low-coverage and high-coverage publication venues. Approaches that use our Journal Exposure assumed fairness group for diversification are denoted with the subscript J in Sect. 7.
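
A minimal sketch of the Journal Exposure representation, assuming each document comes with a list of the venues it is published in (a hypothetical input layout; the venue labels are only illustrative). \(\mathrm {Coverage}(v)\) counts each document at most once per venue.

```python
from collections import Counter


def venue_coverage(doc_venues):
    """Coverage(v): number of documents in the collection published in venue v."""
    return Counter(v for venues in doc_venues.values() for v in set(venues))


def coverage_representation(doc, doc_venues, coverage):
    """Represent a document as one Coverage score per venue it is published in."""
    return [coverage[v] for v in doc_venues[doc]]


doc_venues = {"p1": ["SIGIR"], "p2": ["SIGIR"], "p3": ["TREC"]}  # hypothetical collection
coverage = venue_coverage(doc_venues)
print(coverage_representation("p1", doc_venues, coverage))  # [2]
print(coverage_representation("p3", doc_venues, coverage))  # [1]
```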

4.3 Topical grouping

The third, and final, assumed fairness group that we propose aims to provide a fair exposure to different authors that publish papers on the same research topics. Our intuition for this grouping is that a query’s results may be dominated by, for example, an author that primarily publishes work on a particularly popular sub-topic of the query. This may be problematic for broadly defined queries that can have multiple relevant sub-topics, as per the examples that were presented in Table 1. For example, for the query interactive information retrieval (IIR), it may be the case that the retrieved results are dominated by documents that discuss IIR user studies. Moreover, these results may be further dominated by an author that is particularly well-known for IIR user studies. However, other sub-topics of IIR are also likely to be relevant, for example user modelling or interaction simulation, and the authors that publish in these fields may be under-exposed. We expect that diversifying the rankings over the topics that are discussed in the retrieved documents will likely give more exposure to such under-exposed authors. To account for this potential disparity in exposure for different sub-topics within the relevant search results, we build on a topic modelling approach to generate topical groupings based on the textual content of the documents. We note that our topical grouping may not be the most appropriate approach for very specific or narrowly defined queries, such as when a searcher is looking for papers to cite. However, as previously discussed in Sect. 3, the majority of the queries in the TREC Fair Ranking task, which are from the query logs of the Semantic Scholar academic search engine, are broadly-defined informational queries.

For generating our topical groupings, we use a topic modelling approach to identify the main latent topics that are discussed in the documents’ text. The topical grouping is defined as the probability that a document, d, discusses a latent topic, \(z_i \in Z\), where Z is the set of latent topics that are discussed in all of the documents in a collection. A topic, \(z_i\), can then be seen as a group characteristic that we wish to be fair to.

In this approach, the group characteristic that we are aiming to be fair to is the topics that the paper is about. The sub-groups of this assumed fairness group, \(g_i \in G_A\), are, therefore, the topics that are discussed by the papers in the collection. With this in mind, when diversifying over topics, a document is represented by its top k topics, where the document’s score for a top k topic, \(z_i\), is \(p(z_i \mid d)\). The document’s score for all other topics that are discussed in the collection is 0. In other words, each document is associated with a fixed number of topics, k, and the probability that a document discusses each of the k topics varies. Approaches that use our Topical assumed fairness group for diversification are denoted with the subscript T in Sect. 7.
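
As a sketch of the top-k representation, assuming the topic model has already produced a distribution \(p(z_i \mid d)\) over all topics in the collection (the choice of topic model is outside this sketch), keeping the k largest scores and setting the rest to 0 looks like:

```python
def top_k_topics(topic_dist, k):
    """Keep a document's k highest p(z_i|d) scores and set every other topic to 0."""
    keep = set(sorted(range(len(topic_dist)), key=lambda i: topic_dist[i], reverse=True)[:k])
    return [p if i in keep else 0.0 for i, p in enumerate(topic_dist)]


print(top_k_topics([0.1, 0.5, 0.05, 0.35], k=2))  # [0.0, 0.5, 0.0, 0.35]
```

The topic indices whose scores remain non-zero are the document's attributes for this grouping.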

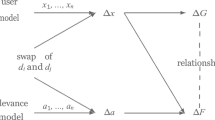

5 Casting fair ranking as search results diversification

As previously discussed in Sect. 1, we argue that generating a fair ranking towards information sources can be cast as a search results diversification task, by viewing the characteristics of groups that we aim to be fair to as latent aspects of relevance and maximising the number of groups that are represented within the top rank positions of the search results (as is the objective of search results diversification). In this section, we present the three search results diversification approaches from the literature that we build on and how we propose to adapt and tailor each of them to generate fair rankings. Section 5.1 presents the implicit diversification approach that we evaluate, while Sect. 5.2 presents the two explicit diversification approaches that we evaluate.

5.1 Implicit diversification for fairness

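As our implicit diversification approach to fairness, we leverage the well-known Maximal Marginal Relevance (MMR) diversification approach (Carbonell and Goldstein 1998). At each iteration of the algorithm, MMR selects from the remaining documents the one with the maximal marginal relevance, where \(\lambda \in [0,1]\) controls the trade-off between relevance and novelty:

\[\mathrm {MMR} = \mathop {\arg \max }_{d_i \in r \setminus s} \left[ \lambda \, \mathrm {Sim}_1(d_i, q) - (1-\lambda ) \max _{d_j \in s} \mathrm {Sim}_2(d_i, d_j) \right]\]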

where q is a query, r is a ranking of the subset of documents in the collection that are candidate documents with respect to their relevance to q, s is the subset of documents in r that have already been selected and \(r \setminus s\) is the set of documents in r that have not been selected. \(\mathrm {Sim}_1\) is a metric that measures the relevance of a document w.r.t. a query and \(\mathrm {Sim}_2\) is a metric to measure the similarity of a document and each of the previously selected documents.
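
The greedy MMR selection loop can be sketched as follows. This is a generic MMR sketch, not the authors' implementation: \(\mathrm {Sim}_1\) is passed in as a precomputed relevance score per document, and \(\mathrm {Sim}_2\) as any similarity function where larger values mean more alike. Here we use a hypothetical same-sub-group indicator as a stand-in for \(\mathrm {Sim}_2\), and \(\lambda = 0.5\) is an arbitrary choice.

```python
def mmr_rerank(candidates, relevance, sim, lam=0.5, depth=None):
    """Greedy Maximal Marginal Relevance re-ranking (illustrative sketch).

    relevance[d] -- Sim_1(d, q)
    sim(d, e)    -- Sim_2(d, e), a similarity in which larger means more alike
    """
    remaining, selected = list(candidates), []
    depth = len(remaining) if depth is None else min(depth, len(remaining))

    def marginal_relevance(d):
        # Penalise d by its similarity to the most similar already-selected document.
        redundancy = max((sim(d, e) for e in selected), default=0.0)
        return lam * relevance[d] - (1 - lam) * redundancy

    while len(selected) < depth:
        best = max(remaining, key=marginal_relevance)
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical toy data: documents a and b belong to the same fairness sub-group.
group = {"a": "g1", "b": "g1", "c": "g2"}


def same_group(d, e):
    return 1.0 if group[d] == group[e] else 0.0


relevance = {"a": 0.9, "b": 0.85, "c": 0.5}
print(mmr_rerank(["a", "b", "c"], relevance, same_group))  # ['a', 'c', 'b']
```

With \(\lambda = 1\) the penalty vanishes and the ranking falls back to relevance order.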

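To leverage MMR as a fairness component, we define the dissimilarity function, \(\mathrm {FairSim}\), for two document representations, \(\mathbf {d_i}\) and \(\mathbf {d_j}\), that are output from one of our proposed approaches presented in Sect. 4, as follows:

\[\mathrm {FairSim}(\mathbf {d_i}, \mathbf {d_j}) = \frac{1}{ \mid A_i \cap A_j \mid } \sum _{a \in A_i \cap A_j} \mid a_i - a_j \mid \]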

where \(A_i \cap A_j\) is the set of attributes that are common to both \(\mathbf {d_i}\) and \(\mathbf {d_j}\), \(\mid a_i - a_j \mid \) is the absolute difference between the two documents’ scores for a common attribute, \(a\), and \( \mid A_i \cap A_j \mid \) is the number of attributes that are common to \(\mathbf {d_i}\) and \(\mathbf {d_j}\). If \(d_i\) and \(d_j\) do not have any common attributes, then \(\mathrm {FairSim}(\mathbf {d_i}, \mathbf {d_j}) = 0\). \(\mathrm {FairSim}\) is designed to identify the extent to which two documents represent the same (or similar) fairness sub-group of an assumed fairness group (\(g \in G_A\)). In practice, an attribute is an index of \({\mathbf {d}}\) that has a non-zero value. However, when deploying \(\mathrm {FairSim}(\mathbf {d_i}, \mathbf {d_j})\) for our Author Experience grouping, we assume that any index that is non-zero in either \(\mathbf {d_i}\) or \(\mathbf {d_j}\) is non-zero in both \(\mathbf {d_i}\) and \(\mathbf {d_j}\).

For example, when deploying our topical groupings presented in Sect. 4.3, a document can discuss many topics, e.g. user studies and user modelling. The score that represents how much a document, d, is about a particular topic, \(z_i\), is the probability of observing the topic given the document, \(p(z_i \mid d)\). If \(\mathbf {d_i}\) and \(\mathbf {d_j}\) have three common topics and the topic scores for each are \(\mathbf {d_i} = \{0.3,0.3,0.3\}\) and \(\mathbf {d_j} = \{0.2,0.2,0.2\}\), then \(\mathrm {FairSim}(\mathbf {d_i}, \mathbf {d_j}) = \frac{(0.3-0.2)+(0.3-0.2)+(0.3-0.2)}{3} = 0.1\). However, if the scores are \(\mathbf {d_i} = \{0.3,0.3,0.3\}\) and \(\mathbf {d_j} = \{0.1,0.1,0.1\}\), then \(\mathrm {FairSim}(\mathbf {d_i}, \mathbf {d_j}) = \frac{(0.3-0.1)+(0.3-0.1)+(0.3-0.1)}{3} = 0.2\). In other words, the documents in the second example are less similar than the documents in the first example because there is a greater difference in how strongly they are related to their common topics.
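
The FairSim computation, and the two worked examples above, can be checked with a short sketch. Documents are represented here as dictionaries mapping an attribute (e.g., a topic) to its characteristic score, which is our assumption about the data layout; the union-of-attributes variant used for the Author Experience grouping is not covered by this sketch.

```python
def fair_sim(d_i, d_j):
    """FairSim: mean absolute score difference over the attributes common to d_i and d_j.

    d_i, d_j map attribute -> characteristic score; an attribute counts as present
    only if its score is non-zero. Returns 0.0 when there are no common attributes.
    """
    common = {a for a, s in d_i.items() if s != 0} & {a for a, s in d_j.items() if s != 0}
    if not common:
        return 0.0
    return sum(abs(d_i[a] - d_j[a]) for a in common) / len(common)


d_i = {"z1": 0.3, "z2": 0.3, "z3": 0.3}
print(round(fair_sim(d_i, {"z1": 0.2, "z2": 0.2, "z3": 0.2}), 6))  # 0.1
print(round(fair_sim(d_i, {"z1": 0.1, "z2": 0.1, "z3": 0.1}), 6))  # 0.2
```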

In practice, this could be viewed as a hybrid approach (as opposed to a purely implicit diversification approach) since, instead of calculating the similarity over the entire text of a document as in the original MMR formulation, MMR calculates the documents’ similarities based on the characteristic scores that are output by our proposed assumed fairness group approaches. MMR then generates fair rankings by selecting the documents that are most dissimilar from the previously selected documents with respect to the characteristics of the assumed fairness groups.

5.2 Explicit diversification for fairness

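The first explicit diversification approach that we build on is xQuAD (Santos et al. 2010). xQuAD is a probabilistic framework for explicit search result diversification that guides the diversification process of an ambiguous query through a set of sub-queries, each representing a possible interpretation of the original query. For a given query, q, and an initial ranking, r, xQuAD builds a new ranking, s, by iteratively selecting the highest-scoring document from \(r \setminus s\) according to the following probability mixture model, where \(\lambda \in [0,1]\) controls the trade-off between relevance and diversity:

\[d^{*} = \mathop {\arg \max }_{d \in r \setminus s} \; (1-\lambda )\, \mathrm {P}(d \mid q) + \lambda \, \mathrm {P}(d, {\bar{s}} \mid q)\]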

where \(\mathrm {P}(d \mid q)\) is the estimated relevance of a document, d, with respect to the initial query q, and \(\mathrm {P}(d, {\bar{s}} \mid q)\) is the diversity of d with respect to s, i.e., how relevant d is to the sub-queries that are least represented in s. xQuAD’s objective is to cover as many interpretations of the query as possible in the search results, while also ensuring novelty. To generate fair rankings using xQuAD, we leverage the fact that, for a given sub-query, \(q_i\), \(\mathrm {P}\left( d, {\bar{s}} \mid q_{i}\right) \) is calculated as:

\[\mathrm {P}\left( d, {\bar{s}} \mid q_{i}\right) = \mathrm {P}\left( d \mid q_{i}\right) \mathrm {P}\left( {\bar{s}} \mid q_{i}\right) \]

where \(\mathrm {P}\left( d \mid q_{i}\right) \) is the probability of document d being relevant to the sub-query \(q_i\), and \(\mathrm {P}\left( {\bar{s}} \mid q_{i}\right) \) provides a measure of novelty, i.e. the probability of \(q_i\) not being satisfied by any of the documents already selected in s. We view the documents’ attributes, i.e., authors, publication venues or topics, as sub-queries, and a document attribute’s characteristic score as a measure of the attribute’s fairness sub-group coverage. We then calculate the relevance and novelty of a document, d, with respect to a fairness sub-group, \(g_i\), as follows:

\[\mathrm {P}\left( d, {\bar{s}} \mid g_{i}\right) = \mathrm {P}\left( d \mid g_{i}\right) \mathrm {P}\left( {\bar{s}} \mid g_{i}\right) \]

where \(\mathrm {P}\left( d \mid g_{i}\right) \) is the probability of document d being associated with the group \(g_i\), and \(\mathrm {P}\left( {\bar{s}} \mid g_{i}\right) \) is the probability of \(g_i\) not being associated with any of the documents already selected in s. \(\mathrm {P}\left( {\bar{s}} \mid g_{i}\right) \) is obtained as \(1-P(s \mid g_i)\), where \(P(s \mid g_i)\) is directly observable.

In other words, xQuAD iteratively adds documents to s by prioritising (1) documents that belong to assumed fairness sub-groups that have relatively few documents belonging to them, \(\mathrm {P}\left( d \mid g_{i}\right) \), and (2) documents that belong to fairness sub-groups that do not have many documents belonging to them in the partially constructed ranking s, \(\mathrm {P}\left( {\bar{s}} \mid g_{i}\right) \). For example, when deploying our topical assumed fairness groups, if there are relatively few documents that belong to the latent topic interaction simulation then these documents will be prioritised for selection, unless relatively many of the documents that have previously been selected for s also belong to this assumed fairness group. In that case, documents that belong to another, less rare but underrepresented, group will be prioritised. In doing so, the coverage of the assumed fairness groups is maximised in the top rank positions by promoting documents that belong to underrepresented groups.
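
A minimal sketch of the adapted xQuAD loop follows, under stated assumptions. \(\mathrm {P}(d \mid g_i)\) is given as a characteristic score in [0, 1]; sub-groups are weighted uniformly (a sub-group prior \(\mathrm {P}(g_i \mid q)\) is not specified above, so uniform weighting is our assumption); and \(\mathrm {P}({\bar{s}} \mid g_i)\) is maintained as the product of \(1 - \mathrm {P}(d_j \mid g_i)\) over the selected documents, as in the original xQuAD. This product equals \(1 - P(s \mid g_i)\) if \(P(s \mid g_i)\) is read as the probability that at least one selected document is associated with \(g_i\). The toy data are hypothetical.

```python
def xquad_rerank(candidates, relevance, group_scores, lam=0.5, depth=None):
    """Greedy xQuAD re-ranking over assumed fairness sub-groups (illustrative sketch).

    relevance[d]       -- P(d|q), the estimated relevance of document d
    group_scores[d][g] -- P(d|g), assumed to lie in [0, 1]
    Sub-group weights P(g|q) are assumed uniform.
    """
    groups = sorted({g for scores in group_scores.values() for g in scores})
    weight = 1.0 / len(groups) if groups else 0.0
    # P(s_bar|g): probability that no document selected so far is associated with g.
    not_covered = {g: 1.0 for g in groups}

    def score(d):
        scores = group_scores.get(d, {})
        diversity = sum(weight * scores.get(g, 0.0) * not_covered[g] for g in groups)
        return (1 - lam) * relevance[d] + lam * diversity

    remaining, selected = list(candidates), []
    depth = len(remaining) if depth is None else min(depth, len(remaining))
    while len(selected) < depth:
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
        # Update novelty: P(s_bar|g) = product over selected d of (1 - P(d|g)).
        for g in groups:
            not_covered[g] *= 1.0 - group_scores.get(best, {}).get(g, 0.0)
    return selected


relevance = {"a": 0.9, "b": 0.85, "c": 0.5}
group_scores = {"a": {"g1": 1.0}, "b": {"g1": 1.0}, "c": {"g2": 1.0}}
print(xquad_rerank(["a", "b", "c"], relevance, group_scores))  # ['a', 'c', 'b']
```

Once a is selected, sub-group g1 is fully covered, so c (the only member of g2) is promoted above the more relevant b; with `lam=0.0` the ranking reverts to relevance order.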

We now move on to discuss the second explicit diversification approach that we build on for generating fair rankings, namely PM-2 (Dang and Croft 2012). PM-2 is a proportional representation approach that aims to generate a useful and diversified ranked list of search results (of any given size) by sampling documents from a larger list of documents that have been ranked with respect to their relevance to a user’s query. The aim of PM-2 is to generate a list of search results in which the number of documents relating to a query aspect, \(a_i \in A\), that are included in the search results is proportional to the number of documents relating to the aspect in the larger list of documents that the search results are sampled from. In other words, for the query java, if 90% of the documents in the larger ranked list of documents are about java the island, then 90% of the search results should also be about the island.

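PM-2 selects documents to add to the ranking, s, as follows:

\[ d^{*} = \mathop {\arg \max }_{d_j \in R \setminus s} \; \lambda \, qa\left[ i^{*}\right] \mathrm {P}\left( d_{j} \mid a_{i^{*}}\right) + (1-\lambda ) \sum _{i \ne i^{*}} qa\left[ i\right] \mathrm {P}\left( d_{j} \mid a_{i}\right) \qquad (11) \]

where \(qa\left[ i\right] = \frac{v_i}{2s_i+1}\) is the quotient of aspect \(a_i\), \(v_i\) is the number of documents that discuss aspect \(a_i\), \(s_i\) is the number of rank positions that have been assigned to \(a_i\) so far (so that, as s grows, the share of s given to \(a_i\) becomes proportional to the popularity of \(a_i\) in the larger list of documents that the search results are sampled from), \(\mathrm {P}\left( d_{j} \mid a_{i}\right) \) is a document's fairness characteristic score for aspect \(a_i\), \(a_{i^{*}}\) is the aspect with the largest quotient, i.e. the aspect to which the next rank position in s is assigned, R is the larger list of candidate documents, and \(\lambda \) is a parameter that trades off the aspect \(a_{i^{*}}\) against the remaining aspects.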

When generating fair rankings with PM-2, we view the documents’ attributes as the query aspects, \(a_i \in A\). We view the proportionality of an aspect, \(a_i\), as the fraction of documents in the whole document collection, D, that also contain the aspect and replace \(v_i\) in Equation (11) with the probability, \(\mathrm {p}(a_i \mid D)\), of the aspect, \(a_i\), in the collection D – i.e. the fraction of documents in the collection that contain \(a_i\).

In other words, for each of the attributes in an assumed fairness group in turn, documents that have a relatively large characteristic score for that attribute, and that also have characteristic scores for many other attributes, are prioritised for selection until the portion of the ranking s allocated to \(a_i\) (proportional to the frequency of the attribute in the entire collection) is filled by documents that contain \(a_i\). Consequently, documents that contain group fairness attributes that are underrepresented relative to their proportion in the collection are promoted.
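A corresponding sketch for PM-2 is given below, again as an illustration under stated assumptions rather than our experimental code. It implements the quotient \(qa[i] = v_i/(2s_i+1)\), replaces \(v_i\) with the collection proportion \(\mathrm {p}(a_i \mid D)\) as described above, and, following Dang and Croft (2012), increments each aspect's seat count \(s_i\) by the selected document's normalised characteristic score \(\mathrm {P}(d^{*} \mid a_i)/\sum _j \mathrm {P}(d^{*} \mid a_j)\). The trade-off parameter `lam`, the function name, and the use of relevance scores only to break ties are our own assumptions.

```python
from typing import Dict, List


def pm2_rerank(
    aspect_probs: Dict[str, Dict[str, float]],
    collection_prop: Dict[str, float],
    relevance: Dict[str, float],
    lam: float = 0.5,
    depth: int = 10,
) -> List[str]:
    """PM-2-style proportional selection over fairness attributes (aspects).

    aspect_probs:    doc id -> {aspect a_i -> P(d_j|a_i)}, characteristic scores
    collection_prop: aspect a_i -> p(a_i|D), used in place of the votes v_i
    relevance:       doc id -> relevance score, used here only to break ties
    lam:             hypothetical weight on the aspect whose turn it is
    """
    aspects = sorted(collection_prop)
    seats = {a: 0.0 for a in aspects}  # s_i: (fractional) positions given to a_i so far
    candidates = set(aspect_probs)
    ranking: List[str] = []
    while aspects and candidates and len(ranking) < depth:
        # Sainte-Lague quotient qa[i] = v_i / (2 s_i + 1); the largest one gets the next seat.
        quotient = {a: collection_prop[a] / (2.0 * seats[a] + 1.0) for a in aspects}
        turn = max(aspects, key=lambda a: quotient[a])  # a_{i*}

        def score(d: str) -> float:
            probs = aspect_probs[d]
            own = quotient[turn] * probs.get(turn, 0.0)
            others = sum(quotient[a] * probs.get(a, 0.0) for a in aspects if a != turn)
            return lam * own + (1.0 - lam) * others

        best = max(sorted(candidates), key=lambda d: (score(d), relevance.get(d, 0.0)))
        ranking.append(best)
        candidates.remove(best)
        # Share the filled position among the aspects that the chosen document covers.
        total = sum(aspect_probs[best].get(a, 0.0) for a in aspects)
        if total > 0:
            for a in aspects:
                seats[a] += aspect_probs[best].get(a, 0.0) / total
    return ranking


aspect_probs = {"d1": {"high": 1.0}, "d2": {"high": 1.0}, "d3": {"low": 1.0}, "d4": {"high": 1.0}}
relevance = {"d1": 0.9, "d2": 0.8, "d3": 0.7, "d4": 0.6}
print(pm2_rerank(aspect_probs, {"low": 0.3, "high": 0.7}, relevance))  # ['d1', 'd3', 'd2', 'd4']
```

With the hypothetical attribute "low" covering 30% of the collection and "high" 70%, the relevance order d1, d2, d3, d4 becomes d1, d3, d2, d4: after d1 fills a "high" position, "low" has the larger quotient (0.3 vs. 0.7/3), so d3 is promoted.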

6 Experimental setup

In this section, we present our experimental setup for evaluating the effectiveness of leveraging search results diversification to generate fair rankings of search results. We aim to answer the following research questions:

- RQ1: Is leveraging search results diversification as a fairness component effective for generating fair rankings?
- RQ2: Which family of search results diversification, i.e. explicit vs. implicit, is most effective as a fairness component?
- RQ3: Does diversifying over multiple assumed fairness groupings result in increased fairness?

We evaluate our research questions on the test collections of the 2019 and 2020 TREC Fair Ranking Tracks. As previously stated in Sect. 3, it is appropriate to evaluate the fairness of an IR system over a sequence of possibly repeating queries to allow the system to correct for any potential unfairness in the results of previous query instances. The TREC Fair Ranking Track is designed to evaluate such a scenario within the context of an academic search application.

The 2019 and 2020 Fair Ranking Track test collections both consist of documents (academic paper abstracts) sampled from the Semantic Scholar (S2) Open corpus (Ammar et al. 2018) from the Allen Institute for Artificial Intelligence (Footnote 6), along with training and evaluation queries. Both collections are constructed from the same 7903 document abstracts, but each collection has a different set of queries. Since the approaches that we evaluate in this work are all unsupervised, we use only the evaluation queries from each collection. The task is set up as a re-ranking task, where each query has an associated set of candidate documents with relevance judgements and fairness group ground truth labels. The number of candidate documents to be re-ranked varies per query, ranging from 5 to 312. Table 2 provides statistics about the (per-query) candidate documents for the evaluation collections. There are 4040 documents with relevance judgements for the 635 evaluation queries of the 2019 collection, and 4693 documents with relevance judgements for the 200 evaluation queries of the 2020 collection. In our experiments, we evaluate our approaches over 100 instances of each query.

The collections include relevance assessments for two unknown evaluation fairness groups, i.e. the groups were not known by the track participants and did not influence our proposed fairness approaches. Each evaluation fairness group defines two sub-groups that a system should be fair to. The first evaluation group is the H-index of a paper's authors. This evaluation group assesses whether a system gives a fair exposure to papers that have authors from a low H-index sub-group (H-index \(<15\)) and a high H-index sub-group (H-index \(\ge 15\)). The second evaluation group is the International Monetary Fund (IMF) (Footnote 7) economic development level of the countries of the authors' affiliations. This evaluation group assesses whether a system gives a fair exposure to papers that have authors from less developed countries and to papers that have authors from more developed countries (Footnote 8). For the H-index and IMF evaluation groups, a paper can have authors from both of the defined sub-groups. In this case, in our experiments, we assign the paper to the low H-index sub-group or the less developed country sub-group, and not to the high H-index sub-group or the more developed country sub-group. For example, in the case of the H-index fairness sub-group, if a paper has three authors with H-indices of 5, 7, and 20, then the paper is assigned to the low H-index fairness sub-group in the ground truth, since at least one of the authors has an H-index of \(<15\).
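The ground-truth assignment rule for papers with authors in both sub-groups is simple enough to state as code. The sketch below shows it for the H-index grouping; the function name and the choice to reject papers without any known author H-index are our own assumptions, and the IMF grouping would follow the same pattern, with the less developed country sub-group taking precedence.

```python
from typing import Iterable

H_INDEX_THRESHOLD = 15  # low H-index sub-group: H-index < 15


def h_index_subgroup(author_h_indices: Iterable[int], threshold: int = H_INDEX_THRESHOLD) -> str:
    """Return "low" if any author has an H-index below the threshold, else "high"."""
    h_values = list(author_h_indices)
    if not h_values:
        # Assumption: papers without any known author H-index are not assigned.
        raise ValueError("paper has no authors with a known H-index")
    return "low" if min(h_values) < threshold else "high"


print(h_index_subgroup([5, 7, 20]))  # low
print(h_index_subgroup([15, 32]))    # high
```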

As noted by Biega et al. (2012; Ounis et al. 2006) and apply standard stopword removal and Porter stemming. We deploy the parameter-free DPH (He et al. 2008) document weighting model from the Divergence from Randomness (DFR) framework as a relevance-oriented baseline (i.e. there is no explicit fairness component deployed in this approach), denoted as \(\mathrm {DPH}\) in Sect. 7. Moreover, we use the relevance scores from the \(\mathrm {DPH}\) baseline approach as the relevance component for each of the diversification approaches that we evaluate.
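For readers unfamiliar with DPH, the sketch below shows the per-term weighting as we understand it from its implementation in the Terrier platform; treat the exact form as an assumption to be checked against He et al. (2008) or the Terrier source. A document's DPH score for a query is the sum of this weight over the query terms, and the model has no tunable parameters. The parameter names are ours, and returning 0 for degenerate inputs is an assumption made to avoid undefined logarithms.

```python
import math


def dph_weight(tf: float, doc_len: float, avg_doc_len: float,
               num_docs: int, coll_tf: float, query_tf: float = 1.0) -> float:
    """DPH weight of one query term in one document (no tunable parameters).

    tf:          occurrences of the term in the document
    doc_len:     document length in tokens
    avg_doc_len: average document length in the collection
    num_docs:    number of documents in the collection
    coll_tf:     occurrences of the term in the whole collection
    query_tf:    occurrences of the term in the query
    """
    if tf <= 0 or doc_len <= 0:
        return 0.0
    f = tf / doc_len  # relative within-document frequency
    if f >= 1.0:
        return 0.0  # (1 - f)^2 is zero; returning early avoids log2(0)
    norm = (1.0 - f) ** 2 / (tf + 1.0)
    return query_tf * norm * (
        tf * math.log2((tf * avg_doc_len / doc_len) * (num_docs / coll_tf))
        + 0.5 * math.log2(2.0 * math.pi * tf * (1.0 - f))
    )


# Hypothetical statistics for a 7903-document collection: a rarer term
# (lower collection frequency) gets a higher weight for the same tf.
print(dph_weight(3, 100, 120, 7903, 50) > dph_weight(3, 100, 120, 7903, 500))  # True
```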

As our metrics, we report the mean Disparate Treatment Ratio (denoted as DTR) and mean Disparate Impact Ratio (denoted as DIR) that were proposed by Singh and Joachims (2018). DTR and DIR measure how much a sequence of rankings violates the Disparate Treatment and the Disparate Impact constraints, respectively, that we introduced in Sect. 1. For two groups, \(G_0\) and \(G_1\), DTR measures the extent to which the groups' exposures are proportional to their utility. For a given query, q, and a doubly stochastic matrix \(\varvec{\mathrm {P}}\) that estimates the probability of each candidate document being ranked at each rank position over a distribution of rankings that have maximal utility (see Singh and Joachims (2018) for full details of how \(\varvec{\mathrm {P}}\) is computed), DTR is defined as:

\[ \mathrm {DTR}(G_0, G_1 \mid \varvec{\mathrm {P}}, q) = \frac{\mathrm {Exposure}(G_0 \mid \varvec{\mathrm {P}}) / \mathrm {U}(G_0 \mid q)}{\mathrm {Exposure}(G_1 \mid \varvec{\mathrm {P}}) / \mathrm {U}(G_1 \mid q)} \]

where \(\mathrm {Exposure}(G_k \mid \varvec{\mathrm {P}})\) is the total exposure that the documents in \(G_k\) receive under \(\varvec{\mathrm {P}}\), and the utility, \(\mathrm {U}\), of a group \(G_{k}\) is calculated as the sum of the binary relevances, \(u_i\), of each of the documents, \(d_i\), in \(G_{k}\):

\[ \mathrm {U}(G_k \mid q) = \sum _{d_i \in G_k} u_i(q) \]

Following Singh and Joachims (2018), we estimate the exposure drop-off of a document at position j (\(Pos_j\)) in a ranking using the position bias user model of DCG (Järvelin and Kekäläinen 2002), i.e., \(\mathrm {Exposure}(Pos_j) = \frac{1}{\log (1+Pos_j)}\).

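DIR measures the contribution of each of a group's members (i.e., documents) to the overall utility of the group, and is defined as:

\[ \mathrm {DIR}(G_0, G_1 \mid \varvec{\mathrm {P}}, q) = \frac{\mathrm {CTR}(G_0 \mid \varvec{\mathrm {P}}) / \mathrm {U}(G_0 \mid q)}{\mathrm {CTR}(G_1 \mid \varvec{\mathrm {P}}) / \mathrm {U}(G_1 \mid q)} \]

where CTR is the sum of the expected click-through rates of the documents in group \(G_k\), and the click-through rate of a document, \(d_i\), is estimated as \(\mathrm {Exposure}(d_i \mid \varvec{\mathrm {P}}) \cdot \mathrm {P}(d_i \text { is relevant})\).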

For DTR and DIR, a value of 1 shows that both of the groups have a proportionate exposure and impact, respectively, within the generated rankings. Values less than or greater than 1 show the amount by which one of the groups is being disadvantaged by the rankings, with respect to the utility (i.e., relevance) of the documents in the group. We note again that the number of candidate documents associated with a query varies on a per-query basis. Therefore, the size of the ranking and the depth to which DTR and DIR are calculated also vary per query.
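The following sketch computes DTR and DIR for a single query instance, given a doubly stochastic matrix \(\varvec{\mathrm {P}}\), binary relevance labels, and the sub-group of each document. It is an illustration under our own assumptions (function names, a base-2 logarithm, and that each sub-group contains at least one relevant document), not the official evaluation script. The logarithm base does not affect the result, because it rescales every exposure by the same constant, which cancels in both ratios; likewise, summing rather than averaging exposure, clicks and utility within a group gives the same ratios as per-group averages, since the group size cancels.

```python
import math
from typing import Sequence, Tuple


def position_exposure(rank: int) -> float:
    """DCG-style exposure of a 1-based rank position: 1 / log2(1 + rank)."""
    return 1.0 / math.log2(1.0 + rank)


def dtr_dir(P: Sequence[Sequence[float]], relevance: Sequence[int],
            groups: Sequence[int]) -> Tuple[float, float]:
    """Return (DTR, DIR) of sub-group 0 relative to sub-group 1.

    P[i][j]:      probability that document i is placed at rank j + 1
    relevance[i]: binary relevance u_i of document i
    groups[i]:    0 or 1, the evaluation sub-group of document i
    Assumes each sub-group contains at least one relevant document.
    """
    n = len(relevance)
    doc_exposure = [sum(P[i][j] * position_exposure(j + 1) for j in range(n)) for i in range(n)]
    exposure, utility, clicks = [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]
    for i in range(n):
        g = groups[i]
        exposure[g] += doc_exposure[i]
        utility[g] += relevance[i]
        clicks[g] += doc_exposure[i] * relevance[i]  # expected CTR: exposure x relevance
    dtr = (exposure[0] / utility[0]) / (exposure[1] / utility[1])
    dir_ = (clicks[0] / utility[0]) / (clicks[1] / utility[1])
    return dtr, dir_


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
uniform = [[0.25] * 4 for _ in range(4)]
print(dtr_dir(identity, [1, 1, 1, 1], [0, 0, 1, 1]))  # both ratios ≈ 1.75: sub-group 0 over-exposed
print(dtr_dir(uniform, [1, 1, 1, 1], [0, 0, 1, 1]))   # (1.0, 1.0)
```

A single deterministic ranking (the identity matrix) that places both documents of sub-group 0 first yields DTR ≈ 1.75, whereas spreading every document uniformly over all positions yields the ideal value of 1.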

To test for statistical significance, we use the paired t-test over all of the query instances. We select \(p<0.05\) as our significance threshold and apply Bonferroni correction (Dunn 1961) to adjust for multiple comparisons. Approaches that perform significantly better than the next best performing system with the same assumed fairness groups configuration for an individual metric (e.g., DTR) are denoted with \(\dagger \). For example, for the systems that diversify over the Author Experience (A) and Topical (T) assumed fairness groups together (denoted by subscript AT), a system is compared with the next best performing system in a pairwise manner (e.g., \({PM2}_{{AT}}\) vs. \({MMR}_{{AT}}\)) w.r.t. the specific metric (e.g., DTR). If there is a significant difference in the systems’ performance then the best performing system is denoted by \(\dagger \). Approaches that perform significantly better than the DPH relevance-only approach for an individual metric are denoted with \(\ddagger \).
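A minimal sketch of the significance-testing procedure is given below, using only the Python standard library. It computes the paired t statistic over per-instance metric values and applies the Bonferroni correction by multiplying each p-value by the number of comparisons. As a simplifying assumption, the two-sided p-value uses the standard normal distribution instead of Student's t, which is a close approximation when, as here, there are thousands of query instances; a statistics package such as SciPy would provide the exact t-distribution. The function names are illustrative.

```python
import math
import statistics
from typing import List, Sequence, Tuple


def paired_t_test(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Paired t statistic and a two-sided normal-approximation p-value.

    a, b: metric values of two systems on the same query instances, in the same order.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long samples with at least two instances")
    diffs = [x - y for x, y in zip(a, b)]
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance; the t statistic is undefined")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    p = math.erfc(abs(t) / math.sqrt(2.0))  # two-sided tail probability of N(0, 1)
    return t, p


def bonferroni(p_values: Sequence[float]) -> List[float]:
    """Bonferroni adjustment: multiply by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Toy numbers only; the normal approximation is meant for many query instances.
t, p = paired_t_test([1.0, 2.0, 3.0, 4.0], [1.5, 2.5, 3.0, 4.5])
print(round(t, 2), p < 0.05)    # -3.0 True
print(bonferroni([0.01, 0.6]))  # [0.02, 1.0]
```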

7 Results

In this section, we report the results of our experiments. When evaluating the effectiveness of our proposed approaches, we are primarily concerned with the suitability of search results diversification for generating rankings that provide a fair exposure to unknown protected groups. In other words, a protected group should receive an exposure that is proportional to the average relevance of the group, with respect to a user's query. With this in mind, the metrics that we report in this section, namely Disparate Treatment Ratio (DTR) and Disparate Impact Ratio (DIR), consider the exposure that the (authors of) papers from the sub-groups of a protected group receive in proportion to the relevance (utility) of the papers. It is important to note that for both of the metrics, DTR and DIR, a protected group has two sub-groups (e.g., low H-index and high H-index) and a score of 1.0 denotes that both of the sub-groups receive an exposure that is proportional to the utility (relevance) of the documents in the sub-group. Values less than or greater than 1 show that one of the sub-groups is disadvantaged by the rankings, with respect to the utility (i.e., relevance) of the documents in the sub-group.

Table 3 presents the performance of our diversification approaches for fair rankings, namely MMR, xQuAD and PM-2, with each of our assumed fairness groups: Author Experience (denoted as A), Journal Exposure (denoted as J) and Topics (denoted as T) individually and combined. The table presents each of the proposed approaches’ performance in terms of DTR and DIR w.r.t. each of the official TREC evaluation groups, namely H-Index and IMF Economic Level, denoted as IMF, for the 2019 and 2020 TREC Fair Ranking Track collections. In addition, the table shows the results of our DPH relevance-only baseline and a random permutation of the result set, r, returned by the relevance-only model,Footnote 9 denoted as Random.

Firstly, addressing RQ1, we are interested in whether leveraging search results diversification as a fairness component is effective for generating fair rankings. We note from Table 3 that all of our proposed fair diversification approaches result in a fairer exposure for the authors of relevant documents than both the relevance-only DPH approach and the Random permutation approach in terms of DTR and DIR, for both of the evaluation fairness groups (H-index and IMF) on both the 2019 and the 2020 collections. Moreover, twelve of the twenty-one approaches that we evaluate result in significantly fairer levels of exposure for the H-index evaluation fairness grouping on the 2019 collection and for both of the evaluation groupings on the 2020 collection, in terms of DTR and DIR (\(p<0.05\), denoted as \(\ddagger \) in Table 3).

All of the diversification approaches for fairness that we evaluate in this work use our DPH approach for estimating relevance. Because the relevance component is therefore the same across all of the approaches and the DPH baseline, the observed gains in fairness can be attributed to the diversification-based fairness component. This shows that, for the diversification approaches that we evaluate, leveraging diversification to integrate a fairness component into the ranking strategy does indeed lead to protected groups receiving a fairer exposure that is more in line with their utility, or relevance. Therefore, in response to RQ1, we conclude that diversifying over assumed fairness groupings can indeed result in fairer rankings when the actual protected groups are not known.

Moving to RQ2, we consider which family of search results diversification, i.e. implicit or explicit, is the most effective for deploying as a fairness component. In terms of DTR, xQuAD explicit diversification consistently results in the fairest rankings, in terms of ensuring that a protected group receives an exposure proportional to the overall utility of the documents from the group. This observation holds when xQuAD is deployed on either the 2019 or the 2020 collection and evaluated for either of the evaluation fairness groupings (H-index or IMF). On the 2019 collection, \(\textit{xQuAD}_{\textit{J}}\) achieves 5.20 DTR for the H-index evaluation grouping and \(\textit{xQuAD}_{\textit{T}}\) achieves a perfect 1.00 DTR for the IMF evaluation grouping. On the 2020 collection, \(\textit{xQuAD}_{\textit{T}}\) achieves 4.48 DTR for the H-index evaluation grouping, while \(\textit{xQuAD}_{\textit{A}}\) and \(\textit{xQuAD}_{\textit{AT}}\) both achieve 1.10 DTR for the IMF evaluation grouping. We note that the 1.10 DTR achieved by \(\textit{xQuAD}_{\textit{A}}\) and \(\textit{xQuAD}_{\textit{AT}}\) for the IMF fairness grouping on the 2020 collection is a 49.7% increase in fairness of exposure compared to the 2.19 DTR of the DPH relevance-only approach.

When deployed on the 2020 Fair Ranking collection, the xQuAD approaches perform significantly better in terms of DTR than the next best performing diversification approach deployed with the same assumed fairness groupings (denoted as \(\dagger \) in Table 3). For the H-index evaluation group, \(\textit{xQuAD}_{\textit{T}}\) achieves 4.48 DTR while \(\textit{PM2}_{\textit{T}}\) and \(\textit{MMR}_{\textit{T}}\) only achieve 5.00 DTR. For the IMF evaluation group, \(\textit{xQuAD}_{\textit{AT}}\) achieves 1.10 DTR while \(\textit{PM2}_{\textit{AT}}\) only achieves 1.31 DTR. Moreover, \(\textit{xQuAD}_{\textit{A}}\) achieves 1.10 DTR while \(\textit{PM2}_{\textit{A}}\) only achieves 1.43 DTR. However, we note that, in terms of DTR, the differences between diversification approaches that are deployed with the same assumed fairness groupings are not significant when the approaches are deployed on the 2019 TREC Fair Ranking Track collection.

Turning our attention to how well explicit and implicit diversification approaches perform in terms of DIR, we note from Table 3 that no approach consistently performs best for both of the evaluation groups or on both of the TREC Fair Ranking collections. For the H-index evaluation grouping, \(\textit{MMR}_{\textit{A}}\) and \(\textit{PM2}_{\textit{A}}\) achieve the best DIR scores on the 2019 and 2020 collections, respectively, with 1.99 DIR and 1.91 DIR. However, these approaches are not significantly better than the 2.06 DIR (2019) and 1.99 DIR (2020) that are achieved by \(\textit{xQuAD}_{\textit{A}}\).

For the IMF evaluation grouping, on the 2019 collection \(\textit{xQuAD}_{\textit{AJ}}\) and \(\textit{xQuAD}_{\textit{AT}}\) are the best performing approaches and achieve 0.32 DIR. However, they do not perform significantly better than the MMR or PM2 approaches in terms of DIR. Moreover, notably, none of the fair diversification approaches actually performs significantly better than the DPH relevance-only approach or the Random approach for the IMF evaluation grouping on the 2019 collection. When deployed on the 2020 collection, \(\textit{xQuAD}_{\textit{AT}}\) performs best in terms of DIR for the IMF evaluation grouping (0.49 DIR). However, \(\textit{xQuAD}_{\textit{AT}}\) is not significantly better than \(\textit{MMR}_{\textit{AT}}\) or \(\textit{PM2}_{\textit{A}}\) in terms of DIR for the IMF evaluation grouping on the 2020 collection.

These findings provide some evidence that, among the approaches that we evaluate, explicit search results diversification is potentially the more viable diversification approach for developing fair ranking strategies within an academic search context. This finding is supported by the observation that xQuAD explicit search results diversification is consistently the best performing approach in terms of DTR for both of the evaluation fairness groupings when deployed on either of the TREC Fair Ranking Track collections.

Therefore, in response to RQ2, we conclude that explicit search results diversification appears to be the most effective approach, within an academic search context, for ensuring that protected groups receive a fair exposure that is proportional to their utility (relevance) to the users (as measured by DTR). However, more work is needed to identify the most effective diversification approach for ensuring that each of the members of a protected group contributes a proportionate amount of gain to the protected group's overall exposure, i.e., the individual exposure of each of the members of the protected group (as measured by DIR).

Lastly, addressing RQ3, we conclude that, in our experiments, diversifying over multiple assumed fairness groups does not lead to increased fairness. As can be seen from the numbers in bold in Table 3, three out of the four best performing approaches in terms of DTR diversify over a single assumed fairness group, namely \(\textit{xQuAD}_{\textit{T}}\) for the IMF evaluation grouping on the 2019 collection and for the H-index evaluation grouping on the 2020 collection, and \(\textit{xQuAD}_{\textit{A}}\) for the IMF grouping on the 2020 collection. Moreover, in terms of DIR, \(\textit{MMR}_{\textit{A}}\) and \(\textit{PM2}_{\textit{A}}\) achieve the best scores for the H-index evaluation grouping on the 2019 and 2020 collections, respectively. The remaining best performing approaches, namely \(\textit{xQuAD}_{\textit{AJ}}\) and \(\textit{xQuAD}_{\textit{AT}}\), both diversify over two assumed fairness groups. However, none of the approaches performs best for any of the evaluation fairness groupings of the TREC collections when it diversifies over all three assumed fairness groups. This suggests that further work is needed to adequately integrate multiple assumed fairness groups in a diversification approach. We expect that explicitly diversifying across multiple dimensions (Yigit-Sert et al. 2021) of the groups will improve this. However, we leave this interesting area of research to future work.

Finally, we note that in our experiments, diversifying over the documents' authors' experience seems to be a particularly promising approach for generating fair ranking strategies in academic search. Five of the six best performing diversification approaches in terms of DTR and DIR diversify over this assumed fairness group, either as a single group or in combination with one other assumed fairness group, i.e., \(\textit{MMR}_{\textit{A}}\), \(\textit{xQuAD}_{\textit{A}}\), \(\textit{PM2}_{\textit{A}}\), \(\textit{xQuAD}_{\textit{AJ}}\) and \(\textit{xQuAD}_{\textit{AT}}\). We note, however, that our approach for calculating an author's experience is only a first reasonable attempt to model this assumed fairness grouping, and there remains room for improvement. For example, when a lesser-known researcher is an author on a very highly cited paper, such as a resource paper, this may skew the system's view of the author's experience. Moreover, our experiments only investigate the exposure that the groups receive under a single browsing model. In practice, variations in users' browsing behaviour will potentially lead to varying exposures for individual papers and authors, and for the overall group that the paper and/or author belong to. Furthermore, the diversification approaches that we evaluate in this work are deterministic processes. A next logical step in developing diversification approaches for fairness would be to introduce a non-deterministic element to proactively compensate for the under- or over-exposure of protected groups. However, we leave the investigation of these interesting questions to future work.

8 Conclusions

In this work, we proposed to cast the task of generating rankings that provide a fair exposure to unknown protected groups of authors as a search results diversification task. We leveraged three well-known search results diversification models from the literature as fair ranking strategies. Moreover, we proposed to adapt search results diversification to diversify the search results with respect to multiple assumed fairness group definitions, such as early-career researchers vs. highly-experienced authors. Our experiments on the 2019 and 2020 TREC Fair Ranking Track datasets showed that leveraging adequately tailored search results diversification can be an effective approach for generating fair rankings within the context of academic search. Moreover, we found that explicit search results diversification performed better than implicit diversification for providing a fair exposure for protected author groups, while ensuring that the groups' exposure is in line with the utility, or relevance, of the groups' papers. In terms of Disparate Treatment Ratio (DTR), xQuAD explicit search results diversification was the most effective approach for generating fair rankings w.r.t. both of the TREC Fair Ranking Track evaluation groupings (H-index and IMF) when the approach was deployed on either of the 2019 or 2020 collections.

This work has provided an in-depth analysis of how search results diversification can be effective as an approach for addressing the important topic of ensuring fairness of exposure in the results of search systems. The search results diversification literature is very broad-ranging and, although diversification is not the same task as fairness of exposure, there are potentially many other interesting and useful approaches that can build on the similarities between the tasks to improve the exposure of disadvantaged, or under-represented, societal groups within the results of search engines. In summary, this work has provided a foundation on which future work on integrating fairness into IR systems, and in particular diversification-based approaches, can build as this emerging field continues to develop.

Availability of data and material

The datasets are available from the TREC Fair Ranking Track, NIST USA. Our assumed fairness groups can be directly calculated from the datasets.

Code availability

All of the diversification approaches that we leverage are in the public domain.

Notes

In the TREC Fair Ranking Track test collection, each paper is only published in a single venue. Therefore, in this work, there is only one non-zero value in the list for each document (the size of the list is equal to the number of publication venues in the collection). However, in practice, a paper is often available through multiple venues (for example, through the ACM Digital Library and through arXiv). Our proposed approach handles such a case without any adaptation.

The threshold IMF economic development level that was used to separate countries into less or more developed has not been disclosed by the TREC Fair Ranking Track organisers.

Note that the size of r is equal to the number of candidate documents that are associated with a query and varies on a per-query basis.

References

Abebe, R., Barocas, S., Kleinberg, J. M., Levy, K., Raghavan, M., & Robinson, D. G. (2020). Roles for computing in social change. In Proceedings of the FAT* Conference on Fairness, Accountability, and Transparency, Barcelona, Spain, January 27–30, 2020, ACM, pp. 252–260. https://doi.org/10.1145/3351095.3372871.

Agrawal, R., Gollapudi, S., Halverson, A., & Ieong, S. (2009). Diversifying search results. In Proceedings of the Second International Conference on Web Search and Web Data Mining, WSDM 2009, Barcelona, Spain, February 9–11, 2009, ACM, pp. 5–14. https://doi.org/10.1145/1498759.1498766.

Ammar, W., Groeneveld, D., Bhagavatula, C., Beltagy, I., Crawford, M., Downey, D., Dunkelberger, J., Elgohary, A., Feldman, S., Ha, V., Kinney, R., Kohlmeier, S., Lo, K., Murray, T., Ooi, H., Peter, M. E., Power, J., Skjonsberg, S., Wang, L. L., Wilhelm, C., Yuan, Z., van Zuylen, M., & Etzioni, O. (2018). Construction of the literature graph in semantic scholar. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2018, New Orleans, Louisiana, USA, June 1-6, 2018, Volume 3 (Industry Papers), Association for Computational Linguistics, pp. 84–91. https://doi.org/10.18653/v1/n18-3011.

Baeza-Yates, R. (2018). Bias on the web. Communications of the ACM, 61(6), 54–61. https://doi.org/10.1145/3209581.

Belkin, N. J., & Robertson, S. E. (1976). Some ethical implications of theoretical research in information science. In The ASIS Annual Meeting.

Bender, E. M., Gebru, T., McMillan-Major, A., & Shmitchell, S. (2021). On the dangers of stochastic parrots: Can language models be too big? In Proceedings of the FAccT ’21: 2021 ACM Conference on Fairness, Accountability, and Transparency, Virtual Event / Toronto, Canada, March 3–10, 2021, ACM, pp. 610–623. https://doi.org/10.1145/3442188.3445922.

Biega, A. J., Gummadi, K. P., & Weikum, G. (2018). Equity of attention: Amortizing individual fairness in rankings. In Proceedings of the 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, SIGIR 2018, Ann Arbor, MI, USA, July 08–12, 2018, ACM, pp. 405–414. https://doi.org/10.1145/3209978.3210063.

Biega, A. J., Diaz, F., Ekstrand, M. D., & Kohlmeier, S. (2020). Overview of the TREC 2019 fair ranking track. CoRR arXiv:abs/2003.11650.

Biega, A. J., Diaz, F., Ekstrand, M. D., Feldman, S., & Kohlmeier, S. (2021). Overview of the TREC 2020 fair ranking track. CoRR arXiv:abs/2108.05135.

Bolukbasi, T., Chang, K., Zou, J. Y., Saligrama, V., & Kalai, A. T. (2016). Man is to computer programmer as woman is to homemaker? Debiasing word embeddings. In Proceedings of the Advances in Neural Information Processing Systems 29: Annual Conference on Neural Information Processing Systems 2016, December 5–10, 2016, Barcelona, Spain, pp. 4349–4357.

Broder, A. Z. (2002). A taxonomy of web search. SIGIR Forum, 36(2), 3–10. https://doi.org/10.1145/792550.792552

Calders, T., & Verwer, S. (2010). Three naive Bayes approaches for discrimination-free classification. Data Mining and Knowledge Discovery, 21(2), 277–292. https://doi.org/10.1007/s10618-010-0190-x

Carbonell, J. G., & Goldstein, J. (1998). The use of MMR, diversity-based reranking for reordering documents and producing summaries. In Proceedings of the 21st Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, August 24–28, 1998, Melbourne, Australia, ACM, pp. 335–336. https://doi.org/10.1145/290941.291025.

Castillo, C. (2018). Fairness and transparency in ranking. SIGIR Forum, 52(2), 64–71. https://doi.org/10.1145/3308774.3308783

Celis, L. E., Straszak, D., & Vishnoi, N. K. (2018). Ranking with fairness constraints. In Proceedings of the 45th International Colloquium on Automata, Languages, and Programming, ICALP 2018, July 9–13, 2018, Prague, Czech Republic, LIPIcs, vol. 107, pp. 28:1–28:15. https://doi.org/10.4230/LIPIcs.ICALP.2018.28.

Chen, H., & Karger, D. R. (2006). Less is more: probabilistic models for retrieving fewer relevant documents. In Proceedings of the 29th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Seattle, Washington, USA, August 6–11, 2006, ACM, pp. 429–436. https://doi.org/10.1145/1148170.1148245

Chouldechova, A. (2017). Fair prediction with disparate impact: A study of bias in recidivism prediction instruments. Big Data, 5(2), 153–163. https://doi.org/10.1089/big.2016.0047

Culpepper, J. S., Diaz, F., & Smucker, M. D. (2018). Research frontiers in information retrieval: Report from the third strategic workshop on information retrieval in Lorne (SWIRL 2018). SIGIR Forum, 52(1), 34–90. https://doi.org/10.1145/3274784.3274788

Dang, V., & Croft, W. B. (2012). Diversity by proportionality: an election-based approach to search result diversification. In Proceedings of the 35th International ACM SIGIR conference on research and development in Information Retrieval, SIGIR ’12, Portland, OR, USA, August 12-16, 2012, ACM, pp. 65–74. https://doi.org/10.1145/2348283.2348296.

De-Arteaga, M., Romanov, A., Wallach, H. M., Chayes, J. T., Borgs, C., Chouldechova, A., Geyik, S. C., Kenthapadi, K., & Kalai, A. T. (2019). Bias in bios: A case study of semantic representation bias in a high-stakes setting. In Proceedings of the Conference on Fairness, Accountability, and Transparency, FAT* 2019, Atlanta, GA, USA, January 29–31, 2019, ACM, pp. 120–128. https://doi.org/10.1145/3287560.3287572.

Diaz, F., Mitra, B., Ekstrand, M. D., Biega, A. J., & Carterette, B. (2020). Evaluating stochastic rankings with expected exposure. In Proceedings of the 29th ACM International Conference on Information and Knowledge Management, Virtual Event, Ireland, October 19–23, 2020, ACM, pp. 275–284. https://doi.org/10.1145/3340531.3411962.

Dunn, O. J. (1961). Multiple comparisons among means. Journal of the American Statistical Association, 56(293), 52–64.

Dwork, C., Hardt, M., Pitassi, T., Reingold, O., & Zemel, R. S. (2012). Fairness through awareness. In Proceedings of the Innovations in Theoretical Computer Science Conference, Cambridge, MA, USA, January 8–10, 2012, ACM, pp. 214–226. https://doi.org/10.1145/2090236.2090255.

Ekstrand, M. D., Burke, R., & Diaz, F. (2019). Fairness and discrimination in retrieval and recommendation. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR 2019, Paris, France, July 21-25, 2019, ACM, pp. 1403–1404. https://doi.org/10.1145/3331184.3331380.

Epstein, R., Robertson, R. E., Lazer, D., & Wilson, C. (2017). Suppressing the search engine manipulation effect (SEME). Proceedings of the ACM on Human-Computer Interaction, 1(CSCW), 42:1–42:22. https://doi.org/10.1145/3134677.

Gao, R., & Shah, C. (2020). Toward creating a fairer ranking in search engine results. Information Processing and Management, 57(1), 102138. https://doi.org/10.1016/j.ipm.2019.102138.

Hajian, S., & Domingo-Ferrer, J. (2013). A methodology for direct and indirect discrimination prevention in data mining. IEEE Transactions on Knowledge and Data Engineering, 25(7), 1445–1459. https://doi.org/10.1109/TKDE.2012.72.

Hardt, M., Price, E., & Srebro, N. (2016). Equality of opportunity in supervised learning. In Proceedings of the Neural Information Processing Systems Annual Conference, December 5–10, 2016, Barcelona, Spain, pp. 3315–3323

He, B., Macdonald, C., Ounis, I., Peng, J., & Santos, R. L. T. (2008). University of Glasgow at TREC 2008: Experiments in blog, enterprise, and relevance feedback tracks with Terrier. In Proceedings of the Seventeenth Text REtrieval Conference, TREC 2008, Gaithersburg, Maryland, USA, November 18–21, 2008, NIST Special Publication, vol. 500–277. http://trec.nist.gov/pubs/trec17/papers/uglasgow.blog.ent.rf.rev.pdf

Järvelin, K., & Kekäläinen, J. (2002). Cumulated gain-based evaluation of IR techniques. ACM Transactions on Information Systems, 20(4), 422–446. https://doi.org/10.1145/582415.582418.

Kamiran, F., & Calders, T. (2009). Classifying without discriminating. In Proceedings of the 2nd International Conference on Computer, Control and Communication, IEEE, pp. 1–6. https://doi.org/10.1109/IC4.2009.4909197.

Kamishima, T., Akaho, S., & Sakuma, J. (2011). Fairness-aware learning through regularization approach. In Proceedings of the 11th International Conference on Data Mining Workshops, Vancouver, BC, Canada, December 11, 2011, IEEE Computer Society, pp. 643–650. https://doi.org/10.1109/ICDMW.2011.83.

Kay, M., Matuszek, C., & Munson, S. A. (2015). Unequal representation and gender stereotypes in image search results for occupations. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, CHI 2015, Seoul, Republic of Korea, April 18–23, 2015, ACM, pp. 3819–3828. https://doi.org/10.1145/2702123.2702520.

Kleinberg, J. M., Mullainathan, S., & Raghavan, M. (2016). Inherent trade-offs in the fair determination of risk scores. CoRR ar** Terrier. In Proceedings of the SIGIR 2012 Workshop on Open Source Information Retrieval, OSIR@SIGIR 2012, Portland, Oregon, USA, 16th August 2012, University of Otago, Dunedin, New Zealand, pp. 60–63.

Mehrotra, R., Anderson, A., Diaz, F., Sharma, A., Wallach, H. M., & Yilmaz, E. (2017). Auditing search engines for differential satisfaction across demographics. In Proceedings of the 26th International Conference on World Wide Web Companion, Perth, Australia, April 3–7, 2017, ACM, pp. 626–633. https://doi.org/10.1145/3041021.3054197.

Mehrotra, R., McInerney, J., Bouchard, H., Lalmas, M., & Diaz, F. (2018). Towards a fair marketplace: Counterfactual evaluation of the trade-off between relevance, fairness & satisfaction in recommendation systems. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, CIKM 2018, Torino, Italy, October 22–26, 2018, ACM, pp. 2243–2251. https://doi.org/10.1145/3269206.3272027.

Morik, M., Singh, A., Hong, J., & Joachims, T. (2020). Controlling fairness and bias in dynamic learning-to-rank. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR 2020, Virtual Event, China, July 25–30, 2020, ACM, pp. 429–438. https://doi.org/10.1145/3397271.3401100.

Olteanu, A., Garcia-Gathright, J., de Rijke, M., & Ekstrand, M. D. (2019a). In Proceedings of FACTS-IR. CoRR arXiv:abs/1907.05755.

Olteanu, A., Garcia-Gathright, J., de Rijke, M., Ekstrand, M. D., Roegiest, A., Lipani, A., et al. (2019). FACTS-IR: Fairness, accountability, confidentiality, transparency, and safety in information retrieval. SIGIR Forum, 53(2), 20–43. https://doi.org/10.1145/3458553.3458556.

Ounis, I., Amati, G., Plachouras, V., He, B., Macdonald, C., & Lioma, C. (2006). Terrier: A high performance and scalable information retrieval platform. In Proceedings of the SIGIR 2006 Workshop on Open Source Information Retrieval, OSIR@SIGIR 2006, Seattle, WA, USA, August 2006, pp. 18–25.

Pedreschi, D., Ruggieri, S., & Turini, F. (2008). Discrimination-aware data mining. In Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Las Vegas, Nevada, USA, August 24–27, 2008, ACM, pp. 560–568. https://doi.org/10.1145/1401890.1401959.

Pleiss, G., Raghavan, M., Wu, F., Kleinberg, J. M., & Weinberger, K. Q. (2017). On fairness and calibration. In Proceedings of the Advances in Neural Information Processing Systems Conference, December 4–9, 2017, Long Beach, CA, USA, pp. 5680–5689.

Radlinski, F., & Dumais, S. T. (2006). Improving personalized web search using result diversification. In Proceedings of the 29th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Seattle, Washington, USA, August 6–11, 2006, ACM, pp. 691–692. https://doi.org/10.1145/1148170.1148320.

Radlinski, F., Kleinberg, R., & Joachims, T. (2008). Learning diverse rankings with multi-armed bandits. In Proceedings of the Twenty-Fifth International Conference on Machine Learning, Helsinki, Finland, June 5–9, 2008, ACM, ACM International Conference Proceeding Series, vol. 307, pp. 784–791. https://doi.org/10.1145/1390156.1390255.

Richardson, M., Dominowska, E., & Ragno, R. (2007). Predicting clicks: estimating the click-through rate for new ads. In Proceedings of the 16th International Conference on World Wide Web, WWW 2007, Banff, Alberta, Canada, May 8–12, 2007, ACM, pp. 521–530. https://doi.org/10.1145/1242572.1242643.

Robertson, S. E. (1977). The probability ranking principle in IR. Journal of Documentation.

Santos, R. L. T., Peng, J., Macdonald, C., & Ounis, I. (2010). Explicit search result diversification through sub-queries. In Proceedings of the 32nd European Conference on Information Retrieval, Milton Keynes, UK, March 28–31, 2010, Springer, Lecture Notes in Computer Science, vol. 5993, pp. 87–99. https://doi.org/10.1007/978-3-642-12275-0_11.

Santos, R. L. T., Macdonald, C., & Ounis, I. (2015). Search result diversification. Foundations and Trends in Information Retrieval, 9(1), 1–90. https://doi.org/10.1561/1500000040.

Sapiezynski, P., Zeng, W., Robertson, R. E., Mislove, A., & Wilson, C. (2019). Quantifying the impact of user attention on fair group representation in ranked lists. In Companion of The 2019 World Wide Web Conference, WWW 2019, San Francisco, CA, USA, May 13–17, 2019, ACM, pp. 553–562. https://doi.org/10.1145/3308560.3317595.

Singh, A., & Joachims, T. (2018). Fairness of exposure in rankings. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, KDD 2018, London, UK, August 19–23, 2018, ACM, pp. 2219–2228. https://doi.org/10.1145/3219819.3220088.

Singh, A., & Joachims, T. (2019). Policy learning for fairness in ranking. CoRR arXiv:abs/1902.04056.

Spärck Jones, K., Robertson, S. E., & Sanderson, M. (2007). Ambiguous requests: Implications for retrieval tests, systems and theories. ACM SIGIR Forum, 41, 8–17. https://doi.org/10.1145/1328964.1328965.

Wang, J., & Zhu, J. (2009). Portfolio theory of information retrieval. In Proceedings of the 32nd Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR 2009, Boston, MA, USA, July 19–23, 2009, ACM, pp. 115–122. https://doi.org/10.1145/1571941.1571963.

White, R. (2013). Beliefs and biases in web search. In Proceedings of the 36th International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR 2013, Dublin, Ireland, July 28–August 1, 2013, ACM, pp. 3–12. https://doi.org/10.1145/2484028.2484053.

Woodworth, B. E., Gunasekar, S., Ohannessian, M. I., & Srebro, N. (2017). Learning non-discriminatory predictors. CoRR arXiv:abs/1702.06081.

Yadav, H., Du, Z., & Joachims, T. (2019). Fair learning-to-rank from implicit feedback. CoRR arXiv:abs/1911.08054.

Yang, K., & Stoyanovich, J. (2017). Measuring fairness in ranked outputs. In Proceedings of the 29th International Conference on Scientific and Statistical Database Management, Chicago, IL, USA, June 27–29, 2017, ACM, pp. 22:1–22:6. https://doi.org/10.1145/3085504.3085526.

Yigit-Sert, S., Altingovde, I. S., Macdonald, C., Ounis, I., & Ulusoy, Ö. (2021). Explicit diversification of search results across multiple dimensions for educational search. Journal of the Association for Information Science and Technology, 72(3), 315–330. https://doi.org/10.1002/asi.24403.

Zafar, M. B., Valera, I., Gomez-Rodriguez, M., & Gummadi, K. P. (2017). Fairness beyond disparate treatment & disparate impact: Learning classification without disparate mistreatment. In Proceedings of the 26th International Conference on World Wide Web, WWW 2017, Perth, Australia, April 3–7, 2017, ACM, pp. 1171–1180. https://doi.org/10.1145/3038912.3052660.

Zehlike, M., Bonchi, F., Castillo, C., Hajian, S., Megahed, M., & Baeza-Yates, R. (2017). FA*IR: A fair top-k ranking algorithm. In Proceedings of the 2017 ACM Conference on Information and Knowledge Management, CIKM 2017, Singapore, November 06–10, 2017, ACM, pp. 1569–1578. https://doi.org/10.1145/3132847.3132938.

Zemel, R. S., Wu, Y., Swersky, K., Pitassi, T., & Dwork, C. (2013). Learning fair representations. In Proceedings of the 30th International Conference on Machine Learning, ICML 2013, Atlanta, GA, USA, 16–21 June 2013, JMLR.org, JMLR Workshop and Conference Proceedings, vol. 28, pp. 325–333. http://proceedings.mlr.press/v28/zemel13.html.

Acknowledgements

We wish to thank the Associate Editor and the three peer reviewers for their thorough comments and helpful suggestions.

Funding

Not applicable.

Ethics declarations

Conflict of interest

None.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

McDonald, G., Macdonald, C. & Ounis, I. Search results diversification for effective fair ranking in academic search. Inf Retrieval J 25, 1–26 (2022). https://doi.org/10.1007/s10791-021-09399-z

DOI: https://doi.org/10.1007/s10791-021-09399-z