Abstract

Low student engagement and motivation in online classes are well-known issues many universities face, especially with distance education during the COVID-19 pandemic. The online environment makes it even harder for teachers to connect with their students through traditional verbal and nonverbal behaviours, further decreasing engagement. Yet, addressing such problems with 24/7 synchronous communication is overly demanding for faculty. This paper details an automated Question-Answering chatbot system trained in synchronous communication and instructor immediacy techniques, and examines its suitability and effectiveness in supporting students taking an online Chemistry course. The chatbot is part of a new wave of affective-focused chatbots that can benefit students’ learning process by connecting with them on a relatively more humanlike level. As part of a pilot study in the development of this chatbot, qualitative interviews and self-report data capturing student-chatbot interactions, experiences, and opinions were collected from 12 students at a Singaporean university. Thematic analysis was then employed to consolidate these findings. The results support the chatbot’s ability to display several communication immediacy techniques well, in addition to responding to students at any time of day. Having a private conversation with the chatbot also meant that students could fully focus their attention and ask more questions to aid their learning. Improvements to the chatbot’s word detection and accuracy were suggested, and a framework for developing communication immediacy mechanics in future chatbots is proposed. Our findings support the potential of this chatbot, once modified, to be used in similar online settings.

Teachers are familiar with the challenges of online courses: completion rates are below 15% (Waldrop, 2013), and students rapidly become disengaged a few weeks into the class and drop out for various reasons, making online course attrition difficult to research (Bawa, 2016). One major contributor to low completion rates and student frustration in online learning courses is the lack of immediate help or response from the instructor when students have doubts to clarify or questions to ask (Skordis-Worrall et al., 2015). While forums and discussion boards have been used to interact and ask questions in online learning, these platforms often do not replicate the real-time interaction (synchronous communication) that on-campus students experience. As synchronous communication technology for online learning has only recently begun to catch up (Francescucci & Rohani, 2019), it is not surprising that students still prefer to communicate synchronously in person (Watts, 2016).

Yet, synchronous communication by itself is insufficient for online student satisfaction and learning outcomes. An instructor’s use of immediacy behaviours is a better predictor of student learning than student demographics or course design (Arbaugh, 2001). These behaviours include asking questions, addressing students by name, using inclusive personal pronouns (we, us), responding frequently, offering praise, and communicating attentiveness (Walkem, 2014). Delivered through computer-mediated communication tools, such techniques can instil a sense of co-presence with students, and in the process promote effective communication (Gorham, 1988) and enhance students’ affective and cognitive learning, motivation, and experiences (Roberts & Friedman, 2013). However, providing on-demand synchronous communication in online and distance education is time-consuming and often unfeasible for instructors. Turning to machine learning-based artificial intelligence is one option to reduce this effort. Question-answering (QA) systems based on machine learning and natural language processing can ‘learn’, and thus can be ‘trained’ to produce responses that preserve instructor communication immediacy. QA systems are beginning to take on tasks traditionally handled by teachers in online learning environments (e.g., Smutny & Schreiberova, 2020), catering to increased synchronous communication and immediacy needs.

This study aims to explore instructor immediacy as mediated by a QA system used for synchronous communication. Specifically, we strive to determine how communication immediacy can be replicated by an automated chatbot for undergraduate students undergoing an online course. Furthermore, this project seeks to discover whether interacting with the chatbot affects students’ studying behaviour, competency levels, motivation to use the chatbot, and emotional state. In-depth qualitative data will be captured to accommodate the breadth of responses students might have regarding the chatbot. These aims will be studied within the context of Chem Quest (CQ), a fully online, self-directed, three-month Chemistry bridging course designed for students to revise concepts and formulas before they enter university.

1 Review of existing literature

1.1 Instructor presence in online learning: Mehrabian’s communication immediacy

Increasing synchronous communication can counter declining teacher-student interaction in online distance learning. One way of doing so is to use Mehrabian’s concept of communication immediacy to bring the teacher and student psychologically closer (Mehrabian, 1971). According to this concept, educators must emphasise both verbal and nonverbal communication techniques in their interactions to build rapport and reduce the social and psychological distance with students. An increase in immediacy can serve as a reward for students, potentially increasing their motivation and engagement in class (Liu, 2021). Something as simple as referring to a student by name is interpreted very positively (Rocca, 2007), creating a socially intimate setting in which the student is more invested in studying. Students are more likely to see instructors as approachable and caring (of students as individuals and about students’ learning) when instructors use immediacy practices in class (Melrose, 2009; Walkem, 2014). Student motivation has been one of the most heavily studied constructs in communication immediacy research; related findings include positive correlations with attention span in class (Myers et al., 2014), healthy study behaviours, and academic success (Velez & Cano, 2008).

Another point to consider in communication immediacy is the difficulty of applying synchronous communication in online distance learning. Both verbal and nonverbal communication must be carried across a different medium, potentially conveying less information and emotional rapport. Verbal immediacy is particularly well suited to boosting the frequency of student discussion in the online environment (Ni & Aust, 2008) and has been shown to be a significant predictor of perceived learning (Arbaugh, 2010). Research has shown that immediacy in online teaching practices can affect students’ learning outcomes (Al Ghamdi, 2017), cognitive learning (Goodboy et al., 2009), and, of course, motivation and engagement (Chesebro, 2003; Saba, 2018). While instructor immediacy can be displayed through asynchronous communication (email, discussion boards), instructors’ prompt responses to students have been clearly identified as an aspect of instructor immediacy (Walkem, 2014). Prompt instructor responses through synchronous communication reflect instructors’ consistent presence and availability, creating a sense of closeness between instructors and students that students perceive as reassuring (Alharbi & Dimitriadi, 2018). Furthermore, Lin and Gao (2020) found that adding synchronous online sessions afforded more immediacy than asynchronous communication alone, pointing to a combination of both (Fabriz et al., 2021) as the most effective way for students to feel motivated and engaged during distance learning.

1.2 Limitations of current Question-Answering (QA) systems

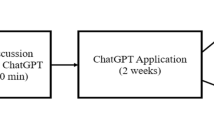

Teaching methods and the design of distance education (Rahimah et al., 2021) need to change to provide synchronous and immediate communication. In the absence of synchronous communication with an instructor, one way of doing so is through automated QA systems that answer questions raised by students. However, current QA systems are far from integrating seamlessly into an online learning platform, especially where immediacy is concerned. For one, unlike humans, QA systems that support dialogue respond in a cold and unempathetic manner, aimed at leading the person to a particular end result (Deriu et al., 2021; Zhou et al.,

2.2 Chatbot purpose and development

The chatbot was not designed to provide direct answers to the Level 0–2 questions in CQ. Rather, the system was envisioned to handle clarification questions that students may pose when reviewing learning materials, or after receiving feedback on a mistake. This is analogous to a student asking peers or a teacher questions while revising class materials, or seeking clarification from an online search engine. Such a feature was intended to promote self-regulated learning (Scheu & Benke, 2022), as students would have to adjust their own study behaviours and strategies without relying on peers or faculty. As the chatbot’s design was still at a nascent stage, qualitative data were collected to provide more insight into its current communication immediacy capabilities.

The chatbot was developed and deployed on a conversational Artificial Intelligence (AI) platform called Chatlayer (Sinch Belgium BV, 2022), a deep learning platform that can interpret and answer textual questions using natural language processing (NLP) algorithms.
The chatbot’s Chemistry knowledge database was first built manually in two ways: first, by including 740 questions frequently asked by students who had gone through CQ in previous years, and second, by including questions with a high chance of being asked by future students, identified in a brainstorming session with several Chemistry instructors. Potential questions included requests for the definition and explanation of every Chemistry-specific term in CQ, as well as how to approach the multiple-choice question (MCQ) assessments. The QA framework was constructed from this database: depending on what students asked and how they asked it, the chatbot guided them through CQ with hints, reminders, definitions, explanations, and video resources. These types of information are summarized in the following list.

- The chatbot explained certain concepts or content needed to attempt CQ questions. For example: “Do remember that all non-zero digits are considered significant.”
- The chatbot provided formulas to the student as a hint. For example: “The formula for Specific Gravity (S.G.) is the Density of a solution divided by the Density of water.”
- The chatbot provided the definitions of certain terms. For example: “A catalyst provides an alternate pathway with a lower activation energy for the reaction to occur.”
- The chatbot reminded the student of content learned previously. For example: “Remember that bond forming is an exothermic reaction (sign of ΔH).”
- The chatbot provided the first step to help students kickstart their problem-solving process. For example: “Alright, so we can start by finding the atomic number and the electron configuration of each element.”
- Finally, the chatbot showed students video resources that helped them better understand the content of the question.
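To make the retrieve-or-fall-back behaviour of such a knowledge base concrete, the Python below offers a minimal sketch. It is a hypothetical, simplified stand-in and not the CQ chatbot’s implementation: Chatlayer’s deep-learning NLP engine is replaced with the standard-library `difflib.SequenceMatcher`, which scores character-level string similarity rather than meaning. The `Entry` record and its fields, the `answer` function, the stored questions, the 0.6 similarity threshold, and the fallback wording are all assumptions for illustration; only the stored responses are taken from the examples quoted above.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


# Hypothetical knowledge-base record; field names are illustrative, not Chatlayer's schema.
@dataclass
class Entry:
    question: str    # a stored, already-normalised student question (invented for this sketch)
    info_type: str   # e.g. "definition", "hint", "reminder", "first_step", "video"
    response: str    # the reply shown to the student


KNOWLEDGE_BASE = [
    Entry("what is a catalyst", "definition",
          "A catalyst provides an alternate pathway with a lower activation energy "
          "for the reaction to occur."),
    Entry("what is the formula for specific gravity", "hint",
          "The formula for Specific Gravity (S.G.) is the Density of a solution "
          "divided by the Density of water."),
    Entry("which digits are significant", "explanation",
          "Do remember that all non-zero digits are considered significant."),
]

# Hypothetical wording for the last-resort template reply.
FALLBACK = "Sorry, I could not understand that. Please email the course instructor for clarification."


def normalise(text: str) -> str:
    """Lower-case and drop punctuation so minor formatting differences do not matter."""
    return "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace()).strip()


def answer(query: str, threshold: float = 0.6) -> str:
    """Return the response whose stored question is most similar to the query,
    or the fallback if no stored question reaches the (assumed) threshold."""
    q = normalise(query)
    best_score, best_entry = 0.0, None
    for entry in KNOWLEDGE_BASE:
        # Character-level similarity in [0, 1]; a stand-in for semantic matching.
        score = SequenceMatcher(None, q, entry.question).ratio()
        if score > best_score:
            best_score, best_entry = score, entry
    if best_entry is None or best_score < threshold:
        return FALLBACK
    return best_entry.response


if __name__ == "__main__":
    print(answer("Wat is a catalist?"))
```

With this sketch, a misspelled query such as `answer("Wat is a catalist?")` still returns the catalyst definition, while a query that resembles none of the stored questions returns the instructor referral. A character-level ratio of this kind tolerates typos but cannot recognise paraphrases that share little wording, which is the gap a semantic model such as Chatlayer’s is meant to close.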
Chatlayer’s NLP engine could identify semantically similar questions (Mihalcea et al., 2006; Sindhgatta et al., 2017) in its database and respond correctly, meaning that students did not need to ask a question word-for-word to elicit an accurate response from the CQ chatbot. This reflects a real-life scenario in which students may express the same question in multiple ways. For example, the three questions “How many atoms are there in molecules?”, “Why do we say there are atoms in molecules?”, and “I’m not sure if atoms are in molecules or molecules are in atoms” would be picked up as semantically similar by the NLP engine. The Chatlayer platform also included a fuzzy matching algorithm that allowed the system to detect typographical errors and informal language, as students may not use full sentences or proper grammar. If the chatbot was unable to understand the student, it responded, as a last resort, with a template statement asking the student to email the course instructor for clarification. Otherwise, if students were satisfied with the chatbot’s answers, they were redirected to a conversation such as the one shown in Fig. 1 below, where feedback was gathered. Students then rated their experience with the chatbot from 1 (poor support) to 5 (very good support). As the chatbot was hosted online, students could access it at any time, unrestricted by faculty office hours. A more in-depth technical description of the chatbot’s design can be found in Atmosukarto et al. (2021).

2.3 Sample

The target population consisted of matriculated pre-freshmen in the Food, Chemical and Biotechnology cluster in Academic Year 2021/2022 who were taking the CQ online programme. The target population was invited to take part in this study by interacting with the chatbot while undergoing CQ.

Twelve students were interested in participating; this group formed our target sample, which exceeded the minimum of five participants for appropriate qualitative depth (Nielsen & Landauer, 1993). As this study aimed to explore the experiences of students interacting with a communication immediacy chatbot, a topic with no prior literature, the relatively small size of the target sample was acceptable (Queirós et al., 2017). Similarly, although the results derived from this sample could be a good indicator of students’ studying behaviours, motivations, and emotions for future research to follow up on, they were not intended to be generalized (Sharma, 2010).

2.4 Data collection

The target sample had one month to complete CQ while interacting with the automated chatbot system. Two sources of qualitative data were collected from the target sample. The first source consisted of two verbal self-report qualitative questionnaires collected during the one-month period. These were audio recordings in which the 12 participants described their experiences interacting with the chatbot, following a set of guiding questions. For both questionnaires, participants were required to verbally rate their motivation level and overall experience, and to report the time spent on CQ and the number of times they used the chatbot. The first self-report questionnaire was obtained after their initial interaction with the chatbot while attempting CQ, whereas the second was obtained after the last time they interacted with the chatbot while completing CQ. This method, in which participants record their own data without prompts from a researcher, captures their thoughts and emotions immediately after interacting with the chatbot (Káplár-Kodácsy & Dorner, 2020). The second source of data came from 1-to-1 semi-structured interviews conducted after the 12 participants had interacted with the chatbot for the last time and completed CQ.

Interviews were conducted online through Zoom, a video-conferencing platform, in view of COVID-19 safe distancing measures, and were audio-recorded for transcription and analysis. The semi-structured format allowed participants to express opinions about the chatbot system beyond our list of questions (Appendix A) (McIntosh & Morse, 2015). All participants were interviewed by the same researcher for consistency of interaction, with each interview lasting roughly 30 min. The timeline and types of data collected are depicted in Fig. 2 below. All participants were compensated S$80 in cash for providing two verbal self-report questionnaire recordings and one interview recording; these data were transcribed semi-verbatim and stored in NVivo for analysis. Both raw and processed participant data were password-protected and accessible only to the researchers of this project. To prevent identification, only anonymised qualitative data are reported in the next section.

2.5 Thematic analysis

As the primary aim of this study relied on the experiential interactions between the target sample and the CQ chatbot, thematic analysis was employed (Braun & Clarke, 2006). The six-step thematic analysis allowed the identification of important common themes (Herzog et al., 2019) within the verbal self-report questionnaire and interview data. Each audio recording was listened to at least three times, both during and after transcription, for data familiarisation (Step 1; Herzog et al., 2019). Words or phrases repeated by the participants multiple times were used to generate the initial list of codes (Step 2), before the codes were arranged into several overarching themes (Step 3). These themes were then reviewed against the relevant aspects of communication immediacy discussed in the previous section (Step 4) to exclude irrelevant content and refine the list of themes. Finally, the themes were named (Step 5) in relation to communication immediacy as experienced by the participants and reported in this paper (Step 6).

3 Results

Three major themes emerged from the qualitative data; these were the most frequently discussed topics regarding the effectiveness of the chatbot in delivering Chemistry-related academic content. The three themes concerned (1) how well the chatbot understood students, (2) the emotional and behavioural effects of the chatbot’s replies, and (3) the speed and quality of the chatbot’s interaction.

3.1 Chatbot comprehension ability

From the students’ perspective, providing correct answers was the chatbot’s main purpose. Some students found the chatbot useful when it worked correctly. One student pointed out that the chatbot was intelligent enough to supplement their learning by highlighting relevant areas to focus on rather than simply supplying the answer.

“When I figure out how to use it, I think [the] answers that was given were strategic. They would tell you how to get there instead of just saying the answer.”

“Then while using the chat[bot] function, [I] sometimes have some good experiences. I ask about this certain topic, it will give me an answer … sometimes when I’m doing a question, they will link me a video to watch …”

However, the chatbot did not provide a correct response all the time. Even for the same student, using the chatbot at different times and with different questions yielded responses that had varying levels of usefulness. In some instances, as the quotes above support, the chatbot was helpful and educational, while in other instances, the chatbot was completely unhelpful. This inconsistency was not lost on the students, and most of their critical feedback revolved around the limited comprehension ability of the CQ chatbot.

“Because sometimes I find that it is useful then on the downside it does not feel helpful… after using the chat, I either feel more confused or gain more about the topic”

“When I try to ask [a] question, most of the time it gives me basic definition[s]; it cannot answer complex questions.”

As a result, participants found that the chatbot hindered their learning of Chemistry. Students reported that the chatbot could not understand their questions and hence dispensed random answers relating to a different topic, told them to ask a different question, or suggested that they contact a course instructor. This issue clearly interfered with the educational capability of the CQ chatbot.

“I realized that the chat couldn't really answer a lot of my queries, it didn't understand my vocabulary and the things that I’m asking”

The chatbot’s inability to fully comprehend the student’s questions led to incomplete responses. One student brought up the point that instead of letting them know how to solve a Chemistry question, the chatbot just provided them with a definition.

“It just didn’t show the steps in detail like step one, step two, step three. It just shows like the whole definition.”

3.2 Emotional and behavioural effect

Interacting with the chatbot led to certain emotional and behavioural outcomes, some perceived more positively than others. Students reported positive emotional effects when the chatbot correctly picked up on their questions. In these cases, students felt more confident in their ability to answer Chemistry questions, and they reported that their overall proficiency improved when the chatbot explained specific processes well or redirected them to more in-depth video resources.

“It is good at explaining definitions… It doesn’t tell you how to approach the question, it doesn’t spoon feed you… the chat compensates for this by recommending other online resources”

“I think what the tutor gave was a more strategic [response] on how to derive the answer, which I think was a very good move because I could still feel that I am solving the question on my own”

A behavioural change brought up by the students was an increase in self-directed learning. Through interacting with the chatbot, students felt that they were missing key points in their Chemistry learning and sought knowledge by themselves, mainly by studying Chemistry content outside the scope of Chem Quest (CQ), for example via the Google search engine. Although CQ was intended to be a refresher course, some students were inspired to learn beyond the basic concepts covered.

“After using the chat function, I realize that I did not really understand fully or grasp the concept properly and hence have to Google and watch more videos to understand”

Some students reported experiencing negative emotions when interacting with the chatbot. Frustration was commonly brought up, as students had to cope with the chatbot providing the wrong type of hints to Chemistry questions. Many were annoyed that the chatbot could not perform simple functions, such as understanding their questions.

“At that point of time, I know that it is [automated] as it keeps on saying the same thing again and again, then I have to reply [to] the same thing again and again which got me frustrated”

Other students were more understanding, reasoning that they should have phrased their questions in different ways to get different types of hints from the chatbot. Yet, they were still unable to obtain a response that was helpful to them. One student, quoted below, believed that their inability to ask the right questions was the main factor behind the chatbot’s inaccurate replies.

“I ask the question, then instead of explaining, it was defining things for me… so, at the end of the day I ask myself if I’m asking the wrong question”

Even though some students found the chatbot useful, others reported a decrease in chatbot usage between the time they first started Chem Quest and the time they completed the online course. This group of students was disinclined to use the chatbot because the answers provided were not helpful.

“I felt that it was not useful and slowly [stopped] using it. It is because, when I ask the questions to the chat, it did not really answer it”

3.3 Speed and quality

Students discussed how the chatbot interacted positively with them, including its warm and encouraging tone of the kind one would expect from a human being. With regard to the chatbot’s round-the-clock accessibility, many students praised its speed, saying that they did not have to wait long. While they were positive about the chatbot’s speed, students also understood its limitations.

“Asked simple questions, like formula required for the questions. Feel that it is very helpful as it is very fast, hence very useful rather than going to google”

However, not all students believed that the chatbot was more useful than search engines. With the chatbot’s sometimes unhelpful responses, students debated whether to ask the chatbot or to use a search engine for help.

“I feel that every time I want to ask a question, there are two things I think of: 1) Is the question worth asking, 2) can I find it on Google”

In terms of interaction, students enjoyed the natural, even informal, conversations with the chatbot, as reflected in their positive feedback on the chatbot’s ability to be casual.

“The chat is actually quite interactive, and fun [as] they put in emojis … they [are] also very patient for my answer or doubts that I have”

Despite this, some students desired more human interaction. One issue raised was a lack of memory continuity, as the chatbot was unable to reference past conversations. Similarly, the abundance of template replies indicated a lack of variety, occasionally making the chatbot sound very robotic to students.

“If you asked [a] professor or maybe your classmate… there will be human elements in it, but if you ask a chatbot… it will just show template replies which is pretty frustrating”

Despite the positive feedback and the impracticality of providing a live tutor 24/7, some students preferred the more traditional method of seeking help, i.e., interacting with a human expert. They were hesitant about using the chatbot after experiencing its autogenerated replies.

“That is the only thing I don't like because I prefer the chat function to [be operated] by a real time tutor. Because they will actually answer questions [not] automatically generated by the AI”

4 Discussion

Based on the qualitative results, our chatbot is well positioned to provide Chemistry resources to students taking CQ, fostering a sense of confidence and an increase in self-directed study behaviours. At the same time, the chatbot is fast and personable, moving away from robotic replies that have little effect on student engagement levels (Hasan et al., 2020). The overall findings suggest that the chatbot showed some degree of synchronous instructor presence, along with high levels of communication immediacy that could lead to increased interaction quality with the students in CQ (Saba, 2018). This means that a modified version of our automated QA chatbot has the potential to be deployed in education. Yet, because its occasionally inconsistent comprehension led to educationally unhelpful responses, it may be some time before this chatbot performs to the best of its abilities, much less replaces a real-life instructor. The results do show technical issues in the chatbot’s conversational ability, both in understanding students and in responding with relevant replies, that require further work. Unfortunately, current chatbot technology does not handle longer questions well, which is why certain dialogue was not properly understood, reducing response accuracy. This discussion first expands on and contextualises these results with Mehrabian’s concept of communication immediacy (Mehrabian, 1971), followed by some educational implications.

With regard to communication immediacy, the chatbot can be seen to promote synchronous communication between the software and the student. The chatbot employed several communication techniques, such as inserting emoticons (Fig. 3), using a more casual greeting, and referring to students by name (Rocca, 2007), to be more relatable (Ruan et al., 2020). In addition, it replied within seconds at all hours, without the need to schedule a meeting. Quick and informal replies could (1) make the chatbot more approachable, increasing the likelihood of students turning to it again in the future, and (2) offer immediate feedback (Liu, 2021), allowing students to correct themselves if they were solving a question wrongly or affirming that they were on the right track (Law et al., 2020). According to Winkler and Söllner (2018), such formative and instantaneous feedback allows students to be more in control of their studying behaviours, strengthening their self-efficacy. As a result of this increased self-regulation of study strategies, students would be more motivated and confident to adopt a deeper approach to learning and to expand their knowledge beyond the online course curriculum (Law et al., 2020; Winkler & Söllner, 2018). This can be observed in the qualitative results, where participants reported wanting to learn beyond the subjects taught in CQ. With the assistance of the CQ chatbot, they were even more assured of their decision.

Additionally, the 1-to-1 personal and private interaction between the chatbot and student may be another factor that increases the attentiveness and interest of the student, compared with a larger class where attention may be more diluted. The personal interaction is comparable to the smallest possible class size, where full attention is given to the student, potentially with a simultaneous increase in rapport (Cunningham-Nelson et al., 2019). On a similar note, the chatbot’s natural privacy, whereby students are virtually separated from other students’ queries and presence, could prompt more “embarrassing” questions that students would be shy to ask in front of their peers (Hwang et al., 2002). This means that more basic questions, such as those relating to the foundations of Chemistry, may be better elicited by a chatbot than in class, improving learning speed. The value of private chatbot interactions (Medhi Thies et al., 2017) is not limited to education: Yadav et al.’s (2019) “Feedpal” chatbot, which was designed to distribute breastfeeding educational materials in India, saw consistent use as women could ask culturally sensitive questions without being identified or shamed.

At the same time, the CQ chatbot could improve in several areas relating to communication immediacy. For one, immediacy in communicating with students involves more than replying fast (Alharbi & Dimitriadi, 2018); the biggest concern of participants in this study was that the answers were not fully accurate. As the qualitative results show, students felt that they were not discussing Chemistry questions with an open and knowledgeable expert. Students expect the other party to be proficient in teaching Chemistry, and this sense was mostly lost when the chatbot repeatedly responded with unhelpful replies. Many students emphasised this in their interviews, saying they were hesitant to use the chatbot long-term as it could not compare to an actual person. To complicate matters, the chatbot’s inconsistent comprehension ability undermined some of the communication immediacy techniques and educational strategies designed as integral to it. The personalisation and hint-by-hint scaffolding system occasionally could not be delivered to students, leaving them more frustrated by the end of CQ and questioning the chatbot’s credibility despite an overall pleasant experience. This went so far that one student commented that they “slowly stopped using it”, as the chatbot was not giving them answers useful for studying CQ. A perception that the chatbot was not fully integrated into CQ and their learning goals would decrease students’ persistence in testing out and interacting with the chatbot over time, as studies of technology-enhanced learning tools (TELTs) have supported (Dubey & Sahu, 2021; Peart et al., 2017).

Measured against Satow’s (2017) six-level model of AI teaching assistants for learning facilitation, listed below, the CQ chatbot falls somewhere between Level 3 and Level 4: it was able to meet some student learning objectives (Smutny & Schreiberova, 2020), albeit not all the time.

- Level 1: The teaching assistant welcomes new learners with personalized messages
- Level 2: The teaching assistant recommends learning materials and suggests next steps, possible collaborators, and professionals for cooperative learning
- Level 3: The teaching assistant responds to common questions posted by students
- Level 4: The teaching assistant establishes the steps to meet learning objectives and supervises the improvement of learning
- Level 5: The teaching assistant gives personalized comments
- Level 6: The teaching assistant offers individualized comments and endorsements, analyses individual learning requests, and provides tutoring instructions

Overall, it is encouraging that some of the communication techniques planned for the CQ chatbot were displayed as intended. Yet, as the chatbot’s limited understanding of students’ dialogue led to some unhelpful responses, there is clearly room to incorporate the findings of this study. We have therefore condensed these findings into a simple framework for teachers and instructors to consider when developing an affective-focused educational chatbot. This framework, divided into essential and programmed chatbot attributes, is shown in Fig. 4.

Essential chatbot attributes, namely a private interaction and fast, accurate answers, are features included in any SaaS chatbot, such as the CQ chatbot (Atmosukarto et al., 2021). As described above, students who know that their questions are kept confidential from other students, and who receive the chatbot tutor’s full attention, may be more inclined to ask embarrassingly simple questions and learn rapidly. Similarly, the speed and usefulness of a chatbot’s responses are highly regarded attributes, allowing students to perceive the chatbot as both knowledgeable and willing to help. Any educational chatbot QA system should almost always feature these two attributes; however, until recently they have rarely been at the forefront of interaction quality research (e.g., Gnewuch et al., 2018). We propose that teachers and instructors more deliberately include essential chatbot attributes in their interaction quality research, to foster non-verbal communication immediacy in online study that lacks one-to-one human-to-human interaction. This can be accompanied by data collection exploring questions such as “Does the student perceive the chatbot interaction to be exclusive?” and “How important is chatbot response speed to students?”

On the other hand, programmed chatbot attributes are features that must be purposefully built into a chatbot’s responses as part of verbal communication immediacy, to ensure that the chatbot is affectively focused. These attributes include a casual greeting and addressing students by name, which enhance the chatbot’s social presence. A student opens the chatbot, reads responses personally directed at them, and may connect with the chatbot on a social and psychological level (Rizomyliotis et al., 2022). Emoticons and expressions come next: besides emphasising the approachable nature of the chatbot, they introduce textual variety. In the CQ chatbot, for instance, phrases such as “Thanks for your feedback! Good luck completing Chem Quest [emoticon]” or “Awesome! [emoticon] Glad to see you're getting the hang of it!” use emoticons to pictorially express the chatbot’s intention along with its Chemistry background. Lastly, asking students proactive questions is an integral part of classroom communication immediacy (Gorham, 1988; Wendt & Courduff, 2018) that can also be used in chatbot-human interaction. One potential application is to check whether the student understood a chatbot’s response, thereby gauging the student’s current competency. By asking “Do you know how to solve the question?” after providing some hints, the chatbot can gauge whether the hints were useful. Such a method eliminates the need for students to repeatedly ask for more hints, streamlining their interactions with the chatbot.

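The programmed attributes described above can be illustrated with a short sketch of a hint-by-hint exchange that greets the student by name, adds an emoticon, and asks the proactive question after each hint. This is a minimal, hypothetical Python sketch, not the CQ chatbot’s implementation (which was built on a SaaS chatbot platform); the `Session` class, the hint texts, the `YES_WORDS` set, and the function names are assumptions made purely for illustration.

```python
from dataclasses import dataclass

# Hypothetical hint bank: question id -> hints ordered from general to specific.
HINTS = {
    "q1": [
        "Start by writing the balanced chemical equation.",
        "Convert the given mass of reactant to moles using its molar mass.",
        "Use the mole ratio from the balanced equation to find the product.",
    ],
}

# Hypothetical set of replies treated as "yes, I can solve it now".
YES_WORDS = {"yes", "y", "yeah", "yep", "got it", "i know"}


@dataclass
class Session:
    """Per-student conversation state (hypothetical structure)."""
    student_name: str
    question_id: str
    hints_given: int = 0
    solved: bool = False


def greet(session: Session) -> str:
    # Programmed attributes: casual greeting, the student's name, an emoticon.
    return f"Hi {session.student_name}! Which Chem Quest question are you stuck on? :)"


def next_hint(session: Session) -> str:
    hints = HINTS[session.question_id]
    if session.hints_given >= len(hints):
        # Out of scaffolding steps: hand over instead of looping forever.
        return "That's all the hints I have for this one. Try asking your instructor!"
    hint = hints[session.hints_given]
    session.hints_given += 1
    # Proactive question: check understanding instead of waiting for another request.
    return f"Hint {session.hints_given}: {hint} Do you know how to solve the question?"


def handle_reply(session: Session, reply: str) -> str:
    # Normalise the reply: trim whitespace, lowercase, drop trailing punctuation.
    answer = reply.strip().lower().rstrip("!.?")
    if answer in YES_WORDS:
        session.solved = True
        return (f"Awesome, {session.student_name}! "
                "Glad to see you're getting the hang of it!")
    # Any other answer counts as "not yet", so the next hint is offered
    # without the student having to ask for it again.
    return next_hint(session)


if __name__ == "__main__":
    s = Session(student_name="Alex", question_id="q1")
    print(greet(s))
    print(next_hint(s))
    print(handle_reply(s, "not really"))
    print(handle_reply(s, "Yes!"))
```

Treating any reply other than a recognised “yes” as “not yet” is what removes the need for students to keep requesting hints. The fixed word list is a deliberate simplification; in practice this step depends on the kind of word detection that participants found unreliable, so a deployed chatbot would need more robust intent recognition.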

4.1 Other implications of educational chatbots

There are other implications to consider when incorporating a chatbot into a pre-university programme. First, for the past two years, both new and existing undergraduates have had to familiarise themselves with online and distance learning because of COVID-19 (Kukul, 2021). The global pandemic transformed much of how education is imagined and delivered, including Chem Quest (Atmosukarto et al., 2021). In practical terms, virtual lessons using teleconferencing software were the norm, and school assignments were modified to be fully online. Students had to contend with not meeting teachers in person and cope with more asynchronous communication. Interestingly, this might have conditioned students to be more comfortable with text-based interactions for academic assistance, including the textual responses of the CQ chatbot. On the other hand, the pandemic could have reduced students’ motivation to complete CQ and use the chatbot. Gaglo et al. (2021), for example, argued that many variables affected students during this period, including decreased productivity from studying at home, poor perceptions of e-learning, and even psychological distress. Therefore, based on this study’s findings alone, it may be difficult to fully characterise students’ reactions and expectations when interacting with an educational chatbot.

Second, the educational impact of introducing an automated AI-led chatbot into a previously instructorless course such as CQ is another point to consider. Most students prefer face-to-face communication (Gallardo-Echenique et al., 2016), as did some in this study. For them, a slight delay in finding a suitable meeting time was preferable to interacting with an automated system. This preference may be compounded by a cognitive bias about how academic information is transmitted. Would information be more credible if delivered by a human being in person rather than as text from a chatbot? What about an online representation of a face, such as through video teleconferencing software? In other settings, such as delivering weather forecasts (Kim et al., 2022b), informing museum visitors (Schmidt et al., 2019), or persuading online shoppers to purchase items (Tan & Liew, 2020), AI-led virtual representations of subject-matter experts have been linked to a rise in information credibility. Indeed, Human–Machine Communication studies have begun to conceptualise both immediacy and information credibility for the purposes of education (Edwards et al., 2018). Adding a face, either digitally generated or recorded from a human, may be one direction for the CQ chatbot to take to increase students’ trust in the software.

Lastly, if automated chatbots are to become the norm in education, the type of help they provide must be explored in greater depth. One way of doing so is to turn to scaffolding theory (Wood et al., 1976), which emphasises personalized learning. As the chatbot becomes more sophisticated in future runs, its AI-led NLP engine, the “brains” of the chatbot, needs room to learn more effective teaching and scaffolding techniques. For example, a hint-and-reminder approach may not work as well for students who take a deeper approach to learning, who may be more inclined towards debating or seeking alternative academic explanations. Making the chatbot a more active participant in learning could encourage a more reflective form of studying and increase engagement. Jumaat and Tasir’s (2014) meta-analysis of online instructional scaffolding identified four main types (procedural, conceptual, strategic, and metacognitive scaffolding), which can be integrated to support students in different ways. Of these, metacognitive scaffolding is the most appropriate for promoting higher-order thinking, helping students reflect on what they have learned, and assisting individual learning management (Reingold et al., 2008; Teo & Chai, 2009). One example, although not a chatbot, is Ayedoun et al.’s (2020) computer-based approach to teaching students with different levels of willingness using varied scaffolding and fading strategies. In moving towards a more adaptive educational chatbot, our system could benefit from incorporating more scaffolding theory and working examples.

4.2 Limitations

There were some limitations in conceptualising and carrying out this research. Firstly, the participants in this study did not behave in a naturalistic manner: they were explicitly informed that they had to interact with the chatbot as part of the pilot programme. This may have skewed their persistence in engaging with the chatbot; some of the 12 students might not have used the chatbot at all as part of their normal study process.

Next, the chatbot was developed solely to help students study Chemistry with CQ. This meant that we were largely dependent on the structure of the CQ programme, including its timeline, completion criteria, and mode of assessment. The one-month timeline may have been too short for us to observe meaningful interactions between students and the chatbot. Compounding this, CQ was optional to complete, and we cannot determine how this affected student engagement with the chatbot. Ultimately, this study’s results are less generalisable to educational programmes unlike CQ.

5 Conclusion

The Chem Quest chatbot shows potential for increasing communication immediacy during online distance education. Owing to its system design, the chatbot could deploy synchronous communication techniques such as near-immediate responses and all-day availability, as well as affective techniques such as replying in a friendly, emotive tone and referring to students by name. Together, these techniques allow the chatbot to be present and receptive to any question relating to the CQ programme, which can increase the effectiveness of online or distance education. We therefore hope that the chatbot can offset some of the negative effects of studying during the COVID-19 pandemic.

Yet, it is also clear that the chatbot requires further development in certain areas of its programming, its biggest weakness being its understanding of students’ dialogue. This weakness greatly affected its ability to provide Chemistry information and hints: the chatbot would give unhelpful responses, leaving students frustrated and hesitant to ask a new question. This matters all the more because accessible alternatives exist, such as video resources and internet search engines; the present chatbot could easily be overshadowed if these issues are not resolved.

This paper shows that communication immediacy and response accuracy are equally important for conversational chatbots in similar educational settings. At the same time, educational chatbot research is still in its infancy, and much more can be done to expand the breadth and depth of this field. We propose one major research direction for this project: as teaching constructs such as immediacy were examined qualitatively in this paper, future work could measure these constructs with validated quantitative instruments. For educators, the resulting statistics could support longitudinal analysis of a cohort or a more quantifiable comparison between cohorts of students. This could be combined with a more experimental methodology in which students are randomly assigned to complete CQ using either the chatbot or a different mode of academic assistance, such as meeting with faculty or using online search engines. Such a design would let us determine the chatbot’s effectiveness in delivering Chemistry information relative to other existing methods.
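As an illustration of the proposed experimental design, random allocation and a first comparison of outcome scores might look like the minimal Python sketch below. The arm names, the fixed seed, the student identifiers, and the use of Welch’s t statistic are all assumptions for illustration rather than a design specified by this study; a full analysis would use scores from a validated instrument and a complete test such as SciPy’s `scipy.stats.ttest_ind` with `equal_var=False`, which reports the same statistic together with a p-value.

```python
import random
import statistics
from math import sqrt

# Hypothetical study arms: the chatbot versus two alternative modes of help.
ARMS = ("chatbot", "faculty_meeting", "search_engine")


def assign_arms(student_ids, arms=ARMS, seed=2022):
    """Randomly assign students to arms whose sizes differ by at most one.

    Shuffling with a seeded generator and then dealing students out
    round-robin keeps the allocation random, balanced, and reproducible.
    """
    rng = random.Random(seed)
    ids = list(student_ids)
    rng.shuffle(ids)
    return {sid: arms[i % len(arms)] for i, sid in enumerate(ids)}


def welch_t(a, b):
    """Welch's t statistic for two independent samples (unequal variances)."""
    var_a, var_b = statistics.variance(a), statistics.variance(b)
    standard_error = sqrt(var_a / len(a) + var_b / len(b))
    return (statistics.mean(a) - statistics.mean(b)) / standard_error


if __name__ == "__main__":
    # Hypothetical student identifiers S01..S12.
    allocation = assign_arms([f"S{n:02d}" for n in range(1, 13)])
    for arm in ARMS:
        print(arm, sorted(s for s, a in allocation.items() if a == arm))
```

Dealing shuffled students out round-robin keeps the arms balanced and the allocation reproducible. With a cohort as small as this pilot’s 12 students, however, each arm would hold only four students, so any such comparison would have very little statistical power.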

Data availability

The datasets generated and analysed during the current study are not publicly available due to individual privacy concerns, but anonymized copies are available from the corresponding author on reasonable request.

References

Al Ghamdi, A. (2017). Influence of Lecturer Immediacy on Students’ Learning Outcomes: Evidence from a Distance Education Program at a University in Saudi Arabia. International Journal of Information and Education Technology, 7(1), 35–39. https://doi.org/10.18178/ijiet.2017.7.1.838

Alharbi, S., & Dimitriadi, Y. (2018). Instructional immediacy practices in online learning environments. Journal of Education and Practice, 9(6). https://www.iiste.org/Journals/index.php/JEP/article/view/41141/42304. Accessed 1 Mar 2022.

Arbaugh, J. B. (2001). How Instructor Immediacy Behaviors Affect Student Satisfaction and Learning in Web-Based Courses. Business Communication Quarterly, 64(4), 42–54. https://doi.org/10.1177/108056990106400405

Arbaugh, J. B. (2010). Sage, guide, both, or even more? An examination of instructor activity in online MBA courses. Computers & Education, 55, 1234–1244. https://doi.org/10.1016/j.compedu.2010.05.020

Atmosukarto, I., Cheow, W.S., Iyer, P., Ng, H.T., & Kelly, W.P.Y. (2021). Enhancing Adaptive Online Chemistry Course with AI-Chatbot. International Conference on Teaching, Assessment, and Learning for Engineering (TALE), Wuhan, China. Institute of Electrical and Electronics Engineers (IEEE). https://doi.org/10.1109/TALE52509.2021.9678528.

Ayedoun, E., Hayashi, Y., & Seta, K. (2020). Toward Personalized Scaffolding and Fading of Motivational Support in L2 Learner-Dialogue Agent Interactions: An Exploratory Study. IEEE Transactions on Learning Technologies, 13(3), 604–616. https://doi.org/10.1109/TLT.2020.2989776

Bawa, P. (2016). Retention in Online Courses: Exploring Issues and Solutions—A Literature Review. SAGE Open, 6(1), 1–11. https://doi.org/10.1177/2158244015621777

Benedetto, L., & Cremonesi, P. (2019). Rexy, A Configurable Application for Building Virtual Teaching Assistants. In: Lamas, D., Loizides, F., Nacke, L., Petrie, H., Winckler, M., & Zaphiris, P. (eds.) Human-Computer Interaction – INTERACT 2019. Lecture Notes in Computer Science, 11747. Springer. https://doi.org/10.1007/978-3-030-29384-0_15.

Benedetto, L., Cremonesi, P., & Parenti, M. (2018). A Virtual Teaching Assistant for Personalized Learning. 27th ACM International Conference on Information and Knowledge Management (CIKM 2018), Torino, Italy. http://ceur-ws.org/Vol-2482/paper51.pdf. Accessed 1 March 2022.

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa.

Calle, M., Narváez, E., & Maldonado-Mahauad, J. (2021). Proposal for the Design and Implementation of Miranda: A Chatbot-Type Recommender for Supporting Self-Regulated Learning in Online Environments. LALA’21: IV Latin American Conference on Learning Analytics. http://ceur-ws.org/Vol-3059/paper2.pdf. Accessed 1 August 2022.

Chaudhry, M. A., & Kazim, E. (2022). Artificial Intelligence in Education (AIEd): A high-level academic and industry note 2021. AI and Ethics, 2, 157–165. https://doi.org/10.1007/s43681-021-00074-z

Chesebro, J. L. (2003). Effects of teacher clarity and nonverbal immediacy on student learning, receiver apprehension and affect. Communication Education, 52(2), 135–147. https://doi.org/10.1080/03634520302471

Cunningham-Nelson, S., Boles, W., Trouton, L., & Margerison, E. (2019). A review of chatbots in education: Practical steps forward. In 30th Annual Conference for the Australasian Association for Engineering Education, Australia. https://search.informit.org/doi/10.3316/INFORMIT.068364390172788. Accessed 1 Aug 2022.

Deriu, J., Rodrigo, A., Otegi, A., Echegoyen, G., Rosset, S., Agirre, E., & Cieliebak, M. (2021). Survey on evaluation methods for dialogue systems. Artificial Intelligence Review, 54, 755–810. https://doi.org/10.1007/s10462-020-09866-x

Dubey, P., & Sahu, K. K. (2021). Students’ perceived benefits, adoption intention and satisfaction to technology-enhanced learning: Examining the relationships. Journal of Research in Innovative Teaching & Learning, 14(3), 310–328. https://doi.org/10.1108/JRIT-01-2021-0008

Edwards, C., Edwards, A., Spence, P. R., & Lin, X. (2018). I, teacher: Using artificial intelligence (AI) and social robots in communication and instruction. Communication Education, 67(4), 473–480. https://doi.org/10.1080/03634523.2018.1502459

Fabriz, S., Mendzheritskaya, J., & Stehle, S. (2021). Impact of Synchronous and Asynchronous Settings of Online Teaching and Learning in Higher Education on Students’ Learning Experience During COVID-19. Frontiers in Psychology, 12. https://doi.org/10.3389/fpsyg.2021.733554.

Feng, D., Shaw, E., Kim, J., & Hovy, E. (2006). An Intelligent Discussion-Bot for Answering Student Queries in Threaded Discussions. 11th International Conference on Intelligent User Interfaces, Sydney, Australia. Association for Computing Machinery. https://doi.org/10.1145/1111449.1111488.

Francescucci, A., & Rohani, L. (2019). Exclusively Synchronous Online (VIRI) Learning: The Impact on Student Performance and Engagement Outcomes. Journal of Marketing Education, 41(1), 60–69. https://doi.org/10.1177/0273475318818864

Gaglo, K., Degboe, B.M., Kossingou, G.M., & Ouya, S. (2021). Proposal of conversational chatbots for educational remediation in the context of covid-19. International Conference on Advanced Communications Technology (ICACT), PyeongChang, South Korea. Institute of Electrical and Electronics Engineers (IEEE). https://doi.org/10.23919/ICACT51234.2021.9370946.

Gallardo-Echenique, E., Bullen, M., & Marqués-Molías, L. (2016). Student communication and study habits of first-year university students in the digital era. Canadian Journal of Learning and Technology, 42(1). https://doi.org/10.21432/T2D047

Gazulla, E. D., Martins, L., & Fernández-Ferrer, M. (2022). Designing learning technology collaboratively: Analysis of a chatbot co-design. Education and Information Technologies. https://doi.org/10.1007/s10639-022-11162-w

Gnewuch, U., Morana, S., Adam, M., & Maedche, A. (2018). Faster is Not Always Better: Understanding the Effect of Dynamic Response Delays in Human-Chatbot Interaction. Twenty-Sixth European Conference on Information Systems (ECIS2018), Portsmouth, UK. AIS Electronic Library (AISeL). https://aisel.aisnet.org/ecis2018_rp/113. Accessed 9 December 2022.

Goodboy, A.K., Weber, K., & Bolkan, S. (2009). The effects of nonverbal and verbal immediacy on recall and multiple student learning indicators. Journal of Classroom Interaction, 44(1), 4–12. https://www.jstor.org/stable/23869286. Accessed 1 March 2022.

Gorham, J. (1988). The relationship between verbal teacher immediacy behaviors and student learning. Communication Education, 37(1), 40–53. https://doi.org/10.1080/03634528809378702

Hasan, M. A., Noor, N. F. M., Rahman, S. S. B. A., & Rahman, M. M. (2020). The Transition from Intelligent to Affective Tutoring System: A Review and Open Issues. IEEE Access, 8, 204612–220463. https://doi.org/10.1109/ACCESS.2020.3036990

Herzog, C., Handke, C., & Hitters, E. (2019). Analyzing Talk and Text II: Thematic Analysis. In: Van den Bulck, H., Puppis, M., Donders, K., & Van Audenhove, L. (Eds.) The Palgrave Handbook of Methods for Media Policy Research. Palgrave Macmillan. https://doi.org/10.1007/978-3-030-16065-4_22.

Hwang, A., Ang, S., & Francesco, A. M. (2002). The Silent Chinese: The Influence of Face and Kiasuism on Student Feedback-Seeking Behaviors. Journal of Management Education, 26(1), 70–98. https://doi.org/10.1177/105256290202600106

Jumaat, N.F., & Tasir, Z. (2014). Instructional scaffolding in online learning environment: A meta-analysis. In 2014 International Conference on Teaching and Learning in Computing and Engineering, Kuching, Malaysia (pp. 74–77). https://doi.org/10.1109/LaTiCE.2014.22

Káplár-Kodácsy, K., & Dorner, H. (2020). The use of audio diaries to support reflective mentoring practice in Hungarian teacher training. International Journal of Mentoring and Coaching in Education, 9(3), 257–277. https://doi.org/10.1108/IJMCE-05-2019-0061

Katchapakirin, K., Anutariya, C., & Supnithi, T. (2022). ScratchThAI: A conversation-based learning support framework for computational thinking development. Education and Information Technologies. https://doi.org/10.1007/s10639-021-10870-z

Kim, J., Lee, H., & Cho, Y. H. (2022a). Learning design to support student-AI collaboration: Perspectives of leading teachers for AI in education. Education and Information Technologies, 27, 6069–6104. https://doi.org/10.1007/s10639-021-10831-6

Kim, J., Xu, K., & Merill Jr., K. (2022b). Man vs. machine: Human responses to an AI newscaster and the role of social presence. The Social Science Journal. https://doi.org/10.1080/03623319.2022.2027163.

Kuhail, M. A., Alturki, N., Alramlawi, S., & Alhejori, K. (2022). Interacting with educational chatbots: A systematic review. Education and Information Technologies. https://doi.org/10.1007/s10639-022-11177-3

Kukul, V. (2021). On Becoming an Online University in an Emergency Period: Voices from the Students at a State University. Open Praxis, 13(2), 172–183. https://doi.org/10.5944/openpraxis.13.2.127

Law, Y.K., Tobin, R.W., Wilson, N.R., & Brandon, L.A. (2020). Improving Student Success by Incorporating Instant-Feedback Questions and Increased Proctoring in Online Science and Mathematics Courses. Journal of Teaching and Learning with Technology, 9, 64–78. https://doi.org/10.14434/jotlt.v9i1.29169.

Lin, X., & Gao, L. (2020). Students’ sense of community and perspectives of taking synchronous and asynchronous online courses. Asian Journal of Distance Education, 15(1), 169–179. ISSN-1347–9008.

Liu, W. (2021). Does Teacher Immediacy Affect Students? A Systematic Review of the Association Between Teacher Verbal and Non-verbal Immediacy and Student Motivation. Frontiers in Psychology, 12. https://doi.org/10.3389/fpsyg.2021.713978.

McIntosh, M. J., & Morse, J. M. (2015). Situating and Constructing Diversity in Semi-Structured Interviews. Global Qualitative Nursing Research, 2, 1–12. https://doi.org/10.1177/2333393615597674

Medhi Thies, I., Menon, N., Magapu, S., Subramony, M., & O’Neill, J. (2017). How Do You Want Your Chatbot? An Exploratory Wizard-of-Oz Study with Young, Urban Indians. In: Bernhaupt, R., Dalvi, G., Joshi, A., Balkrishan, D. K., O'Neill, J., & Winckler, M. (eds) Human-Computer Interaction - INTERACT 2017. Lecture Notes in Computer Science, 10513. Springer, Cham. https://doi.org/10.1007/978-3-319-67744-6_28

Mehrabian, A. (1971). Silent messages. Wadsworth Publishing Company Inc.

Melrose, S. (2009). Instructional Immediacy Online. In Rogers, P., Berg, G., Boettcher, J., Howard, C., Justice, L., Schenk, K. (Eds.) Encyclopedia of Distance Learning Second Edition, 1212–1215. IGI Global.

Mihalcea, R., Corley, C., & Strapparava, C. (2006). Corpus-based and Knowledge-based Measures of Text Semantic Similarity. AAAI'06: Proceedings of the 21st national conference on Artificial intelligence – Volume 1, Boston, Massachusetts, 775–780. https://doi.org/10.5555/1597538.1597662.

Myers, S.A., Goodboy, A.K., & Members of COMM 600. (2014). College Student Learning, Motivation, and Satisfaction as a Function of Effective Instructor Communication Behaviors. Southern Communication Journal, 79(1), 14-26. https://doi.org/10.1080/1041794X.2013.815266.

Ni, S.F., & Aust, R. (2008). Examining Teacher Verbal Immediacy and Sense of Classroom Community in Online Classes. International Journal on E-Learning, 7(3), 477–498. https://www.learntechlib.org/primary/p/23633/. Accessed 1 March 2022.

Nielsen, J., & Landauer, T.K. (1993). A mathematical model of the finding of usability problems. INTERACT '93 and CHI '93 Conference on Human Factors in Computing Systems, Amsterdam, The Netherlands. Association for Computing Machinery. https://doi.org/10.1145/169059.169166.

Peart, D.J., Rumbold, P.L.S., Keane, K.M., & Allin, L. (2017). Student use and perception of technology enhanced learning in a mass lecture knowledge-rich domain first year undergraduate module. International Journal of Educational Technology in Higher Education, 14(40). https://doi.org/10.1186/s41239-017-0078-6.

Queirós, A., Faria, D., & Almeida, F. (2017). Strengths And Limitations of Qualitative and Quantitative Research Methods. European Journal of Education Studies, 3(9), 369–387. https://doi.org/10.5281/zenodo.887089

Rahimah, Juriah, N., Karimah, N., Hilmatunnisa, & Sandra, T. (2021). The Problem and Solutions for Learning Activities during Covid-19 Pandemic Disruption in Hidayatul Insan Pondok School. Bulletin of Community Engagement, 1(1), 13–20. https://doi.org/10.51278/bce.v1i1.87.

Ravi, S., Kim, J., & Shaw, E. (2007). Mining On-line Discussions: Assessing Technical Quality for Student Scaffolding and Classifying Messages for Participation Profiling. 13th International Conference of Artificial Intelligence in Education, Marina del Rey, USA. https://doi.org/10.1201/b10274-24.

Reingold, R., Rimor, R., & Kalay, A. (2008). Instructor's Scaffolding in Support of Student's Metacognition through a Teacher Education Online Course - A Case Study. Journal of Interactive Online Learning, 7(2), 139–151. http://www.ncolr.org/jiol. Accessed 16 August 2022.

Rizomyliotis, I., Kastanakis, M. N., Giovanis, A., Konstantoulaki, K., & Kostopoulos, I. (2022). “How mAy I help you today?” The use of AI chatbots in small family businesses and the moderating role of customer affective commitment. Journal of Business Research, 153, 329–340. https://doi.org/10.1016/j.jbusres.2022.08.035

Roberts, A., & Friedman, D. (2013). The Impact of Teacher Immediacy on Student Participation: An Objective Cross-Disciplinary Examination. International Journal of Teaching and Learning in Higher Education, 25(1), 38–46. https://files.eric.ed.gov/fulltext/EJ1016418.pdf. Accessed 1 March 2022.

Rocca, K. (2007). Immediacy in the Classroom: Research and Practical Implications. https://serc.carleton.edu/NAGTWorkshops/affective/immediacy.html. Accessed 1 March 2022.

Ruan, S., He, J., Ying, R., Burkle, J., Hakim, D., Wang, A., … Landay, J.A. (2020). Supporting children's math learning with feedback-augmented narrative technology. IDC '20: Proceedings of the Interaction Design and Children Conference, London, UK, 567–580. https://doi.org/10.1145/3392063.3394400

Rus, V., Cai, Z., & Graesser, A.C. (2007). Experiments on Generating Questions About Facts. In Gelbukh, A. (ed.) Computational Linguistics and Intelligent Text Processing. CICLing 2007. Lecture Notes in Computer Science, 4394. Springer. https://doi.org/10.1007/978-3-540-70939-8_39.

Rus, V., D’Mello, S., Hu, X., & Graesser, A. C. (2013). Recent Advances in Conversational Intelligent Tutoring Systems. AI Magazine, 34(3), 42–54. https://doi.org/10.1609/aimag.v34i3.2485

Saba, A.C. (2018). Student Perceptions of Instructor Immediacy in Online Program Courses [Doctoral dissertation]. Boise State University Theses and Dissertations. https://doi.org/10.18122/td/1505/boisestate.

Satow, L. (2017). Chatbots as Teaching Assistants: Introducing a Model for Learning Facilitation by AI Bots. Accessed 1 August 2022.

Scheu, S., & Benke, I. (2022). Digital Assistants for Self-Regulated Learning: Towards a State Of-The-Art Overview. ECIS 2022 Research-in-Progress Papers, 46. https://aisel.aisnet.org/ecis2022_rip/46. Accessed 1 August 2022.

Schmidt, S., Bruder, G., & Steinicke, F. (2019). Effects of virtual agent and object representation on experiencing exhibited artifacts. Computers & Graphics, 83, 1–10. https://doi.org/10.1016/j.cag.2019.06.002

Sharma, S. (2010). Qualitative Methods in Statistics Education Research: Methodological Problems and Possible Solutions. Eighth International Conference on Teaching Statistics (ICOTS8), Ljubljana, Slovenia. https://iase-web.org/documents/papers/icots8/ICOTS8_8F3_SHARMA.pdf. Accessed 1 August 2022.

Sinch Belgium BV. (2022). Chatlayer by Sinch. https://chatlayer.ai/. Accessed 1 March 2022.

Sindhgatta, R., Marvaniya, S., Dhamecha, T.I., & Sengupta, B. (2017). Inferring Frequently Asked Questions from Student Question Answering Forums. 10th International Conference on Educational Data Mining, Wuhan, China. https://eric.ed.gov/?id=ED596602. Accessed 1 August 2022.

Singapore Institute of Technology (SIT). (2021). Quests. https://www.singaporetech.edu.sg/colead/collaborations/student-resources/quests. Accessed 1 March 2022.

Skordis-Worrall, J., Haghparast-Bidgoli, H., Batura, N., & Hughes, J. (2015). Learning Online: A Case Study Exploring Student Perceptions and Experience of a Course in Economic Evaluation. International Journal of Teaching and Learning in Higher Education, 27(3), 413–422. https://www.isetl.org/ijtlhe/pdf/IJTLHE2041.pdf. Accessed 1 March 2022.

Smutny, P., & Schreiberova, P. (2020). Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Computers & Education, 151, 103862. https://doi.org/10.1016/j.compedu.2020.103862.

Tan, S., & Liew, T. W. (2020). Designing Embodied Virtual Agents as Product Specialists in a Multi-Product Category ECommerce: The Roles of Source Credibility and Social Presence. International Journal of Human-Computer Interaction, 36(12), 1136–1149. https://doi.org/10.1080/10447318.2020.1722399

Teo, Y.H., & Chai, C.S. (2009). Scaffolding Online Collaborative Critiquing for Educational Video Production. Knowledge Management & E-Learning: An International Journal, 1(1), 51–66. https://doi.org/10.34105/j.kmel.2009.01.005.

Velez, J. J., & Cano, J. (2008). The Relationship Between Teacher Immediacy and Student Motivation. Journal of Agricultural Education, 49(3), 76–86. https://doi.org/10.5032/jae.2008.03076

Waldrop, M. (2013). Online Learning: Campus 2.0. Nature, 495, 160–163. https://doi.org/10.1038/495160a

Walkem, K. (2014). Instructional immediacy in elearning. Collegian: The Australian Journal of Nursing Practice, Scholarship and Research, 21, 179–184. https://doi.org/10.1016/j.colegn.2013.02.004

Watts, L. (2016). Synchronous and Asynchronous Communication in Distance Learning: A Review of the Literature. Quarterly Review of Distance Education, 17(1), 23–32. ISSN-1528–3518.

Wen, D., Cuzzola, J., Brown, L., & Kinshuk, D. (2012). Instructor-aided asynchronous question answering system for online education and distance learning. The International Review of Research in Open and Distributed Learning, 13(5), 102–125. https://doi.org/10.19173/irrodl.v13i5.1269.

Wendt, J. L., & Courduff, J. (2018). The relationship between teacher immediacy, perceptions of learning, and computer-mediated graduate course outcomes among primarily Asian international students enrolled in an U.S. university. International Journal of Educational Technology in Higher Education, 15(33). https://doi.org/10.1186/s41239-018-0115-0.

Winkler, R., & Söllner, M. (2018). Unleashing the Potential of Chatbots in Education: A State-of-the-Art Analysis. 78th Annual Meeting of the Academy of Management. https://doi.org/10.5465/AMBPP.2018.15903abstract

Wood, D., Bruner, J. S., & Ross, G. (1976). The Role of Tutoring in Problem Solving. Journal of Child Psychology and Psychiatry, 17(2), 89–100. https://doi.org/10.1111/j.1469-7610.1976.tb00381.x

Yadav, D., Malik, P., Dabas, K., & Singh, P. (2019). FeedPal: Understanding Opportunities for Chatbots in Breastfeeding Education of Women in India. Proceedings of the ACM on Human-Computer Interaction, 3(CSCW), 1–30. https://doi.org/10.1145/3359272

Zhou, L., Gao, J., Li, D., & Shum, H. (2020). The Design and Implementation of XiaoIce, an Empathetic Social Chatbot. Computational Linguistics, 46(1), 53–93. https://doi.org/10.1162/COLI_a_00368

Acknowledgements

We thank all the student participants who provided data for Chem Quest, the chatbot, and this manuscript. We would also like to thank the SIT Centre for Learning Environment and Assessment Development (CoLEAD) for their support in conducting this study.

Funding

This study was fully funded by the Singapore Ministry of Education’s Tertiary Education Research Fund (MOE-TRF, MOE2019-TRF-014).

Author information

Contributions

Analysis of the data was performed by Jamil Jasin, He Tong Ng, and Wean Sin Cheow. The data was collected and processed by Ching Yee Pua. Indriyati Atmosukarto, Prasad Iyer, Faiezin Osman, and Peng Yu Kelly Wong developed the Chemistry database and chatbot for this study. The first draft of the manuscript was written by Jamil Jasin and Wean Sin Cheow, and all authors commented on subsequent versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Ethics approval

This study was approved by SIT’s Institutional Review Board (IRB), application no. 2020126.

Informed Consent

As data were collected from human participants, informed consent was obtained from all participants, and the consent records have been securely stored.

Appendix A Semi-structured Interview Questions

Background Information

- What do you enjoy the most for your classes? Traditional in-person classroom or online classes?
- When you’re studying, what kind of environment makes you focus better? (e.g., busy or a quiet environment)
- What do you need to learn a subject better? (e.g., notes, good documentation, studying together with friends, a tutor to answer questions live, …)
- In which moments is your motivation higher?

Regarding Chem Quest

- How many hours did you spend on Chem Quest?
- What did you think of the course difficulty level?
- Walk me through your experience the first time you took Chem Quest.

Regarding Chem Quest Chatbot

- Could you elaborate more on how you used the chat function/chatbot?
- What kind of questions did you ask?
- What do you think of the response from the chatbot?
- How did you find the overall interaction?
- On a scale from 1 to 10 how would you rate the effectiveness of the QA system?
- How satisfied or dissatisfied are you with the chat?

Chatbot Expectations

- What are the 3 most important things the QA system should do in order to be helpful?
- What should not go wrong in a QA system?
- If that was an assistant for all courses (other than Chem Quest), what would you improve for the future?
- If there were no limits, in an ideal world, how would the absence of a 24/7 tutor be solved?
- Would you like to share something more?

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Jasin, J., Ng, H.T., Atmosukarto, I. et al. The implementation of chatbot-mediated immediacy for synchronous communication in an online chemistry course. Educ Inf Technol 28, 10665–10690 (2023). https://doi.org/10.1007/s10639-023-11602-1

DOI: https://doi.org/10.1007/s10639-023-11602-1