Abstract

A comparative analysis is undertaken to explore the impact of various roughness characterization methods as input variables on the performance of data-driven predictive models for estimating the roughness equivalent sand-grain size \(k_s\). The first type of model, denoted as \(\text {ENN}_\text {PS}\), incorporates the roughness height probability density function (p.d.f.) and power spectrum (PS), while the second type of model, \(\text {ENN}_\text {PA}\), utilizes a finite set of 17 roughness statistical parameters as input variables. Furthermore, a simplified parameter-based model, denoted as \(\text {ENN}_\text {PAM}\), is considered, which features only 6 input roughness parameters. The models are trained based on identical databases and evaluated using roughness samples similar to the training databases as well as an external testing database based on literature. While the predictions based on p.d.f. and PS achieves a stable error level of around 10% among all considered testing samples, a notable deterioration in performance is observed for the parameter-based models for the external testing database, indicating a lower extrapolating capability to diverse roughness types. Finally, the sensitivity analysis on different types of roughness confirms an effective identification of distinct roughness effects by \(\text {ENN}_\text {PAM}\), which is not observed for \(\text {ENN}_\text {PA}\). We hypothesize that the successful training of \(\text {ENN}_\text {PAM}\) is attributed to the enhanced training efficiency linked to the lower input dimensionality.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Hydraulically rough surfaces encountered in engineering applications can arise from a multitude of degradation events, such as erosion, fouling, and ice accretion. It is widely reported that rough surfaces with different morphological properties can lead to a distinction of their hydrodynamic properties to different extents. Accurate prediction of the skin friction exerted by roughness holds crucial economic implications for realistic applications (Chung et al. 2021). However, the intricate nature of the roughness effect presents challenges in achieving this prediction task. The seminal experiment by Nikuradse (1933) on hydraulic pipes roughened with uniform sand grain roughness reveals a correspondence between the roughness skin friction coefficient and the roughness size. In numerous real-world applications, however, characterizing an arbitrary irregular roughness with a single physical size is not feasible due to the intricate interaction of the turbulent flow with the roughness structures. To address this problem, the equivalent sand grain size \(k_s\) is introduced. It is important to note that \(k_s\) is not a physical length scale of the roughness, but rather a hydraulic property associated with the surface. This property represents the size of the uniform sand grain roughness that produces equivalent skin friction coefficient as the interested rough surface in the fully rough regime, where the skin friction coefficient depends solely on the roughness topography but is independent of the Reynolds number. The \(k_s\) value is determined from the roughness function \(\Delta U^+\)—representing the downward shift in the logarithmic region of the inner-scaled mean velocity profile in the fully-rough regime—using the asymptotic function:

Here \(\kappa \) is the von Kármán constant and B is the smooth-wall log-law intercept (Jiménez 2004).

The determination of the equivalent sand grain size \(k_s\) is well recognized to be influenced by the intricate interplay of various flow phenomena, such as flow separation and im**ement on roughness protrusions along the roughness profile. In response to the complexities associated with these effects, researchers have proposed a set of statistical parameters with the goal of effectively capturing the roughness impact from different aspects. Among these parameters, notable examples include the effective surface slope ES (Napoli et al. 2008), the third and forth central moment of the roughness height probability density function (p.d.f.)—skewness and kurtosis—denoted as Sk and Ku, respectively (Flack and Schultz 2010; Busse and Jelly 2023), and the correlation length \(L^\text {Corr}\) of roughness surface geometry (Thakkar et al. 2017). These roughness parameters are frequently incorporated in the literature for formulating empirical correlations. Recently, Yang et al. (2023) successfully replicated skin friction over realistic roughness by generating artificial roughness surrogates with corresponding p.d.f. and PS, demonstrating that the roughness p.d.f. and PS inherently encapsulate sufficient information for determination of surface drag. Consequently, it is suggested that this representation of roughness can serve as model input for achieving accurate predictions of drag.

In recent years, driven by a growing interest in the application of machine learning techniques, researchers have increasingly employed diverse data-driven methodologies to estimate drag penalties in the context of surface roughness. This has led to the emergence of models with considerably larger input dimensions compared to the conventional correlations. Jouybari et al. (2021) provide a comprehensive survey of commonly utilized roughness parameters and leveraged a multi-layer perceptron (MLP) model to recover \(k_s\) from these parameters. In their model, 17 inputs, encompassing roughness parameters as well as their products, are incorporated. Their model, employing an extended set of input variables, exhibits enhanced predictive capabilities in comparison to conventional empirical correlations. The potential of this type of model is further unfolded by Lee et al. (2022) whose investigation elucidated that the utilization of transfer learning can markedly enhance model performance by leveraging knowledge derived from the aforementioned empirical correlations. This is particularly useful in scenarios where training samples are scarce. More recently, an MLP model developed by Yang et al. (2023), employs roughness p.d.f. and PS as model inputs, which can be considered as a higher order statistical representation of roughness. Incorporating the PS of roughness topography can particularly capture the multi-scale nature of naturally occurring roughness. In this study, a model interpretation method revealed that very large-scale roughness structures have a negligible influence on the resulting drag. As an alternative approach to predict drag, Sanhueza et al. (2023) employ Convolutional Neural Networks (CNN) on raw roughness maps to predict surface drag and heat transfer, providing local predictions of drag and heat transfer over roughness patches. While the latter two roughness characterization methods (p.d.f \(+\) PS and raw roughness map) are able to resolve more intricate features, leading to more accurate predictions, it is imperative to acknowledge that obtaining these representations are considerably more challenging in comparison to acquiring the single-valued statistical parameters from realistic rough surfaces. This difficulty may constrain the industrial deployment of these models compared to the empirical symbolic regression or data-driven models that employ statistical parameters. Moreover, roughness parameters inherently reflect human-understandable roughness properties from various perspectives. Data-driven models constructed based on these parameters hold significant potential of develo** explainable white-box models, aligning with the current trend in research known as Explainable Artificial Intelligence (XAI). Hence, in light of the preceding discussions, the utilization of a predictive model should account for a comprehensive consideration of various factors.

The present study undertakes an evaluation of the performance of different types of statistical information as the input to the data-driven models. Specifically, we compare MLP models based on p.d.f. and PS and models utilizing roughness parameters. The first type of model, denoted as \(\text {NN}_\text {PS}\), is designed to predict \(k_s\) with roughness p.d.f. and PS as model input. For the second type of models, denoted as \(\text {NN}_\text {PA}\), we adopt the same 17 roughness parameters as proposed by Jouybari et al. (2021) as input variables. Additionally, a simplified version of the \(\text {NN}_\text {PA}\) model, labeled as \(\text {NN}_\text {PAM}\), is included in the comparison, characterized by a reduced set of only 6 input parameters. Subsequently, these NN models are used to form the ensemble models, which involves combining the predictions of 50 NNs of the same type following the description in (Yang et al. 2023). A detailed description of these ensemble models, denoted as ENN, is provided in Sect. 2.3. The training database, comprising synthetic roughness samples, is generated using a mathematical method. This method relies on the random prescription of p.d.f. and PS to emulate a wide range of naturally occurring irregular roughness (Yang et al. 2023). To fully realize the potential of each model, Bayesian optimization (BO) is applied to optimize the hyperparameters of each model. Subsequently, a comparison of model performance is conducted across all considered models. Finally, a sensitivity analysis is performed on the parameter-based models, namely \(\text {ENN}_\text {PA}\) and \(\text {ENN}_\text {PAM}\), to elucidate their distinct performance.

2 Methodology

2.1 Direct Numerical Simulation

DNS is employed to solve the turbulent flow over rough surfaces in a closed channel driven by constant pressure gradient (CPG). The DNS is performed with a pseudo-spectral Navier–Stokes solver SIMSON (Chevalier et al. 2007). Periodic boundary conditions are applied in the streamwise and spanwise directions of the channel, while the upper and lower walls are covered by the roughness structures with no-slip boundary condition on their surface. The roughness representation in the fluid domain is based on the immersed boundary method (IBM) following Goldstein et al. (1993). The solved Navier–Stokes equation writes

where \({\textbf {u}}=(u,v,w)^\intercal \) is the velocity vector and \(P_x\) is the mean pressure gradient in the flow direction added as a constant and uniform source term to the momentum equation to drive the flow. Moreover, p, \(\mathbf {e_x}\), \(\rho \), \(\nu \) and \({\textbf {f}}_\text {IBM}\) denote pressure fluctuation, streamwise unit vector, density, kinematic viscosity and external body force term due to IBM, respectively. Periodic boundary conditions are applied in the streamwise and spanwise directions. The friction Reynolds number is defined as Re\(_\tau =u_\tau (\textrm{H}-k_{\text {md}})/\nu \), where \(u_\tau =\sqrt{\tau _w/\rho }\) and \(\tau _w=-P_x(\textrm{H}-k_{\text {md}})\) are the friction velocity and the wall shear stress, respectively. H and \(k_{\text {md}}\) are half channel height and the mean (melt-down) roughness height measured from the lowest point of roughness, respectively. In the present work, all simulations are performed at Re\(_\tau =800\). To address the unfavourable computational demands associated with DNS, the concept of minimal channels (Chung et al. 2015) is employed for calculating the \(k_s\) values of the rough surfaces. The effectiveness of minimal channel DNS in analyzing irregular roughness and achieving stable predictions of \(k_s\) is examined in the preceding study (Yang et al. 2022). Therefore, the \(k_s\) values attained through minimal channel DNS in the present work are regarded as the high-fidelity ground truths.

2.2 Roughness Database

The roughness topographies are generated using a mathematical algorithm proposed by Pérez-Ràfols and Almqvist (2019). This generation method offers flexibility in creating roughness profiles with predetermined p.d.f. and PS, while maintaining the random and stochastic nature of the irregular roughness. Utilizing this generation method, a total of 85 artificial roughness samples with significantly diverse configurations of p.d.f. and PS are generated for the training database. The \(k_s\) values of the roughness samples are obtained through DNS. All the roughness samples in the database yield \(k_s^+>50\), which are regarded to be located in the fully rough regime (Jouybari et al. 2021; Yang et al. 2023). It is noteworthy that the current criteria of \(k_s^+>50\) is slightly smaller than the commonly used threshold of \(k_s^+>70\) (Nikuradse 1933; Flack and Schultz 2010). The current value is deliberately chosen as a trade-off of various factors, such as the total computational cost and the number of tested samples. It should be mentioned that only eight out of the 85 training data are located in the range of \(50<k_s^+<70\). Despite the potential uncertainty in measuring the values of \(k_s\) of these samples with the present threshold, the inclusion of these samples has been found to improve the performance of the model (Yang et al. 2023). This improvement is attributed to the fact that the topographical features embedded in these eight samples are considered informative to the training of the model. Bearing this in mind, the current threshold of \(k_s^+>50\) is selected to seek similarity to the reference (Jouybari et al. 2021; Yang et al. 2023). Furthermore, a testing database \({\mathcal{T}}_{{{\text{inter}}}} \) is constructed, consisting of 20 roughness samples that undergo the identical procedure for generation and evaluation. It is worth mentioning that due to the absence of a naturally defined surface plane in roughness, various definitions of the offset of the virtual wall d, also referred to as zero-plane displacement, have been proposed in the extensive literature. In the present database, d is defined as the position where the roughness drag force applies Jackson (1981). However, a different choice of virtual wall position can affect the predicted rough-wall shear stress \(\tau _w\) and thus the resulting \(k_s\) value Chan-Braun et al. (2011). Therefore, it is crucial to consider the different definition of d as a possible source of uncertainty in the training data. In addition to the testing database comprising surfaces generated using the aforementioned algorithm, an external testing database denoted as \({\mathcal{T}}_{{{\text{ext,}}1}} \) is formed with five realistic roughness samples sourced from diverse technical applications, including ice accretion (Velandia and Bansmer 2019), deposits in internal combustion engines (Forooghi et al. 2018), and grid-blasted surfaces (Thakkar et al. 2017). Furthermore, the second external testing database, labeled \({\mathcal{T}}_{{{\text{ext,}}2}} \), incorporates 15 irregular rough surfaces generated in the study by Jouybari et al. (2021). In this database, numerous roughness samples are generated by randomly placing ellipsoidal elements of varying sizes and orientations on a smooth wall, resulting in morphologies distinct from the roughness type employed for model training. The joint distribution of the three most frequently studied roughness parameters—Sk, ES\(_x\), and Ku—is presented in the lower-left and upper-right corners of Fig. 1a for the training and testing databases, respectively. The diagonal of the figure displays histograms representing the distribution of each parameter. The histograms for each database are stacked on top of each other. Figure 1b depicts the distributions of \(k_s\) values, normalized by the 99% p.d.f. confidence interval \(k_{99}\), across the aforementioned datasets against the investigated roughness parameters.

2.3 Machine Learning Models

Schematic illustration of the prediction process of considered models. Left: \(\text {NN}_\text {PS}\); right: \(\text {NN}_\text {PA}\) and \(\text {NN}_\text {PAM}\). The different input roughness features incorporated in \(\text {NN}_\text {PA}\) and \(\text {NN}_\text {PAM}\) are marked with circles and squares, respectively. The specific architectures of each model are documented in Table 1. Here \(R_a\) is the averaged absolute deviation of roughness from \(k_{md}\). Por denotes porosity (the fraction of fluid volume to the entire volume under roughness crest height \(k_c\)). \(\text {Inc}_\text {x,z}=\tan ^{-1}\{Sk(\partial k/\partial x,z)/2\}\) are the inclinations in x and z directions, respectively

As described earlier, the present study compares machine learning models based on two distinct roughness characterization methods. The working principles of the models are displayed in Fig. 2. The input layer of \(\text {NN}_\text {PS}\) (left) receives each 30 values from the discretized p.d.f. and PS profiles each. The input is augmented with three additional parameters to account for roughness scaling, namely the peak-to-trough height \(k_t\), and the largest and smallest roughness wavelengths \(\lambda _{0/1}\). These parameters correspond to the boundaries of the probability density function (p.d.f.) and power spectrum (PS), respectively. In total, 63 quantities (30+30+3) are transferred to the \(\text {NN}_\text {PS}\) model (Yang et al. 2023). On the other hand, \(\text {NN}_\text {PA}\) incorporates a set of 17 roughness parameters along with their product as model input as described in (Jouybari et al. 2021). As demonstrated in the following results, a deterioration in the model performance is achieved for \(\text {NN}_\text {PA}\). The sensitivity analysis reveals that the model complexity, stemming from the high dimensionality of the input vector, results in inaccurate model responses. Having this in mind, a simplified model \(\text {NN}_\text {PAM}\) is developed in the present work. The roughness parameters incorporated by \(\text {NN}_\text {PAM}\) are highlighted by green squares in the right panel of Fig. 2. Notably, all the parameter dot products are excluded in \(\text {NN}_\text {PAM}\). Additionally, the omission of \(\textrm{inc}_{x,z}\) is motivated by the fact that these parameters in the current training database are consistently zero. Consequently, \(\text {NN}_\text {PAM}\) incorporates only 6 roughness parameters from the 17 input variables of \(\text {NN}_\text {PA}\).

The three models are constructed in the fashion of fully connected MLP. L2 regularization is applied to the loss function to mitigate over-fitting. In the figure, the non-dimensionalization of the \(k_s\) value is performed with respect to the reference length scale \(k_\text {ref}\). To maintain consistency between the input and output of each model, the choice of \(k_\text {ref}\) aligns with the non-dimensionalization procedure applied to the input quantities. Consequently, \(k_{99}\) is utilized as \(k_\text {ref}\) for \(\text {NN}_\text {PS}\) while Ra is employed for \(\text {NN}_\text {PA}\) and \(\text {NN}_\text {PAM}\). The non-dimensionalized \(k_s\) values are denoted as \(k_s^*=k_s/k_{\text {ref}}\). The architectures of each model type are individually determined through BO. The technical details of BO are provided in the subsequent section. Following the framework described by Yang et al. (2023), ensemble prediction technique is applied for each type of models: the ENN models are formed by training 50 NNs members with identical BO-optimized architecture but different combination of training and validation data samples. In the end, The final prediction of the ensemble model is the arithmetic averaged predictions among the 50 NN members. These ensemble models, depending on the type of their NN members, are referred to as \(\text {ENN}_\text {PS}=\{\text {NN}_\text {PS,1},\text {NN}_\text {PS,2},..., \text {NN}_\text {PS,50}\}\), \(\text {ENN}_\text {PA}=\{\text {NN}_\text {PA,1},\text {NN}_\text {PA,2},..., \text {NN}_\text {PA,50}\}\) or \(\text {ENN}_\text {PAM}=\{\text {NN}_\text {PAM,1},\text {NN}_\text {PAM,2},..., \text {NN}_\text {PAM,50}\}\).

2.4 Bayesian Optimization of Hyperparameters

MLP models, like other types of neural networks, are distinguished by their layered structure consisting of a large amount interconnected neurons. The architecture of an MLP model, including the number of neurons in each layer, and the choice of activation functions, as well as training parameters such as the learning rate and L2 regularization, are critical hyperparameters that must be carefully selected/optimized prior to training the model. The tuning of these hyperparameters is pivotal for realizing the full potential of the model. Nevertheless, the multitude of potential hyperparameter combinations poses challenges in determining the optimal configuration for each model.

The optimization task for the hyperparameters is formulated as:

where \(\textbf{H}=(H_1,H_2,..., H_p)\) represents the set of \(p>0\) hyperparameters that are to be designed. The objective function f denotes the mean absolute percentage error (MAPE) of the trained model in validation set. In the present work, f is calculated by averaging the MAPE values in the fashion of 10-fold validation. In traditional approaches, such as random optimization or grid search optimization, the tuning of hyperparameters is typically performed without considering the knowledge gained from previous trials. As a result, these methods are often considered inefficient. In this study, Bayesian optimization (BO) is utilized to overcome these limitations and efficiently explore the admissible space of \(\textbf{H}\). This is done by intelligently exploring the hyperparameter configurations based on past evaluations, leading to faster convergence towards the optimal solution. The Bayesian optimization is based on Bayes’ Theorem:

The data set \(\textbf{X}\) consists of the collection of n observed hyperparameters along with their corresponding f values, denoted as \(\textbf{X} = \{\textbf{H}_1, f_1, \textbf{H}_2, f_2,...,\textbf{H}_n,f_n\}\). \(P(f|\textbf{X})\) is the posterior probability or probabilistic surrogate model within the framework of Bayesian optimization, \(P(\textbf{X}|f)\), P(f) and \(P(\textbf{X})\) are likelihood, prior probability for f and \(\textbf{X}\) respectively. The BO process can be summarized as follows:

-

(1)

Initiating BO by randomly testing 10 combinations of hyperparameters and calculate their corresponding f values to form the initial data set \(\textbf{X}\);

-

(2)

Constructing surrogate function \(P(f|\textbf{X})\) using Gaussian process regression (GPR) based on known data set \(\textbf{X}\);

-

(3)

Choosing global best hyperparameters \(\textbf{H}_\text {best}\) of the surrogate function according to a acquisition function, which is randomly selected from lower confidence boundary (LCB), expected improvement (EI) and probability of improvement (PI) for each BO iteration (Hoffman et al. 2011);

-

(4)

Calculating \(f(\mathbf{H_\text {best}})\) and augmenting data set \(\textbf{X}\) by \(\textbf{H}_\text {best}\), return to step 1.

A total of 60 iterations of BO are performed for each type of model. The optimized hyperparameters, denoted as \(\textbf{H}_\text {opt}\), are determined by selecting the data point that yields the minimum value of the objective function \(f(\textbf{H}_\text {opt})\) among the 60 iterations. The considered hyperparameters as well as their admissible ranges are summarized in the Table 1.

3 Results

3.1 Hyperparameters

The evolution of the objective function f during the BO processes are displayed in the Fig. 3. As can be seen, after 10 random searching steps, the newly queried f values are mostly maintained in a relatively low level, indicating the efficiency of the BO framework in nominating competitive hyperparameter combinations. The respective minimum f values for \(\text {NN}_\text {PS}\), \(\text {NN}_\text {PA}\) as well as \(\text {NN}_\text {PAM}\) are marked with open squares in the figure with corresponding color. The optimized hyperparameters for each model type are summarized in Table 1. Substantial differences in model architectures may stem from variations in processing different types of input quantities as well as from the difficulty of map** the input quantities to the objective values. Additionally, the markedly higher number of trainable weights required by the optimized \(\text {NN}_\text {PA}\) underscores the necessity for increased complexity in recovering predictions from the provided inputs. This observation may suggest the challenge of recovering surface drag given the high dimensionality of the input variables. In clear contrast, the number of trainable weights for \(\text {NN}_\text {PAM}\) is considerably smaller. This implies that the modified model necessitates lower model complexity for drag prediction due to the diminished dimensionality of the selected input parameters.

3.2 Final Model Performance

The final ensemble models \(\text {ENN}_{\text {PS}}\), \(\text {ENN}_{\text {PA}}\) and \(\text {ENN}_{\text {PAM}}\) are built with the respective optimized architectures as documented in Table 1. These models are tested with the testing data sets \({\mathcal{T}}_{{{\text{inter}}}} \), \({\mathcal{T}}_{{{\text{ext,}}1}} \) and \({\mathcal{T}}_{{{\text{ext,}}2}} \). The averaged percentage prediction errors Err within each testing set are displayed in the Fig. 4. Acceptable performance is achieved by all the considered models in \({\mathcal{T}}_{{{\text{inter}}}} \). While \(\text {ENN}_\text {PS}\) achieves an averaged percentage error of 9.2%, \(\text {ENN}_\text {PAM}\) exhibits similar performance of 8.6%. The full-sized \(\text {ENN}_\text {PA}\) model, though featured with the highest model complexity, achieves the highest averaged error of 13.0%. Interestingly, while the full-sized \(\text {ENN}_\text {PA}\) is expected to exhibit performance at least in line with \(\text {ENN}_\text {PAM}\) since they share the same subset of input parameters, its actual performance noticeably differs from which of the simplified counterpart. This could be attributed to the diminished training efficiency of \(\text {ENN}_\text {PA}\) due to the increased input dimensionality.

As illustrated in Fig. 1, the training database adequately spans the parameter domain of the external testing data (\( {\mathcal{T}}_{{{\text{ext,}}1\& 2}} \)) in relation to the observed roughness parameters. Consequently, it was expected that the parameter-based models perform similarly for the internal and external test data-sets, as long as the utilized parameters contain adequate information about roughness. However, the results in Fig. 4 show that the performance of \(\text {ENN}_\text {PA}\) and \(\text {ENN}_\text {PAM}\) deteriorate significantly in these testing databases, exhibiting errors higher than 30%. This deterioration in model performance is most likely due to the dissimilarity of the external samples. This can be an indication that rough surfaces with identical values of such parameters but different generation natures may not have same \(k_s\) values. In contrast, \(\text {ENN}_\text {PS}\) achieves a more consistent performance with an average error of around 10%— despite the underlying dissimilarities in the testing databases. The remarkable generalizability of \(\text {ENN}_\text {PS}\) aligns with the fact that roughness p.d.f. and PS provide a more comprehensive representation of topographical features at various length scales compared to the roughness parameters.

In summary, despite the relatively limited generalizability of the both models based on the statistical roughness parameters, it is evident that a similar performance can be achieved by these models within \({\mathcal{T}}_{{{\text{inter}}}} \). This signifies the potential of the parameter-based models in interpolating predictions while lack in the capability of extrapolating their performance to diverse roughness topographies. However, the aforementioned advantages of the characterization method utilizing p.d.f. and PS are accompanied with inherent drawbacks compared to conventional single-valued parameters, particularly in terms of their accessibility. As well understood, converged statistics is one of the essential prerequisites for accurately reflecting the topographical features of roughness. To attain a statistically converged single-valued roughness parameter, the evaluation of a substantial area of roughness samples is necessitated. Similarly, achieving a converged height p.d.f. necessitates calculating of numerous converged frequencies of height within a number of finite small height intervals (bins) along the height p.d.f. axis, i.e. \(k/k_t\in [0,1]\). It is crucial to recognize that these discrete p.d.f. statistics, unlike the independent parameters utilized in the parameter-based models, are correlated. Consequently, a larger testing section is necessary to obtain a converged p.d.f. profile. On the other hand, the challenge in measuring PS across a range of wavenumbers critical for drag prediction lies in the necessity of exploring a sufficiently large area of the roughness sample with a reasonably fine resolution. These practical challenges are concretely reflected in [?], where laboratory experiment results of sandpaper roughness are reproduced by DNS on an artificial surrogate for sandpaper roughness generated based on its p.d.f. and PS. The difficulties in obtaining accurate p.d.f. and PS from a realistic sandpaper stem from issues such as light reflection in white-light interferometry measurements, slow convergence of p.d.f. in perthometer measurement and limited accuracy of PS measurement in 3-D photogrammetry measurements. In conclusion, the utilization of a combination of measurement techniques may be essential for general application scenarios when employing p.d.f. and PS characterization in reality. Therefore, it is essential to appreciate the significant convenience of acquiring the single-valued parameters in a practical sense and to take this aspect into account when selecting suitable type of models.

3.3 Zonal Sensitivity Analysis

As previously discussed, the acquirement of single-valued roughness parameters in realistic applications is easier compared to their p.d.f. and PS. For the sake of simplicity, the single-valued roughness statistical parameters are referred to as roughness parameters in the following. Furthermore, the practical utility and convenience of these parameter-based models present a compelling rationale for continuing the study of this type of model. The model evaluation in the previous section reveals different performances between the two parameter-based models. Additionally, the distinct model architectures—as indicated in Table 1—suggest variations in the underlying perceptron processes for map** roughness information into their predictions. A detailed investigation of these differences thus allows to gain insights into the physics of roughness. To this end, the following content focuses on the investigation of the present parameter-based models, i.e. \(\text {ENN}_\text {PA}\) and \(\text {ENN}_\text {PAM}\), by means of sensitivity analysis. The sensitivity analysis methodology aims to assess the significance of individual input quantities by calculating the Jacobian matrix of the NN output \(k_s^*\) with respect to the input variables, which writes

where \(S_j\) is the sensitivity of the input element \(I_j\), the subscript \(_j\) represents the j-th component of the input vector I. \(k_{s,i}^*\) is the predicted \(k_s^*\) value by the i-th model member. As is illustrated by the equation, sensitivity analysis requires concrete sample points for calculating the Jacobian matrix. In order to include as large the parameter space as possible, roughness topographies in the repository \(\mathcal {U}\) compromising 4200 roughness topographies is utilized. The symbol \(\bigl <\cdot \bigr>\) represents the averaging operation over different sample points. The derivative \(\partial k_{s,i}^*/\partial I_j\) is calculated using an automatic differentiation method. Subsequently the sensitivity scores are normalized though \(S_j^*=S_j/\sum _kS_k\). It is worth noting that the roughness geometries in the repository, which are used for this sensitivity analysis, are generated similarly to the training and internal testing data. This choice is motivated by the fact that all models perform consistently for \(\mathcal {T}_{\text {inter}}\).

As reported in the literature, the impact of roughness in distinct morphological regimes may demonstrate varying correlations with roughness parameters (Napoli et al. 2008; Flack et al. 2020). For instance, a significant transition in roughness effect, marked by ES\(\approx 0.35\), delineates the transition in the dominating drag contribution effect from friction drag to form (pressure) drag (Schultz and Flack 2009). Additionally, variations in the drag mechanisms are reflected by Sk, where positive, zero, and negative Sk values exhibit distinct drag exertion mechanisms (Jelly and Busse 2018). To explore these phenomena jointly leveraging the present data-driven perspective, sensitivity analysis is conducted for \(\text {ENN}_\text {PA}\) and \(\text {ENN}_\text {PAM}\) across different ranges of roughness parameters, controlled by the values of ES\(_x\) and Sk. This approach facilitates an individual examination of the models’ responses to roughness in wavy conditions (ES\(_x<0.35\)) and rough regimes (ES\(_x\ge 0.35\)), grouped by the sign of Sk. In this classification, roughness samples are categorized based on their Sk values into three regions, namely positive (\(Sk\ge 0.1\)), negative (\(Sk\le -0.1\)) and near Gaussian (\(-0.1<Sk<0.1\)). The selection of the threshold value, 0.1, is arbitrary and is chosen to encompass a reasonable number of samples in the near Gaussian region. Consequently, the sensitivity analysis in the present work is performed separately for six different zones (2 ES\(_x\) zones \(\times \) 3 Sk zones) in the parameter space, distinguished based on the values of ES\(_x\) and Sk, hence referred to as zonal. It is, however, crucial to acknowledge that the currently proposed parameter criteria for distinguishing different types of roughness stem from the known transition of the roughness behavior based on these parameters (Schultz and Flack 2009; Jelly and Busse 2018). Obviously, a finer division of the parameter regions based on more parameters can provide a better illustration of the physics of roughness skin friction, especially when extending the analysis to a broader range of roughness types. The exploration of the criteria for classifying similarly behaved roughness based on various parameters can be crucial for develo** roughness predictive models, which is called in the future investigations.

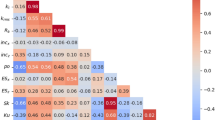

The result of zonal sensitivity analysis for \(\text {ENN}_\text {PA}\) is illustrated in Table 2. The sensitivity analysis outcomes for further ranges of roughness in the database display notable similarities. As can be observed from the figure, the current model identifies only half of the considered roughness parameters as the influential inputs, while the remaining half of the roughness parameters demonstrate nearly negligible sensitivity to the prediction. Wherein, markedly high importance is attributed to the input variable \(Sk \times Ku\) across different zones of the database. This peculiar behavior may stem from the reduced training efficiency attributed to the enhanced complexity of the model arising from the high dimensionality of the input. Consequently, a diminished performance of the model within \({\mathcal{T}}_{{{\text{inter}}}} \) is observed.

Table 3 presents zonal sensitivity analysis results for \(\text {ENN}_\text {PAM}\), offering insightful indications regarding the different impact of roughness across different roughness types. It is evident that, for roughness with ES\(_x < 0.35\), higher sensitivity in the prediction is associated with the ES\(_{x,z}\) values. However, the significance of ES in this region diminishes with increasing Sk values. A plausible interpretation of this behavior is that positively skewed roughness corresponds to peak-dominant roughness, and the ES in the waviness regime directly influences the contribution of form drag (pressure drag) downstream of roughness peaks (Schultz and Flack 2009). In contrast, the near-Gaussian and negatively skewed roughness contains an increasing portion of the wall structures in the form of pits, which are prone to generate stable vortices inside the vacancy and thus result in less pressure drag. It is worth mention that the comparable sensitivity of ES\(_x\) and ES\(_z\) may arise from the inherent constraint of the current isotropic roughness training database. It is anticipated that, with the inclusion of anisotropic surfaces, the model’s response to ES\(_x\) and ES\(_z\) could differ (Busse and Jelly 2020; Jelly et al. 2022). In contrast, for the roughness in rough regime, i.e. ES\(_x\ge 0.35\), the sensitivity of the model to ES is ranked as the last. This aligns with findings in the literature (Schultz and Flack 2009; Napoli et al. 2008), where it is reported that the contribution of pressure drag saturates with increasing ES within this range of values. As a consequence, the roughness effect exhibit lowest sensitivity to ES for the negatively skewed steep roughness. Furthermore, the variation of effect of roughness properties against the evolution of Sk values can be observed. A discernible decrease in sensitivity as Sk increases can be observed from the figures. This saturation effect of Sk is in line with literature (Busse and Jelly 2023). As a result, steep roughness with negative skewness exhibits the highest sensitivity to Sk, whereas positively skewed wavy roughness attains the lowest sensitivity ranking. These sensible model responses across different database divisions may be a crucial factor contributing to its outstanding performance in \({\mathcal{T}}_{{{\text{inter}}}} \). The successful training of this model can be ascribed to its lower complexity and reduced dimensionality of the input, facilitating efficient training with the current limited training data sets.

In conclusion, the zonal sensitivity analysis reveals that the full-sized \(\text {ENN}_\text {PA}\) demonstrates similar behavior across the observed database, with sensitivity concentrated on a few input variabales. This observation suggests a potential insufficiency of training samples for such a complex model. In contrast, the simplified model, \(\text {ENN}_\text {PAM}\), exhibit physically explainable model response in different zones, attributed to improved training efficiency resulting from reduced model complexity. However, it is possible that furnishing the full-sized model ENN\(_\text {PA}\) with an ideally comprehensive training database may enable the model to potentially achieve a physical response. However, given the constraints of the current limited database, which is in close alignment with the challenges in this field of research, the preceding discussion carries significant practical implications for dealing with the scarcity of roughness data. The present analyses under the constraint of limited available data size, suggest the potential for accurate drag predictions on specific types of roughness using low-complexity models trained on similar roughness samples.

4 Conclusions

Three types of ensemble neural network (ENN) models, each employing a distinct representation of roughness as input variables, are trained based on the same database to predict the roughness equivalent sand grain size \(k_s\). The first model (\(\text {ENN}_\text {PS}\)) utilizes the p.d.f. and PS of the roughness height distribution as input. The second model (\(\text {ENN}_\text {PA}\)) incorporates a finite set of roughness statistical parameters as well as their products adopted from the work by Jouybari et al. (2021). Moreover, a simplified model, \(\text {ENN}_\text {PAM}\), is developed using a subset of 6 roughness parameters carefully chosen from the input variables of \(\text {ENN}_\text {PA}\). This selection involves excluding dot products and constant roughness features. The ENN models are regarded as the ensembles of each 50 neural network(NN) members, namely \(\text {ENN}_\text {PS}=\{\text {NN}_\text {PS,1},\text {NN}_\text {PS,2},..., \text {NN}_\text {PS,50}\}\), \(\text {ENN}_\text {PA}=\{\text {NN}_\text {PA,1},\text {NN}_\text {PA,2},..., \text {NN}_\text {PA,50}\}\) and \(\text {ENN}_\text {PAM}=\{\text {NN}_\text {PAM,1},\text {NN}_\text {PAM,2},..., \text {NN}_\text {PAM,50}\}\), respectively. The final predictions of these ENNs are obtained through arithmetic averaging of the predictions of their NN members that are based on same input variables. The NN members within the same type of ENN share identical hyperparameters. The hyperparameters of each type of the NN member are individually optimized with Bayesian optimization (BO). In the comparison of the optimized NN member architectures, it is evident that the \(\text {ENN}_\text {PA}\) model requires higher complexity—reflected by the total number of trainable parameters—to achieve a performance comparable to other models. The optimized models are subsequently evaluated using the testing databases from the current roughness generation algorithm (\({\mathcal{T}}_{{{\text{inter}}}} \)) and from literature (\( {\mathcal{T}}_{{{\text{ext,}}1\& 2}} \)).

It is observed that the performance of \(\text {ENN}_\text {PS}\) remains consistent across the various testing databases with an averaged percentage error around 10%. In contrast, while the parameter-based models, specifically \(\text {ENN}_\text {PA}\) and \(\text {ENN}_\text {PAM}\), exhibit comparable performance on \({\mathcal{T}}_{{{\text{inter}}}} \), their deteriorated performance on the external databases \( {\mathcal{T}}_{{{\text{ext,}}1\& 2}} \) underscores the limited capability of the employed roughness parameters to extrapolate the predictive performance across divers roughness types. Based on the current model experiment, it is demonstrated that the multi-scale characterization achieved by employing p.d.f. and PS of roughness height as input variables leads to the outstanding performance generalizability of the model. However, similar performance within \(\mathcal {T}_{\text {inter}}\) implies that the parameter-based models work effectively within the same type of roughness encountered in the training data. In the end, a sensitivity analysis on the input parameters of the two parameter-based models is carried out, and it is observed that \(\text {ENN}_\text {PAM}\) reproduces sensitivities more in-line with previous physical observations, e.g. it successfully captures saturation effects of both extreme Sk and ES\(_{x,z}\) values on the resulting drag penalty. This potentially contributes to the somewhat better performance of this model compared to \(\text {ENN}_\text {PA}\).

Data Availability

The codes for statistical analysis, Bayesian optimization of NN architecture and model training can be downloaded from the first author’s GitHub repository https://github.com/JiashengY/Active-learning-codes.

References

Busse, A., Jelly, T.O.: Influence of surface anisotropy on turbulent flow over irregular roughness. Flow, Turbul. Comb. 104, 331–354 (2020). https://doi.org/10.1007/s10494-019-00074-4

Busse, A., Jelly, T.O.: Effect of high skewness and kurtosis on turbulent channel flow over irregular rough walls. J. Turbul. 24, 2173761 (2023). https://doi.org/10.1080/14685248.2023.2173761

Chan-Braun, C., García-Villalba, M., Uhlmann, M.: Force and torque acting on particles in a transitionally rough open-channel flow. J. Fluid Mech. 684, 441–474 (2011). https://doi.org/10.1017/jfm.2011.311

Chevalier, M., Schlatter, P., Lundbladh, A., Henningson, D.: SIMSON–A pseudo-spectral solver for incompressible boundary layer flow. Tech. Rep. TRITA-MEK 2007:07, Royal Institute of Technology, Stockholm, Sweden, pp. 1–100 (2007)

Chung, D., Chan, L., MacDonald, M., Hutchins, N., Ooi, A.: A fast direct numerical simulation method for characterising hydraulic roughness. J. Fluid Mech. 773, 418–431 (2015). https://doi.org/10.1017/jfm.2015.230

Chung, D., Hutchins, N., Schultz, M.P., Flack, K.A.: Predicting the drag of rough surfaces. Annu. Rev. Fluid Mech. 53, 439–471 (2021). https://doi.org/10.1146/annurev-fluid-062520-115127

Flack, K.A., Schultz, M.P.: Review of hydraulic roughness scales in the fully rough regime. J. Fluids Eng. 132(4), 4001492 (2010). https://doi.org/10.1115/1.4001492

Flack, K.A., Schultz, M.P., Barros, J.M.: Skin friction measurements of systematically-varied roughness: probing the role of roughness amplitude and skewness. Flow Turbul. Combust. 104(2–3), 317–329 (2020). https://doi.org/10.1007/s10494-019-00077-1

Forooghi, P., Weidenlener, A., Magagnato, F., Böhm, B., Kubach, H., Koch, T., Frohnapfel, B.: DNS of momentum and heat transfer over rough surfaces based on realistic combustion chamber deposit geometries. Int. J. Heat Fluid Flow 69, 83–94 (2018). https://doi.org/10.1016/j.ijheatfluidflow.2017.12.002

Goldstein, D., Handler, R., Sirovich, L.: Modeling a no-slip flow boundary with an external force field. J. Comput. Phys. 105(2), 354–366 (1993)

Hoffman, M., Brochu, E., Freitas, N.: Portfolio allocation for Bayesian optimization. In: Proceedings of the Twenty-Seventh Conference on Uncertainty in Artificial Intelligence. UAI’11, pp. 327–336. AUAI Press, Arlington, Virginia, USA (2011)

Jackson, P.S.: On the displacement height in the logarithmic velocity profile. J. Fluid Mech. 111, 15–25 (1981). https://doi.org/10.1017/S0022112081002279

Jelly, T.O., Busse, A.: Reynolds and dispersive shear stress contributions above highly skewed roughness. J. Fluid Mech. 852, 710–724 (2018). https://doi.org/10.1017/jfm.2018.541

Jelly, T.O., Ramani, A., Nugroho, B., Hutchins, N., Busse, A.: Impact of spanwise effective slope upon rough-wall turbulent channel flow. J. Fluid Mech. 951, 1 (2022). https://doi.org/10.1017/jfm.2022.823

Jiménez, J.: Turbulent flows over rough walls. Annu. Rev. Fluid Mech. 36, 173–196 (2004)

Jouybari, M.A., Yuan, J., Brereton, G.J., Murillo, M.S.: Data-driven prediction of the equivalent sand-grain height in rough-wall turbulent flows. J. Fluid Mech. 912, 8 (2021). https://doi.org/10.1017/jfm.2020.1085

Lee, S., Yang, J., Forooghi, P., Stroh, A., Bagheri, S.: Predicting drag on rough surfaces by transfer learning of empirical correlations. J. Fluid Mech. 933, 18 (2022). https://doi.org/10.1017/jfm.2021.1041

Napoli, E., Armenio, V., DeMarchis, M.: The effect of the slope of irregularly distributed roughness elements on turbulent wall-bounded flows. J. Fluid Mech. 613, 385–394 (2008). https://doi.org/10.1017/S0022112008003571

Nikuradse, J.: Stroemungsgesetze in Rauhen Rohren. VDI-Verl., Berlin (1933). http://slubdd.de/katalog?TN_libero_mab22)500057183

Pérez-Ràfols, F., Almqvist, A.: Generating randomly rough surfaces with given height probability distribution and power spectrum. Tribol. Int. 131, 591–604 (2019). https://doi.org/10.1016/j.triboint.2018.11.020

Sanhueza, R.D., Akkerman, I., Peeters, J.W.R.: Machine learning for the prediction of the local skin friction factors and nusselt numbers in turbulent flows past rough surfaces. Int. J. Heat Fluid Flow 103, 109204 (2023). https://doi.org/10.1016/j.ijheatfluidflow.2023.109204

Schultz, M.P., Flack, K.A.: Turbulent boundary layers on a systematically varied rough wall. Phys. Fluids 21(1), 015104 (2009). https://doi.org/10.1063/1.3059630

Thakkar, M., Busse, A., Sandham, N.: Surface correlations of hydrodynamic drag for transitionally rough engineering surfaces. J. Turbul. 18(2), 138–169 (2017). https://doi.org/10.1080/14685248.2016.1258119

Townsend, A.A.: The structure of turbulent shear flow / A.A.Townsend, 2nd ed. edn., p. 429. Cambridge University Press Cambridge [Eng.] ; New York, ??? (1976). http://www.loc.gov/catdir/toc/cam032/74014441.html

Velandia, J., Bansmer, S.: Topographic study of the ice accretion roughness on a generic aero-engine intake. In AIAA Scitech 2019 Forum (2019). https://doi.org/10.2514/6.2019-1451

Yang, J., Stroh, A., Chung, D., Forooghi, P.: Direct numerical simulation-based characterization of pseudo-random roughness in minimal channels. J. Fluid Mech. 941, 47 (2022). https://doi.org/10.1017/jfm.2022.331

Yang, J., Stroh, A., Lee, S., Bagheri, S., Frohnapfel, B., Forooghi, P.: Prediction of equivalent sand-grain size and identification of drag-relevant scales of roughness : a data-driven approach. J. Fluid Mech. 975, 34 (2023). https://doi.org/10.1017/jfm.2023.881

Yang, J., Velandia, J., Bansmer, S., Stroh, A., Forooghi, P.: A comparison of hydrodynamic and thermal properties of artificially generated against realistic rough surfaces. Int. J. Heat Fluid Flow 99, 109093 (2023). https://doi.org/10.1016/j.ijheatfluidflow.2022.109093

Acknowledgements

J. Yang gratefully acknowledges partial financial support from Friedrich und Elisabeth Boysen-Foundation (BOY-151). P. Forooghi gratefully acknowledges financial support from Aarhus Univesity Research Foundation (starting grant AUFF-F-2020-7-9). S. Lee and S. Bagheri sincerely appreciate financial support by Swedish Energy Agency under grant number 51554-1. This work was performed on the supercomputer HoreKa and the storage facility LSDF funded by the Ministry of Science, Research and the Arts Baden-Württemberg and by the Federal Ministry of Education and Research.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Contributions

JY: methodology, investigation, data curation, software, formal analysis, writing—original draft; AS: methodology, data curation, formal analysis, supervision, writing—review and editing; SL: ML methodology, formal analysis, writing—review and editing; SB: conceptualization, ML methodology, formal analysis, writing—review and editing; BF: formal analysis, supervision, resources, funding acquisition, writing—review and editing; PF: conceptualization, methodology, formal analysis, supervision, writing—original draft, writing—review and editing, funding acquisition, project administration

Corresponding author

Ethics declarations

Conflict of interest

All authors declare that they have no Conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

The calculation of Roughness function \(\Delta U^+\) is based on the premise of out layer similarity Townsend (1976), which states that outer-layer flow is unaffected by the near wall events except for the effect due to the wall shear stress, the downward shift of the velocity profile is approximately a constant value in the logarithmic region and possibly beyond if the outer-flow geometry and Reynolds number are matched. Bearing this in mind, outer layer similarity must be validated prior to the calculation of \(\Delta U^+\). However, due to the nature of minimal channels, the turbulent flow in outer layer cannot be physically resolved. This results in varied mean velocity profiles in the outer layer for minimal channels of different sizes. In this study, the size of the minimal channel is described by its spanwise width \(L_z\), as the streamwise length \(L_x\) is defined by \(L_x=3\times L_z\). The selected spanwise width \(L_z\) is ranged from \(L_z=0.6\text {H}\) to \(2.0\text {H}\), with increments of \(0.1\text {H}\). The outer layer similarities of the flow over the roughness samples in the present training and testing databases are examined in Fig. 5, grouped by the dimension of the minimal channels.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yang, J., Stroh, A., Lee, S. et al. Assessment of Roughness Characterization Methods for Data-Driven Predictions. Flow Turbulence Combust (2024). https://doi.org/10.1007/s10494-024-00549-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10494-024-00549-z