Abstract

Time series analysis is widely applied in action recognition, anomaly detection, and weather forecasting. Time series forecasting remains a key challenge because temporal patterns are complex, changes within sequences overlap, and many scenarios require predictive models that can forecast longer sequences. In this study, a model named the decomposed dimension time-domain convolutional neural network (DDTCN) is proposed, designed specifically to address the challenges of long time series data. The paper presents a dimension temporal convolutional network (DTCN) module, which has a strong ability to capture correlations among variables, and introduces an adaptive strategy. Specifically, the proposed model first decomposes the trend of the time series and then applies the DTCN to extract variable correlations, which yields accurate predictions for complex time series and provides a powerful solution for long-term series forecasting. Experiments are conducted on multiple long-term series datasets covering five practical applications: energy, transportation, economics, weather, and health care. The proposed model is extensively evaluated against traditional time series prediction methods and several benchmarks. The experimental results demonstrate that it outperforms state-of-the-art methods in most multiple long-term series forecasting tasks. Additionally, a pig price dataset is constructed to predict agricultural economic trends; on this dataset, the DDTCN reduces the prediction error by 25.36% compared with state-of-the-art methods. Hence, the model holds promising prospects for wide-ranging applications.
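The trend decomposition step mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state which decomposition operator the DDTCN uses, so the code below assumes a centered moving average with edge padding, a common choice in decomposition-based forecasters such as Autoformer (Wu et al. 2021). The function names `moving_average_trend` and `decompose` and the `window` parameter are hypothetical and are not taken from the authors' code.

```python
from typing import List, Tuple


def moving_average_trend(series: List[float], window: int) -> List[float]:
    """Estimate the trend with a centered moving average.

    ASSUMPTION: the moving-average operator is illustrative only; the
    abstract does not specify the decomposition used by the DDTCN.
    The series is padded by repeating its first and last values so that
    the trend has the same length as the input.
    """
    if not series:
        raise ValueError("series must not be empty")
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    padded = [series[0]] * half + list(series) + [series[-1]] * half
    return [sum(padded[i:i + window]) / window for i in range(len(series))]


def decompose(series: List[float], window: int = 5) -> Tuple[List[float], List[float]]:
    """Split a series into a trend part and a remainder part.

    The remainder is the original series minus the trend, so the two
    parts add back up to the input.
    """
    trend = moving_average_trend(series, window)
    remainder = [x - t for x, t in zip(series, trend)]
    return trend, remainder


if __name__ == "__main__":
    # Toy series: a linear ramp plus an alternating fluctuation.
    values = [float(i) + (0.5 if i % 2 else -0.5) for i in range(10)]
    trend, remainder = decompose(values, window=3)
    print("trend:    ", [round(t, 3) for t in trend])
    print("remainder:", [round(r, 3) for r in remainder])
```

In a decomposition-based forecaster, the two parts would typically be modelled separately and the forecasts summed; how the DDTCN combines them with the DTCN module is described in the full paper, not in this sketch.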

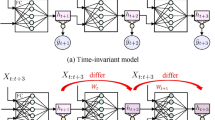

Graphical abstract

Data availability and access

We use the real-world datasets collected in [17, 48]. The other representative datasets and the code are available at https://github.com/1zkh/DDTCN.

References

Gasparin A, Lukovic S, Alippi C (2022) Deep learning for time series forecasting: The electric load case. CAAI Trans Intell Technol 7(1):1–25. https://doi.org/10.1049/cit2.12060

Peng Y, Gong D, Deng C, Li H, Cai H, Zhang H (2022) An automatic hyperparameter optimization dnn model for precipitation prediction. Appl Intell 52(3):2703–2719. https://doi.org/10.1007/s10489-021-02507-y

Xu C, Zhang A, Xu C, Chen Y (2022) Traffic speed prediction: spatiotemporal convolution network based on long-term, short-term and spatial features. Appl Intell 52(2):2224–2242. https://doi.org/10.1007/s10489-021-02461-9

Srivastava T, Mullick I, Bedi J (2024) Association mining based deep learning approach for financial time-series forecasting. Appl Soft Comput 111469. https://doi.org/10.1016/j.asoc.2024.111469

Banerjee S, Lian Y (2022) Data driven covid-19 spread prediction based on mobility and mask mandate information. Appl Intell 52(2):1969–1978. https://doi.org/10.1007/s10489-021-02381-8

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser Ł, Polosukhin I (2017) Attention is all you need. Adv Neural Inf Process Syst 30. https://doi.org/10.48550/arXiv.1706.03762

Yang C, Wang Y, Yang B, Chen J (2024) Graformer: A gated residual attention transformer for multivariate time series forecasting. Neurocomputing 127466. https://doi.org/10.1016/j.neucom.2024.127466

Liu Z, Cao Y, Xu H, Huang Y, He Q, Chen X, Tang X, Liu X (2024) Hidformer: Hierarchical dual-tower transformer using multi-scale mergence for long-term time series forecasting. Expert Syst Appl 239:122412. https://doi.org/10.1016/j.eswa.2023.122412

Kitaev N, Kaiser L, Levskaya A (2020) Reformer: The efficient transformer. In: 8th International conference on learning representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780. https://doi.org/10.1162/neco.1997.9.8.1735

Shang K, Chen Z, Liu Z, Song L, Zheng W, Yang B, Liu S, Yin L (2021) Haze prediction model using deep recurrent neural network. Atmosphere 12(12):1625. https://doi.org/10.3390/atmos12121625

Lai G, Chang W-C, Yang Y, Liu H (2018) Modeling long-and short-term temporal patterns with deep neural networks, 95–104. https://doi.org/10.1145/3209978.3210006

Shen L, Li Z, Kwok JT (2020) Timeseries anomaly detection using temporal hierarchical one-class network. In: Larochelle H, Ranzato M, Hadsell R, Balcan M, Lin H (eds.) Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, Virtual

Franceschi J, Dieuleveut A, Jaggi M (2019) Unsupervised scalable representation learning for multivariate time series. In: Wallach HM, Larochelle H, Beygelzimer A, d’Alché-Buc F, Fox EB, Garnett R (eds.) Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, December 8-14, 2019, Vancouver, BC, Canada, pp 4652–4663. https://doi.org/10.48550/arXiv.1901.10738

Zhang Z, Tian J, Huang W, Yin L, Zheng W, Liu S (2021) A haze prediction method based on one-dimensional convolutional neural network. Atmosphere 12(10):1327. https://doi.org/10.3390/atmos12101327

He Y, Zhao J (2019) Temporal convolutional networks for anomaly detection in time series. J Phys: Conference Series 1213(4):042050. https://doi.org/10.1088/1742-6596/1213/4/042050

Wu H, Xu J, Wang J, Long M (2021) Autoformer: Decomposition transformers with auto-correlation for long-term series forecasting. In: Ranzato M, Beygelzimer A, Dauphin YN, Liang P, Vaughan JW (eds.) Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems 2021, NeurIPS 2021, December 6-14, 2021, Virtual, pp 22419–22430. https://doi.org/10.48550/arXiv.2106.13008

Cleveland RB, Cleveland WS, McRae JE, Terpenning I (1990) STL: A seasonal-trend decomposition procedure based on loess. J Off Stat 6(1):3–73

Yue M, Zhang X, Teng T, Meng J, Pahon E (2024) Recursive performance prediction of automotive fuel cell based on conditional time series forecasting with convolutional neural network. Int J Hydrogen Energy 56:248–258. https://doi.org/10.1016/j.ijhydene.2023.12.168

Bai S, Kolter JZ, Koltun V (2018) An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv:1803.01271. https://doi.org/10.48550/arXiv.1803.01271

Şenol H, Çakır Türk, Bianco F, Görgün E (2024) Improved methane production from ultrasonically-pretreated secondary sedimentation tank sludge and new model proposal: Time series (arima). Bioresource Technol 391:129866. https://doi.org/10.1016/j.biortech.2023.129866

Hamilton JD (2020) Time series analysis. Princeton University Press

Tsay RS (2014) Multivariate time series analysis: With R and financial applications. John Wiley & Sons, Inc., Hoboken

Drachal K (2021) Forecasting crude oil real prices with averaging time-varying var models. Resources Policy 74:102244. https://doi.org/10.1016/j.resourpol.2021.102244

Lütkepohl H (2006) Structural vector autoregressive analysis for cointegrated variables. Allgemeines Statistisches Archiv 90:75–88. https://doi.org/10.1007/s10182-006-0222-4

Yang H, Pan Z, Tao Q, Qiu J (2018) Online learning for vector autoregressive moving-average time series prediction. Neurocomputing 315:9–17. https://doi.org/10.1016/j.neucom.2018.04.011

Hertz JA (2018) Introduction to the theory of neural computation. CRC Press

Shu W, Cai K, Xiong NN (2021) A short-term traffic flow prediction model based on an improved gate recurrent unit neural network. IEEE Trans Intell Transportation Syst 23(9):16654–16665. https://doi.org/10.1109/TITS.2021.3094659

Salinas D, Flunkert V, Gasthaus J, Januschowski T (2020) Deepar: Probabilistic forecasting with autoregressive recurrent networks. Int J Forecasting 36(3):1181–1191. https://doi.org/10.1016/j.ijforecast.2019.07.001

Shih S-Y, Sun F-K, Lee H-y (2019) Temporal pattern attention for multivariate time series forecasting. Machine Learn 108:1421–1441. https://doi.org/10.1007/s10994-019-05815-0

Song H, Rajan D, Thiagarajan J, Spanias A (2018) Attend and diagnose: Clinical time series analysis using attention models. In: Proceedings of the AAAI conference on artificial intelligence, vol. 32. https://doi.org/10.1609/aaai.v32i1.11635

Qin Y, Song D, Chen H, Cheng W, Jiang G, Cottrell GW (2017) A dual-stage attention-based recurrent neural network for time series prediction. In: Proceedings of the Twenty-Sixth international joint conference on artificial intelligence, IJCAI-17, pp 2627–2633. https://doi.org/10.24963/ijcai.2017/366

Changxia G, Ning Z, Youru L, Yan L, Huaiyu W (2023) Multi-scale adaptive attention-based time-variant neural networks for multi-step time series forecasting. Appl Intell 1–20. https://doi.org/10.1007/s10489-023-05057-7

Gao C, Zhang N, Li Y, Lin Y, Wan H (2023) Adversarial self-attentive time-variant neural networks for multi-step time series forecasting. Expert Syst Appl 120722. https://doi.org/10.1016/j.eswa.2023.120722

Challu C, Olivares KG, Oreshkin BN, Ramirez FG, Canseco MM, Dubrawski A (2023) Nhits: Neural hierarchical interpolation for time series forecasting. Proceedings of the AAAI conference on artificial intelligence 37:6989–6997. https://doi.org/10.1609/aaai.v37i6.25854

Zeng A, Chen M, Zhang L, Xu Q (2023) Are transformers effective for time series forecasting? Proceedings of the AAAI conference on artificial intelligence 37:11121–11128. https://doi.org/10.1609/aaai.v37i9.26317

Zhang T, Zhang Y, Cao W, Bian J, Yi X, Zheng S, Li J (2022) Less is more: Fast multivariate time series forecasting with light sampling-oriented mlp structures. arXiv:2207.01186. https://doi.org/10.48550/arXiv.2207.01186

Yin L, Wang L, Huang W, Liu S, Yang B, Zheng W (2021) Spatiotemporal analysis of haze in Beijing based on the multi-convolution model. Atmosphere 12(11):1408. https://doi.org/10.3390/atmos12111408

Lian J, Ren W, Li L, Zhou Y, Zhou B (2023) Ptp-stgcn: pedestrian trajectory prediction based on a spatio-temporal graph convolutional neural network. Appl Intell 53(3):2862–2878. https://doi.org/10.1007/s10489-022-03524-1

Zhou T, Ma Z, Wen Q, Wang X, Sun L, Jin R (2022) Fedformer: Frequency enhanced decomposed transformer for long-term series forecasting. In: Chaudhuri K, Jegelka S, Song L, Szepesvári C, Niu G, Sabato S (eds.) International Conference on Machine Learning, ICML 2022, 17-23 July 2022, Baltimore, Maryland, USA. Proceedings of Machine Learning Research, vol. 162, pp 27268–27286

Zhang Y, Yan J (2023) Crossformer: Transformer utilizing cross-dimension dependency for multivariate time series forecasting. In: The Eleventh international conference on learning representations, ICLR 2023, Kigali, Rwanda, May 1-5, 2023

Petropoulos F, Apiletti D, Assimakopoulos V, Babai MZ, Barrow DK, Ben Taieb S, Bergmeir C, Bessa RJ, Bijak J, Boylan JE, Browell J, Carnevale C, Castle JL, Cirillo P, Clements MP, Cordeiro C, Cyrino Oliveira FL, De Baets S, Dokumentov A, Ellison J, Fiszeder P, Franses PH, Frazier DT, Gilliland M, Gönül MS, Goodwin P, Grossi L, Grushka-Cockayne Y, Guidolin M, Guidolin M, Gunter U, Guo X, Guseo R, Harvey N, Hendry DF, Hollyman R, Januschowski T, Jeon J, Jose VRR, Kang Y, Koehler AB, Kolassa S, Kourentzes N, Leva S, Li F, Litsiou K, Makridakis S, Martin GM, Martinez AB, Meeran S, Modis T, Nikolopoulos K, Önkal D, Paccagnini A, Panagiotelis A, Panapakidis I, Pavía JM, Pedio M, Pedregal DJ, Pinson P, Ramos P, Rapach DE, Reade JJ, Rostami-Tabar B, Rubaszek M, Sermpinis G, Shang HL, Spiliotis E, Syntetos AA, Talagala PD, Talagala TS, Tashman L, Thomakos D, Thorarinsdottir T, Todini E, Trapero Arenas JR, Wang X, Winkler RL, Yusupova A, Ziel F (2022) Forecasting: theory and practice. Int J Forecasting 38(3):705–871. https://doi.org/10.1016/j.ijforecast.2021.11.001

Taylor SJ, Letham B (2018) Forecasting at scale. American Statistician 72(1):37–45. https://doi.org/10.1080/00031305.2017.1380080

Oreshkin BN, Carpov D, Chapados N, Bengio Y (2020) N-BEATS: neural basis expansion analysis for interpretable time series forecasting. In: 8th International conference on learning representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020

Sen R, Yu H-F, Dhillon IS (2019) Think globally, act locally: A deep neural network approach to high-dimensional time series forecasting. In: Wallach H, Larochelle H, Beygelzimer A, Alché-Buc F, Fox E, Garnett R (eds.) Advances in neural information processing systems, vol. 32

Chen J, Yuan C, Dong S, Feng J, Wang H (2023) A novel spatiotemporal multigraph convolutional network for air pollution prediction. Appl Intell 1–14. https://doi.org/10.1007/s10489-022-04418-y

García-Duarte L, Cifuentes J, Marulanda G (2023) Short-term spatio-temporal forecasting of air temperatures using deep graph convolutional neural networks. Stochastic Environ Res Risk Assessment 37(5):1649–1667. https://doi.org/10.1007/s00477-022-02358-0

Zhou H, Zhang S, Peng J, Zhang S, Li J, Xiong H, Zhang W (2021) Informer: Beyond efficient transformer for long sequence time-series forecasting. Proceedings of the AAAI conference on artificial intelligence 35:11106–11115. https://doi.org/10.1609/aaai.v35i12.17325

Wu H, Hu T, Liu Y, Zhou H, Wang J, Long M (2023) Timesnet: Temporal 2d-variation modeling for general time series analysis. In: The Eleventh international conference on learning representations, ICLR 2023, Kigali, Rwanda, May 1-5, 2023

Acknowledgements

This work is supported in part by the Scientific Research Platforms and Projects of Guangdong Provincial Education Department (2021ZDZX1078; 2023ZDZX4002) and the Guangzhou Key Laboratory of Intelligent Agriculture (201902010081). The authors thank all the editors and reviewers for their suggestions and comments.

Author information

Contributions

Conceptualization, Kaihong Zheng and Jinfeng Wang; methodology, Kaihong Zheng and Jinfeng Wang; software, Kaihong Zheng; validation, Kaihong Zheng and Jinfeng Wang; formal analysis, Jinfeng Wang and Wenzhong Wang; investigation, Kaihong Zheng; resources, Jinfeng Wang, Rong** Jiang, and Wenzhong Wang; data curation, Kaihong Zheng and Yunqiang Chen; writing (original draft preparation), Kaihong Zheng; writing (review and editing), Jinfeng Wang; visualization, Kaihong Zheng; supervision, Jinfeng Wang; project administration, Jinfeng Wang; funding acquisition, Jinfeng Wang. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors have no relevant financial or nonfinancial interests to disclose.

Ethical and informed consent for data used

All data used in this paper conform to the ethical and informed consent specifications.

About this article

Cite this article

Zheng, K., Wang, J., Chen, Y. et al. DDTCN: Decomposed dimension time-domain convolutional neural network along spatial dimensions for multiple long-term series forecasting. Appl Intell 54, 6606–6623 (2024). https://doi.org/10.1007/s10489-024-05526-7

DOI: https://doi.org/10.1007/s10489-024-05526-7