Abstract

This paper proposes a multichannel environmental sound segmentation method. Environmental sound segmentation is an integrated method that performs sound source localization, sound source separation, and classification simultaneously. When multiple microphones are available, spatial features can be used to improve the localization and separation of sounds arriving from different directions; however, conventional methods have three drawbacks: (a) When sound source localization and separation using spatial features and classification using spectral features are trained in the same neural network, the network may overfit to the relationship between the direction of arrival and the class of a sound, reducing its reliability for novel events. (b) Although permutation invariant training used in automatic speech recognition could be extended, it is impractical for environmental sounds, which include an unlimited number of sound sources. (c) Various features, such as complex values of the short-time Fourier transform and interchannel phase differences, have been used as spatial features, but no study has compared them. This paper proposes a multichannel environmental sound segmentation method comprising two discrete blocks: a sound source localization and separation block and a sound source separation and classification block. Separating the blocks avoids overfitting to the relationship between the direction of arrival and the class. Simulation experiments using created datasets including 75 classes of environmental sounds showed that the root mean squared error of the proposed method was lower than that of conventional methods.

1 Introduction

Various methods for sound source localization (SSL), sound source separation (SSS), and classification have been proposed in acoustic signal processing, robot audition, and machine learning for use in real-world environments containing multiple overlapping sound events [1,2,3].

Conventional approaches use a cascade of individual functions based on array signal processing techniques [4,5,6]. The main problem with this approach is the accumulation of errors generated by each function. Because each function is optimized independently of the overall task, its output might not be optimal for subsequent blocks.

Recently, deep learning-based end-to-end methods using a single microphone have been proposed [7,8,9]. Environmental sound segmentation, which performs SSS and classification simultaneously, has been reported to outperform the cascade method by avoiding accumulated errors [10, 11]. However, its performance deteriorates for overlapping sounds from multiple sources, because a single microphone cannot capture spatial features.

Multichannel-based methods have been proposed for automatic speech recognition (ASR) [15, 16], in which the model has multiple output layers corresponding to different speakers and is trained with permutation invariant training (PIT) to cope with all possible assignments of outputs to speakers. While these studies assume mixtures of two or three speakers, it is impractical to extend them to sounds with many classes, such as environmental sounds.

A multichannel environmental sound segmentation method has been proposed [17]. This integrated method deals with SSL, SSS, and classification in the same neural network. Although the method implicitly intends SSL, SSS, and classification to be learned simultaneously in a single network, no loss function with respect to the direction of arrival (DOA) is used in training, so spatial features may not be used effectively. Deep learning-based methods for sound event localization and detection (SELD) have also been proposed [18,19,20,21]. These methods simultaneously perform SSL and sound event detection (SED) of environmental sounds. Many SELD methods have two branches that perform DOA estimation and SED, and they are trained with loss functions on both the SED outputs and the DOA outputs. However, the DOA and the class do not correlate unless the position and orientation of the microphones remain fixed. If a sufficient dataset is not available, the network overfits to the relationship between the DOA and the class.

Across multichannel-based methods, various features, such as complex values of the short-time Fourier transform (STFT), interchannel phase differences (IPDs), and the sine and cosine of IPDs, have been used as spatial features [14, 18, 20], but no study has compared them.

This paper proposes a multichannel environmental sound segmentation method comprising two discrete blocks, a sound source localization and separation (SSLS) block and a sound source separation and classification (SSSC) block, as shown in Fig. 1. This paper makes the following contributions:

- It is not necessary to set the number of sound sources in advance, because sounds from all azimuth directions are separated simultaneously.
- Because the SSLS block and the SSSC block are discrete, the network does not overfit to the relationship between the DOA and the class.
- A comparison of various spatial features showed the sine and cosine of IPDs to be the most effective of those compared for sound source localization and separation.

2 Related work

This section describes multichannel-based approaches to sound source localization, sound source separation and classification.

2.1 Multichannel automatic speech recognition

Multichannel-based methods have been proposed for ASR [

2.2 Multichannel environmental sound segmentation

A multichannel environmental sound segmentation method has been proposed [17]. This method uses magnitude spectra and the sine and cosine of IPDs as input features to train SSL, SSS, and classification simultaneously in the same network. Although the method implicitly intends SSL, SSS, and classification to be learned jointly in a single network, no loss function with respect to the DOA is used in training; thus, spatial features may not be used effectively. Moreover, unless the position and orientation of the microphone array are always fixed, the DOA and the class do not correlate, so if a sufficient dataset is unavailable, the network overfits to the relationship between the DOA and the class. A combination of SSL and SSS for the classification of bird songs has also been proposed [24, 25]. This method comprises SSL, SSS, and classification blocks and uses the SSL results as spatial cues for bird song classification. However, these spatial cues are ineffective if the position and orientation of the microphone array differ from those during training.

2.3 Sound event localization and detection methods for environmental sound

In the Detection and Classification of Acoustic Scenes and Events (DCASE) challenge [22], deep learning-based methods for SELD have been proposed [18,19,20,21]. These methods simultaneously perform SSL and SED of environmental sounds containing many classes. Many SELD methods have two branches that perform DOA estimation and SED and compute a separate loss for each output. A simple SELD method optimizes both losses simultaneously, but the DOA and the class typically do not correlate unless the microphone position and orientation are always fixed, so the network overfits to the relationship between the DOA and the class when a sufficient dataset is unavailable. Therefore, many SELD methods have reported improved performance by training the two branches separately. However, these methods reduce the frequency dimension of the features using frequency pooling [23] and therefore cannot perform SSS. Additionally, various features, such as complex STFT coefficients, IPDs, and the sine and cosine of IPDs, have been used as spatial features, but no study has compared them.

2.4 Issues of related works

Conventional multichannel-based methods have three drawbacks. First, for environmental sounds containing many classes, it is impossible to set a maximum number of sound sources in advance. Second, if a sufficient dataset is unavailable, the network overfits to the relationship between the DOA and the class. Third, various features, such as complex values, IPDs, and the sine and cosine of IPDs, have been used as spatial features, but no study has compared them. To address these issues, this paper proposes a multichannel environmental sound segmentation method that includes discrete SSLS and SSSC blocks. By separating the blocks, this method prevents overfitting to the relationship between the DOA and the class. The SSLS block separates the mixture into sound sources for each azimuth direction, so the number of sound sources need not be set in advance. Additionally, we compared multiple types of spatial features.

3 Proposed method

Figure 2 shows the overall structure of the proposed method, which consists of four blocks: (a) feature extraction, (b) sound source localization and separation (SSLS), (c) sound source separation and classification (SSSC), and (d) reconstruction. (a) STFT was applied to the mixed waveforms, and the STFT coefficients were decomposed into magnitude spectrograms, the sine and cosine of IPDs, and phase spectrograms. (b) The magnitude spectrograms and the sine and cosine of IPDs were input into the SSLS block, which separated the magnitude spectrograms for each azimuth direction from the mixture. (c) The outputs of the SSLS block were input into the SSSC block. Because the SSLS block could not fully separate the magnitude spectrograms for each azimuth direction from the mixture, the SSSC block additionally separated magnitude spectrograms for each class from the output of the SSLS block. (d) The time domain signals were reconstructed using the inverse STFT.
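Steps (a) and (d) can be illustrated with a minimal NumPy sketch. It uses the 512-sample window and 256-sample hop of Section 3.1, but the periodic Hann window and the reuse of the mixture phase when inverting an estimated magnitude spectrogram are assumptions, as are the helper names `stft`, `istft`, and `reconstruct`.

```python
import numpy as np

WIN, HOP = 512, 256                  # window size and hop length (Section 3.1)
WINDOW = np.hanning(WIN + 1)[:-1]    # periodic Hann window (assumed)


def stft(x):
    """Frame a 1-D signal and return complex STFT coefficients of shape (T, F)."""
    n_frames = 1 + (len(x) - WIN) // HOP
    frames = np.stack([x[i * HOP:i * HOP + WIN] * WINDOW for i in range(n_frames)])
    return np.fft.rfft(frames, axis=-1)  # F = WIN // 2 + 1 = 257 bins


def istft(spec, length):
    """Weighted overlap-add inverse of stft()."""
    frames = np.fft.irfft(spec, n=WIN, axis=-1) * WINDOW
    out, norm = np.zeros(length), np.zeros(length)
    for i, frame in enumerate(frames):
        out[i * HOP:i * HOP + WIN] += frame
        norm[i * HOP:i * HOP + WIN] += WINDOW ** 2
    return out / np.maximum(norm, 1e-8)  # guard against ~0 weights at the edges


def reconstruct(est_mag, mix_spec, length):
    """Combine an estimated magnitude with the mixture phase and invert."""
    return istft(est_mag * np.exp(1j * np.angle(mix_spec)), length)


# Round trip: feeding back the mixture's own magnitude recovers the waveform.
rng = np.random.default_rng(0)
x = rng.standard_normal(16000)       # 1 s of noise at 16 kHz
X = stft(x)
y = reconstruct(np.abs(X), X, len(x))
print(X.shape, np.allclose(x[WIN:-WIN], y[WIN:-WIN]))  # (61, 257) True
```

Apart from a few edge samples, the round trip is lossless, so in this sketch any reconstruction error would come from the estimated magnitudes and the borrowed mixture phase.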

Complete architecture of the proposed method comprising feature extraction, SSLS, SSSC, and reconstruction. STFT was applied to the waveforms. The SSLS block predicted spectrograms for each direction from the input spectrograms. Since the SSLS block could not separate sound sources arriving from nearby directions, the SSSC block performed not only classification but also separation of magnitude spectrograms for each class from the output of the SSLS block. The inverse STFT was applied to reconstruct the time domain signal

Normally, there is no correlation between the DOA and the class unless the position and orientation of the microphone array are fixed. If a sufficient dataset is unavailable, a conventional environmental sound segmentation method using a single network overfits to the relationship between the DOA and the class. In contrast, the proposed method prevents such overfitting by explicitly separating the SSLS block from the SSSC block. The method was trained in two stages: first, the SSLS block was trained alone; then, with the weights of the SSLS block fixed, the SSSC block was trained using the output of the SSLS block as input. It is not necessary to set the number of sound sources in advance, because the sound sources in all azimuth directions are separated simultaneously. Although the SSLS block could not separate sound sources arriving from nearby directions, the SSSC block performed not only classification but also simultaneous separation of the magnitude spectrograms for each class from the output of the SSLS block.
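The two-stage schedule can be illustrated with a toy NumPy sketch in which two linear maps stand in for the SSLS and SSSC networks (the actual blocks are Deeplabv3+ models trained with Adam). Stage 1 fits the first map alone; stage 2 fits the second map on the first map's outputs while the first map's weights are held fixed. The data, dimensions, and the helper `fit_linear` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def fit_linear(inputs, targets, lr=0.1, epochs=2000):
    """Fit W so that inputs @ W approximates targets, by full-batch gradient
    descent on the per-sample squared error (a stand-in for network training)."""
    W = np.zeros((inputs.shape[1], targets.shape[1]))
    for _ in range(epochs):
        W -= lr * 2.0 * inputs.T @ (inputs @ W - targets) / len(inputs)
    return W


def mse(a, b):
    return float(np.mean((a - b) ** 2))


# Hypothetical toy data: 8 input features -> 4 "directions" -> 3 "classes".
X = rng.standard_normal((256, 8))
Y_dir = X @ (rng.standard_normal((8, 4)) / np.sqrt(8))      # stage-1 targets
Y_cls = Y_dir @ (rng.standard_normal((4, 3)) / np.sqrt(4))  # stage-2 targets

# Stage 1: train the SSLS stand-in alone.
W_ssls = fit_linear(X, Y_dir)

# Stage 2: fix the SSLS weights and train the SSSC stand-in on their outputs.
W_ssls_frozen = W_ssls.copy()
ssls_out = X @ W_ssls                    # computed once; never updated below
W_sssc = fit_linear(ssls_out, Y_cls)

print(mse(X @ W_ssls, Y_dir), mse(ssls_out @ W_sssc, Y_cls))
```

In a deep-learning framework, the same effect is usually obtained by excluding the first network's parameters from the optimizer or disabling their gradients during the second stage.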

3.1 Feature extraction

We used the following spectral and spatial features proposed in [26, 27]. The input signals were multichannel time-series waveforms with a sampling rate of 16 kHz. STFT was applied using a window size of 512 samples and a hop length of 256 samples. A reference microphone, p, and the other, non-reference microphones, q, were selected. Magnitude spectrograms of the reference microphone were used as spectral features and were normalized to the range [0, 1]. Meanwhile, the sine and cosine of IPDs were used as spatial features:

\[ \left[ \sin \theta_{t,f,p,q},\ \cos \theta_{t,f,p,q} \right] \]

where \(\theta_{t,f,p,q}\) is the IPD between the STFT coefficients \(x_{t,f,p}\) and \(x_{t,f,q}\) at time t and frequency f of the signals at the reference microphone, p, and a non-reference microphone, q.
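The features above can be sketched as follows, assuming the STFT coefficients are already available as a complex array of shape (M, T, F) and microphone 0 is the reference, p. The peak normalization of the magnitude, the IPD sign convention, and the helper name `extract_features` are assumptions; with an 8-channel array, the seven non-reference microphones yield 14 spatial feature maps.

```python
import numpy as np


def extract_features(stft_coeffs, ref=0):
    """Spectral and spatial features from multichannel STFT coefficients.

    stft_coeffs: complex array of shape (M, T, F) for M microphones.
    Returns the normalized reference magnitude (T, F) and the stacked
    sine/cosine IPD features of shape (2 * (M - 1), T, F).
    """
    mag = np.abs(stft_coeffs[ref])
    mag = mag / max(mag.max(), 1e-12)  # scale to [0, 1]; peak normalization assumed
    others = [q for q in range(stft_coeffs.shape[0]) if q != ref]
    # Wrapped IPD between each non-reference microphone q and the reference p
    # (the sign convention angle(x_q) - angle(x_p) is an assumption).
    ipd = np.angle(stft_coeffs[others] * np.conj(stft_coeffs[ref]))
    return mag, np.concatenate([np.sin(ipd), np.cos(ipd)], axis=0)


rng = np.random.default_rng(0)
coeffs = rng.standard_normal((8, 100, 257)) + 1j * rng.standard_normal((8, 100, 257))
mag, spatial = extract_features(coeffs)
print(mag.shape, spatial.shape)  # (100, 257) (14, 100, 257)
```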

3.2 Sound source localization and separation

Figure 3 shows an overview of the SSLS block. The SSLS block predicted 360/n spectrograms, one for each azimuth direction, at an angular resolution of n degrees. We used Deeplabv3+, which was originally proposed for semantic segmentation of images [28], for the proposed method. Deeplabv3+ has been reported to improve segmentation performance for environmental sounds with various event sizes [17].

Figure 4 shows the structure of Deeplabv3+ used for the SSLS block. Like U-Net [7], which is often used as a conventional model, it has an encoder-decoder structure. The encoder is a convolutional neural network that extracts high-level features. An Xception [29] module was used for feature extraction; it outputs a feature map 1/16 the size of the original spectrogram. The biggest difference from U-Net is a pyramid structure with dilated convolution [
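As general background on the pyramid structure mentioned above, dilated (atrous) convolution spaces a kernel's taps apart so that its receptive field grows without adding weights. The NumPy sketch below is a generic illustration, not the Deeplabv3+ configuration used here; the rates (1, 2, 4) and the helper `dilated_conv2d` are assumptions.

```python
import numpy as np


def dilated_conv2d(x, kernel, rate):
    """'Same'-size 2-D cross-correlation with a dilated (atrous) kernel.

    The kernel taps are spaced `rate` bins apart, so a 3x3 kernel covers a
    (2 * rate + 1) x (2 * rate + 1) region while still having only 9 weights.
    """
    kh, kw = kernel.shape  # odd kernel sizes assumed
    ph, pw = rate * (kh // 2), rate * (kw // 2)
    xp = np.pad(x, ((ph, ph), (pw, pw)))  # zero padding keeps the output size
    out = np.zeros(x.shape)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * xp[i * rate:i * rate + x.shape[0],
                                     j * rate:j * rate + x.shape[1]]
    return out


# A single active time-frequency bin reveals each rate's sampling pattern.
impulse = np.zeros((17, 17))
impulse[8, 8] = 1.0
kernel = np.ones((3, 3))
# A pyramid applies several rates in parallel and stacks the results as channels.
pyramid = np.stack([dilated_conv2d(impulse, kernel, r) for r in (1, 2, 4)])
for r, fmap in zip((1, 2, 4), pyramid):
    rows = np.nonzero(fmap.any(axis=1))[0]
    print(r, rows.min(), rows.max())  # extent grows as 2 * r + 1
```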

Because the SSLS block outputs one spectrogram for each of the 360/n azimuth directions, the number of sound sources need not be set in advance, unlike PIT [15, 16]. The target spectrograms for directions in which no sound source exists were zero. If multiple sources exist in the same direction, the SSLS block cannot separate them; however, sources that could not be separated by the SSLS block were separated by the SSSC block described below. Note that the SSLS block predicts a spectrogram for each azimuth angle regardless of the class, so the network does not overfit to the relationship between the DOA and the class.
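One way to build the per-direction SSLS targets is sketched below. The nearest-bin assignment of each source azimuth to the 360/n direction bins, and summing the magnitudes of sources that fall into the same bin, are assumptions rather than details given in the paper; `ssls_targets` is a hypothetical helper.

```python
import numpy as np


def ssls_targets(source_mags, azimuths_deg, resolution_deg=45):
    """Build SSLS training targets: one magnitude spectrogram per azimuth bin.

    source_mags: (I, T, F) magnitude spectrograms of the individual sources.
    azimuths_deg: the I source azimuths in degrees.
    Returns an array of shape (360 // resolution_deg, T, F); bins with no
    source remain all-zero.
    """
    n_dirs = 360 // resolution_deg
    targets = np.zeros((n_dirs,) + source_mags.shape[1:])
    for mag, az in zip(source_mags, azimuths_deg):
        idx = int(np.round(az / resolution_deg)) % n_dirs  # nearest bin (assumed)
        targets[idx] += mag  # sources sharing a bin are summed (an approximation)
    return targets


mags = np.ones((3, 4, 5))
t = ssls_targets(mags, [0, 90, 95])
print(t.shape, [int(t[k].max()) for k in range(t.shape[0])])
# (8, 4, 5) [1, 0, 2, 0, 0, 0, 0, 0]
```

With n = 45 degrees, the sources at 90 and 95 degrees share bin 2, which is exactly the situation in which the SSSC block has to finish the separation.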

Equation (3) represents the loss function used in training. X denotes the input spectrograms of the mixed signal and \(Y_{\mathrm{ssls}}\) denotes the magnitude spectrograms of the target sounds. The mean squared error (MSE) between the output of the network and the target sounds was used for training:

\[ \mathcal{L}_{\mathrm{SSLS}} = \mathrm{MSE}\left( f(X) \odot X_{\mathrm{mag}},\ Y_{\mathrm{ssls}} \right) \tag{3} \]

where f(X) denotes the mask spectrograms generated by the model, \(X_{\mathrm{mag}}\) is the magnitude spectrogram of the reference microphone, and \(\odot\) denotes element-wise multiplication. The model was trained for 100 epochs at a learning rate of 0.001 using the Adam optimizer [33].
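The masked MSE of Eq. (3) can be written as a short NumPy function; the SSSC loss has the same form with per-class outputs. Averaging over all outputs and time-frequency bins is an assumption, and `masked_mse` is a hypothetical name.

```python
import numpy as np


def masked_mse(mask, mix_mag, target):
    """MSE between masked reference-microphone magnitudes and the targets.

    mask:    (D, T, F) mask spectrograms f(X) predicted by the network.
    mix_mag: (T, F) magnitude spectrogram X_mag of the reference microphone.
    target:  (D, T, F) target magnitude spectrograms.
    """
    estimate = mask * mix_mag[np.newaxis]  # element-wise masking of every output
    return float(np.mean((estimate - target) ** 2))


mix_mag = np.full((4, 5), 0.5)
target = np.stack([mix_mag, np.zeros((4, 5))])         # all energy in output 0
perfect = np.stack([np.ones((4, 5)), np.zeros((4, 5))])
print(masked_mse(perfect, mix_mag, target))             # 0.0
print(masked_mse(np.ones((2, 4, 5)), mix_mag, target))  # 0.125
```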

3.3 Sound source separation and classification

Figure 6 shows the structure of the SSSC block. The SSLS block has 360/n outputs, one for each direction, and the spectrograms separated by the SSLS block were input into the SSSC block one by one and segmented into each class. Although the spectrograms of all directions could be input into the SSSC block at the same time as a multichannel input, the network could then overfit to the relationship between the direction and the class of the sound sources. Outputs for directions in which no sound source existed were not input into the SSSC block, as,

where n represents the angle index of the SSLS output. In the normalized magnitude spectra, 0.2 corresponded to a maximum volume of approximately -96 dB. The spectrogram of the mixed sound was concatenated with each separated spectrogram and input into Deeplabv3+, because the spectrograms separated by the SSLS block might lack necessary information. Unlike the Deeplabv3+ described in Section 3.2, this network took the mixed spectrogram and a spectrogram separated by the SSLS block as input and output the spectrograms for each class. Note that the outputs of the SSLS block were input into the SSSC block one by one, so the SSSC block receives no spatial features. Since the SSSC block predicts the spectrogram for each class regardless of the DOA, the network does not overfit to the relationship between the DOA and the class. Equation (5) represents the loss function used in training. \(X_{\mathrm{sssc}}\) denotes the input spectrograms and Y denotes the magnitude spectrograms of the target sounds. The MSE between the output spectrograms and the targets was used in training:
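
\[ \mathcal{L}_{\mathrm{SSSC}} = \mathrm{MSE}\left( f(X_{\mathrm{sssc}}) \odot X_{\mathrm{mag}},\ Y \right) \tag{5} \]

where \(f(X_{\mathrm{sssc}})\) denotes the mask spectrograms generated by the model, \(X_{\mathrm{mag}}\) is the magnitude spectrogram of the reference microphone, and \(\odot\) denotes element-wise multiplication. The model was trained for 100 epochs at a learning rate of 0.001 using the Adam optimizer [33].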
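The input selection for the SSSC block can be sketched as follows. The activity test is assumed here to compare each direction's peak normalized magnitude with the 0.2 threshold; stacking each selected spectrogram with the mixture spectrogram as a two-channel input follows the description of the SSSC block, and `sssc_inputs` is a hypothetical helper.

```python
import numpy as np

THRESHOLD = 0.2  # on the normalized magnitude scale (Section 3.3)


def sssc_inputs(ssls_out, mix_mag, threshold=THRESHOLD):
    """Select active SSLS outputs and pair each with the mixture spectrogram.

    ssls_out: (D, T, F) per-direction magnitude spectrograms from the SSLS block.
    mix_mag:  (T, F) normalized mixture magnitude spectrogram.
    Returns (direction index, (2, T, F) array) pairs, one per active direction.
    """
    selected = []
    for n, spec in enumerate(ssls_out):
        if spec.max() > threshold:  # activity test on the peak value (assumed)
            selected.append((n, np.stack([spec, mix_mag])))
    return selected


ssls_out = np.zeros((8, 4, 5))
ssls_out[3] = 0.5
inputs = sssc_inputs(ssls_out, np.full((4, 5), 0.6))
print([(n, x.shape) for n, x in inputs])  # [(3, (2, 4, 5))]
```

Because each selected direction is processed on its own, the SSSC input carries no information about which direction it came from.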

4 Evaluation

This section describes the dataset, metrics, experimental conditions and experimental results.

4.1 Dataset

To train the network that deals with SSL, SSS, and classification, it was necessary to prepare pairs of mixed sounds and separated sound source signals of which DOA and class are known. Similar datasets, DCASE2020 Task3 dataset [34] and the Free Universal Sound Separation (FUSS) dataset [35] are used for SELD and sound separation in DCASE 2020 Task4, but, DCASE2020 Task3 dataset do not have the separated sound source signals and FUSS dataset do not have DOA labels, respectively. We created a dataset with dry sources of 75 classes using a method previously described [17]. Figure 7 shows the experimental settings for the simulations. Specifically, an 8-channel circular microphone array of radius 0.1 m was used. Dry sources were randomly selected from the corpus described in Table 1 and used as single point sound sources. Distance, d, between the center of the microphone array and a sound source was 1.0 m. The DOA of the sound source, 𝜃, was randomly selected at 5 degree intervals. The impulse response from the sound source to the microphone array was described as h(t,d,𝜃) = [h1(t,d,𝜃),h2(t,d,𝜃),⋯,hm(t,d,𝜃)], where m indicates the number of microphones. The impulse response in free space was simulated. The impulse response was convolved to each dry source, si(t), with a random time delay, tr, as,

in which ∗ indicates a convolution operator. Each simulated sound was added, then sounds recorded in a restaurant and hall were mixed as background noise, n(t), as,

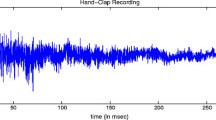

where I indicates the number of sound sources in a mixture; I = 3 was used in this experiment. The background noise, n(t), was added to all time frames of the 8 channels to obtain an average signal-to-noise ratio of about 15 dB. These sounds were assumed to be diffuse noise. Each sound was 4.192 s long. Figure 8 shows an example of the created dataset. Although the simulated signals were multichannel, only one channel is shown in Fig. 8. The upper spectrogram is that of the dry source, which was defined as the ground truth (Fig. 8a). The lower spectrogram is that of the mixed sound input into the neural network (Fig. 8b). The training and evaluation sets contained 10,000 and 1,000 mixtures, respectively. The dry sources used to create the training set were not used to create the evaluation set.
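The simulation procedure can be approximated with the NumPy sketch below. Several simplifications are assumptions, not details from the paper: integer-sample delays with 1/r attenuation for the free-field impulse responses, a speed of sound of 343 m/s, microphone 0 placed at azimuth 0, white Gaussian noise in place of the restaurant and hall recordings, and random noise in place of real dry sources. `free_field_ir` and `simulate_mixture` are hypothetical helpers.

```python
import numpy as np

FS = 16000                       # sampling rate (Section 3.1)
SPEED_OF_SOUND = 343.0           # m/s (assumed)
N_MICS, RADIUS, DIST = 8, 0.1, 1.0
LENGTH = int(round(4.192 * FS))  # 67072 samples per mixture


def free_field_ir(theta_deg, n_taps=64):
    """Integer-delay free-field impulse responses h(t, d, theta), shape (M, n_taps)."""
    mic_az = 2 * np.pi * np.arange(N_MICS) / N_MICS
    mics = RADIUS * np.stack([np.cos(mic_az), np.sin(mic_az)], axis=1)
    th = np.deg2rad(theta_deg)
    src = DIST * np.array([np.cos(th), np.sin(th)])
    r = np.linalg.norm(mics - src, axis=1)         # source-to-microphone distances
    delays = np.round(r / SPEED_OF_SOUND * FS).astype(int)
    h = np.zeros((N_MICS, n_taps))
    h[np.arange(N_MICS), delays] = 1.0 / r          # 1/r spherical spreading
    return h


def simulate_mixture(dry_sources, thetas_deg, rng, snr_db=15.0):
    """Sum delayed, convolved sources and add noise at the requested SNR."""
    clean = np.zeros((N_MICS, LENGTH))
    for s, theta in zip(dry_sources, thetas_deg):
        t_r = rng.integers(0, LENGTH - len(s) + 1)  # random onset delay in samples
        delayed = np.zeros(LENGTH)
        delayed[t_r:t_r + len(s)] = s
        h = free_field_ir(theta)
        for m in range(N_MICS):
            clean[m] += np.convolve(delayed, h[m])[:LENGTH]
    noise = rng.standard_normal((N_MICS, LENGTH))   # stand-in for recorded noise
    gain = np.sqrt(np.mean(clean ** 2) / (np.mean(noise ** 2) * 10 ** (snr_db / 10)))
    return clean + gain * noise, clean


rng = np.random.default_rng(0)
sources = [rng.standard_normal(FS) for _ in range(3)]  # I = 3 placeholder sources
thetas = rng.choice(np.arange(0, 360, 5), size=3)      # 5-degree grid
mixture, clean = simulate_mixture(sources, thetas, rng)
snr = 10 * np.log10(np.mean(clean ** 2) / np.mean((mixture - clean) ** 2))
print(mixture.shape, round(float(snr), 2))  # (8, 67072) 15.0
```

Returning the clean signal alongside the mixture makes the SNR easy to verify; a full pipeline would also keep each source's signal to build the per-class ground truth.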

Dataset creation procedure. Each dry source was added after the impulse response was convolved with a random time delay. Mixed sounds were used as input data, and the sound source of each class before mixing was used as the ground truth. The dry sources used to create the training set were not used to create the evaluation set. a Ground truth b Mixed spectrogram

4.2 Metrics

Environmental sound segmentation, in which SSL, SSS, and classification were performed simultaneously, was evaluated using the root mean squared error (RMSE):

\[ \mathrm{RMSE} = \sqrt{ \frac{1}{N} \sum_{t,f} \left( Y_{t,f} - \hat{Y}_{t,f} \right)^{2} } \]

where Y and \(\hat {Y}\) represent the magnitude spectra of the ground truth and the separated sound, respectively, and N represents the number of time-frequency bins. Silent sections were excluded from the evaluation.
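The metric can be computed as sketched below. How silent sections were detected is not specified, so treating a frame as silent when its ground-truth energy, summed over classes and frequency, is essentially zero is an assumption; `segmentation_rmse` is a hypothetical helper.

```python
import numpy as np


def segmentation_rmse(Y, Y_hat, eps=1e-8):
    """RMSE between ground-truth and estimated magnitudes over non-silent frames.

    Y, Y_hat: arrays of shape (K, T, F) for K classes.
    A frame counts as silent when the ground-truth energy summed over classes
    and frequency bins is below `eps` (this criterion is an assumption).
    """
    active = Y.sum(axis=(0, 2)) > eps  # boolean mask over time frames
    diff = (Y - Y_hat)[:, active, :]
    return float(np.sqrt(np.mean(diff ** 2)))


Y = np.zeros((2, 3, 4))
Y[:, 1, :] = 1.0                     # only frame 1 contains sound
Y_hat = Y + 0.5                      # constant error of 0.5 everywhere
print(segmentation_rmse(Y, Y_hat))   # 0.5
```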

4.3 Comparison between spatial features using SSLS block

To compare the sound source separation performance obtained with different spatial features, preliminary experiments were conducted. In addition to the sine and cosine of IPDs described in Section 3.1, we compared complex STFT coefficients and IPDs. The complex STFT coefficients were defined as,

\[ \left[ \mathrm{Re}\left( x_{t,f} \right),\ \mathrm{Im}\left( x_{t,f} \right) \right] \]

where \(x_{t,f}\) represents the STFT coefficients of each microphone at time frame t and frequency bin f. IPDs were defined as,

where \(\theta_{t,f,p,q}\) is the IPD between the STFT coefficients \(x_{t,f,p}\) and \(x_{t,f,q}\) at time t and frequency f of the signals at the reference microphone, p, and a non-reference microphone, q. Note that the STFT coefficients and IPDs were normalized to the range [-1, 1].
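The two alternative spatial features can be sketched in the same way, again assuming a complex STFT array of shape (M, T, F) with microphone 0 as the reference. Peak scaling of the real and imaginary parts, the IPD sign convention, and the helper names are assumptions; dividing the wrapped IPD by π maps it to [-1, 1].

```python
import numpy as np


def complex_features(stft_coeffs):
    """Real and imaginary STFT parts of all M microphones, scaled to [-1, 1]."""
    feats = np.concatenate([stft_coeffs.real, stft_coeffs.imag], axis=0)  # (2M, T, F)
    return feats / max(np.abs(feats).max(), 1e-12)  # peak scaling (assumed)


def ipd_features(stft_coeffs, ref=0):
    """Wrapped IPDs to the reference microphone, scaled from [-pi, pi] to [-1, 1]."""
    others = [q for q in range(stft_coeffs.shape[0]) if q != ref]
    ipd = np.angle(stft_coeffs[others] * np.conj(stft_coeffs[ref]))  # sign assumed
    return ipd / np.pi


rng = np.random.default_rng(1)
coeffs = rng.standard_normal((8, 10, 257)) + 1j * rng.standard_normal((8, 10, 257))
print(complex_features(coeffs).shape, ipd_features(coeffs).shape)
# (16, 10, 257) (7, 10, 257)
```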

Each of these spatial features was input into the SSLS block, and the output of the SSLS block was evaluated using the RMSE. The angular resolution, n, was set to 45 degrees, and U-Net was used for the SSLS block in addition to Deeplabv3+. Note that the sine and cosine of the IPD vary continuously with the phase difference, whereas the wrapped IPD, \(\theta_{t,f,p,q}\), jumps between -π and π at the wrapping boundary, which may make learning unstable.
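The continuity argument can be checked numerically. In the sketch below (illustrative values only), two phase differences just 0.02 rad apart on either side of the ±π boundary wrap to IPD values almost 2π apart, while their sine and cosine remain nearly identical.

```python
import numpy as np

# Two physical phase differences only 0.02 rad apart, straddling the +/-pi boundary.
phase_diff = np.array([np.pi - 0.01, np.pi + 0.01])
ipd = np.angle(np.exp(1j * phase_diff))             # wrapped IPD in (-pi, pi]
sincos = np.stack([np.sin(ipd), np.cos(ipd)], axis=1)

raw_gap = abs(ipd[1] - ipd[0])                       # about 2*pi - 0.02: a large jump
feature_gap = np.linalg.norm(sincos[1] - sincos[0])  # about 0.02: nearly identical
print(round(float(raw_gap), 3), round(float(feature_gap), 3))  # 6.263 0.02
```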

Table 2 summarizes the results of the simulation experiments. Regardless of the model, the RMSE was smallest with the sine and cosine of IPDs. These results show that using the sine and cosine of IPDs, which are continuous functions, as spatial features improves sound source separation performance. We therefore used the sine and cosine of IPDs as spatial features in the following evaluations.

4.4 Analysis of the overfitting to the relationship between the DOA and the class

We conducted a preliminary experiment to analyze overfitting to the relationship between the DOA and the class. Specifically, the RMSEs obtained with single-channel and multichannel inputs were compared. Unlike single-channel input, multichannel input has spatial features that depend on the DOA in addition to spectral features that depend on the class. If the network overfits to the relationship between the DOA and the class, its performance with multichannel input should be worse than with single-channel input. In addition to the 75-class dataset described in Section 4.1, a 3-class dataset was used. For the same amount of training data, performance degradation due to overfitting is less likely to occur with the 3-class dataset than with the 75-class dataset. In this experiment, we used the single-network structure shown in Fig. 10a to analyze overfitting when SSL, SSS, and classification are performed implicitly in a single network. Deeplabv3+ and U-Net were used as models, and the sine and cosine of IPDs, validated in Section 4.3, were used as spatial features.

Table 3 shows the results of the experiments trained with 10,000 data points. The RMSE of Deeplabv3+ was smaller than that of U-Net in all experimental conditions, indicating that Deeplabv3+ has higher segmentation performance than U-Net. Comparing single-channel and multichannel inputs, the RMSE was smaller for the multichannel input on the 3-class dataset, whereas it was larger for the multichannel input on the 75-class dataset. This suggests that the network overfitted to the relationship between the DOA and the class on the 75-class dataset because the amount of data was insufficient for its variability, whereas the spatial features were exploited efficiently on the 3-class dataset.

Figure 9 shows the difference in RMSE between single-channel and multichannel inputs on the 3-class dataset as a function of the number of training data. Positive values mean that the RMSE of the multichannel input was larger than that of the single-channel input. When the amount of training data is insufficient, performance deteriorates relative to the single-channel input because the network is likely to overfit to the relationship between the DOA and the class. These results indicate that the network overfits to the relationship between the DOA and the class if a sufficient dataset is not available.

Relationship between the number of training data and the RMSE difference between single-channel and multichannel inputs. Positive values mean that the RMSE of the multichannel input was larger than that of the single-channel input. When the amount of training data is insufficient, performance deteriorates relative to the single-channel input because the network is likely to overfit to the relationship between the DOA and the class

4.5 Comparison between various model structures

Because environmental sound segmentation performs SSL, SSS, and classification simultaneously, both spectral and spatial features must be exploited. However, as mentioned previously, there is no correlation between the DOA and the class, which may lead to overfitting if the dataset is insufficient. Therefore, we compared the four structures shown in Fig. 10.

Figures 10a and b show end-to-end structures that perform SSL, SSS, and classification simultaneously in the same neural network; they differ in the loss functions used. The single-loss end-to-end structure shown in Fig. 10a uses only per-class losses. It does not use losses related to the DOA, which may prevent the use of spatial features. The multi-loss structure shown in Fig. 10b is an extension of the single-loss end-to-end structure and a common structure for SELD methods, which perform DOA estimation and SED simultaneously. This structure uses losses related to both the DOA and the class. However, learning two uncorrelated losses simultaneously may lead to overfitting to the relationship between the DOA and the class. In contrast, the structures in Figs. 10c and d consist of two blocks. Both separate the SSLS block, which performs SSL and SSS simultaneously using spatial features, from the block that performs classification using spectral features. The structure shown in Fig. 10c is a cascade of the SSLS block and a classification block based on a convolutional neural network. Because the SSLS block cannot separate sound sources arriving from nearby directions, errors caused by the SSLS block degrade the performance of this structure. The proposed structure (Fig. 10d) uses an SSSC block instead of the classification block. Although it is difficult for the SSLS block, which relies on spatial features, to completely separate sound sources arriving from nearby directions, the SSSC block improves separation performance using spectral features.

4.6 Results and discussion

Table 4 summarizes the results of the simulation experiments. The baselines were single-loss end-to-end methods using U-Net and Deeplabv3+ [17]. To verify the performance improvement due to spatial features, single-channel inputs were compared with multichannel inputs. Apart from the input and output layers, all models had the same structure to allow a fair comparison with the proposed method. The angular resolution, n, of the SSLS block was 45 degrees. For the baseline single-loss end-to-end method, the use of multichannel inputs did not reduce the RMSE for either model. The single-loss end-to-end structure does not use losses related to the DOA, so the spatial features may not be well exploited. The multi-loss end-to-end structure, an extension of the single-loss end-to-end structure, had a larger RMSE than the single-loss end-to-end structure. Since there is no correlation between the DOA and the class, optimizing both losses simultaneously may have caused the network to overfit to the relationship between them. In contrast, the SSLS + Classification structure showed a relatively small RMSE regardless of the model. This structure separates the SSLS block, which performs SSL and SSS based on spatial features, from the classification block, and thus does not overfit to the relationship between the DOA and the class. However, the SSLS block does not completely separate sounds arriving from nearby directions, so errors caused by the SSLS block accumulate. Therefore, no performance improvement was observed compared with the single-loss end-to-end structure using Deeplabv3+. The proposed structure, in which the classification block of the SSLS + Classification structure was replaced by the SSSC block, had a clearly smaller RMSE: the SSLS + Classification structure cannot correct errors that occur in the SSLS block, whereas the SSSC block, which also performs separation, reduced the propagation of errors from the SSLS block.

Figure 11 shows an example of the segmentation results. For clarity, the magnitude spectrograms predicted for each class are displayed as a single spectrogram colored by class, with overlapping sound sources shown in different colors. With the single-loss end-to-end structure, performance in the overlapping regions was degraded, presumably because the spatial features were not trained effectively without losses related to the DOA. The multi-loss end-to-end structure clearly degraded performance further; since the DOA and the class were not correlated, training may not have converged to the global minimum. The SSLS + Classification structure, which explicitly separated the SSLS block from classification, reduced the RMSE relative to these structures. However, as shown in the top panel of Fig. 11d, the SSLS block could not separate the two sound sources represented by orange and blue because they arrived from nearby directions. The proposed method reduced the RMSE further by giving the SSSC block a separation function that corrects errors left by the SSLS block, as shown in Fig. 11e. Figure 12 shows an example of the SSLS block and SSSC block outputs. As shown in Fig. 12b, the SSLS block, which uses spatial features, could not separate the sound sources arriving from nearby directions, whereas the SSSC block, which uses spectral features, improved the segmentation performance. Thus, by training the SSLS and SSSC blocks explicitly in two stages, the spatial features were exploited effectively in the SSLS block, and the SSSC block compensated for the shortcomings of the SSLS block using spectral features.

An example of SSLS outputs and SSSC outputs. The SSLS block predicted spectrograms for each direction from the input spectrograms. Although the SSLS block could not separate sound sources arriving from nearby directions, the SSSC block separated the magnitude spectrograms for each class from the output of the SSLS block. a Mixture. b Output of SSLS. c Output of SSSC

5 Conclusions

This paper proposed a multichannel environmental sound segmentation method that does not require the number of sound sources to be set in advance and that prevents overfitting to the relationship between the DOA and the class by explicitly separating the SSLS block from the SSSC block. Simulation experiments using created datasets including 75 classes of environmental sounds showed that the proposed method achieved a lower RMSE than conventional methods. When sound sources arrived from nearby directions, the SSSC block was able to separate the sources that the SSLS block could not.