Abstract

Mean-semivariance and minimum semivariance portfolios are a preferable alternative to mean-variance and minimum variance portfolios whenever the asset returns are not symmetrically distributed. However, similarly to other portfolios based on downside risk measures, they are particularly affected by parameter uncertainty because the estimates of the necessary inputs are less reliable than the estimates of the full covariance matrix. We address this problem by performing PCA using Minimum Average Partial on the downside correlation matrix in order to reduce the dimension of the problem and, with it, the estimation errors. We apply our strategy to various datasets and show that it greatly improves the performance of mean-semivariance optimization, largely closing the gap in out-of-sample performance with the strategies based on the covariance matrix.


1 Introduction

The poor out-of-sample performance of the mean-variance portfolio optimization procedure introduced by Markowitz (1952) is very well known and documented in the literature (see, e.g., Michaud, 1989; Broadie, 1993; Chopra and Ziemba, 1993). Given a set of risky assets and a risk-free asset, the optimal mean-variance portfolio weights are given by the closed form solution

\[ w_{mv} = \frac{1}{\gamma }\Sigma ^{-1}\mu , \tag{1} \]

where \( \mu \) is the vector of mean returns, \(\Sigma \) is the covariance matrix and \(\gamma \) is the risk aversion coefficient. Analogously, if the investor specifies their preferences through a desired mean portfolio return instead of a risk aversion level, the weights

\[ w_{mv} = \frac{Re}{\mu '\Sigma ^{-1}\mu }\Sigma ^{-1}\mu \tag{2} \]

minimize the portfolio variance given the target return Re. Since \(\mu \) and \(\Sigma \) are unknown in practice, they have to be estimated. The classical plug-in approach consists in computing the sample estimates \( {\hat{\mu }} \) and \( {\hat{\Sigma }} \), and treating them as if they were the true values. However, the plug-in approach leads to disappointing outcomes because of estimation errors (Kan and Zhou, 2007). Moreover, the mean-variance utility framework rests on unrealistic assumptions, and the development of alternative approaches for the optimization and evaluation of investments is an active field of research (see e.g. Grau-Carles et al., 2019; Amédée-Manesme & Barthélémy, 2020; Geissel et al., 2022, for some recent works in this area). Nevertheless, the impact of estimation errors can frustrate the attempts to obtain portfolios more in line with the investors’ real preferences. In fact, the problem gets even worse if the variance is replaced by downside risk measures (e.g. semivariance or CVaR), which are likely more suitable to quantify risk but are also more affected by estimation errors (Rigamonti, 2020). While studies on real empirical data have shown the importance of targeting the semivariance (see e.g. Santamaria & Bravo, 2013), up to now limited research has been done to address parameter uncertainty in this framework. Sharma et al. (2017) propose an Omega-CVaR optimization approach to avoid some of the difficulties related to the estimation of the return distribution shape or moments. Recently, fuzzy portfolio selection models have been developed as a novel approach to target various risk measures, including those used in a downside risk framework (see e.g. Chen et al., 2018a, b; Yue et al., 2019).
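The plug-in approach can be illustrated with a minimal Python sketch, assuming the standard closed forms \( \frac{1}{\gamma }\Sigma ^{-1}\mu \) and \( \frac{Re}{\mu '\Sigma ^{-1}\mu }\Sigma ^{-1}\mu \). The function name `plug_in_mv_weights` and the simulated returns are hypothetical and serve only as an illustration.

```python
import numpy as np

def plug_in_mv_weights(returns, gamma=None, target=None):
    """Plug-in mean-variance weights from a (T x N) array of excess returns.

    Pass `gamma` for the risk-aversion form or `target` for the target-return form.
    """
    mu_hat = returns.mean(axis=0)                 # sample mean vector
    sigma_hat = np.cov(returns, rowvar=False)     # sample covariance matrix
    inv_mu = np.linalg.solve(sigma_hat, mu_hat)   # Sigma^{-1} mu without an explicit inverse
    if gamma is not None:
        return inv_mu / gamma
    return target / (mu_hat @ inv_mu) * inv_mu

# Simulated data, for illustration only (not one of the paper's datasets).
rng = np.random.default_rng(0)
sim_returns = rng.normal(0.005, 0.04, size=(120, 5))
w_target = plug_in_mv_weights(sim_returns, target=0.001)
w_gamma = plug_in_mv_weights(sim_returns, gamma=3.0)
```

By construction the target-return form satisfies \( w'{\hat{\mu }} = Re \) in sample; the estimation error only becomes visible out of sample.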

In this paper, we address the problem by taking as a starting point one of the solutions devised to mitigate the impact of estimation errors in a mean-variance framework. We build on the strategy introduced by Chen and Yuan (2016), who propose to estimate a subspace mean-variance portfolio instead of the theoretically optimal mean-variance portfolio. This is done by considering only the first d eigenvectors of the sample covariance matrix \( {\hat{\Sigma }} \) instead of the full matrix. This idea is consistent with the theoretical background of several mainstream asset pricing models such as the capital asset pricing model and the arbitrage pricing theory. In those models asset returns are driven by systematic risks captured by factors. Methodologically, we exploit a principal component analysis to extract those factors.

If the reduced dimension d is carefully chosen, the systematic error caused by restricting the investment options is outweighed by the lower estimation error achieved thanks to the reduced dimension of the problem. Inspired by their setup, we apply a similar principle to optimize mean-semivariance and minimum semivariance portfolios constructed using the approximation of the semicovariance matrix with the technique introduced by Rigamonti et al. (2021). By focusing on a risk measure that only penalizes the negative deviations of the asset returns we might capture a more realistic reflection of the investor’s preferences.

For the choice of d, Chen and Yuan (2016) rely on a criterion introduced by Bai and Ng (2002) that depends on the length of the estimation window. However, when computing the semicovariance matrix not all information available from the estimation window is incorporated. Therefore, the Bai and Ng (2002) criterion may not be suitable. To overcome this obstacle, we transform the semicovariance matrix into a downside correlation matrix, and select d using the Minimum Average Partial (MAP) rule introduced by Velicer (1976), which does not depend on the length of the estimation window. We apply our portfolio rule to three empirical datasets of different size. The results show that our strategy outperforms the portfolios based on the full semicovariance matrix, and performs well even compared to portfolios that target the variance. Moreover, contrary to existing approaches in the literature, our strategy does not involve complicated algorithms to compute the optimal weights, which are instead obtained through simple closed form solutions.

This paper provides three main contributions. First, we address the problem of parameter uncertainty for portfolios based on the semicovariance matrix. Second, we perform for the first time a principal component analysis (PCA) in a downside variability framework. Finally, we show the effectiveness of MAP for asset allocation decisions.

The remainder of the paper is organized as follows. Section 2 describes the dimension reduction methodology and the asset allocation procedure. Section 3 discusses details of principal component analysis when applied to the semicovariance matrix. Section 4 provides an empirical application to real data. Section 5 concludes.

2 Methodology

2.1 Subspace efficient portfolios

The idea underlying our approach is analogous to that of Chen and Yuan (2016). For an investor with a certain risk aversion \(\gamma \), given the sample estimates \( {\hat{\mu }} \) and \({\hat{\Sigma }}\) and the number d of eigenvalues to retain, they compute a subspace mean-variance portfolio whose weights are

where \({\hat{\theta }}_{k}\) is an eigenvalue of \( {\hat{\Sigma }} \) and \({\hat{\nu }}_{k}\) the corresponding eigenvector, with \({\hat{\theta }}_{1}\ge {\hat{\theta }}_{2}\ge \cdots \ge {\hat{\theta }}_{d}\). If we consider an investor with a desired mean portfolio return Re instead, the portfolio weights are

For the choice of d, Chen and Yuan (2016) rely on a criterion introduced by Bai and Ng (2002), and estimate it as

where N is the number of assets, T is the estimation window and \( k_{max} \) is prespecified.
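As a minimal sketch of the subspace idea, the code below assumes that the risk-aversion form of the subspace weights is \( \frac{1}{\gamma }\sum _{k=1}^{d}{\hat{\theta }}_{k}^{-1}{\hat{\nu }}_{k}{\hat{\nu }}'_{k}{\hat{\mu }} \), which is what the definitions of \({\hat{\theta }}_{k}\) and \({\hat{\nu }}_{k}\) suggest. The function name and the simulated data are hypothetical, and d is passed in directly rather than estimated with the Bai and Ng (2002) criterion.

```python
import numpy as np

def subspace_mv_weights(mu_hat, sigma_hat, d, gamma):
    """Mean-variance weights built from the d leading eigenpairs of sigma_hat."""
    theta, nu = np.linalg.eigh(sigma_hat)       # eigenvalues in ascending order
    lead = np.argsort(theta)[::-1][:d]          # positions of the d largest eigenvalues
    theta_d, nu_d = theta[lead], nu[:, lead]
    # (1/gamma) * sum_k theta_k^{-1} nu_k nu_k' mu_hat (assumed form, see lead-in)
    return nu_d @ ((nu_d.T @ mu_hat) / theta_d) / gamma

rng = np.random.default_rng(1)
sim_returns = rng.normal(0.005, 0.04, size=(240, 6))   # illustrative data only
mu_hat = sim_returns.mean(axis=0)
sigma_hat = np.cov(sim_returns, rowvar=False)

w_full = subspace_mv_weights(mu_hat, sigma_hat, d=6, gamma=3.0)   # d = N
w_sub = subspace_mv_weights(mu_hat, sigma_hat, d=2, gamma=3.0)    # d < N
```

With d = N the sum over eigenpairs reproduces \({\hat{\Sigma }}^{-1}\), so `w_full` coincides with the plug-in weights, while `w_sub` only invests along the two leading eigenvectors.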

An alternative decomposition based on the eigenvalues of the correlation matrix is proposed by Peralta and Zareei (2016). Inspired by the network eigenvector centrality they decompose the weights \({\hat{w}}^{*}_{mv}\) in the following way:

where \( {\hat{\lambda }}_{k} \) is an eigenvalue of the correlation matrix \(\Omega \) and \({\hat{\nu }}_{k}\) the corresponding eigenvector, and \( {\hat{\mu }}^{*} \) for every asset i corresponds to \({\hat{\mu }}_{i}/{\hat{\sigma }}_{i}\), with \({\hat{\mu }}_{i}\) and \({\hat{\sigma }}_{i}\) the estimated mean and standard deviation of that asset respectively. The optimal mean-variance weight for each asset i is then given by \( {\hat{w}}_{mv,i}={\hat{w}}^{*}_{mv,i}/{\hat{\sigma }}_{i}\) (Footnote 1).

Peralta and Zareei (2016) consider assets as a network and concentrate their attention on the first eigenvector of the correlation matrix \(\Omega \). They set the elements on the diagonal to zero (to discard loops). Thus, they compute the eigenvector centrality values of the network of assets, and build an asset allocation rule based on it. We take a different approach and rewrite Eq. (6) as (Footnote 2)

so that the weight for each asset i can then be obtained as \( {\hat{w}}_{mv,cor,i} = {\hat{w}}^{*}_{mv,cor,i}/{\hat{\sigma }}_{i} \). In this way we can apply the same dimension reduction technique proposed by Chen and Yuan (2016), but using the correlation matrix instead of the covariance matrix. Equations (4) and (7) give the same result (the mean-variance weights obtained with the plug-in approach) when \( d=N \). However, when \( d<N \), weights \( {\hat{w}}_{cov} \) and \( {\hat{w}}_{cor} \) might differ, as the eigenvalues and eigenvectors of the correlation matrix are different from those of the covariance matrix, and not directly related to them (Jolliffe and Cadima, 2016).
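The equivalence at \( d=N \) can be checked numerically. The sketch below assumes the correlation-based weights take the form given in the footnote to this passage, \( \frac{Re}{{\hat{\mu }}'{\hat{\Sigma }}^{-1}{\hat{\mu }}}\sum _{k=1}^{d}{\hat{\lambda }}_{k}^{-1}{\hat{\nu }}_{k}{\hat{\nu }}'_{k}{\hat{\mu }}^{*} \), followed by division by \({\hat{\sigma }}_{i}\). Function and variable names are hypothetical and the data are simulated.

```python
import numpy as np

def subspace_mv_weights_cor(mu_hat, sigma_hat, d, target):
    """Target-return weights from the d leading eigenpairs of the correlation matrix."""
    sd = np.sqrt(np.diag(sigma_hat))                  # asset standard deviations
    omega = sigma_hat / np.outer(sd, sd)              # correlation matrix
    lam, nu = np.linalg.eigh(omega)
    lead = np.argsort(lam)[::-1][:d]
    lam_d, nu_d = lam[lead], nu[:, lead]
    mu_star = mu_hat / sd                             # standardized means mu_i / sigma_i
    # Scaling factor uses the full covariance matrix (assumed, as in the footnote).
    scale = target / (mu_hat @ np.linalg.solve(sigma_hat, mu_hat))
    w_star = scale * (nu_d @ ((nu_d.T @ mu_star) / lam_d))
    return w_star / sd                                # back to portfolio weights

rng = np.random.default_rng(4)
vols = np.array([0.02, 0.03, 0.04, 0.05, 0.06])      # heterogeneous volatilities
sim_returns = rng.normal(size=(240, 5)) * vols + 0.004
mu_hat = sim_returns.mean(axis=0)
sigma_hat = np.cov(sim_returns, rowvar=False)

w_cor_full = subspace_mv_weights_cor(mu_hat, sigma_hat, d=5, target=0.001)
inv_mu = np.linalg.solve(sigma_hat, mu_hat)
w_plug_in = 0.001 / (mu_hat @ inv_mu) * inv_mu
```

Here `w_cor_full` and `w_plug_in` agree up to rounding, whereas for \( d<N \) the correlation-based and covariance-based truncations generally select different subspaces.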

The estimation error in the sample mean is typically so large that minimizing the variance leads to portfolios with a higher Sharpe ratio than those computed via mean-variance optimization (see e.g. Jagannathan & Ma, 2003). Therefore, we also consider the minimum-variance portfolio, whose weights are given by

\[ w_{v} = \frac{\Sigma ^{-1}\mathbf{1}}{\mathbf{1}'\Sigma ^{-1}\mathbf{1}}, \tag{8} \]

where \( \mathbf{1} \) is a vector of ones with length equal to the number of risky assets. The true value of \(\Sigma \) is unknown and has to be replaced by the sample estimate or the shrinkage estimate proposed by Ledoit and Wolf (2004). The strategy proposed by Chen and Yuan (2016) can be modified to compute a subspace minimum-variance portfolio, with weights given by

Finally, in the framework of our strategy based on the correlation matrix, the subspace minimum-variance portfolio is computed using

where \( \epsilon \) for every asset i corresponds to \(1/{\hat{\sigma }}_{i}\), and the weight for each asset i can then be obtained as \( {\hat{w}}_{v,cor,i} = {\hat{w}}^{*}_{v,cor,i}/{\hat{\sigma }}_{i} \).
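A minimal sketch of the correlation-based subspace minimum-variance rule follows. It assumes that \( {\hat{w}}^{*}_{v,cor} \) is proportional to \( \sum _{k=1}^{d}{\hat{\lambda }}_{k}^{-1}{\hat{\nu }}_{k}{\hat{\nu }}'_{k}\epsilon \) and, as a further assumption, rescales the final weights so that they sum to one; the exact normalization used in the paper may differ. All names and data are hypothetical.

```python
import numpy as np

def subspace_min_var_weights_cor(sigma_hat, d):
    """Minimum-variance weights from the d leading eigenpairs of the correlation matrix."""
    sd = np.sqrt(np.diag(sigma_hat))
    omega = sigma_hat / np.outer(sd, sd)              # correlation matrix
    lam, nu = np.linalg.eigh(omega)
    lead = np.argsort(lam)[::-1][:d]
    lam_d, nu_d = lam[lead], nu[:, lead]
    eps = 1.0 / sd                                    # epsilon_i = 1 / sigma_i
    w_star = nu_d @ ((nu_d.T @ eps) / lam_d)
    w = w_star / sd
    return w / w.sum()                                # ASSUMPTION: normalize to sum to one

rng = np.random.default_rng(5)
vols = np.array([0.02, 0.03, 0.04, 0.05])
sim_returns = rng.normal(size=(300, 4)) * vols        # illustrative data only
sigma_hat = np.cov(sim_returns, rowvar=False)

w_full = subspace_min_var_weights_cor(sigma_hat, d=4)
w_sub = subspace_min_var_weights_cor(sigma_hat, d=2)
ones = np.ones(4)
w_plug_in = np.linalg.solve(sigma_hat, ones)
w_plug_in = w_plug_in / w_plug_in.sum()               # Sigma^{-1} 1 / (1' Sigma^{-1} 1)
```

With all components retained the rule returns the plug-in minimum-variance weights, so any difference in performance comes from the truncation at \( d<N \).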

We now need to decide how many eigenvalues should be retained, and this is where the advantage of focusing on the correlation matrix instead of the covariance matrix becomes apparent. Warne and Larsen (2014) test several rules and find that the best performing one for factor analysis appears to be the Minimum Average Partial (MAP) introduced by Velicer (1976). This method requires performing a PCA on the correlation matrix. Before describing how MAP works, we quickly recall the concept of partial correlation. Partial correlation measures, on a scale from −1 to 1, the linear association between two variables X and Y, while controlling for the effect of a set of additional variables \( \mathbf{Z} \) (Footnote 3). More formally, the partial correlation \( \rho _{X,Y\cdot Z} \) between X and Y controlling for \( \mathbf{Z} \) is given by the correlation coefficient between the residuals obtained from the linear regression of X on \( \mathbf{Z} \) and Y on \( \mathbf{Z} \). If one variable is partialed out of the correlation we have a first order partial correlation; if two variables are partialed out we get a second order partial correlation, and so on. First order partial correlations can also be computed, as in Velicer (1976), using the formula

\[ \rho _{i,j\cdot \nu _{k}} = \frac{\rho _{i,j}-\rho _{i,\nu _{k}}\rho _{j,\nu _{k}}}{\sqrt{\left( 1-\rho ^{2}_{i,\nu _{k}}\right) \left( 1-\rho ^{2}_{j,\nu _{k}}\right) }}, \tag{11} \]

where i and j are any pair of the N variables and \(\nu _{k}\) is one of the N principal components. When we partial out a component we are removing both systematic and individual variability from the data. Removing the former decreases the value of the numerator, while removing the latter decreases the value of the denominator. As k goes from 1 to N, less systematic and more individual variability gets removed when the \(\nu _{k}\) component is partialed out, which means that the numerator decreases more slowly and the denominator faster. There is a value of k at which more individual than systematic variability gets removed from the data, which makes the denominator drop more than the numerator. At that point \( \rho _{i,j\cdot \nu _{k}} \) stops decreasing and starts increasing. The MAP criterion involves computing, for every pair of variables, the value of \( \rho _{i,j\cdot \nu _{k}} \), and from these values the average squared partial correlation:

\[ MAP_{k} = \frac{1}{N(N-1)}\sum _{i=1}^{N}\sum _{j=1}^{N}\rho ^{2}_{i,j\cdot \nu _{k}}, \tag{12} \]

with \( i\ne j \). As k goes from 1 to N, the value of \( MAP_{k} \) decreases until it reaches a minimum before starting to increase, and that k for which \( MAP_{k} \) is minimum is the optimal number of components/factors to retain.
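The MAP rule can be sketched in a few lines. The code below follows the usual matrix formulation of Velicer's procedure, in which the first k components are partialed out jointly from the correlation matrix; whether the paper's implementation matches this in every detail is an assumption. Function names and the simulated two-factor data are hypothetical.

```python
import numpy as np

def map_values(corr):
    """Average squared partial correlation after removing the first k components.

    Returns an array whose entry k-1 holds MAP_k, for k = 1, ..., at most N-1.
    """
    n = corr.shape[0]
    lam, nu = np.linalg.eigh(corr)
    lead = np.argsort(lam)[::-1]
    lam, nu = lam[lead], nu[:, lead]
    loadings = nu * np.sqrt(np.clip(lam, 0.0, None))   # column k: loadings of component k
    off_diag = ~np.eye(n, dtype=bool)
    out = []
    for k in range(1, n):
        a = loadings[:, :k]
        resid = corr - a @ a.T                 # covariance left after partialling out
        resid_var = np.diag(resid)
        if np.any(resid_var <= 1e-12):         # a variable is fully explained: stop
            break
        partial = resid / np.sqrt(np.outer(resid_var, resid_var))
        out.append(np.mean(partial[off_diag] ** 2))
    return np.array(out)

def map_number_of_components(corr):
    """Number of components k at which MAP_k attains its minimum."""
    return int(np.argmin(map_values(corr))) + 1

# Illustrative data: 8 series driven by 2 simulated common factors plus noise.
rng = np.random.default_rng(2)
factors = rng.normal(size=(2000, 2))
betas = rng.uniform(0.5, 1.5, size=(2, 8))
data = factors @ betas + rng.normal(scale=0.7, size=(2000, 8))
corr = np.corrcoef(data, rowvar=False)
d_map = map_number_of_components(corr)
```

Only the correlation matrix enters the computation, which is why the rule does not require the length of the estimation window as an input.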

Despite the good performance found by Warne and Larsen (2014), MAP is not commonly employed in finance, and its application is mostly confined to psychometrics. One notable exception is given by Claeys and Vašíček (2004), who use MAP in their study on spillover and contagion effects in the European sovereign bond markets. To the best of our knowledge, we are the first to apply MAP in the context of financial asset allocation.

MAP does not depend on the sample size, and can therefore be directly applied even to mean-semivariance and minimum semivariance optimization problems, which require a semicovariance matrix as input. To understand why a dependence on the sample size is problematic in this setting, consider the formula for the semivariance of a single asset, provided by Markowitz (1959):

\[ {\hat{\sigma }}^{2}_{B} = \frac{1}{T}\sum _{t=1}^{T}\left[ \min (r_{t}-B,0)\right] ^{2}, \tag{13} \]

where T is the estimation window, \( r_{t} \) is the return of the asset in period t, and the benchmark B is the value below which the investor considers variability to be downside variability. In Eq. (13) we have the full sample size T, but to compute the sum we only consider the periods in which the return of the asset is lower than B. The computation of the semicovariance matrix \(\Sigma _{B,p}\), described in Markowitz (1959), is based on an analogous principle. This makes the Bai and Ng (2002) criterion unsuitable to be used, since we are not taking advantage of all the information included in the estimation window. Using T as parameter in Eq. (5) would be inappropriate, and so would be using the number of periods used to compute the numerator. Relying on MAP solves this issue.
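A toy computation makes the point concrete. The sketch below assumes the usual sample semivariance, in which squared shortfalls below B are summed and divided by the full T; the function name and the four-period return series are hypothetical.

```python
import numpy as np

def semivariance(returns, benchmark=0.0):
    """Sample semivariance with respect to `benchmark`, dividing by the full T."""
    shortfall = np.minimum(np.asarray(returns, dtype=float) - benchmark, 0.0)
    return np.mean(shortfall ** 2)

r = np.array([0.02, -0.01, 0.03, -0.03])      # toy return series, T = 4
sv = semivariance(r)                          # (0.01**2 + 0.03**2) / 4
n_below = int(np.sum(r < 0.0))                # only 2 of the 4 periods contribute
```

The divisor is T = 4, yet only `n_below` = 2 observations carry information about the downside, so neither number is an obvious candidate for the sample size in a criterion that depends on it.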

There is one issue that needs to be carefully considered when implementing MAP. The method tends to overestimate the number of components to retain when factors are correlated (a frequent situation in real datasets) and when the sample size is not big enough. In order to correct for this effect we take advantage of the Kaiser-Guttman criterion, first proposed by Guttman (1954) and then popularized by Kaiser (1960). Applied to a correlation matrix, this rule provides an upper bound for the number of components to be retained by stating that only those with an eigenvalue greater than 1 should be retained (Footnote 4). Like MAP, this rule does not rely on the sample size used for the estimation, and can therefore be applied in our setting. In short, we use MAP to determine the exact number of components to be retained, but restrict this choice to the components associated with eigenvalues greater than 1 in order to prevent overfactoring.
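One way to combine the two rules is sketched below. It reads the restriction as "minimize \( MAP_{k} \) over the values of k whose component has an eigenvalue greater than 1"; an alternative reading, capping the unconstrained MAP choice at the Kaiser-Guttman bound, is equally compatible with the description, so this is an assumption. The function name and the input numbers are hypothetical.

```python
import numpy as np

def select_d(map_values, eigenvalues):
    """Pick d minimising MAP_k among components with eigenvalue > 1.

    `map_values[k-1]` holds MAP_k; `eigenvalues` are those of the correlation matrix.
    """
    k_max = int(np.sum(np.asarray(eigenvalues) > 1.0))   # Kaiser-Guttman upper bound
    k_max = max(1, min(k_max, len(map_values)))          # keep at least one component
    return int(np.argmin(np.asarray(map_values)[:k_max])) + 1

# Made-up inputs for a 6-asset correlation matrix (eigenvalues sum to 6).
toy_map = [0.30, 0.12, 0.08, 0.05, 0.20]
toy_eig = [2.6, 1.4, 1.05, 0.45, 0.3, 0.2]
d = select_d(toy_map, toy_eig)
```

In this toy case the unconstrained minimum of MAP is at k = 4, but only three eigenvalues exceed 1, so the constrained choice is d = 3.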

2.2 Semicovariance matrix estimation

As optimization problems that use \(\Sigma _{B,p}\) are intractable (see Estrada, 2008), we need to use as input some matrix that approximates it. One such strategy is proposed by Estrada (2008), who devises a heuristic that involves computing the elements of the semicovariance matrix with respect to when the single assets, and not the portfolio as a whole, underperform the benchmark. This procedure yields an exogenous matrix, contrary to the exact semicovariance matrix \(\Sigma _{B,p}\), which is endogenous, i.e. its terms change every time the portfolio weights change. However, the approximation error in this matrix can be substantial, and this can seriously hurt the portfolio performance (see Cheremushkin, 2009; Rigamonti, 2020). We therefore resort to the smoothed semivariance (SSV) estimator introduced by Rigamonti et al. (2021), which provides an extremely precise approximation of the sample semicovariance matrix using a simple re-weighting scheme that simultaneously estimates the optimal portfolio weights and the corresponding semicovariance matrix \({\hat{\Sigma }}_{B}\). Depending on the value of a single parameter \(\theta \), the algorithm smooths the estimation with different intensity. This leads to the estimation of the sample semicovariance matrix when \(\theta \rightarrow 0\), of the sample covariance matrix when \(\theta \rightarrow \infty \), and a mixture of the two with an intermediate value. Since we are interested in the sample semicovariance matrix, we always employ the SSV estimator with \(\theta =10^{-5}\). While Rigamonti et al. (2021) define the SSV estimator for the minimum semivariance portfolio, we can also use it to estimate the semicovariance matrix of a mean-semivariance portfolio simply by computing the mean-semivariance weights in the re-weighting scheme.
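The difference between the exogenous heuristic and the endogenous semivariance can be seen in a short sketch. The code implements the asset-level heuristic attributed to Estrada (2008) in its commonly used form, \( \frac{1}{T}\sum _{t}\min (r_{i,t}-B,0)\min (r_{j,t}-B,0) \), and compares it with the exact portfolio semivariance. It does not implement the SSV estimator, whose re-weighting steps are not spelled out here. Names and data are hypothetical.

```python
import numpy as np

def heuristic_semicovariance(returns, benchmark=0.0):
    """Exogenous semicovariance heuristic based on asset-level shortfalls below B."""
    shortfall = np.minimum(returns - benchmark, 0.0)   # (T x N), zero when asset i beats B
    return shortfall.T @ shortfall / returns.shape[0]

def portfolio_semivariance(returns, weights, benchmark=0.0):
    """Exact (endogenous) semivariance of the portfolio return series."""
    port = returns @ weights
    return np.mean(np.minimum(port - benchmark, 0.0) ** 2)

rng = np.random.default_rng(3)
sim_returns = rng.normal(0.002, 0.03, size=(260, 4))   # illustrative data only
w = np.array([0.4, 0.3, 0.2, 0.1])                     # long-only, fully invested
s_heur = heuristic_semicovariance(sim_returns)
approx = w @ s_heur @ w                                # semivariance implied by the heuristic
exact = portfolio_semivariance(sim_returns, w)         # depends on w itself
```

For long-only weights the heuristic can only overstate the portfolio semivariance, because asset-level shortfalls are counted even in periods in which the portfolio as a whole stays above the benchmark.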

2.3 Optimal portfolios computation and evaluation

As we argued, using the criterion introduced by Bai and Ng (2002) to select a value for d as done in Chen and Yuan (2016) does not seem appropriate. But if we transform \({\hat{\Sigma }}_{B}\) into a downside correlation matrix we can use MAP (constrained by the upper bound set by the Kaiser-Guttman rule) to select an appropriate d and apply Eq. (7) or (10). The downside correlation matrix \( {\hat{P}}_{B} \) can be computed in a way analogous to how the correlation matrix is obtained from the covariance matrix:

\[ {\hat{P}}_{B} = {\hat{D}}^{-1/2}{\hat{\Sigma }}_{B}{\hat{D}}^{-1/2}, \tag{14} \]

where \( {\hat{D}}^{-1/2} \) is a diagonal matrix in which every ith diagonal element is equal to the inverse of the estimated downside deviation \( {\hat{\sigma }}_{B,i} \) of the asset i.
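The rescaling can be sketched as follows. The semicovariance input used here is a simple asset-level stand-in computed on simulated data, not the SSV estimate, and the function name is hypothetical.

```python
import numpy as np

def downside_correlation(semicov):
    """Rescale a semicovariance matrix by the downside deviations on its diagonal."""
    dd = np.sqrt(np.diag(semicov))            # downside deviations sigma_{B,i}
    return semicov / np.outer(dd, dd), dd

rng = np.random.default_rng(6)
sim_returns = rng.normal(0.002, 0.03, size=(260, 5))   # illustrative data only
shortfall = np.minimum(sim_returns, 0.0)               # stand-in estimate with B = 0
semicov = shortfall.T @ shortfall / sim_returns.shape[0]

p_b, dd = downside_correlation(semicov)
eigenvalues = np.linalg.eigvalsh(p_b)[::-1]            # descending, ready for MAP / Kaiser-Guttman
```

Since the diagonal of the result equals one, its eigenvalues sum to N, which is what makes the eigenvalue-greater-than-1 bound applicable.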

We now have all the necessary inputs to apply our strategy that mitigates the impact of estimation errors in the semicovariance matrix. All the closed-form solutions available for the mean-variance and minimum-variance problems can be applied exactly as they are when using the SSV estimate of the semicovariance matrix. All we have to do is to replace the input parameter, and we obtain the solution to mean-semivariance and minimum-semivariance problems. This is a big advantage over other notable approaches, like those devised by Harlow (1991), Markowitz et al. (1993) and de Athayde (2001), later improved by de Athayde (2003) and recently revisited by Ben Salah et al. (2018). The plug-in mean-semivariance and minimum-semivariance weights are therefore

and

respectively. The subspace mean-semivariance portfolio is computed using

where \( {\hat{\lambda }}_{k} \) and \({\hat{\nu }}_{k}\) are an eigenvalue and the corresponding eigenvector of the estimated downside correlation matrix \({\hat{P}}_{B}\), and the elements of \( {\hat{\mu }}^{*}_{B} \) correspond to \({\hat{\mu }}_{i}/{\hat{\sigma }}_{B,i}\) for each asset i. Thus, the asset’s weight is \( {\hat{w}}_{ms,cor,i} = {\hat{w}}^{*}_{ms,cor,i}/{\hat{\sigma }}_{B,i} \), in which \( {\hat{\sigma }}_{B,i} \) is the downside deviation of the asset i. Analogously, the subspace minimum-semivariance portfolio is given by

where for each asset i \( \epsilon _{B} \) corresponds to \(1/{\hat{\sigma }}_{B,i}\), and the asset’s weight can then be obtained as \( {\hat{w}}_{s,cor,i} = {\hat{w}}^{*}_{s,cor,i}/{\hat{\sigma }}_{B,i} \).

Since the Sharpe ratio measures risk across the full distribution of returns (by the standard deviation), it is not very suitable to evaluate the out-of-sample results in the framework of our research question. Instead, we use as main performance indicator the Sortino ratio (Sortino and Price, 1994):

\[ Sortino = \frac{R-B}{\sigma _{B}}, \tag{19} \]

where R and \( \sigma _{B} \) are respectively the mean and downside deviation of the portfolio returns.
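As a minimal sketch, the ratio can be computed from a series of out-of-sample portfolio returns as below, assuming the form \( (R-B)/\sigma _{B} \) with the downside deviation taken as the square root of the sample semivariance. The function name and the toy series are hypothetical.

```python
import numpy as np

def sortino_ratio(portfolio_returns, benchmark=0.0):
    """Mean return in excess of the benchmark divided by the downside deviation."""
    r = np.asarray(portfolio_returns, dtype=float)
    downside_dev = np.sqrt(np.mean(np.minimum(r - benchmark, 0.0) ** 2))
    return (r.mean() - benchmark) / downside_dev

toy_returns = np.array([0.02, -0.01, 0.03, -0.03])   # toy excess returns
ratio = sortino_ratio(toy_returns)                   # mean 0.0025 over sqrt(0.00025)
```

Unlike the Sharpe ratio, the denominator ignores the two positive observations entirely, so upside variability is not penalized.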

3 Principal component analysis on the semicovariance matrix

In this section we discuss the interpretation and implications of performing PCA on the semicovariance matrix. If the asset returns follow a symmetric distribution, focusing on the variance or on the semivariance makes no difference, and the investor should focus on the former, which is easier to estimate. However, in the presence of skewed return distributions the semivariance is a more suitable measure of risk, at least theoretically (see Rigamonti, 2020, for a more thorough discussion). The same reasoning holds when we perform PCA. When PCA is performed on the covariance matrix, the principal components explain the variance in the data. If instead we perform PCA on the semicovariance matrix, the principal components explain the semivariance in the data, that is, the variability only below a certain threshold.

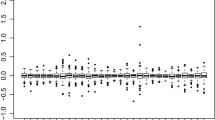

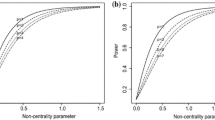

To illustrate this point, we generate 10 series of 500,000 returns using the moment-matching algorithm devised by Høyland et al. (2003), first with skewness equal to 0 for all the assets, and then equal to 1.3. The mean, covariance matrix and kurtosis are set in both cases equal to the respective sample moments of the returns of the Fama-French 10 industry portfolios from July 1969 to June 2021. Figure 1a and b show the loading plots obtained by performing PCA on the covariance and semicovariance matrix (with \( B=0 \)) estimated on the series with symmetrically distributed returns. As we can see, the two graphs look very similar, with only minor differences. As a result, analyzing the variability or the downside variability in the data is theoretically equivalent.

Let us now focus on Figure 2a and b. They show the loading plots obtained when performing PCA on the covariance and semicovariance matrix of the positively skewed returns. The two plots look completely different from each other, as the loadings in the first two loading vectors change significantly. This means that the information captured by the principal components is very different, which in turn shows that the information contained in the covariance and semicovariance matrix is different due to the skewness in the data. Moreover, Figs. 1a and 2a are extremely similar (as the covariance matrix cannot appreciate the different skewness), while Figs. 1b and 2b look entirely different.

Fig. 1 Loading plot with PCA performed on a) the covariance matrix (left) and b) the semicovariance matrix (right). For all variables skew = 0. The estimation is based on the simulated data in which the mean, covariance matrix and kurtosis are set to the sample moments of the returns of the Fama-French 10 industry portfolios from July 1969 to June 2021.

Fig. 2 Loading plot with PCA performed on a) the covariance matrix (left) and b) the semicovariance matrix (right). For all variables skew = 1.3. The estimation is based on the simulated data in which the mean, covariance matrix and kurtosis are set to the sample moments of the returns of the Fama-French 10 industry portfolios from July 1969 to June 2021.

4 Empirical application

We now provide an out-of-sample empirical application of our strategy. First, we want to check that using MAP improves over using the Bai and Ng (2002) criterion when the latter is applied to the semicovariance matrix as if we were working with the covariance matrix, as in Chen and Yuan (2016). We then compare our strategy to various competing optimal portfolios to see if we can actually improve the effectiveness of downside risk optimization. We always work with excess returns and set \( B = 0 \), so that we can have the benchmark equal to the risk-free rate, which has some desirable theoretical properties (see Hoechner et al., 2017). The covariance matrix is always estimated using the Ledoit and Wolf (2004) shrinkage estimator.

4.1 MAP vs Bai and Ng (2002) with downside risk

In order to compare the accuracy of MAP and the Bai and Ng (2002) criterion in selecting the optimal number of factors for the downside correlation and semicovariance matrix respectively, we compute the subspace minimum semivariance portfolio with both methods (Footnote 5). We also consider the unrestricted minimum semivariance portfolio to check how much we improve over it. We consider two different datasets of monthly returns: the 30 and 48 industry portfolios (value weighted) from the Kenneth R. French Data Library spanning from July 1969 (previous dates are not considered because of missing data in the 48 industry portfolios) to June 2021. We use a 180-month rolling window, so we obtain 444 out-of-sample returns.

The downside deviation achieved by the three strategies is reported in Table 1. With the 30 industry portfolios, using the Bai and Ng (2002) criterion does not lead to any improvement over the unrestricted portfolio, while our strategy that uses MAP achieves a lower downside deviation. With the 48 industry portfolios the Bai and Ng (2002) criterion improves over the unrestricted portfolio, but much less than our strategy. Overall, as expected, these results show that MAP is better suited in selecting the optimal number of factors for the subspace minimum semivariance portfolio compared to the Bai and Ng (2002) criterion. Investors who dislike negative deviations and employ MAP achieve a superior performance.

4.2 Comparison between portfolio optimization strategies

We now look at how our strategy performs compared to alternative portfolio optimization strategies. In addition to the downside deviation and the Sortino ratio, we also consider two other popular downside risk measures: the Conditional Value at Risk (CVaR) and the Omega-Sharpe ratio. The CVaR is based on the Value at Risk (VaR), to which it is considered preferable because, unlike the VaR, it is a coherent risk measure (see e.g. Sarykalin et al., 2008; Yamai & Yoshiba, 2002). The Omega-Sharpe ratio is a simple modification of the classical Omega ratio, which leads to the same portfolio ranking but has a more familiar form (Bacon, 2008). Moreover, we look at the mean excess return and the Sharpe ratio. Finally, we compute the turnover rate at each period t as in DeMiguel et al. (2009):

where i indicates a risky asset. We then report the average rate computed between all the periods (indicated as “TO” in the tables). An average turnover of 1 means that at each period, on average, an amount of assets equal to 100% of the value of the portfolio has to be traded.
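A per-period turnover computation can be sketched as follows. It assumes the common definition in which the newly chosen weights are compared with the previous weights after they have drifted with realized asset returns, and, as a simplification, a portfolio fully invested in the risky assets. The function name and the two-asset example are hypothetical.

```python
import numpy as np

def turnover(w_prev, w_new, asset_returns):
    """Sum of absolute trades needed to move from drifted weights to w_new."""
    grown = w_prev * (1.0 + asset_returns)    # position values after one period
    w_drift = grown / grown.sum()             # weights just before rebalancing (ASSUMPTION: fully invested)
    return np.abs(w_new - w_drift).sum()

w_prev = np.array([0.5, 0.5])
realized = np.array([0.10, -0.10])            # toy one-period asset returns
w_new = np.array([0.5, 0.5])                  # rebalance back to equal weights
to_t = turnover(w_prev, w_new, realized)
```

In this toy case the weights drift to 0.55 and 0.45, so restoring equal weights requires trading 10% of the portfolio value even though the target weights did not change.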

We aim to determine which strategy works best for a real investor. Therefore, it is important to work with individual assets in order to preserve the coskewness structure, which is even more important than the idiosyncratic skewness (Albuquerque, 2012). As long series of returns are not available for a very large number of individual stocks, we work with weekly data instead of monthly data, which allows for more out-of-sample returns within a given time frame. We obtain our data from the Alpha Vantage API, which provides historical adjusted prices of single stocks starting from November 1999 (Footnote 6). The risk-free rates were downloaded from the Kenneth R. French Data Library (Footnote 7). We consider three different datasets: the first includes 28 of the 30 companies that make up the Dow Jones Industrial Average, the second consists of 66 stocks included in the NASDAQ 100, and the third includes 82 components of the S&P 100 (Footnote 8). All series span from November 19, 1999, to June 25, 2021 (the last period for which the risk-free rate is available on the Kenneth R. French Data Library), for a total of 1128 observations. We use a 260-period rolling window for the estimation, obtaining 868 out-of-sample returns. To check the robustness of our results, we also consider an expanding window that starts with 260 periods, obtaining once again 868 out-of-sample returns.

We first compute the subspace mean-semivariance portfolio and compare it with the mean-variance and mean-semivariance portfolios, and with the mean-CVaR portfolio with the confidence level set to 95%. To compute the mean-CVaR portfolio, we rely on the historical return distribution and use the formulation proposed by Rockafellar and Uryasev (2000). We set the target return at 0.1%. The results are shown in Table 2. While our strategy leads to a higher downside deviation compared to the competing strategies, it achieves a much higher mean return and therefore it always significantly outperforms the other strategies in terms of Sortino ratio, which is the measure that we are targeting. Moreover, we also achieve a much higher Sharpe and Omega-Sharpe ratio. The results obtained with the expanding window, shown in Table 3, are completely in line with those obtained with the rolling window, confirming the superiority of our approach. Investors and hedgers who dislike negative deviations in the returns but like upside deviations (and for whom the Sortino ratio is therefore the crucial performance measure) achieve the best results with our strategy.

We now consider the subspace minimum semivariance portfolio, whose competitors are the minimum variance and minimum semivariance portfolios, both with and without short selling, and the long-only minimum CVaR portfolio. The motivation for computing the minimum semivariance portfolio when the goal is to achieve a high Sortino ratio is the same as for computing the minimum variance portfolio when the goal is to achieve a high Sharpe ratio: the estimation error in the sample mean is so large that ignoring the mean altogether improves the results (Jagannathan and Ma, 2003). The additional constraint of excluding short selling can dramatically improve the out-of-sample performance when minimizing risk, as shown by Jagannathan and Ma (2003). Kremer et al. (2018) analyze the performance of several investment strategies and find that the long-only minimum variance is the best risk minimization strategy. On the same grounds we impose the long-only constraint on the minimum CVaR portfolio, as targeting the CVaR without imposing additional constraints is known to perform poorly (Lim et al., 2011).

The results are reported in Table 4. Our strategy leads to a modest improvement over the unrestricted minimum semivariance portfolio with the Dow Jones Industrial Average, and to a large improvement with the other two datasets. Unsurprisingly, reducing the dimension of the problem is especially beneficial with large asset menus. We also improve in terms of the other two downside risk-based measures and achieve a higher Sharpe ratio, except, for the latter measure, with the Dow Jones Industrial Average. However, compared to the other four strategies, we only achieve a good Sortino and Omega-Sharpe ratio with the NASDAQ 100 dataset, while otherwise we always perform equally or worse in terms of every measure. Table 5 shows the results obtained with the expanding window. Our strategy now leads to a portfolio with a worse downside deviation and CVaR even compared to the unrestricted minimum semivariance portfolio with the two smaller datasets, but we achieve the best results in terms of both measures with the S&P 100. We always achieve a higher Sharpe, Omega-Sharpe and Sortino ratio than the unrestricted minimum variance and minimum semivariance portfolios, but we are not competitive compared to the other three strategies.

Overall, our strategy is very competitive in a setting where the investor with a mean-semivariance utility sets a target return. However, when \(\mu \) is ignored and the investor only minimizes risk, the simple solution of excluding short positions appears to be superior in both cases where the variance and the semivariance are minimized.

We conclude our analysis by computing the dynamics of the value of the different portfolios. Given that our strategy has a higher average turnover rate compared to most of the competitor strategies we considered, we compute the dynamics of wealth net of transaction costs. Following Frazzini et al. (2012), we set transaction costs equal to 10 basis points. Figure 3 shows the results for an investor that sets a target return. Our strategy greatly outperforms all the alternatives, especially when using an expanding estimation window. Figure 4 reports the value of the portfolios when risk is minimized without considering the mean return. Our strategy almost always significantly outperforms the unrestricted minimum variance and minimum semivariance portfolios. However, as expected from the results in Tables 4 and 5, we generally perform similarly or worse than the strategies that impose a long-only constraint.
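The wealth dynamics net of costs can be sketched as below, assuming proportional costs of 10 basis points charged on the amount traded in each period, so that wealth evolves as \( W_{t+1} = W_{t}(1+r_{p,t+1})(1-c\cdot TO_{t}) \). This recursion, the function name and the toy inputs are assumptions made for illustration.

```python
import numpy as np

def net_wealth_path(portfolio_returns, turnovers, cost=0.001, start=1.0):
    """Cumulative wealth when a proportional cost is paid on each period's turnover."""
    gross_growth = 1.0 + np.asarray(portfolio_returns, dtype=float)
    cost_drag = 1.0 - cost * np.asarray(turnovers, dtype=float)   # 10 bps per unit traded
    return start * np.cumprod(gross_growth * cost_drag)

toy_returns = np.array([0.01, -0.02, 0.015])     # toy portfolio returns
toy_turnover = np.array([0.5, 1.0, 0.8])         # toy per-period turnover rates
net = net_wealth_path(toy_returns, toy_turnover)
gross = np.cumprod(1.0 + toy_returns)
```

The gap between `gross` and `net` widens with every rebalancing, which is why a high-turnover strategy needs a sufficiently large gross advantage to remain attractive after costs.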

5 Conclusions

We propose a novel portfolio strategy for investors and portfolio managers who are particularly sensitive to downside deviations of asset returns. Efficient asset allocations based on downside risk are hard to achieve because it is especially difficult to estimate the necessary inputs. In this paper we propose for the first time a strategy that directly addresses this problem when estimating the semicovariance matrix of a set of assets. In doing so, we provide two additional original contributions by performing PCA on the semicovariance matrix and on the downside correlation matrix, and by using MAP to select the optimal number of components for asset allocation decisions. This idea is consistent with theoretical asset pricing models, such as the capital asset pricing model and the arbitrage pricing theory, in which asset returns are driven by systematic risks captured by factors.
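To make the component-selection step concrete, the following is a minimal sketch of Velicer's (1976) Minimum Average Partial criterion applied to a generic correlation matrix. It is not the code used in this paper: the function name `map_number_of_components` is hypothetical, the construction of the downside correlation matrix is not reproduced, and the Kaiser-Guttman upper bound discussed in the Notes is not applied. For each candidate number of components m, the first m principal components are partialled out and the average squared off-diagonal partial correlation is computed; the selected m is the one that minimizes this average.

```python
import numpy as np

def map_number_of_components(R):
    """Sketch of Velicer's MAP criterion on a correlation matrix R (p x p).

    Returns the selected number of components and the average squared
    partial correlations for m = 0, ..., p - 2.
    """
    R = np.asarray(R, dtype=float)
    p = R.shape[0]
    eigval, eigvec = np.linalg.eigh(R)            # eigenvalues in ascending order
    order = np.argsort(eigval)[::-1]              # reorder to descending
    eigval, eigvec = eigval[order], eigvec[:, order]
    # Component loadings; negative eigenvalues (if any) are clipped to zero.
    loadings = eigvec * np.sqrt(np.clip(eigval, 0.0, None))

    off_diag = ~np.eye(p, dtype=bool)
    avg_sq = np.full(p - 1, np.inf)
    avg_sq[0] = np.mean(R[off_diag] ** 2)         # m = 0: nothing partialled out
    for m in range(1, p - 1):
        A = loadings[:, :m]
        C = R - A @ A.T                           # residual matrix after m components
        d = np.diag(C)
        if np.any(d <= 1e-12):
            continue                              # degenerate residual: leave as inf
        partial = C / np.sqrt(np.outer(d, d))     # partial correlation matrix
        avg_sq[m] = np.mean(partial[off_diag] ** 2)
    return int(np.argmin(avg_sq)), avg_sq

# Toy input: six variables with a common pairwise correlation of 0.5.
R = np.full((6, 6), 0.5)
np.fill_diagonal(R, 1.0)
m_star, avg_sq = map_number_of_components(R)
print(m_star, avg_sq[:2])
```

In this toy example a single common component drives all correlations, so partialling it out sharply reduces the average squared partial correlation, while removing further components increases it again.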

The application of our strategy to a variety of datasets shows that it can considerably improve the out-of-sample performance over portfolios constructed using the unrestricted semicovariance matrix. Our suggested criterion, the MAP, greatly increases the Sortino ratio of the resulting portfolios. Overall, our strategy is very competitive in a setting where the investor sets a target return and performs mean-(semi)variance optimization. Given its relatively high turnover compared to most alternatives considered in this study, one direction for future research could be the refinement of our strategy in order to reduce its transaction costs. This would further increase its attractiveness for investors, hedgers and portfolio managers, in particular those who dislike negative deviations of asset returns.

Notes

We adopt this notation throughout the paper: a w with a * always indicates values that have to be divided by the standard deviation or downside deviation to obtain the portfolio weights.

See Eq. (7) in Peralta and Zareei (2016): \( w^{*}_{mv} = \frac{Re}{\mu '\Sigma ^{-1}\mu }\sum _{k=1}^d \lambda ^{-1}_{k}\nu _{k}\nu '_{k}\mu ^{*} \), obtained from Eq. (2) by replacing \(\Sigma ^{-1}\) and \(\mu \) in the numerator with the inverse of the correlation matrix \( \Omega ^{-1} = \sum _{k=1}^d \lambda ^{-1}_{k}\nu _{k}\nu '_{k} \) and \(\mu ^{*}\) respectively.
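The spectral form of the inverse in this note, a sum of terms \( \lambda ^{-1}_{k}\nu _{k}\nu '_{k} \) over the retained eigenpairs, can be checked numerically. The sketch below is illustrative only: the function name `spectral_inverse` and the example matrix are hypothetical. When d equals the full dimension the sum reproduces the ordinary inverse, while a smaller d yields a lower-rank approximation built from the leading components.

```python
import numpy as np

def spectral_inverse(Omega, d=None):
    """Sum of (1 / lambda_k) * v_k v_k' over the d largest eigenpairs of Omega."""
    Omega = np.asarray(Omega, dtype=float)
    eigval, eigvec = np.linalg.eigh(Omega)        # eigenvalues in ascending order
    order = np.argsort(eigval)[::-1]              # reorder to descending
    eigval, eigvec = eigval[order], eigvec[:, order]
    if d is None:
        d = Omega.shape[0]                        # keep every component
    V = eigvec[:, :d]
    # Dividing column k of V by lambda_k and multiplying by V' gives the sum.
    return (V / eigval[:d]) @ V.T

# Hypothetical 3 x 3 correlation matrix used only for the check.
Omega = np.array([[1.0, 0.3, 0.2],
                  [0.3, 1.0, 0.4],
                  [0.2, 0.4, 1.0]])
print(np.allclose(spectral_inverse(Omega), np.linalg.inv(Omega)))
print(np.linalg.matrix_rank(spectral_inverse(Omega, d=2)))
```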

The partial correlation coincides with the conditional correlation only if the two variables are jointly distributed following a multivariate normal, elliptical, multivariate hypergeometric, multivariate negative hypergeometric, multinomial or Dirichlet distribution (Baba et al., 2004).

While many scholars in the past have used it to determine the exact number of factors to retain, the Kaiser-Guttman rule is not meant (and does not work well) for this purpose, but only to set an upper bound (Gorsuch, 2015). This can limit overfactoring when applying some factor retention method, which in our case is MAP.

The sets of stocks included in each dataset were obtained by looking at the current components of the given stock index, and selecting those for which the prices were already available at the first time period. In this way we obtain the longest possible series for the largest possible dataset.

References

Albuquerque, R. (2012). Skewness in stock returns: Reconciling the evidence on firm versus aggregate returns. The Review of Financial Studies, 25(5), 1630–1673.

Amédée-Manesme, C., & Barthélémy, F. (2020). Proper use of the modified Sharpe ratios in performance measurement: Rearranging the Cornish Fisher expansion. Annals of Operations Research. forthcoming.

Baba, K., Shibata, R., & Sibuya, M. (2004). Partial correlation and conditional correlation as measures of conditional independence. Australian and New Zealand Journal of Statistics, 46(4), 657–664.

Bacon, C. (2008). Practical portfolio performance measurement and attribution (2nd ed.). John Wiley & Sons Ltd.

Bai, J., & Ng, S. (2002). Determining the number of factors in approximate factor models. Econometrica, 70(1), 191–221.

Ben Salah, H., Chaouch, M., Gannoun, A., de Peretti, C., & Abdelwahed, T. (2018). Mean and median-based nonparametric estimation of returns in mean-downside risk portfolio frontier. Annals of Operations Research, 262(2), 653–681.

Broadie, M. (1993). Computing efficient frontiers using estimated parameters. Annals of Operations Research, 45, 21–58.

Chen, J., & Yuan, M. (2016). Efficient portfolio selection in a large market. Journal of Financial Econometrics, 14(3), 496–524.

Chen, W., Gai, Y., & Gupta, P. (2018). Efficiency evaluation of fuzzy portfolio in different risk measures via DEA. Annals of Operations Research, 269(1–2), 103–127.

Chen, W., Wang, Y., & Mehlawat, M. (2018). A hybrid FA-SA algorithm for fuzzy portfolio selection with transaction costs. Annals of Operations Research, 269(1–2), 129–147.

Cheremushkin, S. V. (2009). Why D-CAPM is a big mistake? The incorrectness of the cosemivariance statistics. Available at SSRN: https://ssrn.com/abstract=1336169.

Chopra, V. K., & Ziemba, W. T. (1993). The effect of errors in means, variances, and covariances in optimal portfolio choice. Journal of Portfolio Management, 19(2), 6–11.

Claeys, P., & Vašíček, B. (2004). Measuring bilateral spillover and testing contagion on sovereign bond markets in Europe. Journal of Banking and Finance, 46(1), 151–165.

de Athayde, G. M. (2001). Building a mean-downside risk portfolio frontier. In F. A. Sortino & S. E. Satchell (Eds.), Managing Downside Risk in Financial Markets, chapter 11. Butterworth-Heinemann.

de Athayde, G. M. (2003). The mean-downside risk portfolio frontier: A non-parametric approach. In S. Satchell & A. Scowcroft (Eds.), Advances in Portfolio Construction and Implementation, chapter 13. Butterworth-Heinemann.

de Valk, S., da Mattos, D., & Ferreira, P. (2019). Nowcasting: An R package for predicting economic variables using dynamic factor models. The R Journal, 11(1), 230–244.

DeMiguel, V., Garlappi, L., Nogales, F. J., & Uppal, R. (2009). A generalized approach to portfolio optimization: Improving performance by constraining portfolio norms. Management Science, 55(5), 798–812.

Estrada, J. (2008). Mean-semivariance optimization: A heuristic approach. Journal of Applied Finance, 18(1), 57–72.

Frazzini, A., Israel, R., & Moskowitz, T. (2012). Trading costs of asset pricing anomalies. Available at SSRN: https://ssrn.com/abstract=2294498.

Geissel, S., Graf, H., Herbinger, J., & Seifried, F. T. (2022). Portfolio optimization with optimal expected utility risk measures. Annals of Operations Research, 309, 59–77.

Gorsuch, R. L. (2015). Factor Analysis. Routledge.

Grau-Carles, P., Doncel, L. M., & Sainz, J. (2019). Stability in mutual fund performance rankings: A new proposal. International Review of Economics and Finance, 61, 337–346.

Guttman, L. (1954). Some necessary conditions for common-factor analysis. Psychometrika, 19(2), 149–161.

Harlow, W. V. (1991). Asset allocation in a downside-risk framework. Financial Analysts Journal, 47(5), 28–40.

Hoechner, B., Reichling, P., & Schulze, G. (2017). Pitfalls of downside performance measures with arbitrary targets. International Review of Finance, 17(4), 597–610.

Høyland, K., Kaut, M., & Wallace, S. W. (2003). A heuristic for moment-matching scenario generation. Computational Optimization and Applications, 24(2–3), 169–185.

Jagannathan, R., & Ma, T. (2003). Risk reduction in large portfolios: Why imposing the wrong constraints helps. The Journal of Finance, 58(4), 1651–1684.

Jolliffe, I. T., & Cadima, J. (2016). Principal component analysis: A review and recent developments. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 374(2065).

Kaiser, H. F. (1960). The application of electronic computers to factor analysis. Educational and Psychological Measurement, 20(1), 141–151.

Kan, R., & Zhou, G. (2007). Optimal portfolio choice with parameter uncertainty. The Journal of Financial and Quantitative Analysis, 42(3), 621–656.

Kremer, P. J., Talmaciu, A., & Paterlini, S. (2018). Risk minimization in multi-factor portfolios: What is the best strategy? Annals of Operations Research, 266(1), 255–291.

Ledoit, O., & Wolf, M. (2004). A well-conditioned estimator for large-dimensional covariance matrices. Journal of Multivariate Analysis, 88(2), 365–411.

Lim, A. E., Shanthikumar, J. G., & Vahn, G. (2011). Conditional value-at-risk in portfolio optimization: Coherent but fragile. Operations Research Letters, 39(3), 163–171.

Markowitz, H. (1952). Portfolio selection. The Journal of Finance, 7(1), 77–91.

Markowitz, H. (1959). Portfolio selection. John Wiley and Sons.

Markowitz, H., Todd, P., Xu, G., & Yamane, Y. (1993). Computation of mean-semivariance efficient sets by the Critical Line Algorithm. Annals of Operations Research, 45, 307–317.

Michaud, R. O. (1989). The Markowitz optimization enigma: Is “optimized” optimal? Financial Analysts Journal, 45(1), 31–42.

Peralta, G., & Zareei, A. (2016). A network approach to portfolio selection. Journal of Empirical Finance, 38, 157–180.

Pla-Santamaria, D., & Bravo, M. (2013). Portfolio optimization based on downside risk: A mean-semivariance efficient frontier from Dow Jones blue chips. Annals of Operations Research, 205(1), 189–201.

Rigamonti, A. (2020). Mean-variance optimization is a good choice, but for other reasons than you might think. Risks, 8(1).

Rigamonti, A., Weissensteiner, A., Ferrari, D., & Paterlini, S. (2021). Smoothed semicovariance estimation. Available at SSRN: https://ssrn.com/abstract=3786023.

Rockafellar, R. T., & Uryasev, S. (2000). Optimization of Conditional Value-at-Risk. Journal of Risk, 2(3), 21–41.

Sarykalin, S., Serraino, G., & Uryasev, S. (2008). Value-at-Risk vs. Conditional Value-at-Risk in risk management and optimization. INFORMS Tutorials in Operations Research, pp. 270–294.

Sharma, A., Utz, S., & Mehra, A. (2017). Omega-CVaR portfolio optimization and its worst case analysis. OR Spectrum, 39, 505–539.

Sortino, F. A., & Prince, L. N. (1994). Performance measurement in a downside risk framework. The Journal of Investing, 3(3), 59–64.

Velicer, W. F. (1976). Determining the number of components from the matrix of partial correlations. Psychometrika, 41(3), 321–327.

Warne, R. T., & Larsen, R. (2014). Evaluating a proposed modification of the Guttman rule for determining the number of factors in an exploratory factor analysis. Psychological Test and Assessment Modeling, 56(1), 104–123.

Yamai, Y., & Yoshiba, T. (2002). On the validity of Value-at-Risk: Comparative analyses with expected shortfall. Monetary and Economic Studies, 20(1), 57–85.

Yue, W., Wang, Y., & Xuan, H. (2019). Fuzzy multi-objective portfolio model based on semi-variance-semi-absolute deviation risk measures. Soft Computing, 23(17), 8159–8179.

Acknowledgements

The authors are grateful to Alex Weissensteiner for his suggestions and comments. Lučivjanská acknowledges that this paper is the partial result of the project VEGA under Grant No 1/0526/20.

Funding

Open access funding provided by University of Liechtenstein.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Value net of transaction costs (10 bp) of the minimum variance portfolio with (Min V) and without (Min V lo) short selling, the long-only minimum CVaR portfolio (Min CVar lo), the minimum semivariance portfolio with (Min SV) and without (Min SV lo) short selling and the subspace minimum semivariance portfolio (Min SV MAP) from May 11, 2004, to June 25, 2021

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rigamonti, A., Lučivjanská, K. Mean-semivariance portfolio optimization using minimum average partial. Ann Oper Res 334, 185–203 (2024). https://doi.org/10.1007/s10479-022-04736-x

DOI: https://doi.org/10.1007/s10479-022-04736-x

Keywords

- Semivariance

- Principal component analysis

- Minimum average partial

- Parameter uncertainty

- Portfolio optimization

- Downside risk