Abstract

Studying texts constitutes a significant part of student learning in health professions education. Key to learning from text is the ability to effectively monitor one’s own cognitive performance and take appropriate regulatory steps for improvement. Inferential cues generated during a learning experience typically guide this monitoring process. It has been shown that interventions to assist learners in using comprehension cues improve their monitoring accuracy. One such intervention is having learners to complete a diagram. Little is known, however, about how learners use cues to shape their monitoring judgments. In addition, previous research has not examined the difference in cue use between categories of learners, such as good and poor monitors. This study explored the types and patterns of cues used by participants after being subjected to a diagram completion task prior to their prediction of performance (PoP). Participants’ thought processes were studied by means of a think-aloud method during diagram completion and the subsequent PoP. Results suggest that relying on comprehension-specific cues may lead to a better PoP. Poor monitors relied on multiple cue types and failed to use available cues appropriately. They gave more incorrect responses and made commission errors in the diagram, which likely led to their overconfidence. Good monitors, on the other hand, utilized cues that are predictive of learning from the diagram completion task and seemed to have relied on comprehension cues for their PoP. However, they tended to be cautious in their judgement, which probably made them underestimate themselves. These observations contribute to the current understanding of the use and effectiveness of diagram completion as a cue-prompt intervention and provide direction for future research in enhancing monitoring accuracy.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

A significant portion of a student’s learning relies on text-based materials. This includes tasks such as reading a case report to complete a clinical assignment, preparing for an upcoming exam and summarizing research. Comprehension, or the process of deeply understanding a text and integrating its ideas with prior knowledge, is crucial for learning (Kintsch, 1998). If students are given a text about the process of visual signal transfer through the optic nerve, the one with good comprehension ability should capture, recreate, and explain the visual signal transfer process described in the text. Research has shown that students with better comprehension skills tend to perform well academically (Akbasli et al., 2016). Metacomprehension, or the ability to evaluate one’s own comprehension, is important for regulating learning efforts (Wiley et al., 2005). This helps learners distinguish between texts that they have well understood and those that they have not. Accurate monitoring of learning is essential for regulating learning and performance, as it helps steer learning efforts towards areas that require further improvement (Dunlosky & Lipko, 2007; Nelson & Narens, 1990). For instance, being able to assess whether they have fully mastered a topic, if they need additional practice to improve their skills, or if they need more exposure to a particular specialty would allow students to allocate their time and efforts more efficiently (Thiede et al., 2003).

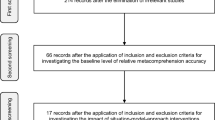

The monitoring accuracy of learners has been studied by comparing their perceived performance in a task with their actual performance (Redford et al., 2012; Thiede et al., 2009, 2022; van Loon et al., 2014). The results suggest that learners often find it difficult to judge their performance accurately. The findings from a recent meta-analysis of 94 empirical studies suggest that learners have a moderate ability to distinguish between texts that are comprehended well and those that are not (relative metacomprehension accuracy = 0.24) (Prinz et al., 2020a). In healthcare, physicians’ self-assessment capabilities and subsequent self-regulated learning efforts are closely linked to the quality of care they provide (Murdoch‐Eaton & Whittle, 2012; Sandars & Cleary, 2011). However, the evidence demonstrates a weak correlation between physicians’ self-rated assessments and standardized external assessments (Davis et al., 2006; Eva et al., 2004). Among health profession trainees, the correlation between self and external assessments of knowledge has been shown to range between 0.02 and 0.65 (Gordon, 1991). Thiede and colleagues (2010) believe that such disparity in self-monitoring occurs because learners fail to recognize and utilize the most informative mental indicators (known as ‘cues’) that guides monitoring decisions (Thiede et al., 2010). This emphasizes the need to enhance the monitoring accuracy of health professionals as early as during their education and training (de Bruin et al., 2017). Prompting students to utilize cues that are more indicative of their level of learning has proven to be an effective intervention for enhancing monitoring accuracy and thereby promoting self-regulated learning (Anderson & Thiede, 2008; de Bruin et al., 2011; Griffin et al., 2008; Redford et al., 2012; Thiede et al., 2010). One such approach is completing diagrams of causal relations described in a learning content (van Loon et al., 2014). It may assist learners in retrieving valid and relevant information from memory and, in the process, provides cues that can predict their level of comprehension. Recent research utilizing diagram completion as a cue-generating activity has demonstrated enhanced monitoring accuracy (Van de Pol et al., 2019; van Loon et al., 2014). Yet, it remains unclear from this research what specific cues learners utilize in making their monitoring decisions, and how the use of these cues varies among individuals. The current study aims to narrow this gap by studying the underlying mental processes while learners engage in a diagram completion task and subsequently evaluate their level of learning.

Improving monitoring accuracy

The quality of monitoring judgments—that is, the monitoring accuracy—is based on how closely learners can predict their actual performance in a forthcoming evaluation or assessment. This monitoring judgement is complex because learners lack direct access to the quality of their cognitive states (Koriat, 1997). When students learn a concept, they cannot directly check how well it is represented in their memory to estimate how well they will recall it in a future test. In such situations, they must base their judgment on ‘cues’ they gather while monitoring their learning. The precision of monitoring judgments therefore relies on the cues that learners prefer to use. Higher accuracy is obtained with cues tied to the mental representation of the text content, which determines the performance of subsequent test results (Prinz et al., 2020a). Utilizing cues representative of text comprehension such as “my ability to explain the text content” will result in better accuracy than using cues such as “my familiarity with the subject” or “easier processing” of the text content.

Monitoring accuracy can be expressed in several ways, including “absolute accuracy”, “relative accuracy”, or “bias index” (Burson et al., 2006; Nelson, 1996). Absolute accuracy is measured as the difference between the predicted (judgement) and actual performance scores. Therefore, it measures the judgment precision as an absolute value. If this difference is smaller (i.e., closer to zero), the absolute accuracy is higher. The bias index is another form of absolute accuracy that measures the extent to which an individual is overconfident or underconfident while making a prediction. The bias index can be positive or negative, indicating the direction and amount of discrepancy between judgement and performance. A positive value denotes overconfidence, whereas a negative value denotes underconfidence (Schraw, 2009). Relative accuracy indicates how well a student is able to discriminate what they have learned or performed on one task compared to another. This measure captures the extent to which learners can judge whether understanding or performance is higher, lower, or similar when comparing between tasks. In real world learning settings, this aspect of metacognitive monitoring is important when deciding what (parts of) tasks need priority or no longer need attention in further regulation of learning. The relative accuracy is usually represented as a gamma correlation, which indicates the strength of the association between judgment and test performance. Its values range from + 1 (perfect positive association) to − 1 (perfect negative association) and a value of ‘zero’ signifies the absence of any association between the judgment and the test performance. An elaboration of these monitoring accuracy measures and their relevance to learning contexts are outlined in Table 1.

According to the construction-integration model of text comprehension (Kintsch, 1998), a learner simultaneously constructs mental representation of texts at multiple levels. First, a surface level is built while the words are being encoded. Second, a text-based level is built as the words are broken down into propositions and connections are made between these propositions. Finally, a situation-model level is constructed as various textual components are linked to one another and to the learner's existing knowledge. The situation model exemplifies the learner's deeper understanding of the text. Several interventions have been developed based on the construction-integration model to aid students in utilizing more appropriate cues to enhance their monitoring accuracy. As a part of these experiments, learners were asked to read expository text materials. This was followed by interventions such as writing a summary of the text (Anderson & Thiede, 2008) (M Gamma = 0.64), preparing keywords that represent the gist of the text content (de Bruin et al., 2011) (M Gamma = 0.27), self-explaining the meaning of content in the text (Griffin et al., 2019) (M Gamma = 0.46), or preparing a visual representation of the connection between concepts in a text (Redford et al., 2012) (M Gamma = 0.41). These interventions helped learners to generate, access, or select situation-model cues (Prinz et al., 2020a). Following the cue-generation interventions, learners were asked to predict their performance (PoP) in a criterion test at the end of the experiment. Monitoring accuracy was then obtained by correlating their PoP with their actual test score in the criterion test. This monitoring accuracy was observed to be greater if the cue-generation task was performed after a delay than if it was performed immediately. The delayed cue generation tasks are assumed to help learners to disregard the surface and text-level mental representation of text content in favor of situation-level comprehension because the former have decayed from working memory after the delay (Anderson & Thiede, 2008; van Loon et al., 2014).

Diagram completion—a generative cue intervention

Diagrams provided alongside textual content are known to improve students’ metacomprehension (Butcher, 2006). Van Loon and colleagues (2014) and Van de Pol and colleagues (2019) investigated the usefulness of asking learners to complete a pre-structured empty diagram illustrating the cause-effect relationships between the concepts given in a text. In these experiments, learners attempted to portray the sequential steps of a causal chain from the studied text. To accomplish the diagram completion task, learners were required to identify the key concepts from the studied text and infer their causal relationships. Engaging in the diagram completion task is therefore expected to provide learners with more valid cues predictive of their learning because of the visual and comprehensive nature of the task. Van Loon and colleagues (2014) observed an increase in relative monitoring accuracy (M Gamma = 0.56) after a delayed completion of diagrams and reasoned that learners based their judgment on cues that indicated an actual understanding of the causal connections in the texts. Van de Pol et al., (2019, Exp. 1) observed a higher monitoring accuracy (M Gamma = 0.43) for causal relations among secondary school students. According to them, cues such as the number of correct causal relations, omissions in the diagrams, and the number of completed text boxes were most predictive of participants’ test performance.

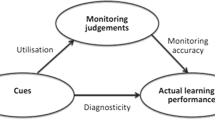

Learners use a variety of cues to monitor their learning and to make predictions of their future performance. The accuracy of their prediction depends upon the quality of these cues. This is known as cue diagnosticity. Thus, cue diagnosticity is the level of a cue’s ability to predict a future performance. However, learners might vary in utilizing these cues. Cue utilization, therefore, describes the degree to which these cues have been utilized when learners make their judgment (Van de Pol et al., 2019; van Loon et al., 2014). Van Loon et al. (2014) observed that the diagram cues such as ‘correct causal relationship’ and the ‘number of omitted text boxes’ were predictive of future test performance (cue diagnosticity). For instance, the correlation between the correct causal relationship and the test performance was highly positive (0.53), indicating that providing a correct causal relationship in the diagram was strongly related to having a higher test score. The participants in that study seemed to have utilized the same set of cues—‘correct causal relationship’ and ‘omission errors’—to support their PoP (cue utilization). They also observed that the correlation between omission errors and PoPs was negative (− 0.64) indicating that the participants provided lower PoPs when their diagram text boxes contained more unfilled text boxes. Similarly, a diagram completion intervention among secondary school students has revealed cues such as the number of correct causal relations, the number of omissions present in the diagram, and the number of boxes completed were significantly correlated with students’ test scores (cue diagnosticity) and the participants used similar sets of cues for their PoP (cue utilization) (Van de Pol et al., 2019). These observations suggest that diagram completion activity generates predictive cues.

Despite the fact that engaging in a diagram completion task provides learners with cues indicative of their level of comprehension, how learners select, interpret, and apply these cues may vary. Previous diagramming studies have tried to establish cue use in a more ad hoc and correlational manner (Van de Pol et al., 2019; van Loon et al., 2014). Although these studies were able to ascertain which cues obtained from the student’s diagrams were diagnostic, they were unable to identify which cues students focused on when making monitoring decisions. In addition, they did not examine the variation in cue use between categories of learners, such as good and poor monitors. For instance, diagramming studies reveal that correct text boxes or omitted text boxes are cues that are diagnostic. However, the accessibility and use of these cues may differ between good and poor monitors. This raises the question of how the monitoring practices of individuals differ, which potentially has implications for the design of adaptive interventions to improve their monitoring accuracy.

This is the first attempt at exploring a diagram intervention and its related issues of cue use in the health professions education context. In order to promote self-regulated learning, medical educators are incorporating instructional approaches to improve students' metacognitive skills. Given that accurate self-monitoring is a necessity for self-regulated learning, an in-depth understanding of the learner's monitoring process in such contexts is crucial, particularly in light of the literature indicating variation in self-assessment accuracy among physicians (Davis et al., 2006; Johnson et al., 2023). Therefore, gaining a deeper understanding of participants’ cue use through a more direct observation of cognitive processes during cue use might help our attempts to improve the monitoring accuracy of learners.

The present study

In order to guide the efforts to enhance the monitoring accuracy of students' comprehension of complex texts, it is important to widen our understanding of learners’ cue use patterns. The present study, therefore, set out to answering the following research questions:

-

(1)

What cues do learners utilize to base their learning monitoring on after a diagram completion activity illustrating causal relationships?

-

(2)

How does the pattern of cue use differ between good and poor monitors of learning?

A set of novel and complex expository texts were developed from an undergraduate optometry curriculum and piloted to choose six texts for the experiment. Participants read these texts sequentially, completed diagrams illustrating the causal relationships in the texts, and predicted how well they would perform on a test designed to assess their comprehension of cause-and-effect relationships. The difference between the predicted and actual performance scores were estimated to determine the monitoring accuracy (i.e., absolute accuracy) and used to categorize the participants as either good or poor monitors. They spoke aloud their thought processes while completing the diagram and during their PoP. These verbal accounts were subjected to a reflexive thematic analysis to determine the types and patterns of cues used by good and poor monitors. This study did not further explore absolute monitoring accuracy, other than employing it to classify learners, and only reported the relative monitoring accuracy to maintain consistency with previous diagramming research. Similarly, the study did not estimate the ‘cue diagnosticity’ or the ‘cue utilisation’ of participants as they were outside the scope of this work.

Methodology

Philosophical stance and researcher positioning

This study employs nested mixed methods research with a qualitative focus and a quantitative component. The researchers adopt a pragmatic philosophical stance in which they assert that the interweaving of qualitative and quantitative methodologies provides a logical grounding, methodological flexibility, and an in-depth understanding of the research questions. In this study, the monitoring accuracy of the participants following the cue-generative diagram completion intervention is estimated quantitatively, and the cue use that guides their judgement of learning is explored qualitatively. BN is an experienced educator in the health professions who is interested in studying the cognitive processes of student learning. ABHdB is a professor of educational psychology, focusing on understanding how to support students’ self-regulation of learning in higher education. PWMVG is an experimental psychologist who has a broad interest in cognitive functions and their role in learning. These backgrounds aided us in the co-construction of interpretations while resolving our research questions, which seek to translate cognitive and educational psychology works into medical education.

Study context and participants

The data was collected between September 2020 and April 2021 from 31 undergraduate optometry students (27 females, 4 males) at the College of Health Sciences, University of Buraimi, Sultanate of Oman. Students in their second professional year were chosen for this study on the assumption that they would have acquired the appropriate background knowledge to comprehend novel concepts of primary eye care. Participants were identified based on voluntary participation and verbal ability. Each participant's verbal proficiency was determined by requesting input from their respective course instructors. Thereafter potential candidates were contacted by email and invited to join. BN is a senior faculty member at the same organization who recruited the participants but has had no teaching or administrative interactions with them in the past or during the course of the study. The research and ethics committee at the College of Health Sciences, University of Buraimi (Ref No:0030/REC/2019), approved this study.

Study instrument: expository text materials

Expository texts provide detailed, organized information on a subject. Thirteen texts describing novel and complex concepts from the optometry curriculum were prepared so that the logical organization of the content led to serial or simultaneous causal relationships (see Appendix A & B for sample texts and diagrams). Each text had five distinct idea units in the causal chain. Hence, five text boxes were included in the diagram that followed the texts. All the text materials were prepared in a single paragraph format with a mean (± SD) word count of 198 ± 28.

A pilot study was carried out to select texts for the experiment. Thirteen second-year optometry students read three texts each, filled in appropriate diagrams, completed their PoP, and took a test. Participants read each text only once and were not allowed to review previously read texts. This ensured that at least three participants read each text. The difficulty level of eight expository texts was comparable, as measured by the mean (± SD) percentage performance on questions about the causal relationship (75.41 ± 11.95%). To balance the variety of text types, we selected three texts that demonstrated serial causal relationships and the remaining three demonstrated both serial and simultaneous causal relationships.

Prediction of performance (PoP)

The participants provided their PoP for their understanding of causal relationships for each text immediately after completing the diagram. The PoP was graded on a 6-point scale ranging from 0% (not at all confident) to 100% (fully confident), with the points on the scale corresponding to 0%, 20%, 40%, 60%, 80%, and 100%. When making their prediction, participants could see the text’s title on the screen, along with the question: How accurately do you believe you will be able to answer questions on the cause-and-effect relationship indicated in the text?

Pre-study instructions

Prior to the study, the researcher instructed all participants to familiarize them with the steps and procedures. A video with a brief explanation of the study protocol, expository texts, cause-and-effect relationships, diagram completion, the PoPs, the post-test format and, the think-aloud method (including a demonstration of the think-aloud) was created and shared with the participants a day before the study. This allowed them to become familiar with the study, but more crucially, it guaranteed that the participants received consistent and neutral instructions. The data collection was initially planned in a one-on-one, face-to-face mode. The Covid-19 pandemic restricted face-to-face interaction, and the experimental procedure was shifted to a Zoom videoconferencing platform combined with the QualtricsXM survey software. Study workflow and procedures were carefully incorporated into the QualtricsXM survey software to assure procedural consistency across participants. Electronic consent from the participants was obtained at the end of the pre-study instructions.

Procedure

On the day of the task, participants joined over Zoom. They were then asked to recall the important think-aloud instructions. Participants were then provided with a quick recap about the expository text, cause-effect relationships, diagram completion, think-aloud procedure, PoP, and the test at the end (Fig. 1). Next, they read two sample texts for practice: one with serial and the other with simultaneous causal relationships. Supported by the researcher, they completed the diagram for the first sample text. The researcher observed them thinking aloud while they were completing the second diagram and during the subsequent PoP. If they were silent for longer than five seconds, they were reminded to resume thinking aloud. Following this, the participants answered sample test questions on causal relationships. After this practice session, the participants were asked to take a five-minute break.

The researcher made sure participants were in a quiet environment before the task. Once they were clear about the study steps, they received an email link to access the original study tasks on QualtricsXM. They had to read six texts one after another at their own pace, but they could not reread passages (Fig. 1). They were also instructed not to take any notes. They were allowed to keep their video and audio turned off during text reading. However, to keep track of their progress, they were required to notify the researcher whenever they progressed from one text to the next. After reading all six paragraphs, they took a 5 to 7 minute break before starting to complete diagrams in the next stage. The order of diagrams followed the order of texts they had read. After completing all six diagrams, participants predicted their performance in the test for all six texts on a visual analogue scale from 0 to 100%. After the PoP, they moved to the final step, where they answered the test questions. They concurrently verbalized their thoughts during diagram completion and PoP.

The researcher was available online to guide participants throughout the task. To ensure a comfortable and undisturbed environment, participants were instructed to find a quiet place, mute their video, and engage in natural think-aloud verbalizations. The entire experiment was audio-recorded using a screen recording software, and digital voice recorders served as backup. After the think-aloud phase, retrospective questioning was conducted to clarify any unclear data (Noushad et al., 2023).

Diagram scoring

Each diagram consisted of five text boxes connected with arrow marks, and one of the boxes (either the first one or the last one) was already filled in for the participants (Appendix A & B). Responses in the diagram text boxes (cause or effect) were scored (Table 2), which is in line with the recommendations by van Loon et al. (2014) and van de Pol et al. (2020). The responses in the four filled-out text boxes in the completed diagrams were scored as ‘correct’ if a correct step in the causal chain was provided. If a box was filled out with only factual information, even if that information contained details from the reading text, it was scored as ‘incorrect’ because it did not represent a correct step in the causal chain. Furthermore, when the participants provided a response that did not come from the text, this was scored as a ‘commission error’. If no response was provided in a diagram textbox, this was scored as an ‘omission’ (Table 2).

Test response scoring

The scoring criteria outlined by van Loon et al. (2014) and van de Pol et al. (2020) were used as the standard for evaluating test responses. The number of valid causal connections given in the test response received a score between 0 and 4 (0 = no correct causal relations, 4 = all correct causal relations). Emphasis was given to comprehension. Therefore, responses were also scored as correct if they indicated that the participants understood what was implied or meant with the original text content, that is, a response indicating gist understanding.

Data analysis

Participant’s monitoring accuracy

Relative monitoring accuracy is a measure of the within-participant correspondence between confidence and accuracy. This refers to the extent to which someone knows which texts are better understood relative to another (Maki et al., 2005). If one text had a higher confidence level than the other, the performance level in the criterion test is also anticipated to be higher for that text. The number of concordances (where the performance prediction and criterion test score move in the same direction) and discordances (where the performance prediction and criterion test score move in the opposite direction) are the basis of determining the relative monitoring accuracy. Therefore, relative monitoring accuracy does not reflect the magnitude of difference between the monitoring judgment and performance (i.e., the degree of judgement precision). On the other hand, absolute accuracy captures the absolute correspondence between the judgment and test performance. A higher absolute accuracy (i.e., difference closer to zero) implies that a learner is capable of predicting his/her performance accurately in a task or assessment, which may indicate the appropriate use of predictive cues during the process of self-monitoring. In real-world learning settings, having an accurate judgment of current level of knowledge and how and where that differs from what is expected (e.g., on the test) is a crucial aspect of metacognitive monitoring and essential to adequately regulate further learning. This aspect of metacognitive monitoring corresponds to absolute accuracy. Thus, research in the diagramming paradigm and metacognition more generally emphasize the significance of measuring absolute monitoring accuracy (De Bruin & van Gog, 2012; Pijeira-Díaz et al., 2023). Consequently, we decided to use absolute accuracy to categorize the participants as good and poor monitors.

Categorizing good vs poor monitors

The purpose of this study is to identify the most diagnostic cues used by the participants. Hence, it is necessary to divide the participants into good and poor monitors. Based on their absolute accuracy scores, we divided the participants into three: the highest, the middle, and the lowest. This divided the total sample of 31 participants into the top one third (good monitors, n = 10) and the lower one third (poor monitors, n = 10), leaving 11 participants in the middle one third.

Reflexive thematic analysis (RTA)

Think-aloud data in this study is a qualitative account of participants’ thought processes verbalized during the diagram completion and in the subsequent PoP. It was analyzed using reflexive thematic analysis (RTA) developed by Braun and Clarke (Braun & Clarke, 2006, 2019, 2021), underpinning a constructivist epistemological approach. This means that the patterns of cue use in the monitoring process were meaningfully constructed by the research team’s thoughtful interpretation of participants’ verbalizations. An ‘experiential’ qualitative data analysis approach was adopted to organize, prioritize, and interpret participants’ verbalizations. An overarching deductive framework was employed to ensure the initial data coding and theme construction were relevant to the research question. At the same time, an inductive approach was constantly shadowed to remain flexible and open to best represent the information presented by the participants. For instance, from the think-aloud data of participants, only those utterances suggestive of cue generation and utilization were considered for coding. Coding utilized both semantic and latent approaches. Semantic coding focuses on the descriptive surface meaning of what participants have communicated. In contrast, latent coding goes beyond the surface level of the data attempting to extract hidden meanings and assumptions (Byrne, 2022). Examples of semantic codes included ‘information processing cue’, ‘readability cue’, and ‘overall low confidence in content correctness’. Examples of latent codes included ‘cautious underestimation’ and ‘falsely overconfident’. For instance, “the overall impression of diagram completion” could be a feeling of ease or difficulty of comprehension. However, it may not always be a reliable comprehension cue unless accompanied by evidence of comprehension, such as a diagram that accurately represents the causal relationship. These relationships were thoroughly examined during the latent coding stage. If there was no correlation between the “feeling of comprehension” and the completed diagram, it was coded as “falsely overconfident”. Likewise, the memory retrieval cues (such as fluency, memorizability, quantity, and quality of retrieval) were analyzed together with the quality of the comprehension cue. For example, if a participant completed a diagram that was incorrect but said that s/he could easily recall the information, it was coded as an “incomplete diagram with the commission and incorrect responses.” However, if the memory retrieval cue was related to the accurate representation of the causal chain in the diagram, it was coded as “comprehension dominant PoP.” These approaches reflected the theoretical assumptions of analysis (constructive and interpretive) by giving due consideration to the semantic and latent meanings of the information provided by the participants. Coding of the transcribed data (both semantic and latent) was carried out manually using ATLAS.ti, a qualitative data analysis program. The program then enabled the comparison of cue use between good and poor monitors by generating a code document table and a Sankey diagram.

The data analysis process followed the six-phase analysis approach recommended by Braun and Clarke (2006). Phase one is to get familiarized with the entire data. One researcher (BN) manually transcribed all participants' think-aloud recordings verbatim and anonymized the transcripts. This allowed the researcher to familiarize himself with the whole dataset beforehand. In the second phase, the pieces of information relevant to the research question, such as cues suggestive of learning monitoring, were identified and coded. In the first part of this phase, only semantic coding was carried out using a broad coding framework to recognize various types of cues utilized by the participants (Table 3). The two main cue categories identified for semantic coding were comprehension-based cues and heuristic cues. The comprehension-based cues also included memory-retrieval cues (Griffin et al., 2009). The cues that arise from processing or constructing a specific text representation were termed as comprehension cues. All other cues that do not relate to the construction of a specific text representation were considered heuristic cues (referred to as surface cues in this study). This approach to classifying cues was adapted because the diagram completion intervention was designed to make comprehension cues more salient or available to learners (Prinz et al., 2020b). Semantic coding was followed by latent coding to identify the underlying patterns of cue use and relevant interpretations concerning the research question. In phase three, the coded data were reviewed, re-reviewed, analyzed, and combined according to their shared meaning to generate prospective themes. Several codes were combined and collated into similar underlying concepts or features. A few other codes were moved to the miscellaneous category as they did not fit within the overall analysis. In phase four, a review and reiteration of codes and initial themes were done to narrow down to the four final themes. These themes were named and defined in phase five. Finally, the report was written in phase six.

Reflexive thematic analysis is considered a reflexive and interpretive account of researchers involved in analyzing qualitative data. This reflective and interpretive data analysis happens at the intersection of the dataset, theoretical assumptions underlying the analysis, and researchers' analytical skills and resources (Braun & Clarke, 2019). Following RTA's interpretive nature, the data analysis was primarily done by the first author (BN). The second (PWMVG) and third author (ABHdB) consistently contributed to sense-checking the ideas and exploring richer interpretations of the data. The researchers' regular team meetings facilitated this process.

Results

Monitoring accuracy

The relative monitoring accuracy in this study was observed to be M Gamma = 0.23 (SD = 0.57) following delayed diagram completion for the examination of causal relationships. This was estimated using the intra-individual gamma correlation between the participant’s PoP and their actual test score. Their overall absolute monitoring accuracy and for the higher, middle and lower one-third split categories are summarized in Table 4. Overall accuracy was estimated as the mean of the absolute accuracy scores of the 31 participants. The absolute monitoring accuracy (0.24) indicates a moderately higher score.

Good monitors vs poor monitors—diagram responses

Table 5 represents the diagram responses of the good and poor monitors. In the group of good monitors, the mean numbers of correct text box responses and correct causal relationships were significantly higher, whereas the poor monitors committed a greater number of commission errors (as determined by independent-samples t tests). That is, good monitors filled in a greater number of text boxes correctly, produced more causal connections, and committed fewer commission errors. The number of completed boxes seems to be a more diagnostic cue for students with high monitoring accuracy since they completed more boxes correctly. In contrast, students with low monitoring accuracy made more incorrect text-box completions (i.e., with commission errors of which they appeared unaware). The two groups did not differ in terms of omission errors (empty text boxes), and they appeared to have been utilized those cues without much difficulty.

Reflexive thematic analysis of think aloud data

This study identified several noticeable differences in cue use patterns between good and poor monitors. The key distinction was the reliance on cues that reflect their situation model level, which is in line with the causal relationship described in the learning text. Good monitors tend to rely more on such cues when making their predictions (PoPs). The diagram completion exercise is known to help reflect their understanding of these causal relationships. However, the inaccuracies in completing the diagram can mislead poor monitors. Another characteristic observed was an underestimation tendency among good monitors and, the negative influence of nondiagnostic cues on the monitoring accuracy of both good and poor monitors. Each theme described below is accompanied by quotes that are illustrative, succinct and representative of the patterns in the data. A visual representation of the cue use pattern is shown in the Sankey diagram of ATLAS.ti. (Fig. 2).

Theme 1—use of comprehension cues is the key to accurate monitoring

In this study, participants who predicted their performance based on comprehension-specific cues showed better accuracy in monitoring their performance (as indicated by the comprehension cue dominant node in Fig. 2). Completing diagrams act as self-tests to assess the situation-level model of comprehension. By filling in the text boxes in the diagram, participants would have been able to evaluate their comprehension level by reconstructing the causal relationship between idea units. (S1_P3025_PoP6).

The diagram is properly completed, and I remember the content clearly. What happens when the corneal tissue is reshaped, the corneal nerves will get damaged. So, it affects the communication between the cornea and the lacrimal gland, okay... it causes the lacrimal gland to produce less amount of tear, which then causes dryness... okay... 95%. (S1_P3025_PoP6).

Good monitors' prediction of their performance was more aligned with their completed diagram, indicating the potential influence of the diagram completion exercise in guiding learners in monitoring (as shown in Fig. 2, the ‘diagram corresponding to PoP’ node). Additionally, the diagram completion process was systematic and complete among good monitors (represented by the ‘DCP-Systematic and complete’ node in Fig. 2), whereas poor monitors had incomplete diagrams due to commission errors and incorrect responses (‘indicated by the 'DCP-Incomplete due to commission and incorrect responses’ node in Fig. 2). Text comprehension is valid when the idea units of the causal chain are restored in an appropriate and logical manner. Similarly, failure to remember idea units in the causal chain may prompt students to scale down their predictions (S1_P3028_PoP4).

As the age increases, some of the endothelial cells will die… (recollects the causal chain) …increasing the size or changing the shape. After that, there is one more thing that happens. Then, the water from the aqueous humor will enter the cornea, which causes corneal swelling. That is the reason for corneal oedema. But I don’t know what is happening in between. So... I will give 70%. Okay. (S1_P3028_PoP4).

Several text and diagram-specific comprehension cues were accessible to participants throughout the diagram completion task. The ability to recall the causal connections was the most important of these cues, and it likely prompted participants to use cues indicating their level of confidence. (S1_P3031_PoP1).

…when I was reading the paragraph, I know that the retinal pigment epithelium, which is the inner layer of the retina. And, also, how it supports the photoreceptor cell function. I know the relation between the age-related macular degeneration and when the person gets older, how it causes the photoreceptor cell death. And... how does the photoreceptor cell death happens too. So... I think...my level of confidence is 90 or 95? Am not sure. Okay...I will put it in between. So... between 90 & 95. Okay (S1_P3031_PoP1).

Reconstructing the causal chain by filling out text boxes and gaining comprehension-specific information from that experience could be a valid source of predictive cues. These cues include overall confidence in the completed diagram (S1 P3004 PoP5), the accuracy of the text box contents, and the boxes that could not be filled out (omission of text boxes). In situations where the text boxes are unfilled or if participants couldn’t recall the idea units in one or more text boxes, monitoring decisions were easier and more accurate.

…this one was easier ...I think I remembered most of the details and most of the essential information I needed to complete the diagram .... and I think it is well organized and easy to understand ...so am gonna put in the scale of .... let me see...btw 85 and 90. Yes. (S1_P3004_PoP5)

I will get myself...hmmm...25%...because I forgot more information...forgot more key words ...and, I don’t complete all the boxes in the diagram ...I will keep myself 25%. (S1_P3008_PoP2)

The evidence, therefore, indicates the value of the diagram completion activity as a means of enabling learners to gain access to valid cues of situation-level comprehension.

Theme 2—commission errors and incorrect responses in the diagram is associated with overconfidence (Poor interpretation of cue manifestation hinders monitoring accuracy)

The completion of the diagram's text boxes was found to serve as a valid cue for participants. However, occasionally, text boxes were filled with information outside the causal chain. If a box only contained factual information, even if it provided textual descriptions, the response was deemed incorrect because it did not reflect the correct steps in the causal chain. Similarly, if the response in the text box was different from the text, it was considered a commission error. Commission errors and incorrect responses suggest that the participant may have lacked true comprehension of the text at the situational level. However, their diagrams included a great deal of information that they believed to be accurate. This may result in an overestimation of learning judgments.

...the paragraph was easy. I understand the content... like...the relationship of cause and effect. Also, I think I did a good job when I filled that information in the diagram. So…95%...Okay. Done. (Note: Of the four text boxes, three were filled with commission errors, while only one had the correct information. The participant scored only 25% on the test against his PoP of 95%) (S1_3006_PoP6).

I could remember all the information, and I completed the diagram fully …I will put it 100 here. Okay. (Note: all the text boxes were filled in with incorrect information and scored ‘zero’ on the test against his PoP of 100%) (S1_3024_PoP5).

The above examples illustrate how inaccurate responses and commission errors can negatively impact the validity of monitoring judgments. Such mistakes in the diagram completion exercise can lead to overconfidence, resulting in poor monitoring accuracy. This trend was evident among poor monitors as illustrated in Fig. 2 (PoP-Falsely overconfident due to commission and incorrect responses node) and Table 5.

Theme 3—tendency for cautious underestimation by good monitors

Good monitors tended to evaluate their performance as somewhat lower than their actual level of perceived performance. When asked about this discrepancy, it was noted that participants did not want to take risk and ending up scoring themselves higher.

...this topic I could remember very well, so I will keep 80%. Because, I remember the information what I read from the paragraph...and...it is an easy topic. But still...I will keep it at 80. (S1_P3032_PoP5)

This diagram was not much of an issue. So, I will give 80. Because I could remember how drusen is formed, and the drusen slowly dysfunctions the RPE. This dysfunction of the RPE will weaken the photoreceptor cells and that causes their cell death. So, those are clear for me...I will give 80. Okay. (S1_P3028_PoP6)

The two participants' excerpts above show their tendency to underrate their performance. They successfully completed the diagram, recreated the causal chain, and earned perfect scores on the test. However, they tended to underrate themselves. This caution was only exhibited by good monitors, especially when they dealt with unfamiliar topics or keywords.

Theme—4: surface cues compromise the monitoring accuracy

If learners rely on cues aligned with characteristics relevant to the criterion test, their evaluations of learning are often accurate. However, surface cues do not indicate situation-level comprehension of a text. The time required to finish the diagram, the difficulty of reading the text, and difficult keywords or vocabulary are examples of surface cues that can affect learners' monitoring judgments. Such cues seemed to influence learners' monitoring judgments in this study.

...I am going to keep 70%. I think…I took more time to remember about what to write in the boxes of the diagram. Also, there were a lot of information that I have read and I might forget some information. So...I will keep 70%. (S1_P3032_PoP4)

In the above example, the participant used surface cues such as ‘the time required to complete the diagram’ and ‘the amount of textual information’ as their primary cue for predicting performance. It was evident that even experienced monitors were susceptible to surface cues. For instance, the following excerpt illustrates how the participant considered ‘prior knowledge and reading text comparison’ surface cues in addition to the comprehension cue. This influence of surface cue appears to have caused underestimation here (Fig. 2; PoP-Surface cue influenced node).

...this was a new topic for me. I have understood the mechanism of how the silver halide in the photochromic lenses helps to block the UV rays. If I get questions based on that, I will not say fully confident. Because, I don’t have a detailed understanding of this topic since it is new for me. So, let me give 75. The highlighted portions in paragraph I clearly understand. Since I understood the paragraph content better than other paragraphs, I will keep it at 80. Should I go to 75? I shouldn’t go to 85. So...I will keep it in the middle...80%. Done. (S1_P3023_PoP6; this participant perfectly filled in the diagram and scored 100% in the test)

Our data suggests that relying primarily on surface cues may lead to poor judgment accuracy. For instance, the participant below (S1_P3006_PoP5) relied primarily on the ‘readability cue’ and the ‘vocabulary cue’ to make a judgment, but the filled-in diagram contained incorrect responses and lacked causal connections. This participant received ‘zero’ score on the test, demonstrating a possible negative association with surface cues for monitoring (Fig. 2; ‘PoP-Surface cue dominant’ node).

...when I read the paragraph, there was some new information. But, when I read it, I read it easily. But I don’t remember a word in it. Coz I haven’t come across this word before. So, this new information I couldn’t remember. And, when I completed the diagram, it was easy, but the only problem is I cannot remember that one word. So, I will keep... 80…coz of that word. (S1_P3006_PoP5)

Based on our data, it seems that surface cues had a distracting effect on participants. This distraction can lead to a decrease in accuracy, especially when participants are overconfident due to commission errors or incorrect responses. Likewise, surface cues causes some underconfidence among good monitors.

Regarding cue use, poor monitors observed to have difficulty in utilizing obvious cues in predicting performance (as demonstrated by the node 'PoP-Inability to utilize obvious cues' in Fig. 2). For instance, despite realizing that they had poorly completed the diagram, they failed to consider such cues and rated themselves higher. Moreover, poor monitors considered multiple cue types than good monitors when making judgments (as indicated by the node ‘PoP-Few cue type use’ in Fig. 2).

Discussion

This study explored the cognitive processes underlying the use of mental cues for performance monitoring between good and poor monitors following a cue-generation intervention. Those that relied on comprehension cues (e.g., the overall impression of the situation model cue, causal relationship cue, omission cue, correctness of textbox content cue, etc.) displayed better monitoring accuracy. On the other hand, it is likely that commission errors and incorrect responses made by participants during diagram completion have led them to become overconfident. The influence of surface cues and the usage of multiple cues was evident in reducing the monitoring accuracy of participants, particularly the poor monitors. The outcomes of this study provide new insights into the possible factors contributing to variations in monitoring accuracy.

These observations advance our understanding of the beneficial effects of a delayed diagram completion intervention. The primary purpose of this study is not to estimate the effect of diagram completion activity on the monitoring accuracy of learners (which has been established by (Van de Pol et al., 2019; van Loon et al., 2014)), but to gain a deeper understanding of the underlying mechanism of cue utilization. Previously, Thiede and colleagues attempted to explore the types of cue use by asking learners to self-report retrospectively after completing a delayed summary generation task (Thiede et al., 2010). Prior studies utilizing the diagram completion paradigm attempted to empirically estimate cue utilization and cue diagnosticity based on participant responses in the completed diagram (Van de Pol et al., 2019; van Loon et al., 2014). This study is the first attempt to obtain a direct account of the cognitive processes underlying cue utilization following a cue generation task using a think-aloud technique. Another contribution of this research is that it attempted to introduce the diagram completion exercise as an instructional strategy for learning complicated texts containing cause-effect relationships in a real-world setting of health professions education. The cue-utilization framework clearly proposes the possible influence of non-diagnostic cue use among poor monitors (Koriat, 1997; Thiede et al., 2010). Therefore, a further focus of this study was to shed light on the pattern of cue use among good and poor monitors of learning.

The two types of monitoring accuracy measures are independent since they focus on different aspects of metacomprehension skills and hence differ in their implications for educators and learners in real-world learning environments. While absolute accuracy provides feedback about learners' monitoring of their actual level of knowledge or skill and helps identify gaps, relative accuracy provides a comparative measure and feedback to learners on whether they can identify differences in knowledge and skill across tasks or topics. The bias index complements the absolute accuracy measure because it informs if learners are over- or underconfident, which could be a sign of dysfunctional cue use, such as reliance on surface cues (Gutierrez de Blume, 2022). Therefore, these accuracy measures help teachers tailor their instructional approaches and provide targeted and constructive feedback to learners on their self-monitoring skills. Teachers could incorporate monitoring accuracy measures more commonly in their teaching and analyse with students how and why they are (in) accurate (Sargeant et al., 2008). This would require explicit support from teachers to make this instruction effective, but if done effectively it would support the development of metacognitive skills (Medina et al., 2017).

The distinction between the good and poor monitors observed in this study was their extent of reliance on the situation-level comprehension cues to base their monitoring decisions on (Thiede et al., 2010). The causal connection between the idea units in the text and the reinforcement of the same when they completed the diagram were the two sources of cues. Good monitors relied on comprehension-specific cues for their judgement (text comprehension, diagram comprehension, or both) compared to poor monitors. Further, the judgements of good monitors were congruent with the completion and correctness of the diagram, indicating that the good monitors may have accurately identified and utilised the situation-model cues from diagram completion (Thiede et al., 2003; Thiede et al., 2010; Van de Pol et al., 2019; van Loon et al., 2014). The diagram completion task acts as a self-test for memory retrieval, providing learners with cues representative of their actual comprehension. The utilization of cues is always dependent on the availability of cues. Those with a higher level of comprehension have access to comprehension-specific cues, but those with a lower level of comprehension do not. It suggests that identifying and interpreting the validity of available cues during monitoring requires additional assistance, particularly for those with impaired comprehension (Thiede et al., 2010; van de Pol et al., 2020).

Poor monitors frequently filled in the diagrams with information from outside the causal chain, that is, with incorrect responses and commission errors. That probably prompted them to base their judgement on an incorrect cue, i.e., the successful completion of the diagram. It suggests that the poor monitors failed to recognise the incorrect responses and commission errors they had incurred while completing the diagram (van de Pol et al., 2020). As indicated previously, such inaccuracies could have prompted the learners to score themselves higher (overconfidence) and compromised the monitoring accuracy (Dunlosky & Rawson, 2012; Dunlosky et al., 2005; Finn & Metcalfe, 2014). In another sense, the mere use of a diagnostic cue, such as ‘successful completion of the diagram’, does not ensure accurate monitoring, unless it accurately reflects situation-level comprehension. Poorly completed diagrams with commission errors or incorrect responses, can compromise the quality of the situation-model (van de Pol et al., 2020). In the current study, this may have led to a discrepancy between the completed diagram and the performance prediction made by the poor monitors. This underscores the significance of accurately interpreting available cues and effectively utilizing them in the judgement process. Good monitors, in contrast, made fewer commission errors and incorrect responses. Van de Pol and colleagues described the term ‘cue manifestation’ as the ability of learners to accurately judge the right interpretation of the cues available for them (e.g., correct causal relations, presence of commission errors, the relevance of omission errors, etc.) (van de Pol et al., 2020). Therefore, monitoring accuracy requires an ability to recognize diagnostic cues, properly infer them and appropriately utilize them to base their judgement. In general, the poor monitors struggled in this area compared to the good monitors.

Good monitors exhibited a tendency to judge themselves a bit lower than their actually perceived performance. This pattern corresponds to the trend observed in the literature, where high and low performers are reported to show biases in their self-judgement, but in different degrees in the absolute scores. Low performers are less accurate in their judgements as a result of a significant overestimation of their own performance. On the other hand, high performers are more accurate in their judgement and in general, underestimate themselves (Dunning et al., 2003; Foster et al., 2017; Saenz et al., 2017). Good performers are known to have better metacognitive awareness compared to poor performers. At times, their metacognitive awareness might not be convincingly enough to judge them correct but, prompting them to lower their level of confidence (Tirso et al., 2019).

Several surface cues relevant to the text and diagram completion task were used by the participants in this study (Table 3). It is evident from the findings that the use of such surface cues had a detrimental impact on the monitoring accuracy of both poor and good monitors, especially the poor monitors. It suggests that a greater dependence on surface cues could result in a decline of monitoring accuracy. This reconfirms the findings of Thiede and colleagues, who observed that those participants who reported using only surface cues had significantly lower gamma values (− 0.03) than those who reported using comprehension-based cues (0.71). In addition, they found that monitoring accuracy declines when participants report using a combination of surface cues and comprehension cues (− 0.33) (Thiede et al., 2010). It also supports an observation drawn from this study where poor monitors relied on multiple cues than good monitors who utilized fewer comprehension-specific cues for performance monitoring. Consideration of multiple cues for monitoring decisions by poor monitors indicates their inability to recognize valid cues, which would further complicate their judgment process. When people attempt to make predictions on the basis of imperfect probabilistic cues, they may be unknowingly biased by their accessible mental representation, which does not necessarily represent the essential information to reach a conclusion (Newell & Shanks, 2014). This emphasizes the need to address the effect of surface cues and the usage of multiple cues when devising interventions to improve monitoring accuracy.

In this work, we observed a lower relative monitoring accuracy (M gamma = 0.23) compared to previous diagram completion studies. This may not qualify as a direct comparison with previous diagram completion research due to the difference in primary research focus, incomparable sample size, and lack of a control group. However, it is worth noting that the unproductive effects of commission errors, incorrect responses, surface cues, and multiple cue use differentiated poor monitors from good monitors, suggesting an inter-individual difference in cue-diagnosticity (van de Pol et al., 2020). According to the cue-utilization framework, those cues that are most diagnostic of students’ text comprehension should be used and non-diagnostic cues should be ignored (Koriat, 1997). Although the diagram completion approach generates valid and diagnostic cues, it can also misguide learners if the diagram is completed using incorrect information. Ultimately, it is the learner's responsibility to accurately interpret and utilize these cues. That remains a challenge, especially for poor performers. Therefore, future interventions could focus on strategies that provide feedback to make students aware of their errors, so as to improve their monitoring accuracy (van de Pol et al., 2020).

The observations from this study have considerable implications for the self-monitoring of health professionals regarding their professional development. Medical trainees who engaged in self-monitoring achieved higher overall performance levels (Jamshidi et al., 2009; Netter et al., 2021), made more performance gains (Halim et al., 2021), showed a positive impact on patient outcomes (Holmboe et al., 2005), and demonstrated improved workplace behaviour (Hale et al., 2016) compared to those trainees who did not engage in self-monitoring. In the medical sciences, self-monitoring is often tested by measuring the accuracy of performance self-judgement in comparison to an expert evaluation or standardized clinical protocols, which have shown varied levels of agreement (Casswell et al., 2016; Moorthy et al., 2006; Quick et al., 2017). In general, novice trainees displayed less accuracy in self-monitoring than their experienced senior trainees or faculty experts (Casswell et al., 2016; de Blacam et al., 2012; Moorthy et al., 2006). These observations have incited the development of interventions to support novices with peer feedback (Evans et al., 2007), expert coaching (Wouda & van de Wiel, 2014), simulated self-training tools (Veaudor et al., 2018), and self-assessment instruments (Andersen et al., 2020). While these studies have shown better agreement in performance monitoring, they lack a deeper understanding of the factors that compromise the monitoring accuracy of learners, as well as the inter-individual and task-specific variations that could guide researchers to construct customized feedback or feedforward interventions to improve monitoring accuracy. In that regard, the observations from the present study provide an essential contribution.

While attempting to draw conclusions from this study, it is necessary to account for some shortcomings. We lacked a thorough understanding of the cue use patterns of learners without a control condition, which would have allowed us to better comprehend the effects of the diagram completion intervention. Nevertheless, the current study has revealed areas where future research should focus on to enhance the monitoring accuracy of learners. Second, cues used for the semantic coding of transcripts have been reported in the literature; however, analyzing the impact of individual cues on monitoring accuracy in the context of diagram completion was not within the scope of this study. It would be valuable for future research to investigate the predictive validity of these cues in authentic learning contexts. Third, we may debate the effectiveness of the think-aloud method for identifying concurrent cognitive processes. Reactivity (the higher cognitive load involved with reporting and thinking simultaneously) and non-veridicality (the non-concordance between actual thought processes and verbalizations) are the two key criticisms of this method. The key to avoiding reactivity and non-veridicality is to provide participants with thorough instructions and training prior to think-aloud. We have attempted to address these issues adequately.

Conclusion

This study aimed to expand the present understanding of the potential sources of errors that learners may make when monitoring their own performances, despite accurate prompts leading to reliable cues. As demonstrated here, the use of cues that reflect the situation-level mental text representation would assist learners in achieving greater monitoring accuracy. Equally significant is guiding learners away from the wrong interpretation of cues that result from commission errors and incorrect responses. The influence of surface cues is an additional obstacle to achieving reliable monitoring. These observations emphasize the importance of supporting learners to select and use diagnostic cues while monitoring their performance.

References

Akbasli, S., Sahin, M., & Yaykiran, Z. (2016). The effect of reading comprehension on the performance in science and mathematics. Journal of Education and Practice, 7(16), 108–121.

Andersen, S. A. W., Frendø, M., Guldager, M., & Sørensen, M. S. (2020). Understanding the effects of structured self-assessment in directed, self-regulated simulation-based training of mastoidectomy: A mixed methods study. Journal of Otology, 15(4), 117–123. https://doi.org/10.1016/j.joto.2019.12.003

Anderson, M. C., & Thiede, K. W. (2008). Why do delayed summaries improve metacomprehension accuracy? Acta Psychologica, 128(1), 110–118. https://doi.org/10.1016/j.actpsy.2007.10.006

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

Braun, V., & Clarke, V. (2019). Reflecting on reflexive thematic analysis. Qualitative Research in Sport, Exercise and Health, 11(4), 589–597. https://doi.org/10.1080/2159676X.2019.1628806

Braun, V., & Clarke, V. (2021). One size fits all? What counts as quality practice in (reflexive) thematic analysis? Qualitative Research in Psychology, 18(3), 328–352. https://doi.org/10.1080/14780887.2020.1769238

Burson, K. A., Larrick, R. P., & Klayman, J. (2006). Skilled or unskilled, but still unaware of it: How perceptions of difficulty drive miscalibration in relative comparisons. Journal of Personality and Social Psychology, 90(1), 60. https://doi.org/10.1037/0022-3514.90.1.60

Butcher, K. R. (2006). Learning from text with diagrams: Promoting mental model development and inference generation. Journal of Educational Psychology, 98(1), 182. https://doi.org/10.1037/0022-0663.98.1.182

Byrne, D. (2022). A worked example of Braun and Clarke’s approach to reflexive thematic analysis. Quality & Quantity, 56(3), 1391–1412. https://doi.org/10.1007/s11135-021-01182-y

Casswell, E. J., Salam, T., Sullivan, P. M., & Ezra, D. G. (2016). Ophthalmology trainees’ self-assessment of cataract surgery. British Journal of Ophthalmology, 100(6), 766–771. https://doi.org/10.1136/bjophthalmol-2015-307307

Davis, D. A., Mazmanian, P. E., Fordis, M., Van Harrison, R., Thorpe, K. E., & Perrier, L. (2006). Accuracy of physician self-assessment compared with observed measures of competence: A systematic review. JAMA, 296(9), 1094–1102. https://doi.org/10.1001/jama.296.9.1094

de Blacam, C., O’Keeffe, D. A., Nugent, E., Doherty, E., & Traynor, O. (2012). Are residents accurate in their assessments of their own surgical skills? The American Journal of Surgery, 204(5), 724–731. https://doi.org/10.1016/j.amjsurg.2012.03.003

de Bruin, A. B., Dunlosky, J., & Cavalcanti, R. B. (2017). Monitoring and regulation of learning in medical education: The need for predictive cues. Medical Education, 51(6), 575–584. https://doi.org/10.1111/medu.13267

de Bruin, A. B., Thiede, K. W., Camp, G., & Redford, J. (2011). Generating keywords improves metacomprehension and self-regulation in elementary and middle school children. Journal of Experimental Child Psychology, 109(3), 294–310. https://doi.org/10.1016/j.jecp.2011.02.005

De Bruin, A. B., & van Gog, T. (2012). Improving self-monitoring and self-regulation: From cognitive psychology to the classroom. Learning and Instruction, 22, 245–252.

Dunlosky, J., & Lipko, A. R. (2007). Metacomprehension: A brief history and how to improve its accuracy. Current Directions in Psychological Science, 16(4), 228–232. https://doi.org/10.1111/j.1467-8721.2007.00509.x

Dunlosky, J., & Rawson, K. A. (2012). Overconfidence produces underachievement: Inaccurate self evaluations undermine students’ learning and retention. Learning and Instruction, 22(4), 271–280.

Dunlosky, J., Rawson, K. A., & Middleton, E. L. (2005). What constrains the accuracy of metacomprehension judgments? Testing the transfer-appropriate-monitoring and accessibility hypotheses. Journal of Memory and Language, 52(4), 551–565.

Dunning, D., Johnson, K., Ehrlinger, J., & Kruger, J. (2003). Why people fail to recognize their own incompetence. Current Directions in Psychological Science, 12(3), 83–87. https://doi.org/10.1111/1467-8721.01235

Eva, K. W., Cunnington, J. P., Reiter, H. I., Keane, D. R., & Norman, G. R. (2004). How can I know what I don’t know? Poor self assessment in a well-defined domain. Advances in Health Sciences Education, 9, 211–224. https://doi.org/10.1023/B:AHSE.0000038209.65714.d4

Evans, A. W., Leeson, R. M., & Petrie, A. (2007). Reliability of peer and self-assessment scores compared with trainers’ scores following third molar surgery. Medical Education, 41(9), 866–872. https://doi.org/10.1111/j.1365-2923.2007.02819.x

Finn, B., & Metcalfe, J. (2014). Overconfidence in children’s multi-trial judgments of learning. Learning and Instruction, 32, 1–9.

Foster, N. L., Was, C. A., Dunlosky, J., & Isaacson, R. M. (2017). Even after thirteen class exams, students are still overconfident: the role of memory for past exam performance in student predictions. Metacognition and Learning, 12(1), 1–19. https://doi.org/10.1007/s11409-016-9158-6

Gordon, M. J. (1991). A review of the validity and accuracy of self-assessments in health professions training. Academic Medicine, 66(12), 762–769. https://doi.org/10.1097/00001888-199112000-00012

Griffin, T. D., Jee, B. D., & Wiley, J. (2009). The effects of domain knowledge on metacomprehension accuracy. Memory & Cognition, 37(7), 1001–1013.

Griffin, T. D., Wiley, J., & Thiede, K. W. (2008). Individual differences, rereading, and self-explanation: Concurrent processing and cue validity as constraints on metacomprehension accuracy. Memory & Cognition, 36(1), 93–103. https://doi.org/10.3758/MC.36.1.93

Griffin, T. D., Wiley, J., & Thiede, K. W. (2019). The effects of comprehension-test expectancies on metacomprehension accuracy. Journal of Experimental Psychology: Learning, Memory, and Cognition, 45(6), 1066. https://doi.org/10.1037/xlm0000634

Gutierrez de Blume, A. P. (2022). Calibrating calibration: A meta-analysis of learning strategy instruction interventions to improve metacognitive monitoring accuracy. Journal of Educational Psychology, 114(4), 681.

Hale, A. J., Nall, R. W., Mukamal, K. J., Libman, H., Smith, C. C., Sternberg, S. B., Kim, H. S., & Kriegel, G. (2016). The effects of resident peer-and self-chart review on outpatient laboratory result follow-up. Academic Medicine, 91(5), 717–722. https://doi.org/10.1097/ACM.0000000000000992

Halim, J., Jelley, J., Zhang, N., Ornstein, M., & Patel, B. (2021). The effect of verbal feedback, video feedback, and self-assessment on laparoscopic intracorporeal suturing skills in novices: A randomized trial. Surgical Endoscopy, 35, 3787–3795. https://doi.org/10.1007/s00464-020-07871-3

Holmboe, E. S., Prince, L., & Green, M. (2005). Teaching and improving quality of care in a primary care internal medicine residency clinic. Academic Medicine, 80(6), 571–577. https://doi.org/10.1097/00001888-200506000-00012

Jamshidi, R., LaMasters, T., Eisenberg, D., Duh, Q.-Y., & Curet, M. (2009). Video self-assessment augments development of videoscopic suturing skill. Journal of the American College of Surgeons, 209(5), 622–625. https://doi.org/10.1016/j.jamcollsurg.2009.07.024

Johnson, W. R., Durning, S. J., Allard, R. J., Barelski, A. M., & Artino, A. R., Jr. (2023). A sco** review of self-monitoring in graduate medical education. Medical Education. https://doi.org/10.1111/medu.15023

Kintsch, W. (1998). Comprehension: A paradigm for cognition. Cambridge University Press.

Koriat, A. (1997). Monitoring one’s own knowledge during study: A cue-utilization approach to judgments of learning. Journal of Experimental Psychology: General, 126(4), 349. https://doi.org/10.1037/0096-3445.126.4.349

Maki, R. H., Shields, M., Wheeler, A. E., & Zacchilli, T. L. (2005). Individual differences in absolute and relative metacomprehension accuracy. Journal of Educational Psychology, 97(4), 723.

Medina, M. S., Castleberry, A. N., & Persky, A. M. (2017). Strategies for improving learner metacognition in health professional education. American Journal of Pharmaceutical Education, 81(4), 78.

Moorthy, K., Munz, Y., Adams, S., Pandey, V., Darzi, A., & Hospital, I. C. S. M. S. (2006). Self-assessment of performance among surgical trainees during simulated procedures in a simulated operating theater. The American Journal of Surgery, 192(1), 114–118. https://doi.org/10.1016/j.amjsurg.2005.09.017

Murdoch-Eaton, D., & Whittle, S. (2012). Generic skills in medical education: Develo** the tools for successful lifelong learning. Medical Education, 46(1), 120–128.

Nelson, T. O. (1996). Gamma is a measure of the accuracy of predicting performance on one item relative to another item, not of the absolute performance on an individual item comments on Schraw (1995). Applied Cognitive Psychology, 10(3), 257–260.

Nelson, T. O., & Narens, L. (1990). Metamemory: A theoretical framework and new findings. In G. H. Bower (Ed.), Psychology of learning and motivation (Vol. 26, pp. 125–173). Elsevier.

Netter, A., Schmitt, A., Agostini, A., & Crochet, P. (2021). Video-based self-assessment enhances laparoscopic skills on a virtual reality simulator: A randomized controlled trial. Surgical Endoscopy, 35, 6679–6686. https://doi.org/10.1007/s00464-020-08170-7

Newell, B. R., & Shanks, D. R. (2014). Unconscious influences on decision making: A critical review. Behavioral and Brain Sciences, 37(1), 1–19. https://doi.org/10.1017/S0140525X12003214

Noushad, B., Van Gerven, P. W. M., & de Bruin, A. B. H. (2023). Twelve tips for applying the think-aloud method to capture cognitive processes. Medical Teacher. https://doi.org/10.1080/0142159X.2023.2289847

Pijeira-Díaz, H. J., van de Pol, J., Channa, F., & de Bruin, A. (2023). Scaffolding self-regulated learning from causal-relations texts: Diagramming and self-assessment to improve metacomprehension accuracy? Metacognition and Learning, 1–28.

Prinz, A., Golke, S., & Wittwer, J. (2020a). How accurately can learners discriminate their comprehension of texts? A comprehensive meta-analysis on relative metacomprehension accuracy and influencing factors. Educational Research Review, 31, 100358. https://doi.org/10.1016/j.edurev.2020.100358

Prinz, A., Golke, S., & Wittwer, J. (2020b). To what extent do situation-model-approach interventions improve relative metacomprehension accuracy? Meta-analytic insights. Educational Psychology Review, 32(4), 917–949.

Quick, J. A., Kudav, V., Doty, J., Crane, M., Bukoski, A. D., Bennett, B. J., & Barnes, S. L. (2017). Surgical resident technical skill self-evaluation: Increased precision with training progression. Journal of Surgical Research, 218, 144–149. https://doi.org/10.1016/j.jss.2017.05.070

Redford, J. S., Thiede, K. W., Wiley, J., & Griffin, T. D. (2012). Concept map** improves metacomprehension accuracy among 7th graders. Learning and Instruction, 22(4), 262–270. https://doi.org/10.1016/j.learninstruc.2011.10.007

Saenz, G. D., Geraci, L., Miller, T. M., & Tirso, R. (2017). Metacognition in the classroom: The association between students’ exam predictions and their desired grades. Consciousness and Cognition, 51, 125–139. https://doi.org/10.1016/j.concog.2017.03.002

Sandars, J., & Cleary, T. J. (2011). Self-regulation theory: applications to medical education: AMEE Guide No. 58. Medical Teacher, 33(11), 875–886.

Sargeant, J., Mann, K., van der Vleuten, C., & Metsemakers, J. (2008). “Directed” self-assessment: Practice and feedback within a social context. Journal of Continuing Education in the Health Professions, 28(1), 47–54.

Schraw, G. (2009). A conceptual analysis of five measures of metacognitive monitoring. Metacognition and Learning, 4, 33–45. https://doi.org/10.1007/s11409-008-9031-3

Thiede, K. W., Anderson, M., & Therriault, D. (2003). Accuracy of metacognitive monitoring affects learning of texts. Journal of Educational Psychology, 95(1), 66.

Thiede, K. W., Griffin, T. D., Wiley, J., & Anderson, M. C. (2010). Poor metacomprehension accuracy as a result of inappropriate cue use. Discourse Processes, 47(4), 331–362. https://doi.org/10.1080/01638530902959927

Thiede, K. W., Griffin, T. D., Wiley, J., & Redford, J. S. (2009). Metacognitive monitoring during and after reading. Handbook of Metacognition in Education, 85, 106.

Thiede, K. W., Wright, K. L., Hagenah, S., Wenner, J., Abbott, J., & Arechiga, A. (2022). Drawing to improve metacomprehension accuracy. Learning and Instruction, 77, 101541. https://doi.org/10.1016/j.learninstruc.2021.101541

Tirso, R., Geraci, L., & Saenz, G. D. (2019). Examining underconfidence among high-performing students: A test of the false consensus hypothesis. Journal of Applied Research in Memory and Cognition, 8(2), 154–165. https://doi.org/10.1016/j.jarmac.2019.04.003

Van de Pol, J., De Bruin, A. B., van Loon, M. H., & Van Gog, T. (2019). Students’ and teachers’ monitoring and regulation of students’ text comprehension: Effects of comprehension cue availability. Contemporary Educational Psychology, 56, 236–249.

van de Pol, J., van Loon, M., van Gog, T., Braumann, S., & de Bruin, A. (2020). Map** and drawing to improve students’ and teachers’ monitoring and regulation of students’ learning from text: Current findings and future directions. Educational Psychology Review, 32(4), 951–977. https://doi.org/10.1007/s10648-020-09560-y

van Loon, M. H., de Bruin, A. B., van Gog, T., van Merriënboer, J. J., & Dunlosky, J. (2014). Can students evaluate their understanding of cause-and-effect relations? The effects of diagram completion on monitoring accuracy. Acta Psychologica, 151, 143–154.

Veaudor, M., Gérinière, L., Souquet, P.-J., Druette, L., Martin, X., Vergnon, J.-M., & Couraud, S. (2018). High-fidelity simulation self-training enables novice bronchoscopists to acquire basic bronchoscopy skills comparable to their moderately and highly experienced counterparts. BMC Medical Education, 18(1), 1–8. https://doi.org/10.1186/s12909-018-1304-1

Wiley, J., Griffin, T. D., & Thiede, K. W. (2005). Putting the comprehension in metacomprehension. The Journal of General Psychology, 132(4), 408–428.

Wouda, J. C., & van de Wiel, H. B. (2014). The effects of self-assessment and supervisor feedback on residents’ patient-education competency using videoed outpatient consultations. Patient Education and Counseling, 97(1), 59–66. https://doi.org/10.1016/j.pec.2014.05.023

Acknowledgements

We would like to thank every participant who was willing to spend a considerable amount of time online for this study, especially during the Covid-19 restrictions. We are also grateful to the dean and faculty colleagues at the College of Health Sciences, University of Buraimi, Sultanate of Oman for their unwavering support.

Funding