Abstract

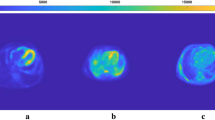

Deep neural networks (DNNs) have already impacted the field of medicine in data analysis, classification, and image processing. Unfortunately, their performance is drastically reduced when datasets are scarce by nature (e.g., rare diseases or early-research data). In such scenarios, DNNs generalize poorly and often produce highly biased estimates and silent failures. Moreover, deterministic systems cannot provide epistemic uncertainty, a key component in assessing the model’s reliability. In this work, we developed a probabilistic classification system as a framework for addressing these issues. Specifically, we implemented a Bayesian convolutional neural network (BCNN) for the classification of cardiac amyloidosis (CA) subtypes. We prepared four different CNNs: base-deterministic, dropout-deterministic, dropout-Bayesian, and Bayesian. We then trained them on a dataset of 1107 PET images from 47 CA and control patients (data scarcity scenario). The Bayesian model achieved performance (78.28 (1.99) % test accuracy) comparable to that of the base-deterministic, dropout-deterministic, and dropout-Bayesian models, while showing strongly increased “Out of Distribution” input detection (validation-test accuracy mismatch reduction). Additionally, both the dropout-Bayesian and the Bayesian models enriched the classification with confidence estimates, while reducing the shortcomings of the dropout-deterministic and base-deterministic approaches. This in turn increased the model’s reliability and provided much-needed insight into the network’s estimates. The obtained results suggest that a Bayesian CNN can be a promising solution for addressing the challenges posed by data scarcity in medical imaging classification tasks.

Introduction

Artificial intelligence (AI), which is already having an impact in the field of medicine, will play an even larger role during the next few years [1]. Modern deep neural networks (DNNs) have produced remarkable achievements in data analysis, classification, and image processing. DNNs have increasingly drawn the attention of experts, as applying them to medical data can improve the precision of medical applications. If large datasets are available, neural networks can interpret very complex phenomena more effectively than traditional statistical methods. Unfortunately, their performance is directly correlated with the amount of available data [1]. This is a non-trivial problem where datasets are scarce by nature (e.g., rare diseases or unusual/early-research data), data aggregation is not possible, and/or augmentation capabilities are limited. Deep learning models are also vulnerable to overfitting, especially when constrained by small datasets. This in turn negatively impacts their capacity for generalization [2]. This is an important challenge in situations where dramatic outcomes can result from silent failures (i.e., the network confidently failing to classify data), such as in medical diagnosis [3]. Additionally, deterministic networks provide no epistemic uncertainty, which is particularly significant when training data are lacking, in either classification or regression use cases. Many solutions, such as dropout (during training) [4], data augmentation [5], and k-fold cross validation [6], have been proposed in the literature to counteract overfitting and correctly assess performance. Despite these efforts, problems regarding the interpretability of the output and the related uncertainty still exist. To mitigate these issues, the Bayesian paradigm can be viewed as a systematic framework for analyzing and training uncertainty-aware neural networks, with good learning capabilities from small datasets and resistance to overfitting [7].
In particular, Bayesian neural networks (BNNs) are a viable framework for using deep learning in contexts where the system must be able to alert the user when it fails to generalize [8]. Many studies have investigated the use of the Bayesian paradigm in medicine for classification tasks. Some applications concern the classification of histopathological images [9], oral cancer images [10], and resting state functional magnetic resonance imaging (rs-fMRI) images for Parkinson’s disease [11]. More applications of the Bayesian paradigm are available in the thorough review by Abdullah et al. [12].

Bayesian Neural Networks

The concept behind BNNs comes from the application of the Bayesian paradigm to artificial neural networks (ANNs) in order to render them probabilistic systems. The Bayesian approach to probability (in contrast to the frequentist approach) stems from the meaning behind Bayes’ rule, shown in Eq. 1:

\[P(H|D) = \frac{P(D|H)\,P(H)}{P(D)} \tag{1}\]

where \(P(H|D)\) is called the posterior, \(P(D|H)\) the likelihood, \(P(H)\) the prior, and \(P(D)\) the evidence. \(P(D)\) is obtained by integrating over all the possible parameters in order to normalize the posterior. This step is intractable for practical models and is tackled through various approaches (see also the predictive posterior later). \(H\) and \(D\) respectively represent the hypothesis and the available data. Applying Bayes’ formula to train a predictor can be thought of as learning from data \(D\) [8]. One possible description of a BNN is that of a stochastic neural network trained using Bayesian inference [8]. The design and implementation of a BNN comprises two steps: the definition of the network architecture and the selection of a stochastic model (in terms of a prior distribution on the network’s parameters and/or prior confidence in the predictive capabilities) [8]. The stochastic part of the model parametrization can be viewed as the formation of the hypothesis \(H\) [8]. Eq. 1 also gives a more complete picture of the probabilistic point of view on the training process. Initially, the prior is defined during the network’s construction. We then compute the likelihood (how well the model fits the data) through some form of probabilistic alternative to forward and back-propagation. Lastly, we normalize the result by the evidence (all the possible models fitting the data) in order to update our prior belief with the newfound information and construct the new posterior. This process is repeated over multiple epochs, as for classic neural networks, until performance criteria are met. Epistemic uncertainty is included in the posterior [8] during training and at inference. More precisely, once the model is trained, an approximate form of the predictive posterior, whose analytical form is shown in Eq. 2, is used at inference time.

\[P(\hat{y}|\hat{x},D) = \int P(\hat{y}|\hat{x},\theta )\,P(\theta |D)\,d\theta \tag{2}\]

where \(P(\hat{y}|\hat{x},D)\) is the probability of a new output \(\hat{y}\) for a new input \(\hat{x}\) given the known data, \(P(\hat{y}|\hat{x},\theta )\) is the probability of that output given the model parameters \(\theta\), and \(P(\theta |D)\) accounts for the effect the known data have on the parameters. This means that, with the same stochastic model and equal inputs, different outputs can be given, cumulatively providing an epistemic uncertainty profile. True Bayesian inference for large neural networks is intractable (integrals over millions of parameters for the evidence and the predictive posterior), so alternative methods, such as variational inference [13], Markov Chain Monte Carlo [14], and dropout Bayesian approximation [15], are used to render these models computationally feasible. A deeper treatment of BNNs is beyond the scope of this article, but good resources are available in the literature, such as Jospin et al. [8] and Mullachery et al. [16].

[…] \(\sim\)1 s for the CNN, DropCNN, and DropBCNN, while the BCNN required \(\sim\)6.5 s (reducible to 4.5 s by only taking one Monte Carlo estimate of the gradient). Classifying an image required \(\sim\)1.9 ms for the CNN, DropCNN, and DropBCNN and \(\sim\)9.5 ms for the BCNN. Note that, to obtain a useful classification with the corresponding uncertainty profiles, the probabilistic networks need to classify an image n times and then vote by majority, so the time for the DropBCNN and BCNN should be multiplied by n (n = 100 in our case).
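
The repeated classification and majority vote described above can be illustrated with a toy model. The following is a minimal sketch, not the implementation used in this study: `POSTERIOR`, `stochastic_forward`, and `mc_predict` are hypothetical names, and the Gaussian weight posterior and the scalar input are assumptions made purely for illustration. Only the number of passes (n = 100) and the majority vote come from the text.

```python
import random
from collections import Counter

CLASSES = ("AL", "ATTR", "CTRL")

# Assumed toy posterior P(theta | D): one Gaussian (mean, std) per class weight.
POSTERIOR = {"AL": (1.0, 0.3), "ATTR": (0.6, 0.3), "CTRL": (0.2, 0.3)}


def stochastic_forward(x, rng):
    """One forward pass with weights freshly sampled from the toy posterior."""
    scores = {c: rng.gauss(mu, sigma) * x for c, (mu, sigma) in POSTERIOR.items()}
    return max(scores, key=scores.get)


def mc_predict(x, n=100, seed=0):
    """Approximate the predictive posterior with n sampled passes and a majority vote."""
    rng = random.Random(seed)
    votes = Counter(stochastic_forward(x, rng) for _ in range(n))
    label, _ = votes.most_common(1)[0]
    # The share of votes per class acts as a simple uncertainty profile.
    fractions = {c: votes[c] / n for c in CLASSES}
    return label, fractions


label, fractions = mc_predict(x=1.0)
print(label, fractions)
```

Because each pass draws new weights, the same input can receive different labels across passes; the spread of the vote fractions is what carries the epistemic uncertainty in this sketch.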

Results

Figure 3 shows a representative example of the learning curves for the CNN, DropCNN (dropout layers inactive at evaluation, dropout probability of 25% and 50%), DropBCNN (dropout layers active at evaluation, dropout probability of 25% and 50%), and the BCNN. Table 2 reports the accuracy of the four tested networks on the training, validation, and test sets. Moreover, the validation-test mismatch is provided as a measure of the capacity of the network to detect out-of-distribution (OOD) data [3). Moreover, although the learning curves for the BCNN seem to provide a worse picture compared to the other models, the BCNN behavior is actually the desired one for avoiding silent failures in deep learning systems. This is visible in Table 2, which shows a strong reduction in validation-test accuracy mismatch (\(\sim\)7%, p-value < 0.05) when going from the DropBCNN (p = 0.5) (Bayesian approximation) to the BCNN, and an even stronger reduction compared to the deterministic model (\(\sim\)15%, p-value < 0.05). This indicates the improved capability of the BCNN to learn correct features and to spot OOD inputs, with the validation set drawn from the same patients as the training set. In addition, the BCNN achieves test-set accuracy comparable to that of the deterministic CNN (see Table 2). The Bayesian models are also able to provide a measure of epistemic uncertainty, as seen in Table 6 and Fig. 4. This information, not available when using deterministic networks, is invaluable for assessing the reliability of the prediction, especially in medicine. Uncertainty profiles can also be used to improve performance, give the model the capability to resist adversarial attacks [32], refuse the classification under a certain threshold to avoid failures, and guide the acquisition of more data towards where the epistemic uncertainty is the highest.
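
Refusing a classification under a confidence threshold can be sketched as follows. This is an illustrative example under stated assumptions, not the procedure used in this study: `classify_or_refuse` is a hypothetical helper, confidence is assumed to be the share of Monte Carlo votes received by the winning class, and the threshold of 0.7 and the vote fractions are made-up values.

```python
def classify_or_refuse(vote_fractions, threshold=0.7):
    """Return (label, confidence), with label set to None when confidence is too low."""
    label = max(vote_fractions, key=vote_fractions.get)
    confidence = vote_fractions[label]
    if confidence < threshold:
        # Below the threshold the sample is handed back instead of being classified.
        return None, confidence
    return label, confidence


# Hypothetical vote fractions from repeated stochastic forward passes.
confident = {"AL": 0.93, "ATTR": 0.05, "CTRL": 0.02}
ambiguous = {"AL": 0.10, "ATTR": 0.48, "CTRL": 0.42}

print(classify_or_refuse(confident))  # ('AL', 0.93)
print(classify_or_refuse(ambiguous))  # (None, 0.48)
```

In a clinical setting, a refused sample would typically be routed to a human reader; the appropriate threshold is a design choice that trades coverage against the risk of silent failures.
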
Both the DropBCNN and BCNN are able to provide uncertainty metrics, but as Table 6 shows, the fully Bayesian model displays a greater discrepancy both between “Correct” and “Incorrect” confidence (\(\sim\)7% more compared to the best DropBCNN with p = 0.5, p-value < 0.05) and between “AL” and “CTRL & ATTR” (\(\sim\)7% more compared to the best DropBCNN with p = 0.5, p-value < 0.05). This is in line with the confusion matrices in Fig. 5 and the precision, recall, and F1-score metrics, which show better prediction capabilities for the AL classification than for the discrimination between CTRL and ATTR for all the models (max p-value < 0.05). The higher computational cost of the BCNN compared to the DropBCNN and CNN must also be taken into consideration. In this sense, the Bayesian approximation can be seen as a way of maintaining a measure of uncertainty while compromising between the better performance of a fully Bayesian model and the lower computational cost of a deterministic CNN.
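
The cost trade-off can be made concrete with the per-image timings reported earlier in the text (about 1.9 ms per pass for the CNN, DropCNN, and DropBCNN, about 9.5 ms for the BCNN, and n = 100 passes for the probabilistic models). The short calculation below is a sketch that assumes the passes run sequentially with no batching or parallelism; the names `SINGLE_PASS_MS`, `PASSES`, and `total_inference_ms` are illustrative.

```python
# Approximate per-image time of a single forward pass, in milliseconds (from the text).
SINGLE_PASS_MS = {"CNN": 1.9, "DropCNN": 1.9, "DropBCNN": 1.9, "BCNN": 9.5}

# Deterministic models need one pass; probabilistic models need n = 100 passes before voting.
PASSES = {"CNN": 1, "DropCNN": 1, "DropBCNN": 100, "BCNN": 100}


def total_inference_ms(model):
    """Total time to classify one image, assuming sequential passes (an assumption)."""
    return SINGLE_PASS_MS[model] * PASSES[model]


for model in SINGLE_PASS_MS:
    print(f"{model}: {total_inference_ms(model):.1f} ms per classified image")
```

Under these assumptions, a classification with an uncertainty profile takes roughly 0.19 s with the DropBCNN and roughly 0.95 s with the BCNN, against about 1.9 ms for a single deterministic prediction.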

Study’s Limitations

The main limitation of this work lies in the specific case study (early acquired cardiac PET images from CA patients) approached with the explained methodology. In particular, the dataset was limited and the severity of the disease was not accounted for (as a general index across the various subtypes is not available), possibly leading to biased data and a biased dataset split. Future work will aim to better explore the capabilities and potential of the Bayesian framework in similar scenarios and to produce a severity metric based on PET acquisitions. Moreover, better tuning of the models and a broader exploration of possible approximations and algorithms to improve Bayesian inference performance and reduce computational cost could also be addressed in future work.

Conclusion

In the present work, four models were developed to assess, through a CA classification case study, the capability of BCNNs to overcome some of the limitations of deep learning in data scarcity scenarios. The developed BCNN showed accuracy on the test dataset comparable to that of the deterministic CNN; it was also able to reduce silent failures by spotting OOD inputs better than the deterministic and approximate Bayesian models. Moreover, both the approximate Bayesian DropBCNN and the BCNN provided epistemic uncertainty. It is well known that epistemic uncertainty is fundamental for enriching the prediction and delivering crucial information to improve model performance, better interpret results, and possibly construct thresholds to refuse classification.

Data Availability

The data used in this article are not available because they are the property of the health care institution.

Code Availability

The code developed for this study is available upon request from the corresponding author.

References

F. Piccialli, V. Di Somma, F. Giampaolo, S. Cuomo, G. Fortino, “A survey on deep learning in medicine: Why, how and when?,” Information Fusion, Elsevier, 66:111–137 (2021).

C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Goodfellow, R. Fergus, “Intriguing properties of neural networks,” arXiv preprint, https://doi.org/10.48550/arXiv.1312.6199 (December 21, 2013).

J. Ker, L. Wang, J. Rao, T. Lim, “Deep learning applications in medical image analysis,” IEEE Access, 6:9375–9389 (2017).

N. Srivastava, G. Hinton, A. Krizhevsky, I. Sutskever, R. Salakhutdinov, “Dropout: a simple way to prevent neural networks from overfitting,” The journal of machine learning research, 15:1:1929–1958 (2014).

C. Shorten, T. M. Khoshgoftaar, “A survey on image data augmentation for deep learning,” Journal of big data, Springer, 6:1:1–48 (2019).

T. Fushiki, “Estimation of prediction error by using k-fold cross-validation,” Statistics and Computing, Springer, 21:137–146 (2011).

S. Depeweg, J.-M. Hernandez-Lobato, F. Doshi-Velez, S. Udluft, “Decomposition of uncertainty in bayesian deep learning for efficient and risk-sensitive learning,” in International Conference on Machine Learning, 1184–1193 (2018).

L. V. Jospin, H. Laga, F. Boussaid, W. Buntine, M. Bennamoun, “Hands-on bayesian neural networks–a tutorial for deep learning users,” IEEE Computational Intelligence Magazine, 17:2:29–48 (2022).

Ł. Raczkowski, M. Możejko, J. Zambonelli, E. Szczurek, “Ara: accurate, reliable and active histopathological image classification framework with bayesian deep learning,” Scientific reports, Nature, 9:1:Article number: 14347 (2019).

B. Song, S. Sunny, S. Li, K. Gurushanth, P. Mendonca, N. Mukhia, S. Patrick, S. Gurudath, S. Raghavan, I. Tsusennaro, S. T. Leivon, T. Kolur, V. Shetty, V. R. Bushan, R. Ramesh, T. Peterson, V. Pillai, P. Wilder-Smith, A. Sigamani, A. Suresh, A. Kuriakose, P. Birur, R. Liang, “Bayesian deep learning for reliable oral cancer image classification,” Biomedical Optics Express, Optica Publishing Group, 12:10:6422–6430 (2021).

S. Yadav, “Bayesian deep learning based convolutional neural network for classification of parkinson’s disease using functional magnetic resonance images,” SSRN, https://doi.org/10.2139/ssrn.3833760 (April 25, 2021).

A. A. Abdullah, M. H. Masoud, T. M. Yaseen, “A review on bayesian deep learning in healthcare: Applications and challenges,” IEEE Access, 10:36538–36562 (2022).

D. M. Blei, A. Kucukelbir, J. D. McAuliffe, “Variational inference: A review for statisticians,” Journal of the American statistical Association, 112:518:859–877 (2017).

C. J. Geyer, “Introduction to markov chain monte carlo,” Handbook of markov chain monte carlo, Chapter 1 20116022, Boca Raton (2011).

Y. Gal, Z. Ghahramani, “Dropout as a bayesian approximation: Representing model uncertainty in deep learning,” in International Conference on Machine Learning, 1050–1059 (2016).

V. Mullachery, A. Khera, A. Husain, “Bayesian neural networks,” arXiv preprint, https://doi.org/10.48550/arXiv.1801.07710 (January 23, 2018).

C. Blundell, J. Cornebise, K. Kavukcuoglu, D. Wierstra, “Weight uncertainty in neural network,” in International Conference on Machine Learning, 1613–1622 (2015).

D. P. Kingma, T. Salimans, M. Welling, “Variational dropout and the local reparameterization trick,” Advances in neural information processing systems 28, NIPS (2015).

A. D. Wechalekar, J. D. Gillmore, P. N. Hawkins, “Systemic amyloidosis,” The Lancet, Elsevier, 387:10038:2641–2654 (2016).

A. Martinez-Naharro, P. N. Hawkins, M. Fontana, “Cardiac amyloidosis,” Clinical Medicine, Royal College of Physicians, 18:Suppl.2:30–35 (2018).

M. Rosenzweig, H. Landau, “Light chain (al) amyloidosis: update on diagnosis and management,” Journal of Hematology & Oncology, Springer, 4:1–8 (2011).

F. L. Ruberg, M. Grogan, M. Hanna, J. W. Kelly, M. S. Maurer, “Transthyretin amyloid cardiomyopathy: Jacc state-of-the-art review,” Journal of the American College of Cardiology, JACC, 73:22:2872–2891 (2019).

M. F. Santarelli, D. Genovesi, V. Positano, M. Scipioni, G. Vergaro, B. Favilli, A. Giorgetti, M. Emdin, L. Landini, P. Marzullo, “Deep-learning-based cardiac amyloidosis classification from early acquired pet images,” The International Journal of Cardiovascular Imaging, Springer, 37:7:2327–2335 (2021).

M. Santarelli, M. Scipioni, D. Genovesi, A. Giorgetti, P. Marzullo, L. Landini, “Imaging techniques as an aid in the early detection of cardiac amyloidosis.,” Current Pharmaceutical Design, Bentham Science, 27:16:1878–1889 (2021).

Y. J. Kim, S. Ha, Y.-i. Kim, “Cardiac amyloidosis imaging with amyloid positron emission tomography: a systematic review and meta-analysis,” Journal of Nuclear Cardiology, Springer, 27:123–132 (2020).

D. Genovesi, G. Vergaro, A. Giorgetti, P. Marzullo, M. Scipioni, M. F. Santarelli, A. Pucci, G. Buda, E. Volpi, M. Emdin, “[18f]-florbetaben pet/ct for differential diagnosis among cardiac immunoglobulin light chain, transthyretin amyloidosis, and mimicking conditions,” Cardiovascular Imaging, JACC, 14:1:246–255 (2021).

J. D. Gillmore, A. Wechalekar, J. Bird, J. Cavenagh, S. Hawkins, M. Kazmi, H. J. Lachmann, P. N. Hawkins, G. Pratt, B. Committee, “Guidelines on the diagnosis and investigation of al amyloidosis,” British journal of haematology, 168:2:207–218 (2015).

J. D. Gillmore, M. S. Maurer, R. H. Falk, G. Merlini, T. Damy, A. Dispenzieri, A. D. Wechalekar, J. L. Berk, C. C. Quarta, M. Grogan, H. J. Lachmann, S. Bokhari, A. Castano, S. Dorbala, G. B. Johnson, A. W. J. M. Glaudemans, T. Rezk, M. Fontana, G. Palladini, P. Milani, P. L. Guidalotti, K. Flatman, T. Lane, F. W. Vonberg, C. J. Whelan, J. C. Moon, F. L. Ruberg, E. J. Miller, D. F. Hutt, B. P. Hazenberg, C. Rapezzi, P. N. Hawkins, “Nonbiopsy diagnosis of cardiac transthyretin amyloidosis,” Circulation, AHA, 133:24:2404–2412 (2016).

S. Imambi, K. B. Prakash, G. Kanagachidambaresan, “Pytorch,” Programming with TensorFlow: Solution for Edge Computing Applications, Springer, 87–104 (2021).

P. Esposito, “Blitz - bayesian layers in torch zoo (a bayesian deep learing library for torch), github.” https://github.com/piEsposito/blitz-bayesian-deep-learning/ (2020).

T. DeVries, W. T. Graham, “Learning confidence for out-of-distribution detection in neural networks,” arXiv preprint, https://doi.org/10.48550/arXiv.1802.04865 (February 13, 2018).

A. Uchendu, D. Campoy, C. Menart, A. Hildenbrandt, “Robustness of bayesian neural networks to white-box adversarial attacks,” in 2021 IEEE Fourth International Conference on Artificial Intelligence and Knowledge Engineering (AIKE), 72–80 (2021).

Funding

Open access funding provided by Università di Pisa within the CRUI-CARE Agreement.

Author information

Contributions

All authors contributed to the study conception and design. All authors read and approved the final manuscript.

Ethics declarations

Ethics Approval

Relating to the data used in this article, both the AIFA (Agenzia Italiana del Farmaco) committee and the institutional ethics committee gave their approval to the study. The research complied with the Helsinki Declaration.

Conflict of Interest

Nicola Martini is presently an employee of Yunu Inc.; his collaboration on the present study occurred before his present affiliation, his contribution to this article reflects entirely and only his own expertise on the matter, and he declares no competing financial or non-financial interests related to the present article. None of the other authors has competing financial or non-financial interests to disclose concerning the present manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bargagna, F., De Santi, L.A., Martini, N. et al. Bayesian Convolutional Neural Networks in Medical Imaging Classification: A Promising Solution for Deep Learning Limits in Data Scarcity Scenarios. J Digit Imaging 36, 2567–2577 (2023). https://doi.org/10.1007/s10278-023-00897-8

DOI: https://doi.org/10.1007/s10278-023-00897-8