Abstract

Collaborative model-driven development is a de facto practice to create software-intensive systems in several domains (e.g., aerospace, automotive, and robotics). However, when multiple engineers work concurrently, keeping all model artifacts synchronized and consistent is difficult. This is even harder when the engineering process relies on a myriad of tools and domains (e.g., mechanical, electronic, and software). Existing work tries to solve this issue from different perspectives, such as using trace links between different artifacts or computing change propagation paths. However, these solutions mainly provide additional information to engineers and still require manual work to propagate changes. Moreover, most modeling tools are limited regarding traceability between different domains and lack the efficiency and granularity required during the development of software-intensive systems. Motivated by these limitations, in this work, we present a solution based on what we call “reactive links”, which are highly granular trace links that propagate changes between property values across models in different domains, managed in different tools. Unlike traditional “passive links”, reactive links automatically propagate changes when engineers modify models, ensuring the synchronization and consistency of the artifacts. The feasibility, performance, and flexibility of our solution were evaluated in three practical scenarios from two partner organizations. Our solution resolved all cases in which change propagation among models was required, and we observed a great improvement in efficiency compared to performing the same propagation manually. The contribution of this work is to enhance the engineering of software-intensive systems by reducing the burden of manually keeping models synchronized and avoiding inconsistencies that can originate from collaborative engineering with a variety of tools from different domains.

1 Introduction

A fundamental part of engineering software-intensive systems is the set of design decisions that are made based on specific requirements and their realization through various implementation artifacts such as models, prototypes, and source code [78]. However, these engineering artifacts are dynamic and undergo constant refinement, alteration, and updates [24, 34, 54, 74]. As the development progresses, engineers gain a deeper understanding of the project, select various off-the-shelf components, and develop progressively complex prototypes for client review [37, 110]. With each step, the constraints and dependencies between the artifacts, including model elements, are revised, and consistency rules are established [22, 101]. Throughout the entire engineering process, it is crucial for the artifacts to maintain consistency with one another and adhere to the constraints set for the requirements and other related implementation artifacts [22, 101]. Achieving this is not an easy task in general, and it is even more challenging in the context of collaborative, multi-domain, and model-driven engineering [16, 19, 69, 83].

For developing software-intensive systems, regardless of their size, it is common for multiple teams to collaborate [40, 59, 97]. Each team focuses on a specific aspect of the system and uses separate tools to ensure that domain-specific properties are met. As a result, different but related artifacts are created [72]. Despite their differences, all artifacts represent the same system and must be synchronized. If changes to the specifications require new property values in one tool, these changes must be propagated to all affected dependencies [84]. Furthermore, if the propagated changes affect further dependencies, these must also be updated accordingly.

The issue of linking artifacts has been examined in the literature from various angles, and different solutions have been proposed to aid collaboration among engineers. Trace links have been created between related artifacts using various methods [7, 25, 66, 85, 96], which can help manage relationships and constraints between models. However, these links are “passive” and do not automatically propagate changes. Consistency rules can highlight inconsistent data [2, 4, 108], but they may not provide the necessary granularity for all use cases, even when paired with traceability. Change propagation algorithms can determine the ideal propagation paths or predict the impact of a change [3, 46, 77, 101, 109], but manual propagation is still required, and these algorithms can slow down the development process due to their high computation times. Only a few solutions can provide automatic change propagation across linked artifacts [29, 32, 36, 43, 70, 87], but they require the use of a limited set of tools and often cannot handle multi-tool and multi-domain systems. These limitations compromise the engineering of software-intensive systems by hiding dependencies or potential inconsistencies, ultimately affecting the quality of the final products.

Our work aims to overcome the limitations discussed above by proposing a solution that integrates traceability [23], consistency checking [39], and change propagation [48], while also considering a finer granularity of engineering artifacts. Our solution uses “reactive links” to automatically propagate fine-grained changes, specifically at the level of model properties, across multi-domain artifacts of software-intensive systems. These links establish connections between related properties in different artifacts. Whenever a change in any artifact affects a linked property, our solution immediately applies its impact on all related artifacts, as configured by the users. These reactive links prevent unnoticed inconsistencies that could persist in the system until they lead to severe faults.

In this paper, we investigate three research questions. Firstly, we examine whether our proposed solution can cover all the use cases present in our evaluation scenarios. Then, we investigate whether the reactive links can be flexibly applied in diverse engineering projects. Finally, we discuss the performance of the reactive links in terms of memory and run time. We evaluated our solution against these three research questions through three engineering scenarios that encompass various domains, tools, and change propagation cases. The results demonstrate the feasibility and performance of the reactive links in these scenarios, including a notable enhancement in maintaining constraints and consistency among artifacts. We identified potential areas for expanding the links to significantly improve the collaborative model-driven engineering process. We also recognized that there is room for future improvements, and we compiled a list of potential ways to enhance and customize the solution for different use cases.

Our solution’s primary contribution is its ability to substantially minimize the overhead needed to uphold the dependencies and consistency between various models in collaborative engineering. Our solution can assist engineers toward the following benefits: (i) reducing the probability of errors during the modeling process, such as product validation, by identifying any dependency violation among model artifacts and (ii) reducing the risk of models using obsolete or inconsistent data by instantaneously propagating changes as soon as they are detected in any of the artifacts.

This paper is an extension of our previous work [84]. Specifically, we: (i) consider new link operators to deal with collections and textual values in the properties that need to be propagated; (ii) recommend a process for creating and maintaining the reactive links; (iii) address the problem of circular links and floating point precision; (iv) add a new case study from an industry partner [67]; (v) provide more details of the solution and the implementation used in the evaluation; and (vi) extend the results to evaluate the flexibility and memory impact of our approach.

The remainder of this paper is structured as follows. First, in Sect. 2, an example of the risks involved in synchronizing related artifacts is introduced. Next, in Sect. 3, a solution to address these risks is proposed. In Sect. 4, the study design and research questions are presented, along with an explanation of how the proposed solution is evaluated using three engineering scenarios. Then, in Sects. 5 and 6, the evaluation results and potential threats to the validity of the study are discussed, respectively. Additionally, Sect. 7 provides an overview of related work in the literature. Finally, in Sect. 8, the paper concludes with a final assessment of the suitability of the proposed solution for the identified problem.

2 Motivational example

In this section, we present an illustrative example that demonstrates the risks involved in synchronizing related artifacts. This example is extracted from one of the engineering scenarios of our subject systems (described in detail in Sect. 4.2).

Let us consider the development of a hydraulic actuator, illustrated in Fig. 1, which involves three teams working collaboratively. The first team, depicted at the top of the figure, comprises requirements engineers who use a requirements tool. The second team consists of physicists who use a complex calculator to determine the parameter values that best describe the system; this team is represented on the right-hand side of the figure by a capture of their calculator worksheet. The third team, on the left-hand side of the figure, comprises graphical designers who create prototypes of system iterations using a graphical modeling tool.

To provide more context for the example, let us take a closer look at the hydraulic actuator being developed. The actuator consists of a cylinder with a piston that is connected to a pump. For the purposes of this example, we focus solely on the cylinder. One of the requirements for this cylinder, marked in Fig. 1 with a red frame, specifies that the pressure within it must not exceed a maximum of 160 bar. This threshold is critical for ensuring the safe and accurate operation of the piston. If the threshold is significantly higher than the maximum pressure actually reached within the cylinder, the client needlessly spends much more on the components of the system. On the other hand, if the pressure within the cylinder ever exceeds this specification, the hydraulic actuator is likely to become unsafe and unusable.

The team of physicists responsible for designing the hydraulic actuator uses a dedicated calculator worksheet for the cylinder, which takes pressure as input and calculates other significant data such as the diameter of the piston cylinder. The goal of the team is to find the minimum cylinder diameter that ensures the safe operation of the actuator while taking into account the threshold value for pressure. The resulting diameter is then communicated to the graphical modeling team, who use it to update their design. The updated design includes threshold values that are communicated to the client when selecting off-the-shelf parts.

2.1 Problem statement

Ideally, the three models upon which the development of the hydraulic actuator is based would be fully synchronized. The dependencies between these models are represented in Fig. 1 with gray arrows. However, this is often not the case in practice, particularly when teams with varying levels of insight collaborate on different models. Human error is not uncommon, and if each team is solely responsible for one tool and one model, constraint violations and inconsistencies may go unnoticed.

As an example, let us assume that a mistake occurs when the maximum required piston pressure is entered into the calculator worksheet, resulting in a value of only 60 bar. This value is then used to determine the piston diameter, resulting in a system that is actually unsafe. Despite the requirement constraint of a maximum of 160 bar, the engineers incorporate the resulting diameter into the graphical design without realizing the mistake. Neither the requirements engineering team nor the graphical modeling team has the insight or responsibility to verify the correctness of the values obtained from the computation. This incorrect value can remain undetected in the system until the quality assurance team notices the issue late in the development process and reports it. Correcting the mistake requires the collaboration of all the teams involved and additional reviews, which lengthens the process and increases the costs and effort required. If left undetected, the mistake could result in the client acquiring improper and unsafe components based on incorrect information.

2.2 The limitations of the existing solutions

This section provides a brief overview of the existing solutions proposed in the literature to address the problem; they are discussed in detail in Sect. 7. Each of these solutions addresses part of the problem, but none of them provides a complete solution, and each has its own drawbacks.

2.2.1 Trace links

Two trace links can be used to connect (i) the pressure requirement and the computation and (ii) the computation result and the cylinder diameter in the graphical design. However, this approach has two major drawbacks. Firstly, the two relationships represented here have different roles. In case (i), the aim is to maintain consistency with a threshold, while in case (ii), the properties’ values are expected to be equal. The engineers can either create two separate trace matrices only for these cases, or choose general trace types that do not provide all the necessary information. Secondly, traces are merely passive links that cannot propagate information or verify constraints; they only indicate the relationships between artifacts. Ultimately, it is still the engineers’ responsibility to ensure that the constraints are fulfilled.

2.2.2 Consistency rules

Another option for engineers is to establish consistency rules. However, this approach requires a way to connect artifacts across tool boundaries. If the system can be reduced to one metamodel, this is not a problem. But when artifacts must be connected across models, consistency checking has to be combined with traceability, which retains most of the downsides discussed earlier, particularly the lack of granularity.

Trace links are defined at the artifact level, such as connecting requirements to the calculator artifacts that use them. However, since there is only one requirement that specifies the maximum piston pressure, setting a consistency rule using traces can result in latency and redundancy. Every trace is checked for a parameter that is only specified once, potentially resulting in false inconsistencies for requirements that do not specify a maximum piston pressure. Furthermore, the rules must be carefully defined to avoid false results. Marking a requirement as inconsistent just because it does not, and should not, specify a piston pressure can create more problems than it solves.

2.2.3 Change propagation

Even if engineers have a change propagation algorithm that can handle the system under development, keeping the models consistent remains challenging, especially when dealing with multi-domain data. There are two key issues associated with these algorithms. Firstly, change propagation algorithms can be computationally expensive and time-consuming. They typically require an overview of the system in the form of a matrix with all the dependencies between artifacts, which must be parsed to simulate the propagation. This process can be taxing even for small systems, such as the hydraulic actuator, which involve a significant number of artifacts. Secondly, change propagation is not always automated. While propagation algorithms can suggest ways to handle constraints and resolve inconsistencies, engineers must still apply the necessary changes manually. This manual intervention prolongs the overall time required to return the system to a consistent state and does not fully address the risk of human error.

2.2.4 Automatic knowledge propagation

Automatically propagating links are an appealing solution in theory for resolving inconsistencies between computation results and graphical designs. However, in practice, the solutions proposed in this area are often domain-specific. For instance, many of the available solutions are limited to systems described entirely using Unified Modeling Language (UML), which is not the case for the problem at hand. While some solutions not dependent on UML exist, they are typically tailored to narrow domains and cannot handle the combination of artifacts present in our scenario, which includes requirements, computations, and graphical elements.

One limitation of the existing solutions for propagation links is their inability to handle constraint violations. Although they may ensure that values remain consistent, they cannot address constraints such as the maximum piston pressure requirement in our example. This creates a gap in the system’s ability to ensure that all constraints are met and increases the risk of unsafe or improper components being produced. A more comprehensive approach is needed to address both consistency and constraint violations in the system.

3 Proposed solution

Our solution aims to mitigate the primary shortcomings of the existing solutions discussed in the section above, while leveraging and improving on their respective advantages.

3.1 Working assumptions

Before our solution can be considered feasible, the environment and use case must fulfill a set of conditions, which we assume to be true.

Firstly, one of the main features of the reactive links is their fine granularity. They can connect two artifact property values and check their consistency with each other or propagate changes from one value to the other. This feature makes reactive links unsuitable for coarse-grained traceability, change propagation, and consistency checking use cases. Thus, we assume that reactive links are used to create and synchronize fine-grained values stored in specific properties of the artifacts.

We also assume that the models to be linked can be stored in a common environment with a uniform and extensible artifact representation. This environment can be any technology that allows multiple tools and models to be stored together and extended with additional information. These aspects are discussed in more detail in Sects. 3.2 and 4.3. Besides storing the data, we assume that this common environment offers a mechanism of communication with the original tools in which the models were developed. This mechanism serves to ensure that the models in a tool are consistent and synchronized with the information in the common environment. The specific representation of the data (e.g., UML format) and the tool-side view of it are not particularly important as long as the common environment representation supports the addition of reactive links.

3.2 Artifacts representation

As stated above, the majority of knowledge propagation solutions necessitate a UML representation. However, our solution is tailored to function in a scenario where multiple domains and tools are employed, as described in the motivational example. To achieve this, we established a generic representation, presented in Fig. 2, which can handle various types of models and artifacts.

Figure 2 presents a metamodel defined to represent the artifacts in a generic way. On the left side of the figure, the metamodel describes the elements to represent the artifacts/models. On the right side, the links added by our solution are shown. This metamodel is based on a model type that contains model elements. Each element is assigned a type and has properties, which in turn have a type and a set of possible values. Elements are a subclass of values, as a model element can also be a value. With this approach, we aim to provide a flexible and adaptable representation of artifacts that can be used across multiple domains and tools, regardless of the type of model being used.
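To make the structure of Fig. 2 more concrete, the following Python sketch encodes it as dataclasses. The class and field names (`Value`, `Primitive`, `ElementType`, `Property`, `ModelElement`, `Model`, `ReactiveLink`) and the example identifiers are our own illustrative choices, not those of the actual implementation. Only the relationships are taken from the description above: a model contains elements, each element has a type and properties, each property has a type and values, an element is itself a value, and a link connects exactly two properties.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


class Value:
    """Anything a property can hold (illustrative base class)."""


@dataclass
class Primitive(Value):
    """A primitive value such as a number or a string."""
    data: Any


@dataclass
class ElementType:
    name: str


@dataclass
class Property:
    """A named, typed property holding a list of values."""
    name: str
    type_name: str
    values: List[Value] = field(default_factory=list)


@dataclass
class ModelElement(Value):
    """A typed element; it subclasses Value so properties can reference it."""
    name: str
    element_type: ElementType
    properties: Dict[str, Property] = field(default_factory=dict)


@dataclass
class Model:
    """A model (artifact) from one tool, containing model elements."""
    name: str
    elements: List[ModelElement] = field(default_factory=list)


@dataclass
class ReactiveLink:
    """The addition on the right of Fig. 2: a link between exactly two properties."""
    source: Property
    target: Property
    operator: str


# Usage: link the requirement's pressure limit to the worksheet input.
req_limit = Property("maxPressure", "Real", [Primitive(160.0)])
calc_input = Property("pressure", "Real", [Primitive(160.0)])
requirements = Model("Requirements", [
    ModelElement("REQ-Cylinder", ElementType("Requirement"), {"maxPressure": req_limit})])
worksheet = Model("CylinderWorksheet", [
    ModelElement("Cylinder", ElementType("Worksheet"), {"pressure": calc_input})])
link = ReactiveLink(source=req_limit, target=calc_input, operator="=")
```

Because the link references `Property` objects rather than whole models, it carries the fine granularity discussed in Sect. 3.3.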

To allow the specification of the links, the artifacts to be linked need not only to be represented according to this metamodel, but also to be stored in a common environment. As illustrated in Fig. 3, this common environment provides an integration between the different tools used in the toolset. This is required to allow the artifacts created and managed by different tools to interact with each other, while also providing an overview representation of the cross-domain modeling information. In the absence of such an environment, the artifacts are only visible within their original tools, which is a problem already discussed in the example (Sect. 2). In this case, our solution cannot obtain or provide any knowledge to related artifacts in other sections of the model.

To bridge the gap between the individual tools and the common environment, each tool has to be equipped with a plugin or integration component that would enable it to communicate with the common environment. This component must fulfill three tasks:

1. Translating the artifacts from their in-tool representation to our metamodel (Fig. 2), so that the artifact data and any subsequent changes to it are visible in the common environment.

2. Translating any changes of their artifacts from the new metamodel back into the tool metamodel, so that tool users can access any changes to the data stored in the common environment.

3. Continuously observing and applying any changes that occur to the data, so that the two representations of the data are synchronized.

Details of the implementation for the common environment and the tools equipped with plugins are presented in Sect. 4.3.
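A minimal sketch of such an integration component follows. It assumes a toy calculator worksheet stored as a dictionary of named cells and reduces the common environment to a key-value store with change listeners. All names (`CommonEnvironment`, `ToolPlugin`, `WorksheetPlugin`, the `calc.` key prefix) are hypothetical and only illustrate how the three tasks divide the work. A real plugin would translate into the full metamodel of Fig. 2 and use the tool's own API.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, List

Listener = Callable[[str, float], None]


class CommonEnvironment:
    """Stand-in for the shared store; keys look like 'calc.pressure' (assumption)."""

    def __init__(self) -> None:
        self.data: Dict[str, float] = {}
        self.listeners: List[Listener] = []

    def update(self, key: str, value: float) -> None:
        if key in self.data and self.data[key] == value:
            return  # unchanged, so no notification (this also stops echo loops)
        self.data[key] = value
        for listener in list(self.listeners):
            listener(key, value)


class ToolPlugin(ABC):
    """Hypothetical interface mirroring the three tasks listed above."""

    @abstractmethod
    def to_common(self) -> Dict[str, float]:
        """Task 1: translate in-tool data into the common representation."""

    @abstractmethod
    def to_tool(self, key: str, value: float) -> None:
        """Task 2: write a change from the common environment back into the tool."""


class WorksheetPlugin(ToolPlugin):
    """Toy plugin for a calculator worksheet kept as a dict of named cells."""

    PREFIX = "calc."

    def __init__(self, cells: Dict[str, float], env: CommonEnvironment) -> None:
        self.cells, self.env = cells, env
        env.listeners.append(self._on_common_change)   # Task 3, common -> tool
        for key, value in self.to_common().items():     # initial publication
            env.update(key, value)

    def to_common(self) -> Dict[str, float]:
        return {self.PREFIX + name: value for name, value in self.cells.items()}

    def to_tool(self, key: str, value: float) -> None:
        self.cells[key[len(self.PREFIX):]] = value

    def _on_common_change(self, key: str, value: float) -> None:
        if key.startswith(self.PREFIX):
            self.to_tool(key, value)

    def edit_cell(self, name: str, value: float) -> None:
        """Task 3, tool -> common: an engineer's edit is published immediately."""
        self.cells[name] = value
        self.env.update(self.PREFIX + name, value)


env = CommonEnvironment()
calc = WorksheetPlugin({"pressure": 160.0}, env)
calc.edit_cell("pressure", 150.0)     # edit made inside the worksheet
env.update("calc.pressure", 140.0)    # change arriving via the common environment
```

The equality check in `update` is one simple way to keep a change from echoing back and forth between a tool and the common environment; it is a design choice of this sketch, not a documented feature of the prototype.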

3.3 Linking Granularity

As discussed in Sect. 2, trace links establish connections at the model level. However, the target information of these links is usually stored at the level of property values. This means that changes to other properties that are not relevant to the links may still trigger propagation or consistency checking, even when they have no actual impact on the linked details.

Unlike existing trace links, which operate at a high level of abstraction and may trigger unnecessary propagation or consistency checks, our solution enables engineers to link specific properties that have a correspondence. For example, in our illustrative example (Sect. 2), rather than linking the entire requirement to the computation, our solution allows engineers to link only the property that stores the constraint value (160 bar) to the corresponding property in the computation. This granular approach ensures that only changes to relevant properties trigger link execution, while other changes do not impact the links, therefore not slowing down the system with unnecessary link executions. On the right side of Fig. 2, we show that a link is associated with two properties, which enable the traceability with finer granularity. More implementation details are provided in Sect. 4.
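The following sketch illustrates why property-level links avoid unnecessary executions. Links are indexed by the exact (element, property) pair they watch, so an edit to an unlinked property of the same requirement triggers nothing. The addressing scheme, the `LinkIndex` class, and the identifier `REQ-Cylinder` are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List, Tuple

PropertyRef = Tuple[str, str]      # (element id, property name): hypothetical addressing
Action = Callable[[object], None]


class LinkIndex:
    """Indexes links by the exact property they watch, not by the whole artifact."""

    def __init__(self) -> None:
        self._by_property: DefaultDict[PropertyRef, List[Action]] = defaultdict(list)
        self.executions = 0

    def link(self, watched: PropertyRef, action: Action) -> None:
        self._by_property[watched].append(action)

    def on_change(self, element: str, prop: str, new_value: object) -> None:
        # A dictionary lookup on (element, property): edits to unlinked
        # properties of the same element find no links and run nothing.
        for action in self._by_property.get((element, prop), []):
            self.executions += 1
            action(new_value)


worksheet = {"pressure": 0.0}
index = LinkIndex()
index.link(("REQ-Cylinder", "maxPressure"),
           lambda v: worksheet.update(pressure=v))
index.on_change("REQ-Cylinder", "description", "Revised wording")  # no link runs
index.on_change("REQ-Cylinder", "maxPressure", 160.0)              # one link runs
```

An artifact-level trace would have executed its check for both edits; here only the second one reaches the worksheet.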

3.4 Propagation operators

To ensure that the linked properties are updated appropriately, it is necessary to define the corresponding operation that will be triggered upon a relevant change. However, existing solutions suffer from a significant limitation in that they are designed to handle only one specific operation. This can range from simply updating values directly to flagging constraint violations, which must then be manually resolved by engineers at a later stage. This greatly limits the application of such solutions for different practical scenarios, as discussed in our motivational example.

Our solution combines both constraint checking and change propagation into a single mechanism. When setting a link, the user selects the operator to be applied after a property change. An operator determines which action is automatically performed on the linked properties. Some operators can also check whether a requirement constraint or consistency rule is violated. Table 1 presents a selection of the link operators available in our solution, specifically for numerical property values.
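As an illustration of how a single mechanism can cover both propagation and constraint checking, the sketch below distinguishes the assignment operator, which writes a new value into the target, from comparison operators, which leave the values untouched and only report a violation. The operator set is an assumption based on the operators mentioned in this section (Table 1 is the authoritative list), and `execute`, `LinkResult`, and the diameter values are hypothetical.

```python
import operator
from dataclasses import dataclass
from typing import Callable, Dict, Optional

# Constraint-checking operators; the exact set in Table 1 may differ.
CHECKS: Dict[str, Callable[[float, float], bool]] = {
    "==": operator.eq,
    "!=": operator.ne,
    "<": operator.lt,
    "<=": operator.le,
    ">": operator.gt,
    ">=": operator.ge,
}


@dataclass
class LinkResult:
    new_target: Optional[float]  # value to write into the target property, if any
    violated: bool               # True if a constraint check failed


def execute(op: str, source: float, target: float) -> LinkResult:
    """Run one link operator after the source property has changed."""
    if op == "=":
        # Assignment propagates the source value into the target property.
        return LinkResult(new_target=source, violated=False)
    if op in CHECKS:
        # Checks leave both values untouched and only report the outcome.
        return LinkResult(new_target=None, violated=not CHECKS[op](source, target))
    raise ValueError(f"unknown operator: {op}")


# The mistyped 60 bar in the worksheet violates an '==' link to the 160 bar requirement.
pressure_check = execute("==", 160.0, 60.0)
# An '=' link overwrites the design value with the computed one (hypothetical numbers).
diameter_update = execute("=", 42.5, 40.0)
```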

Of the operators presented, the assignment (\(=\)), equals (\(==\)), and not equals (\(!=\)) can be applied to any property type, ranging from numbers to reference properties for which the value is another model element. Additionally, our solution can be extended with other specialized operators depending on the property types encountered in the models to which it is applied. Each data type, such as a set or a number, has specific characteristics that must be considered when checking the consistency of dependent properties. For example, one number can be greater than another, which is checked with the > operator, but the same operator is meaningless for sets. The opposite is true for the “includes” operator, which applies to collection-typed properties but not to numerical values. To increase the flexibility of our solution, we have extended the operator list with a selection of set-based and string-based operators, such as the “includes” operator, as shown in Table 2. Depending on the prevalent data types and dependencies in the target system, our solution can be extended with type-specific or custom operators whose semantics address these specific cases.
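Type-specific operators can be sketched as separate operator tables that reject operands of the wrong type. Only “includes” is named in the text; `excludes`, `contains`, and `startsWith` are illustrative stand-ins for the set-based and string-based operators of Table 2, and `check` is a hypothetical helper.

```python
from typing import Callable, Collection, Dict

# "includes" is named in the text; the other operators are illustrative.
SET_OPS: Dict[str, Callable[[Collection, object], bool]] = {
    "includes": lambda collection, item: item in collection,
    "excludes": lambda collection, item: item not in collection,
}

STRING_OPS: Dict[str, Callable[[str, str], bool]] = {
    "contains": lambda text, part: part in text,
    "startsWith": lambda text, prefix: text.startswith(prefix),
}


def check(op: str, left: object, right: object) -> bool:
    """Dispatch on the operator name and refuse operands of the wrong type."""
    if op in SET_OPS:
        if not isinstance(left, (set, frozenset, list, tuple)):
            raise TypeError(f"'{op}' needs a collection-typed property")
        return SET_OPS[op](left, right)
    if op in STRING_OPS:
        if not isinstance(left, str) or not isinstance(right, str):
            raise TypeError(f"'{op}' needs string-typed properties")
        return STRING_OPS[op](left, right)
    raise ValueError(f"unknown operator: {op}")


selected_parts = {"pump-A", "seal-kit-B"}          # hypothetical part identifiers
has_seal_kit = check("includes", selected_parts, "seal-kit-B")
```

Raising `TypeError` for a numeric operand mirrors the point made above that “includes” is meaningless for numbers, just as > is meaningless for sets.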

3.5 Propagation direction

While designing the link operations, we also realized that, in many cases, the execution of the link depends on which end of the link was changed. For instance, considering the hydraulic actuator discussed in Sect. 2, engineers can set a link connecting the piston cylinder diameter in the computation and the graphical model. They expect that the value in the graphical design always corresponds to the computation, so they choose an assignment operator (\(=\)) while creating the link. However, the piston diameter in the computation is a result and can only be changed if the input changes.

The expected reaction to a change can further differ depending on the artifacts linked. In the case of linking computational output and the graphical model parameters, the users may want to leave room for experimentation. If the computation input changes, the new result will be propagated immediately. But the graphical model may be used in the communication with the client, during which the client may request different ad hoc experiments that temporarily invalidate the dependency between the two properties. In this case, the graphical designers may want the changes to only be visible on their side of the model, since new input values will later override them as soon as the feedback from the client interaction is applied to the model.

From a different perspective, going back to the initial example, the model may contain certain constants or fixed parameters specified in the requirements, which are then used during the computation. If any of these parameters change in the requirements, the change has to be mirrored in the computational model. However, if one of the physicists working on the computation accidentally changes one of these values, they would not expect the requirement specification to be changed accordingly. Quite the opposite: they would expect this discrepancy to be immediately flagged as an inconsistency in the overall model.

Finally, for the sake of completeness, the model will most likely contain instances where the engineers will expect bidirectional propagation to occur. This can happen in two situations: (i) when applying a constraint checking link, for example, using a “greater than” operator, or (ii) when the two corresponding values are both editable input values in two different tools or artifacts. This second case can occur in tools that are not well integrated, such as when the same value is required in two different computations that the calculator tool cannot link. For example, the dimensions of the piston are required both in the computations regarding the cylinder properties, and in the computations that determine the safety parameters of the piston rod. Alternatively, one value could be required as an input in two different tools and expected to be synchronized regardless of the source of the change. For example, the length of the piston is required both in the computations and in the graphical model, but the value should always be equal between the two. Unlike the diameter of the cylinder, the piston length is not open to experimentation in client communications.

The links should, therefore, hold the knowledge about the direction of propagation. This means that the link will react depending on the origin of the change. Table 3 presents the three propagation directions supported by our solution, which fully cover the various scenarios described above. The engineers must decide the trigger direction for each link during the link creation process. However, if the editing of existing links is enabled, this direction can be changed at any point during the development process.
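The following sketch shows one way a link can react to the origin of a change, using the requirement constant from the example above. We assume the three directions are source-to-target, target-to-source, and bidirectional (Table 3 gives the actual definitions). We also assume that an edit on the non-triggering end of an assignment link is flagged as an inconsistency rather than propagated, as in the physicists' scenario. `Direction`, `AssignmentLink`, and the store keys are hypothetical.

```python
from enum import Enum
from typing import Dict, List


class Direction(Enum):
    FORWARD = "source->target"     # hypothetical names for the three directions
    BACKWARD = "target->source"
    BOTH = "bidirectional"


class AssignmentLink:
    """An '=' link between two keys of a shared value store."""

    def __init__(self, store: Dict[str, float], source: str, target: str,
                 direction: Direction) -> None:
        self.store, self.source, self.target = store, source, target
        self.direction = direction
        self.inconsistencies: List[str] = []

    def _triggers(self, changed: str) -> bool:
        """Does a change at this end of the link propagate to the other end?"""
        if changed == self.source:
            return self.direction in (Direction.FORWARD, Direction.BOTH)
        return self.direction in (Direction.BACKWARD, Direction.BOTH)

    def on_change(self, changed: str) -> None:
        if changed not in (self.source, self.target):
            return                                  # not one of the linked properties
        other = self.target if changed == self.source else self.source
        if self._triggers(changed):
            self.store[other] = self.store[changed]
        elif self.store[changed] != self.store[other]:
            # Non-triggering end edited: keep both values and flag the mismatch.
            self.inconsistencies.append(f"{changed} differs from {other}")


store = {"req.pressure": 160.0, "calc.pressure": 160.0}
link = AssignmentLink(store, "req.pressure", "calc.pressure", Direction.FORWARD)
store["calc.pressure"] = 60.0      # accidental edit in the worksheet
link.on_change("calc.pressure")    # flagged; the requirement is not touched
store["req.pressure"] = 150.0      # the requirement is revised
link.on_change("req.pressure")     # propagated into the worksheet
```

For the graphical designers' experiments, a variant could stay silent on the non-triggering end instead of flagging; which behavior is right depends on the link, which is why the direction is configured per link.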

3.6 Additional propagation transformations

Although direct propagation or constraint checking can satisfy many use cases, some require additional transformations. For instance, the hydraulic actuator requirements impose a constraint for safety against rod buckling. The constraint requires that the hydraulic force be less than twice the buckling force, both of which are computation results in different calculator worksheets. Implementing this constraint with a simple link would require an intermediate property whose value is twice the buckling force. Our linking solution allows engineers to specify simple transformations to be applied during link execution. In this scenario, engineers can add the “2*” operation during link setup, and the operation will be performed after each relevant change.
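The rod-buckling constraint can be sketched as a comparison link whose right-hand value is transformed before the comparison, so no intermediate property is needed. `TransformedCheck` is a hypothetical name, and the forces in the usage lines are made-up numbers.

```python
import operator
from dataclasses import dataclass
from typing import Callable


@dataclass
class TransformedCheck:
    """Constraint link evaluating compare(left, transform(right))."""
    transform: Callable[[float], float]
    compare: Callable[[float, float], bool]

    def holds(self, left: float, right: float) -> bool:
        # The transformation runs on every link execution, i.e., after each relevant change.
        return self.compare(left, self.transform(right))


# Hydraulic force (one worksheet) must be less than 2 * buckling force (another worksheet).
rod_buckling = TransformedCheck(transform=lambda f: 2 * f, compare=operator.lt)

safe = rod_buckling.holds(30.0, 20.0)     # 30 < 2 * 20: constraint satisfied
unsafe = rod_buckling.holds(45.0, 20.0)   # 45 >= 40: constraint violated
```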

These simple transformations can range from small computational changes, such as the example above, to type changes and aggregation operations for collection-type properties. For example, such a transformation could be used to parse a textual requirement description for the numerical specification of a constant that is required in the computation. Alternatively, as shown later in the evaluation (Sect. 5), a computation result from the calculator can be automatically converted to a string and inserted into the Java-based source code of a component.
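Both type-changing transformations mentioned above can be sketched with regular expressions: one extracts a numeric constant from a textual requirement, the other writes a computed value into a constant declaration in Java source code. The requirement text, the class `Cylinder`, the constant `MAX_PRESSURE`, and both helper functions are hypothetical, and a production version would need a more robust parser than a single regular expression.

```python
import re
from typing import Optional


def parse_constant(text: str, unit: str) -> Optional[float]:
    """Extract the first number directly followed by `unit` from a textual requirement."""
    match = re.search(r"(\d+(?:\.\d+)?)\s*" + re.escape(unit), text)
    return float(match.group(1)) if match else None


def inject_constant(java_source: str, name: str, value: float) -> str:
    """Rewrite the initializer of `static final double <name> = ...;` in Java code."""
    pattern = r"(static\s+final\s+double\s+" + re.escape(name) + r"\s*=\s*)[^;]+;"
    # A replacement function avoids escaping issues in the replacement string.
    return re.sub(pattern, lambda m: f"{m.group(1)}{value!r};", java_source)


requirement = "The pressure within the cylinder shall not exceed 160 bar."
limit = parse_constant(requirement, "bar")
java = "class Cylinder { static final double MAX_PRESSURE = 150.0; }"
if limit is not None:
    java = inject_constant(java, "MAX_PRESSURE", limit)
```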

3.7 Propagation triggers

Another design decision of our solution is when exactly the link actions are triggered. Table 4 presents the two kinds of triggers supported in our solution. Manual propagation is the less intrusive option, but requires more user interaction and care. While it allows the engineers to maintain closer control of the change propagation, every link has to be activated manually. As a result, impactful changes could still be overlooked. Even worse, some value propagations might be incomplete, which could happen if the propagation of a change results in a subsequent change. For example, a change in a requirement-specified constant requires the engineers to manually trigger a propagation link to propagate the new value to the computation. But this input change could result in a new computation result that, if involved in one or more links, would require the engineers to trigger those links manually as well.

The option of automatic propagation requires no user intervention after its creation and allows for chain propagation. In chain propagation, one link evaluation triggers another to ensure that the full impact of a change is propagated throughout the system without any additional user input. This can prevent cases in which the change propagation is incomplete, but it may reduce the control engineers have on the propagation. The result is the possibility that intermediary or experimental values are propagated throughout the system, when the engineers only intended to test them locally.
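The difference between the two triggers, including chain propagation, can be sketched with a small propagator over a chain of identity links from the requirement, through the worksheet, to the threshold shown in the graphical design. The key names, the `Propagator` class, and the per-path visited set used to stop circular links are our own assumptions, not the trigger mechanism of the prototype.

```python
from typing import Callable, Dict, List, Set, Tuple

Link = Tuple[str, str, Callable[[float], float]]  # (source, target, transformation)


class Propagator:
    """Executes links on demand (manual) or after every change (automatic)."""

    def __init__(self, values: Dict[str, float], links: List[Link], automatic: bool) -> None:
        self.values, self.links, self.automatic = values, links, automatic

    def set(self, key: str, value: float) -> None:
        self.values[key] = value
        if self.automatic:
            self._chain(key, visited={key})

    def trigger(self, source: str) -> None:
        """Manual trigger: fire only the links leaving `source`; nothing chains."""
        for src, dst, fn in self.links:
            if src == source:
                self.values[dst] = fn(self.values[src])

    def _chain(self, key: str, visited: Set[str]) -> None:
        for src, dst, fn in self.links:
            # Skip properties already updated on this path, so circular links terminate.
            if src == key and dst not in visited:
                self.values[dst] = fn(self.values[src])
                self._chain(dst, visited | {dst})


KEYS = ("req.max_pressure", "calc.max_pressure", "design.max_pressure")
LINKS: List[Link] = [
    ("req.max_pressure", "calc.max_pressure", lambda v: v),
    ("calc.max_pressure", "design.max_pressure", lambda v: v),
]

manual = Propagator(dict.fromkeys(KEYS, 160.0), LINKS, automatic=False)
manual.set("req.max_pressure", 150.0)
manual.trigger("req.max_pressure")           # the design still shows 160.0

automatic = Propagator(dict.fromkeys(KEYS, 160.0), LINKS, automatic=True)
automatic.set("req.max_pressure", 150.0)     # reaches the design through the chain
```

The manual run leaves the design stale, which is exactly the incomplete propagation described above; the automatic run reaches it without further input.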

There are advantages and disadvantages to both options, which should be considered and balanced with respect to the engineers’ goals, the types of artifacts involved, the criticality of the requirement constraints or consistency rules, and the implementation details.

3.8 Type-specific challenges: floating point precision

As previously noted, the reactive links can propagate changes and check for simple inconsistencies across a wide variety of data types. However, each data type may have specific features and challenges to consider for a proper implementation of the linking system. One such challenge is floating point precision in real-number computations.

Computations mixing different floating point precision levels are very common in practice [64, 89]. In our motivating example, the precision level differs from one tool to the next. When bridging the gap between tools for effective cross-tool collaboration, these differences add another layer of complexity. If the reactive links do not account for this problem, rounding errors may occur. Their consequences range from propagating inconsistent data and generating incorrect computation results to inadvertently creating endless propagation loops if each tool rounds the same property value slightly differently. To prevent this, the reactive links can be paired with solutions for identifying and tuning floating point precision. These include static analysis [42] to identify possible precision-induced faults before they affect the system. Alternatively, a wide variety of solutions have been proposed over the years to algorithmically [6, 21, 26, 114] or automatically [63, 64, 89, 90] determine the lowest precision level that still yields an accurate and fast result in mixed-precision computations.
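To illustrate why heterogeneous precision matters for links, the Python sketch below simulates a tool that stores values in IEEE 754 single precision, while another keeps double precision. A naive equality check then reports the same logical value as changed, which would trigger a propagation. Comparing values at the coarser tool's precision is one simple mitigation; the helper names and the choice of six decimal places are assumptions for this example, and this is not what our prototype does (see Sect. 4.3.4).

```python
import math
import struct


def to_float32(x: float) -> float:
    """Simulate a tool that stores values in IEEE 754 single precision."""
    return struct.unpack("f", struct.pack("f", x))[0]


def same_value(a: float, b: float, decimals: int) -> bool:
    """Precision-aware comparison: equal if the values agree at the given number of decimals."""
    return math.isclose(a, b, rel_tol=0.0, abs_tol=0.5 * 10 ** -decimals)


value_in_tool_a = 0.1                           # stored as a 64-bit double
value_in_tool_b = to_float32(value_in_tool_a)   # the same value after a round trip through tool B

print(value_in_tool_a == value_in_tool_b)             # False: a naive check sees a "change"
print(same_value(value_in_tool_a, value_in_tool_b, 6))  # True: no propagation needed
```

A precision-aware comparison of this kind also makes the "skip unchanged values" check from Sect. 3.9 robust against such representation differences.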

Some of these pitfalls can be avoided by using unidirectional links, carefully avoiding circular propagation paths, and adding checks to ensure that the propagated values are correct. However, these conditions are hard to guarantee in a real-world, incrementally evolving system. Hence, we recommend adopting an approach to handle heterogeneous floating point precision if reactive links are to be used in practice for numerical values. Which of the above approaches is best suited for combination with the links depends greatly on the other implementation aspects, as well as on the specific characteristics of the context in which the links will be used. For our prototype, we decided to disregard this issue, as detailed in Sect. 4.3.4.

3.9 Addressing link circularity

Independently of the data types linked, one potential issue is circular dependencies. These occur when a set of property values in the model are interconnected in a cyclical network. Collaborative systems developed by highly heterogeneous teams and tools may feature large numbers of links between all the artifacts involved. Additionally, new links are created throughout the development process as new artifacts and properties are modeled. Moreover, when a property value appears in three or more artifacts, changes to it have to be propagated in all directions, regardless of where the change originates. Thus, preventing cycles in the link network becomes a complex task.

Circular dependencies predominantly affect automatically triggered links. By default, an automatic propagation across one link triggers all the links connected to the property value that changed as a result. Therefore, for automatically triggered links, a circular dependency is likely to cause an infinite propagation cycle: each link triggers the next in the cyclical network, and the propagation gets stuck in this loop. Moreover, without a solution to this issue, an infinite propagation cycle can even occur in bidirectional propagation links, i.e., bidirectional links with the “assignment” (\(=\)) operator, where the same value could be propagated back and forth across one link forever.

A simple solution to this last problem is to skip a propagation event when the target property value would not change. For example, if a propagation link is about to assign the value “1” to a property value that is already “1”, the propagation makes no effective difference and can be skipped. Therefore, both in a bidirectional propagation link and in a cyclical link network, the propagation stops once a full round of propagation is completed.
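The check just described can be sketched in a few lines of Python. The function `propagate` and the representation of links as `(source, target)` pairs of assignment links are hypothetical simplifications; the sketch shows that both a bidirectional link and a three-property cycle terminate once a round produces no effective change.

```python
from collections import deque
from typing import Dict, List, Tuple


def propagate(values: Dict[str, object], links: List[Tuple[str, str]], changed: str) -> int:
    """Propagate a change along (source, target) assignment links, skipping no-op updates.

    Returns the number of effective assignments performed.
    """
    queue = deque([changed])
    assignments = 0
    while queue:
        prop = queue.popleft()
        for source, target in links:
            if source != prop or values[target] == values[source]:
                continue  # not affected, or target already holds the value: stop here
            values[target] = values[source]
            assignments += 1
            queue.append(target)  # the target changed, so its outgoing links fire next
    return assignments


# Bidirectional assignment link: one effective update, then the round is complete.
pair = {"req.pressure": 180.0, "calc.pressure": 160.0}
print(propagate(pair, [("req.pressure", "calc.pressure"), ("calc.pressure", "req.pressure")], "req.pressure"))  # 1

# Three-property cycle A -> B -> C -> A: stops once the value returns to A.
ring = {"A": 1, "B": 0, "C": 0}
print(propagate(ring, [("A", "B"), ("B", "C"), ("C", "A")], "A"))  # 2
```

The termination argument relies on exact equality; a transformation that keeps changing the value on each round would defeat it, which is the failure case discussed next.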

Some cases may still fail despite this check. This can happen when links use simple transformations that change the propagated value, so that a completed round is never detected. One example is the previously mentioned case of varying floating point precision levels between tools, in which rounding errors may cause endless propagation.

The linking service can also prevent infinite propagation cycles by detecting and managing circular links. In a network of reactive links, the artifact properties can be seen as the vertices of a graph and the links as directed edges, with a bidirectional link corresponding to a pair of opposite edges. Hence, for the purpose of identifying circular links that may lead to propagation loops, the link network can be treated as a directed graph.

Providing a visualization of the link network can also help engineers avoid creating cyclical link networks. Even when one person is solely responsible for creating and maintaining the reactive links, a large network of links can quickly become overwhelming. Owing to the similarity to directed graphs, different visualization techniques [12, 103] and tools [1, 9] can be adapted to provide an overview of the link network. Interactive features [33, 106] in this overview provide further support to the engineers. However, manually examining the spread of links in the system for each new link may still be time-consuming and overwhelming, depending on the size and distribution of the network. Several surveys on graph visualization offer insights into supporting engineers in this task [10, 44, 52].
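As one possible starting point for such a visualization, the link network can be exported to the Graphviz DOT format, which many graph viewers accept. The Python sketch below is an assumption-laden example: the function name and the property identifiers are hypothetical, and we do not claim that the tools cited above use this format.

```python
from typing import Iterable, Tuple


def links_to_dot(links: Iterable[Tuple[str, str, str]]) -> str:
    """Render (source, target, operator) links as a Graphviz DOT digraph."""
    lines = ["digraph links {"]
    for source, target, operator in links:
        # One directed edge per link direction, labelled with its operator.
        lines.append(f'  "{source}" -> "{target}" [label="{operator}"];')
    lines.append("}")
    return "\n".join(lines)


print(links_to_dot([
    ("Requirement.maxPistonPressure", "TechCalc.maxPistonPressure", "="),
    ("TechCalc.maxPistonPressure", "Requirement.maxPistonPressure", "=="),
]))
```

Rendering the output with a DOT layout engine makes opposite edges between the same two properties, and longer cycles, visible at a glance.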

Another alternative is to enhance the linking system with logic that detects and prevents circular paths in the link network without additional human input. As the linking system already stores all the links, graph-based algorithms can be used to examine them for cycles. Such algorithms build on the concept of strongly connected components, namely subgraphs in which every vertex is reachable from every other vertex [104]. Identifying all strongly connected components and replacing each of them with a placeholder vertex yields an acyclic graph [47]. The accurate and efficient identification of cycles and strongly connected components has been an active area of research for decades, with multiple algorithms proposed both for static graphs, where all edges are known, and for dynamic graphs, where new edges are added incrementally. For static graphs, the most common solutions use a depth-first search [104], which finds a cycle when the search reaches a vertex that is still on the current search path. Alternatively, repeatedly deleting every vertex that has no predecessor leaves only vertices that lie on a cycle or are reachable from one, so the graph is acyclic exactly when all vertices are deleted [61]. When edges are added dynamically, these approaches can be reapplied after each new edge. However, research on incremental cycle detection and strongly connected component maintenance has produced extensive variations that improve the efficiency of these algorithms. Most of these approaches enhance the algorithms with topologically sorted vertices [11, 13, 45], two-way searches [45, 81], or other methods that reduce the search space needed to identify possible new cycles [14, 81].
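For reference, the following Python sketch is a standard recursive formulation of Tarjan's strongly connected components algorithm [104], applied to a link network given as an adjacency list. The property names are hypothetical, and this is not the code of our prototype. Components with more than one vertex, or a single vertex with a self-loop, are exactly the cycles that could trap automatic propagation. Recursion depth grows with path length, so very large networks would call for an iterative variant.

```python
from typing import Dict, List


def strongly_connected_components(graph: Dict[str, List[str]]) -> List[List[str]]:
    """Tarjan's algorithm: a single depth-first search, O(V + E)."""
    index: Dict[str, int] = {}
    lowlink: Dict[str, int] = {}
    on_stack: set = set()
    stack: List[str] = []
    components: List[List[str]] = []

    def visit(v: str) -> None:
        index[v] = lowlink[v] = len(index)   # discovery order
        stack.append(v)
        on_stack.add(v)
        for w in graph.get(v, []):
            if w not in index:               # tree edge: recurse
                visit(w)
                lowlink[v] = min(lowlink[v], lowlink[w])
            elif w in on_stack:              # back edge into the current component
                lowlink[v] = min(lowlink[v], index[w])
        if lowlink[v] == index[v]:           # v is the root of a component: pop it
            component = []
            while True:
                w = stack.pop()
                on_stack.discard(w)
                component.append(w)
                if w == v:
                    break
            components.append(component)

    for v in graph:
        if v not in index:
            visit(v)
    return components


def has_cycle(graph: Dict[str, List[str]]) -> bool:
    """A link network is cyclic iff some component is non-trivial or has a self-loop."""
    return any(len(c) > 1 or c[0] in graph.get(c[0], [])
               for c in strongly_connected_components(graph))


network = {"req": ["calc_in"], "calc_in": ["calc_out"], "calc_out": ["code", "calc_in"], "code": []}
print(strongly_connected_components(network))  # the pair calc_in/calc_out forms a cycle
```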

Several approaches have been proposed to reduce cycles [49, 98, 105], which can also be adapted to manage existing cycles and prevent, where possible, endless propagation loops. Namely, reducible cycles [49] can be partitioned into two acyclic subgraphs. One is formed by the edges and vertices reachable from the source vertex, which, in our case, could be the property value whose change triggered the propagation. The other is formed by the edges whose vertices are in topological order. Not all cycles are reducible [49, 98], and in some applications not all cycles can, or should, be prevented. For example, strongly connected components in the link network might be desired in complex multi-tool development environments where changes have to be propagated regardless of the artifact in which they originate. In these cases, reducible loops can be used to stop potential endless propagation loops.

As with floating point precision, the most appropriate mechanism to prevent or manage cycles in link networks heavily depends on the context in which the links are used. While some users will want to prevent cycles altogether, others may want to allow them but be able to manage or visualize them. The performance cost of cycle management must also be considered. In our prototype, we chose to implement the algorithm proposed by Tarjan et al. [104], performing a depth-first search to identify potential cycles before each link is added to the network. We present more details on this implementation choice in Sect. 4.3.4.
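A minimal Python sketch of such a pre-insertion check is shown below. It assumes that only propagation directions are stored as edges (consistency checks such as “==” do not modify values, so in this sketch they cannot cause propagation loops), and that the network is kept acyclic. Under that assumption, adding an edge from source to target closes a cycle exactly when the source is already reachable from the target, so a plain depth-first reachability search suffices. The class and method names are hypothetical, not our prototype's API.

```python
from typing import Dict, Set


class LinkNetwork:
    """Hypothetical directed graph of property values that rejects cycle-closing links."""

    def __init__(self) -> None:
        self.edges: Dict[str, Set[str]] = {}

    def reaches(self, start: str, goal: str) -> bool:
        """Iterative depth-first search: is `goal` reachable from `start`?"""
        stack, seen = [start], set()
        while stack:
            node = stack.pop()
            if node == goal:
                return True
            if node not in seen:
                seen.add(node)
                stack.extend(self.edges.get(node, ()))
        return False

    def add_link(self, source: str, target: str) -> bool:
        """Add source -> target unless target already reaches source (a cycle would form)."""
        if self.reaches(target, source):
            return False
        self.edges.setdefault(source, set()).add(target)
        return True


net = LinkNetwork()
print(net.add_link("req.pressure", "calc.pressure"))   # True
print(net.add_link("calc.pressure", "code.PRESSURE"))  # True
print(net.add_link("code.PRESSURE", "req.pressure"))   # False: would close a cycle
```

Each check costs at most one traversal of the existing network, which is acceptable when links are added occasionally rather than continuously.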

3.10 Linking process

Reactive links help engineers automate the management of dependencies and change propagation within complex development systems. While this reduces the overhead of constantly checking model consistency, the benefits of the links have to be balanced against the effort of creating, managing, and evaluating the link network. Creating links is akin to identifying dependencies in the system; therefore, the existing process can easily be extended to include this step. However, as each team and company defines its processes according to its needs, priorities, and the type of products it develops, the exact linking process will vary accordingly. In the following, we present our recommendation for a possible linking process.

We assume that the development teams already follow a process that includes an early analysis and identification of the dependencies between models. This is often the case in safety-critical processes, as hidden or lost dependencies may quickly become significant safety risks. This process is usually triggered by a set of new requirements received from the client. As an example, we use the scenario introduced in Sect. 2 and consider the requirements shown in Fig. 1 to be the new set of requirements coming from the client. The requirements engineering team elicits and specifies these new requirements. Then, the new design is modeled, followed by a preliminary requirements analysis, at which point dependencies and traceability links can be identified. We propose that most of the links are also created at this step.

The dependency identification step is often the hardest step in the process, while also playing a crucial role in traceability identification and in subsequent safety and consistency checks. In smaller projects, the engineers can conceivably analyze the artifacts and check for dependencies manually. However, this task can easily become not only time-consuming and tedious, but also overwhelming and error-prone. According to studies such as Wang et al. [111], the pairwise comparison of all the requirements in a project can take around 12 h. In model-driven engineering, this estimate would need to be extended to include the model artifacts and, for fine-grained dependencies, even the properties of each artifact.
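To make this growth concrete, consider a purely hypothetical project (the figures below are illustrative and not taken from Wang et al. [111]). Comparing \(n\) items pairwise requires \(\binom{n}{2}\) comparisons, so 100 requirements yield 4,950 pairs, whereas 100 artifacts with 10 linkable properties each (1,000 property values) already yield 499,500 pairs, roughly a hundredfold increase:

\[
\binom{n}{2} = \frac{n(n-1)}{2}, \qquad \binom{100}{2} = \frac{100 \cdot 99}{2} = 4\,950, \qquad \binom{1000}{2} = \frac{1000 \cdot 999}{2} = 499\,500.
\]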

To mitigate this burden, a variety of solutions offer automatic support for dependency identification. Most of these solutions are based on content analysis [65, 79, 91, 111], where the text associated with the artifacts is automatically processed to find common concepts that may correspond to dependencies. Other solutions, such as Savić et al. [92], parse the concrete syntax tree enriched with additional information. Likewise, multiple solutions use different annotations [99, 113] or the results of tracking engineer activity [62] to increase the accuracy of the dependency prediction. Note that most of the available solutions focus on identifying requirements or code dependencies. While many are applicable to heterogeneous model-driven engineering as well, they may require additional or specialized training data and development. Additionally, for most of these solutions, a dependency is synonymous with a trace link connecting two artifacts, rather than two artifact property values. As a result, an automatic identification of reactive links would either need to be trained to find finer-grained dependencies or be combined with manual effort from the engineers. Even in the latter case, using an automatic dependency identification solution would greatly reduce the workload associated with identifying reactive links.
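To give a flavor of property-level suggestions, the Python sketch below ranks pairs of property names from two tools by the Jaccard overlap of their name tokens. It is a deliberately naive stand-in for the content-analysis approaches cited above, not a reimplementation of any of them; the function names, the tokenization rule, and the 0.5 threshold are assumptions, and every suggestion would still need review by an engineer.

```python
import re
from itertools import product
from typing import List, Set, Tuple


def tokens(name: str) -> Set[str]:
    """Split camelCase, snake_case, or space-separated names into lowercase tokens."""
    spaced = re.sub(r"([a-z0-9])([A-Z])", r"\1 \2", name)
    return {t.lower() for t in re.split(r"[^A-Za-z0-9]+", spaced) if t}


def jaccard(a: Set[str], b: Set[str]) -> float:
    return len(a & b) / len(a | b) if a | b else 0.0


def suggest_links(left: List[str], right: List[str],
                  threshold: float = 0.5) -> List[Tuple[str, str, float]]:
    """Rank cross-tool property pairs whose names share enough tokens (best first)."""
    scored = [(a_name, b_name, jaccard(tokens(a_name), tokens(b_name)))
              for a_name, b_name in product(left, right)]
    return sorted((s for s in scored if s[2] >= threshold), key=lambda s: -s[2])


calculator = ["maxPistonPressure", "pistonDiameter"]
requirements = ["maximum piston pressure", "max_piston_pressure", "valve_count"]
for suggestion in suggest_links(calculator, requirements):
    print(suggestion)
```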

Once a dependency is identified, translating it into a link is straightforward. The engineers need to define the two linked property values, while the behavior of the link is defined by the link properties described in the sections above. In our example, they would select the maximum piston pressure value specified in the requirements and the property describing the same concept in the corresponding parameter of the complex calculator. They would connect these two property values through a reactive link and select the appropriate operators. We can assume they would want an asymmetrical link that propagates changes from the requirements to the calculator and detects inconsistencies in the reverse direction. Therefore, they would choose the assignment operator (“=”) for the propagation direction and the equality operator (“==”) for the reverse direction. Most of the necessary information is already available once the dependency is identified. Additional reasoning may be needed depending on the features of the plugins and the source of the property values, for example, whether they are editable. However, this reasoning would also be necessary without the links, and it would have to be repeated every time a change affects the dependency.
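The asymmetrical link from this example can be sketched in Python as follows. `ReactiveLink` and `LinkService` are hypothetical names, and the sketch only supports the assignment and equality operators; it shows a requirement change being propagated to the calculator, while a direct edit of the calculator value is only reported as an inconsistency.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ReactiveLink:
    """Hypothetical link between two property values with one operator per direction."""
    left: str
    right: str
    forward: str   # applied when `left` changes: "=" propagates, "==" only checks
    backward: str  # applied when `right` changes


class LinkService:
    def __init__(self, values: Dict[str, float], links: List[ReactiveLink]) -> None:
        self.values = values
        self.links = links
        self.inconsistencies: List[str] = []

    def on_change(self, prop: str, value: float) -> None:
        self.values[prop] = value
        for link in self.links:
            if prop == link.left:
                self._apply(link.forward, link.left, link.right)
            elif prop == link.right:
                self._apply(link.backward, link.right, link.left)

    def _apply(self, op: str, src: str, dst: str) -> None:
        if self.values[dst] == self.values[src]:
            return                                      # already consistent: nothing to do
        if op == "=":
            self.on_change(dst, self.values[src])       # propagate (and possibly chain)
        elif op == "==":
            self.inconsistencies.append(f"{src} != {dst}")  # report only, never modify


service = LinkService(
    {"req.maxPistonPressure": 160.0, "calc.maxPistonPressure": 160.0},
    [ReactiveLink("req.maxPistonPressure", "calc.maxPistonPressure", forward="=", backward="==")])
service.on_change("req.maxPistonPressure", 180.0)   # requirement change reaches the calculator
service.on_change("calc.maxPistonPressure", 170.0)  # local calculator edit is flagged
print(service.values, service.inconsistencies)
```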

After the reactive links are created, the development process proceeds as usual. In our working assumption, after the specification of the new requirements and design modeling, the engineering teams begin developing the new tasks. During this development, new dependencies may be identified. We recommend capturing them in reactive (or, where appropriate, trace) links as soon as possible, to avoid incomplete or incorrect dependencies later on. The development process is usually iterative, with a few new artifacts being created and developed at a time. As a result, we expect identifying and capturing new links during development to be a less daunting task than the initial dependency identification step. Hence, it can be performed manually or using the same set of solutions as in the initial analysis step.

Link management is even more straightforward in most cases. After the link properties are identified and the link is set, the intervention required from engineers depends only on the selected propagation trigger. For automatic propagation, no further intervention is necessary, as the link triggers every time a change touches the dependency. For manual propagation, engineers have to trigger the links themselves. However, this can be done as part of the commit process, during which engineers acknowledge that their changes are complete and should be visible to the other teams working on the same project.
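The idea of triggering manual links during the commit can be sketched in Python as below. The class `ManualLinkSession` and its methods are hypothetical; the sketch records which properties were edited and, on commit, fires every manual assignment link leaving an edited property. It deliberately does not chain further, mirroring manual propagation, and intermediate values never leave the session.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class ManualLinkSession:
    """Hypothetical session that batches manual link triggers into the commit step."""
    values: Dict[str, float]
    manual_links: List[Tuple[str, str]]            # (source, target) assignment links
    dirty: Set[str] = field(default_factory=set)

    def edit(self, prop: str, value: float) -> None:
        self.values[prop] = value
        self.dirty.add(prop)                        # no propagation yet: work in progress

    def commit(self) -> List[Tuple[str, str]]:
        """Trigger every manual link whose source was edited; return the links fired."""
        fired = [(s, t) for s, t in self.manual_links if s in self.dirty]
        for source, target in fired:
            self.values[target] = self.values[source]
        self.dirty.clear()
        return fired


session = ManualLinkSession(
    {"req.pressure": 160.0, "calc.pressure": 160.0, "req.diameter": 50.0, "calc.diameter": 50.0},
    [("req.pressure", "calc.pressure"), ("req.diameter", "calc.diameter")])
session.edit("req.pressure", 180.0)
session.edit("req.pressure", 175.0)      # experimental value replaced before committing
print(session.values["calc.pressure"])   # 160.0: nothing propagated yet
print(session.commit())                  # [('req.pressure', 'calc.pressure')]
print(session.values["calc.pressure"])   # 175.0
```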

When a link becomes redundant or needs to change its function, the necessary interaction also depends on the selected trigger. If a manually triggered link is no longer needed, no interaction is required: the link can be deleted, or simply ignored and no longer triggered. For automatic propagation, the engineers have to manually edit or delete the link.

Lastly, the reaction, namely the actual propagation through the links, is already optimized by the link design. With automatic triggering, the links are only evaluated when a change directly affects one of the connected property values. As a result, links are only triggered when propagation is actually necessary, that is, in cases where engineers would otherwise have to change the model elements manually. Additionally, the links represent simple operations, ranging from direct propagation to simple constraint checking. Even the supported transformations are simple, since each link connects only two values in the model. Therefore, evaluating a link is not expected to be significantly resource-consuming.

4 Study design

The goal of our study is to evaluate the proposed solution in terms of feasibility, flexibility, and performance. In the following, we present the research questions, three different engineering scenarios, and the implementation aspects of our solution.

4.1 Research questions

Based on the goal described above, we have defined three research questions (RQs), as follows:

RQ1 (Feasibility): Does the proposed solution cover all linking cases of our three multi-domain and multi-tool scenarios and keep the artifacts synchronized? We explore three scenarios to evaluate the behavior of our solution when dealing with a wide variety of constraints and consistency rules between different models. We observe the change propagation in each scenario and check the correctness of the result.

RQ2 (Flexibility): To what extent can the proposed solution be applied in diverse engineering projects? Different engineering projects involve different tools and model types that need to be linked. To evaluate the flexibility of our solution, we study the behavior of the reactive links for different property value types. For this, we identify dependencies between different property types captured in our engineering scenarios and explore how the links can be applied in each such context.

RQ3 (Performance): How do the reactive links influence the performance of the system in terms of memory usage and run time compared to manual propagation? To evaluate performance, we first discuss the engineering effort necessary to set up and maintain the links in comparison with manually propagating changes. Next, we compare the number of artifacts added by the reactive links with the number of model artifacts in each engineering scenario. Finally, we measure the execution times of the links to determine whether they provide a significant improvement over the scenario in which developers manually maintain the cross-domain consistency of the system.

4.2 Subject systems

For evaluating our solution, we considered three engineering scenarios. The name and the number of domains, artifacts, properties, and links of each scenario are presented in Table 5. The Hydraulic actuator and Robotic arm scenarios involve three and five domains (i.e., engineering tools), respectively. They were developed by our collaborators at the Linz Center of Mechatronics, based on real-world projects they have contributed to in the mechatronics industry. The Agricultural machines scenario has two domains that have to be synchronized after each change during development. This scenario was developed by our collaborators at Flanders Make, based on an ongoing project with their industry partners. Altogether, the scenarios of our study involve seven distinct types of artifacts (i.e., models) managed in different tools: requirements, physical computations, graphical design, coordinates in a spreadsheet, source code, interface definitions on GitHub, and UML-like system design.

The 98 links listed in Table 5 cover a diverse range of types, constraints, and rules, which enables us to thoroughly evaluate our solution. The requirements for these links were provided by either engineers or clients. Further details on the domains and artifacts can be found in Fig. 4, which also shows the number of reactive links between different types of artifacts. In the following subsections, we explain the three scenarios in detail.

4.2.1 Hydraulic actuator

This scenario consists of a piston within a hydraulic cylinder, a pump, and a valve system connecting the two main components. For developing this project, the engineers model the pump starting from the initial requirements. Next, the teams go through iterations of model refinement. Finally, the engineers check that the building blocks of the system satisfy the requirement constraints and the safety conditions. Three teams collaborate in the engineering process, each with a separate set of responsibilities and expertise:

- Requirement engineers use our requirements tool. This tool allows them to specify and manage the requirements, including quality measurements and constraints. In the context of the common environment (see Sect. 3.2), these additional constraints are stored as custom property values associated with the requirement they belong to.
- Physicists use TechCalc, a computation calculator with worksheets corresponding to the components of the system. Engineers select the input parameters in the worksheets, and the calculator applies the appropriate formulas to compute the remaining parameters characterizing the components.
- Graphical designers use SolidWorks to manage a virtual model of the system, shown on the right of Fig. 4a, based on the data received from the other two teams.

The actuator development process has four stages:

(i) initial setup: the requirements are collected, and the preliminary computations and graphical components are set up;
(ii) threshold computation: the values of the constraints are used to determine the most extreme component measurements for which the system works safely, as presented in our motivating example;
(iii) model development: the teams model the system based on the requirements and the progressive feedback they receive from the client;
(iv) selection of off-the-shelf components: a multi-stage process in which the client selects off-the-shelf components for the project and communicates their characteristics to the teams, after which the engineers ensure that the components will interact correctly.

4.2.2 Robotic arm

This project consists of a factory setup with several robotic arms that should pick up objects from their respective receiving bays and transport them to the target locations. The development starts from a basic setup, which is then adapted to the factory requirements provided by the client. The collaboration for this product is more complex: while the hydraulic actuator consists only of hardware, the robotic arm also requires software to control its movement. Therefore, the teams consist of:

- Requirement engineers, using the same requirements tool as in the previous scenario.
- Mathematicians, who translate the real-life setup of the bays and the movement trajectory into coordinates relative to each robot base, stored in Microsoft Excel.
- Physicists, also using TechCalc, focusing on the movement of the robot and the components necessary to achieve the correct trajectory and functionality.
- Graphical designers, who produce visualizations of the arm using SolidWorks.
- Software engineers, who write and maintain the code controlling the robotic arm’s movement. The code is written in Java using IntelliJ and provides a basic setup with two different controllers; the most appropriate one is selected through configuration.

For this evaluation, we focused on the steps required to specialize the basic setup toward the specific requirements of a client. The models of the system are set up, including the correspondences between the artifacts. For each robotic arm, the requirements specify the absolute coordinates of the loading bay and the drop point, as well as the location of the robot base and the requirements for its operation. The mathematicians then compute the coordinates from the perspective of the robot, which influence the computations. The results of the computations specify the components required to build the robot, as well as the deflection of the arm, which is needed to select the most appropriate controller code for each robot.
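The coordinate step performed by the mathematicians could, in principle, also be attached to a link as a transformation. The Python sketch below assumes a planar layout in which each robot base has a position and a single yaw angle; the real setup may well be three-dimensional, and the function name and layout values are hypothetical. It expresses an absolute point in the robot's frame by translating by the base position and rotating by the negative base orientation.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def to_robot_frame(point: Point, base: Point, base_yaw_rad: float) -> Point:
    """Express an absolute (factory) 2D point in the frame of a robot base.

    Translate by the base position, then rotate by the negative base orientation.
    """
    dx, dy = point[0] - base[0], point[1] - base[1]
    c, s = math.cos(base_yaw_rad), math.sin(base_yaw_rad)
    return (c * dx + s * dy, -s * dx + c * dy)


# Hypothetical layout: robot base at (1, 0) facing +y; loading bay at (1, 2).
x, y = to_robot_frame((1.0, 2.0), (1.0, 0.0), math.pi / 2)
print(round(x, 9), round(y, 9))  # 2.0 0.0: the bay lies 2 units straight ahead of the robot
```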

4.2.3 Agricultural machines

This scenario comes from the agricultural domain, based on the development process of an industry partner of Flanders Make [67]. The agricultural machines built by this company are composed of hardware components (e.g., sensors) and software components (e.g., embedded systems). These two types of components communicate through message interfaces. During the development process, two collaborating teams develop these separate but tightly knit parts of the product. As a result, the message interfaces used for the communication between the two parts must be synchronized and maintained by both teams. The process layout is as follows:

- Software engineers use GitHub to collaboratively develop the software code of the product and to maintain the communication interface on the software side. These interfaces are modeled as Robot Operating System (ROS) protocol [82] files, containing the type and name of each expected attribute.
- Mechatronics engineers use Visio-based UML diagrams to model the product from the hardware perspective, including the connections and actions of the sensors involved and the interface through which sensor activity is communicated to the software components. These interfaces are modeled as UML classes, with each attribute as a separate field.

In current industrial practice, the interfaces are synchronized using naming conventions. In our evaluation, we extend the two models with two types of links. First, we add links that check that the type and name of each attribute are identical, to highlight any naming convention violation. Then, we add a propagation link for each interface, which automatically adds new required fields from the software interfaces to the hardware models.
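The two kinds of links can be sketched in Python on interfaces represented as dictionaries mapping field names to types. This representation, the function names, and the field names are assumptions for illustration; types are compared as plain strings, and any mapping between ROS and UML type names is not shown. Propagation runs only from the software (GitHub) side to the hardware side, consistent with the unidirectional GitHub adapter.

```python
from typing import Dict, List

Fields = Dict[str, str]  # field name -> type name


def check_interface(software: Fields, hardware: Fields) -> List[str]:
    """Checking link: report attributes whose name or type differ between the two sides."""
    issues = []
    for name, ros_type in software.items():
        if name not in hardware:
            issues.append(f"missing in UML: {name}")
        elif hardware[name] != ros_type:
            issues.append(f"type mismatch for {name}: {ros_type} vs {hardware[name]}")
    for name in sorted(hardware.keys() - software.keys()):
        issues.append(f"missing in ROS: {name}")
    return issues


def propagate_new_fields(software: Fields, hardware: Fields) -> List[str]:
    """Propagation link: add software-side fields that the UML class lacks (one direction only)."""
    added = sorted(software.keys() - hardware.keys())
    for name in added:
        hardware[name] = software[name]
    return added


ros = {"speed": "float64", "heading": "float64", "sensor_id": "uint8"}
uml = {"speed": "float64", "heading": "float32"}
print(check_interface(ros, uml))       # heading type mismatch, sensor_id missing in UML
print(propagate_new_fields(ros, uml))  # ['sensor_id']
print(check_interface(ros, uml))       # only the type mismatch remains for the engineers
```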

This additional scenario allows us to better evaluate the flexibility of the reactive links. Unlike the other two scenarios, which focus heavily on numerical property values, the Agricultural machines scenario features strings and sets. The reactive links are extended to handle these additional data types and use cases simply by adding new operators. As such, the links can be applied in a completely different setting with minimal changes, if any. Additionally, the two tools used in this scenario are completely different from the toolset used in the other two scenarios. The new adapters involved, particularly the GitHub adapter, function differently from the ones used in our previous evaluations. As described in Sect. 4.3.2, the GitHub adapter does not allow bidirectional model synchronization. In our evaluation, we discuss the impact of this factor on the reactive links and show that they can still be used effectively.
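Extending the links "by simply adding new operators" can be pictured as a registry that maps operator symbols to predicates. The Python sketch below is a hypothetical illustration: the registry, the `register_check` and `holds` helpers, and the operator symbols `==ci` and `subset` are assumptions, not the operators of our prototype.

```python
import operator
from typing import Any, Callable, Dict

# Hypothetical registry of consistency operators: symbol -> predicate(source, target).
CHECKS: Dict[str, Callable[[Any, Any], bool]] = {
    "==": operator.eq,
    "<=": operator.le,
    ">=": operator.ge,
}


def register_check(symbol: str, predicate: Callable[[Any, Any], bool]) -> None:
    """Supporting a new data type only requires registering new operators."""
    CHECKS[symbol] = predicate


# Example operators for strings and sets (symbols are illustrative).
register_check("==ci", lambda a, b: a.casefold() == b.casefold())  # case-insensitive names
register_check("subset", lambda a, b: set(a) <= set(b))            # all fields present on the other side


def holds(symbol: str, source: Any, target: Any) -> bool:
    return CHECKS[symbol](source, target)


print(holds("<=", 150.0, 160.0))                                              # True
print(holds("==ci", "SensorId", "sensorid"))                                  # True
print(holds("subset", {"speed", "heading"}, {"speed", "heading", "sensor_id"}))  # True
```

Because the link evaluation only looks up a predicate by symbol, numerical, string, and set links share the same propagation and checking machinery.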

4.3 Implementation aspects

To evaluate our proposed solution, we describe the implementation used in the evaluation study.

4.3.1 DesignSpace as common environment

We begin by outlining the common environment, a server that stores all artifacts. For this purpose, we selected DesignSpace [27, 51], a versatile platform that supports storing and reasoning over engineering knowledge in a multi-tool and multi-user environment. Any tool that can be connected to DesignSpace can upload its models and their respective metamodel to the server. The data is continuously synchronized and kept consistent, as specified by the engineers.

DesignSpace stores engineering data in a uniform representation, based on a DesignSpace-specific meta-metamodel. The server then manages the stored models, enhancing them with additional information where necessary. All subsequent operations, especially cross-tool and cross-domain operations, are applied at the server level. The meta-metamodel used by DesignSpace is presented on the left side of Fig. 2.

In more detail, DesignSpace stores the model and metamodel information of each domain together. In our context, the metamodel represents the structure of the data, while the model stores the data itself. For example, in the requirements tool, the metamodel stores the structure of a requirement, while the model stores each requirement specification. Storing both the metamodel and the model uniformly allows for extensions and connections across domain boundaries, as new information can be added to the structure of the data when necessary. Concretely, the metamodel is stored in InstanceTypes, which describe the structure of the model elements. Each property in the metamodel is described by a PropertyType. The type of a property can be a generic type, such as a string in the previous example, or a reference to a custom InstanceType defined in DesignSpace. After the metamodel is defined through InstanceTypes and PropertyTypes, the model itself is uploaded. Each artifact of the model is stored in an Instance of a specific InstanceType. When an Instance is created, the data of the artifact is stored as property values in the properties defined by the metamodel. If necessary, more properties can be defined dynamically later.

Let us return to our motivational example. In the calculator, the maximum piston pressure is a parameter: a number stored in a uniform unit of measurement and inserted into any formula needed for the calculations. Once TechCalc is connected to DesignSpace, the adapter automatically fetches or creates the InstanceType Parameter, with a set of PropertyTypes defining the characteristics of such an artifact (e.g., name as a string, value as a number, and unit as an enum value). Then, the adapter creates the Instance for the maximum piston pressure and assigns the data of this parameter to the Properties of this Instance. Hence, the Property name is set to “maximum piston pressure”, the value to 160.0, and the unit to “bar”. Similarly, the requirements tool adapter creates the metamodel for Requirement and stores the artifacts in Instances of the Requirement InstanceType. The requirement Instance corresponding to the maximum piston pressure specification is then enhanced with a dynamically created property that stores the value 160.0. This property value is then linked to the value of the calculator parameter via reactive links.
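To make the roles of InstanceTypes, PropertyTypes, and Instances concrete, the following Python sketch rebuilds the example above with plain dataclasses. The class names mirror the concepts described in the text, but this is not DesignSpace's API: the class layout, the method names, and storing dynamically created properties on the individual Instance are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass
class PropertyType:
    name: str
    type_name: str  # generic type ("string", "number", ...) or the name of an InstanceType


@dataclass
class InstanceType:
    name: str
    property_types: Dict[str, PropertyType] = field(default_factory=dict)

    def define(self, name: str, type_name: str) -> None:
        self.property_types[name] = PropertyType(name, type_name)


@dataclass
class Instance:
    instance_type: InstanceType
    properties: Dict[str, Any] = field(default_factory=dict)
    dynamic_types: Dict[str, PropertyType] = field(default_factory=dict)

    def set(self, name: str, value: Any) -> None:
        """Store a property value; only properties defined by the metamodel are allowed."""
        if name not in self.instance_type.property_types and name not in self.dynamic_types:
            raise KeyError(f"{name} is not defined for {self.instance_type.name}")
        self.properties[name] = value

    def add_dynamic_property(self, name: str, type_name: str, value: Any) -> None:
        """Enhance this Instance with a property not declared by its InstanceType."""
        self.dynamic_types[name] = PropertyType(name, type_name)
        self.set(name, value)


# TechCalc adapter: metamodel first, then the model element.
parameter = InstanceType("Parameter")
for prop, kind in [("name", "string"), ("value", "number"), ("unit", "enum")]:
    parameter.define(prop, kind)
max_pressure = Instance(parameter)
max_pressure.set("name", "maximum piston pressure")
max_pressure.set("value", 160.0)
max_pressure.set("unit", "bar")

# Requirements adapter: the requirement gains a dynamic numeric property to be linked.
requirement = InstanceType("Requirement")
requirement.define("description", "string")
req = Instance(requirement)
req.set("description", "The maximum piston pressure shall be 160 bar.")
req.add_dynamic_property("maxPistonPressure", "number", 160.0)
```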

4.3.2 Uploading data to DesignSpace

To collect the tool-specific data, DesignSpace uses plugin adapters built according to the design goals specified by Sun et al. [100]. Each tool is provided with a custom-built adapter, responsible for translating between the tool’s internal metamodel and the DesignSpace data representation described above. The structure of the tool metamodel is first captured in InstanceTypes and PropertyTypes, before the tool-specific data is uploaded as Instances of these InstanceTypes. This data is automatically uploaded to DesignSpace when the tool is connected to the server for the first time. Afterwards, every change is exchanged through the adapter to keep the tool and DesignSpace representations synchronized: if the user changes something in the tool, the adapter sends the change to the DesignSpace server; conversely, if the change originates in DesignSpace, the adapter mirrors it in the original tool.

Our solution is event-based: any change to an artifact results in notifications being sent to the services on the server (i.e., DesignSpace) and to the tools connected to it. The communication between the server and the client-side extensions is done via gRPC [38]. This protocol uses Protocol Buffers (protobuf) request and response messages to exchange data across tools, platforms, and interfaces. The messages encode all the information the receiver needs to understand the change and apply it to its local data.
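The event-based flow can be illustrated with an in-process publish/subscribe sketch in Python. It deliberately omits gRPC and Protocol Buffers; the `ChangeEvent` fields and the `EventBus` class are hypothetical stand-ins for the real messages and transport, which the text does not specify.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple


@dataclass(frozen=True)
class ChangeEvent:
    # Hypothetical message fields; the real protobuf messages are not shown in the text.
    instance_id: str
    property_name: str
    new_value: Any
    origin: str  # name of the tool or service that produced the change


class EventBus:
    """In-process stand-in for the gRPC channel between DesignSpace and the tools."""

    def __init__(self) -> None:
        self._subscribers: List[Tuple[str, Callable[[ChangeEvent], None]]] = []

    def subscribe(self, name: str, handler: Callable[[ChangeEvent], None]) -> None:
        self._subscribers.append((name, handler))

    def publish(self, event: ChangeEvent) -> None:
        # Notify every subscriber except the one the change came from.
        for name, handler in self._subscribers:
            if name != event.origin:
                handler(event)
```

Skipping the originating subscriber is a design choice of this sketch, so that a change is not echoed back to the tool that produced it.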

It must be noted that not all plugins offer two-way communication with the server. While bidirectional communication is the norm, the GitHub plugin we use for the Agricultural machines scenario is unidirectional: any update on the GitHub side is synchronized and visible in DesignSpace, but the plugin does not transport DesignSpace changes back to GitHub. We have to consider this limitation carefully when specifying links, especially propagation links, since the GitHub data, much like the TechCalc computation results, is effectively not editable.
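The difference between bidirectional and unidirectional plugins can be sketched as an adapter with a `bidirectional` flag. This is a simplified Python illustration in which plain dictionaries stand in for the tool data and its DesignSpace copy; `ToolAdapter` and its methods are hypothetical names, not the actual plugin interface.

```python
from typing import Any, Dict


class ToolAdapter:
    """Hypothetical adapter keeping a tool's data and its server-side copy in sync."""

    def __init__(self, tool: Dict[str, Any], server: Dict[str, Any],
                 bidirectional: bool = True) -> None:
        self.tool = tool
        self.server = server
        self.bidirectional = bidirectional

    def initial_upload(self) -> None:
        # First connection: push all tool data to the server.
        self.server.update(self.tool)

    def on_tool_change(self, key: str, value: Any) -> None:
        # Tool -> server always works.
        self.tool[key] = value
        self.server[key] = value

    def on_server_change(self, key: str, value: Any) -> bool:
        # Server -> tool only for bidirectional adapters; the return value says
        # whether the change reached the tool.
        self.server[key] = value
        if self.bidirectional:
            self.tool[key] = value
        return self.bidirectional
```

With `bidirectional=False`, as for the GitHub plugin, a change made on the server side diverges from the tool, which is why such data is treated as effectively read-only when specifying links.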

4.3.3 Collaboration in DesignSpace

As previously mentioned, DesignSpace is focused on a multi-tool (and consequently multi-domain) and multi-user collaboration. Therefore, it offers a variety of collaboration features for different working styles and preferences. In this paper, we are focusing on the default collaboration features.

When using DesignSpace, each user has access to one or more workspaces, which group the artifacts of one tool/domain used by that user. The workspaces are organized in a tree of inheritance levels, where each workspace can have one parent workspace and several child workspaces. The data in a workspace is private, i.e., only visible in that specific workspace, until the user commits their changes, making them visible in the parent workspace. Conversely, changes in a parent workspace can be pulled into a child workspace. By default, committing and updating are manually triggered by the user, but both actions can be automated so that they are performed as soon as a change is detected.
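A minimal Python sketch of the workspace tree follows, assuming that each workspace keeps a private copy of the data plus a set of uncommitted changes. `Workspace`, `commit`, `pull`, and the `auto_commit`/`auto_update` flags are hypothetical names chosen for illustration; the sketch does not model conflict resolution, and letting local uncommitted changes win over pulled values is an assumption.

```python
from typing import Any, Dict, List, Optional


class Workspace:
    """Hypothetical workspace tree with private data, commit, and pull."""

    def __init__(self, name: str, parent: Optional["Workspace"] = None,
                 auto_commit: bool = False, auto_update: bool = False) -> None:
        self.name = name
        self.parent = parent
        self.children: List["Workspace"] = []
        self.data: Dict[str, Any] = dict(parent.data) if parent else {}
        self.pending: Dict[str, Any] = {}  # private, uncommitted changes
        self.auto_commit = auto_commit
        self.auto_update = auto_update
        if parent is not None:
            parent.children.append(self)

    def set(self, key: str, value: Any) -> None:
        self.data[key] = value
        self.pending[key] = value
        if self.auto_commit:
            self.commit()

    def commit(self) -> None:
        # Make private changes visible in the parent workspace.
        if self.parent is not None and self.pending:
            changes, self.pending = self.pending, {}
            self.parent._receive(changes, source=self)

    def pull(self) -> None:
        # Fetch the parent's state; local uncommitted changes take precedence.
        if self.parent is not None:
            for key, value in self.parent.data.items():
                if key not in self.pending:
                    self.data[key] = value

    def _receive(self, changes: Dict[str, Any], source: "Workspace") -> None:
        self.data.update(changes)
        self.pending.update(changes)  # the parent can commit them further up
        for child in self.children:
            if child is not source and child.auto_update:
                child.pull()
        if self.auto_commit:
            self.commit()
```

In this sketch, a collaborator with `auto_update=True` sees a sibling's change as soon as it is committed, while one with the default settings sees it only after calling `pull`.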

We find that this setup reduces the intrusiveness of automatic propagation enough for the benefits of this propagation trigger (e.g., chain propagation) to outweigh its downsides.Footnote 11 Specifically, each collaborator can use a separate workspace that is a child of a workspace managed by the team manager. The link propagation triggered by a change becomes visible to the user’s collaborators only after the change is committed and the collaborators pull the changes from the parent workspace. Each team member can choose the level of intrusiveness they prefer through the automation settings for the committing and updating actions mentioned above. Therefore, in the following, we consider only automatic propagation links in our evaluation.

4.3.4 Implementation of links

We have extended the DesignSpace meta-metamodel to include the linking metamodel that specifically targets reactive links for our solution, presented on the right side of Fig. 2. These links are represented by specialized instances that can augment and enrich the metamodels uploaded by the tool adapters. Linking instances have a consistent and unified model for all use cases. The instances are of the type Link and are depicted in Fig. 5. This figure also illustrates the link using our motivating example (discussed in Sect. 2).

As mentioned in the solution description, the aim of the links is to ensure granularity by linking property values directly. Each linked property value is uniquely identified by an instance of Property. Additionally, for each of the two ends of the link, the operator and operation fields store how the link behaves, while direction and trigger complete the instance. The IsConsistent fields store the current consistency status of each link instance.
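The link structure described above can be summarized as a small data model. The following Python dataclasses are a sketch that may differ from the schema shown in Fig. 5: the field names, the `Direction` and `Trigger` enumerations, and the textual form of `operation` are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Direction(Enum):
    BOTH = "both"
    LEFT_TO_RIGHT = "left_to_right"
    RIGHT_TO_LEFT = "right_to_left"


class Trigger(Enum):
    AUTOMATIC = "automatic"
    MANUAL = "manual"


@dataclass(frozen=True)
class PropertyRef:
    # Identifies one property value: which instance, which property.
    instance_id: str
    property_name: str


@dataclass
class LinkEnd:
    prop: PropertyRef
    operator: Optional[str] = None   # e.g. "=" (assignment) or "<=" (comparison); None = blank
    operation: Optional[str] = None  # transformation expression as text (syntax hypothetical)
    is_consistent: bool = True


@dataclass
class Link:
    left: LinkEnd
    right: LinkEnd
    direction: Direction = Direction.BOTH
    trigger: Trigger = Trigger.AUTOMATIC

    def consistent(self) -> bool:
        # The link is consistent only if both ends report consistency.
        return self.left.is_consistent and self.right.is_consistent
```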

Engineers can also specify simple transformations to be applied during propagation. These transformations are executed as the first step when a relevant change occurs. To facilitate this process, we use ARL [55], which we also use for unit conversions during propagation.

To establish a new link, engineers choose the relevant instances and properties to be linked, along with the operators and simple transformations. These options are available for selection via a simple prototype graphical user interface (GUI). A screenshot of the GUI is shown in Fig. 6. Once the user makes their selection from the form lists and inserts operations where necessary, the data is sent through a dedicated gRPC call to the server, where a new link instance is created.

Our solution also features a mechanism to prevent cycles from occurring in the link network. In order to achieve this, we have implemented the algorithm proposed by Tarjan et al. [104], which performs a depth-first search in the network to identify any cycles. We perform this search every time a new link is created. If a cycle is found, we reject the new link and do not allow the cycle to be closed. The prototype we use for the evaluation shows a warning when the link is rejected. While this solution can become quite time-intensive for long link chains, we have found these situations to be very rare in our scenarios.
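One way to realize this check is to run Tarjan’s strongly connected components algorithm on the link network after tentatively adding the new link, and to reject the link if any component contains a cycle. The Python sketch below assumes that each propagating direction of a link is a directed edge between property identifiers; how the prototype treats the two directions of a bidirectional link is not stated in the text, so they are not modeled here. `LinkNetwork` and `try_add_link` are hypothetical names.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Set


def tarjan_scc(graph: Dict[Hashable, Set[Hashable]]) -> List[List[Hashable]]:
    """Tarjan's algorithm: strongly connected components via one DFS pass."""
    counter = 0
    index: Dict[Hashable, int] = {}
    lowlink: Dict[Hashable, int] = {}
    stack: List[Hashable] = []
    on_stack: Set[Hashable] = set()
    components: List[List[Hashable]] = []

    def visit(v: Hashable) -> None:
        nonlocal counter
        index[v] = lowlink[v] = counter
        counter += 1
        stack.append(v)
        on_stack.add(v)
        for w in graph.get(v, ()):
            if w not in index:
                visit(w)
                lowlink[v] = min(lowlink[v], lowlink[w])
            elif w in on_stack:
                lowlink[v] = min(lowlink[v], index[w])
        if lowlink[v] == index[v]:  # v is the root of a component
            component = []
            while True:
                w = stack.pop()
                on_stack.discard(w)
                component.append(w)
                if w == v:
                    break
            components.append(component)

    nodes = set(graph) | {w for targets in graph.values() for w in targets}
    for v in nodes:
        if v not in index:
            visit(v)
    return components


def has_cycle(graph: Dict[Hashable, Set[Hashable]]) -> bool:
    # A cycle exists iff some component has >1 node or a node links to itself.
    return any(len(c) > 1 or c[0] in graph.get(c[0], ()) for c in tarjan_scc(graph))


class LinkNetwork:
    """Directed propagation edges between property identifiers (hypothetical)."""

    def __init__(self) -> None:
        self.edges: Dict[Hashable, Set[Hashable]] = defaultdict(set)

    def try_add_link(self, source: Hashable, target: Hashable) -> bool:
        # Tentatively add the edge; reject it if it would close a cycle.
        if target in self.edges[source]:
            return True
        self.edges[source].add(target)
        if has_cycle(self.edges):
            self.edges[source].discard(target)
            return False
        return True
```

For example, after adding links from `req.length` to `calc.length` and from `calc.length` to `cad.length`, an attempt to add a link from `cad.length` back to `req.length` returns `False` and leaves the network unchanged. Re-running the full search on every insertion costs time linear in the size of the network, which matches the observation above that long link chains make the check more expensive.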

Additionally, two of our scenarios deal extensively with numerical values. However, we noticed that in most cases, the propagation links between tools with different levels of precision are unidirectional or asymmetrical. Combined with the cycle prevention, this makes floating-point precision differences a largely negligible threat to our evaluation.

4.3.5 The link reaction process

The execution of actions related to a reactive link is triggered by a change in one of the property values it connects. It goes through the following steps:

1. The change notification is received, and the changed property value is extracted from it.
2. The changed value is converted to the standard unit of measurement, if a unit is specified, e.g., a value in cm is converted to m.
3. The operation is performed, if one is specified.
4. The operator is checked to determine whether the link propagates the value (assignment) or checks a constraint (comparison); a blank operator is disregarded.
5. For propagation, the value is converted to the linked property’s unit and then set as the corresponding value, unless the two values are already equal.
6. For constraint checking, the opposite value is also converted to the standard unit, and the two values are compared. The result of the comparison is stored in the corresponding consistency flag.
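The six steps can be sketched as a single Python function that handles one end of a link. This is an illustration under explicit assumptions: the unit table, the `Value` and `LinkEnd` classes, the `"assign"` operator name, and the use of Python callables in place of ARL transformations are all hypothetical, and only a few length and pressure units are covered.

```python
import operator as op
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical factors to each dimension's standard unit (metre for length, bar for pressure).
TO_STANDARD = {"m": 1.0, "cm": 0.01, "mm": 0.001, "bar": 1.0}
COMPARISONS = {"==": op.eq, "<=": op.le, ">=": op.ge, "<": op.lt, ">": op.gt}


@dataclass
class Value:
    value: float
    unit: Optional[str] = None


@dataclass
class LinkEnd:
    operator: Optional[str]  # "assign", a key of COMPARISONS, or None (blank)
    operation: Optional[Callable[[float], float]] = None
    is_consistent: bool = True


def to_standard(v: Value) -> float:
    return v.value if v.unit is None else v.value * TO_STANDARD[v.unit]


def from_standard(x: float, unit: Optional[str]) -> float:
    return x if unit is None else x / TO_STANDARD[unit]


def react(changed: Value, opposite: Value, end: LinkEnd) -> None:
    # Step 1 (decoding the notification) is assumed done: `changed` holds the new value.
    x = to_standard(changed)               # step 2: convert to the standard unit
    if end.operation is not None:          # step 3: apply the optional operation
        x = end.operation(x)
    if end.operator is None:               # step 4: a blank operator is ignored
        return
    if end.operator == "assign":           # step 5: propagation
        new_value = from_standard(x, opposite.unit)
        if opposite.value != new_value:
            opposite.value = new_value
    else:                                  # step 6: constraint check
        other = to_standard(opposite)
        end.is_consistent = COMPARISONS[end.operator](x, other)
```

For instance, a diameter of 5 cm linked with `LinkEnd("assign")` to a property kept in metres sets the opposite value to about 0.05, while a computed pressure of 170 bar checked with `"<="` against a 160 bar requirement clears the consistency flag.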

5 Results and discussion

After conducting our evaluation study, we were able to assess the feasibility, flexibility, and performance of our solution. To accomplish this, we established reactive links for three engineering scenarios. These links connect all related artifact properties according to requirements and consistency rules. In the following sections, we present each use case we identified, along with practical examples from the scenarios. Additionally, we examine how these links can be applied to various types of property values. Furthermore, we discuss the impact of the links on memory consumption and execution time, taking into account different link distributions. By doing so, we provide a comprehensive analysis of the benefits and potential challenges of incorporating these reactive links into our solution.

5.1 RQ1-Feasibility

In this section, we analyze how our solution handles seven different cases, which demonstrate the feasibility of our reactive links.

5.1.1 One-to-one bidirectional propagation

Our first case is the most prevalent one in our scenarios. It involves connecting two property values and bidirectionally propagating any change made to either of them. An example can be found in the hydraulic actuator scenario, where the piston cylinder’s diameter must remain consistent between the TechCalc computations and the SolidWorks graphical design. To achieve this, we connected the two properties using a link with an assignment operator in both directions. As a result, any change to the cylinder diameter in either the computational or the graphical model is immediately propagated to the other end of the link.
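The following Python sketch illustrates one-to-one bidirectional propagation with an assignment in each direction. It is simplified (synchronous, no units, hypothetical class `LinkedProperty`), and the diameter values in the usage note are invented for illustration. In this sketch, the equality check from step 5 of the reaction process is what stops the update from bouncing back and forth between the two ends.

```python
from typing import Callable, List


class LinkedProperty:
    """Hypothetical property value with outgoing assignment links."""

    def __init__(self, name: str, value: float) -> None:
        self.name = name
        self.value = value
        self.listeners: List[Callable[[float], None]] = []

    def set(self, value: float) -> None:
        if value == self.value:
            return  # already equal: nothing to propagate
        self.value = value
        for notify in self.listeners:
            notify(value)


def link_bidirectional(a: LinkedProperty, b: LinkedProperty) -> None:
    # One assignment operator per direction.
    a.listeners.append(b.set)
    b.listeners.append(a.set)
```

With `calc` and `cad` both at 40.0 and linked, `cad.set(50.0)` updates `calc` to 50.0, and the echo back to `cad` stops because the values are already equal.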

5.1.2 One-to-one constraint checking

A similar situation arises when checking the consistency of the relationship between two property values. In the example detailed in Sect. 2, concerning the hydraulic actuator scenario, the requirement constraint on the piston chamber pressure can be linked to the computation’s value using comparison operators in both directions of the link. Once this link is set up, if a developer unintentionally sets the piston chamber pressure too high in the computation, the issue is flagged in both of the involved tools. Similarly, if the requirement changes, the link is triggered to check that the value set in the computation still complies with the requirement.

5.1.3 One-to-one unidirectional or asymmetrical linking

In certain situations, the requirements specify precise values to be used in other tools. In such cases, any change in the specification should be propagated to the other tools using the corresponding value, while the specification itself should not be altered via link propagation. To address this, our solution supports unidirectional links. Such a link is established in the same way as a bidirectional propagation link, except that we only add the assignment operator for the direction that should propagate; the other direction’s operator is left blank and disregarded. Alternatively, we can use asymmetrical links by setting the operator of the other direction to equals. In this case, modifications to the specification are propagated, and any change in the implementation that violates the constraint is detected as an inconsistency.

A similar situation is captured in the Agricultural machines scenario, where the communication between the hardware and software components of the machines is ensured through interfaces with strict naming conventions. As previously mentioned, the GitHub plugin does not push any changes back into GitHub, so the software interfaces are effectively final. Therefore, a link ensuring the consistency of the interface attribute names would use a unidirectional or asymmetrical setup. Any change in the software interface is propagated into the Visio representation, while changes in the Visio representation are checked for consistency against the GitHub data.
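An asymmetrical link of this kind can be sketched in Python as follows: the source-to-target direction assigns, while the target-to-source direction only compares. `Prop`, `AsymmetricLink`, and the interface names in the usage note are hypothetical, and the sketch assumes plain string equality as the consistency rule for attribute names.

```python
from dataclasses import dataclass


@dataclass
class Prop:
    value: str


class AsymmetricLink:
    """Hypothetical asymmetric link: assignment from source, equality check on target."""

    def __init__(self, source: Prop, target: Prop) -> None:
        self.source = source  # e.g. an interface attribute name read from GitHub
        self.target = target  # e.g. the same name in the Visio diagram
        self.is_consistent = source.value == target.value

    def on_source_change(self, value: str) -> None:
        # Assignment direction: the source value overwrites the target.
        self.source.value = value
        self.target.value = value
        self.is_consistent = True

    def on_target_change(self, value: str) -> None:
        # Equals direction: the source is never modified, only compared.
        self.target.value = value
        self.is_consistent = value == self.source.value
```

If the Visio name is edited to `speedSensorIn` while GitHub still holds `SpeedSensorIn`, `is_consistent` becomes `False` and the GitHub value is left untouched.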

5.1.4 Links with operations

Additional use cases arise when values need to be slightly altered instead of being propagated directly. Consider the robotic arm scenario, where the minimum arm length is computed using TechCalc based on other worksheet parameters. However, the actual arm length used in the graphical model should be 20% larger than the calculated value. In such instances, the engineers set the links between these corresponding property values similarly to previous cases, but with a notable modification. Each link now specifies the required operation to compute the desired value in each direction.
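The arm-length example can be sketched with a link that applies an operation in each direction: multiplying by 1.2 towards the graphical model and dividing by 1.2 back towards the computation. The Python classes and the starting values are hypothetical. Using `math.isclose` instead of exact equality is a choice of this sketch, made so that the round-off from multiplying and dividing by 1.2 does not keep the two ends updating each other.

```python
import math
from typing import Callable, List, Tuple


class Property:
    """Hypothetical property value with outgoing links that apply an operation."""

    def __init__(self, value: float) -> None:
        self.value = value
        self.outgoing: List[Tuple["Property", Callable[[float], float]]] = []

    def set(self, value: float) -> None:
        # isclose absorbs the round-off of multiplying and then dividing by the same factor.
        if math.isclose(value, self.value, rel_tol=1e-12):
            return
        self.value = value
        for target, operation in self.outgoing:
            target.set(operation(value))


def link_with_operations(a: Property, b: Property,
                         a_to_b: Callable[[float], float],
                         b_to_a: Callable[[float], float]) -> None:
    a.outgoing.append((b, a_to_b))
    b.outgoing.append((a, b_to_a))


MARGIN = 1.2                  # the graphical arm length is 20% larger than the computed minimum
min_length = Property(1.0)    # TechCalc result in m (hypothetical value)
model_length = Property(1.2)  # SolidWorks arm length in m (hypothetical value)
link_with_operations(min_length, model_length,
                     lambda x: x * MARGIN, lambda x: x / MARGIN)
```

Setting the computed minimum to 0.5 updates the model length to about 0.6; setting the model length to 1.8 updates the minimum to about 1.5.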

5.1.5 One-to-many constraint checking

The previously mentioned use cases primarily focused on individual links. It is important to note, however, that links can interact with one another or be combined to yield more intricate results. An example demonstrating this concept is verifying whether a value falls within a specific interval specified in the requirements.

To address this use case in our solution, we create a pair of comparison links corresponding to the lower and upper bounds of the interval. For example, in the hydraulic actuator scenario, the characteristic coefficient of the piston cylinder computed in a worksheet must remain within a predefined interval for the specific component in use. The engineers use two links to connect this result to the required interval boundaries. Any deviation from the specified range results in an inconsistency being flagged. Furthermore, because each bound has its own link, the developer is immediately notified whether the value is too low or too high.
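A minimal Python sketch of the interval check with two comparison links is shown below. `BoundLink` and `check_interval` are hypothetical names, the bounds are inclusive by assumption, and the numeric bounds in the usage note are illustrative rather than values from the scenario.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BoundLink:
    """Hypothetical comparison link between a computed value and one interval bound."""
    bound: float
    is_lower: bool
    is_consistent: bool = True

    def check(self, value: float) -> bool:
        # Inclusive bounds are an assumption of this sketch.
        self.is_consistent = value >= self.bound if self.is_lower else value <= self.bound
        return self.is_consistent


def check_interval(value: float, lower: BoundLink, upper: BoundLink) -> List[str]:
    # Each link reports separately, so the result says which bound is violated.
    problems = []
    if not lower.check(value):
        problems.append("too low")
    if not upper.check(value):
        problems.append("too high")
    return problems
```

With bounds 0.8 and 1.2, a coefficient of 0.5 yields `["too low"]` and 1.5 yields `["too high"]`.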

5.1.6 One-to-many propagation

In our solution, links can also realize more intricate use cases with one-to-many propagation paths. An example can be observed in the hydraulic actuator scenario, where the length of the piston cylinder is explicitly defined in the requirements and used in both the TechCalc and SolidWorks models. To establish this one-to-many connection, we create a link between the requirement and the corresponding property in each of the two models. Consequently, whenever the requirement changes, the change is automatically propagated to both models, reducing the update to a single step. This synchronization ensures that all relevant components stay consistent with the most recent requirement adjustments.
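One-to-many propagation can be sketched as a breadth-first traversal of assignment links starting from the changed property. In this Python illustration, the link map and the property identifiers (e.g., `req.length`) are hypothetical, and values that are already equal are skipped, as in step 5 of the reaction process.

```python
from collections import deque
from typing import Dict, Iterable, List


def propagate(values: Dict[str, float], links: Dict[str, Iterable[str]],
              changed: str, new_value: float) -> List[str]:
    """Set `changed` and push the value along assignment links, breadth-first."""
    values[changed] = new_value
    updated = [changed]
    queue = deque([changed])
    while queue:
        source = queue.popleft()
        for target in links.get(source, ()):
            if values.get(target) != values[source]:  # skip already-equal values
                values[target] = values[source]
                updated.append(target)
                queue.append(target)
    return updated
```

With `links = {"req.length": ["calc.length", "cad.length"]}`, changing `req.length` updates both model properties in one call and returns `["req.length", "calc.length", "cad.length"]`.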

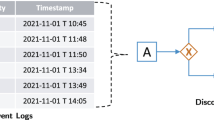

5.1.7 Chain of propagations