Abstract

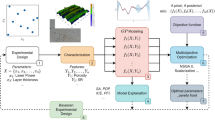

Adhesive joints are increasingly popular in industrial applications because of their beneficial characteristics, including a high strength-to-weight ratio, design flexibility, limited stress concentrations, planar force transfer, good damage tolerance, and fatigue resistance. However, finding the best process parameters for adhesive bonding can be challenging. The optimization problem is inherently multi-objective: it aims to maximize break strength while minimizing cost, subject to constraints that rule out visual damage to the materials and adhesion-related failures in stress tests. Additionally, testing the same process parameters several times may yield different break strengths, so the objective evaluations are noisy. Physical experiments in a laboratory setting are costly, and traditional evolutionary approaches such as genetic algorithms are unsuitable because they require a large number of experiments for evaluation. Bayesian optimization is suitable in this context, but few methods simultaneously consider the optimization of multiple noisy objectives and constraints. This study applies advanced learning techniques to emulate the objective and constraint functions based on limited experimental data. These emulators are incorporated into a Bayesian optimization framework, which efficiently detects Pareto-optimal process configurations under strict budget constraints.

Data availability

The data that support the findings of this study are available from the authors but restrictions apply to the availability of these data, which were used under license from Flanders Make vzw for the current study, and so are not publicly available. Data are, however, available from the authors upon reasonable request and with permission from the aforementioned institution.

Notes

Other noise factors could not be controlled in the simulator, so they are not further discussed.

References

Brockmann W, Geiß PL, Klingen J, Schröder KB (2008) Adhesive bonding: materials, applications and technology. Wiley, New York

Cavezza F, Boehm M, Terryn H, Hauffman T (2020) A review on adhesively bonded aluminium joints in the automotive industry. Metals. https://doi.org/10.3390/met10060730

Licari JJ, Swanson DW (2011) Adhesives technology for electronic applications: materials, processing, reliability. William Andrew, Norwich

Correia S, Anes V, Reis L (2018) Effect of surface treatment on adhesively bonded aluminium–aluminium joints regarding aeronautical structures. Eng Fail Anal 84:34–45. https://doi.org/10.1016/j.engfailanal.2017.10.010

da Silva LFM, Ochsner A, Adams RD, Spelt JK (2011) Handbook of adhesion technology. Springer, Berlin

Pocius AV (2021) Adhesion and adhesives technology: an introduction. Carl Hanser Verlag GmbH Co KG, Munich

Budhe S, Banea MD, De Barros S, Da Silva LFM (2017) An updated review of adhesively bonded joints in composite materials. Int J Adhes Adhes 72:30–42

Habenicht G (2008) Applied adhesive bonding: a practical guide for flawless results. Wiley, West Sussex

Chiarello F, Belingheri P, Fantoni G (2021) Data science for engineering design: state of the art and future directions. Comput Ind 129:103447. https://doi.org/10.1016/j.compind.2021.103447

Zhou A, Qu B-Y, Li H, Zhao S-Z, Suganthan PN, Zhang Q (2011) Multiobjective evolutionary algorithms: a survey of the state of the art. Swarm Evolut Comput 1(1):32–49. https://doi.org/10.1016/j.swevo.2011.03.001

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evol Comput 6(2):182–197. https://doi.org/10.1109/4235.996017

Corbett M, Sharos PA, Hardiman M, McCarthy CT (2017) Numerical design and multi-objective optimisation of novel adhesively bonded joints employing interlocking surface morphology. Int J Adhes Adhes 78:111–120. https://doi.org/10.1016/j.ijadhadh.2017.06.002

Labbé S, Drouet J-M (2012) A multi-objective optimization procedure for bonded tubular-lap joints subjected to axial loading. Int J Adhes Adhes 33:26–35. https://doi.org/10.1016/j.ijadhadh.2011.09.005

Brownlee AE, Wright JA (2015) Constrained, mixed-integer and multi-objective optimisation of building designs by NSGA-II with fitness approximation. Appl Soft Comput 33:114–126. https://doi.org/10.1016/j.asoc.2015.04.010

Jain H, Deb K (2013) An evolutionary many-objective optimization algorithm using reference-point based nondominated sorting approach, part II: handling constraints and extending to an adaptive approach. IEEE Trans Evol Comput 18(4):602–622

Li K, Chen R, Fu G, Yao X (2018) Two-archive evolutionary algorithm for constrained multiobjective optimization. IEEE Trans Evol Comput 23(2):303–315

Gao Y-L, Qu M (2012) Constrained multi-objective particle swarm optimization algorithm. In: International Conference on Intelligent Computing. Springer, Berlin, pp 47–55

Chugh T, Sindhya K, Hakanen J, Miettinen K (2017) A survey on handling computationally expensive multiobjective optimization problems with evolutionary algorithms. Soft Comput. https://doi.org/10.1007/s00500-017-2965-0

Frazier PI (2018) Bayesian optimization. In: Recent advances in optimization and modeling of contemporary problems. Informs, pp 255–278

Rojas Gonzalez S, van Nieuwenhuyse I (2020) A survey on kriging-based infill algorithms for multiobjective simulation optimization. Comput Oper Res 116:104869. https://doi.org/10.1016/j.cor.2019.104869

Hernández-Lobato JM, Gelbart M, Hoffman M, Adams R, Ghahramani Z (2015) Predictive entropy search for Bayesian optimization with unknown constraints. In: International Conference on Machine Learning. PMLR, pp 1699–1707

Gelbart MA, Adams RP, Hoffman MW, Ghahramani Z et al (2016) A general framework for constrained Bayesian optimization using information-based search. J Mach Learn Res 17(160):1–53

Garrido-Merchán EC, Hernández-Lobato D (2019) Predictive entropy search for multi-objective Bayesian optimization with constraints. Neurocomputing 361:50–68

Feliot P, Bect J, Vazquez E (2017) A Bayesian approach to constrained single-and multi-objective optimization. J Global Optim 67(1):97–133

Rojas Gonzalez S, Jalali H, Van Nieuwenhuyse I (2020) A multiobjective stochastic simulation optimization algorithm. Eur J Oper Res 284(1):212–226. https://doi.org/10.1016/j.ejor.2019.12.014

Miettinen K (1999) Nonlinear multiobjective optimization, vol 12. Springer, Berlin

Forrester A, Sobester A, Keane A (2008) Engineering design via surrogate modelling (a practical guide), 1st edn. Wiley, West Sussex

Horn D, Dagge M, Sun X, Bischl B (2017) First investigations on noisy model-based multi-objective optimization. In: International Conference on Evolutionary Multi-Criterion Optimization. Springer, Berlin, pp 298–313. https://doi.org/10.1007/978-3-319-54157-0_21

Loeppky JL, Sacks J, Welch W (2009) Choosing the sample size of a computer experiment: a practical guide. Technometrics 51(4):366–376

Zeng Y, Cheng Y, Liu J (2022) An efficient global optimization algorithm for expensive constrained black-box problems by reducing candidate infilling region. Inf Sci 609:1641–1669

Jones DR, Schonlau M, Welch WJ (1998) Efficient global optimization of expensive black-box functions. J Global Optim 13(4):455–492. https://doi.org/10.1023/A:1008306431147

Williams CK, Rasmussen CE (2006) Gaussian processes for machine learning, vol 2. MIT Press, Cambridge

Knowles J (2006) ParEGO: a hybrid algorithm with on-line landscape approximation for expensive multiobjective optimization problems. IEEE Trans Evol Comput 10(1):50–66. https://doi.org/10.1109/TEVC.2005.851274

Emmerich MT, Deutz AH, Klinkenberg JW (2011) Hypervolume-based expected improvement: monotonicity properties and exact computation. In: 2011 IEEE Congress of Evolutionary Computation (CEC). IEEE, pp 2147–2154

Couckuyt I, Deschrijver D, Dhaene T (2014) Fast calculation of multiobjective probability of improvement and expected improvement criteria for Pareto optimization. J Global Optim 60(3):575–594

Zhan D, Xing H (2020) Expected improvement for expensive optimization: a review. J Global Optim 78(3):507–544. https://doi.org/10.1007/s10898-020-00923-x

Daulton S, Balandat M, Bakshy E (2021) Parallel Bayesian optimization of multiple noisy objectives with expected hypervolume improvement. Adv Neural Inform Process Syst 34:2187–2200

Kleijnen JP (2018) Design and analysis of simulation experiments. Springer, Berlin

Ankenman B, Nelson BL, Staum J (2010) Stochastic kriging for simulation metamodeling. Oper Res 58(2):371–382. https://doi.org/10.1109/WSC.2008.4736089

Quan N, Yin J, Ng SH, Lee LH (2013) Simulation optimization via kriging: a sequential search using expected improvement with computing budget constraints. IIE Trans 45(7):763–780. https://doi.org/10.1080/0740817X.2012.706377

Loka N, Couckuyt I, Garbuglia F, Spina D, Van Nieuwenhuyse I, Dhaene T (2022) Bi-objective Bayesian optimization of engineering problems with cheap and expensive cost functions. Eng Comput 39:1923–1933

Zitzler E, Thiele L (1998) Multiobjective optimization using evolutionary algorithms—a comparative case study. In: Parallel Problem Solving from Nature—PPSN V: 5th International Conference Amsterdam, The Netherlands September 27–30, 1998 Proceedings 5. Springer, Berlin, pp 292–301

Emmerich MT, Giannakoglou KC, Naujoks B (2006) Single- and multiobjective evolutionary optimization assisted by Gaussian random field metamodels. IEEE Trans Evol Comput 10(4):421–439

Daulton S, Balandat M, Bakshy E (2020) Differentiable expected hypervolume improvement for parallel multi-objective Bayesian optimization. Adv Neural Inform Process Syst 33:9851–9864

Koch P, Wagner T, Emmerich MT, Bäck T, Konen W (2015) Efficient multi-criteria optimization on noisy machine learning problems. Appl Soft Comput 29:357–370

Qin S, Sun C, Jin Y, Zhang G (2019) Bayesian approaches to surrogate-assisted evolutionary multi-objective optimization: a comparative study. In: 2019 IEEE Symposium Series on Computational Intelligence (SSCI), pp 2074–2080

Chugh T, Jin Y, Miettinen K, Hakanen J, Sindhya K (2016) A surrogate-assisted reference vector guided evolutionary algorithm for computationally expensive many-objective optimization. IEEE Trans Evol Comput 22(1):129–142

Sobester A, Forrester A, Keane A (2008) Engineering design via surrogate modelling: a practical guide. Wiley, West Sussex

Wilson J, Hutter F, Deisenroth M (2018) Maximizing acquisition functions for Bayesian optimization. In: Advances in Neural Information Processing Systems, vol 31. Curran Associates, Inc

Yarat S, Senan S, Orman Z (2021) A comparative study on PSO with other metaheuristic methods. Springer, Cham, pp 49–72

Li M, Yao X (2019) Quality evaluation of solution sets in multiobjective optimisation: a survey. ACM Comput Surv 52(2):26

López-Ibáñez M, Paquete L, Stützle T (2010) Exploratory analysis of stochastic local search algorithms in biobjective optimization. In: Experimental Methods for the Analysis of Optimization Algorithms. Springer, Berlin, pp 209–222. https://doi.org/10.1007/978-3-642-02538-9_9

Acknowledgements

This work was supported by the Flanders Artificial Intelligence Research Program (FLAIR), the Research Foundation Flanders (FWO Grant 1216021N), and Flanders Make vzw.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Appendices

Appendix A: Constrained expected improvement (CEI)

The Constrained Expected Improvement (CEI [36]) uses one ordinary kriging (OK) metamodel to approximate the expensive objective and one metamodel to approximate each expensive constraint independently. Then, for a constrained optimization problem with c constraints, the objective value of point \(\textbf{x}\) can be treated as a Gaussian random variable \({\mathcal {N}}\left( \widehat{y}(\textbf{x}), \widehat{s}(\textbf{x}) \right)\), and the ith constraint value of \(\textbf{x}\) can also be treated as a Gaussian random variable \({\mathcal {N}}\left( \widehat{g}_i(\textbf{x}), \widehat{e}_i(\textbf{x}) \right) , \; i=1, 2, \dots , c\). Here, \(\widehat{y}\) and \(\widehat{s}\) are the GP prediction and standard error of the objective function, respectively, and \(\widehat{g}_i\) and \(\widehat{e}_i\) are the GP prediction and standard error of the ith constraint function, respectively.

Then, from Eq. 2, we can transform the constraint and derive the Probability of Feasibility (PoF) as
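As a point of reference, the probability of feasibility commonly used in the CEI literature is the product of the per-constraint Gaussian probabilities. The sketch below assumes that each constraint is written as \(g_i(\textbf{x}) \le 0\) (an assumption made here; the exact form depends on Eq. 2) and that \(\Phi\) denotes the standard normal cumulative distribution function:

```latex
\[
\text{PoF}(\textbf{x}) = \prod_{i=1}^{c} \Phi\left( \frac{0 - \widehat{g}_i(\textbf{x})}{\widehat{e}_i(\textbf{x})} \right)
\]
```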

If the GPs modeling the objective and constraint functions are mutually independent, the CEI is obtained by combining the EI with the PoF (for consistency with our work, we used MEI instead of EI, with the predictors defined in Eqs. 12 and 13) as

and

Note that this equation differs from our proposed Eq. 19 in the derivation of the PoF. Figure 12 shows that our proposed cMEI-SK obtained, on average, better Pareto fronts (higher hypervolume values) than CEI. This suggests that considering the uncertainty predicted by the GP may lead the optimization to points that do not increase the hypervolume. This effect was more evident when the improvement was measured with MEI (fitting only one GP to the scalarized objectives).
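The way CEI combines an improvement term with the probability of feasibility can be illustrated with a short Python sketch. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses the classical EI for a minimization objective (the paper uses MEI instead), it assumes constraints of the form \(g_i(\textbf{x}) \le 0\), and all function names are hypothetical. The arguments stand in for the GP predictions \(\widehat{y}\), \(\widehat{s}\), \(\widehat{g}_i\), and \(\widehat{e}_i\) at a single candidate point.

```python
import math


def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def norm_pdf(z):
    """Standard normal probability density function."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def expected_improvement(y_best, y_hat, s_hat):
    """Classical EI for minimization (assumption: the paper uses MEI instead).

    y_best: best feasible objective value observed so far
    y_hat, s_hat: GP prediction and standard error at the candidate point
    """
    if s_hat <= 0.0:
        # No predictive uncertainty: the improvement is deterministic.
        return max(y_best - y_hat, 0.0)
    z = (y_best - y_hat) / s_hat
    return (y_best - y_hat) * norm_cdf(z) + s_hat * norm_pdf(z)


def probability_of_feasibility(g_hats, e_hats):
    """Product of P(g_i <= 0) over independent Gaussian constraint models."""
    pof = 1.0
    for g_hat, e_hat in zip(g_hats, e_hats):
        if e_hat <= 0.0:
            # Deterministic constraint prediction: feasible or not.
            pof *= 1.0 if g_hat <= 0.0 else 0.0
        else:
            pof *= norm_cdf(-g_hat / e_hat)
    return pof


def constrained_ei(y_best, y_hat, s_hat, g_hats, e_hats):
    """CEI as the product of EI and PoF (assumes independent GPs)."""
    return expected_improvement(y_best, y_hat, s_hat) * probability_of_feasibility(
        g_hats, e_hats
    )
```

For example, `constrained_ei(1.0, 1.0, 1.0, [0.0], [1.0])` multiplies an EI of about 0.399 by a PoF of 0.5, giving roughly 0.199. A candidate whose constraint prediction lies far inside the infeasible region receives a CEI close to zero, regardless of its predicted objective value.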

Appendix B: Wilcoxon test results

See Fig. 13.

About this article

Cite this article

Morales-Hernández, A., Rojas Gonzalez, S., Van Nieuwenhuyse, I. et al. Bayesian multi-objective optimization of process design parameters in constrained settings with noise: an engineering design application. Engineering with Computers (2024). https://doi.org/10.1007/s00366-023-01922-8

DOI: https://doi.org/10.1007/s00366-023-01922-8