Abstract

In this paper we deal with parametric estimation of the copula in the case of missing data. The data items with the same pattern of complete and missing data are combined into a subset. This approach corresponds to the MCAR model for missing data. We construct a specific Cramér–von Mises statistic as a sum of such statistics for the several missing data patterns. The minimization of the statistic gives the estimators for the parameters. We prove asymptotic normality of the parameter estimators and of the Cramér–von Mises statistic.


1 Introduction

When dealing with data from applications, especially from ecology or reliability, one observes that missing values occur rather often. Unfortunately, there is no simple rule for handling missing data in the context of multivariate distributions. General strategies for statistical inference in the presence of missing data are discussed in the popular book by Little and Rubin (2019), see also Graham (2009). In the maximum-likelihood methodology, the EM-algorithm is the method of choice and provides good results in many cases. Many approaches dealing with missing data work by replacing missing data values with plausible values. These imputation methods are discussed in a survey paper by Van Buuren et al. (2006). Imputation is typically based on the idea of calculating the missing components by using their conditional distribution given the observed components.

As is well known, the copula model together with the (one-dimensional) marginal distribution functions define the multivariate distribution functions. A vast number of papers deal with the estimation of one-dimensional distributions. In this paper, the focus is on the parametric estimation of the copula. Here we do not consider imputation methods. We regard the data as given in a special structure, where the set of multivariate data is divided into a certain number of subsets of data with the same pattern of missingness. This approach corresponds to the MCAR model (missing completely at random model) in which the missingness of data items is independent of the data values. The several models for datasets including missing data are explained in Van Buuren et al. (2006) and Little and Rubin (2019), among others. For the data structure under consideration, an adapted Cramér–von Mises statistic is constructed which serves as an approximation measure and describes the discrepancy between the model and the data. Each pattern of missing data leads to a certain Cramér–von Mises statistic. The final statistic is then established as a linear combination of these partial statistics. The Cramér–von Mises statistic has numerical advantages over the Kullback–Leibler statistic (maximum-likelihood estimation) since the computation of the density is not required. The aim of the paper is to prove theorems on the almost sure convergence and on the asymptotic normality of the minimum distance estimators for the copula.

Because of the complexity of the multivariate distribution, we cannot expect that the underlying distribution of the sample vectors coincides with the hypothesis distribution. Thus, it makes sense to assume that the underlying copula does not belong to the parametric family under consideration. The reader finds an extensive discussion about goodness-of-approximation in the one-dimensional case in Liebscher (2014). Considering approximate estimators is another aspect of this paper. Since as a rule there is no explicit formula for the estimators, we have to evaluate the estimator for the copula parameters by a numerical algorithm and obtain the estimator as a solution of an optimization problem only up to a certain (small) error. These approximate estimators are the subject of the considerations in Sect. 5.

Concerning the estimation of the parameters of the copula, two types of estimators are studied mostly in the literature: maximum pseudo-likelihood estimators and minimum distance estimators. In our approach, minimum distance estimators on the basis of Cramér–von Mises divergence are the appropriate choice. In the case of complete data, minimum distance estimators for the parameters of copulas were examined in the papers by Tsukahara (2005) and by the author (2009). The asymptotic behaviour of likelihood estimators was investigated in papers by Genest and Rivest (1993), Genest et al. (1995), Chen and Fan (2005), and Hofert et al. (2012), among others. Joe (2005) published results on the asymptotic behaviour of two-stage estimation procedures. An application of the EM-algorithm to fitting Gaussian copulas in the presence of missing data can be found in the paper by Kertel and Pauly (2022). Rather few papers deal with estimation of copula parameters in the context of missing data. In the paper by Di Lascio et al. (2015), the authors studied the imputation method for parametric copula estimation. Hamori et al. (2019) considered the estimation of copula parameters in the missing at random model using only complete cases to estimate the actual parameter. In Wang et al. (2014), the estimation of the parameter of Gaussian copulas was examined under the missing completely at random assumption applying a special method tailored only for Gaussian copulas.

The paper is organized as follows: In Sect. 2 we introduce the data structure and the distribution functions of the subsets. Section 3 introduces the empirical marginal distribution functions appropriate for the data structure and provides a law of the iterated logarithm for them. The Cramér–von Mises divergence and its estimator are considered in Sect. 4. Section 5 provides the definition of approximate minimum distance estimators in our context. Moreover, we give the results on almost sure convergence and on asymptotic normality of the estimators of the copula parameters. The problem of goodness of approximation is discussed there, too. Section 6 contains a small simulation study. Section 7 provides the computational results of a data example. The asymptotic normality result of the Cramér–von Mises divergence can be found in Sect. 8. The proofs of the results are located in Sect. 9.

2 Data structure

Let \({\textbf{X}}=(X^{(1)},\ldots ,X^{(d)})^{T}\) be a d-dimensional random vector representing the data without missing values. In the case of a complete observation, we denote the joint distribution function by H and the marginal distribution functions of \(X^{(j)}\) by \(F_{1},\ldots ,F_{d}\). Assume that \(F_{j}\) is continuous (\(j=1,\ldots ,d\)). According to Sklar’s theorem (Sklar 1959), we have

$$\begin{aligned} H(x_{1},\ldots ,x_{d})=C(F_{1}(x_{1}),\ldots ,F_{d}(x_{d}))\quad \text {for }x_{1},\ldots ,x_{d}\in {\mathbb {R}}, \end{aligned}$$

where \(C:[0,1]^{d}\rightarrow [0,1]\) is the uniquely determined d-dimensional copula. The reader can find the theory of copulas in the popular monographs by Joe (1997) and by Nelsen (2006).

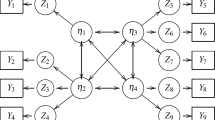

Next we describe the structure of the data, including missing values. The sample breaks down into m subsets of data items with the same pattern of missing data, where m does not depend on the sample size n. Every pattern is modeled as a binary nonrandom vector \({\textbf{b}}=(b_{1},\ldots ,b_{d})^{T}\in \{0,1\}^{d}\), called the missing indicator vector, which has at least two components equal to 1. \(b_{j}=1\) means that the j-th component is observed whereas \(b_{j}=0\) means that the j-th component is missing. Now let \( {\textbf{b}}^{(1)},\ldots ,{\textbf{b}}^{(m)}\in \{0,1\}^{d}\) be the pattern vectors of the data subsets. \(J_{\mu }=\{l:b_{l}^{(\mu )}=1\}\) denotes the index set of the observed (non-missing) components. To give an example, the pattern \({\textbf{b}}^{(\mu )}=(0,1,0,1)^{T}\) of data subset \(\mu \) means that the data items of this subset have a non-missing second component and a non-missing fourth one, whereas components 1 and 3 are missing (\(J_{\mu }=\{2,4\}\)). Let \({\textbf{1}}=(1,\ldots ,1)^{T}\in {\mathbb {R}}^{d}\).

\(n_{\mu }=n_{\mu }(n)\) is the non-random number of sample items in the subset \(\mu \). In this paper the crucial assumption is that for all data subsets, the distribution function of the data items coincides with the corresponding multivariate marginal distribution function resulting from H. More precisely, we assume that H is the underlying distribution function of the data, and therefore, the distribution function of data subset \(\mu \) is given by

$$\begin{aligned} H_{\mu }(y_{j},j\in J_{\mu })=H({\bar{\textbf{y}}}), \end{aligned}\tag{1}$$

where \({\bar{\textbf{y}}}_{l}=y_{l}\) for \(l\in J_{\mu }\), and \({\bar{\textbf{y}}} _{l}=\infty \) for \(l\notin J_{\mu }\). \((y_{j},j\in J_{\mu })\) denotes a vector of components of \({\textbf{y}}\in {\mathbb {R}}^{d}\) with ascending indices from \( J_{\mu }\). The data structure and the subset distribution functions are then as follows:

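\(d_{\mu }\) is the dimension of the data in the subset \(\mu \). Let C be the copula of distribution function H. The copulas of the subsets are determined by

$$\begin{aligned} C_{\mu }(u_{j},j\in J_{\mu })=C({\textbf{u}}\odot {\textbf{b}}^{(\mu )}+{\textbf{1}}-{\textbf{b}}^{(\mu )}) \end{aligned}$$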

for \({\textbf{u}}=(u_{1},\ldots ,u_{d})^{T}\in [0,1]^{d}\), where \( {\textbf{a}}\odot {\textbf{b}}=\hbox {diag}({\textbf{a}})~{\textbf{b}}\) is the Hadamard product of vectors \({\textbf{a}},{\textbf{b}}\in {\mathbb {R}}^{d}\). Define \({\bar{F}} ({\textbf{x}})=(F_{1}(x_{1}),\ldots ,F_{d}(x_{d}))^{T}\) for \({\textbf{x}} =(x_{1},\ldots ,x_{d})^{T}\). Then we have

In the case \({\textbf{b}}^{(\mu )}\ge {\textbf{b}}^{(\nu )}\), i.e. \(b_{j}^{(\mu )}\ge b_{j}^{(\nu )}\) for \(j=1,\ldots ,d\), the function \(\psi _{\nu \mu }\) selects the components of subset \(\mu \), which are also present in subset \( \nu \): \(\psi _{\nu \mu }(y_{j},j\in J_{\mu })=(y_{j},j\in J_{\nu })\). The function \({\bar{\psi }}_{l\mu }\) selects the component l of the corresponding full data vector from data of subset \(\mu \): \({\bar{\psi }}_{l\mu }(y_{j},j\in J_{\mu })=y_{l}\).
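The Hadamard-product notation used for the subset copulas can be made concrete with a short Python sketch: a vector \({\textbf{u}}\) and a pattern \({\textbf{b}}\) are mapped to \({\textbf{u}}\odot {\textbf{b}}+{\textbf{1}}-{\textbf{b}}\), so that missing coordinates are set to 1 before the full copula is evaluated. The function names and the choice of the independence copula as C are assumptions made purely for illustration and are not part of the paper.

```python
def embed(u, b):
    """Return u ⊙ b + (1 - b): observed coordinates keep their value,
    missing coordinates (b_j = 0) are replaced by 1."""
    return [uj * bj + (1 - bj) for uj, bj in zip(u, b)]


def independence_copula(u):
    """Independence copula Pi(u) = u_1 * ... * u_d (illustrative stand-in for C)."""
    prod = 1.0
    for uj in u:
        prod *= uj
    return prod


def subset_copula(copula, u, b):
    """Evaluate the subset copula as C(u ⊙ b + 1 - b) for the pattern b."""
    return copula(embed(u, b))


# Pattern (0, 1, 0, 1): only components 2 and 4 are observed.
b = [0, 1, 0, 1]
u = [0.3, 0.5, 0.9, 0.4]
embedded = embed(u, b)
value = subset_copula(independence_copula, u, b)
```

With the independence copula, the value depends only on the observed coordinates 0.5 and 0.4, which is the behaviour expected of a marginal copula.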

The usage of the missing indicators and the sets \(J_{\mu }\) is shown in an example.

Example

We consider \(d=5\) and \({\textbf{b}}^{(\mu )}=(1,0,1,1,0)^{T}\), which gives \(J_{\mu }=\{1,3,4\}\) and \(d_{\mu }=3\).

Further let \(\nu =2,\mu =3,J_{2}=\{2,4\},J_{3}=\{2,3,4\}\). Then \(\psi _{23}((y_{2},y_{3},y_{4})^{T})=(y_{2},y_{4})^{T}\), \({\bar{\psi }} _{43}((y_{2},y_{3},y_{4})^{T})=y_{4}\).
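The index sets and selection functions of this example can be reproduced with a few lines of Python. This is only a sketch: the helper names `observed_indices`, `psi` and `psi_bar` are hypothetical, and components are numbered from 1 as in the text.

```python
def observed_indices(b):
    """J = {l : b_l = 1}, using 1-based component numbers."""
    return [l for l, bl in enumerate(b, start=1) if bl == 1]


def psi(y_mu, J_mu, J_nu):
    """Select from a vector of subset mu the components present in subset nu.
    Requires J_nu to be contained in J_mu (i.e. b^(mu) >= b^(nu))."""
    if not set(J_nu) <= set(J_mu):
        raise ValueError("J_nu must be a subset of J_mu")
    lookup = dict(zip(J_mu, y_mu))
    return [lookup[l] for l in J_nu]


def psi_bar(y_mu, J_mu, l):
    """Select component l of the full data vector from a vector of subset mu."""
    return dict(zip(J_mu, y_mu))[l]


J = observed_indices([1, 0, 1, 1, 0])
selected = psi(["y2", "y3", "y4"], [2, 3, 4], [2, 4])
single = psi_bar(["y2", "y3", "y4"], [2, 3, 4], 4)
```

Here `J` is the index set of the pattern \((1,0,1,1,0)^{T}\), while `selected` and `single` correspond to \(\psi _{23}\) and \({\bar{\psi }}_{43}\) applied to \((y_{2},y_{3},y_{4})^{T}\).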

The requirements on the data following the MCAR model for missing data are summarized in Assumption \({\mathcal {A}}_{\text {MCAR}}\):

Assumption

\({\mathcal {A}}_{MCAR}\): The data structure of Table 1 is given. Moreover (1) is valid where \(J_{\mu }=\{l:b_{l}^{(\mu )}=1\}\).

This data structure is also present in the situation where m samples having the same underlying distribution are given. These samples may originate from several sources.

3 Empirical distribution functions

In this section we consider the empirical marginal distribution functions and their convergence properties. Let \({\tilde{n}}_{j}\) be the number of data items where the j-th component is present:

We introduce \({\bar{n}}_{\nu }\) to be the number of data items where at least the non-missing components of data subset \(\nu \) are present

The inequality \({\textbf{b}}^{(\mu )}\ge {\textbf{b}}^{(\nu )}\) means that data subset \(\mu \) has at least the non-missing components of data subset \(\nu \).
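The two counts can be computed directly from the pattern vectors and the subset sizes. The following Python sketch follows the verbal definitions above, i.e. it assumes that \({\tilde{n}}_{j}\) sums \(n_{\mu }\) over all subsets with \(b_{j}^{(\mu )}=1\) and that \({\bar{n}}_{\nu }\) sums \(n_{\mu }\) over all subsets with \({\textbf{b}}^{(\mu )}\ge {\textbf{b}}^{(\nu )}\). The function names and the subset sizes are hypothetical.

```python
def dominates(b_mu, b_nu):
    """True if b^(mu) >= b^(nu) componentwise."""
    return all(x >= y for x, y in zip(b_mu, b_nu))


def component_counts(patterns, sizes):
    """Number of data items in which component j is observed, for each j."""
    d = len(patterns[0])
    return [sum(n for b, n in zip(patterns, sizes) if b[j] == 1) for j in range(d)]


def pooled_counts(patterns, sizes):
    """For each pattern nu: number of items having at least its observed components."""
    return [
        sum(n for b, n in zip(patterns, sizes) if dominates(b, b_nu))
        for b_nu in patterns
    ]


# Two patterns in dimension 3: complete data, and third component missing.
patterns = [[1, 1, 1], [1, 1, 0]]
sizes = [50, 50]  # hypothetical subset sizes n_1, n_2
n_tilde = component_counts(patterns, sizes)
n_bar = pooled_counts(patterns, sizes)
```

In this configuration the first two components are observed in all 100 items and the third one in 50 items, while the bivariate pattern can borrow the complete items for its joint distribution function.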

Notice that \({\bar{\psi }}_{j\mu }({\textbf{Y}}_{\mu i})\) and \(\psi _{\nu \mu }( {\textbf{Y}}_{\mu i})\) have the distribution functions \(F_{j}\) and \(H_{\nu }\), respectively. Next we consider estimators for the marginal distributions and the joint distribution functions:

for \(z\in {\mathbb {R}},{\textbf{y}}\in {\mathbb {R}}^{d_{\nu }},\nu =1,\ldots ,m\). We pose the following assumption on \(n_{\mu }\).

Assumption

\({\mathcal {A}}_{n}\): For \(\mu =1,\ldots ,m\),

with constants \(\gamma _{\mu }\in (0,1]\). \(\square \)

For empirical distribution functions, the following law of the iterated logarithm holds true (cf. Kiefer 1961, for example).

Proposition 3.1

Suppose that Assumptions \({\mathcal {A}}_{MCAR}\) and \({\mathcal {A}}_{n}\) are fulfilled.

(a) Then we have

$$\begin{aligned} \max _{j=1,\ldots ,d}\sup _{t\in {\mathbb {R}}}\left| {\hat{F}}_{nj}(t)-F_{j}(t)\right| =O\left( \sqrt{\frac{\ln \ln n}{n}}\right) \ \ a.s. \end{aligned}$$

(b) Moreover,

$$\begin{aligned} \max _{\mu =1,\ldots ,m}\sup _{{\textbf{y}}\in {\mathbb {R}}^{d_{\mu }}}\left| {\hat{H}}_{n\mu }({\textbf{y}})-H_{\mu }({\textbf{y}})\right| =O\left( \sqrt{ \frac{\ln \ln n}{n}}\right) \ \ a.s. \end{aligned}$$

for \(n\rightarrow \infty \).
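The pooled empirical distribution functions appearing in Proposition 3.1 can be sketched in Python as follows. The sketch assumes that both estimators are plain relative frequencies: the marginal one uses every item in which component j is observed, and the joint one for pattern \(\nu \) uses every item that has at least the components observed under that pattern. The normalisation, the encoding of missing values as `None` and the function names are assumptions of this illustration.

```python
from bisect import bisect_right


def empirical_marginal(data, j):
    """Pooled empirical df of component j (0-based), using all rows where it is observed."""
    values = sorted(row[j] for row in data if row[j] is not None)

    def F(z):
        return bisect_right(values, z) / len(values)

    return F


def empirical_joint(data, b_nu):
    """Pooled empirical joint df for pattern b_nu, using every row that has
    at least the components observed under b_nu."""
    idx = [l for l, bl in enumerate(b_nu) if bl == 1]
    usable = [
        [row[l] for l in idx]
        for row in data
        if all(row[l] is not None for l in idx)
    ]

    def H(y):
        hits = sum(all(v <= t for v, t in zip(item, y)) for item in usable)
        return hits / len(usable)

    return H


# Four items in dimension 3; None marks a missing third component.
data = [[1.0, 2.0, 3.0], [2.0, 1.0, None], [3.0, 3.0, None], [0.5, 0.5, 1.0]]
F1 = empirical_marginal(data, 0)
F3 = empirical_marginal(data, 2)
H12 = empirical_joint(data, [1, 1, 0])
```

Note that `F3` is based on two items only, whereas `F1` and `H12` use all four items.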

4 Cramér–von Mises divergence

Let \({\mathcal {F}}=\{{\mathcal {C}}(\cdot \mid \theta )\}_{\theta \in \Theta }\) be a parametric family of copulas. \(\Theta \subset {\mathbb {R}}^{q}\) is the parameter space. In this paper we want to approximate the sample copula C by the family \({\mathcal {F}}\). For this purpose, we consider the Cramér–von Mises divergence as a measure for the discrepancy between the copula C and \({\mathcal {F}}\). Define the model copula for subset \(\mu \):

for \({\textbf{u}}\in [0,1]^{d},\theta \in \Theta ,\mu =1,\ldots ,m\). Let \({\bar{F}} _{\mu }^{*}(y_{j},j\in J_{\mu })=(F_{j}(y_{j}))_{j\in J_{\mu }}\), and \( {\check{F}}_{n\mu }^{*}(y_{j},j\in J_{\mu })=({\hat{F}}_{nj}(y_{j}))_{j\in J_{\mu }}\). \({\bar{F}}_{\mu }^{*}\) is the vector of the marginal distribution functions in subset \(\mu \), and \({\check{F}}_{n\mu }^{*}\) is its empirical counterpart. We introduce the population version of the divergence as

where \(H_{\mu }\) is as in (2). We pose the following assumption on \(w_{\mu }\):

Assumption

\({\mathcal {A}}_{W}\): Assume that \(w_{\mu }:[0,1]^{d_{\mu }}\rightarrow [0,+\infty ),\mu =1,\ldots ,m\) are Lipschitz-continuous weight functions for data subsets \(\mu \). \(\square \)

By assumption \({\mathcal {A}}_{W}\), the functions \(w_{\mu }\) are bounded. An example of such a weight function is given by

where \(a,{\bar{w}}_{\mu }>0\) are constants. The divergence \({\mathcal {D}}\) is the weighted sum of the squared discrepancies between the sample copula and the parametric model copula within the respective data subsets. In general, smaller values of the divergence \({\mathcal {D}}\) indicate a better approximation by \({\mathcal {F}}\). Observe that \(C_{\mu }({\bar{\textbf{u}}})-{\mathcal {C}}_{\mu }({\bar{\textbf{u}}}\mid \theta )=0\) for \({\bar{\textbf{u}}}\in {\mathcal {B}}:=\{{\textbf{u}}:u_{j}=0\text { for at least one }j,\text { or }u_{j}=1\text { for all }j\text { except one}\}\). To put more emphasis on the fit in the boundary regions in the neighbourhood of \({\mathcal {B}}\), the weight functions can be defined in a suitable way similarly to (4).

The concept of a weighted divergence has already been applied by some authors. For instance, Rodriguez and Viollaz (1995) studied the asymptotic distribution of the weighted Cramér–von Mises divergence in the one-dimensional case. Medovikov (2016) examined weighted Cramér–von Mises tests for independence employing the weighted Cramér–von Mises statistic with independence copula as the model copula. We refer to the thorough discussion about weights in Medovikov’s paper where also further references can be found. The \(L^{p}\)-distance and the Kolmogorov–Smirnov distance are alternatives to \({\mathcal {D}}\), see Liebscher (2015), for example.

Next, we construct an estimator for \({\mathcal {D}}(C,{\mathcal {C}}(\cdot \mid \theta ))\) in the situation where the data structure is as introduced in Sect. 2:

for \(\theta \in \Theta \), where \({\hat{H}}_{n\mu }\) is defined in Sect. 3. This estimator has the advantage of being just a sum and does not require computing an integral. Genest et al. (2009) found that the use of the Cramér–von Mises statistic leads to more powerful goodness-of-fit tests in comparison to other test statistics like the Kolmogorov–Smirnov one. The next section is devoted to the estimation of the parameter \(\theta \) using the divergence (5).
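To illustrate how such a sum-type estimator can be evaluated, the following Python sketch computes, for each pattern, the mean squared gap between a pooled empirical joint distribution function and the model copula at the sample points of that pattern, and adds these means up with weights. It is a simplified stand-in and not the exact statistic (5): constant weights replace the weight functions \(w_{\mu }\), the empirical distribution functions are plain relative frequencies, the Clayton family is chosen only as an example, and all function names are hypothetical.

```python
from bisect import bisect_right


def clayton(u, theta):
    """d-variate Clayton copula; coordinates equal to 1 contribute nothing,
    so setting missing coordinates to 1 yields the lower-dimensional margin."""
    if min(u) <= 0.0:
        return 0.0
    s = sum(x ** (-theta) for x in u) - len(u) + 1.0
    return s ** (-1.0 / theta)


def cvm_estimate(data, patterns, weights, copula, theta):
    """Weighted sum over patterns of mean squared gaps between the pooled
    empirical joint df and the model copula. Rows use None for missing values."""
    d = len(patterns[0])
    # Pooled marginal samples: every row in which component j is observed.
    marg = [sorted(r[j] for r in data if r[j] is not None) for j in range(d)]
    total = 0.0
    for b, w in zip(patterns, weights):
        idx = [l for l in range(d) if b[l] == 1]
        # Rows whose missingness pattern is exactly b.
        own = [r for r in data if [int(v is not None) for v in r] == list(b)]
        # Rows that have at least the components observed under b.
        pool = [r for r in data if all(r[l] is not None for l in idx)]
        acc = 0.0
        for y in own:
            h = sum(all(r[l] <= y[l] for l in idx) for r in pool) / len(pool)
            u = [1.0] * d  # missing coordinates stay at 1
            for l in idx:
                u[l] = bisect_right(marg[l], y[l]) / len(marg[l])
            acc += (h - copula(u, theta)) ** 2
        total += w * acc / len(own)
    return total


# Toy data: perfectly dependent components, third one missing in odd rows.
data = [[float(i), float(i), float(i) if i % 2 == 0 else None] for i in range(1, 21)]
patterns = [[1, 1, 1], [1, 1, 0]]
weights = [0.3, 0.7]
strong = cvm_estimate(data, patterns, weights, clayton, 50.0)
weak = cvm_estimate(data, patterns, weights, clayton, 0.5)
```

Because the toy data are comonotone, a Clayton copula with a large parameter fits them much better than one with a small parameter, so `strong` is smaller than `weak`.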

5 Parameter estimation by the minimum distance method

Let the data structure be as in Sect. 2. Consider the family \({\mathcal {F}}=({\mathcal {C}}(\cdot \mid \theta ))_{\theta \in \Theta }\) of copulas with the parameter set \(\Theta \subset {\mathbb {R}}^{q}\). Throughout the paper, we assume that \(C\notin {\mathcal {F}}\). Many authors refer to this case as misspecification. In our opinion, this term is not appropriate for the situation here: if we consider multivariate data, then typically, C does not belong to any parametric family. In this section the aim is to estimate the parameter \(\theta _{0}\) which gives the best approximation for the copula in the case of a unique minimizer of \({\mathcal {D}}\) defined in (3):

\({\mathcal {D}}(C,{\mathcal {C}}(\cdot \mid \theta ))\) as in the previous section. It should be highlighted that in general, \(\theta _{0}\) depends on the choice of the discrepancy measure. There is no “true parameter”. Considering \({\mathcal {D}}(C,{\mathcal {C}}(\cdot \mid \theta ))\), \(\theta _{0}\) depends on the weight functions \(w_{\mu }\), and these functions should be chosen prior to the analysis. In the case of constant weight functions, it seems to be reasonable to choose the weights \(w_{\mu }\) such that the summands in (3) for the estimator of \(\theta _{0}\) are roughly equal.

The estimator \({\hat{\theta }}_{n}\) is referred to as an approximate minimum distance estimator (AMDE) if

holds true (\(\widehat{{\mathcal {D}}}_{n}\) as in the previous section), where \( \{\varepsilon _{n}\}\) is a sequence of random variables with \(\varepsilon _{n}\rightarrow 0\ a.s.\) Note that \({\hat{\theta }}_{n}\) is an approximate minimizer of \(\theta \mapsto \widehat{{\mathcal {D}}}_{n}(\theta )\). We refer to Liebscher (2009), where the estimator was introduced. In the case of unique \(\theta _{0}\), \({\hat{\theta }}_{n}\) is an estimator for \(\theta _{0}\). Tsukahara (2005) examined properties of a similar (non-approximate) minimum distance estimator.
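In practice, an approximate minimizer of this kind is what a numerical optimizer returns when it stops at a finite tolerance. The following Python sketch uses a golden-section search for a one-dimensional parameter on a compact interval. The routine is a generic textbook method chosen for illustration, not the algorithm used in the paper, and it assumes that the objective is unimodal on the interval. The toy objective merely stands in for \(\theta \mapsto \widehat{{\mathcal {D}}}_{n}(\theta )\).

```python
def approximate_minimizer(objective, lower, upper, tol=1e-6, max_iter=200):
    """Golden-section search on [lower, upper].
    Assumes the objective is unimodal on the interval; the returned point
    minimizes the objective only up to a small numerical error."""
    inv_phi = (5 ** 0.5 - 1) / 2
    a, b = lower, upper
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    fc, fd = objective(c), objective(d)
    for _ in range(max_iter):
        if b - a < tol:
            break
        if fc < fd:
            # The minimum lies in [a, d]; reuse c as the new right probe.
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = objective(c)
        else:
            # The minimum lies in [c, b]; reuse d as the new left probe.
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = objective(d)
    return (a + b) / 2


def toy_objective(t):
    """Hypothetical stand-in for the estimated divergence as a function of theta."""
    return (t - 1.3) ** 2 + 0.2


theta_hat = approximate_minimizer(toy_objective, 0.0, 5.0)
excess = toy_objective(theta_hat) - 0.2  # plays the role of the error epsilon_n
```

The quantity `excess` is the gap between the attained value and the true minimum of the toy objective; it corresponds to the error term \(\varepsilon _{n}\) in the definition of the AMDE.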

Let \(\Vert \cdot \Vert \) be the Euclidean norm, and \(d(x,A)=\inf _{y\in A}\Vert x-y\Vert \) for \(x\in {\mathbb {R}}^{q}\) and subsets \(A\subset {\mathbb {R}} ^{q}\). The following theorem provides the result about the consistency of the AMDE including the case of sets of minimizers of \({\mathcal {D}}\).

Theorem 5.1

Assume that Assumptions \({\mathcal {A}}_{MCAR}\), \({\mathcal {A}}_{n}\) and \({\mathcal {A}} _{W} \) are satisfied. Let \(\theta \mapsto {\mathcal {C}}({\textbf{u}}\mid \theta )\) be continuous on \(\Theta \) for every \({\textbf{u}}\in [0,1]^{d}\). Suppose that \(\Theta \) is compact.

(a) Then

$$\begin{aligned} \lim _{n\rightarrow \infty }d({\hat{\theta }}_{n},\Psi )=0\quad a.s., \end{aligned}$$

where \(\Psi =\arg \min _{\theta \in \Theta }{\mathcal {D}}(C,{\mathcal {C}}(\cdot \mid \theta ))\subset {\mathbb {R}}^{q}\).

(b) If, in addition, the condition

$$\begin{aligned} {\mathcal {D}}(C,{\mathcal {C}}(\cdot \mid \theta ))>{\mathcal {D}}(C,{\mathcal {C}} (\cdot \mid \theta _{0}))\text { \ for all }\theta \in \Theta \backslash \{\theta _{0}\} \end{aligned}\tag{6}$$

(i.e. \(\Psi =\{\theta _{0}\}\)) is satisfied, then

$$\begin{aligned} \lim _{n\rightarrow \infty }{\hat{\theta }}_{n}=\theta _{0}\quad a.s. \end{aligned}$$

Part (a) of Theorem 5.1 gives sufficient conditions for the almost sure convergence of the AMDE \({\hat{\theta }}_{n}\) to the set of minimizers of \( {\mathcal {D}}\) w.r.t. \(\theta \), whereas part (b) is the ordinary consistency result. The proof is based on a result from Lachout et al. (2005). The assumption that \(\Theta \) is compact is not as problematic as it seems. In many cases with unbounded \(\Theta \), a continuous bijective function can be used to transform the parameter onto a finite interval. Then it can be verified that the consistency holds for the transformed parameter, and hence for the original parameter on suitable intervals. The assumption on compactness of \( \Theta \) is posed to reduce the technical efforts in the proofs.

The next Theorem 5.2 states that \({\hat{\theta }}_{n}\) is asymptotically normally distributed in the case \(\Psi =\{\theta _{0}\}\) under appropriate assumptions. The following assumption on partial derivatives of the copula is needed in this theorem.

Assumption \({\mathcal {A}}_{C}\): \({\bar{\mathcal {C}}}_{k}(\cdot \mid \theta )\), \({\bar{\mathcal {C}}}_{kl}(\cdot \mid \theta )\), \({\tilde{\mathcal {C}}}_{j}({\textbf{u}}\mid \cdot )\), \({\tilde{\mathcal {C}}}_{jk}({\textbf{u}}\mid \theta )\) denote the partial derivatives \(\frac{\partial }{\partial \theta _{k}}{\mathcal {C}}(\cdot \mid \theta )\), \(\frac{\partial ^{2}}{\partial \theta _{k}\partial \theta _{l}}{\mathcal {C}}(\cdot \mid \theta )\), \(\frac{\partial }{\partial u_{j}}{\mathcal {C}}({\textbf{u}}\mid \cdot )\), \(\frac{\partial ^{2}}{\partial \theta _{k}\partial u_{j}}{\mathcal {C}}({\textbf{u}}\mid \theta )\), respectively. We assume that these derivatives exist, and for \(k,l=1,\ldots ,q\), \(j=1,\ldots ,d\), the functions \(({\textbf{u}},t)\longmapsto {\bar{\mathcal {C}}}_{kl}({\textbf{u}}\mid t)\), \(({\textbf{u}},t)\longmapsto {\tilde{\mathcal {C}}}_{jk}({\textbf{u}}\mid t)\) are continuous on \( [0,1]^{d}\times U(\theta _{0})\), where \(U(\theta _{0})\subset \Theta \) is a neighbourhood of \(\theta _{0}\). Further, \(\theta _{0}\) is an interior point of \( \Theta \). Moreover, the partial derivatives of \(w_{\mu }:[0,1]^{d_{\mu }}\rightarrow [0,+\infty )\) are denoted by \(w_{\mu l},l\in J_{\mu }\), and assumed to exist and be continuous. \(\square \)

If Assumption \({\mathcal {A}}_{C}\) is satisfied, then we use the notations

for \(k,l=1,\ldots ,q\), \(j\in J_{\mu }\), where \({\tilde{\textbf{u}}}_{\mu }= {\textbf{u}}\odot {\textbf{b}}^{(\mu )}+{\textbf{1}}-{\textbf{b}}^{(\mu )}\), \({\textbf{u}}\in [0,1]^{d}\). Define \({\mathcal {H}}=({\mathcal {H}}_{kl})_{k,l=1,\ldots ,q}\) as the Hessian matrix of \(\theta \longmapsto {\mathcal {D}}(C,{\mathcal {C}}(\cdot \mid \theta ))\) at \(\theta =\theta _{0}\):

Now we give the theorem:

Theorem 5.2

Assume that \(\varepsilon _{n}=o_{{\mathbb {P}}}(n^{-1})\), and the matrix \({\mathcal {H}}\) is positive definite. Suppose that Assumption \( {\mathcal {A}}_{C}\) and the assumptions of Theorem 5.1(b) are satisfied. Then

Here \(\Sigma ={\mathcal {H}}^{-1}\Sigma _{D}{\mathcal {H}}^{-1}\),

Here \(\text {cov}(\cdot ,\cdot )\) is the cross-covariance matrix.

In Liebscher (2009) this result was proved in the case \(m=1\) and for complete data. Theorem 5.2 corrects some typos in the formula for \(\Sigma \) in the author’s 2009 paper. Tsukahara (2005) proved consistency and asymptotic normality for his minimum distance estimator in the case where the copula C of \(X_{i}\) belongs to a small neighbourhood of a member of the parametric family. The covariance structure of the estimator \(\hat{\theta }_{n}\) is rather complicated. One potential approach is to estimate \(\Sigma \) by substituting distribution functions with their empirical counterparts, and \(\theta _{0}\) by \({\hat{\theta }}_{n}\). In view of the sophisticated structure of this estimator, one may use alternative techniques like bootstrap to get approximate values for the covariances.
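A bootstrap approximation of the covariances could be organised as in the following Python sketch. It resamples with replacement within each missing-data pattern, so that the subset sizes \(n_{\mu }\) stay fixed, and recomputes the estimator on every resample. This is a naive nonparametric bootstrap whose validity for the estimator studied here is not established in this paper. The function names and the toy estimator (componentwise means standing in for \({\hat{\theta }}_{n}\)) are hypothetical.

```python
import random


def bootstrap_covariance(subsets, estimator, n_boot=200, seed=0):
    """Naive bootstrap covariance matrix of an estimator.
    subsets: one list of data items per missing-data pattern.
    estimator: maps such a list of lists to a list of q floats."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n_boot):
        # Resample within each pattern so that every n_mu is preserved.
        resampled = [[rng.choice(items) for _ in items] for items in subsets]
        draws.append(estimator(resampled))
    q = len(draws[0])
    means = [sum(t[k] for t in draws) / n_boot for k in range(q)]
    return [
        [
            sum((t[k] - means[k]) * (t[l] - means[l]) for t in draws) / (n_boot - 1)
            for l in range(q)
        ]
        for k in range(q)
    ]


def toy_estimator(subsets):
    """Hypothetical stand-in for the AMDE: means of the first two components."""
    first = [row[0] for s in subsets for row in s]
    second = [row[1] for s in subsets for row in s]
    return [sum(first) / len(first), sum(second) / len(second)]


subsets = [
    [(1.0, 2.0, 0.5), (2.0, 1.0, 1.5), (3.0, 4.0, 2.5)],  # complete items
    [(0.5, 1.5), (2.5, 3.5)],                             # third component missing
]
cov = bootstrap_covariance(subsets, toy_estimator)
```

In an application, `toy_estimator` would be replaced by a routine that computes the AMDE from the resampled subsets, at a considerably higher computational cost per replication.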

To compare the various fitting results, we introduce the approximation coefficient

where

\(\widehat{{\mathcal {D}}}_{n}^{0}(\theta )\) is the Cramér–von Mises divergence when the independence copula \(\Pi \) is used for fitting. \(\Pi \) is the reference copula in the definition of \({\hat{\rho }}\). The approximation coefficient is defined in analogy to the regression coefficient of determination and describes the degree of improvement of the fit using model \( {\mathcal {F}}\) in comparison to the independence copula. Obviously, we have \( {\hat{\rho }}\le 1\). In the case where the independence copula is included in the model family \({\mathcal {F}}\), the inequality \(0\le {\hat{\rho }}\le 1\) is fulfilled. Values of \({\hat{\rho }}\) close to 1 indicate that the approximation is good. In the case of models with very small dependencies, the value of \({\hat{\rho }} \) could be close to zero. Then it is recommended to use another reference copula instead of \(\Pi \).
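For orientation, the following Python sketch computes a coefficient of this type under the assumption that it has the usual form of a coefficient of determination, namely one minus the ratio of the fitted divergence to the divergence obtained with the independence copula. This form is inferred from the properties stated above and is not copied from the definition; the function name and the numerical values are hypothetical.

```python
def approximation_coefficient(d_model, d_independence):
    """Assumed form: 1 - (divergence of fitted model) / (divergence of independence copula)."""
    if d_independence <= 0.0:
        raise ValueError("the reference divergence must be positive")
    return 1.0 - d_model / d_independence


# Hypothetical divergence values for a fitted model and the independence copula.
rho_hat = approximation_coefficient(0.002, 0.02)
```

A fitted divergence that is ten times smaller than the reference divergence gives a coefficient of about 0.9, whereas a model that fits no better than the independence copula gives a value of 0.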

6 A small simulation study

A simulation study should show that the estimation method studied in this paper leads to a reasonable performance of the estimators. We simulated the following data with 100,000 repetitions:

three-dimensional data vectors \({\textbf{Y}}_{\mu j}\) having copula C and marginal normal distributions N(1, 0.6), N(2, 0.3), N(3, 0.8), respectively. Copula C is determined by

where \(C^{(1)}\) is the Clayton (3) copula and \(C^{(2)}\) is the Frank (2) one (for formulas, see Nelsen (2006)).

Half of the data are complete and the remaining data vectors have a missing third component. For the model family \({\mathcal {F}}\), we considered the Frank, Clayton, Joe and Gumbel–Hougaard copula families. Since the second summand in \(\widehat{{\mathcal {D}} }_{n}\) (\(\mu =2\)) is expected to be smaller than the first one (smaller dimension!), we chose \(w_{1}=0.3\) and \(w_{2}=0.7\). The results are summarized in Table 2.

The values \(\theta _{0}\) were computed using the computer algebra system Mathematica. The results of Table 2 indicate that the optimization leads to a reasonable approximation of copula C in the case of the considered copula families \({\mathcal {F}}\). The approximation becomes more precise when n increases; i.e. the average lies closer to \(\theta _{0}\) and the standard deviation is smaller. Unfortunately, comparisons with results for other data structures are not very useful, since the divergence is constructed in accordance with the data scheme of Sect. 2. Note that \(\theta _{0}\) depends on the choice of the divergence. Further computations have revealed that the summands in \({\hat{D}}_{n}\) for the several subsets differ only slightly when the weights \(w_{j}\) are selected as above.

7 A data example

Here we consider a dataset from the TRY plant trait database, see Kattge et al. (2020). This dataset was already used for modelling and fitting in Liebscher et al. (2022). See this paper for a detailed description of the dataset. Here we restrict the considerations to three variables according to Table 3 and to 9 herb species: ‘Ac.mi’, ‘Be.pe’, ‘Ce.ja’, ‘Ga.mo’, ‘Ga.ve’, ‘Pl.la’, ‘Ra.ac’, ‘Ra.bu’, ‘Ve.ch’.

A first analysis of the dataset shows the various frequencies of the missing data patterns provided in Table 4.

Two patterns are discarded because they contain too few data items. Therefore, we consider only two missing data patterns (\(m=2\)) with equal weights \(w_{\mu }=0.5\). Now we want to fit product copulas to the ecological dataset (variables RCNC, LCNC, HW). Let \(C^{(1)},C^{(2)},C^{(3)}\) be given copulas taken from parametric Archimedean copula families like the Frank, Clayton and Gumbel families (for formulas, see Nelsen 2006). The product copulas are defined by

In these formulas \(t_{j}\) is the parameter of \(C^{(j)}\), and \(\alpha _{j},\beta _{j}\in [0,1]\). Product copulas were studied in Liebscher (2008) in detail. Table 5 summarizes the fitting results.

From Table 5 and further computational results, we see that the approximation is fairly good for model C*F in the case of the product of 2 copulas, and for model C*N*N in the case of the product of 3 copulas. In the latter case, a slightly better approximation coefficient is obtained in comparison to the product of 2 copulas.

8 Convergence of the CvM-divergence

In this section we give the theorem on asymptotic normality of the CvM-divergence \(\widehat{{\mathcal {D}}}_{n}({\hat{\theta }}_{n})\).

Theorem 8.1

Assume that the assumptions of Theorem 5.2 are satisfied. Then

The formula for \(\Sigma _{0}\) is given in the proof.

In the case \(m=1\) and for complete data, this theorem was already proven in Liebscher (2015). Theorem 8.1 can be used to construct tests about the divergence \({\mathcal {D}}(C,{\mathcal {C}}(\cdot \mid \theta ))\). We refer to the discussion in the author’s paper (2015).

9 Proofs

9.1 Proof of Theorem 5.1

Let \(\Phi _{n}:\Theta \rightarrow {\mathbb {R}}\) be a random function, and \( {\hat{\theta }}_{n}\) be an estimator satisfying

Here \(\{\varepsilon _{n}\}\) is a sequence of random variables with \( \varepsilon _{n}\rightarrow 0\ a.s.\) Theorem 2.2 of the paper Lachout et al. (2005) leads to the following proposition.

Proposition 9.1

Assume that \(\Theta \) is compact, and \(\lim _{n\rightarrow \infty }\sup _{t\in \Theta }\left| \Phi _{n}(t)-\Phi (t)\right| =0\) a.s. holds for a continuous function \(\Phi \).

(a) Then

$$\begin{aligned} \lim _{n\rightarrow \infty }d({\hat{\theta }}_{n},\Psi )=0\quad a.s., \end{aligned}$$

where \(\Psi =\text {argmin}_{t\in \Theta }\Phi (t)\subset {\mathbb {R}}^{q}\), \(d(\cdot ,\cdot )\) as above.

(b) If, in addition, \(\Phi (\theta )>\Phi (\theta _{0})\) holds for all \(\theta \in \Theta \backslash \{\theta _{0}\}\), then

$$\begin{aligned} \lim _{n\rightarrow \infty }{\hat{\theta }}_{n}=\theta _{0}\quad a.s. \end{aligned}$$

Let \(\Phi _{n}(\theta )=\widehat{{\mathcal {D}}}_{n}(\theta )\) and \(\Phi (\theta )={\mathcal {D}}(C,{\mathcal {C}}(\cdot \mid \theta ))\). In this section, the aim is to apply Proposition 9.1 in order to prove the strong consistency result for \({\hat{\theta }}_{n}\). The following lemma justifies the strong uniform consistency assumption in Proposition 9.1.

Lemma 9.2

Assume that the assumptions of Theorem 5.1 are fulfilled. Then

Proof

Notice that

Utilizing the Lipschitz continuity of copulas with Lipschitz constant 1 and the triangle inequality, we obtain

where

Since \(\theta \longmapsto \left( H_{\mu }(\textbf{y})-{\mathcal {C}}_{\mu }({\bar{F}}_{\mu }^{*}(\textbf{y})\mid \theta )\right) ^{2}w_{\mu }({\bar{F}}_{\mu }^{*}(\textbf{y}))\) is continuous for \(\textbf{y}\in {\mathbb {R}}^{d_{\mu }}\) by assumption, and the envelope function \(w_{\mu }({\bar{F}}_{\mu }^{*}(\cdot ))\) is integrable, the strong Glivenko–Cantelli theorem (see Van der Vaart (1998), Theorem 19.4 and Example 19.8) implies

as \(n\rightarrow \infty \). Further, by Assumption \({\mathcal {A}}_{W}\) (L is the Lipschitz-constant of \(w_{\mu }\)),

An application of Proposition 3.1 leads to the lemma. \(\square \)

Proof of Theorem 5.1

Theorem 5.1 is a direct consequence of Proposition 9.1 and Lemma 9.2. \(\square \)

9.2 Auxiliary statements

The following lemma about the convergence of the marginal empirical distribution functions can be stated.

Lemma 9.3

Proof

The assertion follows from the Dvoretzky–Kiefer–Wolfowitz inequality, see van der Vaart (1998, p. 268). \(\square \)

In the following we derive central limit theorems. First we consider

with functions \(g_{\mu }:{\mathbb {R}}^{d_{\mu }}\rightarrow {\mathbb {R}}^{\kappa }\) and provide a central limit theorem for \(W_{n}\).

Proposition 9.4

Suppose that assumption \({\mathcal {A}}_{n}\) is fulfilled, and \( {\mathbb {E}}\left\| g_{\mu }(\textbf{Y}_{\mu 1})\right\| ^{2}<\infty \) for \(\mu =1,\ldots ,m\). Then we have

where \(\Sigma _{W}=\sum _{\mu =1}^{m}\gamma _{\mu }\text {cov}(g_{\mu }( {\textbf{Y}}_{\mu 1}))\), and \(\text {cov}(Z)\) is the covariance matrix of a random vector Z.

Proof

Applying the multivariate central limit theorem (see Serfling 1980, Theorem 1.9.1B), we obtain that \(W_{n}^{(1)},\ldots ,W_{n}^{(m)}\) are asymptotically normally distributed. Since these summands \(W_{n}^{(\mu )}\) of \(W_{n}\) are independent, we can conclude the asymptotic normality of \( W_{n}\). For the covariance matrix of \(W_{n}\), we obtain

as \(n\rightarrow \infty \). \(\square \)

Let \(\Lambda _{\mu \nu }:{\mathbb {R}}^{d_{\mu }}\times {\mathbb {R}}^{d_{\nu }}\rightarrow {\mathbb {R}}^{\kappa }\) be measurable functions for \(\mu ,\nu =1,\ldots ,m\), \(\Lambda _{\mu \nu }=(\Lambda _{\mu \nu }^{(1)},\ldots ,\Lambda _{\mu \nu }^{(\kappa )})^{T}\). Next we derive a central limit theorem for the U-statistic

Let \(\theta _{\mu \nu }={\mathbb {E}}\Lambda _{\mu \nu }({\textbf{Y}}_{\mu 1}, {\textbf{Y}}_{\nu 2})\) for all \(\mu ,\nu \). We introduce

\({\tilde{h}}_{\mu \nu }=({\tilde{h}}_{\mu \nu }^{(1)},\ldots ,{\tilde{h}}_{\mu \nu }^{(\kappa )})^{T}\). Note that \({\mathbb {E}}{\tilde{h}}_{\mu \nu }({\textbf{Y}} _{\nu 1})={\mathbb {E}}{\tilde{h}}_{\nu \mu }({\textbf{Y}}_{\mu 1})=0\) for all \(\mu ,\nu \). Proposition 9.5 provides the central limit theorem for \( U_{n}\). In the proof we use Hájek’s projection principle.

Proposition 9.5

Suppose that \({\mathbb {E}}\Lambda _{\mu \nu }^{(L)}({\textbf{Y}} _{\mu 1},{\textbf{Y}}_{\nu j})^{2}<+\infty \) for all \(\mu ,\nu ,L=1,\ldots ,\kappa ,j=1,2\). We have

where \(\Sigma _{U}=\sum _{\nu =1}^{m}\gamma _{\nu }\sum _{\mu =1}^{m}\sum _{ {\bar{\mu }}=1}^{m}\gamma _{\mu }\gamma _{{\bar{\mu }}}{\mathbb {E}}{\tilde{h}}_{\mu \nu }({\textbf{Y}}_{\nu 1}){\tilde{h}}_{{\bar{\mu }}\nu }^{T}({\textbf{Y}}_{\nu 1})\).

Proof

Define

We obtain (\({\mathbb {V}}\) denotes the variance)

Hence \(U_{n}^{\circ }\overset{{\mathbb {P}}}{\longrightarrow }0\) holds, and

where \(\delta _{\mu \mu }=1\) and \(\delta _{\nu \mu }=0\) for \(\nu \ne \mu \). Now we split the sum in (8) into two parts. Define

Notice that \({\mathbb {E}}{\tilde{\Lambda }}_{\mu \nu }({\textbf{Y}}_{\mu i},\textbf{ Y}_{\nu j})=0\). Then we have

The next step is to show \({\tilde{U}}_{n}=o_{{\mathbb {P}}}(1)\). Later we prove asymptotic normality of \({\bar{U}}_{n}\). Observe that

for \(i\ne j\) or \(\mu \ne \nu \). Therefore, identity

holds for \(l\ne j\). Thus, by this equation and similar identities, we have

for \((i=k,j\ne l)\vee (j=l,i\ne k)\vee (\mu =\nu ,i=l,j\ne k)\vee (\mu =\nu ,j=k,i\ne l)\). Obviously, this equation holds for different indices i, j, k, l. On the other hand, we have

Consequently, for \(\mu \ne \nu \), it can be derived

In a similar way, we obtain

Hence \({\tilde{U}}_{n}\overset{{\mathbb {P}}}{\longrightarrow }0\) holds true. Notice that

On the other hand, it follows that

where

An application of Proposition 9.4 to the sum \(U_{n}^{(1)}\) leads to \( U_{n}^{(1)}\overset{d}{\longrightarrow }{\mathcal {N}}(0,\Sigma _{U})\), where

Analogously, one shows that

for \(\mu =1,\ldots ,m\) with an appropriate \(\Sigma _{0}\), which implies \( U_{n}^{(2)}\overset{{\mathbb {P}}}{\longrightarrow }0\). Therefore, we have \( {\bar{U}}_{n}\overset{{\mathcal {D}}}{\longrightarrow }{\mathcal {N}}(0,\Sigma _{U})\). By (9) and \({\tilde{U}}_{n}\overset{{\mathbb {P}}}{\longrightarrow } 0\), the proof is complete. \(\square \)
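The projection argument can be illustrated in a much simpler setting than the multi-pattern statistic \(U_{n}\). The sketch below assumes a one-sample U-statistic with the kernel \(h(x,y)=xy\) and observations uniform on \([0,1]\), so that \({\mathbb {E}}h=1/4\) and the projected kernel is \(h_{1}(x)=x/2\). It only shows that the difference between the U-statistic and its Hájek projection is negligible after scaling by \(\sqrt{n}\); all names are hypothetical.

```python
import math
import random


def u_statistic(xs):
    """One-sample U-statistic with kernel h(x, y) = x * y over pairs i != j."""
    n = len(xs)
    s, s2 = sum(xs), sum(x * x for x in xs)
    # sum_{i != j} x_i x_j = (sum x)^2 - sum x^2
    return (s * s - s2) / (n * (n - 1))


def hajek_projection(xs):
    """Projection theta + (2/n) * sum(h1(X_i) - theta), assuming X ~ U(0,1).

    Here theta = E[X * Y] = 1/4 and h1(x) = E[x * Y] = x / 2.
    """
    n = len(xs)
    theta = 0.25
    return theta + (2.0 / n) * sum(x / 2.0 - theta for x in xs)


def scaled_remainder(n, seed=0):
    """sqrt(n) * |U_n - projection| for one simulated U(0,1) sample."""
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n)]
    return math.sqrt(n) * abs(u_statistic(xs) - hajek_projection(xs))
```

The projection is a sum of independent terms, so an ordinary central limit theorem applies to it; the remainder plays the role of the term \({\tilde{U}}_{n}\) in the proof above.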

9.3 Proof of Theorem 5.2

Throughout this section we suppose that the assumptions of Theorem 5.1(b) and Assumption \({\mathcal {A}}_{C}\) are satisfied. Here \(\theta _{0}\) is the unique minimizer of \({\mathcal {D}}\) defined in (3). We introduce \({\mathcal {H}}_{n}(\theta )=({\mathcal {H}}_{nkl}(\theta ))_{k,l=1,\ldots ,q}\) as the Hessian of \(\widehat{{\mathcal {D}}}_{n}(\theta )\):

\(\nabla _{\theta }\psi (\theta )\) denotes the gradient of function \(\psi \) w.r.t. \(\theta \), and \(\nabla _{\theta }\psi (\theta _{0})\) is the abbreviation for \(\left. \nabla _{\theta }\psi (\theta )\right| _{\theta =\theta _{0}}\). Observe that

Let \({\tilde{\theta }}_{n}\) be a minimizer of \(\widehat{{\mathcal {D}}}_{n}(\cdot ) \). Since \(\nabla _{\theta }\widehat{{\mathcal {D}}}_{n}({\tilde{\theta }}_{n})=0\), we can use the Taylor formula to derive (note that \(\theta _{0}\) is an interior point of \(\Theta \))

where \(t_{nk}^{*}=\theta _{0}+\eta _{nk}\left( {\tilde{\theta }}_{n}-\theta _{0}\right) \) and \({\mathcal {H}}_{n}^{*}=({\mathcal {H}}_{nkl}(t_{nk}^{*}))_{k,l=1,\ldots ,q}\). Here \(\eta _{nk}\in (0,1)\) is a random variable, \( k=1,\ldots ,q\).
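As a reading aid, a first-order expansion of this kind usually takes the following shape. This is a sketch under the assumption that \({\mathcal {H}}_{n}^{*}\) is invertible; the exact arrangement of the terms in the paper's identity may differ.

$$
0=\nabla _{\theta }\widehat{{\mathcal {D}}}_{n}({\tilde{\theta }}_{n})=\nabla _{\theta }\widehat{{\mathcal {D}}}_{n}(\theta _{0})+{\mathcal {H}}_{n}^{*}\left( {\tilde{\theta }}_{n}-\theta _{0}\right) ,\qquad \text {so that}\qquad \sqrt{n}\left( {\tilde{\theta }}_{n}-\theta _{0}\right) =-\left( {\mathcal {H}}_{n}^{*}\right) ^{-1}\sqrt{n}\,\nabla _{\theta }\widehat{{\mathcal {D}}}_{n}(\theta _{0}).
$$

The \(k\)-th row of \({\mathcal {H}}_{n}^{*}\) is evaluated at its own intermediate point \(t_{nk}^{*}\) because the mean value theorem is applied to each component of the gradient separately.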

Taking identity (10) into account, Theorem 5.2 is proven in three steps: we show the asymptotic normality of \(\nabla _{\theta } \widehat{{\mathcal {D}}}_{n}(\theta _{0})\), we prove that \({\mathcal {H}} _{n}^{*}\) converges in probability to a certain matrix, and we show that \({\tilde{\theta }}_{n}-{\hat{\theta }}_{n}\) is \(o_{{\mathbb {P}}}(n^{-1/2})\). The following lemma includes the first step.

Lemma 9.6

We have

with \(\Sigma _{D}\) as in Theorem 5.2.

Proof

We decompose \(-\nabla _{\theta }\widehat{{\mathcal {D}}} _{n}(\theta _{0})\) and obtain

Further we define

and \(A_{4n}^{*}=\left( A_{4nk}^{*}\right) _{k=1,\ldots ,q}\), \( A_{4n}=\left( A_{4nk}\right) _{k=1,\ldots ,q}\). Obviously, \(\nabla _{\theta }{\mathcal {D}}(C,{\mathcal {C}}(\cdot \mid \theta _0))=0\), so that \({\mathbb {E}}A_{1n}=0\). The next step is to show that \(A_{2n}+\left( A_{3n}-A_{3n}^{*}\right) +\left( A_{4n}-A_{4n}^{*}\right) =o_{{\mathbb {P}}}(n^{-1/2})\). Note that copulas are Lipschitz continuous. Since the partial derivatives \({\bar{\mathcal {C}}}_{\mu l}^{\circ }(\cdot \mid \theta _{0})\) are Lipschitz continuous by assumption \({\mathcal {A}}_{C}\) and the weight functions \(w_{\mu }\) are Lipschitz continuous by assumption \( {\mathcal {A}}_{W}\), we obtain

by applying Proposition 3.1. Let \(\tau _{n}:=\max _{j=1,\ldots ,d}\sup _{z\in {\mathbb {R}}}\left| {\hat{F}}_{jn}(z)-F_{j}(z)\right| \). Observe that \({\tilde{\mathcal {C}}}_{\mu l}^{\circ }(\cdot \mid \theta )\) is uniformly continuous on \([0,1]^{d_{\mu }}\) for \( \theta \in U(\theta _{0})\) in view of the Heine–Cantor theorem. Further, by Lemma 9.3 and the mean value theorem, we obtain

On the other hand, by Lemma 9.3 and the mean value theorem, we derive (\({\check{F}}_{n\mu \eta }^{**}(y):={\bar{F}}_{\mu }^{*}(y)+\eta \left( {\check{F}}_{n\mu }^{*}(y)-{\bar{F}}_{\mu }^{*}(y)\right) \) for \( y\in {\mathbb {R}}^{d_{\mu }}\))

since \(w_{\mu }\), \(w_{\mu l}\), \({\tilde{\mathcal {C}}}_{\mu lk}^{\circ }(\cdot \mid \theta _{0})\) and \({\tilde{\mathcal {C}}}_{\mu k}^{\circ }(\cdot \mid \theta _{0})\) are uniformly continuous on \([0,1]^{d_{\mu }}\). Identities (12)–(14) imply

In the remaining part of the proof, we show the asymptotic normality of \( \sqrt{n}A_{n}\), where \(A_{n}=A_{1n}+A_{3n}^{*}+A_{4n}^{*}\). We have

where

Now we decompose \(\sqrt{n}A_{n}\) such that we obtain

where

and

In the following we prove that \({\bar{A}}_{n}=o_{{\mathbb {P}}}(n^{-1/2})\). Note that \({\mathbb {E}}\Lambda _{\mu }^{(1)}({\textbf{Y}}_{\mu i})=0\). We have

Moreover, in view of Proposition 9.5,

with certain (finite) covariance matrices \(\Sigma _{\mu \nu k},\Sigma _{\mu \nu kl}\) \((k=1,\ldots ,q,l\in J_{\mu }\cap J_{\nu },\mu ,\nu =1,\ldots ,m)\). Hence by Assumption \({\mathcal {A}}_{n}\),

and by equations (11), (15), (16),

An application of the central limit theorem in Proposition 9.5 gives the asymptotic normality of \(\sqrt{n}\nabla _{\theta }\widehat{ {\mathcal {D}}}_{n}(\theta _{0})\). To derive a formula for the covariance matrix, we consider

where

By Proposition 9.5 we obtain the formula for the covariance matrix \( \Sigma _{D}\). \(\square \)

Next we deal with the convergence of \({\mathcal {H}}_{n}^{*}\) and prove the following lemma.

Lemma 9.7

Suppose that \(t_{nk}^{*}\rightarrow \theta _{0}\) for \( k=1,\ldots ,q\). Then we have

for \(k,l=1,\ldots ,q\).

Proof

Notice that \({\bar{\tau }}_{n}:=\sup _{y\in {\mathbb {R}}^{d_{\mu }}}\left\| {\check{F}}_{n\mu }^{*}(y)-{\bar{F}}_{\mu }^{*}(y)\right\| \rightarrow 0\) a.s. Moreover, \({\mathcal {C}}_{\mu }\), \({\bar{\mathcal {C}}}_{\mu k}\), and \({\bar{\mathcal {C}}}_{\mu kl}\) are uniformly continuous on \([0,1]^{d_{\mu }}\times U(\theta _{0})\) in view of the Heine–Cantor theorem, and we obtain

for \(k,l=1,\ldots ,q\). We define

Therefore, and by Theorem 3.1, we have

In view of the law of large numbers, we have

a.s. for \(k,l=1,\ldots ,q\). This completes the proof. \(\square \)

Now we are in a position to prove Theorem 5.2.

Proof of Theorem 5.2

First we derive the convergence rate of \({\tilde{\theta }}_{n}-{\hat{\theta }}_{n}\). Note that \(\nabla _{\theta } \widehat{{\mathcal {D}}}_{n}({\tilde{\theta }}_{n})=0\), and \(\theta _{0}\) is an interior point of \(\Theta \). Then the Taylor formula leads to

where \(t_{nk}^{**}={\tilde{\theta }}_{n}+\eta _{nk}\left( {\hat{\theta }} _{n}-{\tilde{\theta }}_{n}\right) \), \(0\le \eta _{nk}\le 1\), and \({\mathcal {H}} _{n}^{**}=({\mathcal {H}}_{nkl}(t_{nk}^{**}))_{k,l=1,\ldots ,q}\). In view of Theorem 5.1, the estimators \({\tilde{\theta }}_{n}\) and \( {\hat{\theta }}_{n}\) are strongly consistent, so that \(t_{nk}^{**}\rightarrow \theta _{0}\) a.s. Using Lemma 9.7, we obtain \({\mathcal {H}}_{n}^{**}\rightarrow {\mathcal {H}}\) a.s. Hence

where \(\lambda _{\text {min}}(A)\) denotes the smallest absolute value among the eigenvalues of the matrix \(A\). By assumption, \(\lambda _{\text {min}}({\mathcal {H}})\) is positive. Therefore, it follows that

Using (10) and Lemmas 9.6, 9.7, an application of Slutsky’s theorem leads to

In view of (18), the proof of Theorem 5.2 is complete. \(\square \)
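Combining a gradient that is asymptotically \({\mathcal {N}}(0,\Sigma _{D})\) with a Hessian converging to \({\mathcal {H}}\) leads, via Slutsky's theorem, to a limiting covariance of sandwich form \({\mathcal {H}}^{-1}\Sigma _{D}{\mathcal {H}}^{-T}\). A minimal sketch of this computation follows, assuming that estimates of \({\mathcal {H}}\) and \(\Sigma _{D}\) are available as nested lists; the functions `solve`, `transpose` and `sandwich` are hypothetical helpers, and the precise form of the covariance should be taken from Theorem 5.2.

```python
def solve(a, b):
    """Solve A X = B by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [list(map(float, a[i])) + list(map(float, b[i])) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is numerically singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def transpose(m):
    """Transpose a matrix given as a list of rows."""
    return [list(col) for col in zip(*m)]


def sandwich(hessian, sigma_d):
    """Return H^{-1} Sigma_D H^{-T} (sandwich form, assumed for illustration)."""
    left = solve(hessian, sigma_d)  # H^{-1} Sigma_D
    # (H^{-1} Sigma_D) H^{-T} equals the transpose of H^{-1} (H^{-1} Sigma_D)^T.
    return transpose(solve(hessian, transpose(left)))
```

Solving linear systems instead of forming \({\mathcal {H}}^{-1}\) explicitly is a common design choice for numerical stability; for a symmetric Hessian, \({\mathcal {H}}^{-T}={\mathcal {H}}^{-1}\).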

9.4 Proof of Theorem 8.1

First we prove a lemma on asymptotic normality of \(\sqrt{n}\left( \widehat{ {\mathcal {D}}}_{n}(\theta _{0})-{\mathcal {D}}(C,{\mathcal {C}}(\cdot \mid \theta _{0}))\right) \), which is crucial for the proof of asymptotic normality of \( \sqrt{n}\left( \widehat{{\mathcal {D}}}_{n}({\hat{\theta }}_{n})-{\mathcal {D}}(C, {\mathcal {C}}(\cdot \mid \theta _{0}))\right) \) in Theorem 8.1.

Lemma 9.8

Let the assumptions of Theorem 5.2 be satisfied. Then

Proof

Define

We obtain

where

Analogously to the proof of Lemma 9.6, we can derive

where

Further,

where

The convergence of the "\(o_{{\mathbb {P}}}(1)\)"-term is again proven analogously to the proof of Lemma 9.6. An application of the central limit theorem in Proposition 9.5 gives the asymptotic normality of \( \sqrt{n}\nabla _{\theta }\widehat{{\mathcal {D}}}_{n}(\theta _{0})\). Finally, we derive the formula for the covariance matrix as follows:

Moreover, we have

\(\square \)

Proof of Theorem 8.1

Analogously to (), we have

where \(t_{nk}^{\#}={\tilde{\theta }}_{n}+\eta _{nk}\left( \theta _{0}-\tilde{ \theta }_{n}\right) ,0\le \eta _{nk}\le 1\) and \({\mathcal {H}}_{n}^{\#}=( {\mathcal {H}}_{nkl}(t_{nk}^{\#}))_{k,l=1,\ldots ,q}\). An application of Theorem 5.2 leads to

An application of Lemma 9.8 completes the proof. \(\square \)
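The reason why replacing \(\theta _{0}\) by the estimator does not change the limit can be sketched by a second-order expansion around the minimizer. The display below is an illustration under the assumption that the gradient vanishes at \({\tilde{\theta }}_{n}\) and that the Hessian at the intermediate point stays bounded in probability; it is not a verbatim reproduction of the paper's identity.

$$
\widehat{{\mathcal {D}}}_{n}(\theta _{0})-\widehat{{\mathcal {D}}}_{n}({\tilde{\theta }}_{n})=\frac{1}{2}\left( \theta _{0}-{\tilde{\theta }}_{n}\right) ^{T}{\mathcal {H}}_{n}^{\#}\left( \theta _{0}-{\tilde{\theta }}_{n}\right) =O_{{\mathbb {P}}}(n^{-1}),
$$

since \({\tilde{\theta }}_{n}-\theta _{0}=O_{{\mathbb {P}}}(n^{-1/2})\) by Theorem 5.2. After multiplication by \(\sqrt{n}\) this difference is \(o_{{\mathbb {P}}}(1)\), so the statistic evaluated at the estimator inherits the limit distribution from Lemma 9.8.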

References

Chen X, Fan Y (2005) Pseudo-likelihood ratio tests for semiparametric multivariate copula model selection. Can J Stat 33:389–414. https://doi.org/10.1002/cjs.5540330306

Di Lascio FML, Giannerini S, Reale A (2015) Exploring copulas for the imputation of complex dependent data. Stat Methods Appl 24:159–175. https://doi.org/10.1007/s10260-014-0287-2

Genest C, Rivest L-P (1993) Statistical inference procedures for bivariate Archimedean copulas. J Am Stat Assoc 88:1034–1043. https://doi.org/10.1080/01621459.1993.10476372

Genest C, Ghoudi K, Rivest L-P (1995) A semiparametric estimation procedure of dependence parameters in multivariate families of distributions. Biometrika 82:543–552. https://doi.org/10.1093/biomet/82.3.543

Genest C, Rémillard B, Beaudoin D (2009) Goodness-of-fit tests for copulas: a review and a power study. Insur Math Econ 44:199–213. https://doi.org/10.1016/j.insmatheco.2007.10.005

Graham JW (2009) Missing data analysis: making it work in the real world. Annu Rev Psychol 60:549–576. https://doi.org/10.1146/annurev.psych.58.110405.085530

Hamori S, Motegi K, Zhang Z (2019) Calibration estimation of semiparametric copula models with data missing at random. J Multivariate Anal 173:85–109. https://doi.org/10.1016/j.jmva.2019.02.003

Hofert M, Mächler M, McNeil AJ (2012) Likelihood inference for Archimedean copulas in high dimensions under known margins. J Multivariate Anal 110:133–150. https://doi.org/10.1016/j.jmva.2012.02.019

Joe H (1997) Multivariate models and dependence concepts. Chapman & Hall, London

Joe H (2005) Asymptotic efficiency of the two-stage estimation method for copula-based models. J Multivariate Anal 94:401–419. https://doi.org/10.1016/j.jmva.2004.06.003

Kattge J, Bönisch G, Díaz S, Lavorel S, Prentice IC, Leadley P, Tautenhahn S, Werner GDA, Aakala T, Abedi M, Acosta ATR, Adamidis GC, Adamson K, Aiba M, Albert CH, Alcántara JM, Alcázar CC, Aleixo I, Ali H, Wirth C (2020) TRY plant trait database – enhanced coverage and open access. Glob Change Biol 26:119–188. https://doi.org/10.1111/gcb.14904

Kertel M, Pauly M (2022) Estimating Gaussian copulas with missing data with and without expert knowledge. Entropy 24:1849. https://doi.org/10.3390/e24121849

Kiefer J (1961) On large deviations of the empiric D. F. of vector chance variables and a law of the iterated logarithm. Pac J Math 11:649–660

Lachout P, Liebscher E, Vogel S (2005) Strong convergence of estimators as \(\varepsilon _{n}\)-minimisers of optimisation problems. Ann Inst Stat Math 57:291–313. https://doi.org/10.1007/BF02507027

Liebscher E (2008) Construction of asymmetric multivariate copulas. J Multivariate Anal 99:2234–2250. https://doi.org/10.1016/j.jmva.2008.02.025

Liebscher E (2009) Semiparametric estimation of the parameters of multivariate copulas. Kybernetika 6:972–991

Liebscher E (2015) Goodness-of-approximation of copulas by a parametric family. In: Stochastic models, statistics and their applications. Springer Proceedings in Mathematics & Statistics, vol 122, pp 101–109. https://doi.org/10.1007/978-3-319-13881-7_12

Liebscher E, Taubert F, Waltschew D, Hetzer J (2022) Modelling multivariate data using product copulas and minimum distance estimators: an exemplary application to ecological traits. Environ Ecol Stat 29:315–338. https://doi.org/10.1007/s10651-021-00525-0

Little RJA, Rubin DB (2019) Statistical analysis with missing data, 3rd edn. Wiley, Hoboken

Medovikov I (2016) Non-parametric weighted tests for independence based on empirical copula process. J Stat Comput Simul 86:105–121. https://doi.org/10.1080/00949655.2014.995657

Nelsen RB (2006) An introduction to copulas. Lecture notes in statistics, vol 139, 2nd edn. Springer, Berlin

Rodriguez JC, Viollaz AJ (1995) A Cramer–von Mises type goodness of fit test with asymmetric weight function. Commun Stat 24:1095–1120. https://doi.org/10.1080/03610929508831542

Serfling RJ (1980) Approximation theorems of mathematical statistics. Wiley, New York

Sklar A (1959) Fonctions de répartition à n dimensions et leurs marges. Publ Inst Stat Univ Paris 8:229–231

Tsukahara H (2005) Semiparametric estimation in copula models. Can J Stat 33:357–375. https://doi.org/10.1002/cjs.5540330304

Van Buuren S, Brand JPL, Groothuis-Oudshoorn CGM, Rubin DB (2006) Fully conditional specification in multivariate imputation. J Stat Comput Simul 76:1049–1064. https://doi.org/10.1080/10629360600810434

Van der Vaart AW (1998) Asymptotic statistics. Cambridge University Press, Cambridge

Wang H, Fazayeli F, Chatterjee S, Banerjee A (2014) Gaussian copula precision estimation with missing values. International Conference on Artificial Intelligence and Statistics 2014

Acknowledgements

The author would like to thank the two anonymous reviewers for their valuable hints leading to improvements of the paper.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

The author has no relevant financial or non-financial interests to disclose.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liebscher, E. Fitting copulas in the case of missing data. Stat Papers 65, 3681–3711 (2024). https://doi.org/10.1007/s00362-024-01535-3
