Abstract

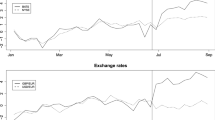

We consider the detection of multiple change-points in high-dimensional time series exhibiting both cross-sectional and temporal dependence. Several test statistics based on the well-known CUSUM statistic are discussed. In particular, we propose a novel block wild bootstrap method that accounts for both cross-sectional and temporal dependence. Furthermore, binary segmentation and the moving sum algorithm are used to detect and locate multiple change-points, and we provide theoretical justification for the moving sum method. An extensive numerical study provides insights into the performance of the proposed methods. Finally, we apply the proposed procedures to financial stock data from Korea.
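To make the CUSUM-based testing idea concrete, here is a minimal sketch of a max-type CUSUM statistic calibrated by a Gaussian block wild bootstrap. It is an illustration under our own assumptions, not the statistics or bootstrap procedure of the paper: the function names `cusum_max` and `block_wild_bootstrap_pvalue` are hypothetical, the statistic is not normalized by a long-run variance estimate, and the bootstrap residuals are centred at the segment means on either side of the estimated change-point.

```python
import numpy as np


def cusum_max(x):
    """Max-type CUSUM statistic for a (T, p) panel x (hypothetical helper).

    Returns max_i max_k |C_i(k)| and the maximising k, where
    C_i(k) = T^{-1/2} (S_i(k) - (k/T) S_i(T)) and S_i(k) is the sum of the
    first k observations of series i.
    """
    T = x.shape[0]
    s = np.cumsum(x, axis=0)                    # partial sums S_i(k), k = 1..T
    k = np.arange(1, T + 1)[:, None]            # k as a column vector
    c = np.abs(s - k / T * s[-1]) / np.sqrt(T)  # |C_i(k)| for every k and i
    c = c[:-1]                                  # drop k = T, where C_i(T) = 0
    k_hat = int(np.argmax(c.max(axis=1))) + 1   # row j corresponds to k = j + 1
    return float(c.max()), k_hat


def block_wild_bootstrap_pvalue(x, block_len, n_boot=499, seed=None):
    """Bootstrap p-value for cusum_max using Gaussian block multipliers.

    One N(0, 1) multiplier is drawn per block of block_len consecutive time
    points and shared by all p series, so the resampled panel keeps the
    within-block temporal dependence and the cross-sectional dependence.
    """
    rng = np.random.default_rng(seed)
    T = x.shape[0]
    stat, k_hat = cusum_max(x)
    # Residuals around the segment means on either side of the estimated
    # change (an assumed design choice; centring by the overall mean loses power).
    resid = x.astype(float)
    resid[:k_hat] -= x[:k_hat].mean(axis=0)
    resid[k_hat:] -= x[k_hat:].mean(axis=0)
    n_blocks = -(-T // block_len)               # ceil(T / block_len)
    exceed = 0
    for _ in range(n_boot):
        w = np.repeat(rng.standard_normal(n_blocks), block_len)[:T, None]
        boot_stat, _ = cusum_max(resid * w)
        exceed += boot_stat >= stat
    return stat, (1 + exceed) / (1 + n_boot)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    T, p = 200, 50
    f = rng.standard_normal(T)                  # common factor: cross-sectional dependence
    e = np.zeros((T, p))
    for t in range(T):                          # AR(1) errors: temporal dependence
        innov = 0.5 * f[t] + rng.standard_normal(p)
        e[t] = innov if t == 0 else 0.5 * e[t - 1] + innov
    x = e.copy()
    x[120:, :10] += 2.0                         # mean shift in 10 of the 50 series
    stat, pval = block_wild_bootstrap_pvalue(x, block_len=10, seed=1)
    print(f"statistic={stat:.2f}, p-value={pval:.3f}, k_hat={cusum_max(x)[1]}")
```

Sharing one multiplier across all series within a block is what lets the resampled panel inherit both the cross-sectional dependence and the short-range temporal dependence; the block length acts as a tuning parameter.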

References

Andreou E, Ghysels E (2009) Structural breaks in financial time series. In: Andersen TG, Davis RA et al (eds) Handbook of financial time series. Springer, Berlin, pp 839–870

Andrews DWK (1991) Heteroskedasticity and autocorrelation consistent covariance matrix estimation. Econometrica 59(3):817–858

Baek C, Gates KM, Leinwand B, Pipiras V (2021) Two sample tests for high-dimensional autocovariances. Comput Stat Data Anal 153(C):107067

Bai J (2010) Common breaks in means and variances for panel data. J Econom 157(1):78–92

Bhattacharjee M, Banerjee M, Michailidis G (2019) Change point estimation in panel data with temporal and cross-sectional dependence. arXiv:1904.11101

Chang J, Yao Q, Zhou W (2017) Testing for high-dimensional white noise using maximum cross-correlations. Biometrika 104(1):111–127

Chernozhukov V, Chetverikov D, Kato K (2013) Gaussian approximations and multiplier bootstrap for maxima of sums of high-dimensional random vectors. Ann Stat 41(6):2786–2819

Chernozhukov V, Chetverikov D, Kato K (2017) Central limit theorems and bootstrap in high dimensions. Ann Probab 45(4):2309–2352

Cho H (2016) Change-point detection in panel data via double CUSUM statistic. Electron J Stat 10(2):2000–2038

Cho H, Fryzlewicz P (2018) hdbinseg: change-point analysis of high-dimensional time series via binary segmentation. University of Bristol, Bristol

Csörgő M, Horváth L (1997) Limit theorems in change-point analysis. Wiley, New York

Eichinger B, Kirch C (2018) A MOSUM procedure for the estimation of multiple random change points. Bernoulli 24(1):526–564

Fryzlewicz P (2014) Wild binary segmentation for multiple change-point detection. Ann Stat 42(6):2243–2281

Horváth L, Hušková M (2012) Change-point detection in panel data. J Time Ser Anal 33(4):631–648

Horváth L, Kokoszka P, Zhang A (2006) Monitoring consistency of variance in conditionally heteroskedastic time series. Econom Theory 22(3):373–402

Hsu C-C (2007) The MOSUM of squares test for monitoring variance changes. Financ Res Lett 4(4):254–260

Hušková M, Slabý A (2001) Permutation tests for multiple changes. Kybernetika 37(5):605–622

Jirak M (2015) Uniform change point tests in high dimension. Ann Stat 43(6):2451–2483

Kuelbs J, Philipp W (1980) Almost sure invariance principles for partial sums of mixing \(B\)-valued random variables. Ann Probab 8(6):1003–1036

Lavielle M (2005) Using penalized contrasts for the change-point problem. Signal Process 85(8):1501–1510

Leadbetter MR, Lindgren G, Rootzén H (2012) Extremes and related properties of random sequences and processes. Springer, New York

Lee T, Baek C (2020) Block wild bootstrap-based CUSUM tests robust to high persistence and misspecification. Comput Stat Data Anal 150:106996

Li H, Munk A, Sieling H (2016) FDR-control in multiscale change-point segmentation. Electron J Stat 10(1):918–959

Acknowledgements

The authors thank the anonymous reviewers and the Associate Editor for their comments, which helped improve the paper. Marie-Christine Düker gratefully acknowledges financial support from the National Science Foundation (grants 1934985, 1940124, 1940276 and 2114143). Seok-Oh Jeong’s work was supported by the Basic Research Program of the National Research Foundation of Korea (NRF-2021R1F1A1063440). Changryong Baek was supported by the Basic Science Research Program of the National Research Foundation of Korea (NRF-2022R1F1A1066209).

Appendix A: Technical results and their proofs

In this appendix, we generalize a result of Leadbetter et al. (2012). Before stating it formally, we explain how it complements the results of Leadbetter et al. (2012), who consider stationary Gaussian processes \(\{\xi (t)\}_{0 \le t \le T}\) with covariance function \(r(v) = \mathbb {E}(\xi (t)\xi (t+v))\) assumed to satisfy

\[ r(v) = 1 - C|v|^{\alpha } + o(|v|^{\alpha }) \quad \text {as } v \rightarrow 0, \tag{A.1} \]

for some \(\alpha \in (0,2]\) and some constant \(C>0\). Section 12.3 of Leadbetter et al. (2012) establishes convergence results for the supremum over an increasing interval, \(M(T) = \sup _{0 \le t \le T} \xi (t)\). The aim is to find functions a(T), b(T) such that

\[ \mathbb {P}\big (a(T)\,(M(T) - b(T)) \le x\big ) \rightarrow \exp (-e^{-x}) \quad \text {as } T \rightarrow \infty , \quad x \in \mathbb {R}. \]

However, for multivariate Gaussian processes, such results are established only for the case \(\alpha =2\) in (A.1); see Section 11.2 in Leadbetter et al. (2012). Our results require multivariate Gaussian processes with \(\alpha = 1\).
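The case \(\alpha = 1\) can be illustrated with the stationary Ornstein-Uhlenbeck process, whose covariance \(r(v) = e^{-C|v|}\) satisfies \(r(v) = 1 - C|v| + o(|v|)\) as \(v \rightarrow 0\) but not the corresponding condition with \(\alpha = 2\). This example is our choice and is not taken from the paper; the helper names `ou_covariance`, `local_ratio` and `simulate_ou` are hypothetical. The sketch checks the local behaviour of \((1 - r(v))/|v|^{\alpha }\) and simulates the process exactly on a grid as an AR(1) recursion.

```python
import numpy as np


def ou_covariance(v, c=1.0):
    """Covariance r(v) = exp(-c|v|) of a unit-variance stationary OU process."""
    return np.exp(-c * np.abs(v))


def local_ratio(v, alpha, c=1.0):
    """(1 - r(v)) / |v|^alpha; tends to c as v -> 0 only when alpha = 1.

    expm1 avoids cancellation in 1 - exp(-c|v|) for small |v|.
    """
    return -np.expm1(-c * np.abs(v)) / np.abs(v) ** alpha


def simulate_ou(n, dt, c=1.0, seed=None):
    """Exact simulation of the OU process at times 0, dt, ..., (n - 1) dt."""
    rng = np.random.default_rng(seed)
    phi = np.exp(-c * dt)                       # one-step correlation r(dt)
    x = np.empty(n)
    x[0] = rng.standard_normal()                # start in the N(0, 1) stationary law
    z = rng.standard_normal(n - 1) * np.sqrt(1.0 - phi**2)
    for t in range(1, n):
        x[t] = phi * x[t - 1] + z[t - 1]        # keeps unit variance at every step
    return x


if __name__ == "__main__":
    for v in (1e-1, 1e-2, 1e-3):
        # alpha = 1 ratio settles near c = 2; alpha = 2 ratio blows up like c / v.
        print(v, local_ratio(v, alpha=1.0, c=2.0), local_ratio(v, alpha=2.0, c=2.0))
```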

Let \(\{{\varvec{\xi }}(t) = (\xi _{1}(t),\dots ,\xi _{p}(t))' \}_{0 \le t \le T}\) be a jointly stationary Gaussian process with zero means, unit variances and covariance functions \(r_{ij}(v) = \textrm{Cov}(\xi _{i}(t),\xi _{j}(t+v))\). To establish results for the joint probability of the suprema \(M_{k}(T) = \sup _{0 \le t \le T} \xi _{k}(t)\), \(k = 1,\dots ,p\), we assume

The final result can then be stated as follows.

Theorem A.1

Let \(u_{k} = u_{k}(T) \rightarrow \infty \) as \(T \rightarrow \infty \), so that

and suppose that the jointly stationary Gaussian process \(\{{\varvec{\xi }}(t)\}_{0 \le t \le T}\) satisfies (A.2)–(A.4). Then,

Most of the results and techniques required to prove Theorem A.1 are already available in Leadbetter et al. (2012); for completeness, we present the proof here. It relies on a series of lemmas that approximate the probability of the suprema over an increasing interval. Following the notation of Leadbetter et al. (2012), we fix \(h>0\) and set \(n = [T/h]\), where \([\cdot ]\) denotes the integer part.

Proof

By Lemma 2 below,

Then, since \(nh \le T < (n+1)h\), it follows that

where (A.6) follows from (A.23) in Lemma 5 and (A.7) from Theorem 12.2.9 in Leadbetter et al. (2012). Finally, (A.8) holds since, by (A.5) and \(n = [T/h]\), we have \(\mu _{k} \sim \frac{\tau _{k}}{T}\) and \(n \sim \frac{T}{h}\). \(\square \)

Lemma 2 below is the key step in the proof of Theorem A.1. Its proof relies on Lemmas 3 and 4, which are stated and proved after it.

Lemma 2

Proof

The result follows by Lemmas 3 and 4 below. \(\square \)

Recall that, for fixed \(h>0\), \(n = [T/h]\). Divide [0, nh] into n intervals of length h, and split each of them into subintervals \(I_{r}\) and \(I^{*}_{r}\) of lengths \(h-\varepsilon \) and \(\varepsilon \), respectively, \(r = 1,\dots ,n\). The following lemma corresponds to Lemma 12.3.2 in Leadbetter et al. (2012).
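The partition used in Lemmas 3 and 4 can be sketched as follows. This is a minimal illustration: the function name `partition` is hypothetical, and placing \(I_{r}\) before \(I^{*}_{r}\) within each interval of length h is our assumption about the ordering.

```python
import math


def partition(T, h, eps):
    """Split [0, n*h], n = [T/h], into n intervals of length h, and each of
    those into I_r (length h - eps) followed by I*_r (length eps)."""
    if not 0 < eps < h:
        raise ValueError("need 0 < eps < h")
    n = math.floor(T / h)                          # n = [T/h]
    blocks, gaps = [], []
    for r in range(1, n + 1):
        start = (r - 1) * h                        # left end of the r-th interval
        blocks.append((start, start + h - eps))    # I_r
        gaps.append((start + h - eps, r * h))      # I*_r
    return n, blocks, gaps


if __name__ == "__main__":
    T, h, eps = 1000.0, 2.0, 0.1
    n, blocks, gaps = partition(T, h, eps)
    print(n * h <= T < (n + 1) * h)                # nh <= T < (n + 1)h
    print(n / (T / h))                             # n ~ T/h: ratio close to 1
```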

Lemma 3

Suppose that \(u \rightarrow \infty \), \(q \rightarrow 0\) and \(u^2q \rightarrow a>0\), and that (A.2) and (A.5) hold. Then,

with \(\tau _{k}\) as defined in (A.5) and \(\rho (a) \rightarrow 0\) as \(a \rightarrow 0\).

Proof

We consider (A.9) and (A.10) separately.

Proof of (A.9): By Boole’s inequality

where (A.11) follows under assumption (A.2) from Theorem 12.2.9 in Leadbetter et al. (2012), together with \(n \mu _{k} \sim \frac{T}{h}\mu _{k} \rightarrow \frac{\tau _{k}}{h}\).

Proof of (A.10): Following the proof of Lemma 12.3.2 (ii) in Leadbetter et al. (2012), we get

where \(H_{1}(a)\) is a constant depending on the limit \(u^2q \rightarrow a>0\) and satisfying \(\rho (a):= 1-H_{1}(a) \rightarrow 0\) as \(a \rightarrow 0\); see the proof of Lemma 12.3.2 in Leadbetter et al. (2012). We bound each probability in (A.12) separately to obtain (A.13). For the first probability in (A.12), we obtain

where the second inequality in (A.14) follows from \(1-\Phi (u) \sim \varphi (u)/u\) as \(u \rightarrow \infty \), with \(\Phi \) and \(\varphi \) denoting the standard Gaussian distribution and density functions, respectively. The last relation in (A.14) follows from (12.2.18) in Leadbetter et al. (2012).
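The tail relation \(1-\Phi (u) \sim \varphi (u)/u\) can be checked numerically. The sketch below (helper names are hypothetical) computes \(1-\Phi (u)\) via `math.erfc`, which avoids the cancellation in \(1-\Phi (u)\) for large u, and compares the ratio \((1-\Phi (u))\,u/\varphi (u)\) with the classical bounds \(u^2/(1+u^2) < (1-\Phi (u))\,u/\varphi (u) < 1\) for \(u>0\).

```python
import math


def gaussian_tail(u):
    """1 - Phi(u), computed via erfc to avoid cancellation for large u."""
    return 0.5 * math.erfc(u / math.sqrt(2.0))


def gaussian_density(u):
    """Standard Gaussian density phi(u)."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def mills_ratio(u):
    """(1 - Phi(u)) / (phi(u) / u), which tends to 1 as u -> infinity."""
    return gaussian_tail(u) * u / gaussian_density(u)


if __name__ == "__main__":
    for u in (1.0, 2.0, 5.0, 10.0, 20.0):
        # The ratio lies between u^2 / (1 + u^2) and 1 and increases towards 1.
        print(f"u={u:5.1f}  ratio={mills_ratio(u):.6f}  lower={u * u / (1 + u * u):.6f}")
```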

For the second probability in (A.12), one can write

where (A.15) follows by Lemma 12.2.4 (i) in Leadbetter et al. (2012) with \(\alpha =1\).

Similarly, the third probability in (A.12) satisfies,

by (12.2.18) in Leadbetter et al. (2012). \(\square \)

The following lemma corresponds to Lemma 12.3.3 in Leadbetter et al. (2012).

Lemma 4

Suppose that \(r(v) \rightarrow 0\) as \(v \rightarrow \infty \) and \(u^2q \rightarrow a>0\), and that (A.2), (A.3) and (A.5) hold. Then, as \(T \rightarrow \infty \),

Proof

We prove (A.16) and (A.17) separately.

Proof of (A.16): This follows from the argument establishing relation (11.2.4) in the proof of Lemma 11.2.1 in Leadbetter et al. (2012).

Proof of (A.17): A simple application of the triangle inequality yields

The first probability in (A.18) can be bounded as

where (A.19) follows by the same arguments as in the proof of (A.10). The second probability in (A.18) satisfies

where (A.20) is due to stationarity and the relation (A.21) follows under assumption (A.2) by Theorem 12.2.9 in Leadbetter et al. (2012). \(\square \)

Lemma 5

Suppose that (A.4) holds. Then,

Proof

The statement coincides with Lemma 11.2.2 in Leadbetter et al. (2012) and can be proven without assuming (A.2). Therefore, we omit the details. Note that (A.22) is only necessary to prove (A.23). \(\square \)

About this article

Cite this article

Düker, MC., Jeong, SO., Lee, T. et al. Detection of multiple change-points in high-dimensional panel data with cross-sectional and temporal dependence. Stat Papers 65, 2327–2359 (2024). https://doi.org/10.1007/s00362-023-01484-3

DOI: https://doi.org/10.1007/s00362-023-01484-3

Keywords

- Change point analysis

- High-dimensional time series

- Block wild bootstrap

- CUSUM

- Binary segmentation

- Moving sum algorithm