Abstract

In this paper we study the existence and uniqueness of Nash equilibria (solutions to competition-wise problems, with several controls trying to reach possibly different goals) associated with linear partial differential equations and show that, in some cases, they are also the solutions of suitable single-objective optimization problems (i.e. cooperative-wise problems, where all the controls cooperate to reach a common goal). We use cost functions associated with a particular linear parabolic partial differential equation and distributed controls, but the results are also valid for more general linear differential equations (including elliptic and hyperbolic cases) and controls (e.g. boundary controls or initial value controls).

1 Introduction

Nash equilibria are solutions of a noncooperative multiobjective optimization strategy first proposed by Nash (see [1]). Since the concept originated in game theory and economics, the notion of player is often used. For an optimization problem with N objectives or functionals \(J_i\) to minimize, a Nash strategy consists in having N players or controls \(v_i\), each optimizing his own criterion. However, each player has to optimize his criterion given that the controls of all the other players are fixed. When no player can further improve his criterion by unilaterally changing his control, the system has reached a Nash equilibrium state.

There are many problems involving Nash equilibria governed by partial differential equations. As explained in [2], they arise in many fields of environmental science and engineering. The authors of that paper give, as an example, a case where the solution to the state equation corresponds to the concentration of chemicals in a lake and each control corresponds to a local agent or local plant (each having its own interest or functional to minimize, sometimes over different domains).

Of course, there are other strategies for multiobjective optimization, such as the Pareto cooperative strategy [3], the Stackelberg hierarchical strategy [4] or the Stackelberg–Nash strategy [2]. For the case of the Stackelberg–Nash strategy the problems under consideration may also include requirements of approximate or exact controllability (see [2, 5, 6]).

To the best of our knowledge, [7] and [8] are the first articles dealing with the theoretical and numerical study of Nash equilibria for differential games associated with partial differential equations. Based on [7] and [9], we deal here with a general linear case with N cost functions and controllers and show how, in some cases, the Nash equilibria (solutions to differential games associated with multiobjective optimization problems with several noncooperative controllers) are also the solutions of single-objective optimization problems (where all the controllers cooperate to reach a common goal). We use cost functions associated with linear parabolic partial differential equations and distributed controls, but other kinds of linear differential equations (e.g. elliptic or hyperbolic) and controls (e.g. boundary controls or initial value controls) can also be treated with the same technique (see, for instance, [10, 11]). A preliminary preprint with some of the results studied here is available in [12].

The fact that a noncooperative game (i.e. a competition-wise problem) can be seen as a (cooperative) single-objective optimization problem (i.e. a noncompetition-wise problem) is very interesting, not only because of the curious noncooperative-cooperative equivalence, but also because the large body of literature and software available for the latter kind of problems could then be used for what is, apparently, a different type of problem.

The solutions of classical optimal control problems governed by parabolic partial differential equations are solutions of optimality systems typically given by a forward parabolic system (the state system) coupled with a backward parabolic system (the adjoint system). In the case of Nash equilibria with N players acting on similar parabolic problems, there are also associated optimality systems, which are now typically given by a forward parabolic system (the state system) coupled with N backward parabolic systems (the adjoint systems). Optimality systems composed of more than one forward and/or backward coupled parabolic differential equation also arise in other contexts such as, for instance, insensitizing control of parabolic problems (see [13,14,15]).

This paper deals with the case of deterministic differential equations. There are few works in the literature regarding Nash equilibria associated with the multiobjective control of problems governed by stochastic differential equations (with noise generating disturbances in the cost functionals). An example is [16], where the authors study Nash equilibria in some stochastic differential games.

In Sect. 2 we formulate the problem and give an optimality system providing a necessary and sufficient condition for the Nash equilibria. The existence and uniqueness of Nash equilibria are studied in Sect. 3. In Sect. 4 we show the equivalence, in some cases, between the noncooperative multiobjective differential games defining the Nash equilibria and suitable (cooperative) single-objective optimization problems. Finally, in Sect. 5 we give a summary of the main results of the paper.

2 Formulation of the Problem

Let us consider \(T>0\), \(\Omega \subset \mathbb {R}^d\) a bounded and smooth open set with \(d\in \{ 1,2,3\}\), and two subsets \(\Gamma _1\), \(\Gamma _2\subset \partial \Omega \) such that \(\partial \Omega =\Gamma _1 \cup \Gamma _2\). We define \(Q=\Omega \times (0,T)\), \(\Sigma _1=\Gamma _1\times (0,T)\), \(\Sigma _2=\Gamma _2\times (0,T)\) and the control Hilbert spaces \(\mathcal{U}_i=L^2(\omega _i \times (0,T))\) and \({\mathcal U}=\mathcal{U}_1\times \cdots \times \mathcal{U}_N\), where \(N\in \mathbb {N}\) and \(\omega _i\subset \Omega \), for \(i\in \{ 1, \ldots ,N\}\). Finally, we consider the functionals \(J_i:{\mathcal U}\rightarrow \mathbb {R}\), with \(i\in \{ 1, \ldots ,N\}\), given by

$$\begin{aligned} J_i(v)= & {} \frac{\alpha _i}{2}\int _{\omega _i\times (0,T)} |v_i|^2\textrm{d}x \textrm{d}t +\frac{1}{2}\int _{Q} \rho _i |y-y_{i,\textrm{d}}|^2 \textrm{d}x\textrm{d}t +\frac{1}{2}\int _{\Omega } \eta _i |y(T)-y_{i,\textrm{T}}|^2 \textrm{d}x \end{aligned}$$

for every \(v=(v_1, \ldots ,v_N)\in \mathcal{U}\), where \(\alpha _i >0\), \(\rho _i , \eta _i \in L^\infty (\Omega )\) with \(\rho _i, \eta _i\ge 0\), and the function \(y=y(v)\) is the solution of

with \(f,g_1,g_2,y_0,y_{i,\textrm{d}}\) and \(y_{i,\textrm{T}}\) being smooth enough functions and \(\chi _\omega :\Omega \rightarrow \mathbb {R}\) the characteristic function (with values 1 in \(\omega \) and 0 in \(\Omega \setminus \omega \)) for any \(\omega \subset \Omega \).

This generalizes the typical examples in the literature, which consider 2 controls (instead of N), \(\rho _i =k_i\chi _{\omega _{\textrm{d},i}}\) and \(\eta _i =l_i \chi _{\omega _{\textrm{T},i}}\), where \(k_i,l_i>0\) and \(\omega _{\textrm{d},i}, \omega _{\textrm{T},i}\subset \Omega \). A special case is when \(\omega _{\textrm{T},1}\cap \omega _{\textrm{T},2}\ne \emptyset \) and/or \(\omega _{\textrm{d},1}\cap \omega _{\textrm{d},2} \ne \emptyset \). This case is a competition-wise problem, with each control (or player) trying to reach (possibly) different goals over a common domain. In some sense, this is the case in which the behavior of the solution y associated with a Nash equilibrium is most difficult to forecast.

Remark 1

Most of the results to follow are also valid for more general linear operators such as, for instance,

The technique is also valid for different types of controls such as, for instance, boundary or initial controls. \(\square \)

Now, given \(i\in \{ 1, \ldots ,N\}\), for every \((w_1, \ldots ,w_{i-1},w_{i+1}, \ldots ,w_N)\in \mathcal{U}_1\times \cdots \times \mathcal{U}_{i-1}\times \mathcal{U}_{i+1} \times \cdots \times \mathcal{U}_N\) we consider the optimal control problem

The (unique) solution \(u_i(w_1, \ldots ,w_{i-1},w_{i+1}, \ldots ,w_N)\) of problem \((\mathcal{C}\mathcal{P}_i)\) is characterized by

Therefore, a Nash equilibrium is an N-tuple \((u_1, \ldots ,u_N)\in \mathcal{U}\) such that \(u_i=u_i(u_1, \ldots ,u_{i-1},u_{i+1}, \ldots ,u_N)\) for all \(i\in \{ 1, \ldots ,N\}\), i.e. \((u_1, \ldots ,u_N)\) is a solution of the coupled (optimality) system:

In the linear case studied here, this system of equations is a necessary and sufficient condition for u to be a Nash equilibrium. For nonlinear problems this system is, in general, only a necessary condition, although in some nonlinear cases (see, e.g., [17]) the functionals are convex and system (2) is also a sufficient condition.
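The fixed-point characterization \(u_i=u_i(u_1, \ldots ,u_{i-1},u_{i+1}, \ldots ,u_N)\) suggests a simple numerical scheme: update each control in turn by its best response to the others. The following is only a finite-dimensional sketch under stated assumptions, not the method of [7]: the discretized state is taken as \(y=\overline{y}+\sum _i S_iv_i\), with random matrices \(S_i\) standing in for hypothetical discrete solution operators \(v_i\mapsto \widetilde{y}(0, \ldots ,v_i, \ldots ,0)\), \(\eta _i=0\), and integrals replaced by Euclidean sums. Each best response solves the first-order condition \(\alpha _iv_i+S_i^{\top }\textrm{diag}(\rho _i)(y-y_{i,\textrm{d}})=0\) of \(J_i\) with the other controls frozen.

```python
import numpy as np

# Hypothetical finite-dimensional stand-in for the PDE game (eta_i = 0).
rng = np.random.default_rng(0)
m, sizes = 40, [5, 4, 6]                      # state size, sizes of the controls v_i
N = len(sizes)
S = [rng.standard_normal((m, n)) / np.sqrt(m) for n in sizes]  # v_i -> y~(0,..,v_i,..,0)
y_bar = rng.standard_normal(m)                # state obtained with all controls equal to 0
rho = [rng.uniform(0.0, 1.0, m) for _ in range(N)]             # weights rho_i >= 0
y_d = [rng.standard_normal(m) for _ in range(N)]               # targets y_{i,d}
alpha = [10.0, 12.0, 11.0]


def state(v):
    """y(v) = y_bar + sum_i S_i v_i (linearity of the state equation)."""
    return y_bar + sum(Si @ vi for Si, vi in zip(S, v))


def best_response(i, v):
    """Minimize J_i with respect to v_i, the other controls being frozen."""
    others = state(v) - S[i] @ v[i]           # part of the state not produced by v_i
    lhs = alpha[i] * np.eye(sizes[i]) + S[i].T @ (rho[i][:, None] * S[i])
    rhs = S[i].T @ (rho[i] * (y_d[i] - others))
    return np.linalg.solve(lhs, rhs)


def nash_residual(v):
    """Largest norm of alpha_i v_i + S_i^T diag(rho_i) (y(v) - y_{i,d})."""
    y = state(v)
    return max(np.linalg.norm(alpha[i] * v[i] + S[i].T @ (rho[i] * (y - y_d[i])))
               for i in range(N))


v = [np.zeros(n) for n in sizes]
for sweep in range(200):                      # Gauss-Seidel sweeps over the players
    for i in range(N):
        v[i] = best_response(i, v)
    if nash_residual(v) < 1e-10:
        break
```

The sweeps converge here because the \(\alpha _i\) are large compared with the coupling between players, in line with the size condition on \(\min _i\alpha _i\) used in Sect. 3; for small \(\alpha _i\) this kind of iteration may diverge even when an equilibrium exists.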

Following [7] it is easy to prove that, if \(i\in \{ 1, \ldots ,N\}\),

where for any \(v=(v_1, \ldots ,v_N)\in \mathcal{U}\) the function \(p_i=p_i(v)\) is the solution of the adjoint system

and \(y=y(v)\) is the solution of (1).
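This adjoint characterization is what makes gradient computations affordable: for any v, one forward solve for \(y(v)\) and one backward solve for \(p_i(v)\) give the derivative of \(J_i\) with respect to \(v_i\). A minimal sketch of this forward/backward structure, assuming (hypothetically) \(\Omega =(0,1)\), homogeneous Dirichlet conditions on the whole boundary, \(f=0\), \(N=2\), time-independent targets, finite differences in space and implicit Euler in time. The backward sweep below is the exact adjoint of this scheme, so its output (the discrete counterpart of \(\alpha _iv_i+p_i\chi _{\omega _i}\), scaled by the quadrature weight \(\Delta t\,\Delta x\)) can be compared with a central finite difference.

```python
import numpy as np

# Hypothetical discretization: Omega = (0,1), y = 0 on the boundary, f = 0, N = 2.
nx, K, T = 50, 40, 0.5
x = np.linspace(0.0, 1.0, nx + 2)[1:-1]       # interior nodes
dx, dt = x[1] - x[0], T / K
L = (2 * np.eye(nx) - np.eye(nx, k=1) - np.eye(nx, k=-1)) / dx**2   # discrete -Laplacian
M = np.eye(nx) + dt * L                       # implicit Euler matrix (symmetric)

chi = [(x > 0.1) & (x < 0.4), (x > 0.5) & (x < 0.8)]    # omega_1, omega_2
rho = ((x > 0.2) & (x < 0.7)).astype(float)             # common observation weight
eta = np.ones(nx)
alpha = [1e-2, 1e-2]
y0 = np.sin(np.pi * x)
y_d = [0.5 * np.ones(nx), -0.5 * np.ones(nx)]
y_T = [np.zeros(nx), np.zeros(nx)]


def solve_state(v):
    """Forward sweep; v[i] has shape (K, nx) and acts only on omega_i."""
    y, ys = y0.copy(), []
    for n in range(K):
        source = sum(c * vi[n] for c, vi in zip(chi, v))
        y = np.linalg.solve(M, y + dt * source)
        ys.append(y)
    return np.array(ys)                       # y^1, ..., y^K


def J(i, v):
    ys = solve_state(v)
    control = 0.5 * alpha[i] * dt * dx * np.sum(chi[i] * v[i] ** 2)
    tracking = 0.5 * dt * dx * np.sum(rho * (ys - y_d[i]) ** 2)
    final = 0.5 * dx * np.sum(eta * (ys[-1] - y_T[i]) ** 2)
    return control + tracking + final


def gradient(i, v):
    """Backward (adjoint) sweep; returns dJ_i/dv_i with the same shape as v[i]."""
    ys = solve_state(v)
    p = np.linalg.solve(M, eta * (ys[-1] - y_T[i]) + dt * rho * (ys[-1] - y_d[i]))
    grad = np.zeros((K, nx))
    for n in range(K - 1, -1, -1):            # p holds the adjoint at step n + 1
        grad[n] = dt * dx * chi[i] * (alpha[i] * v[i][n] + p)
        if n > 0:
            p = np.linalg.solve(M, p + dt * rho * (ys[n - 1] - y_d[i]))
    return grad


rng = np.random.default_rng(1)
v = [rng.standard_normal((K, nx)) for _ in chi]
d = rng.standard_normal((K, nx))              # perturbation direction for v_1
eps = 1e-3
fd = (J(0, [v[0] + eps * d, v[1]]) - J(0, [v[0] - eps * d, v[1]])) / (2 * eps)
adjoint = np.sum(gradient(0, v) * d)
```

Because each \(J_i\) is quadratic in v, the central difference is exact up to rounding, so `fd` and `adjoint` should agree to many digits.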

Therefore, system (2) is equivalent to the (optimality) system

3 Existence and Uniqueness of Solution of Nash Equilibria

Let us consider \(\alpha =(\alpha _1, \ldots ,\alpha _N)\). It is obvious that

is an affine mapping of \(\mathcal{U}\). Therefore, there exist a linear continuous mapping \(\mathcal{A}_{\alpha }\in \mathcal{L}(\mathcal{U},\mathcal{U})\) and a vector \(b\in \mathcal{U}\) such that

Let us identify the mapping \(\mathcal{A}_{\alpha }\): for every \(v=(v_1, \ldots ,v_N)\in \mathcal{U}\), the linear part of the affine mapping in relation (3) is defined by

where \(\widetilde{p}_i=\widetilde{p}_i(v)\), \(i \in \{ 1, \ldots ,N\}\), is the solution of

and \(\widetilde{y}=\widetilde{y}(v)\) is the solution of

Proposition 1

The mapping \(\mathcal{A}_{\alpha }:\mathcal{U}\rightarrow \mathcal{U}\) is linear and continuous. Furthermore, if \({\displaystyle \min _{i\in \{ 1, \ldots ,N\}} \{ \alpha _i\}}\) is sufficiently large, it is also \(\mathcal{U}\)-elliptic, i.e., there exists \(C>0\) such that

where \((\cdot ,\cdot )\) and \(||\cdot ||\) represent the canonical scalar product and norm of the Hilbert space \(\mathcal{U}\), respectively.

Proof

It is obvious that \(\mathcal{A}_{\alpha }\) is a linear mapping, and it is easy to show that it is continuous (see [18]).

Let us consider \(v=(v_1, \ldots ,v_N)\in \mathcal{U}\) and \(w=(w_1, \ldots ,w_N)\in \mathcal{U}\). We have then

Let us focus on the term \(\int _{\omega _i\times (0,T)} \widetilde{p}_i(v)w_i\textrm{d}x\textrm{d}t\), following the approach in [7] and [19]. We have

Then,

Since the mapping \(v\mapsto \widetilde{y}(v)\) is linear and continuous from \(\mathcal{U}\) to \({\mathcal C}([0,T];L^2(\Omega ))\) (see, e.g., [18]), there exists a constant \(c>0\) such that \(||\widetilde{y}(v)||_{L^2(Q)}+||\widetilde{y}(T;v)||_{L^2(\Omega )}\le c || v||\). Therefore,

with

Notice that \(C>0\) if \({\displaystyle \min _{i\in \{ 1, \ldots ,N\}} \{ \alpha _i\}>\sum _{i=1}^N c^2(||\rho _i||_{L^\infty (\Omega )}+||\eta _i||_{L^\infty (\Omega )})}\), which proves that \(\mathcal{A}_{\alpha }\) is \(\mathcal{U}\)-elliptic in that case and completes the proof. \(\square \)
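The coercivity estimate can be checked on a hypothetical finite-dimensional stand-in: random matrices \(S_i\) in place of \(v_i\mapsto \widetilde{y}(0, \ldots ,v_i, \ldots ,0)\), \(\eta _i=0\), and Euclidean inner products, so that block \((i,k)\) of \(\mathcal{A}_{\alpha }\) is \(\alpha _i\delta _{ik}I+S_i^{\top }\textrm{diag}(\rho _i)S_k\). Since \((\mathcal{A}_{\alpha }v,v)\) only sees the symmetric part of the matrix, the best constant is the smallest eigenvalue of \((\mathcal{A}_{\alpha }+\mathcal{A}_{\alpha }^{\top })/2\), and the constant \(\min _i\alpha _i-\sum _i c^2\Vert \rho _i\Vert _{\infty }\) from the proof (with c the norm of \(v\mapsto \widetilde{y}(v)\)) should never exceed it.

```python
import numpy as np

rng = np.random.default_rng(3)
m, sizes = 25, [3, 4, 2]
N = len(sizes)
S_blocks = [rng.standard_normal((m, n)) / np.sqrt(m) for n in sizes]  # hypothetical S_i
S = np.hstack(S_blocks)                       # v -> y~(v) = sum_i S_i v_i
rho = [rng.uniform(0.0, 1.0, m) for _ in range(N)]
alpha = np.array([20.0, 22.0, 25.0])

# A_alpha = D_alpha + B, block (i, k) of B being S_i^T diag(rho_i) S_k
D = np.diag(np.repeat(alpha, sizes))
B = np.block([[S_blocks[i].T @ (rho[i][:, None] * S_blocks[k]) for k in range(N)]
              for i in range(N)])
A = D + B

c = np.linalg.norm(S, 2)                      # ||y~(v)|| <= c ||v||
C_proof = alpha.min() - sum(c**2 * r.max() for r in rho)   # constant from the proof
C_best = np.linalg.eigvalsh(0.5 * (A + A.T)).min()         # inf of (A v, v) / ||v||^2
print(C_proof, C_best)
```

The bound from the proof is typically far from sharp, which is consistent with the remark after Theorem 1 that uniqueness can also hold for smaller \(\alpha _i\).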

Remark 2

Let us consider \(\alpha =(\alpha _1, \ldots ,\alpha _N)\). Operator \(\mathcal{A}_{\alpha } :{\mathcal U}\rightarrow {\mathcal U}\) can be rewritten as

where \(\mathcal{D}_{\alpha }:{\mathcal U} \rightarrow {\mathcal U}\) is the homeomorphism defined by

and

with

If \(N=1\) (classical single-objective optimization problem), then operator \(\mathcal{B}\) is non-negative. But for \(N\ge 2\) (which is the case of the multiobjective problem of interest in this work) this property does not hold in general. For instance, if \(N=2\) (just for the sake of simplicity) and \(\eta _1=\eta _2=0\), then

Let us consider \(v_1\) positive and \(v_2\) negative. Then, by the maximum principle, \(\widetilde{y}(v_1,0)>0\) and \(\widetilde{y}(0,v_2)<0\) in Q. Let us suppose \(\widetilde{y}(v_1,v_2)= \widetilde{y}(v_1,0)+\widetilde{y}(0,v_2)>0\) (if \(\widetilde{y}(v_1,v_2)=0\) we can change \(v_1\) or \(v_2\); the case \(\widetilde{y}(v_1,v_2)<0\) can be treated similarly).

Now, if \(\rho _1= 1\) and

then \((\mathcal{B}v,v)<0\). \(\square \)
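A one-point toy model makes the sign argument of Remark 2 concrete. Assume, hypothetically, a single state value with \(\widetilde{y}(v_1,v_2)=v_1+v_2\) (so \(\widetilde{y}(v_1,0)=v_1\) and \(\widetilde{y}(0,v_2)=v_2\)), \(\eta _1=\eta _2=0\), \(\rho _1=1\) and \(\rho _2=k\). Then \((\mathcal{B}v,v)=\rho _1\widetilde{y}(v)\widetilde{y}(v_1,0)+\rho _2\widetilde{y}(v)\widetilde{y}(0,v_2)=(v_1+v_2)(v_1+kv_2)\), and with \(v_1=2>0\), \(v_2=-1<0\) (so that \(\widetilde{y}(v)=1>0\)) this equals \(2-k\), which is negative as soon as \(k>2\).

```python
import numpy as np


def B_matrix(rho1, rho2):
    """Toy B for y~(v1, v2) = v1 + v2, eta = 0: block (i, k) is rho_i * S_i^T S_k."""
    S1 = np.array([[1.0]])                    # v1 -> y~(v1, 0)
    S2 = np.array([[1.0]])                    # v2 -> y~(0, v2)
    return np.block([[rho1 * S1.T @ S1, rho1 * S1.T @ S2],
                     [rho2 * S2.T @ S1, rho2 * S2.T @ S2]])


v = np.array([2.0, -1.0])                     # v1 > 0, v2 < 0, y~(v) = 1 > 0
for k in (1.0, 2.0, 3.0):
    print(k, v @ B_matrix(1.0, k) @ v)        # equals 2 - k
```

With \(\rho _1=\rho _2\) the matrix is symmetric and \((\mathcal{B}v,v)=\rho \,\widetilde{y}(v)^2\ge 0\), which is the situation studied in Sect. 4.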

Let us identify b: the constant part of the affine mapping (3) is given by the function \(b\in \mathcal{U}\) defined by \(b=-(\overline{p}_1\chi _{\omega _1}, \ldots ,\overline{p}_N\chi _{\omega _N}),\) where \(\overline{p}_i\), \(i\in \{1, \ldots ,N\}\), is the solution of

and \(\overline{y}\) is the solution of

Notice that, for any \(v\in \mathcal{U}\), \(y(v)=\widetilde{y}(v)+\overline{y}\) and \(p_i(v)=\widetilde{p}_i(v)+\overline{p}_i\).
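In a finite-dimensional analogue the identification of \(\mathcal{A}_{\alpha }\) and b becomes explicit. Assume (hypothetically) \(y(v)=\overline{y}+\sum _iS_iv_i\), with matrices \(S_i\) standing in for \(v_i\mapsto \widetilde{y}(0, \ldots ,v_i, \ldots ,0)\), \(\eta _i=0\), and Euclidean inner products. Then the optimality map becomes \(F(v)=(\alpha _iv_i+S_i^{\top }\textrm{diag}(\rho _i)(y(v)-y_{i,\textrm{d}}))_i\); its linear part has blocks \(\alpha _i\delta _{ik}I+S_i^{\top }\textrm{diag}(\rho _i)S_k\) (the analogue of the terms driven by \(\widetilde{y}\)), and \(b=-F(0)\) has components \(-S_i^{\top }\textrm{diag}(\rho _i)(\overline{y}-y_{i,\textrm{d}})\) (the analogue of \(-\overline{p}_i\chi _{\omega _i}\), driven by \(\overline{y}\)).

```python
import numpy as np

rng = np.random.default_rng(0)
m, sizes = 30, [4, 3]
N = len(sizes)
S = [rng.standard_normal((m, n)) / np.sqrt(m) for n in sizes]   # hypothetical S_i
y_bar = rng.standard_normal(m)
rho = [rng.uniform(0.0, 1.0, m) for _ in range(N)]
y_d = [rng.standard_normal(m) for _ in range(N)]
alpha = [5.0, 6.0]
cuts = np.cumsum([0] + sizes)


def split(v):
    return [v[cuts[i]:cuts[i + 1]] for i in range(N)]


def F(v):
    """Affine optimality map: component i is alpha_i v_i + S_i^T diag(rho_i) (y(v) - y_{i,d})."""
    vs = split(v)
    y = y_bar + sum(Si @ vi for Si, vi in zip(S, vs))
    return np.concatenate([alpha[i] * vs[i] + S[i].T @ (rho[i] * (y - y_d[i])) for i in range(N)])


D = np.diag(np.repeat(alpha, sizes))                                   # D_alpha
B = np.block([[S[i].T @ (rho[i][:, None] * S[k]) for k in range(N)] for i in range(N)])
A = D + B                                                              # linear part
b = -np.concatenate([S[i].T @ (rho[i] * (y_bar - y_d[i])) for i in range(N)])

v = rng.standard_normal(cuts[-1])
assert np.allclose(F(v), A @ v - b)           # F(v) = A_alpha v - b
u = np.linalg.solve(A, b)                     # the Nash equilibrium
```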

Theorem 1

If \({\displaystyle \min _{i\in \{ 1, \ldots ,N\}} \{ \alpha _i\}}\) is sufficiently large, there exists a unique Nash equilibrium of the problem defined in Sect. 2.

Proof

As shown above, the Nash equilibria are characterized by the solutions of (2), which in turn are characterized by the solutions \(u\in \mathcal{U}\) of

where \(a_{\alpha }(\cdot ,\cdot ):\mathcal{U}\times \mathcal{U}\rightarrow \mathbb {R}\) is defined by

and \(L:\mathcal{U}\rightarrow \mathbb {R}\) by

Proposition 1 proves that the form \(a_{\alpha }(\cdot ,\cdot )\) is bilinear, continuous and, if \({\displaystyle \min _{i\in \{ 1, \ldots ,N\}} \{ \alpha _i\}}\) is sufficiently large, also \(\mathcal{U}\)-elliptic. Furthermore, the mapping L is (obviously) linear and continuous. Thus, by the (well-known) Lax–Milgram theorem, system (2) has a unique solution or, equivalently, there exists a unique Nash equilibrium of the problem defined in Sect. 2, if \({\displaystyle \min _{i\in \{ 1, \ldots ,N\}} \{ \alpha _i\}}\) is sufficiently large. \(\square \)

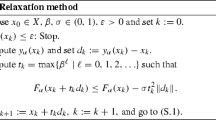

The discretization of the problem considered above and the development of suitable algorithms to compute a numerical approximation of the Nash equilibria can follow the approaches in [7] and [19]. Due to the properties proven in Proposition 1, the conjugate gradient algorithm is well-suited for the numerical resolution. All the details about how to do this can be found in [7].
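A conjugate gradient iteration only needs products \(v\mapsto \mathcal{A}_{\alpha }v\); in the PDE setting each product costs one forward solve for \(\widetilde{y}(v)\) and the backward solves for \(\widetilde{p}_i(v)\). Below is a minimal matrix-free sketch. One assumption should be stated plainly: textbook CG requires a self-adjoint, positive definite operator, and Proposition 1 gives ellipticity but not self-adjointness, which in general fails when the weights \(\rho _i\), \(\eta _i\) differ. The test operator therefore has the self-adjoint form of Sect. 4, \(\mathcal{D}_{\alpha }+S^{\top }\textrm{diag}(\rho )S\), with a random matrix S standing in (hypothetically) for \(v\mapsto \widetilde{y}(v)\); for a non-self-adjoint \(\mathcal{A}_{\alpha }\), a Krylov method for nonsymmetric systems such as GMRES would be the natural replacement.

```python
import numpy as np


def conjugate_gradient(apply_A, b, tol=1e-10, max_iter=500):
    """Textbook CG for a self-adjoint positive definite operator given as a callable."""
    u = np.zeros_like(b)
    r = b - apply_A(u)                        # residual
    d = r.copy()                              # search direction
    rr = r @ r
    for _ in range(max_iter):
        if np.sqrt(rr) <= tol * np.linalg.norm(b):
            break
        Ad = apply_A(d)
        step = rr / (d @ Ad)
        u += step * d
        r -= step * Ad
        rr_new = r @ r
        d = r + (rr_new / rr) * d
        rr = rr_new
    return u


# Hypothetical stand-in for the Sect. 4 operator: D_alpha + S^T diag(rho) S
rng = np.random.default_rng(2)
m, sizes = 60, [5, 7, 4]
S = rng.standard_normal((m, sum(sizes))) / np.sqrt(m)
rho = rng.uniform(0.0, 1.0, m)
alpha_diag = np.repeat([0.5, 1.0, 2.0], sizes)


def apply_A(v):
    return alpha_diag * v + S.T @ (rho * (S @ v))   # matrix-free product


b = rng.standard_normal(sum(sizes))
u = conjugate_gradient(apply_A, b)
```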

We have seen that, if \(\alpha =\big ( \alpha _1 , \ldots , \alpha _N\big )\in (0,\infty )^N\) and \({\displaystyle \min _{i\in \{ 1, \ldots ,N\}} \{ \alpha _i\}}\) is sufficiently large, equation (2), or equivalently \(\mathcal{A}_{\alpha } u =b\), has a unique solution \(u\in \mathcal{U}\). A natural mathematical question arises: do existence and uniqueness of solution also hold for some \(\alpha \in \mathbb {R}^N\) not satisfying those requirements? The answer is yes, as the following theorem shows.

Theorem 2

Let us take \(\widetilde{\alpha }=\big ( \widetilde{\alpha }_1 , \ldots , \widetilde{\alpha }_N\big )\in \Phi = \{ \big ( \alpha _1 , \ldots , \alpha _N\big ) \in \mathbb {R}^N: \alpha _i\ne 0 \ \forall \ i\in \{ 1, \ldots ,N\} \}\) and \(\alpha =\gamma \widetilde{\alpha }\), with \(\gamma >0\). Then, the equation \(\mathcal{A}_{\alpha } u =b\) has a unique solution for every \(\gamma >0\) except, at most, a sequence of values of \(\gamma \) converging to 0.

Proof

Following Remarks 2 and 4, if \(\alpha =\gamma \widetilde{\alpha }\), equation (2), or equivalently \(\mathcal{A}_{\alpha } u =b\), can be rewritten as

where \(\left( \mathcal{D}_{\alpha }\right) ^{-1}:{\mathcal U} \rightarrow {\mathcal U}\) is the homeomorphism defined by

and the operator \(\left( \mathcal{D}_{\alpha }\right) ^{-1}\mathcal{B}:{\mathcal U} \rightarrow {\mathcal U}\) is compact (this property can be easily proven by applying the Aubin–Lions Compactness Lemma; see, for instance, [20]).

Then, according to the Fredholm alternative (see Theorem 6.6 of [21]), equation (5) has a unique solution for any \(b\in {\mathcal U}\) (i.e. \(-1\) belongs to the resolvent set of \(\left( \mathcal{D}_{\alpha }\right) ^{-1}\mathcal{B}\) or, equivalently, it does not belong to its spectrum) if, and only if, \(v=0\) is the unique solution to the homogeneous equation

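i.e. \(-1\) is not an eigenvalue of \(\left( \mathcal{D}_{\alpha }\right) ^{-1}\mathcal{B}\). Since \(\mathcal{D}_{\alpha }=\gamma \mathcal{D}_{\widetilde{\alpha }}\), we have \(\left( \mathcal{D}_{\alpha }\right) ^{-1}\mathcal{B}=\gamma ^{-1}\left( \mathcal{D}_{\widetilde{\alpha }}\right) ^{-1}\mathcal{B}\), so \(-1\) is an eigenvalue of \(\left( \mathcal{D}_{\alpha }\right) ^{-1}\mathcal{B}\) if, and only if, \(-\gamma \) is an eigenvalue of the compact operator \(\left( \mathcal{D}_{\widetilde{\alpha }}\right) ^{-1}\mathcal{B}\), which does not depend on \(\gamma \). Now, if \(\widetilde{\sigma }\) is the spectrum of \(\left( \mathcal{D}_{\widetilde{\alpha }}\right) ^{-1}\mathcal{B}\), then \(\widetilde{\sigma }\setminus \{ 0\}\) is, at most, a sequence converging to 0 (see Theorem 6.8 of [21]). Hence uniqueness can only fail for the values \(\gamma >0\) such that \(-\gamma \in \widetilde{\sigma }\setminus \{ 0\}\), which form, at most, a sequence converging to 0. This proves the theorem. \(\square \)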
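In finite dimensions the argument can be reproduced directly: with \(\alpha =\gamma \widetilde{\alpha }\), the matrix \(\mathcal{A}_{\alpha }=\gamma \mathcal{D}_{\widetilde{\alpha }}+\mathcal{B}\) is singular exactly when \(-\gamma \) is an eigenvalue of \(\mathcal{D}_{\widetilde{\alpha }}^{-1}\mathcal{B}\), and the exceptional set is then finite. The sketch uses, as a hypothetical example, the \(2\times 2\) toy matrix \(\mathcal{B}\) obtained for \(\widetilde{y}(v_1,v_2)=v_1+v_2\), \(\rho _1=1\), \(\rho _2=3\), \(\eta _i=0\), together with \(\widetilde{\alpha }=(-1,-1)\), which is admissible because \(\Phi \) only requires \(\widetilde{\alpha }_i\ne 0\).

```python
import numpy as np

B = np.array([[1.0, 1.0],
              [3.0, 3.0]])                    # toy B: y~(v) = v1 + v2, rho = (1, 3), eta = 0
alpha_tilde = np.array([-1.0, -1.0])
D_tilde = np.diag(alpha_tilde)


def A(gamma):
    """A_alpha for alpha = gamma * alpha_tilde."""
    return gamma * D_tilde + B


lam = np.linalg.eigvals(np.linalg.solve(D_tilde, B))     # spectrum of D_tilde^{-1} B
exceptional = sorted(-l.real for l in lam if abs(l.imag) < 1e-12 and l.real < -1e-12)
print(exceptional)                            # gamma > 0 for which A(gamma) is singular
```

Here \(\det \mathcal{A}_{\alpha }=\gamma (\gamma -4)\), so \(\gamma =4\) is the only exceptional value and, for instance, \(\gamma =1\) gives a uniquely solvable system.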

Remark 3

The condition "for any \(b\in {\mathcal U}\)" used in the proof of Theorem 2 is stronger than necessary, since b cannot be an arbitrary element of \({\mathcal U}\) (due to the smoothing effect of parabolic equations). This implies that the equation \(\mathcal{A}_{\alpha } u =b\) could have a unique solution for more values \(\alpha \in \mathbb {R}^N\) (not only for those covered by the mentioned theorem). \(\square \)

4 Equivalent Single-Objective Control Problems

In this section we show that, in some cases, the solutions of the noncooperative differential games defining Nash equilibria are also the solutions of suitable optimization problems, where all the controls cooperate to minimize a single-objective cost function.

Let us consider the subfamily of problems defined in Sect. 2 for which there exist \(\rho \in L^\infty (\Omega )\) and \(\eta \in L^\infty (\Omega )\) such that \(\rho _i=\rho \) and \(\eta _{i}=\eta \) in \(\Omega \), for all \(i\in \{ 1, \ldots ,N\}\). Therefore, in this case the functional \(J_{i}\), with \(i\in \{1, \ldots ,N\}\), is given by

with \(y=y(v)\) being the solution of (1).

As in the general case studied in Sect. 2, a Nash equilibrium is an N-tuple \((u_1, \ldots ,u_N)\in \mathcal{U}=\mathcal{U}_1\times \cdots \times \mathcal{U}_N\) solution of (2), where

for any \(v=(v_1, \ldots ,v_N)\in \mathcal{U}\) and \(p_i=p_i(v)\) is now the solution of

Therefore, system (2) is equivalent in this case to

Again

and now

where \(\widetilde{p}=\widetilde{p}(v)\) is the solution of

Proposition 2

For the family of problems studied in Sect. 4, the mapping \(\mathcal{A}_{\alpha }:\mathcal{U}\rightarrow \mathcal{U}\) is linear, continuous, self-adjoint and \(\mathcal{U}\)-elliptic.

Proof

Following the proof of Proposition 1, \(\mathcal{A}_{\alpha }\) is a linear and continuous mapping. Furthermore, given \(v=(v_1, \ldots ,v_N)\in \mathcal{U}\) and \(w=(w_1, \ldots ,w_N)\in \mathcal{U}\),

and

which proves that \(\mathcal{A}_{\alpha }\) is self-adjoint and \(\mathcal{U}\)-elliptic. \(\square \)
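The structural difference between the general case and the family of this section is easy to observe numerically. In a hypothetical finite-dimensional stand-in (random matrices \(S_i\) for \(v_i\mapsto \widetilde{y}(0, \ldots ,v_i, \ldots ,0)\), \(\eta _i=0\)), block \((i,k)\) of \(\mathcal{B}\) is \(S_i^{\top }\textrm{diag}(\rho _i)S_k\). With a common weight \(\rho _i=\rho \) this is \(S^{\top }\textrm{diag}(\rho )S\), symmetric and positive semidefinite with \((\mathcal{B}v,v)=\sum \rho \,\widetilde{y}(v)^2\); with different weights the symmetry is lost.

```python
import numpy as np

rng = np.random.default_rng(4)
m, sizes = 20, [3, 3]
S_blocks = [rng.standard_normal((m, n)) for n in sizes]   # hypothetical S_i
S = np.hstack(S_blocks)


def B_of(rhos):
    """Block (i, k) equals S_i^T diag(rho_i) S_k."""
    N = len(S_blocks)
    return np.block([[S_blocks[i].T @ (rhos[i][:, None] * S_blocks[k]) for k in range(N)]
                     for i in range(N)])


def asymmetry(M):
    return np.abs(M - M.T).max()


rho = rng.uniform(0.0, 1.0, m)
B_same = B_of([rho, rho])                             # Sect. 4: rho_1 = rho_2 = rho
B_diff = B_of([rho, rng.uniform(0.0, 1.0, m)])        # general weights of Sect. 2

v = rng.standard_normal(S.shape[1])
print(asymmetry(B_same), asymmetry(B_diff))
print(v @ B_same @ v, np.sum(rho * (S @ v) ** 2))    # (Bv, v) = sum rho * y~(v)^2
```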

Remark 4

Notice that, as in Remark 2, \(\mathcal{A}_{\alpha } v\) can be rewritten as

and now, with the assumptions given in this section, operator \(\mathcal{B}\) is self-adjoint and non-negative, since

The constant part of the affine mapping (3) is given by the function \(b\in \mathcal{U}\) defined by \(b=-(\overline{p}_1\chi _{\omega _1}, \ldots ,\overline{p}_N\chi _{\omega _N}),\) where \(\overline{p}_i\), \(i\in \{1, \ldots ,N\}\), is now the solution of

and \(\overline{y}\) is the solution of (4).

Theorem 3

There exists a unique Nash equilibrium of the problem defined in Sect. 4.

Proof

The proof follows the one of Theorem 1, taking into account that in this case \(\mathcal{A}_{\alpha }\) is unconditionally \(\mathcal{U}\)-elliptic. \(\square \)

The discretization of the problem considered above and the development of suitable algorithms to compute a numerical approximation of the Nash equilibria are given in [7], where numerical examples are also shown.

Theorem 4

The (unique) Nash equilibrium \(u=(u_1, \ldots ,u_N)\in \mathcal{U}\) of the problem defined in Sect. 4 is the (unique) solution of each of the following optimal control problems:

- Find \(u\in \mathcal{U}\) such that \({\displaystyle J(u)=\min _{v\in \mathcal{U}}J(v)}\), where

$$\begin{aligned} J(v)= & {} \sum _{i=1}^N \frac{\alpha _i}{2}\int _{\omega _i\times (0,T)} |v_i|^2\textrm{d}x \textrm{d}t \\{} & {} +\frac{1}{2}\sum _{i=1}^N\left( \int _{Q} \rho |y(0, \ldots ,v_i, \ldots ,0)-y_{i,\textrm{d}}|^2 \textrm{d}x\textrm{d}t \right. \\{} & {} \left. + \int _{\Omega } \eta |y(T; 0, \ldots ,v_i, \ldots ,0)-y_{i,\textrm{T}}|^2 \textrm{d}x \right) \\{} & {} +\sum _{i,j=1 (i<j)}^N\left( \int _{Q} \rho \widetilde{y}(0, \ldots ,v_i, \ldots ,0) \widetilde{y}(0, \ldots ,v_j, \ldots ,0) \textrm{d}x\textrm{d}t \right. \\{} & {} \left. +\int _{\Omega } \eta \widetilde{y}(T;0, \ldots ,v_i, \ldots ,0)\widetilde{y}(T;0, \ldots ,v_j, \ldots ,0)\textrm{d}x\right) . \end{aligned}$$

- Given \(j,p\in \{ 1, \ldots ,N\}\), find \(u\in \mathcal{U}\) such that \({\displaystyle J_{j,p}(u)=\min _{v\in \mathcal{U}}J_{j,p}(v)}\), where

$$\begin{aligned} J_{j,p}(v)= & {} \sum _{i=1}^N \frac{\alpha _i}{2}\int _{\omega _i\times (0,T)} |v_i|^2\textrm{d}x \textrm{d}t \\{} & {} +\frac{1}{2}\int _{Q} \rho |y(v)-y_{j,\textrm{d}}|^2 \textrm{d}x\textrm{d}t + \frac{1}{2}\int _{\Omega } \eta |y(T;v)-y_{p,\textrm{T}}|^2 \textrm{d}x \\{} & {} +\sum _{i=1 (i\ne j)}^N\int _{Q} \rho (y_{j,\textrm{d}}-y_{i,\textrm{d}})\widetilde{y}(0, \ldots ,v_i, \ldots ,0) \textrm{d}x\textrm{d}t \\{} & {} +\sum _{i=1 (i\ne p)}^N \int _{\Omega } \eta (y_{p,\textrm{T}}-y_{i,\textrm{T}})\widetilde{y} (T;0, \ldots ,v_i, \ldots ,0) \textrm{d}x. \end{aligned}$$

Proof

We have seen previously that there exists a unique Nash equilibrium, which is the solution \(u\in \mathcal{U}\) of

Then, because of the properties of \(\mathcal{A}_{\alpha }\) given in Proposition 2, we have (see, e.g., [22, Theorem 2.44]) that

with

Then, using that \(\widetilde{y}(v)={\displaystyle \sum _{i=1}^N \widetilde{y}(0, \ldots ,v_i, \ldots ,0)}\), we have that

Hence, using that \(y=\widetilde{y}+\overline{y}\) we have that

where

is a constant (independent of v), which completes the proof of the first part of the theorem.
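For the reader's convenience, the algebra behind the first part can be written out with the shorthand \(\widetilde{y}_i=\widetilde{y}(0, \ldots ,v_i, \ldots ,0)\), introduced only here. Expanding the square of \(\widetilde{y}(v)=\sum _i\widetilde{y}_i\) produces the coupling terms, and completing the square with \(y(0, \ldots ,v_i, \ldots ,0)=\widetilde{y}_i+\overline{y}\) produces the tracking terms up to a constant independent of v; the terms with \(\eta \) are handled in the same way at \(t=T\):

$$\begin{aligned} \frac{1}{2}\int _Q \rho \,|\widetilde{y}(v)|^2\textrm{d}x\textrm{d}t&= \frac{1}{2}\sum _{i=1}^N\int _Q \rho \,|\widetilde{y}_i|^2\textrm{d}x\textrm{d}t+\sum _{i,j=1 (i<j)}^N\int _Q \rho \,\widetilde{y}_i\,\widetilde{y}_j\,\textrm{d}x\textrm{d}t,\\ \frac{1}{2}\int _Q \rho \,|\widetilde{y}_i|^2\textrm{d}x\textrm{d}t+\int _Q \rho \,(\overline{y}-y_{i,\textrm{d}})\,\widetilde{y}_i\,\textrm{d}x\textrm{d}t&= \frac{1}{2}\int _Q \rho \,|y(0, \ldots ,v_i, \ldots ,0)-y_{i,\textrm{d}}|^2\textrm{d}x\textrm{d}t-\frac{1}{2}\int _Q \rho \,|\overline{y}-y_{i,\textrm{d}}|^2\textrm{d}x\textrm{d}t. \end{aligned}$$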

In order to prove the second part of the theorem, given \(j\in \{ 1, \ldots ,N\}\), let us focus on the following terms of \(\widetilde{J}(v)\):

Something similar can be done with other terms of \(\widetilde{J}(v)\), so that

where

which completes the proof. \(\square \)
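Theorem 4 can be sanity-checked in finite dimensions. The sketch below is a hypothetical stand-in, not the discretization of [7]: \(y(v)=\overline{y}+\sum _iS_iv_i\) with random matrices \(S_i\), a common weight \(\rho \), \(\eta =0\) (the terminal terms behave the same way), and Euclidean sums in place of integrals. It computes the Nash equilibrium from \(\mathcal{A}_{\alpha }u=b\) and checks by central differences that the gradients of both cooperative costs vanish there. The coupling terms are taken in the form \(\sum _{i<j}\int _Q\rho \,\widetilde{y}(0, \ldots ,v_i, \ldots ,0)\,\widetilde{y}(0, \ldots ,v_j, \ldots ,0)\) and \(\sum _{i\ne j}\int _Q\rho (y_{j,\textrm{d}}-y_{i,\textrm{d}})\widetilde{y}(0, \ldots ,v_i, \ldots ,0)\), i.e. the terms produced by expanding \(\frac{1}{2}(\mathcal{A}_{\alpha }v,v)-(b,v)\).

```python
import numpy as np

rng = np.random.default_rng(5)
m, sizes = 30, [4, 3, 5]
N = len(sizes)
S = [rng.standard_normal((m, n)) / np.sqrt(m) for n in sizes]   # v_i -> y~(0,..,v_i,..,0)
y_bar = rng.standard_normal(m)
rho = rng.uniform(0.0, 1.0, m)                                   # common weight (Sect. 4)
y_d = [rng.standard_normal(m) for _ in range(N)]
alpha = np.array([0.3, 0.5, 0.2])                                # no size condition in Sect. 4
cuts = np.cumsum([0] + sizes)


def split(v):
    return [v[cuts[i]:cuts[i + 1]] for i in range(N)]


def J(v):
    """First cooperative cost of Theorem 4 (eta = 0)."""
    vs = split(v)
    yt = [S[i] @ vs[i] for i in range(N)]                          # y~(0,..,v_i,..,0)
    val = sum(0.5 * alpha[i] * vs[i] @ vs[i] for i in range(N))
    val += 0.5 * sum(rho @ (yt[i] + y_bar - y_d[i]) ** 2 for i in range(N))
    val += sum(rho @ (yt[i] * yt[k]) for i in range(N) for k in range(i + 1, N))
    return val


def J_jp(v, j):
    """Second cooperative cost of Theorem 4 with target index j (eta = 0)."""
    vs = split(v)
    y = y_bar + sum(S[i] @ vs[i] for i in range(N))
    val = sum(0.5 * alpha[i] * vs[i] @ vs[i] for i in range(N))
    val += 0.5 * rho @ (y - y_d[j]) ** 2
    val += sum(rho @ ((y_d[j] - y_d[i]) * (S[i] @ vs[i])) for i in range(N) if i != j)
    return val


# Nash equilibrium from A u = b, with A = D_alpha + S^T diag(rho) S
S_all = np.hstack(S)
A = np.diag(np.repeat(alpha, sizes)) + S_all.T @ (rho[:, None] * S_all)
b = -np.concatenate([S[i].T @ (rho * (y_bar - y_d[i])) for i in range(N)])
u = np.linalg.solve(A, b)


def fd_grad(f, v, h=1e-3):
    """Central differences (exact up to rounding for quadratic f)."""
    e = np.eye(v.size)
    return np.array([(f(v + h * e[k]) - f(v - h * e[k])) / (2 * h) for k in range(v.size)])


print(np.abs(fd_grad(J, u)).max())
print(max(np.abs(fd_grad(lambda w: J_jp(w, j), u)).max() for j in range(N)))
```

Both costs have Hessian \(\mathcal{A}_{\alpha }\), which is positive definite here, so a vanishing gradient at u means u is their unique minimizer.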

5 Conclusions

This paper studies Nash equilibria of noncooperative differential games with several players (controllers), each one trying to minimize his own cost function defined in terms of a general class of linear partial differential equations. We give results on the existence and uniqueness of Nash equilibria and show how, in some cases, the corresponding Nash equilibria (solutions to competition-wise problems, with each control trying to reach possibly different goals) are also the solutions of suitable single-objective optimization problems (i.e. cooperative-wise problems, where all the controls cooperate to reach a common goal). A natural question arises: are there Nash equilibria associated with nonlinear problems that can also be characterized as the solutions of single-objective problems? This remains an open problem for interested researchers. One should take into account that, in the linear case studied here, the optimality system (2) is a necessary and sufficient condition for the Nash equilibria, but in general it is only a necessary condition. Most of the works dealing with nonlinear problems find solutions of the corresponding optimality system (which are not proved to be Nash equilibria). However, in [17] the existence of Nash equilibria is proved for a nonlinear problem by showing that the functionals are convex, so that the optimality system is also a sufficient condition.

References

Nash, J.F.: Non-cooperative games. Ann. Math. 54(2), 286–295 (1951)

Díaz, J.I., Lions, J.L.: On the approximate controllability of Stackelberg-Nash strategies. In: Díaz, J.I. (ed.) Ocean Circulation and Pollution Control - A Mathematical and Numerical Investigation, pp. 17–27. Springer, Berlin (2004)

Pareto, V.: Cours d’Économie Politique. Rouge, Lausanne (1896)

Von Stackelberg, H.: Marktform und Gleichgewicht. Springer, Berlin (1934)

Guillén-González, F., Marques-Lopes, F., Rojas-Medar, M.: On the approximate controllability of Stackelberg-Nash strategies for Stokes equations. Proc. Am. Math. Soc. 141(5), 1759–1773 (2013)

Araruna, F.D., Fernández-Cara, E., Guerrero, S., Santos, M.C.: New results on the Stackelberg-Nash exact control of linear parabolic equations. Syst. Control Lett. 104, 78–85 (2017). https://doi.org/10.1016/j.sysconle.2017.03.009

Ramos, A.M., Glowinski, R., Periaux, J.: Nash equilibria for the multi-objective control of linear partial differential equations. J. Optim. Theory Appl. 112(3), 457–498 (2002). https://doi.org/10.1023/A:1017981514093

Ramos, A.M., Glowinski, R., Periaux, J.: Pointwise control of the Burgers equation and related Nash equilibrium problems: a computational approach. J. Optim. Theory Appl. 112(3), 499–516 (2002). https://doi.org/10.1023/A:1017907930931

Ramos, A.M.: Numerical methods for Nash equilibria in multiobjective control of partial differential equations. In: Barbu, V., Lasiecka, I., Tiba, D., Varsan, C. (eds.) Analysis and Optimization of Differential Systems. SEC 2002. IFIP - The International Federation for Information Processing, vol. 121, pp. 333–344. Springer, Boston (2003). https://doi.org/10.1007/978-0-387-35690-7_34

de Jesus, I.P.: Remarks on hierarchic control for a linearized micropolar fluids system in moving domains. Appl. Math. Optim. 72, 493–521 (2015). https://doi.org/10.1007/s00245-015-9288-2

de Jesus, I.P.: Hierarchical control for the wave equation with a moving boundary. J. Optim. Theory Appl. 171(3), 336–350 (2016). https://doi.org/10.1007/s10957-016-0984-0

Ramos, A.M.: Nash equilibria strategies and equivalent single-objective optimization problems. The case of linear partial differential equations (2022). arXiv:1908.11858

Lions, J.L.: Quelques notions dans l’analyse et le contrôle de systèmes à données incomplètes. In: Proceedings of the XIth Congress on Differential Equations and Applications, First Congress on Applied Mathematics, University of Málaga, Málaga, pp. 43–54 (1990)

De Teresa, L.: Insensitizing controls for a semilinear heat equation. Commun. Partial Differ. Equ. 25(1 & 2), 39–72 (2000)

Bodart, O., González-Burgos, M., Pérez-García, R.: Existence of insensitizing controls for a semilinear heat equation with a superlinear nonlinearity. Commun. Partial Differ. Equ. 29(7 & 8), 1017–1050 (2004). https://doi.org/10.1081/PDE-200033749

Herrera, B., Ivorra, B., Ramos, A.M.: RaBVIt-SG, an algorithm for solving feedback Nash equilibria in multiplayers stochastic differential games. ResearchGate Preprint (2019). https://doi.org/10.13140/RG.2.2.19217.79208

Ramos, A.M., Roubicek, T.: Nash equilibria in noncooperative predator-prey games. Appl. Math. Optim. 56(2), 211–241 (2007). https://doi.org/10.1007/s00245-007-0894-5

Lions, J.L., Magenes, E.: Problèmes aux Limites Non-Homogènes et Applications, vol. I and II. Dunod, Paris (1968)

Carvalho, P.P., Fernández-Cara, E.: On the computation of Nash and Pareto equilibria for some bi-objective control problems. J. Sci. Comput. 78, 246–273 (2019). https://doi.org/10.1007/s10915-018-0764-0

Crespo, M., Ivorra, B., Ramos, A.M.: Existence and Uniqueness of Solution of a Continuous Flow Bioreactor Model with Two Species. Revista de la Real Academia de Ciencias Exactas, Físicas y Naturales. Serie A. Matemáticas 110, 357–377 (2016). https://doi.org/10.1007/s13398-015-0237-3

Brezis, H.: Functional Analysis, Sobolev Spaces and Partial Differential Equations. Springer, New York (2011)

Ramos, A.M.: Introducción al análisis matemático del método de elementos finitos. Editorial Complutense, Madrid (2012)

Acknowledgements

The research of the author was partially supported by the Spanish Government under Projects PID2019-106337GB-I00, MTM2015-64865-P, and the Research Group MOMAT (Ref. 910480) of the Complutense University of Madrid.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Ethics declarations

Competing Interests

The authors have not disclosed any competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ramos, A.M. Nash Equilibria Strategies and Equivalent Single-Objective Optimization Problems. The Case of Linear Partial Differential Equations. Appl Math Optim 87, 30 (2023). https://doi.org/10.1007/s00245-022-09944-2

DOI: https://doi.org/10.1007/s00245-022-09944-2