Abstract

This paper considers what liberal philosopher Michael Sandel terms the ‘moral limits of markets’ in relation to the idea of paying people for data about their biometrics and emotions. While Sandel argues that certain aspects of human life (such as our bodies and body parts) should be beyond monetisation and exchange, others argue that emerging technologies such as Personal Information Management Systems can enable a fairer, paid data exchange between the individual and the organisation, even regarding highly personal data about our bodies and emotions. As the field of data ethics rarely addresses questions of payment, this paper explores normative questions about data dividends. It does so by conducting a UK-wide, demographically representative online survey to quantitatively assess adults’ views on being paid for personal data about their biometrics and emotions via a Personal Information Management System, thereby producing a data dividend, a premise which sees personal data through the prism of markets and property. The paper finds diverse attitudes based on socio-demographic characteristics, the type of personal data sold, and the type of organisation sold to. It argues that (a) Sandel’s argument regarding the moral limits of markets has value in protecting the fundamental freedoms of those in society who are arguably least able to protect them (such as the poor); but (b) contexts of use, in particular, blur the moral limits regarding fundamental freedoms and markets.



1 Introduction

The apps of so-called challenger banks allow their users to monitor and control their spending, change and buy currency, receive money-off rewards when shopping or dining, buy and sell stock in companies, purchase and monitor cryptocurrency investments, and invest in precious metals. For the many users of Revolut, Monzo, and similar banking apps, this is not news. It is through this mundane prism of everyday user control, via apps, over one’s property and assets that the emergent technology of Personal Information Management Systems (PIMS) should be viewed. In principle, PIMS apps (also commonly known as Personal Data Stores) allow people to control, store, share and potentially sell personal data to organisations and receive nano-payments (Charitsis et al. 2018; Lanier 2013). This includes selling personal data about their emotions, a potentially highly revealing and organisationally desired data type, and the focus of this paper. Notably, a long-standing PIMS app, ‘digi.me’, already allows sharing of inferences about emotion through one of its trusted bundled apps (‘Happy, Not Happy’), which allows users to track emotion patterns in their social media posts. Another of digi.me’s trusted apps is ‘UBDI’, where users can ‘participate in research studies and earn cash and UBDI when you choose to share opinions and anonymous insights from your data with non-profits, academic institutions or companies’ (Digi.me 2021). PIMS are attracting significant commercial investment, with notable PIMS platforms under development including Solid/Inrupt, led by Web inventor Sir Tim Berners-Lee; Databox and MyDex in the UK; services such as digi.me, Meeco.me and CitizenMe; and the more global MyData. Other initiatives, such as the US-based Data Dividend Project, are explicitly focused on creating small amounts of revenue for Americans in exchange for their data.
The Data Dividend Project and similar projects hold that people have a right to a share of profit made by means of their personal data.

As with many governments worldwide, this vision of liberalised personal data is receiving British governmental backing: the Department for Digital, Culture, Media and Sport (whose digital remit passed to the new Department for Science, Innovation and Technology in February 2023) set out, in its National Data Strategy, the intention to help people manage, make active choices about, and control their personal data (DCMS and DSIT 2020). Indeed, policymakers regard PIMS as both a market-friendly way of generating personal and organisational value from personal data and an ethical one, given the granularity of control over personal data that they afford users (Sharp 2021). Such liberalisation of personal data is also of keen interest to finance regulators, such as the UK’s Financial Conduct Authority (2019), which recognises the use of algorithmic trading, machine learning and AI techniques to continue economic liberalisation and enable new markets and services.

Yet liberalisation quickly bumps into the ‘should we’ question: while people should understandably be at liberty to do what they like with their data, what of the broader public good if people are able to sell digital bits and insights about themselves? Some argue that certain parts of life (such as our bodies and body parts) should be beyond the reach of markets for ethical reasons (Sandel 2012). Others argue that technologies such as PIMS can enable a fairer, paid exchange of data between the individual and organisations, even for highly personal data about our bodies and emotions (Boddington 2021), although advocates of this view need to be morally comfortable with the nature of choice and agency among people living in economically constrained circumstances. Data ethics, of course, is a burgeoning field (Floridi et al. 2018), and when applied to emotions, biometrics and AI, it routinely explores questions of bias, accuracy, autonomy, dignity, privacy, transparency, accountability and objectification (Lagerkvist et al. 2022; Podoletz 2022; Valcke et al. 2021). More rarely addressed in the data ethics field are normative questions about data dividends, encompassing rights, liberty and less individualistic ideas about the civic good.

To explore such questions, this paper focuses on people’s opinions of PIMS and data dividends in relation to various organisational uses and types of emotion and biometric data. Using a UK-wide, demographically representative online survey to ascertain the national picture on using a PIMS to be paid for sharing one’s biometric and emotion data, we ask: what are the public’s views on generating data dividends from their biometric and emotion data via a PIMS, and what are the implications of these findings? As will be explored, specifics matter. Important factors influencing whether people are willing to sell such data include demographic segmentation: adults who are older, White, female, not working, in lower socio-economic groups and with lower household incomes are far less comfortable than other demographic groups with selling their biometric and emotion data. Other important factors are the type of personal data sold (only a small minority are willing to sell data from which emotions, moods and mental states can be inferred) and the type of organisation sold to (majorities are willing to sell their emotion data to the UK’s National Health Service (NHS), for academic scientific research, to mental health charities, and to private drug and health companies, but far fewer are willing to sell to organisations that could seek to manipulate them). We argue that these findings blur the moral edge of (and aversion to) allowing data about human subjectivity within reach of markets. As these emerging technologies are rolled out, the moral edge may well blur further, given wider governmental and industrial initiatives to liberalise personal data, and given that younger adults are already more in favour of selling their biometric and emotion data (assuming that their attitudes do not change as they age).

2 On emotion and PIMS

Our focus on data about biometrics and emotion provides a compelling example for exploring the moral limits of markets, because such data is increasingly socially and commercially valuable but also highly privacy invasive. With technical roots in affective computing (Picard 1997), biometric and emotion data have scope to naturalise and enhance our experience of technologies (which is pro-social). Moreover, with care regarding both data processing and the assumptions made from biometric data, there is scope for these systems to be of therapeutic value. Yet such data simultaneously create scope for new harms by exposing intimate dimensions of human life (providing cues about users’ mental states) and the body itself (in cases of emotion and affect inferences from biometrics). Emotion recognition technologies raise concerns about coded bias in procedures for classifying emotions (algorithms), simplistic taxonomies of emotion expressions, the inferences that can be made about a person (Barrett et al. 2019; Crawford 2021; Stark and Hutson 2021), and numerous legal and cross-cultural issues (Mantello and Ho 2022; Podoletz 2022; Smith and Miller 2022), but this is not preventing their deployment worldwide in everyday objects, services and situations. Indeed, there is a rapidly emerging global market for data about emotions, with emotion recognition of keen interest to the technology industry and to diverse sectors that perceive economic, social and security-based value in understanding emotional and mental states (McStay 2018; Boddington 2021; Crawford 2021). Moreover, datafied insights about emotions and mental dispositions look set to become more prominent in settings beyond smartphones and laptops.
Although still at an embryonic stage, emotion recognition systems, and those that purport to emulate empathy, are becoming increasingly present in everyday objects and practices such as mixed reality applications, home assistants, cars, music platforms, wearables, toys, marketing and insurance. Such ‘emotional AI’ technologies (which use machine learning to read and react to human emotions and feelings through text, voice, computer vision and biometric sensing, thereby simulating understanding of affect, emotion and intention) are also being used to gauge the emotionality of workplaces, hospitals, prisons, classrooms, travel infrastructures, restaurants and chain stores (McStay 2018).

Like these emotional AI technologies, PIMS are neither new, nor have they yet properly come of age. Indeed, PIMS and personal data markets have origins in the original Internet boom of the late 1990s and early 2000s under the auspices of ‘infomediaries’ (Hagel and Singer 1999). In a modern Internet environment widely characterised as ‘extractivist’ (Sadowski 2019), which sees the primary resource of big data industries as people themselves (Gregory and Sadowski 2021), and where data protection laws have had debatable effectiveness in protecting people, PIMS purport to do data protection differently. PIMS promise to give users more control over their personal data (including their biometric and emotion data). At least in theory, PIMS enable people to store and share their personal data with different organisations in a highly granular, controlled fashion, thereby enabling individuals to better manage their personal data relationships with multiple organisations (European Data Protection Supervisor 2020; IAPP 2019). As the European Data Protection Supervisor (2016: 5–6) puts it, ‘In principle, individuals should be able to decide whether and with whom to share their personal information, for what purposes, for how long, and to keep track of them and decide to take them back when so wished.’ PIMS providers claim that organisations will also benefit from this data governance arrangement by accessing better-quality data about current and potential customers, as people would supply legally obtained personal data that is potentially richer and more intimate in nature. Indeed, if PIMS fulfil their promise of encouraging users to consent to supply richer, more intimate data (including their biometric and emotion data), this would enable organisations to create better-targeted marketing, bypass ad blockers, and acquire potential customers (Janssen et al. 2020).

As well as increasing users’ privacy and control over their personal data, some PIMS providers also offer the ability to ‘transact’ (or otherwise monetise) personal data (Janssen et al. 2020) via a more distributive economic model based on data dividends: small payments made to users and the PIMS provider in exchange for access to specific parts of users’ digital identity. The Data Dividend Project (2021) is among the better-known initiatives, alerting Americans to the fact that, ‘Big tech and Data Brokers are making billions off your data per year. They track and monetize your every move online. Without giving you a dime.’ This initiative is an arguably libertarian response to a problem diagnosed in Marxist media politics, namely Smythe’s (1977) ‘audience-as-commodity’, where information about audiences is generated and circulated to create revenue for organisations. (See also Fuchs (2013) and Sadowski (2019) for Marxist critiques of modern digital and recursive features of the audience-as-commodity thesis.) Indeed, as a decentralised Internet gave way to plutocratic centralisation, structural criticisms of PIMS followed. Mechant et al. (2021), for example, argue that while the design and promotion of PIMS services may promise freedom from exploitation, PIMS are another instance of the exploitative capitalist apparatus. This is supported by the criticism that PIMS are predicated on ‘engaged visibility’ over ‘technical anonymity’ (Draper 2019: 188), meaning that data privacy is less about removing data from the ‘market’ than about re-routing data. Assessing the funding pitches of five start-ups, Beauvisage and Mellet (2020: 7) see a trend: observation of platform profits, start-ups acting as brokers on subscribers’ behalf, redirection of profit, and emphasis on consent. Damningly, Beauvisage and Mellet identify perhaps the most glaring problem: current offers by start-ups do not, and arguably cannot, work, since start-ups are powerless in the face of the platforms.
Other criticisms of PIMS draw on political philosophy, questioning the liberal principles of autonomy and personal responsibility and preferring collective solutions and obligations (Draper 2019; Viljoen 2020).

Setting criticisms of PIMS temporarily to one side, several studies find that people say they want more control over their personal data (Eurobarometer 2019; Hartman et al. 2020; IAPP 2019). This reasonable desire should be balanced against practical challenges, not least: inadequate personal data literacy (Brunton and Nissenbaum 2015); that people skip reading privacy policies and consent material (Obar and Oeldorf-Hirsch 2020); that people are fatigued in handling online privacy matters (Hargittai and Marwick 2016); that people care but are resigned to the status quo (Draper and Turow 2019); and that people do not easily change their behaviour, a situation characterised as the ‘privacy paradox’ (Acquisti et al. 2016; Orzech et al. 2016). However, user-based studies on PIMS themselves, all UK-based, show that people are open to the idea of PIMS (Hartman et al. 2020; Sharp 2021), although these studies have not examined the sharing of biometric or emotion recognition data via PIMS. The only user-based studies we are aware of that combine attitudes to PIMS and emotion technologies include an earlier national online omnibus survey (n = 2065, January 15–18, 2021) that we conducted on UK adults’ views on controlling their online personal data (Bakir et al. 2021). This focused on the idea of managing data about biometrics and emotion, rather than on data sales and dividends, which are the subject of this paper. Its findings nonetheless provide early context, indicating that younger adults are far more receptive to using a PIMS to store and share data (71% of 18–24-year-olds are willing to do so), but that this willingness decreases with each successively older age group, with an average of only 35% of those aged over 55 willing to do so. The survey also finds that younger adults are more positive about the novel uses in physical contexts that PIMS allow, including sharing biometric and emotion data, the topic of this paper.
However, because selling personal and intimate data turns it into a commodity, doing so raises quite different debates from those about control over sharing data. Thus, with younger adults appearing receptive to sharing data about their biometrics and emotion via a PIMS, and given that PIMS are receiving policy support and commercial investment as a solution to the extractive excesses of the Internet environment, we ask: what are the moral limits of markets for biometric and emotion data, if any?

3 The moral limits of markets

Perhaps the most extreme turn towards emotion data is the ‘expanding Internet of Bodies’, where the use of multi-modal data feeds from our personal biosignals (for instance, from connected implants embedded in humans) becomes normalised across all walks of life. In her exploration of this scenario, artist and curator Ghislaine Boddington (2021) sets out her position on ‘personal body data ownership’. She notes the positive uses of big data to analyse and create solutions for social good, but expresses distaste for the status quo of data extractivism of personal body data by private companies, noting that the revenue streams from the sale of our personal data, worth tens of thousands of pounds yearly per person, never reach us as individuals. Echoing the pro-PIMS literature, she argues that technologies such as personal data stores can enable a fairer exchange of data between the individual and the organisation, even when it concerns highly personal data about our bodies and emotions garnered from bodily implants, by paying us for the behavioural and emotion data outputs that we choose to supply (Boddington 2021).

However, we argue that the question of what should and should not be for sale requires deeper reflection. This very question has been distilled by Sandel’s (2012) famous philosophical investigations into the moral limits of markets. He does not argue that principles of capital, property and exchange are bad, just that certain parts of life should remain beyond capitalism’s reach. For Sandel (2012), when market principles are uncritically applied to uses of the body (such as prostitution or organ sales), this raises questions about what should be in scope for markets. In defining the moral limits of markets, Sandel provides two criteria: fairness and corruption. On fairness, the key question is the extent to which a person (or group of people) is free to choose, or is coerced, to trade things under conditions of inequality and dire economic necessity (2012: 111). Sandel’s second criterion, corruption, holds that certain goods and services involve a degrading and objectifying view of humans and human being, meaning that such exchanges cannot be justified by appeals to fair bargaining conditions. In short, because emotions are core to human experience, decision-making and communication, Sandel would argue that datafied emotions should be kept away from domains of market exchange.

Also key is that while PIMS support principles of informational self-determination and human agency over decisions and property, they also cast privacy (a human right) in terms of market and commodity logics. This risks encouraging unequal application of human rights owing to pre-existing differences in financial income (creating privacy ‘haves’ and ‘have nots’) (Spiekermann et al. 2015). Indeed, the United Nations stipulates that universal human rights, such as privacy (physical and digital), should not be contingent on financial income (OHCHR 2021), a principle that PIMS apps challenge. Seen at this high normative level, liberal principles of self-determination collide with collective interests, including concerns about social inequality arising through the processing of personal data. Privacy ‘haves’ and ‘have nots’ connect with a broader social problem of ‘cumulative disadvantage’ (Gandy 2009), where data inequalities tend to compound and become greater than the sum of each instance of questionable data processing. Indeed, there is a rich sociological and social sciences literature (largely US-focused) on data-driven discrimination concerning the marginalised, poorest and most vulnerable people (for instance, see Benjamin 2019; Curto et al. 2022; Eubanks 2018; Fisher 2009; Gangadharan 2012, 2017; Madden et al. 2017). While this US-focused research highlights how racism, sexism and poverty shape data-driven systems and people’s experiences of them, other studies (some British-based) surface additional forms of marginalisation such as age, disability, and being labelled by local authorities as ‘at risk’ (Dencik et al. 2018; Kennedy et al. 2020, 2021; Stypinska 2022).

So far, we have discussed factors informing the moral limits of markets, including ethically debatable business models, political interest in innovation through data, marketisation of privacy, controversies about emotion data, self-determination, extractivism, studies that show some lay public interest in PIMS and in emotion recognition technologies, but also studies that document data inequalities. Drawing on the above philosophical, sociological and critical data studies, we investigate the moral limits of markets in relation to attitudes of UK citizens, data types, and contexts of use. We explain, in the following section, how we investigate this.

4 Methods

This paper assesses UK-based adults’ views on potentially being paid for personal data on their biometrics and emotions. It represents the culmination of a wider empirical project on PIMS that ran across 2020–21. Initial stages of the project conducted interviews with data governance actors (to unearth issues regarding PIMS needing exploration); and interviews with a UK-based company developing a PIMS product (Cufflink) and access to its white papers (to understand the PIMS provider perspective). The research team also trialled a range of PIMS apps (to understand the aims, affordances and limitations of this emerging technology). We followed this with six online focus groups with a purposive sample (Miles et al. 2014) of older and younger UK-based adults (n = 35), as age has proven to be the main demographic differentiator in studies on public attitudes towards emotional AI (McStay 2020). The focus groups (each 2 h long) explored participants’ perceived benefits and concerns with such technologies (namely using PIMS to share and potentially sell personal data, including emotion data, in different scenarios). By phrasing all questions neutrally, and prompting participants to reflect on benefits as well as concerns, we took care to avoid social desirability bias. We also conducted our aforementioned UK survey (n = 2065, online omnibus implemented by survey company ICM Unlimited across January 15–18, 2021) on UK adults’ attitudes towards sharing their biometric and emotion data via a PIMS (rather than this paper’s focus on selling such data) (Bakir et al. 2021).
Informed by these various angles (which contributed key themes for further study, and an understanding of how best to ask lay audiences comprehensible questions on complex emerging technologies), the project’s final empirical stage (discussed in this paper) comprised a second survey, structured around attitudes towards selling emotion and biometric data via a PIMS (n = 2070, online omnibus implemented by survey company Walnut Unlimited across 29 September–1 October 2021). Both surveys, implemented by a professional survey company, are demographically representative, with data collected in compliance with International Organization for Standardization (ISO) standards ISO 20252 (for market, opinion and social research) and ISO 27001 (for securely managing information assets and data). The survey questions were provided by us, as detailed below. These datasets, and full methodological details, are available in the UK Data Archive repository (Bakir et al. 2021). Ethical approval for all stages of the research was obtained from our university ethics committee before empirical research took place, fully informed consent was obtained from participants, and all data were anonymised.

Our project’s focus group findings on benefits and concerns contributed several themes explored in this paper. Younger participants regarded the benefits of PIMS as including greater control over data sold, with some seeing the choice to be paid for sharing emotion data via PIMS as better than the current situation, where emotion data is already collected without payment. Some participants, especially younger ones, also saw benefit in personalisation, valuing the sale of their emotion data to enable, for instance, the functionality of wellness apps on their phones. Older participants noted the benefit of selling their emotion data for academic psychological research. Concerns raised only by younger participants included the accuracy of the data, and discomfort at making the active choice to sell one’s own emotion data, as opposed to accepting (or being resigned to) the extractivist nature of dominant contemporary data practices. A concern raised only by older participants was that emotions should not be exploited for commercial ends. Both younger and older participants were concerned about the potential for being manipulated by means of the sold data (Bakir et al. 2021).

Along with insights from the academic literature, we used these themes to design our second UK-wide demographically representative survey (the subject of this paper), focusing on participants’ views on selling biometric and emotion data. Online surveys, of course, are blunt tools, partly owing to difficulties in presenting complex topics, the lack of intersubjectivity, and minimal control over whether respondents are distracted (Rog and Bickman 2009). Also noteworthy is that online delivery of the survey means that our respondents had a baseline level of online literacy, and hence are likely to be more digitally literate than the UK population on average. Yet, positively, the absence of intersubjective sensitivities avoided interviewer bias, a common problem in ethics- and privacy-related research. Moreover, the research generated a respectable weighted sample of hard-to-reach participants, balanced across gender, socio-economic group, household income, employment status, age and ethnicity, and covering all age groups above 18 years old and all UK regions. This demographically representative national survey directs much-needed empirical attention towards groups (such as those defined by ethnicity, age and socio-economic background) that are under-represented among employees within platform companies (who design such products), and that are commonly discriminated against and marginalised in society (Benjamin 2019; Cotter and Reisdorf 2020; Stypinska 2022).

This survey’s closed-ended, multiple-choice questions focus on whether participants would be happy to be paid for sharing, in a controlled fashion (via a PIMS), their biometric and emotion personal data. In introducing the survey to participants, we explain that while it is difficult to state how much money a person might make from selling their emotion, mood and mental wellbeing data over the course of a year, we estimate somewhere between £20 and £500, depending on what is sold and to whom. The lower end of this estimate is close to what US Facebook users were worth to Facebook in 2016 (the Facebook estimates, derived from Facebook’s earnings reports on revenue from targeted ads and number of active users, increase in subsequent years) (Gibbs 2016; Shapiro 2019). We reason that more granular and identifiable types of biometric and emotion data may command far higher rates, for instance for medical research.

To address payment for data about emotion and mental life, our survey inquired into three areas. Firstly, how comfortable respondents are with the idea of selling personal data about their emotions, moods and mental wellbeing in most circumstances. Possible responses are: ‘Almost totally comfortable, in nearly all circumstances’; ‘Fairly comfortable, in most circumstances’; ‘Fairly uncomfortable, in most circumstances’; ‘Almost totally uncomfortable, in nearly all circumstances’; and ‘Don’t know’. Secondly, we asked whether participants would be prepared to sell to an organisation a wide range of named personal data types, some of which allow emotions, moods and mental states to be inferred. The question explains that insights about emotions, moods and mental states can be inferred in a variety of ways, such as a change in voice tone or the filters we use on image-based social media sites. A wide range of data types are presented in the possible responses of what the participant would be prepared to sell, including those that are routinely ‘extracted’ but do not reveal emotion (such as age, gender, hobbies); those that are routinely ‘extracted’ and from which emotion can be inferred (such as social media content, e.g. an archive of Facebook posts and likes); more recent personal data types from which emotion can be inferred (such as biometric data like facial expressions, voice and heart rate); and other forms of personal data that help add accuracy to the inferred emotion (contextual data such as location and who a person is with). Thirdly, prompted by our prior focus group work, which uncovered willingness to sell to some organisations (such as for academic psychological research) but not others (such as for commercial ends), we devised questions that focus on selling personal data about emotions, moods and mental states to different types of organisations (covering a range of profit and non-profit organisations).
For these questions, participants are given three possible response choices of: being willing to sell non-identifying data (for less money); being willing to sell identifying data (for more money); and being unwilling to sell any data.
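The headline figures reported in the findings appear to collapse these response options: the two ‘comfortable’ answers are combined (as are the two ‘uncomfortable’ answers), and willingness to sell to an organisation combines the non-identifying and identifying options. The Python sketch below illustrates that kind of tabulation on a weighted sample. It is a minimal illustration under stated assumptions: the respondents and weight values are hypothetical, each respondent is assumed to carry a single survey weight, and the function name `weighted_net_shares` is ours; it does not reproduce the survey company’s actual weighting procedure.

```python
from collections import defaultdict

# Answer wording for the comfort question, mapped to the two headline
# categories (plus "don't know") used when reporting results.
COMFORT_NET = {
    "Almost totally comfortable, in nearly all circumstances": "comfortable",
    "Fairly comfortable, in most circumstances": "comfortable",
    "Fairly uncomfortable, in most circumstances": "uncomfortable",
    "Almost totally uncomfortable, in nearly all circumstances": "uncomfortable",
    "Don't know": "don't know",
}

# The three choices for the organisation-type questions; either kind of
# sale is counted as "willing" (an assumption about how shares are combined).
ORG_NET = {
    "sell non-identifying data (for less money)": "willing",
    "sell identifying data (for more money)": "willing",
    "unwilling to sell any data": "unwilling",
}


def weighted_net_shares(responses, weights, mapping):
    """Return the weighted percentage of respondents in each net category.

    responses: one answer string per respondent
    weights:   one survey weight per respondent (hypothetical values here)
    mapping:   answer wording -> net category
    """
    if len(responses) != len(weights):
        raise ValueError("need exactly one weight per response")
    totals = defaultdict(float)
    for answer, weight in zip(responses, weights):
        totals[mapping[answer]] += weight
    grand_total = sum(totals.values())
    return {category: 100 * w / grand_total for category, w in totals.items()}


if __name__ == "__main__":
    # Four hypothetical respondents with hypothetical weights.
    comfort = weighted_net_shares(
        [
            "Almost totally comfortable, in nearly all circumstances",
            "Fairly uncomfortable, in most circumstances",
            "Fairly comfortable, in most circumstances",
            "Don't know",
        ],
        [1.0, 0.5, 1.5, 1.0],
        COMFORT_NET,
    )
    print(comfort)  # {'comfortable': 62.5, 'uncomfortable': 12.5, "don't know": 25.0}
```

Weighting each answer before collapsing it means that a respondent from an under-sampled group counts for more than one raw response, which is how a weighted sample can remain balanced across demographics.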

5 Survey findings

5.1 Half would not sell their emotion data, but there are demographic nuances

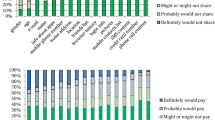

Our survey finds that half (50%) of the UK adult population are not comfortable with the idea of selling personal data about their emotions, moods and mental wellbeing, but a large minority are comfortable with this premise (43%) (see Table 1). There are large demographic differences. In line with previous surveys on emotion data and on PIMS (McStay 2020; Bakir et al. 2021), age makes the biggest difference, with successively younger adults far more comfortable with this premise than older adults. For instance, an average of 64.5% in the two youngest age groups are comfortable, compared to an average of just 22% in the two oldest age groups (see Table 1). Majorities of those aged under 45 years old are comfortable with this premise, whereas majorities of those aged 45 years and older are uncomfortable (see Tables 1, 3). Employment status also makes a big difference: 54% of those in full-time work are comfortable compared to just 30% of those not working; and 63% of those not working are uncomfortable compared to just 39% of those in full-time work (see Tables 2, 3). Household income also makes a big difference. Half (50%) of those in the two highest household income brackets (> £41,000) are comfortable with this premise, compared to just 38% in the second and third lowest income brackets. Notably, majorities of those in these two lower income brackets are uncomfortable with this premise (see Tables 2, 3). Another large difference is found in ethnicity: a small majority (53%) of White adults are uncomfortable with being paid for emotion data, with far fewer non-White adults uncomfortable (33%). Most non-White adults (61%) are comfortable with this premise, with far fewer White adults comfortable (41%) (see Tables 1, 3). Socio-economic grouping makes a small difference, with small majorities in C1 (53%) and DE (52%) uncomfortable (compared to 48% uncomfortable in C2 and AB) (see Tables 2, 3).
Gender also makes a difference: a small majority (54%) of females are uncomfortable, compared with fewer than half (46%) of males (see Tables 1, 3).
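The age comparison above reports "an average" across two age groups. We assume, without confirmation from the text, that this is a simple mean of the two groups' percentages; a pooled share of everyone in both groups would instead weight each group by its size, and the two figures can differ. The minimal Python sketch below illustrates that difference. The group sizes and percentages are hypothetical and are not drawn from the survey tables, and the names `Group`, `unweighted_mean` and `pooled_pct` are likewise illustrative.

```python
import math
from dataclasses import dataclass


@dataclass
class Group:
    """One demographic group (all values used here are hypothetical)."""
    name: str
    n: int       # number of respondents in the group
    pct: float   # percentage of the group who are comfortable


def unweighted_mean(groups: list[Group]) -> float:
    """Simple mean of the groups' percentages, ignoring group size."""
    return math.fsum(g.pct for g in groups) / len(groups)


def pooled_pct(groups: list[Group]) -> float:
    """Percentage of all respondents across the groups, weighting each group by its size."""
    total = sum(g.n for g in groups)
    comfortable = math.fsum(g.n * g.pct / 100 for g in groups)
    return 100 * comfortable / total


# Hypothetical figures for illustration only; not taken from the survey.
groups = [Group("younger", 100, 60.0), Group("older", 300, 40.0)]
print(unweighted_mean(groups))  # 50.0
print(pooled_pct(groups))       # 45.0
```

When groups differ greatly in size, the two figures can diverge noticeably, so stating which one is meant helps readers interpret comparisons such as 64.5% versus 22%.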

5.2 What data is about, matters

Survey participants were asked to consider whether they would be willing to sell a wide range of different types of personal data, some of which allow emotions, moods and mental states to be inferred (see Table 4). The question’s preamble advised that insights about emotions, moods and mental states can be inferred in multiple ways including a change in voice tone, or the filters we use on image-based social media sites. Given this framing, we find a small majority (52%) willing to sell personal data about their age and gender to any organisation, but only a minority are willing to sell all other personal data types listed. The personal data types that people are least willing to sell include those from which emotion can be inferred, namely wearable data (heart rate and other data about the body) (only 28% are willing to sell this), social media content (19%), facial expressions (17%) and voice assistant data (15%). A third (33%) are not willing to sell any of the personal data types that we listed (see Table 4).

5.3 Organisation type matters too

Organisation type influences whether someone is willing to sell their emotion data. When asked to consider whether they would sell personal data (anonymised, identified, or at all) about their emotions, moods and mental state to a wide range of different types of organisations, just under two thirds are in favour of selling to the UK’s National Health Service (NHS) (65%) and for academic scientific research (61%), and a smaller majority would do so to mental health charities (58%) and to private drug and health companies (51%). Far fewer are willing to sell such data to organisations that could seek to manipulate them, including to advertisers and marketers (only 41% are willing), social media and other technology firms (only 38% are willing), or political parties and campaigners during elections or referenda (only 37% are willing) (see Table 5).

Of those willing to sell personal data about emotions, mood and mental states, when asked whether they would sell identifying data (to be paid more) or non-identifying data (to be paid less), respondents opted to be paid more for this arguably more confidential data, especially when selling to non-profit organisations. The exceptions were advertisers and marketers, and social media and other tech firms (see Table 6). These findings show no noticeable demographic patterns or particularly large demographic divergences.

Also of interest are the demographic characteristics of the majorities willing to sell their personal data about emotions, moods and mental state to different types of organisation. Table 7 shows that majorities of all demographic groups are willing to sell to the NHS, but this does not hold for the other organisations. For academic scientific research, there is no longer a majority of older adults willing to sell: whereas an average of 55.5% of those aged 65+ are willing to sell to the NHS, this figure drops to 46% for academic scientific research. For mental health charities, the demographic groups without a majority willing to sell expand to include those not working. For private drug and health companies, these groups expand still further to include those in household income groups of up to £28,000, those in the lowest socio-demographic groups, and females. For the remaining for-profit organisations in Table 7, although there is no overall majority willing to sell, the demographic groups in which majorities are repeatedly unwilling to sell are those who are White, older, outside the higher household income groups, or employed part-time or not in work.

6 Discussion

The survey findings present a nuanced picture of people's willingness to sell their biometric and emotion data. Overall, half (50%) of the UK adult population are seemingly not comfortable with the idea of selling such data, although a large minority (43%) are comfortable with this premise. However, there are clear demographic differences. The groups in which majorities are uncomfortable with selling their biometric or emotion data in most circumstances are older adults (aged 45 or older); those of White ethnicity; females; those not working; those in lower socio-economic groups (C1, D or E); and those with lower household incomes (£14,001–£28,000) (see Table 3). Although they do not form an outright majority, more of those in the lowest income bracket are uncomfortable than comfortable with selling such data in most circumstances (see Table 2). Several of these demographic differences speak to different financial circumstances (namely employment status, socio-economic group and household income). When we explored people's willingness to sell to different types of organisation, demographic nuances were again observable. While majorities of all demographic groups are willing to sell to the NHS, this does not hold for the other organisations: age (older adults), economic circumstances (those not in work, those in part-time work, and those with lower household incomes) and ethnicity (White) repeatedly characterise those unwilling to sell to other organisations (see Table 7). Indeed, whether respondents were asked about their willingness to sell biometric and emotion data in most circumstances (Table 3) or to specific organisations (Table 7), the common demographic markers of discomfort with this premise are age, poorer financial circumstances and ethnicity. We discuss the marker of poorer financial circumstances first (in relation to Sandel's fairness argument), followed by the marker of age (in relation to Sandel's corruption argument).
We do not discuss the marker of ethnicity because the signals in the data are less clear: for some survey questions, some ethnic groups within the non-White category align more closely with White respondents on whether a majority would sell their emotion data, and there are very large divergences among sub-groups. For instance, on willingness to sell emotion data to political parties and campaigning groups, only minorities of White (35%) and Asian or Asian British (47%) respondents are willing to sell, but a majority of Black or Black British respondents (52%) would sell, as would large majorities of those of Mixed (63%) and Chinese (69%) ethnicity.

That more of those in poorer financial circumstances are uncomfortable with selling biometric or emotion data connects with the idea that data dividends would encourage unequal application of human rights owing to differences in income (creating privacy 'haves' and 'have nots') (Spiekermann et al. 2015). Although we cannot say that concerns about privacy 'haves' and 'have nots' motivated the survey responses, we think it reasonable to believe that those more likely to need the money that the sale of biometric and emotion data could generate (i.e. poorer people) are more sensitised to (and turned off by) this means of economic augmentation and potential data exploitation (assuming that the real value of the data exceeds what a person would be paid for it). For context, previous UK-based surveys find that wealthier people tend to see more benefit from digital and datafied technologies in their lives than people who are not wealthy (57% compared with 43%) (Doteveryone 2018). Similarly, UK-based focus groups find that participants with experiences of poverty were excluded from digital services because they could not afford to access them (Kennedy et al. 2021). American research also finds that the poor are subject to more surveillance, including digital surveillance, than other subpopulations, and are less likely to use privacy-enhancing tools or practices (Madden et al. 2017). Simply put, the poor may be sensitised to the idea that selling their biometric and emotion data is exploitative; may feel unable to engage in such exchanges owing to lack of access to digital technologies; and may be unwilling to engage in such exchanges because they represent yet another form of privacy-invasive surveillance.

With these various explanations in play, the survey's core finding that comfort with selling one's emotion data is contingent upon financial circumstances suggests the need for further study and reflection, especially if one sides with putting individuals' benefits (freedom of choice) over the collective good. As all of these explanations point in various ways to economic exploitation and inequality, we should recall that Sandel's (2012) moral limits of markets thesis, a motivating idea for this paper, rests partly on the principle of fairness (namely, scope for free choice, especially when people are economically challenged). Consequently, Sandel's argument regarding the moral limits of markets has value in protecting the fundamental freedoms of those in society who are arguably least able to protect them. Indeed, market advocates should answer the question of how general welfare is advanced through incentives for an exchange that the weaker party deems not to be in their interests.

Sandel's second test for the moral limits of markets is based on corruption, where certain services involve a degrading and objectifying view of humans and human being. That far more older than younger adults are uncomfortable with selling their biometric or emotion data may simply reflect that older adults in the UK are far less comfortable than younger adults with the idea of sharing their emotion data, as shown repeatedly in national surveys exploring a wide variety of near-horizon use cases (McStay 2020; Bakir et al. 2021). Our focus group findings on selling emotion data via a PIMS add the insight that older adults (but not younger adults) raise the concern that emotions should not be exploited for commercial ends. One explanation for an age-based continuum is that younger people's attitudes might be interpreted in the context of emerging payment systems, communities built around payment, and the normalisation of transactional identities (Swartz 2020), even if the latter is assisted by resignation to the processing of personal data (Draper and Turow 2019). Given how few studies address older people's views and experiences of AI discrimination (Stypinska 2022), the finding that older people regard selling their emotion data via a PIMS as out of bounds is meaningful: it suggests that older people may be taking a moral stance against such emerging technologies. This adds to other, more generalised explanations (from wider studies of older people and AI) that point to older people's lack of awareness of what algorithms do (Gran et al. 2020) and their lower access to digital services (The British Academy 2022) compared with younger people: conceivably, such data inequalities may impede older people from seeing the benefits of such data exchanges. Other studies also observe age discrimination in AI and machine learning systems and technologies (Chu et al. 2022), including age-related inaccuracies of biometric systems for older people (Rosales and Fernández-Ardèvol 2019): conceivably, such discriminatory experiences may also feed older adults' discomfort with these emerging technologies. These various explanations are, of course, not mutually exclusive.

Beyond the headline findings on who is or is not comfortable with selling their biometric and emotion data, this paper is interested in testing the moral absolute of not being able to sell such data under any circumstances by factoring in a range of data types and organisations. Selling data about bodies and emotions may easily be argued to be 'corrupt', but we were interested in a more granular assessment of the types of data sold and the organisations sold to. Including multiple types of personal data, some of which allow emotion, mood and mental state to be inferred, revealed large differences in what types of personal data people say they are willing to sell to any organisation. A small majority (52%) are willing to sell personal data about their age and gender to any organisation, but only a small minority are willing to sell data from which emotions, moods and mental states can be inferred (ranging from 28% for data from wearables to just 17% for facial expressions and 15% for voice assistant data), suggesting relevance for Sandel's corruption argument (see Table 4). Applied to that argument, this finding supports the principle that selling data about bodies and emotions reflects a degrading and objectifying view of humans and human being.

On the organisations that respondents would be willing to sell to, the findings are nuanced, leading to what we see as the paper's most important finding: the type of organisation sold to affects whether people are willing to sell their emotion data. A large majority (just under two thirds) are in favour of selling to the NHS and for academic scientific research, with smaller majorities willing to sell to mental health charities and to private drug and health companies. However, far fewer are willing to sell their emotion data to organisations that could seek to manipulate them (see Table 5). This is unsurprising in itself, but when placed in the context of the corruption charge against PIMS and the selling of data about bodies and emotions, these differences in attitude by type of organisation blur any suggestion that the moral limits of markets for selling intimate data are crisp and absolute. We reiterate that surveys are blunt tools, and add that citizen polling is not in itself a good basis for policymaking, but the finding sits well with European and British policy initiatives that seek to create more social value from opt-in uses of personal and sensitive data (we have in mind the EU's proposed Data Governance Act (European Commission 2020), the UK's National Data Strategy (DCMS and DSIT 2020), and longstanding UK policy interest in data trusts). PIMS-based approaches to crowd-sourced health research, academic research and not-for-profit groups (such as mental health charities) do seem to be of interest to the British public, especially given how these organisations were ranked in our survey. Notably, organisations that are extractive, for-profit and politically motivated fared less well, perhaps offering policymakers a cut-off point for the types of organisations to which data dividends are acceptable and might be allowed.
Interestingly, just over half (51%) of UK adults are willing to sell to private drug and health companies (a category ranked just below the not-for-profits), which perhaps indicates scope for exemptions under certain circumstances (see Table 5).

With willingness to sell biometric and emotion data (in nearly all circumstances or to specific organisations) also related to age (older adults are far less comfortable than younger adults), ethnicity (White adults are less comfortable than non-White adults) and gender (women are less comfortable than men) (see Tables 3, 7), this raises questions about the universality of the moral limits of data markets. With different groups holding different outlooks, seemingly not informed by income or employment status, the appropriateness of moral absolutes is called into question, especially where self-determination is a factor. We echo the observation made by various critical data scholars that to understand experiences of, and attitudes towards, data practices, we should decentre data and acknowledge, for instance, how datafication and inequalities shape each other (Gangadharan and Niklas 2019). As noted earlier, because many studies that might help us understand how inequalities shape data-driven systems and people's experiences of them come from the USA, we call for more research in the UK context that attends to the lived realities of digital inequalities. In addition to qualitative exploration of age-based, ethnic, gender and socio-economic dimensions in the UK, it would be worthwhile to replicate the survey in other countries, not least because different countries allow different levels of exposure to industries extracting emotion data (Bakir and McStay 2023).

7 Conclusion

Counterintuitively, care must be taken not to read too much into public opinion, especially when it comes to designing governance, policy and law. Insights into public opinion should be weighed against lay people's lack of awareness of the fuller implications of choices and decisions. Experts, of course, should design governance, but the questions asked, and the opinion-based findings generated here, are suggestive and highlight the importance of attending to demographic diversity as well as to the crucial contexts of the type of data sold and the type of organisation sold to. As survey-based research is limited in explaining the reasons behind the opinions expressed, we recommend further qualitative work to explore the demographic differences (especially household income, socio-economic and employment status variations, but also age, ethnicity and gender) in levels of comfort with the idea of being paid for biometric and emotion data, as well as further comparative quantitative and qualitative work in countries beyond the UK. Of particular value would be intersectional work that explores, for instance, the views of those who are older, not working, in lower socio-economic groups, with lower household incomes, White and female (these being the combined characteristics of our survey participants who are uncomfortable with the idea of selling their biometric or emotion data via a PIMS, as summarised in Table 3). As critical data studies have observed (Gangadharan and Niklas 2019; Kennedy et al. 2021; The British Academy 2022), how different, multiple inequalities shape people's experiences and understanding of data practices is a crucial gap in current scholarship.
Also of value would be research that recruits participants through non-digital means (a limitation of our study), especially given our findings on income and age, which likely understate the scale of the problem (for instance, in 2020, 99% of people in the UK aged 16–44 were recent internet users, compared with just 54% of those aged 75 and over; and only 60% of those earning under £12,000 per year were internet users) (The British Academy 2022).

With one moral limit of markets being the question of whether data should be sold at all (and with the above caveats in mind), the data presented suggest context-constrained public interest in the premise, even in cases involving mental disposition, mood and emotion. Yet it should not be overlooked that the profile of majorities uncomfortable with selling such data to any organisation includes those not working and poorer respondents (Table 3). Furthermore, the profile of majorities unwilling to sell to mental health charities includes those not working, and the profile of majorities unwilling to sell to private drug and health companies includes those not working, those in lower household income groups and those in the lowest socio-demographic groups (Table 7). For the remaining for-profit organisations in Table 7, the demographic groups in which majorities are unwilling to sell also repeatedly include those outside the higher household income groups and those employed part-time or not in work. While acknowledging wider concerns about extractive logics and capitalism, the general corruption-based aversion to selling emotion data found in this study (see Table 4), and the aversion to selling emotion data to both for-profit and politically motivated organisations (see Table 5), we suggest that the moral limit is not sharp and distinct, at least on the evidence of our study. Sandel's moral edge is blurred by not-for-profit organisations, with our study finding majority support across all demographic groups for selling data about emotion to the UK's NHS (and majority support across most demographic groups for selling such data for academic and charity-based not-for-profit research) (see Tables 5, 7).
Indeed, it is telling that majorities of less well-off survey respondents (as indicated by household income, employment type and socio-demographic group) are willing to sell to the NHS and to academic scientific research (see Table 7), although this leaves open the possibility that such non-profit organisations are the least bad option for those potentially desperate for money.

To conclude, this paper adds to the substantial literature on data ethics by turning the focus to data dividends, and to a data type increasingly sought by organisations: namely, biometrics and emotions. Given that the sale of emotion data runs counter to Sandel's fairness criterion (for those in poorer financial circumstances) as well as his corruption criterion (very few are willing to sell data from which emotion, mood and mental state can be inferred), and that a majority is unwilling to sell to organisations that are extractive, for-profit and politically motivated, the paper recommends that the sale of data about biometrics and emotions to profit-motivated companies should be prohibited. With non-profits, however, the debate takes on a different nature because, having different motivations, they sit outside what Sandel sees as corrupting market logics. The ethical question then moves closer to medical ethics, which grapples with whether money is an undue inducement in research. We do not resolve this here, but recognise that even where organisations have non-profit motivations, the question of the morality of selling biometric and emotion data is not fully remedied. Yet, with research potential and pro-social gains possible through opt-in mass profiling (notwithstanding inducement concerns), there is an argument to be made for a blurring of the moral limits of paid transactions and markets.

Data availability

Datasets for the entire project, and full methodological details, are available in the UK Data Archive repository. The reference is: Bakir, V, McStay, A and Laffer, A (2021). UK attitudes towards personal data stores and control over personal data, 2021. [Data Collection]. Colchester, Essex: UK Data Service. https://doi.org/10.5255/UKDA-SN-855178. Available from: https://reshare.ukdataservice.ac.uk/855178/. In this paper, we present selected pertinent results and state where the full results can be publicly accessed.

References

Acquisti A, Taylor C, Wagman L (2016) The economics of privacy. J Econ Lit 54(2):442–492. https://doi.org/10.1257/jel.54.2.442

McStay A (2018) Emotional AI: the rise of empathic media. Sage, London

McStay A (2020) Emotional AI, soft biometrics and the surveillance of emotional life: an unusual consensus on privacy. Big Data Soc 7(1). https://doi.org/10.1177/2053951720904386

Bakir V, McStay A (2023) Optimising emotions, incubating falsehoods: how to protect the global civic body from disinformation and misinformation. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-031-13551-4

Bakir V, McStay A, Laffer A (2021) UK attitudes towards personal data stores and control over personal data, 2021. [Data Collection]. UK Data Service Reshare, Colchester, Essex. https://doi.org/10.5255/UKDA-SN-855178

Barrett LF, Adolphs R, Marsella S, Martinez AM, Pollak SD (2019) Emotional expressions reconsidered: challenges to inferring emotion from human facial movements. Psychol Sci Public Interest 20(1):1–68. https://doi.org/10.1177/1529100619832930

Beauvisage T, Mellet K (2020) Datassets: assetizing and marketizing personal data. In: Birch K, Muniesa F (eds) Turning things into assets. MIT Press, Cambridge, pp 75–95

Benjamin R (2019) Race after technology: abolitionist tools for the new Jim Code. Polity, Cambridge

Boddington G (2021) The Internet of Bodies—alive, connected and collective: the virtual physical future of our bodies and our senses. AI Soc. https://doi.org/10.1007/s00146-020-01137-1

Brunton F, Nissenbaum H (2015) Obfuscation: a user’s guide for privacy and protest. MIT Press, Cambridge

Charitsis V, Zwick D, Bradshaw A (2018) Creating worlds that create audiences: theorising personal data markets in the age of communicative capitalism. TripleC Commun Capital Crit 16(2):820–834. https://doi.org/10.31269/triplec.v16i2.1041

Chu CH, Nyrup R, Leslie K, Shi J, Bianchi A, Lyn A et al (2022) Digital ageism: challenges and opportunities in artificial intelligence for older adults. Gerontologist. https://doi.org/10.1093/geront/gnab167

Cotter K, Reisdorf BC (2020) Algorithmic knowledge gaps: a new dimension of (digital) inequality. Int J Commun 14:745–765

Crawford K (2021) The atlas of AI: power, politics, and the planetary costs of artificial intelligence. Yale University Press, New Haven

Curto G, Jojoa Acosta MF, Comim F et al (2022) Are AI systems biased against the poor? A machine learning analysis using Word2Vec and GloVe embeddings. AI Soc. https://doi.org/10.1007/s00146-022-01494-z

Data Dividend Project (2021) Homepage. https://www.datadividendproject.com/. Accessed 1 Jan 2023

DCMS, DSIT (2020) UK national data strategy. https://www.gov.uk/government/publications/uk-national-data-strategy. Accessed 31 May 2023

Dencik L, Hintz A, Redden J, Warne H (2018) Data scores as governance: investigating uses of citizen scoring in public services project report. Data Justice Lab, Cardiff University, UK. https://orca.cardiff.ac.uk/id/eprint/117517/. Accessed 1 Jan 2023

Digi.me (2021) Ubdi. https://digi.me/ubdi/#slide-0. Accessed 1 Jan 2023

Doteveryone (2018) People, power and technology: the 2018 digital attitudes report. https://understanding.doteveryone.org.uk. Accessed 1 Jan 2023

Draper NA (2019) The identity trade: selling privacy and reputation online. New York University Press, New York

Draper NA, Turow J (2019) The corporate cultivation of digital resignation. New Media Soc 21(8):1824–1839. https://doi.org/10.1177/1461444819833331

Eubanks V (2018) Automating inequality: how high-tech tools profile, police, and punish the poor. St Martin’s Press, New York

Eurobarometer (2019) Survey 487b. Charter of Fundamental Rights. https://europa.eu/eurobarometer/surveys/detail/2222. Accessed 1 Jan 2023

European Commission (2020) Proposal for a regulation of the European Parliament and of the Council on European data governance (Data Governance Act). Last modified 22 April 2022. https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A52020PC0767. Accessed 1 Jan 2023

European Data Protection Supervisor (2016) Opinion 9/2016: EDPS Opinion on personal information management systems. https://edps.europa.eu/sites/default/files/publication/16-10-20_pims_opinion_en.pdf. Accessed 1 Jan 2023

European Data Protection Supervisor (2020) Personal information management systems. TechDispatch. https://edps.europa.eu/sites/default/files/publication/21-01-06techdispatch-pimsen0.pdf. Accessed 1 Jan 2023

Financial Conduct Authority (2019) The future of regulation: AI for consumer good. https://www.fca.org.uk/news/speeches/future-regulation-ai-consumer-good. Accessed 1 Jan 2023

Fisher L (2009) Target marketing of subprime loans: racialized consumer fraud and reverse redlining. Brooklyn Law J 18(1):121–155

Floridi L, Cowls J, Beltrametti M, Chatila R, Chazerand P, Dignum V, Luetge C, Madelin R, Pagallo U, Rossi F, Schafer B, Valcke P, Vayena E (2018) An ethical framework for a good AI society: opportunities, risks, principles, and recommendations. Mind Mach 28:689–707. https://doi.org/10.1007/s11023-018-9482-5

Fuchs C (2013) Digital labour and Karl Marx. Routledge, New York

Gandy OH (2009) Coming to terms with chance: engaging rational discrimination and cumulative disadvantage. Routledge, London and New York

Gangadharan SP (2012) Digital inclusion and data profiling. First Monday 17(5). http://firstmonday.org/ojs/index.php/fm/article/view/3821/3199. Accessed 1 Jan 2023

Gangadharan SP (2017) The downside of digital inclusion: expectations and experiences of privacy and surveillance among marginal Internet users. New Media Soc 19(4):597–615. https://doi.org/10.1177/1461444815614053

Gangadharan SP, Niklas J (2019) Decentering technology in discourse on discrimination. Inf Commun Soc 22(7):882–899. https://doi.org/10.1080/1369118X.2019.1593484

Gibbs S (2016) How much are you worth to Facebook? The Guardian, 28 January. https://www.theguardian.com/technology/2016/jan/28/how-much-are-you-worth-to-facebook. Accessed 31 May 2023

Gran AB, Booth P, Bucher T (2020) To be or not to be algorithm aware: a question of a new digital divide? Inf Commun Soc. https://doi.org/10.1080/1369118X.2020.1736124

Gregory K, Sadowski J (2021) Biopolitical platforms: the perverse virtues of digital labour. J Cult Econ. https://doi.org/10.1080/17530350.2021.1901766

Hagel J III, Singer M (1999) Net worth: shaping markets when customers make the rules. Harvard Business Review Press, Boston

Hargittai E, Marwick A (2016) ‘What can I really do?’ Explaining the privacy paradox with online apathy. Int J Commun 10:3737–3757

Hartman T, Kennedy H, Steedman R, Jones R (2020) Public perceptions of good data management: findings from a UK-based survey. Big Data Soc 7(1):1–16. https://doi.org/10.1177/2053951720935616

IAPP (2019) Personal information management systems: a new era for individual privacy? https://iapp.org/news/a/personal-information-management-systems-a-new-era-for-individual-privacy. Accessed 1 Jan 2023

Janssen H, Cobbe J, Singh J (2020) Personal information management systems: a user-centric privacy utopia? Internet Policy Rev 9(4):1–25. https://doi.org/10.14763/2020.4.1536

Kennedy H, Oman S, Taylor M, Bates J, Steedman R (2020) Public understanding and perceptions of data practices: a review of existing research. Living with data, University of Sheffield. http://livingwithdata.org/current-research/publications/. Accessed 1 Jan 2023

Kennedy H, Steedman R, Jones R (2021) Approaching public perceptions of datafication through the lens of inequality: a case study in public service media. Inf Commun Soc 24(12):1745–1761. https://doi.org/10.1080/1369118X.2020.17361

Lagerkvist A, Tudor M, Smolicki J et al (2022) Body stakes: an existential ethics of care in living with biometrics and AI. AI Soc. https://doi.org/10.1007/s00146-022-01550-8

Lanier J (2013) Who owns the future? Simon & Schuster, New York

Madden M, Gilman M, Levy K, Marwick A (2017) Privacy, poverty, and big data: a matrix of vulnerabilities for poor Americans. Wash Univ Law Rev 95(1):53. https://openscholarship.wustl.edu/law_lawreview/vol95/iss1/6. Accessed 1 Jan 2023

Mantello P, Ho M‑T (2022) Curmudgeon corner: why we need to be weary of emotional AI. AI Soc. https://doi.org/10.1007/s00146-022-01576-y

Mechant P, De Wolf R, Van Compernolle M, Joris G, Evens T, De Marez L (2021) Saving the web by decentralizing data networks? A socio-technical reflection on the promise of decentralization and personal data stores. In: 2021 14th CMI International Conference: Critical ICT Infrastructures and Platforms, pp 1–6. https://doi.org/10.1109/cmi53512.2021.9663788

Miles MB, Huberman AM, Saldaña J (2014) Qualitative data analysis. Sage, London

Obar JA, Oeldorf-Hirsch A (2020) The biggest lie on the Internet: ignoring the privacy policies and terms of service policies of social networking services. Inf Commun Soc 23(1):128–147. https://doi.org/10.1080/1369118x.2018.1486870

OHCHR (2021) OHCHR and privacy in the digital age. https://www.ohchr.org/en/issues/digitalage/pages/digitalageindex.aspx. Accessed 1 Jan 2023

Orzech KM, Moncur W, Durrant A, Trujillo-Pisanty D (2016) Opportunities and challenges of the digital lifespan: views of service providers and citizens in the UK. Inf Commun Soc 21(1):14–29. https://doi.org/10.1080/1369118X.2016.1257043

Picard RW (1997) Affective computing. MIT Press, Cambridge

Podoletz L (2022) We have to talk about emotional AI and crime. AI Soc. https://doi.org/10.1007/s00146-022-01435-w

Rog DJ, Bickman L (2009) The SAGE handbook of applied social research methods. SAGE, Newcastle upon Tyne

Rosales A, Fernández-Ardèvol M (2019) Structural ageism in big data approaches. Nordicom Rev 40(s1):51–64. https://doi.org/10.2478/nor-2019-0013

Sadowski J (2019) When data is capital: datafication, accumulation, and extraction. Big Data Soc 6(1):1–12. https://doi.org/10.1177/2053951718820549

Sandel M (2012) What money can’t buy: the moral limits of markets. Penguin, London

Shapiro RJ (2019) What your data is really worth to Facebook. Washington Monthly. https://washingtonmonthly.com/2019/07/12/what-your-data-is-really-worth-to-facebook/. Accessed 31 May 2023

Sharp E (2021) Personal data stores: building and trialling trusted data services. BBC Research & Development. https://www.bbc.co.uk/rd/blog/2021-09-personal-data-store-research. Accessed 1 Jan 2023

Smith M, Miller S (2022) The ethical application of biometric facial recognition technology. AI Soc 37:167–175. https://doi.org/10.1007/s00146-021-01199-9

Smythe DW (1977) Communications: blindspot of Western Marxism. Can J Political Soc Theory 1(3):1–27

Spiekermann S, Acquisti A, Böhme R, Hui LK (2015) The challenges of personal data markets and privacy. Electron Mark 25:161–167. https://doi.org/10.1007/s12525-015-0191-0

Stark L, Hutson J (2021) Physiognomic artificial intelligence. SSRN. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3927300. Accessed 1 Jan 2023

Stypinska J (2022) AI ageism: a critical roadmap for studying age discrimination and exclusion in digitalized societies. AI Soc. https://doi.org/10.1007/s00146-022-01553-5

Swartz L (2020) New money: how payment became social media. Yale University Press, New Haven

The British Academy (2022) Understanding digital poverty and inequality in the UK. Digital Society. https://www.thebritishacademy.ac.uk/publications/understanding-digital-poverty-and-inequality-in-the-uk/. Accessed 1 Jan 2023

Valcke P, Clifford D, Steponėnaitė VK (2021) Constitutional challenges in the emotional AI Era. In: Micklitz HW, Pollicino O, Reichman A, Simoncini A, Sartor G, De Gregorio G (eds) Constitutional challenges in the algorithmic society. Cambridge University Press, Cambridge

Viljoen S (2020) Data as property? Phenomenal World. https://phenomenalworld.org/analysis/data-as-property. Accessed 1 Jan 2023

Funding

This work was supported by Economic and Social Research Council (ES/T00696X/1) and Innovate UK (TS/T019964/1).

Author information

Contributions

The funded projects on which this paper is based were led by AM. VB and AM co-devised and analysed the interviews and surveys. AL co-devised the focus groups (with VB and AM), conducted them, thematically coded the transcripts, and led on data archiving. VB led the writing of the manuscript, with contributions from AM, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose. On behalf of all authors, the corresponding author states that there is no conflict of interest.

Ethics approval

We have included, as a separate file, the approval from the Bangor University ethics committee for the entire project, which included three empirical stages involving human participants: interviews, focus groups, and surveys. We also include the information sheet and informed consent forms that we used for all participants in our interviews and focus groups.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bakir, V., Laffer, A. & McStay, A. Blurring the moral limits of data markets: biometrics, emotion and data dividends. AI & Soc (2023). https://doi.org/10.1007/s00146-023-01739-5

DOI: https://doi.org/10.1007/s00146-023-01739-5